1 Introduction

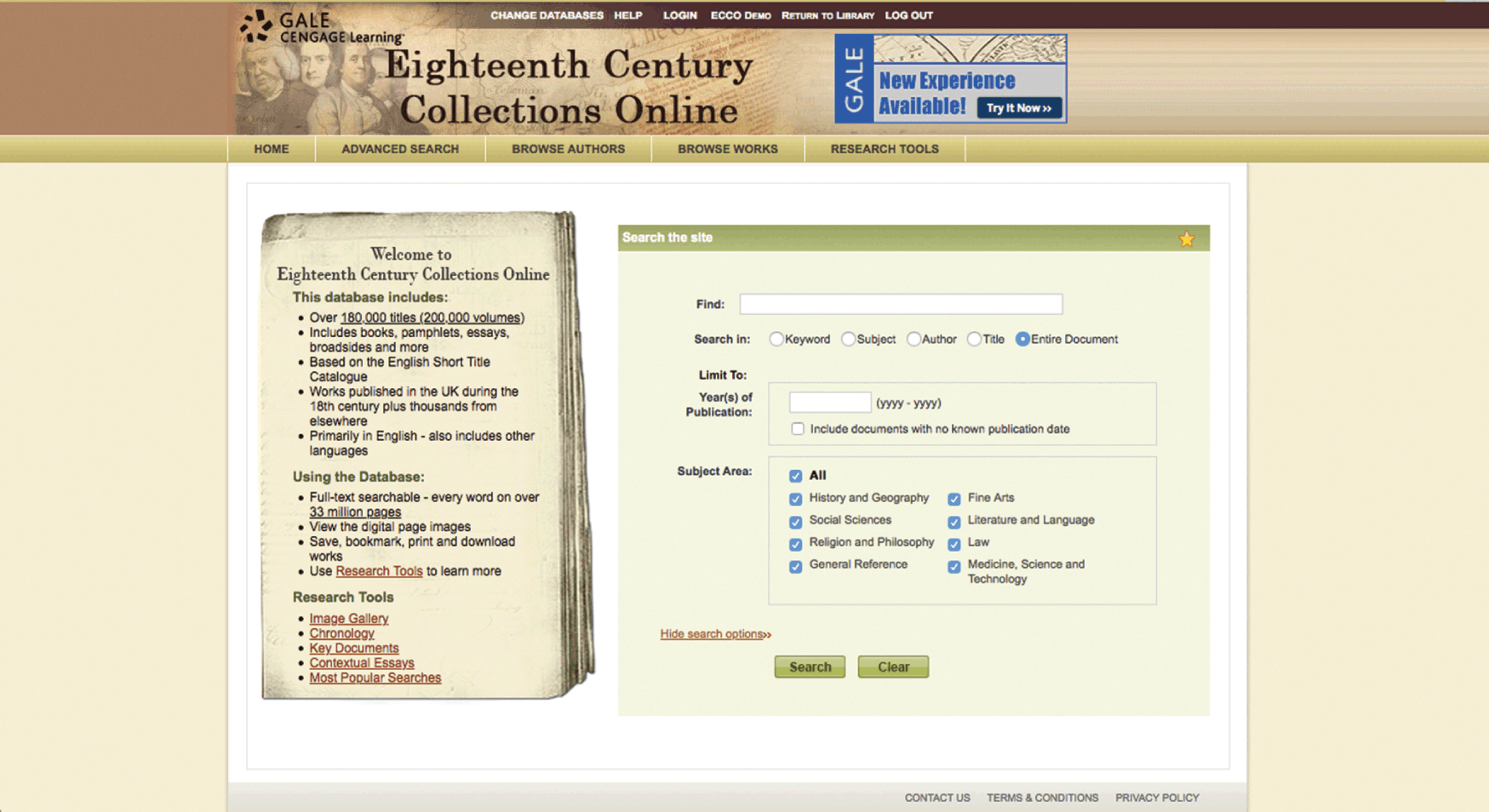

Eighteenth Century Collections Online (ECCO) is an online database published by Gale-Cengage. First published in 2003, it gives access via subscribing libraries to 184,536 titles of material printed between 1700 and 1800, comprising the text and digital images of their pages. In 2020 2,092 institutions and consortia in forty-two countries subscribe; in 2019 around 7.7 million search results, images, or texts were retrieved worldwide (Reference De MowbrayDe Mowbray, 2020a). It is arguably the largest single online collection of specifically eighteenth-century material available via academic institutions and has had a profound impact on how researchers conduct scholarship of the period. A history of a digital resource like ECCO is important because if we are at all interested in old books, and assuming we’re also aware of the exponential increase in accessing old books via resources like ECCO, then we should be interested in why digitised books look the way they do and the difference that makes, how ECCO works the way it does, and what we can – and can’t – do with these books.

Eighteenth Century Collections Online is deeply rooted in a longer history of representing eighteenth-century books, the effect of which can still be traced in the way ECCO works, since it is based upon a commercial microfilm collection, The Eighteenth Century, the contents of which were selected on the basis of a computerised cataloguing programme, the Eighteenth-Century Short Title Catalogue (18thC STC). As Sarah Werner and Matthew Kirschenbaum argue, we cannot ‘posit a transcendental “digital” that somehow stands outside the historical and material legacies of other artifacts and phenomena’; rather, the digital is a ‘frankly messy complex of extensions and extrusions of prior media and technologies’ (Reference Kirschenbaum and WernerKirschenbaum & Werner, 2014: 408; my emphasis).

This is why this book starts with a ‘prehistory’ in order to understand the twentieth-century contexts of earlier media technologies, the changing cultures of scholarship, and what was driving commercial academic publishing. The first section draws out two significant factors from the 18thC STC (begun in 1976). The first is that decisions had to be made about what material it would include and what material it would not – these decisions would subsequently affect the scope and nature of ECCO’s content. The second is that the catalogue was from the outset a digital project, but that presented a challenge: how would the idiosyncratic features of books produced by the hand press translate into standardised machine-readable data? This metadata (data about something, rather than the thing itself) would eventually shape how ECCO could be searched as well as how users apprehend the nature of old books when they are digital images. The second section moves to Research Publication’s (RPI) microfilm collection, The Eighteenth Century (published from 1982 onwards). It sets out the scholarly and commercial contexts for the development of this new media technology from the 1930s to the end of the twentieth century, illuminating the arguments of scholars, libraries, and microfilm companies for how this new technology would enable the preservation of and enable a wider access to research materials and old books.

At this point it’s worth establishing why a focus on the books within ECCO is important. My approach to the idea of the book is predicated on a series of axioms:

1. The form of the printed book is a particular medium for the words within (as opposed to, say, a scroll or an audiobook).

2. The meaning of a book does not solely consist of the words within; the material form and the design of the book itself have meaning.

3. Transform the book into another medium (‘remediation’) and you change the meanings of the book.

It’s for these reasons that this book, in the chapter ‘Bookishness’, looks at some case studies of individual eighteenth-century books in order to exemplify the effects of the 18thC STC and the microfilming programme on how users apprehend the physicality – the material life – of hand-press books as they are presented as digital images in ECCO. Part of this is a study of how users navigate between the image of a book and its record (spoiler: there is no seamless ‘fit’), but it also emphasises the effect of human agency and human decisions about technology on how old books look in ECCO. In this sense my conceptual framework for this history is indebted to the powerful arguments for the critical potential of book history D. F. McKenzie made in his lectures of 1985, in which he proposed that bibliography should concern itself with a ‘sociology of texts’. I start with his point that the term ‘texts’ should go beyond the printed text to encompass the very broadest set of human communication media and even – most vital for us – ‘computer-stored information’; there is, he argues, ‘no evading the challenge which those new forms have created’ (Reference McKenzieMcKenzie, 1999: 13). The discipline of bibliography ‘studies texts as recorded forms, and the processes of their transmission, including their production and reception’ (12). Perhaps the strongest argument for my history is that a ‘sociology of texts’ should allow ‘us to describe not only the technical but the social processes of their transmission’, and it ‘directs us to consider the human motives and interactions which texts involve at every stage of their production, transmission, and consumption. It alerts us to the role of institutions, and their own complex structures, in affecting the forms of social discourse, past and present’ (Reference McKenzieMcKenzie, 1999: 13, 15).Sociocultural forces, institutions, technology, and human agency all play their part in this history.

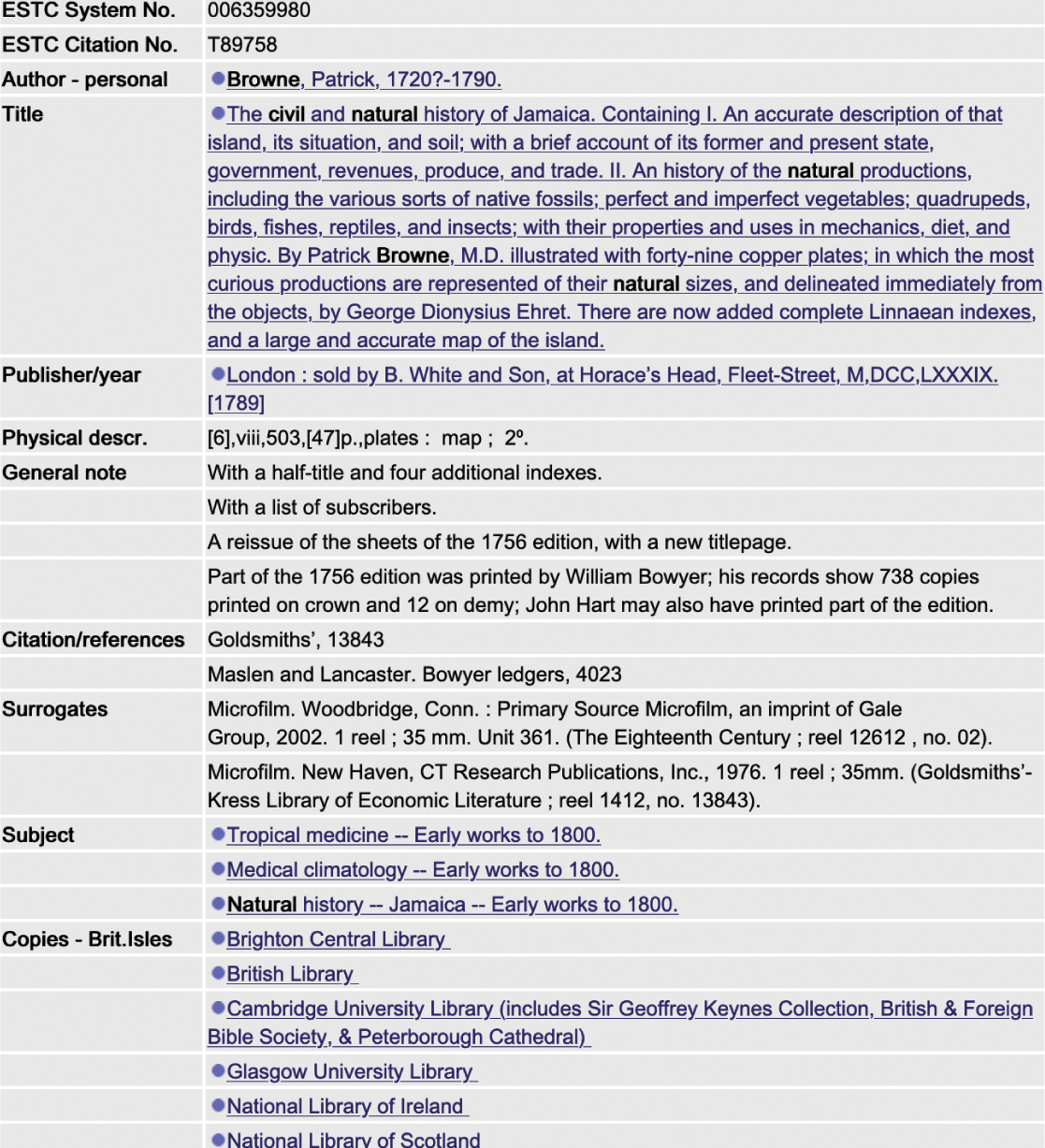

Eighteenth Century Collections Online – or any digital entity – is not a static or an unchanging entity: it has a history. The rapidity with which commercial publishing technology supersedes older versions of itself has meant that some circumstances of its development are now obscure and others are irrecoverable. Tellingly, a part of ECCO will become invisible from 2020 when its original interface is scheduled to be turned off: it will literally be history. So this book is partly an act of recovery. The third chapter, ‘Beginnings’, turns to the development of ECCO itself. In the first section, I examine the immediate contexts that shaped how ECCO was to work and to be sold. It was decisively influenced by the downward movement of the academic publishing market and the emerging so-called disruptive technologies in the 1990s (Reference Bower and ChristensenBower & Christensen, 1995). I focus on the techno-commercial choices facing Gale by illustrating contemporary digital resources created by two of its key commercial competitors in academic publishing of the time: Chadwyck-Healey and ProQuest. In addition, some aspects of how ECCO works ‘under the hood’ are – in common with many digital products – simply invisible to the public. The chapter goes on to explain Gale’s digitisation of the microfilm collection, discussing the problems created by the use of optical character recognition (OCR) software to automatically create text from digital images of old books, how its metadata was structured, and how ECCO’s original search interface worked.

The chapter ‘Interfacing’ takes us forward to Gale’s development of ECCO after 2010, but also returns us to the issues of access. First, it focuses on the conglomerate of deals and collaborations between Gale and various partners, all of which broadly attempted to address concerns about who could access ECCO, as well as how ECCO might be used and interrogated, including the Text Creation Partnership, Jisc, and the print-on-demand deal with BiblioLife. This chapter’s last section discusses the effects and meanings of the rise of the platform: this enabled the cross-searching of aggregated digital resources in a single package, but also a new way of interfacing with data and texts that was – for Gale’s platforms – influenced by their belated engagement with the scholarly field of digital humanities. However, the platform produces a crucial tension between two ways of understanding and using old books: the bibliographical (or the ‘bookishness’ of books) and the textual. I finish by considering the politics of how these platforms represent early print history, drawing on the insights of postcolonial digital humanities, and reminding us that the Anglocentric nature of digital resources like ECCO is a product – a partially obscured one – of human decisions made in its antecedents, the 18thC STC and the microfilm collection. Indeed, throughout the book I’ve tried to avoid the suggestion that technological change is the only driving force in the twentieth and twenty-first centuries; instead I hope to have demonstrated that in ECCO’s history – indeed, in the history of remediating and publishing old books – technology is inextricable from culture and human decisions.

I intend my history of a digital resource to mirror the methodology of bibliography. As W. W. Greg argued:

the object of bibliographical study is, I believe, to reconstruct for each particular book the history of its life, to make it reveal in its most intimate detail the story of its birth and adventures as the material vehicle of the living word.

Like the life history of old books, I hope to reveal the intimate details of the life history of ECCO: this book is partly an argument for the application of bibliography to digital entities, conceived as a ‘material vehicle of the living word’.Footnote 1 Looking over my introduction I hope it’s also clear that the status of entities like ECCO and their digitised books actually challenges the notion of a linear, progressive history. First, as Bonnie Mak has noted of books that have been subject to remediation, past and present versions of the same book copy exist simultaneously; in our case, as catalogue, as record, as microfilm, as digital images, as digital text, and even as a print-on-demand copy (Reference MakMak, 2014: 1516, 1519). This is echoed in the movement between past and present when we discuss ECCO’s place within wider historical contexts. Alan Liu’s comment about how we imagine and write narratives of media is suggestive: ‘the best stories of new media encounter – emergent from messy, reversible entanglements with history, socio-politics, and subjectivity – do not go from beginning to end, and so are not really stories at all’ (Reference Liu, Siemans and SchriebmanLiu, 2013: 16, my emphasis).

My aim is to speak to people interested in old books, people interested in how digitised collections of books work, and people interested in the history of how new media technologies have affected academic publishing. With such a broad reader in mind, I have tried not to assume any expert knowledge of old books, technology, or academic publishing even though this means taking the odd digression to explain a microhistory of file formats, or microfilm publishing, or some bibliographical terminology. The challenge of my history is to trace the digressive reverberation of ideas and debates that surrounded how we access and what we do with old books, and the chronological messiness of books whose lives have been subject to constant change. But this history is more than that; it is also an argument that we should better grasp the nature of something students and scholars rely upon for their understanding of eighteenth-century print and an argument for recovering, reading, and researching digital resources critically.

2 Prehistory

Cataloguing the Eighteenth Century

Eighteenth Century Collections Online is based on a microfilm collection produced between 1982 and the early 2000s. This film collection itself was based on a catalogue of books begun in 1976 called the Eighteenth-Century Short Title Catalogue, under the editorship of Robin Alston, consultant at the British Library, co-edited with Henry Snyder in the United States. From 1987 this project expanded to include material printed before 1700 and was eventually renamed the English Short Title Catalogue (ESTC). In its current form the ESTC is an online catalogue of printed material published from the fifteenth century to the end of the eighteenth century. It’s difficult to capture the sheer scale of the ESTC and its ambition: looking back over the project from 2003, Thomas Tanselle reaches for numbers: the ‘file (achieved at a cost of about 30 million dollars) consists of some 435,000 records, indicating the location of over 2,000,000 copies in 1,600 libraries around the world’ (in Snyder & Reference SmithSmith, 2003: xi). Currently it comprises more than 480,000 records from 2,000 libraries worldwide.Footnote 2 However, the project was initially confined to material printed between 1700 and 1800, and because it is this that shaped the underlying nature of ECCO, this history concentrates on the catalogue project before 1987.Footnote 3

The first discussions about the possibility of a catalogue that would cover the eighteenth century began as early as 1962 amongst members of the Bibliographical Society, and such a catalogue was perceived as the logical next step from the two Short Title Catalogues (STCs) covering material printed between 1475 and 1640 (Pollard and Redgrave) and 1641 and 1700 (Wing). However, it was from 1975 that the catalogue got the necessary backing to start. More significantly, the discussions and plans by the leading editors for the 18thC STC emphasised the necessity that it be produced as an electronic file so that it could be managed and queried by computers (Reference Alston and JanettaAlston & Janetta, 1978; Reference Crump, Snyder and SmithCrump, 2003: 54; Reference Snyder, Snyder and SmithSnyder, 2003: 21–30; Reference AlstonAlston, 2004). One of the most significant aspects of the 18thC STC is that it was deeply influenced by developments in computer-aided library cataloguing and online networked computer systems in the 1960s. The technology of machine-readable catalogue records would be an essential aspect of how metadata (that is, information about an object, distinct from the object itself) works alongside images and text of old books in digital archives and collections of the twenty-first century.

Korshin’s 1976 grant application to get the 18thC STC off the ground included the participation of Hank Epstein, the director of the Stanford University computing team (Reference AlstonAlston, 2004). Notably, the Stanford Research Institute was the first, in 1963, to demonstrate an ‘online bibliographical search system’, an ‘online full-text search system’, and systems that could be used remotely over long distances, and it was the first to use a screen display for interaction between human and computer (Reference Bourne and HahnBourne & Hahn, 2003: 14–15). In 1978 the Stanford-based Research Libraries Group developed an online networked database called the Research Libraries Information Network (RLIN).Footnote 4 In 1980 the 18thC STC at the British Library formed an important collaboration with the group, and by 1985 US and UK teams of the 18thC STC were able to edit the same file interactively online (Reference Crump, Snyder and SmithCrump, 2003: 55).

Technological solutions to managing information had been the subject of both visionary projects and practical application since the end of the nineteenth century; two particular figures are often cited as seminal thinkers in this field. One is Paul Otlet (1868–1944) who, with Henri La Fontaine, designed a huge card catalogue in the 1890s entitled the ‘Universal Bibliographic Repertory’. Otlet worked with Robert Goldschmidt on microfilming in the 1920s and 1930s, after which Otlet published a collection of his essays on the future of information science, Traité de Documentation, in 1934. The other is Vannevar Bush (1890–1974): in 1945 he proposed – but never built – a machine called ‘Memex’ that would display microfilm and that also had the capability to apply, search, and retrieve keywords about the information on the microfilms (Reference Deegan and SutherlandDeegan & Sutherland, 2009: 125–6; Reference BorsukBorsuk, 2018: 209–13).

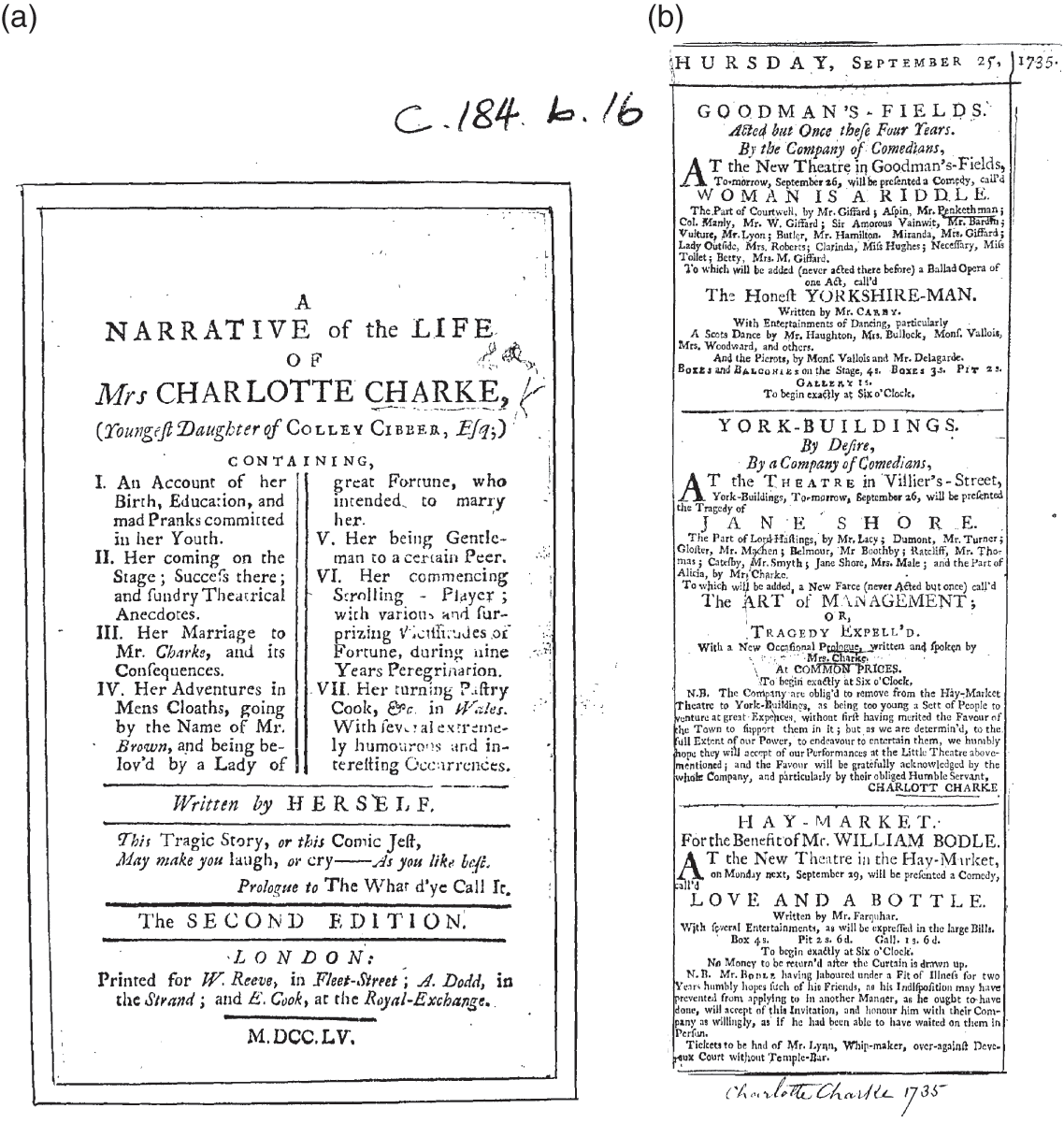

However, more significant than either of these men for the future of library information was Henriette Avram (1919–2006). Her career as one of the first computer programmers led eventually to designing library information systems at the Library of Congress in 1965. It was here that she developed the first – and what would become the standard – computerised library cataloguing system throughout the world: MAchine Readable Catalogue (MARC) (Reference Rather and WigginsRather & Wiggins, 1989). Before the advent of computer-aided catalogues libraries used card catalogues; the details about a book, for instance, would be recorded on a three-by-five card. The system for ensuring all libraries had access to and could update library catalogue records involved card-copying services and transporting duplicate card records by mail. By contrast a record that a machine can read can be disseminated and centralised much more easily. A MARC record is divided up into coded fields each of which contains a designated type of information, such as author, title, library location, subject, and many more. Avram’s pioneering work necessitated a thorough understanding of computing and the principles of bibliographical cataloguing. The MAchine Readable Catalogue, then, was not only about designing a record to be parsed by a computer; it also set the standard for bibliographical records that libraries across the world would follow. The eventual result is the kind of human-readable record you can see on the online ESTC catalogue entry for the 1789 issue of Patrick Browne’s The Civil and Natural History of Jamaica (Figure 1). Later I use this book to explore the relationship between the physical book and its record.

Figure 1 ESTC record, Patrick Browne, The Civil and Natural History of Jamaica, 1789

In fact, Alston discussed the 18thC STC with Henriette Avram in 1977 (Reference AlstonAlston, 2004). In the earliest discussions the catalogue was to adopt the principles of the seminal catalogue of early print: Pollard and Redgrave’s 1926 A Short-Title Catalogue of Books Printed in England, Scotland, & Ireland and of English Books Printed Abroad, 1475–1640. But it was clear that the 18thC STC would be a computerised catalogue and that therefore records would follow the much more detailed standards required by MARC (Reference Alston and JanettaAlston & Janetta, 1978: 24–6). In his 1981 lecture ‘Computers and Bibliography’ Alston was adamant that computing would enable more powerful scholarship. Alongside the inventions of writing and printing as technologies of knowledge, Alston noted, ‘we have now added a third (and by comparison with the former two) quite remarkable one: the storage, and virtually instant retrieval, of information about the present and the past in electro-mechanical form, and the mechanical aids available to assist in this process are now formidable – more powerful than anything we have known’ (Reference AlstonAlston, 1981a: 379).

Using machine-readable records meant that users could perform much more sophisticated searches than were possible with card catalogues (Reference ZeemanZeeman, 1980: 4). In addition, cataloguers, ‘keen to facilitate greater access to the online records, tended subconsciously (perhaps) to transcribe long titles’, enabling users to conduct complex keyword searches (Reference CrumpCrump, 1988: 5).

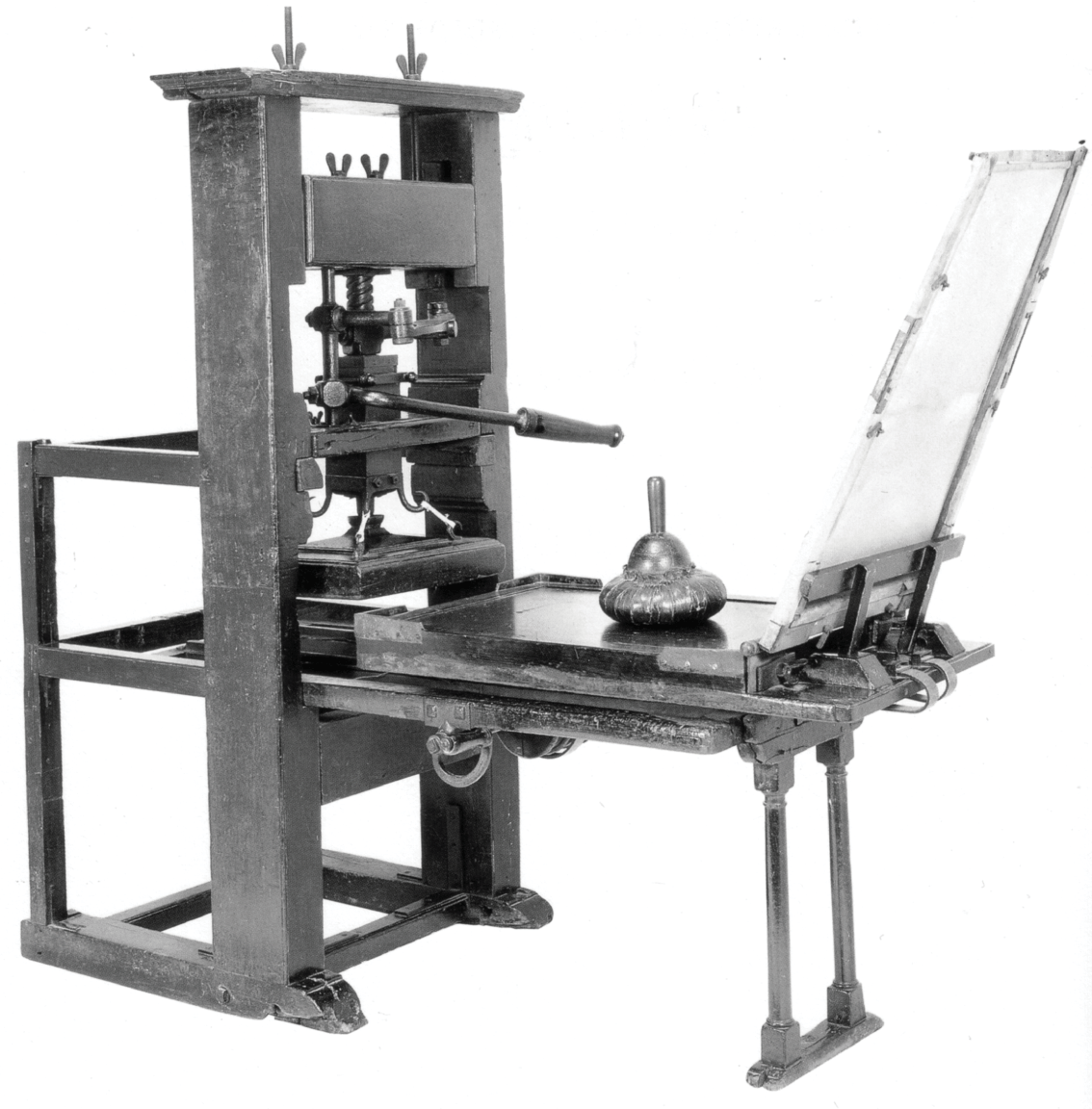

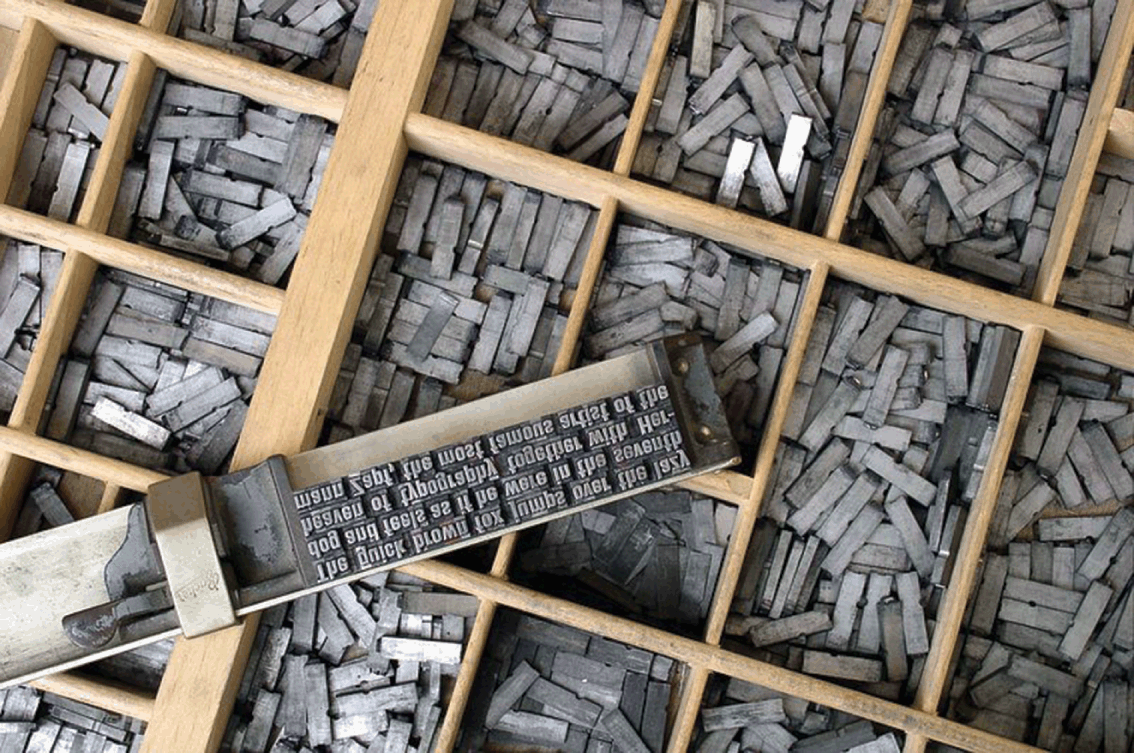

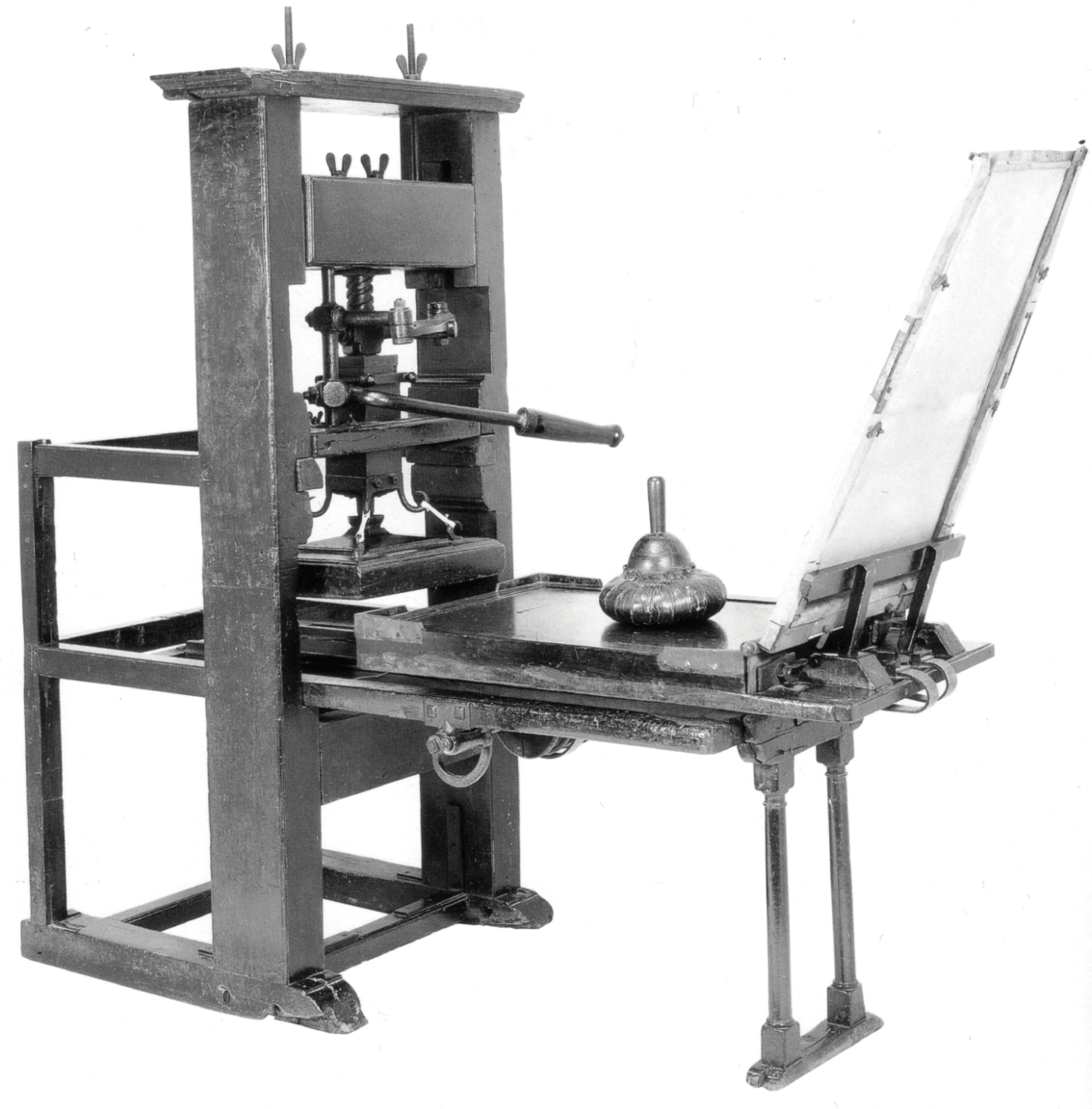

However, using MARC for the 18thC STC would not be a seamless fit. It’s worth reminding ourselves that each book in this period was handmade, the product of a series of processes which depended ‘upon a complex sequence of events, all of which were determined by humans capable of fallibility, stupidity, laziness, inconsistency, disobedience’ (Reference AlstonAlston, 1981a: 372). These included the making of paper, ink, and metal type, as well as composing type into sentences, locking those sentences into a frame, using the press to make each sheet, proofreading, and compiling it all into a book (Figures 2 & 3).

Figure 2 Metal type

Figure 3 Hand press, c.1700s

As Alston notes, ‘data must be stored within a system hospitable to eccentric evidence’ (Reference AlstonAlston, 1981a: 379). Books of the hand-press period and their records are ‘eccentric’ in the sense of being ‘Irregular, anomalous’ (OED, 6a). That is to say that – leaving aside editions and variants – the potential disparity between copies of the same book requires a method of cataloguing that can register such nonconformity. Alston emphasised the human agency involved in making books in order to highlight the limitations of the new ‘bibliographical networks’ that require ‘rigid’ cataloguing standards (Reference AlstonAlston, 1981a: 373). The implicit tension, then, was between information scientists for whom an isolated record sufficed to stand in for all copies and variations of a handmade book, and scholars for whom this would be a meaningless abstraction rather than a record of a book’s material life history – the purpose of bibliography that W. W. Greg persuasively defined (Reference Greg and FrancisGreg, 1945: 27). As Alston and Janetta put it in their outline of the 18thC STC, ‘the larger purpose, in which that record can reveal contextually its ancestry and its offspring, is for most students of cultural history of far greater significance’ (Reference Alston and Janetta1978: 29).

The sheer scale of the print output of the period meant that the 18thC STC had to delimit its scope in a variety of ways. Alston and Jannetta noted that the 18thC STC aimed to ‘describe a corpus of printing more than ten times the size’ of the two existing STCs of pre-1700 material (Reference Alston and JanettaAlston & Janetta, 1978: 24). It set generic limits to the material and would not include: engraved material; printed forms, such as licences, warrants, certificates, etc.; trade and visiting cards, tickets, invitations, currency (although advertisements were to be included); playbills and concert programmes; playing cards, games, and puzzles (Reference Alston and JanettaAlston & Janetta, 1978: 16–17). More significantly, it constructed its scope along geographic and linguistic lines. It would include:

1. All relevant items printed in the British Isles in any language;

2. All relevant items printed in Colonial America, the United States (1776–1800), and Canada in any language;

3. All relevant items printed in territories governed by Britain during any period of the eighteenth century in any language;

4. All relevant items printed wholly or partly in English, or other British vernaculars, in any part of the world.

Its Anglo-American foundation is clear here, as well as an unconscious legacy of British colonialism in the continuing idea of the British Commonwealth. For Alston the catalogue was also tied to the function of a new British national library. Conscious that the project came on the heels of the British Library’s creation after it was separated from the British Museum in 1973, he felt it was the new institution’s ‘responsibility for the production of the national bibliographic record’ (quoted in Crump, in Reference Snyder, Snyder and SmithSnyder, 2003: 49). Indeed, cataloguing is not a neutral process. Molly Hardy’s reflection on the cataloguing and marginalisation of African-American printers usefully highlights how ‘our organization of data, no matter how neutral we imagine it to be, is built out of and therefore reflects on a particular moment’ and is therefore organised ‘within a system that can never itself be neutral because its creation, like the data it captures, is a humanist endeavor’ (Reference HardyHardy, 2016).

The 18thC STC project was a bold attempt to catalogue in as much detail as possible the ‘ancestry and … offspring’ of thousands of printed works. But it is worth emphasising that a catalogue record is a representation of a book, and the catalogue as a whole was a select representation of the printed output of the period. Moreover, the way in which the electronic bibliographic data of the 18thC STC was able to capture the ‘eccentric evidence’ of hand-press books would directly shape how readers apprehended the nature of the books when they were transformed into microfilm images and, subsequently, into digital images and metadata in ECCO. In addition, the careful delineation of the scope of the 18thC STC would directly shape the content of both the microfilm collection and ECCO.

Books into Images: Microfilming in the Twentieth Century

In 1981 it was announced that the British Library Board had approved the bid from Research Publications (RPI) to ‘reproduce in microform a comprehensive library of eighteenth-century texts based on ESTC’. Robin Alston, the UK editor of the 18thC STC, was, initially at least, the director of the project.Footnote 5 Alston envisioned that the 18thC STC online catalogue would be a gateway to the filmed copy: ‘users – whether libraries or scholars – will be given a unique opportunity to acquire access to both bibliographical records and whole-text reproduction’ (Reference AlstonAlston, 1981b: 2). Alston also hoped that microfilm would preserve the fragile, rare books at the British Library from ‘the unequal struggle between book and reader’, anticipating that ‘the proposed microform library will have a tremendous impact on the total conservation effort’ (Reference AlstonAlston, 1981b: 3). Alston’s announcement emphasised access and preservation. His vision for the microfilming of the 18thC STC echoed the technological progressivism of a number of scholars, librarians, and publishers who promoted microfilm as the pre-eminent solution to the preservation of and access to rare books in the twentieth century.

Cultural demands of fidelity and accessibility have driven changes in the technology of reproduction since the 1700s and consequently have raised questions about how old books are represented as visual images (Reference McKitterickMcKitterick, 2013). Key to these issues was the application of photography to creating facsimiles. As David McKitterick argues, by the late 1800s the lure of the image had a profound effect: by ‘seeming to withdraw one of the veils between original and reproduction, replacing human intervention by chemical and mechanical processes, photography offered a new kind of reliability, and even a new kind of truth’ (Reference McKitterickMcKitterick, 2013: 127). The promise of absolute fidelity as a kind of preservation of or even substitute for the original, combined with cheapness and therefore accessibility, would not only spur the production and dissemination of photographic facsimiles of old books in the nineteenth century, but formed the driving arguments of both the twentieth-century microfilming and digitisation projects too.

What led microfilm to be the dominant reproduction technology of the twentieth century? It had been the subject of interest since the invention of photography, although early experiments were perceived as novelties. But it was the confluence of technology and sociocultural forces in 1920s and 1930s America that provided the conditions for the beginnings of the micropublishing industry.Footnote 6 One of the most significant moments for this history is the meeting in 1934 between historian Robert C. Binkley, secretary of the Joint Committee on Materials for Research (JCMR), and Eugene B. Power, of Edwards Brothers publishing: it was at this meeting that various developments in microfilming were brought together, and it was arguably the catalyst of a new publishing industry.Footnote 7 They discussed the microfilming experiments by R. H. Draeger of the US Navy, and the Recordak Corporation’s ‘Check-O-Graph’ machine that photographed cheques on a continuous 16 mm film, which enabled banks for the first time to verify cheques and to counter fraud (Reference PowerPower, 1990: 23–6). They also discussed the ‘Bibliofilm Service’ established by the librarian Claribel Barnett to copy the records of the hearings of the National Recovery and the Agricultural Adjustment Administrations (Reference BinkleyBinkley, 1936: 134). Binkley described its operation in this manner: ‘a page of print or typescript is photographically reduced twenty-three diameters in size, being copies on a strip of film ½ inch wide and one or two hundred feet long. The micro-copies are rendered legible by projection. A machine throws an enlarged image downward on a table, where the reader finds it just as legible as the original page’ (Reference Binkley and FischBinkley, 1935/Reference Binkley and Fisch1948: 183) (Figure 4).

Figure 4 Microfilming public records, New Jersey, 1937

Hearing about these projects in late 1934 provided Power with his notion of a radical new way of publishing:

If the film in [the] projector were a positive instead of a negative, it would be projected onto the screen black-on-white, reading exactly like the page of a book. I could photograph a page and print a positive-film copy for the customer, keeping the negative in my file to be duplicated over and over again in filling future requests. There would be no need, as there was in traditional publishing, to maintain a warehouse inventory of finished copies or to rephotograph the original material. Each copy made would be to fill a specific order. I could keep a vault full of negatives; therefore, no title need ever go out of print.

Power had already been photographing old books as early as 1931, but systems like Draeger’s or Recordak’s could do this on a bigger scale: the technology of bulk photography, the mass storage of filmed documents, and the ability to print the document – or book – when required would ‘make possible the production of a single, readable book at a low unit cost’ (Reference PowerPower, 1990: 17). It sounds uncannily like digital print-on-demand books of the early twenty-first century available via Amazon. Power began his own microfilm project based on the books catalogued by Pollard and Redgrave’s STC and started operations at the British Museum in 1935. After a number of US libraries took up a subscription service for these films, in 1938 Power left Edwards Brothers and formed University Microfilms Incorporated (UMI), arguably the most successful international microfilm publisher of scholarly materials (Reference PowerPower, 1990: 28–9, 32–5). It is perhaps significant for the history of academic publishing that the large-scale reproduction of old books was undertaken by a commercial business. As we will see in the chapter called ‘Interfacing’, the effect of commercial proprietorship over scholarly materials becomes a significant issue in relation to digitisation projects.

The dream that technology could offer more universal access to knowledge was perhaps most stirringly articulated by H. G. Wells in his 1937 essay ‘The Idea of a Permanent World Encyclopedia’:

There is no practical obstacle whatever now to the creation of an efficient index to all human knowledge, ideas and achievements, to the creation, that is, of a complete planetary memory for all mankind. And not simply an index; the direct reproduction of the thing itself can be summoned to any properly prepared spot. A microfilm … can be duplicated from the records and sent anywhere, and thrown enlarged upon the screen so that the student may study it in every detail.

However, Reference Binkley and FischBinkley’s 1935 paper ‘New Tools for Men of Letters’ (based on an earlier memorandum of 1934) is even more prescient. It is perhaps the earliest and fullest published reflection on access, scholarship, and new media technology. For both Power and Binkley the challenge was to figure out how to reproduce and distribute low-demand works and difficult-to-access scholarly materials:

[T]he Western scholar’s problem is not to get hold of the books that everyone else has read or is reading but rather to procure materials that hardly anyone else would think of looking at … Printing technique, scholarly activities, and library funds have increased the amount of available material at a tremendous rate, but widening interests and the three centuries’ accumulation of out-of-print titles have increased the number of desired but inaccessible books at an even greater rate.

While ‘New Tools’ considers a variety of reproduction technologies, microfilm is proposed as the best solution because it ‘offers the reader a book production system more elastic than anything he has had since the fifteenth century; it will respond to the demand for a unique copy, regardless of other market prospects. So the scholar in a small town can have resources of great metropolitan libraries at his disposal’ (Reference Binkley and FischBinkley, 1935/Reference Binkley and Fisch1948: 184).

This last remark about the ‘small town’ scholar is uniquely Binkley’s. His aim in harnessing the new technology of microfilm is to extend access: ‘Let there be included among our objectives’, he argues, ‘not only a bathroom in every home and a car in every garage but a scholar in every schoolhouse and a man of letters in every town. Towards this end technology offers new devices and points the way’ (Reference Binkley and FischBinkley, 1935/Reference Binkley and Fisch1948: 197).

In addition to access, issues of conservation and preservation drove the tremendous expansion of microfilming throughout the twentieth century, such as preserving the rare materials endangered by the wars in Europe (Reference PowerPower, 1990: 117, 125–7), preserving newspapers at the British Museum from the late 1940s (Reference HarrisHarris, 1998: 601–2), and, indeed, conserving the books in the British Library catalogued by the 18thC STC (Reference AlstonAlston, 1981b: 3). In 1988 Patricia M. Battin argued to a US committee that the fragility of books means that ‘it is the record of our shared symbolic code itself that is decaying and endangered. We cannot expect the societal cohesiveness that comes from a symbolic code if the record that comprises it is lost to us’ (Reference BattinBattin, 1988). Technology, it is implied, can save the texts of Western culture. In 2001 there was a burst of public debate about the effects of a mass microfilming project, spurred by Nicholson Baker’s provocative attack on libraries’ disposal of books and newspapers. Baker’s aim was to expose the ostensible fragility and brittleness of books and newspapers as a myth and to reveal the libraries’ microfilming (and digitisation) programmes as a kind of technological zealotry (Reference BakerBaker, 2001).Footnote 8

But what was being preserved: the information (the ideas and words) or the material medium (books, documents)? In this light, Robert Binkley’s conception of the scholar’s attitude to the nature of their material is striking. ‘All the documents’ which the scholar uses, he states,

are for him ‘materials for research,’ He does not care whether they are printed or typewritten or in manuscript form, whether durable or perishable, whether original or photostat, so long as they are legible. Whether the edition is large or small, whether the library buys, begs, or borrows the material makes no difference to him so long as he can have it in hand when he wants it.

In this conception the medium of reproduction does not affect the content or meaning of the document. The physical nature of the document or book is of little importance: access is everything. At the same time as this rush to microfilm, others were alarmed by what might be lost by the new media technologies of reproduction (Reference GitelmanGitelman, 2014: 63–4). A technology that can reproduce ad infinitum identical images of the original object in the name of accessibility poses questions about the relationship between original and copy. This is the burden of Walter Benjamin’s 1936 essay ‘The Work of Art in the Age of Mechanical Reproduction’. The essay is largely a response to the increasing popularity of film, but it argues that photography has precipitated ‘the most profound change’ on the authenticity of the work of art. This, he says

is the essence of all that is transmissible from its beginning, ranging from its substantive duration to its testimony to the history which it has experienced. Since the historical testimony rests on the authenticity, the former, too, is jeopardized by reproduction when substantive duration ceases to matter … that which withers in the age of mechanical reproduction is the aura of the work of art. This is a symptomatic process whose significance points beyond the realm of art. One might generalize by saying: the technique of reproduction detaches the reproduced object from the domain of tradition. By making many reproductions it substitutes a plurality of copies for a unique existence.

For scholars of the book the processes of reproduction potentially pose a similar danger to the ‘historical testimony’ of the ‘unique’ object of the book. In 1941 – at the same time UMI was busily filming at the British Museum – bibliographer William A. Jackson had been asked to comment on the technology of microfilm which had been ‘contagiously expounded.’ He enumerated the failures of two-dimensional black-and-white film shot in one plane to capture variations in the tone, colour, or quality of paper, to prevent the introduction of stray marks and blots, and finally, to reproduce the ‘intangible’ features of a book perceptible only by touch (Reference JacksonJackson, 1941: 281, 285). In his 2001 article ‘Not the Real Thing’ Thomas G. Tanselle cited the example of the American preservation project under Battin, pointing out that this project of microfilming ‘was not designed to save books but rather the texts in them’. Tanselle argued that such projects suffer from a ‘confusion surrounding the relation between books (physical objects) and verbal works (texts made of language)’ and suggested a ‘pernicious’ perception ‘that a text can be transferred without loss from one object … to another’ (Reference TanselleTanselle, 2001).

We’ve come to a striking set of parallel worlds: on one hand the scholar-technologists like Power and Binkley, and on the other theorists like Benjamin and scholar-bibliographers like Jackson and Tanselle. Binkley and Power clearly had in mind a method of disseminating costly or hard-to-access scholarly documents. Their aim was not essentially bibliographic – the concerns of historians of the book like Jackson and Tanselle – but ‘informatic’ (Reference GitelmanGitelman, 2014: 63). One question this book pursues is this: how would surrogates such as filmed books be used? As Deegan and Sutherland put it, ‘Under what circumstances or what purposes is a facsimile a satisfactory surrogate for the object itself? … Are we preserving features of the objects themselves or only the information they contain?’ (Reference Deegan and SutherlandDeegan & Sutherland, 2009: 157–8).

The Eighteenth Century: Microfilming the Catalogue

Research Publications was awarded the British Library contract for filming the 18thC STC. Robin Alston had been responsible for assessing bids for the filming in late 1980 from Chadwyck-Healey Ltd., University Microfilms International (UMI), Newspaper Archive Developments Ltd., and RPI (Reference AlstonAlston, 2004: n. 119, 120, 121).Footnote 9 Samuel Freedman founded RPI in 1966, in Meckler’s words, ‘for the express purpose of micropublishing significant archives and documents’. Significantly for Alston’s decision, RPI’s series Goldsmiths’-Kress Library of Economic Literature and American Fiction, 1774–1910 included work with library collections and catalogues that comprised eighteenth-century books (Reference MecklerMeckler, 1982: 96, 93).

Alston estimated that the filming of the 18thC STC would ‘take fifteen years to complete’ (Reference AlstonAlston, 1981b: 2). In fact, filming took approximately twenty-eight years between 1982 and 2010 when the programme stopped, and even then, did not manage to film everything in the ongoing and massive cataloguing programme of what was by then called the English Short Title Catalogue (Reference De Mowbrayde Mowbray, 2020a). Alston also envisaged that the bulk of the filming programme would be based on British Library holdings, and so the operation required the secure transportation of hundreds of thousands of eighteenth-century books to and from the British Library in London to RPI’s UK offices in Reading, where filming was monitored by visiting British Library librarians (Reference AlstonAlston, 2004; Reference Bankoski and De MowbrayBankoski & de Mowbray, 2019). The eventual programme would entail filming at many libraries outside the United Kingdom.

The collection was arranged into subject headings and comprised eight categories. These subject headings may well have had their origin in Alston’s experiments with the 18thC STC’s initial online interface at the British Library, which he felt could ‘help in the creation of subject packages which will form the basis of the RPI program to microfilm the substantive texts in ESTC’ (Reference AlstonAlston, 2004). The legacy of this arrangement can be seen in the interfaces of the ECCO and Jisc Historical Texts collections, which can both still be searched by these very same categories:

Religion and Philosophy

Literature and Language

History and Geography

Fine Arts and Antiquities

Social Science

Science, Technology, and Medicine

Law (Criminal, National, and International)

General Reference and Miscellaneous

Each reel of 35 mm film was devoted to books from one category. Research Publications sold the collection either in ‘units’, each comprising thirty-five reels, or by subject heading, or the complete collection. In 1994 the complete collection, at that point comprising 178 units, cost £341,760; each unit of thirty-five reels cost £1,920. Individual reels could be bought for £55 or £70 each depending on the total quantity ordered at one time. Given this was a major investment for most libraries, RPI offered ways of ameliorating up-front costs: libraries who took out a standing order of a minimum of one unit per year could obtain a discount and customers could also take out an annual subscription for future units (Research Publications, 1994).

The first films were produced in 1982. While filming rates varied depending on the site, by 1986 it was estimated that books were being filmed at a rate of around 50,000 pages per week at the British Library (Reference De MowbrayDe Mowbray, 2019b). By 1993 RPI had published 6,230 reels and was producing ‘16 units a year’ – that is, 560 reels (Research Publications, 1994). By 2007 the collection was ‘expected to contain over 200,000 items’, and by the time filming stopped in 2010 the collection amounted to 18,094 reels.Footnote 10

From the beginning the scale of the microfilming programme was a challenge. Given the huge amount of printed material in the period, Alston envisioned that the microfilming of the 18thC STC would be different from UMI’s pre-1700 collection, Early English Books, in which ‘no selection was involved, and every discrete item benefitting from an entry number was, and still is being filmed. This approach could not possibly have been adopted for ESTC: indeed, one pauses to wonder whether libraries (or scholars for that matter) have been significantly helped by the provision of whole-text filming for variants, re-impressions and re-issues, especially when set against the ever-rising costs of production’ (Reference AlstonAlston, 1981b: 2).

Alston proposed that the 18thC STC programme should be ‘selective’, in contrast to the apparent bibliographical promiscuity of UMI’s microfilming (Reference AlstonAlston, 1981b: 2). This policy would change, but at least with the first phase of filming, this vision seems to have been partly carried out. The ‘Research Tools’ section of ECCO’s original interface outlines this part of its microfilm history:

Guided by the interests of those studying the texts, items initially included were limited to first and significant editions of each title. Exceptions to this rule are the works of 28 major authors, all of whose editions are included where available:

Addison, Bentham, Bishop Berkeley, Boswell, Burke, Burns, Congreve, Defoe, Jonathan Edwards, Fielding, Franklin, Garrick, Gibbon, Goldsmith, Hume, Johnson, Paine, Pope, Reynolds, Richardson, Bolingbroke, Sheridan, Adam Smith, Smollett, Steele, Sterne, Swift and Wesley.Footnote 11

The selection is also an illuminating reflection of who was considered worthy of having all editions of their work microfilmed: in 1982 the scholarly perception of the canon of eighteenth-century ‘major authors’ included no women writers or persons of colour.

In May 1987 RPI announced in a newsletter to subscribers that it would be expanding its filming programme ‘to enrich the potential for research and study offered by the microfilm collection’. The announcement gives a sense of the scale of the new filming programme, expanding enormously beyond Alston’s initial vision: from 1988 ‘the selection criteria will be extended to include all distinct editions of a work insofar as the ESTC bibliographical record makes possible’ (Research Publications, 1987). A 1994 brochure added that the collection also includes ‘variant and pirated editions’ (Research Publications, 1994).

The 1987 announcement is also fascinating since it reveals how RPI identified and responded to a particular shift in scholarship:

Today there is clearly a growing interest on the part of scholars in a number of fields concerning the impact of the total output of the printing press on social history. At least five international congresses on the history of the book and printing have been held recently in places as far apart as Athens, Greece and Boston, U.S.A. Centres for the study of the book have been established in such places as Wolfenbuttel, Germany, Washington, D.C., U.S.A. and Worcester, U.S.A. Distinguished scholars everywhere are making more and more of an effort to understand this comparatively neglected area of history. It is generally recognized that in order to do this, it is necessary to have access to the actual books that were produced through printing.

Research Publications strategically positioned its decision in relation to the rise of studies in the history of the book, arguably attributable to the influence of the Histoire du Livre of the 1980s led by Roger Chartier and Robert Darnton. Research Publications’ argument rests on the implication that bibliographical records are insufficient on their own: you need access to the ‘actual books’. This is perfectly right, of course; certain aspects of book history and bibliography require the analysis of the physical book. However, RPI’s announcement was artful marketing: while the collection certainly enabled access to a version of a book, the collection could not offer access to a physical book.

Research Publications’ 1994 brochure draws on a similar discourse:

Here is the opportunity to study original primary source material from around the world without the time and expense of travel. We have preserved unique documents and rare books in a time-saving, cost-effective format. Now you can have easy access to the printed books, pamphlets and documents that were actually used during the eighteenth century.

As in Power’s and Binkley’s visions of the 1930s, access to rare research documents was the defining advantage to microfilm. Research Publications’ brochure echoes this affordance, claiming it ‘will bring a comprehensive rare book archive to your library’, as if delivering a research library to your doorstep. Like it had in its newsletter of 1987, RPI trumpets the collection’s ability to aid the scholar interested in the history of the book and in bibliography: ‘Literary scholars can make textual comparisons between variant editions of many works’ and can ‘investigate the mysteries of false imprints or examine the printing history of a particular work’ (Research Publications, 1994). This is true to an extent, but as we will see in ‘Bookishness’, some mysteries need more than a flat, two-dimensional representation of a book to be unlocked.

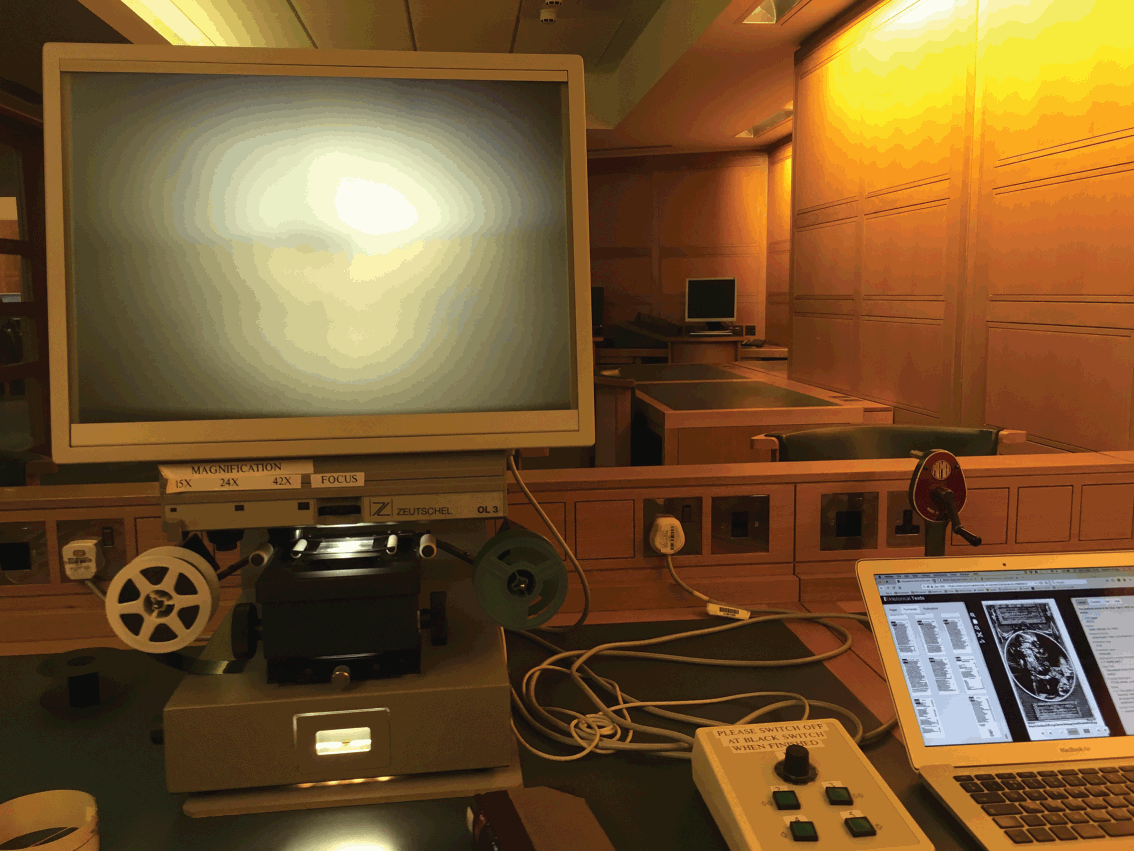

Access, however, is inextricable from the experience of how a particular technology of remediation is used, and there was a glaring disparity between the commercial praise of the medium and the physical experience of using the technology. It involved fiddling with reels and threading film, then shuttling through the items on each film to find the book you wanted (by hand-crank or motor power); even then, the image could often be marred by poor lighting. I feel the pain of a reader in 1940 who remarked, ‘reading by means of a mechanical contrivance is so new and so unprecedented in the entire history of writing and printing that it introduces, in addition to its real and obvious difficulties, those of a psychological nature on the part of the prospective reader’ (quoted in Reference MecklerMeckler, 1982: 60). As an interface to old books the microfilm reader was not user-friendly.

Figure 5 Microfilm reader and author’s laptop

Against the reality of microfilm use, the methods of reproduction promoted by libraries and publishers conjured an image of an easily and immediately navigable landscape of books and information. This is nicely captured in a 1979 study of microform publishing that ends with a vision of the future in which a car would have a microfiche viewer instead of using printed road maps, presumably using one while stationary (Reference Ashby and CampbellAshby & Campbell, 1979: 170–1). The future is here, these studies seem to be saying: witness the title of a study published in 2000 – Micrographics: Technology for the 21st Century (Reference SaffedySaffedy, 2000). Saffedy’s book, to be fair, concludes by considering the impact of digitisation and computers. He proposes a system whereby the use of microfilm collections might be synthesised with ‘electronic document imaging systems’ and ‘database management software’. Perhaps unconsciously echoing Vannevar Bush’s ‘Memex’, Saffedy imagines ‘retrieval stations’ that would comprise a mix of computers for images and database searches and microfilm reader-printers (Reference SaffedySaffedy, 2000: 120). Such an experience was clearly in the mind of Robin Alston when, in his announcement for the microfilming of the 18thC STC, he anticipated that the users could navigate between online access to electronic records and microfilmed images of eighteenth-century books (Reference AlstonAlston, 1981b: 2). His hopes echo the kind of utopianism of Binkley: that technology would enable not only access to rare texts, but also a way of practising scholarship. But how might this practice work when it comes to the study of an actual book from the eighteenth century, navigating between book, catalogue record, and filmed and digitised image?

3 Bookishness

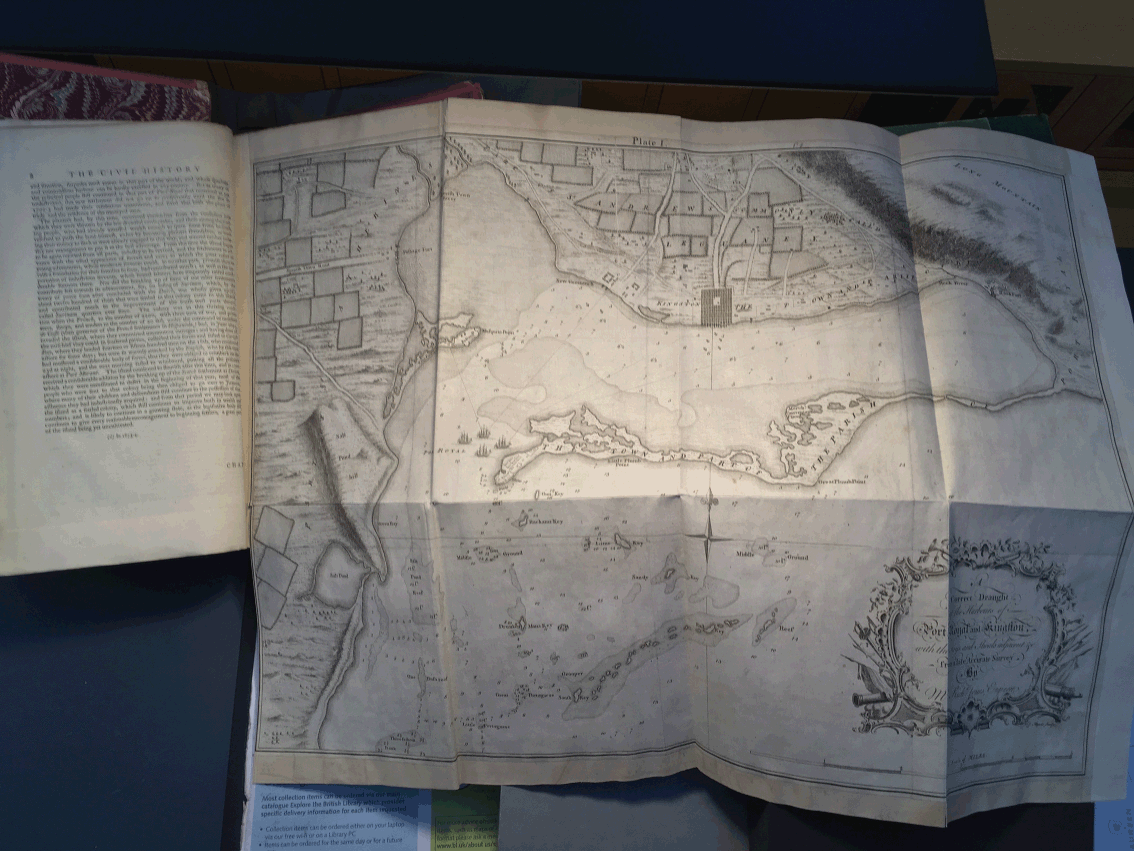

When I was examining the 1756 copy of Patrick Browne’s The Civil and Natural History of Jamaica in the British Library as part of my case study research, I was struck by the sheer physicality of this book. It was big and heavy and the paper was thick – I had to heave it onto the table, and I could feel the stiffness of the pages as I turned them. Yet despite the book’s heft, I had to be quite careful in opening up its large map of Jamaica (Figure 6). While we sometimes forget this, we always encounter the printed book physically, but this book’s shape imposed itself on me, reminding me that reading is a material experience, ‘an engagement with the body’, as Roger Chartier put it (quoted in Reference NunbergNunberg, 1993: 17). Partly for fun, but partly because I couldn’t find a suitable single word to describe this materiality, I use the term ‘bookishness’.Footnote 12

Figure 6 Opened map in The Civil and Natural History of Jamaica, 1756

Thinking about bookishness enables us to explore how the reproduction of an old book as a record, or as a microfilm, or as digital images radically alters the possibilities of scholarship but also amplifies the limits by which we can apprehend this materiality, this bookishness. This section in short asks: what are the traces of bookishness in a catalogue record and a digital image?

Book, Record, and Film

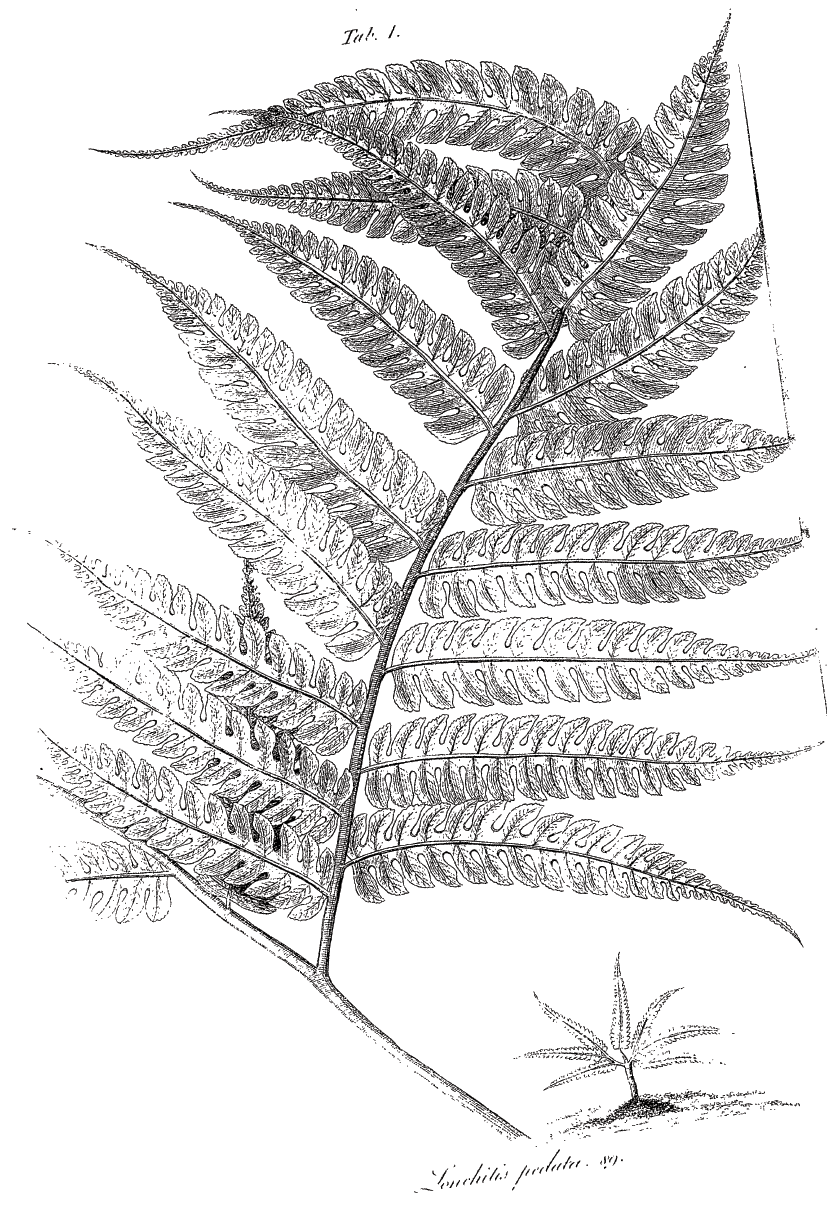

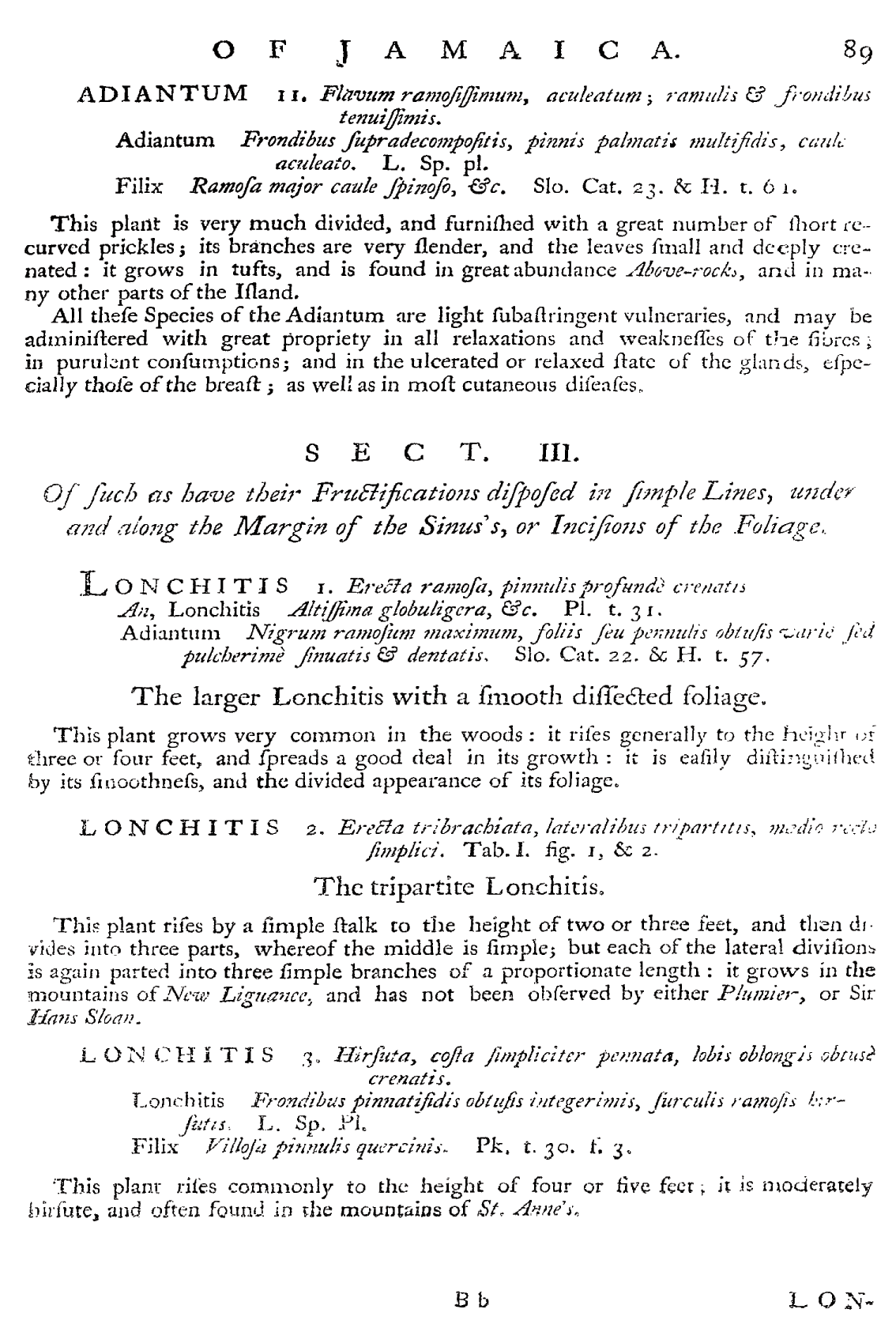

In 2002 a camera operator from Primary Source Microfilms arrived at the Kenneth Spencer Library of Kansas University to begin filming books, a process which took place from late May 2002 to early February 2003 (Reference CookCook, 2019). While there, the operator filmed a 1789 copy of Patrick Browne’s The Civil and Natural History of Jamaica. This is the story of that book and how it became a catalogue record and then photographic images, and eventually digitised in ECCO. The story aims to raise some important questions about how we read the relationship between a book’s record – or its bibliographical data, to be more precise – and its digital page images. These questions illuminate the cultural and technological contexts we’ve examined in our prehistory of ECCO.

The Civil and Natural History of Jamaica is famous for being the first book in print by an English speaker to use the classification system created by the pioneering botanist and zoologist Carl Linnaeus (1707–78). Its existence is recorded in the English Short Title Catalogue and represented by two versions of the book: the first edition published in 1756 (ESTC T89757) and a reissue in 1789 (ESTC T89758).Footnote 13 Both the 1756 and 1789 issues were filmed as part of the expanded programme from 1988: the presence of both versions in the microfilm collection was probably assured by RPI’s expanded rational to ‘include all distinct editions of a work’ and ‘all variants’ (Research Publications, 1987, 1994). The 1756 issue, according to the ESTC, was not published on film until 2005, and was a copy held in the British Library (shelfmark 459.c.4). However, the first copy of Browne’s History that was filmed was the 1789 copy held in the Spencer Library (call number Linnaeana G13), and it was filmed and published in 2002. The note accompanying the book on the microfilm has a ‘Batch date’ of 10 June 2001, suggesting that the filming of the copy was planned well in advance. Without records of filming operations, it is difficult to say why this copy was filmed before the British Library copy. Possibly of interest was the library’s collection of ‘Linnaeana’ (works associated with Carl Linnaeus) and the fact that the Spencer Library had at that point one of the largest collections of eighteenth-century material in the United States, and so it was probable that this library was made a specific target for filming and that Browne’s History was swept up in the filming of this collection.Footnote 14

However, the relationship between bibliographical records, this particular book copy, and its filmed and digitised copies illustrates a number of significant issues about how we read what the ESTC catalogue calls ‘Surrogates’. In the ESTC entry for the 1756 edition (T89757) the ‘General note’ states that a ‘variant has pp. 1–12 revised and reset’, yet this clearly relates to the 1789 reissue. When it comes to the physical description in the ESTC catalogue for the 1789 reissue (T89758) there are a number of interesting features. This is the full description:

Physical description.

[6], viii, 503, [47]p., plates: map; 2°.Footnote 15

General note.

With a half-title and four additional indexes. With a list of subscribers. A reissue of the sheets of the 1756 edition, with a new titlepage.Footnote 16

E. C. Nelson argues that, according to his research, the ESTC records are ‘incorrect’ (Reference NelsonNelson, 1997: 333, n. 9). It is true that the description of the 1789 issue does not mention features held in common by all the copies of the 1789 issue, such as the revised and reset pages, that both the 1756 and 1789 issues have a misnumbered page, or the reversed engravings.Footnote 17 But Nelson misunderstands the nature of a bibliographical description in a catalogue like the ESTC. More particularly, it reveals the challenges the ‘eccentric evidence’ of the unique or individual book copy presents to a universal cataloguing system like MARC (Reference AlstonAlston, 1981a: 379).

To illustrate this eccentricity, there are variations between copies of the 1789 issue itself, which a quick comparison with two other copies available via Google Books reveals. For example, the ESTC states that this issue has a ‘half-title’, but this element is not present in all the copies. In addition, not all copies have the map of Jamaica, and considerable differences appear in the order and presence of the preliminary material.

| Source location | Prelims (in the order that they appear) | Other features and pagination statement |

|---|---|---|

| British Library |

|

|

|

|

|

|

|

|

|

|

|

These variations amongst copies of the same issue of this book bear out Sarah Werner’s remarks about physical descriptions in catalogues, in that they relate to an ideal copy: ‘the imagined version of the book that is most perfect and complete, regardless of whether the library’s copy matches it or not’ (Reference WernerWerner, 2019: 121). In other words, no catalogue could possibly account for all the variations of all book copies held in the world. In this way the ESTC’s description of T89758 is a palimpsest of all the book copies consulted. It has everything: the half-title, the list of subscribers, and the map. To press this point home, not only is the half-title missing from the copy held in the Spencer Library, but the engravings are unusually placed. Rather than being arranged in a numbered sequence towards the end of the book, which is how other copies of T89758 are arranged, in this copy the engravings are scattered throughout the book.

This alone might be enough to make the point about the differences between a record and a unique book copy. However, the book’s appearance on both microfilm and ECCO even more strikingly opens up this gap between a record and a book’s representation in images: nearly every page that has an engraving and an opposite page of text is duplicated. In every instance of duplication there is a faint version of the page and a more defined one. In these page images the first image was also filmed at a skewed angle (Figures 7–10).

Figure 7 ECCO. Image no. 106

Figure 8 ECCO. Image no. 107

Figure 9 ECCO. Image no. 108

Figure 10 ECCO. Image no. 109

Certainly at the point of filming, moving from text to engraved images must have presented a problem in maintaining consistent image quality and contrast. In addition, books of natural history, which often incorporated illustrations and maps, some of which might have been printed on a fold-out page, pose challenges to any camera operator. In this copy our camera operator had three tries at filming the fold-out map of Jamaica (two are partial images, and one manages to fit in the whole map). Less obviously, but perhaps more important in reading the text or if one is relying on ‘all text’ searching, is the fact that six pages are missing.Footnote 18 In any case it is clear our camera operator was, in the words of one Kansas University librarian, ‘not having a good day’ (Reference CookCook, 2019). The bad day for this one operator was then replicated in the book’s digitisation.Footnote 19

This finally leads us to consider the refracted relationship between a bibliographical record and an image of a specific book copy. Alston hoped that readers could navigate between these and, to an extent, this was realised in ECCO interfaces where users can pull up bibliographic metadata alongside page images. However, there is an essential tension between the record and the book. Using either the record or the filmed image to read a particular significance into the order of the preliminary material, or into the relationship between the place of the engraved images and the corresponding text, is to confuse the ideal with the material, or the general record with the particular book that was filmed and digitised for ECCO. Michael Gavin has argued that such warnings from scholars are projections of ‘(presumably superseded) naiveté onto the digital project they wished to critique’ (Reference GavinGavin, 2019: 74, n. 5). Gavin, however, presumes a bibliographical knowledge that many humanities students do not possess. Gale never made any claim that individual book images represent the messy, material vagaries of every hand-press book copy in their collections (though EEBO did at one point; see Reference GaddGadd, 2009). However, without some understanding of cataloguing systems, the book in the hand-press era, or the processes involved in the remediation of a book copy, that pitfall exists for the unschooled user.

The aim of this analysis is not to emphasise failings on the part of ECCO or the ESTC or even students and scholars. Instead, this reading of one book from the eighteenth century helps us to understand how human, cultural, and technological factors affect and transform our apprehension of the physical book itself, its bookishness.

Bookishness and the Digital Image

This is a good moment to exemplify how the processes of microfilming interact with the next stage in our books’ lives: the digitisation of the microfilm collection. How we see and conceive these books as digital images in ECCO is to a significant degree dependent on decisions made by RPI/Primary Source Media during the filming programme and by Gale during the digitisation of the film images: these in turn shape our apprehension of these books’ bookishness.

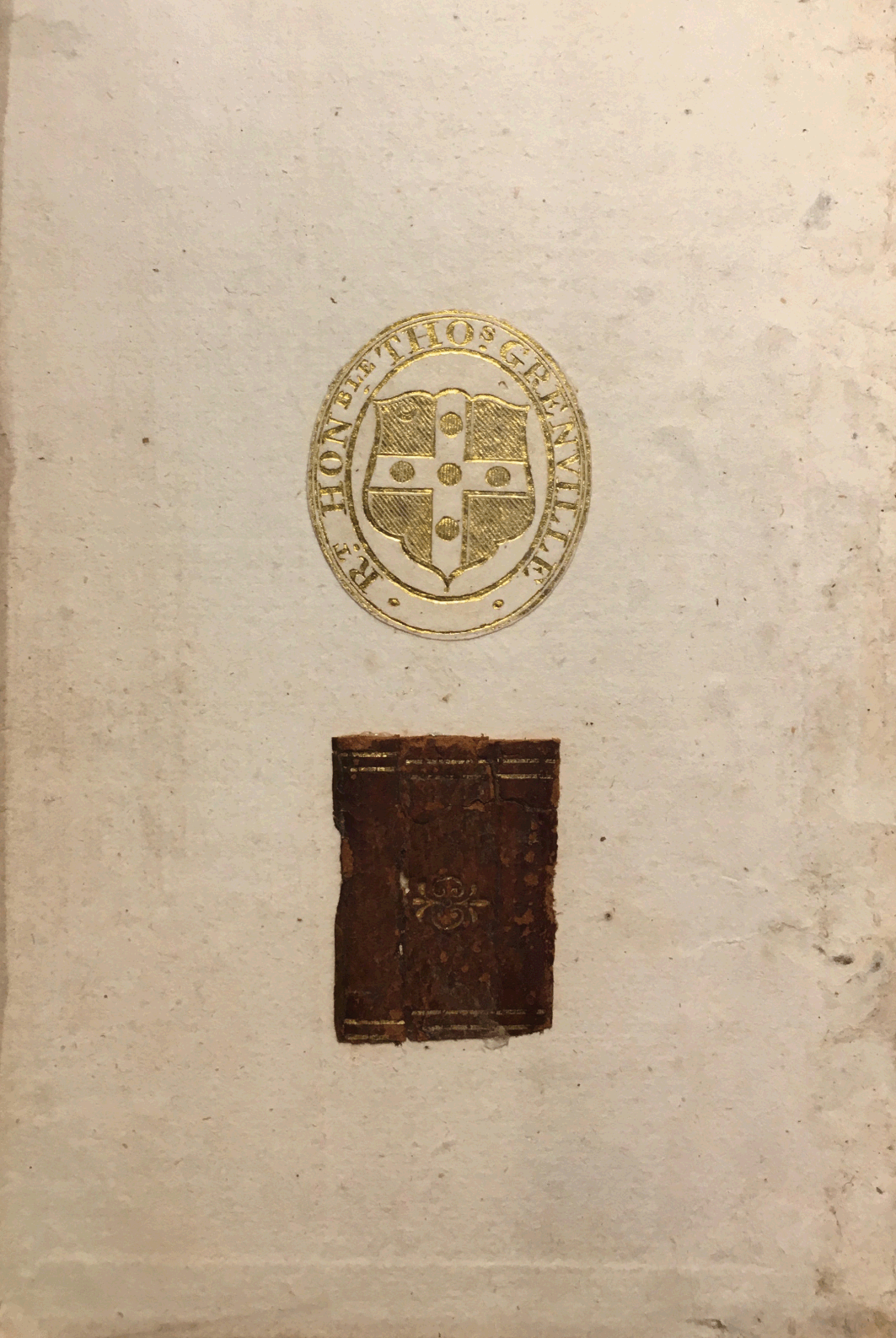

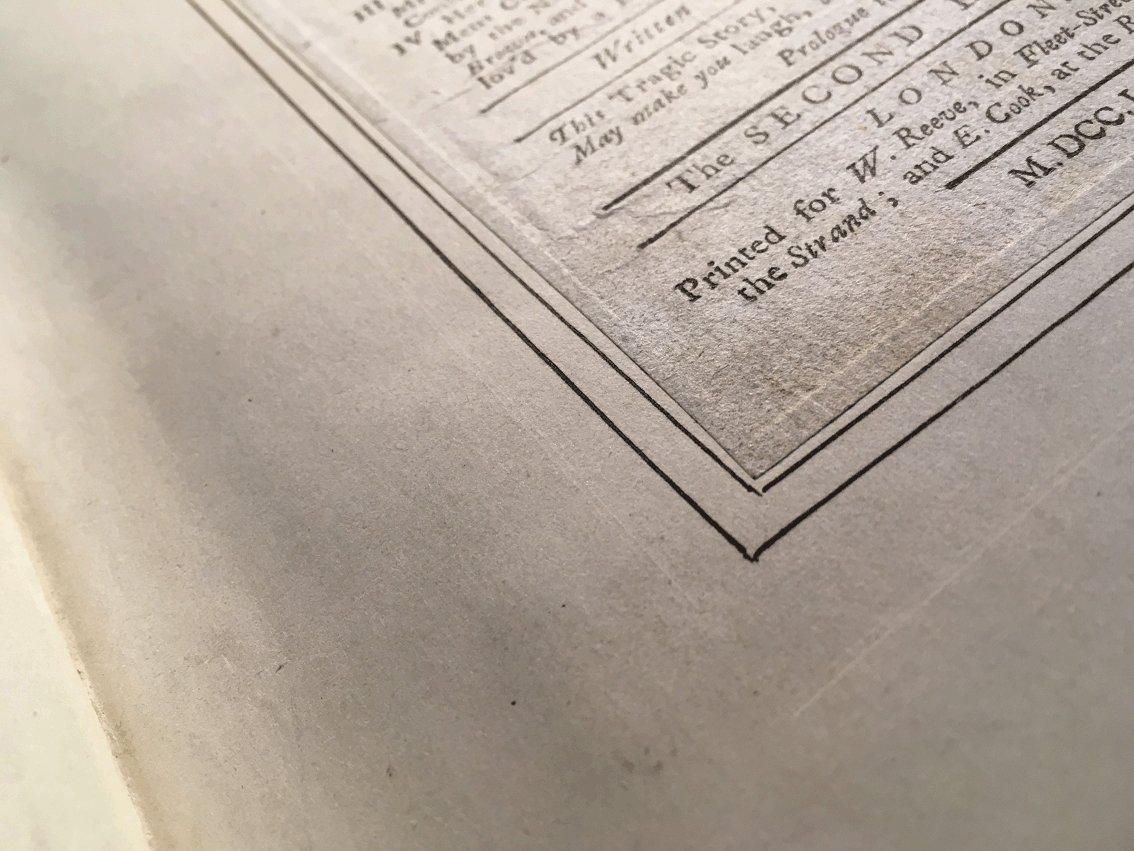

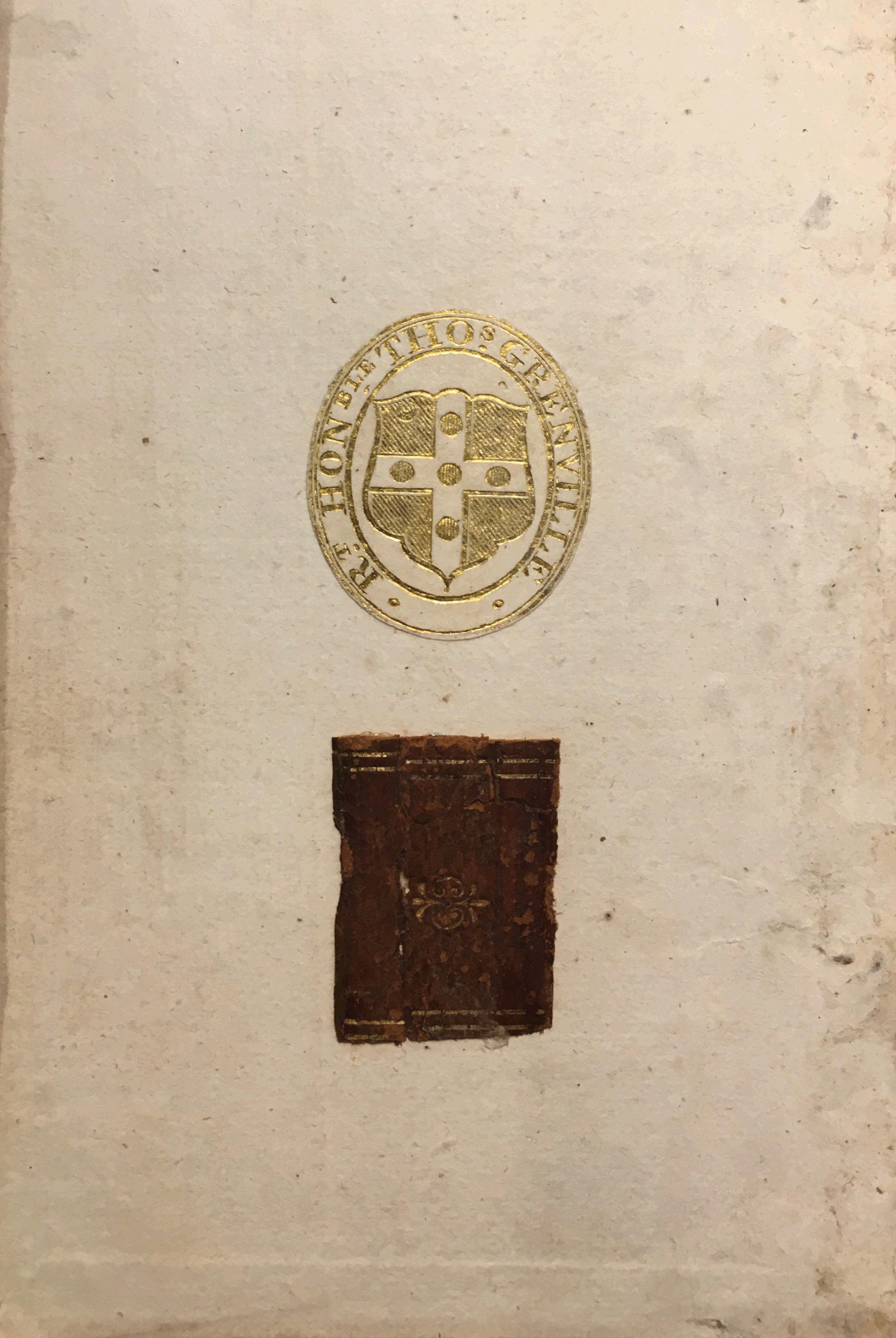

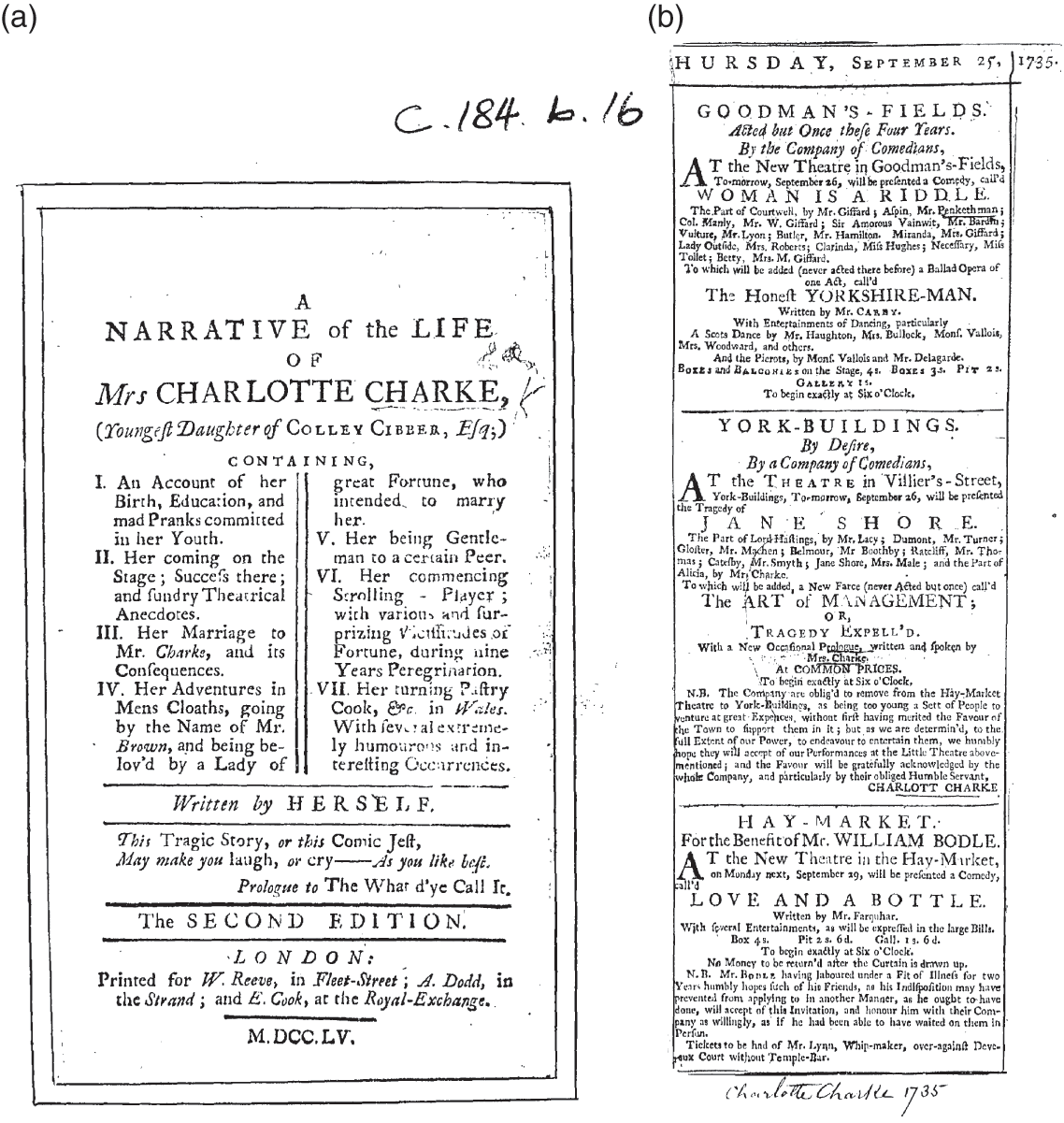

One decision made during the filming of the 18thC STC has profound consequences for understanding these books’ bookishness: no bindings or endleaves were filmed (the blank sheets of paper before and after the text). In the hand-press period these were not part of the printing process, but were the job of the binder, who would usually take direction from the book’s buyer as to the type and quality of the binding. I focus here on one part of the binding, the paste-down (where one endpaper was glued to the underside of the outer binding), because the paste-down is more than merely utilitarian and can be a space for aesthetic decoration (Reference Berger, Duncan and SmythBerger, 2019). Our example is from Charlotte Charke’s autobiography, The Narrative of Mrs Charlotte Charke, and the source copy for ECCO’s first edition of 1755 (ESTC: T68299) held in the British Library (shelfmark G.14246). On the paste-down of the Narrative we can see the owner’s stamp as well as a curious object that seems constructed from scraps of leather bookbinding and looks like a tiny book or the spines of three books on a shelf (Figure 11).Footnote 20

Figure 11 Pastedown, Narrative

The copy belonged to Sir Thomas Grenville; if you look him up you’ll find out that this politician was also a book collector, which might explain the lovely detail of a miniature book. Of course, books were sometimes bound or rebound long after the printing of the books, but nevertheless bindings and endleaves can give us important clues to significant aspects of bookishness, such as its readership or owners (‘provenance’), as well as clues to the context of its production, dissemination, and use (Reference PearsonPearson, 2008: 93–159; Reference Berger, Duncan and SmythBerger, 2019; Reference WernerWerner, 2019: 71–8, 137–8).

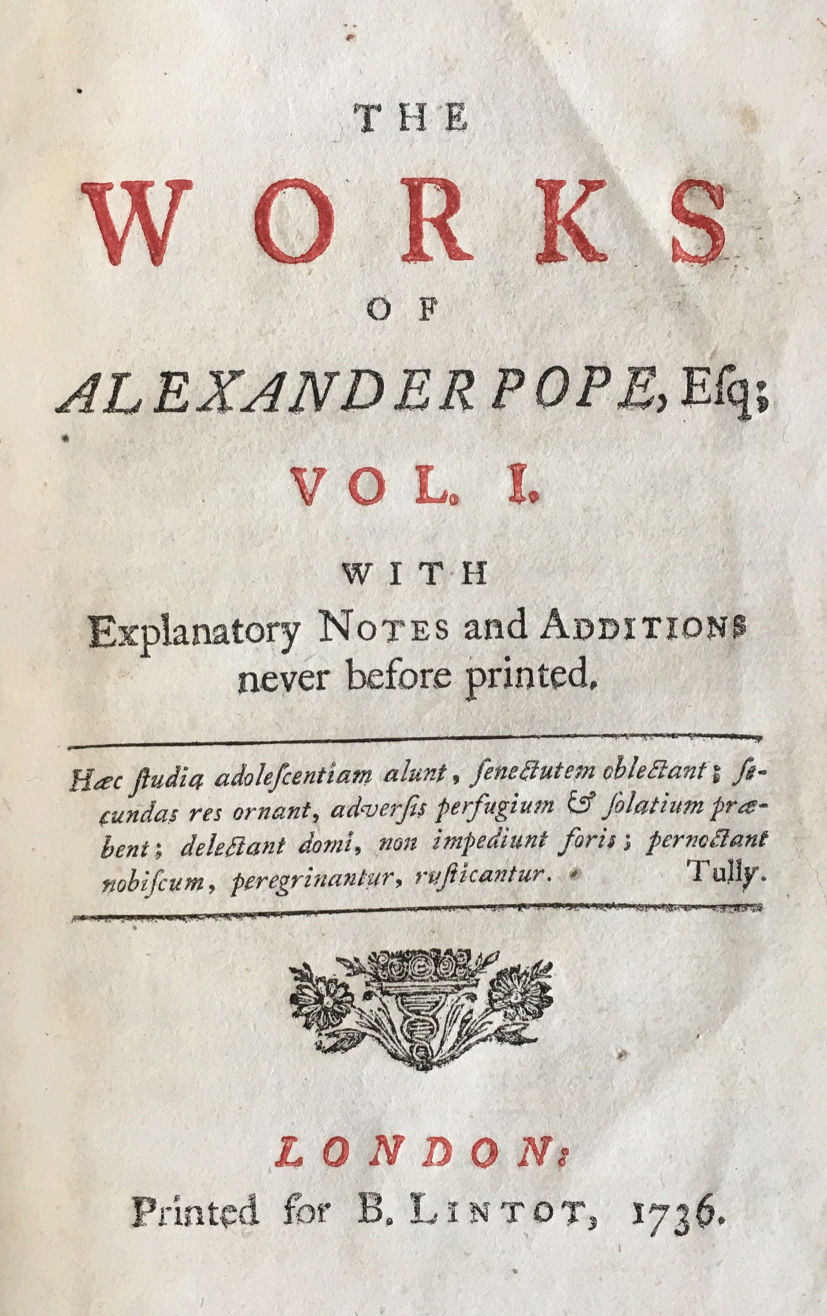

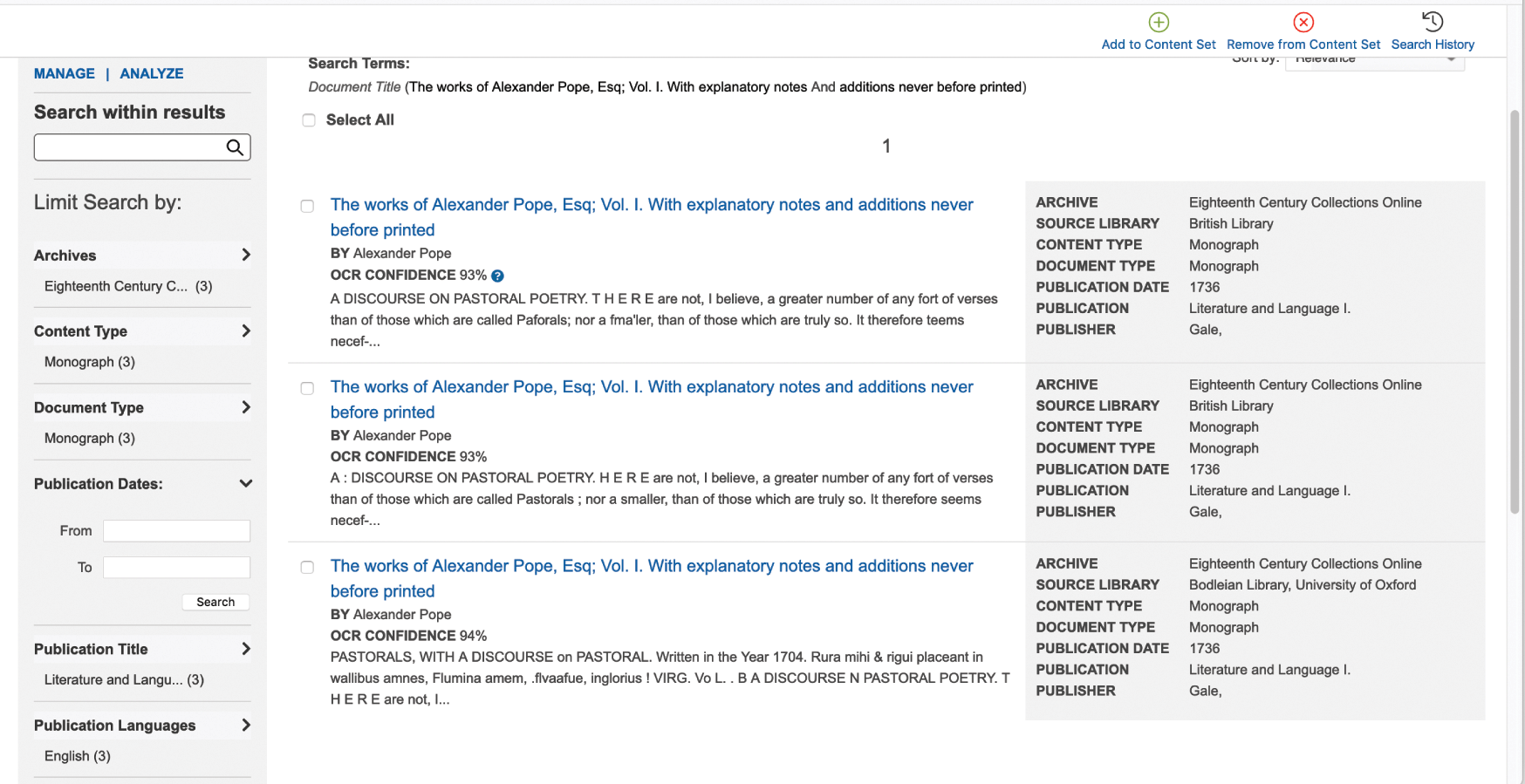

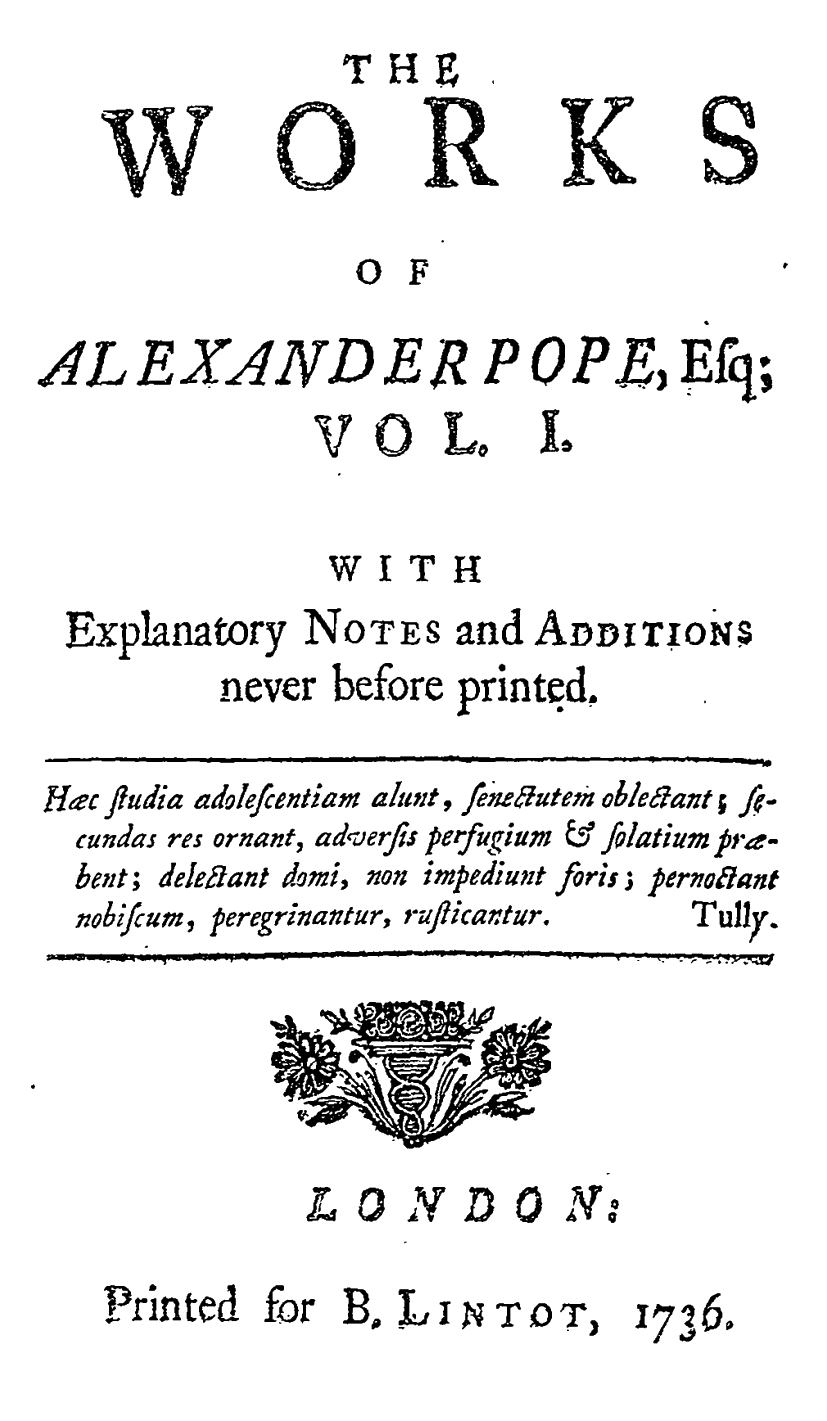

Another decision was to photograph books in black and white, a choice clearly based on the processing costs. Colour photography and processing would have increased costs enormously and there was understandably little benefit to be gained, given the fact that mostly all hand-press printing was done in black and white in the eighteenth century. However, it does mean that we miss the chance to see the occasional use of red ink. Compare the two images of this title page from volume one of The Works of Alexander Pope, 1736: one from ECCO, the other from my own copy (Figures 12–13).Footnote 21

Figure 12 Works. ECCO

Figure 13 Works. Author’s own copy

Red ink was usually reserved for religious works and almanacs and marked off special days, but red ink was also used sometimes to pick out words on a title page. This simple decision is revealing: title pages were a form of advertisement, so red ink added to the book’s visual impact, heightening the perceived value or status of the book and its author. But given it required the printer to run the sheet of paper through the printing press twice, it was costly in terms of the time and extra patience needed in the printing process: in short, the book better be worth it (Reference WernerWerner, 2019: 57–8). Looking at the title page from my copy of this volume of Pope’s Works, even if we know very little about Pope, the care attending this one page might start to tell us much about either his own or his publisher’s regard for the status and selling power of his works. Granted, the ESTC records the fact that the title page is in red and black and this bibliographic metadata is available in the original ECCO interface and the Gale Primary Sources interface, but we’d have to guess which words since that detail is not recorded. All this most certainly would be lost if we only depended upon the microfilm or the image in ECCO.

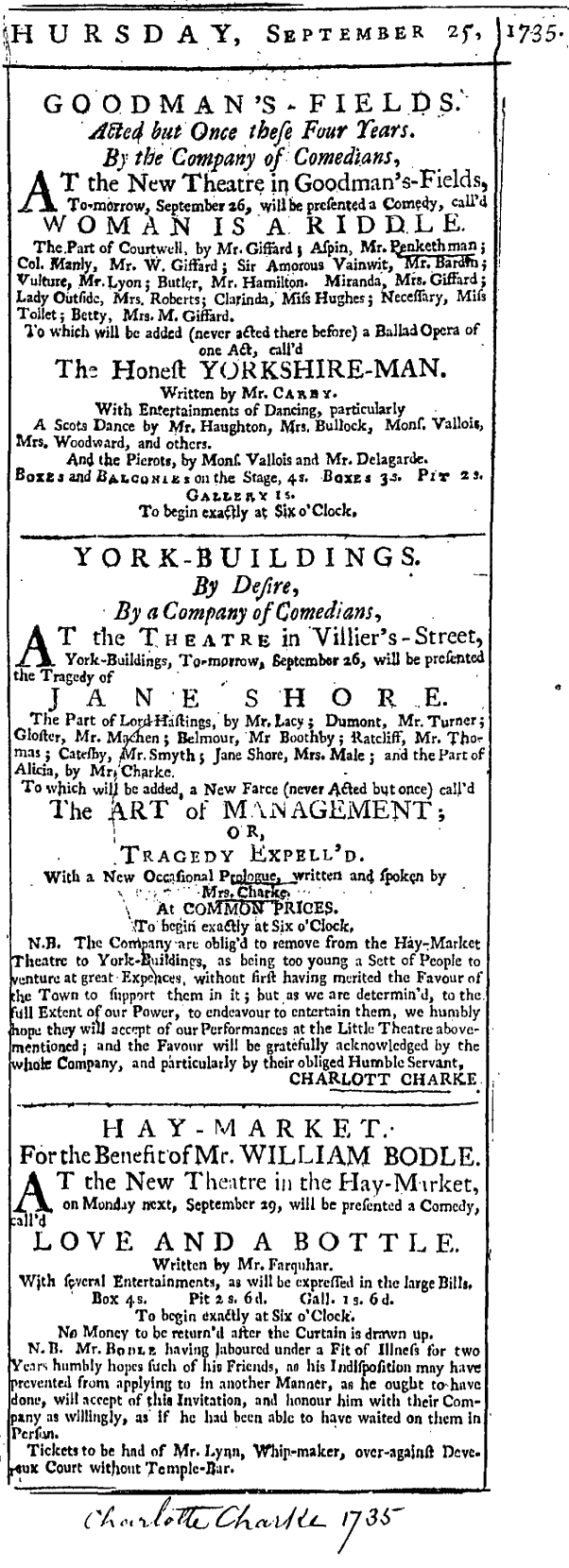

It is not just colour that is lost by microfilming. In 1941, in the early years of microfilming old books, William Jackson objected to what I could call the two-dimensionality of film images of books, saying, ‘it is a photograph taken … in one plane’, and adding that the reader can’t benefit from ‘raking’ light (Reference JacksonJackson, 1941: 283). More recently, Sarah Werner made the point that raking light during digital imaging can bring alive the material texture of old books (Reference WernerWerner, 2012). The example I use is again The Narrative of Mrs Charlotte Charke, but this time the second edition of 1755 (ESTC T68298), ECCO’s source copy in the British Library (shelfmark C.184.b.16). In both editions of 1755 the format of the book was duodecimo (12°), a popular, small, and relatively portable format. However, this particular copy is strikingly different: in addition to the printed text of her Narrative, throughout the book are interspersed playbills and newspaper cuttings (Figures 14–15), and there is a lot of blank space around some of the text.

Figure 14 Title page, Narrative, second edition. ECCO

Figure 15 Second page, Narrative, second edition. ECCO

Why is it like this and how was it made? The high-contrast two-dimensionality of the filmed page images in ECCO does not afford the kind of detail that might help. My own photograph of the title page, taken in raking light and from an angle, I hope, reveals more about the book’s construction (Figure 16).

Figure 16 Detail of title page, Narrative, second edition

The edge of ‘original’ printed text is visibly different from the larger-size paper upon which it is mounted. This is a good piece of evidence that we are looking at an example of scrapbooking, where a book owner has collected this miscellaneous material related to Charke’s life and had it interleaved with the printed text of Charke’s autobiography in a book of a much larger format (one of my students called this owner a ‘super fan’ of Charke’s). Double lines appear around the printed text (are these hand-drawn?), and a fainter impression emerges outside these lines (what made this?). Answers to these questions might provide valuable bookish clues as to how it was made, the role of the binder and owner in its construction, and perhaps even how it was used and valued.

Another aspect of bookishness affected by filming and digitisation is the experience of reading an open book. Typically, camera operators for RPI/Primary Source Media would film the books depending upon their size or format.Footnote 22 The smaller book formats like duodecimo and octavo tended to be filmed two pages at a time – that is, they preserved what’s known as the page spread or opening (larger sizes such folios were sometimes filmed one page at a time). As part of the processing of the films into digital image files Gale required single-page images. This processing has a number of consequences for how we see and apprehend books in ECCO.

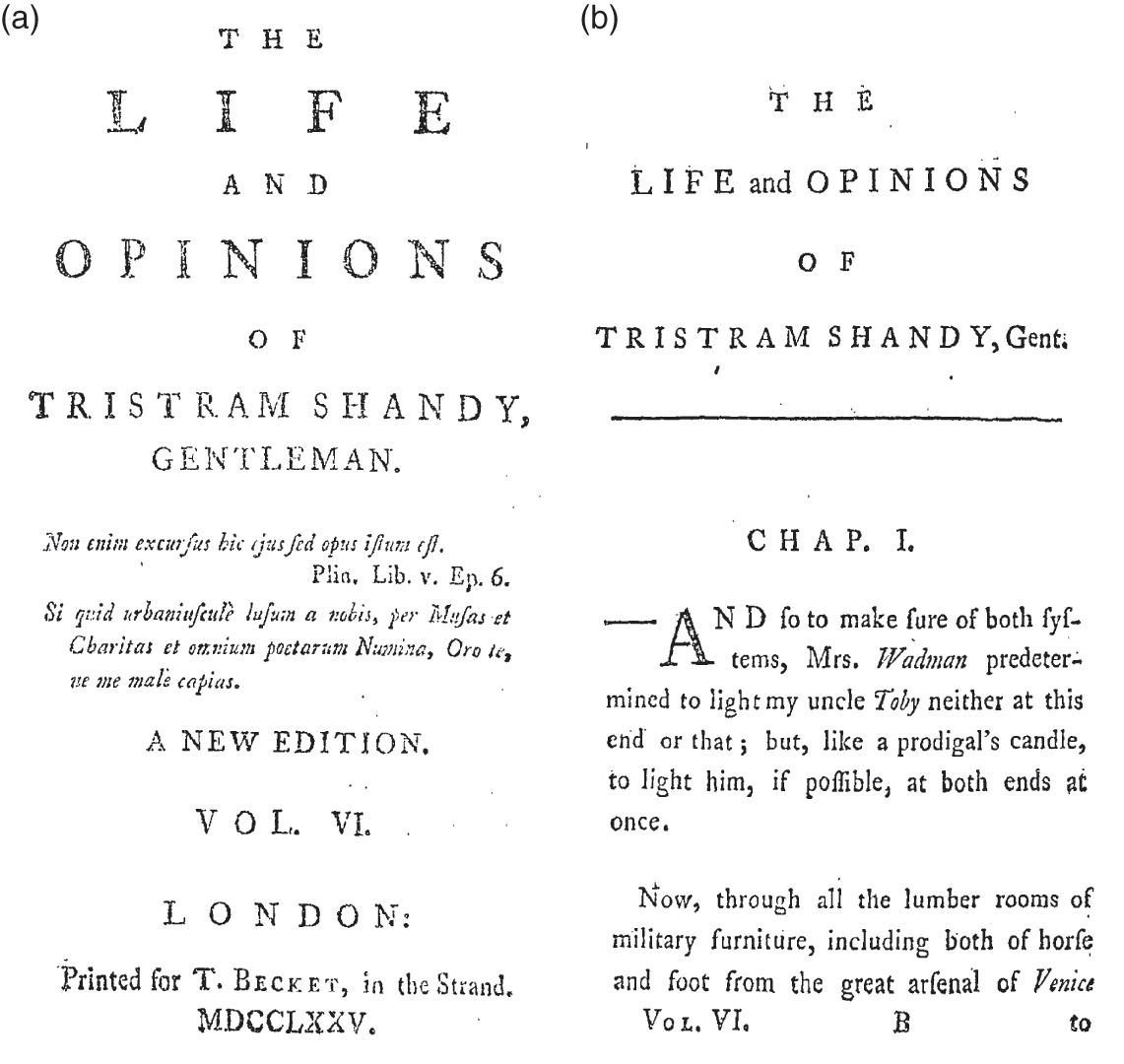

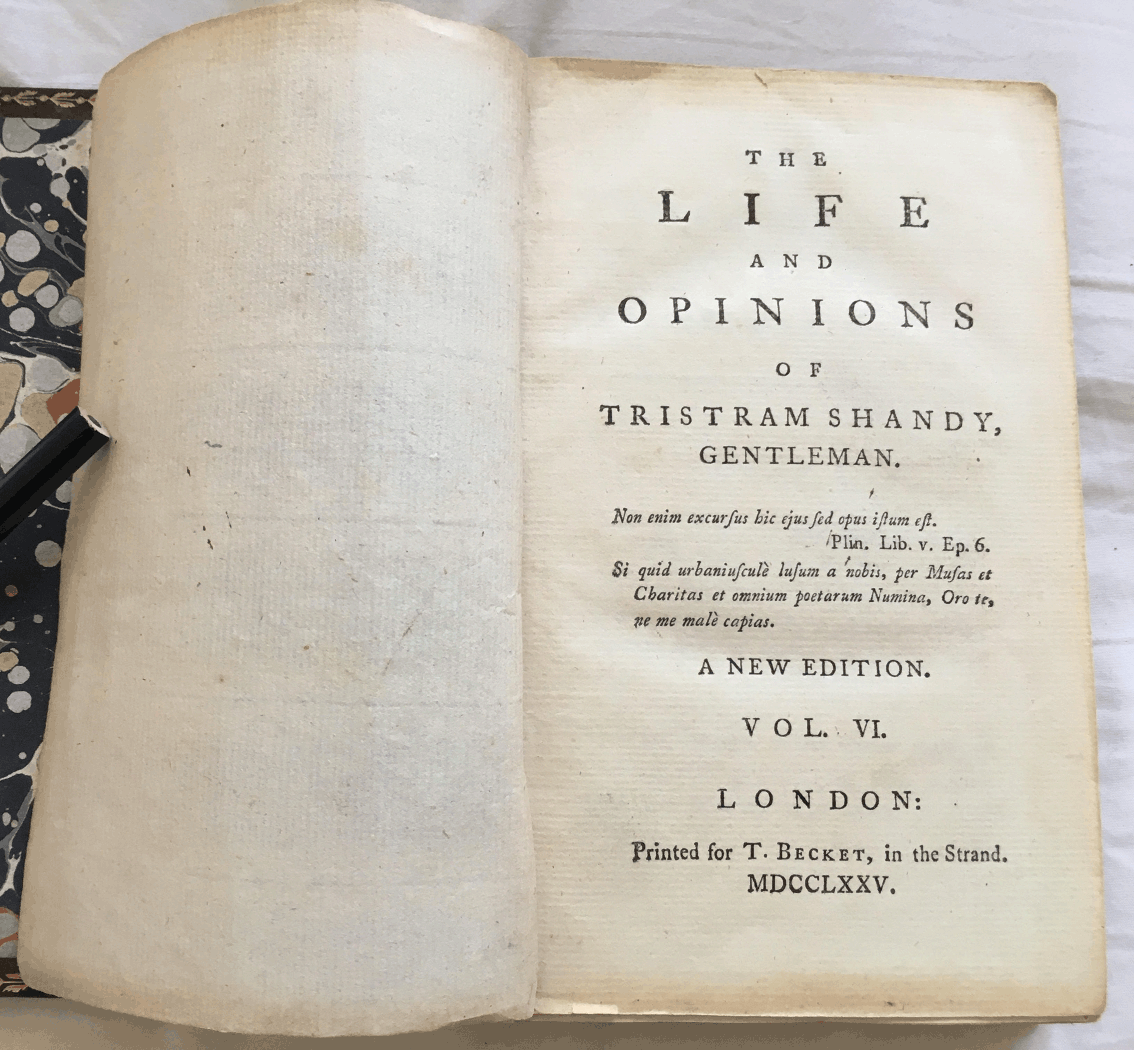

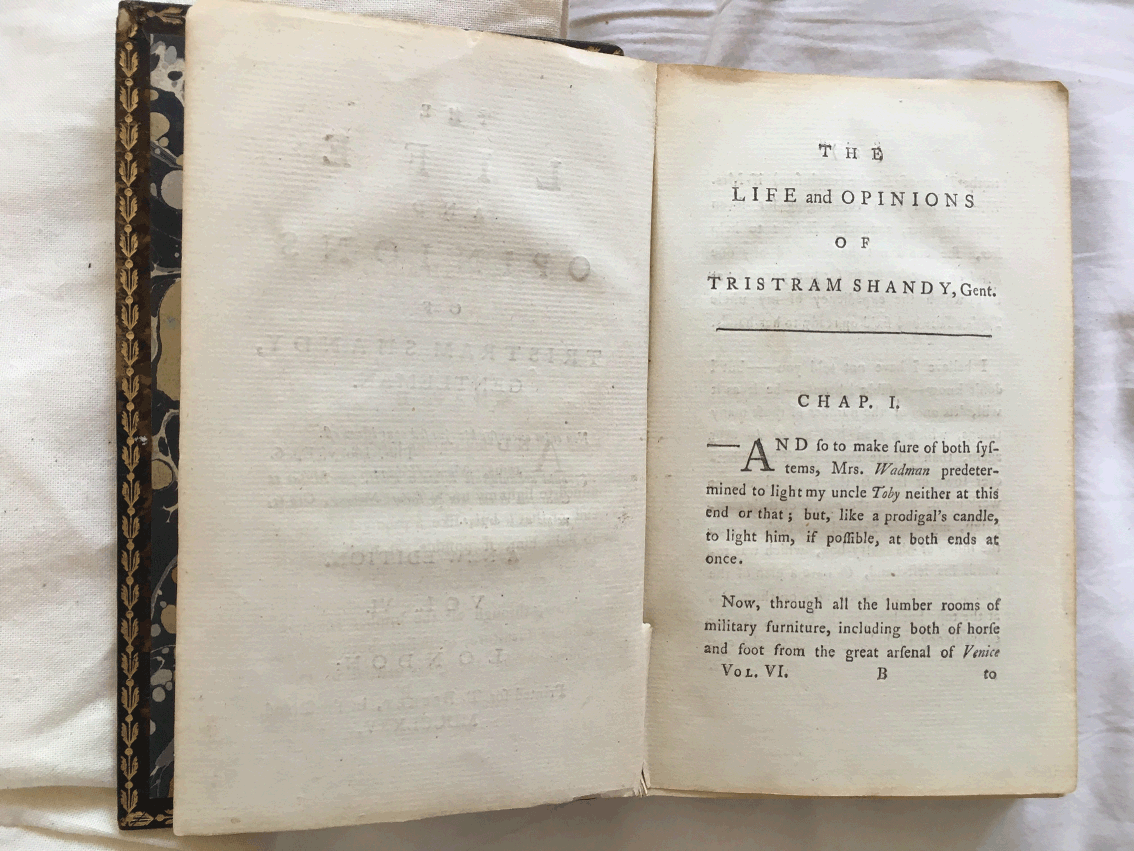

What we’re looking at now are two consecutive page images from volume six of a 1775 edition, in octavo, of Laurence Sterne’s novel, Tristram Shandy as seen in ECCO (Figures 17–18).

Figure 17 Title page, Tristram Shandy. ECCO

Figure 18 Chapter page, Tristram Shandy. ECCO

The first page image is the title page (as we’ve seen, endleaves and bindings were not filmed). Like in most book digitisations, we are invited to navigate right to see the next page, which is the first page of chapter one. Given that in the West we read from left to right, there is an understandable assumption when we imagine the actual book that the title page is on the left-hand side – the verso – and the next page is on the right – the recto. This is not actually the case, as we can see from a photo of my own copy (Figures 19–20).

Figure 19 Title page opening, Tristram Shandy, author’s copy

Figure 20 Chapter page opening, Tristram Shandy, author’s copy

This might seem a small issue, but it matters for two reasons. First, consider that we experience a book as two pages, or opening, a ‘visual unit formed by facing verso and recto pages in a codex’ (Reference GaleyGaley, 2012). The experience of reading a book in single-page units is a strange distortion of how we actually perceive the printed book as two pages. Second, the blank page opposite a first page of a chapter is not just meaningless, empty space: any printer worth their salt would be expected to give the first page of a new chapter the graphic space it needs. The decisions in digitisation have altered our apprehension of this book’s bookishness.

The examples of what’s happened to these books, and perhaps even the books I’ve chosen, might seem mundane or even unexceptional. But it is precisely because these examples are not exceptional that they are entirely representative, and what can happen can be so easily overlooked. Moreover, I’ve highlighted some of the ‘losses’ to a book’s bookishness during these remediations not as some kind of total critique of ECCO, but in order for us to understand the decisions and processes in ECCO’s history and so help us to understand what we are seeing via ECCO and how we might better use this digital archive.

4 Beginnings

Publishing, Technology, and ECCO, 1990–2004

It wasn’t called ECCO at first. The original name during the project’s development in 2002 was ‘The Eighteenth Century – Complete Digital Edition’, closely associating it with the microfilm collection on which it was based (Reference QuintQuint, 2002). The finished product, renamed ‘Eighteenth Century Collections Online’, was published by Thomson Gale in 2003 and completed in 2004. It was officially launched in the United Kingdom at the British Library on 24 July followed by a reception at Dr Johnson’s House, London (Reference De Mowbrayde Mowbray, 2019c). In the United States it premiered in early August at the thirty-fourth meeting of the American Society for Eighteenth-Century Studies (ASECS) in Los Angeles.

But let’s go back a little. Thomson Gale’s entry into digital publishing is a good example of the forces at work in the 1980s and 1990s that influenced the shape of commercial academic publishing. First, techno-commercial developments took place in digitisation and in electronic transmission, enabled by cheaper computing power and the rise of personal computing. Second, library budgets faced significant pressures as a result of the increasing prices of journals and the falling demand for monographs. Taken together, these technological and market contexts pushed academic publishers to diversify their product portfolios by merging companies with expertise in electronic publishing or with existing content. These commercial strategies were also a response to the explosion and spectacular failure of companies experimenting with digital platforms and electronic publishing in the 1990s – later dubbed the ‘dot.com bubble’ – arguably influenced by the radical business theory propounded in Bower and Christenson’s essay ‘Disruptive Technologies: Catching the Wave’ (Reference Bower and ChristensenBower & Christensen, 1995; Reference ThompsonThompson, 2005: 81–110, 309–12).

The story of Thomson Gale itself starts in 1998, as the result of a merger involving the long-established company of Gale Research (founded in 1954 by Frederick Gale Ruffner and sold to Thomson in 1985), Information Access Co., and Primary Source Media: this became the Gale Group, whose new headquarters was to be in Farmington Hills (Reference McCrackenMcCracken, 1998). It was a significant moment that combined three companies with particular but overlapping expertise: Gale’s specialism was reference books in the humanities, sciences, and technology; Information Access Co. specialised in CD-ROMs, microfilm, and online information; Primary Source Media was formerly Research Publications (RPI), who, as we know, produced microfilm collections of rare research materials including The Eighteenth Century. As one contemporary observed, the merger was ‘a move towards the electronic age and it helps to be big in this industry’ (quoted in Reference McCrackenMcCracken, 1998).

In contrast to the experimentation of digital companies in the mid-1990s was the more cautious strategy publishers adopted of diversifying and monetising their existing portfolio of resources by transforming them into a new medium. Former Gale CEO Dedria Bryfonski noted that their existing content formed ‘the backbone of electronic products widely used today’ (quoted in Reference EnisEnis, 2014). Thomson had decided to begin digitising its collections as early as 1996, just before the 1998 merger: its first was The Times Literary Supplement Centenary Archive, 1902–1990, published in 1999. ‘Almost all our growth now is from the migration from print to digital,’ as Thomson Gale’s CEO Gordon Macomber pointed out in 2004 (quoted in Reference BerryBerry, 2004). In another 2004 interview he emphasised that the ‘lion’s share of our business is nonaggregated – that is, the distribution of our proprietary content online’ (quoted in Reference HaneHane, 2004). Distributing its specialised (‘nonagreggated’) content enabled Gale to monetise its existing (‘proprietary’) investments, of which ECCO was the prime example.

Thomson Gale was of course eager to emphasise the affordances of digitisation for its products. Singling out ECCO as the exemplar of Thomson Gale’s digital products, Macomber stressed digital accessibility over print, noting that scholars ‘would have had to go [to the British Library] to get access to this material’ (quoted in Reference BerryBerry, 2004). But ECCO was promoted as more than just access; it was represented as offering a new way of learning: as Mary Mercante, vice president for marketing put it, ECCO was also about ‘searchability’ (quoted in Reference SmithSmith, 2003). Creating new markets by diversifying and transforming content also meant harnessing the unique affordances of digital technology. So what technologies of digital reproduction and publishing were available when Thomson Gale was considering digitising its collections in the late 1990s? What was the significance of these choices, and what were Thomson Gale’s competitors doing with their digitisation programmes? And what does ‘digitisation’ even mean?

In its broadest definition digitisation is the conversion of analogue information into digital form, such as a continuously varying voltage into a series of discrete bits of information.Footnote 23 The forms of digitisation that interest us here are methods that convert text and those that convert photographic images. Text is converted to digital form by encoding each character as a binary string of zeroes and ones, a series of discrete bits of information; for example, the letter ‘a’ would look like this: 01000001.Footnote 24 Photography, as we’ve seen, has been a key media technology for the representation of old books, but while photographs represent continuous gradations and variations in tone, shade, and colour, the digital image comprises discrete bits of information that approximate the effect of continuity. Images are transformed into a grid of discrete pixels, or bitmap, with each pixel assigned a number for colour and intensity. Black-and-white, or bitonal, images are the simplest binary forms, but 8-bit or 24-bit images can encode huge numbers of colours.

Thomson Gale began thinking about digitising its existing collections in 1996, and anyone contemplating digitising old books in the 1990s had a number of technological and commercial decisions to make. They would have to decide whether to transform the material into page images, or to produce it as searchable text, or to offer both: this would depend on the nature of the content, on who their users were, how they were expected to use it, and costs. If searchable text was on the cards, then they would need to decide between two options: to either have each word transcribed by hand (accurate but costly), or to use text automatically generated from page images using software (cheaper, but inaccurate, especially if the content was microfilm). In parallel, there were also decisions to make about how to publish the material: was it to be on CD-ROMs, or would it be online via the nascent World Wide Web?

Humanities computing had a history going back to Roberto Busa’s work in the late 1940s, and digitisation projects had been running since Project Gutenberg’s first electronic transcription in 1971 (Reference Hockey, Schreibman, Siemans and UnsworthHockey, 2004; Reference Deegan and SutherlandDeegan & Sutherland, 2009: 119–54; Reference JohnstonJohnston, 2012; Reference ThylstrupThylstrup, 2018: 1–31). However, Thomson Gale’s attention in the 1990s was fixed on its immediate competitors in the commercial market for educational and academic resources; its engagement with the scholarly field that became known as ‘Digital Humanities’ did not become significant until the 2010s. Google Books, of course, was perhaps the most high-profile mass digitisation project, and was launched at the Frankfurt Book Fair in 2004. The extent to which Thomson Gale knew about Google Books while developing ECCO is not clear, but it’s revealing that in 2004 CEO Macomber chose to characterise Google as an information search-and-retrieval business. Commenting on the ‘challenge … laid before us by Google and Yahoo! and others that dominate the Internet space’, he argued that: