‘We have not attempted an even-handed, “objective” approach. What is required, after […] the dominance of an approach that is unsupported scientifically and unhelpful in practice, is a balancing stance rather than a balanced one’ (Read et al (2013), discussing schizophrenia).

‘Ethical Human Psychology and Psychiatry (EHPP) is a peer-reviewed journal that publishes original research reports, reviews […] examining the ethical ramifications of unjust practices in psychiatry and psychology’ (description of the remit of the journal in which the review by Read et al (2019a) is published: https://www.springerpub.com/ethical-human-psychology-and-psychiatry.html).

Electroconvulsive therapy (ECT) has a long history and pre-dates current drug treatments in psychiatry. It continues to attract controversy about the balance between its efficacy and adverse effects in a highly polarised debate (e.g. Read 2019b).

The review in this month's Review Corner (Read 2019a) is written from a particular perspective (illustrated by the quotations at the head of this article) and published in the journal of the International Society for Ethical Psychology and Psychiatry (ISEPP), which takes the position that mental illnesses should ‘not be considered medical problems and traditional medical treatment is not a solution’ (ISEPP 2021).

The structure could be described as a mixed-methods narrative review and opinion article falling roughly into two parts: (a) a systematic review of sham-ECT-controlled randomised controlled trials (RCTs) of ECT in the treatment of depression, followed by an elaboration of their non-generalisability, and (b) a non-systematic survey of wider issues related to ECT, which concludes with a judgement on the continuing use of ECT.

The systematic review

The question addressed

Read and colleagues (Read 2019a) state that their aim is to review the ‘impartiality and robustness’ of the meta-analyses of RCTs of active ECT versus sham ECT (involving anaesthesia but no electrically induced seizure) and the quality of the studies on which they are based. They say that the goal is ‘not to assess whether or not ECT is effective’ but ‘to determine whether the available evidence is robust enough to answer that question’ and that ECT ‘must be assessed using the same standards applied to psychiatric medications and other medical interventions, with placebo-controlled studies as the primary method for assessment’.

It is worth noting here the limited stated aims of the review (which contrast with what the authors then go on to do), the suggestion that impartiality may be a problem in the published meta-analyses, and the emphasis on placebo-controlled studies as the primary method of assessment of efficacy (which is later interpreted to mean the ‘only’ method).

Methods

The authors carried out an electronic MEDLINE search to identify meta-analyses of sham ECT (SECT) against real ECT, last updated in March 2020. They assessed the included RCTs using a bespoke 24-point quality scale incorporating 8 items related to risk-of-bias domains from the Cochrane Handbook (Higgins 2011). Other items were related to definition or degree of representation of the clinical population (n = 5 items), inclusion of types of rating/rating scales (n = 6 items), study design (n = 4 items) and the requirement that included studies must report the means and standard deviations of the depression scale (n = 1 item). Two review authors independently rated the studies, with inconsistencies resolved by agreement. The ratings were added up to give a score out of 24 as a measure of overall quality. There is no description of the criteria by which the robustness of the meta-analyses was assessed.

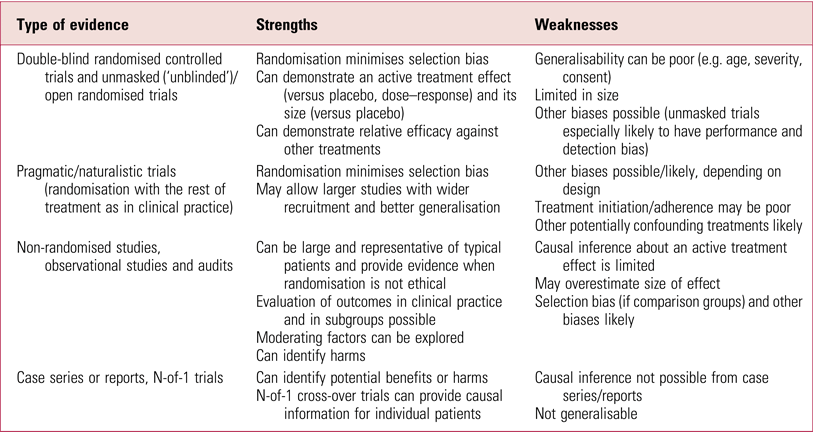

Assessing the quality of studies, at the heart of the review, is not straightforward and Table 1 summarises the range of factors that might affect the interpretation of clinical trials. Many different systems have been developed, of which GRADE (Grading of Recommendations Assessment, Development and Evaluations) (Box 1) is the most comprehensive, rigorous and widely accepted for developing and presenting summaries of evidence (Movsisyan 2018). Summary scores on a quality scale, as used here, are not an appropriate way to appraise clinical trials, as they combine assessments of aspects of the quality of reporting with those of trial conduct, effectively giving weight to different items in ways that are difficult to justify and that result in inconsistent and unpredictable results (Higgins 2011). Assessment should focus on internal validity (risk of bias) separately from assessment of external validity (generalisability or applicability) and precision of the estimate (related to study size); these can then be used along with other factors, such as publication bias and heterogeneity (consistency), to interpret the results of systematic reviews (Higgins 2011).
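The problem with a single summary score can be illustrated with a minimal sketch. The item counts per group are those of the 24-point scale described in the Methods; the two studies and their item-level ratings are invented purely for illustration and are not taken from the review. The sketch shows how two studies with very different risk-of-bias profiles can receive an identical total.

```python
from dataclasses import dataclass

# Number of items in each group of the 24-point scale (from the Methods above).
SCALE_GROUPS = {
    "risk_of_bias": 8,
    "clinical_population": 5,
    "ratings": 6,
    "study_design": 4,
    "reporting_means_sds": 1,
}


@dataclass
class Study:
    name: str
    items_met: dict  # group name -> number of items met (hypothetical values)

    def summary_score(self) -> int:
        """Total out of 24: every item counts equally, whatever it measures."""
        return sum(self.items_met.values())

    def profile(self) -> dict:
        """Proportion of items met within each group, kept separate."""
        return {g: self.items_met[g] / n for g, n in SCALE_GROUPS.items()}


# Two invented studies (assumptions for illustration, NOT data from the review).
study_a = Study("Hypothetical A", {
    "risk_of_bias": 8, "clinical_population": 1, "ratings": 1,
    "study_design": 2, "reporting_means_sds": 0,
})
study_b = Study("Hypothetical B", {
    "risk_of_bias": 1, "clinical_population": 4, "ratings": 4,
    "study_design": 2, "reporting_means_sds": 1,
})

for study in (study_a, study_b):
    print(study.name, study.summary_score(), study.profile())
```

Both hypothetical studies total 12 out of 24, yet one meets every risk-of-bias item and the other meets only one. Reporting the domains separately, as in `profile`, keeps that difference visible.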

BOX 1 The Grading of Recommendations Assessment, Development and Evaluations (GRADE) system

The GRADE approach provides a system for rating quality of evidence and strength of recommendations (Guyatt 2008).

GRADE classifies the quality of evidence in one of four levels: high, moderate, low and very low.

• Evidence based on randomised controlled trials (RCTs) begins as high-quality evidence, but confidence can be decreased for several reasons, including study limitations, inconsistency of results, indirectness of evidence, imprecision and reporting bias.

• Observational studies (e.g. cohort and case–control studies) start with a low-quality rating but may be upgraded if the magnitude of the treatment effect is very large, there is evidence of a dose–response relationship or if all plausible biases would act to counteract the apparent treatment effect.

There are two grades of recommendations: strong and weak.

• Strong recommendations occur when the desirable effects of an intervention clearly outweigh (or clearly do not outweigh) the undesirable effects.

• Weak recommendations occur when the trade-offs are less certain (either low-quality evidence or desirable and undesirable effects are closely balanced).
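The starting levels and the direction of adjustment described in Box 1 can be sketched in code. This is a deliberately mechanical simplification and not the GRADE procedure itself: in practice each factor is a structured judgement, and the assumption made here that every listed reason moves the rating by exactly one level is an illustrative choice. The function and reason names are hypothetical.

```python
LEVELS = ["very low", "low", "moderate", "high"]

# Reasons listed in Box 1; the identifiers are invented for this sketch.
DOWNGRADE_REASONS = {
    "study_limitations", "inconsistency", "indirectness",
    "imprecision", "reporting_bias",
}
UPGRADE_REASONS = {
    "large_effect", "dose_response", "biases_counteract_effect",
}


def grade_quality(design, downgrades=(), upgrades=()):
    """Return a quality level for one outcome.

    Assumption: each reason shifts the rating by one level, and the
    result is clamped to the four levels listed in Box 1.
    """
    if design == "rct":
        level = LEVELS.index("high")
    elif design == "observational":
        level = LEVELS.index("low")
    else:
        raise ValueError(f"unknown design: {design!r}")

    unknown = (set(downgrades) - DOWNGRADE_REASONS) | (set(upgrades) - UPGRADE_REASONS)
    if unknown:
        raise ValueError(f"unknown reasons: {sorted(unknown)}")

    level -= len(set(downgrades))
    if design == "observational":
        # Box 1 describes upgrading for observational studies only.
        level += len(set(upgrades))
    return LEVELS[max(0, min(level, len(LEVELS) - 1))]


print(grade_quality("rct", downgrades=["imprecision", "indirectness"]))
print(grade_quality("observational", upgrades=["dose_response"]))
```

The point of the sketch is that the rating is attached to a body of evidence for a given outcome and is adjusted for named reasons, rather than being a single additive score per study.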

TABLE 1 Considerations in critical appraisal of clinical trials

a Cochrane risk-of-bias items (Higgins 2011).

It should also be noted that a number of quality criteria in this review are idiosyncratic (requiring suicide and quality of life scales, more than one rater type, individual patients’ data, patient ratings, demonstrated previous treatment failure, no previous ECT) or not explained (requiring at least six ECTs, follow-up data after the acute course, group sample sizes of ten or more). The summary score used here means little and its relationship to disqualifying a study from being valid and able to contribute evidence is not clear. The authors are therefore not applying currently accepted methodology to assess study quality, nor do they provide clear criteria against which they judge the robustness or validity of individual studies and meta-analyses.

Results

The search resulted in 14 papers, with 5 meta-analyses finally included in the review (one in Hungarian was excluded), the most recent of which was a network meta-analysis of brain stimulation treatments for depression. These incorporated one or more of the available 11 RCTs of SECT compared with ECT.

The studies

The 11 individual studies are tabulated and discussed individually with regard to immediate and follow-up efficacy after the end of ECT. The authors examine each study in detail, discuss their design and weaknesses, and note the results of each rating scale used. All 11 studies reported a numerical advantage to ECT over SECT for acute treatment on the primarily reported doctor/observer rating, statistically significant in 6. Eight studies included other ratings, which were variably reported but mostly showed the same direction of effect. However, study designs differed widely, and notably four studies administered only six SECT/ECT treatments and a further two only two or four SECT treatments. Two early studies had only six and four patients per group respectively. Three studies provided data comparing SECT and ECT groups at least 1 month after ECT, but in one the comparison was only made possible by the review authors’ calculation from the 60% who had not received further ECT in the interim (note that this is open to selection and attrition bias and hence difficult to interpret). The authors of the review conclude that the quality of the studies is ‘unimpressive’, and they are ‘clearly unable to determine whether ECT is more, or less, effective than SECT in reducing depression’. The reasons given are a summary narrative of the performance of studies against their quality criteria, and all are found wanting. The key problems related to bias are: failure to describe the randomisation process in 5/11 studies, failure to assess masking (‘blinding’) in 5/11, an assertion that masking was lacking in all 11 studies because they included patients with experience of previous ECT who would ‘probably’ know whether or not they had had ECT, and 5/11 studies not reporting all outcomes.

The meta-analyses

The five meta-analyses are similarly tabulated, showing the individual studies included in each, with the estimate of effect for each study and when pooled. There was great variation in which studies were included, mostly related to inclusion criteria and the method of analysis. Some inconsistencies and inaccuracies were found; for example, the UK ECT Review Group (2003), regarded as the best meta-analysis, had incorrectly excluded one RCT (Brandon 1984), although, given that this study showed a strong benefit for ECT, including it would have strengthened the pooled finding. All five meta-analyses found that ECT was more effective than SECT in acute treatment. Only the UK ECT Review Group (2003) reported longer-term outcomes (one study, Johnstone 1980, which showed no significant difference between treatments). Two meta-analyses were found not to have rated study quality, and all five were judged to have included the studies while ignoring their poor quality. The key criticism of all the meta-analyses was the inclusion of studies that were seriously flawed, therefore invalidating the meta-analyses and making it impossible to determine relative efficacy.

The authors do consider whether results from the three RCTs with the highest-quality ratings (Lambourn 1978; Johnstone 1980; Brandon 1984) could be combined to examine acute efficacy but conclude against this on the basis that two of the studies did not report all the outcome data. This reason does not fit with the GRADE approach, which is to evaluate each outcome separately; in other words, there is no reason not to combine their Hamilton Rating Scale for Depression scores simply because other scores are not reported.
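Evaluating each outcome separately can be sketched as follows. The numbers are invented for illustration and are not values from any ECT trial; the study labels, outcome names and sign convention (negative values favouring the active arm) are likewise assumptions. A fixed-effect inverse-variance model is used only for brevity; a random-effects model might well be preferred where studies differ in design.

```python
import math

# Hypothetical data (NOT from any ECT trial). Each outcome maps to
# (mean difference, standard error); None marks an unreported outcome.
studies = {
    "Study 1": {"clinician_rated": (-2.0, 1.0), "self_rated": (-1.0, 1.5)},
    "Study 2": {"clinician_rated": (-4.0, 2.0), "self_rated": None},
    "Study 3": {"clinician_rated": (-3.0, 1.0), "self_rated": None},
}


def pool_outcome(studies, outcome):
    """Fixed-effect inverse-variance pooling of a single outcome.

    Studies contribute if they report this outcome, regardless of
    whether their other outcomes are missing.
    """
    reported = [s[outcome] for s in studies.values() if s.get(outcome) is not None]
    if not reported:
        raise ValueError(f"no study reports {outcome!r}")
    weights = [1.0 / se ** 2 for _, se in reported]
    total = sum(weights)
    pooled = sum(w * md for w, (md, _) in zip(weights, reported)) / total
    pooled_se = math.sqrt(1.0 / total)
    return pooled, pooled_se, len(reported)


pooled, se, k = pool_outcome(studies, "clinician_rated")
low, high = pooled - 1.96 * se, pooled + 1.96 * se
print(f"{k} studies: pooled difference {pooled:.2f} (95% CI {low:.2f} to {high:.2f})")
```

In this sketch all three hypothetical studies contribute to the clinician-rated outcome even though two of them report nothing for the self-rated one; the missing secondary outcome limits only the analysis of that outcome.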

Interpreting the findings

There is no doubt that the SECT-controlled RCT evidence has limitations, as do the meta-analyses, with next to no evidence on efficacy after the end of acute treatment. Nonetheless, despite their methodological differences, there is a consistent direction in the findings of the RCTs of at least a numerical benefit for ECT over SECT at the end of the comparison treatment period, and all meta-analyses report a significant pooled result in favour of ECT irrespective of the combination of studies they include. For the studies to be ‘clearly’ unable to provide evidence for the efficacy of ECT therefore requires a strong case to overturn the unanimous direction of effect.

The two broad quality domains of bias and generalisability are blurred in this review. The first asks whether we can trust the results of the RCTs/meta-analyses, the second whether the results inform clinical practice. Randomisation addresses the key factor of patient selection bias, although in 5 of the 11 studies it is unclear how well this was done, as the method was not described. The strongest claim related to bias made by the authors of this review is the ‘fact’ that none of the studies was masked (blind), because participants who had previously received ECT would probably know their allocation, leading to a placebo response to real ECT because ‘ECT is always followed by headaches and temporary confusion’. This assertion is not justified, ignores side-effects from having had an anaesthetic and is contradicted by audit figures (Scottish ECT Accreditation Network 2019) showing the incidence of post-ECT headache to be about 30% and confusion about 20%. As an aside, this would arguably ‘invalidate’ RCTs of psychological treatment, in which it is impossible to hide allocation from patients.

To support their claim the authors analyse individual patient data from the Lambourn & Gill (1978) study, in which 66% of patients had previously received ECT. They found that prior experience of ECT moderated the degree of improvement: it was better with ECT than with SECT for participants with previous exposure, but worse with ECT than with SECT for those without previous exposure. This secondary data analysis of small numbers is highly questionable, and this study reported only a small, non-significant positive benefit for ECT even though it had the highest proportion of patients with prior ECT exposure of all the studies, undermining the case that this accounts for the strong positive findings in favour of real ECT in most studies.

More importantly, relevant RCT evidence available in the meta-analyses is ignored: the evidence that bilateral ECT is more effective than unilateral ECT (UK ECT Review Group 2003; Mutz 2019), where unmasking because of previous experience of ECT cannot explain the findings.

Their other main concern was that not all outcomes were reported fully, presumably based on the risk that the most favourable result could be chosen and inflate the effect. However, current best practice emphasises giving weight to primary outcomes precisely to avoid cherry picking the best result, and it appears that the studies reported what would now be viewed as their primary clinician-rated outcome measure; it was secondary measures that were variably reported. Therefore, although correctly highlighting deficiencies in individual studies, the authors fail to establish the case that the studies’ results are invalidated because of their deficiencies and that bias accounts for the benefit found for ECT across all the studies and meta-analyses.

Standard practice is to use inclusion and exclusion criteria to select studies for a meta-analysis and quality criteria to qualify confidence in the outcome and its effect size. The authors of this review have instead used quality criteria to effectively disqualify studies without transparently describing the criteria and thresholds for doing so. Greenhalgh and colleagues (Greenhalgh 2005) carried out a critical assessment of the UK ECT Review Group (2003) meta-analysis as part of an extensive Health Technology Assessment systematic review. While acknowledging the limitations in the studies, they conclude that ‘Real ECT is probably more effective than sham ECT, but as stimulus parameters have an important influence on efficacy, low-dose unilateral ECT is no more effective than sham ECT […] There is little evidence of the long-term efficacy of ECT’. This conservative conclusion provides a more balanced assessment of these data than this review.

What about the issue of generalisability? One can conclude that a treatment works in specific circumstances but not that it has been shown to work in the target population. This is extensively argued by the authors of this review as a reason to discredit the evidence from these studies. That some of these RCTs are limited in how representative they are has been noted before (UK ECT Review Group 2003; Greenhalgh 2005) but it should be emphasised that this does not invalidate the results in the patients studied. It is not uncommon for RCTs to have restricted generalisability (Table 2), which is without doubt a key problem for new treatments being evaluated for introduction into practice. ECT, however, has been used for 80 years and there is a great deal of other evidence available (see the section ‘Evidence-based?’, below). The effectiveness of ECT in different populations needs to be assessed on its more extensive evidence base, not only on this limited subset of studies.

TABLE 2 Strengths and weaknesses of some types of evidence

Beyond the systematic review

Read et al's review moves on to make other points about ECT and to examine whether adverse effects outweigh benefits. This literature is not assessed systematically and the authors become highly selective in the evidence considered, including using individual quotations to support particular points of view. It should be noted that this goes far beyond the stated aims of the review.

Tone and approach

The approach appears to be that described elsewhere by Read himself (see the quotation at the head of this commentary) of taking a ‘balancing [his emphasis] stance rather than a balanced one’ (Read 2013). Complete objectivity is impossible, as all evaluations are embedded in value judgements, but scientific and evidence-based methods attempt as far as possible to be even-handed and transparent. Here the tone is adversarial rather than inquisitorial, aiming to undermine and cast doubt rather than find out what the studies contribute, and arguing for a particular point of view. Read and colleagues are critical of those who carried out the meta-analyses and of their motives, stating ‘The authors' apparent disinterest [in the poor quality of the RCTs is] indicative of carelessness, bias, or both’. Emotive language is used, such as ‘Brain damaging therapeutics’, and ECT received by patients lacking capacity to consent is misleadingly described as ‘being forced to undergo ECT after stating that you do not want it’.

Evidence-based?

Evidence-based medicine is heavily weighted to using data from RCTs and sometimes this obscures that it means using the best evidence available and being aware of the strengths and weaknesses of different approaches, as no study design is flawless (Frieden 2017) (Table 2). In this review, Read and colleagues, contrary to their stated aims, conclude that the deficiencies in the SECT-controlled studies mean that evidence that ECT works is lacking; however, they exclude all other RCTs of ECT, most notably those providing evidence for a stimulus dose–response relationship, for an efficacy difference between bilateral and unilateral ECT, and for ECT compared with other treatments (e.g. UK ECT Review Group 2003; Mutz 2019). In addition, observational studies and audit data provide a wealth of informative descriptive evidence on outcomes and relative efficacy in different subpopulations (e.g. Heijnen 2010; Scottish ECT Accreditation Network 2019), and there is experience from clinical practice gathered over decades of use. The key point here is that wider evidence is disregarded. Even if we accept the authors’ view that these limited SECT-controlled data do not allow assessment of the efficacy of ECT, by ignoring other RCT data and other types of evidence they attract the criticism of ‘carelessness, bias, or both’ that they have made of others.

‘Brain damaging therapeutics’ and the cost–benefit of ECT

ECT has been used since the 1940s and techniques and safety have improved over time, as with all aspects of medicine, so that practice in the early days of ECT, with lack of monitoring or anaesthesia and uncontrolled electrical stimuli, does not reflect what happens today. Evidence provided by Read and colleagues for brain damage from ECT is based bizarrely on opinion quotes from American physicians from the early 1940s, which can only be seen as rhetorical rather than scientific. Current evidence based on extensive imaging and registry studies simply does not support the authors’ contention that ECT causes brain damage similar to that found after physical trauma (Jolly 2020). ECT undoubtedly affects the brain, but so do other treatments, and depression itself.

Assessing the effect of ECT on cognition is a complicated issue, compounded here by bracketing cognitive effects with brain damage. The high rate of memory loss after ECT cited in this review refers to subjective retrospective evaluation, which it should be noted contrasts with the improvement found in prospective subjective evaluation of memory after ECT and which is mood-related (Anderson 2020). The authors blur the difference between transient and longer-term effects, ignore retrospective versus prospective evaluation, subjective versus objective measures, different types of cognition and the effect of depression itself. Although there is good evidence that ECT transiently impairs some aspects of cognition, prospective studies of subjective and objective cognition simply do not find persistent impairment – in all but one aspect (Anderson 2020). The exception is autobiographical and retrograde memory, where there is considerable uncertainty, largely due to difficulties in assessment (Semkovska 2013). This is important, not to be dismissed and certainly needs further research, as does lived experience after ECT more generally, but the discussion in this review is selective and superficial.

In discussing the mortality rate after ECT the authors of this review dismiss a recent meta-analysis of 15 studies finding low rates as a ‘study’ (not acknowledged as a meta-analysis) ‘based on medical records (relying on staff recording that [ECT treatments] had caused a death)’. One has to then ask why they did not instead report a meta-analysis of all-cause mortality after ECT based on data from 43 studies (Duma 2019). This found a mortality rate of 0.42/1000 patients and 6/100 000 ECT treatments, not all of which would be due to ECT, as depression itself is associated with a 1.5–2 times higher risk of death than in the general population. Read and colleagues prefer to rely on selective reporting of higher values from a few studies published between 1980 and 2001.
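Because the two all-cause mortality figures above are quoted on different scales, it may help to express them in common units. The sketch below does only that arithmetic; the variable and function names are hypothetical. Note that the two rates have different denominators (patients versus individual treatments), so they are not directly comparable with each other, and neither is an estimate of deaths attributable to ECT.

```python
from fractions import Fraction

# All-cause mortality figures as quoted in the text (Duma 2019).
per_patient = Fraction("0.42") / 1000     # deaths per patient
per_treatment = Fraction(6, 100_000)      # deaths per ECT treatment


def per_100k(rate):
    """Express a rate as events per 100 000 units of its own denominator."""
    return rate * 100_000


def as_percent(rate):
    """Express a rate as a percentage."""
    return float(rate * 100)


def one_in(rate):
    """Express a rate as 'one event in N' (N is generally not an integer)."""
    return float(1 / rate)


for label, rate in (("per patient", per_patient), ("per treatment", per_treatment)):
    print(f"{label}: {float(per_100k(rate)):g} per 100 000, "
          f"{as_percent(rate):g}%, about 1 in {one_in(rate):,.0f}")
```

Exact fractions are used so that the unit conversions are not affected by floating-point rounding.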

The authors conclude that ECT should be immediately suspended based on their cost–benefit analysis, i.e. no demonstrated benefit and important adverse effects. They have, however, failed to establish the former and the latter is asserted on the basis of opinion and selective quoting of the literature.

Conclusions

Read et al's systematic review (Read 2019a) succeeds in identifying studies as set out in its aims, but does not define or justify the criteria used to determine robustness for a study to be able to contribute evidence. There is a legitimate debate to be had about what weight can be put on the evidence from SECT-controlled studies of ECT, and if this review had limited itself to its stated aims then it could at least be considered as part of the scientific debate.

However, the authors then extrapolate beyond the data to make the unsubstantiated claim that the deficiencies in these RCTs mean that there is no evidence supporting the efficacy of ECT, without even considering other RCTs or types of study. This is followed by a selective discussion of the adverse effects of ECT, leading to an unbalanced evaluation of the evidence in what can be best described as a polemic dressed in the clothes of a scientific review; indeed, it has been used to support a campaign headed by the lead author for the suspension of ECT (Read 2020). Read and colleagues conclude by saying that the ‘remarkably poor quality of the research in this field, and [its] uncritical acceptance […] is a sad indictment of all involved, and a grave disservice to the public’. The reader may wish to reflect on the harsh criticism as they evaluate the quality of this review and its conclusions.

Data availability

Data availability is not applicable to this article as no new data were created or analysed in this study.

Author contribution

I.M.A. was the sole contributor to the writing of this paper.

Funding

This research received no specific grant from any funding agency, commercial or not-for-profit sectors.

Declaration of interest

I.M.A. was Chief Investigator on a clinical trial funded by the Efficacy and Mechanism Evaluation (EME) Programme involving ECT that was subject to an open letter from Read and colleagues to the funder, and to all concerned with the study, requesting the trial be stopped on ethical grounds, a request that was not upheld.

An ICMJE form is in the supplementary material, available online at https://doi.org/10.1192/bja.2021.23.
