Emergency preparedness and response (EPR) for handling radioactive materials is a matter of occupational health and safety in the workplace. Building a high-standard, quality work culture by adopting good radiation safety practices in a nuclear medicine setup is a vital step in minimizing hazards and protecting people’s health. A lack of knowledge, appropriate attitude, and practice in handling radiation emergency situations is alarming among all stakeholders; it creates panic and consequences that are hazardous to professionals, the public, patients, and the environment. To assess learners’ pre-existing level of learning, the learning acquired over time, and the continuous improvement in content delivery and modes of training administration as per the Kirkpatrick level 2 evaluation, Reference Kirkpatrick and Kirkpatrick1,Reference Kirkpatrick, Brown and Seidner2 an appropriate assessment tool is needed, and none is currently available for evaluating radiation emergency response teams. In assessing learners for psychometric analysis, the design, formatting, validation, and reliability testing of close-ended questionnaires form a systematic process that is vital for analyzing educational programs. In the same vein, developing an evidence-based radiation emergency response assessment for the hospital setup requires designing and developing a reliable and valid assessment tool.

Aims and Objectives

The aim of the present study is to develop a valid and reliable tool on radiation emergency preparedness and response management (RadEM-PREM) and to explore the psychometric measures of the instrument. Once this instrument is ready for intervention, it will help to evaluate the net learning and the learning gap in future-ready multidisciplinary health science graduate students. The objective of the study is to design, develop, validate, and check the reliability of the items for the “Radiation emergency preparedness response management (RadEM-PREM) Interprofessional evaluation (IPE) assessment tool,” which is lacking at present.

This RadEM-PREM IPE evaluation tool would be significantly helpful to assess the readiness of health science interprofessional learners in team building for radiation emergency preparedness and response.

The process adopted in this work comprised designing the items’ content, content validation, face validation, and test-retest analysis of the items to check their reliability before use as an assessment tool, as recommended by Considine et al. in 2005, Tsang et al. in 2017, and Calonge-Pascual et al. in 2020. Reference Considine, Botti and Design3–Reference Calonge-Pascual, Fuentes-Jiménez and Casajús Mallén5

In the long run, this work is expected to support assessment of the pre-existing learning level, net learning attributes, and learning lag, so that future training can be continuously improved and interprofessional learners’ readiness for building a multidisciplinary radiation emergency response team can be checked.

Methods

Ethical Committee Approval

This work was approved by the KMC and KH Institutional Ethics Committee (IEC: 1017/2019) in December 2019.

Study Design

This was a prospective, cross-sectional, single-center pilot study conducted in India.

Participants

Items were generated by 5 subject experts for relevant content and domain specification, following the stages of a systematic good-practice process for questionnaire design and development. The tool is intended to initiate teamwork capacity building in the area of radiation emergency preparedness and response in a hospital setup. The psychometrics of the tool were assessed in terms of content validity, internal consistency, and test-retest reliability.

Items were sorted into the 3 domains of knowledge, performance skill, and communication skill based on high percentage relevance of content-domain compatibility. In the next step, the 5 subject experts validated the item contents, substituting wording to remove ambiguity, modifying and re-modifying items, and screening them for the levels of learning in the radiation emergency response area. Content validity was assessed by a panel of 5 content experts with professional expertise in radiation protection, who reviewed the scale. These 5 content experts were experienced teaching faculty, professional members of the Nuclear Medicine Physicists Association of India (NMPAI) or the Association of Medical Physicists of India (AMPI), or had more than 10 y of professional practice in radiation safety and protection relevant to handling medical radioisotope applications. All item content experts and validators held at least a postgraduate degree in science, together with a postgraduate degree or diploma in medical physics or nuclear medicine and relevant professional qualifications and training in radiation protection.

Twenty-eight radiation safety professionals completed the test-retest reliability assessment of the items for validation of the 21-item RadEM preparedness management questionnaire (RadEM-PREM tool), designed and developed for multidisciplinary interprofessional education (IPE) health science learners.

Study Size

In the present study, 92 items were screened down to 42 items in the first stage. In the next stage, 21 items were selected across 3 domains, namely knowledge (KS), performance (PS), and communication (CS) skills, based on the content validity index and high relevance to their respective domains. All 21 selected items had a percentage of agreement above 70%, and for each the item content validity index (I-CVI/UA) and the scale content validity index with universal agreement (S-CVI/UA) were calculated. Of the 21 items, 7 belonged to the knowledge domain, 7 to the performance domain, and 7 to the communication domain.

The inclusion criterion for the present study was being a working radiation professional expert in India. Entry-level, future-ready radiation professionals were excluded.

Measurement Tool Used

The measurement tool comprised close-ended questionnaire items with 5 options each, which were curated and sent to the subject experts. The experts were asked to rate each item for relevance, clarity, grammar and spelling, ambiguity, and sentence structure. Items were substituted, edited, and corrected to ensure domain-wise relevance. The item-level content validity index (I-CVI), scale-level content validity index (S-CVI)/universal agreement (UA), Cronbach’s alpha coefficient, Pearson’s correlation coefficient (PC), intraclass correlation coefficient, and percentage relevance of the tool are either discussed or tabulated.

Results

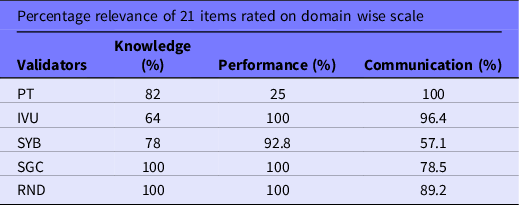

The high percentage relevance of content-domain compatibility on the 4-rater scale, established by the 5 validators at the start of the instrument design process, is shown in Table 1, which tabulates the validators’ percentage agreement on the relevance of the 21 items. The I-CVI, S-CVI, and modified kappa were analyzed for all the questions using Microsoft Excel (Microsoft Corp., Redmond, WA) (Table 2).

Table 1. Initial validators percentage agreement domain-wise rater scale

Table 2. Item-wise 21 item I-CVI, SCVI-average, and SCVI /UA

Lynn Reference Lynn6 recommended that I-CVIs should be no lower than 0.78 for an item to be accepted as content valid. The researchers used the I-CVI information in revising, deleting, or substituting items. Items with a CVI over 0.80 were kept, and those ranging from 0.70 to 0.78 were revised. Items with a CVI score of less than 0.70 were rejected and not considered for further inclusion or revision. The S-CVI using universal agreement (UA) was calculated as the number of items with an I-CVI equal to 1 divided by the total number of items. The S-CVI/UA of the 21 items ranged from 0.8 to 1.0, indicating that the items had excellent content validity. The modified kappa was calculated using the formula $\kappa = (\text{I-CVI} - P_c)/(1 - P_c)$, where $P_c = \left[\frac{N!}{A!\,(N-A)!}\right] \times 0.5^{N}$. In this formula, $P_c$ is the probability of chance agreement, $N$ is the number of experts, and $A$ is the number of experts who agree that the item is relevant. Items with a kappa value ≥0.74 were retained, and those with values ranging from 0.04 to 0.59 were revised.
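The content validity indices and modified kappa described above can be illustrated with a short calculation. The following is a minimal Python sketch, not the authors' workflow (the study used Microsoft Excel). The function names, the example ratings, and the convention that ratings of 3 or 4 on a 4-point relevance scale count as "relevant" are all assumptions made for illustration.

```python
from math import comb

def item_cvi(ratings, relevant_threshold=3):
    """I-CVI: fraction of experts who rate the item as relevant.

    Assumption: a rating >= relevant_threshold counts as 'relevant'.
    Returns (I-CVI, number of agreeing experts).
    """
    n_agree = sum(1 for r in ratings if r >= relevant_threshold)
    return n_agree / len(ratings), n_agree

def modified_kappa(n_experts, n_agree):
    """kappa = (I-CVI - Pc) / (1 - Pc), with Pc = C(N, A) * 0.5**N."""
    i_cvi = n_agree / n_experts
    pc = comb(n_experts, n_agree) * 0.5 ** n_experts
    return (i_cvi - pc) / (1 - pc)

def scale_cvi_ua(all_ratings, relevant_threshold=3):
    """S-CVI/UA: share of items on which every expert agrees (I-CVI == 1)."""
    i_cvis = [item_cvi(r, relevant_threshold)[0] for r in all_ratings]
    return sum(1 for v in i_cvis if v == 1.0) / len(i_cvis)

# Hypothetical ratings: 3 items, each rated by 5 experts on a 1-4 scale.
example_ratings = [
    [4, 4, 3, 4, 3],  # all 5 experts rate the item relevant
    [4, 3, 2, 4, 4],  # 4 of 5 experts rate the item relevant
    [4, 4, 4, 4, 4],  # all 5 experts rate the item relevant
]

for ratings in example_ratings:
    i_cvi, n_agree = item_cvi(ratings)
    kappa = modified_kappa(len(ratings), n_agree)
    print(f"I-CVI = {i_cvi:.2f}, kappa = {kappa:.3f}")

print(f"S-CVI/UA = {scale_cvi_ua(example_ratings):.2f}")
```

With 5 experts, an item endorsed by 4 of them has an I-CVI of 0.80 and a modified kappa of about 0.763, which shows how the chance correction lowers the raw agreement figure.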

Reliability of the developed tool was evaluated through test-retest of the questionnaire items and by Cronbach’s alpha coefficient. For test-retest reliability, all 21 items were administered online through MS Forms to 28 radiation safety professionals working in India within a 4-week interval. The internal consistency of the subscale was assessed using IBM SPSS-16 software, and Cronbach’s alpha was 0.449.
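For readers unfamiliar with Cronbach’s alpha, the sketch below shows the standard formula, alpha = k/(k − 1) × (1 − sum of item variances / variance of total scores), in plain Python. It is illustrative only: the study computed alpha in SPSS, and the function name and the small response matrix here are hypothetical.

```python
from statistics import variance

def cronbach_alpha(scores):
    """Cronbach's alpha for a respondents-by-items score matrix.

    scores: list of rows, one row per respondent, one column per item.
    Uses sample variances (n - 1 denominator).
    """
    k = len(scores[0])  # number of items
    item_vars = [variance([row[j] for row in scores]) for j in range(k)]
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)

# Hypothetical data: 5 respondents answering 4 items scored 0/1.
example_scores = [
    [1, 1, 0, 1],
    [1, 0, 0, 1],
    [0, 0, 0, 0],
    [1, 1, 1, 1],
    [0, 1, 0, 0],
]

print(f"alpha = {cronbach_alpha(example_scores):.3f}")
```

Alpha approaches 1 when items vary together across respondents and falls toward 0 when item scores are unrelated, which is why a value such as 0.449 signals limited internal consistency.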

Pearson’s product-moment correlation was used to assess the correlation between the subscales of the questionnaire for item discrimination analysis as advocated by Haladyna 1999. Reference Haladyna7 A highly positive correlation was observed between communication and knowledge (r = 0.728). Moderate correlation was observed between performance and knowledge (r = 0.544) and also between performance and communication (r = 0.443). All 3 correlations among knowledge, communication, and performance were statistically significant at the 0.01 level (Table 3).

Table 3. Pearson correlation coefficient for the questionnaire

** Correlation is significant at 0.01 level (2-tailed).
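The subscale correlations reported in Table 3 are Pearson product-moment coefficients. As a minimal sketch of that calculation, assuming each respondent has one total score per subscale, the Python below computes r between pairs of subscales. The subscale totals are invented for illustration and do not reproduce the study data.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    s_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    s_xx = sum((a - mean_x) ** 2 for a in x)
    s_yy = sum((b - mean_y) ** 2 for b in y)
    return s_xy / sqrt(s_xx * s_yy)

# Hypothetical subscale totals (out of 7) for 6 respondents.
knowledge = [5, 6, 3, 7, 4, 6]
performance = [4, 5, 4, 6, 3, 5]
communication = [5, 6, 2, 7, 4, 5]

pairs = {
    "knowledge vs performance": (knowledge, performance),
    "knowledge vs communication": (knowledge, communication),
    "performance vs communication": (performance, communication),
}
for label, (a, b) in pairs.items():
    print(f"{label}: r = {pearson_r(a, b):.3f}")
```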

Overall, the intraclass correlation coefficient for all the measures was 0.646, which was statistically significant at the 0.05 level (P < 0.05).

Limitations

This tool has been developed and validated in consultation with content experts and peers in radiation protection. Furthermore, the tool was checked for reliability and internal consistency in another group of radiation safety professionals working in India. However, it has not yet been tested in a sample of interprofessional learners from the multidisciplinary health sciences, including medical students.

Discussion

The present study describes a new tool to assess the knowledge, performance, and communication skills of interprofessional radiation emergency team learners, developed with 5 subject experts as recommended by Lynn 1986. Reference Lynn6 In designing and developing this tool, all the steps for obtaining content validity evidence were followed (Polit and Beck 2006, Reference Polit and Beck8 Polit et al. 2007 Reference Polit, Beck and Owen9 ). The example provided was taken from a construction process instead of an adaptation process. Thorndike 1994 Reference Thorndike10 advocates that both reliability coefficients and alternative form correlation be reported, and this study follows that recommendation. The low reliability coefficient may indicate that the sample of 28 professionals was too small. Another possible reason, as Rattray and Jones reported in 2007, Reference Rattray and Jones11 may be the close-ended questions, which restrict the depth of participants’ responses and hence diminish the quality of the data or render it incomplete. A further possible reason is that the professionals who participated in the re-test did not pay the required attention to the items, were disinterested, or were distracted. One solution would be to conduct item discrimination analysis on a larger panel of experts. To address this in the present study, an alternative form of correlation was also calculated following the guidelines, and it is greater than the reliability coefficient (r = 0.66). Peer review practices using guidelines have led to improved psychometric characteristics of items. Reference Abozaid, Park and Tekian12 This instrument has been constructed and tested by a limited number of experts as pilot data collection, and the results are very promising; test-retest reliability still needs to be checked in either a larger expert panel or a group of multidisciplinary health science learners after a sufficient span of time.

The new “RadEM-PREM IPE assessment tool” would be very useful for assessment of multidisciplinary team-building in the area of radiation emergency preparedness and response in entry-level and intermediate-level emergency response team members.

Conclusions

This study concludes that the 21-item “RadEM-PREM IPE assessment tool” is a valid and reliable new measuring tool. It is intended to assess the knowledge, performance, and communication skills of interprofessional radiation emergency response team learners in hospital settings.

Acknowledgments

This project was done as a part of the Fellowship Program at MAHE-FAIMER International Institute for Leadership in Interprofessional Education (M-FIILIPE), Manipal Academy of Higher Education, (MAHE) Manipal, India. We thank Dr. Ciraj Ali Mohammed, Course Director M-FIILIPE and CCEID MAHE Manipal, all MAHE FAIMER faculty and MCHP Manipal for support and guidance.

Author contribution

B.S., M.K., P.T., S.S.M., W.W. Data curation: B.S., P.T., A.V., S.G.C., N.R.D. Formal analysis: A.V., A.K., B.S. Methodology: Project administration: B.S., S.G.C., N.R.D. Visualization: B.S., S.G. N.R.D. Writing–original draft: B.S., M.K., S.O., A.K.P., A.V. Writing–review and editing: B.S., M.K., S.O., A.V.

Funding

None.

Competing interests

None.

Appendix