Introduction

Evidence suggests that mentoring improves professional outcomes for both faculty mentors and mentees. In descriptive correlational studies, mentorship has been associated with improved mentee productivity [Reference Bertram1–Reference Steiner6], promotion [Reference Bertram1,Reference Lach2,Reference Buch7], academic self-efficacy [Reference Feldman8–Reference Palepu11], likelihood of mentoring others [Reference Steiner6], and career satisfaction [Reference Chung and Kowalski12–Reference August and Waltman14]. In a systematic review, mentorship has also been linked to personal development, career guidance, and career success [Reference Sambunjak, Straus and Marusic3]. Furthermore, in the only study with a comparison group, mentored faculty had higher mean grant dollars and submitted more proposals than the comparison cohort [Reference Libby15]. Thus, the preponderance of evidence, together with anecdotal accounts, indicates that mentoring contributes to faculty career development.

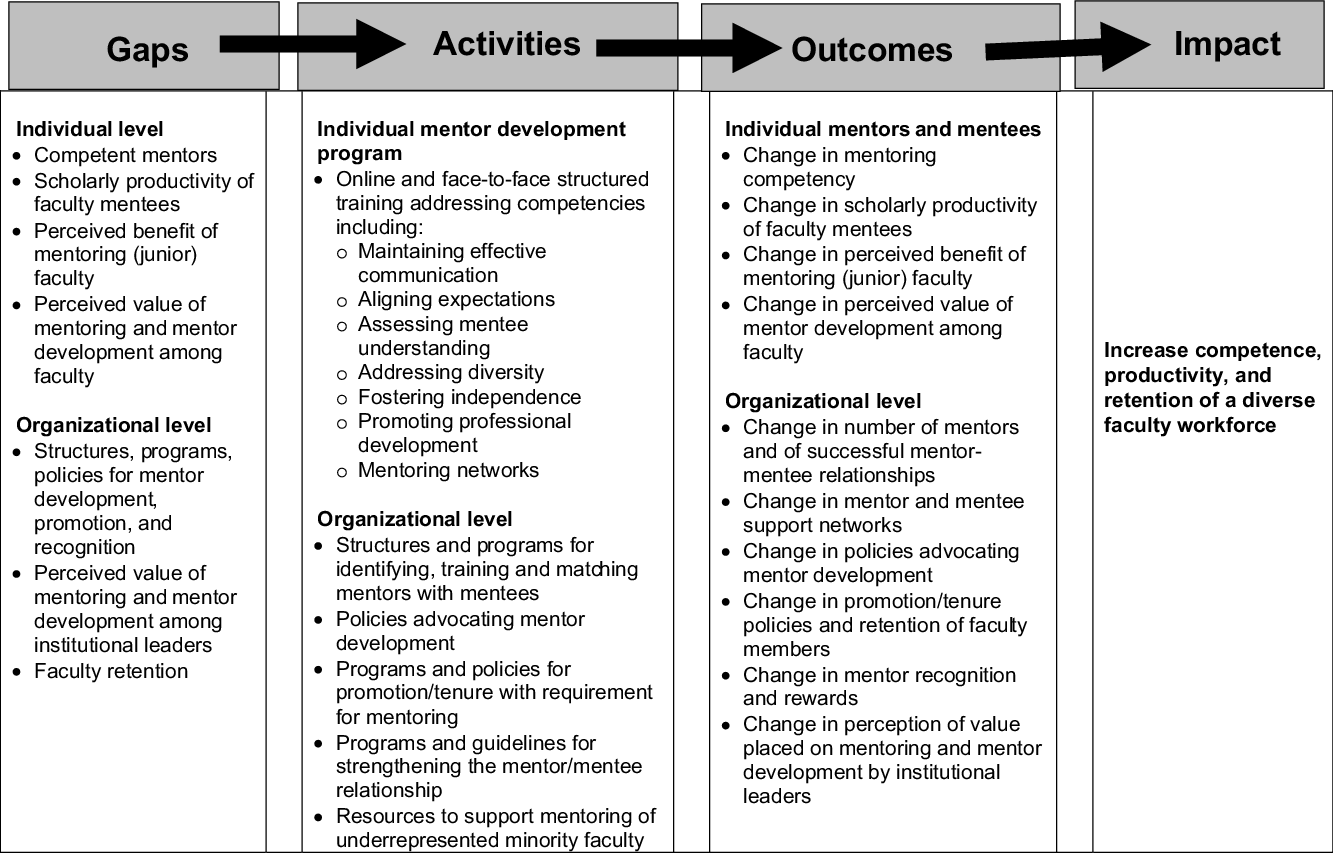

Individual faculty behavior, such as serving as a mentor, is likely influenced by individual beliefs, knowledge, and actions; social network expectations and behaviors (perceived or real); and characteristics of the organization in which the individual works (Fig. 1) [Reference Keyser16–Reference Zachary19]. Organizational climate is defined as the shared perceptions of, and the meaning attached to, the policies, practices, and procedures employees experience and the behaviors they observe being supported, expected, or rewarded [Reference Schneider, Ehrhart and Macey20]. In general, climates arise through three distinct processes within organizations: exposure to tangible structural characteristics (e.g. committees); practices that lead to the attraction, selection, and retention of people with similar characteristics; and socialization that teaches appropriate behavior [Reference Grojean, Resick and Dickson21–Reference Schneider23]. Organizations do not have a single climate; multiple climates coexist, each related to different organizational processes and strategic outcomes [Reference Schneider, Ehrhart and Macey20]. For example, the climate for safety may differ from the climate for mentoring.

Fig. 1. Individual and organizational influences on mentoring behavior and outcomes (adapted from [Reference Sood, Tigges and Helitzer17]).

While measures exist for individual outcomes related to research mentoring [Reference Fleming24], no reliable and valid scales currently exist for assessing the importance and availability of components of the organizational mentoring climate at research institutions of higher education [Reference Keyser16,25]. Nor is there information about which components of mentoring climate are relevant to faculty, whether the content or quality of organizational support for mentoring is related to mentoring behavior, or how to measure the impact of climate interventions. The lack of reliable and valid scales to assess organizational mentoring climate is a critical gap for studying interventions to improve that climate, and improving the climate is crucial to achieving the long-term goal of creating and strengthening a skilled and diverse academic workforce. Our study’s objective was to perform psychometric testing of two novel scales measuring the importance and availability of organizational mentoring climate components at research institutions of higher education.

Materials and Methods

Sample

In this cross-sectional psychometric study, we invited 6152 faculty from two public, research-intensive universities in the southwestern United States: one with 3195 faculty across main, health sciences, and branch campuses, and one without a medical school with 2957 faculty. A total of 616 (10.0%) surveys were returned; 355 (5.8%) had complete data (defined as answering at least one item near the end of the survey; 8.4% from University #1 and 2.7% from University #2) and constituted the final sample for psychometric analyses. For the initial 36-item scales, this sample met the accepted guideline of 5–10 participants per item (per scale) for exploratory factor analysis, where statistical significance is not tested and the concept of power does not apply [Reference DeVellis26–Reference Tinsley and Tinsley29].
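
The response-rate and sample-size arithmetic in this paragraph can be reproduced in a few lines. The sketch below uses Python purely for illustration; the counts are those reported above, while the variable and function names are our own.

```python
# Response-rate and participants-per-item arithmetic, using the counts reported in the text.
invited = {"University 1": 3195, "University 2": 2957}
total_invited = sum(invited.values())
returned = 616    # surveys returned
complete = 355    # surveys with complete data (final analytic sample)
n_items = 36      # items in each initial scale

def pct(part: int, whole: int) -> float:
    """Percentage rounded to one decimal place, as reported in the text."""
    return round(100 * part / whole, 1)

print(total_invited)                 # 6152
print(pct(returned, total_invited))  # 10.0
print(pct(complete, total_invited))  # 5.8

# Rule of thumb for exploratory factor analysis: 5-10 participants per item.
low, high = 5 * n_items, 10 * n_items
print(low, high, round(complete / n_items, 2))  # 180 360 9.86
```

With 355 usable responses, the sample provides just under 10 participants per item, at the upper end of the guideline band.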

Procedures

Procedures for instrument development included item development based on the literature and investigators’ experience, the evaluation and rating of content validity, and final administration to faculty to evaluate the reliability and construct validity of the Organizational Mentoring Climate Importance (OMCI) Scale and the Organizational Mentoring Climate Availability (OMCA) Scale.

Item development

We developed 37 mentoring climate items classified into four dimensions: Structure (13 items), Programs/Activities (11 items), Policy/Guidelines (9 items), and Overall Value (4 items). Items in the first three dimensions were based on Keyser and colleagues’ [Reference Keyser16] conceptual model of institutional efforts to support academic mentoring. We supplemented those items with additional items drawn from a literature review and our own experiences, particularly with mentoring faculty from under-represented populations.

Content validity

Content validity of the items was determined using established methods [Reference Lynn30]. Four independent, nationally known content experts from the National Research Mentoring Network [Reference Sorkness31] with expertise in mentoring and mentoring climate (all from outside the institutions where items were developed and construct validity was studied) participated in this phase. The experts separately evaluated whether each item was relevant to the overall conceptual framework of organizational mentoring climate and related to the dimension in which it was placed in the survey, using an ordinal 4-point rating scale (1 = not relevant; 2 = unable to assess relevance without revision, or in need of such revision that it would no longer be relevant; 3 = relevant but needs minor alteration; 4 = very relevant and succinct). As a result of this process, 3 of the 37 items were deleted, 2 new items were written, and 8 were modified in response to expert feedback. The resulting 36 items were judged content valid and retained. Mean content validity scores for the individual items, as they related to the overall conceptual framework, ranged from 3.25 to 4.0 [Reference Sood, Tigges and Helitzer32]. The number of items in the developed instrument was consistent with guidelines that an instrument’s initial item pool contain 1.5 to 2 times as many items as the final instrument [Reference Nunnally and Bernstein33].
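
A minimal sketch of how expert ratings on the 4-point relevance scale can be summarized per item. The ratings below are hypothetical, not the panel’s actual ratings. Alongside the item mean (the summary reported here), the sketch computes the proportion of experts rating an item 3 or 4, the item-level content validity index associated with Lynn’s approach.

```python
import numpy as np

# Hypothetical ratings (not study data): rows = 4 experts, columns = 4 items,
# each rated on the 1-4 relevance scale described above.
ratings = np.array([
    [4, 3, 4, 2],
    [4, 4, 3, 3],
    [3, 4, 4, 2],
    [4, 3, 4, 3],
])

mean_scores = ratings.mean(axis=0)   # mean relevance rating per item
i_cvi = (ratings >= 3).mean(axis=0)  # share of experts rating the item 3 or 4

for j, (m, c) in enumerate(zip(mean_scores, i_cvi), start=1):
    print(f"item {j}: mean = {m:.2f}, I-CVI = {c:.2f}")
```

In this toy example the fourth item, with a mean of 2.50 and only half the experts rating it relevant, is the kind of item that would be revised or deleted.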

Instrument testing

We created email distribution lists using faculty rosters from both universities, with permission from the appropriate institutional offices, and distributed email invitations to participate in the online Research Electronic Data Capture (REDCap) survey [Reference Harris34]. The invitation clearly stated that participation was voluntary. The email included a web link that allowed faculty to accept or decline participation. Faculty who neither declined nor completed the survey were sent additional biweekly invitations over 4 months using the automated REDCap survey administration feature. Those who started but did not complete the survey were sent a reminder to finish.

The online survey included an IRB-approved informed consent document; consent was implied if the participant clicked the link provided in the email. The link led to the anonymous, structured, self-administered REDCap survey, which took approximately 20 min to complete. The first scale (OMCI) asked about the importance of 36 items (organizational structures, programs, policies, and values related to mentoring) using 5-point Likert response options ranging from “very important (1)” to “very unimportant (5)” with a neutral midpoint. A sample importance item is: “Rate how important you think the following is to mentoring success, in general, at any institution – An annual award for excellence in mentorship to a faculty member.” The second scale (OMCA), located in a different section of the survey, used the same 36 items but asked respondents about the presence or absence of each item at their college/school/department/division (30 items) or their institution (6 items). Response options were “no (−1),” “don’t know (0),” and “yes (1).” We also included a brief set of demographic questions covering gender, race, ethnicity, career stage, institution, faculty track and rank, and experience with mentoring.

Data Analysis

For construct validity testing of both the OMCI and OMCA Scales, we used exploratory common factor analysis with principal axis factoring and oblique Promax rotation, assuming that each item’s variance contained both a systematic (shared) component and random measurement error, and that the factors would be correlated. Because higher correlations between factors make the factor structure loadings more difficult to interpret, delta (δ) was set at −1.0 in SAS software [35] to limit the degree of correlation (obliqueness) between factors to approximately 0.30 [Reference Nunnally and Bernstein33,Reference Pett, Lackey and Sullivan36]. Factor extraction followed guidance that extraction continue until the extracted factors account for at least 90% of the explained variance or until the last factor accounts for only a small portion (<5%) of the explained variance [Reference Hair37], supplemented by examination of eigenvalues and the scree plot. We examined factor pattern matrices to determine simple structure for both scales, as these matrices control for correlation among the factors and are most easily interpreted [Reference Hair37,Reference Tabachnick and Fidell38]. Criteria for retaining items were (a) a rounded loading of ≥0.40 on a given factor; (b) a loading at least 0.10 to 0.15 higher than the loading on any other factor; and (c) theoretical considerations.
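
Retention criteria (a) and (b) are mechanical enough to express as code; criterion (c) is a judgment and is left out. The sketch below is written in Python with NumPy as an illustration, not a reproduction of the SAS procedure used in the study. It assumes a rotated pattern matrix with items as rows and factors as columns; the function name, default thresholds, and example loadings are hypothetical.

```python
import numpy as np

def retained_items(pattern: np.ndarray, min_loading: float = 0.40,
                   min_gap: float = 0.10) -> list[int]:
    """Indices of items meeting loading criteria (a) and (b).

    pattern: items x factors matrix of rotated pattern loadings (hypothetical input).
    (a) the largest absolute loading, rounded to two decimals, is >= min_loading;
    (b) it exceeds every other absolute loading for the item by >= min_gap.
    Criterion (c), theoretical judgment, is not automated here.
    """
    keep = []
    for i, row in enumerate(np.abs(pattern)):
        order = np.argsort(row)[::-1]  # factor indices, largest loading first
        primary, runner_up = row[order[0]], row[order[1]]
        if round(primary, 2) >= min_loading and round(primary - runner_up, 2) >= min_gap:
            keep.append(i)
    return keep

# Hypothetical 4-item, 3-factor pattern matrix.
pattern = np.array([
    [0.72, 0.05, 0.10],  # clean primary loading: kept
    [0.38, 0.12, 0.02],  # primary loading below 0.40: dropped
    [0.48, 0.43, 0.01],  # cross-loading gap of 0.05: dropped
    [0.04, 0.61, 0.22],  # clean primary loading: kept
])
print(retained_items(pattern))  # [0, 3]
```

Setting `min_gap=0.15` applies the stricter end of the 0.10 to 0.15 range.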

We assessed internal consistency reliability using Cronbach’s alpha coefficients. We made final decisions about item retention and subscale composition by examining inter-item correlation matrices, average inter-item and item-to-total correlation coefficients, and alpha-if-item-deleted estimates [Reference Ferketich39].
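
The reliability statistics named above can be sketched as follows. Raw alpha is k/(k − 1) × (1 − sum of item variances / variance of the total score); the standardized alpha reported in Table 3 depends only on the average inter-item correlation r̄, as k·r̄ / (1 + (k − 1)·r̄). The data are simulated, and the function names are illustrative rather than part of any statistics package.

```python
import numpy as np

def cronbach_alpha(x: np.ndarray) -> float:
    """Raw Cronbach's alpha for an (n_respondents x k_items) score matrix."""
    k = x.shape[1]
    sum_item_var = x.var(axis=0, ddof=1).sum()  # sum of item variances
    total_var = x.sum(axis=1).var(ddof=1)        # variance of the summed scale score
    return float(k / (k - 1) * (1 - sum_item_var / total_var))

def standardized_alpha(x: np.ndarray) -> float:
    """Standardized alpha: k * r_bar / (1 + (k - 1) * r_bar)."""
    k = x.shape[1]
    r = np.corrcoef(x, rowvar=False)
    r_bar = r[np.triu_indices(k, k=1)].mean()    # mean of off-diagonal correlations
    return float(k * r_bar / (1 + (k - 1) * r_bar))

def alpha_if_item_deleted(x: np.ndarray) -> list[float]:
    """Raw alpha recomputed with each item left out in turn."""
    return [cronbach_alpha(np.delete(x, j, axis=1)) for j in range(x.shape[1])]

def corrected_item_total(x: np.ndarray) -> list[float]:
    """Correlation of each item with the sum of the remaining items."""
    total = x.sum(axis=1)
    return [float(np.corrcoef(x[:, j], total - x[:, j])[0, 1]) for j in range(x.shape[1])]

# Simulated data (not study data): 355 respondents, 5 items driven by one latent trait.
rng = np.random.default_rng(0)
latent = rng.normal(size=(355, 1))
scores = latent + rng.normal(scale=0.8, size=(355, 5))
print(round(cronbach_alpha(scores), 2), round(standardized_alpha(scores), 2))
print([round(a, 2) for a in alpha_if_item_deleted(scores)])
print([round(c, 2) for c in corrected_item_total(scores)])
```

An item whose alpha-if-deleted value exceeds the full-scale alpha, or whose corrected item-total correlation is low, weakens internal consistency and is a candidate for removal.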

Results

Sample

Table 1 shows the characteristics of the 355 respondents from the two universities. The sample was predominantly female (62.5%), White (76.3%), non-Hispanic (77.5%), on the tenure track (54.9%), and from non-health sciences campuses (54.2%). In total, 54.6% of respondents held the senior ranks of Associate or Full Professor, evenly split between the two ranks. Most were not involved in a faculty mentoring relationship as mentors (59.7%) or mentees (73%) at the time of the study.

Table 1. Respondent characteristics (n = 355)

Item Analysis and Descriptive Statistics

Responses to each of the final 15 items (retained from the 36 initial items) ranged from 1 (very important) to 5 (very unimportant) on the OMCI Scale and from −1 (no) through 0 (don’t know) to 1 (yes) on the OMCA Scale. Item means for the OMCI Scale (Table 2) ranged from 1.5 to 2.1 (SD = 0.8–1.1), indicating a general perception that all items were at least somewhat important.

Table 2. Descriptive statistics of 15-item Organizational Mentoring Climate Importance (OMCI) scale, and Organizational Mentoring Climate Availability (OMCA) scale items

1 Rate how important you think each of the following are to mentoring success, in general, at any institution. Responses: 1 = Very important; 2 = Somewhat important; 3 = Neither important nor unimportant; 4 = Somewhat unimportant; 5 = Very unimportant.

2 Available at my college/school/department/division (items 1–11) or institution (items 12–15). Responses: −1 = No; 0 = Unsure; 1 = Yes.

3 Percentages may not sum to 100% because of nonresponse (only 0–3 respondents).

For the OMCA Scale, item means ranged from −0.65 to 0.16 (SD = 0.54–0.88), indicating that the perceived availability of the 15 items was generally low. At the high end, 39.7% of respondents reported that their institution had a mentor training program, 36.6% reported mentorship training materials, 29% reported that mentoring requirements were discussed at meetings, and 26.2% reported a policy or guideline providing access to unconscious bias training for all faculty. At the low end, only 2.5% reported any type of policy or guideline to manage conflict in the mentor–mentee relationship, and as few as 2.3% reported either a policy or a committee delineating the criteria to evaluate mentoring success. Across the 15 items, between 14.9% and 60.8% of respondents reported that they did not know whether the item was available.

Exploratory Factor Analysis

Inspection of the correlation matrices and sampling adequacy statistics indicated that factor analysis was appropriate for both the OMCI and OMCA items. For the OMCI Scale, the Kaiser–Meyer–Olkin measure of sampling adequacy (MSA) was 0.95, and all item-level MSAs exceeded 0.90. For the OMCA Scale, the Kaiser–Meyer–Olkin MSA was 0.88, and all item-level MSAs exceeded 0.82.
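
The Kaiser–Meyer–Olkin statistic compares the size of the observed correlations with the size of the partial correlations (each pair controlling for all other items); values near 1 indicate that the items share enough common variance to be factored. A minimal NumPy sketch, assuming a nonsingular correlation matrix and using simulated rather than study data:

```python
import numpy as np

def kmo(r: np.ndarray) -> tuple[float, np.ndarray]:
    """Overall Kaiser-Meyer-Olkin statistic and per-item MSA for a correlation matrix r."""
    inv = np.linalg.inv(r)
    scale = np.sqrt(np.outer(np.diag(inv), np.diag(inv)))
    partial = -inv / scale                      # partial correlations given all other items
    off_diag = ~np.eye(r.shape[0], dtype=bool)  # exclude the diagonal from all sums
    r2 = np.where(off_diag, r ** 2, 0.0)
    p2 = np.where(off_diag, partial ** 2, 0.0)
    msa = r2.sum(axis=0) / (r2.sum(axis=0) + p2.sum(axis=0))
    overall = float(r2.sum() / (r2.sum() + p2.sum()))
    return overall, msa

# Simulated data (not study data): 8 items sharing one common factor.
rng = np.random.default_rng(1)
latent = rng.normal(size=(355, 1))
items = latent + rng.normal(scale=0.7, size=(355, 8))
overall, msa = kmo(np.corrcoef(items, rowvar=False))
print(round(overall, 2), msa.round(2))
```

When items share a strong common factor, partial correlations shrink toward zero and the statistic approaches 1; with only two variables it is always exactly 0.5.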

We aimed to develop two parallel scales (OMCI and OMCA) with identical items for ease of administration and interpretation. Our analysis began with the 36 importance items. After examining the correlation matrices, we dropped eight items that were highly correlated (r > 0.79) with similar items. An initial principal axis factor analysis with oblique rotation of the remaining 28 items suggested a three-factor solution and led to the elimination of four items with weak loadings (<0.40) and two items whose loadings on two different factors differed by ≤0.10. All six deleted items had retained counterparts with similar content.
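
Screening for near-duplicate items, as described above, amounts to scanning the upper triangle of the item correlation matrix. A sketch with simulated data follows; the 0.79 cut-off comes from the text, the function name is hypothetical, and choosing which member of a flagged pair to drop remains a content judgment.

```python
import numpy as np

def highly_correlated_pairs(x: np.ndarray, labels: list[str],
                            threshold: float = 0.79) -> list[tuple[str, str, float]]:
    """Item pairs whose Pearson correlation exceeds threshold, strongest first."""
    r = np.corrcoef(x, rowvar=False)
    rows, cols = np.triu_indices_from(r, k=1)  # each pair once, diagonal excluded
    hits = [(labels[a], labels[b], float(r[a, b]))
            for a, b in zip(rows, cols) if r[a, b] > threshold]
    return sorted(hits, key=lambda hit: hit[2], reverse=True)

# Simulated data (not study data): item C is nearly a duplicate of item A.
rng = np.random.default_rng(2)
a = rng.normal(size=355)
b = rng.normal(size=355)
c = a + rng.normal(scale=0.1, size=355)
x = np.column_stack([a, b, c])
for pair in highly_correlated_pairs(x, ["A", "B", "C"]):
    print(pair)
```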

To determine whether the two scales had a similar factor structure, we fit a three-factor solution to the 22 OMCA items corresponding to the 22 remaining OMCI items. Fifteen OMCA items loaded on the same factors as the corresponding OMCI items, yielding the final two 15-item scales presented in this paper. Of the seven items dropped from both scales on the basis of the OMCA analysis, one did not load on any factor, two had cross-loadings, and four loaded on different factors than in the OMCI analysis. All seven deleted items had retained counterparts with similar content.
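
The cross-scale comparison can be sketched as matching each item’s primary factor (its largest absolute loading) between the two pattern matrices. This assumes the factor columns of both solutions have already been aligned, so that column 1 represents the same factor in each; the function name and loadings below are hypothetical. In practice one would also require each item to meet the loading and cross-loading criteria in both analyses.

```python
import numpy as np

def same_primary_factor(pattern_a: np.ndarray, pattern_b: np.ndarray) -> list[int]:
    """Indices of items whose largest absolute loading falls on the same factor in both
    matrices. Assumes factor columns are already aligned across the two solutions."""
    primary_a = np.abs(pattern_a).argmax(axis=1)
    primary_b = np.abs(pattern_b).argmax(axis=1)
    return np.flatnonzero(primary_a == primary_b).tolist()

# Hypothetical importance (OMCI) and availability (OMCA) loadings for 4 items, 3 factors.
omci = np.array([[0.7, 0.1, 0.0],
                 [0.1, 0.6, 0.1],
                 [0.0, 0.2, 0.5],
                 [0.5, 0.1, 0.2]])
omca = np.array([[0.6, 0.2, 0.1],
                 [0.5, 0.3, 0.0],   # loads on factor 1 here but factor 2 in omci
                 [0.1, 0.1, 0.7],
                 [0.6, 0.0, 0.1]])
print(same_primary_factor(omci, omca))  # [0, 2, 3]
```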

The final exploratory analyses yielded a three-factor solution for each scale that explained 94% and 88% of the variance for the OMCI and OMCA Scales, respectively. Table 3 shows the final factor pattern matrices for the 15-item scales and their three subscales: Organizational Expectations, Mentor–Mentee Relationships, and Resources. Pattern loadings are analogous to standardized partial regression coefficients in multiple regression: each reflects the unique relation between a factor and an item, controlling for the other factors.

Table 3. Oblique rotated (PROMAX) factor pattern matrices, means, standard deviations and standardized Cronbach alpha reliabilities for 15-item Organizational Mentoring Climate Importance (OMCI) and Availability (OMCA) scales and subscales

Internal Consistency Reliability

Table 3 shows the means, standard deviations, and standardized Cronbach alpha internal consistency reliabilities for the two scales and their three subscales. Cronbach alpha values for the subscales ranged from 0.74 to 0.90, an acceptable range for newly developed instruments [Reference DeVellis26,Reference Nunnally and Bernstein33]. Cronbach alpha values for the full 15-item OMCI and OMCA Scales were 0.94 and 0.87, respectively.

Discussion

For decades, faculty at research institutions of higher education have contended that mentoring is critical to faculty success [Reference Sood, Tigges and Helitzer17,Reference Steiner18,25,Reference Sorkness31]. Although organizational climate may affect faculty members’ mentoring behaviors, until now there has been no research-based instrument to measure organizational mentoring climate. The results of this study provide preliminary evidence of reliability and validity for the newly developed OMCI and OMCA Scales, which are designed to measure the importance and availability of critical organizational structures, programs, and policies related to mentoring. Measures like these are essential for studying whether organizational climate is associated with mentoring behavior and, if so, which interventions might effectively change the organizational mentoring climate.

We chose the items in this study based on the literature and our experience-based hypotheses about which organizational characteristics are important for mentoring climate, and we empirically tested these items with faculty to determine their perceived importance and availability. Based on these data, we posit that organizations seeking a positive organizational mentoring climate should invest in three areas: organizational expectations related to mentoring involvement, guidance in formalizing the mentor–mentee relationship, and concrete resources to support mentoring. These investments would entail creating explicit expectations for senior faculty’s engagement in mentoring relationships with junior faculty; establishing opportunities for regular discussion of the specific qualifications needed to be an effective mentor; implementing structures for frequent examination of the quality of mentoring relationships; and including mentoring success as a criterion for tenure and promotion. Formalizing the mentor–mentee relationship requires assessing mentors’ qualifications and mentor–mentee assignments, establishing criteria to evaluate the success of the relationship, and providing guidance and structures for managing conflict between mentors and mentees. Finally, mentor training is a major initiative identified for improving mentoring in academia [Reference Sood, Tigges and Helitzer17,Reference Pfund40], and the results of this study support that emphasis: mentor training programs and materials emerged as concrete resource components of organizational mentoring climate. Resources to support mentoring of under-represented groups are also crucial [Reference Gibson41,Reference Pololi, Cooper and Carr42].

There was a marked discrepancy between the perceived importance of all organizational mentoring climate characteristics and their reported availability: what faculty considered important was often not available. These data suggest that research institutions of higher education may be slightly better at providing concrete resources than at setting explicit expectations or formalizing mentor–mentee relationships. Even if some respondents simply did not know that specific resources were available at their institution, that lack of awareness is itself a concern. Further study is needed to determine how accurately faculty perceive institutional mentoring supports relative to the resources actually available. Nonetheless, the results of this study are consistent with other reports showing that fewer than half of the studied universities or departments require mentoring for faculty promotion [Reference Shea5,Reference Stolzenberg43]; have formal mentor training [Reference Stolzenberg43,Reference Tillman44]; or have criteria to qualify as a mentor, stated mentor responsibilities, written mentor/mentee agreements, or guidelines for conflict management [Reference Tillman44]. It is therefore not surprising that nearly two-thirds of faculty report that they are not currently involved in mentoring relationships with other faculty [Reference Stolzenberg43]. Only one-third to one-half of faculty report having a mentor; in some fields, the prevalence is as low as 20% [Reference Sambunjak, Straus and Marusic3,Reference Palepu11,Reference Chung and Kowalski12,Reference Ramanan45], and overall, fewer than half of the faculty surveyed have had sustained, influential mentoring relationships [Reference Steiner6].

Based on the results of this study, we offer several recommendations for advancing the science of faculty mentoring. First, we found initial support for the reliability and the content and construct validity of the newly developed OMCI and OMCA Scales; however, construct validity must be examined further in additional, diverse faculty samples from multiple institutions, using confirmatory factor analysis to test whether the factor structure replicates. Test–retest reliability and convergent and discriminant validity must also be explored.

Second, we need to assess whether activities that address specific gaps in organizational mentoring climate affect faculty mentoring success, and whether our measures of mentoring climate are sensitive or responsive to organizational interventions designed to improve that climate. Little agreement exists about which organizational characteristics encourage, improve, or reward faculty mentoring behavior and relationships [Reference Keyser16,25,Reference Tillman44]. Moreover, not all studies of climates other than mentoring have demonstrated a significant moderating effect of climate strength in predicting outcomes, so merely counting the number of mentoring climate items available at an institution may be inadequate [Reference Schneider, Ehrhart and Macey20]. We may need to explore more complex causal models of the desired outcomes (see Fig. 1).

Third, consistent with existing measures of organizational climate, the scales measure individual faculty members’ perceptions of the mentoring climate; however, there may be aggregate effects, such as school-, department-, or division-level effects, that differ from individual-level effects. The microclimate, or the climate of the organizational unit most proximal to an individual, may be more important than the climate of the overall parent organization. In this study, we asked participants to focus on the level most relevant to them when answering the availability questions. The availability items linked to the college/school/department/division level rather than the institutional level (the resource items) were also the items with the highest ratings on the importance scale. We recommend that future studies attend to the level at which mentoring climate is measured. Examining inter-rater agreement, including both within- and between-group inter-rater reliability, in future studies would provide support for aggregating individual perceptions to group or organizational levels of analysis [Reference Schneider, Ehrhart and Macey20]. Ultimately, studies using multilevel modeling are needed to distinguish individual, dyadic, group, and organizational influences on mentoring behavior.

Finally, while there is some debate, we need to carefully consider whether or not organizational mentoring climate is the same as mentoring culture. Culture is defined as: “the shared values and basic assumptions that explain why organizations do what they do and focus on what they focus on; it exists at a fundamental, perhaps preconscious level of awareness that is grounded in history and tradition” (p. 468) [Reference Schneider46]. In this study, we made a distinction between organizational climate and organizational culture related to mentoring. Organizational climate includes factors that are more visible and easier to assess and change. In contrast, organizational culture includes the unspoken rules, values, and beliefs that may influence faculty behavior and engagement [Reference Schneider, Ehrhart and Macey20,25,Reference Schneider46]. Organizational mentoring culture is likely initiated and reinforced by the organizational climate – the structures, programs, and policies related to mentoring. In this study, the four values items developed in the content validity phase did not load in the final factor analyses; this suggests that either they were integral to the final remaining items and did not contribute unique variance or that they represented some other incompletely measured construct such as organizational culture. Organizational leaders play a crucial role in establishing and reinforcing a values-based organizational culture [Reference Grojean, Resick and Dickson21], and it is likely that both the climate and culture set the tone for the importance, value, rewards, and consequences of mentoring [Reference Steiner18,Reference Ramanan45,Reference Castellanos47,Reference Chandler, Kram and Yip48].

This study has several limitations. First, although we sent multiple email invitations, few potential participants responded to the survey. Nevertheless, the number of participants was sufficient for the planned psychometric testing. Because psychometric studies typically require 1.5–2 times as many initial items as the final instrument contains [Reference Nunnally and Bernstein33], our survey was lengthy: it tested 36 items for two different scales. Length may have reduced response; although 616 (10.0%) surveys were returned, only 355 (5.8%) respondents answered an item near the end of the survey and were usable. In addition, response was higher at the lead investigator’s institution (8.4% vs. 2.7%). We anticipate that shorter surveys (15-item scales), ideally sent by an investigator affiliated with the study university, would improve response rates in the future.

In addition, respondents were predominantly female (62.5%), White (76.3%), and non-Hispanic (77.5%), and slightly more than half were on the tenure track (54.9%). Compared with the university populations, women, White faculty, Hispanic faculty, and tenure-track faculty were over-represented in the sample (University 1: 50.8% female, 62.8% White, 85.6% non-Hispanic, 31.3% tenure-track [49]; University 2: 45.4% female, 73.2% White, 92.4% non-Hispanic, 42.1% tenure-track [50,51]). Just as construct validity of the scales must be tested in additional samples, future subgroup analyses are warranted to determine whether perceptions of climate are the same across demographic and professional groups. One could speculate that women, White and Hispanic faculty, and tenure-track faculty may be more likely than others to respond to a survey about mentoring climate and to have heightened interest in it. Finally, some respondents may have had limited knowledge about certain organizational characteristics related to mentoring, which may explain the relatively high number of “I don’t know” responses. This perceived limited availability may reflect a lack of high-level initiatives or of designated leadership support for promoting mentoring activities at the institution.

Conclusions

This research addresses a new area of science, the study of organizational mentoring climate in universities. For the first time, there are self-report scales that may be used to describe and quantify both the availability of and perceptions about the importance of specific mentoring structures, programs, and policies; this will allow further studies about how organizational mentoring climate may affect mentor–mentee behavior and whether it is possible to change organizational mentoring climate to improve faculty mentoring outcomes.

Changing the organizational mentoring climate is not easy; however, it could have important ramifications for faculty development and sustainability, particularly the career advancement of individuals from under-represented groups [25]. Change requires a shared vision, energetic change agents, significant long-term support from institutional leaders, and widespread buy-in from all faculty. A healthy organizational mentoring climate is essential for achieving the long-term goal of a skilled and diverse academic workforce.

Acknowledgements

This study was supported by a University of New Mexico School of Medicine Research Allocation Committee grant; the U.S. National Institutes of Health, National Institute of General Medical Sciences, Grant Number U01 GM132175; and the U.S. National Institutes of Health, National Center for Advancing Translational Sciences, Grant Number UL1 TR001449.

Disclosures

The authors have no conflicts of interest to declare.