Introduction

The European Network for Health Technology Assessment (EUnetHTA) consists of over eighty organizations from thirty European countries involved in national and/or regional Health Technology Assessment (HTA) processes. The goals of the network are to share HTA knowledge, information, and experiences, to make the best use of resources, and to reduce duplication. EUnetHTA was established in 2006 and has benefited from several grants from the European Union (EUnetHTA project, 2006–8; EUnetHTA Joint Action 1, 2010–12; EUnetHTA Joint Action 2, 2012–15; and EUnetHTA Joint Action 3 (JA3), 2016–21). Detailed information on the project phases is available on the EUnetHTA website (1). One of the main objectives of EUnetHTA JA3 is to lay the foundations for a sustainable model of scientific and technical collaboration on HTA at the European level (2).

The relevance of collaboration depends largely on the reliability of its processes and outcomes, and trust in the work produced by other network partners is essential. A mechanism is therefore required to ensure that jointly produced assessment reports consistently meet a sufficiently high standard that fulfills predefined expectations (i.e., that they are of high quality). To this end, EUnetHTA established a quality management system (QMS) as one of its central deliverables in JA3. The aim was to create a sustainable framework consisting of QMS structures (quality policy, processes, procedures, and organizational structures) combined with quality management (QM) measures (quality planning, quality assurance, quality control, and quality improvement) (3) (see Figure 1). Beyond ensuring high-quality processes and outcomes, the QMS was intended to improve efficiency as well as the transparency and standardization of processes and methods. A clear formulation of QM elements also supports communication and a shared understanding of quality and QM. Ultimately, this should increase the uptake and acceptance of EUnetHTA's joint work at the national level.

Figure 1. EUnetHTA QMS.

This article has two objectives: first, we aim to present EUnetHTA's newly established QMS, including its guiding principles, structures, and components. Second, we aim to outline our experiences during the development and implementation of the QMS, the approaches chosen to overcome challenges, and the lessons learnt that could also be of relevance for similar networks.

Methods

Defining a Common Understanding of Quality and QM

In 2011, the European Union explicitly emphasized the link between quality and collaborative European HTA work in the Cross-border Healthcare Directive. Article 15 of this directive defines the key objectives of cooperative European HTA as the provision of objective, reliable, timely, transparent, comparable, and transferable HTA information, its effective exchange, and the avoidance of duplicate assessments (4). In preparation for JA3, and based on a strategy paper developed in 2012 (5), the EUnetHTA consortium partners agreed to initiate a comprehensive QMS for EUnetHTA. A dedicated work package (WP), WP6 “Quality Management, Scientific Guidance and Tools,” was established to coordinate all QMS-related activities. WP6 consists of twenty-seven partner organizations from across Europe. Early in JA3, a QM concept paper was developed that comprehensively describes the fundamental aspects of QM as well as EUnetHTA's specific understanding of it. Its development involved a broad range of partners and took into account international standards and the relevant scientific literature (6).

Developing and Improving Processes and Methods for Joint Work

Several components of the QMS, such as process descriptions, templates, and methods, had already been developed in previous EUnetHTA Joint Action phases (7;8). The aim in JA3 was to add missing components, reconfigure the overall framework, and build a comprehensive QMS as a blueprint for European HTA collaboration after JA3. For this purpose, two strands of activities were established. In the first strand, the WP6 partners mapped existing guidelines and IT tools early in JA3 and, following extensive discussions on processes, weighting, and prioritization, selected the guidelines and tools to be updated as well as those to be newly developed. Reasons for revising methodological guidelines included, for example, that the methods described were outdated or that internal or external stakeholders perceived them as imprecise or incomplete (for a detailed description, see the published report (9)). Based on these findings, several working groups were established to refine and complement the existing guidelines and IT tools. The development of methodological guidelines also included a public consultation phase open to all interested parties.

The second strand of activities focused on processes and procedures for joint work. As a first step, a formal and inclusive process for creating and revising standard operating procedures (SOPs) was agreed upon. Building on the processes established in previous EUnetHTA Joint Action phases and in close collaboration with the partners responsible for producing joint assessments (EUnetHTA WP4), the WP6 teams converted the process descriptions in the procedure manuals into SOPs and other guidance documents. These cover, seamlessly and in chronological order, all phases of the assessment of both pharmaceutical and nonpharmaceutical technologies (“Other Technologies”).

All components of the QMS relevant to EUnetHTA assessments (e.g., SOPs, templates, and guidelines) were incorporated into a web-based platform, the EUnetHTA Companion Guide. This “one-stop shop” was conceptualized, technically implemented, and tested from mid-2017 to mid-2018, and feedback from all partner organizations was collected continuously throughout this period. The Companion Guide was launched in May 2018; access is restricted to EUnetHTA partners. To further improve its user-friendliness, a user test was conducted in July and August 2019, which led to a revised version.

Evaluating the Components of the QMS

An essential part of QM is continuous quality improvement (3). Accordingly, all components of the QMS were continuously evaluated and improved by repeatedly applying the “Plan-Do-Check-Act cycle,” also called the “Deming cycle” (10) (see Figure 1). In addition to other feedback channels, an online survey was set up to systematically collect feedback from EUnetHTA assessment teams and project managers on the acceptance and usability of the QMS components after publication of each assessment. Details of the QMS evaluation have been published (11). In addition, the uptake of EUnetHTA assessments in national HTA procedures and the reasons for their use or nonuse were monitored continuously by a dedicated work package (WP7) through so-called implementation surveys of EUnetHTA partners and non-EUnetHTA agencies (12). These results also provided insights into the impact of the newly established QMS on the national implementation of EUnetHTA assessments.

Interacting with EUnetHTA's Working Groups

Throughout the project, a third category of overarching procedural and methodological issues emerged that was not covered by the predefined tasks, such as how to define the scope of an assessment from a European perspective. Several task groups and subgroups (hereafter referred to as working groups) were established to address these additional needs (see Footnote 1). To ensure overall consistency, close collaboration was established between the working groups and the teams working on the QM processes and products.

Setting Up Expert Groups to Exchange Knowledge and Provide Methodological Support

During the project, several methodological questions arose that called for ad hoc support and further exploration. To pool knowledge, allow effective exchange on methodological issues, and ensure that the required expertise is available for each assessment, EUnetHTA established two support groups of specialists: the Information Specialist Network and the Statistical Specialist Network.

Results

The Implementation of the EUnetHTA QMS

Since the project started in May 2016, WP6 has developed and updated around forty SOPs and other parts of the QMS. The assessment project managers helped the assessment teams identify all documents and tools relevant to specific process steps, monitored compliance with procedures, and informed the teams about new or updated content. The following sections outline the implementation of the main QMS activities and outputs.

SOPs and Process Flows

Separate SOPs for pharmaceutical and nonpharmaceutical technologies have been developed following a predefined and inclusive process. The SOPs describe in detail who is responsible for which task during the assessment process and provide timelines for the assessment phases and tasks. Furthermore, the SOPs and other guidance documents address the qualifications and skills needed to set up an assessment team, internal and external review processes, and the identification of, and collaboration with, patients/patient representatives, healthcare professionals, and manufacturers/marketing authorization holders. The SOPs also provide quality control checklists, email templates, and links to relevant methodological guidelines and tools in order to guide assessors through the entire assessment process and give them the documents and tools needed at each step. Based on feedback from the accompanying assessment team survey and other sources, the SOP teams amended and refined around half of the SOPs to improve their comprehensibility and usability and to reflect new developments.

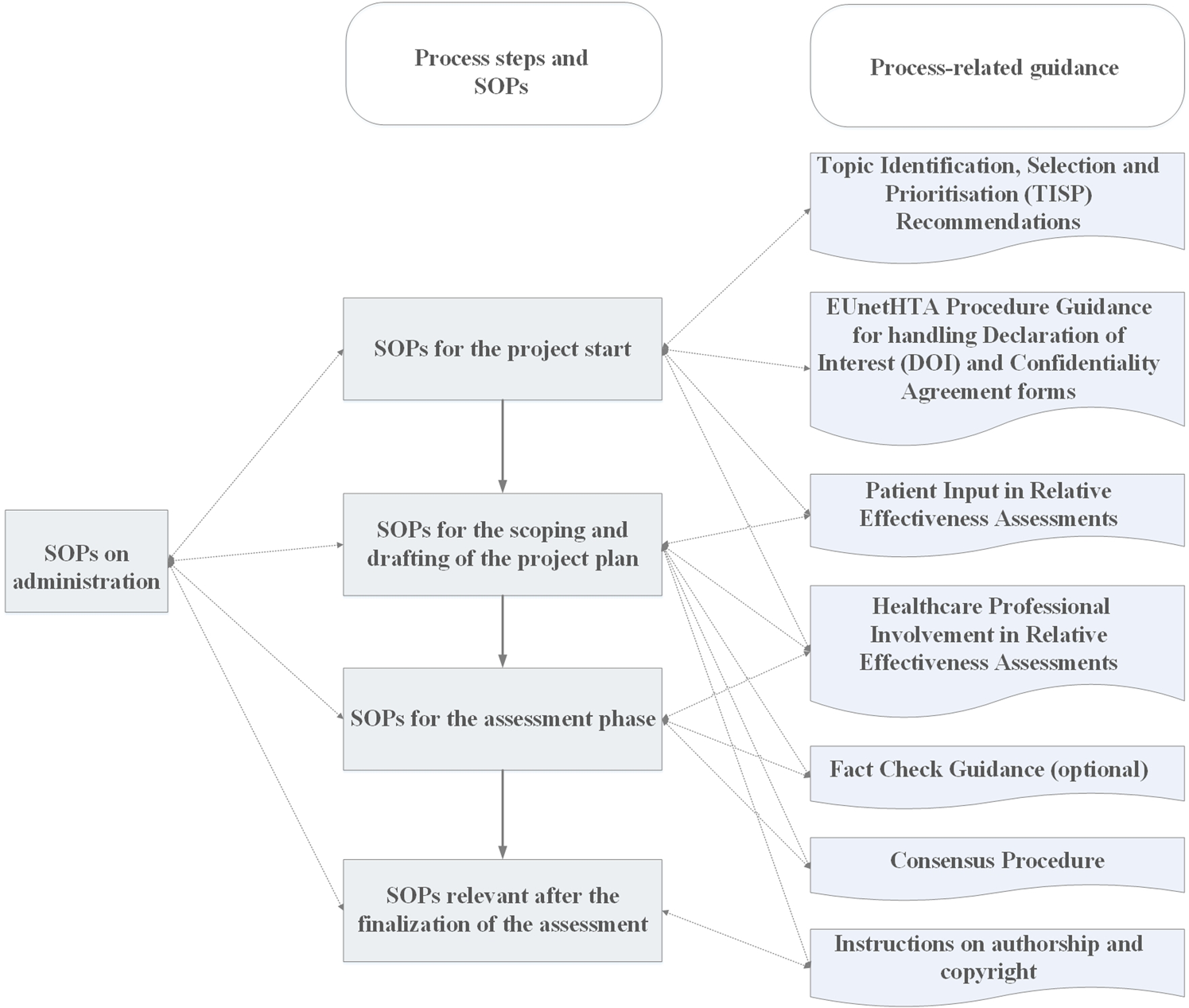

Process flows have been developed that provide graphical overviews of all SOPs and guidance documents relevant for the assessments in chronological order (see Figure 2).

Figure 2. Illustration of the process flows for EUnetHTA assessments.

Templates

A working group revised the existing EUnetHTA templates for pharmaceutical technologies, including the letter of intent, project plan, submission dossier, and assessment report templates. The revisions aimed to facilitate the provision of required data by marketing authorization holders and to improve the structure, readability, and relevance of the assessment reports. Two extensive surveys identified evidence requirements and areas for improvement in the submission dossier and assessment report templates in order to determine how the usability and the national and regional implementation of pharmaceutical assessment reports could be further increased.

The templates for assessments of nonpharmaceutical technologies were revised as part of the update of the HTA Core Model®, a methodological framework developed by EUnetHTA for the production and sharing of HTA information (14). In JA3, the HTA Core Model® was integrated into the newly established QM framework. Further, based on feedback from the assessment teams in JA3 and previous Joint Action phases, the reporting structure was translated into an assessment report template for full assessments of nonpharmaceutical technologies (see Footnote 2). This structure makes the assessment reports easier to read, with the aim of enhancing their uptake. Moreover, the template makes the reports easier to write by guiding authors through EUnetHTA's methodological and procedural requirements and by providing a clear, easy-to-use structure. Starting in June 2020, two assessment teams piloted the new template and explored its usability for EUnetHTA assessments of nonpharmaceutical technologies. Based on their feedback, the template underwent a further minor revision (among other things, to address formatting issues).

Procedures for revising templates differed between the pharmaceutical and nonpharmaceutical branches in order to respond flexibly to the needs identified throughout the project. In addition to the work on the templates, submission requirements were formulated for assessments of both pharmaceutical and nonpharmaceutical technologies.

Methodological Guidelines

All EUnetHTA guidelines are listed in Supplementary Table 1 and are available online at https://eunethta.eu/methodology-guidelines/. Based on feedback received through several channels and a prioritization exercise conducted at the beginning of JA3, the existing guideline on information retrieval (15) was updated. A concept for revising the guideline on direct and indirect comparisons (16) was also finalized. In addition, a new methodological guideline on the assessment of economic evaluations was developed (17), and a concept for another new guideline on the assessment of clinical evidence was completed.

EUnetHTA Companion Guide

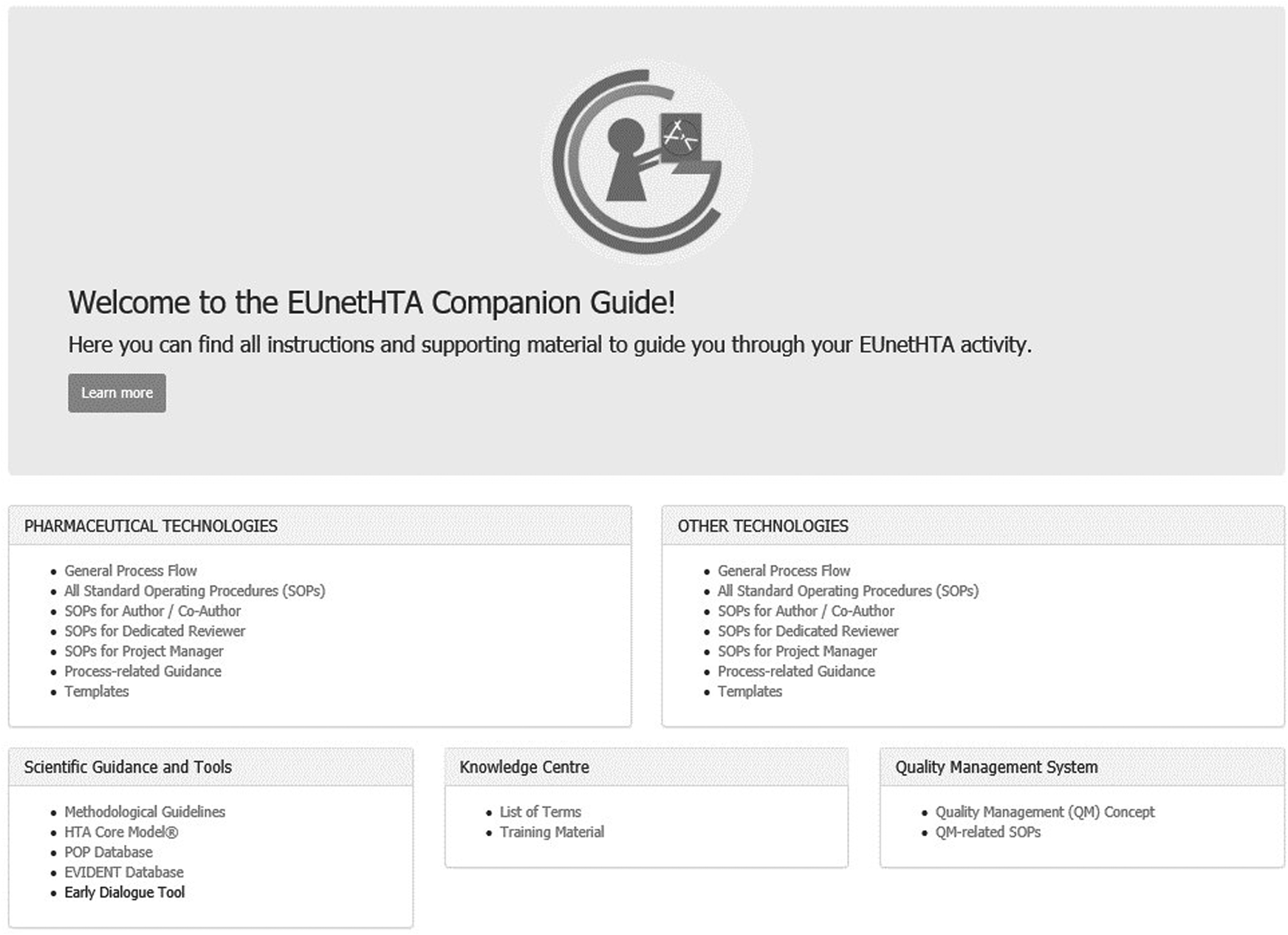

All QMS components described above are collected in the web-based EUnetHTA Companion Guide accessible to EUnetHTA partner organizations only. The Companion Guide is divided into five main parts:

(1) SOPs, guidance documents, and templates for pharmaceutical assessments,

(2) SOPs, guidance documents, and templates for nonpharmaceutical assessments,

(3) methodological guidelines and tools,

(4) a “Knowledge Centre” including a list of frequently used terms and QMS-related training materials, and

(5) EUnetHTA's QM concept and QM-related SOPs.

To facilitate navigation, the Companion Guide provides graphical overviews of the available content and options to filter content according to the user's role in the assessment (author/coauthor, dedicated reviewer, project manager; see Figure 3).

Figure 3. Home page of the EUnetHTA Companion Guide.

Users have access to training modules on how to use the Companion Guide and on the methods, tools, and SOPs it contains. Moreover, the training material enables HTA producers to develop the QM and HTA capabilities needed to support the work of EUnetHTA's partner organizations in both joint and national work.

The Work of Working Groups and Their Impact on the QMS

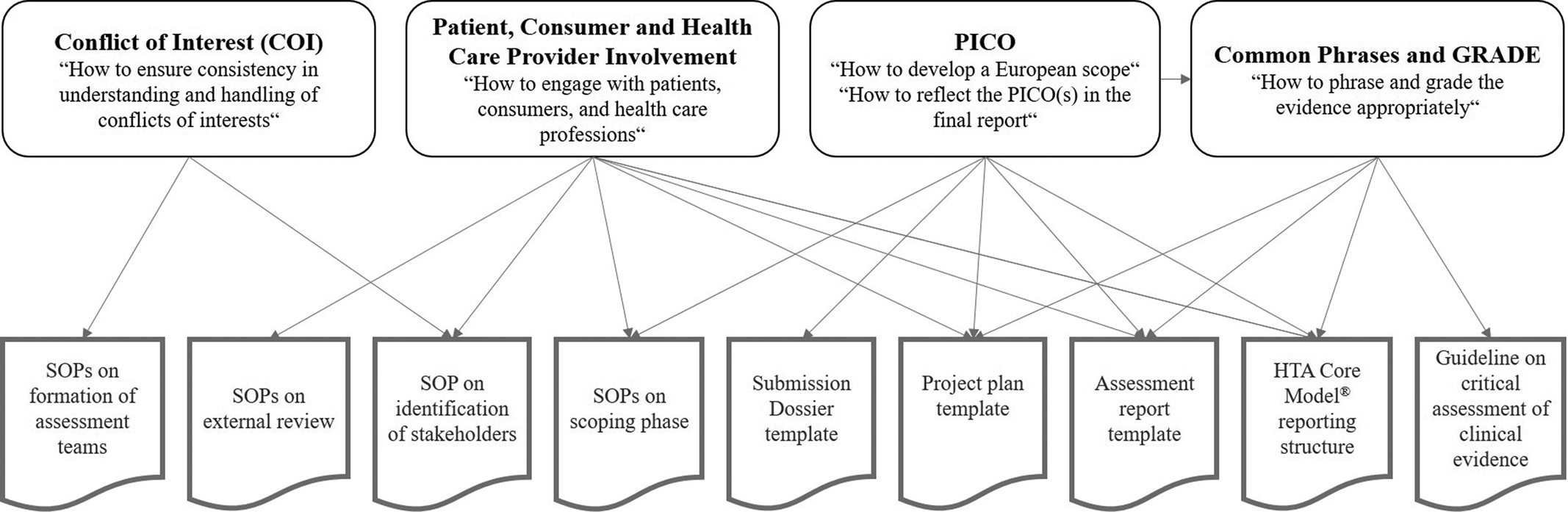

Throughout the project, several working groups developed recommendations on how EUnetHTA should approach various overarching methodological and procedural questions. As these activities were conducted in parallel with the establishment of the QMS, several interdependencies arose that required close collaboration and posed challenges (e.g., delays and the need to revise already completed work). The following section outlines four examples of working groups and illustrates how their activities affected each other as well as individual parts of the QMS (see also Figure 4).

Figure 4. Examples of working groups and interrelation with the QMS.

Handling Conflicts of Interest and Ensuring Confidentiality

To ensure that conflicts of interest (COI) are understood and handled consistently, guidance was developed (18). Moreover, a COI Committee was established to evaluate the declared interests of (internal and external) individuals before their involvement in specific EUnetHTA JA3 activities. These COI practices are reflected in all relevant SOPs.

Incorporating Input from Patients and Healthcare Professionals

The working group “Patient, Consumer and Health Care Provider Involvement” focused on ways to improve the involvement of patients, consumers, and healthcare professionals in assessments (19). Assessment teams could use the working group's recommendations (e.g., methods for collecting and incorporating direct input from individual patients or healthcare professionals (20;21)) to inform, among other things, the development of the assessment's research question(s). Moreover, the template sections on the involvement of patients and healthcare professionals were aligned accordingly.

Defining a European Scope

To be highly relevant in as many national and regional settings as possible, a European assessment report may need to cover several PICO (population, intervention, comparator, and outcome) questions for the technology or technologies under assessment (22). It became evident that assessments differed in how they defined the PICO/scope and in how this was reflected in the final report. Consequently, a “PICO working group” was established to define guiding principles (23). These principles directly affected the structure and content of several assessment-related templates and the SOP descriptions of the scoping phase. Moreover, the recommendations had to be taken into account in the work of the “Working group for Common Phrases and GRADE” (see below).

Using Appropriate Standard Phrases and Grading the Evidence

Another question requiring concerted efforts was how EUnetHTA should phrase and grade the evidence on clinical effectiveness and safety in its assessment reports. Results and conclusions should be presented in a standardized and predictable manner without unintentionally implying or predetermining reimbursement decisions in individual jurisdictions, as these decisions are the responsibility of national or regional decision makers. To this end, the objectives of the working group for Common Phrases and GRADE were (i) to define a set of standard phrases that should or should not be used and (ii) to provide recommendations on whether a specific evidence grading system should be used. The project plan and assessment report templates will need further revision in the next phase based on the outputs of this working group. The group's work, in turn, relied on the principles defined by the PICO working group.

The Achievements and Experiences of EUnetHTA's Expert Groups

The Information Specialist Network was established in March 2019. At the time of writing, seventy-one information specialists from twenty-six EUnetHTA partner organizations belong to this network (24). The network helps identify information specialists for individual assessments and provides advice on difficult information retrieval issues and on how to apply the respective SOPs. Moreover, it provides training and facilitates regular exchange between information specialists. In the long run, the network's steering committee could also address general open questions, such as copyright issues, the selection of information sources, and the use of software tools for screening.

The Statistical Specialist Network was established in April 2020 to support assessment teams with questions related to statistical analyses. In addition, the network has served as a platform for internal capacity building and discussion. Besides statistical support for ongoing assessments, one of its envisaged outcomes is a Frequently Asked Questions database to strengthen statistical practice across the jointly produced assessments. At the time of writing, twenty-five statistical experts from sixteen EUnetHTA partner organizations belong to this network.

Discussion

Within JA3, EUnetHTA has taken a significant step toward a sustainable model of European collaboration on HTA. The newly established QMS and its components can serve as a blueprint for future joint work. The work benefited from the exchange of different views and from a broad spectrum of experience and expertise. The pooling of scientific and practical knowledge was one of the project's major strengths. Moreover, the intensive collaboration on several scientific and technical aspects helped build trust among partners. The introduction of the QMS in JA3 appears to have increased both the implementation of jointly produced assessment reports and the confidence with which agencies use them (25). At the same time, establishing standardized processes and methods required extensive coordination between parallel activities in order to maintain overall consistency.

Challenges Faced and Lessons Learnt

The teams working on the predefined and newly emerging tasks discussed several issues intensively. These debates revealed considerable heterogeneity in HTA contexts, remits, and practices. The experiences from JA3 underline that, to be relevant for as many member states as possible, jointly produced assessment reports need to reflect diverging requirements and multiple views. This is especially important as the prospect of continuous pan-European HTA cooperation became more concrete with the publication of the European Commission's legislative proposal for a regulation on HTA in January 2018 (26). Knowledge and awareness of the history and status of the various national and regional regulatory contexts and requirements are prerequisites for identifying appropriate modes of collaboration. The outputs of the working groups mentioned in this article (e.g., the PICO and the Common Phrases and GRADE working groups) are examples of EUnetHTA's progress toward a European view on HTA. However, other aspects, such as the management of divergent opinions, still need further reflection (unpublished data, 2020).

Furthermore, feedback from several participants in the assessments indicates a need to refine the timelines of both the overall processes and the individual process steps (11). The assessment teams frequently reported difficulties in meeting the given timeframes and/or reacting to deviations from the initial project plans (resulting, for example, from changes in the regulatory approval process). From a QM perspective, the aim should be to define reliable timelines that allow teams to draft high-quality assessment reports covering all PICO questions relevant to European healthcare systems. At the same time, the timely availability of the report, which has been identified as a major driver of uptake in national and regional decision-making processes (22), needs to be ensured.

Another prerequisite for Europe-wide acceptance of common products is the appropriate involvement of affected parties (unpublished data, 2020). Although numerous network partners and external stakeholders contributed to the project's output, the level of involvement needs to be balanced against the objective of delivering outcomes in a timely manner, as defined in the project's Grant Agreement. On the one hand, more opportunities for participation might be needed, especially for partners without prominent positions in the current project structure (e.g., those who are not voting members of the EUnetHTA Executive Board) or those lacking the required resources. On the other hand, the independence of EUnetHTA's processes from vested interests needs to be ensured to maintain the objectivity of results, while stakeholders should still be given the opportunity to play an appropriate role in the process. The balance between these requirements is the subject of ongoing discussion among EUnetHTA partners (unpublished data, 2020). In the long run, more clarity is needed about individual roles, responsibilities, and general decision-making structures.

Building Capacity and Connecting Experts

Since EUnetHTA was established in 2006, several methodological questions related to the jointly produced assessment reports have emerged and evolved. In response, methodological frameworks (the HTA Core Model® and methodological guidelines) have been developed to support consistency in the methods applied and to provide specific guidance (8). However, feedback from the assessment teams in JA3 showed that (i) the existing EUnetHTA guidelines are in many cases too broad or not instructive enough to allow for a clear and uniform methodological approach across assessments; (ii) not all methodological questions have so far been sufficiently addressed in the guidelines; and (iii) some guidelines developed in previous Joint Action phases need to be updated (9;27). Moreover, the gaps and update requirements identified by a needs analysis at the beginning of JA3 (9) could not be fully addressed, mainly because of a lack of resources. Although EUnetHTA JA3 succeeded in integrating pre-existing material into the new QMS structure and making it available through a convenient new web platform (the Companion Guide), several needs expressed by the JA3 assessment teams could not be met. Whether the guidelines for which concepts were drafted in JA3 are actually developed or revised depends on the future framework and needs of European HTA collaboration. A robust, permanent QMS with structures and mechanisms for quality improvement and evaluation is needed to support European HTA cooperation after JA3. Moreover, other approaches need to be explored to provide more rapid responses to methodological questions. For example, the specialist networks established in JA3 could serve as a blueprint for future expert groups in other fields.

Conclusion

A major achievement of EUnetHTA is the establishment of a QMS for the jointly produced assessment reports. Assessment teams are now better supported by templates, SOPs, methodological guidelines, and other guidance documents provided through an electronic platform. Several existing documents have been updated and refined. Furthermore, through training and knowledge sharing, EUnetHTA has contributed to HTA capacity building within its member organizations. The continuous evaluations have helped identify gaps and shortcomings in the newly developed products, which in turn has driven continuous quality improvement. The implementation of the QMS appears to have helped increase the uptake of, and trust in, joint work. However, defining and maintaining processes and methods that meet the diverse requirements of healthcare systems across Europe remains an ongoing and challenging task.

Supplementary material

The supplementary material for this article can be found at https://doi.org/10.1017/S0266462321000313.

Acknowledgment

The authors thank Natalie McGauran for editorial support.

Funding

The contents of this paper arise from the project "724130 / EUnetHTA JA3," which has received funding from the EU, in the framework of the Health Programme (2014–2020). Sole responsibility for its contents lies with the author(s), and neither the EUnetHTA Coordinator nor the Executive Agency for Health and Consumers is responsible for any use that may be made of the information contained therein.

Conflicts of Interest

The authors declare that they have no competing interests.