For centuries, the illustration of archaeological findings has been an essential part of communicating the past to other scholars and the public. Visual representations of artifacts, landscapes, and prehistoric scenes have been used to disseminate archaeological interpretations, reassembling unearthed material in scenes depicting past technologies, lifeways, and environments (Adkins and Adkins Reference Adkins and Adkins1989).

These processes of making visual representations have drawn on the technologies, techniques, and conventions of their times, and they have played a role in the establishment of archaeology as a scientific discipline (Moser Reference Moser2014). Line illustration, engravings, and painting in diverse media came to be complemented by photography in the nineteenth century (Bohrer Reference Bohrer2011). Computer-based techniques and later three-dimensional modeling came to be layered alongside their predecessors through the late twentieth century into the twenty-first century (Magnani Reference Magnani2014; Moser Reference Moser and Hodder2012). Today, archaeologists commonly employ technologies like photogrammetry to visualize artifacts smaller than a few centimeters or as large as landscapes (Magnani et al. Reference Magnani, Douglass, Schroder, Reeves and Braun2020).

Despite technological advances, visualizing the past remains a specialized undertaking, requiring high skill—whether conventional or digital—financing, and time. Illustrators are expensive. Digital techniques, from illustration to three-dimensional modeling, despite increasing in ubiquity and ease of practice, require software, know-how, and equipment. For this reason, depictions of the past have remained in the hands of just a few skilled individuals, often under the purview of large projects or institutions.

Recent innovations in artificial intelligence and machine learning have pushed the conversation in new directions. Now with simple text prompts, complex and original images can be generated within seconds. Although some have touted these transformations as detrimental to the employment of artists or as risks to the educational system (Huang Reference Huang2023; Roose Reference Roose2022), technological advances have the potential to democratize the imagination of the archaeological past through illustration. In archaeology, the conversation is just beginning about how generative AI will reshape education, research, and illustration (Cobb Reference Cobb2023). In this vein, we present a workflow for scholars and students of archaeology as an alternative past-making exercise, thinking through the impacts of technological shifts on archaeological representation. In the months and years to come, archaeological illustrations made using AI will find utility in publications and museum exhibitions. Through a case study related to Neanderthal–modern human interactions, this article presents a methodology for visualizing archaeological evidence using artificial intelligence.

METHODS

Specifically, this article shows readers how to use DALL-E 2, a generative AI model trained and released by OpenAI in 2022 to create images that correspond to textual input. DALL-E 2 has minimal user hardware requirements and can be used from any computer or smartphone with Internet access. DALL-E 2 employs the “Transformer” deep learning architecture (Vaswani et al. Reference Vaswani, Shazeer, Parmar, Uszkoreit, Jones, Gomez, Kaiser, Polosukhin, von Luxburg, Guyon, Bengio, Wallach and Fergus2017)—a type of model that was originally designed for machine translation tasks (e.g., translating an English sentence into a corresponding French sentence of arbitrary length). In recent years, however, such Transformer models have been found to be extraordinarily effective in a wide variety of other contexts that involve generating an output sequence of data that corresponds to an input sequence of data.

In natural language processing tasks, for instance, Transformer models are now the preferred approach for generating answers to questions, condensing long texts into summaries, and a wide variety of text classification tasks (Brown et al. Reference Brown, Mann, Ryder, Subbiah, Kaplan, Dhariwal, Neelakantan, Larochelle, Ranzato, Thais Hadsell, Florina Balcan and Lin2020; Devlin et al. Reference Devlin, Chang, Lee and Toutanova2019; Radford et al. Reference Radford, Narasimhan, Salimans and Sutskever2018, Reference Radford, Wu, Amodei, Amodei, Clark, Brundage and Sutskever2019; Raffel et al. Reference Raffel, Shazeer, Roberts, Lee, Narang, Matena, Zhou, Li and Liu2020). Despite their origin in text data processing, Transformers have found success in modeling other modalities as well, such as images, which are flattened into a sequence of image “patches” akin to words in a sentence in order to be processed in the same way as text by the Transformer (Dosovitskiy et al. Reference Dosovitskiy, Beyer, Kolesnikov, Weissenborn, Zhai, Unterthiner and Dehghani2020).

This capacity to consider images as sequences of data akin to text is what makes it possible to train Transformer models to produce images corresponding to input text. For instance, in recent years, there have been a variety of models that have been successfully trained to directly perform text-to-image translations, such as the first version of DALL-E (Ramesh et al. Reference Ramesh, Pavlov, Goh, Gray, Voss, Radford, Chen, Sutskever, Meila and Zhang2021), CogView (Ding et al. Reference Ding, Yang, Hong, Zheng, Chang Zhou and Lin2021), Make-A-Scene (Gafni et al. Reference Gafni, Polyak, Ashual, Sheynin, Parikh, Taigman, Avidan, Brostow, Cissé, Maria Farinella and Hassner2022), and Google's Parti (Yu et al. Reference Yu, Xu, Koh, Luong, Baid, Wang and Vasudevan2022). More recently, diffusion models have also been successfully incorporated into text-to-image generation—DALL-E 2 (Ramesh et al. Reference Ramesh, Dhariwal, Nichol, Chu and Chen2022) and Google's Imagen (Saharia et al. Reference Saharia, Chan, Saxena, Li, Whang, Denton and Ghasemipour2022), for example. Although there are many potential text-to-image models to choose from, we employ DALL-E 2 in this study due to its widespread popularity and continued use as a benchmark for state-of-the-art performance in the text-to-image generation field. The strategies of iterative AI prompt engineering that we employ in this article, however, can be translated for use with other models as well, and we expect the underlying strategies to remain relevant for prompting text-to-image models into the future.

DALL-E 2 builds on the work of previously trained Transformer encoder and decoder models to do the following (Ramesh et al. Reference Ramesh, Dhariwal, Nichol, Chu and Chen2022):

(1) Encode text input as a CLIP embedding—a fixed-size, quantitative representation of the text that contains semantic and contextual information and is generated from the CLIP model. CLIP is a separate model that was trained on 400 million pairs of images and associated captions drawn from across the Internet to produce a jointly learned image/text embedding space—a latent space that allows us to quantify the relationship between any text and image element. CLIP has been found to accurately predict known image categories out of the box, and as a result of it being trained on such a large and noisy web dataset, its performance is robust and generalizable to unseen domains (Radford et al. Reference Radford, Wook Kim, Hallacy, Ramesh, Goh, Agarwal, Sastry, Meila and Zhang2021). On the basis of a text embedding, DALL-E 2 has been trained to generate a possible CLIP image embedding that is closely related to the input text.

(2) Stochastically generate a high-resolution output image conditioned on the CLIP image embedding generated in the first step and (optional) additional text prompts. This text-conditioned diffusion decoder model builds off of the GLIDE model (Nichol et al. Reference Nichol, Dhariwal, Ramesh, Shyam, Mishkin, McGrew, Sutskever and Chen2021) and has been trained to upsample images up to 1024 × 1024 resolution, generate a diverse range of semantically related output images based on a single input image embedding (the decoding process is stochastic and nondeterministic), and also perform text-based photo edits.

There are currently two options for generating and editing images via DALL-E 2: an online graphic user interface (GUI) and a developer application programming interface (API). In the online GUI, users enter a text description of their desired image, and they are provided an output batch of four corresponding images. From this initial batch of photos, an individual image can be selected, edits can be made to the selected image, and more variants can be generated in an iterative process. The API provides the same generation and editing features as the GUI but makes it possible to interact with DALL-E programmatically via languages such as Python. The online GUI is credit based: each iteration (the generation of one batch of images, an edit request, or a variation request) costs one credit (Jang Reference Jang2023), each of which was approximately 13 cents at the time of writing. The API, on the other hand, is charged by the number of images generated and the resolution of the images, and as of the time of writing, it costs 2 cents per 1024 × 1024 image generated.

Ethics of Illustration and Artificial Intelligence

As an emergent technology, ongoing discussions about the ethical dilemmas posed by artificial intelligence are nascent, momentous, and shared beyond archaeology with society at large (Hagerty and Rubinov Reference Hagerty and Rubinov2019). Yet archaeologists will face unique challenges posed by AI, including not only its impact on the interpretation and depiction of the past but also the myriad of ways these reorganizations trickle into labor practices and power dynamics in our own field. Compared to other shifts in technology—for instance, digital revolutions that have changed the way we visualize archaeological subjects—artificial intelligence has the potential to reorder practice more rapidly. Just as we do not see an end to academic writing in sight, we do not anticipate a diminished need for specialized archaeological illustrators. As with other technological developments in the field, AI should complement and expand our ability to work rather than overturn the skillsets required to do so.

In particular, anthropologists and artists will need to support sustained discussion about the potential social biases and inequities that are pervasive in the field and reproduced by the technology (see Lutz and Collins [Reference Lutz and Collins1993] for a discussion of photographic representations for comparison). AI draws on a myriad of images and text scraped from the Internet to inform its output. The resulting images may tend to reproduce visual representations that reflect broader inequalities and troublesome trends in our society (Birhane et al. Reference Birhane, Prabhu and Kahembwe2021). Because of this, a thoughtful review of AI-generated output, as well as a reflexive approach to AI-prompt engineering, is necessary. Just as AI has the potential to reiterate contemporary stereotypes through the presentation of the archaeological record, it also has the potential to help conceive alternative pasts. Anthropologists have the capacity to critically integrate these technological advances into practice, elucidating the risks but also the potentials of new tools.

Case Study

Significant interest in depicting the lifeways of closely related species, including Neanderthals, has occupied antiquarians and archaeologists for generations. Past sketches reflected the stereotypes and biases about the species at the time they were made. For instance, early twentieth-century illustrations portrayed Neanderthals as hairy, brutish cave dwellers but transformed over time to depict increasingly modern humanoids (Moser Reference Moser1992). Later discussions about their interactions have considered exchange (or lack of exchange) through an analysis of material culture (e.g., d'Errico et al. Reference d'Errico, Zilhão, Julien, Baffier and Pelegrin1998) and skeletal morphology (Trinkaus Reference Trinkaus2007), whereas most recently, the burgeoning field of paleogenomics conclusively demonstrates admixture (Sankararaman et al. Reference Sankararaman, Mallick, Dannemann, Prüfer, Kelso, Pääbo, Patterson and Reich2014). The application of intersectional Black feminist approaches to the study of Neanderthal–modern human interactions suggests a continued gendered and race-based bias against this closely related species (Sterling Reference Sterling2015).

The karst systems of southern France have long been a battleground for ideologies about Neanderthal behavior, and by extension, our relation to them as a species. The caves’ Neanderthal stone-tool industries figured prominently in the Binford/Bordes debate essential to the establishment of processual archaeology. Today, the region remains central to examinations of Neanderthal behavioral variability and their (dis)similarity to Homo sapiens. Did Neanderthals just control fire, or could they also make it (Dibble et al. Reference Dibble, Abodolahzadeh, Aldeias, Goldberg, McPherron and Sandgathe2017, Reference Dibble, Sandgathe, Goldberg, McPherron and Aldeias2018)? Did they bury their dead (sensu Balzeau et al. Reference Balzeau, Turq, Talamo, Daujeard, Guérin, Welker and Crevecoeur2020) or unceremoniously die in caves (Sandgathe et al. Reference Sandgathe, Dibble, Goldberg and McPherron2011)?

This article presents a methodology using artificial intelligence to illustrate these conflicting archaeological hypotheses, visually representing alternative interpretations of Neanderthal behavioral variability. In the first generated image via artificial intelligence, we assume Neanderthals who neither made fire nor buried their dead. In the second, we consider a fire-making species that also buries its dead. We depict Neanderthals occupying a cave-like setting, adjusting their environmental context according to archaeological evidence from southern France. In the text that follows, we describe the process of using the DALL-E 2 GUI to generate and edit images. For more detailed information on using the API to accomplish the same tasks programmatically, consult our supplemental code (Clindaniel Reference Clindaniel2023).

RECONSTRUCTING ANCIENT SCENES

Step 1: Base Image Generation

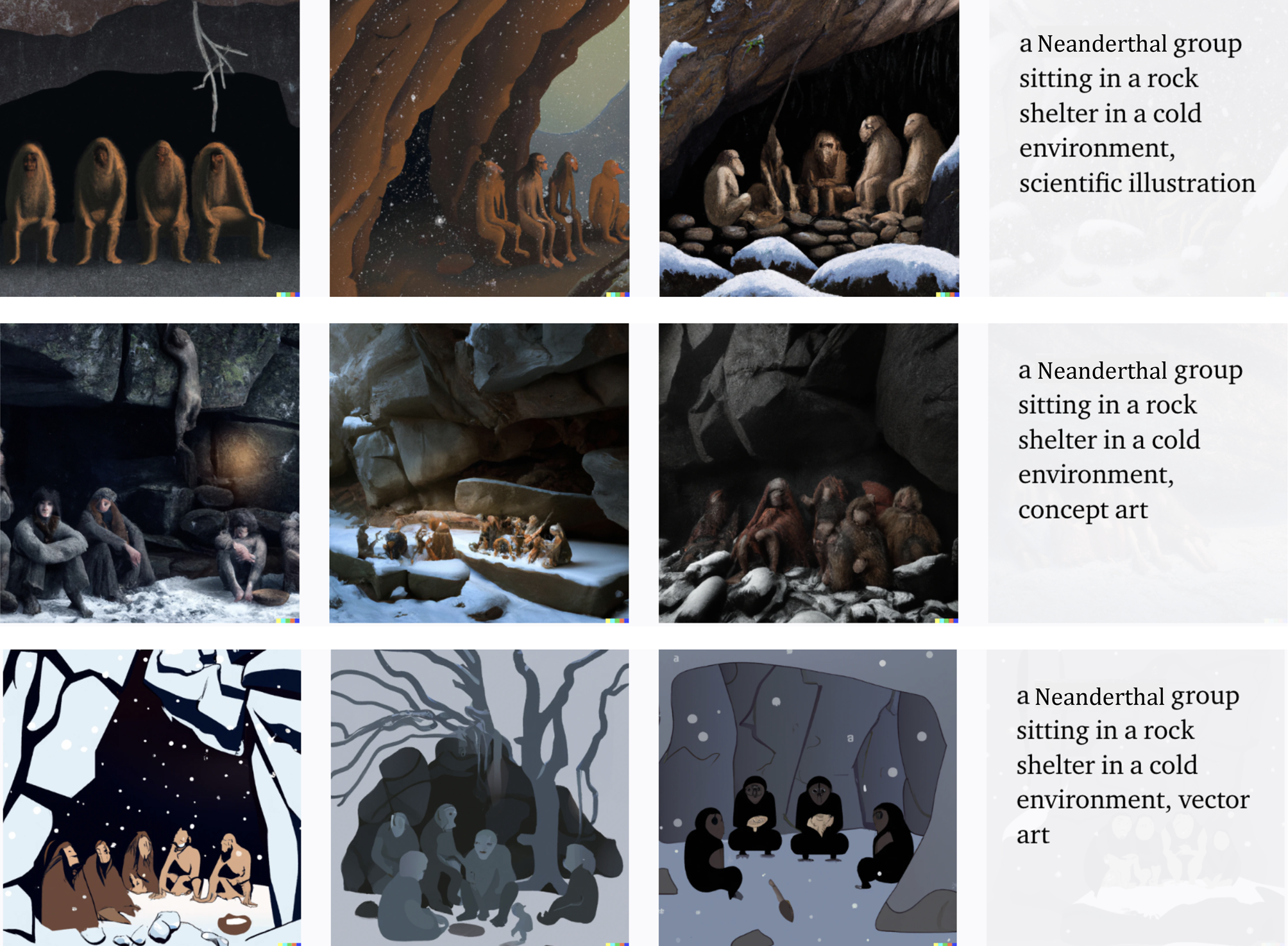

Users begin by writing a base text prompt, encapsulating the desired image content, size, and style; larger images take longer to generate. Under the hood, DALL-E 2 converts our text input to corresponding visual information in the CLIP image/text embedding space and then stochastically decodes the resulting image embedding as a high-resolution image corresponding to our original text input. We explored multiple styles of images prior to selecting one that appeared suitable for our purposes. For instance, a lack of a stylistic prompt generated unusable images, and a prompt indicating that the model should produce photographic realism similarly produced no usable base image. We tested additional styles featured in an online guide to DALL-E 2, including vector, concept art, and scientific illustration (see Figure 1). Through trial and error and visual inspection, we determined that a “digital art” style was conducive to visually communicating our hypotheses about Neanderthal behavioral variability. Other users will find other styles more helpful to communicate their desired scenes.

FIGURE 1. Text prompts exploring variable styles, including scientific illustration, concept art, and vector drawings. Image created by the coauthors using DALL-E 2.

The keywords selected for our initial base illustration included a “a Neanderthal group sitting in a rock shelter in a cold environment, digital art.” Given that the DALL-E 2 decoding process (from image embeddings to output images) is stochastic and nondeterministic, entering the same keywords can produce different batches of images each time the model is instantiated. Using the aforementioned keywords, we generated three batches of images, resulting in 12 images total (see Figure 2).

FIGURE 2. Three batches of images produced using the selected “digital art” style, from which we selected our base image. Image created by the coauthors using DALL-E 2.

Step 2: Base Image Selection

Given the wide range of image variants for each text prompt, we selected a base image to amend in subsequent steps through editing and integration of specific archaeological evidence. We considered the suitability of our base images in terms of anatomical realism, cultural context, and environmental accuracy. For instance, some images returned with ape-like or stooped humanoids, whereas others incorporated groups sitting in built structures reminiscent of more recent modern-human constructions. These depictions reproduced age-old stereotypes about Neanderthals, which—although debunked through more than a century of archaeology—appear pervasive across Internet sources. Through all stages but especially during initial base-image generation, archaeologists should be critical of the kinds of implicit, past, or widespread biases that may shape the output. Our final selections presented an archaeologically sound depiction of a group occupying a cave site. Once a base image was selected as an acceptable starting point, we could begin to modify the illustration further (see Figure 3).

FIGURE 3. Base image selected to represent a group of Neanderthals in a rock shelter in a cold-weather environment. Note the undesirable features in the image, including an anthropomorphic anomaly on the right. Image created by the coauthors using DALL-E 2.

Step 3: Image Modification

In our third stage of archaeological illustration using artificial intelligence, we sought to modify our base image through deletion and insertion of specific archaeological evidence. We sought to create two different images, supporting hypotheses reported by different camps of archaeologists. To this end, we sought to represent the ability or lack of ability to make fire, and the burial or decomposition of Neanderthal remains in cave contexts in cold climates (marine isotope stage [MIS] 3 and 4). We integrated additional information about the primary diets of Neanderthals yielded from archaeological evidence, which was dominated by reindeer in cold climatic phases (Goldberg et al. Reference Goldberg, Dibble, Berna, Sandgathe, McPherron and Turq2012).

To do so, we modified our base images to integrate into the following prehistoric cultural environments (see Figure 4 for an example of the integration of reindeer, which required that two batches of four photos be generated before we determined that a suitable image was available). Our first image depicts the often-assumed behavioral practices of Neanderthals, occupying a cold environment rich in reindeer, practicing burial, and making fire. The second image generated represents the opposing hypothesis. Also a reindeer-rich, cold-weather environment, our second representation depicted a species that could not make fire and did not bury its dead.

FIGURE 4. Two batches of photos generated using the prompt “Rangifer tarandus in the distance, digital art.” Image created by the coauthors using DALL-E 2.

DALL-E 2 was fine-tuned to learn how to fill in blank regions of an image, conditioned on optional text input (for details of this fine-tuning process, see Nichol et al. Reference Nichol, Dhariwal, Ramesh, Shyam, Mishkin, McGrew, Sutskever and Chen2021). This makes it possible to mask portions of a base image that we want to stay the same and provide text prompts indicating objects that should be added in unmasked, transparent regions (in the same style as the base image). Such edits can be performed within the current borders of an image (called “inpainting”), or this masking procedure can also be used to extend images beyond their original borders (“outpainting”).

Step 3.1. In cases where it was necessary to change data with the borders of our base image, we began by deleting a fragment of the original image. Using the in-built DALL-E eraser tool in the online GUI, we created a space to add additional objects to our image using a new text prompt, following Step 1 above. For instance, to generate a group of reindeer in the background, we deleted a small section of our base-image background and used the new text prompt “Rangifer tarandus in the distance, digital art” (see Figure 5). We repeated this process serially to add or remove elements of an image—for instance, to add fire “a small campfire glowing on logs, digital art”) or remove visual artifacts we determined were undesirable to remain in the image (deletions were replaced with prompts such as “cave wall” or “snowy background”).

FIGURE 5. Erasure of base layer section to produce mask for generating new content (i.e., “inpainting”), in this case a group of reindeer in the erased area. Image created by the coauthors using DALL-E 2.

Step 3.2. In cases where data needed to be added beyond the limits of the original scene, it became necessary to extend our image. Using an add generation frame in the online GUI, we provided a new prompt and set the limits of our desired scene. For instance, when we wanted to add a scene suggesting that Neanderthals did not bury their dead, we needed to expand an activity area in the foreground of the image (see Figure 6). To do so, we used a prompt to generate unburied human remains. Again, we generated multiple batches of photos before considering which image best served the needs of the project—that is, reflected a Neanderthal consistent with current scientific understandings (e.g., anatomically correct, without buttons on clothing, metal, etc.; see Figure 7, which is a random selection from more than 75 batches of images).

FIGURE 6. Expansion of frame downward to generate additional data representing Neanderthal remains in the cave foreground (i.e., “outpainting”). Image created by the coauthors using DALL-E 2.

FIGURE 7. A random selection of images depicting unburied Neanderthal remains. Over 75 batches of images using variable text inputs were generated before the final selection was made. Image created by the coauthors using DALL-E 2.

Step 4: Finalizing Image Selection

Following the integration of additional archaeological data and, in some cases, the expansion of the frame, we made our final selection of images portraying alternative hypotheses about Neanderthal cultural repertoires, including (1) a species that lacked the ability to make fire and that did not bury its dead, and (2) an illustration of a symbolic species that controlled fire and buried its dead (see Figures 8 and 9). Images were selected based on seamless inclusion of desired graphic elements in support of the selected hypotheses, representing behavioral repertoires reflected in competing hypotheses.

FIGURE 8. Image reflecting Neanderthals who could not create fire in cold weather climates with low environmental availability and did not inter their dead. The partially decomposed remains of a Neanderthal are in the foreground of the image. Image created by the coauthors using DALL-E 2.

FIGURE 9. Image reflecting Neanderthals who interred their dead and controlled fire during cold climatic phases. A young Neanderthal is being buried centrally in the image, with a fire to the left. Image created by the coauthors using DALL-E 2.

CONCLUSION

Artificial intelligence is poised to become more prevalent in our daily lives in the coming years and decades. Even in its infancy, artificial intelligence hints at its potential as a powerful tool to depict and interpret the past. Illustrations that once took weeks or months and significant capital can be rendered in hours. With nascent investments in the technology that are in the billions of dollars, the coming months and years will see unparalleled leaps forward in deep learning, artificial intelligence, and applications tailored to tackle the needs of archaeologists. As these technologies develop, archaeologists will need to be acutely aware of the ethical dilemmas that present themselves—often shared more broadly across society but in some cases unique to our field. Ultimately, the visions we have of the past will become legion in their ubiquity and ease of production, sometimes reinforcing systemic social biases, but most critically, providing the tools to present alternative interpretations of ancient life.

What does generative artificial intelligence mean for the field of archaeology? In the presented case study illustrating our methodology, we visualize multiple archaeological hypotheses about Neanderthal behavior using one program. However, the approach may be scaled to represent and interpret archaeological evidence more broadly, from any time or location, using a suite of ever-transforming algorithms. For the first time, artistic renderings of the record will become approachable by broader subsections of archaeologists and publics alike. Democratizing representations of the past, artificial intelligence will facilitate multiple perspectives and (re)interpretations to be generated and shared.

Acknowledgments

The authors would like to offer thanks to the reviewers who improved this piece through their constructive criticism.

Funding Statement

No funding was received for the research or writing of this article.

Data Availability Statement

All data and code reported in this manuscript are available on Zenodo: https://doi.org/10.5281/zenodo.7637300.

Competing Interests

The authors declare none.