African economies under colonial rule centered on raw material exports, amounting to what some have referred to as a “cash-crop revolution” (Tosh 1980). Even though some regions already produced substantial amounts of export commodities before the late nineteenth century “scramble,” commodity exports accelerated subsequently, financing colonial states, stimulating African consumption of metropolitan manufactures, and generating raw materials for European industries (Austen 1987; Munro 1976; Frankema, Woltjer, and Williamson 2018). In the late 1950s, at the dawn of independence, agricultural commodities comprised circa two-thirds of all exports from mainland tropical Africa (Hance, Kotschar, and Peterec 1961, p. 491). [Footnote 1]

The bulk of agricultural exports were produced by African farmers. Despite colonial coercive policies and investments that served to catalyze commodity specialization, the responses of African farmers often did not live up to colonizers’ expectations and were markedly uneven, both spatially and temporally (Austin 2014a). This was most clearly the case with cotton. Many Europeans believed cotton to be a crucial commodity, considering its importance to metropolitan textile industries and anxieties about raw cotton supplies since the U.S. civil war (Beckert 2014; Isaacman and Roberts 1995; Robins 2016). Why, then, did European ambitions to boost raw cotton exports from African colonies remain mostly unfulfilled? And why did African farmers’ participation in cotton export, even more so than for other crops, come to follow an uneven spatial pattern that defied European expectations and efforts?

While cash-crop adoption under colonial rule, and cotton in particular, has received ample scholarly attention, we still lack a comprehensive understanding of why farmers across Africa responded so heterogeneously to the global demand for tropical commodities. By systematically analyzing the impact of seasonality on colonial cotton adoption, this study addresses an important yet underexamined piece of this unresolved puzzle. Following Tosh (1980) and Austin (2008, 2014a, 2014b), I question a pervasive assumption, going back to Myint (1958), that underemployed African farmers could simply tap into surplus land and labor when a (rail)road arrived in their region, substituting cash-crop farming for leisure and cultivating previously idle land (Austin 2014a, pp. 300–5). In reality, the majority of African farmers operated in savanna conditions with short rainy seasons. Given that agriculture was labor-intensive and rainfed and that food crop markets tended to be thin and unreliable, such farmers had to grow all their food and cash crops in a single growing cycle. As a result, they faced seasonal labor bottlenecks and food-crop–cash-crop tradeoffs that could undermine their food security.

Indeed, it is striking how closely agricultural seasonality correlates with the spatial diffusion of Africa’s cash-crop revolution. Hance, Kotschar, and Peterec (1961, p. 494) found that a mere 6.4 percent of all export value in tropical Africa in 1957 was generated from rainfed agriculture in “the savanna proper,” compared with 43.2 percent from “rainy and adjacent savanna areas.” Nevertheless, despite their credibility as an explanation for this observed heterogeneity, variations in resource constraints and their seasonal nature are yet to be appreciated and unpacked. Austin (2014b), in a study of cocoa adoption in Ghana, and Fenske (2013), in a study on the failure of rubber in Benin, remain among the few studies that have taken up “the unfinished business of analyzing the resource requirements of [Africa’s] export expansion” (Austin 2014b, p. 1036).

My argument is built up in several steps. First, I argue that heterogeneous cotton adoption choices across sub-Saharan Africa, and most strikingly its largest exporter Uganda, cannot be sufficiently explained by colonial investment and coercion. Instead, I show that African regions with at least nine tropical rainy months, which allowed for two consecutive growing cycles per year, produced a substantially larger share of tropical Africa’s rainfed cotton output than we should expect based on their factor endowments, railroad infrastructure, and maximum potential cotton yields, given their agro-climatic suitability.

To better understand the mechanisms linking rainfall seasonality and cotton output, this study subsequently focuses on two contrasting cases: British Uganda, where African farmers adopted export cotton on a large scale, and northern Côte d’Ivoire and the Soudan (present-day Mali) in colonial French West Africa (FWA), where farmers persistently declined to produce cotton for export until after the colonial era. First, using historical farm-level data on the intra-annual distribution of agricultural labor inputs, I simulate cotton and food-crop outputs under the regions’ contrasting seasonality regimes. I find that evenly distributed rainfall and the resultant two growing cycles per year enabled Ugandan farmers to smooth agricultural labor inputs and grow three times as much cotton as their FWA counterparts, who faced much shorter unimodal rainy seasons and a single growing cycle. Second, analyzing a district-level panel, I show that Ugandan farmers were able to exploit bimodal rainfall patterns by calibrating their annual cotton planting during the year’s second growing cycle, based on the food crop harvest from the first growing cycle, which compounded their cotton production capacity.

Subsequently, I examine colonial investment, colonial coercion, local textile production, food crop markets, and labor migration in the two regions of interest. I argue that none of these relevant contextual factors independently explains the rift in cotton output between Uganda and FWA and point out that each, in turn, was shaped by agricultural seasonality. As such, I make the case that the role of seasonality, as a key determinant of resource allocation, was pervasive and deserves a more central role in explanations of historical cash-crop adoption outcomes in colonial Africa and the dynamics of colonial economies more generally. Before concluding, I discuss how a belated “peasant cotton revolution” in FWA became possible when the adoption of a set of key technologies eliminated the constraints posed by agricultural seasonality.

This study makes two interventions in the literature. The first and broadest aim is to advance our understanding of the determinants of cash-crop adoption. Recent studies have begun to show that specialization in cash crops and their limited diffusion beyond concentrated production “enclaves” have resulted in large and persistent spatial inequalities across Africa (Müller-Crepon 2020; Pengl, Roessler and Rueda 2021; Roessler et al. 2020; Tadei 2018, 2020). Despite this growing interest in the effects of cash-crop adoption, the systematic and comparative investigation of its determinants remains remarkably limited. The studies cited above, using spatial econometrics, have typically not looked beyond a broad association between soil- or agro-climatic suitability and spatial production patterns. Such suitability variables only reflect the optimal growing conditions of crops themselves, however, and do not speak to the possibility that conditions may be conducive to the growth of crops but not to the farmers who have to grow them. In the wider literature, colonial interventions, both in the form of coercion and investment, have frequently been described as the prime drivers of cash-crop diffusion in Africa. Conversely, the limits of diffusion have been linked to African resistance and colonial underinvestment (Austen 1987, pp. 122–29; Isaacman 1990; Rodney 1985; Roessler et al. 2020). But while the importance of such colonial interventions is beyond doubt, their uneven and often unanticipated returns warrant a closer look at environmental forces that shaped the decisions of African farmers (Tosh 1980).

Recent years have also seen a surging interest in the history of globalizing cotton production chains, in which European states and capitalists have been depicted as joining hands to effectively coerce colonial subjects into supplying cheap fiber for metropolitan industries. Most influentially, Sven Beckert has argued that the integration of colonized rural populations into global cotton chains “sharpened global inequalities and cemented them through much of the twentieth century” (Beckert 2014, p. 377). His argument for Africa, following a body of case-based historical scholarship, centers on the imperial thrust to extract cotton, while attributing its failure to local resistance and resilient local textile manufacturing sectors, which drew raw cotton supply away from export markets (Beckert 2014; Isaacman and Roberts 1995). The second contribution of this study, however, is to show that coercion and resistance leave much of the heterogeneity in cotton adoption across colonial Africa unexplained, a gap that seasonality can fill.

UNEVEN COTTON OUTCOMES IN COLONIAL AFRICA: AN UNRESOLVED PUZZLE

While the actual importance of empire cotton to European textile industries is disputable, it is beyond doubt that there was a widespread and persistent conviction in colonial circles that cotton should be exported from tropical Africa. British commentators hyperbolically branded West Africa as Lancashire’s potential sole supplier (1871, in Dawe 1993, p. 24), “future salvation” (1904, in Ratcliffe 1982, p. 113), and the “new Mecca” (1907, in Hogendorn 1995, p. 54). Similar sentiments were present in French and other colonial circles (Marseille 1975; Pitcher 1993; Roberts 1996, pp. 60–75; Sunseri 2001). After 1900, in the context of rising raw cotton prices, such sentiments began to be backed up with concerted efforts to stimulate cotton export from Europe’s African possessions. To that aim, textile lobbies throughout Europe established organizations receiving substantial financial and moral support from their governments (Dawe 1993, pp. 33–179; Roberts 1996, pp. 34–5; Robins 2016, pp. 72–115). In the long run, France proved the most determined (Bassett 2001, pp. 90–4), and cotton promotion even remained a key feature of France’s involvement in West Africa long after the end of colonial rule (Lele, van de Walle, and Gbetibouo 1989).

Measured against the ambitious rhetoric and persistent efforts, cotton imperialism in Africa was far from successful. [Footnote 2] Sub-Saharan Africa’s share of global cotton production (excluding communist countries) rose from a mere 0.3 percent in the 1910s to a still-modest 5 percent during the decade after WWII (Dawe 1993, p. 431). Even at the end of the colonial period, cotton accounted for less than 9 percent of tropical Africa’s exports, ranking behind coffee, copper, cocoa, and peanuts (Hance, Kotschar and Peterec 1961, p. 491). After half a century of disappointment, numerous colonial officials concluded with resignation that their attempts to entice African farmers to grow the crop had failed (Robins 2016, p. 29; Bassett 2001, p. 81; Likaka 1997, p. 89). When investments did not yield the hoped-for returns, colonial governments often responded with coercive measures. In French Equatorial Africa, the Belgian Congo, and Portuguese Mozambique, where marketing was monopolized by concessionary companies and cotton was often grown in collective fields, substantial coercion was applied, at the expense of farmers’ freedom, food security, and income (Likaka 1997; Isaacman 1996; Beckert 2014). Numerous scholars identify coercion as the key determinant of cotton adoption in Africa (Bassett 2001, p. 7; Beckert 2014, p. 373). According to Isaacman and Roberts (1995, p. 29), cotton not only became Africa’s “premier colonial crop,” but also its “premier forced crop.”

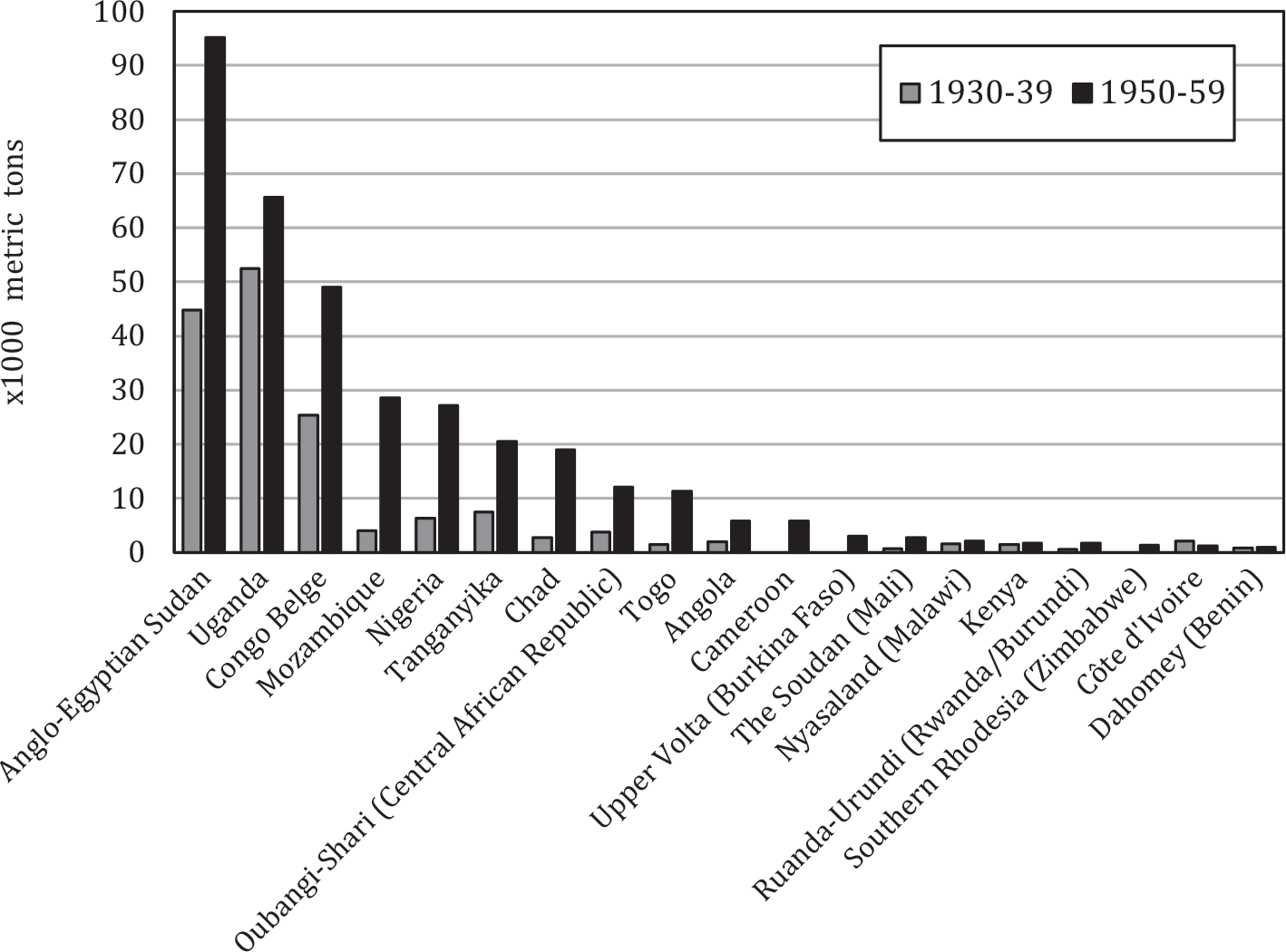

Did heterogeneous cotton adoption outcomes indeed correlate closely with colonial investment and coercive effort? For 20 African colonies where serious attempts were undertaken to expand output, Figure 1 gives the average cotton output during the 1930s and 1950s. The take-off in the Anglo-Egyptian Sudan was exceptional, relying on the capital-intensive “Gezira” irrigation scheme. Meanwhile, Africa’s largest and earliest rainfed cotton take-off took place in Uganda, which alone produced 47 percent of all rainfed cotton in tropical Africa in the 1930s and 26 percent in the 1950s (Figure 1). The scope of Uganda’s take-off becomes clear when we compare it with Egypt, by far the continent’s largest cotton producer: Ugandans produced 56.5 percent of the cotton of their Egyptian counterparts per capita, even though Egypt was highly specialized in cotton production, had a much longer history of commercial cultivation, and had an advanced irrigation infrastructure (De Haas 2017b; Karakoç and Panza 2021).

Figure 1 COTTON OUTPUT IN 20 AFRICAN COLONIES IN THE 1930S AND 1950S

Sources: See Online Appendix A.1.1.

Despite its singular importance, Uganda barely features in the literature on cotton imperialism in Africa (Isaacman and Roberts 1995; Beckert 2014). When it does, the focus is placed on Buganda, a prominent kingdom in the southern part of the country, and emphasis is put on its purportedly exceptional characteristics: centralized pre-colonial institutions, fertile soils, and reliance on bananas (a relatively undemanding crop) as the staple food (Austin 2008, pp. 597, 601; De Haas 2017b, p. 610; Elliot 1969, pp. 136–7; Isaacman and Roberts 1995, p. 23). However, this Buganda focus is not justified by the actual spatial pattern of cotton adoption in Uganda; although Buganda was the first area in which cotton took off, within a decade most cotton was grown in the northern and eastern provinces that did not share most of Buganda’s distinctive “cotton-prone” features (De Haas and Papaioannou 2017; Vail 1972; Tosh 1978; Wrigley 1959). In other words, Uganda’s cotton take-off cannot simply be reduced to Buganda as “an exception that proves the rule.”

The case of Uganda also does not conform with the idea that African cotton output was a direct corollary of targeted colonial effort. Uganda’s cotton sector did not receive a particularly large colonial investment. Unlike the railroad linking Kano to Nigeria’s coast in 1912 (Hogendorn 1995, pp. 54–6), the “Uganda railway” which unlocked Uganda to global commodity markets was not built with the prospect of cotton exports in mind (Ehrlich 1958, pp. 49, 63–8; Wrigley 1959, pp. 13–5). Similarly, the British Cotton Growing Association focused its efforts on Nigeria and was initially only peripherally involved in Uganda. Tellingly, British cotton advocate J. A. Todd (1915, p. 170), reflecting decades of optimism about West Africa’s cotton potential, asserted that “the possible cotton crop of Nigeria is about 6,000,000 bales of 400 pounds.” About Uganda, in contrast, he stated that “it is doubtful whether any really large quantity of cotton, more than, say, 100,000 bales per annum, is likely to be raised for a good many years to come” (Todd 1915, p. 170). In reality, Nigeria came to export 70,000 bales annually between 1920 and 1960 (1.2 percent of Todd’s projected amount), compared to 260,000 bales from Uganda (260 percent).

Finally, while Uganda’s cotton take-off certainly involved colonial coercion, this cannot explain why Uganda’s farmers adopted cotton more readily than their counterparts in most other places. Indeed, Uganda did not implement many of the repressive features that characterized the cotton regimes elsewhere in central Africa, a fact that was even observed by a contemporary Belgian colonial official in the Congo: “we have failed to make the crop as popular here as in Uganda. The remuneration is inadequate, and the Blacks [sic] are growing the crop only under the pressure of the administration” (Likaka 1997, p. 89).

SEASONALITY AND COTTON OUTPUT ACROSS TROPICAL AFRICA

It is clear that much of the heterogeneity in African cotton adoption remains unexplained by variations in colonial investment and coercion. Can a consideration of agricultural seasonality which, as hypothesized by Tosh (1980) and others, crucially shaped Africans’ labor allocation to cash-crop production, bring us closer to understanding why cotton was widely adopted in some places but not others? A first step towards answering this question is to simply explore the association between the length of the agricultural growing period and rainfed cotton output across colonial Africa. Areas with no or brief agricultural cycles (four or fewer wet months per year), including large swaths of the steppe and desert, faced the most restrictive seasonality constraint. We expect them to barely cultivate any cotton unless irrigated. Most farmers in tropical Africa operated in savanna areas with longer but still single growing cycles (five to eight wet months). Such areas tended to be highly suitable for rainfed cotton, but we expect output to be constrained by seasonal labor bottlenecks. Some areas were endowed with two growing cycles (nine wet months or more). Such conditions enabled farmers to smooth their labor inputs intra-annually, attenuating the tradeoff between food crops and cotton. If seasonality mattered, we should expect the largest involvement in cotton production here.

To explore this hypothesized relationship between cotton output and the number of wet months, I first impose a one-by-one-degree grid on tropical Africa. For each grid cell, I calculate the average annual number of months with average rainfall over 60 millimeters (the standard threshold for a “tropical wet month” in the Köppen climate classification) during the period 1901–1960, using gridded rainfall data (data sources are listed under Table 1). Next, I sort all grid-cells in mainland tropical Africa into 12 “rainfall zones” by their number of tropical wet months (Table 1, Column (1)). I then calculate the percentage share that each zone contributed to tropical Africa’s total rainfed cotton output, based on a map showing the sub-national distribution of cotton output in 1957 (Column (2)).
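The classification step described above can be sketched in a few lines of Python. This is a minimal illustration, not the study's replication code: the function names and the two monthly rainfall series are hypothetical, and only the 60 mm threshold and the zone boundaries (four or fewer, five to eight, nine or more wet months) are taken from the text.

```python
WET_MONTH_THRESHOLD_MM = 60.0  # threshold for a "tropical wet month", as cited in the text


def count_wet_months(monthly_rainfall_mm):
    """Count the months whose long-run average rainfall exceeds the threshold."""
    if len(monthly_rainfall_mm) != 12:
        raise ValueError("expected 12 monthly averages")
    return sum(1 for mm in monthly_rainfall_mm if mm > WET_MONTH_THRESHOLD_MM)


def seasonality_regime(wet_months):
    """Map a wet-month count onto the three regimes distinguished in the text."""
    if wet_months <= 4:
        return "no or brief growing cycle"
    if wet_months <= 8:
        return "single growing cycle"
    return "two growing cycles"


# Hypothetical grid cells (invented values, not taken from the rainfall data set).
unimodal_cell = [2, 5, 20, 55, 110, 150, 220, 280, 200, 90, 15, 3]
bimodal_cell = [50, 65, 120, 170, 130, 75, 55, 85, 100, 115, 120, 90]

for name, cell in [("unimodal", unimodal_cell), ("bimodal", bimodal_cell)]:
    n = count_wet_months(cell)
    print(name, n, seasonality_regime(n))
```

Note that a count of wet months does not by itself establish that the wet months are consecutive or bimodally distributed; the sketch simply follows the count-based zoning used in Table 1.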

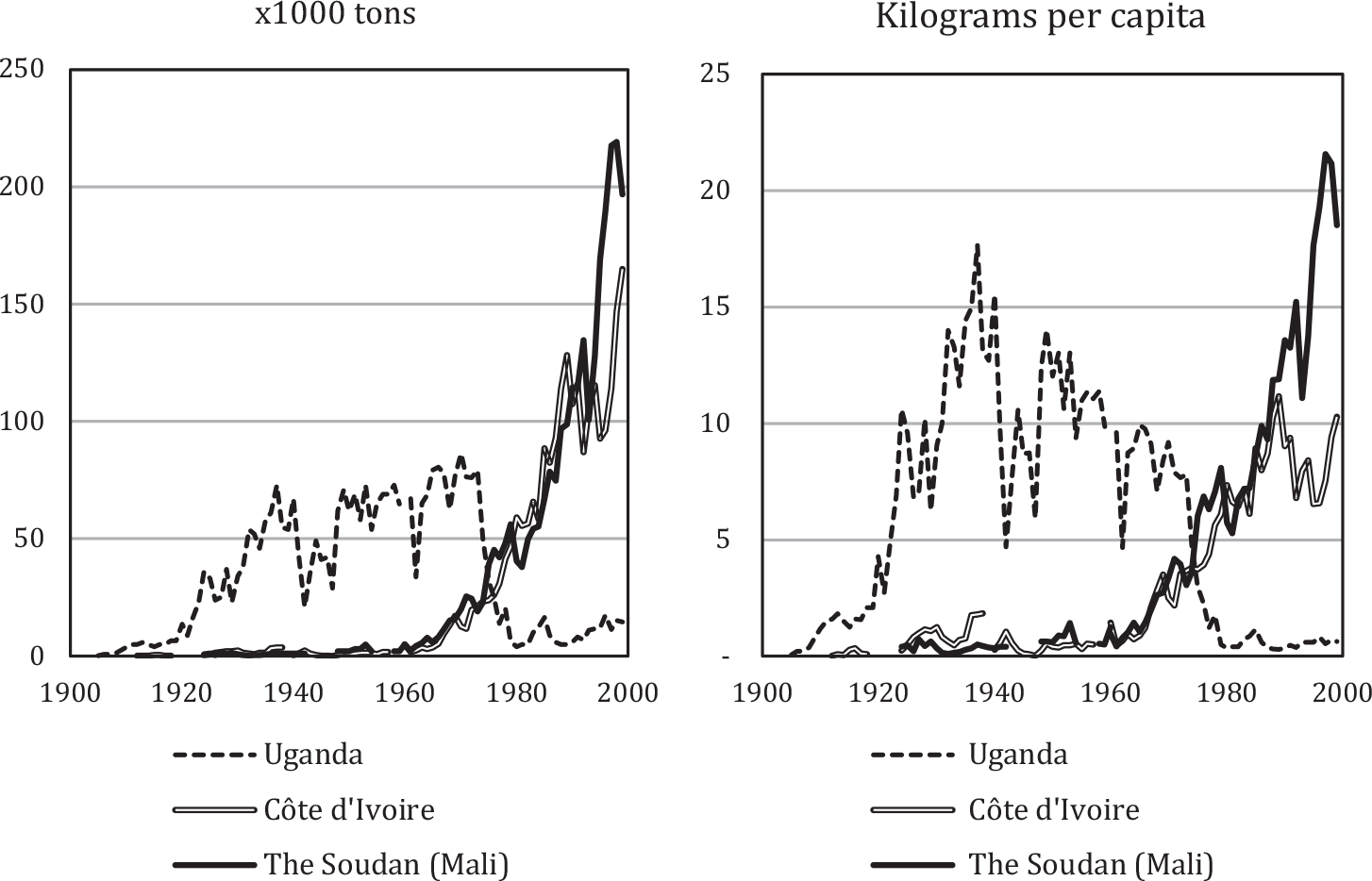

Table 1 RAINFED COTTON OUTPUT AND ENDOWMENTS OF LAND, POPULATION, RAILROADS, AND COTTON YIELD POTENTIAL C. 1960, BY RAINFALL ZONE (SHARES OF TROPICAL AFRICAN TOTAL)

Notes: Columns (2) to (6) each add up to 100 percent. Columns (7) to (10) average out at 1.0. A “wet month” is any month of the year with over 60 millimeters of rain on average in the period 1901–1960, measured per 1 degree by 1 degree grid cell. For the definition of “tropical Africa” see footnote 1.

Sources: Gridded rainfall from Matsuura and Willmott (2018). Rainfed cotton output from a digitized map by Hance, Kotschar, and Peterec (1961) showing the spatial distribution of Africa’s commodity exports, including cotton. This map’s accuracy has been confirmed by Roessler et al. (2020). Additionally, Online Appendix A.1.3 shows that the country-aggregated output correlates well with the data independently collected for Figure 1. Sub-nationally disaggregated population estimates from Klein Goldewijk et al. (2017), railroads from Moradi and Jedwab (2016), cotton yield potential (“agro-climatic suitability”) from FAO/IIASA (2011). Further details in Online Appendix A.1.2. For replication files, see De Haas (2021).

It is important to consider that we may expect more rainfed cotton output in some rainfall zones compared with others simply due to differences in their endowments of land, population, market access (railroads), and agro-climatic cotton yield potential (Columns (3)–(6)). Rather than presenting absolute values, I calculate shares by simply dividing each rainfall zone’s value over tropical Africa’s total so that all rainfall zones jointly add up to 100 percent. For the population shares, I aggregate the population of all constituent grid cells of each rainfall zone. For the landmass shares, I add up the surface area of each rainfall zone’s grid cells. For the railroad shares, I calculate the railway mileage traversing each rainfall zone. For the cotton yield potential, I measure potential yield at the grid-level and add up the values for each rainfall zone. For this purpose, I use the agro-climatic suitability variable from FAO’s Global Agro-Ecological Zones database, assuming rainfed cotton and low-input conditions. This variable considers how favorable local temperature, radiation, and moisture regimes are for crop growth and maturation. Rainfall is factored in, but only to the extent that it is relevant for the growth of the crop itself, not accounting for the seasonality-induced tradeoffs and constraints faced by the farmer who has to cultivate it.
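The aggregation into zone shares can be illustrated as follows. This is a hedged sketch under stated assumptions: the `zone_shares` helper, the field names, and every grid-cell value are hypothetical and serve only to show the "sum per zone, divide by the total" logic described above.

```python
from collections import defaultdict


def zone_shares(cells, variable):
    """Sum `variable` over the grid cells of each rainfall zone and express
    every zone's total as a percentage of the all-zone total."""
    totals = defaultdict(float)
    for cell in cells:
        totals[cell["wet_months"]] += cell[variable]
    grand_total = sum(totals.values())
    return {zone: 100.0 * value / grand_total for zone, value in sorted(totals.items())}


# Hypothetical grid cells; all numbers are invented for illustration.
cells = [
    {"wet_months": 3, "population": 10_000, "area_km2": 12_000},
    {"wet_months": 6, "population": 40_000, "area_km2": 12_100},
    {"wet_months": 6, "population": 20_000, "area_km2": 12_200},
    {"wet_months": 10, "population": 30_000, "area_km2": 12_300},
]

population_shares = zone_shares(cells, "population")
area_shares = zone_shares(cells, "area_km2")
print(population_shares)
```

By construction, the shares for each variable sum to 100 percent across zones, which is the property noted under Table 1 for Columns (2) to (6).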

As Columns (2) to (6) of Table 1 show, very little rainfed cotton was produced in areas with zero to four rainy months (1.9 percent of tropical Africa’s total rainfed cotton output), despite harboring a substantial share of tropical Africa’s landmass (40.1 percent), population (18.2 percent), railroads (21.3 percent), and cotton yield potential (22.4 percent). In areas with five to eight rainy months per year, cotton output was close to the share of landmass, population, railroads, and cotton suitability. In areas with 9 to 12 rainy months, cotton output (41.9 percent) substantially exceeded the shares in tropical Africa’s total landmass (17.1 percent), population (25.5 percent), railroad infrastructure (13.3 percent), and yield potential (16.8 percent).

To further facilitate comparison between the different rainfall zones, I divide cotton output shares by the landmass (Column (7)), population (Column (8)), railroad (Column (9)), and yield potential (Column (10)) shares. The resultant measure takes a value below one if a specific rainfall zone generated less cotton output than we might expect based on its share of tropical Africa’s endowments of landmass, population, railroad, and cotton yield potential. If the value is above one, it generated a larger cotton output share than expected based on these relevant endowments. For example, Column (10) shows that areas with 9 to 12 wet months contributed 2.49 times as much cotton to Africa’s aggregate cotton output as we should expect based on its yield potential. Areas with 5 to 8 wet months contributed 0.86 times the expected output. This allows us to infer that the output per “unit of yield potential” was almost three (2.49/0.86 = 2.90) times higher in areas with two growing cycles than in areas with a single growing cycle.
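The ratios can be reproduced from the shares quoted in the text. The sketch below uses only the figures reported above for the zones with zero to four and 9 to 12 wet months; the shares for the zones with 5 to 8 wet months are not restated in the text, so the reported ratio of 0.86 is entered directly. Variable and function names are my own.

```python
# Shares of tropical Africa's totals (percent), as reported in the text.
shares = {
    "0-4 wet months": {"cotton": 1.9, "landmass": 40.1, "population": 18.2,
                       "railroads": 21.3, "yield_potential": 22.4},
    "9-12 wet months": {"cotton": 41.9, "landmass": 17.1, "population": 25.5,
                        "railroads": 13.3, "yield_potential": 16.8},
}


def output_ratios(zone):
    """Cotton output share divided by each endowment share (1.0 = as expected)."""
    cotton = zone["cotton"]
    return {name: cotton / share for name, share in zone.items() if name != "cotton"}


ratios = {label: output_ratios(zone) for label, zone in shares.items()}
for label, zone_ratios in ratios.items():
    print(label, {name: round(value, 2) for name, value in zone_ratios.items()})

# Ratio for the zones with 5 to 8 wet months, entered as reported in the text.
REPORTED_RATIO_5_TO_8 = 0.86
relative_intensity = ratios["9-12 wet months"]["yield_potential"] / REPORTED_RATIO_5_TO_8
print(round(relative_intensity, 1))
```

A value well below one for the driest zones on every endowment, and well above one for the wettest zones, is the pattern the paragraph describes.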

The above exercise does not have immediate causal implications and does not elucidate any of the mechanisms by which seasonality affected cotton output. However, the fact that substantially more cotton output was generated in areas with two agricultural growing cycles per year than their relevant resource and infrastructure endowments would “predict” is consistent with the argument that seasonality mattered for cotton (non-)adoption. It also demonstrates that agro-climatic suitability alone poorly explains output. To assess more precisely how rainfall seasonality was linked to cotton output, I now zoom in on two regions, the interior savanna of FWA and Uganda, which were similar in many respects, but had very different rainfall distributions, as well as opposing cotton adoption outcomes.

CONTRASTING CASES: UGANDA AND FRENCH WEST AFRICA

Cotton exports from landlocked Uganda took off soon after the completion of a coastal railway in 1902 (Ehrlich 1965). During the 1920s, output further accelerated and expanded spatially. By the 1950s, cotton production had diffused to a diverse region of ca. 135,000 hectares, inhabited by approximately 4.5 million people (Uganda Protectorate 1961). During the 1950s, smallholder-grown coffee took over as Uganda’s most valuable export crop, but cotton production was maintained on a large scale and continued to be the sole major cash crop in most regions (De Haas 2017b). Uganda’s cotton exports declined precipitously during the 1970s under the Amin regime, in the context of collapsing institutions and the expulsion of Uganda’s commercially important Asian minority (Jamal 1976). Cotton production recovered somewhat later on but never bounced back to pre-collapse levels.

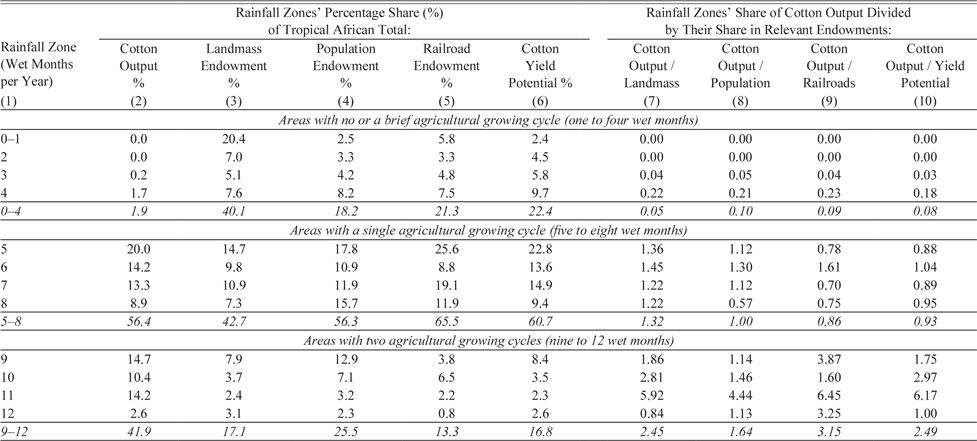

Meanwhile, French efforts to export cotton from the West African savanna started in earnest when two respective railways reached Bamako (the Soudan) in 1904 and Bouaké (northern Côte d’Ivoire) in 1912 (Bassett 2001, pp. 56–8; Roberts 1996, p. 80). Despite high expectations and a persistent politique cotonnière, the volume and quality of smallholder-grown cotton disappointed enormously and remained far behind Uganda’s (Figure 2). The French attempted to generate cotton exports in other ways. In the Niger River Valley in the Soudan, they established the “Office du Niger,” a highly capitalized and irrigated cotton-growing scheme (Roberts 1996, pp. 118–44, 223–48; van Beusekom 2002). In northern Côte d’Ivoire, they invited European planters, who already dominated coffee and cocoa production in the country’s southern forest regions (Bassett 2001, p. 77). However, these projects struggled to attract sufficient labor and settlers and incurred persistent losses, which made an influential strand of the French administration stick to the promise of rainfed cotton cultivation by African farmers (Roberts 1996, pp. 163–91, 149–82). After independence, this promise was finally delivered through a belated but impressive “peasant cotton revolution” (Bassett 2001). From 1961–1965 to 1995–1999, Benin, Burkina Faso, Côte d’Ivoire, Mali, and Togo significantly expanded their joint share of sub-Saharan cotton production from 2.8 to 35.4 percent, and in worldwide production from 0.2 to 2.8 percent (FAOSTAT). I will discuss the changing conditions that enabled this post-colonial take-off in a final section of the paper.

Figure 2 COTTON PRODUCTION IN UGANDA, CÔTE D’IVOIRE, AND MALI, 1900–2000

Note: Data for the Soudan before 1937 refer to export, not production.

Sources: Data post-1960 are from the FAOSTAT database, earlier data from Mitchell (1995, pp. 244–45), Côte d’Ivoire before 1948 from Bassett (2001, p. 52), and the Soudan before 1948 from Roberts (1996, pp. 268, 245). Whenever applicable, a 3:1 seed-cotton-to-cotton-lint conversion is used.

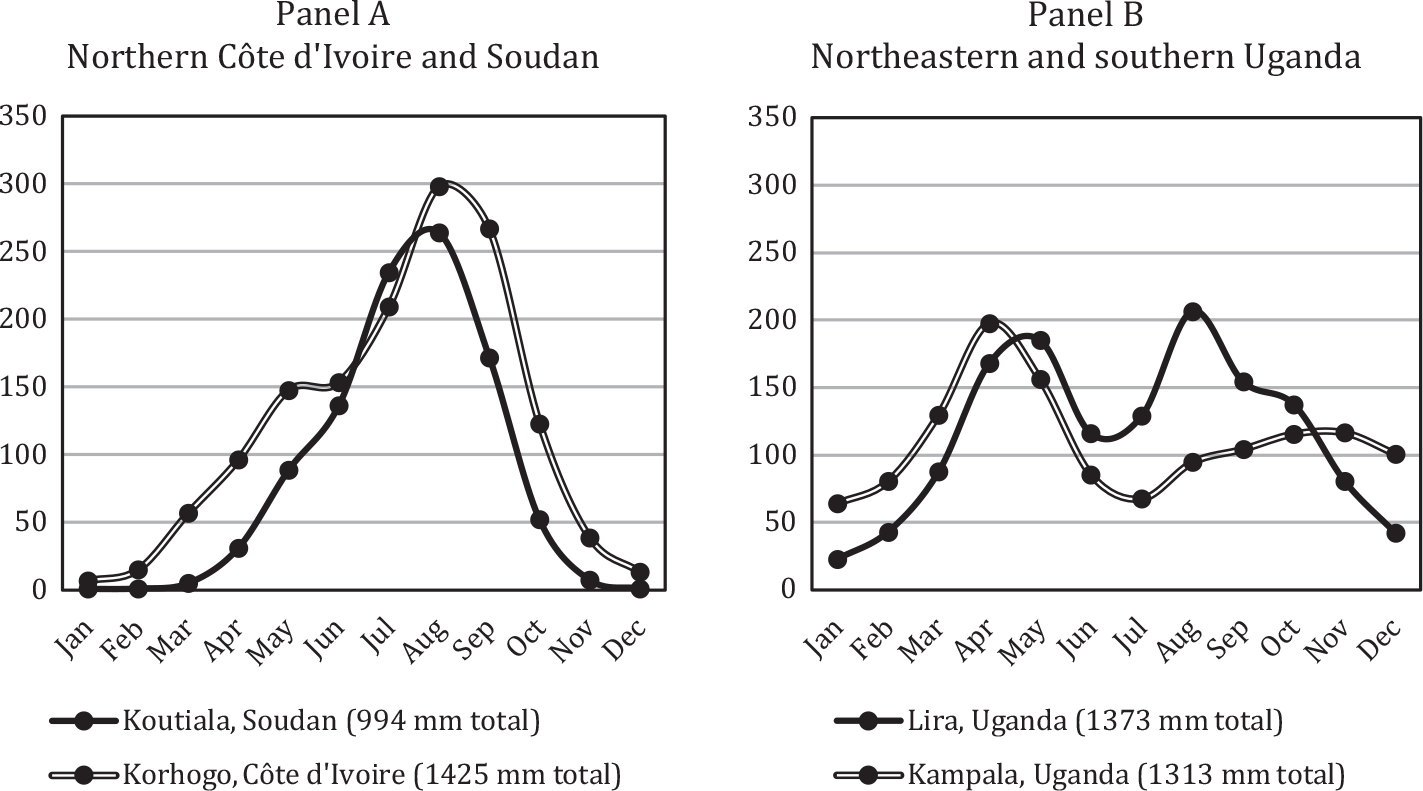

The Uganda and FWA cases not only present a compelling contrast of outcomes but also of rainfall seasonality patterns. Importantly, the two regions have similar agro-climatic conditions, which allows us to focus on the effect of rainfall seasonality. Annual rainfall quantities (1901–1960) suggest that environmental conditions in the two regions were overlapping rather than distinct: 1,425 mm in Korhogo (northern Côte d’Ivoire), 1,313 mm in Kampala (Buganda), and 1,373 mm in Lira (northeast Uganda), and a considerably lower annual total of 994 mm in Koutiala (Mali) (Matsuura and Willmott 2018). Aside from southern Uganda’s banana farmers, cropping patterns and agricultural practices in Uganda and FWA were also similar, revolving around annual crops such as grains, oil crops, and beans, supplemented by some cassava and yams (McMaster 1962; Parsons 1960a, pp. 14–67, 1960b, pp. 1–45; Tothill 1940; Jameson 1970). Importantly, Uganda did not outperform the other two countries in terms of cotton yield potential. Instead, the aggregate agro-climatological yield potential of cotton (under rainfed conditions) from Côte d’Ivoire and the Soudan jointly was over 3.5 times as large as Uganda’s (FAO/IIASA 2011). Where environmental conditions in Uganda and FWA clearly diverge is in their very different patterns of rainfall seasonality. As shown in Figure 3A, farmers in northern Côte d’Ivoire had to make do with a fairly short rainy season of seven months, during which they had to procure all of their food and cash crops. The rainy season in the Soudan was even shorter, at five months. Ugandan farmers (Figure 3B) instead benefited from a bimodal rainfall distribution with 9 to 11 wet months, which enabled them to harvest two consecutive crops each year.

Figure 3 AVERAGE MONTHLY RAINFALL (IN MILLIMETERS), 1900–1960

Sources: Calculated based on closest grid-point in Matsuura and Willmott (2018).

LABOR SEASONALITY AND COTTON OUTPUT: A SIMULATION

To quantify how seasonality affected the cotton-growing capacity of farmers in colonial Uganda versus FWA, I estimate and compare the maximum cotton output of a uniform, stylized farming unit under two different seasonality regimes. The farm maintains two adults and three children; cultivates cotton and grain; and is assumed to maximize cotton output facing three constraints: (1) a maximum daily labor input, (2) food self-sufficiency, and (3) a given seasonal distribution of agricultural labor inputs. In this simulation exercise, we hold all conditions constant, except for the intra-annual distribution of labor demands, which include clearing, planting, weeding, and harvesting operations. As the timing of these different operations was strictly circumscribed by the rainfall cycle, this allows us to isolate the effect of seasonality on cotton output. [Footnote 3]
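The logic of this constrained maximization can be sketched as follows. This is a simplified monthly illustration, not the study's simulation: the function name, the monthly labor profiles, the labor capacity, and the food area are all hypothetical placeholders chosen for readability, and the actual exercise rests on historical farm-level data.

```python
def max_cotton_hectares(food_ha, food_days_per_ha, cotton_days_per_ha, monthly_capacity):
    """Largest cotton area for which food plus cotton labor fits within
    the household's labor capacity in every month of the year."""
    limits = []
    for food_days, cotton_days in zip(food_days_per_ha, cotton_days_per_ha):
        spare = monthly_capacity - food_ha * food_days  # labor left after food crops
        if spare < 0:
            raise ValueError("food crops alone exceed monthly labor capacity")
        if cotton_days > 0:
            limits.append(spare / cotton_days)
    return min(limits) if limits else 0.0


# All numbers below are hypothetical placeholders, not the study's farm-level data.
MONTHLY_CAPACITY = 48.0  # adult-male-equivalent days per month (assumed)
FOOD_HA = 2.0            # hectares of grain needed for self-sufficiency (assumed)

# Labor days per hectare in each calendar month, January to December.
unimodal_food = [0, 0, 0, 0, 12, 18, 18, 18, 18, 15, 0, 0]
unimodal_cotton = [0, 0, 0, 0, 0, 30, 40, 40, 30, 30, 28, 0]
bimodal_food = [0, 12, 20, 22, 22, 15, 8, 0, 0, 0, 0, 0]
bimodal_cotton = [0, 0, 0, 0, 0, 0, 30, 45, 45, 30, 28, 20]

unimodal_max = max_cotton_hectares(FOOD_HA, unimodal_food, unimodal_cotton, MONTHLY_CAPACITY)
bimodal_max = max_cotton_hectares(FOOD_HA, bimodal_food, bimodal_cotton, MONTHLY_CAPACITY)
print(f"single cycle: {unimodal_max:.2f} ha, two cycles: {bimodal_max:.2f} ha")
```

In this toy setup, the binding constraint under a single growing cycle is the month in which food-crop and cotton labor peaks coincide; spreading the same annual labor demands over two cycles relaxes it. The magnitudes carry no empirical meaning.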

As a first step in the simulation, I establish a feasible maximum labor availability. The vast majority of farm households in the cotton-growing regions of northeastern Uganda, Côte d’Ivoire, and the Soudan did not procure labor outside the household, aside from “work parties,” short-term village- or clan-based labor exchanges that alleviated some pressure during the peak season (Bassett 2001, pp. 130–2; Tosh 1978). While men and women traditionally had separate tasks (clearing and weeding, respectively), such distinctions largely faded during the colonial period, so that we can assume that households efficiently coordinated their farming duties, especially during the peak season (Boserup 1970, pp. 16–24; De Haas 2017b, p. 622). Women farmed at least as many hours as men during the year as a whole, but men could allocate their full labor time to farming during the peak season, while women had to also attend to reproductive duties, including child-rearing, food preparation, and water and firewood gathering (Bassett 2001, p. 134; Cleave 1974, p. 191; Vail 1972, p. 37). Young children, even those not in school, generally performed little field labor (Cleave 1974, p. 191) but contributed by chasing away animals, tending livestock, and sorting the cotton post-harvest. Based on these considerations, I estimate that households were able to provide up to 1.6 adult male equivalents of agricultural field labor per day, every single day of the month during the peak season.

Second, I assume that the farm supported five consumers with an average nutritional requirement of 2,100 kilocalories (kcal) per day (De Haas Reference De Haas2017b) and produced an additional 25 percent surplus to hedge against partial harvest failures. The farming unit cultivated just enough food crops for self-sufficiency and used the remaining agricultural labor to maximize cotton output. This is consistent with a “safety first” farming strategy in a context of land abundance and thin food crop markets (Binswanger and McIntire 1987; De Janvry, Fafchamps, and Sadoulet 1991). In such conditions, an inverse relationship exists between the size of the food crop harvest and the price, which discourages specialization. Absent external demand, food crops cannot be sold profitably in years of localized abundance, leading farmers to diversify into other commercial activities (including cotton cultivation) to obtain non-subsistence commodities and fulfill taxes, school fees, and other cash obligations. In the absence of an external supply of food crops, farmers also avoid relying on non-edible crops, as food cannot be bought affordably and reliably in years of localized shortage (De Janvry, Fafchamps, and Sadoulet 1991, pp. 1401–3). Even in the postbellum southern United States, a context of much better food market functioning than FWA and Uganda, cotton-growing smallholders still pursued a “safety first” strategy. To explain this, Wright (Reference Wright1978, p. 64) points out that “using cotton as a means of meeting food requirements involved the combined risks of cotton yields, cotton prices, and corn prices. The man who grows his own corn need only worry about yields.” In a later section, I will discuss in more detail the potential substitution effects between cotton and food crop marketing.

Third, I estimate the total adult-male-equivalent days of farm labor required per hectare of food and cotton crops. Millet was the most important grain in farmers’ diets in the savannas of FWA and Uganda. I follow De Haas (Reference De Haas2017b, p. 609) in estimating a millet labor requirement of 99 adult-male-equivalent days per hectare. Also, following De Haas (Reference De Haas2017b, p. 615) for Uganda, and Benjaminson (Reference Benjaminson, Benjaminsen and Lund2001, p. 264) and Labouret (Reference Labouret1941, pp. 211–6) for FWA, I estimate a typical millet yield of 606 kg/hectare after accounting for waste, losses, and seed retention, and a caloric value of 3,417 kcal/kg. We can now establish that the stylized farming unit required 2.31 hectares of food crops to be self-sufficient, which equates to a labor input of 229 adult-male-equivalent days per year. I estimate total annual labor requirements for cotton at 198 adult-male-equivalent days per hectare (De Haas Reference De Haas2017b, p. 615).
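The arithmetic behind the 2.31 hectares and 229 labor days can be retraced with a minimal sketch. All inputs are the figures quoted in the second and third steps; the 365-day year is an assumption, since the text does not state the year length used, and the variable names are illustrative only.

```python
# Inputs quoted in the text (steps two and three of the simulation).
consumers = 5                   # two adults and three children
kcal_per_person_day = 2100      # average nutritional requirement
surplus = 0.25                  # "safety first" buffer against harvest failure
millet_yield_kg_per_ha = 606    # net of waste, losses, and seed retention
millet_kcal_per_kg = 3417
millet_days_per_ha = 99         # adult-male-equivalent days per hectare

# Assumption: a 365-day year (not stated explicitly in the text).
annual_kcal_needed = consumers * kcal_per_person_day * 365 * (1 + surplus)
kcal_per_ha = millet_yield_kg_per_ha * millet_kcal_per_kg

food_hectares = annual_kcal_needed / kcal_per_ha
food_labor_days = food_hectares * millet_days_per_ha

print(round(food_hectares, 2), round(food_labor_days))  # 2.31 229
```

Under these assumptions the sketch reproduces both reported figures, which suggests that the surplus margin enters multiplicatively on the caloric requirement.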

Fourth, I establish the monthly distribution of labor requirements for cotton and food crops which—crucially and unlike the previous three steps—differed between the stylized farms in FWA and Uganda due to their different seasonality patterns. For Uganda, I obtain monthly labor distribution data from 1964–1965, based on 30 farms each in Aboke (Lango district) and Koro (Acholi district), and one experimental farm observed ca. 1970 in Teso district (Cleave Reference Cleave1974, pp. 87, 121; Vail Reference Vail1972, p. 104). For FWA, I rely on data from seven farms in Katiali (northern Côte d’Ivoire), collected by Bassett in 1981 and 1982 (Bassett Reference Bassett2001, p. 126).

The Ivorian data have several issues that need to be resolved up front. First, the observed intra-annual labor-input distribution pertains to a period where farmers had gained improved access to labor-saving innovations. Applying it to an earlier period, before these innovations were adopted, implies that the intervening labor-saving effects were smoothly distributed throughout the year. For most of the cotton-growing cycle, this is a realistic assumption, as animal traction reduced labor inputs early in the season, while the application of herbicides reduced the need for mid-season weeding (Bassett Reference Bassett2001, pp. 107–45).Footnote 4 However, harvest labor demands increased between c. 1960 and 1980, as yields increased fourfold for cotton (Bosc and Hanak Freud 1995, p. 282).

It is possible that this increased labor demand was partly alleviated by increased picking efficiency, which could result, for example, from the introduction of new varieties. Indeed, Olmstead and Rhode (2008) have shown that biological innovation increased cotton-picking efficiency on the southern U.S. slave plantations by a factor of four between 1801 and 1862. Unlike in the southern United States, however (Olmstead and Rhode 2008, p. 1142), picking was not “the key binding constraint on cotton production” in FWA, so this type of innovation was not urgent. To be on the conservative side, I still assume that Ivorian cotton-picking efficiency doubled between 1960 and 1980.Footnote 5 I have no reason to account for changes in food crop harvest efficiency. I assume that the reported yield increase from c. 600 to 1,000 kilograms per hectare (Benjaminson Reference Benjaminson, Benjaminsen and Lund2001, p. 264) between 1960 and 1980 came with a proportional increase in required labor inputs.

A final limitation of the Ivorian data is that rainfall in the year of data collection tapered off early, in September instead of November (Bassett Reference Bassett2001, p. 120). Importantly, however, we will find that the seasonal labor bottleneck occurred before the premature end of that year’s rainy season, which largely mitigates this concern.

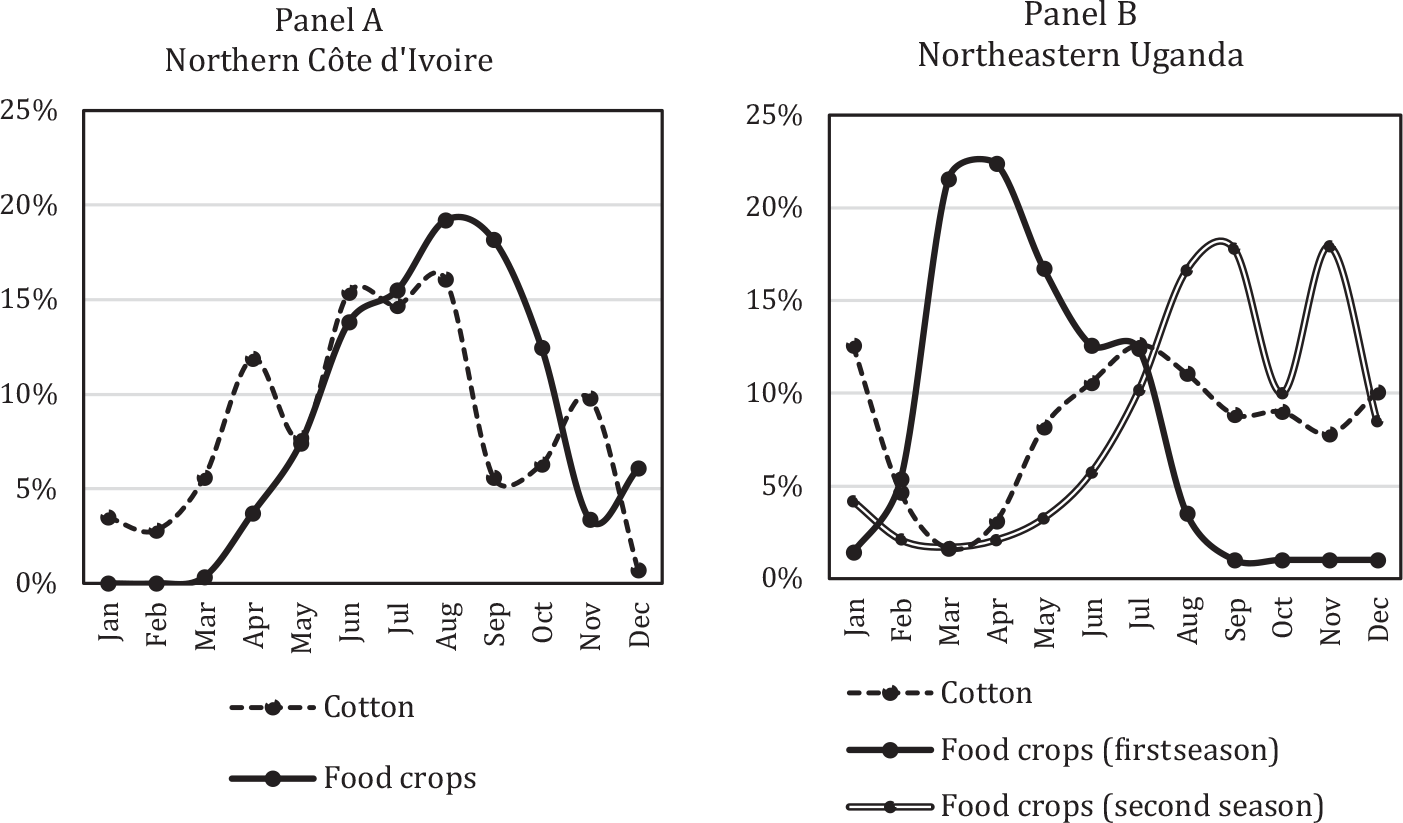

Figure 4 shows the intra-annual distribution of agricultural labor requirements, expressing the monthly inputs as shares of the annual total labor requirements of cotton and food crops, respectively. As can be seen in Panel A, Ivorian labor demands for food crops and cotton peaked jointly in a single agricultural cycle. In their writings, French colonial administrators showed a clear awareness that cotton and food crops directly competed for scarce labor inputs during this time of the year. According to Bassett (Reference Bassett2001, p. 126), Ivorian officials (1912) observed “that it would be difficult to expand cotton cultivation […] without improving labor productivity. Otherwise, there simply was not enough time in the agricultural calendar if farmers gave priority to food security.” An official in the Soudan (1924) remarked that “the increase in cotton production in this colony will have as its corollary the reduction in the volume of grains […]. The agricultural labor […] will be thus allocated differently, but will not vary” (Roberts Reference Roberts1996, p. 167).

Figure 4 MONTHLY LABOR REQUIREMENTS (SHARE OF TOTAL ANNUAL LABOR INPUTS PER CROP CATEGORY)

Sources: Monthly shares calculated on the basis of data from Bassett (Reference Bassett2001, p. 126), Cleave (Reference Cleave1974, pp. 87, 121), and Vail (Reference Vail1972, p. 104).

In contrast, Uganda’s food crops could be sown and harvested twice during the year, while cotton was typically relegated to the second rainy season (Figure 4, Panel B). Because labor demands for Uganda’s two seasons overlapped from May to August, farmers had to calibrate their cropping choices to accommodate the parallel tasks of harvesting first-season crops and planting second-season crops. To allow for the harvest of first-season food crops, as well as to mitigate the impact of adverse weather events (such as a hailstorm or short drought, which interfered with the germination of seeds), cotton planting was “staggered” over four months (May to August). Early cotton was sown in newly opened fields, while late cotton was planted in freshly harvested fields (Tothill 1940, pp. 42–52).

As a fifth and final step, I multiply the monthly labor shares (Figure 4) by the annual adult-male-equivalent day requirements per hectare (noted above) to obtain the actual number of adult-male-equivalent days required for each month per hectare of cotton and food crops. By dividing the monthly inputs by the number of days of each month, I establish a daily labor requirement for each month. The hectarage of cotton that can be cultivated is therefore given by the month in which the combined labor requirement for 2.31 hectares of food crops (at self-sufficiency) and cotton (maximized number of hectares) first reaches the point where the 1.6 daily available labor units are fully engaged.Footnote 6 Because farmers had access to work parties, which may have enabled them to shift labor inputs during the peak-labor month, I slightly relax this single peak-month condition, instead assuming that labor inputs in the three most labor-constrained months could be smoothed (June, July, and August in both FWA and Uganda). Because there is only one growing season, the procedure of calculating the maximum potential cotton hectarage is straightforward in FWA. In the case of Uganda, an intermediary calculation is necessary to calibrate the optimal shares of food crops cultivated in the first and second seasons to maximize the cotton hectarage. In the optimized simulation, Ugandan households cultivated 63 percent of their food crops in the first season and 37 percent in the second season.Footnote 7
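The single-season (FWA) version of this procedure can be sketched as a small linear calculation. The sketch below is illustrative rather than a reproduction of the simulation: the monthly share vectors are hypothetical placeholders (the actual shares underlie Figure 4 and are not listed in the text), the function name and arguments are my own, and the smoothing of the three peak months is modeled, as an assumption, by pooling their labor supply and demand into one constraint. The Ugandan two-season calibration of food-crop shares is not attempted here.

```python
DAYS_IN_MONTH = [31, 28, 31, 30, 31, 30, 31, 31, 30, 31, 30, 31]

def max_cotton_hectares(food_shares, cotton_shares, food_ha=2.31,
                        food_days_per_ha=99.0, cotton_days_per_ha=198.0,
                        daily_labor=1.6, smooth_months=(5, 6, 7)):
    """Largest cotton hectarage compatible with food self-sufficiency.

    food_shares / cotton_shares: 12 monthly shares of annual labor (sum to 1).
    smooth_months: zero-based months pooled into one constraint
    (default June, July, August), mimicking the work-party relaxation.
    """
    pooled = list(smooth_months)
    # Every month outside the pooled window binds on its own.
    groups = [[m] for m in range(12) if m not in pooled]
    if pooled:
        groups.append(pooled)

    best = float("inf")
    for group in groups:
        available = daily_labor * sum(DAYS_IN_MONTH[m] for m in group)
        food_need = food_ha * food_days_per_ha * sum(food_shares[m] for m in group)
        cotton_per_ha = cotton_days_per_ha * sum(cotton_shares[m] for m in group)
        if cotton_per_ha > 0:
            # Hectares of cotton that exhaust the labor left after food crops.
            best = min(best, (available - food_need) / cotton_per_ha)
    return max(best, 0.0)

# Hypothetical single-season profile (NOT the Figure 4 data).
food = [0, 0, 0, 0.05, 0.15, 0.20, 0.20, 0.15, 0.10, 0.10, 0.05, 0]
cotton = [0, 0, 0, 0, 0.05, 0.20, 0.25, 0.20, 0.05, 0.05, 0.10, 0.10]
print(max_cotton_hectares(food, cotton))
```

Because the binding constraint is the minimum over months (or over the pooled window), concentrating food and cotton labor in the same months lowers the feasible cotton area, which is the mechanism the simulation is designed to isolate.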

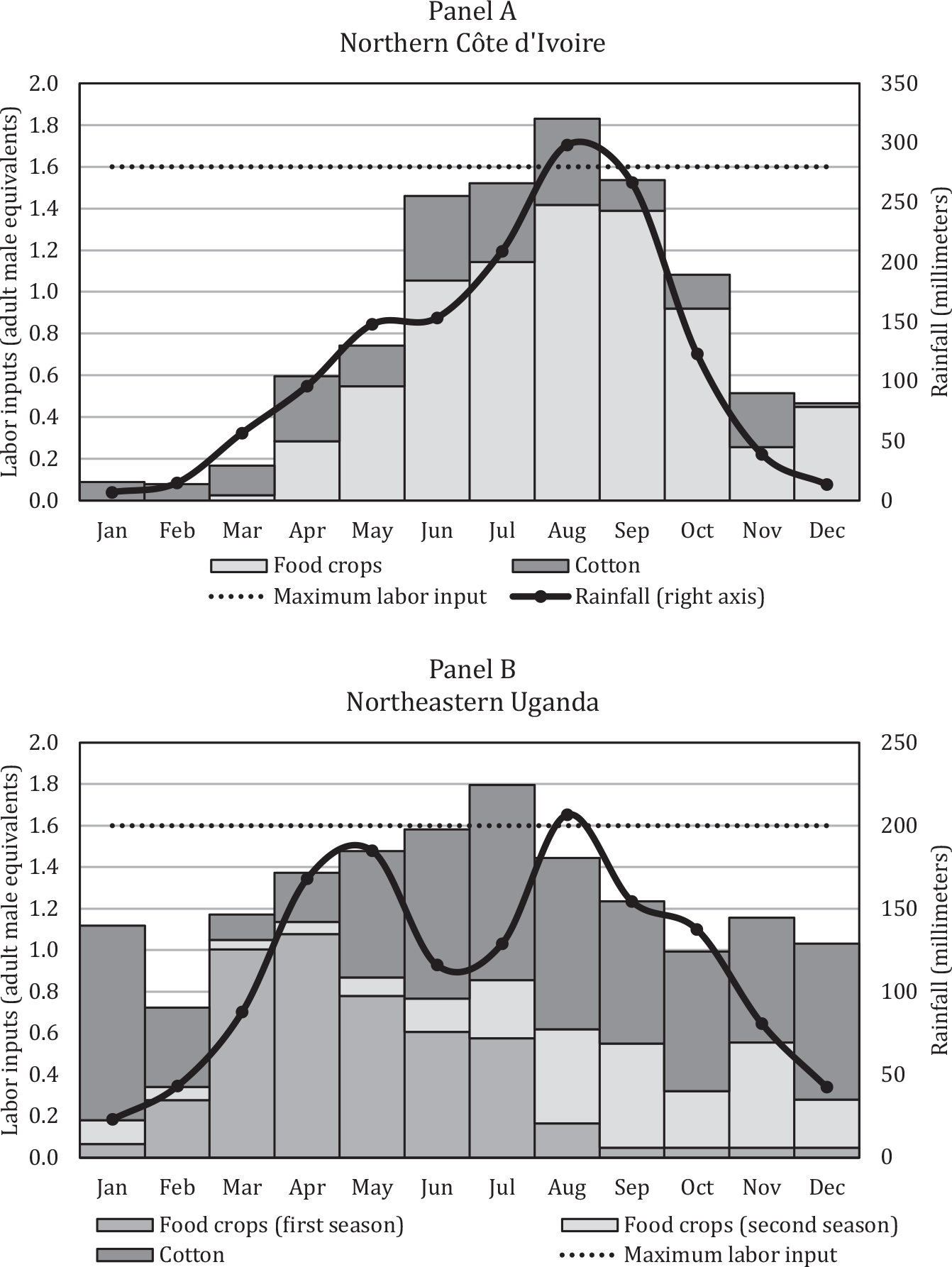

Figure 5 visualizes the final result. The difference between Côte d’Ivoire and Uganda is large. While farmers in FWA (Panel A) were capable of cultivating at most 0.40 hectares of cotton while retaining food self-sufficiency, farmers in Uganda (Panel B) could cultivate close to three times as much: 1.17 hectares. It is important to recall that this rift in simulated cotton output arises solely from differences in the intra-annual distribution of labor inputs. This, in turn, is a result of contrasting rainfall patterns, which allowed for only a single growing cycle in FWA versus two growing cycles in Uganda. It is reassuring that the simulated results are very close to farm survey data from colonial Uganda (Table 2), as well as farm-level data from colonial FWA. A large Soudanese farming unit surveyed in 1937, consisting of eight adults (four men, four women) and seven children, cultivated 10.84 hectares, including 7.0 hectares of millet, 3.14 hectares of secondary food crops, and 0.7 hectares of cotton (Labouret Reference Labouret1941, pp. 211–6). This translates to 2.54 hectares of food crops and 0.18 hectares of cotton per adult pair (the equivalent of our stylized farm unit), which is within the simulated cotton production possibilities in FWA.
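For transparency, the per-adult-pair conversion of the 1937 Soudanese farm can be written out. Eight adults correspond to four adult pairs, so the reported areas are divided by four; the comparison with the simulated ceiling of 0.40 hectares and the Uganda-to-FWA ratio follow directly from the figures quoted above.

$$\frac{7.0 + 3.14}{4} = 2.535 \approx 2.54\ \text{ha of food crops}, \qquad \frac{0.7}{4} = 0.175 \approx 0.18\ \text{ha of cotton} < 0.40\ \text{ha}$$

$$\frac{1.17}{0.40} \approx 2.9$$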

Figure 5 SIMULATION OF MONTHLY FOOD CROP AND COTTON LABOR INPUTS

Sources: Author’s calculations (see text). Rainfall information taken from Figure 4.

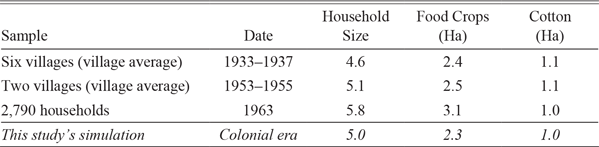

Table 2 UGANDAN HOUSEHOLD SIZES AND CROP ACREAGES: SIMULATION VERSUS ACTUAL FARM SURVEY DATA

Sources: De Haas (Reference De Haas2017b, Online Appendix, Table A2).

FOOD YIELDS AND COTTON PLANTING IN UGANDA: A PANEL ANALYSIS

Rainfall seasonality also impacted farmers’ cotton cultivation through a second mechanism. When facing short rainy seasons, farmers had to plant, tend, and harvest all food crops and cotton simultaneously. This created a risk assessment problem; in the Soudan, as noted by Roberts (Reference Roberts1980, p. 53), “where a farmer has devoted a significant portion of his energy to the cultivation of nonedible cash crops, a poor harvest may result in a reduced capacity to survive.” Not being able to anticipate fluctuations in harvest outcomes, farmers had to hedge against the possibility of partial harvest failure. This is why we assumed, in the simulation above, that farmers structurally planned for a “normal surplus” of 25 percent more food crops than their subsistence needs. This implies an inefficient allocation of resources: overinvestment in subsistence crops to reduce risk at the expense of cotton planting and cash income.

What we have not yet considered is that a longer growing season (and especially two growing cycles per year) enabled farmers to avoid growing a surplus, as they could assess the yields of their first season food crops before deciding whether to grow additional food crops in the second season to achieve self-sufficiency or to instead invest in cotton to augment cash income. If Ugandan farmers indeed made an informed decision to adjust their resource investment in cotton planting based on their mid-year food position, we should find that they reduced their second-season cotton acreage after a bad food crop yield in the preceding season, and vice versa. To test this hypothesis, I analyze a panel consisting of 10 districts in colonial Uganda over 38 years (1925–1962). The key variables are the rainfall during the first four months of the year, which proxies for the first-season food-crop harvest (independent) and cotton acreages planted during the second season (dependent).Footnote 8

Because cotton was crucial to Uganda’s economy, and output depended on farmers’ annual planting decisions, the colonial administration devised a system to monitor acreage. Local African chiefs were required to count cotton “gardens” in their administrative area. These counts were transformed into an acreage estimate using a standardized conversion based on a sample of representative fields and accumulated at the district level to be reported in the Annual Report of the Department of Agriculture.Footnote 9 Chiefs inflated acreages to meet performance expectations, and measuring practices were periodically adjusted.Footnote 10 However, the acreage statistics emerged from systematic and bottom-up data collection. After the inclusion of district and year controls, we do not expect non-random bias.

Unfortunately, colonial-era data on food crop acreages, let alone yields, was of very poor quality. Instead, we can use rainfall, measured monthly at numerous locations using precipitation gauges, as a workable proxy for harvest outcomes. In tropical conditions, rainfall during the growing season was the prime determinant of yield outcomes. A voluminous body of econometric studies has demonstrated that annual or seasonal rainfall variability explains a variety of economic and social outcomes in Uganda (Asiimwe and Mpuga 2007; Björkman-Nyqvist Reference Björkman-Nyqvist2013; Agamile, Dimova, and Golan 2021) and in economies with high dependence on rainfed agriculture more generally (Carleton and Hsiang 2016; Dell, Jones, and Olken 2014). The effects of rainfall variability have been found to be most pronounced during extreme events in both directions (droughts or floods), but smaller deviations from the expected rainfall pattern also adversely affect output.

To proxy first-season harvest outcomes, I consider the total rainfall during the first four months of the year. While the millet growing cycle lasted from January to August (Figure 5), the rainfall during the first four months of the year (January to April) was most crucial for yields because it was during these months that the newly planted seeds germinated and transformed moisture and nutrients into biomass. Another reason for considering these four months only is that rainfall conditions from May onwards had a direct bearing on cotton-planting decisions, as almost all cotton planting took place from May to August, which interferes with our identification of the food crop harvest effect. I express rainfall deviation during the first four months of the year in z-scores and transform to absolute (non-negative) values to capture the expectation that deviation from the long-run mean has an adverse linear impact on harvest outcomes:

$$\left| {{z_{i,t}}} \right| = \left| {\frac{{{x_{i,t}} - {{\bar x}_i}}}{{{\sigma _i}}}} \right|$$

where $\bar x_i$ is the long-term mean (1925–1962) of each district, $x_{i,t}$ is the annual observation in time t for district i, and $\sigma_i$ is the standard deviation of each panel (that is, for every i).
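The absolute z-score transformation described above can be illustrated with a short sketch. The rainfall totals are hypothetical, the function name is my own, and the use of the sample standard deviation is an assumption, as the text does not specify whether the sample or population formula was applied.

```python
from statistics import mean, stdev

def absolute_z_scores(rainfall_by_year):
    """Map each year to |x - district mean| / district standard deviation.

    rainfall_by_year: dict of January-to-April rainfall totals for ONE district.
    Assumption: sample standard deviation (statistics.stdev).
    """
    values = list(rainfall_by_year.values())
    mu, sigma = mean(values), stdev(values)
    return {year: abs((x - mu) / sigma) for year, x in rainfall_by_year.items()}

# Hypothetical January-to-April totals (mm) for a single district.
example = {1925: 300.0, 1926: 420.0, 1927: 360.0, 1928: 240.0, 1929: 480.0}
scores = absolute_z_scores(example)
print(scores)
```

Taking the absolute value means that an unusually dry and an unusually wet first season of equal magnitude (1928 and 1929 in this example) receive the same score, in line with the expectation that deviations in either direction harm the harvest.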

For each district, I use observations from the meteorological station for which most data points are available. Footnote 11 I merge two adjacent small cotton-growing districts, Mubende and Toro, because (i) consistent rainfall observations for Mubende are lacking; (ii) unlike other districts, these two districts were effectively treated as a single cotton zone by the colonial authorities; and (iii) for some years their acreage statistics were reported jointly. Although some earlier rainfall and acreage statistics are available, I take 1925 as the starting year because (1) we have almost complete data for all districts by this time; (2) acreage expansions before 1925 were substantial and haphazard and spurred by government cotton campaigns; (3) by 1925 cotton was widely diffused, and acreages (and data quality) had stabilized across districts; and (4) starting in 1925 excludes the sharp acreage fluctuations related to a currency realignment that took place in the early 1920s. The last year considered coincides with the last planting season under colonial rule (which ended on 9 October 1962).

To assess farmers’ investment in cotton, I estimate the following regression model:

$$Ln{(CottonAcres)_{i,t}} = {\beta _1}\left| {{z_{i,t}}} \right| + {\nu _i} + {\mu _t} + {\delta _{i,t}} + {\varepsilon _{i,t}}$$

where $Ln{(CottonAcres)_{i,t}}$ denotes the natural logarithm of the cotton acreage planted per district i and year t, $\left| z_{i,t} \right|$ is the absolute z-score of January-to-April rainfall, and $\varepsilon_{i,t}$ is the error term. District and year fixed effects are denoted $\nu_i$ and $\mu_t$ respectively, while $\delta_{i,t}$ captures unobservable district characteristics $(\nu_i)$ interacted with a linear time trend (t) to account for district-specific time trends. The coefficient of interest, $\beta_1$, is the estimated effect of a one standard deviation change (either positive or negative) in rainfall on the log cotton acreage. A negative sign, $\beta_1 < 0$, indicates that, on average, rainfall deviations from January to April are associated with a lower second-season cotton acreage.
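To make the role of the district and year fixed effects concrete, the following sketch estimates the slope on a balanced panel by two-way demeaning (the within transformation). It is a simplified illustration, not the estimation reported in Table 3: the data are synthetic, the function name is hypothetical, and district-specific time trends and robust standard errors are deliberately left out.

```python
import random

def two_way_within_slope(y, x):
    """Slope of y on x after removing district and year means.

    y, x: dicts keyed by (district, year) on a BALANCED panel.
    """
    districts = sorted({i for i, _ in y})
    years = sorted({t for _, t in y})

    def demean(v):
        grand = sum(v.values()) / len(v)
        by_i = {i: sum(v[i, t] for t in years) / len(years) for i in districts}
        by_t = {t: sum(v[i, t] for i in districts) / len(districts) for t in years}
        return {(i, t): v[i, t] - by_i[i] - by_t[t] + grand for (i, t) in v}

    yd, xd = demean(y), demean(x)
    return sum(xd[k] * yd[k] for k in xd) / sum(xd[k] ** 2 for k in xd)

# Synthetic panel: 10 districts, 1925-1962, noise-free for clarity.
random.seed(0)
districts, years = range(10), range(1925, 1963)
nu = {i: random.gauss(10, 1) for i in districts}    # district effects
mu = {t: random.gauss(0, 0.2) for t in years}       # year effects
x = {(i, t): abs(random.gauss(0, 1)) for i in districts for t in years}
y = {(i, t): nu[i] + mu[t] - 0.06 * x[i, t] for i in districts for t in years}

beta_hat = two_way_within_slope(y, x)
print(round(beta_hat, 4))  # -0.06
```

Because the synthetic outcome contains no noise, the within estimator recovers the slope built into the data; with real data the estimate would of course carry sampling error, and the trend terms would need to be added as further regressors.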

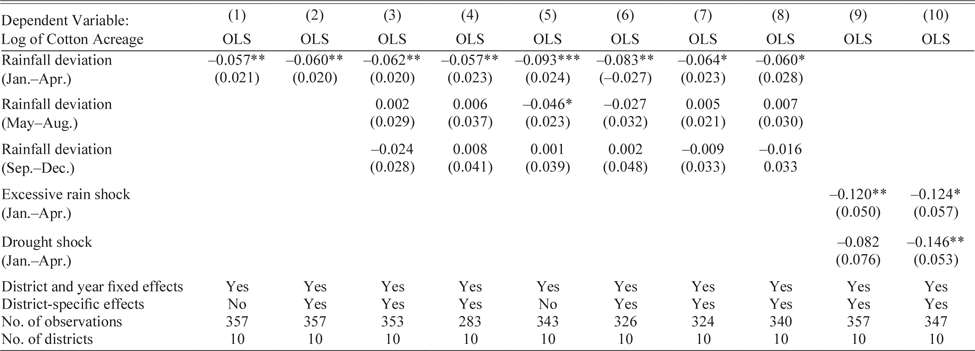

The results are reported in Table 3. Column (1) shows the results without district-specific time trends, which are added in Column (2). In Column (3), I add the rainfall from May to August (the cotton-planting season) and September to December (the cotton-growing season) as controls. In Column (4), I remove the years 1939 to 1945, as war conditions affected both cotton cultivation and data-reporting quality. Footnote 12 The results are significant and stable in each of these specifications, with a one standard deviation change in first-season rainfall reducing the cotton acreage by approximately 6.0 percent. Over the 38 years, cotton acreages potentially followed district-specific non-linear time trends or had structural breaks (e.g., unobserved changes in acreage measuring practices related to the preferences of a specific administrator or agricultural officer). To ascertain that the results are not driven by any trends and breaks in the time series that may correlate with rainfall, I replace the log cotton acreage (a level variable) with the log cotton acreage minus the log cotton acreage of the previous year in Column (5), and relative to the median of the previous three years in Column (6) (both change variables). The results hold up to this change of dependent variable.
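The two change variables used in Columns (5) and (6) can be sketched as follows. The acreage series is hypothetical, the function name is my own, and the reading of Column (6) as the log acreage minus the log of the median of the three preceding years is an assumption based on the description above.

```python
from math import log
from statistics import median

def change_variables(acres):
    """acres: annual cotton acreages for one district, in chronological order.

    Returns two lists:
      growth    - log acreage minus log acreage of the previous year
      vs_median - log acreage minus log of the median of the previous 3 years
    """
    growth = [log(acres[t]) - log(acres[t - 1]) for t in range(1, len(acres))]
    vs_median = [log(acres[t]) - log(median(acres[t - 3:t]))
                 for t in range(3, len(acres))]
    return growth, vs_median

# Hypothetical acreage series for a single district.
acres = [100.0, 110.0, 121.0, 115.0, 130.0]
growth, vs_median = change_variables(acres)
print(growth, vs_median)
```

Both transformations difference out level shifts in the series, which is why they are useful as a check against structural breaks in measurement practices; the median-based variant is less sensitive to a single anomalous preceding year.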

Table 3 EFFECT OF FIRST-SEASON ABSOLUTE RAINFALL DEVIATION ON SECOND-SEASON COTTON ACREAGE, 1925–1962

Notes: Robust standard errors in parentheses.

*** p<0.01, ** p<0.05, * p<0.1

Source: Cotton acreages from Uganda Protectorate, Annual Reports of Agriculture for the Year [1926 to 1962]. Rainfall, see Online Appendix A.3.3.

In Column (7), I take the baseline specification with log cotton acreage but exclude outliers of cotton acreage growth or decline relative to the previous year (over two standard deviations). In Column (8), I exclude outliers with extreme rainfall (over two standard deviations). Reassuringly, the effect is not driven by extreme spikes of the dependent and independent variables. Finally, to establish whether there was a heterogeneous impact of excessively dry versus wet conditions, Column (9) shows the result using shock dummies, taking 1.25 standard deviations as the cut-off point. Both types of shocks are, on average, associated with a smaller cotton acreage, but only significantly so for the positive (excessive rainfall) shocks. Column (10) replicates this shock-specification using log cotton growth as the dependent variable (as in Column (5)).Footnote 13 The coefficient for positive shocks remains similar, and negative shocks also have a significant effect in this specification. All results are robust to correcting standard errors for the small number of clusters using the “wild bootstrap” procedure (Cameron, Gelbach, and Miller 2008).Footnote 14 A range of falsification exercises with rainfall lags, leads, annual rainfall, and linear (non-absolute) rainfall effects does not yield significant associations, as we should expect.Footnote 15
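The shock-dummy coding of Column (9) can be illustrated with a minimal sketch. It assumes that the dummies are built from the signed (not absolute) z-score of first-season rainfall, with the 1.25 standard deviation cut-off mentioned above; the function name is hypothetical.

```python
def shock_dummies(z, cutoff=1.25):
    """Return (positive_shock, negative_shock) as 0/1 indicators.

    z: signed z-score of January-to-April rainfall for a district-year.
    A positive shock is excessive rainfall, a negative shock a dry season.
    """
    return int(z > cutoff), int(z < -cutoff)

print(shock_dummies(1.5))   # (1, 0)
print(shock_dummies(-1.3))  # (0, 1)
print(shock_dummies(0.4))   # (0, 0)
```

Separating the two indicators allows the wet and dry coefficients to differ, which is what permits the comparison of positive and negative shocks discussed above.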

REPERCUSSIONS OF SEASONALITY IN FWA VERSUS UGANDA

We have seen that it is plausible that differences in seasonality set apart the cotton-growing capacity of farmers in FWA and Uganda; however, to come to a closer understanding of the actual impact of seasonality on divergent cotton outcomes, we must consider a range of alternative explanations and contextual dynamics. I draw from the literature to identify the most important such explanations and dynamics and discuss how they interacted with the constraints that seasonality imposed on agriculture.

Colonial Investment

We have seen that railroads unlocked both Uganda and FWA in the early twentieth century; however, we should also consider more specific infrastructural investments that may have provided Ugandan farmers with better opportunities to market their cotton. Crucial in that respect were ginneries, where the fiber (lint) was separated from the seed, reducing the weight by about two-thirds. The first ginneries in the Soudan and Uganda were erected in 1904 (Roberts Reference Roberts1996, pp. 81–2; Ehrlich Reference Ehrlich1958, p. 69). Subsequently, ginneries mushroomed across Uganda, especially as South Asians entered the market from the early 1910s onwards. By 1926, there were 177 mechanized ginneries in Uganda, compared with only 12 in the Soudan (Ehrlich Reference Ehrlich1958, p. 176; Roberts Reference Roberts1996, p. 170).

A countryside dotted with ginneries came to strongly benefit Uganda’s farmers, who no longer had to head-load their cotton to faraway markets and saw farm gate prices improve as transaction costs declined and competition increased (Nayenga Reference Nayenga1981, pp. 188–9). The proliferation of ginneries was primarily a consequence rather than a cause of abundant cotton output, however. Indeed, Uganda’s cotton take-off preceded the ginnery take-off. In 1914, when Uganda’s cotton production already far exceeded Ivorian and Soudanese levels (Figure 1), there were only 20 ginneries, most of them concentrated on the coast of Lake Victoria. In the Eastern Province, where about half of Uganda’s cotton was cultivated, transporting the harvest to processing facilities still required a half-million porter loads annually (Ehrlich Reference Ehrlich1958, pp. 90–2).

Meanwhile, why did investment in FWA’s ginnery infrastructure remain so limited? Tadei (Reference Tadei2020) has argued that the French pursued a policy of extraction rather than investment in their African territories, letting a small number of French trading companies control export trade and suppress export prices to reap large trade margins, which could, in turn, be taxed. He found that the resultant gaps between local and world market prices were “particularly large for cotton” compared with other crops and the largest in the Soudan among the French African colonies (Tadei Reference Tadei2020, p. 8). A direct comparison indeed shows that annual average cotton export prices from French Africa were 27 percent lower than from Uganda between 1920 and 1945 (Frankema, Williamson, and Woltjer 2018; Tadei Reference Tadei2020).

Does extractive policy, rather than seasonality, explain the persistently low cotton output from FWA? To answer this question, we must first consider why the trading companies and the French colonial state would opt for a policy of overtaxing farmers and suppressing producer prices, which we should expect to disincentivize farmers to grow cotton for export, leaving little output to tax and export to the metropole. A consideration of seasonality constraints on agriculture helps us make sense of this paradoxical and apparently self-undermining policy. Seasonality limited farmers’ maximum feasible cotton output, muting the price elasticity of supply. Given that output was capped at a low level, more extractive taxation (combined with coerced cultivation to counteract unfavorable producer prices, as argued later) becomes the optimal revenue-maximizing strategy.Footnote 16

Colonial Coercion

Coercion could either have drawn labor away from or towards cotton production. We should thus consider if Uganda’s cotton take-off can be attributed to forced labor policies that were more geared towards cotton production than in FWA. The coercive policies in FWA and Uganda did not reach the degree of compulsion that characterized the most coercive cotton regimes of French Central Africa, the Belgian Congo, or Portuguese Mozambique (Likaka Reference Likaka1997; Isaacman Reference Isaacman1996; Kassambara Reference Kassambara2010); still, in both contexts, colonial officials applied informal pressure and formal compulsion to increase cotton output. Local agents, including African chiefs whose tenure often depended on their ability to spur cotton output, were used to enforce acreage or output requirements. In Uganda, such policies were progressively removed before 1930, whereas in Côte d’Ivoire and the Soudan, they were intensified (Bassett Reference Bassett2001, pp. 61–2, 77, 197fn23; Ehrlich Reference Ehrlich1958, pp. 79, 88; Nayenga Reference Nayenga1981; Roberts Reference Roberts1996, pp. 221–46, 1996, pp. 92, 98, 124; Robins Reference Robins2016, p. 120; Vail Reference Vail1972, p. 63; Wrigley Reference Wrigley1959, p. 16). In an attempt to generate more output, the Ivorian authorities required 0.1 hectares in 1918 per household, which was doubled in 1925 (Bassett Reference Bassett2001, pp. 58, 66). It is telling that this imposed acreage was consistent with seasonality constraints on agriculture. As shown in the earlier simulation, 0.2 hectares fell within farmers’ agricultural production possibilities and was substantially below what Ugandan cotton farmers were cultivating (Table 2). Coercion, in other words, served to extract a minimal amount of cotton from local producers but not beyond the farmers’ constrained production capacity.

Colonial authorities also pursued various forms of non-agricultural labor compulsion, which were largely abolished in Uganda during the 1920s, while in FWA they persisted into the late 1940s (Powesland Reference Powesland1957, pp. 13–34; Roberts Reference Roberts1996, pp. 246–8; Van Waijenburg Reference Van Waijenburg2018). Still, it is implausible that sustained French labor requisition undermined cotton output. First, no direct substitution effect between forced labor and cotton output need have existed, as long as labor was not requisitioned during June to September, the months of peak labor demand (Figure 5).Footnote 17 Second, forced labor was often used for infrastructural development, which should eventually, as it did in Uganda, have benefited cotton exports, obviating the need for labor-intensive porterage to distant processing facilities and reducing transportation costs (Bassett Reference Bassett2001, p. 64; Ehrlich Reference Ehrlich1958, pp. 91–2; Roberts Reference Roberts1996, p. 228). Third, Uganda saw intense off-farm labor requisition during the 1900s and 1910s when cotton was already expanding rapidly (Nayenga Reference Nayenga1981; Powesland Reference Powesland1957). Indeed, it is more plausible that the causality between cotton output and labor coercion ran the other way: as the French colonizers failed to establish a successful agricultural export sector in the seasonality-constrained interior savanna, they remained dependent on the more costly alternative of labor taxes to capitalize on their colonial assets and raise revenues (Van Waijenburg Reference Van Waijenburg2018).

Domestic Textile Production

Arguably the most powerful alternative explanation for divergent outcomes in colonial FWA and Uganda revolves around the domestic cotton handicraft industry. In Uganda, before the export take-off, cotton was grown and used only on a very small scale “to manufacture small articles of clothing and adornment” (Nye and Hosking Reference Nye, Hosking and Tothill1940, p. 183; cf. Nayenga Reference Nayenga1981, p. 178). Clothing was more typically made from tree bark or animal hides, while cotton textiles were imported. This situation persisted throughout the period studied, aside from a small share of Ugandan cotton, which was taken up by a local mechanized textile factory during the 1950s and 1960s (De Haas Reference De Haas2017b, p. 610). In the Soudan and Côte d’Ivoire, by contrast, domestic textile handicraft production was firmly established and continued to thrive deep into the twentieth century, despite competition from imports (Bassett Reference Bassett2001, p. 84; Roberts Reference Roberts1987, Reference Roberts1996, pp. 274–8).Footnote 18

Roberts (Reference Roberts1996, p. 22) posits that “the failure of colonial cotton development in the French Soudan is directly attributable to the persistence of the precolonial handicraft textile industry.” He claims that “the Soudan produced vast amounts of cotton,” which the export industry was unable to capture (Roberts Reference Roberts1996, p. 278). Upon close inspection, however, this argument is unconvincing and overlooks the crucial role of seasonality constraints, even in light of Roberts’ own evidence. In pre-colonial West Africa, cotton cultivation was indeed widespread, but individual farmers grew small quantities, moreover saving on labor by intercropping with food and cultivating hardy and often perennial varieties (Bassett Reference Bassett2001, p. 57). That aggregate raw cotton production continued to be limited during the colonial period is not only plausible in light of the seasonality constraints I have demonstrated, but is also confirmed by archival evidence. In 1925–1926, only 34 percent of all cotton ginned in seven districts of the Soudan (making up 64 percent of all ginned cotton in the territory) was consumed by local industry (Roberts Reference Roberts1996, pp. 206–7). Between 1924 and 1938, approximately 37 percent of Côte d’Ivoire’s cotton output was marketed locally (Bassett Reference Bassett2001, pp. 65–6). Some cotton was ginned manually (an extremely labor-intensive process) and thus absent from ginnery statistics (Roberts Reference Roberts1996, p. 206). Therefore, let us conservatively triple the output statistics reported in Figure 2 to account for an unrecorded domestic economy. Even when applying such large mark-ups, cotton production in Côte d’Ivoire and the Soudan remains unimpressive: 9.7 and 8.6 percent of Uganda’s output respectively, for all years in which production statistics are available for all three territories up to 1960.

The characteristics of West Africa’s handicraft sector itself are also a testament to the scarcity of its key input. African textile manufacturers were able to convert raw cotton of irregular quality into cloth of high durability. This was a labor-intensive process, reliant on the mobilization of labor outside the agricultural season (Austin 2008, pp. 603–4); thus, the profit margins of domestic handicraft producers were determined less by cheap and abundant raw material input and more by the low dry-season opportunity costs of spinners and weavers.Footnote 19 While local textile production was important for local livelihoods and trade, its reliance on labor-intensive, input-saving production techniques, as well as its limited scale, suggests that it would not have absorbed more than a fraction of cotton output if production had taken off. Colonial officials themselves were very aware of the limited capacity of local textile producers to absorb large amounts of cotton (Bassett 2001, pp. 51–80; Roberts 1996, pp. 260–3, 280). Rather than being outcompeted by local merchants over “vast amounts” of cotton, they were frustrated to see resilient local producers, whom metropolitan guidelines did not allow them to suppress (Roberts 1996, pp. 223–6), absorb whatever marginal increases of output were achieved from very low initial levels.

Commercial Food Crop Cultivation

Farmers in FWA may have had more opportunities to market food crops than their Ugandan counterparts, dissuading them from adopting cotton. It is worth comparing the market prices of food crops and cotton in FWA and Uganda. Unfortunately, farmgate prices for millet are not available, but millet retail price series for Dakar, Senegal (Westland 2021), and Nairobi, Kenya (Frankema and Van Waijenburg 2012),Footnote 20 give at least a rough indication of relative food price levels in major railroad-connected consumer markets in both regions. The average annual millet prices in Dakar were 23 percent higher than in Nairobi during the interwar years and 74 percent higher during World War II. I cannot ascertain whether this price gap translated into equally large farmgate price gaps. Still, even if millet prices were somewhat more favorable in FWA, the food trade was nowhere near large enough to explain why farmers generated so few cotton exports compared with their Ugandan counterparts. In fact, in the late 1940s, the share of the total food crop area that was allocated to production for the market rather than self-provision was comparably small in FWA (c. 11 percent) and Uganda (c. 14 percent) (United Nations 1954, pp. 11–3).Footnote 21 Millet was not exported on any substantial scale from either FWA or Uganda. Local market demand was also limited and mostly confined to areas around towns and along railroads and waterways (Roberts 1996, pp. 99, 165, 264; van Beusekom 2002, p. 22; Mukwaya 1962; Wrigley 1959, p. 67). Rates of urbanization were low in both cases, with only 1 percent of Uganda’s population living in cities in 1950 and 4 percent each in Côte d’Ivoire and Mali.Footnote 22 Indeed, slow urban growth was plausibly reinforced by seasonality-constrained agricultural production possibilities, as well as poor infrastructural links between city and countryside. It is telling that Senegal, which became one of the very few regions in colonial Africa where cash-crop specialization came at the expense of food self-sufficiency, imported most of its calories (rice) from Indochina, rather than from the neighboring Soudan (van Beusekom 2002, pp. 1–32).

Wage Labor and Migration

Finally, we should consider the possibility that farmers in FWA were dissuaded from growing cotton by more profitable off-farm wage labor opportunities. We can compare nominal (pound sterling–converted Footnote 23) unskilled wage rates in the cotton-growing zones of Uganda (De Haas 2019), Côte d’Ivoire and the Soudan (Van Waijenburg 2018), which can proxy for their relative purchasing power in terms of imported goods. Wages in Buganda exceeded those in Côte d’Ivoire and Mali during the 1920s, while the picture was reversed during the 1930s.Footnote 24 Nominal wages in Côte d’Ivoire and Mali only began to substantially exceed those in Uganda after 1945, as the new CFA franc appreciated relative to the pound sterling. In short, wages in the cotton zones of FWA were not consistently higher than in Uganda.

We should also consider wage levels in labor migration destination areas, especially since migration from the interior of FWA to coastal Senegal, Gambia, and the forest zone of the Gold Coast had deep roots and was of substantial magnitude (Dougnon 2007; Manchuelle 1997). In Uganda’s cotton-growing zones, the picture was mixed; Buganda was a major destination for labor migrants (De Haas 2019), while some districts in northern Uganda saw substantial emigration (Powesland 1957). We can compare wages in Côte d’Ivoire and the Soudan to Senegal (Westland 2021), the Gambia, and the Gold Coast (Frankema and Van Waijenburg 2012). Between 1903 and 1939, wages in these migrant destinations were, on average, 2.5 times higher than those paid in Côte d’Ivoire and the Soudan, a gap that subsequently closed rapidly.Footnote 25 Thus, until at least 1940, labor migration was a lucrative alternative to cultivating cotton in FWA.Footnote 26 Since Buganda was East Africa’s main destination of voluntary migrants, Uganda’s cotton growers did not have a similar migratory “exit option.”

Still, it is unlikely that the attraction of off-farm labor provides the main explanation for differential cotton adoption outcomes in the two regions. First, Soudanese and Ivorian migrants going to the Gold Coast (the most important migrant destination) benefited from complementary seasonality at both ends. Migration trips could be timed so that they would not coincide with the agricultural peak season in the sending areas. Such “dry season migration” competed primarily not with agricultural activities but with non-agricultural activities, including textile production (Johnson 1978, pp. 266–7).Footnote 27 Second, there was no consistent correlation between cotton output and wage labor opportunities. For example, as wages plummeted during the 1930s, Uganda’s cotton production peaked. In Côte d’Ivoire, production slightly increased (from a very low base), while in the Soudan, exports plummeted.Footnote 28 Overall, while Ugandan farmers responded to declining opportunities outside agriculture by expanding their cotton production, farmers in FWA did not, consistent with their constrained agricultural production capacity.

Alongside differences in cotton production capacity, it is worth noting that smoother seasonal labor requirements enabled Ugandan households to deploy more productive labor in agriculture in total than their Ivorian/Soudanese counterparts. If we consider the optimized simulation presented earlier and assume that adults sought to work a total of 312 days a year, we find that Ugandan households allocated 92 percent of their labor capacity to agriculture, compared with only 62 percent in FWA, owing to much more pronounced seasonal agricultural underemployment in the latter. We should thus expect rural households in FWA to rely to a greater extent on non-agricultural income sources in the agricultural off-season. Plausibly, then, emigration was a consequence as well as a cause of constraints imposed by seasonality on cash-crop adoption. A further strong indication that migration itself did not suppress cotton adoption is that cotton finally began to take off in northern Côte d’Ivoire at precisely the time when migration to the southern cocoa plantations intensified (Bassett 2001, pp. 96–7). It is this belated “cotton revolution” in FWA to which I now turn.

OVERCOMING SEASONALITY IN FRENCH WEST AFRICA

Strikingly, FWA realized a major cotton take-off in the post-colonial period (Bassett 2001), which shows that the constraining role of agricultural seasonality was not immutable. I identify two contextual factors that explain why seasonality was a major constraint in the colonial period and not afterward. First, specialization was inhibited by poorly developed markets for food, the causes of which I have already discussed. With rapidly rising urbanization and economic diversification after independence, food marketing became more lucrative. Second, agricultural output was constrained by the absence of yield-enhancing and labor-saving technological breakthroughs during the colonial period, which were achieved after independence.

During the colonial period, agricultural innovations such as new crop varieties (Arnold 1970, pp. 155–64; Dawe 1993, pp. 149–59; Roberts 1996, pp. 224, 253–4) or the plow (Tosh 1978, p. 435; Roberts 1996, pp. 147, 176–7; Vail 1972, p. 71) were haphazardly developed and had marginal impacts on labor productivity at best. In light of these persistent constraints, the cotton take-off in post-colonial FWA (Figure 2) is truly remarkable. How were farmers suddenly able to overcome the seasonality bottleneck that had prevented cotton adoption and frustrated colonial officials for over half a century? The answer to this question largely resides in persistent research and extension efforts by the French former colonizers in collaboration with post-colonial governments (Lele, van de Walle, and Gbetibouo 1989). Even though they were unable to realize their ambitions, some colonial officials had understood early on that only by transforming labor productivity through higher yields and more efficient farming practices could cotton achieve the desired success (Roberts 1996, p. 168). From the 1960s onwards, in the context of a global “Green Revolution,” renewed concerted efforts to generate export cotton finally produced a well-rounded package of new technologies and inputs that increased crop yields and reduced labor requirements per hectare. That farmers in FWA proved willing and able to adopt these technologies testifies to their suitability in a context of seasonally labor-constrained savanna agriculture (Bassett 2001; Benjaminson 2001; Bosc and Hanak Freud 1995; Lele, van de Walle, and Gbetibouo 1989).

As a result of the introduction of improved varieties and the application of mineral fertilizers, grain yields in Côte d’Ivoire and Mali rose from an initial 600–800 kg/hectare to over 1,000 kg/hectare for millet and sorghum, and over 1,700 kg/hectare for maize by the early 1980s (Benjaminson 2001, p. 264), before stagnating at this higher level. Seed cotton yield gains over the same period were even more impressive, rising approximately fourfold from c. 300 kg/hectare to 1,200 kg/hectare (Bassett 2001, p. 186; Fok et al. 2000, p. 14).Footnote 29 Various innovations were adopted to a greater or lesser extent across FWA, substantially increasing labor productivity (Bosc and Hanak Freud 1995).

We can re-run the same simulation introduced earlier for FWA but now based on the higher labor productivity in the post-colonial era. I take into account that food crop production shifted from millet towards maize, which has a similar caloric value per kg but higher yields (Bassett 2001, pp. 127–9; Bosc and Hanak Freud 1995, p. 290). I conservatively estimate that food crop yields doubled and that labor demands per hectare in both food crop and cotton cultivation halved. Under these new conditions, farmers were able to cultivate 6.5 hectares of cotton, more than a sixfold increase compared to the colonial era. Cotton yields per hectare also increased fourfold. As a consequence, smallholders’ cotton production possibilities had risen over 26-fold.Footnote 30 This extension of agricultural production possibilities is reflected in the massive expansion of cotton output that was achieved over this period (Figure 2), while a further part of the increased production possibilities was allocated to surplus grain production (Bingen 1998, p. 271; Benjaminson 2001, p. 264).
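The 26-fold figure follows from multiplying the expansion in cultivable cotton area by the gain in yield per hectare. The sketch below is only an illustration of that arithmetic, not the simulation itself. The function name is hypothetical, and the area factor of 6.5 is an assumption that treats the colonial-era cotton area as roughly one hectare (the text states only that the increase was “more than sixfold”).

```python
def production_possibility_multiplier(area_factor: float, yield_factor: float) -> float:
    """Output equals area times yield, so proportional changes multiply."""
    return area_factor * yield_factor


# Assumption: 6.5 hectares against a colonial-era baseline of about 1 hectare.
AREA_FACTOR = 6.5
# Fourfold increase in seed cotton yield per hectare, as stated in the text.
YIELD_FACTOR = 4.0

multiplier = production_possibility_multiplier(AREA_FACTOR, YIELD_FACTOR)
print(f"Cotton production possibilities rose roughly {multiplier:.0f}-fold")
```

If the colonial-era cotton area was slightly below one hectare, the area factor exceeds 6.5 and the product exceeds 26, which is consistent with the “over 26-fold” wording.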

CONCLUSION

Colonial efforts to spur agricultural exports from Africa, and cotton in particular, produced unanticipated and uneven results. To understand such heterogeneous outcomes, we need to look beyond the dynamics of colonial coercion and investment, and instead consider how resource constraints informed African farmers’ adoption decisions and often thwarted colonial designs. I have shown that African savanna farmers facing short rainy seasons were unable, at the prevailing levels of technology and market access, to combine a substantial involvement in cotton production with sustained food security. In the context of short rainy seasons, farmers did not have enough labor at their disposal in the agricultural peak season and could not assess their food security before committing resources to an inedible cash crop. Colonial states, despite persistent attempts to generate cotton output, were unable to mitigate this bottleneck. As a result, cotton was far more widely grown in regions with comparatively long rainy seasons, most notably Uganda. Only after independence did the introduction of labor-saving technologies in the former French territories of West Africa enable widespread and large-scale cotton adoption among the region’s savanna farmers.

Some of the dynamics studied for cotton will also be relevant to other savanna cash crops in Africa. Still, savanna farmers in northern Nigeria, Senegal, and the Gambia proved able to produce large quantities of groundnuts for export. Although these cases can only be addressed briefly here, it is important to note that their success relied on very specific conditions, which were not (yet) present in other parts of colonial Africa: large-scale rice imports in the Gambia and Senegal (Swindell and Jeng 2006; van Beusekom 2002) and agricultural intensification and fine-grained rural trade networks in northern Nigeria, linked to the region’s historically high population densities and pre-colonial processes of state formation (Hill 1977; Hogendorn 1978). Emerging conditions in these areas, then, foreshadowed a much wider breaking of resource bottlenecks in savanna agriculture, which colonial states, despite their efforts to export cotton from Africa, proved unable to bring about, but which eventually occurred after independence in FWA and beyond.