Iodine

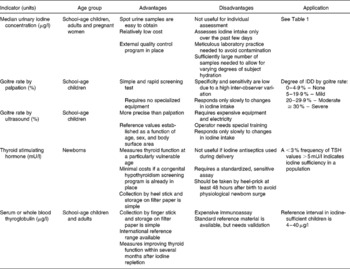

Four methods are generally recommended for assessment of iodine nutrition in populations: urinary iodine concentration (UI), the goitre rate, serum thyroid stimulating hormone (TSH), and serum thyroglobulin (Tg) (Tables 1 and 2). These indicators are complementary, in that UI is a sensitive indicator of recent iodine intake (days) and Tg shows an intermediate response (weeks to months), whereas changes in the goitre rate reflect long-term iodine nutrition (months to years).

Table 1 Epidemiological criteria for assessing iodine nutrition in a population based on median and/or range of urinary iodine concentrations (WHO/UNICEF/ICCIDD, 2007)

* The term ‘excessive’ means in excess of the amount required to prevent and control iodine deficiency.

† In lactating women, the figures for median urinary iodine are lower than the iodine requirements because of the iodine excreted in breast milk.

Table 2 Indicators of iodine status at population level (WHO/UNICEF/ICCIDD, 2007)

Thyroid size

Two methods are available for measuring goitre: neck inspection and palpation, and thyroid ultrasonography. By palpation, a thyroid is considered goitrous when each lateral lobe has a volume greater than the terminal phalanx of the thumbs of the subject being examined. In the classification system of WHO(1), grade 0 is defined as a thyroid that is not palpable or visible, grade 1 is a goitre that is palpable but not visible when the neck is in the normal position (i.e., the thyroid is not visibly enlarged), and grade 2 goitre is a thyroid that is clearly visible when the neck is in a normal position. Goitre surveys are usually done in school-age children.

However, palpation of goitre in areas of mild iodine deficiency has poor sensitivity and specificity; in such areas, measurement of thyroid volume (Tvol) by ultrasound is preferable(Reference Zimmermann, Saad, Hess, Torresani and Chaouki2). Thyroid ultrasound is non-invasive, quickly done (2–3 min per subject) and feasible even in remote areas using portable equipment. However, interpretation of Tvol data requires valid references from iodine-sufficient children. In a recent multicentre study, Tvol was measured in 6–12-year-old children (n = 3529) living in areas of long-term iodine sufficiency on five continents. Age- and body surface area-specific 97th percentiles for Tvol were calculated for boys and girls(Reference Zimmermann, Hess and Molinari3). Goitre can be classified according to these international reference criteria, but they are only applicable if Tvol is determined by a standard method(Reference Zimmermann, Hess and Molinari3, Reference Brunn, Block, Ruf, Bos, Kunze and Scriba4). Thyroid ultrasound is subjective and requires judgement and experience. Differences in technique can produce interobserver errors in Tvol as high as 26 %(Reference Zimmermann, Molinari, Spehl, Weidinger-Toth, Podoba, Hess and Delange5).

In areas of endemic goitre, although thyroid size predictably decreases in response to increases in iodine intake, thyroid size may not return to normal for months or years after correction of iodine deficiency(Reference Aghini-Lombardi, Antonangeli, Pinchera, Leoli, Rago, Bartolomei and Vitti6, Reference Zimmermann, Hess, Adou, Toresanni, Wegmuller and Hurrell7). During this transition period, the goitre rate is difficult to interpret, because it reflects both a population's history of iodine nutrition and its present status. Aghini-Lombardi et al. (Reference Aghini-Lombardi, Antonangeli, Pinchera, Leoli, Rago, Bartolomei and Vitti6) suggested that enlarged thyroids in children who were iodine deficient during the first years of life may not regress completely after introduction of salt iodization. If true, achieving a goitre rate < 5 % in children may require that they grow up under conditions of iodine sufficiency. A sustained salt iodization program will decrease the goitre rate by ultrasound to < 5 % in school-age children, and this indicates disappearance of iodine deficiency as a significant public health problem(1). WHO recommends the total goitre rate be used to define severity of iodine deficiency in populations using the following criteria: < 5 %, iodine sufficiency; 5·0 %–19·9 %, mild deficiency; 20·0 %–29·9 %, moderate deficiency; and ≥ 30 %, severe deficiency(1) (Table 2).
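The WHO total goitre rate criteria quoted above can be expressed as a small lookup. The following is a minimal Python sketch, not part of the WHO guidance itself; the function name is hypothetical, and it assumes that a rate of exactly 30 % falls in the severe category.

```python
def classify_total_goitre_rate(tgr_percent: float) -> str:
    """Map a total goitre rate (%) to the WHO severity category quoted in the text."""
    if not 0.0 <= tgr_percent <= 100.0:
        raise ValueError("total goitre rate must be a percentage between 0 and 100")
    if tgr_percent < 5.0:
        return "iodine sufficiency"
    if tgr_percent < 20.0:
        return "mild deficiency"
    if tgr_percent < 30.0:
        return "moderate deficiency"
    # Assumption: a rate of exactly 30 % is counted as severe.
    return "severe deficiency"


if __name__ == "__main__":
    # Illustrative rates only, not survey data.
    for rate in (3.2, 12.5, 24.0, 41.0):
        print(f"{rate:5.1f} % -> {classify_total_goitre_rate(rate)}")
```

As the paragraph above notes, a category obtained this way may reflect past rather than present iodine nutrition during the transition period after salt iodization.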

Urinary iodine concentration

Because >90 % of ingested iodine is excreted in the urine, UI is an excellent indicator of recent iodine intake. UI can be expressed as a concentration (μg/l), in relationship to creatinine excretion (μg iodine/g creatinine), or as 24-hour excretion (μg/day). For populations, because it is impractical to collect 24-hour samples in field studies, UI can be measured in spot urine specimens from a representative sample of the target group, and expressed as the median, in μg/l(1). Variations in hydration among individuals generally even out in a large number of samples, so that the median UI in spot samples correlates well with that from 24-hour samples. For national, school-based surveys of iodine nutrition, the median UI from a representative sample of spot urine collections from ≈ 1200 children (30 sampling clusters × 40 children per cluster) can be used to classify a population's iodine status(1) (Table 1).

However, the median UI is often misinterpreted. Individual iodine intakes, and, therefore, spot UI concentrations are highly variable from day to day(Reference Andersen, Karmisholt, Pedersen and Laurberg8), and a common mistake is to assume that all subjects with a spot UI < 100 μg/l are iodine deficient. To estimate iodine intakes in individuals, 24-hour collections are preferable, but difficult to obtain. An alternative is to use the age- and sex-adjusted iodine:creatinine ratio in adults, but this also has limitations(Reference Knudsen, Christiansen, Brandt-Christensen, Nygaard and Perrild9). Creatinine may be unreliable for estimating daily iodine excretion from spot samples, especially in malnourished subjects where creatinine concentration is low. Daily iodine intake for population estimates can be extrapolated from UI, using estimates of mean 24-hour urine volume and assuming an average iodine bioavailability of 92 %, using the formula: Urinary iodine (μg/l) × 0·0235 × body weight (kg) = daily iodine intake(10). Using this formula, a median UI of 100 μg/l in an adult corresponds roughly to an average daily intake of 150 μg.
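The extrapolation formula above is easy to check numerically. The Python sketch below applies it as written; the function name is hypothetical, and the 64 kg body weight is an assumption chosen only because it reproduces the roughly 150 μg/day figure quoted in the text.

```python
def estimated_daily_iodine_intake_ug(median_ui_ug_per_l: float,
                                     body_weight_kg: float) -> float:
    """Daily iodine intake (ug/day) = UI (ug/l) x 0.0235 x body weight (kg).

    Intended for population estimates from a median UI, not for
    diagnosing individuals from a single spot sample.
    """
    if median_ui_ug_per_l < 0 or body_weight_kg <= 0:
        raise ValueError("UI must be non-negative and body weight positive")
    return median_ui_ug_per_l * 0.0235 * body_weight_kg


if __name__ == "__main__":
    # Assumed adult body weight of 64 kg (illustrative, not from the source).
    intake = estimated_daily_iodine_intake_ug(100.0, 64.0)
    print(f"Estimated intake: {intake:.1f} ug/day")
```

Because the result scales linearly with body weight, the same median UI implies a lower daily intake in children than in adults.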

Thyroid stimulating hormone

Because serum thyroid stimulating hormone (TSH) is determined mainly by the level of circulating thyroid hormone, which in turn reflects iodine intake, TSH can be used as an indicator of iodine nutrition. However, in older children and adults, although serum TSH may be slightly increased by iodine deficiency, values often remain within the normal range. TSH is therefore a relatively insensitive indicator of iodine nutrition in adults(1). In contrast, TSH is a sensitive indicator of iodine status in the newborn period(Reference Delange11, Reference Zimmermann, Aeberli, Torresani and Burgi12). Compared to the adult, the newborn thyroid contains less iodine but has higher rates of iodine turnover. Particularly when iodine supply is low, maintaining high iodine turnover requires increased TSH stimulation. Serum TSH concentrations are therefore increased in iodine deficient infants for the first few weeks of life, a condition termed transient newborn hypothyroidism. In areas of iodine deficiency, an increase in transient newborn hypothyroidism, indicated by >3 % of newborn TSH values above the threshold of 5 mU/l whole blood collected 3 to 4 days after birth, suggests iodine deficiency in the population(Reference Zimmermann, Aeberli, Torresani and Burgi12). TSH is used in many countries for routine newborn screening to detect congenital hypothyroidism. If already in place, such screening offers a sensitive indicator of iodine nutrition. Newborn TSH is an important measure because it reflects iodine status during a period when the developing brain is particularly sensitive to iodine deficiency.
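The newborn screening criterion described above (more than 3 % of values above 5 mU/l whole blood) can be applied to a list of screening results as follows. This is a minimal Python sketch under stated assumptions: the function name and the example values are hypothetical, and all samples are assumed to be whole-blood TSH collected 3 to 4 days after birth.

```python
from typing import Sequence, Tuple


def newborn_tsh_indicator(tsh_mu_per_l: Sequence[float],
                          threshold_mu_per_l: float = 5.0,
                          max_fraction_above: float = 0.03) -> Tuple[float, bool]:
    """Return (fraction of values above threshold, whether that fraction exceeds 3 %)."""
    if not tsh_mu_per_l:
        raise ValueError("at least one TSH value is required")
    n_above = sum(1 for value in tsh_mu_per_l if value > threshold_mu_per_l)
    fraction = n_above / len(tsh_mu_per_l)
    return fraction, fraction > max_fraction_above


if __name__ == "__main__":
    # Made-up screening results: 96 values below and 4 above the threshold.
    values = [2.0] * 96 + [6.5] * 4
    fraction, suggests_deficiency = newborn_tsh_indicator(values)
    print(f"{fraction:.1%} above threshold; suggests iodine deficiency: {suggests_deficiency}")
```

The flag describes the screened population as a whole; it says nothing about whether any individual newborn is iodine deficient.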

Thyroglobulin

Thyroglobulin (Tg) is synthesized only in the thyroid, and is the most abundant intrathyroidal protein. In iodine sufficiency, small amounts of Tg are secreted into the circulation, and serum Tg is normally < 10 μg/l(Reference Spencer and Wang13). In areas of endemic goitre, serum Tg increases due to greater thyroid cell mass and TSH stimulation. Serum Tg is well correlated with the severity of iodine deficiency as measured by UI(Reference Knudsen, Bulow, Jorgensen, Perrild, Ovesen and Laurberg14). Intervention studies examining the potential of Tg as an indicator of response to iodized oil and potassium iodide have shown that Tg falls rapidly with iodine repletion, and that Tg is a more sensitive indicator of iodine repletion than TSH or T4(Reference Benmiloud, Chaouki, Gutekunst, Teichert, Wood and Dunn15, Reference Missler, Gutekunst and Wood16). However, commercially available assays measure serum Tg, which requires venipuncture, centrifugation and frozen sample transport; these steps may be difficult in remote areas.

A new assay for Tg has been developed for dried blood spots taken by a finger prick(Reference Zimmermann, de Benoist and Corigliano17, Reference Zimmermann, Moretti, Chaouki and Torresani18), simplifying collection and transport. In prospective studies, dried blood spot Tg has been shown to be a sensitive measure of iodine status and reflects improved thyroid function within several months after iodine repletion(Reference Zimmermann, de Benoist and Corigliano17, Reference Zimmermann, Moretti, Chaouki and Torresani18). However, several questions need to be resolved before Tg can be widely adopted as an indicator of iodine status. One question is the need for concurrent measurement of anti-Tg antibodies to avoid potential underestimation of Tg; it is unclear how prevalent anti-Tg antibodies are in iodine deficiency, or whether they are precipitated by iodine prophylaxis(Reference Loviselli, Velluzzi and Mossa19, Reference Zimmermann, Moretti, Chaouki and Torresani20). Another limitation is large interassay variability and poor reproducibility, even with the use of standardization(Reference Spencer and Wang13). This has made it difficult to establish normal ranges and/or cutoffs to distinguish severity of iodine deficiency. However, an international reference range and a reference standard for dried blood spot (DBS) Tg in iodine-sufficient school children (4–40 μg/l) have recently been made available(Reference Zimmermann, de Benoist and Corigliano17) (Table 2).

Thyroid hormone concentrations

In contrast, thyroid hormone concentrations are poor indicators of iodine status. In iodine-deficient populations, serum T3 increases or remains unchanged, and serum T4 usually decreases. However, these changes are often within the normal range, and the overlap with iodine-sufficient populations is large enough to make thyroid hormone levels an insensitive measure of iodine nutrition(1).

Iron

Definitions of iron deficiency states

1 Iron deficiency (ID) is a reduction in body Fe to the extent that cellular storage Fe required for metabolic/physiological functions is fully exhausted, with or without anaemia.

2 Iron deficiency anaemia (IDA) is defined as ID and a low haemoglobin (Hb).

3 Iron-deficient erythropoiesis (IDE) is defined as laboratory evidence of a reduced supply of circulating Fe for erythropoiesis, indicated by either reduced Fe saturation of plasma transferrin or signs of ID in circulating erythrocytes. IDE is not synonymous with ID or IDA; IDE can occur despite normal or even increased storage Fe, due to impaired release of Fe to the plasma(Reference Cook21). IDE is often associated with malignancy or inflammation.

Bone-marrow biopsy

Bone-marrow examination to establish the absence of stainable iron remains the gold standard for the diagnosis of iron deficiency, particularly when performed and reviewed under standardized conditions by experienced investigators. However, marrow examinations are expensive, uncomfortable, and require technical expertise, and are not performed routinely in clinical practice.

Haemoglobin

Haemoglobin (Hb) is a widely used screening test for ID, but used alone it has low specificity and sensitivity. Its sensitivity is low because individuals with baseline Hb values in the upper range of normal need to lose 20–30 % of their body Fe before their Hb falls below the cut-off for anaemia(Reference Cook21). Its specificity is low because there are many causes of anaemia other than ID. Cut-off criteria differ with the age and sex of the individual (Table 3) and between laboratories, and there are ethnic differences in normal Hb(Reference Himes, Walker, Williams, Bennett and Grantham-McGregor22, Reference Johnson-Spear and Yip23).

Table 3 Haemoglobin and haematocrit levels by age and gender below which anaemia is present* (WHO, 2001)

* At altitudes < 1000 m.

Mean corpuscular volume and reticulocyte Hb content

Measured on widely available automated haematology analysers, the mean corpuscular volume (MCV) is a reliable but relatively late indicator of ID, and its differential diagnosis includes thalassemia. The reticulocyte Hb content (CHr) has been proposed as a sensitive indicator that falls within days of the onset of IDE(Reference Mast, Blinder, Lu, Flax and Dietzen24). However, false normal values can occur when the MCV is increased or in thalassemia, and its wide use is limited because it can only be measured on one model of analyser. For both MCV and CHr, low specificity limits their clinical utility(Reference Thomas and Thomas25).

Erythrocyte zinc protoporphyrin

Erythrocyte zinc protoporphyrin (ZnPP) increases in IDE because zinc replaces the missing Fe during formation of the protoporphyrin ring(Reference Metzgeroth, Adelberger, Dorn-Beineke, Kuhn, Schatz, Maywald, Bertsch, Wisser, Hehlmann and Hastka26). The ratio of ZnPP/haem can be measured directly on a drop of blood using a portable hematofluorometer. In adults, ZnPP has a high sensitivity in diagnosing iron deficiency(Reference Hastka, Lasserre, Schwarzbeck and Hehlmann27–Reference Wong, Qutishat, Lange, Gornet and Buja32). In infants and children, ZnPP may also be a sensitive test for detecting iron deficiency(Reference Hershko, Konijn, Link, Moreb, Grauer and Weissenberg33–Reference Siegel and LaGrone36). However, the specificity of ZnPP in identifying iron deficiency may be limited, because ZnPP can be increased by lead poisoning, anaemia of chronic disease, chronic infections and inflammation, haemolytic anaemias, or haemoglobinopathies(Reference Labbe and Dewanji29, Reference Graham, Felgenhauer, Detter and Labbe37–Reference Pootrakul, Wattanasaree, Anuwatanakulchai and Wasi40). The effect of malaria on ZnPP in children is equivocal(Reference Asobayire, Adou, Davidsson, Cook and Hurrell41–Reference Stoltzfus, Chwaya, Albonico, Schulze, Savioli and Tielsch43).

Direct comparisons between studies of ZnPP are difficult because of interassay variation. Interfering substances in plasma produced by acute inflammation and haemolysis can increase ZnPP concentrations 3–4-fold in the absence of iron deficiency(Reference Hastka, Lasserre, Schwarzbeck, Strauch and Hehlmann28). These interfering substances can be removed by washing the erythrocytes, which markedly improves assay specificity(Reference Hastka, Lasserre, Schwarzbeck, Strauch and Hehlmann28, Reference Rettmer, Gunter and Labbe44); however, this procedure is not always done because it is time-consuming. Another problem with ZnPP is that results can be expressed as a concentration (free erythrocyte protoporphyrin, EP, or ZnPP, and these are not interchangeable) or as the ZnPP-haem molar ratio; the latter is recommended(45). Several cut-offs have been proposed for ZnPP to define iron deficiency(Reference Hastka, Lasserre, Schwarzbeck, Strauch and Hehlmann28, Reference Wong, Qutishat, Lange, Gornet and Buja32, Reference Harthoorn-Lasthuizen, van't Sant, Lindemans and Langenhuijsen38, Reference Labbe, Vreman and Stevenson46, 47). Using a haematofluorometer on washed erythrocytes, Hastka et al. (Reference Hastka, Lasserre, Schwarzbeck, Strauch and Hehlmann28) recommended a cutoff of >40 μmol/mol haem on the basis of studies in healthy adults. In contrast, other authors, using unwashed erythrocytes, have proposed a cutoff of >80 μmol/mol haem to indicate iron deficiency(Reference Labbe, Vreman and Stevenson46, 47). ZnPP is a useful screening test in field surveys, particularly in children, where uncomplicated ID is the primary cause of anaemia. Because of the difficulty in automating the assay, ZnPP has not been widely adopted by clinical laboratories.

Transferrin saturation

Transferrin saturation is a widely used screening test for ID, calculated as the ratio of plasma Fe to total Fe-binding capacity. Although relatively inexpensive, its use is limited by diurnal variation in serum Fe and the many clinical disorders that influence transferrin levels(Reference Cook21).
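The calculation described above is a simple ratio, usually reported as a percentage. The Python sketch below illustrates it; the function name and example values are hypothetical, and the only requirement assumed here is that plasma Fe and total Fe-binding capacity (TIBC) are given in the same units.

```python
def transferrin_saturation_percent(plasma_fe: float, tibc: float) -> float:
    """Transferrin saturation (%) = plasma Fe / total Fe-binding capacity x 100.

    Both arguments must be in the same units (for example umol/l or ug/dl).
    """
    if tibc <= 0:
        raise ValueError("total Fe-binding capacity must be positive")
    if plasma_fe < 0:
        raise ValueError("plasma Fe cannot be negative")
    return plasma_fe / tibc * 100.0


if __name__ == "__main__":
    # Illustrative values only: plasma Fe 15 umol/l, TIBC 60 umol/l.
    print(f"{transferrin_saturation_percent(15.0, 60.0):.1f} %")
```

No diagnostic cutoff is encoded, since the source does not state one and the result is subject to the diurnal and clinical influences noted above.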

Serum ferritin

Serum ferritin (SF) may be the most useful laboratory measure of Fe status; a low value is diagnostic of IDA in a patient with anaemia (Table 4). In healthy individuals, SF is directly proportional to Fe stores: 1 μg/l SF corresponds to 8–10 mg body Fe or 120 μg storage Fe/kg body weight(Reference Cook21). It is widely available, well-standardized, and has repeatedly been demonstrated to be superior to other measurements for identifying IDA. In a pooled analysis of 2579 subjects using receiver-operator characteristic curves, the mean area-under-the-curve was 0·95 for serum ferritin, 0·77 for ZnPP, 0·76 for MCV and 0·74 for transferrin saturation(Reference Guyatt, Oxman, Ali, Willan, McIlroy and Patterson48). In a pooled analysis of 9 randomized iron intervention trials, ferritin showed a larger and more consistent response to iron interventions than ZnPP or TfR(Reference Mei, Cogswell, Parvanta, Lynch, Beard, Stoltzfus and Grummer-Strawn49). However, because it is an acute phase protein, SF is increased independent of Fe status by acute or chronic inflammation. It is also unreliable in the setting of malignancy, hyperthyroidism, liver disease, and heavy alcohol intake.
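The proportionality between SF and Fe stores quoted above can be turned into a rough worked estimate. The Python sketch below uses only the two conversion factors given in the text (8–10 mg Fe per μg/l SF, or 120 μg storage Fe per kg body weight per μg/l SF); the function names and the example subject are hypothetical, and the estimate is assumed to hold only in healthy individuals without inflammation.

```python
from typing import Tuple


def storage_iron_range_mg(sf_ug_per_l: float) -> Tuple[float, float]:
    """Approximate Fe stores (mg) using 8-10 mg Fe per 1 ug/l serum ferritin."""
    if sf_ug_per_l < 0:
        raise ValueError("serum ferritin cannot be negative")
    return 8.0 * sf_ug_per_l, 10.0 * sf_ug_per_l


def storage_iron_by_weight_mg(sf_ug_per_l: float, body_weight_kg: float) -> float:
    """Approximate Fe stores (mg) using 120 ug Fe per kg body weight per 1 ug/l SF."""
    if sf_ug_per_l < 0 or body_weight_kg <= 0:
        raise ValueError("serum ferritin must be non-negative and weight positive")
    return 120.0 * sf_ug_per_l * body_weight_kg / 1000.0  # ug -> mg


if __name__ == "__main__":
    # Hypothetical healthy adult: SF 50 ug/l, body weight 70 kg.
    low, high = storage_iron_range_mg(50.0)
    print(f"Stores by absolute factor: {low:.0f}-{high:.0f} mg")
    print(f"Stores by weight factor:   {storage_iron_by_weight_mg(50.0, 70.0):.0f} mg")
```

For a 70 kg adult the two conversions agree, since the weight-based estimate falls inside the 8–10 mg range.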

Table 4 Iron status on the basis of serum ferritin concentrations (μg/l) (WHO, 2001)

Serum transferrin receptor

The serum transferrin receptor (TfR) is a transmembrane glycoprotein that transfers circulating Fe into developing red cells; ≈ 80 % of TfR in the body are found on erythroid precursors. A circulating, soluble form of TfR consists of the extracellular domain of the receptor. The total mass of cellular TfR and, therefore, of serum TfR depends both on the number of erythroid precursors in the bone marrow and on the number of TfRs per cell, a function of the iron status of the cell(Reference Baillie, Morrison and Fergus50, Reference Beguin51). Serum TfR concentration appears to be a specific indicator of IDE that is not confounded by inflammation(Reference Skikne, Flowers and Cook52). However, normal expansion of the erythroid mass during growth(Reference Kling, Roberts and Widness53–Reference Olivares, Walter, Cook, Hertrampf and Pizarro55), as well as diseases common in developing countries, including thalassemia, megaloblastic anaemia due to folate deficiency, or hemolysis due to malaria, may increase erythropoiesis and TfR independent of iron status(Reference Menendez, Quinto, Kahigwa, Alvarez, Fernandez, Gimenez, Schellenberg, Aponte, Tanner and Alonso56–Reference Worwood58). Thus, the diagnostic value of TfR for IDA is uncertain in children from regions where inflammatory conditions, infection and malaria are endemic(Reference Beesley, Filteau, Tomkins, Doherty, Ayles, Reid, Ellman and Parton59–Reference Williams, Maitland, Rees, Peto, Bowden, Weatherall and Clegg61). The wider application of TfR has been limited by the high cost of commercial assays. Age-related data for TfR in children are scarce(Reference Anttila, Cook and Siimes62–Reference Virtanen, Viinikka, Virtanen, Svahn, Anttila, Krusius, Cook, Axelsson, Raiha and Siimes64), and direct comparison of values obtained with different assays is difficult because of the lack of an international standard. 
A comparison of the analytical performance of an automated immunoturbidimetric assay with two manual ELISA assays found good correlations (r>0·8); however, TfR values by the immunoturbidimetric assay were on average 30 % lower(Reference Pfeiffer, Cook, Mei, Cogswell, Looker and Lacher65).

TfR/SF ratio

The ratio of TfR/SF can be used to quantitatively estimate total body Fe(Reference Cook, Flowers and Skikne66). The logarithm of this ratio is directly proportional to the amount of stored Fe in Fe-replete subjects and the tissue Fe deficit in ID (Table 5). In elderly subjects, the ratio may be more sensitive than other laboratory tests for ID(Reference Rimon, Levy, Sapir, Gelzer, Peled, Ergas and Sthoeger67). However, the ratio cannot be used in individuals with inflammation, because SF may be elevated independent of Fe stores. In a recent analysis of pooled data from iron intervention studies, calculation of body iron from the TfR/SF ratio showed no clear advantage over SF alone(Reference Mei, Cogswell, Parvanta, Lynch, Beard, Stoltzfus and Grummer-Strawn49). Although only validated for adults, the ratio has also been used in children(Reference Cook, Boy, Flowers and Daroca Mdel68, Reference Zimmermann, Wegmueller, Zeder, Chaouki, Rohner, Saissi, Torresani and Hurrell69). Because TfR assays are currently not standardized, use of the TfR/SF ratio to estimate body iron is affected; the most commonly used logarithmic formula is assay specific.
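As an illustration of how body Fe can be estimated from the logarithm of the TfR/SF ratio, the Python sketch below uses the equation commonly attributed to Cook et al.: body Fe (mg/kg) = −[log10(TfR/SF) − 2.8229]/0.1207, with TfR and SF both expressed in μg/l. The constants are not given in this text and are quoted here as an assumption; they are assay specific and should not be applied to TfR values from other assays without conversion. The function name and example values are hypothetical.

```python
import math


def body_iron_mg_per_kg(tfr_mg_per_l: float, sf_ug_per_l: float) -> float:
    """Estimate body Fe (mg/kg) from the log of the TfR/SF ratio.

    Positive values indicate storage Fe; negative values indicate a
    tissue Fe deficit. Constants are assumed from the commonly cited
    Cook et al. equation and are specific to the TfR assay used there.
    """
    if tfr_mg_per_l <= 0 or sf_ug_per_l <= 0:
        raise ValueError("TfR and SF must both be positive")
    ratio = (tfr_mg_per_l * 1000.0) / sf_ug_per_l  # convert TfR to ug/l
    return -(math.log10(ratio) - 2.8229) / 0.1207


if __name__ == "__main__":
    # Illustrative values only, not patient data.
    print(f"Replete example:   {body_iron_mg_per_kg(5.0, 30.0):+.2f} mg/kg")
    print(f"Deficient example: {body_iron_mg_per_kg(10.0, 5.0):+.2f} mg/kg")
```

Because SF is raised by inflammation, an estimate of this kind is only meaningful in subjects without inflammation, as noted above.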

Table 5 The main biochemical indicators of iron deficiency

* Using RAMCO® ELISA for transferrin receptor.

Assessing iron status using multiple indices

The major diagnostic challenge is to distinguish between IDA in otherwise healthy individuals, and the anaemia of chronic disease (ACD). Inflammatory disorders increase circulating hepcidin levels and hepcidin blocks Fe release from enterocytes and the reticuloendothelial system, resulting in IDE(Reference Nemeth, Rivera, Gabayan, Keller, Taudorf, Pedersen and Ganz70). This can occur despite adequate iron stores. If chronic, inflammation can produce ACD. The distinction between ACD and IDA is difficult, as an elevated SF in anaemia does not exclude IDA in the presence of inflammation. A widely-used marker of inflammation is the C-reactive protein (CRP), but the degree of CRP elevation that invalidates the use of SF to diagnose ID is uncertain; CRP values >10–30 mg/l have been used. Moreover, during the acute phase response, the increase in CRP is typically of shorter duration than the increase in SF. Alternate markers, such as alpha1-acid glycoprotein (AGP), may be useful, as AGP levels tend to increase later in infection than CRP, and remain elevated for several weeks. If anaemia is present and the CRP is elevated, IDA can usually be diagnosed in individuals with inflammatory disorders/anaemia of chronic disease by an elevated TfR and/or ZnPP(Reference Cook21, Reference Asobayire, Adou, Davidsson, Cook and Hurrell41). Although studies in adults have suggested that elevations in TfR(Reference Skikne, Flowers and Cook52) or in ZnPP(Reference Hastka, Lasserre, Schwarzbeck, Strauch and Hehlmann28) can be a definitive indicator of iron deficiency, a recent study in African children found there was large overlap in the distributions of these indicators in a comparison of children with IDA with those with normal iron status(Reference Zimmermann, Molinari, Staubli-Asobayire, Hess, Chaouki, Adou and Hurrell71). 
This overlap may be explained by a greater variability in the erythroid mass in children than in adults, together with the many variables affecting children in developing countries that influence TfR and ZnPP independent of iron status, as detailed above. Because of this overlap, the sensitivity and specificity of TfR and ZnPP in identifying iron deficiency and IDA in children will be modest, regardless of the diagnostic cutoffs chosen. Because each test of iron status has limitations in terms of its sensitivity and specificity, they have been combined in models to define iron deficiency. Examples include the model based on low transferrin saturation and high ZnPP, and the ferritin model based on low SF and transferrin saturation and high ZnPP. With these models, specificity increases but sensitivity is low, and they tend to underestimate ID(47). When it is feasible to measure several indices, the best combination is usually Hb, SF and, if CRP is elevated, TfR and/or ZnPP.