INTRODUCTION

Crowdsourcing is the practice of obtaining needed services, ideas, or content by soliciting contributions from a large group of people (i.e., the crowd), especially from an online community. It typically relies heavily on voluntary contributions rather than paid contributions and has often been used to solicit opinions and solve problems. Crowdsourcing was originally described in the context of for-profit industries where members of the crowd derive some monetary or extrinsic reward for participating. A function previously performed by employees can now be outsourced to an undefined (and generally large) network of people in the form of an open call.Reference Howe 1

Crowdsourcing has been used in educational activities.Reference Skaržauskaitė 2 These activities include educational and supporting tasks within educational organizations. Crowdsourcing has been used to collect data by scientific organizations and academic institutions, to design textbooks and curricula, to provide feedback to academic institutions, and to raise funds for educational projects.

The term crowdsourcing is relatively new,Reference Howe 3 but the concept of crowdsourcing and harnessing the “wisdom of crowds”Reference Surowiecki 4 has been used for years. The dramatic increase in popularity of crowdsourcing has been facilitated by the use of the Internet and social networking sites, such as Twitter and Facebook. Although crowdsourcing has been described in education and higher education, there is limited information on its use and effectiveness in continuing medical education (CME).

The effect of traditional CME meetings (including courses and workshops) on practice and patient outcomes has been called into question. There is evidence that interactive workshops, but not didactic sessions, result in moderate improvements in professional practice.Reference Bloom 5 Other reviews have demonstrated that educational meetings alone or combined with other interventions can improve professional practice. It appears that interventions with a mix of interactive and didactic education are more effective than either alone.Reference Forsetlund, Bjørndal and Rashidian 6

This study describes a novel teaching method using a crowdsourcing technique at a traditional CME course. It was hypothesized that 1) there is collective wisdom and expertise in the crowd, and 2) participants are motivated to contribute to a facilitated crowdsourced discussion.

METHODS

Study design

This study employed mixed methods using survey methodology and observational data from participant involvement in an educational intervention. The study was approved by the North York General Hospital’s Research Ethics Board.

Study setting and population

Emergency Medicine Update Europe is a CME conference held biannually in Europe. In September 2013, the conference was held in Haro, Spain. It consisted of fifteen 45-minute traditional CME presentations over 5 consecutive days (three consecutive presentations each morning) presented by leading North American faculty. Sixty-three physicians registered for the conference, of whom 57 were from Canada and 52 were practicing emergency medicine (EM).

Educational intervention

Two weeks prior to the start of the conference, registrants were contacted by email and invited to submit up to “three problems, controversies, or questions” related to EM that they wanted discussed during the conference. Submitted topics were reviewed by the course director (principal investigator RP) and one member of the course planning committee (AS). The topics were rank-ordered with consideration given to frequency of response, importance, and relevance to EM. Each day of the conference, a 15-minute session was devoted to a discussion facilitated by the course director (RP). The facilitator posed questions from the list of rank-ordered topics but did not contribute to the conversation as a content expert. Depending on the flow of conversation (i.e., number of responses, saturation on a topic), the facilitator proposed subsequent questions to the group to ensure that the conversation continued. Prior to the first session, participants were instructed on the concept of crowdsourcing and the discussion process that would be followed.

Data collection

Participants were asked to complete an anonymous paper survey after the last crowdsourcing activity at the end of the 5-day conference. The survey consisted of a 15-item questionnaire exploring satisfaction and attitudes toward the activity. The survey tool was developed by the principal investigator and piloted with three medical educators (SR, SL, AS). Final edits were made to the questionnaire based on feedback. Participation in the survey was voluntary and not a condition of participation in the activity or the conference. Questionnaires did not request any participant identifiers, and all survey data were compiled anonymously. Participants were asked to indicate how closely they agreed with the statements in the questionnaire using a five-point Likert scale. Quantitative observational data were collected by one member of the course committee (AS) and included frequency of unique registrants participating in each topic.

Data analysis

After completion of the study, data were transferred to a Microsoft Excel spreadsheet (Microsoft Office Excel 2010, Microsoft Corporation, Redmond, WA, USA). The survey and observational data were analyzed with descriptive statistics.

RESULTS

Thirteen registrants (13/63) contributed 27 different topics prior to the conference. Twelve problems (range 2–3 topics per day) were discussed over the 5 days (Appendix 1 – Crowdsourcing Problems). The duration of each day’s crowdsourcing activity was approximately 15 minutes for a total of 75 minutes for the conference. The average attendance for each topic was 45 (range 42–48; standard deviation [SD] 1.8) with an average of 9 (SD 2.8) participants contributing to each conversation. Thirty-two unique individuals (32/48 [67%]) contributed to at least one of the conversations. Each of those who participated contributed to an average of 3.2 (SD 2.6) conversations.

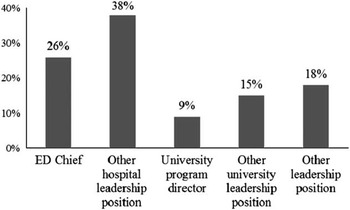

Thirty-nine of the 48 participants in attendance at the last session completed the survey (response rate 81%). Thirty-four respondents (34/39 [87%]) practiced EM. Table 1 describes the experience and expertise of those respondents practicing EM. Many of the respondents hold or have held hospital and/or university leadership positions (Figure 1), and 27/34 (79%) believed that they had knowledge and/or expertise to share with their colleagues. Twenty-two of the EM respondents (22/34 [65%]) had not previously used crowdsourcing.

Figure 1 Current and past leadership positions held by EM respondents.

Table 1 Demographics of EM respondents: a snapshot of expertise

Thirty respondents enjoyed the activity (30/39 [77%]); 29/39 (74%) found the crowdsourcing conversation valuable; and 22/39 (56%) reported that they learned something during the crowdsourcing activity that may change their practice. Most (25/39 [64%]) reported that they often or very often trusted the opinions of those speaking during the activity.
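The proportions reported in this section are simple descriptive statistics and can be rechecked from the counts given in the text. The following is a minimal sketch of that arithmetic, not the analysis actually performed (which used a Microsoft Excel spreadsheet); the function names `pct` and `summarize` and the dictionary labels are illustrative assumptions, and rounding to the nearest whole percentage is assumed.

```python
# Counts (numerator, denominator) exactly as reported in the Results text.
# The dictionary labels are illustrative names, not terms from the survey tool.
REPORTED = {
    "survey response rate": (39, 48),
    "contributed to at least one conversation": (32, 48),
    "enjoyed the activity": (30, 39),
    "found the conversation valuable": (29, 39),
    "learned something that may change practice": (22, 39),
    "often or very often trusted speakers": (25, 39),
}


def pct(numerator: int, denominator: int) -> int:
    """Return numerator/denominator as a whole-number percentage (assumed rounding)."""
    return round(100 * numerator / denominator)


def summarize(counts: dict) -> dict:
    """Map each label to its rounded percentage."""
    return {label: pct(n, d) for label, (n, d) in counts.items()}


if __name__ == "__main__":
    for label, value in summarize(REPORTED).items():
        n, d = REPORTED[label]
        print(f"{label}: {n}/{d} ({value}%)")
```

Keeping each numerator paired with its denominator makes it easy to confirm which group a percentage refers to (all 48 attendees or the 39 survey respondents).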

DISCUSSION

Traditional CME events with one person presenting in front of a group are often based on the assumption that the presenter has unique knowledge and expertise to share with the group. Whether this form of CME results in learning and changes to professional practice has been called into question.Reference Forsetlund, Bjørndal and Rashidian 6 If there is collective wisdom in a group, then how can it be best harnessed? This study describes one such interactive teaching method. In this study, participants described themselves as a group with a significant amount of experience and expertise, with many in practice more than 15 years and many practicing in academic health science centres. Many of the participants reported involvement in teaching, and most reported that they had given continuing education presentations within the year. Why would one not tap into the collective wisdom of the crowd? In his book, The Wisdom of Crowds, Surowiecki wrote that “under the right circumstances, groups are remarkably intelligent and are often smarter than the smartest people in them.”Reference Surowiecki 4 He described the “wisdom of crowds” as being derived not from averaging solutions, but from aggregating them. In other words, “the many are smarter than the few.” According to Surowiecki,Reference Surowiecki 4 one is more likely (but not guaranteed) to get a better estimate or decision from a group of diverse, independent, motivated people than from a single expert or even a couple of experts.

The crowdsourcing activity described in this study is supported by principles of adult learning, grounded in constructivist learning theory and described by Knowles’Reference Knowles, Holton and Swanson 7 “assumptions about adult learning.” Adults have accumulated a great deal of experience that is a rich resource for learning, value learning that integrates with the demands of their everyday life, and are more interested in problem-centred approaches than in subject-centred approaches. In constructivism, the teacher is viewed not as an expert or a transmitter of knowledge, but rather as a guide who facilitates learning. Constructivism is based on the assumptions that learning builds on prior knowledge and experiences and that a teacher’s role is to engage students in learning actively using relevant problems and group interaction.Reference Kaufman 8

A crowdsourcing discussion, whether online or in person, has become an important way to solve problems. The notion of an individual solving a problem is being replaced by distributed problem solving and team-based multidisciplinary practice.Reference Mau and Leonard 9 Problem solving is no longer the activity of the expert. Problem solving should be considered the domain of teams and groups of experts. Crowdsourcing has become a legitimate, complex problem-solving model.Reference Brabham 10 According to Hong and Page’s research,Reference Hong and Page 11 diverse groups of people can see a problem in different ways, and thus are able to solve it better and faster. The essence of this theorem is “a randomly selected collection of problem solvers outperforms a collection of the best individual problem solvers.”Reference Hong and Page 11 This is the basis for the success of crowdsourcing.

What is the motivation for members of the crowd to participate in activities like this? Howe’sReference Howe 1 original description of crowdsourcing was in the context of for-profit industries in which members of the crowd derive some monetary or extrinsic reward. Many crowdsourcing activities, such as contributing an entry to Wikipedia or participating in a crowdsourced discussion at a CME event, offer no extrinsic reward. Most of the participants in this study shared something with the larger group. One explanation for participation in activities such as crowdsourcing is offered by self-determination theory. In self-determination theory, intrinsic motivation represents the potential for human curiosity, self-directed learning, challenge seeking, and skill development.Reference Ryan and Deci 12 Ryan and DeciReference Ryan and Deci 12 have identified three psychological needs essential for fostering this intrinsic motivation: autonomy, competence, and relatedness. Autonomy is acting with a sense of choice and volition. Competence refers to the need individuals have to achieve mastery and to feel competent. Relatedness refers to a sense of connectivity to others.

Are all members of the crowd participating, or is this just the playground for a few vocal individuals? In this study, although two thirds of the crowd contributed to at least one conversation, there is no doubt that a core group contributed to many of the conversations. In traditional online crowdsourcing projects, a “power law distribution” has been described when comparing the number of contributors with the frequency of contributions: contributions are not evenly divided amongst contributors. Typically, a large number of contributors each contribute only a small amount to a project; at the other end of the spectrum, a small number of contributors are responsible for a significant share of the contributions.Reference Shirky 13 A similar pattern was observed in this crowdsourcing activity.

Did participants learn anything, and will their practice change as a result of this activity? This is the “holy grail” of CME activities. There is very little evidence that traditional CME events change professional practice. Most participants enjoyed the activity, found it valuable, and perceived that they learned something. Most participants trusted the opinions of those speaking and were engaged in the activity—essential starting points for a worthwhile conversation.

This study has several limitations. The concept of wisdom or expertise can be quite complex. In this study, expertise of the crowd was described based on years in practice, type of practice, and leadership positions held. Expertise was self-reported by participants. Although the response rate (81%) was high, respondents may disproportionately represent those who participated in the crowdsourcing discussion or found the discussion valuable. Many participated in the discussion, but their motivation was not explored. Participants self-reported that they learned, but the study did not actually assess knowledge change or change in practice. This activity demonstrates proof of concept, but it may be difficult to generalize to other CME events because the crowd may not have a similar level of expertise or motivation. It is hoped that this pilot observational study will lead to a more rigorous investigation of the use of crowdsourcing as an instructional method at CME events.

CONCLUSION

This study describes a novel teaching method and illustrates that a crowdsourcing technique at a traditional CME course is feasible and has impact. A facilitated crowdsourced discussion can be used to harness the collective wisdom and expertise in the crowd.

Acknowledgements

The author wishes to thank Savithri Ratnapalan and Shirley Lee for a review of the survey and manuscript; Voula Christofilos for a review of the manuscript; Arun Sayal for a review of the survey and contribution to the selection of the problems and collection of the observational data; and the participants of Emergency Medicine Update Europe.

Competing interests: None declared.

Supplementary material

To view supplementary material for this article, please visit http://dx.doi.org/10.1017/cem.2014.54