1 Introduction

1.1 Overview of this Volume

In recent decades, the number of archaeometric investigations that make use of physical‒chemical techniques for the analysis of the composition of various archaeological materials continues to grow, as evidenced by the increasing number of publications in this area. One example of this type of studies is provenance analysis, which tries to relate archaeological materials to their original natural sources by discriminating their characteristic chemical fingerprint. In brief, it tries to determine the geological or natural origin of materials found in different archaeological contexts to establish the places of acquisition and production of the raw materials. We have chosen to approach this complex subject in two different ways, both based on very similar datasets.

In this Element, we take an applied, practical approach, allowing the reader to experiment with the provided datasets and scripts to be used in the R software package. In Statistical Processing of Quantitative Data of Archaeological Materials, we take a more theoretical and mathematical avenue, allowing the reader to amend and apply the discussed methods freely. These two Volumes can be used independently as well as complementary, throughout both ample cross-references are provided to facilitate the latter. As an introduction to the subject, let us first remember that the methods, basic principles and when to apply different statistical processing depends on three data scenarios: (1) when dealing with high-dimensional spectral data, (2) when employing compositional data, and (3) when managing a combination of compositional and spectral data.

Case 1 considers high-dimensionality data (n ≪ p, where n relates to the number of observations and p are the number of variables) using full spectrum readings, such as those obtained with Fourier transform infrared spectroscopy (FT-IR), Raman spectroscopy, or X-ray fluorescence (XRF) spectroscopy. For this type of data, the suggested approach is to apply chemometric techniques and unsupervised machine learning methods. First, the spectra are preprocessed by filtering the additive and multiplicative noise, correcting misaligned peaks, and detecting outliers by robust methods. Afterwards, the data are clustered using a parametric Bayesian model that simultaneously conducts the tasks of variable selection and clustering. The variable selection employs mixture priors with a spike and slab component, which make use of the Bernoulli distributions and the Bayes factor method to quantify the importance of each variable in the clustering.

Case 2 contemplates low-dimensional data (n > p) where the recorded data have been converted to chemical compositions. For this case, the recommended approach is to adopt the methodology proposed by Reference AitchisonAitchison (1986), which discusses some of the algebraic–geometric properties of the sample space of this type of data and implements log-ratio transformations. Respecting adequate preprocessing of compositional data, such as robust normalization and outlier detection, the use of model-based clustering that fits a mixture model of multivariate Gaussian components with an unknown number of components is proposed. This allows choosing the optimal number of groups as part of the selection problem for the statistical model. Mixture models have the advantage of not depending on the distance matrix used in traditional clustering analyses. Instead, the key point of the model-based clustering is that each data point is assigned to a cluster from several possible k-groups according to its posterior probabilities, thus determining the membership of each of the observations to one of the groups.

For Case 3, if reliable calibrations are available to obtain compositional data, this information can be combined with the spectra to obtain groups. For handling the data, a combination of chemometric techniques is used. In this case, a dependent variable y (or compositional values) is related to the independent variables x (or spectral values). The preprocessing is performed similarly as in Case 2; this allows calibrating a model of predictive purposes that can discriminate those variables that provide significant information to the analysis and eliminating the redundancy of information as well as collinearity. Once the selection of variables has been made, a new methodology called Databionic Swarm (DBS; implemented by Reference Thrun, Ultsch and HüllermeierThrun, 2018) is applied for clustering the data.

To fully understand how the proposed methods work and how to apply them to your own data, these are exemplified in this Element with different case studies using quantitative data acquired from archaeological materials. The datasets used in the examples are provided in the electronic format of this Element as worksheet files with the “csv” extension. To process the data according to the exercises, the selected dataset must be imported and the source code executed in the R environment (R Development Core Team, 2011); we used version 3.6.1 on a 64-bit Windows system, although more recent versions of R are now available. R is a programming language for statistical analysis and data modeling that is used as a computational environment for the construction of predictive, classification, and clustering models. R allows you to give instructions sequentially to manipulate, process, and visualize the data. The instructions or scripts employed for each part of the process are detailed in the case studies. To learn how to employ the scripts in each step of the data processing, we encourage the reader to consult the videos associated with this Element in the electronic format of the Element.

1.2 Introduction to R

R is a public domain language and environment managed by the R Foundation for Statistical Computing (© 2016 The R Foundation) that has the virtue of being an exceptional tool for data statistical analysis and projection. This project contains a large collection of software, codes, applications, documentation, libraries, and development tools that users are free to copy, study, modify, and run. Therefore, it can be seen as a collaborative project in which anyone is invited to contribute. Although initially written by Robert Gentleman and Ross Ihaka, since 1997, it has been operated by the R Development Core Team. From early 2000 until now, it has become a kind of “standard of the scientific community.” There are many publications and tutorials on its use aimed at all levels of different areas and specializations, some of which focused on the most technical and computational aspects of the language.

1.2.1 Getting Started

First, search online for CRAN R (the Comprehensive R Archive Network) or follow the link http://cran.r-project.org/ that will direct you to the web page and the instructions to download and install the latest version of R in various platforms (Linux, MacOS and Windows).

1.2.2 Data Import

Once R is installed, the next step is to call our data in the R window to be able to process them. In the screen, the indicator “>” appears and is where we must define what task we want to perform. The most commonly used configurations to perform data analysis in R are data frames, which are two-dimensional (rectangular) data structures. As in this case, we deal with datasets of low or high dimensionality, which must be arranged so that the rows in a data frame represent the cases, individuals or observations, and the columns represent the attributes or variables (see example in Table 1). These data frames must be prepared in a folder available for import and analysis.

Table 1 Example of a data frame of chemical concentrations of obsidian samples.

| ID | Mn | Fe | Zn | Ga | Th | Rb | Sr | Y | Zr | Nb | Source |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Ahuisculco | 378 | 7468 | 47 | 18 | 10 | 109 | 45 | 20 | 143 | 19 | 1 |

| Ahuisculco | 379 | 7889 | 166 | 19 | 9 | 113 | 50 | 19 | 146 | 21 | 1 |

| … | … | … | … | … | … | … | … | … | … | … | …. |

| … | … | … | … | … | … | … | … | … | … | … | …. |

| El Chayal | 476 | 8162 | 33 | 17 | 11 | 146 | 154 | 20 | 100 | 9 | 12 |

| El Chayal | 486 | 8799 | 45 | 18 | 11 | 151 | 158 | 20 | 104 | 8 | 12 |

| El Chayal | 545 | 8309 | 36 | 17 | 10 | 140 | 151 | 19 | 101 | 9 | 12 |

The working directory is the place on our computer where the files we are working with are located. You can find what the working directory is with the function “> getwd()”. You only have to write down the function and execute it. You can change the working directory using the function “setwd()”, defining the path of the directory you want to use [e.g., setwd(”C:\obsidian”)]. Although there is extensive documentation on how to import/export data to R, we use the traditional method, as our data are usually in a spreadsheet with a csv extension. In R, it is sufficient to use the following command:

> data <- read.csv(”C:\\obsidian\\mydataset.csv”, header=T)

This command line provides the path to the folder where the data are found; the command “read.csv” indicates that a file with a “csv” extension is read from the “obsidian” folder located in the C root directory, and it is indicated that the data contain a name for each variable with the command “header=T”. The symbol “<-” is only used for assignment; in the previous example, the file name “mydataset.csv” refers to the name of the data frame that you are going to work with in R. To see the structure of the data, write down the next command.

str (data)

Another way is by selecting the option “Change directory” from the File menu and navigating to the folder where our file is located. Once this path is established, you must go to the folder where your dataset is, select it and drag it with the mouse to the R console and, later, copy that path from the console and paste it into the command “read.csv”. To see the dimensions of the dataset, you can write the function “dim (data)”; “names()” shows the names of the columns. In R, the “summary” function shows a general summary of the data frame variables (minimum, maximum, mean, median, first, and third quartile).

To perform an algebraic operation on a data frame such as the one exemplified in Table 1, the first column containing the identifier of each sample would have to be excluded; this is achieved by typing the following command:

data1 <- data [, 2:11]

In this way, only numerical variables are considered. Now you can transform all the data in the data frame, such as base 10 logarithms:

data2 <- log10 (data1)

If you also would like to transform negative values with logarithms you can use DataVisualizations::SignedLog(). Depending on the application this can be meaningful (cf, Reference Aubert, Thrun, Breuer and UltschAubert et al., 2016), even if in a strictly mathematical sense it is not allowed. If you want to see the values of a specific variable, you can do it with the following command:

data2$Na.

Some analyses require that the data be recognized as a matrix. In R, a matrix is a data structure that stores objects of the same type, conversely to a data frame, which is a rectangular array of data consisting only of numeric values. To convert a data frame to a matrix, you can use the following command:

newdata <- as.matrix(data2).

To save a file that has been transformed, just type the following command:

write.csv (newdata, file=”my_data.csv”).

This will save the file named “my_data” with a “.csv” extension to the working folder.

1.2.3 Functions

Once a dataset has been loaded, a large number of operations can be performed on it. If, for example, you have a variable “Na” from which you want to produce a histogram, it is enough to write the “hist (Na)” function to produce a bar graph of the variable. In R, the “plot()” function is generally used to create graphs. This function always asks for an argument for the axis of the abscissa (x) and another for the ordered (y). If two variables, x and y are available, say Sr and Zr, and you want to see how these variables relate, “plot(Data2$Sr, Data2$Zr)” is enough to get the graph. The “plot()” function allows you to customize the graph by entering titles or changing the size of the dots, the color, etc.

In addition to the “plot()” function, there are other functions that generate specific types of graphs. In Windows, right clicking the graph can be copied to the clipboard, either as a bitmap or as a metafile. There are a variety of graphic packages for R that extend their functionality or are intended to optimize things for the user. If you have any questions about any other function, you can always access Help documentation using the “help()” command. For example, “> help(mean)” directs us to a web page where we can obtain information about the concept “mean” that corresponds to the arithmetic average.

1.2.4 Packages

R has a large number of packages that offer different statistical and graphical tools. Each package is a collection of features designed to meet a specific task. For example, there are specialized packages for data grouping, others for visualization or for data mining. These packages are hosted on CRAN [https://cran.r-project.org/]. A small set of these packets is loaded into the processor’s memory when R is initialized. You can install packages using the “install.packages()” function, and typing in quotation marks the name of the package you want to install. They can also be installed directly from the console by going to the R menu and then selecting “Packages.” For example, to install the “cluster” package, type the following:

install.packages(”cluster”).

After you complete the installation of a package, you can use its functions by calling the package with the “library(cluster)” command. Every time an operation is performed in R, it is important to use the “rm(list = ls())” command to delete all objects in the session and to be able to start without any remaining objects stored in the program memory. Additionally, when calling any file, it is recommended to use the “str ()” command to know the structure of the data object.

1.2.5 Scripts

Scripts are text documents with the “.R” file extension. The scripts are the same as any text documents, but R can read and execute the code they contain. Although R allows interactive use, it is advisable to save the code used in an R file; this way, it can be used as many times as necessary. In this Element, we made use of the scripts published by the authors of the packages available for R. An advantage of these scripts and packages is that you can make use of the tutorials available for each. For example, to transform the data to the isometric log-ratio (ilr), go to CRAN [https://cran.r-project.org/] and look for the “compositions” package of Reference van den Boogaart, Tolosana-Delgado and Brenvan den Boogaart, Tolosana-Delgado and Bren (2023). You will find that the related script is the following:

## log-ratio analysis # transformation of the data to the ilr log-ratio library(compositions) xxat1 <- acomp(data) # “acomp” represents one closed composition; with this #command the dataset is now closed xxat2 <- ilr(xxat1) # isometric log-ratio transformation of the data str(xxat2) write.csv(xxat2, file=”ilr-transformation.csv”)

In this script, the command “acomp” tells the system to consider the argument as a set of compositional values, implicitly forcing the data to close to 1. Subsequently, following Reference Egozcue, Pawlowsky-Glahn, Mateu-Figueras and Barceló-VidalEgozcue et al. (2003), the “ilr” command is used to transform the data to the isometric log-ratio, which produces compositions that are represented in Cartesian coordinates.

A more complex task can be done with R. For example, let us say that you want to implement a Principal Component Analysis with the idea of exploring the data and seeing if the first components can reveal the existence of a pattern in the data. By installing the “ggfortify” package (Reference Horikoshi, Tang and DickeyHorikoshi et al., 2023), it is easy to perform the analysis and graphical display of the data. For example, suppose we have a matrix of n x p with untransformed data and whose eleventh column indicates the natural source from which some obsidian samples come, such as the one in Table 1. The first step is to call the package “ggfortify” and read the data.

library(ggfortify) data <- read.csv(”Sources.csv”, header=T) ## Sources.csv is an example file name str(data)autoplot(stats::prcomp(data[-11])) ## PCA without labels; the 11th column ##is deleted

In this script, the function “prcomp” will perform a principal component analysis of the data matrix and return the results as a class object; in turn, the “autoplot” function will provide the graph of the first two components. To produce a plot of the first two components that includes the provenance label for each sample and a color assignation to each group, use the following command line:

autoplot(stats::prcomp(data[-11]), data1 = data, colour = ‘Source’) ## ‘ Source’ specifies column name keyword in your dataset

A biplot of the components that explain most of the variance of the data using the loading vectors and the PC scores is obtained through the following command line:

autoplot(stats::prcomp(data[-11]),label=TRUE,loadings=TRUE,loadings.label=TRUE)

Assuming the grouping variable is available, the following command line automatically locates the centroid of each group and performs a PCA with 95 percent confidence ellipses:

autoplot(stats::prcomp(datos[-11]),data1=data,frame=TRUE, frame.type=‘t’,frame.colour=‘ Source’) ## ‘ Source’ specifies column name keyword in your dataset

In R, there are numerous clustering algorithms ranging from distance-based algorithms (e.g., to determine whether the data present a clustering structure) to more formal statistical methods based on probabilities, such as Bayesian methods or model-based clustering. For instance, for conventional clustering, the package “cluster” can be used (Reference Maechler, Rousseeuw, Struyf, Hubert and HornikMaechler et al., 2022). With this package, several classical classifications can be performed by selecting both the metric used to calculate the differences between the observations and the grouping method, among which are average, single, weighted, ward, and others.

This Element provides the scripts to perform all the proposed preprocessing and clustering techniques so that the user can easily execute the commands by copying and pasting them into the R environment. For example, in Section 3, we worked with model-based clustering; for this, we employed the R libraries “Rmixmod” (Reference Lebret, Lovleff and LangrognetLebret et al., 2015) and “ClusVis” (Reference Biernacki, Marbac and VandewalleBiernacki et al., 2021):

library (Rmixmod) out_data<-mixmodCluster(data2,nbCluster=2:8) summary(out_data) plot(out_data) library(ClusVis) clusvisu<-clusvisMixmod(out_data) plotDensityClusVisu(clusvisu)

By using the command “mixmodCluster”, an unsupervised classification based on Gaussian models with a list of clusters (from two to eight clusters) is performed, determining which model best fits the data according to the BIC information criterion. In turn, the “plot()” command provides a 2D representation with isodensities, data points, and partitioning. . Alternatively, two-dimensional density-based structures can be visualized with “ScatterDensity” (Reference Brinkmann, Stier and ThrunBrinkmann et al., 2023). Similarly, the “ClusVis” package (Reference Biernacki, Marbac and VandewalleBiernacki et al., 2021) is used to obtain a graph of Gaussian components that supplies an entropic measure on the quality of the drawn overlay compared to the Gaussian clustering of the initial space. For this, only two commands are needed, “clusvisMixmod” and “plotDensityClusVisu”, which are provided by the package authors.

Thus, the user has free access to the tutorials and scripts of each of the algorithms used in this Element. In many cases, the only thing that needs to be done is to replace the author’s data with your own. You can also experiment with other strategies for the analysis by changing the parameters, such as the number of iterations, the initialization method, and the model selection criteria. The instructions to do so, as well as a variety of examples that the same user can reproduce, can be consulted in the documentation associated with each R package or script. It is very important to remember that the theoretical assumptions of each model must be respected; unfortunately, the data do not always conform to these. That is why it is recommended that the reader pay close attention to the theory of each method and to the behavior of his data, since a violation of the theoretical assumptions can lead to an incorrect interpretation of the data.

2 Processing Spectral Data

2.1 Applications and Case Studies

This section presents the numerical experiments conducted on archaeological materials using spectral data and the Bayesian approach. Although only examples of X-ray fluorescence data are used in this Element, the proposed methods can be applied in the same way to any other spectrometric technique such as Raman or FT-IR. To exemplify the performance of the proposed methods, two analyses were carried out, one with 156 obsidian geological samples that served as a control test (matrix available in the supplementary material as file ‘Obsidian_sources.csv’) and a second one using 185 ceramic fragments of archaeological interest (matrix available in the supplementary material as file ‘NaranjaTH_YAcim.csv’). For the analysis of all samples, we employed a TRACER III-SD XRF portable analyzer manufactured by Bruker Corporation, with an Rh tube at an angle of 52°, a drift silicon detector and a 7.5 μm Be detector window.

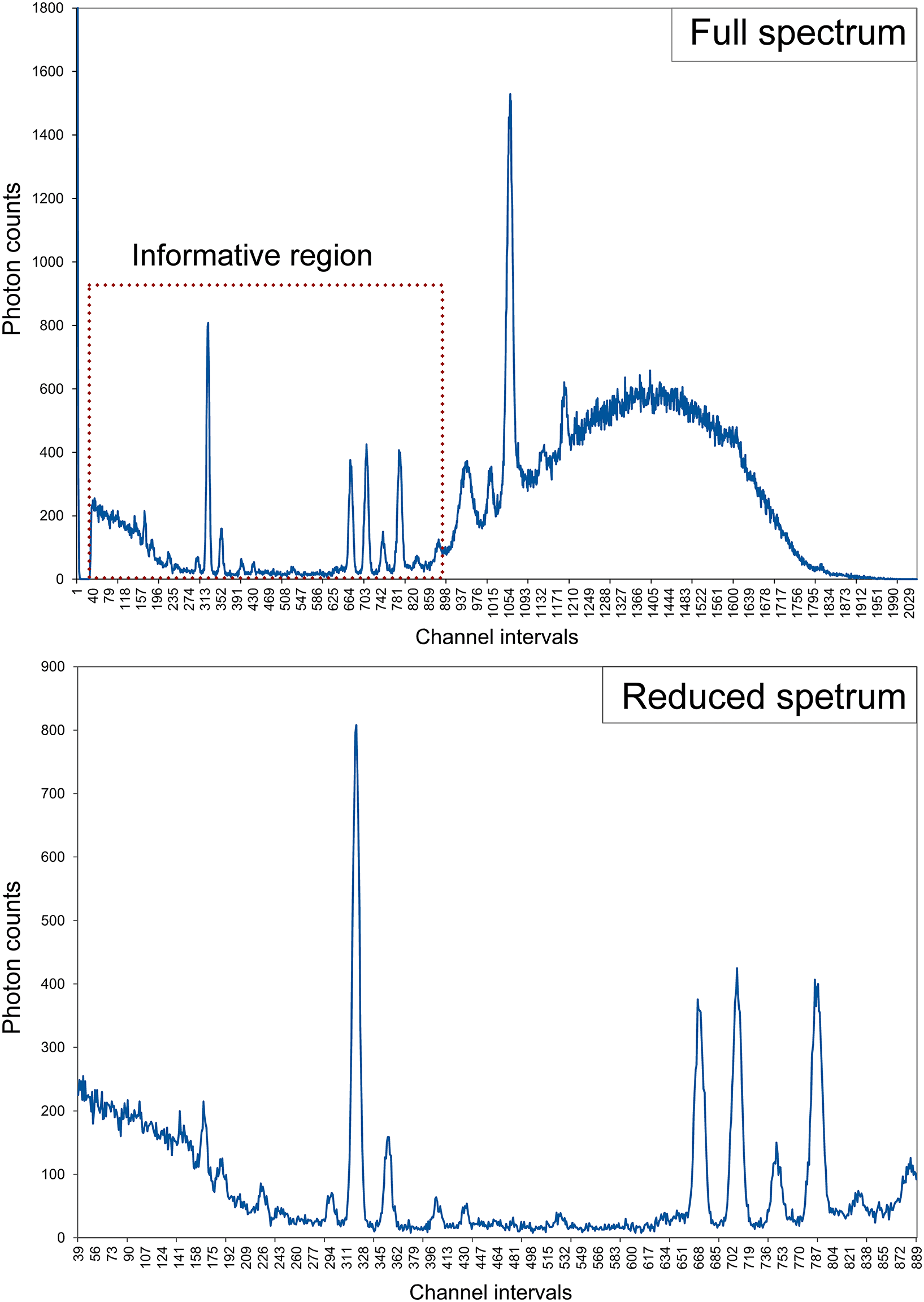

The instrument was set with a voltage of 40 kV, a current of 30 μA, and a measurement time of 200 live seconds. Only for the case of the obsidian samples was employed a factory filter composed of 6 μm Cu, 1 μm Tl and 12 μm Al. A spectrum of each sample was obtained by measuring the photon emissions in 2048 channel intervals (corresponding to the energy range of 0.019 to 40 keV of the detector resolution). Is important to remember that portable XRF is more effective for detecting Na to U elemental concentrations, which is the range that corresponds to 1.040 (in the K-alpha layer) to 13.614 keV (in the L-alpha layer). Therefore, any peaks outside of this range supply no useful information. That is the reason why the original 2,048 channels were reduced by cutting the tails of the spectra that corresponded to noninformative regions, such as low Z elements (lower than Na), the Compton peak, and the palladium and rhodium peaks (K-alpha and K-beta). For example, in the obsidian exercise, the data that were not in the range from channel 38 to channel 900 were manually deleted, leaving only the central 862 channels that correspond to the energy range of 0.74 to 17.57 keV of the detector resolution (Figure 1).

Figure 1 Comparison between the full spectrum of an obsidian sample (top) and a reduced spectrum containing only the informational region (bottom). Color version available at www.cambridge.org/argote_machine-learning

Using the numerical values obtained from the photon counts in each energy interval or channel, two n x p matrices were constructed (where n refers to the samples and p to the channel count interval):

1. An n = 149 x p = 862 matrix for the obsidians (available in the supplementary material as file ‘Obsidian_Sources_38_900.csv’).

2. An n = 185 x p = 791 matrix for the pottery fragments (available in the supplementary material as file ‘NaranjaTH_YAcim40_830.csv’).

The spectral intensities (photon counts) sampled at the given intervals (channels) represent the quantitative data employed in the statistical analysis instead of the major and trace element concentrations traditionally used for this purpose. Both datasets were preprocessed the same way. First, the EMSC algorithm, to filter the dispersion effects, and the smoothing procedure with the Savitzky‒Golay algorithm were applied. With this methodology, it is not necessary to standardize each variable before using the model-based clustering since applying the EMSC filter to the data are equivalent to normalizing it. If your own spectra show any peak displacement, you should apply at this point the CluPa algorithm for peak alignment. Before developing the classification model, the atypical values were detected, removing from the matrix the samples that recorded high values in their orthogonal and score distances with the ROBPCA algorithm. It is important to note that the clustering model presupposes that all the variable-wise centers equal zero.

2.2 Exercise 1: Obsidian Samples

As mentioned in Section 2.1, the control set consisted of 149 obsidian samples of known origin and p = 862 energy intervals (channels). These obsidian samples were collected from 12 Central Mexico (Figure 2). The number of samples analyzed from each source is specified in Table 2. A full description of the geological setting of the obsidian sources can be found in Reference Argote-Espino, Solé, Sterpone and López GarcíaArgote Espino et al. (2010), Reference Argote-Espino, Solé, López-García and SterponeArgote-Espino et al. (2012), Reference CobeanCobean (2002), and Reference López-García, Argote and BeirnaertLópez-García et al. (2019). These samples served as a controlled experiment for assessing the efficiency of the proposed method. The procedure is described step by step in the supplementary video “Video 1.”

Figure 2 Geographical location of the obsidian deposits. The numbers correspond to the following sources: (1) El Chayal, (2) Ixtepeque, and (3) San Martín Jilotepeque in Guatemala; (4) La Esperanza in Honduras; and (5–6) Otumba volcanic complex, Edo. México; (7) Ahuisculco, Jalisco; (8) El Paredón, Puebla; (9) El Pizarrín-Tulancingo, Hidalgo; (10) Sierra de Pachuca, Hidalgo; (11) Zacualtipán, Hidalgo; (12) Zinapécuaro, Michoacán. Color version available at www.cambridge.org/argote_machine-learning

Table 2 Number of obsidian samples per location.

| Source name | Sample ID | n | |

|---|---|---|---|

| 1 | El Chayal (Guatemala) | 1−17 | 17 |

| 2 | La Esperanza (Honduras) | 18−33 | 16 |

| 3 | Ixtepeque (Guatemala) | 34−50 | 17 |

| 4 | San Martin Jilotepeque (Guatemala) | 51−67 | 17 |

| 5 | Ahuisculco (Jalisco) | 68−76 | 9 |

| 6 | Otumba (Soltepec) | 77−86 | 10 |

| 7 | Otumba (Ixtepec-Pacheco-Malpaís) | 87−110 | 24 |

| 8 | El Paredon (Puebla) | 111−117 | 7 |

| 9 | El Pizarrin-Tulancingo (Hidalgo) | 118−122 | 5 |

| 10 | Sierra de Pachuca (Hidalgo) | 123−132 | 10 |

| 11 | Zacualtipán (Hidalgo) | 133−142 | 10 |

| 12 | Zinapecuaro (Michoacán) | 143−149 | 7 |

| Total: | 149 |

Step-by-step video on how to process spectral data of obsidian samples used in Video 1. Video files available at www.cambridge.org/argote_machine-learning

The reduced obsidian sample spectra were filtered using a combination of the EMSC + Savitzky-Golay filters; the script to perform this task is presented below.

## Script to filter with the EMSC algorithm version 0.9.2 (Reference Liland and IndahlLiland and Indahl, 2020) rm(list = ls()) library(EMSC) #Package EMSC (Performs model-based background correction and # normalisation of the spectra) dat <- read.csv(”Obsidian_source_38_900.csv”, header=T) # To call the spectral data file str(dat) # to see the data structure dat1 <- dat[2:863] # To eliminate the first column related to the sample identifier str(dat1) Obsidian.poly6 <- EMSC(dat1, degree = 6) #Filters the spectra with a 6th order #polynomial str(Obsidian.poly6) write.csv(Obsidian.poly6$corrected, file=”Obsidian_EMSC.csv”) # to save the data file # filtered with the EMSC. The User can choose other file names #To filter the spectra with the SG filter, use the ‘prospectr’ package (Stevens and #Reference Stevens and Ramirez-LopezRamirez–Lopez (2015) library(prospectr)## Miscellaneous functions for processing and sample selection of ## spectroscopic data (Reference Stevens, Ramirez-Lopez and HansStevens et al., 2022) dat2 <- read.csv(”Obsidian_EMSC.csv”, header=T) # Calls the file with EMSC filtered #data str(dat2) sg <- savitzkyGolay(Obsidian.poly6$corrected, p = 3, w = 11, m = 0) write.csv(sg, file=”Obsidian_EMSC_SG.csv”) # The user can choose another file name

Figure 3 shows the result of the filtered spectra compared to the untransformed raw data. Notice that the information was not altered. It is important to note that because we determined a polynomial of the sixth order for the SG filter, the initial matrix with p = 862 was reduced to p = 852, eliminating five channel intervals from each extreme of the data matrix.

Figure 3 Raw data (above) and EMSC + Savitzky‒Golay filtered spectra (below). Color version available at www.cambridge.org/argote_machine-learning

Because the original spectra were not shifted or the intensity peaks were misaligned, it was not necessary to apply the CluPa algorithm. Nevertheless, if anyone finds it necessary, the peaks can be aligned with the following script (published by Reference López-García, Argote and BeirnaertLópez-García et al., 2019):

# Run the whole script at one time devtools::install_github(”Beirnaert/speaq”) # download latest “speaq” package once! library(speaq) # Change file folder your_file_path = “/Users/” # Get the data (spectra in matrix format) matrix3 = read.csv2(file.path(your_file_path, “your_file.csv”), header = F, sep = “,”,colClasses = “numeric”, dec = ”.”) spectra.matrix = as.matrix(matrix3) index.vector = seq(1, ncol(spectra.matrix)) # Plot the spectra

speaq::drawSpec(X = spectra.matrix, main = ‘mexico’, xlab = “index”) # Peak detection

peaks <- speaq::getWaveletPeaks(Y.spec = spectra.matrix, X.ppm = index.vector, nCPU = 1, raw_peakheight = TRUE) # Grouping groups<- PeakGrouper(Y.peaks = peaks) # If the peaks are well formed and the peak detection threshold is set low, the filling step #is not necessary and can be omitted peakfill <- PeakFilling(groups_rawIntensity, spectra.matrix, max.index.shift = 5, window.width = “small”, nCPU = 1) Features <- BuildFeatureMatrix(Y.data = peakfill, var = “peakValue”, impute = “zero”, delete.below.threshold = FALSE, baselineThresh = 1, snrThres = 0)

# Aligning the raw spectra

peakList = list()

for(s in 1:length(unique(peaks$Sample))){

peakList[[s]] = peaks$peakIndex[peaks$Sample == s]

}

resFindRef<- findRef(peakList);

refInd <- resFindRef$refInd; Aligned.spectra <- dohCluster(spectra.matrix, peakList = peakList, refInd = refInd, maxShift = 5, acceptLostPeak = TRUE, verbose=TRUE); drawSpec(Aligned.spectra) write.csv (Aligned.spectra, file =” aligned.csv”)

After preprocessing the spectra, it is important to diagnose the data and detect outliers. For this task, use the following script extracted from the “rrcov” package (Reference TodorovTodorov, 2020):

## Script to diagnose outliers rm(list=ls()) library(rrcov) dat <- read.csv(File_X, header=T) str(dat) pca <- PcaHubert(dat, alpha = 0.90, mcd = FALSE, scale = FALSE) pca print(pca, print.x=TRUE) plot(pca) summary(pca)

The results can be observed in Figure 4. Reference Hubert, Rousseeuw and Vanden BrandenHubert et al. (2005) define this figure as a diagnostic plot based on the ROBPCA algorithm; it allows distinguishing regular observations and different types of outliers under the assumption that the relevant information is stored in the variance of the data (Reference López-García, Argote and ThrunLópez-García et al., 2020; Reference Thrun, Märte and StierThrun et al., 2023). In Figure 4, a group of orthogonal outliers (located at the top left quadrant of the graphic) that correspond to El Pizarrin-Tulancingo and Zinapecuaro source samples can be discriminated. According to this figure, there are ten observations with distances beyond the threshold of X2 that can be considered bad leverage points or outliers; the rest are regular observations. The bad leverage points correspond to the samples from Sierra de Pachuca, which have contrasting higher chemical concentrations of Zr, Zn, and Fe than the rest of the sources (Reference Argote-Espino, Solé, Sterpone and López GarcíaArgote-Espino et al., 2010). Therefore, they cannot be considered properly as outliers, but observations with a different behavior should not be deleted.

Figure 4 Diagnostic plot of the obsidian samples based on the ROBPCA algorithm. Color version available at www.cambridge.org/argote_machine-learning

Once the earlier steps were concluded, we can now classify the samples. In the Bayesian paradigm, the allocation of the samples in a cluster is regarded as a statistical parameter (Reference Partovi Nia and DavisonPartovi Nia and Davison, 2012). In general, it is better to work with the Gaussian distribution and set the default values of the hyperparameters given by the “bclust” algorithm. For this step, use the following script for R:

## bclust algorithm rm(list = ls()) library(bclust) # Reference Partovi Nia and DavisonPartovi Nia and. Davison (2015) datx <- read.csv(File_X, header=T) str(datx) dat2x <- as.matrix(datx) str(dat2x) Obsidian.bclust<-bclust(x=dat2x, transformed.par=c(-1.84,-0.99,1.63,0.08,-0.16,-1.68)) par(mfrow=c(2,1)) plot(as.dendrogram(Obsidian.bclust)) abline(h=Obsidian.bclust$cut) plot(Obsidian.bclust$clust.number,Obsidian.bclust$logposterior, xlab=”Number of clusters”,ylab=”Log posterior”,type=”b”) abline(h=max(Obsidian.bclust$logposterior)) str(Obsidian.bclust) Obsidian.bclust$optim.alloc # optimal partition of the sample Obsidian.bclust$order # produces teeth plot useful for demonstrating a grouping on clustered subjects Obsidian.bclust<-bclust(dat2x, transformed.par=c(-1.84,-0.99,1.63,0.08,-0.16,-1.68)) dptplot(Obsidian.bclust,scale=10,varimp=imp(Obsidian.bclust)$var, horizbar.plot=TRUE,plot.width=5,horizbar.size=0.2,ylab.mar=4) #unreplicated clustering wildtype<-rep(1,55) #initiate a vector wildtype[c(1:3,48:51,40:43)]<-2 #associate 2 to wildtypes dptplot(Obsidian.bclust,scale=10,varimp=imp(Obsidian.bclust)$var, horizbar.plot=TRUE,plot.width=5,horizbar.size=0.2,vertbar=wildtype, vertbar.col=c(”white”,”violet”),ylab.mar=4)

The result is displayed in the dendrogram of Figure 5, as well as in the partition of the sample space of Table 3. As expected, the Bayesian method clustered the obsidian samples into twelve groups (going from bottom to top in the dendrogram), all related to their geological sources: [1] Zacualtipan (ID. 133–142), which, according to the dendrogram, is subdivided into two subsources: [2] Zinapecuaro (ID. 143–149); [3] Ahuisculco (ID. 68–76); [4] El Paredon (ID. 111–117); [5] Otumba-Ixtepec, Pacheco, and Malpais (ID. 87–110); [6] Otumba-Soltepec (ID. 77–86); [7] El Pizarrin (ID.118–122); [8] Sierra de Pachuca (ID. 123–132), [9] El Chayal (ID. 1–17), which is also subdivided into two subsources: [10] La Esperanza, Honduras (ID.18–33); [11] San Martín Jilotepec (ID. 51–67), also subdivided into two subsources; and [12] Ixtepeque (ID. 34–50).

Figure 5 Dendrogram and optimal grouping found by the Gaussian model for the obsidian source data. The dendrogram visualizes the ultrametric portion of the selected distance (Reference MurtaghMurtagh, 2004). The method proposed twelve groups. Color version available at www.cambridge.org/argote_machine-learning

Table 3 Partition of the sample space

The Bayes factor (B10) can be regarded as a measure of the importance that each variable holds in the classification. To determine which oligo-elements are important, the algorithm provides a list of the potentially important variables that contribute to the grouping. The following script is used for this purpose:

# This function plots variable importance using a barplot Obsidian.bclust<-bclust(dat2x, transformed.par=c(-1.84,-0.99,1.63,0.08,-0.16,-1.68), var.select=TRUE) Obsidian.imp<-imp(Obsidian.bclust) #plots the variable importance par(mfrow=c(1,1)) #retrieve graphic defaults mycolor<-Obsidian.imp$var mycolor<-c() mycolor[Obsidian.imp$var>0]<-”black” mycolor[Obsidian.imp$var<=0]<-”white” viplot(var=Obsidian.imp$var,xlab=Obsidian.imp$labels,col=mycolor)

#plots important variables in black viplot(var=Obsidian.imp$var,xlab=Obsidian.imp$labels, sort=TRUE,col=heat.colors(length(Obsidian.imp$var)), xlab.mar=10,ylab.mar=4) mtext(1, text = “Obsidian”, line = 7,cex=1.5)# add X axis label mtext(2, text = “Log Bayes Factor”, line = 3,cex=1.2) # adds Y axis labels #Sorts the importance and uses heat colors; adds some labels to the X and Y axes str(Obsidian.imp) Obsidian.imp$var Obsidian.imp$order

The relevant variables can be separated from the nonrelevant variables by looking for the inflection point in the Gaussian variable selection model, such as the one observed in Figure 6, that is, the point in the distribution curve where the factor value of the variables stabilizes or stays more constant. For this case, the inflection point is presented at Log B10> 1.57E+07; therefore, values of B10 > 1.57E+07 are considered relevant variables. The higher Bayes factors correspond to the positions of Zr, Nb, and Sr peaks (Figure 7), indicating the relative importance of these elements in the classification task. The rest of the elements had negligible and negative Bayes factors (B10 <1.57E+07) and hence were irrelevant.

Figure 6 Log Bayes factor of variables (logB10) for the Gaussian variable selection model of the obsidian data. Color version available at www.cambridge.org/argote_machine-learning

Figure 7 XRF spectrum of an obsidian sample. Color version available at www.cambridge.org/argote_machine-learning

Although discriminating groups of samples with a similar spectral profile is not a simple task, the results obtained with this clustering algorithm leave no doubt of its accuracy. First, the structure of the dendrogram was clear, and atypical values were not observed. Second, it is possible to observe that the samples are not mixed with other groups and that each group remains characterized by its place of origin. Third, the number of groups calculated by the algorithm is correct, corresponding to the number of geological sources introduced. This allows us to conclude that each geological source has its own spectral signature, which is different from those of the other sources. It should be noted that to accurately identify the groups, it is necessary to always refer to the observations that serve as control samples (e.g., known sources).

2.3 Exercise 2: Thin Orange Pottery Samples

Thin orange ware, as its name says, is a light orange ceramic with very thin walls that became one of the main interchange products of the Classic period in Central Mexico. Its distribution over a large expanse of Mesoamerica has been considered to be closely related to the strong cultural dominion of Teotihuacan. Its wide geographical circulation has been documented in many places far from Teotihuacan (Reference Kolb and SandersKolb, 1973; Reference López Luján, Neff, Sugiyama, Carrasco, Jones and SessionsLópez Luján et al., 2000; Reference RattrayRattray, 1979), including Western Mexico, Oaxaca, and the Mayan Highlands (i.e., Kaminaljuyú, Tikal, and Copán). The use of this ware type had a broad extension over time, starting in the Tzacualli phase (ca. 50–150 AD), peaking at the Late Tlamimilolpa and Early Xolalpan phases (350–550 AD), and declining at approximately 700 AD (Reference Kolb and SandersKolb, 1973; Reference MüllerMüller, 1978).

According to Reference RattrayRattray (2001), the suggested chronology of the different ceramic forms of the Thin orange ware is the following. In the Tzacualli–Miccaotli phase (ca. 1–200 AD), some of the common forms are vessels with composite silhouettes, everted rims and rounded bases, vases with straight walls, pedestal base vessels, pots and a few miniatures; simple incised lines and red color decorations are present in some pieces. Some sherds found in archaeological contexts from the Miccaotli phase suggest that hemispherical forms were present. Nevertheless, the hemispherical bowls with ring bases, the most representative form in Thin orange, occur in the Early Tlamililolpan phase (ca. 200–300 AD) and continue until the end of the Metepec phase (ca. 650 AD).

Archaeological and petrographic studies performed in the 1930s (Reference LinnéLinné, 2003) found that the components of Thin orange pastes were homogeneous and of a nonvolcanic origin. Therefore, if Teotihuacan city was settled within a volcanic region, then the production center (or at least the raw material source) should be somewhere else. These findings opened the discussion about why the most distinctive ware of Teotihuacan culture was not produced there. In the 1950s, Cook de Leonard proposed that the natural clay deposits were located south of the state of Puebla based on the material excavated from some tombs in an archaeological site near Ixcaquixtla (Reference Brambila, Puche and NavarreteBrambila, 1988; Reference Cook de LeonardCook de Leonard, 1953).

Rattray and Harbottle performed neutron activation and petrographic analyses on samples classified as fine Thin orange ware and a coarse version of this ware called San Martin orange (or Tlajinga), the last one locally produced in Acatlán de Osorio, south of Puebla state (Reference Rattray, Harbottle and NeffRattray and Harbottle, 1992). In their conclusions, they proposed that the clay deposits and the production centers of Thin orange pottery were in the region of Río Carnero, 8 km south of Tepeji de Rodriguez town, south of Puebla state. Summarizing several former investigations about the compositional pattern of Thin orange ware, the following groups have been established:

1. A main ‘Core’ group, with clay and temper of homogeneous characteristics. Reference Rattray, Harbottle and NeffRattray and Harbottle (1992) and Reference López Luján, Neff, Sugiyama, Carrasco, Jones and SessionsLópez Luján et al. (2000) mentioned that its chemical profile is characterized mainly by high concentrations of Rb, Cs, Th, and K. This group was acknowledged as “Core Thin orange” by Reference AbascalAbascal (1974), “Thin Orange” by Reference Shepard, Kidder, Jenning and ShookShepard (1946), “group Alfa” by Reference Kolb and SandersKolb (1973), and “group A” by Reference Sotomayor and CastilloSotomayor and Castillo (1963).

2. A coarser second group, used for utilitarian purposes (domestic ware), is characterized by having different percentages of the minerals present in the first group. This group corresponds to the “group Beta” (Reference Kolb and SandersKolb, 1973) and the “Coarse Thin orange” group (Reference AbascalAbascal, 1974). Reference Rattray, Harbottle and NeffRattray and Harbottle (1992) assume that this group is formed by local imitations of the original Thin orange ware.

In this exercise, the purpose of this application is to determine possible differences in the manufacturing techniques of the Thin Orange pottery, providing a better understanding of the underlying production processes. The focus is on identifying natural groups with homogeneous chemical compositions within the data, leading to the determination of whether this ceramic type was crafted following a unique recipe (clay and temper) or if there were several ways to produce it. By comparing our results with those obtained by other researchers on the conformation of a single ‘core’ group (Reference AbascalAbascal, 1974; Reference Harbottle, Sayre and AbascalHarbottle et al., 1976; Reference Rattray, Harbottle and NeffRattray and Harbottle, 1992; Reference Shepard, Kidder, Jenning and ShookShepard, 1946), new evidence could be provided that might help refine the current classification of this significant ware.

The procedure is shown step by step in the supplementary video “Video 2”. The archaeological pottery set consisted of 176 ceramic fragments and 9 clay samples (extracted from a natural deposit near the Rio Carnero area). Both sets of materials were analyzed with a portable X-ray fluorescence spectrometer. To conduct the comparative analysis with adequate variability, it was necessary to collect several samples of the same ceramic type from different locations and contexts. Therefore, the pottery samples were provided by different research projects that performed systematic excavations at various Central Mexico archaeological sites (Figure 8; Table 4).

Step-by-step video on how to process spectral data of ceramic samples used in Video 2. Video files available at www.cambridge.org/argote_machine-learning

Figure 8 Geographical location of the archaeological sites from which the Thin orange pottery samples were collected. Color version available at www.cambridge.org/argote_machine-learning

Table 4 Number of Thin orange pottery samples and locations

| Archaeological site | Mexican state | N |

|---|---|---|

| Teteles de Santo Nombre | Puebla | 23 |

| Izote, Mimiahuapan, Mapache, and other sites | Puebla | 22 |

| Huejotzingo | Puebla | 6 |

| Xalasco | Tlaxcala | 53 |

| Teotihuacan | Mexico | 72 |

| Clay source | Puebla | 9 |

| Total: | 185 |

Pottery is a very heterogeneous material; therefore, the ceramic samples were treated differently. Following the recommendations of Reference Hunt and SpeakmanHunt and Speakman (2015), the ceramic samples were prepared as pressed powder pellets. From each pottery sherd, a fragment weighing approximately 2 g was cut. The external surfaces of each fragment were abraded with a tungsten carbide handheld drill, reducing the possibility of contamination from depositional processes. The residual dust was removed with pressurized air, and the fragments were pulverized in an agate mortar. After grinding and homogenizing, the powder was compacted into a 2-cm diameter pellet by a cylindrical steel plunger with a manually operated hydraulic press. No binding agent was added. These pellets provided samples that were more homogeneous and with a uniformly flat analytical surface.

Figure 9 displays an example XRF spectrum of one Thin orange pottery sample. The main elements in the ceramic matrix are iron (Fe), followed by calcium (Ca), potassium (K), silicon (Si), and titanium (Ti); aluminum (Al), manganese (Mn), nickel (Ni), rubidium (Rb), and strontium (Sr) are also present at lower intensities. Sulfur (S), chromium (Cr), copper (Cu), and zinc (Zn) can be considered trace elements.

Figure 9 XRF spectrum of a representative sample of Thin orange pottery. Color version available at www.cambridge.org/argote_machine-learning

In this case, the matrix has many more variables than observations (n ≪ p), with p = 2,048; thus, much of the information contained was irrelevant for clustering. As mentioned at the beginning of this section, it was decided to manually cut some readings as they contained values close to zero or corresponded to undesirable effects, such as light elements below detection limits, the Compton peak, Raleigh scattering and palladium and rhodium peaks (produced by the instrument). The cuts were made at the beginning (from channel 1 to 39) and end (from channel 831 to 2,048) of the spectrum, retaining the elemental information corresponding to the analytes between channels 40 and 830, related to the energy range of 0.78 to 16.21 keV of the detector resolution. In this way, only p = 791 channel intervals were kept. This matrix can be found in the supplementary material as file ‘NaranjaTH_YAcim40_830’. It should be noted that because there were no displacements in the spectra, it was not necessary to use the peak alignment algorithm.

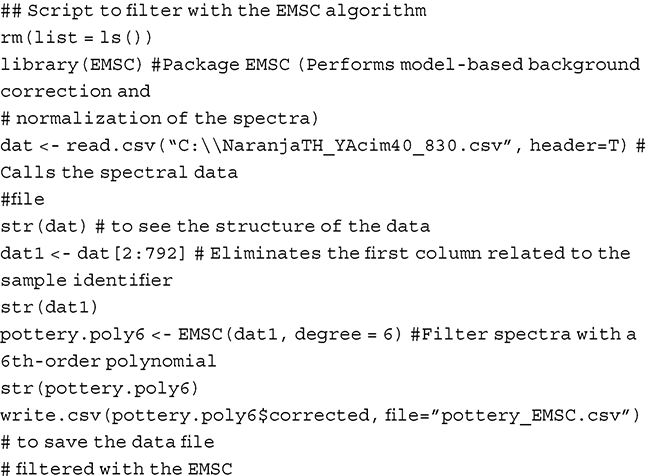

The next step was to filter the spectra as in the previous study case, using only the EMSC, as the spectrum did not contain scattering effects. For this purpose, use the following script:

## Script to filter with the EMSC algorithm rm(list = ls()) library(EMSC) #Package EMSC (Performs model-based background correction and # normalization of the spectra) dat <- read.csv(“C:\\NaranjaTH_YAcim40_830.csv”, header=T) # Calls the spectral data #file str(dat) # to see the structure of the data dat1 <- dat[2:792] # Eliminates the first column related to the sample identifier str(dat1) pottery.poly6 <- EMSC(dat1, degree = 6) #Filter spectra with a 6th-order polynomial str(pottery.poly6) write.csv(pottery.poly6$corrected, file=”pottery_EMSC.csv”) # to save the data file # filtered with the EMSC

Once filtering was performed, the diagnosis of outliers was performed with the following script:

## Script to diagnose outliers (Reference TodorovTodorov, 2020) rm(list=ls()) library(rrcov) dat <- read.csv(File_X, header=T) str(dat) pca <- PcaHubert(dat, alpha = 0.90, mcd = FALSE, scale = FALSE) pca print(pca, print.x=TRUE) plot(pca) summary(pca)

In the outlier detection with the ROBCA algorithm (Figure 10), most of the ceramic sample data vectors have regular patterns with normal punctuation and orthogonal distances. We can distinguish seventeen orthogonal outliers in the upper left quadrant of the graph, one observation with an extreme orthogonal distance (observation no. 126), and a small group of ten bad leverage points in the upper right quadrant. The robust PCA high-breakdown method treats this last group as one set of outliers. An interesting fact about the set of detected bad leverage points is that nine of them correspond to clay deposits (cases no. 177 to 185); only one sample (case no. 115) is related to an archaeological site in Puebla that shows a low amount of manganese content. Therefore, instead of just being measurement errors, outliers can also be seen as data points that have a different origin from regular observations, such as the case of the pure clay samples. According to this, no observations were removed from the analysis.

Figure 10 Outlier map of the Thin Orange pottery dataset computed with ROBPCA based on five principal components. Color version available at www.cambridge.org/argote_machine-learning

For the Bayesian clustering, the model parameters were set the same way as in the obsidian case. The algorithm provides a list of the potentially important variables that contribute to the clustering. In this case, the variable selection extension of the Gaussian model (Figure 11) selected 22 of the 791 initial variables as the most important ones. These twenty-two variables (channels) corresponded to the energy ranges of Fe (6.3 to 6.55 and 7 to 7.11 KeV) and Ca (3.7 to 3.75 KeV) chemical elements. Calcium and iron oxides (such as hematite) are two components that are commonly found in pottery and mudrock composition at variable concentrations depending on the parental material (Reference Callaghan, Pierce, Kovacevich and GlascockCallaghan et al., 2017; Reference Minc, Sherman and ElsonMinc et al., 2016; Reference Ruvalcaba-Sil, Ontalba Salamanca and ManzanillaRuvalcaba et al, 1999; Reference StonerStoner, 2016). These results are different from Reference Rattray, Harbottle and NeffRattray and Harbottle (1992) analysis in which the pottery was mainly determined by high concentrations of Rb, Cs, Th and K. On the other hand, Reference Kolb and SandersKolb (1973) found that Fe and Ti were important elements present in his Alpha and Beta groups.

Figure 11 Log Bayes factor (logB10) for the Gaussian variable selection model of Thin orange data. The Bayes factors are computed for the optimal grouping found by agglomerative clustering using the Gaussian model. Color version available at www.cambridge.org/argote_machine-learning

The resulting dendrogram (Figure 12) grouped the data into two main clusters (I and II) subdivided into six (1 to 6) and two subgroups (7 and 8), respectively. Subgroups 7 and 8 showed chemical differences that distinctively separated them from the rest of the groups. The number of samples (n) assigned to each subgroup were as follows: Group 1 = 23, Group 2 = 51, Group 3 = 16, Group 4 = 36, Group 5 = 13, Group 6 = 12, Group 7 = 18, and Group 8 = 16. Samples from group 1 come mostly from the archaeological site of Xalasco. Samples from Groups 2, 3, 6, 7, and 8 come from Teotihuacan, Xalasco, and several Puebla sites. Group 4 contains samples from some sites in Puebla State and the northeastern sector of Teotihuacan city. Group 5 has samples mainly from Teteles del Santo Nombre and a few from Xalasco and Teotihuacan. Groups 4 and 6 contain the clay samples from the Rio Carnero area. Table 5 summarizes the ceramic shapes included in each group, showing a great variability of forms in each group.

Figure 12 Dendrogram and optimal grouping found by the Gaussian model for the Thin Orange samples. The horizontal bar at the bottom refers to the optimal grouping found by the Gaussian model. Color version available at www.cambridge.org/argote_machine-learning

Table 5 Number of samples (n) and ceramic forms included in each subgroup:

| Group 1 | Group 2 | Group 3 | Group 4 |

|---|---|---|---|

| (n = 23) | (n = 51) | (n = 16) | (n = 36) |

| Hemispherical bowl with ring base (13 sherds) | Hemispherical bowl with ring base (12 sherds) | Bowl with ring base (4 sherds) | Bowl with ring base (11 sherds) |

| Vase with incised exterior decoration (1 sherd) | Hemispherical bowl (6 sherds) | Cylindrical vase (4 sherds) | Hemispherical bowl (3 sherds) |

| Tripod vase with nubbin supports ( 1 sherd) | Vessel with convex wall (4 sherds) | Jar with incised exterior decoration (2 sherds) | Cylindrical vase (4 sherds) |

| Jar with incurved rim and incised simple double-line (1 sherd) | Cylindrical vase (5 sherds) | Tripod vase with nubbin supports ( 1 sherd) | Incense burner (1 sherd) |

| Jar with incised exterior decoration (1 sherd) | Tripod vase with nubbin supports ( 2 sherds) | Undetermined shape (5 sherds) | Basin (1 sherd) |

| Pot (1 sherd) | Vase with small appliqués (1 sherd) | Vessel with pedestal base (1 sherd) | |

| Undetermined shape (5 sherds) | Tripod vessel with deep parallel grooves (1 sherd) | Vessel with incised exterior decoration (1 sherd) | |

| Vessel with pedestal base (1 sherd) | Tzacualli phase Pot (1 sherd) | ||

| Miniature vessel (1 sherd) | Undetermined shape (12 sherds) | ||

| Undetermined shape (18 sherds) | Natural clay deposit (1 sample) | ||

| Group 5 | Group 6 | Group 7 | Group 8 |

| (n = 13 ) | (n = 12 ) | (n = 18 ) | (n = 16 ) |

| Bowl with ring base (4 sherds) | Hemispherical bowl with ring base (3 sherds) | Hemispherical bowl with ring base (7 sherds) | Hemispherical bowl with ring base (5 sherds) |

| Vessel with pedestal base (1 sherd) | Jar with incised exterior decoration (1 sherd) | Hemispherical vessel (2 sherds) | Annular-based hemispherical bowls and incised exterior decoration (1 sherd) |

| Vessel with incised exterior decoration (1 sherd) | Natural clay deposit (8 samples) | Vessel with recurved composite wall (1 sherd) | Tripod vessel with everted rim (1 sherd) |

| Cylindrical vase (3 sherds) | Vessel with exterior punctate decoration (1 sherd) | Vessel with red pigment and incised exterior decoration (1 sherd) | |

| Vessel with convex wall and flat-convex base (1 sherd) | Pot (1 sherd) | Jar with incised exterior decoration (1 sherd) | |

| Undetermined shape (3 sherds) | Undetermined shape (6 sherds) |

The results obtained in this spectral analysis revealed the existence of two large groups subdivided into several subgroups that exhibit a certain degree of chemical differentiation, indicating that different raw materials were used to produce the Thin Orange ware. Pottery is produced by mixing clays and aplastic particles or temper, with the clay predominating over the temper. In this case, the clay deposit samples were classified inside the main group I (in subgroups 1 and 6); thus, it can be assumed that, for manufacturing the pieces related to this main group, clay banks from the same geological region were used. The Rio Carnero region is shaped by a set of deep barrancas (canyons) predominantly from the Acatlán Complex, the geological vestige of a Paleozoic ocean formed between the Cambro-Ordovician and late Permian periods (Reference Nance, Mille, Keppie, Murphy and DostalNance et al., 2006). This region contains banks of schists rich in hematite located in the ravines of Barranca Tecomaxuchitl and Rio Axamilpa. On the other hand, the chemical differences of main group II (with only 34 samples) indicate the extraction of clay from a different region.

According to the results, an interpretation can be as follows. The division of the samples into two main groups seems to be associated with two different clay deposit regions from which the raw material was exploited. The internal differences in their chemical composition, probably related to differences in temper, influenced the clustering algorithm to classify them into separate subgroups. This could mean that the aplastic particles used in the mixture for manufacturing the ceramic pieces did not naturally occur in the clay and were added by the artisans. The last observation is consistent with Reference Kolb and SandersKolb (1973), who stated that the temper was deliberately added and is not found in situ in the natural clay deposit.

The considerable variety of patterns presented by each of the eight subgroups suggests that the recipe for manufacturing the pieces was not used uniformly and that multiple ceramic production centers existed, employing their own and specific production recipes. In other words, each center would have produced its version of the Thin orange pottery with a standardized composition, and this was different to some extent from the ceramic made in other workshop centers. The results also support the idea that there was compositional continuity through time, despite the different shapes of the analyzed pieces.

3 Processing Compositional Data

3.1 Applications and Case Studies

In this section, data from published case studies were used to illustrate the techniques for processing compositional data. In summary, the steps for handling all datasets are as follows. First, the data are rescaled in such a way that the sum of the elemental concentrations row is equal to 100 percent. Afterwards, the data are transformed to log-ratios using the ilr transformation, translating the geometry of the Simplex into a real multivariate space. Once the ilr coordinates are obtained, data are standardized using a robust min/max-standardization. Any observation with a value equal to zero is imputed. As a diagnosis method, an MCD estimator is applied to identify the presence of outliers in the data. Then, model-based clustering is employed for the classification and visualization of the data. Finally, an SBP is calculated and graphically represented using the CoDa-dendogram for understanding differences in the composition of the clusters.

3.2 Exercise 1: Unsupervised Classification of Central Mexico Obsidian Sources

In this case study, the compositional values of obsidian samples collected from different natural sources (Table 6) located in Central Mexico, published by Reference López-García, Argote and BeirnaertLopez-Garcia et al. (2019), and Guatemala, retrieved from Reference CarrCarr (2015), were processed. The procedure is described step by step in the supplementary video “Video 3”. The intention of this analysis is to demonstrate the performance of the model-based clustering and an unbiased visualization system in a controlled environment. The dataset was ideal for the analysis because the correct number of clusters and the cluster to which each of the observations belonged were known.

Table 6 Samples collected from Mesoamerican obsidian sources.Footnote a

| Source name | Geographic region | Sample ID no. | N | ||

|---|---|---|---|---|---|

| Ahuisculco | Jalisco | 1−9 | 9 | ||

| El Chayal | Guatemala | 10−25 | 17 | ||

| San Martin Jilotepeque | Guatemala | 26−39 | 14 | ||

| Ixtepeque | Guatemala | 40−55 | 16 | ||

| Otumba (Soltepec) | Edo. de México | 56−65 | 10 | ||

| Otumba (Ixtepec-Malpais) | Edo. de México | 66−88 | 23 | ||

| Oyameles | Puebla | 89−95 | 7 | ||

| Paredon | Puebla | 96−102 | 7 | ||

| Tulancingo-El Pizarrin | Hidalgo | 103−107 | 5 | ||

| Sierra de Pachuca | Hidalgo | 108−117 | 10 | ||

| Zacualtipan | Hidalgo | 118−127 | 10 | ||

| Zinapécuaro | Michoacán | 128−134 | 8 |

a The compositional values of samples from El Chayal, San Martin Jilotepeque, and Ixtepeque were retrieved from Reference CarrCarr (2015).

Step-by-step video on how to process compositional data of the obsidian samples used in Video 3. Video files available at www.cambridge.org/argote_machine-learning

The dataset consisted of n = 136 samples with p = 10 variables containing the elemental composition of the samples (Mn, Fe, Zn, Ga, Th, Rb, Sr, Y, Zr, and Nb), obtained with a portable X-ray fluorescence (pXRF) instrument. This matrix is available in the supplementary material as file “Mayas_sources_onc.csv”. In this example, we considered the problem of determining the structure of the data without prior knowledge of the group membership. The estimation of the parameters was performed with the maximum likelihood, and the best model was selected using the ICL criterion, resulting in K = 12 components. Compositional data are constrained data and therefore must be translated into the appropriate geometric space. To convert the data to completely compositional data, the following code from the “compositions” package in R is used (Reference van den Boogaart, Tolosana-Delgado and Brenvan den Boogaart et al., 2023):

rm(list=ls()) # Delete all objects in R session ## log-ratio analysis # load quantitative dataset. The name of the file for this example is Sources.csv. You can #change it for the location and name of your own data file. data <- read.csv(”C:\\obsidian\\Mayas_sources_onc.csv”, header=T) str(data) # displays the internal structure of the file, including the format of each column dat2 <- data[2:11] # delete data identification column str(dat2) # transformation of the data to the ilr log-ratio library(compositions) ## Reference van den Boogaart, Tolosana-Delgado and Brenvan den Boogaart, Tolosana-Delgado and Bren (2023) xxat1 <- acomp(dat2) # the function “acomp” representing one closed composition. # With this command, the dataset is now closed. xxat2 <- ilr(xxat1) # isometric log-ratio transformation of the data str(xxat2) write.csv(xxat2, file=”ilt-transformation.csv”) #You can choose a personalized file name

In the isometric transformation output file, the geometric space is D – 1. Once the data have been taken to the Simplex geometry, it is important to normalize them robustly so that there are no variables with greater weight. This is achieved by loading the clusterSim package in R with the normalization option = na3 using the code presented below:

# Robust normalization # In our case, the file “xxat2” that contains the isometric transformation of the #compositional data was normalized with the robust equation presented in the #transformations section. library(clusterSim) ## Reference Walesiak and DudekWalesiak and Dudek (2020) z11<- data.Normalization(xxat2,type=”n3a”,normalization=”column”,na.rm=FALSE) # This corresponds to the robust normalization described in ‘Compositional and #Completely compositional data’ Section of Volume I. # n3a positional unitization ((x-median)/min(x)- max(x)) z12 <- data.frame(z11) # After the previous operations, it is necessary to convert the #data output to a data frame to tell the program that the observations are in the #rows and columns represent the attributes (variables) str(z12)

After transforming the data through robust normalization, it is convenient to verify that there are no values equal to 0. In this example, no zero values were present; thus, no imputation was needed. In the case that your data have values equal to zero, it is recommended to employ an imputation algorithm such as Amelia II:

# Imputation of data # Loads the user interface to perform the imputation of values Library (Amelis) AmeliaView()

Afterwards, to identify the presence of outliers in the data, a diagnosis is performed through the MCD estimator of the rrcov package (Reference Todorov and FilzmoserTodorov and Filzmoser, 2009):

# Parameters of the model # kmax maximal number of principal components to compute. The default is kmax=10. # Default k= 0; if we do not provide an explicit number of components, the algorithm # chooses the optimal number. alpha: 0.7500; this parameter measures the fraction # of outliers the algorithm should resist (default). # The matrix dimension in this example is n = 136 and p = 10 library(rrcov) ## Reference TodorovTodorov (2020) MCD_1D <- data.matrix(z12[, 1:9]) cv <- CovClassic(MCD_1D) plot(cv) rcv <- CovMest(MCD_1D) plot(rcv) summary(MCD_1D)

Figure 13 shows the distance–distance plot, which displays the robust distances versus the classical Mahalanobis distances (Reference Rousseeuw and van ZomerenRousseeuw and van Zomeren, 1990), allowing us to classify the observations and to identify the potential outliers. Note that the choice of appropriate distance metric is essential (Reference ThrunThrun, 2021a). The dotted line represents points for which the classical and robust distances are equal. Vertical and horizontal lines are plotted in values x = y =

![]() ; points beyond this threshold are considered outliers. While the robust estimation detects many observations whose robust distance is above the threshold, the Mahalanobis Distance classifies all points as regular observations. Observations with large robust distances are not candidates for outliers because they do not have an impact on the estimates. The only two observations that exceed the values of X2 are those with ID numbers 9 and 136, which are convenient to eliminate from the estimate and leave only n = 134 samples in the dataset.

; points beyond this threshold are considered outliers. While the robust estimation detects many observations whose robust distance is above the threshold, the Mahalanobis Distance classifies all points as regular observations. Observations with large robust distances are not candidates for outliers because they do not have an impact on the estimates. The only two observations that exceed the values of X2 are those with ID numbers 9 and 136, which are convenient to eliminate from the estimate and leave only n = 134 samples in the dataset.

Figure 13 Distance–distance plot of the samples from obsidian sources. Color version available at www.cambridge.org/argote_machine-learning

Once the preprocessing of the data has concluded, the mixed model of multivariate Gaussian components is adjusted for clustering purposes using the Rmixmod and Clusvis packages:

# Classification with Mixture Modeling: Clustering in Gaussian case # To fit the mixture models to the data and for the classification, two additionaL #programs must be loaded. rm(list=ls()) library(Rmixmod) ## Reference Lebret, Lovleff and LangrognetLebret et al., 2015) library(ClusVis) ## (Reference Biernacki, Marbac and VandewalleBiernacki et al., 2021) fammodel <- mixmodGaussianModel(family=”general”,equal. proportions=FALSE) Mod1<-mixmodCluster(data,12, strategy = mixmodStrategy(algo = “EM”, nbTryInInit = 50, nbTry=25)) #EM (Expectation Maximization) algorithm # nbTryInInit: integer defining the number of tries in the initMethod algorithm. # nbTry: integer defining the number of tries summary(Mod1) Mod1[”partition”] # partition output made by mixing model ## Gaussian-Based Visualization of Gaussian and Non-Gaussian Model-Based Clustering library(ClusVis) ## (Reference Biernacki, Marbac and VandewalleBiernacki et al., 2021) resvisu <- clusvisMixmod(Mod1) # Gaussian-Based Visualization of Gaussian Model-Based #Clustering plotDensityClusVisu(resvisu) # probabilities of classification are generated by using the model #parameters.

In this script, the command mixmodGaussianModel is an object defining the list of models to run. The function mixmodCluster computes an optimal mixture model according to the criteria supplied and the list of models defined in [Model] using the algorithm specified in the command ‘strategy’ [strategy = mixmodStrategy (algo = “CEM”, nbTryInInit = 50, nbTry=25)] (Reference Lebret, Lovleff and LangrognetLebret et al., 2015). The estimation of the mixture parameters can be carried out with a maximum likelihood using the EM algorithm (Expectation Maximization), the SEM (Stochastic EM), or by maximum likelihood classification using the CEM algorithm (Clustering EM). In this example, we use the EM algorithm. With the general family command, it is possible to give more flexibility to the model that best fits the data by allowing the volumes, shapes, and orientations of the groups to vary (Reference Lebret, Lovleff and LangrognetLebret et al., 2015).

Because our groups had different proportions (each group had a different sample size), the FALSE command was established as Z12 in the data frame. As an output of this estimation step, the program provides a partition and other parameters, including the proportions of the mixed model in each group, their averages, variances and likelihood, and the associated source of each group obtained for this example. Table 7 shows the output of the algorithm; in this table, it can be observed that although the analysis was carried out without labeling the observations, they were correctly assigned to their corresponding source group, except for a single observation of the Otumba (Ixtepec-Mailpais) subsource that was assigned to Otumba (Soltepec).

Table 7 Assignation of the mixing proportions with Rmixmod (z-partition of the obsidian sources).

| Cluster | Proportion | Group assigned | n | Source |

|---|---|---|---|---|

| 1 | 0.1269 | 6,6,6,6,6,6,6,6 | 8 | Ahuisculco |

| 2 | 0.0746 | 1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1 | 17 | El Chayal |

| 3 | 0.0522 | 4,4,4,4,4,4,4,4,4,4,4,4,4,4 | 14 | San Martin Jilotepeque |

| 4 | 0.1045 | 12,12,12,12,12,12,12,12,12,12,12,12, 12,12,12,12 | 16 | Ixtepeque |

| 5 | 0.0522 | 8,8,8,8,8,8,8,8,8,8,8 | 11 | Otumba (Soltepec) |

| 6 | 0.0597 | 7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7,7 | 22 | Otumba (Ixtepec-Malpais) |

| 7 | 0.1642 | 5,5,5,5,5,5,5 | 7 | Oyameles |

| 8 | 0.0821 | 11,11,11,11,11,11,11 | 7 | Paredon |

| 9 | 0.0746 | 10,10,10,10,10 | 5 | Tulancingo-El Pizarrin |

| 10 | 0.0373 | 2,2,2,2,2,2,2,2,2,2 | 10 | Sierra de Pachuca |

| 11 | 0.0522 | 9,9,9,9,9,9,9,9,9,9 | 10 | Zacualtipan |

| 12 | 0.1194 | 3,3,3,3,3,3,3 | 7 | Zinapécuaro |

In this case, the model that best fitted the data turned out to be “Gaussian_pk_Lk_C” with the following cluster properties: Volume = Free, Shape = Equal, and Orientation = Equal. It is also possible to analyze the clustering results graphically. It is important to note that the graphics produced by the “ClusVis” package may vary in the output. The authors state that, for some specific reproducibility purposes, the Rmixmod package allows the random seed to be exactly controlled by providing the optional seed argument (“set.seed: number”). However, despite having performed multiple tests with different seeds, the resulting graphics tend to vary. Figure 14 displays the bivariate spherical Gaussian visualization associated with the confidence areas; the size of the gray areas around the centers reflects the size of the components. The accuracy of this representation is given by the difference between entropies and the percentage of inertia of the axes.

Figure 14 Component interpretation graph of the obsidian sources. Color version available at www.cambridge.org/argote_machine-learning

In the graph, it can be observed that the mapping of f is accurate because the difference between entropies is zero:

![]() . The first dimension provided by the LDA mapping was the most discriminative, with 55.7 percent of the discriminant power; the sum of the inertia of the first two axes was 55.7 + 23.22 = 78.92 percent of the discriminant power; thus, most of the discriminant information was present on this two-dimensional mapping. Components 12, 7, 4, and 2 contain most of the observations. The components that show the greatest difference in their chemical composition are the samples from Components 1 and 6 (El Chayal and Ahuisculco, respectively) and Components 2 and 10 (Pachuca and Pizarrin) in the other extreme. Components 5 and 11 (Oyameles and El Paredon) are the ones that are closest to each other (regarding their mean vectors) and slightly join without meaning that the observations are mixed, as seen in the partition results of Table 7. An important observation about these results is that there are no overlaps between any of the sources used in the classification, hence fulfilling all the conditions of a good classification.

. The first dimension provided by the LDA mapping was the most discriminative, with 55.7 percent of the discriminant power; the sum of the inertia of the first two axes was 55.7 + 23.22 = 78.92 percent of the discriminant power; thus, most of the discriminant information was present on this two-dimensional mapping. Components 12, 7, 4, and 2 contain most of the observations. The components that show the greatest difference in their chemical composition are the samples from Components 1 and 6 (El Chayal and Ahuisculco, respectively) and Components 2 and 10 (Pachuca and Pizarrin) in the other extreme. Components 5 and 11 (Oyameles and El Paredon) are the ones that are closest to each other (regarding their mean vectors) and slightly join without meaning that the observations are mixed, as seen in the partition results of Table 7. An important observation about these results is that there are no overlaps between any of the sources used in the classification, hence fulfilling all the conditions of a good classification.

3.3 Exercise 2: Obsidian Sources in Guatemala

Reference CarrCarr (2015) performed a study to identify obsidian sources and subsources in the Guatemala Valley and the surrounding region. In his project, he analyzed a total of 215 samples from El Chayal, San Martin Jilotepeque, and Ixtepeque geological deposits with pXRF spectrometry. Of these samples, n = 159 were collected from 36 different localities in El Chayal, n = 34 were gathered from eight sampling localities in San Martin Jilotepeque, and n = 22 were collected from four sampling locations in Ixtepeque. The data matrix for this example can be found in the supplementary file “Obs_maya.csv”. To discriminate between these three main obsidian sources, Reference CarrCarr (2015) used bivariate graphs, plotting the concentrations of Rb versus Fe (see the graphic reproduced from his data in Figure 15). In the figure, it is possible to discriminate three different groups, but it is difficult to distinguish between different subsources. In addition, there is a great dispersion of the points from the El Chayal and Ixtepeque sources.

Figure 15 Bivariate plot using Rb (ppm) and Fe (ppm) concentrations of El Chayal, San Martin Jilotepeque, and Ixtepeque obsidian source systems (graphic reproduced with data from Reference CarrCarr, 2015). Color version available at www.cambridge.org/argote_machine-learning

Reference CarrCarr (2015) also intended to examine the chemical variability of the samples to discriminate subsources within each of the main sources. For this purpose, the author analyzed the samples from each of the regions separately. For the El Chayal region, he used Rb versus Zr components in a bivariate display that resulted in five different subsources. Using this procedure, the author determined the existence of two distinct geochemical groups for the San Martin Jilotepeque obsidian source and two for Ixtepeque. To support the bivariate classification, Carr calculated the Mahalanobis distances (MD) to obtain the group membership probabilities of the observations, making use of the following set of variables: Sr/Zr, Rb/Zr, Y/Zr, Fe/Mn, Mn, Fe, Zn, Ga, Rb, Sr, Y, Zr, Nb, and Th. It should be noted that this was not possible for El Chayal Subgroups 4 and 5 because their sample size was too small, preventing the calculation of their probabilities.

From his study, several observations can be made. First, the data were processed without any transformation. Therefore, it is advisable to open the data to remove the constant sum constraint. Second, the author managed to establish a total of nine obsidian subsources using bivariate graphs for each of the regions separately. Third, the calculation of probabilities to determine group memberships using the MD was not possible in all cases due to the restrictions imposed by the sample size. Furthermore, if Reference CarrCarr (2015) data were processed according to one of the commonly established methodologies, that is, transforming the data to log10 and applying a PCA, the explained variance of the first two components would have been only 56.53 percent, and six PCs would have been needed to explain 95.58 percent of the variance.

By plotting all the data of the nine subsources together using a PCA (Figure 16), including the information about the origin of the samples, it can be observed that the overlap between the different groups is unavoidable. In this way, it can be concluded that this methodology is not able to differentiate the chemical characteristics of the samples. Therefore, the discrimination of sources using concentrations is a procedure that requires nonconventional methods. According to this, we can assume that many of the published classifications that follow preestablished methods have incurred serious classification errors.

Figure 16 Plot of the two principal components of the samples from nine subsources in Guatemala valley. Color version available at www.cambridge.org/argote_machine-learning