1 Introduction

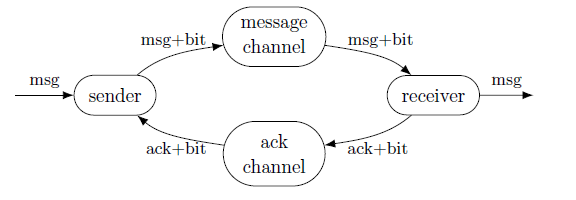

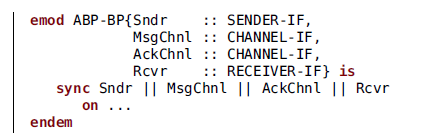

Rewriting logic (Meseguer Reference Meseguer1992) is a well established, logic-based formalism, useful, in particular, for the specification of concurrent and nondeterministic systems. There are ways, in this context, in which modularity can be achieved. The language Maude (Clavel et al. Reference Clavel, Durán, Eker, Escobar, Lincoln, Martí-Oliet, Meseguer, Rubio and Talcott2022), for example, strongly based on rewriting logic, includes a powerful system of modules which promotes a good organization of the code. Besides, multicomponent or distributed systems are sometimes modeled as a multiset of objects and messages. However, a truly compositional specification was not possible. By that, we mean one in which each component is an independent rewrite system and composition is specified separately, allowing, for example, reusability of components. In previous work (Martín et al. Reference Martní, Verdejo and Martí-Oliet2020), we proposed an operation of parallel composition of rewrite systems to achieve precisely that. In the present paper, we show how a compositional specification written according to our proposal can be the object of compositional verification. Note that, in this work we often use rewrite system as a shorthand for system specified using rewriting logic.

The reasons for the convenience of a compositional approach to verification are well known: to avoid the state-explosion problem; because some systems are inherently compounds and it makes all sense to specify and verify them as such; because verified systems can be safely reused as library components.

There are two alternative views on the meaning of compositional specification, which lead to different needs for compositional verification. In one, a composed specification is seen as modeling a distributed system, of which probably only one component is under our control, and the aim of verification is to ensure that our component behaves appropriately in an appropriate environment. Global states are out of the question, and the behavior we focus on is that of our component. The assume/guarantee technique (see Section 9) is designed to be helpful here.

In the other view, in contrast, the whole system is under our control, but working compositionally still makes sense for modular engineering. Then, the aim of compositional verification is to prove that each component behaves appropriately, not in a general, unknown environment, but in the particular one given by the rest of the components that we have also specified. The abstraction technique (see Section 8.2) helps here: given a component and its environment, we can abstract either or both, and perform verification on the abstracted, simplified component and/or environment. Assume/guarantee may also help. For example, we use both techniques in the mutual exclusion example introduced in Section 2.2.

We consider special kinds of atomic and composed rewrite systems which we call egalitarian and were introduced in our previous work (Martín et al. Reference Martní, Verdejo and Martí-Oliet2020). They are egalitarian in the sense that they give the same status to transitions and states. A composed egalitarian system is a set of independent but interacting atomic ones. We see them as modeling a distributed system. An egalitarian rewrite system, atomic or composed, can be translated into a standard rewrite system (called plain in this work) by the operation that we call the split. This allows to specify a system componentwise, translate the compound into a single plain system and, then, execute and verify the result monolithically using existing tools (the ones in Maude’s toolset, for example). The relation between this monolithic verification and the compositional one using assume/guarantee is our Theorem 5.

We are interested in rewriting logic and, in this paper, in verifying systems specified using that logic. The underlying expectation is that a firm logical basis makes it easier to define, study, and implement modularity and composition. However, satisfaction of temporal formulas is defined on the transition structures which represent the semantics of the logical specifications. Thus, transition structures play a fundamental role in this paper, even if only as proxies for the main characters.

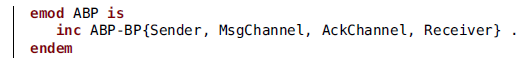

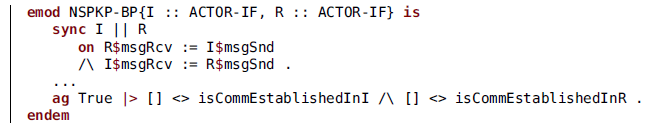

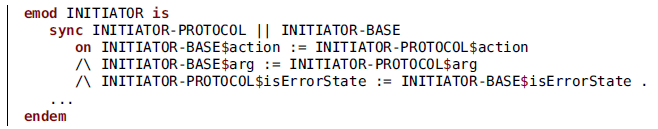

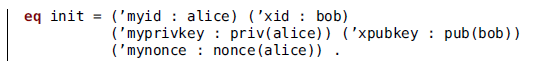

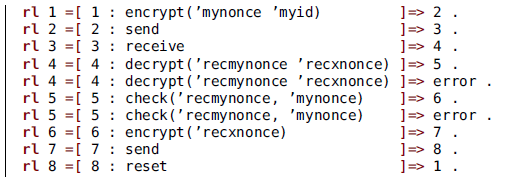

This is the plan of the paper. In Section 2 we show and explain the compositional specification of three simple but illustrative examples. They are revisited later in the paper, but here they are meant as an informal introduction to our previous work on composition. Section 3 contains a quick and mainly formal overview of our previous work. In Section 4, we study execution paths, needed to define satisfaction of formulas. We consider paths in atomic components, sets of compatible paths from different components, and global paths, representing, respectively, local, distributed, and global behaviors. The contrast and equivalence between a local view and a global one is a constant throughout the paper. In Section 5, we describe the variant of the temporal logic LTL against which we verify our systems. In Section 6, we define basic satisfaction of temporal formulas based on paths, and show the relation between the distributed and the global views of satisfaction. In Section 7, we discuss the concepts of fairness and deadlocks, and their importance for compositional verification. In Section 8, we consider the componentwise use of simulation and abstraction: simulating or abstracting a component induces the same on the whole system, with a potentially reduced effort. In addition, the abstracted system may be easier to verify. In Section 9, we consider the assume/guarantee technique, which allows the verification of isolated individual components, ensuring thus that the result holds for whatever appropriate environment the component is placed in, and we show how it can be adapted to our setting. In Section 10, we briefly present two additional examples of compositional specification and verification (fully discussed in (Martín 2021a)) which are more complex and realistic than the toy ones used throughout this paper. Finally, Section 11 discusses related and future work and contains some closing remarks.

These are the points we think may be of special interest in this paper:

-

We show how to work compositionally in rewriting logic, expanding and strengthening our previous work.

-

We keep in parallel, all throughout the paper, the distributed and the global (monolithic) views of satisfaction and related concepts, and show the equivalence of both views. We claim that keeping both views is worth the effort, both for our intuition and in practice.

-

We show how simulation and abstraction can be performed compositionally.

-

We show that the assume/guarantee technique can be transposed to our setting.

-

Our definition of assume/guarantee satisfaction (inductive, but not relying on the next temporal operator) is new, to the best of our knowledge.

-

We give path-based definitions of deadlock and fairness, discuss how they impact the verification tasks, and show how to deal with them in our setting.

2 Examples

We introduce here three examples of compositional specification. They are meant as a quick introduction to Maude and to our previous work (Martín et al. Reference Martní, Verdejo and Martí-Oliet2020), especially to compositional specification with the extended syntax we proposed. The formal definitions and results are in Section 3. Also, these examples set the base on which we later illustrate the techniques for compositional verification and simulation. They have been chosen to be illustrative, so they are quite simple. We are using Maude because of its availability, its toolset, and its efficient implementation. All the concepts and examples, however, are valid for rewriting logic in general.

The first example presents three buffers assembled in line. The second shows how to exert an external mutual exclusion control on two systems, provided they inform on when they are visiting their critical sections. Later, this gives us the opportunity of using componentwise simulation and a very simple case of assume/guarantee. The third example concerns the well-known puzzle of a farmer and three belongings crossing a river. We compose a system implementing the mere rules of the puzzle with several other components implementing, in particular, two guidelines which prove to be enough to reach a solution. The assume/guarantee technique is later used on this system.

The complete specification for all the examples in this paper is available online (Martín 2021b). Our prototype implementation, able to deal with these examples, is also available there, though the reader is warned that, in its current state, it is not a polished tool but, rather, a proof of concept.

2.1 Chained buffers

We model a chain of three buffers. We describe the system top-down. This is the specification of the composed system:

The ![]() sentence is not standard Maude, but part of our extension. That sentence expects three Maude modules to exist, called BUFFER1, BUFFER2, and BUFFER3, each defining the values of the so-called properties mentioned in the

sentence is not standard Maude, but part of our extension. That sentence expects three Maude modules to exist, called BUFFER1, BUFFER2, and BUFFER3, each defining the values of the so-called properties mentioned in the ![]() part of the sentence: isSending and isReceiving. We use the

part of the sentence: isSending and isReceiving. We use the ![]() sign to access a property defined in a Maude module. In words, that models a composed system in which the three buffers synchronize so that when one sends the next receives. The properties are assumed to be Boolean in this example, modeling the passing of tokens. Synchronizing on more complex values is also possible, as shown in other examples.

sign to access a property defined in a Maude module. In words, that models a composed system in which the three buffers synchronize so that when one sends the next receives. The properties are assumed to be Boolean in this example, modeling the passing of tokens. Synchronizing on more complex values is also possible, as shown in other examples.

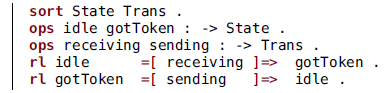

We call the result of the composition above 3BUFFERS. For this to be a complete model, we need to provide the specification of the internal workings of the three buffers, including the definition of the properties. There is no reason for the three buffers to be specified exactly the same. In principle, they even could be coded in different languages, as long as there is a way to access the values of the properties defined inside each of them. For the sake of simplicity, in this example the three modules are identical. This is the very simple code for each of them:

There are two states, represented by the State constants idle and gotToken, and two transitions between them, represented by the Trans constants receiving and sending. The keyword ![]() introduces the declaration of operators with their arities. The singular

introduces the declaration of operators with their arities. The singular ![]() can be used when only one operator is being declared. In this code, we are declaring constants, so the argument sorts are absent. The keyword

can be used when only one operator is being declared. In this code, we are declaring constants, so the argument sorts are absent. The keyword ![]() introduces each rewrite rule and the symbols

introduces each rewrite rule and the symbols ![]() separate the terms. We assume throughout the paper that the sort representing the states of the system is called State and the one representing transitions is called Trans. Also, it is convenient to have a supersort of both (not shown above), which we call Stage. Usually, we omit declarations of sorts and operators when they are clear from context.

separate the terms. We assume throughout the paper that the sort representing the states of the system is called State and the one representing transitions is called Trans. Also, it is convenient to have a supersort of both (not shown above), which we call Stage. Usually, we omit declarations of sorts and operators when they are clear from context.

Readers knowledgeable of rewriting logic and Maude would expect the rules above to be written instead as:

Here, receiving and sending are rule labels. The syntax we use does not only consists of moving the label to the middle of the rule. In our case, receiving and sending are not labels, but algebraic terms of sort Trans, in the same way that idle and gotToken are terms of sort State. In general, both States and Transs can be terms of any algebraic complexity. Other examples below make this clearer.

We call these rules egalitarian, because transitions are represented by terms, the same as states. The rewrite systems which include them are also called egalitarian. More precisely, each buffer is an atomic egalitarian rewrite system. The result of their composition is still called egalitarian, but not atomic.

As illustrated above, the way we have chosen to specify composition of systems is by equality of properties. These are functions which take values at each state and transition of each component system. The properties of a system provide a layer of isolation between the internals of each component and the specification of the composition. This is similar to the concept of ports in other settings. It is important that properties are defined not only on states, but also on transitions, because synchronization is more often than not specified on them. That is why we have developed egalitarian systems in which transitions are promoted to first-class citizenship.

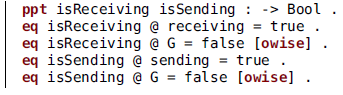

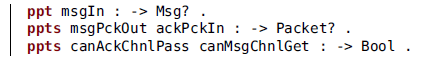

We declare and define two properties in each buffer:

The sentence introduced by the keyword ![]() is part of our extended syntax, as is the symbol @ representing the evaluation of a property on a state or transition. Thus, these lines declare two Boolean properties and define by means of equations (introduced by the keyword

is part of our extended syntax, as is the symbol @ representing the evaluation of a property on a state or transition. Thus, these lines declare two Boolean properties and define by means of equations (introduced by the keyword ![]() ) their values at each state and transition. The fact that receiving and sending are algebraic terms allows their use in equations.

) their values at each state and transition. The fact that receiving and sending are algebraic terms allows their use in equations.

The attribute ![]() (short for otherwise) in two of the equations is an extralogical feature of Maude: that equation is used whenever the term being reduced matches the left-hand side and the case is not dealt with by other equations. The variable G, whose declaration is not shown, has sort Stage, so that all properties evaluate to false except in the two cases explicitly set to true.

(short for otherwise) in two of the equations is an extralogical feature of Maude: that equation is used whenever the term being reduced matches the left-hand side and the case is not dealt with by other equations. The variable G, whose declaration is not shown, has sort Stage, so that all properties evaluate to false except in the two cases explicitly set to true.

Any property defined in a component can be used as well as a property for the resulting composed system. In this case, the properties isReceiving in BUFFER1 and isSending in BUFFER3 are defined but not used for synchronization. Those properties can be useful if the composed module 3BUFFERS is used in turn as a component to be synchronized with other modules.

It is a common case that a property is defined to be true exactly at one state or transition and false everywhere else, as above. This calls for some syntactic shortcut to help the user. We do not discuss in this paper how to implement such shortcuts (of which this is not at all the only possible one), and our prototype implementation does not include them.

The execution of the composed system 3BUFFERS consists in the independent execution of each of its three components, restricted by the need to keep the equality between properties. To that composed system, the operation we call the split can be applied to obtain an equivalent standard rewrite system. The resulting split system has as states triples like < idle, gotToken, idle >, formed from the states of the components, and has rewrite rules like

The split is named after this translation of each rule into two halves. The term split is also used later to describe related translations, though in some of those cases there is nothing split in the literal sense. The split is formally defined in Section 3.3. We usually do not care to show the internal appearance of a split system, but are only interested in the fact that it represents in a single system the global behavior of the composition.

2.2 Mutual exclusion

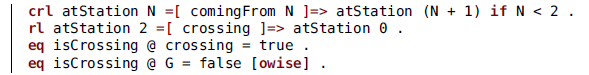

Consider a very simple model of a train, which goes round a closed railway in which there are three stations and a crossing with another railway. We use the three stations as the states of our model, and there are three transitions for moving between them. Using our extended syntax, we model it with the rule:

The keyword ![]() introduces a conditional rewrite rule. We omit the needed declarations for the integer variable N and the constructors atStation and comingFrom.

introduces a conditional rewrite rule. We omit the needed declarations for the integer variable N and the constructors atStation and comingFrom.

The stations are numbered 0–2. But the transit from station 2 to 0 is different, because it passes through the crossing:

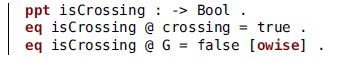

Indeed, we have two trains, modeled in this example by the same specification, but as two separate components. They share the crossing, so we need safety in the access to it. To this aim, we define for each train a Boolean property isCrossing to be true at the transition crossing and false everywhere else:

We call the two systems thus defined TRAIN1 and TRAIN2.

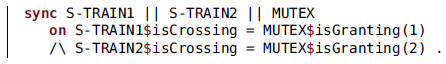

The mutex controller for safe access to the crossing is specified by these two rules:

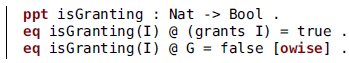

We call this system MUTEX and define in it the parametric Boolean property isGranting, which is defined to be true at the respective transitions and false everywhere else:

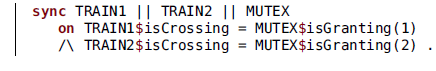

The final system is the composition of the two trains and MUTEX so that each isCrossing property is synchronized with the corresponding isGranting one:

In due time, in Sections 8.3 and 9.2, we will show how we can use simulation to work with even simpler models of the trains, and how we can justify that mutual exclusion holds for the composed system.

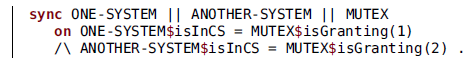

We want to insist in the value of modularity in our examples. The system MUTEX with its two properties can be used unchanged to control any two given systems, as long as they inform, by means of properties, of their being in their critical section. For general systems, the synchronization instruction would look something like

Mutual exclusion between the two systems, whatever they are, is guaranteed by MUTEX satisfying the appropriate formula – see Section 9.2.

We find cases like this of particular interest. We mean a component controlling others and imposing its behavior (mutual exclusion in this case) on the compound. This is the idea behind strategies, controllers, coordination, etc. In contrast, in the example of the chained buffers in Section 2.1, the composed behavior is emergent. Our next example involves both techniques.

2.3 Crossing the river

For a quick reminder, this is the statement of the puzzle. A farmer has got a wolf, a goat and a cabbage, and needs to cross a river using a boat with capacity for the farmer and, at most, one of the belongings. The wolf and the goat should not be left alone, because the wolf would eat the goat. In the same way, the goat would eat the cabbage if left unattended. The goal is to get the farmer and the three belongings at the opposite side of the river safely.

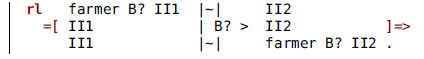

Our specification consists of two rules: one encompasses all possible ways the farmer can cross the river; the other represents eating. This is the rule for a crossing, explained below:

Each state term contains the symbol |~| representing the river. To each side of this symbol there is a set of items, which may include the farmer and the three belongings, respectively represented by the constants farmer, wolf, goat, and cabbage. Also, there is always a special item mark which marks the side that the farmer is trying to reach with her belongings. Thus, the initial state is defined like this:

The variables II1 and II2 are sets of items which, in particular, may be empty. The sort of the variable B? is MaybeBelong, that is, either one of the three belongings or the special value noBelong. Indeed, noBelong is also the identity element for sets of items. In this way, the transition term II1 | B? > II2 represents all possible crossings, with

![]() $\texttt{B?}=\texttt{noBelong}$

interpreted as the farmer crossing alone. The symbol |~| is formally a commutative operator, so that the same rule represents movements from any side to the other. That rule is rather terse. Alternative specifications, using more than one rule, would probably be easier to grasp. That is not important for the main purpose of this paper, which has to do with composition.

$\texttt{B?}=\texttt{noBelong}$

interpreted as the farmer crossing alone. The symbol |~| is formally a commutative operator, so that the same rule represents movements from any side to the other. That rule is rather terse. Alternative specifications, using more than one rule, would probably be easier to grasp. That is not important for the main purpose of this paper, which has to do with composition.

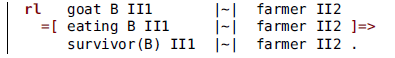

The rule for eating is this one:

Thus, when the goat and some other belonging are at one side with the farmer at the other side, eating can take place. The function survivor is defined by these equations:

Thus, the goat survives if the other belonging is the cabbage, but it dies (disappears from the state term) if the other belonging is the wolf.

Our specification does not require that eating happens as soon as it is possible, but only that it can happen. So our aim is to avoid all danger and ensure a safe transit.

This was the specification of the rules of the game. We propose now two guidelines for the farmer to follow. The first is to avoid all movements which lead to a dangerous situation, that is, one with the goat and some other belonging left by themselves. The second is to avoid undoing the most recent crossing: for example, after crossing one way with the goat, avoid going back the other way with the goat again. These are both quite obvious guidelines to follow, and we hypothesize that they are enough to ensure that the farmer reaches the goal. As it turns out, the hypothesis is false, and we will need to strengthen the second guideline; but let us work with this for the time being.

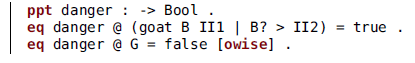

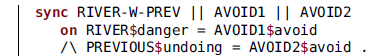

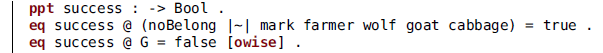

The guidelines are enforced by avoiding certain transitions to be triggered. For that, we need to identify said transitions. First, the dangerous ones:

The variable B represents a belonging, while B?, as before, can be either a belonging or noBelong. In words: there is danger if the farmer is in the boat and the goat has been left alone with some other belonging.

We need to restrict the execution of RIVER so that ![]()

at all times. This is another instance where a syntactic shortcut would help, but also this requirement can be enforced by a composition with an appropriate controller.

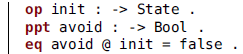

Let us call the following system AVOID. It is as simple as a system can possibly be:

There is a single state, called init, no transitions and no rules, and the property avoid is always false. Thus, the composed system

indeed avoids all situations at which danger is true.

Implementing the other guideline, avoidance of the undoing of movements, requires one more step, because we need to, somehow, store the previous movement so as to be able to compare it with the potential new one. We are after a composed system like this

where RIVER informs the new system PREVIOUS about the moves being made, and PREVIOUS stores at each moment the latest move. We name this composed system RIVER-W-PREV.

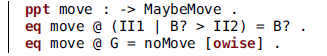

The new component PREVIOUS needs only this rule:

Its state sort is MaybeBelong, that is, either actually one of the three belongings or the value noBelong. In this case they are representing movements: the farmer crossing either with the specified belonging or alone. The transition term includes two such movements: the previous one and the new one. In this way, we can check them for equality when needed. To synchronize with the main system RIVER, we use this property in PREVIOUS:

Correspondingly, we need this property in RIVER:

We need to include the new constant noMove for when, indeed, no move is taking place.

Storing information about the past execution of the system is called instrumentation and is a common technique in system analysis. This is another instance calling for syntactic sugar. As shown with the RIVER || PREVIOUS example, it can be achieved by composition of atomic rewrite systems.

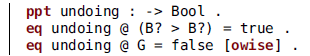

Whenever RIVER is executing a crossing, PREVIOUS is showing, in its transition term, the previous and the current moves, giving us the possibility of checking if they are equal:

Now, we need to restrict RIVER-W-PREV so as to avoid undoing movements. For that, we can use AVOID, as above. But we need two instances of that system, one to avoid danger, the other to avoid undoings, to which we refer as AVOID1 and AVOID2.

At the end, the system we are interested in is

This completes the specification of the system. Later in the paper, in Section 9.3, we show how to verify that it leads to a solution… or, rather, that it does not. But we will also show a sufficient strengthening of the concept of undoing.

As in the previous examples, we want to draw the reader’s attention to the modularity of our specification. Some previous treatments of this problem in rewriting logic (Palomino et al. Reference Palomino, Martí-Oliet and Verdejo2005; Rubio et al. Reference Rubio, Martí-Oliet, Pita and Verdejo2021) used several rules to model the different ways of crossing. But this is irrelevant to us, because any specification that defines the properties move and danger will do as well.

3 Background

This section is a formal summary of our previous work on the synchronous composition of rewrite systems (Martín et al. Reference Martní, Verdejo and Martí-Oliet2020). Detailed explanations and proofs can be found there. This whole section is quite theoretical, consisting of many definitions and a few propositions, to complement the informal and example-based introduction in Section 2.

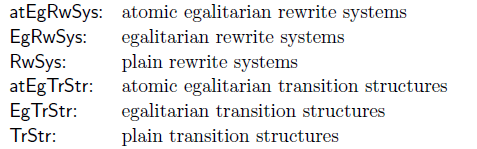

We define below a number of structures and systems. This is a list of them with the abbreviations we use to refer to them:

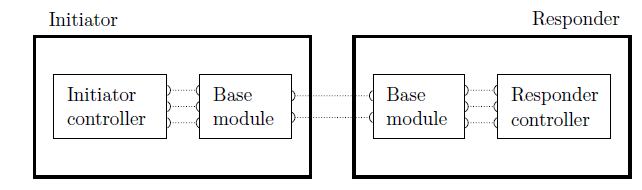

The polyhedron in Figure 1 shows the whole set of structures and systems with their related maps. Slanted dashed arrows represent the several concepts of split, that is, of obtaining plain transition structures or rewrite systems from egalitarian ones. Double horizontal arrows represent synchronous composition of systems or structures: composing systems or structures of the same kind produces another one of the same kind. Downward snake arrows represent semantic maps, assigning transition structures to rewrite systems. The two horizontal hooked arrows on the left represent inclusion: atomic systems and structures are particular cases of general systems and structures, respectively. All the elements in the diagram are defined below, and better explained in our previous paper (Martín et al. Reference Martní, Verdejo and Martí-Oliet2020).

Fig. 1. The types of systems we use and their relations.

3.1 Egalitarian structures and systems

As we mentioned above, we use transition structures (of particular types) as semantics for our rewrite systems. In due time, we define execution paths for transition structures, and satisfaction based on those paths. In this section we define atomic egalitarian transition structures, atomic egalitarian rewrite systems, the semantic relation between them, and their compositions.

Definition 1 (atomic egalitarian transition structure) An atomic egalitarian transition structure is a tuple

![]() $\mathcal{T}=(Q,T,\mathrel{\rightarrow},P,g_0)$

, where:

$\mathcal{T}=(Q,T,\mathrel{\rightarrow},P,g_0)$

, where:

-

Q is the set of states;

-

T is the set of transitions;

-

is the bipartite adjacency relation;

is the bipartite adjacency relation; -

P is the set of properties, each one a total function p from

$Q\mathrel{\cup} T$

to some codomain

$Q\mathrel{\cup} T$

to some codomain

$C_p$

;

$C_p$

; -

is the initial state or transition.

is the initial state or transition.

We refer to the elements of

![]() $Q\mathrel{\cup} T$

as stages. The class of atomic egalitarian transition structures is denoted by atEgTrStr.

$Q\mathrel{\cup} T$

as stages. The class of atomic egalitarian transition structures is denoted by atEgTrStr.

The adjacency relation allows for several arrows in and out of a transition, as well as a state. The egalitarian goal also mandates that not only an initial state is possible, but also an initial transition. We use variables typically called g, with or without ornaments, to range over stages.

The definition of an atomic egalitarian transition structure is almost identical to that of a Petri net. The difference, however, is in the semantics: we are interested in a simple path semantics, instead of sets of marked places. This is better explained in Section 4.

In the definitions below, for a given signature

![]() $\Sigma$

, we denote by

$\Sigma$

, we denote by

![]() $T_\Sigma$

the set of terms on

$T_\Sigma$

the set of terms on

![]() $\Sigma$

, by

$\Sigma$

, by

![]() $T_\Sigma(X)$

the terms with sorted variables from the set X, and by

$T_\Sigma(X)$

the terms with sorted variables from the set X, and by

![]() $T_{\Sigma,s}$

and

$T_{\Sigma,s}$

and

![]() $T_\Sigma(X)_s$

the terms of sort s from the respective sets. Finally,

$T_\Sigma(X)_s$

the terms of sort s from the respective sets. Finally,

![]() $\Sigma|_s=\{f:s\to s' \mid \text{ for some } s'\in S\}$

denotes the set of totally defined unary operators in

$\Sigma|_s=\{f:s\to s' \mid \text{ for some } s'\in S\}$

denotes the set of totally defined unary operators in

![]() $\Sigma$

with domain

$\Sigma$

with domain

![]() $s\in S$

.

$s\in S$

.

Definition 2 (atomic egalitarian rewrite system)

An atomic egalitarian rewrite system is a tuple

![]() $\mathcal{R}=(S,{\le},\Sigma,E,R)$

, where:

$\mathcal{R}=(S,{\le},\Sigma,E,R)$

, where:

-

$(S,{\le})$

is a poset of sorts. We assume

$(S,{\le})$

is a poset of sorts. We assume

$\texttt{State}, \texttt{Trans},\texttt{Stage}\in S$

with

$\texttt{State}, \texttt{Trans},\texttt{Stage}\in S$

with

$\texttt{State}\le\texttt{Stage}$

and

$\texttt{State}\le\texttt{Stage}$

and

$\texttt{Trans}\le\texttt{Stage}$

. The terms of sort Stage are called stages.

$\texttt{Trans}\le\texttt{Stage}$

. The terms of sort Stage are called stages. -

$\Sigma$

is a signature of operators (and constants)

$\Sigma$

is a signature of operators (and constants)

$f : \omega \to s$

for some

$f : \omega \to s$

for some

$\omega\in S^*$

and

$\omega\in S^*$

and

$s\in S$

. We assume there is a constant

$s\in S$

. We assume there is a constant

$\texttt{init}\in\Sigma$

of sort Stage.

$\texttt{init}\in\Sigma$

of sort Stage. -

E is a set of left-to-right oriented equations

where

$t,t'\in T_\Sigma(X)_s$

for some

$t,t'\in T_\Sigma(X)_s$

for some

$s\in S$

and the condition C (which may be absent) is a conjunction

$s\in S$

and the condition C (which may be absent) is a conjunction

$\bigwedge_i u_i=u_i'$

of equational conditions, for

$\bigwedge_i u_i=u_i'$

of equational conditions, for

$u_i,u_i'\in T_\Sigma(X)_{s_i}$

for some

$u_i,u_i'\in T_\Sigma(X)_{s_i}$

for some

$s_i\in S$

.

$s_i\in S$

.

-

The set R contains egalitarian rules, that is, rules of the form

where

$u,u'\in T_\Sigma(X)_\texttt{State}$

,

$u,u'\in T_\Sigma(X)_\texttt{State}$

,

$t\in T_\Sigma(X)_\texttt{Trans}$

and C (which may be absent) is as above.

$t\in T_\Sigma(X)_\texttt{Trans}$

and C (which may be absent) is as above.

We also refer as signature to the triple

![]() $(S,{\le},\Sigma)$

. A property is any element of

$(S,{\le},\Sigma)$

. A property is any element of

![]() $\Sigma|_\texttt{Stage}$

, that is, any unary operator in

$\Sigma|_\texttt{Stage}$

, that is, any unary operator in

![]() $\Sigma$

totally defined on Stage terms.

$\Sigma$

totally defined on Stage terms.

The main point in which we depart from the standard definitions of rewrite system (often called rather rewrite theory) (Meseguer Reference Meseguer1992) is that our rules are egalitarian, by which we mean that they include an explicit transition term. Properties are also a nonstandard ingredient. As a passing note, we have shown (Martín 2021a, Section 6.2.4) that requiring properties to be totally defined, as we do, is not a meaningful restriction.

In Maude, and in our examples in this paper, equations are introduced by the keywords ![]() or

or ![]() and rules by

and rules by ![]() ; in each case the

; in each case the ![]() form is used when conditions are present. The signature is represented by sentences with keywords

form is used when conditions are present. The signature is represented by sentences with keywords ![]() though we often omit such sentences in the examples in this paper.

though we often omit such sentences in the examples in this paper.

Some of the definitions and results that follow are very similar for transition structures and for rewrite systems. In particular, the synchronization mechanism is the same for one and the other. To minimize repetition, we deal with both of them jointly as much as possible. We refer to them in abstract as systems and with the letter

![]() $\mathcal{S}$

.

$\mathcal{S}$

.

We compose atomic systems and structures to create complex ones. In all this paper we consider each system to be its own namespace, so that the sets of properties, sorts and operators from different systems are disjoint.

Definition 3 (suitable synchronization criteria)

Given a set of atomic structures or systems, one for each

![]() $n=1,\dots,N$

, either all of them in atEgTrStr or all of them in atEgRwSys, each with set of properties

$n=1,\dots,N$

, either all of them in atEgTrStr or all of them in atEgRwSys, each with set of properties

![]() $P_n$

, a set of synchronization criteria for them is a set

$P_n$

, a set of synchronization criteria for them is a set

![]() $Y\subseteq\bigcup_nP_n\times\bigcup_nP_n$

.

$Y\subseteq\bigcup_nP_n\times\bigcup_nP_n$

.

We say that a set Y of synchronization criteria is suitable if it satisfies the following conditions. For transition structures, we require that, if

![]() $(p,p')\in Y\mathrel{\cap} (P_m\times P_n)$

, for some

$(p,p')\in Y\mathrel{\cap} (P_m\times P_n)$

, for some

![]() $m,n\in\{1,\dots,N\}$

, with

$m,n\in\{1,\dots,N\}$

, with

![]() $p : Q_m\mathrel{\cup} T_m\to C$

and

$p : Q_m\mathrel{\cup} T_m\to C$

and

![]() $p' : Q_n\mathrel{\cup} T_n\to C'$

, then the elements in C and C’ can be compared for equality. Correspondingly, for rewrite systems

$p' : Q_n\mathrel{\cup} T_n\to C'$

, then the elements in C and C’ can be compared for equality. Correspondingly, for rewrite systems

![]() $\mathcal{R}_n=(S_n,\le_n,\Sigma_n,E_n,R_n)$

, we require that, if

$\mathcal{R}_n=(S_n,\le_n,\Sigma_n,E_n,R_n)$

, we require that, if

![]() $(p,p')\in Y\mathrel{\cap}(P_m\times P_n)$

, with

$(p,p')\in Y\mathrel{\cap}(P_m\times P_n)$

, with

![]() $p : \texttt{Stage}_m \to s$

and

$p : \texttt{Stage}_m \to s$

and

![]() $p' : \texttt{Stage}_n \to s'$

, then there exists a sort

$p' : \texttt{Stage}_n \to s'$

, then there exists a sort

![]() $s_0$

, common to

$s_0$

, common to

![]() $\mathcal{R}_m$

and

$\mathcal{R}_m$

and

![]() $\mathcal{R}_n$

, with

$\mathcal{R}_n$

, with

![]() $s_m\le_m s_0$

and

$s_m\le_m s_0$

and

![]() $s_n\le_n s_0$

, and an equational theory

$s_n\le_n s_0$

, and an equational theory

![]() $\mathcal{E}_0$

of

$\mathcal{E}_0$

of

![]() $s_0$

, included as subtheory in both

$s_0$

, included as subtheory in both

![]() $\mathcal{R}_m$

and

$\mathcal{R}_m$

and

![]() $\mathcal{R}_n$

, in which the values of p and p’ can be checked for equality.

$\mathcal{R}_n$

, in which the values of p and p’ can be checked for equality.

To be precise, we should require that

![]() $\mathcal{E}_0$

be embedded (rather than included) by means of injective maps into the equational theories of

$\mathcal{E}_0$

be embedded (rather than included) by means of injective maps into the equational theories of

![]() $\mathcal{R}_m$

and

$\mathcal{R}_m$

and

![]() $\mathcal{R}_n$

. In that way, the namespaces of different systems are kept disjoint. While it is technically imprecise, we use the shorthand of saying that

$\mathcal{R}_n$

. In that way, the namespaces of different systems are kept disjoint. While it is technically imprecise, we use the shorthand of saying that

![]() $\mathcal{E}_0$

is the common equational theory of

$\mathcal{E}_0$

is the common equational theory of

![]() $s_0$

.

$s_0$

.

Definition 4 (synchronous composition)

The synchronous composition of

![]() $\mathcal{S}_n$

for

$\mathcal{S}_n$

for

![]() $n=1,\dots,N$

, either all of them in atEgTrStr or all of them in atEgRwSys, with respect to the suitable synchronization criteria Y is denoted by

$n=1,\dots,N$

, either all of them in atEgTrStr or all of them in atEgRwSys, with respect to the suitable synchronization criteria Y is denoted by

![]() $\|_Y\{\mathcal{S}_n\mid n=1,\dots,N\}$

, or usually just

$\|_Y\{\mathcal{S}_n\mid n=1,\dots,N\}$

, or usually just

![]() $\|_Y\mathcal{S}_n$

. From now on, whenever we write

$\|_Y\mathcal{S}_n$

. From now on, whenever we write

![]() $\|_Y\mathcal{S}_n$

, we are assuming Y is suitable. When only two components are involved, we usually write

$\|_Y\mathcal{S}_n$

, we are assuming Y is suitable. When only two components are involved, we usually write

![]() $\mathcal{S}_1\|_Y\mathcal{S}_2$

.

$\mathcal{S}_1\|_Y\mathcal{S}_2$

.

Definition 5 (egalitarian structures and systems)

We define the classes of egalitarian transition structures, denoted by EgTrStr, and, respectively, of egalitarian rewrite systems, denoted by EgRwSys, as the smallest ones that contain atEgTrStr or, respectively, atEgRwSys, and are closed with respect to the synchronous composition operation described above.

We need to consider a notion of equivalence: the one given by the different ways of composing the same components. For example,

![]() $(\mathcal{S}_1\|_{Y}\mathcal{S}_2)\|_{Y'}\mathcal{S}_3$

is equivalent to

$(\mathcal{S}_1\|_{Y}\mathcal{S}_2)\|_{Y'}\mathcal{S}_3$

is equivalent to

![]() $\|_{Y\mathrel{\cup} Y'}\{\mathcal{S}_1, \mathcal{S}_2, \mathcal{S}_3\}$

.

$\|_{Y\mathrel{\cup} Y'}\{\mathcal{S}_1, \mathcal{S}_2, \mathcal{S}_3\}$

.

Definition 6 (equivalent structures and systems)

The set of atomic components of an egalitarian transition structure or rewrite system is:

-

$\mathop{\textrm{atoms}}(\mathcal{S}) = \{\mathcal{S}\}$

if

$\mathop{\textrm{atoms}}(\mathcal{S}) = \{\mathcal{S}\}$

if

$\mathcal{S}$

is atomic,

$\mathcal{S}$

is atomic, -

$\mathop{\textrm{atoms}}(\|_Y\mathcal{S}_n) = \bigcup_n\mathop{\textrm{atoms}}(\mathcal{S}_n)$

.

$\mathop{\textrm{atoms}}(\|_Y\mathcal{S}_n) = \bigcup_n\mathop{\textrm{atoms}}(\mathcal{S}_n)$

.

The total set of criteria of an egalitarian transition structure or rewrite system is:

-

$\mathop{\mathrm{criteria}}(\mathcal{S}) = \emptyset$

if

$\mathop{\mathrm{criteria}}(\mathcal{S}) = \emptyset$

if

$\mathcal{S}$

is atomic,

$\mathcal{S}$

is atomic, -

$\mathop{\mathrm{criteria}}(\|_Y\mathcal{S}_n) = \widetilde{Y} \mathrel{\cup} \bigcup_n\mathop{\mathrm{criteria}}(\mathcal{S}_n)$

,

$\mathop{\mathrm{criteria}}(\|_Y\mathcal{S}_n) = \widetilde{Y} \mathrel{\cup} \bigcup_n\mathop{\mathrm{criteria}}(\mathcal{S}_n)$

,

where

![]() $\widetilde{Y}=\{\{p,q\} \mid (p,q)\in Y\}$

(so that (p,q) and (q,p) represent the same criterion).

$\widetilde{Y}=\{\{p,q\} \mid (p,q)\in Y\}$

(so that (p,q) and (q,p) represent the same criterion).

Two egalitarian structures or systems

![]() $\mathcal{S}_1$

and

$\mathcal{S}_1$

and

![]() $\mathcal{S}_2$

are said to be equivalent iff

$\mathcal{S}_2$

are said to be equivalent iff

![]() $\mathop{\textrm{atoms}}(\mathcal{S}_1)=\mathop{\textrm{atoms}}(\mathcal{S}_2)$

and

$\mathop{\textrm{atoms}}(\mathcal{S}_1)=\mathop{\textrm{atoms}}(\mathcal{S}_2)$

and

![]() $\mathop{\mathrm{criteria}}(\mathcal{S}_1)=\mathop{\mathrm{criteria}}(\mathcal{S}_2)$

.

$\mathop{\mathrm{criteria}}(\mathcal{S}_1)=\mathop{\mathrm{criteria}}(\mathcal{S}_2)$

.

Proposition 1 (equivalence to composition of atoms)

Every egalitarian transition structure or rewrite system is equivalent to one of the form

![]() $\|_Y\mathcal{S}_n$

where each

$\|_Y\mathcal{S}_n$

where each

![]() $\mathcal{S}_n$

is atomic.

$\mathcal{S}_n$

is atomic.

Namely,

![]() $\mathcal{S}=\|_Y\mathcal{S}_n$

is equivalent to

$\mathcal{S}=\|_Y\mathcal{S}_n$

is equivalent to

![]() $\|_{Y'}\mathop{\textrm{atoms}}(\mathcal{S})$

, where

$\|_{Y'}\mathop{\textrm{atoms}}(\mathcal{S})$

, where

![]() $Y'=\{(p,q) \mid \{p,q\}\in\mathop{\textrm{criteria}}(\mathcal{S})\}$

.

$Y'=\{(p,q) \mid \{p,q\}\in\mathop{\textrm{criteria}}(\mathcal{S})\}$

.

In our previous work (Martín et al. Reference Martní, Verdejo and Martí-Oliet2020; Martín 2021a) we showed that equivalent systems represent the same behavior, as given by paths and satisfaction of temporal formulas. This allows us to group the atomic components in the most suitable way for a modular design. Thus, in the example in Section 2.3, we first composed RIVER || PREVIOUS to obtain RIVER-W-PREV, which was then used in the composition RIVER-W-PREV || AVOID1 || AVOID2.

In short, the compound

![]() $\|_Y\mathcal{S}_n$

is a set of atomic components linked by synchronization criteria. The behavior it models is that in which each component evolves according to its internal specification, with the added restriction that all synchronization criteria have to be satisfied at all times.

$\|_Y\mathcal{S}_n$

is a set of atomic components linked by synchronization criteria. The behavior it models is that in which each component evolves according to its internal specification, with the added restriction that all synchronization criteria have to be satisfied at all times.

Definition 7 (signature and properties of a compound)

Let ![]() for

for

![]() $n=1,\dots,N$

. Let Y be a set of suitable synchronization criteria. The set of properties for

$n=1,\dots,N$

. Let Y be a set of suitable synchronization criteria. The set of properties for

![]() $\mathcal{R}_n$

has already being defined as

$\mathcal{R}_n$

has already being defined as

![]() $\Sigma|_{\texttt{Stage}_n}$

. The set of properties for

$\Sigma|_{\texttt{Stage}_n}$

. The set of properties for

![]() $\|_Y\mathcal{R}_n$

is defined to be

$\|_Y\mathcal{R}_n$

is defined to be

![]() $\biguplus_nP_n$

. Also, the signature for

$\biguplus_nP_n$

. Also, the signature for

![]() $\|_Y\mathcal{R}_n$

is defined to be

$\|_Y\mathcal{R}_n$

is defined to be

![]() $(\bigcup_nS_n,\bigcup_n{\le}_n,\bigcup_n\Sigma_n)$

.

$(\bigcup_nS_n,\bigcup_n{\le}_n,\bigcup_n\Sigma_n)$

.

This definition, as was the case for Definition 3, is not technically precise, because we require at the same time that the namespaces be disjoint and that they share the common equational theories. A precise definition would involve pushouts. We avoid it and allow the slight informality of saying that each rewrite system is its own namespace, disjoint from the rest except for those common equational theories.

Definition 8 (semantics in the atomic case)

Given ![]() , we define

, we define ![]() by:

by:

-

$Q = T_{\Sigma/E,\texttt{State}}$

(that is, E-equational classes of State terms);

$Q = T_{\Sigma/E,\texttt{State}}$

(that is, E-equational classes of State terms); -

$T = T_{\Sigma/E,\texttt{Trans}}$

(that is, E-equational classes of Trans terms);

$T = T_{\Sigma/E,\texttt{Trans}}$

(that is, E-equational classes of Trans terms); -

$\mathrel{\rightarrow}$

is the half-rewrite relation

$\mathrel{\rightarrow}$

is the half-rewrite relation

$\mathrel{\rightarrow}^{\text{eg}}_{\mathcal{R}}$

induced by R (Martín et al. Reference Martní, Verdejo and Martí-Oliet2020, Definition 6);

$\mathrel{\rightarrow}^{\text{eg}}_{\mathcal{R}}$

induced by R (Martín et al. Reference Martní, Verdejo and Martí-Oliet2020, Definition 6); -

$P=\Sigma|_\texttt{Stage}$

;

$P=\Sigma|_\texttt{Stage}$

; -

$g_0=[\texttt{init}]_{E}$

(that is, the E-equational class of init).

$g_0=[\texttt{init}]_{E}$

(that is, the E-equational class of init).

The half-rewrite relation

![]() $\to$

takes the system from a state to a transition, or vice versa, in contrast to the usual state-to-state rewrites. Roughly speaking, a rewrite rule

$\to$

takes the system from a state to a transition, or vice versa, in contrast to the usual state-to-state rewrites. Roughly speaking, a rewrite rule ![]() produces half rewrites from instances of u to instances of t, and from there to instances of u′.

produces half rewrites from instances of u to instances of t, and from there to instances of u′.

Definition 9 (semantics for the general egalitarian case)

Given ![]() we define its semantics componentwise:

we define its semantics componentwise:

A path semantics for the composition of egalitarian structures is given in Section 4.

3.2 Plain structures and systems

In addition to egalitarian structures and systems, we use standard ones which we call plain to avoid confusion with the egalitarian ones. An important feature of plain structures and systems is that they only have states, and not (explicit) transitions, and this allows their composition to be defined as a tuple construction. We see plain structures and systems as modeling the global behavior of composed systems, while we use egalitarian structures and systems to model local and distributed systems. The correspondence between them is given by the split operation defined later.

Definition 10 (plain transition structure)

A plain transition structure is a tuple

![]() $\mathcal{T}=(Q,\mathrel{\rightarrow},P,q_0)$

, where:

$\mathcal{T}=(Q,\mathrel{\rightarrow},P,q_0)$

, where:

-

• Q is the set of states;

-

•

${\mathrel{\rightarrow}}\subseteq Q\times Q$

is the adjacency relation;

${\mathrel{\rightarrow}}\subseteq Q\times Q$

is the adjacency relation; -

• P is the set of properties, each one a total function p from Q to some codomain

$C_p$

;

$C_p$

; -

•

$q_0\in Q$

is the initial state.

$q_0\in Q$

is the initial state.

The class of all plain transition structures is denoted by TrStr.

Definition 11 (plain rewrite system)

A plain rewrite system is a tuple

![]() $(S,{\le},\Sigma,E,R)$

, where:

$(S,{\le},\Sigma,E,R)$

, where:

-

$(S,{\le})$

is a poset of sorts which contains the element State.

$(S,{\le})$

is a poset of sorts which contains the element State. -

$\Sigma$

is a signature of operators which includes the constant

$\Sigma$

is a signature of operators which includes the constant

$\texttt{init}$

of sort State.

$\texttt{init}$

of sort State. -

E is a set of equations as in Definition 2.

-

R is a set of rules of the form

, where

, where

$t,t'\in T_\Sigma(X)_s$

for some

$t,t'\in T_\Sigma(X)_s$

for some

$s\in S$

, and C (which may be absent) is as in Definition 2.

$s\in S$

, and C (which may be absent) is as in Definition 2.

We also refer as signature to the triple

![]() $(S,{\le},\Sigma)$

. We call properties to the elements of

$(S,{\le},\Sigma)$

. We call properties to the elements of

![]() $\Sigma|_\texttt{State}$

. The class of all plain rewrite systems is denoted by RwSys.

$\Sigma|_\texttt{State}$

. The class of all plain rewrite systems is denoted by RwSys.

Definition 12 (composition for plain transition structures)

Given plain transition structures ![]() , for

, for

![]() $n=1,\dots,N$

, their synchronous composition with respect to the synchronization criteria

$n=1,\dots,N$

, their synchronous composition with respect to the synchronization criteria

![]() $Y\subseteq \bigcup_nP_n\times\bigcup_nP_n$

, is denoted by

$Y\subseteq \bigcup_nP_n\times\bigcup_nP_n$

, is denoted by

![]() $\|_Y\{\mathcal{T}_n \mid n=1,\dots,N\}$

, or usually just

$\|_Y\{\mathcal{T}_n \mid n=1,\dots,N\}$

, or usually just

![]() $\|_Y\mathcal{T}_n$

, and is defined to be

$\|_Y\mathcal{T}_n$

, and is defined to be ![]() , where:

, where:

-

;

; -

for

$\langle q_1,\dots,q_N\rangle, \langle q'_1,\dots,q'_N\rangle\in Q$

, we have

$\langle q_1,\dots,q_N\rangle, \langle q'_1,\dots,q'_N\rangle\in Q$

, we have

$\langle q_1,\dots,q_N\rangle \mathrel{\rightarrow} \langle q'_1,\dots,q'_N\rangle$

iff for each n either

$\langle q_1,\dots,q_N\rangle \mathrel{\rightarrow} \langle q'_1,\dots,q'_N\rangle$

iff for each n either

$q_n\mathrel{\rightarrow}_n q'_n$

or

$q_n\mathrel{\rightarrow}_n q'_n$

or

$q_n=q'_n$

, with at least one occurrence of the former;

$q_n=q'_n$

, with at least one occurrence of the former; -

$P = \bigcup_nP_n$

and, if p is a property originally defined in the component

$P = \bigcup_nP_n$

and, if p is a property originally defined in the component

$\mathcal{T}_m$

, then it is defined in

$\mathcal{T}_m$

, then it is defined in

$\mathcal{T}$

by

$\mathcal{T}$

by

$p(\langle q_1,\dots,q_N\rangle)=p(q_m)$

;

$p(\langle q_1,\dots,q_N\rangle)=p(q_m)$

; -

$q_0=\langle q_{10},\dots,q_{N0}\rangle$

, assumed to be in Q (that is, to satisfy the criteria in Y).

$q_0=\langle q_{10},\dots,q_{N0}\rangle$

, assumed to be in Q (that is, to satisfy the criteria in Y).

It is an important detail that the composition of plain transition structures can be evaluated to a single, monolithic structure of the same type, while the composition of egalitarian structures is just a set of interacting but independent components.

The composition of plain rewrite systems is defined next by a tuple-like construction; in particular, rewrite rules are produced in this way. For this to work, we need the components involved to be topmost. A plain rewrite system is said to be topmost if its rules can only be applied on whole State terms, not on its subterms – see more explanations in our previous work (Martín et al. Reference Martní, Verdejo and Martí-Oliet2020).

Definition 13 (composition for plain rewrite systems)

Given plain rewrite systems ![]() for

for

![]() $n=1,\dots,N$

, all of them topmost, their synchronous composition with respect to synchronization criteria Y is denoted by

$n=1,\dots,N$

, all of them topmost, their synchronous composition with respect to synchronization criteria Y is denoted by

![]() $\|_Y\{\mathcal{R}_n \mid n=1,\dots,N\}$

, or usually just

$\|_Y\{\mathcal{R}_n \mid n=1,\dots,N\}$

, or usually just

![]() $\|_Y\mathcal{R}_n$

, and is defined to be a new plain rewrite system

$\|_Y\mathcal{R}_n$

, and is defined to be a new plain rewrite system ![]() . The elements of

. The elements of

![]() $\mathcal{R}$

are defined as the disjoint union of the respective elements of each

$\mathcal{R}$

are defined as the disjoint union of the respective elements of each

![]() $\mathcal{R}_n$

(that is,

$\mathcal{R}_n$

(that is,

![]() $S=\biguplus_nS_n$

, and so on), except for the following:

$S=\biguplus_nS_n$

, and so on), except for the following:

-

There is in S a new sort State and a constructor

(

(

$\texttt{State}_n$

denotes the sort State from component

$\texttt{State}_n$

denotes the sort State from component

$\mathcal{R}_n$

).

$\mathcal{R}_n$

). -

There is a constant init of sort State and an equation

$\texttt{init} = \langle\texttt{init}_1,\dots,\texttt{init}_N\rangle$

(

$\texttt{init} = \langle\texttt{init}_1,\dots,\texttt{init}_N\rangle$

(

$\texttt{init}_n$

denotes the constant init from component

$\texttt{init}_n$

denotes the constant init from component

$\mathcal{R}_n$

).

$\mathcal{R}_n$

). -

For each

$(p,p')\in Y$

, suitability of Y (Definition 3) implies the existence of a common sort s and a common equational theory for it. These are common and, thus, included only once in the result of the composition.

$(p,p')\in Y$

, suitability of Y (Definition 3) implies the existence of a common sort s and a common equational theory for it. These are common and, thus, included only once in the result of the composition. -

For each property p defined in the component

$\mathcal{R}_m$

, there is in

$\mathcal{R}_m$

, there is in

$\Sigma$

a declaration of a property with the same name and in E an equation

$\Sigma$

a declaration of a property with the same name and in E an equation

$p(\langle q_1,\dots,q_N\rangle)=p(q_m)$

.

$p(\langle q_1,\dots,q_N\rangle)=p(q_m)$

. -

We assume an equational theory of the Booleans is included, and we add the declaration of a new operator

$\texttt{isValidState} : \Pi_{i=1}^N\texttt{State}_i\to \texttt{Boolean}$

, defined by this equation:

$\texttt{isValidState} : \Pi_{i=1}^N\texttt{State}_i\to \texttt{Boolean}$

, defined by this equation:  \[\texttt{isValidState}(\langle q_1,\dots,q_N\rangle) = \bigwedge_{(p,p')\in Y} p(\langle q_1,\dots,q_N\rangle)=p'(\langle q_1,\dots,q_N\rangle).\]

\[\texttt{isValidState}(\langle q_1,\dots,q_N\rangle) = \bigwedge_{(p,p')\in Y} p(\langle q_1,\dots,q_N\rangle)=p'(\langle q_1,\dots,q_N\rangle).\]

-

The rewrite rules from the components are dropped, and the set of rules R for the composition is built in the following way. For each nonempty set

$M\subseteq\{1,\dots,N\}$

, and for each set of rules

$M\subseteq\{1,\dots,N\}$

, and for each set of rules  , one from each

, one from each

$R_m$

for

$R_m$

for

$m\in M$

, and setting

$m\in M$

, and setting

$q'_m=q_m$

for

$q'_m=q_m$

for

$m\not\in M$

, there is the following rule in R:

$m\not\in M$

, there is the following rule in R:

With these rules, only State terms for which synchronization criteria are satisfied are reachable from init.

Equations from different components are mixed together, according to this definition, but there are no conflicts, because each component is its own namespace. The resulting plain rewrite system happens to be topmost as well, so it can be used as a component in turn.

Definition 14 (semantics for plain rewrite systems)

Given ![]() , we define its semantics

, we define its semantics ![]() by:

by:

-

$Q = T_{\Sigma/E,\texttt{State}}$

;

$Q = T_{\Sigma/E,\texttt{State}}$

; -

$\mathrel{\rightarrow}$

is the rewrite relation

$\mathrel{\rightarrow}$

is the rewrite relation

$\mathrel{\rightarrow}_{\mathcal{R}}$

induced by

$\mathrel{\rightarrow}_{\mathcal{R}}$

induced by

$\mathcal{R}$

;

$\mathcal{R}$

; -

$P=\Sigma|_\texttt{State}$

;

$P=\Sigma|_\texttt{State}$

; -

$q_0=[\texttt{init}]_{E}$

.

$q_0=[\texttt{init}]_{E}$

.

Concepts of equivalence can be defined for plain transition structures and for plain rewrite systems (Martín et al. Reference Martní, Verdejo and Martí-Oliet2020), corresponding to the equivalence in the egalitarian setting from Definition 6, to formalize the idea that the ordering and grouping of components in a composition are immaterial. For example,

![]() $(\mathcal{S}_1\|_{Y_1}\mathcal{S}_2)\|_{Y_2}\mathcal{S}_3$

is equivalent to

$(\mathcal{S}_1\|_{Y_1}\mathcal{S}_2)\|_{Y_2}\mathcal{S}_3$

is equivalent to

![]() $(\mathcal{S}_3\|_{Y_3}\mathcal{S}_1)\|_{Y_4}\mathcal{S}_2$

if

$(\mathcal{S}_3\|_{Y_3}\mathcal{S}_1)\|_{Y_4}\mathcal{S}_2$

if

![]() $Y_1\mathrel{\cup} Y_2=Y_3\mathrel{\cup} Y_4$

, for

$Y_1\mathrel{\cup} Y_2=Y_3\mathrel{\cup} Y_4$

, for

![]() $\mathcal{S}_n$

either plain rewrite systems or plain transition structures. (Remember that, whenever we write such composition expressions, we are assuming the synchronization criteria to be suitable.) Although we do not repeat those definitions here, when we write expressions like

$\mathcal{S}_n$

either plain rewrite systems or plain transition structures. (Remember that, whenever we write such composition expressions, we are assuming the synchronization criteria to be suitable.) Although we do not repeat those definitions here, when we write expressions like

![]() $\langle q_1,\dots,q_N\rangle\in\|_Y\mathcal{S}_n$

we are assuming that some ordering and grouping of the components have been arbitrarily fixed. And when we say that two systems are equal, we rather mean they are equivalent in that sense. This is the case in the following proposition.

$\langle q_1,\dots,q_N\rangle\in\|_Y\mathcal{S}_n$

we are assuming that some ordering and grouping of the components have been arbitrarily fixed. And when we say that two systems are equal, we rather mean they are equivalent in that sense. This is the case in the following proposition.

Proposition 2 (semantics and composition commute)

For plain rewrite systems

![]() $\mathcal{R}_n$

, each of them topmost, and for suitable synchronization criteria Y, we have that

$\mathcal{R}_n$

, each of them topmost, and for suitable synchronization criteria Y, we have that

![]() $\mathop{\mathrm{sem}}(\|_Y\mathcal{R}_n)=\|_Y\mathop{\mathrm{sem}}(\mathcal{R}_n)$

.

$\mathop{\mathrm{sem}}(\|_Y\mathcal{R}_n)=\|_Y\mathop{\mathrm{sem}}(\mathcal{R}_n)$

.

3.3 The split

Plain systems have the advantage that they are standard rewrite systems and existing theoretical and practical tools can be used on them. For that reason, it is sometimes useful to transform an egalitarian system into an equivalent plain one. This is what the operation that we call split does. The result of the split represents in a single system the joint evolution of the three components.

Definition 15 (the split)

Given ![]() , its split is

, its split is ![]() . That is, stages are transformed into states.

. That is, stages are transformed into states.

Given ![]() , its split is

, its split is ![]() , where

, where

-

S′ is the result of renaming in S the sort State to State’, and Stage to State (with the only aim of getting the top sort still being called State), and

-

R′ is the result of splitting each rule

$s -\!\![t]\!\!\!\rightarrow s^{\prime}$

if C in R to produce the two rules

$s -\!\![t]\!\!\!\rightarrow s^{\prime}$

if C in R to produce the two rules

$s\rightarrow t \,\,\mathtt{if}\,C$

and

$s\rightarrow t \,\,\mathtt{if}\,C$

and

$t\rightarrow s ^{\prime}$

if C in R′.

$t\rightarrow s ^{\prime}$

if C in R′.

For a nonatomic system

![]() $\|_Y\mathcal{S}_n$

in EgTrStr (resp., in EgRwSys), its split is recursively defined by

$\|_Y\mathcal{S}_n$

in EgTrStr (resp., in EgRwSys), its split is recursively defined by

![]() $\mathop{\mathrm{split}}(\|_Y\mathcal{S}_n) = \|_Y\mathop{\mathrm{split}}(\mathcal{S}_n)$

, a system in TrStr (resp., in RwSys).

$\mathop{\mathrm{split}}(\|_Y\mathcal{S}_n) = \|_Y\mathop{\mathrm{split}}(\mathcal{S}_n)$

, a system in TrStr (resp., in RwSys).

The composition of plain systems can always be evaluated to a single one, so the result of a split is always a single plain transition structure or rewrite system.

Proposition 3 (semantics and split commute)

For

![]() $\mathcal{R}\in{{\textsf{EgRwSys}}}$

all whose atomic components are topmost, we have that

$\mathcal{R}\in{{\textsf{EgRwSys}}}$

all whose atomic components are topmost, we have that ![]() .

.

Definition 16 (compatible stages)

Given

![]() $\mathcal{T}=(Q,T,\mathrel{\rightarrow},P,g_0)$

and

$\mathcal{T}=(Q,T,\mathrel{\rightarrow},P,g_0)$

and

![]() $\mathcal{T}'=(Q',T',\mathrel{\rightarrow}',P',g'_0)$

, the stages

$\mathcal{T}'=(Q',T',\mathrel{\rightarrow}',P',g'_0)$

, the stages

![]() $g\in Q\mathrel{\cup} T$

and

$g\in Q\mathrel{\cup} T$

and

![]() $g'\in Q'\mathrel{\cup} T'$

are said to be compatible (with respect to Y) iff all criteria in Y are satisfied when evaluated at them, that is,

$g'\in Q'\mathrel{\cup} T'$

are said to be compatible (with respect to Y) iff all criteria in Y are satisfied when evaluated at them, that is,

![]() $p(g)=p'(g')$

for each

$p(g)=p'(g')$

for each

![]() $(p,p')\in Y\mathrel{\cap}(P\times P')$

. More in general, given

$(p,p')\in Y\mathrel{\cap}(P\times P')$

. More in general, given

![]() $\mathcal{T}_n=(Q_n,T_n,\mathrel{\rightarrow}_n,P_n,g_{n0})$

for

$\mathcal{T}_n=(Q_n,T_n,\mathrel{\rightarrow}_n,P_n,g_{n0})$

for

![]() $n=1,\dots,N$

, we say that the stages

$n=1,\dots,N$

, we say that the stages

![]() $\{g_n\}_n$

, with

$\{g_n\}_n$

, with

![]() $g_n\in Q_n\mathrel{\cup} T_n$

, are compatible when they are so pairwise according to the above.

$g_n\in Q_n\mathrel{\cup} T_n$

, are compatible when they are so pairwise according to the above.

The intuitive meaning is that compatible stages can be visited simultaneously, each within its own component system. In the example of the chained buffers, Section 2.1, the states sending in BUFFER1 and receiving in BUFFER2 are compatible with respect to the synchronization criterion ![]() because isSending evaluates to true at sending and isReceiving evaluates also to true at receiving. There is a trivial bijection between compatible stages and states in the split which justifies the view that states in

because isSending evaluates to true at sending and isReceiving evaluates also to true at receiving. There is a trivial bijection between compatible stages and states in the split which justifies the view that states in

![]() $\mathop{\mathrm{split}}(\mathcal{T})$

represent global states for the compound

$\mathop{\mathrm{split}}(\mathcal{T})$

represent global states for the compound

![]() $\mathcal{T}$

.

$\mathcal{T}$

.

Proposition 4 (distributed and global states)

There is a bijection between the set of compatible stages in

![]() $\|_Y\mathcal{T}_n$

and the set of states in

$\|_Y\mathcal{T}_n$

and the set of states in

![]() $\mathop{\mathrm{split}}(\|_Y\mathcal{T}_n)$

.

$\mathop{\mathrm{split}}(\|_Y\mathcal{T}_n)$

.

4 Distributed and global paths

In preparation for the definition of satisfaction in following sections, we need an operational, or step, semantics for all our transition structures. They are given by paths (for atomic and plain structures) and sets of compatible paths (for compounds). They are defined in this section.

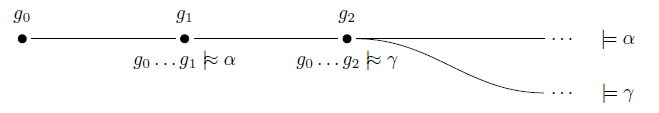

Definition 17 (path and maximal path)

A path in ![]() is a finite or infinite sequence of adjacent stages

is a finite or infinite sequence of adjacent stages

![]() $\overline{g}=g_0\mathrel{\rightarrow} g_1\mathrel{\rightarrow}\dots$

starting at the structure’s initial stage. We call such a path maximal if it is either infinite or it is finite and its final stage has no stages adjacent to it.

$\overline{g}=g_0\mathrel{\rightarrow} g_1\mathrel{\rightarrow}\dots$

starting at the structure’s initial stage. We call such a path maximal if it is either infinite or it is finite and its final stage has no stages adjacent to it.

Similarly, a path in

![]() $\mathcal{T}=(Q,\mathrel{\rightarrow},P,q_0)\in{{\textsf{TrStr}}}$

is a sequence of adjacent states

$\mathcal{T}=(Q,\mathrel{\rightarrow},P,q_0)\in{{\textsf{TrStr}}}$

is a sequence of adjacent states

![]() $\overline{q}=q_0\mathrel{\rightarrow} q_1\mathrel{\rightarrow}\dots$

. We call such a path maximal if it is either infinite or it is finite and its final state has no states adjacent to it.

$\overline{q}=q_0\mathrel{\rightarrow} q_1\mathrel{\rightarrow}\dots$

. We call such a path maximal if it is either infinite or it is finite and its final state has no states adjacent to it.

Compatibility of paths is defined by means of a relation between indices which shows a way in which all paths can be traversed together, interleaving some steps, making other simultaneous, and keeping compatibility of stages at all times. The intuitive meaning of the following definition is that, if

![]() $\langle i_1,\dots,i_N\rangle$

is in the relation X, then the stages

$\langle i_1,\dots,i_N\rangle$

is in the relation X, then the stages

![]() $g_{1i_1},\dots,g_{Ni_N}$

are visited at the same time, each in its structure. Thus, each relation X describes a possible execution of the composed system.

$g_{1i_1},\dots,g_{Ni_N}$

are visited at the same time, each in its structure. Thus, each relation X describes a possible execution of the composed system.

Definition 18 (compatible paths)

Let

![]() $\mathcal{T}_n\in{{\textsf{atEgTrStr}}}$

for

$\mathcal{T}_n\in{{\textsf{atEgTrStr}}}$

for

![]() $n=1,\dots,N$

. For each n, let

$n=1,\dots,N$

. For each n, let

![]() $\overline{g_n}=g_{n0}\mathrel{\rightarrow} g_{n1}\mathrel{\rightarrow}\dots$

be a finite or infinite path in

$\overline{g_n}=g_{n0}\mathrel{\rightarrow} g_{n1}\mathrel{\rightarrow}\dots$

be a finite or infinite path in

![]() $\mathcal{T}_n$

. The paths

$\mathcal{T}_n$

. The paths

![]() $\{\overline{g_n} \mid n=1,\dots,N\}$

are said to be compatible (with respect to a given Y) iff there exists a relation between indices

$\{\overline{g_n} \mid n=1,\dots,N\}$

are said to be compatible (with respect to a given Y) iff there exists a relation between indices

![]() $X\subseteq\mathbb{N}^{\{1,\dots,N\}}$

satisfying the following conditions:

$X\subseteq\mathbb{N}^{\{1,\dots,N\}}$

satisfying the following conditions:

-

1.

$\langle0,\dots,0\rangle\in X$

.

$\langle0,\dots,0\rangle\in X$

. -

2. If

$\langle i_1,\dots,i_N\rangle\in X$

and

$\langle i_1,\dots,i_N\rangle\in X$

and

$g_{ni_n}$

is not the last stage in

$g_{ni_n}$

is not the last stage in

$\overline{g_n}$

for at least one

$\overline{g_n}$

for at least one

$n\in\{1,\dots,N\}$

, then for exactly one nonempty

$n\in\{1,\dots,N\}$

, then for exactly one nonempty

$M\subseteq\{1,\dots,N\}$

we have that

$M\subseteq\{1,\dots,N\}$

we have that

$\langle i'_1,\dots,i'_N\rangle\in X$

, where

$\langle i'_1,\dots,i'_N\rangle\in X$

, where

$i'_n=i_n+1$

if

$i'_n=i_n+1$

if

$n\in M$

, and

$n\in M$

, and

$i'_n=i_n$

otherwise.

$i'_n=i_n$

otherwise. -

3. All tuples in X can be obtained by means of the two previous conditions.

-

4.

$\langle i_1,\dots,i_N\rangle\in X$

implies the compatibility (with respect to Y) of the stages

$\langle i_1,\dots,i_N\rangle\in X$

implies the compatibility (with respect to Y) of the stages

$g_{ni_n}$

(

$g_{ni_n}$

(

$n=1,\dots,N$

).

$n=1,\dots,N$

). -

5. For each stage

$g_{ni}$

in each path

$g_{ni}$

in each path

$\overline{g_n}$

, the index i appears as the nth component of some tuple in X.

$\overline{g_n}$

, the index i appears as the nth component of some tuple in X.

Further, a set of paths is said to be maximally compatible if no path or subset of paths in it can be extended with new stages in the respective components while maintaining compatibility.

The conditions, specially Condition 2, make it possible to arrange all the tuples in X in a linear sequence, which is shown in Proposition 5 to correspond to a path in the split system. Thus, paths in the split can be seen as global paths.

Condition 5 entails that the paths are all traversed together in their entirety. This, however, does not mean each path is maximal in its component: a partial path can be a member of a compatible set, as long as X shows how to traverse it to its last (though maybe not terminal) stage.

For example, consider the paths for the chained buffers from Section 2.1

-

in BUFFER1: idle

$\to$

receiving

$\to$

receiving

$\to$

gotToken

$\to$

gotToken

$\to$

sending

$\to$

sending

$\to\cdots$

;

$\to\cdots$

; -

in BUFFER2: the same as in BUFFER1;

-

in BUFFER3: the single-stage path idle.

A set X showing how to traverse these three paths would include, among others, the following triples:

-

$\langle 0,0,0\rangle$

, representing the three paths starting at idle;

$\langle 0,0,0\rangle$

, representing the three paths starting at idle; -

$\langle 1,0,0\rangle$

and

$\langle 1,0,0\rangle$

and

$\langle 2,0,0\rangle$

, representing only the first path advancing one step and two steps;

$\langle 2,0,0\rangle$

, representing only the first path advancing one step and two steps; -

$\langle 3,1,0\rangle$

, representing the first and second paths advancing to the respective stages sending and receiving, which are compatible.

$\langle 3,1,0\rangle$

, representing the first and second paths advancing to the respective stages sending and receiving, which are compatible.

As a side note, compatibility of paths cannot be defined pairwise, as we did for compatibility of stages in Definition 16. It need not be the case that three paths can be traversed simultaneously keeping compatibility of stages, even if any two of them can.

Consider

![]() $\mathop{\mathrm{split}}(\|_Y\mathcal{T}_n)$

. Its states are tuples of components’ stages. Thus, for each atomic component

$\mathop{\mathrm{split}}(\|_Y\mathcal{T}_n)$

. Its states are tuples of components’ stages. Thus, for each atomic component

![]() $\mathcal{T}_n$

of

$\mathcal{T}_n$

of

![]() $\mathcal{T}$

, a projection map

$\mathcal{T}$

, a projection map

![]() $\pi_n$

can be defined from the states of

$\pi_n$

can be defined from the states of

![]() $\mathop{\mathrm{split}}(\|_Y\mathcal{T}_n)$

to the stages of

$\mathop{\mathrm{split}}(\|_Y\mathcal{T}_n)$

to the stages of

![]() $\mathcal{T}_n$

. This projection can be extended to paths. However, our definitions allow for a component to advance while others stay in the same stage, so, in general, a pure projection would produce repeated stages (stuttering) that we want to remove.

$\mathcal{T}_n$

. This projection can be extended to paths. However, our definitions allow for a component to advance while others stay in the same stage, so, in general, a pure projection would produce repeated stages (stuttering) that we want to remove.

Definition 19 (projection)

Let

![]() $q=\langle g_1,\dots,g_N\rangle$

be a state of

$q=\langle g_1,\dots,g_N\rangle$

be a state of

![]() $\mathop{\mathrm{split}}(\|_Y\mathcal{T}_n)$

and

$\mathop{\mathrm{split}}(\|_Y\mathcal{T}_n)$

and

![]() $\overline{q}=q_0\mathrel{\rightarrow} q_1\mathrel{\rightarrow}\dots$

be a path in

$\overline{q}=q_0\mathrel{\rightarrow} q_1\mathrel{\rightarrow}\dots$

be a path in

![]() $\mathop{\mathrm{split}}(\|_Y\mathcal{T}_n)$

. For each

$\mathop{\mathrm{split}}(\|_Y\mathcal{T}_n)$

. For each

![]() $n=1,\dots,N$

:

$n=1,\dots,N$

:

-

we define

$\pi_n(q)=g_n$

;

$\pi_n(q)=g_n$

; -

we define

$\pi_n(\overline{q})$

as the result of removing stuttering (that is, simplifying consecutive repetitions) from

$\pi_n(\overline{q})$

as the result of removing stuttering (that is, simplifying consecutive repetitions) from

$\pi_n(q_0) \mathrel{\rightarrow} \pi_n(q_1) \mathrel{\rightarrow}\dots$

$\pi_n(q_0) \mathrel{\rightarrow} \pi_n(q_1) \mathrel{\rightarrow}\dots$

Proposition 5 (distributed and global paths)

There is a bijection between sets of compatible paths in

![]() $\{\mathcal{T}_n\}_n$

(with respect to Y) and paths in

$\{\mathcal{T}_n\}_n$

(with respect to Y) and paths in

![]() $\mathop{\mathrm{split}}(\|_Y\mathcal{T}_n)$

. Also, there is a bijection between sets of maximally compatible paths in

$\mathop{\mathrm{split}}(\|_Y\mathcal{T}_n)$

. Also, there is a bijection between sets of maximally compatible paths in

![]() $\{\mathcal{T}_n\}_n$

(with respect to Y) and maximal paths in

$\{\mathcal{T}_n\}_n$

(with respect to Y) and maximal paths in

![]() $\mathop{\mathrm{split}}(\|_Y\mathcal{T}_n)$

.

$\mathop{\mathrm{split}}(\|_Y\mathcal{T}_n)$

.

The paths in a compatible set are not required to be maximal. Indeed, any projection of

![]() $\overline{q}$

may fail to be maximal in its component, even if

$\overline{q}$

may fail to be maximal in its component, even if

![]() $\overline{q}$

is in the split.

$\overline{q}$

is in the split.

Proof. We prove first that, for

![]() $\overline{q}$

a path in

$\overline{q}$

a path in

![]() $\mathop{\mathrm{split}}(\|_Y\mathcal{T}_n)$

, the projections

$\mathop{\mathrm{split}}(\|_Y\mathcal{T}_n)$

, the projections

![]() $\pi_n(\overline{q})$

, for

$\pi_n(\overline{q})$

, for

![]() $n=1,\dots,N$

, are compatible paths, with the relation X (required by Definition 18) being induced by

$n=1,\dots,N$

, are compatible paths, with the relation X (required by Definition 18) being induced by

![]() $\overline{q}$

itself. Because the projections

$\overline{q}$

itself. Because the projections

![]() $\pi_n$

remove stuttering, we need to be careful with the resulting indices. We introduce a function s which, when applied to

$\pi_n$

remove stuttering, we need to be careful with the resulting indices. We introduce a function s which, when applied to

![]() $\pi_n(q_i)$

(the nth component of the ith state appearing in

$\pi_n(q_i)$

(the nth component of the ith state appearing in

![]() $\overline{q}$

), returns the index of that stage in the path

$\overline{q}$

), returns the index of that stage in the path

![]() $\pi_n(\overline{q})$