Policy Significance Statement

Various countries are embracing digital technologies, but little is known about citizen attitudes and if and how they differ across digital technologies and in different political and cultural contexts. This study looks at public opinion in China, Germany, the US, and the UK and highlights commonalities and differences. Policymakers should take these attitudes on the risks and benefits of digital technologies into account when devising digital policies in their respective contexts.

1. Introduction

The adoption of digital technologies is expanding rapidly across the globe. Governments’ embrace of digital tools has led to innovative breakthroughs in public service delivery, efficiency gains, and new forms of citizen participation (Chadwick, 2003; Lee et al., 2011). Algorithmic decision-making processes often lead to more evidence-based policy (Mayer-Schönberger and Cukier, 2013; Höchtl et al., 2016; Pinker, 2018) and, thus, potentially result in fairer decisions than those formulated by persons who may be influenced by greed, prejudice, or simply incomplete information (Gandomi and Haider, 2015; Lepri et al., 2017).

At the same time, the new reliance on digital tools has also stirred social, ethical, and legal concerns about governments’ extensive adoption of digital technologies. Increasing evidence points to the risks of biases, values, and ideologies being expressed through smart applications that run on flawed data inputs and algorithms (Kraemer et al., 2011; O’Neil, 2016). Algorithmic decision-making carries the biases of the human actors who construct the algorithms and lacks transparency and accountability owing to technical complexity and/or intentional secrecy or biases (Citron and Pasquale, 2014; Diakopoulos, 2015; Pasquale, 2015; Burrell, 2016; Lepri et al., 2017). Generally, the more sophisticated a technology is, the more “black-boxed” its functionality is to users and the more it lacks scrutiny from the general public (Bodo et al., 2017). Moreover, the increasing proliferation of digital devices in both public and private spaces raises unprecedented challenges concerning surveillance and defending citizens’ right to privacy (Brayne, 2017; Monahan, 2018; Park, 2020).

Democratic and authoritarian states alike are incorporating digital technologies into governance processes. In the context of authoritarian states, recent evidence suggests that digital-based governance approaches increase the chances of regime survival by enhancing autocrats’ surveillance and repression capacity while also providing new mechanisms of information collection and control (Gunitsky, 2015; Wright, 2018; Guriev and Treisman, 2019; Frantz et al., 2020; Earl et al., 2022). For instance, the Iranian and Syrian governments have adopted various digital surveillance technologies to spy on citizens they perceive as political threats (Gohdes, 2014; Gunitsky, 2015). The Singaporean People’s Action Party has used digital technology to soft-sell public policies and counter anti-regime comments (Tan, 2020). Recently, the Russian regime used various forms of digital surveillance tools to suppress dissent against the war in Ukraine (Bushwick, 2022). In sum, the evidence suggests that digital technologies overwhelmingly strengthen the state rather than society as they reduce the costs for authoritarian leaders to control information and identify potential political opponents.

Despite such a haunting specter generated by digital technologies, existing studies on public opinion show high public acceptance of digital technologies, surveillance, and intrusion by the state (Su et al., 2021; Liu, 2022). While these studies are very informative in explaining this public support, which can be surprising at first sight, they overlook a small but significant social group, namely those who are suspicious of digital technologies. Here, these skeptics are referred to as digital doubters. Digital doubters are citizens who express unambiguous disapproval of government-led digital technologies. This study looks at citizens’ attitudes toward three major digital technologies in different political contexts: social credit systems (SCSs), facial recognition technology (FRT), and contact tracing apps (CTAs), with a focus on analyzing digital doubters. Based on three separate online surveys that are combined for this analysis, this study analyzes which characteristics are shared by citizens who become digital doubters and what separates them from the vast majority of supportive citizens. In particular, the analysis checks whether the reasons are the same as those expected in the existing literature, namely that the risks from these technologies (including surveillance, privacy violations, and discrimination) outweigh the possible benefits (e.g., improved security, governance efficiency, or simply convenience). Little is known about digital doubters in different political contexts, a gap this article aims to help narrow.

This article studies citizens’ skeptical attitudes toward digital technologies in four countries: China, Germany, the UK, and the US. We selected these four countries because their governments have rolled out a variety of digital technology systems. China has most strongly embraced government applications of digital technologies by, for instance, launching local social credit pilots in more than 60 cities (Li and Kostka, 2022), setting up highway toll booths with facial recognition cameras to detect drivers evading fares (Ji et al., 2018), or equipping schools to monitor pupil attendance (Article 19, 2021). In February 2020, at the start of the COVID-19 pandemic, China was also the first country to introduce a CTA, the China Health Code, and also used FRT to enforce quarantine rules (Roussi, 2020). In the US, the adoption of FRT and CTAs is also spreading, albeit not as fast as in China (Prakash, 2018; Harwell, 2019). In the UK, police departments have experimented with live face-tracking (Satriano, 2019), whereas in Germany, a country where the topic of data privacy is especially prominent in public debate, FRT roll-out is limited and adoption is confined to major airports that integrate FRT for identity verification. These four countries also represent a politically diverse group: an authoritarian state, a federal parliamentary republic, a parliamentary constitutional monarchy, and a presidential republic. This mixture allows us to study different political contexts. We expect to identify overarching explanatory factors that are applicable across cases, as well as context-specific dimensions.

The results show that the number of digital doubters is not small, even in a strong authoritarian state like China. While up to 10% of Chinese citizens belong to the group of “digital doubters,” this group is the largest in Germany with 30% of citizens. The US and the UK are in the middle with approximately 20%. Numerous factors explain why digital doubters are vigilant about and suspicious of digital technologies, despite the state seeking to persuade citizens to tolerate or even welcome massive surveillance and digital technologies. Generally, citizens who belong to the group of strong doubters (i.e., strongly opposing these digital technologies) are not convinced of the effectiveness and usefulness of digital technologies, including perceived benefits such as more convenience, efficiency, or security. In the group of doubters (i.e., strongly opposing or being neutral toward these digital technologies), the doubting attitudes are also associated with concerns about technology risks, especially privacy concerns. In China, there are more doubts about visible digital technologies such as FRT than CTAs and SCSs. We find that the more citizens lack trust in their government, the more likely they are to belong to the group of digital doubters. The results demonstrate that in both democratic and authoritarian states, there are citizens opposing the adoption of certain technologies. This emphasizes the need for an urgent debate on how to regulate these technologies to ensure they align with societal preferences.

2. Literature Review

2.1. Authoritarian digital governance, public opinion, and doubters

Authoritarian rulers have traditionally used a variety of means to ensure control over the public, including repression, cooptation, surveillance, and manipulation of information (Davenport, 2007; Gerschewski, 2013). Digital technologies have helped autocrats pro-actively frame and manipulate information (Deibert, 2015; Guriev and Treisman, 2019), co-opt social media (Gunitsky, 2015), or flood the web with distracting messages (Roberts, 2018; Munger et al., 2019). Digital technologies are also used to expand the state’s capacity to monitor early protests and identify potential opponents while granting average people more freedom to access information (MacKinnon, 2011). In this sense, digital tools offer autocrats more social control at a much lower cost and reduce the likelihood of protest (Kendall-Taylor et al., 2020).

As citizens face both an expanding surveillance state and greater freedom to monitor or even challenge the state, their attitudes toward digital technology have become vitally important. A growing body of research on public opinion has found high public acceptance of digital technologies, surveillance, and intrusion by the state (Su et al., 2021; Liu, 2022; Xu et al., 2022). Several explanations have been proposed for why citizens strongly support their authoritarian governments and various digital technology programs. The first explanation is economic: development boosts public support for authoritarian states in the short term, although in the long run it may also foster critical citizens. For instance, in China over the past few decades, strong support of the government as a result of economic growth has eclipsed people’s distrust of the government generated by a change in values (Wang, 2005; Holbig and Gilley, 2010). Citizens would give up privacy, freedom, and other democratic rights for economic gains. For instance, Su et al. (2021) find that in China, support for digital surveillance is positively associated with overall trust in the government and satisfaction with the regime.

The second explanation for strong support relates to increased nationalism. Online nationalism stands out as one of the most influential public opinion sentiments that can be exploited by authoritarian states. It is often agreed that the rise of nationalism in China in the post-Tiananmen era is engineered to counteract eroding communist ideology among the public (Downs and Saunders, 1999; Zheng, 1999; Zhao, 2004). The third major explanation is security over privacy, as citizens value personal and financial safety more (Yao-Huai, 2005; Wang and Yu, 2015). Recent research suggests that when people think of digital technologies, surveillance and control are not foremost in their minds but rather notions of convenience and security (Kostka et al., 2021; Su et al., 2021). Yet the existence of digital doubters challenges these existing explanations based on economic gains, nationalism, and security.

At the same time, public support for the state’s digital tools and programs is fragile. For instance, the Chinese state is increasingly encountering social resistance to various aspects of its massive digital surveillance system. The Suzhou government, for example, faced large protests when it promoted a so-called “civility code” that was part of its local SCS. Within a few days, this civility code had to be dropped (Chiu, 2020; Du, 2020). Similar resistance has been encountered when private companies and governments promote facial recognition. Citizens have also complained and even filed lawsuits against companies that inappropriately collected their personal facial information (Huang et al., 2020). In addition, people have engineered various means to evade and counter such intensified state surveillance (Li, 2019). Generally, citizens’ trust in digital governance is tightly linked to their trust in government (Srivastava and Teo, 2009). In China, for instance, citizens are generally found to have high trust in their government (Li, 2004; Manion, 2006), but recent studies show that trust is lower among the young, more educated, and economically better-off (Zhao and Hu, 2017), which suggests that public opinion can shift quickly.

2.2. Cost–benefit calculus

To understand why citizens accept the adoption of digital technologies into governance processes despite possible disadvantages, the literature finds that a major reason is citizens’ risk–benefit calculations. According to the privacy calculus theory, people often weigh potential benefits and risks before deciding to disclose private information (Laufer and Wolfe, 1977).

Previous studies have shown significant variation in privacy attitudes within a society. For instance, Alan Westin’s research on public perceptions of privacy in the US shows the American public has a very pragmatic approach regarding specific privacy issues (Westin, 1996). According to his surveys, 25% of the US population can be classified as “privacy fundamentalists,” 18% as “privacy unconcerned,” and the remaining 57% as “privacy pragmatists.” Privacy fundamentalists consider privacy an inherent right that should be protected at all costs, while privacy pragmatists decide on a case-by-case basis whether to align themselves with the privacy fundamentalists or the privacy unconcerned, depending on their assessment of the trade-off between giving up their private information and gaining valuable benefits (Westin, 1996). Similarly, recent research on China shows varying privacy attitudes among different societal groups. While privacy concerns regarding data collection by the government are low among citizens, they are somewhat elevated among individuals who are not ideologically aligned with the state (Steinhardt et al., 2022). The low level of privacy concerns in China may be attributed to the absence or weakness of data protection regulations prior to the introduction of the Personal Information Protection Law in 2021. By contrast, countries like Germany and the UK had already increased citizens’ awareness of privacy issues through the implementation of the General Data Protection Regulation (GDPR) laws in 2018.Footnote 1

Despite privacy concerns, people tend to disclose their personal information if they think the benefits outweigh the risks (Acquisti and Grossklags, 2005; Krasnova et al., 2010). That is, they sacrifice privacy in exchange for benefits. Davis and Silver (2004) show that citizens in the US trade their civil liberties, such as those infringed by electronic surveillance, for better security and safety, especially in the aftermath of 9/11. In their study on citizens’ acceptance of facial recognition technologies, Kostka et al. (2021) highlight a trade-off situation in which citizens value improved security and convenience over drawbacks. This research draws on the privacy calculus theory to understand why certain citizens are more dubious about certain technologies than others. To analyze citizens’ cost–benefit considerations, we test the perceived benefits by using the following broader dimensions: convenience, efficiency, security, and improved regulation (social order). The perceived costs or risks of digital technologies are tested by the following measurements: surveillance, privacy violation, discrimination and biases, commercial (mis)use, and data misuse.

2.3. Digital technology adoption in China, Germany, the UK, and the US

China, Germany, the UK, and the US have all experimented with and applied some or all forms of the three technologies in focus: SCS pilots, FRT, and COVID-19 CTAs. SCS pilots, which are only employed in China, have been part of the Chinese state effort to both surveil and morally educate its citizens, and their rewards and punishments for the country’s citizens are based on blacklists and redlists (Creemers, 2017; Engelmann et al., 2019).Footnote 2 The SCS is not a single integrated initiative but a range of fragmented ones through which the Chinese government aims to consolidate legal and regulatory compliance and improve the financial services industry (Chorzempa et al., 2018). While some scholars have shown extreme uneasiness about SCSs becoming the precursor to an Orwellian society (Chorzempa et al., 2018; Dai, 2018; Mac Síthigh and Siems, 2019), SCSs are generally supported in China, with more socially advantaged citizens (wealthier, better-educated, and urban residents) registering the strongest approval (Kostka, 2019; Liu, 2022). As of 2022, there were 62 provincial and local government–led SCS initiatives serving as pilot projects (Li and Kostka, 2022). Notable examples include the Hangzhou government’s Qianjiang Score (钱江分), the Rongcheng government’s Rongcheng Score (容成分), and the Fuzhou provincial government’s Jasmine Score (茉莉分). Technological intensity varies across these SCS projects, with some adopting low-tech approaches, like in Rongcheng (Gan, 2019), while others have experimented with more advanced technologies such as facial recognition, as exemplified in Shenzhen (Creemers, 2018).

FRT adoption is widespread, with particularly high adoption rates in China, followed by the US and the UK, and only a limited roll-out in Germany, where adoption is confined to major airports that integrate FRT for identity verification. In China, government agencies have adopted FRT for multiple purposes, including urban policing, transportation systems, digital payment systems (e.g., pension payments in Shenzhen), and the social control of Muslim Uighur minorities in Xinjiang (Mozur, 2019; Brown et al., 2021). Therefore, it is not uncommon in China to find public spaces, including public libraries, train stations, and airports, equipped with FRT (Brown et al., 2021). Commercial applications have also firmly embedded the technology in the daily lives of Chinese citizens through offerings such as online banking and commercial payment systems (e.g., Alipay’s Smile to Pay program).

With regard to CTAs, China was the first country to introduce mobile contact tracing as a means of curbing the spread of the COVID-19 virus, rolling out its Health Code app nationwide in February 2020. After registration, the app automatically collects travel and medical data, as well as self-reported travel histories, to assign users a red, yellow, or green QR code. A green code gives users unhindered access to public spaces, a yellow code indicates that the person might have come into contact with COVID-19 and, therefore, has to be confined to their home, and a red code identifies users infected with the virus. As public spaces like shopping malls can only be accessed with a green QR code, installing the Health Code app became effectively mandatory in China, resulting in broad adoption of the app among Chinese citizens. By contrast, in Germany, the UK, and the US, CTAs were voluntary and predominantly based on Bluetooth technology. Germany launched its Corona-Warn-App in June 2020, after a drawn-out discussion over data privacy issues and the related design of the app. The app uses Bluetooth technology to track the distance and length of interpersonal encounters between people who carry a mobile phone with the app installed. In the US, rather than a top-down approach by the central government, state and local governments cooperated with Apple and Google to develop local apps (Fox Business, 2020; Johnson, 2020a). By the end of 2020, more than 30 states had adopted CTAs in the US (Johnson, 2020b). As in the German case, these apps rely on Bluetooth technology, their use is voluntary, and they notify users once they have been in close contact with infected persons for at least 5 minutes. They do not collect personal information and do not upload information about personal encounters to central servers (Kreps et al., 2020; Kostka and Habich-Sobiegalla, 2022).

2.4. Analytical framework

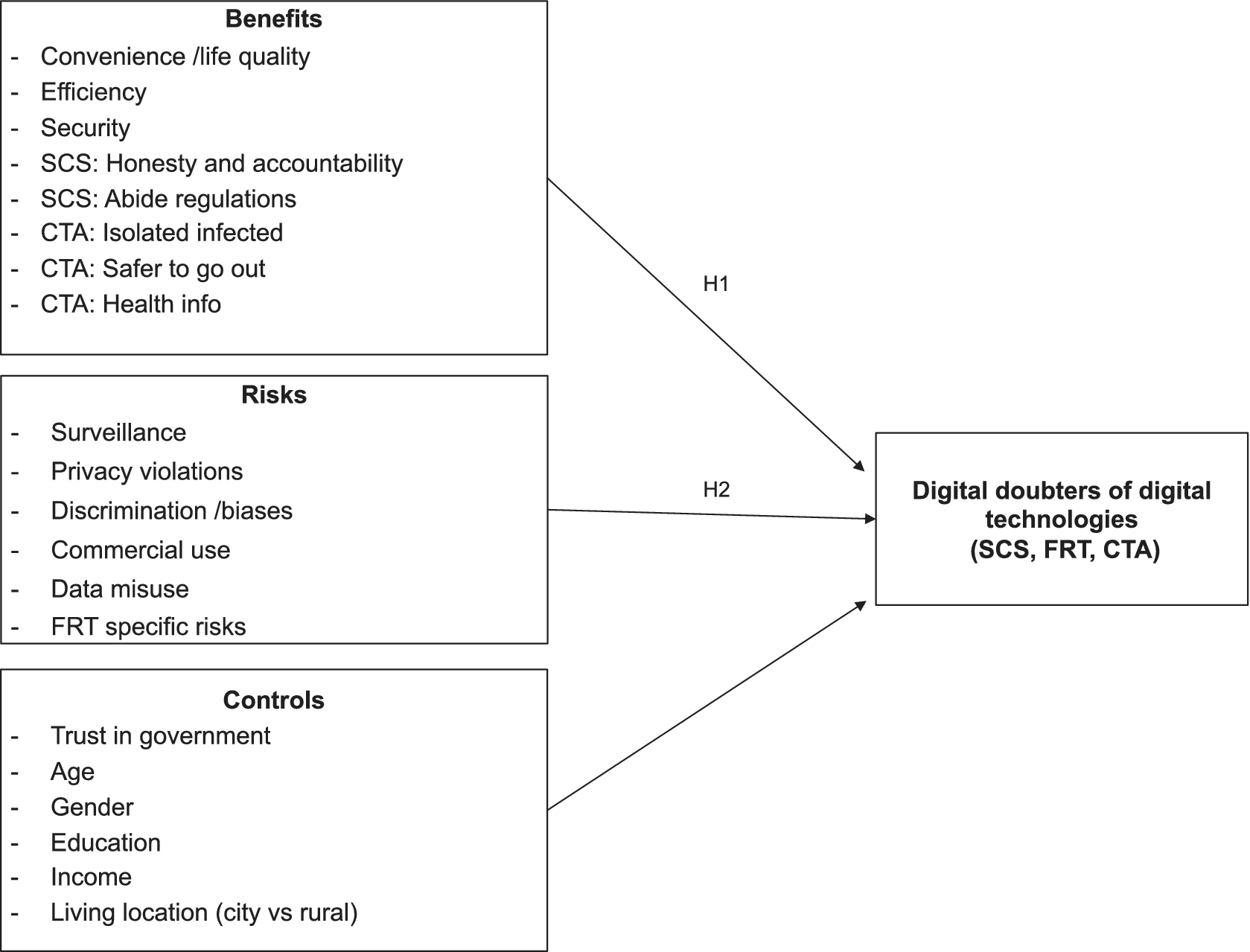

This study takes as its dependent variable respondents who expressed doubts about digital technologies. Building on previous studies that report on acceptance levels, this article studies the groups that were more doubtful about these new technologies. In the analysis, two groups are identified. Strong digital doubters are individuals who either strongly or somewhat oppose the use of digital technology. Digital doubters are individuals who strongly oppose, somewhat oppose, or neither accept nor oppose the use of digital technology. The answer option of “neutral” is included in the group of digital doubters as it is likely that respondents in China opted for a more neutral answer when they actually do harbor doubts about the digital technologies.Footnote 3 The explanatory variables, which are derived from the literature on surveillance, security–privacy trade-offs, and privacy calculus theory, are a range of perceived benefits and risks. Two hypotheses are tested:

Hypothesis 1: Digital doubters are citizens who are not aware of or do not believe in the various benefits of digital technologies, such as convenience, security, and efficiency (H1).

Hypothesis 2: Digital doubters are citizens who are aware of or believe in the various risks of digital technologies, such as surveillance, privacy violations, discrimination, and misuse (H2).

Trust in government, along with sociodemographic variables, is controlled for. Figure 1 summarizes the analytical framework.

Figure 1. Analytical framework.

3. Data and Methods

This article looks at citizens’ attitudes toward major digital technologies in China, Germany, the UK, and the US by selecting SCSs (China only), FRT (all four countries), and CTAs (China, Germany, and the US only) as case studies. The analysis is based on three online surveys conducted through one professional survey firm in 2018 (SCSs), 2019 (FRT), and 2020 (CTAs).Footnote 4 The survey company cooperated with app and mobile website providers. As a sampling method, we used river sampling, also referred to as intercept sampling or real-time sampling, to draw participants from a base of between 1 and 3 million unique users (Lehdonvirta et al., 2021).Footnote 5 This allowed both first-time and regular survey-takers to participate. From a network of more than 40,000 participating apps and mobile websites, the different surveys included respondents through more than 100 apps comprising different formats and topics such as shopping (e.g., Amazon), photo-sharing (e.g., Instagram), lifestyle (e.g., DesignHome), and messaging (e.g., Line) providers. Offer walls gave participants the option of receiving small financial and nonmonetary rewards, such as premium content, extra features, vouchers, and PayPal cash, as an incentive to take part in our survey. Users did not know the topic of the questionnaire before opting in to participate. Instead, each participant underwent a prescreening before being directed to a survey that they were a match for. Table 1 summarizes the key features of each survey, and Tables A1–A3 in the Supplementary Material provide further data on the sample. The Supplementary Material offers further details on the survey method, questionnaire design, and sample representativeness.

Table 1. Three online surveys on SCSs, FRT, and CTAs

Abbreviations: CTAs, contact tracing apps; FRT, facial recognition technology; SCSs, social credit systems.

a Respondents in China were sampled by region; for the other three countries the sample provided by the survey company generally resembles the population and no additional regional sampling quota was needed.

Table 2 summarizes the measures and hypotheses for this article.

Table 2. Measurement table and hypotheses

Abbreviations: CTAs, contact tracing apps; FRT, facial recognition technology; SCSs, social credit systems.

a Low level includes: no formal education; medium level includes: high school or equivalent and vocational training; high level includes: bachelor’s degree and above.

4. Results

4.1. Digital doubters across the three technologies

The findings show that in China, the group of digital doubters is largest when it comes to FRT: Here, 9% of the respondents stated they strongly or somewhat oppose the technology, compared with only 2% for SCSs and CTAs. The group of neutral respondents is also largest for FRT with 25%, as compared with 19% and 17% for SCSs and CTAs, respectively. The group of digital doubters (i.e., strong doubters and neutral group) is 34% for FRT, 21% for SCSs, and 19% for CTAs. The different rates of doubt suggest that Chinese citizens are less accepting of FRT, which matches a person’s facial features from a digital image or video to identifying data. Interestingly, SCSs, which potentially cover a wider range of areas, have much lower rates of doubt. The lowest doubts are for CTAs, which could possibly be explained by the fact that during the pandemic, the perceived health benefits of CTAs have had a particularly strong effect on CTA acceptance in China (Kostka and Habich-Sobiegalla, 2022).

The group of strong doubters in Germany is much larger than in China, with 32% for FRT and 27% for CTAs. A third of the respondents hold a neutral attitude toward these two technologies, making the group of digital doubters 63% for FRT and 60% for CTAs. The high level of doubt regarding the German Corona-Warn-App is surprising, as during the design phase, numerous adjustments were made to address Germans’ high levels of privacy concerns, but the findings suggest that doubts remain. In the UK and the US, the group of strong digital doubters is slightly smaller than in Germany: 23% of respondents in the UK are strong doubters of FRT, while in the US, 26% are strong doubters of FRT and 22% are strong doubters of CTAs. Table 3 summarizes these data.

Table 3. Number of strong doubters and doubters in the population

Note: Strong doubters of digital technology are individuals who either strongly or somewhat oppose the use of digital technology. Neutral respondents are individuals who neither accept nor oppose digital technology. Digital doubters are individuals who are either strong doubters or neutral.

Abbreviations: CTAs, contact tracing apps; FRT, facial recognition technology; SCSs, social credit systems.
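
The way the doubter shares are aggregated can be illustrated with a short sketch. It uses only the percentages for China reported in the text above; the variable and function names are illustrative assumptions and are not taken from the study's replication materials.

```python
# Shares (in percent) reported in the text for China.
# "strong" = strongly or somewhat oppose; "neutral" = neither accept nor oppose.
china_shares = {
    "FRT": {"strong": 9, "neutral": 25},
    "SCSs": {"strong": 2, "neutral": 19},
    "CTAs": {"strong": 2, "neutral": 17},
}


def doubter_share(shares):
    """Digital doubters are strong doubters plus neutral respondents."""
    return shares["strong"] + shares["neutral"]


# Hypothetical helper output: one digital-doubter share per technology.
china_doubters = {tech: doubter_share(s) for tech, s in china_shares.items()}
print(china_doubters)  # {'FRT': 34, 'SCSs': 21, 'CTAs': 19}
```

The sums reproduce the 34%, 21%, and 19% reported for FRT, SCSs, and CTAs in China.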

4.2. Explanatory factors

We assessed the association between our predictor variables and digital technology doubts for the three different technologies by using logit regressions for a binary outcome dependent variable. We ran two models for each technology and used two dependent variables: strong doubters and digital doubters. In the model with strong doubters as the dependent variable, we coded respondents who either strongly or somewhat oppose a particular digital technology as 1, the others as 0. In the model with digital doubters as the dependent variable, we coded respondents who either strongly or somewhat oppose (or have a neutral opinion toward) a certain technology as 1, the others as 0. Trust in government and sociodemographic factors are included as control variables for each of the three technologies. The exponentiated coefficients in the graphics and regression tables indicate the odds ratio (OR). When 0 < OR < 1, it implies a negative relationship between the explanatory variable and the dependent variable; when OR > 1, it implies a positive relationship.
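
The coding of the two dependent variables and the reading of an odds ratio can be sketched as follows. This is a minimal illustration rather than the models estimated in the article: the response labels for the accepting categories, the toy data, and the single binary predictor are all assumptions. It relies on the fact that, for a logit model with one binary predictor and no controls, the exponentiated coefficient equals the cross-product odds ratio of the 2×2 table.

```python
import math

# Answer labels: the "oppose" and "neutral" wording follows the text;
# the "accept" labels used in the toy data are assumed for illustration.
OPPOSE = {"strongly oppose", "somewhat oppose"}
NEUTRAL = "neither accept nor oppose"


def code_strong_doubter(answer):
    """1 if the respondent strongly or somewhat opposes, else 0."""
    return 1 if answer in OPPOSE else 0


def code_digital_doubter(answer):
    """1 if the respondent opposes or is neutral, else 0."""
    return 1 if answer in OPPOSE or answer == NEUTRAL else 0


def odds_ratio(outcome, predictor):
    """Cross-product odds ratio for a binary outcome and a binary predictor.

    Assumes no cell of the 2x2 table is empty.
    """
    pairs = list(zip(outcome, predictor))
    a = sum(1 for y, x in pairs if y == 1 and x == 1)
    b = sum(1 for y, x in pairs if y == 0 and x == 1)
    c = sum(1 for y, x in pairs if y == 1 and x == 0)
    d = sum(1 for y, x in pairs if y == 0 and x == 0)
    return (a * d) / (b * c)


# Hypothetical toy data: eight respondents and whether each believes
# that the technology improves security (1) or not (0).
answers = [
    "strongly oppose", "somewhat oppose", "neither accept nor oppose",
    "somewhat accept", "strongly accept", "somewhat accept",
    "strongly oppose", "strongly accept",
]
believes_security = [0, 0, 0, 1, 1, 1, 1, 0]

strong = [code_strong_doubter(a) for a in answers]
digital = [code_digital_doubter(a) for a in answers]

or_strong = odds_ratio(strong, believes_security)
or_digital = odds_ratio(digital, believes_security)

# An odds ratio below 1 corresponds to a negative logit coefficient.
print(round(or_strong, 3), round(math.log(or_strong), 3))
print(round(or_digital, 3), round(math.log(or_digital), 3))
```

In this toy example both odds ratios fall between 0 and 1, which would be read as a negative relationship between believing in the security benefit and being a doubter. The models in the article include several predictors and controls, so their odds ratios are conditional on those other variables.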

In line with our benefits–risks analytical framework, the different models measure the effects of different benefits (e.g., convenience, efficiency, security, improved regulations, isolation of COVID-infected) and risks (e.g., surveillance, privacy violations, discrimination/biases, data misuse). Tables A1–A5 in the Supplementary Material present the regression tables and additional information about the fit of the logistic regression model, including results for the variance inflation factor (VIF).

Numerous factors explain why a small group of citizens are doubtful of SCSs, as illustrated in Figure 2. Among the group of people who have strong doubts about SCSs, the most significant predictor is a belief that SCSs are not useful for pressuring companies to abide by regulations (odds ratio of 9.06). We also find a significant positive association between strong doubts about SCSs and the belief that SCSs are unfair. Furthermore, we find a significant positive association between respondents who have no confidence in the Chinese government and being strong doubters. In the model with digital doubters as the dependent variable, all groups with less confidence in the government show significant positive outcomes, with the “no confidence at all” group having the largest odds ratio. In other words, the less trust in the government there is, the more likely a respondent is to belong to the group of digital doubters. All other predictor variables and control variables are not significant, with the exception of the significant positive relation for education and income in the digital doubters group. In sum, strong doubters of SCSs believe that the systems themselves are ineffective or unfair.

Figure 2. Odds ratios of effects on digital doubters’ concerns about social credit systems (SCSs). *p < 0.05, **p < 0.01, and ***p < 0.001.
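
To give a sense of what an odds ratio of this size means, the following sketch converts an odds ratio into a change in probability. The odds ratio of 9.06 is taken from the text; the 2% baseline probability is a hypothetical value chosen for illustration only, since the baseline for the reference group is not reported in this section, and the function name is likewise an assumption.

```python
def apply_odds_ratio(baseline_prob, odds_ratio):
    """Return the probability implied by scaling the baseline odds by odds_ratio."""
    baseline_odds = baseline_prob / (1 - baseline_prob)
    new_odds = baseline_odds * odds_ratio
    return new_odds / (1 + new_odds)


# Hypothetical baseline: 2% of the reference group are strong doubters.
baseline = 0.02
implied = apply_odds_ratio(baseline, 9.06)
print(f"{baseline:.1%} -> {implied:.1%}")
```

Under this assumed baseline, an odds ratio of 9.06 would correspond to a probability of roughly 16%, other things being equal. Odds ratios are not probability ratios: the same odds ratio implies a different change in probability at a different baseline.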

Disbelief in the benefits of FRT also explains the attitude of respondents with strong doubts about FRT. With the exception of Germany for convenience and the UK for efficiency, the results in Figure 3 show a significant negative relationship between a doubting attitude toward FRT and beliefs in convenience and efficiency. In other words, digital doubters do not believe FRT offers more convenience and efficiency. For all four countries, there is also a significant negative association between doubts toward FRT and security, which suggests that digital doubters do not believe that a more widespread adoption of FRT results in improved security. Except for China, the digital doubter group also believes FRT can result in privacy violations, and therefore, they are more doubtful. With regard to surveillance, the results are mixed. For China, there is a positive relationship between perceived surveillance and the likelihood of being a strong doubter. For the UK, we find no significant relationship between whether respondents believe FRT will result in more surveillance and being a digital doubter. However, the results for Germany and the US are slightly surprising. We find that respondents who perceive that FRT will increase surveillance are less likely to be digital doubters in Germany and less likely to be strong digital doubters in the US (significant negative association). One possible explanation might be that people have different positive or negative associations with surveillance, and some respondents might have associated surveillance with increased public security. Overall, in all four countries, respondents find that FRT generally has more risks than benefits. The sociodemographic control variables are not significant, except for age in China (positive), gender in Germany and the UK (positive), education in Germany and the US (negative), and income (negative for doubters in China and positive for strong doubters in Germany).
In sum, one of the strongest indicators of doubt about FRT is whether a respondent believes that the technology increases security. At the same time, the more risks someone associates with FRT, including privacy violations, the more likely they are to be a digital doubter.

Figure 3. Odds ratios of effects on digital doubters’ concerns about facial recognition technology (FRT).

The analysis of CTAs also finds that strong doubters do not believe the technology has particular benefits. Figure 4 shows that in China, Germany, and the US, there is a significant negative relationship between being a strong doubter and the belief that CTAs offer health information. In all three countries, respondents who believe CTAs do not make it safer for people to go out are more likely to belong to the group of digital doubters. In the US and Germany, the lack of a belief that CTAs help isolate infected people also helps explain doubts about the CTAs. German and US respondents who perceive that CTAs will result in privacy violations and government surveillance are more likely to be digital doubters. In China, those who think that CTAs will result in more privacy violations are more likely to be strong digital doubters. Respondents who distrust the government somewhat or a lot are also likely to be digital doubters: we find a significant positive association in all three countries for those who do not trust the government much or at all. Sociodemographic control variables are mostly insignificant, except for age in Germany (negative association), gender in Germany (negative association), education in China and the US (negative association), and income levels in China and Germany (negative association).

Figure 4. Odds ratios of effects on digital doubters’ concerns about contact tracing apps. *p < 0.05, **p < 0.01, and ***p < 0.001.
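
As a reading aid for Figures 2 to 4, the following minimal sketch shows how an odds ratio relates to a regression coefficient, assuming the figures come from a logistic-type model (which the odds-ratio presentation suggests but which is an assumption here). The variable names and coefficient values are hypothetical illustrations and are not taken from the study's results.

```python
import math


def odds_ratio(coef: float) -> float:
    """Convert a logit coefficient into an odds ratio (OR = exp(coef))."""
    return math.exp(coef)


def direction(or_value: float) -> str:
    """Describe the direction of association implied by an odds ratio."""
    if or_value > 1:
        return "positive"
    if or_value < 1:
        return "negative"
    return "none"


# Hypothetical coefficients for illustration only (not the study's estimates).
examples = {
    "belief_in_security": -0.69,  # believing FRT improves security
    "privacy_concern": 0.41,      # expecting privacy violations
}

for name, coef in examples.items():
    ratio = odds_ratio(coef)
    print(f"{name}: OR = {ratio:.2f} ({direction(ratio)} association)")
```

Under this reading, an odds ratio below 1 corresponds to the "negative association" wording used above (the belief lowers the odds of being a digital doubter), and an odds ratio above 1 corresponds to a "positive association."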

4.3. Research limitations

The analysis is subject to a number of limitations. First, as this was an online survey using mobile phones and desktops, the findings can only reflect the views of the Internet-connected population in the selected countries. Second, respondents who chose to participate in online surveys may already have a particular affinity for technology, which could positively affect their stance toward innovations in this field, and the group of digital doubters might actually be larger than reported here. This effect may have been exacerbated by the virtual rewards individuals were promised for participating, since they might have been more likely to associate the positivity of incentives with positivity toward digital technology. Third, as the study combined three datasets, the respondents’ attitudes toward the three technologies cannot be directly compared. In the future, it would be helpful to conduct one larger survey rather than combining three datasets.

Furthermore, China’s authoritarian political context makes it difficult to express dissent against technologies that are officially endorsed by the government, and this might be reflected in the reported levels of technology nonacceptance in this study. Although participants were aware that any identifying data was anonymized and analyzed for research purposes only, we cannot exclude the possibility of preference falsification as some more cautious respondents may have given false answers due to concerns over possible reprisals from the state. For instance, variables like attitudes toward surveillance might actually be underreported.

5. Conclusion

Governments around the world are embracing digital technologies for information collection, governance, and social control. Recent studies suggest citizens in both democratic and authoritarian regimes may accept or even support the adoption of digital technologies for governance purposes, despite their clear surveillance potential (Xu, Reference Xu2019; Kostka et al., Reference Kostka, Steinacker and Meckel2021; Liu, Reference Liu2021; Xu et al., Reference Xu, Kostka and Cao2022). Using an online survey dataset on the public’s opinion of digital technologies in China, Germany, the UK, and the US, this article shows that these findings overlooked a small yet significant group of digital technology doubters. The analysis looks at citizens’ attitudes toward three major digital technologies in China: FRT, CTAs, and SCSs. The findings show that the group of “digital doubters” is largest in Germany, followed by the UK and the US, and smallest in China.

For all three technologies, we find that digital doubters are engaging in a cost–benefit calculus, which results in them rating the benefits lower than the risks. Respondents who belonged to the group of strong doubters are not convinced of the effectiveness and usefulness of digital technologies, including benefits such as greater convenience, efficiency, or security. The doubting attitudes are also associated with concerns about technology risks, especially privacy risks and surveillance threats. The findings add to the privacy calculus literature (Dinev and Hart, Reference Dinev and Hart2006) and highlight that digital doubters are more often skeptics because they do not believe in the perceived benefits. Our findings show that in both democratic and authoritarian states, there are citizens opposing the adoption of certain digital technologies. This underlines the importance of initiating societal debate to determine the appropriate regulations that align with these societal preferences.

Interestingly, in China, FRT, which matches a person’s facial features from a digital image or video with identifying data, raises more doubts than SCSs that potentially cover a wider range of areas in society. Doubts toward FRT might be higher because access to biometric data is a more visible intrusion on privacy than local governments’ collection of a variety of personal data as part of local SCSs. Recent research shows that SCSs predominantly target businesses and not individuals (Krause and Fischer, Reference Krause, Fischer and Everling2020) and that citizens, if they are affected by these SCSs, interpret them more as a regulatory tool to reinforce the social order (Kostka, Reference Kostka2019). Comparatively lower rates of doubt toward CTAs could possibly be explained by the fact that the technology came into use during the COVID-19 pandemic, with the pandemic offering a public health justification for technology adoption.

Finally, the impact of China’s digital technology extends beyond its domestic influence, as China has exported its information technology and potentially digital authoritarianism for years. With the country’s increasing ability to utilize digital technology as both a high-tech export good and a foreign policy tool, observers worry that if liberal democracies fail to offer a compelling and cost-effective alternative, the Chinese style of digital governance will spread fast around the globe (Polyakova and Meserole, Reference Polyakova and Meserole2019). Various countries are embracing the Chinese-style digital authoritarianism of extensive censorship and automated surveillance systems (Shahbaz, Reference Shahbaz2018). For instance, AI-powered surveillance has been deployed most sweepingly for repression in China, for example in online censorship and in Xinjiang, with other countries following suit (Kendall-Taylor et al., Reference Kendall-Taylor, Frantz and Wright2020). Therefore, the study of digital doubters within China not only remains theoretically intriguing but could also offer important implications for other digitalizing countries.

Supplementary material

The supplementary material for this article can be found at https://doi.org/10.1017/dap.2023.25.

Acknowledgments

The author is very grateful for excellent research support from Danqi Guo.

Funding statement

Funding was provided by the European Research Council (ERC Starting Grant No: 852169) and the Volkswagen Foundation Planning Grant on “State-Business Relations in the Field of Artificial Intelligence and its Implications for Society” (Grant 95172).

Competing interest

The author declares no competing interests.

Author contribution

Conceptualization: G.K.; Data curation: G.K.; Funding acquisition: G.K.; Investigation: G.K.; Methodology: G.K.; Project administration: G.K.; Resources: G.K.; Writing—original draft: G.K.; Writing—review and editing: G.K.

Data availability statement

The data that support the findings are available via the author’s institutional repository: http://doi.org/10.17169/refubium-39987
