Self-reported measures of political ideology have been extensively used in American politics scholarship.Footnote 1 It is generally assumed that such measures capture operational ideology, “ideological identification that is rooted in one's issue preferences” (Simas, 2023, 7).Footnote 2 As such, individuals who self-identify as “liberals” or “conservatives” in surveys are often assumed to hold a liberal or conservative system of beliefs about policy issues.Footnote 3

One problem with using standard self-reported measures (including the 7-point measure in the American National Election Studies, ANES) to capture operational ideology is that respondents may not understand what “liberal” and “conservative” mean in the first place (Converse, 1964; Kinder and Kalmoe, 2017; Kalmoe, 2020). If understandings of ideological labels differ systematically between social groups (such as Blacks versus Whites; see Jefferson, forthcoming), standard self-reported measures may suffer from differential item functioning (DIF), or a lack of measurement equivalence (Brady, 1985; King et al., 2004). Moreover, given how entrenched “liberal” and “conservative” have become as shorthand for Democrats and Republicans, both in the mass media and in the everyday lives of American citizens, conventional self-reported items may also capture symbolic ideology, “any ideological identification that stems from affect and/or desires to be associated with or distanced from those various groups or symbols typically connected to ideological labels” (Simas, 2023, 7).Footnote 4 These concerns cast doubt on standard self-reported measures as an unbiased measure of operational ideology.

This article offers direct evidence of the measurement problems of standard self-reported instruments. We design an original survey that not only tests individuals' understandings of ideological labels (“liberal” and “conservative”) but also shows experimentally how these understandings, often colored by partisanship and other individual characteristics, can alter ideological self-placements. By randomizing whether respondents receive objective definitions of ideological labels and whether the labels appear when respondents report their ideology, our experiment provides first-cut causal evidence on whether and how standard self-reported instruments, which are definition-free and label-driven, constitute an inappropriate measure of operational ideology.

Our original survey yields four major findings:

1. Mass understandings of “liberal” and “conservative” are weakly aligned with conventional definitions of these terms. In a series of closed-ended questions that draw on textbook definitions of ideology to test individual knowledge of these terms, respondents perform not much better than they would by mere guessing. This finding calls into question the validity of standard measures of ideology, which solicit self-reported ideology without providing respondents with a clear definition of ideological terms.

2. Understandings of these terms are heterogeneous. Not only are respondents identifying as moderates or Democrats less familiar with the standard definitions of “liberal” and “conservative,” but Blacks, women, and the less politically sophisticated also appear to be less conversant with the ideological language and labels. This finding raises important concerns about DIF for standard measures of ideology, which assume that different social groups understand, interpret, and respond to the question in the same way.

3. An alternative measure of ideology, which defines “liberal” and “conservative” without displaying these ideological labels, dramatically shifts partisans’ self-reported ideology. Under our label-free measure, which removes “liberal” and “conservative” from the question wording in a randomly assigned experimental condition, Democrats are less likely to identify as liberals and Republicans less likely to identify as conservatives. Democrats’ modal response changes from liberal to moderate, and Republicans’ modal response changes from conservative to moderate, as we move from the standard ANES measure to our label-free measure. This finding shows that standard self-reported measures, which display ideological labels without clarifying their meanings to respondents, may not cleanly capture operational ideology. Because these standard measures prime partisan attachments through the labels “liberal” and “conservative,” they may also capture symbolic ideology.

4. The label-free measure that removes ideological terms from the question wording substantially reduces the estimated degree of partisan-ideological sorting. In our baseline condition, where respondents receive the standard ANES question on ideology, the correlation between self-reported ideology and party identification is 0.58. In our experimental condition, where respondents report their ideology on our label-free measure, the correlation drops to 0.26. This finding suggests that standard evidence of partisan-ideological sorting in the US (which often relies on self-reported ideology) can be highly sensitive to alternative measurement approaches. High correlations between self-reported ideology and party identification may be driven not only by operational ideology but also by symbolic ideology.

Collectively, these findings have important implications for the use of self-reported measures of ideology in American politics. The existing literature often treats these measures, implicitly or explicitly, as unbiased measures of operational ideology. The underlying assumption is that these readily available measures can effectively capture individuals’ general sets of issue preferences or broader beliefs about the role of government in society. Our experiment, which allows us to estimate the causal impact of ideological labels on individuals’ self-reported ideology, offers new evidence that conventional measures may be biased by partisan attachments. We also demonstrate that these measures, which do not explicitly clarify the meanings of ideological terms to respondents, may suffer from DIF. Importantly, the measurement problems underlying standard self-reported measures of ideology may influence how we interpret well-known findings in American politics. As our experiment illustrates, partisan-ideological sorting is substantially “weakened” under an alternative measurement approach that provides clear definitions of the ideological labels while removing the labels themselves from the question wording to suppress partisan identity considerations.

Our study, therefore, contributes to ongoing debates about the appropriateness of standard measures of political ideology. While we do not contend that self-reported measures of ideology should be abandoned,Footnote 5 we recommend that researchers be clear and precise about what they plan to capture through these self-reported measures. Conceptualization and interpretation of the measure not only determine what instruments we should employ at the survey design stage, but also impact what inferences we can draw from them.

1. Potential problems of self-reported ideology

Building on the existing literature, we give four reasons why standard self-reported instruments of political ideology may not be an appropriate measure of operational ideology.

First, respondents may not be conversant with ideological labels in the first place. As previous research shows, many Americans lack knowledge about “what goes with what,” that is, “which bundles of policy positions fall on the left and right sides of the liberal-conservative ideological dimension” (Freeder et al., 2019, 275; see also Converse, 1964).Footnote 6 Thus, they often self-identify with ideological labels only symbolically rather than substantively (Conover and Feldman, 1981; Ellis and Stimson, 2012). Recent research also suggests that many Black respondents are unfamiliar with the terms “liberal” and “conservative” (Jefferson, forthcoming) and that public understandings of ideological terms can be reoriented by political elites (Amira, 2022; Hopkins and Noel, 2022). If the public lacks a basic understanding of ideological labels, or understands them differently, it will be difficult to capture operational ideology accurately using self-reported measures of ideology.Footnote 7

Second, self-reported measures of ideology may suffer from DIF. That is, different social groups may understand, interpret, and respond to the same question differently (Bauer et al., 2017; Jessee, 2021; Pietryka and MacIntosh, 2022). One source of DIF is respondents’ differential knowledge of ideological terms. Because politicians in American politics often mobilize minority groups less (Fraga, 2018) and often invoke the language of ideology in their campaigns (Grossmann and Hopkins, 2016), majority groups, who are more exposed to the political world and to ideological appeals through political mobilization, may be more familiar with ideological terms than minority groups (Jefferson, forthcoming). Another source of DIF is partisan motivation. Partisans often view the opposing party as more ideologically extreme than it actually is (Levendusky and Malhotra, 2016; Pew Research Center, 2018). Because individuals tend to see their political opponents as extreme and the label “moderate” is intrinsically appealing, both liberal Democrats and conservative Republicans may identify as moderates (Hare et al., 2015). Further highlighting the problem of DIF across partisan lines, Simas (2018) shows that “Democratic and Republican respondents interpret and use the response categories of the [ANES 7-point] ideological scale in systematically different ways such that Democrats have significantly lower thresholds for the distinctions between categories” (343).

Third, self-reported measures of ideology may be subject to social desirability bias. On the one hand, for cultural, historical, and psychological reasons, the labels “moderate” and “conservative” are more socially desirable to Americans (Ellis and Stimson, 2012; Hare et al., 2015). This may induce ideologues to identify as “moderates” and non-conservatives to identify as “conservatives.” On the other hand, the label “liberal” often carries negative connotations among the American public (Neiheisel, 2016; Schiffer, 2000). This may, in turn, deter operational liberals from identifying themselves as “liberals.” Indeed, some evidence suggests that Americans systematically overestimate their level of conservatism (Zell and Bernstein, 2014). Although the public holds generally liberal policy views in opinion surveys, self-reported conservatives often outnumber self-reported liberals (Ellis and Stimson, 2012; Claassen et al., 2015).

Finally, the definitions of “liberal” and “conservative” are often contested in the political world. There is a constant struggle, even among political scientists, over the formal definitions of “liberal” and “conservative” (Gerring, 1997). The lack of a shared understanding of the ideological labels highlights an inherent drawback of using self-reported ideology to measure operational ideology. If different individuals understand the ideological labels differently, or some focus more on their social identities and less on their policy views when reporting their ideology, then the survey instrument not only lacks construct validity but also measures different constructs for different people. This issue, however, is often neglected in the existing literature. Empirical studies seldom state what they mean by “liberal” and “conservative,” and they seldom clarify what the self-reported measure is intended to capture in their analysis. For example, empirical studies often label the 7-point self-reported ideology item simply “Ideology” or “Political Ideology” in their regression tables, whether it enters as an explanatory variable, a moderating variable, or simply a control variable. It is often unclear what the researchers intend this variable to explain, moderate, or control for.

Nevertheless, much research on American politics still relies extensively on self-reported ideology to measure operational, or substantive, ideology. This is understandable because, despite these methodological concerns, direct empirical evidence demonstrating the measurement problems of standard self-reported instruments is scarce. To fill this important gap, we design an original survey that allows us to systematically evaluate the appropriateness of standard self-reported instruments as a measure of operational ideology. The next section describes our survey design.

2. Survey design

We conducted a national survey in March 2022 on Lucid, using quota sampling to match key demographic variables to national benchmarks (n = 3828). This platform has been carefully evaluated by Coppock and McClellan (2019) and widely used by political scientists, including Costa (2021), Lowande and Rogowski (2021), and Peterson and Allamong (2022), who also examine Americans’ ideology in their Internet surveys. In addition, recent research has found that “a mix of classic and contemporary studies” covering various political science subfields were replicable on Lucid, even in the shadow of the COVID-19 pandemic (Peyton et al., 2022, 383). Appendix A shows that our sample mirrors the national benchmarks in gender, age, race, and income.

2.1 Three measures of political ideology

We randomly assigned respondents to one of three experimental groups, each receiving a different measure of ideology. The first group, which serves as a control group, received the standard ANES question on political ideology:

We hear a lot of talk these days about liberals and conservatives. Here is a seven-point scale on which the political views that people might hold are arranged from extremely liberal to extremely conservative. Where would you place yourself on this scale?

The second group (Add Definitions condition), before being asked the same question, received textbook definitions of “liberal” and “conservative” taken directly from Lowi et al. (2019), one of the most authoritative and commonly used textbooks in American politics.Footnote 8 Specifically, respondents in the Add Definitions condition read the following:Footnote 9

We hear a lot of talk these days about liberals and conservatives.

• Liberals believe that the government should play an active role in supporting social and political change, and support a strong role for the government in economic and social matters.

• Conservatives believe that the government should uphold traditional values, and that government intervention in economic and social matters should be as little as possible.

Here is a seven-point scale on which the political views that people might hold are arranged from extremely liberal to extremely conservative. Where would you place yourself on this scale?

The third group (Subtract Labels condition) received the same textbook definitions, but the terms “liberal” and “conservative” were removed; the two positions were instead described as the two ends of a 7-point scale:Footnote 10

Some people believe strongly that the government should play an active role in supporting social and political change, and they support a strong role for the government in economic and social matters. Suppose these people are on one end of the scale, at point 1.

Other people believe strongly that the government should uphold traditional values, and that government intervention in economic and social matters should be as little as possible. Suppose these people are at the other end, at point 7.

And, of course, some other people have beliefs somewhere in between.

Where would you place yourself on this scale?

Hence, the question is largely similar to that in the Add Definitions condition, except that it removes the ideological labels. For this label-free measure of ideology, the answer options range from 1 (“Government plays strong and active role”) to 7 (“Government intervenes as little as possible”). In the survey instrument, we also labeled option 4 as “Middle of the road” to maximize comparability with the measures in the other two groups.Footnote 11

After respondents in the Subtract Labels condition finished a set of other, unrelated survey questions, we asked them the standard ANES question on ideology again (the same question we had asked the control group). Thus, our design allows us not only to make between-subjects comparisons (the control group versus the two treatment groups, which added ideological definitions and/or subtracted ideological labels) but also to conduct a within-subjects analysis of the Subtract Labels condition across the two ideology measures. To verify that the three experimental groups are balanced, we follow Hartman and Hidalgo (2018) in conducting equivalence tests on an array of pretreatment covariates (Appendix B).
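Hartman and Hidalgo (2018) recommend equivalence tests for balance checks: instead of testing the null of no difference, one tests the null that the arms differ by at least a tolerable bound and concludes balance only if that null is rejected. A generic two one-sided tests (TOST) sketch for a single covariate appears below. The equivalence bound `delta`, the large-sample normal approximation, and the function name are our assumptions, and this need not match the exact specification reported in Appendix B.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def tost_equivalence(x, y, delta, alpha=0.05):
    """Two one-sided tests (TOST) for |mean(x) - mean(y)| < delta.

    Uses a large-sample normal approximation to the Welch statistic, so it is
    only a rough stand-in for a t-based TOST in small samples.
    Returns (difference, TOST p-value, equivalent?).
    """
    diff = mean(x) - mean(y)
    se = sqrt(stdev(x) ** 2 / len(x) + stdev(y) ** 2 / len(y))
    z = NormalDist()
    # H0 (lower): diff <= -delta; small p when diff sits well above -delta
    p_lower = 1 - z.cdf((diff + delta) / se)
    # H0 (upper): diff >= +delta; small p when diff sits well below +delta
    p_upper = z.cdf((diff - delta) / se)
    p_value = max(p_lower, p_upper)
    return diff, p_value, p_value < alpha


# Hypothetical covariate (e.g., age) in two arms; values are illustrative only.
control_age = [40, 45, 50] * 200
treated_age = [41, 45, 49] * 200
print(tost_equivalence(control_age, treated_age, delta=2.0))
```

The TOST p-value is the larger of the two one-sided p-values, so balance is declared only when the difference is credibly inside both bounds at once.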

2.2 Outcome variables

Our analysis is based on two outcome variables. The first is the 7-point self-reported ideology described above, which we use for experimental analysis.Footnote 12 The second is ideological knowledge, which we use for descriptive analysis to motivate our experiment, by showing that many Americans may not be familiar with ideological terms and that such familiarity may differ systematically between social groups. This variable measures respondents’ understandings of ideology and is constructed from their number of correct answers to the following questions:

Does the following belief define a liberal, a conservative, or neither?

1. The government should play an active role in supporting social and political change.

2. Social institutions and the free market solve problems better than governments do.

3. A powerful government is a threat to citizens’ freedom.

4. The government should play a strong role in the economy and the provision of social services.

For each question, respondents could choose one of four options: “Liberal,” “Conservative,” “Neither,” or “Don't know.” The correct answers to items 1–4 are “Liberal,” “Conservative,” “Conservative,” and “Liberal,” respectively. These questions closely follow the textbook definitions in Lowi et al. (2019), who define a liberal as a “person who generally believes that the government should play an active role in supporting social and political change, and generally supports a strong role for the government in the economy, the provision of social services, and the protection of civil rights” (400) and a conservative as a “person who generally believes that social institutions (such as churches and corporations) and the free market solve problems better than governments do, that a large and powerful government poses a threat to citizens’ freedom, and that the appropriate role of government is to uphold traditional values” (401). Jost et al. (2022) similarly suggest that “[w]hereas conservative-rightist ideology is associated with valuing tradition, social order and maintenance of the status quo, liberal-leftist ideology is associated with a push for egalitarian social change” (560).
Our classification of correct answers is also generally consistent with the understandings of “liberal” and “conservative” implied by prominent studies in American politics and political psychology (see, e.g., Conover and Feldman, 1981; Luttbeg and Gant, 1985; Jost et al., 2003; Alford et al., 2005; Jost et al., 2009; Ellis and Stimson, 2012; Kinder and Kalmoe, 2017; Kalmoe, 2020; Jost, 2021; van der Linden et al., 2021; Elder and O'Brian, 2022).
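Applying the answer key above to one respondent's four answers yields the 0–4 knowledge score used throughout the analysis. The sketch assumes responses are stored as a dictionary keyed by item number and that skipped items count as incorrect; both are illustrative choices, not details reported by the authors.

```python
# Answer key for items 1-4, in the order listed in the text.
ANSWER_KEY = {1: "Liberal", 2: "Conservative", 3: "Conservative", 4: "Liberal"}


def knowledge_score(responses):
    """Number of correct answers (0-4).

    `responses` maps item number -> chosen option ("Liberal", "Conservative",
    "Neither", or "Don't know"). Anything other than the keyed answer,
    including "Don't know" or a skipped item, counts as incorrect.
    """
    return sum(responses.get(item) == keyed for item, keyed in ANSWER_KEY.items())


example = {1: "Liberal", 2: "Neither", 3: "Conservative", 4: "Don't know"}
print(knowledge_score(example))  # 2
```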

For each respondent, we count the number of correct answers, producing a 5-point variable that ranges from 0 (“Least knowledgeable”) to 4 (“Most knowledgeable”). These questions were asked (in randomized order) of respondents in the control group and the Subtract Labels condition in the later part of the survey. They were not asked of those in the Add Definitions condition because, in this group, our treatment vignette (the question wording of the ideology measure) had already provided explicit definitions of “liberal” and “conservative.” The Cronbach's alpha of our measure of ideological knowledge is α = 0.65. In Appendix F, we validate our measure of ideological knowledge by examining how it relates to political knowledge, a key predictor of individual understandings of ideology in the literature (see, e.g., Converse, 1964; Kalmoe, 2020; Simas, 2023). We show that ideological knowledge corresponds strongly and positively with political knowledge, thereby underscoring the convergent validity of our measure.
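Cronbach's alpha, reported above as α = 0.65, summarizes how consistently the four binary items move together. A minimal sketch of the standard formula follows; the toy matrix is invented for illustration, and we do not know which software or variance convention the authors used.

```python
from statistics import pvariance


def cronbach_alpha(item_matrix):
    """Cronbach's alpha for a respondents x items matrix of 0/1 item scores.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total score).
    Population variances are used throughout; the n vs. n - 1 choice cancels
    in the ratio as long as it is applied consistently.
    """
    k = len(item_matrix[0])
    columns = list(zip(*item_matrix))           # item-by-item score vectors
    totals = [sum(row) for row in item_matrix]  # 0-4 knowledge scores
    item_variance = sum(pvariance(col) for col in columns)
    return k / (k - 1) * (1 - item_variance / pvariance(totals))


# Hypothetical correctness matrix (rows = respondents, columns = items 1-4).
toy = [
    [1, 1, 1, 1],
    [1, 0, 0, 1],
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 1, 1, 0],
]
print(round(cronbach_alpha(toy), 3))
```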

Our measure of ideological knowledge is novel. To infer levels of ideological knowledge among the American public, the existing literature does not directly evaluate respondents’ knowledge of the terms “liberal” and “conservative” per se but instead assesses how well respondents attach these labels to political candidates or parties. Freeder et al. (2019, 276), for example, “count respondents as knowing the candidates’ or parties’ issue positions if they placed the liberal/Democratic candidate or party at a more liberal position on a policy scale than the conservative/Republican candidate or party (Carpini and Keeter, 1993; Sears and Valentino, 1997; Lewis-Beck et al., 2008; Sniderman and Stiglitz, 2012; Lenz, 2012).” Conversely, those placing the candidates’ or parties’ issue positions at the same point and those saying “Don't know” are classified as “ignorant of the relative policy positions” (276). Using similar ANES questions that ask respondents to evaluate the ideological identities of presidential nominees and political parties, Jefferson (forthcoming) gauges respondents’ “Liberal-Conservative Familiarity.” Finding that Whites perform notably better than Blacks on this measure, he argues that self-reported measures of ideology are biased against Blacks, who may be less familiar with ideological terms as reflected by his measure. While innovative, these studies share a key limitation: they rely heavily on respondents’ prior understandings of the politicians and parties in question. Even if respondents know what “liberal” and “conservative” mean, correctly attaching the ideological labels also requires them to know what these politicians and parties advocate in the first place.
Instead of inferring respondents’ ideological knowledge by assessing how accurately they map ideological labels onto politicians’ and parties’ issue positions, we directly test how well they understand the labels “liberal” and “conservative” per se.

2.3 Inattentiveness and preregistration

To address concerns about inattentiveness on Lucid (Ternovski and Orr, 2022), we followed our preregistered procedure to remove “speeders,” who completed the survey in less than 5 minutes.Footnote 13 This procedure dropped 529 respondents, accounting for 12.1 percent of the original sample.Footnote 14 Removing speeders is important because they tend to provide low-quality responses (Aronow et al., 2020), which could add noise to the data, reduce the precision of the estimates, and bias the effect size (Greszki et al., 2015; see also Peyton et al., 2022). We therefore followed Leiner's (2019) recommendation, as well as our pre-analysis plan, by removing speeders from our survey.Footnote 15 In Appendix E, we replicate our analyses with speeders included and show that the findings remain robust.
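The exclusion rule reduces to a single threshold on completion time. The sketch below applies it to a list of durations in seconds (a hypothetical storage format) and checks the reported share. It assumes that the n = 3828 reported in Section 2 is the sample after exclusion: 529/(3828 + 529) ≈ 0.121 matches the reported 12.1 percent, whereas 529/3828 ≈ 0.138 would not.

```python
SPEEDER_CUTOFF_SECONDS = 5 * 60  # preregistered rule: under 5 minutes = speeder


def split_speeders(durations_seconds):
    """Return (kept, dropped) completion times under the 5-minute rule."""
    kept = [d for d in durations_seconds if d >= SPEEDER_CUTOFF_SECONDS]
    dropped = [d for d in durations_seconds if d < SPEEDER_CUTOFF_SECONDS]
    return kept, dropped


# Consistency check on the reported counts, ASSUMING n = 3828 is the
# post-exclusion sample, so the original sample is 3828 + 529.
dropped_share = 529 / (3828 + 529)
print(f"{dropped_share:.3f}")  # 0.121
```

A respondent finishing in exactly 5 minutes is kept here, since the rule drops only those who finished in less than 5 minutes.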

Ahead of data collection, we preregistered the hypotheses, estimation strategies, and inferential rules. We document any deviations from the pre-analysis plan in Appendix D.

3. Americans’ knowledge about ideological labels

We find that most American respondents did not share the standard understanding of the terms “liberal” and “conservative.” They performed poorly in the four close-ended questions on ideological knowledge. The average number of correct answers is 1.94 (n = 2774, SD = 1.39), with a median of 2.

Table 1 shows the percentage of correct responses to each ideology question. For “liberal” questions (Q1 and Q4), only 53 percent of our respondents provided correct responses. For “conservative” questions (Q2 and Q3), the overall performance was even worse: only slightly over two-fifths of respondents got them right. If we set three correct responses as the threshold for “passing” the ideology test, then only 36 percent of respondents passed. Less than one-fifth of respondents (18 percent) answered all questions correctly.
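The pass rates above follow from thresholding each respondent's 0–4 score. A minimal sketch of these descriptive statistics appears below, plus a normal-approximation (Wald) interval of the kind that could produce the brackets in Table 1. The interval method is our assumption, since the text does not state how the confidence intervals were computed, and the scores are made up.

```python
from math import sqrt
from statistics import mean, median, stdev


def summarize_scores(scores, pass_threshold=3):
    """Descriptive summary of 0-4 knowledge scores."""
    n = len(scores)
    return {
        "n": n,
        "mean": mean(scores),
        "sd": stdev(scores),  # sample standard deviation
        "median": median(scores),
        "pass_share": sum(s >= pass_threshold for s in scores) / n,
        "perfect_share": sum(s == 4 for s in scores) / n,
    }


def wald_ci(successes, n, z=1.96):
    """Normal-approximation 95% CI for a proportion (an assumed method)."""
    p = successes / n
    half_width = z * sqrt(p * (1 - p) / n)
    return p - half_width, p + half_width


toy_scores = [0, 1, 2, 2, 3, 4]  # hypothetical respondents
print(summarize_scores(toy_scores))
print(wald_ci(50, 100))
```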

Table 1. Percentage of correct responses and don't knows for each ideology question

Note: 95 percent confidence intervals are shown in brackets.

3.1 Unpacking ideological understandings

The above analysis suggests that Americans’ understandings of ideological terms may be more nuanced than expected. But what predicts such nuanced understandings? We unpack them by first looking at self-reported ideology. Figure 1 depicts the differences in ideological knowledge by self-reported ideology.

Figure 1. Percentage of correct responses to each ideology question by self-reported ideology. Note: Conservatives are respondents who selected “slightly conservative,” “conservative,” or “extremely conservative” under ANES's 7-point ideology question. Liberals are those who selected “slightly liberal,” “liberal,” or “extremely liberal.” Moderates are those who selected “moderate; middle of the road.” Error bars represent 95 percent confidence intervals.

Two things are clear from Figure 1. First, self-reported moderates are less knowledgeable about ideology. The average numbers of correct responses among moderates and non-moderates are 1.30 and 2.31, respectively (β = −1.00, SE = 0.05, p < 0.001). What might explain this discrepancy? One potential explanation is that respondents who knew little about ideology, and were thus unable to locate their own ideological position, picked the middle of the scale (“moderate; middle of the road”) as a safe choice (Treier and Hillygus, 2009, 693). Another possibility is that the label “moderate” is more socially desirable (Hare et al., 2015), so those who were unsure of their own ideological position were more likely to choose it. While investigating who self-reports as moderate and why is beyond the scope of this paper, we believe future work would benefit from studying this phenomenon more closely (Broockman, 2016; Fowler et al., 2023).
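Each β in this section is a difference in mean scores between two groups. That equivalence holds because OLS on an intercept and a single 0/1 indicator returns the difference in group means as its slope, as the sketch below shows; the small gap between 2.31 − 1.30 = 1.01 and the reported |β| = 1.00 presumably reflects rounding. Whether the authors used conventional or robust standard errors is not stated here, so the SE formula is an assumption, and the data are invented.

```python
from math import sqrt
from statistics import mean


def ols_on_indicator(y, d):
    """OLS of y on an intercept and a 0/1 indicator d.

    With a single binary regressor, the slope equals the difference in group
    means, mean(y | d = 1) - mean(y | d = 0). The standard error is the
    conventional homoskedastic one; robust SEs would differ slightly.
    """
    y1 = [yi for yi, di in zip(y, d) if di == 1]
    y0 = [yi for yi, di in zip(y, d) if di == 0]
    m1, m0 = mean(y1), mean(y0)
    beta = m1 - m0
    # Fitted values are the group means, so residuals are deviations from them.
    ss_resid = sum((yi - m1) ** 2 for yi in y1) + sum((yi - m0) ** 2 for yi in y0)
    sigma2 = ss_resid / (len(y) - 2)
    se = sqrt(sigma2 * (1 / len(y1) + 1 / len(y0)))
    return beta, se


# Hypothetical knowledge scores; d = 1 marks self-reported moderates.
scores = [3, 4, 1, 2]
moderate = [0, 0, 1, 1]
print(ols_on_indicator(scores, moderate))
```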

Second, self-reported conservatives and liberals differ in their ideological knowledge. The average numbers of correct responses among self-reported conservatives and liberals are 2.42 and 2.18, respectively (β = 0.23, SE = 0.06, p < 0.001). What contributes to this difference? We find that self-reported liberals systematically performed worse on the “conservative” questions (Figure 2). Conservatives averaged 1.22 correct responses on the two conservative questions and 1.19 on the two liberal questions (β = 0.04, SE = 0.04, p = 0.346). Liberals, however, averaged 0.80 correct responses on the conservative questions and 1.38 on the liberal questions (β = −0.57, SE = 0.04, p < 0.001).Footnote 16 Self-reported conservatives understand both ideological terms at a similar level, but self-reported liberals display a disproportionately low level of understanding of the term “conservative.”
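Because every respondent answered both pairs of items, the comparison of “conservative” and “liberal” questions is within respondents. A paired-difference sketch is shown below; it is one reasonable estimator, and the authors' specification (for example, a stacked regression with respondent-clustered errors) may differ. The item groupings follow the answer key; the data are invented.

```python
from math import sqrt
from statistics import mean, stdev

CONSERVATIVE_ITEMS = (2, 3)  # items keyed "Conservative"
LIBERAL_ITEMS = (1, 4)       # items keyed "Liberal"


def item_type_gap(correct_by_respondent):
    """Within-respondent gap: (# conservative items right) - (# liberal items right).

    `correct_by_respondent` is a list of dicts mapping item -> 1 (correct) or 0.
    Returns the mean gap and its paired standard error.
    """
    gaps = [
        sum(r[i] for i in CONSERVATIVE_ITEMS) - sum(r[i] for i in LIBERAL_ITEMS)
        for r in correct_by_respondent
    ]
    return mean(gaps), stdev(gaps) / sqrt(len(gaps))


# Hypothetical self-reported liberals.
liberals = [
    {1: 1, 2: 0, 3: 0, 4: 1},
    {1: 1, 2: 1, 3: 0, 4: 1},
    {1: 0, 2: 1, 3: 1, 4: 1},
]
print(item_type_gap(liberals))
```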

Figure 2. Average number of correct responses by question type and self-reported ideology. Note: Conservatives are respondents who selected “slightly conservative,” “conservative,” or “extremely conservative” under ANES's 7-point ideology question. Liberals are those who selected “slightly liberal,” “liberal,” or “extremely liberal.” Error bars represent 95 percent confidence intervals.

To further unpack ideological understandings and their predictors, we examine respondents’ ideological knowledge by partisanship, race, gender, and political sophistication. The results reveal substantial heterogeneity (Figure 3). First, Republicans were more familiar with the meanings of ideological terms than Democrats. The average number of correct responses among Republicans is 2.30, whereas that among Democrats is 1.96 (β = 0.34, 95 percent CI = [0.23, 0.45], n = 2263). If we set the passing threshold at 3, then 49 percent of Republicans “passed” the test, whereas only 32 percent of Democrats did so (p < 0.001, n = 2263).

Figure 3. Distribution of ideological knowledge across demographic subgroups. Note: The dashed lines indicate subgroup means.

Second, minority groups—Blacks and women—appeared to have greater difficulty with the ideological labels. On the one hand, the average number of correct responses among Blacks is 1.43 and that among non-Blacks is 2.02 (β = −0.58, 95 percent CI = [−0.71, −0.46], n = 2774). On the other hand, the average number of correct responses among women is 1.62 and that among men is 2.28 (β = −0.66, 95 percent CI = [−0.76, −0.56], n = 2774). Setting the passing threshold at 3, we find that 18 percent of Blacks “passed” the test compared to 39 percent of non-Blacks (p < 0.001, n = 2774). In addition, 28 percent of women “passed” the test compared to 45 percent of men (p < 0.001, n = 2774).
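Each pass-rate comparison (for instance, 18 versus 39 percent) can be tested with a standard two-proportion z-test, sketched below with a pooled standard error. The text reports only the resulting p-values, so the choice of test and the counts in the example are assumptions for illustration.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_ztest(pass_a, n_a, pass_b, n_b):
    """Two-sided z-test that two groups share the same passing rate.

    Uses the pooled proportion under the null hypothesis of equal rates.
    Returns (difference in rates, z statistic, two-sided p-value).
    """
    rate_a, rate_b = pass_a / n_a, pass_b / n_b
    pooled = (pass_a + pass_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_a - rate_b) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return rate_a - rate_b, z, p_value


# Hypothetical counts: 49 of 100 vs. 32 of 100 respondents scoring >= 3.
print(two_proportion_ztest(49, 100, 32, 100))
```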

Third, politically sophisticated respondents performed better on our ideological knowledge test. In our survey, we asked respondents four questions to test their political knowledge (see Appendix F for survey items). We then classified those giving at least three correct responses to these questions as politically sophisticated. Following this classification, we find that the politically sophisticated answered 2.48 ideology questions correctly on average, compared to 1.56 among the less politically sophisticated (β = 0.92, 95 percent CI = [0.81, 1.02], n = 2757). With the passing threshold at 3, 54 percent of the politically sophisticated “passed” the test compared to 23 percent of the less politically sophisticated (p < 0.001, n = 2757). This result accords with previous work arguing that ideological labels are often less accessible to individuals who lack political knowledge (Converse, 1964; Kalmoe, 2020).

Our results thus suggest that public understandings of ideological labels are heterogeneous and could be biased against minority groups. These findings provide direct evidence that African Americans are less familiar with ideological labels (see also Jefferson, Reference Jeffersonforthcoming). They also show that such systematic unfamiliarity extends to other groups in the US, such as women. Our analysis also highlights the problem of DIF in standard self-reported measures of ideology. Researchers often rely on such measures to gauge and compare the ideological predispositions of different social groups. Yet because certain groups do not share the same understandings of the terms “liberal” and “conservative,” as documented here, such cross-group comparisons become less meaningful.

Some limitations of our descriptive analysis must be noted, however. First, we cannot rule out expressive responding as a mechanism underlying the differences in ideological understanding between self-reported liberals/Democrats and conservatives/Republicans. This is a theoretical possibility because some research in psychology suggests that liberals generally value emotions more than conservatives do (Pliskin et al., Reference Pliskin, Bar-Tal, Sheppes and Halperin2014; Choi et al., Reference Choi, Karnaze, Lench and Levine2023). Relatedly, the second item of our ideology question (“Social institutions and the free market solve problems better than governments do”) might induce expressive responding, especially among liberals who could take this position on matters of economic policy. In Appendix F, however, we use an alternative operationalization of our measure that treats “Neither” as a correct answer in addition to “Conservative,” and we obtain similar descriptive findings. Finally, because our ideology questions allowed respondents to report “Don't know,” the subgroup differences we documented may be biased against minority groups who could be more averse to making blind guesses.Footnote 17 We find that Black and female respondents were indeed more likely to report “Don't know” than their counterparts. Treating these responses as correct answers, an extreme robustness check, reduces part, but not all, of the subgroup differences we documented (Appendix F). Despite these limitations, our descriptive findings raise concerns about DIF in standard measures of political ideology. More generally, they cast doubt on whether certain individuals can meaningfully place themselves on an ideological scale when no definitions are provided. These findings thus set the stage for our experiment, which we analyze in the next section.
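The alternative operationalizations described above amount to different scoring rules applied to the same responses. A minimal sketch follows. Only item 2's keyed answer (“Conservative”) comes from the text. The other item names, their keyed answers, and the number of items are hypothetical placeholders.

```python
# Hypothetical answer key: only item 2's keyed answer ("Conservative") is
# stated in the text; the other item names and keys are placeholders.
ANSWER_KEY = {
    "item1": {"Liberal"},
    "item2": {"Conservative"},
    "item3": {"Liberal"},
    "item4": {"Conservative"},
}


def knowledge_score(responses, accept_neither_item2=False,
                    dont_know_correct=False, key=ANSWER_KEY):
    """Count correct answers under a chosen scoring rule."""
    total = 0
    for item, keyed in key.items():
        accepted = set(keyed)
        if accept_neither_item2 and item == "item2":
            accepted.add("Neither")      # robustness: "Neither" also correct
        if dont_know_correct:
            accepted.add("Don't know")   # extreme check: "Don't know" correct
        if responses.get(item) in accepted:
            total += 1
    return total


def passed(total, threshold=3):
    """Pass if at least `threshold` answers are correct."""
    return total >= threshold


answers = {"item1": "Liberal", "item2": "Neither",
           "item3": "Don't know", "item4": "Conservative"}
for rule in [{}, {"accept_neither_item2": True}, {"dont_know_correct": True}]:
    s = knowledge_score(answers, **rule)
    print(rule, s, passed(s))
```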

4. Self-reported ideology with ideology defined

How does self-reported ideology change when respondents are provided with definitions of ideological labels? In the aggregate, it does not change detectably. In the control group, where respondents received the standard 7-point ANES measure of ideology, the mean is 4.12 (95 percent CI = [4.04, 4.19], n = 1883). In the Add Definitions condition, where definitions of “liberal” and “conservative” were provided and the ideological labels kept, the mean is 4.02 (95 percent CI = [3.91, 4.14], n = 924). In the Subtract Labels condition, where the same definitions were provided but the ideological labels removed, the mean is 4.08 (95 percent CI = [3.97, 4.18], n = 940). None of the differences between these group means is statistically significant at the 10 percent level.
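The reported intervals are consistent with a normal-approximation 95 percent interval, mean ± 1.96 × SD/√n. For instance, the control-group variance of 2.77 reported in the following paragraph, together with n = 1883, gives a half-width of about 0.075, in line with [4.04, 4.19]. The sketch below assumes this approximation. The authors may have used a different interval method.

```python
from math import sqrt
from statistics import NormalDist, mean, variance


def ci_half_width(var, n, level=0.95):
    """Half-width of a normal-approximation CI for a mean."""
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for 95 percent
    return z * sqrt(var / n)


def mean_ci(values, level=0.95):
    """Sample mean with a normal-approximation confidence interval."""
    m = mean(values)
    half = ci_half_width(variance(values), len(values), level)
    return m, m - half, m + half


# Reported control-group summary: variance 2.77, n = 1883.
print(round(ci_half_width(2.77, 1883), 3))  # 0.075
```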

Yet, the distribution of self-reported ideology changed when definitions of ideological labels were provided. Figure 4 visualizes the changes. In the Add Definitions condition where definitions were provided alongside ideological labels, respondents were less likely to identify as moderates but more likely to pick a side (p < 0.05 based on a preregistered Kolmogorov–Smirnov test). In addition, the variance of the measure increased substantially from 2.77 in the control group to 3.22 in this treatment condition (p < 0.001 based on a non-preregistered Fligner–Killeen test). However, there is no evidence that the distribution and variance of self-reported ideology in the Subtract Labels condition, where definitions were provided without ideological labels, differed from those in the control group (p > 0.1 in Kolmogorov–Smirnov and Fligner–Killeen tests).
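Both distributional tests can be sketched with the Python standard library. The first function computes the two-sample Kolmogorov–Smirnov statistic D, the largest gap between the two empirical CDFs. The second computes the Fligner–Killeen statistic for two groups: it ranks absolute deviations from each group's median, converts the ranks to normal scores, and, with two groups, refers the result to a chi-square distribution with one degree of freedom. In practice one would likely call `scipy.stats.ks_2samp` and `scipy.stats.fligner`. This self-contained version is an illustrative sketch, not the authors' code. Note that the classical KS p-value assumes continuous data and tends to be conservative on a 7-point scale with many ties.

```python
from bisect import bisect_right
from math import sqrt
from statistics import NormalDist, mean, median, variance


def ks_statistic(x, y):
    """Two-sample Kolmogorov-Smirnov D: max gap between empirical CDFs."""
    xs, ys = sorted(x), sorted(y)
    d = 0.0
    for v in sorted(set(xs) | set(ys)):
        fx = bisect_right(xs, v) / len(xs)
        fy = bisect_right(ys, v) / len(ys)
        d = max(d, abs(fx - fy))
    return d


def average_ranks(values):
    """1-based ranks, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def fligner_killeen(a, b):
    """Two-group Fligner-Killeen test (median-centred); returns (stat, p)."""
    groups = [a, b]
    devs = [abs(v - median(g)) for g in groups for v in g]
    n_total = len(devs)
    nd = NormalDist()
    # Normal scores of the pooled ranks of absolute deviations.
    scores = [nd.inv_cdf(0.5 + r / (2 * (n_total + 1)))
              for r in average_ranks(devs)]
    grand = mean(scores)
    stat, start = 0.0, 0
    for g in groups:
        s = scores[start:start + len(g)]
        stat += len(g) * (mean(s) - grand) ** 2
        start += len(g)
    stat /= variance(scores)
    # A chi-square variable with 1 df is the square of a standard normal.
    p_value = 2 * (1 - nd.cdf(sqrt(stat)))
    return stat, p_value


control = [4, 4, 3, 5, 4, 2, 6, 4, 5, 3]  # made-up 7-point responses
treated = [1, 7, 2, 6, 4, 1, 7, 3, 6, 2]
print(ks_statistic(control, treated))
print(fligner_killeen(control, treated))
```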

Figure 4. Distribution of self-reported ideology across experimental conditions. Note: The dashed lines indicate subgroup means.

The aggregate comparisons, however, mask important heterogeneity. The rest of this section unpacks the experimental data further by examining heterogeneous treatment effects and conducting a within-subjects analysis by partisanship. In doing so, it demonstrates the downstream consequences of measuring individual ideology with ideological definitions provided and/or with ideological labels removed. To preview our results, we find that individual characteristics, especially partisanship, shaped how respondents reacted to the treatments, and that partisan heterogeneity helps explain the null aggregate result in the Subtract Labels condition.

4.1 How groups change their self-reported ideology across measures

Female respondents moved closer to the liberal side of the ideological scale, relative to male respondents, when the ideological terms were clearly defined for them (model 5 in Table 2). Less politically sophisticated respondents moved closer to the conservative side, relative to their more sophisticated counterparts, when the ideological labels were also removed (model 6 in Table 2).Footnote 18 These findings have important implications for DIF and for the appropriateness of using the standard self-reported measure to capture operational ideology, especially among minority groups. Suppose individuals from different social groups had shared the same understandings of “liberal” and “conservative,” aligned with scholarly definitions. In that case, providing definitions in the question wording would not have shifted their self-reported ideology meaningfully and differentially across groups in our treatment conditions. The substantial heterogeneity uncovered by our preregistered analysis therefore raises further concerns about the validity of standard self-reported instruments as a measure of operational ideology.

Table 2. Self-reported ideologies in different social groups are changed by question wording

Note: Entries are OLS estimates with robust standard errors in parentheses.
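The entries of Table 2 are not reproduced in this section. As a sketch of what a treatment-by-subgroup interaction captures, assume a model with one treatment arm versus control, a binary moderator (e.g., female), and no other covariates. In that saturated case, the OLS interaction coefficient equals a difference-in-differences of the four cell means. Robust standard errors change only the uncertainty, not the point estimate. The data below are made up.

```python
from statistics import mean


def interaction_from_cells(rows):
    """Difference-in-differences of cell means.

    rows: iterable of (treated, female, ideology) with 0/1 indicators. In the
    saturated OLS model ideology ~ treated + female + treated*female, this
    equals the treated*female coefficient."""
    cells = {}
    for treated, female, ideology in rows:
        cells.setdefault((treated, female), []).append(ideology)
    m = {cell: mean(values) for cell, values in cells.items()}
    return (m[(1, 1)] - m[(0, 1)]) - (m[(1, 0)] - m[(0, 0)])


# Made-up responses: (treated, female, 7-point ideology; lower = more liberal).
rows = [(0, 0, 4), (0, 0, 5), (1, 0, 4), (1, 0, 6),
        (0, 1, 4), (0, 1, 4), (1, 1, 3), (1, 1, 3)]
print(interaction_from_cells(rows))  # -1.5
```

A negative value here means the treatment moved women toward the liberal end by more than it moved men.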

The problem of DIF is particularly concerning when we zoom in on treatment effect heterogeneity by partisanship. We find that Republicans moved closer to the liberal side of the ideological scale, and Democrats closer to the conservative side, when they were given the definitions, but not the labels, of “liberal” and “conservative” (model 3 in Table 2; see also panel B of Figures S8–S9 in Appendix F). Compared to Independents, Republicans became 0.5 points more liberal on average, and Democrats 0.6 points more conservative, under our label-free measure. Among Democrats, the percentage choosing points 1–3 (indicating liberal ideology) dropped from 53 percent with the standard ANES measure to 40 percent with our label-free measure. The percentage choosing points 5–7 (indicating conservative ideology) rose from 13 percent to 22 percent (Figure S8B). Among Republicans, the percentage choosing points 1–3 increased from 7 percent with the ANES measure to 18 percent with the label-free measure. The percentage choosing points 5–7 decreased from 71 percent to 49 percent (Figure S9B). Put differently, as we move from the ANES measure to the label-free measure, Democrats’ modal response changes from 2 (liberal) to 4 (moderate), and Republicans’ modal response changes from 6 (conservative) to 4 (moderate).
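The shares and modal responses reported above can be computed directly from 7-point responses. The helper below is a hypothetical sketch. Ties for the mode are broken toward the lower category, which is an arbitrary choice.

```python
from collections import Counter


def summarize_7pt(responses):
    """Shares at 1-3 (liberal), 4 (moderate), 5-7 (conservative), plus mode."""
    n = len(responses)
    counts = Counter(responses)
    return {
        "liberal": sum(counts[k] for k in (1, 2, 3)) / n,
        "moderate": counts[4] / n,
        "conservative": sum(counts[k] for k in (5, 6, 7)) / n,
        # max() keeps the first maximum, so ties go to the lowest category.
        "mode": max(sorted(counts), key=lambda k: counts[k]),
    }


print(summarize_7pt([2, 2, 4, 4, 4, 6, 1]))  # made-up responses
```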

The heterogeneity by partisanship sheds light on the null average treatment effect in the Subtract Labels condition. It suggests a “canceling out” effect: when the ideological terms were defined without priming respondents with the labels “liberal” and “conservative,” Republicans reported more liberal ideology, while Democrats reported more conservative ideology. Because these partisan shifts ran in opposite directions, they offset each other, leaving aggregate self-reported ideology unchanged.
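The canceling-out logic is simple arithmetic. The average treatment effect equals the share-weighted average of subgroup effects, so sizable effects of opposite sign can sum to roughly zero. The shares and effects below are hypothetical values chosen for illustration, not estimates from the survey.

```python
def average_effect(subgroups):
    """Share-weighted average of subgroup effects (shares must sum to 1)."""
    total = sum(share for share, _ in subgroups.values())
    if abs(total - 1) > 1e-9:
        raise ValueError("subgroup shares must sum to 1")
    return sum(share * effect for share, effect in subgroups.values())


# Hypothetical (share, effect) pairs; effects in scale points,
# positive = more conservative.
hypothetical = {
    "Democrats": (0.40, 0.40),
    "Republicans": (0.35, -0.50),
    "Independents": (0.25, 0.02),
}
print(round(average_effect(hypothetical), 3))  # -0.01
```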

4.2 Ideological gaps between Democrats and Republicans across measures

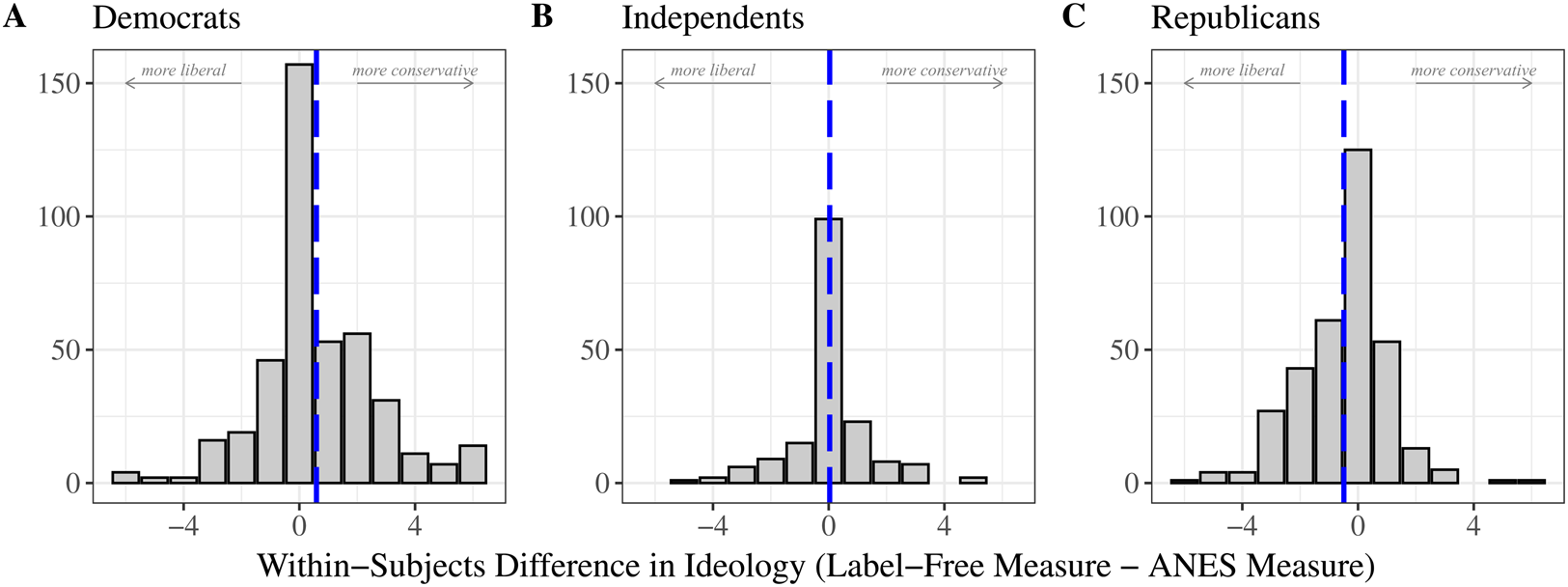

To bolster this claim, we analyze within-subjects differences in ideology in the Subtract Labels condition, where the same respondents reported their ideology under both the ANES measure and our label-free measure. Figure 5 shows the within-subjects changes in self-reported ideology as we move from the ANES measure to the label-free measure.

Figure 5. Within-subjects differences in ideology by partisanship in the Subtract Labels condition.

Note: Positive (negative) values indicate that the respondent reported a more conservative (liberal) ideology under the label-free measure of ideology. The dashed lines indicate subgroup means.

While non-partisans’ self-reported ideology remained relatively stable,Footnote 19 partisans’ ideology did not. When the question wording changed such that symbolic ideological cues were removed, Democrats moved in the conservative direction (β = 0.59, SE = 0.10, p < 0.001; see also panel A in Figure 5), whereas Republicans moved in the liberal direction (β = −0.49, SE = 0.08, p < 0.001; see also panel C in Figure 5). Additionally, we find that 52 of 938 Democrats (6 percent) shifted from self-reported liberal to conservative under our label-free measure, and that 26 of 936 Republicans (3 percent) went from self-reported conservative to liberal. Although these respondents accounted for only a small share of our sample, their reversals in self-reported ideology are non-negligible and worth noting.
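One simple way to compute within-subjects shifts like these is to take each respondent's label-free response minus their ANES response and summarize the differences. Respondents whose placements cross the midpoint can then be counted. The sketch below assumes that points 1–3 denote liberal and 5–7 conservative, as in the text. The function names and toy responses are hypothetical, and the reported β and SE may come from a regression specification not shown here.

```python
from math import sqrt
from statistics import mean, stdev


def paired_shift(anes, label_free):
    """Mean within-person change (label-free minus ANES) and its SE."""
    if len(anes) != len(label_free):
        raise ValueError("responses must be paired")
    diffs = [b - a for a, b in zip(anes, label_free)]
    return mean(diffs), stdev(diffs) / sqrt(len(diffs))


def count_switches(anes, label_free, direction="lib_to_con"):
    """Count respondents who cross the midpoint (4) between the two items."""
    pairs = zip(anes, label_free)
    if direction == "lib_to_con":
        return sum(1 for a, b in pairs if a <= 3 and b >= 5)
    if direction == "con_to_lib":
        return sum(1 for a, b in pairs if a >= 5 and b <= 3)
    raise ValueError("direction must be 'lib_to_con' or 'con_to_lib'")


# Toy paired responses for five hypothetical respondents.
anes = [2, 2, 3, 6, 6]
label_free = [4, 3, 5, 3, 6]
print(paired_shift(anes, label_free))
print(count_switches(anes, label_free), count_switches(anes, label_free, "con_to_lib"))
```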

Not only does the within-subjects analysis corroborate our findings in Table 2, but it also highlights the magnitude of the ideological shifts. As shown in Figure 6, the baseline ideological difference between Democrats and Republicans, based on their self-reported ideology under the ANES measure, is 2.12 points (SE = 0.11) on a 7-point scale. Yet the gap narrows to 1.04 points (SE = 0.12) under our label-free measure. Therefore, a simple change in measurement strategy, providing clear definitions without displaying ideological labels, closes roughly half of the gap between Democrats’ and Republicans’ self-reported ideology.
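The “roughly half” follows directly from the two reported gaps, as the proportional reduction:

```latex
\frac{2.12 - 1.04}{2.12} = \frac{1.08}{2.12} \approx 0.51
```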

Figure 6. Ideological differences between Democrats and Republicans based on two different self-reported measures in the Subtract Labels condition. Note: The dashed lines indicate subgroup means.

4.3 Implications for partisan-ideological sorting

What does this mean for partisan-ideological sorting in the US, the pattern in which “people have sorted into the ‘correct’ combination of party and ideology” (Mason, Reference Mason2015, 128)? Although scholars disagree on whether this phenomenon reflects ideological polarization of the masses (e.g., Abramowitz and Saunders, Reference Abramowitz and Saunders2008; Fiorina et al., Reference Fiorina, Abrams and Pope2008), they agree that sorting does exist among the American public (e.g., Levendusky, Reference Levendusky2009; Fiorina, Reference Fiorina2017; Abramowitz, Reference Abramowitz2018). By documenting a strong positive correlation between 7-point self-reported ideology and 7-point party identification, important past work has argued that Americans are highly sorted or even ideologically polarized (e.g., Abramowitz and Saunders, Reference Abramowitz and Saunders2008; cf. Lupton et al., Reference Lupton, Smallpage and Enders2020).

This argument, however, assumes that the standard measure of self-reported ideology is substantively accurate—that Americans understand what “liberal” and “conservative” mean and do not view these labels through a partisan lens. Yet, our analyses above suggest that partisan-ideological sorting would be weakened if we properly define ideological terms for respondents and take out the ideological labels. Figure 7 supports this hypothesis. It shows:

• Comparing respondents in the control group (no ideological definitions provided) and the Subtract Labels condition (definitions provided without ideological labels), we find that the correlation between ideology and partisanship is significantly weaker in the latter group—the Pearson's r in the former is 0.58 (SE = 0.02), whereas that in the latter is 0.26 (SE = 0.03). A test for equality of correlation coefficients, based on a preregistered percentile bootstrap approach (Rousselet et al., Reference Rousselet, Pernet and Wilcox2021), suggests that the difference is statistically significant at the 0.01 percent level (n = 2814).Footnote 20

• The Pearson's r in the Add Definitions condition (definitions provided with ideological labels) is 0.56 (SE = 0.03). Formal tests show no evidence that it is statistically different from the Pearson's r in the control group (p = 0.581, n = 2800). But it is much higher than the Pearson's r under the label-free measure in the Subtract Labels condition (p < 0.001, n = 1862).
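A percentile bootstrap comparison of two independent correlations, in the spirit of the approach cited above, resamples respondents within each condition and recomputes Pearson's r in each. It then examines the distribution of the difference. The sketch below uses simulated data. The number of replicates, the seed, and the two-sided p-value rule (twice the smaller tail share) are assumptions, not necessarily the authors' settings.

```python
import random
from math import sqrt


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def bootstrap_r_difference(g1, g2, reps=2000, level=0.95, seed=1):
    """Percentile bootstrap for r(g1) - r(g2) from independent groups.

    g1, g2: lists of (ideology, party_id) pairs. Returns (lo, hi, p)."""
    rng = random.Random(seed)
    diffs = []
    for _ in range(reps):
        s1 = [rng.choice(g1) for _ in g1]  # resample respondents in group 1
        s2 = [rng.choice(g2) for _ in g2]  # ...and independently in group 2
        diffs.append(pearson(*zip(*s1)) - pearson(*zip(*s2)))
    diffs.sort()
    alpha = 1 - level
    lo = diffs[round(alpha / 2 * reps)]
    hi = diffs[round((1 - alpha / 2) * reps) - 1]
    # Two-sided p: twice the smaller share of differences on either side of 0.
    share_above = sum(d > 0 for d in diffs) / reps
    p = 2 * min(share_above, 1 - share_above)
    return lo, hi, p


# Simulated data: strong vs. weak ideology-party association.
rng = random.Random(0)
party = [rng.randint(1, 7) for _ in range(150)]
strong = [(min(7, max(1, pid + rng.choice([-1, 0, 1]))), pid) for pid in party]
weak = [(rng.randint(1, 7), pid) for pid in party]
lo, hi, p = bootstrap_r_difference(strong, weak, reps=500)
print(f"95% CI for r difference: [{lo:.2f}, {hi:.2f}], p = {p:.3f}")
```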

Figure 7. Correlation between self-reported ideology and partisanship under different measures of ideology. Note: The solid lines in panels A and B are best-fit lines for self-reported ideology and partisanship in the Add Definitions condition and the Subtract Labels condition, respectively. The dashed lines are best-fit lines under the standard ANES measure.

We thus find evidence that providing ideological labels when measuring ideology can fundamentally shift how partisans report their ideology. Once ideological labels are removed from the question wording, Republicans are less likely to self-identify as conservatives, and Democrats are less likely to self-identify as liberals. In other words, minor changes in question wording that introduce symbolic ideological cues can generate major changes in a widely used indicator of partisan-ideological sorting. We replicate the standard evidence of sorting with the ANES measure while also documenting substantially weaker sorting with our label-free measure. Together, these results point to the possibility that partisan-ideological sorting may be highly sensitive to alternative measurement approaches.

4.4 Caveats

To be clear, we do not dispute that partisan-ideological sorting exists in the US. Recent work by Fowler et al. (Reference Fowler, Hill, Lewis, Tausanovitch, Vavreck and Warshaw2023, 656), for example, demonstrates that their policy-based measure of ideology is strongly associated with party identification. Our point is that partisan-ideological sorting may be inflated if researchers rely heavily on correlating self-reported ideology with partisanship to document sorting. Doing so ignores the identity component of self-reported ideology, which may bias it as a measure of operational ideology (Kinder and Kalmoe, Reference Kinder and Kalmoe2017; Mason, Reference Mason2018; de Abreu Maia, Reference de Abreu Maia2021). Evidence of partisan-ideological sorting based on simple correlations between self-reported ideology and party identification should therefore not be understood as purely driven by ideological substance; symbolic ideology triggered by labels could also contribute to this correlation.

Relatedly, we do not assert that the weakened partisan-ideological sorting as documented by our label-free measure is more reflective of reality. While labels can trigger identities that create bias, they can also help individuals navigate the political world. Grossmann and Hopkins (Reference Grossmann and Hopkins2016), for example, argue that because many Republicans have a conservative ideology which meaningfully structures their “broad system of ideas about the role of government” (14), Republican candidates often engage in ideological appeals as a campaign strategy. Top-down political communication about ideology could help Republicans map their beliefs about government onto appropriate partisan and ideological labels. Indeed, while our label-free measure significantly improved the correlation between self-reported ideology and preferences for government responsibility, it reduced the correlation between self-reported ideology and preferences for fiscal policy (Appendix C).

Note that we have been agnostic about whether ideology should be conceptualized and operationalized as a unidimensional or multidimensional construct (see, e.g., Heath et al., Reference Heath, Evans and Martin1994; Evans et al., Reference Evans, Heath and Lalljee1996; Treier and Hillygus, Reference Treier and Hillygus2009; Wood and Oliver, Reference Wood and Oliver2012; Feldman and Johnston, Reference Feldman and Johnston2014; Carmines and D'Amico, Reference Carmines and D'Amico2015; Broockman, Reference Broockman2016; Caughey et al., Reference Caughey, O'Grady and Warshaw2019; Fowler et al., Reference Fowler, Hill, Lewis, Tausanovitch, Vavreck and Warshaw2023). What we have shown is that, even if ideology can be conceptualized along a single dimension, respondents who self-identify as liberals and conservatives in standard measures could still be mistakenly treated as holding liberal and conservative predispositions, respectively. One potential concern about our analysis is that the results could be sensitive to the specific definitions of “liberal” and “conservative” we provided in the survey. While we justified our vignette based on the existing literature (see Footnote 9), it would be valuable for future work to probe the robustness of our findings by adopting alternative reasonable definitions of liberalism and conservatism in the label-free measure.

5. Conclusion

Our findings raise important concerns about the use of self-reported measures of political ideology in American politics. We show that Americans’ understandings of “liberal” and “conservative” are weakly aligned with conventional definitions of these terms and that such understandings are heterogeneous across social groups. Building on these descriptive findings, our between-subjects analysis shows that minor changes in question wording that introduce symbolic ideological cues can lead to large differences in how partisans report their ideology. Our within-subjects analysis reaffirms this finding: when ideology is solicited with clear definitions but without ideological labels, Republicans self-report greater liberalism and Democrats greater conservatism. The label-free measure significantly reduces the correlation between self-reported ideology and partisanship. It also follows a standard recommended practice in survey research (i.e., any term that some respondents may not understand correctly should be defined; see Fowler, Reference Fowler2014). In short, individuals have nuanced understandings of what “liberal” and “conservative” entail, and their self-reported ideology is endogenous to their party identification.

Our paper therefore makes three contributions:

1. We contribute to ongoing debates about the appropriateness of standard measures of political ideology (Wood and Oliver, Reference Wood and Oliver2012; Feldman and Johnston, Reference Feldman and Johnston2014; Carmines and D'Amico, Reference Carmines and D'Amico2015; Broockman, Reference Broockman2016; de Abreu Maia, Reference de Abreu Maia2021; Fowler et al., Reference Fowler, Hill, Lewis, Tausanovitch, Vavreck and Warshaw2023; Jefferson, Reference Jeffersonforthcoming). When using these standard measures of ideology, much of the existing literature in American politics implicitly assumes that they reliably capture operational ideology. We argue that ideological labels in the survey instrument can systematically bias how individuals report their political ideology, thereby reducing the measure's ability to gauge substantive issue preferences. Our experiment offers causal evidence that self-reported ideology changes substantially when researchers provide explicit definitions of “liberal” and “conservative” and remove ideological labels from the question.

2. We offer an alternative measure of political ideology that can potentially reduce DIF, which is prevalent in self-reported instruments (Bauer et al., Reference Bauer, Barberá, Ackermann and Venetz2017; Jessee, Reference Jessee2021; Pietryka and MacIntosh, Reference Pietryka and MacIntosh2022). To solve the problem of DIF, scholars have advocated the use of Aldrich–McKelvey scaling or anchoring vignettes (Hare et al., Reference Hare, Armstrong, Bakker, Carroll and Poole2015; Simas, Reference Simas2018). These methods, while useful, remain under-utilized in practice. This is partly because they usually demand longer survey duration and, consequently, researchers are often forced into a trade-off between the measurement accuracy gained and the survey time used. Our label-free measure suggests an alternative way—one that is straightforward and easy to implement—to solicit self-reported ideology, while potentially mitigating DIF. Of course, if the researcher does not find the textbook definition—and thus the operationalization—of ideology appropriate, they can simply alter the question wording to fit their own definitions of “liberal” and “conservative,” while following the same question format. The key is to be transparent about what the measure encapsulates in the researcher's context.

3. We contribute to the literature on partisan-ideological sorting (Levendusky, Reference Levendusky2009; Mason, Reference Mason2015; Abramowitz, Reference Abramowitz2018). The existing scholarship casts much doubt on whether the American public is ideologically polarized (e.g., Fiorina, Reference Fiorina2017), but less on whether the masses are highly sorted. However, our alternative measure of ideology—which defines ideological terms without displaying labels—greatly reduces the often-cited correlation between self-reported ideology and self-reported partisanship. Although we do not contend that our label-free measure captures the “true” degree of sorting better than conventional self-reported measures do, our results show that standard evidence of sorting in the US could be highly sensitive to alternative measurement approaches.

We clarify three points before closing. First, we do not advocate abandoning self-reported measures of ideology, but we urge researchers to be clear about what these measures are supposed to capture. If their objective, for example, is to understand how “liberal” and “conservative” as social identities shape political behavior, then the standard self-reported measures may be ideal (e.g., Mason, Reference Mason2018; Lupton et al., Reference Lupton, Smallpage and Enders2020; Groenendyk et al., Reference Groenendyk, Kimbrough and Pickup2023; cf. Devine, Reference Devine2015). In this case, clarifying upfront that the standard self-reported instrument is deployed to measure symbolic ideology would be helpful (e.g., Barber and Pope, Reference Barber and Pope2019; Jost, Reference Jost2021). If their goal, however, is to understand how individual ideological predispositions (that is, liberal and conservative systems of beliefs about policy issues) shape political attitudes, then alternative measures would be more appropriate (e.g., Wood and Oliver, Reference Wood and Oliver2012; Simas, Reference Simas2023; Yeung, Reference Yeung2024). This is because the standard self-reported instrument, as our analysis suggests, is a crude, or even biased, measure of operational ideology. Earlier scholarship has developed targeted scales to measure individuals’ beliefs about socialism versus laissez-faire and libertarianism versus authoritarianism (Heath et al., Reference Heath, Evans and Martin1994; Evans et al., Reference Evans, Heath and Lalljee1996). Recent scholarship applies IRT models to individuals’ policy preferences to estimate their latent political ideology (Caughey et al., Reference Caughey, O'Grady and Warshaw2019; Fowler et al., Reference Fowler, Hill, Lewis, Tausanovitch, Vavreck and Warshaw2023). These measurement approaches would be more appropriate than ideological self-placements when the researcher's goal is to measure operational ideology.

Second, we do not posit that individuals lack meaningful political ideologies. Individuals can have meaningful ideologies without knowing what “liberal” and “conservative” mean, or without using the terms in the same way that others, particularly scholars and elites, do. This leads to our final point: our results do not show that minority populations are less informed or less involved politically. What our study shows is that their understandings of ideological terms are less aligned, and largely inconsistent, with the “standard” understandings of ideology. Our study does not shed light on the source of such misalignment, and we hope that future research will continue to investigate the origins and consequences of public knowledge of ideology.

Supplementary material

The supplementary material for this article can be found at https://doi.org/10.1017/psrm.2024.2. Replication material for this article is available at https://doi.org/10.7910/DVN/FLKUMG.

Data

Replication material is publicly available at PSRM Dataverse.

Acknowledgments

We thank Anthony Fowler, Zac Peskowitz, and Mike Sances for their feedback. We also thank the editor and three anonymous reviewers at PSRM for their valuable suggestions and advice. The standard disclaimers apply.

Financial support

This work was supported by the University of Hong Kong.

Competing interest

None.

Ethical standards

Before we fielded our survey, we obtained IRB approval from the University of Hong Kong. All respondents were given detailed information about their rights as a survey participant, and were required to give their consent before they began the survey. We confirm compliance with APSA's Principles and Guidance for Human Subjects Research.