1. Introduction

Time delays can induce periodic solutions through Hopf bifurcations, and this phenomenon can explain population oscillations observed in nature. Consider, for instance, the following $n$-patch single population model:

\begin{equation} \begin{cases} \displaystyle \frac{d u_j}{d t}=\sum _{k=1}^{n}L_{jk}u_k + u_j\left (a_j- b_j u_j(t-\tau )\right ),&t\gt 0,\;\;j=1,\cdots, n,\\[9pt] \displaystyle {\boldsymbol{u}}(t)=\boldsymbol{\psi }(t)\geq 0,&t\in [\!-\!\tau, 0]. \end{cases} \tag{1.1} \end{equation}

Here, $u_j$ denotes the population density in patch $j$, $(L_{jk})$ is the dispersion matrix, $\tau$ is the time delay representing the maturation time, and $a_j$ and $b_j$ represent the intrinsic growth rate and the intraspecific competition of the species in patch $j$, respectively. It was shown that time delay can induce Hopf bifurcations for model (1.1) when the dispersion matrix is $(L_{jk})=\epsilon (\hat L_{jk})$ with $0\lt \epsilon \ll 1$ or $\epsilon \gg 1$ [Reference Chen, Shen and Wei8, Reference Huang, Chen and Zou24]. In particular, if $n=2$, delay-induced Hopf bifurcations can occur for a wider range of parameters [Reference Liao and Lou31].

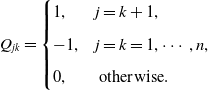

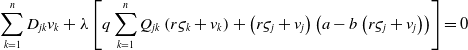

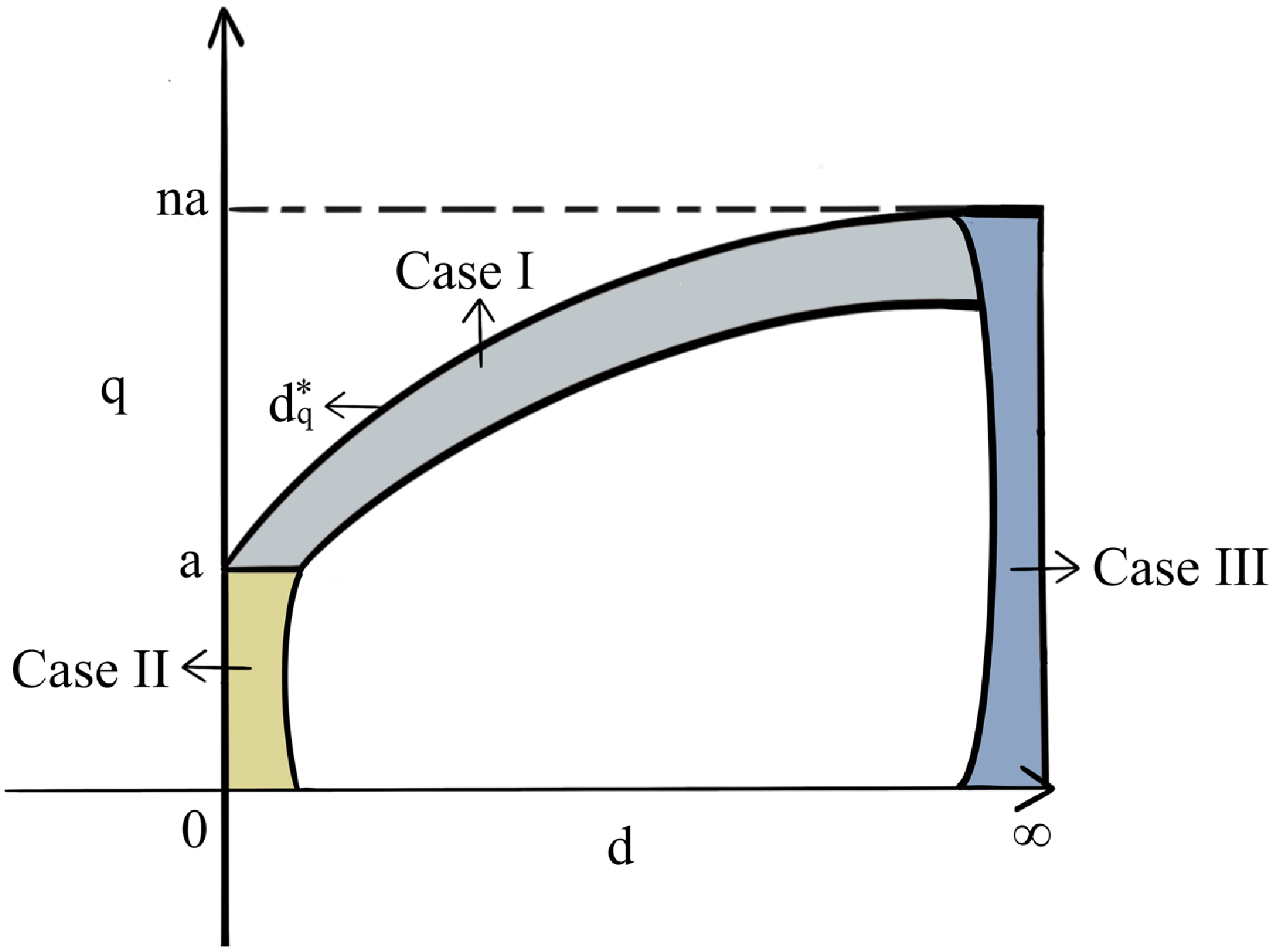

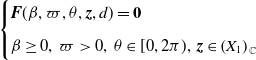

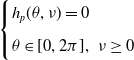

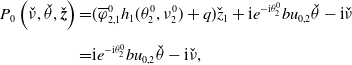

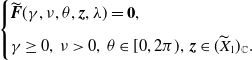

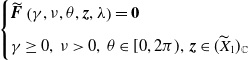

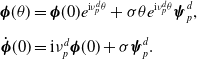

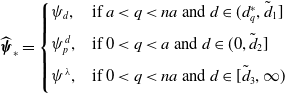

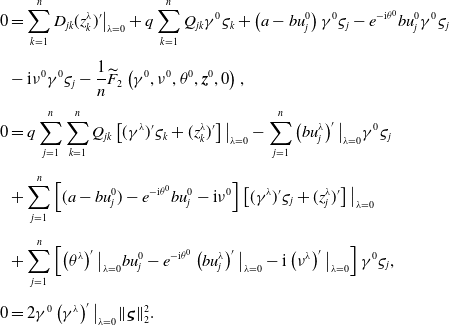

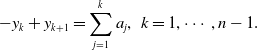

The species in streams are subject to both random and directed movements. For example, the following Figure 1 represents stream to a lake, and the diffusive flux into and from the lake balances. Therefore, the flux into the lake at the downstream end is only the advective flux, and one can refer to [Reference Lou34, Reference Lou and Lutscher35, Reference Vasilyeva and Lutscher49] for more biological explanation. For the river network illustrated in Figure 1, the dispersion matrix

![]() $ (L_{jk})$

in model (1.1) takes the following form:

$ (L_{jk})$

in model (1.1) takes the following form:

Here,

![]() $d$

and

$d$

and

![]() $q$

are the random diffusion rate and drift rate, respectively; and

$q$

are the random diffusion rate and drift rate, respectively; and

![]() $D=(D_{ij})$

and

$D=(D_{ij})$

and

![]() $Q=(Q_{ij})$

represent the diffusion pattern and directed movement pattern of individuals, respectively, where

$Q=(Q_{ij})$

represent the diffusion pattern and directed movement pattern of individuals, respectively, where

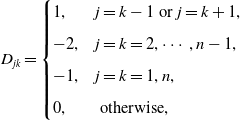

\begin{equation} D_{jk}= \begin{cases} 1, & j=k-1 \text{ or } j=k+1, \\[5pt] -2, & j=k=2,\cdots, n-1, \\[5pt] -1, & j=k=1, n, \\[5pt] 0, & \text{ otherwise, } \end{cases} \end{equation}

\begin{equation} D_{jk}= \begin{cases} 1, & j=k-1 \text{ or } j=k+1, \\[5pt] -2, & j=k=2,\cdots, n-1, \\[5pt] -1, & j=k=1, n, \\[5pt] 0, & \text{ otherwise, } \end{cases} \end{equation}

and

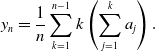

\begin{equation} Q_{jk}= \begin{cases} 1, & j=k+1, \\[5pt] -1, & j=k=1,\cdots, n, \\[5pt] 0, & \text{ otherwise. } \end{cases} \end{equation}

\begin{equation} Q_{jk}= \begin{cases} 1, & j=k+1, \\[5pt] -1, & j=k=1,\cdots, n, \\[5pt] 0, & \text{ otherwise. } \end{cases} \end{equation}
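As a quick consistency check on (1.3) and (1.4), the following Python sketch builds $D$ and $Q$ and inspects their column sums. It assumes NumPy, and `river_matrices` is a hypothetical helper, not code from this paper. The column sums of $D$ vanish. Those of $Q$ also vanish, except for $-1$ in the last column. Hence $\textbf{1}^T(dD+qQ){\boldsymbol{u}}=-qu_n$: random diffusion conserves the total population, and the drift removes individuals only at the downstream end.

```python
import numpy as np


def river_matrices(n):
    """Return (D, Q) as defined in (1.3) and (1.4) for n >= 2 patches."""
    if n < 2:
        raise ValueError("the river network needs at least two patches")
    # D: symmetric second-difference matrix with no-flux ends
    D = -2.0 * np.eye(n) + np.eye(n, k=1) + np.eye(n, k=-1)
    D[0, 0] = D[-1, -1] = -1.0  # patches 1 and n have a single neighbour
    # Q: Q[j, j-1] = 1 (drift from patch j-1 into patch j), Q[j, j] = -1
    Q = -np.eye(n) + np.eye(n, k=-1)
    return D, Q


D, Q = river_matrices(5)
# Column k sum = change in total population caused by a unit density in patch k
print(D.sum(axis=0))  # all zeros: random diffusion conserves mass
print(Q.sum(axis=0))  # zero except the last entry (-1): loss at the downstream end
```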

The population dynamics in streams have been studied extensively and can be modelled by discrete patch models or partial differential equation (PDE) models. It is well known that stream flow carries individuals to downstream locations, which is unfavourable for their persistence; see, for example, [Reference Lou and Lutscher35, Reference Lou and Zhou36, Reference Speirs and Gurney45, Reference Vasilyeva and Lutscher49] for PDE models. Directed drift is also a disadvantage for two competing species. To win the competition, a species needs a faster random movement rate to compensate for the net loss induced by directed movement; see, for example, [Reference Cantrell, Cosner and Lou3, Reference Chen, Lam and Lou13, Reference Lou and Lutscher35, Reference Lou and Zhou36, Reference Zhou53] for PDE models and [Reference Chen, Liu and Wu6, Reference Hamida22, Reference Jiang, Lam and Lou25, Reference Jiang, Lam and Lou26, Reference Noble42] for discrete patch models. A natural question is:

- $(\text{Q}_1)$ Does model (1.1) undergo Hopf bifurcations when $(L_{jk})$ is defined in (1.2), and how do directed movements of individuals affect these Hopf bifurcations?

We remark that $d$ and $q$ are generally not proportional. Consequently, the results in [Reference Chen, Shen and Wei8, Reference Huang, Chen and Zou24] do not apply to this type of dispersion matrix.

Figure 1. A sample river network.

In this paper, we aim to answer question

![]() $(\text{Q}_1)$

and consider the river network illustrated in Figure 1. To emphasise the effect of directed drift, we exclude the effect of spatial heterogeneity, and let

$(\text{Q}_1)$

and consider the river network illustrated in Figure 1. To emphasise the effect of directed drift, we exclude the effect of spatial heterogeneity, and let

![]() $a_1=\cdots =a_n=a$

and

$a_1=\cdots =a_n=a$

and

![]() $b_1=\cdots =b_n=b$

in model (1.1). Then model (1.1) is reduced to the following form:

$b_1=\cdots =b_n=b$

in model (1.1). Then model (1.1) is reduced to the following form:

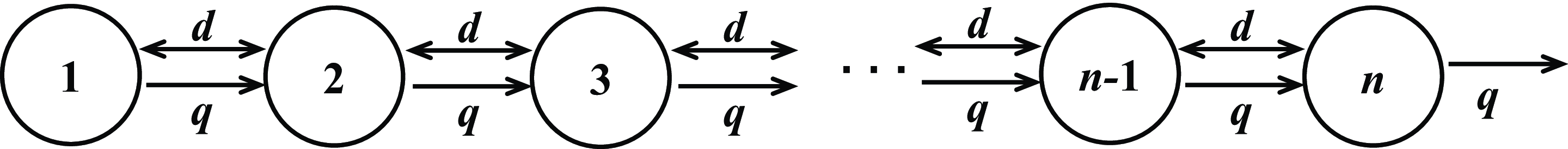

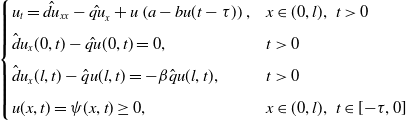

\begin{equation} \begin{cases} \displaystyle \frac{d u_j}{d t}=\sum _{k=1}^{n}\left (d D_{jk}+ q Q_{jk}\right )u_k + u_j\left (a- b u_j(t-\tau )\right ),&t\gt 0,\;\;j=1,\cdots, n,\\[9pt] \displaystyle {\boldsymbol{u}}(t)=\boldsymbol{\psi }(t)\geq 0,&t\in [\!-\!\tau, 0], \end{cases} \end{equation}

\begin{equation} \begin{cases} \displaystyle \frac{d u_j}{d t}=\sum _{k=1}^{n}\left (d D_{jk}+ q Q_{jk}\right )u_k + u_j\left (a- b u_j(t-\tau )\right ),&t\gt 0,\;\;j=1,\cdots, n,\\[9pt] \displaystyle {\boldsymbol{u}}(t)=\boldsymbol{\psi }(t)\geq 0,&t\in [\!-\!\tau, 0], \end{cases} \end{equation}

where $n\geq 2$ is the number of patches, $d$ and $q$ are the random diffusion rate and the drift rate, respectively, $D=(D_{jk})$ and $Q=(Q_{jk})$ are defined in (1.3) and (1.4), respectively, and the parameters $a,b,\tau \gt 0$ have the same meanings as in model (1.1).
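To experiment with model (1.5) numerically, here is a minimal forward-Euler sketch (method of steps) in Python with NumPy. It rests on three assumptions:

- the initial history $\boldsymbol{\psi}$ is constant;
- the step size `dt` divides $\tau$;
- the helper names are hypothetical.

This is not the scheme used for the simulations reported in Section 5. A fixed point of the Euler map is exactly an equilibrium of (1.5), so a converged run can be checked directly against the equilibrium equations.

```python
import numpy as np


def river_matrix(n, d, q):
    """Return dD + qQ with D from (1.3) and Q from (1.4)."""
    D = -2.0 * np.eye(n) + np.eye(n, k=1) + np.eye(n, k=-1)
    D[0, 0] = D[-1, -1] = -1.0
    Q = -np.eye(n) + np.eye(n, k=-1)
    return d * D + q * Q


def simulate(n, d, q, a, b, tau, T, dt=1e-2, psi=None):
    """Forward-Euler sketch of (1.5) with a constant history psi on [-tau, 0].

    Assumes tau is (numerically) an integer multiple of dt. Returns the
    states at t = 0, dt, ..., T as an array of shape (T/dt + 1, n).
    """
    L = river_matrix(n, d, q)
    lag = int(round(tau / dt))
    steps = int(round(T / dt))
    if psi is None:
        psi = np.full(n, a / (2.0 * b))  # arbitrary positive history
    # hist[m] holds u at time (m - lag) * dt; rows 0..lag are the history
    hist = np.empty((lag + steps + 1, n))
    hist[: lag + 1] = psi
    for m in range(lag, lag + steps):
        u, u_delayed = hist[m], hist[m - lag]  # u(t) and u(t - tau)
        hist[m + 1] = u + dt * (L @ u + u * (a - b * u_delayed))
    return hist[lag:]


# q < a, so a positive equilibrium exists; a short delay is expected not to destabilise it
traj = simulate(n=3, d=1.0, q=0.5, a=1.0, b=1.0, tau=0.2, T=150.0)
u_end = traj[-1]
residual = river_matrix(3, 1.0, 0.5) @ u_end + u_end * (1.0 - u_end)
print(u_end, np.abs(residual).max())
```

For larger $\tau$, the same routine can be used to look for sustained oscillations, keeping in mind that explicit Euler with a coarse step can distort amplitudes.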

Our study is also motivated by some researches on reaction–diffusion models with time delay. One can refer to [Reference An, Wang and Wang1, Reference Busenberg and Huang2, Reference Chen and Shi9, Reference Chen and Yu12, Reference Guo19, Reference Guo and Yan20, Reference Hu and Yuan23, Reference Su, Wei and Shi46, Reference Yan and Li50, Reference Yan and Li51] and [Reference Chen, Lou and Wei7, Reference Chen, Wei and Zhang11, Reference Jin and Yuan27, Reference Li and Dai30, Reference Liu and Chen33, Reference Ma and Feng38, Reference Meng, Liu and Jin41, Reference Sun and Yuan47] for reaction–diffusion models without and with advection term, respectively. The following reaction–diffusion model with time delay

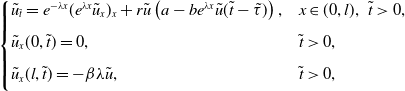

\begin{equation} \begin{cases} u_t=\hat{du}_{xx}-\hat{qu}_x + u\left (a- b u(t-\tau )\right ),&x\in (0,l),\;\;t\gt 0\\[5pt] \hat du_x(0,t)-\hat{qu}(0,t)=0,& t\gt 0\\[5pt] \hat du_x(l,t)-\hat q u(l,t)=-\beta \hat q u(l,t),& t\gt 0\\[5pt] u(x,t)=\psi (x,t)\geq 0,&x\in (0,l),\;\;t\in [\!-\!\tau, 0] \end{cases} \end{equation}

\begin{equation} \begin{cases} u_t=\hat{du}_{xx}-\hat{qu}_x + u\left (a- b u(t-\tau )\right ),&x\in (0,l),\;\;t\gt 0\\[5pt] \hat du_x(0,t)-\hat{qu}(0,t)=0,& t\gt 0\\[5pt] \hat du_x(l,t)-\hat q u(l,t)=-\beta \hat q u(l,t),& t\gt 0\\[5pt] u(x,t)=\psi (x,t)\geq 0,&x\in (0,l),\;\;t\in [\!-\!\tau, 0] \end{cases} \end{equation}

models population dynamics in streams, where

![]() $\beta \ge 0$

represents the loss of individuals at the downstream end. Actually, model (1.5) can be viewed as a discrete version of model (1.6) with

$\beta \ge 0$

represents the loss of individuals at the downstream end. Actually, model (1.5) can be viewed as a discrete version of model (1.6) with

![]() $\beta =1$

, which describes streams into a lake at the downstream end. Divide the interval

$\beta =1$

, which describes streams into a lake at the downstream end. Divide the interval

![]() $[0, l]$

into

$[0, l]$

into

![]() $n+1$

sub-intervals with equal length

$n+1$

sub-intervals with equal length

![]() $\Delta x=l/(n+1)$

, and denote the endpoints by

$\Delta x=l/(n+1)$

, and denote the endpoints by

![]() $0, 1,\cdots, n+1$

. Discretising the spatial variable of the first equation of (1.6) at endpoints

$0, 1,\cdots, n+1$

. Discretising the spatial variable of the first equation of (1.6) at endpoints

![]() $j=1,\cdots, n$

, we obtain the following equation:

$j=1,\cdots, n$

, we obtain the following equation:

where $u_j(t)$ is the population density at endpoint $j$. Let $d=\hat{d}/(\Delta x)^2$ and $q=\hat{q}/\Delta x$. Then we obtain (1.5) from (1.7) for $j=2,\cdots, n-1$. At the upstream end, we discretise the no-flux boundary condition and obtain

\begin{equation} \hat d\,\frac{u_1-u_0}{\Delta x}-\hat q u_0=0. \tag{1.8} \end{equation}

Plugging (1.8) into (1.7) with $j=1$, we obtain (1.5) for $j=1$. Similarly, discretising the boundary condition at the downstream end with $\beta =1$, we have $u_{n}=u_{n+1}$. Plugging this into (1.7), we obtain (1.5) for $j=n$. The above discretisation of model (1.6) with $\tau =0$ can be found in [Reference Chen, Shi, Shuai and Wu10, Reference Lou34], and we include it here for the sake of completeness.
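The elimination of the ghost values $u_0$ and $u_{n+1}$ can be checked numerically. The Python sketch below (NumPy; `fd_matrix` and `river_matrix` are hypothetical helpers) makes the following assumptions:

- the semi-discrete equation uses the central second difference $\hat d(u_{j+1}-2u_j+u_{j-1})/(\Delta x)^2$ and the upwind first difference $-\hat q(u_j-u_{j-1})/\Delta x$;
- the upstream closure is $\hat d(u_1-u_0)/\Delta x-\hat q u_0=0$, and the downstream closure is $u_{n+1}=u_n$.

The sketch applies the linear part of the scheme to each unit vector. It then compares the result with $dD+qQ$ for $d=\hat d/(\Delta x)^2$ and $q=\hat q/\Delta x$.

```python
import numpy as np


def river_matrix(n, d, q):
    """dD + qQ with D, Q from (1.3)-(1.4)."""
    D = -2.0 * np.eye(n) + np.eye(n, k=1) + np.eye(n, k=-1)
    D[0, 0] = D[-1, -1] = -1.0
    Q = -np.eye(n) + np.eye(n, k=-1)
    return d * D + q * Q


def fd_matrix(n, d_hat, q_hat, length=1.0):
    """Linear part of the assumed finite-difference scheme after eliminating ghost nodes."""
    dx = length / (n + 1)
    A = np.zeros((n, n))
    for k in range(n):
        u = np.zeros(n + 2)  # nodal values u_0, ..., u_{n+1}
        u[k + 1] = 1.0       # unit density at interior node k + 1
        # upstream closure d_hat*(u_1 - u_0)/dx - q_hat*u_0 = 0, solved for u_0
        u[0] = d_hat * u[1] / (d_hat + q_hat * dx)
        u[n + 1] = u[n]      # downstream closure for beta = 1
        for j in range(1, n + 1):
            diffusion = d_hat * (u[j + 1] - 2.0 * u[j] + u[j - 1]) / dx**2
            advection = -q_hat * (u[j] - u[j - 1]) / dx  # upwind difference
            A[j - 1, k] = diffusion + advection
    return A


n, d_hat, q_hat, length = 6, 0.3, 0.7, 2.0
dx = length / (n + 1)
print(np.allclose(fd_matrix(n, d_hat, q_hat, length),
                  river_matrix(n, d_hat / dx**2, q_hat / dx)))  # True
```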

For the non-advective case ($\hat q=0$), model (1.6) admits a unique positive steady state $u=a/b$, and it was shown in [Reference Memory40, Reference Yoshida52] that a large delay can make this constant steady state unstable and induce Hopf bifurcations. If $\hat d$ and $\hat q$ are proportional with $\hat q\ne 0$, delay-induced Hopf bifurcations can also be investigated. Letting $\tilde u=e^{-(\hat q/\hat d)x}u$ and $\tilde t=\hat d t$, model (1.6) can be transformed as follows:

\begin{equation} \begin{cases} \tilde u_{\tilde t}=e^{-\lambda x}(e^{\lambda x}\tilde u_{x})_x+r \tilde u\left (a- be^{\lambda x} \tilde u(\tilde t-\tilde \tau )\right ),&x\in (0,l),\;\;\tilde t\gt 0,\\[5pt] \tilde u_x(0,\tilde t)=0,& \tilde t\gt 0,\\[5pt] \tilde u_x(l,\tilde t)=-\beta \lambda \tilde u(l,\tilde t),& \tilde t\gt 0, \end{cases} \tag{1.9} \end{equation}

where $\lambda =\hat q/\hat d$, $r=1/\hat d$ and $\tilde \tau =\hat d\tau$. For the case $\beta =0$, it was shown in [Reference Chen, Wei and Zhang11] that delay can induce Hopf bifurcations for model (1.9) if $r\ll 1$. This implies that delay-induced Hopf bifurcations can occur for the original model (1.6) if $\hat d$ and $\hat q$ are proportional and both large. To our knowledge, the case where $\hat d$ and $\hat q$ are not proportional has not been studied for model (1.6). Our study of question $(\text{Q}_1)$ also addresses this problem in a discrete setting.
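For the reader's convenience, here is a short verification of the transformation leading to (1.9). It is our own computation, included as a sketch, and writes $u=e^{\lambda x}\tilde u$ with $\lambda=\hat q/\hat d$, so that $\hat q=\hat d\lambda$:

\begin{equation*} \begin{aligned} \hat d u_{xx}-\hat q u_x &= e^{\lambda x}\left (\hat d\tilde u_{xx}+(2\hat d\lambda -\hat q)\tilde u_x+(\hat d\lambda ^2-\hat q\lambda )\tilde u\right ) = \hat d e^{\lambda x}\left (\tilde u_{xx}+\lambda \tilde u_x\right ) = \hat d\left (e^{\lambda x}\tilde u_x\right )_x,\\[5pt] \hat d u_x-\hat q u &= \hat d e^{\lambda x}\left (\tilde u_x+\lambda \tilde u\right )-\hat q e^{\lambda x}\tilde u=\hat d e^{\lambda x}\tilde u_x. \end{aligned} \end{equation*}

Divide the first equation of (1.6) by $e^{\lambda x}$ and rescale time by $\tilde t=\hat d t$, so that $\partial _t=\hat d\,\partial _{\tilde t}$ and the delay becomes $\tilde \tau =\hat d\tau$. This gives the first equation of (1.9) with $r=1/\hat d$. The second identity turns the boundary conditions of (1.6) into $\tilde u_x(0,\tilde t)=0$ and $\tilde u_x(l,\tilde t)=-\beta \lambda \tilde u(l,\tilde t)$.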

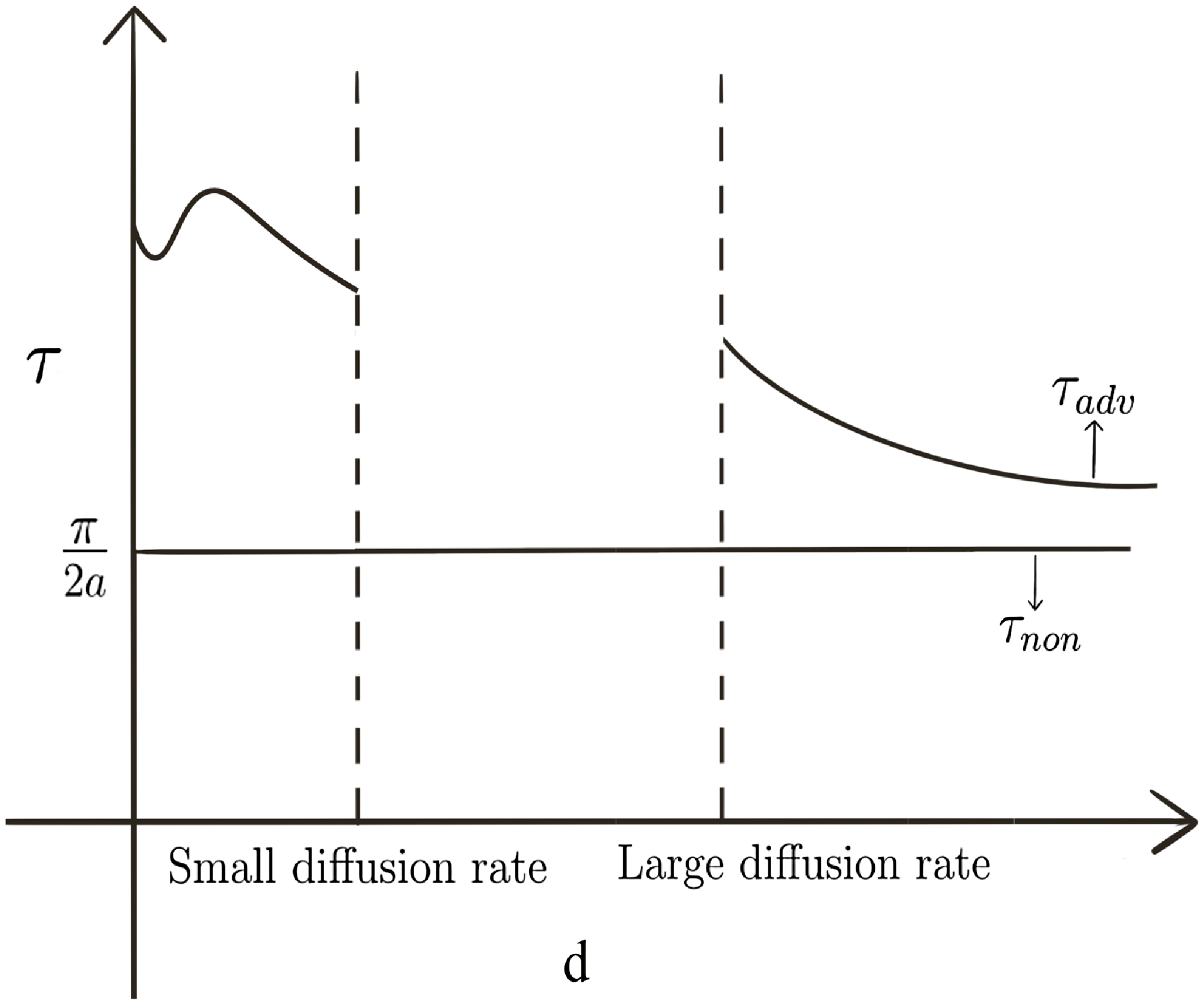

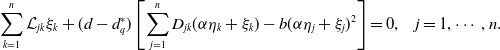

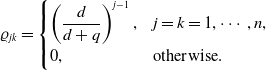

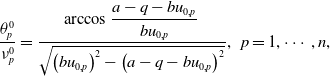

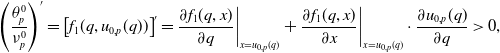

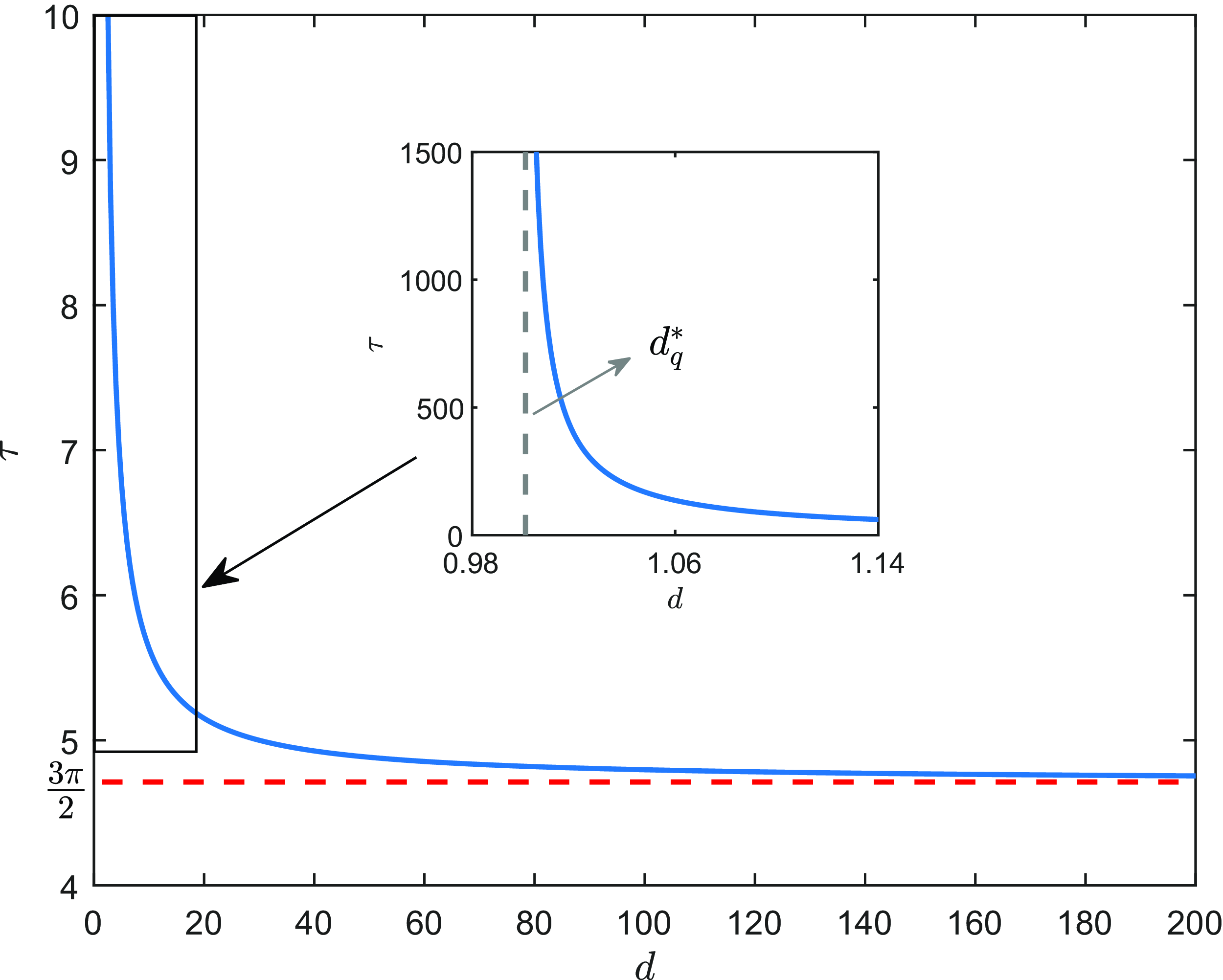

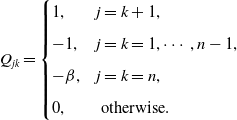

The main results of the paper are summarised as follows. It is well known that large delay can induce Hopf bifurcations for model (1.5) if the directed drift rate

![]() $q=0$

(the non-advective case), and the first Hopf bifurcation value is

$q=0$

(the non-advective case), and the first Hopf bifurcation value is

![]() $\displaystyle \tau _{non}=\pi /2a$

, which is independent of the random diffusion rate

$\displaystyle \tau _{non}=\pi /2a$

, which is independent of the random diffusion rate

![]() $d$

(Proposition 5.1). In contrast, if

$d$

(Proposition 5.1). In contrast, if

![]() $q\ne 0$

(the advection case), the first Hopf bifurcation value

$q\ne 0$

(the advection case), the first Hopf bifurcation value

![]() $\tau _{adv}$

depends on

$\tau _{adv}$

depends on

![]() $d$

and is strictly monotone decreasing in

$d$

and is strictly monotone decreasing in

![]() $d\in (\hat d_3,\infty )$

with

$d\in (\hat d_3,\infty )$

with

![]() $\hat d_{3}\gg 1$

(Proposition 5.2). Moreover, we show that

$\hat d_{3}\gg 1$

(Proposition 5.2). Moreover, we show that

![]() $\tau _{adv}\gt \tau _{non}$

for

$\tau _{adv}\gt \tau _{non}$

for

![]() $d\gg 1$

or

$d\gg 1$

or

![]() $d\ll 1$

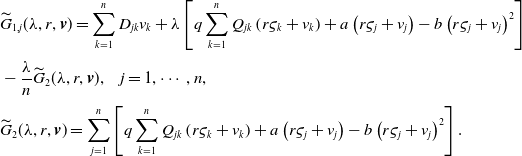

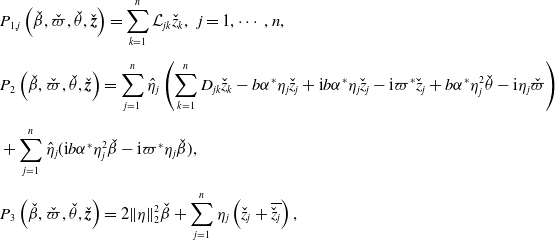

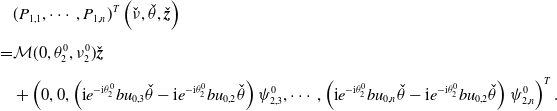

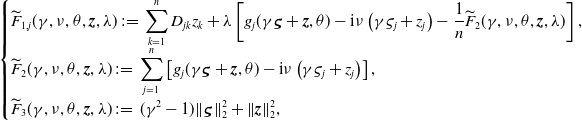

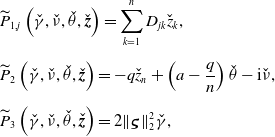

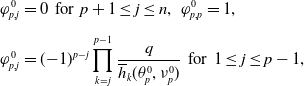

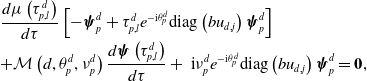

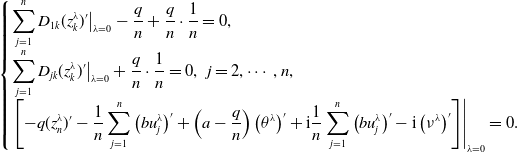

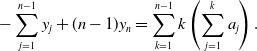

(Propositions 5.2 and 5.3), which suggests that directed movements of the individuals inhibit the occurrence of Hopf bifurcations. The comparison of Hopf bifurcation values between non-advective and advective cases is illustrated in Figure 2. Moreover, we obtain that the total population size is strictly increasing in

$d\ll 1$

(Propositions 5.2 and 5.3), which suggests that directed movements of the individuals inhibit the occurrence of Hopf bifurcations. The comparison of Hopf bifurcation values between non-advective and advective cases is illustrated in Figure 2. Moreover, we obtain that the total population size is strictly increasing in

![]() $d\in [\delta, \infty )$

with

$d\in [\delta, \infty )$

with

![]() $\delta \gg 1$

(Proposition 2.6 and remark 2.7).

$\delta \gg 1$

(Proposition 2.6 and remark 2.7).
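For the non-advective case, the value $\tau _{non}=\pi /(2a)$ can be recovered mode by mode. When $q=0$, the positive equilibrium is $(a/b)\textbf{1}$ (since $D\textbf{1}=\textbf{0}$), and linearising (1.5) there gives ${\boldsymbol{v}}'(t)=dD{\boldsymbol{v}}(t)-a{\boldsymbol{v}}(t-\tau )$. For each eigenvalue $\sigma \le 0$ of the symmetric matrix $D$, the characteristic equation is $\mu +s+ae^{-\mu \tau }=0$ with $s=-d\sigma$. A root $\mu =\mathrm{i}\omega$ with $\omega \gt 0$ exists only if $0\le s\lt a$. In that case $\omega =\sqrt{a^2-s^2}$, and the smallest such delay is $\tau =\arccos (-s/a)/\omega$. The Python sketch below evaluates these critical delays; it is our own illustration with hypothetical helper names, not the proof of Proposition 5.1. The smallest delay comes from $\sigma =0$ and equals $\pi /(2a)$ for every $d$.

```python
import numpy as np


def neumann_D(n):
    """Matrix D from (1.3)."""
    D = -2.0 * np.eye(n) + np.eye(n, k=1) + np.eye(n, k=-1)
    D[0, 0] = D[-1, -1] = -1.0
    return D


def first_critical_delay_q0(n, d, a):
    """Smallest tau at which some mode of v' = dDv - a v(t - tau) has a root i*omega."""
    delays = []
    for sigma in np.linalg.eigvalsh(neumann_D(n)):  # eigenvalues sigma <= 0
        s = -d * sigma
        if s < a:  # modes with s >= a never reach the imaginary axis
            omega = np.sqrt(a * a - s * s)
            delays.append(np.arccos(-s / a) / omega)
    return min(delays)


a = 0.8
for d in (0.01, 1.0, 100.0):
    print(d, first_critical_delay_q0(6, d, a), np.pi / (2 * a))
```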

Figure 2. The comparison of Hopf bifurcation values.

For patch models in homogeneous non-advective environments, one can refer to [Reference Fernandes and Aguiar16–Reference Gou, Song and Jin18] for the framework of Turing and Hopf bifurcations. See also [Reference Chang, Duan, Sun and Jin4, Reference Duan, Chang and Jin15] for cross-diffusion-induced Turing bifurcations and [Reference Chang, Liu, Sun, Wang and Jin5, Reference Liu, Cong and Su32, Reference Madras, Wu and Zou39, Reference Petit, Asllani, Fanelli, Lauwens and Carletti43, Reference So, Wu and Zou44, Reference Tian and Ruan48] for delay-induced Hopf bifurcations. In homogeneous advective environments, explicit expressions for the positive equilibria are not available. This makes the bifurcation analysis more difficult, and in this paper we overcome these difficulties by constructing equivalent eigenvalue problems.

The rest of the paper is organised as follows. In Section 2, we give some preliminaries and obtain some properties of the unique positive equilibrium ${\boldsymbol{u}}_d$ of model (1.5). In Section 3, we study the eigenvalue problem associated with the positive equilibrium ${\boldsymbol{u}}_d$ for three cases. In Section 4, we obtain the local dynamics and the existence of Hopf bifurcations for model (1.5). Finally, in Section 5, we show the effect of the drift rate on Hopf bifurcation values and give some numerical simulations.

2. Preliminaries

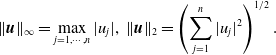

We first list some notation for later use. Denote $\textbf{1}=(1,\cdots, 1)^T$, and denote the real and imaginary parts of $\mu \in \mathbb{C}$ by $\mathcal{R}e \mu$ and $\mathcal{I}m \mu$, respectively. For a space $Z$, we denote the complexification of $Z$ by $Z_{\mathbb{C}}\;:\!=\;\{x_1+\mathrm{i}x_2|x_1,x_2\in Z\}$. For a linear operator $T$, we denote the domain, the range and the kernel of $T$ by $\mathscr{D}(T)$, $\mathscr{R}(T)$ and $\mathscr{N}(T)$, respectively. For $\mathbb{C}^n$, we choose the inner product ${\langle {\boldsymbol{u}},{{\boldsymbol{v}}}\rangle }=\sum _{j=1}^{n}\overline{u}_j v_j$ for ${\boldsymbol{u}},{{\boldsymbol{v}}}\in \mathbb{C}^n$ and denote

\begin{equation*} \begin{split} \|{\boldsymbol{u}}\|_\infty =\max _{j=1,\cdots, n}|u_j|,\ \|{\boldsymbol{u}}\|_2=\left (\sum _{j=1}^{n}|u_j|^2 \right )^{1/2}. \end{split} \end{equation*}

For ${\boldsymbol{u}}=(u_1,\cdots, u_n)^T\in \mathbb{R}^n$, we write ${\boldsymbol{u}}\gg \textbf{0}$ if $u_j\gt 0$ for all $j=1,\cdots, n$. For an $n\times n$ real-valued matrix $M$, we denote the spectral bound of $M$ by

\begin{equation*} s(M)\;:\!=\;\max \{\mathcal{R}e \mu \;:\; \mu \text{ is an eigenvalue of } M\}, \end{equation*}

and the spectral radius of $M$ by

\begin{equation*} \rho (M)\;:\!=\;\max \{|\mu | \;:\; \mu \text{ is an eigenvalue of } M\}. \end{equation*}

An eigenvalue of $M$ with a positive eigenvector is called the principal eigenvalue of $M$. A real-valued square matrix $M$ with non-negative off-diagonal entries is called an essentially non-negative matrix. If $M$ is an irreducible essentially non-negative matrix, then there exists $c\gt 0$ such that $M+c I$ is an irreducible non-negative matrix. It follows from [Reference Li and Schneider28, Theorem 2.1] that

- (i) $\rho (M+c I)$ is positive and is an algebraically simple eigenvalue of $M+cI$ with a positive eigenvector;
- (ii) $\rho (M+c I)$ is the unique eigenvalue with a non-negative eigenvector.

By (i), and since every eigenvalue $\mu$ of $M+cI$ satisfies $\mathcal{R}e \mu \le |\mu |\le \rho (M+cI)$, we have $s(M)+c=s(M+cI)=\rho (M+cI)$. Consequently, $s(M)$ is an algebraically simple eigenvalue of $M$ with a positive eigenvector. By (ii), $s(M)$ is the unique principal eigenvalue of $M$.
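The shift argument above is easy to check numerically for the matrices used later. The Python sketch below (NumPy; helper names are hypothetical) works as follows:

- it takes $M=dD+qQ+aI$, which is irreducible and essentially non-negative;
- it chooses $c$ so that $M+cI$ is entrywise non-negative;
- it confirms that $s(M)+c=\rho (M+cI)$, and that the eigenvector for $s(M)$ can be scaled to be positive.

```python
import numpy as np


def river_matrix(n, d, q, a):
    """M = dD + qQ + aI with D, Q from (1.3)-(1.4)."""
    D = -2.0 * np.eye(n) + np.eye(n, k=1) + np.eye(n, k=-1)
    D[0, 0] = D[-1, -1] = -1.0
    Q = -np.eye(n) + np.eye(n, k=-1)
    return d * D + q * Q + a * np.eye(n)


def spectral_bound(M):
    return np.max(np.linalg.eigvals(M).real)


def spectral_radius(M):
    return np.max(np.abs(np.linalg.eigvals(M)))


def principal_eigenvector(M):
    """Eigenvector for s(M), normalised so that its entries sum to 1."""
    vals, vecs = np.linalg.eig(M)
    v = vecs[:, np.argmax(vals.real)].real
    return v / v.sum()


M = river_matrix(n=5, d=0.7, q=1.2, a=1.0)
c = max(0.0, -np.min(np.diag(M))) + 1.0  # makes M + cI entrywise non-negative
print(spectral_bound(M) + c, spectral_radius(M + c * np.eye(5)))  # equal up to rounding
print(principal_eigenvector(M))  # all entries positive
```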

Consider the following eigenvalue problem:

\begin{equation} \sum _{k=1}^{n}\left (d D_{jk}+ q Q_{jk}\right )\phi _k + a\phi _j=\lambda \phi _j,\;\;j=1,\cdots, n, \tag{2.1} \end{equation}

where $(D_{jk})$ and $(Q_{jk})$ are defined in (1.3) and (1.4), respectively. Since $dD+qQ+aI$ is an irreducible and essentially non-negative matrix, (2.1) admits a unique principal eigenvalue $\lambda _1(d,q)$ with

\begin{equation*} \lambda _1(d,q)=s(dD+qQ+aI). \end{equation*}

The global dynamics of model (1.5) for $\tau =0$ is determined by the sign of $\lambda _1(d,q)$ (see [Reference Cosner14, Reference Li and Shuai29, Reference Lu and Takeuchi37] for the proof).

Lemma 2.1.

Suppose that $\tau =0$. If $\lambda _1(d,q)\leq 0$, then the trivial equilibrium $\textbf{0}$ of model (1.5) is globally asymptotically stable; if $\lambda _1(d,q)\gt 0$, then model (1.5) admits a unique positive equilibrium, which is globally asymptotically stable.

For later use, we cite the following result from [Reference Chen, Shi, Shuai and Wu10].

Lemma 2.2.

Let $\lambda _1(d,q)$ be the principal eigenvalue of (2.1). Then the following statements hold:

- (i) For fixed $d\gt 0$, $\lambda _1(d,q)$ is strictly decreasing with respect to $q$ in $[0,\infty )$, and there exists $q^*_d\gt 0$ such that $\lambda _1(d,q^*_d)=0$, $\lambda _1(d,q)\lt 0$ for $q\gt q^*_d$, and $\lambda _1(d,q)\gt 0$ for $q\lt q^*_d$;
- (ii) $q^*_d$ is strictly increasing in $d\in (0,\infty )$ with $\lim _{d\to 0}q^*_d=a$ and $\lim _{d\to \infty }q^*_d=na$.

Here, we remark that Lemma 2.2 (i) follows from [Reference Chen, Shi, Shuai and Wu10, Lemma 3.1 and Proposition 3.2 (i)], and Lemma 2.2 (ii) follows from [Reference Chen, Shi, Shuai and Wu10, Lemma 3.7]. The following result is deduced directly from Lemma 2.2.
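Lemma 2.2 also suggests a simple way to compute $q^*_d$. Since $\lambda _1(d,\cdot )$ is strictly decreasing and $q^*_d\in (a,na)$, bisection on $[a,na]$ applies. The Python sketch below (NumPy; helper names are hypothetical) does this. For $n=2$, one finds $\lambda _1(d,q)=a-d-q+\sqrt{d(d+q)}$. With $a=d=1$, the root is then the golden ratio $(1+\sqrt 5)/2$, which gives a convenient sanity check.

```python
import numpy as np


def lambda1(n, d, q, a):
    """Principal eigenvalue s(dD + qQ + aI) of (2.1), computed numerically."""
    D = -2.0 * np.eye(n) + np.eye(n, k=1) + np.eye(n, k=-1)
    D[0, 0] = D[-1, -1] = -1.0
    Q = -np.eye(n) + np.eye(n, k=-1)
    return np.max(np.linalg.eigvals(d * D + q * Q + a * np.eye(n)).real)


def q_star(n, d, a, tol=1e-12):
    """Bisection for lambda1(d, q) = 0 on the bracket [a, n*a] suggested by Lemma 2.2."""
    lo, hi = a, n * a  # lambda1 > 0 at q = a and lambda1 < 0 at q = n*a
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if lambda1(n, d, mid, a) > 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


print(q_star(2, d=1.0, a=1.0), (1 + np.sqrt(5)) / 2)  # should agree
print([round(q_star(4, d, 1.0), 4) for d in (0.1, 1.0, 10.0)])  # increasing, inside (1, 4)
```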

Lemma 2.3.

Let $\lambda _1(d,q)$ be the principal eigenvalue of (2.1). Then the following statements hold:

- (i) If $q\in (0,a]$, then $\lambda _1(d,q)\gt 0$ for all $d\gt 0$;
- (ii) If $q\in (a,na)$, then there exists $d^*_q\gt 0$ such that $\lambda _1(d^*_q,q)=0$, $\lambda _1(d,q)\lt 0$ for $0\lt d\lt d^*_q$ and $\lambda _1(d,q)\gt 0$ for $d\gt d^*_q$;
- (iii) If $q\in [na,\infty )$, then $\lambda _1(d,q)\lt 0$ for all $d\gt 0$.
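Similarly, $d^*_q$ in Lemma 2.3 (ii) can be located by bisection in $d$. The bracket starts at $d=0$, where $\lambda _1(0,q)=a-q\lt 0$ because $qQ+aI$ is lower triangular. An upper bound is then doubled until $\lambda _1\gt 0$. For $n=2$, solving $a-d-q+\sqrt{d(d+q)}=0$ gives $d^*_q=(q-a)^2/(2a-q)$, which we use as a check. The Python sketch below (NumPy; helper names are hypothetical) also reads off the long-term behaviour for $\tau =0$ from the sign of $\lambda _1$, as in Lemma 2.1 and Proposition 2.4.

```python
import numpy as np


def lambda1(n, d, q, a):
    """Principal eigenvalue s(dD + qQ + aI) of (2.1), computed numerically."""
    D = -2.0 * np.eye(n) + np.eye(n, k=1) + np.eye(n, k=-1)
    D[0, 0] = D[-1, -1] = -1.0
    Q = -np.eye(n) + np.eye(n, k=-1)
    return np.max(np.linalg.eigvals(d * D + q * Q + a * np.eye(n)).real)


def d_star(n, q, a, tol=1e-12):
    """Root d*_q of d -> lambda1(d, q) for a < q < n*a (Lemma 2.3 (ii))."""
    if not a < q < n * a:
        raise ValueError("d*_q is only defined for a < q < n*a")
    lo, hi = 0.0, 1.0  # lambda1(0, q) = a - q < 0
    while lambda1(n, hi, q, a) <= 0:
        lo, hi = hi, 2.0 * hi  # expand the bracket to the right
    while hi - lo > tol * hi:
        mid = 0.5 * (lo + hi)
        if lambda1(n, mid, q, a) > 0:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)


def tau0_outcome(n, d, q, a):
    """Long-term behaviour of (1.5) with tau = 0, via the sign of lambda1."""
    return "positive equilibrium" if lambda1(n, d, q, a) > 0 else "extinction"


a, q = 1.0, 1.5
print(d_star(2, q, a), (q - a) ** 2 / (2 * a - q))  # both approximately 0.5
print(tau0_outcome(2, 0.4, q, a), tau0_outcome(2, 0.6, q, a))
```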

By Lemmas 2.1 and 2.3, we obtain the global dynamics of model (1.5) for $\tau =0$.

Proposition 2.4.

Suppose that $d,q,a,b\gt 0$ and $\tau =0$. Then the following statements hold:

- (i) If $q\in (0,a]$, then model (1.5) admits a unique positive equilibrium ${\boldsymbol{u}}_{d}\gg \textbf{0}$ for all $d\gt 0$, which is globally asymptotically stable;
- (ii) If $q\in (a,na)$, then the trivial equilibrium $\textbf{0}$ of model (1.5) is globally asymptotically stable for $d\in (0,d_q^*]$; and for $d\in (d_q^*,\infty )$, model (1.5) admits a unique positive equilibrium ${\boldsymbol{u}}_{d}\gg \textbf{0}$, which is globally asymptotically stable;
- (iii) If $q\in [na,\infty )$, then the trivial equilibrium $\textbf{0}$ of model (1.5) is globally asymptotically stable.

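Clearly, ${\boldsymbol{u}}_d=(u_{d,1},\cdots, u_{d,n})^T$ satisfies

\begin{equation*} \sum _{k=1}^{n}\left (d D_{jk}+ q Q_{jk}\right )u_{d,k} + u_{d,j}\left (a- b u_{d,j}\right )=0,\;\;j=1,\cdots, n. \end{equation*}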

For simplicity, we first introduce some notation. Define

\begin{equation*} \mathcal L\;:\!=\;d_q^*D+qQ+aI, \end{equation*}

where $d_q^*$ is defined in Lemma 2.3. It follows from Lemma 2.3 (ii) that $0$ is the principal eigenvalue of $\mathcal L$, and a corresponding eigenvector is

\begin{equation} \boldsymbol{\eta }=(\eta _1,\cdots, \eta _n)^T\gg \textbf{0}. \tag{2.4} \end{equation}

Clearly, $0$ is also the principal eigenvalue of $\mathcal L^T$, and a corresponding eigenvector is

\begin{equation} \hat{\boldsymbol{\eta }}=(\hat \eta _1,\cdots, \hat \eta _n)^T\gg \textbf{0}. \tag{2.5} \end{equation}

Here, we remark that $0$ is an algebraically simple eigenvalue of both $\mathcal L$ and $\mathcal L^T$, and the corresponding eigenvectors are unique up to multiplication by a scalar. Then, we have the following decompositions:

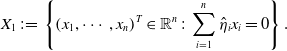

where

\begin{equation} \begin{split}{X}_{1}&\;:\!=\;\left \{\left (x_{1}, \cdots, x_{n}\right )^{T} \in \mathbb{R}^{n} \;:\; \sum _{i=1}^{n}{\hat \eta }_{i} x_{i}=0\right \}. \end{split} \tag{2.7} \end{equation}

In fact, for any ${{\boldsymbol{y}}}=(y_1,\cdots, y_n)^T\in \mathbb{R}^n$, we have ${{\boldsymbol{y}}}=c_1\textbf{1}+\boldsymbol{\xi }_1=c_2\boldsymbol{\eta }+\boldsymbol{\xi }_2$ with $\boldsymbol{\xi }_1,\boldsymbol{\xi }_2\in X_1$, where

\begin{equation*} c_1=\frac{\sum _{i=1}^n\hat \eta _iy_i}{\sum _{i=1}^n\hat \eta _i},\quad c_2=\frac{\sum _{i=1}^n\hat \eta _iy_i}{\sum _{i=1}^n\hat \eta _i\eta _i}. \end{equation*}

Now, we explore further properties of the positive equilibrium ${\boldsymbol{u}}_d$.

Proposition 2.5.

Let $\boldsymbol{\eta }$, $\hat{\boldsymbol{\eta }}$ and $X_1$ be defined in (2.4), (2.5) and (2.7), respectively. Then the following statements hold:

-

(i) For fixed

$q\in (0,a)$

,

$q\in (0,a)$

,

${\boldsymbol{u}}_{d}$

is continuously differentiable with respect to

${\boldsymbol{u}}_{d}$

is continuously differentiable with respect to

$d\in [0,\infty )$

, where

$d\in [0,\infty )$

, where

${\boldsymbol{u}}_d={\boldsymbol{u}}_{0}$

for

${\boldsymbol{u}}_d={\boldsymbol{u}}_{0}$

for

$d=0$

, and

$d=0$

, and

${\boldsymbol{u}}_{0}$

is the unique solution of

(2.8)Moreover,

${\boldsymbol{u}}_{0}$

is the unique solution of

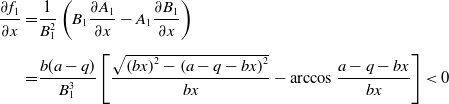

(2.8)Moreover, \begin{equation} \begin{cases} u_{0,1}=\displaystyle \frac{a-q}{b},\;\;qu_{0,j-1}=-u_{0,j}\left (a-q-bu_{0,j}\right )\;\;\text{for}\;\;j=2,\cdots, n,\\[5pt] u_{0,j}\gt 0\;\;\text{for}\;\;j=1,\cdots, n. \end{cases} \end{equation}

(2.9)

\begin{equation} \begin{cases} u_{0,1}=\displaystyle \frac{a-q}{b},\;\;qu_{0,j-1}=-u_{0,j}\left (a-q-bu_{0,j}\right )\;\;\text{for}\;\;j=2,\cdots, n,\\[5pt] u_{0,j}\gt 0\;\;\text{for}\;\;j=1,\cdots, n. \end{cases} \end{equation}

(2.9) \begin{equation} u_{0,1}\lt \dots \lt u_{0,n} ; \end{equation}

\begin{equation} u_{0,1}\lt \dots \lt u_{0,n} ; \end{equation}

-

(ii) For fixed $q\in (a,na)$, ${\boldsymbol{u}}_d$ can be represented as follows:

\begin{equation} {\boldsymbol{u}}_d=(d-d_q^*)(\alpha _d\boldsymbol{\eta }+\boldsymbol{\xi }_d)\;\;\text{for}\;\;d\gt d^*_q. \tag{2.10} \end{equation}

Here, $(\alpha _d,\boldsymbol{\xi }_d)\in \mathbb{R}\times X_1$ is continuously differentiable with respect to $d\in [d^*_q,\infty )$, and for $d=d^*_q$, $(\alpha _d,\boldsymbol{\xi }_d)=\left (\alpha ^*,\textbf{0}\right )$ with

\begin{equation} \alpha ^*=\frac{\sum _{j,k=1}^nD_{jk}\eta _k\hat \eta _j}{b\sum _{j=1}^n\eta _j^2\hat \eta _j}\gt 0. \tag{2.11} \end{equation}
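For $q\in(0,a)$, system (2.8) can be solved one patch at a time. For each $j\ge 2$ the equation is the quadratic $bu_{0,j}^2-(a-q)u_{0,j}-qu_{0,j-1}=0$, which has exactly one positive root. The Python sketch below is an illustration only; the function names `u0_profile` and `H0` are ours. It computes ${\boldsymbol u}_0$ this way and checks it against the components of ${\boldsymbol H}(0,{\boldsymbol u})$ defined in the proof. For sample parameters it also lets one observe the ordering (2.9) and the bound $u_{0,j}\lt a/b$.

```python
import math


def u0_profile(n, a, b, q):
    """Positive solution of (2.8): u_{0,1} = (a - q)/b and, for j >= 2,
    the positive root of b*u^2 - (a - q)*u - q*u_{0,j-1} = 0."""
    if not 0 < q < a:
        raise ValueError("Proposition 2.5(i) assumes q in (0, a)")
    u = [(a - q) / b]
    for _ in range(1, n):
        disc = (a - q) ** 2 + 4.0 * b * q * u[-1]
        u.append(((a - q) + math.sqrt(disc)) / (2.0 * b))
    return u


def H0(u, a, b, q):
    """Components of H(0, u), i.e. the equilibrium equations with d = 0."""
    res = [-q * u[0] + u[0] * (a - b * u[0])]
    for j in range(1, len(u)):
        res.append(q * (u[j - 1] - u[j]) + u[j] * (a - b * u[j]))
    return res


a, b, q = 2.0, 1.0, 0.5
u0 = u0_profile(5, a, b, q)
print("u_0 =", [round(x, 4) for x in u0])
print("max |H(0, u_0)| =", max(abs(r) for r in H0(u0, a, b, q)))
```

The recursion runs from patch $1$ to patch $n$ because $u_{0,j}$ depends only on $u_{0,j-1}$. This mirrors the lower-triangular structure that the proof exploits.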

Proof. (i) It follows from Proposition 2.4 that ${\boldsymbol{u}}_d$ is the unique positive equilibrium of (1.5) and that it is linearly stable, hence non-degenerate. Therefore, by the implicit function theorem, ${\boldsymbol{u}}_d$ is continuously differentiable for $d\in (0,\infty )$. It remains to show that ${\boldsymbol{u}}_d$ is continuously differentiable for $d\in [0,d_1]$ with $0\lt d_1\ll 1$.

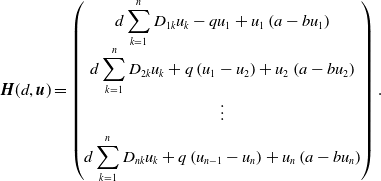

Define

\begin{eqnarray*}{{\boldsymbol{H}}}(d,{\boldsymbol{u}})= \begin{pmatrix} \displaystyle d\sum _{k=1}^{n} D_{1k} u_k-qu_{1}+u_{1}\left (a-bu_{1}\right ) \\[5pt] \displaystyle d\sum _{k=1}^{n} D_{2k} u_k+q\left (u_{1}-u_{2}\right )+u_{2}\left (a-bu_{2}\right ) \\[5pt] \displaystyle \vdots \\[5pt] \displaystyle d\sum _{k=1}^{n} D_{nk} u_k+q\left (u_{n-1}-u_{n}\right )+u_{n}\left (a-bu_{n}\right ) \end{pmatrix}. \end{eqnarray*}

\begin{eqnarray*}{{\boldsymbol{H}}}(d,{\boldsymbol{u}})= \begin{pmatrix} \displaystyle d\sum _{k=1}^{n} D_{1k} u_k-qu_{1}+u_{1}\left (a-bu_{1}\right ) \\[5pt] \displaystyle d\sum _{k=1}^{n} D_{2k} u_k+q\left (u_{1}-u_{2}\right )+u_{2}\left (a-bu_{2}\right ) \\[5pt] \displaystyle \vdots \\[5pt] \displaystyle d\sum _{k=1}^{n} D_{nk} u_k+q\left (u_{n-1}-u_{n}\right )+u_{n}\left (a-bu_{n}\right ) \end{pmatrix}. \end{eqnarray*}

Clearly,

![]() ${{\boldsymbol{H}}}(0,{\boldsymbol{u}}_0)=\textbf{0}$

, where

${{\boldsymbol{H}}}(0,{\boldsymbol{u}}_0)=\textbf{0}$

, where

![]() ${\boldsymbol{u}}_{0}$

is defined by (2.8). Let

${\boldsymbol{u}}_{0}$

is defined by (2.8). Let

![]() $D_{{\boldsymbol{u}}}{{\boldsymbol{H}}}(0,{\boldsymbol{u}}_0)$

be the Jacobian matrix of

$D_{{\boldsymbol{u}}}{{\boldsymbol{H}}}(0,{\boldsymbol{u}}_0)$

be the Jacobian matrix of

![]() ${{\boldsymbol{H}}}(d,{\boldsymbol{u}})$

with respect to

${{\boldsymbol{H}}}(d,{\boldsymbol{u}})$

with respect to

![]() ${\boldsymbol{u}}$

at

${\boldsymbol{u}}$

at

![]() $(0,{\boldsymbol{u}}_0)$

. A direct computation implies that

$(0,{\boldsymbol{u}}_0)$

. A direct computation implies that

![]() $D_{{\boldsymbol{u}}}{{\boldsymbol{H}}}(0,{\boldsymbol{u}}_0)=(h_{j,k})$

with

$D_{{\boldsymbol{u}}}{{\boldsymbol{H}}}(0,{\boldsymbol{u}}_0)=(h_{j,k})$

with

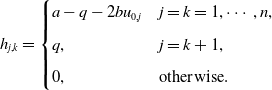

\begin{eqnarray*} h_{j,k}= \begin{cases} a-q-2bu_{0,j} & j=k=1,\cdots, n,\\[5pt] q, &j=k+1,\\[5pt] 0, &\text{otherwise}. \end{cases} \end{eqnarray*}

\begin{eqnarray*} h_{j,k}= \begin{cases} a-q-2bu_{0,j} & j=k=1,\cdots, n,\\[5pt] q, &j=k+1,\\[5pt] 0, &\text{otherwise}. \end{cases} \end{eqnarray*}

By (2.8) and (2.9), we have $\displaystyle \frac{a-q}{b}=u_{0,1}\lt \dots \lt u_{0,n}$. Hence every diagonal entry satisfies $h_{j,j}=a-q-2bu_{0,j}\le -(a-q)\lt 0$. Since $D_{{\boldsymbol{u}}}{{\boldsymbol{H}}}(0,{\boldsymbol{u}}_0)$ is lower triangular with nonzero diagonal entries, it is invertible. The implicit function theorem then gives $d_{1}\gt 0$ and a continuously differentiable mapping $d\in [0,d_1]\mapsto {\boldsymbol{u}}(d)\in \mathbb{R}^n$ such that ${{\boldsymbol{H}}}(d,{\boldsymbol{u}}(d))=\textbf{0}$ and ${\boldsymbol{u}}(0)={\boldsymbol{u}}_0$. Moreover, ${\boldsymbol{u}}(d)\gg \textbf{0}$ by continuity, since ${\boldsymbol{u}}_0\gg \textbf{0}$. Therefore, ${\boldsymbol{u}}(d)$ is a positive equilibrium of model (1.5) for small $d$. Combined with the uniqueness in Proposition 2.4, this implies that ${\boldsymbol{u}}(d)= {\boldsymbol{u}}_{d}$. Consequently, ${\boldsymbol{u}}_{d}$ is continuously differentiable for $d \in [0,\infty )$.
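The invertibility argument above can be checked numerically. The Python sketch below is illustrative; all helper names are ours. It recomputes ${\boldsymbol u}_0$ from (2.8), builds the lower-bidiagonal matrix $(h_{j,k})$, and compares it with a central-difference Jacobian of ${\boldsymbol H}(0,\cdot)$. It then evaluates the determinant as the product of the diagonal entries $a-q-2bu_{0,j}\le-(a-q)$.

```python
import math


def u0_profile(n, a, b, q):
    """Positive solution of (2.8), built patch by patch (requires 0 < q < a)."""
    u = [(a - q) / b]
    for _ in range(1, n):
        disc = (a - q) ** 2 + 4.0 * b * q * u[-1]
        u.append(((a - q) + math.sqrt(disc)) / (2.0 * b))
    return u


def H0(u, a, b, q):
    """Components of H(0, u)."""
    res = [-q * u[0] + u[0] * (a - b * u[0])]
    for j in range(1, len(u)):
        res.append(q * (u[j - 1] - u[j]) + u[j] * (a - b * u[j]))
    return res


def jacobian_formula(u, a, b, q):
    """(h_{j,k}): diagonal a - q - 2b*u_{0,j}, subdiagonal q, zero elsewhere."""
    n = len(u)
    J = [[0.0] * n for _ in range(n)]
    for j in range(n):
        J[j][j] = a - q - 2.0 * b * u[j]
        if j > 0:
            J[j][j - 1] = q
    return J


def jacobian_fd(F, u, h=1e-6):
    """Central-difference Jacobian (exact up to rounding for quadratic F)."""
    n = len(u)
    J = [[0.0] * n for _ in range(n)]
    for k in range(n):
        up, um = list(u), list(u)
        up[k] += h
        um[k] -= h
        Fp, Fm = F(up), F(um)
        for j in range(n):
            J[j][k] = (Fp[j] - Fm[j]) / (2.0 * h)
    return J


a, b, q, n = 2.0, 1.0, 0.5, 5
u0 = u0_profile(n, a, b, q)
J = jacobian_formula(u0, a, b, q)
J_fd = jacobian_fd(lambda v: H0(v, a, b, q), u0)
det_J = math.prod(J[j][j] for j in range(n))  # triangular: product of the diagonal
print("max |formula - FD| =", max(abs(J[j][k] - J_fd[j][k]) for j in range(n) for k in range(n)))
print("det D_u H(0, u_0) =", det_J)
```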

(ii) Using arguments similar to those in (i), we see that ${\boldsymbol{u}}_d$ is continuously differentiable for $d\in (d_q^*,\infty )$. By the first decomposition in (2.6), ${\boldsymbol{u}}_d$ can be represented as in (2.10), where $\alpha _d$ and $\boldsymbol{\xi }_d$ are also continuously differentiable for $d\in (d_q^*,\infty )$. It remains to show that $\alpha _d$ and $\boldsymbol{\xi }_d$ are continuously differentiable for $d\in [d_q^*,\tilde d_1)$ with $0\lt \tilde d_1-d_q^*\ll 1$.

We first show that

![]() $ \alpha ^*\gt 0$

. A direct computation yields

$ \alpha ^*\gt 0$

. A direct computation yields

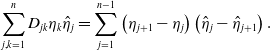

\begin{equation} \sum _{j,k=1}^nD_{jk}\eta _k\hat \eta _j =\sum _{j=1}^{n-1}\left (\eta _{j+1}-\eta _j\right )\left (\hat{\eta }_j-\hat{\eta }_{j+1}\right ). \end{equation}

\begin{equation} \sum _{j,k=1}^nD_{jk}\eta _k\hat \eta _j =\sum _{j=1}^{n-1}\left (\eta _{j+1}-\eta _j\right )\left (\hat{\eta }_j-\hat{\eta }_{j+1}\right ). \end{equation}
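Identity (2.12) is a discrete summation by parts, and it holds for any two vectors. The sketch below assumes, as (2.12) itself indicates, that $D$ is the path-graph diffusion matrix: $D_{j,j\pm1}=1$ for neighbouring patches, $D_{11}=D_{nn}=-1$, and $D_{jj}=-2$ otherwise. The Python code checks the identity on random vectors; the function names are hypothetical. It also shows why the right-hand side is positive once $\boldsymbol\eta$ is increasing and $\hat{\boldsymbol\eta}$ is decreasing.

```python
import random


def diffusion_matrix(n):
    """Assumed D: 1 on the off-diagonals for neighbouring patches,
    diagonal chosen so that every row sums to zero."""
    D = [[0.0] * n for _ in range(n)]
    for j in range(n):
        for k in (j - 1, j + 1):
            if 0 <= k < n:
                D[j][k] = 1.0
                D[j][j] -= 1.0
    return D


def lhs_212(D, eta, eta_hat):
    """sum_{j,k} D_{jk} eta_k eta_hat_j."""
    n = len(eta)
    return sum(D[j][k] * eta[k] * eta_hat[j] for j in range(n) for k in range(n))


def rhs_212(eta, eta_hat):
    """sum_{j=1}^{n-1} (eta_{j+1} - eta_j) * (eta_hat_j - eta_hat_{j+1})."""
    return sum((eta[j + 1] - eta[j]) * (eta_hat[j] - eta_hat[j + 1])
               for j in range(len(eta) - 1))


rng = random.Random(0)
n = 7
eta = [rng.uniform(-1.0, 1.0) for _ in range(n)]
eta_hat = [rng.uniform(-1.0, 1.0) for _ in range(n)]
D = diffusion_matrix(n)
print(lhs_212(D, eta, eta_hat), rhs_212(eta, eta_hat))
print(rhs_212([1, 2, 3], [3, 2, 1]))  # increasing eta, decreasing eta_hat: every term positive
```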

Noticing that

![]() $\boldsymbol{\eta }$

(respectively,

$\boldsymbol{\eta }$

(respectively,

![]() $\hat{\boldsymbol{\eta }}$

) is an eigenvector of

$\hat{\boldsymbol{\eta }}$

) is an eigenvector of

![]() $\mathcal L$

(respectively,

$\mathcal L$

(respectively,

![]() $\mathcal L^T$

) corresponding to eigenvalue

$\mathcal L^T$

) corresponding to eigenvalue

![]() $0$

, we have

$0$

, we have

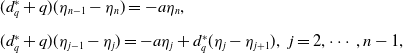

\begin{equation*} \begin{split} &(d^*_q+q)(\eta _{n-1}-\eta _n)=-a \eta _n,\\[5pt] &(d^*_q+q)(\eta _{j-1}-\eta _j)=-a\eta _j+d^*_q(\eta _{j}-\eta _{j+1}),\;\;j=2,\cdots, n-1, \end{split} \end{equation*}

\begin{equation*} \begin{split} &(d^*_q+q)(\eta _{n-1}-\eta _n)=-a \eta _n,\\[5pt] &(d^*_q+q)(\eta _{j-1}-\eta _j)=-a\eta _j+d^*_q(\eta _{j}-\eta _{j+1}),\;\;j=2,\cdots, n-1, \end{split} \end{equation*}

and

\begin{equation*} \begin{split} &(d^*_q+q)(\hat{\eta }_{2}-\hat{\eta }_1)=-a\hat{\eta }_1,\\[5pt] &(d^*_q+q)(\hat{\eta }_{j+1}-\hat{\eta }_{j})=-a\eta _j+d^*_q(\hat{\eta }_{j}-\hat{\eta }_{j-1}),\;\;j=2,\cdots, n-1, \end{split} \end{equation*}

\begin{equation*} \begin{split} &(d^*_q+q)(\hat{\eta }_{2}-\hat{\eta }_1)=-a\hat{\eta }_1,\\[5pt] &(d^*_q+q)(\hat{\eta }_{j+1}-\hat{\eta }_{j})=-a\eta _j+d^*_q(\hat{\eta }_{j}-\hat{\eta }_{j-1}),\;\;j=2,\cdots, n-1, \end{split} \end{equation*}

All entries of $\boldsymbol{\eta }$ and $\hat{\boldsymbol{\eta }}$ are positive. These identities therefore imply, by induction on $j$ (downward from $j=n$ for $\boldsymbol{\eta }$ and upward from $j=1$ for $\hat{\boldsymbol{\eta }}$), that $\eta _1\lt \eta _2\lt \dots \lt \eta _n$ and $\hat{\eta }_1\gt \hat{\eta }_2\gt \dots \gt \hat{\eta }_n$. Hence every term on the right-hand side of (2.12) is positive. Since the denominator in (2.11) is also positive, (2.11) and (2.12) yield $\alpha ^*\gt 0$.
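The following Python sketch computes, for sample parameters, the quantities that enter (2.11). It rests on several assumptions, stated here explicitly:

- $D$ is the path-graph matrix of (2.12).
- $Q$ is read off from ${\boldsymbol H}$: row $1$ is $-u_1$ and row $j\ge2$ is $u_{j-1}-u_j$.
- $\mathcal L=d_q^*D+qQ+aI$, which reproduces the two recurrences above.
- $d_q^*$ is the value of $d$ at which the principal eigenvalue of $dD+qQ+aI$ vanishes.

Power iteration on the shifted, entrywise nonnegative matrix gives the principal eigenpair, and bisection in $d$ locates $d_q^*$. The value of $\alpha^*$ depends on how $\boldsymbol\eta$ is normalised in (2.4). Here $\boldsymbol\eta$ and $\hat{\boldsymbol\eta}$ are scaled to sum to $1$, which is also our assumption. All function names are hypothetical.

```python
def model_matrices(n):
    """Assumed D (path-graph diffusion) and Q (row 1: -u_1, row j >= 2: u_{j-1} - u_j)."""
    D = [[0.0] * n for _ in range(n)]
    Q = [[0.0] * n for _ in range(n)]
    for j in range(n):
        for k in (j - 1, j + 1):
            if 0 <= k < n:
                D[j][k] = 1.0
                D[j][j] -= 1.0
        Q[j][j] = -1.0
        if j > 0:
            Q[j][j - 1] = 1.0
    return D, Q


def growth_matrix(d, n, a, q):
    """d*D + q*Q + a*I."""
    D, Q = model_matrices(n)
    return [[d * D[j][k] + q * Q[j][k] + (a if j == k else 0.0) for k in range(n)]
            for j in range(n)]


def principal_pair(M, iters=2000):
    """Power iteration on M + c*I for an irreducible Metzler matrix M.
    Returns (principal eigenvalue, positive eigenvector scaled to sum 1)."""
    n = len(M)
    c = max(abs(M[i][i]) for i in range(n)) + 1.0  # makes M + c*I entrywise nonnegative
    x = [1.0 / n] * n
    lam = 0.0
    for _ in range(iters):
        y = [sum(M[i][k] * x[k] for k in range(n)) + c * x[i] for i in range(n)]
        s = sum(y)       # x sums to 1, so s approximates (principal eigenvalue + c)
        lam = s - c
        x = [v / s for v in y]
    return lam, x


def critical_d(n, a, q, tol=1e-10):
    """Bisection for d_q^* (assumes q in (a, n*a)); at d = 0 the eigenvalue is a - q < 0."""
    lo, hi = 0.0, 1.0
    while principal_pair(growth_matrix(hi, n, a, q))[0] <= 0.0:
        hi *= 2.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if principal_pair(growth_matrix(mid, n, a, q))[0] < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


n, a, b, q = 3, 1.0, 1.0, 2.0            # q in (a, n*a)
d_star = critical_d(n, a, q)
L = growth_matrix(d_star, n, a, q)
_, eta = principal_pair(L)
_, eta_hat = principal_pair([list(col) for col in zip(*L)])  # eigenvector of L^T
D, _ = model_matrices(n)
num = sum(D[j][k] * eta[k] * eta_hat[j] for j in range(n) for k in range(n))
alpha_star = num / (b * sum(eta[j] ** 2 * eta_hat[j] for j in range(n)))
print(d_star, eta, eta_hat, alpha_star)
```

For $a=1$, $q=2$, $b=1$, $n=3$ one can check by hand that $\det(dD+qQ+aI)=(d+1)(d-1)$. Hence $d_q^*=1$, $\boldsymbol\eta\propto(1,2,3)^T$, $\hat{\boldsymbol\eta}\propto(3,2,1)^T$, and, with the sum-one normalisation, $\alpha^*=0.6$. The sketch should reproduce these values and the orderings proved above.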

By the definition of $\mathcal L$, we rewrite (2.2) as follows:

From the first decomposition in (2.6), we see that ${\boldsymbol{u}}$ in (2.13) can be represented as follows:

Plugging (2.14) into (2.13), we have

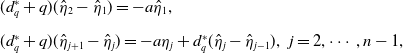

\begin{equation} \sum _{k=1}^n \mathcal L_{jk}\xi _{k}+(d-d^*_q)\left [\sum _{j=1}^nD_{jk}(\alpha \eta _k+\xi _{k})-b(\alpha \eta _j+\xi _{j})^2\right ]=0,\;\;\;\;j=1,\cdots, n. \end{equation}

\begin{equation} \sum _{k=1}^n \mathcal L_{jk}\xi _{k}+(d-d^*_q)\left [\sum _{j=1}^nD_{jk}(\alpha \eta _k+\xi _{k})-b(\alpha \eta _j+\xi _{j})^2\right ]=0,\;\;\;\;j=1,\cdots, n. \end{equation}

Denoting the left-hand side of (2.15) by $y_j$, we see from the second decomposition in (2.6) that

Therefore, ${{\boldsymbol{y}}}=\textbf{0}$ if and only if $c=0$ and ${{\boldsymbol{z}}}=\textbf{0}$. Since $\mathcal L\boldsymbol{\xi }\in X_1$, it follows that

where

\begin{equation*} \begin{split} &G_{1,j}(d,\alpha, \boldsymbol{\xi })=\sum _{k=1}^n \mathcal L_{jk}\xi _{k}+(d-d^*_q)\left [\sum _{k=1}^nD_{jk}(\alpha \eta _k+\xi _{k})-b(\alpha \eta _j+\xi _{j})^2\right ]\\[5pt] &-(d-d^*_q)G_2(d,\alpha, \boldsymbol{\xi }),\;\;\;\;j=1,\cdots, n,\\[5pt] &G_2(d,\alpha, \boldsymbol{\xi })=\sum _{j=1}^n\hat \eta _j\left [\sum _{k=1}^nD_{jk}(\alpha \eta _k+\xi _k)-b(\alpha \eta _j+\xi _j)^2\right ]. \end{split} \end{equation*}

Define ${{\boldsymbol{G}}}(d,\alpha, \boldsymbol{\xi }) \;:\; \mathbb{R}^2\times X_1\to X_1\times \mathbb{R}$ by ${{\boldsymbol{G}}}\;:\!=\;(G_{1,1},\cdots, G_{1,n},G_2)^T$. It follows that, for $d\gt d_q^*$, ${\boldsymbol{u}}$ (represented in (2.14)) is a solution of (2.13) if and only if $(\alpha, \boldsymbol{\xi })\in \mathbb{R}\times X_1$ is a solution of ${{\boldsymbol{G}}}(d,\alpha, \boldsymbol{\xi })=\textbf{0}$.

Now we consider the equivalent problem ${{\boldsymbol{G}}}(d,\alpha, \boldsymbol{\xi })=\textbf{0}$. We have $G_{1,j}(d^*_q,\alpha,\textbf{0})=0$ for every $\alpha$, and $G_2(d^*_q,\alpha ^*,\textbf{0})=0$ by (2.11). Hence ${{\boldsymbol{G}}}(d_q^*,\alpha ^*,\textbf{0})=\textbf{0}$. Let ${{\boldsymbol{T}}}\left (\check{\alpha },\check{\boldsymbol{\xi }}\right )=(T_{1,1}, \cdots, T_{1,n},T_2)^T \;:\; \mathbb{R}\times X_1 \to X_1\times \mathbb{R}$ be the Fréchet derivative of ${\boldsymbol{G}}$ with respect to $(\alpha, \boldsymbol{\xi })$ at $(d^*_q,\alpha ^*,\textbf{0})$. Then we compute that

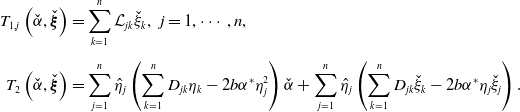

\begin{equation*} \begin{split} T_{1,j}\left (\check{\alpha }, \check{\boldsymbol{\xi }}\right )=&\sum _{k=1}^{n} \mathcal L_{jk} \check{\xi }_{k},\;\;j=1,\cdots, n,\\[5pt] T_{2}\left (\check{\alpha }, \check{\boldsymbol{\xi }}\right )=&\sum _{j=1}^{n}\hat{\eta }_j\left (\sum _{k=1}^{n}D_{jk}\eta _k-2b\alpha ^*\eta ^2_j\right )\check{\alpha }+ \sum _{j=1}^{n}\hat{\eta }_j\left (\sum _{k=1}^{n}D_{jk}\check{\xi }_{k}-2b\alpha ^*\eta _j\check \xi _j\right ).\\[5pt] \end{split} \end{equation*}

\begin{equation*} \begin{split} T_{1,j}\left (\check{\alpha }, \check{\boldsymbol{\xi }}\right )=&\sum _{k=1}^{n} \mathcal L_{jk} \check{\xi }_{k},\;\;j=1,\cdots, n,\\[5pt] T_{2}\left (\check{\alpha }, \check{\boldsymbol{\xi }}\right )=&\sum _{j=1}^{n}\hat{\eta }_j\left (\sum _{k=1}^{n}D_{jk}\eta _k-2b\alpha ^*\eta ^2_j\right )\check{\alpha }+ \sum _{j=1}^{n}\hat{\eta }_j\left (\sum _{k=1}^{n}D_{jk}\check{\xi }_{k}-2b\alpha ^*\eta _j\check \xi _j\right ).\\[5pt] \end{split} \end{equation*}

By (2.11), ${\boldsymbol{T}}$ is a bijection from $\mathbb{R} \times X_1$ to $X_1\times \mathbb{R}$. Indeed, $\ker \mathcal L=\text{span}\{\boldsymbol{\eta }\}$ meets $X_1$ only at $\textbf{0}$, and $\mathcal L\check{\boldsymbol{\xi }}\in X_1$ for $\check{\boldsymbol{\xi }}\in X_1$. So $\check{\boldsymbol{\xi }}\mapsto \mathcal L\check{\boldsymbol{\xi }}$ is a bijection of $X_1$ onto itself. Moreover, by (2.11), the coefficient of $\check{\alpha }$ in $T_2$ is $\sum _{j,k=1}^nD_{jk}\eta _k\hat \eta _j-2b\alpha ^*\sum _{j=1}^n\eta _j^2\hat \eta _j=-b\alpha ^*\sum _{j=1}^n\eta _j^2\hat \eta _j\neq 0$.

It then follows from the implicit function theorem that there exist $\tilde{d}_1\gt d^*_q$ and a continuously differentiable mapping $d\in \left [d^*_q,\tilde{d}_1\right ] \mapsto \left (\tilde{\alpha }_d, \tilde{\boldsymbol{\xi }}_d\right )\in \mathbb{R}\times X_1$ such that ${{\boldsymbol{G}}}(d,\tilde{\alpha }_d,\tilde{\boldsymbol{\xi }}_d)=\textbf{0}$, with $\tilde{\alpha }_d=\alpha ^*$ and $\tilde{\boldsymbol{\xi }}_d=\textbf{0}$ for $d=d^*_q$. Since $\alpha ^*\gt 0$ and $\boldsymbol{\eta }\gg \textbf{0}$, after shrinking $\tilde d_1$ if necessary, $(d-d^*_q)(\tilde{\alpha }_d\boldsymbol{\eta }+\tilde{\boldsymbol{\xi }}_d)$ is a positive solution of (2.13) for $d\in (d^*_q,\tilde d_1]$. By Proposition 2.4 and Eq. (2.6), the unique positive equilibrium ${\boldsymbol{u}}_d$ can be represented as in (2.10) for $d\gt d_q^*$. Hence $\alpha _d=\tilde{\alpha }_d$ and ${\boldsymbol{\xi }}_d=\tilde{\boldsymbol{\xi }}_d$ for $d\in \left (d^*_q,\tilde{d}_1\right ]$. Therefore, $\alpha _d$ and ${\boldsymbol{\xi }}_d$ are continuously differentiable for $d\in \left [d^*_q,\infty \right )$ if we define $(\alpha _d,\boldsymbol{\xi }_d)=\left (\alpha ^*,\textbf{0}\right )$ for $d=d^*_q$.
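Representation (2.10) says that, just above $d_q^*$, ${\boldsymbol u}_d\approx(d-d_q^*)\alpha^*\boldsymbol\eta$. A quick numerical sanity check of this uses the following assumptions: $D$ is the path-graph matrix of (2.12), and $Q$ is read off from ${\boldsymbol H}$ (row $1$ is $-u_1$, row $j\ge2$ is $u_{j-1}-u_j$). For the sample case $a=1$, $q=2$, $b=1$, $n=3$, a hand computation gives $d_q^*=1$, $\boldsymbol\eta\propto(1,2,3)^T$ and $\hat{\boldsymbol\eta}\propto(3,2,1)^T$. The check solves ${\boldsymbol H}(d,{\boldsymbol u})=\textbf 0$ by Newton's method and compares ${\boldsymbol u}_d/(d-d_q^*)$ with $\alpha^*\boldsymbol\eta$. The product $\alpha^*\boldsymbol\eta$ does not depend on how $\boldsymbol\eta$ is normalised. The helper names in the Python sketch are ours.

```python
def model_matrices(n):
    """Assumed D (path-graph diffusion) and Q (row 1: -u_1, row j >= 2: u_{j-1} - u_j)."""
    D = [[0.0] * n for _ in range(n)]
    Q = [[0.0] * n for _ in range(n)]
    for j in range(n):
        for k in (j - 1, j + 1):
            if 0 <= k < n:
                D[j][k] = 1.0
                D[j][j] -= 1.0
        Q[j][j] = -1.0
        if j > 0:
            Q[j][j - 1] = 1.0
    return D, Q


def solve_linear(A, rhs):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(A)
    M = [list(A[i]) + [rhs[i]] for i in range(n)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (M[i][n] - sum(M[i][k] * x[k] for k in range(i + 1, n))) / M[i][i]
    return x


def equilibrium(d, a, b, q, u_init, tol=1e-14, max_iter=50):
    """Newton's method for H(d, u) = d*D u + q*Q u + u*(a - b*u) = 0."""
    n = len(u_init)
    D, Q = model_matrices(n)
    u = list(u_init)
    for _ in range(max_iter):
        F = [sum((d * D[j][k] + q * Q[j][k]) * u[k] for k in range(n)) + u[j] * (a - b * u[j])
             for j in range(n)]
        J = [[d * D[j][k] + q * Q[j][k] + (a - 2.0 * b * u[j] if j == k else 0.0)
              for k in range(n)] for j in range(n)]
        step = solve_linear(J, [-f for f in F])
        u = [u[j] + step[j] for j in range(n)]
        if max(abs(s) for s in step) < tol:
            break
    return u


a, b, q = 1.0, 1.0, 2.0
d_star = 1.0                                      # hand computation for these parameters
eta, eta_hat = [1.0, 2.0, 3.0], [3.0, 2.0, 1.0]   # kernels of L and L^T, unnormalised
D, _ = model_matrices(3)
num = sum(D[j][k] * eta[k] * eta_hat[j] for j in range(3) for k in range(3))
alpha_star = num / (b * sum(eta[j] ** 2 * eta_hat[j] for j in range(3)))  # formula (2.11)
leading = [alpha_star * e for e in eta]           # alpha^* * eta is normalisation-free

errors = {}
for eps in (1e-2, 1e-3):
    u = equilibrium(d_star + eps, a, b, q, [eps * w for w in leading])
    errors[eps] = max(abs(u[j] / eps - leading[j]) for j in range(3))
    print(f"d - d* = {eps:g}: u_d = {u}, max|u_d/(d-d*) - alpha* eta| = {errors[eps]:.2e}")
```

The discrepancy should shrink roughly in proportion to $d-d_q^*$, consistent with $\alpha_d\to\alpha^*$ and $\boldsymbol\xi_d\to\textbf 0$.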

Now, we consider the case

![]() $d\gg 1$

. Clearly,

$d\gg 1$

. Clearly,

![]() ${\boldsymbol{u}}_d$

satisfies

${\boldsymbol{u}}_d$

satisfies

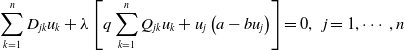

\begin{equation} \displaystyle \ \sum _{k=1}^{n} D_{jk} u_k+ \lambda \left [q\sum _{k=1}^{n} Q_{jk}u_k+u_j\left (a- b u_j\right )\right ]=0,\;\;j=1,\cdots, n\\[5pt] \end{equation}

\begin{equation} \displaystyle \ \sum _{k=1}^{n} D_{jk} u_k+ \lambda \left [q\sum _{k=1}^{n} Q_{jk}u_k+u_j\left (a- b u_j\right )\right ]=0,\;\;j=1,\cdots, n\\[5pt] \end{equation}

with

![]() $d=1/\lambda$

. To avoid confusion, we denote

$d=1/\lambda$

. To avoid confusion, we denote

![]() ${\boldsymbol{u}}_d$

by

${\boldsymbol{u}}_d$

by

![]() ${\boldsymbol{u}}^\lambda$

for the case

${\boldsymbol{u}}^\lambda$

for the case

![]() $d\gg 1$

. Then the properties of

$d\gg 1$

. Then the properties of

![]() ${\boldsymbol{u}}_d$

for

${\boldsymbol{u}}_d$

for

![]() $d\gg 1$

is equivalent to

$d\gg 1$

is equivalent to

![]() ${\boldsymbol{u}}^\lambda$

for

${\boldsymbol{u}}^\lambda$

for

![]() $0\lt \lambda \ll 1$

. Clearly,

$0\lt \lambda \ll 1$

. Clearly,

![]() $s(D)=0$

is the principal eigenvalue of

$s(D)=0$

is the principal eigenvalue of

![]() $D$

, and a corresponding eigenvector is

$D$

, and a corresponding eigenvector is

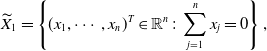

Define

\begin{equation} \widetilde{X}_1=\left \{(x_1,\cdots, x_n)^T \in \mathbb{R}^n\;:\;\sum _{j=1}^{n}x_j=0\right \}. \end{equation}

Then $\mathbb{R}^n$ also has the following decomposition:

Proposition 2.6.

Suppose that $q\in (0,na)$. Let ${\boldsymbol{u}}^\lambda$ be the unique positive solution of (2.16), and define ${\boldsymbol{u}}^0=(u^{0}_1,\cdots, u^{0}_1)^T$, where $u^0_1=\displaystyle \frac{na-q}{nb}$.

Then the following statements hold:

-

(i)

${\boldsymbol{u}}^{\lambda }=\left (u^\lambda _1,u^\lambda _2,\cdots, u^\lambda _n\right )$

is continuously differentiable for

${\boldsymbol{u}}^{\lambda }=\left (u^\lambda _1,u^\lambda _2,\cdots, u^\lambda _n\right )$

is continuously differentiable for

$\lambda \in [0,\lambda _q^*)$

;

$\lambda \in [0,\lambda _q^*)$

; -

(ii)

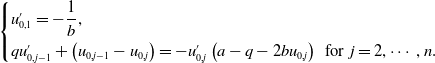

(2.20) \begin{equation} \sum _{j=1}^{n}\left (u^{\lambda }_j\right )'\big |_{\lambda =0}=-\frac{q^2(n+1)(n-1)}{6b}\lt 0, \end{equation}

\begin{equation} \sum _{j=1}^{n}\left (u^{\lambda }_j\right )'\big |_{\lambda =0}=-\frac{q^2(n+1)(n-1)}{6b}\lt 0, \end{equation}

and the total population size

$\sum _{j=1}^n u^{\lambda }_j$

is strictly decreasing in

$\sum _{j=1}^n u^{\lambda }_j$

is strictly decreasing in

$\lambda \in (0,\epsilon )$

with

$\lambda \in (0,\epsilon )$

with

$\epsilon \ll 1$

.

$\epsilon \ll 1$

.

Here,

![]() $'$

is the derivative with respect to

$'$

is the derivative with respect to

![]() $\lambda$

and

$\lambda$

and

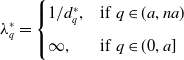

\begin{equation*} \lambda _q^*=\begin{cases} 1/d_q^*,&\text{if}\;\; q\in (a,na)\\[5pt] \infty, &\text{if}\;\; q\in (0,a] \end{cases} \end{equation*}

\begin{equation*} \lambda _q^*=\begin{cases} 1/d_q^*,&\text{if}\;\; q\in (a,na)\\[5pt] \infty, &\text{if}\;\; q\in (0,a] \end{cases} \end{equation*}

with

![]() $d_q^*$

defined in Proposition 2.5

.

$d_q^*$

defined in Proposition 2.5

.

Proof. (i) By Proposition 2.5, ${\boldsymbol{u}}^{\lambda }$ is continuously differentiable for $\lambda \in (0,\lambda _q^*)$. It remains to show that ${\boldsymbol{u}}^{\lambda }$ is continuously differentiable for $\lambda \in [0,\tilde \lambda _1)$ with $0\lt \tilde \lambda _1\ll 1$. From the decomposition in (2.19), we see that ${\boldsymbol{u}}=(u_1,\cdots, u_n)^T$ in (2.16) can be represented as follows:

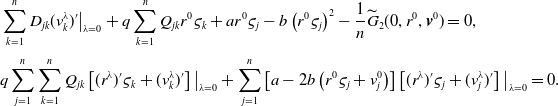

Plugging (2.21) into (2.16), we have

\begin{equation} \displaystyle \sum _{k=1}^{n} D_{jk} v_k+ \lambda \left [q\sum _{k=1}^{n} Q_{jk}\left (r \varsigma _k + v_k\right )+\left (r \varsigma _j + v_j\right )\left (a- b \left (r\varsigma _j + v_j\right )\right )\right ]=0 \end{equation}

\begin{equation} \displaystyle \sum _{k=1}^{n} D_{jk} v_k+ \lambda \left [q\sum _{k=1}^{n} Q_{jk}\left (r \varsigma _k + v_k\right )+\left (r \varsigma _j + v_j\right )\left (a- b \left (r\varsigma _j + v_j\right )\right )\right ]=0 \end{equation}

for $j=1,\cdots, n$. Denoting the left-hand side of (2.22) by $\widetilde y_j$, we see from (2.19) that

Therefore, $\widetilde{{{\boldsymbol{y}}}}=\textbf{0}$ if and only if $\widetilde c=0$ and $\widetilde{{{\boldsymbol{z}}}}=\textbf{0}$. This combined with (2.22) implies that

where

\begin{equation*} \begin{split} &\widetilde{G}_{1,j}(\lambda, r,{{\boldsymbol{v}}})=\sum _{k=1}^n D_{jk}v_{k}+ \lambda \left [q\sum _{k=1}^{n} Q_{jk}\left (r\varsigma _k + v_k\right )+a\left (r \varsigma _j +v_j\right )- b \left (r\varsigma _j + v_j\right )^2\right ]\\[3pt] &-\frac{\lambda }{n}\widetilde{G}_2(\lambda, r,{{\boldsymbol{v}}}),\;\;\;\;j=1,\cdots, n,\\[3pt] &\widetilde{G}_2(\lambda, r,{{\boldsymbol{v}}})=\sum _{j=1}^n\left [q\sum _{k=1}^{n} Q_{jk}\left (r\varsigma _k + v_k\right )+a\left (r\varsigma _j + v_j\right )- b \left (r\varsigma _j + v_j\right )^2\right ]. \end{split} \end{equation*}
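Subtracting $\frac{\lambda}{n}\widetilde G_2$ in $\widetilde G_{1,j}$ projects onto $\widetilde X_1$. Every column of $D$ sums to zero, so the components of the left-hand side of (2.22) add up to $\lambda\widetilde G_2$. Removing $1/n$ of that total from each component therefore lands in $\widetilde X_1$. The small Python check below assumes $D$ is the path-graph matrix of (2.12) and uses random stand-ins (hypothetical data) for the bracketed reaction terms.

```python
import random


def diffusion_matrix(n):
    """Assumed path-graph D: symmetric with zero row (hence zero column) sums."""
    D = [[0.0] * n for _ in range(n)]
    for j in range(n):
        for k in (j - 1, j + 1):
            if 0 <= k < n:
                D[j][k] = 1.0
                D[j][j] -= 1.0
    return D


rng = random.Random(1)
n, lam = 6, 0.3
D = diffusion_matrix(n)
raw = [rng.uniform(-1.0, 1.0) for _ in range(n)]
mean = sum(raw) / n
v = [x - mean for x in raw]                           # an element of X~_1
bracket = [rng.uniform(-1.0, 1.0) for _ in range(n)]  # stand-in for the [...] term in (2.22)
y = [sum(D[j][k] * v[k] for k in range(n)) + lam * bracket[j] for j in range(n)]
G2 = sum(bracket)                                     # plays the role of G~_2
G1 = [y[j] - lam / n * G2 for j in range(n)]          # plays the role of G~_{1,j}
print(sum(y), lam * G2, sum(G1))
```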

Define $ \widetilde{{{\boldsymbol{G}}}}(\lambda, r,{{\boldsymbol{v}}}) \;:\; \mathbb{R}^2\times \widetilde{X}_1\to \widetilde{X}_1\times \mathbb{R}$ by $ \widetilde{{{\boldsymbol{G}}}}\;:\!=\;(\widetilde{G}_{1,1},\cdots, \widetilde{G}_{1,n},\widetilde{G}_2)^T$. It follows that, for $\lambda \in (0,\lambda _q^*)$, ${\boldsymbol{u}}$ (represented in (2.21)) is a solution of (2.16) if and only if $(r,{{\boldsymbol{v}}})\in \mathbb{R}\times \widetilde{X}_1$ is a solution of $\widetilde{{{\boldsymbol{G}}}}(\lambda, r,{{\boldsymbol{v}}})=\textbf{0}$. Then, using arguments similar to those in the proof of Proposition 2.5, we can show that there exist $\tilde{\lambda }_1\gt 0$ and a continuously differentiable mapping $\lambda \in \left [0,\tilde{\lambda }_1\right ] \mapsto \left (r^\lambda, {{\boldsymbol{v}}}^\lambda \right )\in \mathbb{R}\times \widetilde X_1$ such that

This, combined with (2.21), implies that, for $\lambda \in (0,\tilde \lambda _1)$,

Therefore, ${\boldsymbol{u}}^{\lambda }$ is continuously differentiable for $\lambda \in [0,\tilde \lambda _1]$ if we define ${\boldsymbol{u}}^0=r^0\boldsymbol \varsigma$.

(ii) Now we compute

![]() $\sum _{j=1}^n\left ( u^{\lambda }_j\right )'\big |_{\lambda =0}$

. Differentiating (2.23) with respect to

$\sum _{j=1}^n\left ( u^{\lambda }_j\right )'\big |_{\lambda =0}$

. Differentiating (2.23) with respect to

![]() $\lambda$

at

$\lambda$

at

![]() $\lambda =0$

and noticing that

$\lambda =0$

and noticing that

![]() ${{\boldsymbol{v}}}^0=\textbf{0}$

, we have

${{\boldsymbol{v}}}^0=\textbf{0}$

, we have

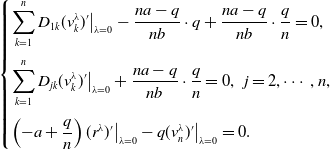

\begin{equation*} \begin{split} &\displaystyle \sum _{k=1}^n D_{jk}(v^\lambda _{k})'\big |_{\lambda =0}+q\sum _{k=1}^{n} Q_{jk}r^0\varsigma _k+ar^0 \varsigma _j - b \left (r^0\varsigma _j \right )^2-\frac{1}{n}\widetilde{G}_2(0,r^0,{{\boldsymbol{v}}}^0)=0,\\[3pt] &\displaystyle q\sum _{j=1}^n \sum _{k=1}^n Q_{jk}\left [(r^\lambda )'\varsigma _k+(v_k^\lambda )'\right ]\big |_{\lambda =0}+ \sum _{j=1}^n\left [a-2b\left (r^0\varsigma _j+v^0_j\right )\right ]\left [(r^\lambda )'\varsigma _j+(v_j^\lambda )'\right ]\big |_{\lambda =0}=0. \end{split} \end{equation*}

\begin{equation*} \begin{split} &\displaystyle \sum _{k=1}^n D_{jk}(v^\lambda _{k})'\big |_{\lambda =0}+q\sum _{k=1}^{n} Q_{jk}r^0\varsigma _k+ar^0 \varsigma _j - b \left (r^0\varsigma _j \right )^2-\frac{1}{n}\widetilde{G}_2(0,r^0,{{\boldsymbol{v}}}^0)=0,\\[3pt] &\displaystyle q\sum _{j=1}^n \sum _{k=1}^n Q_{jk}\left [(r^\lambda )'\varsigma _k+(v_k^\lambda )'\right ]\big |_{\lambda =0}+ \sum _{j=1}^n\left [a-2b\left (r^0\varsigma _j+v^0_j\right )\right ]\left [(r^\lambda )'\varsigma _j+(v_j^\lambda )'\right ]\big |_{\lambda =0}=0. \end{split} \end{equation*}

Noting that $r^0=\frac{na-q}{b}$, $({{\boldsymbol{v}}}^\lambda )'\in \widetilde{X}_1$ and $\widetilde{G}_2(0,r^0,{{\boldsymbol{v}}}^0)=0$, we have

\begin{equation*} \begin{cases} \displaystyle \sum _{k=1}^n D_{1k}(v^\lambda _{k})'\big |_{\lambda =0}-\frac{na-q}{nb}\cdot q+\frac{na-q}{nb}\cdot \frac{q}{n}=0,\\[15pt] \displaystyle \sum _{k=1}^n D_{jk}(v^\lambda _{k})'\big |_{\lambda =0}+\frac{na-q}{nb}\cdot \frac{q}{n}=0,\;\;j=2,\cdots, n,\\[15pt] \displaystyle \left (-a+\frac{q}{n}\right )(r^\lambda )'\big |_{\lambda =0}-q(v^\lambda _{n})'\big |_{\lambda =0}=0. \end{cases} \end{equation*}

By a tedious computation (see Proposition 5.4 in the appendix), we obtain that

This, combined with (2.24), implies that

This completes the proof.

Remark 2.7.

Note that $\lambda =1/d$. Then, by Proposition 2.6 (ii), the total population size $\sum _{j=1}^n u_{d,j}$ of the positive equilibrium ${\boldsymbol{u}}_d$ of (2.2) is strictly increasing in $d\in [\delta, \infty )$ with $\delta \gg 1$.
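Remark 2.7 can be illustrated numerically. The Python sketch below assumes, as read off from (2.12) and ${\boldsymbol H}$, that $D$ is the path-graph matrix and that $Q$ has rows $-u_1$ and $u_{j-1}-u_j$. It solves (2.16) by Newton's method for several small $\lambda=1/d$, starting from the constant vector with entries $(na-q)/(nb)$, and records $\sum_j u^\lambda_j$. One should see the total increase with $d$ and approach $(na-q)/b$. The parameter values and helper names are ours, chosen for illustration.

```python
def model_matrices(n):
    """Assumed D (path-graph diffusion) and Q (row 1: -u_1, row j >= 2: u_{j-1} - u_j)."""
    D = [[0.0] * n for _ in range(n)]
    Q = [[0.0] * n for _ in range(n)]
    for j in range(n):
        for k in (j - 1, j + 1):
            if 0 <= k < n:
                D[j][k] = 1.0
                D[j][j] -= 1.0
        Q[j][j] = -1.0
        if j > 0:
            Q[j][j - 1] = 1.0
    return D, Q


def solve_linear(A, rhs):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(A)
    M = [list(A[i]) + [rhs[i]] for i in range(n)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (M[i][n] - sum(M[i][k] * x[k] for k in range(i + 1, n))) / M[i][i]
    return x


def equilibrium_lambda(lam, a, b, q, u_init, tol=1e-12, max_iter=50):
    """Newton's method for (2.16): D u + lam*(q*Q u + u*(a - b*u)) = 0."""
    n = len(u_init)
    D, Q = model_matrices(n)
    u = list(u_init)
    for _ in range(max_iter):
        F = [sum((D[j][k] + lam * q * Q[j][k]) * u[k] for k in range(n))
             + lam * u[j] * (a - b * u[j]) for j in range(n)]
        J = [[D[j][k] + lam * q * Q[j][k] + (lam * (a - 2.0 * b * u[j]) if j == k else 0.0)
              for k in range(n)] for j in range(n)]
        step = solve_linear(J, [-f for f in F])
        u = [u[j] + step[j] for j in range(n)]
        if max(abs(s) for s in step) < tol:
            break
    return u


n, a, b, q = 3, 1.0, 1.0, 1.0                 # q in (0, n*a)
u_const = [(n * a - q) / (n * b)] * n          # constant limit profile as d -> infinity
lams = [0.004, 0.002, 0.001, 0.0005]           # lambda = 1/d, i.e. d = 250, ..., 2000
totals = []
for lam in lams:
    u = equilibrium_lambda(lam, a, b, q, u_const)
    totals.append(sum(u))
    print(f"d = {1 / lam:6.0f}   total population = {totals[-1]:.8f}")
```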

3. Eigenvalue problem

Linearising model (1.5) at

![]() ${\boldsymbol{u}}_d$

, we have

${\boldsymbol{u}}_d$

, we have

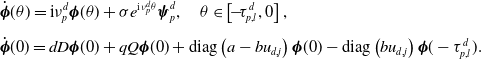

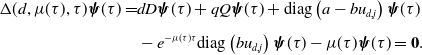

It follows from [Reference Hale21, Chapter 7] that the infinitesimal generator

![]() $A_{\tau }(d)$

of the solution semigroup of (3.1) is defined by:

$A_{\tau }(d)$

of the solution semigroup of (3.1) is defined by:

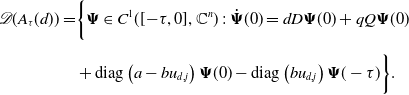

with the domain

\begin{equation} {\begin{aligned} \mathscr{D}(A_\tau (d)) =&\bigg \{\boldsymbol{\Psi }\in C^1([\!-\!\tau, 0],\mathbb{C}^n)\;:\;\dot{\boldsymbol{\Psi }}(0)=dD \boldsymbol{\Psi }(0)+ q Q \boldsymbol{\Psi }(0)\\[5pt] &+\text{diag}\left (a- b u_{d,j}\right )\boldsymbol{\Psi }(0)- \text{diag}\left ( b u_{d,j}\right )\boldsymbol{\Psi }(-\tau )\bigg \}. \end{aligned}} \end{equation}

\begin{equation} {\begin{aligned} \mathscr{D}(A_\tau (d)) =&\bigg \{\boldsymbol{\Psi }\in C^1([\!-\!\tau, 0],\mathbb{C}^n)\;:\;\dot{\boldsymbol{\Psi }}(0)=dD \boldsymbol{\Psi }(0)+ q Q \boldsymbol{\Psi }(0)\\[5pt] &+\text{diag}\left (a- b u_{d,j}\right )\boldsymbol{\Psi }(0)- \text{diag}\left ( b u_{d,j}\right )\boldsymbol{\Psi }(-\tau )\bigg \}. \end{aligned}} \end{equation}

Then $\mu \in \sigma _p(A_\tau (d))$ (that is, $\mu$ is an eigenvalue of $A_\tau (d)$) if and only if there exists $\boldsymbol{\psi }=(\psi _1,\cdots, \psi _n)^T(\neq \textbf{0}) \in \mathbb{C}^n$ such that $\triangle (d,\mu, \tau )\boldsymbol{\psi }=\textbf{0}$, where the matrix

To determine the distribution of the eigenvalues of $A_\tau (d)$, one needs to consider whether

By Proposition 2.4, we have

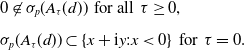

\begin{equation*} \begin{split} &0\not \in \sigma _p(A_\tau (d)) \;\;\text{for all}\;\;\tau \ge 0,\\[5pt] &\sigma _p(A_\tau (d))\subset \{x+\textrm{i} y:x\lt 0\}\;\; \text{for}\;\; \tau =0. \end{split} \end{equation*}

\begin{equation*} \begin{split} &0\not \in \sigma _p(A_\tau (d)) \;\;\text{for all}\;\;\tau \ge 0,\\[5pt] &\sigma _p(A_\tau (d))\subset \{x+\textrm{i} y:x\lt 0\}\;\; \text{for}\;\; \tau =0. \end{split} \end{equation*}
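Substituting $\boldsymbol\Psi(\theta)=e^{\mu\theta}\boldsymbol\psi$ into the domain condition of $A_\tau(d)$ gives the eigenvalue relation $\mu\boldsymbol\psi=[dD+qQ+\text{diag}(a-bu_{d,j})-e^{-\mu\tau}\text{diag}(bu_{d,j})]\boldsymbol\psi$. In the sketch below we take $\triangle(d,\mu,\tau)$ to be the matrix in brackets minus $\mu I$. This sign convention is our assumption, and it does not affect where $\det\triangle$ vanishes. At $\mu=0$ the delay factor equals $1$, so $\triangle(d,0,\tau)$ is the Jacobian of the equilibrium equations and cannot depend on $\tau$. This is the mechanism behind the first line of the display above.

The Python code rests on two further assumptions: $D$ is the path-graph matrix of (2.12), and $Q$ is read off from ${\boldsymbol H}$. It computes ${\boldsymbol u}_d$ for a sample large $d$ by Newton's method and evaluates $\det\triangle(d,0,\tau)$ for several $\tau$. All helper names are hypothetical.

```python
import cmath


def model_matrices(n):
    """Assumed D (path-graph diffusion) and Q (row 1: -u_1, row j >= 2: u_{j-1} - u_j)."""
    D = [[0.0] * n for _ in range(n)]
    Q = [[0.0] * n for _ in range(n)]
    for j in range(n):
        for k in (j - 1, j + 1):
            if 0 <= k < n:
                D[j][k] = 1.0
                D[j][j] -= 1.0
        Q[j][j] = -1.0
        if j > 0:
            Q[j][j - 1] = 1.0
    return D, Q


def det(A):
    """Determinant by Gaussian elimination with partial pivoting (complex entries allowed)."""
    n = len(A)
    M = [list(row) for row in A]
    result = 1.0 + 0.0j
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        if M[piv][col] == 0:
            return 0.0 + 0.0j
        if piv != col:
            M[col], M[piv] = M[piv], M[col]
            result = -result
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n):
                M[r][c] -= f * M[col][c]
        result *= M[col][col]
    return result


def equilibrium(d, a, b, q, u_init, tol=1e-12, max_iter=50):
    """Newton's method for d*D u + q*Q u + u*(a - b*u) = 0 (real arithmetic)."""
    n = len(u_init)
    D, Q = model_matrices(n)
    u = list(u_init)
    for _ in range(max_iter):
        F = [sum((d * D[j][k] + q * Q[j][k]) * u[k] for k in range(n)) + u[j] * (a - b * u[j])
             for j in range(n)]
        J = [[d * D[j][k] + q * Q[j][k] + (a - 2.0 * b * u[j] if j == k else 0.0)
              for k in range(n)] for j in range(n)]
        # J is nonsingular at the equilibrium, so Cramer-free elimination via det-style pivoting
        step = _solve(J, [-f for f in F])
        u = [u[j] + step[j] for j in range(n)]
        if max(abs(s) for s in step) < tol:
            break
    return u


def _solve(A, rhs):
    """Small dense solve by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(A[i]) + [rhs[i]] for i in range(n)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (M[i][n] - sum(M[i][k] * x[k] for k in range(i + 1, n))) / M[i][i]
    return x


def delta_matrix(d, mu, tau, u, a, b, q):
    """Assumed characteristic matrix: dD + qQ + diag(a - b u_j) - e^{-mu tau} diag(b u_j) - mu I."""
    n = len(u)
    D, Q = model_matrices(n)
    delay = cmath.exp(-mu * tau)
    return [[d * D[j][k] + q * Q[j][k]
             + ((a - b * u[j]) - delay * b * u[j] - mu if j == k else 0.0)
             for k in range(n)] for j in range(n)]


n, a, b, q, d = 3, 1.0, 1.0, 1.0, 20.0
u_d = equilibrium(d, a, b, q, [(n * a - q) / (n * b)] * n)
dets = [det(delta_matrix(d, 0.0, tau, u_d, a, b, q)) for tau in (0.0, 1.0, 10.0)]
print(dets)
print(det(delta_matrix(d, 0.5j, 2.0, u_d, a, b, q)))  # sample value on the imaginary axis
```

Sampling the same function along $\mu=\textrm{i}\nu$ is one way to look for purely imaginary roots. This illustrates the eigenvalue problem only; it does not reproduce the paper's analysis of it.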

In fact, if $0\in \sigma _p(A_{\tau _0}(d))$ for some $\tau _0\ge 0$, then $0$ is an eigenvalue of the matrix

which contradicts Proposition 2.4. By (3.4), we see that $\textrm{i}\nu \,(\nu \gt 0)\in \sigma _p(A_\tau (d))$ for some $\tau \gt 0$ if and only if

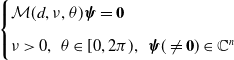

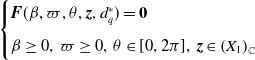

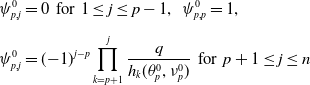

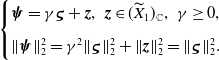

\begin{equation} \begin{cases} \mathcal M(d,\nu, \theta )\boldsymbol{\psi }=\textbf{0}\\[5pt] \nu \gt 0,\;\;\theta \in [0,2\pi ),\;\;\boldsymbol{\psi }(\neq \textbf{0}) \in \mathbb{C}^n \end{cases} \end{equation}

\begin{equation} \begin{cases} \mathcal M(d,\nu, \theta )\boldsymbol{\psi }=\textbf{0}\\[5pt] \nu \gt 0,\;\;\theta \in [0,2\pi ),\;\;\boldsymbol{\psi }(\neq \textbf{0}) \in \mathbb{C}^n \end{cases} \end{equation}

admits a solution $(\nu, \theta, \boldsymbol{\psi })$, where the matrix

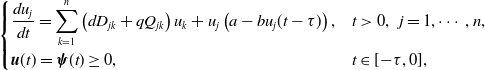

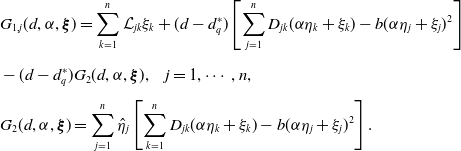

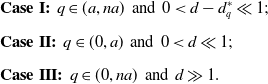

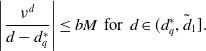

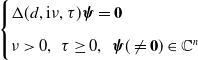

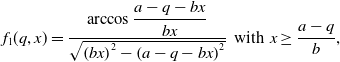

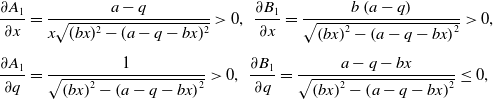

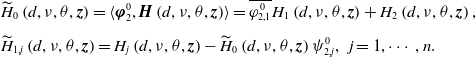

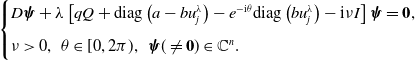

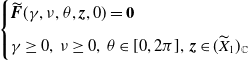

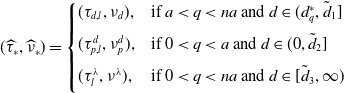

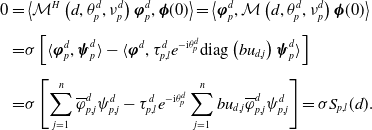

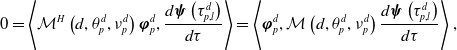

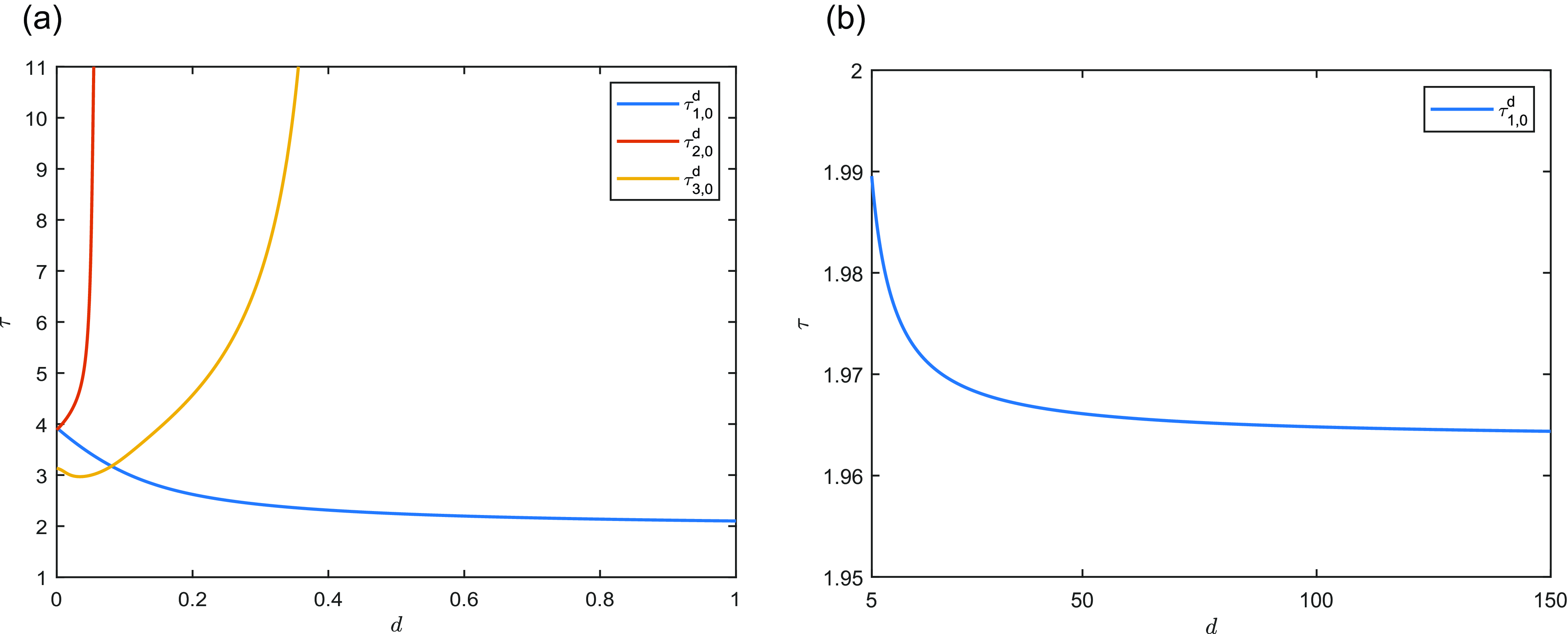

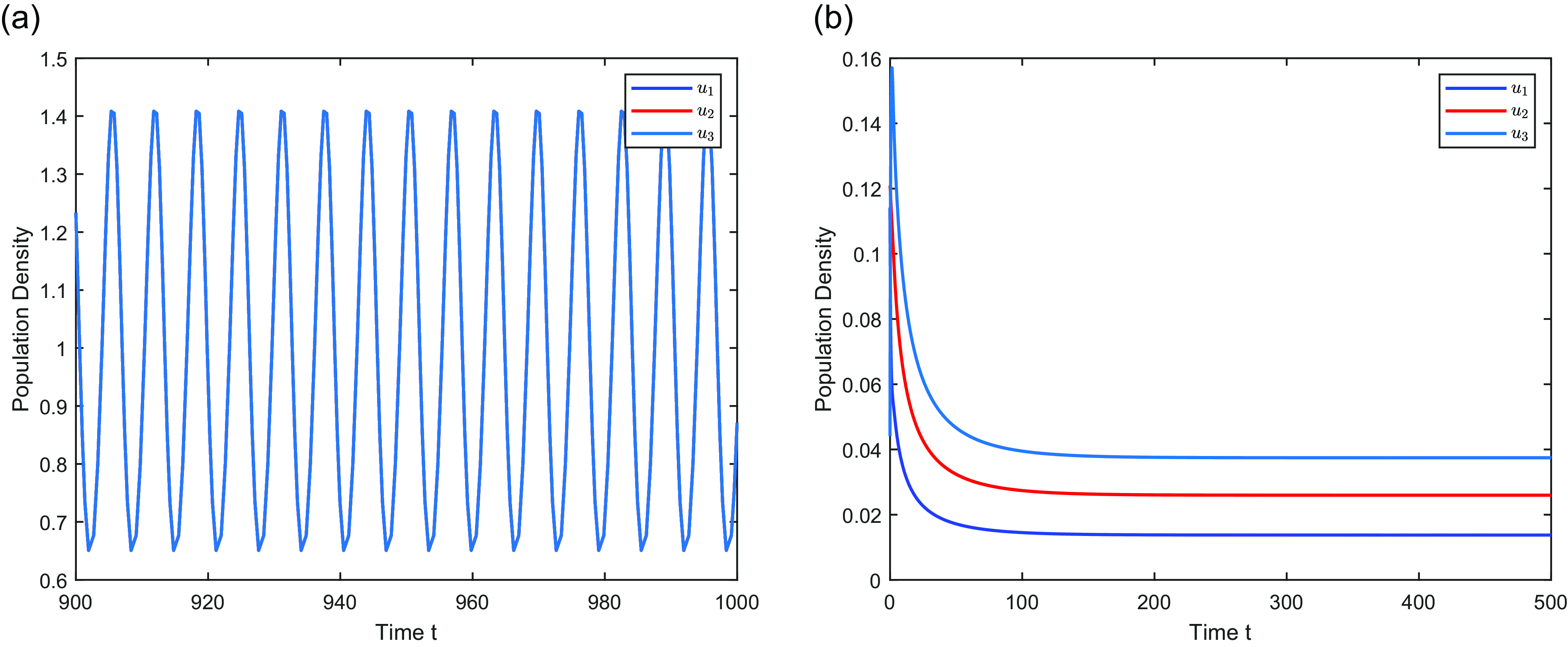

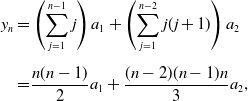
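The equivalence above trades the delay $\tau$ for a phase $\theta\in[0,2\pi)$. Since the definition of $\mathcal M(d,\nu,\theta)$ is not reproduced here, the sketch below assumes the usual form of this reduction, in which $\theta$ stands for $\nu\tau$ modulo $2\pi$, so that $e^{-\textrm{i}\nu\tau}=e^{-\textrm{i}\theta}$. Under that assumption, each solution $(\nu,\theta,\boldsymbol\psi)$ of (3.5) yields the sequence of positive delays $\tau_k=(\theta+2k\pi)/\nu$. The function name `critical_delays` and the sample values are illustrative only.

```python
import cmath
import math
from typing import List


def critical_delays(nu: float, theta: float, count: int) -> List[float]:
    """Return the first `count` positive delays tau with nu*tau = theta (mod 2*pi).

    Hypothetical reduction: theta in [0, 2*pi) is assumed to encode nu*tau
    modulo 2*pi, i.e. exp(-1j*nu*tau) == exp(-1j*theta).
    """
    if nu <= 0:
        raise ValueError("nu must be positive")
    if not 0 <= theta < 2 * math.pi:
        raise ValueError("theta must lie in [0, 2*pi)")
    delays: List[float] = []
    k = 0
    while len(delays) < count:
        tau = (theta + 2 * k * math.pi) / nu
        if tau > 0:  # only strictly positive delays are admissible
            delays.append(tau)
        k += 1
    return delays


if __name__ == "__main__":
    nu, theta = 2.0, math.pi / 2  # illustrative values, not taken from the paper
    for tau in critical_delays(nu, theta, 3):
        # Each delay reproduces the same phase factor exp(-i*theta).
        mismatch = abs(cmath.exp(-1j * nu * tau) - cmath.exp(-1j * theta))
        print(f"tau = {tau:.6f}, phase mismatch = {mismatch:.1e}")
```

When $\theta=0$ the value $k=0$ gives $\tau=0$, which is skipped because the equivalence concerns $\tau\gt 0$.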

It follows from Proposition 2.5 that the properties of ${\boldsymbol{u}}_d$ differ among the following three cases (see Figure 3):

\begin{equation*} \begin{split} &{\textbf{Case I:}}\;\;q\in (a,na)\;\;\text{and}\;\;0\lt d-d_q^*\ll 1;\\[5pt] &{\textbf{Case II:}}\;\;q\in (0,a)\;\;\text{and}\;\;0\lt d\ll 1;\\[5pt] &{\textbf{Case III:}}\;\;q\in (0,na)\;\;\text{and}\;\;d\gg 1. \end{split} \end{equation*}

Accordingly, the analysis of the eigenvalue problem (3.5) below is divided into three cases.

Figure 3. Diagram for parameter ranges of Cases I–III.

3.1. A priori estimates

In this subsection, we give a priori estimates for solutions of (3.5).

Lemma 3.1.

Let $\left (\nu ^{d}, \theta ^{d}, \boldsymbol{\psi }^{d}\right )$ be a solution of (3.5). Then the following statements hold:

- (i) For fixed $q\in (a,na)$, $\left |\displaystyle \frac{\nu ^{d}}{d-d^*_q}\right |$ is bounded for $d\in (d_q^*,\tilde d_1]$ with $0\lt \tilde d_1-d_q^*\ll 1$;
- (ii) For fixed $q\in (0,a)$, $|\nu ^{d}|$ is bounded for $d \in (0,\tilde d_2)$ with $0\lt \tilde d_2\ll 1$;
- (iii) For fixed $q\in (0,na)$, $|\nu ^{d}|$ is bounded for $d\in (\tilde d_3,\infty )$ with $\tilde d_3\gg 1$.

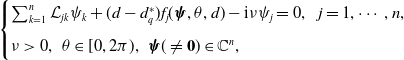

Proof. We prove only (i); (ii) and (iii) can be proved similarly. Define the diagonal matrix $\varrho \;:\!=\;\left (\varrho _{jk}\right )$ with

\begin{eqnarray*} \varrho _{jk}= \begin{cases} \left (\displaystyle \frac{d}{d+q}\right )^{j-1}, & j=k=1,\cdots, n,\\[5pt] 0, &\text{otherwise}. \end{cases} \end{eqnarray*}
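The weights $\varrho_{jj}=(d/(d+q))^{j-1}$ are what make $\varrho(dD+qQ)$ symmetric, which the proof uses to conclude that $\mathcal S$ is real. Because $D$ and $Q$ are not restated in this section, the sketch below assumes a hypothetical tridiagonal form for $dD+qQ$: entry $d$ at position $(j,j+1)$ and entry $d+q$ at position $(j+1,j)$. Under that assumption $\varrho_{j+1}(d+q)=\left(\frac{d}{d+q}\right)^{j}(d+q)=d\,\varrho_j$, so the off-diagonal entries of $\varrho(dD+qQ)$ match. The sketch verifies this in exact rational arithmetic and then checks that $\overline{\boldsymbol\psi}^T\varrho(dD+qQ)\boldsymbol\psi$ has zero imaginary part (up to rounding) for a sample complex vector. All helper names and numerical values are illustrative.

```python
from fractions import Fraction
from typing import List

Matrix = List[List[Fraction]]


def transport_matrix(n: int, d: Fraction, q: Fraction) -> Matrix:
    """Hypothetical tridiagonal stand-in for dD + qQ.

    Entry (j, j+1) = d and entry (j+1, j) = d + q. The diagonal does not
    affect symmetry, so a simple placeholder value is used there.
    """
    L = [[Fraction(0)] * n for _ in range(n)]
    for j in range(n):
        L[j][j] = -(2 * d + q)  # placeholder diagonal entry
        if j + 1 < n:
            L[j][j + 1] = d
            L[j + 1][j] = d + q
    return L


def rho_weights(n: int, d: Fraction, q: Fraction) -> List[Fraction]:
    """Diagonal of rho; 0-based index j here corresponds to (d/(d+q))^(j-1) with 1-based j."""
    r = d / (d + q)
    return [r ** j for j in range(n)]


def weighted(L: Matrix, w: List[Fraction]) -> Matrix:
    """Return diag(w) @ L, i.e. scale row j of L by w[j]."""
    n = len(L)
    return [[w[j] * L[j][k] for k in range(n)] for j in range(n)]


def is_symmetric(A: Matrix) -> bool:
    n = len(A)
    return all(A[j][k] == A[k][j] for j in range(n) for k in range(n))


def hermitian_form(A: Matrix, psi: List[complex]) -> complex:
    """Compute conj(psi)^T A psi for a real matrix A."""
    n = len(A)
    return sum(psi[j].conjugate() * float(A[j][k]) * psi[k]
               for j in range(n) for k in range(n))


if __name__ == "__main__":
    n, d, q = 5, Fraction(3, 10), Fraction(7, 10)  # hypothetical values
    L = transport_matrix(n, d, q)
    W = weighted(L, rho_weights(n, d, q))
    print(is_symmetric(L), is_symmetric(W))  # False True
    psi = [1 + 2j, -0.5 + 1j, -1j, 2 + 0.3j, -1 - 1j]
    print(abs(hermitian_form(W, psi).imag) < 1e-12)  # True
```

Exact `Fraction` arithmetic is used so that the symmetry test compares entries without rounding error; only the final quadratic form is evaluated in floating point.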

Substituting $(\nu, \theta, \boldsymbol \psi )=\left (\nu ^{d}, \theta ^{d}, \boldsymbol{\psi }^{d}\right )$ into (3.5) and multiplying both sides of (3.5) on the left by $\left (\overline{\psi ^d_1},\overline{\psi ^d_2},\dots, \overline{\psi ^d_n}\right )\varrho$, we have

where

Since $\varrho (dD+qQ)$ is symmetric, we see that $\mathcal S\in \mathbb{R}$. This, combined with (3.7), yields

By Proposition 2.5 (ii), there exists $ M\gt 0$ such that $\displaystyle \frac{\|{\boldsymbol{u}}_d\|_\infty }{d-d^*_q}\lt M$ for $d\in (d^*_q,\tilde d_1]$ with $0\lt \tilde d_1-d_q^*\ll 1$. This, combined with (3.8), implies that

\begin{equation*} \left |\frac {\nu ^d}{d-d^*_q}\right |\le bM\;\;\text {for}\;\;d\in (d^{\ast}_q,\tilde d_1]. \end{equation*}

This completes the proof.
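The last step of the proof is a one-line chain of inequalities. Because (3.8) is not reproduced in this section, the chain below assumes that (3.8) supplies the frequency bound $|\nu^d|\le b\|{\boldsymbol{u}}_d\|_\infty$; this form is inferred from how (3.8) is used and is not quoted from the text. Dividing by $d-d^*_q\gt 0$ and applying the bound from Proposition 2.5 (ii) then gives:

```latex
\begin{equation*}
\left|\frac{\nu^d}{d-d^*_q}\right|
\le \frac{b\,\|{\boldsymbol{u}}_d\|_\infty}{d-d^*_q}
\lt bM,
\qquad d\in (d^*_q,\tilde d_1].
\end{equation*}
```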

3.2. Case I

For this case, the positive equilibrium ${\boldsymbol{u}}_d$ can be represented as in (2.10). Substituting (2.10) into (3.5), we rewrite the eigenvalue problem (3.5) as follows:

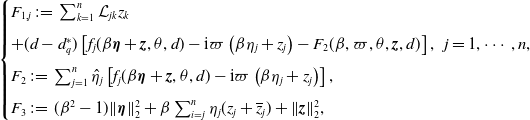

\begin{equation} \begin{cases} \sum _{k=1}^n \mathcal L_{jk}\psi _k+ (d-d_q^*)f_j(\boldsymbol{\psi },\theta, d)-\textrm{i}\nu \psi _j=0,\;\;\;j=1,\cdots, n,\\[5pt] \nu \gt 0,\;\;\theta \in [0,2\pi ),\;\;\boldsymbol{\psi }(\neq \textbf{0}) \in \mathbb{C}^n, \end{cases} \end{equation}

where $\mathcal L$ is defined in (2.3), and

with $\alpha _d$ and $\boldsymbol{\xi }_d$ defined in (2.10). By (2.6), up to a scalar factor, $\boldsymbol{\psi } (\ne \textbf{0})\in \mathbb{C}^n$ in (3.9) can be represented as follows:

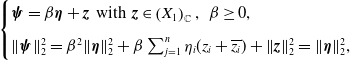

\begin{equation} \begin{cases} \boldsymbol{\psi }=\beta \boldsymbol{\eta }+{{\boldsymbol{z}}} \;\;\text{with}\;\;{{\boldsymbol{z}}} \in \left (X_{1}\right )_{\mathbb{C}}, \;\; \beta \geq 0, \\[5pt] \|\boldsymbol{\psi }\|_2^{2}=\beta ^2\|\boldsymbol{\eta }\|_2^2+\beta \sum _{j=1}^n\eta _j(z_j+\overline{z_j})+ \|{{\boldsymbol{z}}}\|_2^2=\|\boldsymbol{\eta }\|_2^2, \end{cases} \end{equation}

where $\boldsymbol{\eta }$ is defined in (2.4). We then obtain the following problem, which is equivalent to (3.9).
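The normalization in the second line of (3.11) rests on the expansion $\|\beta\boldsymbol\eta+\boldsymbol z\|_2^2=\beta^2\|\boldsymbol\eta\|_2^2+\beta\sum_{j=1}^n\eta_j(z_j+\overline{z_j})+\|\boldsymbol z\|_2^2$, which holds when $\beta$ and the entries of $\boldsymbol\eta$ are real (assumed here, as the form of the cross term suggests). The short check below compares both sides numerically for randomly drawn, purely illustrative $\boldsymbol\eta$, $\boldsymbol z$ and $\beta$; it does not impose $\boldsymbol z\in(X_1)_{\mathbb C}$, since the identity itself does not need it.

```python
import random
from typing import Sequence


def norm_sq(v: Sequence[complex]) -> float:
    """Squared Euclidean norm of a (possibly complex) vector."""
    return sum(abs(x) ** 2 for x in v)


def expansion(beta: float, eta: Sequence[float], z: Sequence[complex]) -> float:
    """Right-hand side of the expansion, assuming real beta and real eta:
    beta^2 ||eta||^2 + beta * sum_j eta_j (z_j + conj(z_j)) + ||z||^2.
    """
    cross = sum(e * (zj + zj.conjugate()) for e, zj in zip(eta, z))
    return beta ** 2 * norm_sq(eta) + beta * cross.real + norm_sq(z)


if __name__ == "__main__":
    rng = random.Random(0)
    eta = [rng.uniform(0.1, 1.0) for _ in range(4)]  # hypothetical real eta
    z = [complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(4)]
    beta = 0.7
    psi = [beta * e + zj for e, zj in zip(eta, z)]
    # Both sides agree up to floating-point rounding.
    print(abs(norm_sq(psi) - expansion(beta, eta, z)))
```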

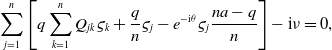

Lemma 3.2.

Assume that $d\gt d_q^*$ and $q\in (a,na)$. Then $(\boldsymbol{\psi },\nu, \theta )$ solves (3.9), where $\boldsymbol{\psi }$ is defined in (3.11) and $\nu =(d-d^*_q)\varpi$, if and only if $(\beta, \varpi, \theta, {{\boldsymbol{z}}})$ solves

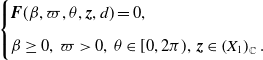

\begin{equation} \begin{cases}{{\boldsymbol{F}}}(\beta, \varpi, \theta, {{\boldsymbol{z}}},d)= 0,\\[5pt] \beta \ge 0,\;\varpi \gt 0,\;\theta \in [0,2\pi ),\;{{\boldsymbol{z}}}\in \left (X_{1}\right )_{\mathbb{C}}. \end{cases} \end{equation}