Introduction

One of the best-known findings from Amos Tversky and Daniel Kahneman was a framing effect driven by whether losses or gains are emphasized in a dire choice. Their “Asian disease” experiment (ADE) confronted subjects with a stark, hypothetical choice between alternative disease-mitigation programs (1981). Whether people preferred an option described as having certain outcomes or an alternative wherein outcomes were described by probabilities seemed to depend on whether losses (deaths) or gains (lives saved) were emphasized.

I report findings from a replication retaining key features of the ADE, but with a modified vignette. The goal was to take advantage of the COVID-19 pandemic, on the assumption that real-world events made the rival options seem less artificial and stylized to recent respondents, for whom the decision, in turn, should have been more pressing. Re-checking the malleability of preferences for disease mitigation in the context of a pandemic is compelling because the choices involved were suddenly much more familiar. In 2020, one could scarcely escape discussion of disease mitigation and how to balance reducing transmission against restricting normal life.

Experimenters, within and across disciplines, often disagree on the significance of realism of various sorts, ranging from external validity, mundane and psychological realism, and contextual richness to stakes (e.g. Camerer 1995, 2003). Dickson’s summary, “As of now, there is nothing like a general theory that would give experimentalists guidance as to when stylization might pose problems for external validity” (2011: 61), is still generally true. McDermott sees external validity as the “central focus” of political science, where there is concern that “trivial tasks presented to subjects offer a poor analogue to… real-world experiences” (2011: 35–37). The ADE posed a single-shot choice of seemingly high hypothetical stakes, very likely to feel unfamiliar to most subjects. Tversky and Kahneman observed a dramatic effect, so perhaps there is little cause to worry about whether subjects were seriously engaged with the problem. However, the conclusion that losses and gains induce distinct responses to risk is less compelling if it describes only inconsequential, unfamiliar decisions. When history helpfully (tragically) pre-treats subjects with intense discussion and first-hand experience of the kind of public-health dilemma at play in the ADE, the measure almost certainly gains in familiarity and arguably should induce more careful or thoughtful choice by respondents.

Writing decades later (but pre-pandemic), Kahneman added a “grim note”: Tversky had replicated the result with public-health officials, and “people who make decisions about vaccines… were susceptible to the framing” and, when confronted with the inconsistency of the collective’s results, responded with “embarrassed silence” (2011: 368–369).Footnote 1 Part of the significance of the result was to establish that patterns already observed in choices over monetary gambles would recur when lives were at stake (Kahneman 2011: 368). Apart from its significance in the development of prospect theory and other non-traditional theories of choice under risk, the ADE looms large for public-health policy. “Does that finding still hold?” is a natural question for officials charged with producing and defending public-health policy, whether they are strategic or simply desire transparent debate.

Experimental design

The original ADE confronted subjects with this scenario.

Imagine that the US is preparing for the outbreak of an unusual Asian disease, which is expected to kill 600 people. Two alternative programs to combat the disease have been proposed. Assume that the exact scientific estimates of the consequences of the programs are as follows.

Subjects were then randomly assigned to one of the following conclusions to the question and asked to choose between the alternative programs.

If program A is adopted, 200 people will be saved.

If program B is adopted, there is a ${1 \over 3}$ probability that 600 people will be saved and a ${2 \over 3}$ probability that no one will be saved.

Or, instead:

If program C is adopted, 400 people will die.

If program D is adopted, there is a ${1 \over 3}$ probability that nobody will die, and a ${2 \over 3}$ probability that 600 people will die.

The experimental treatments thus varied whether outcome descriptions emphasized survival and lives saved (gains) or mortality and deaths (losses). Framing aside, programs A and C and programs B and D were identical in predicted outcomes. Subjects were more inclined to opt for programs described with probabilistic outcomes when primed to think of losses, but risk-averse, preferring certain outcomes, when given a gains frame (Tversky and Kahneman 1981). The asymmetry was large: about 70% of subjects picked the risk-averse (certain) option (A), wherein the outcomes were described as gains, while only about 20% went for the certain option that emphasized losses (C).

A risk-neutral decision-maker regards 200 lives saved for certain as equivalent to a lottery with ${1 \over 3}$ probability of 600 and ${2 \over 3}$ probability of 0, for an expected value of 200. There is nothing right or wrong about, instead, preferring either the certainty or the lottery. Risk aversion is a taste, not an error. But risk attitudes should not, in classical theory, be affected by mere terminology. Whether the same improvement on 600 expected deaths is described as “200 saved” or “400 die” is immaterial to an expected-utility maximizer, whatever her risk attitudes. That a risky option might appeal or repel depending on terminology defies simple and elegant theory, and surprised many.
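The expected-value equivalence can be checked mechanically. The following is a minimal Python sketch, assuming each program can be written as a lottery over lives saved out of the 600 at risk; the names `TOTAL_AT_RISK`, `PROGRAMS`, and `expected_saved` are illustrative, not part of the original study. Exact `Fraction` arithmetic avoids floating-point noise in the one-third and two-thirds probabilities.

```python
from fractions import Fraction

TOTAL_AT_RISK = 600  # deaths expected with no program, as in the original vignette

# Each program is a lottery: a list of (probability, lives saved) pairs.
# Loss-framed programs C and D are converted from deaths to lives saved.
PROGRAMS = {
    "A (gains, certain)": [(Fraction(1), 200)],
    "B (gains, risky)": [(Fraction(1, 3), 600), (Fraction(2, 3), 0)],
    "C (losses, certain)": [(Fraction(1), TOTAL_AT_RISK - 400)],
    "D (losses, risky)": [
        (Fraction(1, 3), TOTAL_AT_RISK - 0),
        (Fraction(2, 3), TOTAL_AT_RISK - 600),
    ],
}


def expected_saved(lottery):
    """Return the expected number of lives saved by a lottery."""
    assert sum(p for p, _ in lottery) == 1, "probabilities must sum to 1"
    return sum(p * saved for p, saved in lottery)


for name, lottery in PROGRAMS.items():
    print(f"{name}: expected lives saved = {expected_saved(lottery)}")

# Framing aside, A and C (and B and D) are the same lottery over lives saved.
assert PROGRAMS["A (gains, certain)"] == PROGRAMS["C (losses, certain)"]
assert PROGRAMS["B (gains, risky)"] == PROGRAMS["D (losses, risky)"]
```

Every program has an expected value of 200 lives saved, and the two assertions confirm that, once deaths are converted to lives saved, the gains and losses descriptions specify identical lotteries; only the wording differs.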

The experiment was subsequently replicated, with some variation in effect magnitude but a fairly robust pattern of success (Druckman 2001). When the “replication crisis” emerged in psychology, the ADE was among those studies subjected to “many-labs” replication, which found a sufficiently large estimated treatment effect to reject the null hypothesis of no effect (Klein et al. 2014). The estimated effect size from replications across 36 teams was, however, distinctly smaller, with a 99 percent confidence interval spanning 53% to 67% of the size of the original study (Klein et al. 2014, Table 2).Footnote 2

Researchers have also probed heterogeneity of various kinds, examined covariates of gain/loss framing sensitivity, and checked parameterization. My aim is to explore only one aspect of the ADE. I take seriously Stroebe’s observation that “manipulations and measures often derive their meaning from the historical, social, and cultural context at a given time,” (2019) a claim both obvious and often overlooked. Replication by independent implementation of literally identical protocols, across time and space, is often not the ideal way to explore the robustness of important substantive findings. Historical context, necessarily outside of an experimenter’s control, can be much more important than exact question wording or mode of subject recruitment.

I aimed to retain key features of the original item, while adapting the choice to ubiquitous contemporary policy debates. My aim was to reproduce the original choices structurally, but with subjects inclined to understand the options to be gravely serious and worthy of careful thought. Hence, I asked respondents about policy for responding to the coronavirus pandemic, the overwhelming issue at the time. I also followed Druckman (2001) in offering three framings: one emphasizing losses or deaths, one laid out in terms of gains or lives saved, and a third mentioning both. The specific wording (omitting labels in parentheses) was:

Suppose that you were in charge of pandemic policy for your state. A scientific experts’ report on how to manage reopening says the following. Ending all remaining restrictions on normal life, to boost economic recovery, would cause 600 deaths per week. There are two feasible options to relax, without ending, the rules and thereby improve the economy, albeit more slowly.

Version A (saved/gains/survival) Option 1 for loosening the rules would save 200 of those 600 people. Option 2 would save all 600 with ${1 \over 3}$ probability, and save none of them with ${2 \over 3}$ probability.

Version B (deaths/losses/mortality) Option 1 for loosening the rules would result in 400 deaths. Option 2 would result in no deaths with ${1 \over 3}$ probability and 600 deaths with ${2 \over 3}$ probability.

Version C (both) Option 1 for loosening the rules would result in 400 deaths, and save 200 of those 600 people. Option 2 would save all 600, for no deaths, with ${1 \over 3}$ probability, and save none of them, resulting in 600 deaths, with ${2 \over 3}$ probability.

Which option would you choose?

Beyond positioning the choice in the ongoing pandemic, and re-scaling 600 deaths to a weekly total for one state rather than a one-time national value, another change was repeating the baseline value 600 in Versions A and C. That syntax (accidentally) made the equality of expected values more obvious. Soon after the original article’s appearance, the authors described the ADE framing effects as highly robust, “[in] their stubborn appeal…resembl[ing] perceptual illusions more than computational errors” (1984: 343). From that perspective, this revision should be inconsequential, not overriding the saved/gains mindset.

Results

Subjects were 1,000 respondents to the 2020 Cooperative Election Study, a large online survey organized by researchers at Harvard and administered by YouGov on behalf of about 60 teams.Footnote 3 YouGov respondents are members of an online panel, recruited into studies to match random samples. The questions were part of the pre-election wave, conducted in September and October. Analysis below employs weights that aim to make the sample nationally representative.

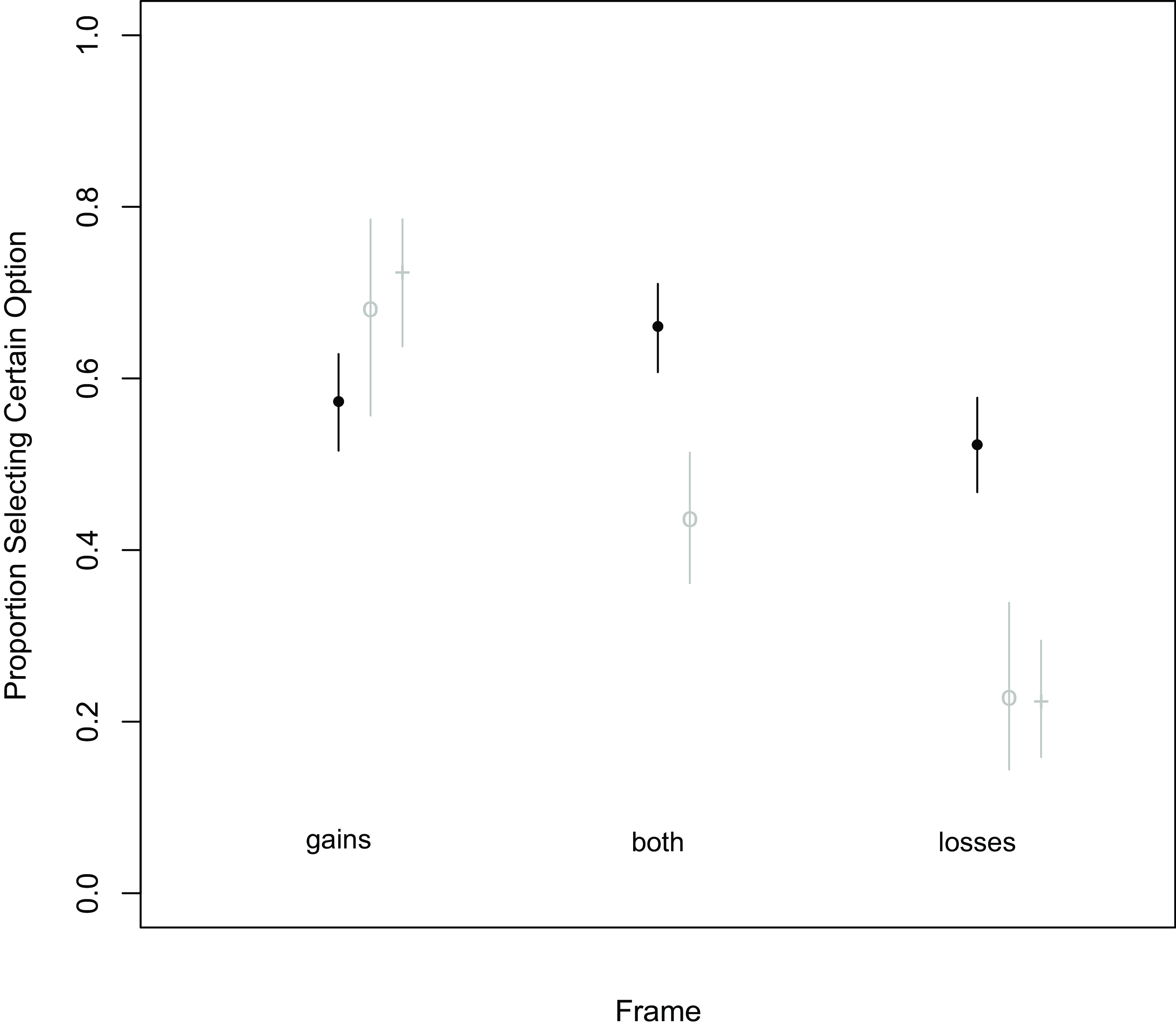

Figure 1 shows that subjects’ choices were little affected by the frames, in contrast to the original study and most replications. The figure shows proportions who chose the risk-averse option given each frame and, for comparison, the same quantities in the original data (gray crosses) and Druckman’s replication (gray circles), with 95 percent confidence intervals.

Figure 1. Replication versus original.

Each data point shows the proportion of respondents selecting the certain option for the given frame, with a 95 percent confidence interval. Gray crosses show the matching values from the original Tversky-Kahneman ADE. Gray circles show values from Druckman’s 2001 replication.

Approximate p-values for difference-of-proportions tests between gains and both, gains and losses, and both and losses are 0.03, 0.23, and 0.00 (to two decimal places), respectively. So, the unexpected small increase in risk aversion when losses are added to gains (in “both”) is statistically significant, using a conventional 0.05 threshold, as is the drop when gains are removed (moving from “both” to losses). But the gains-versus-losses effect is small (about 5 percentage points) and statistically insignificant. Details are in the Appendix.Footnote 4
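For readers unfamiliar with the test, a difference-of-proportions comparison can be sketched as a pooled two-sample z-test. This is a minimal Python illustration, not the author’s code: the published analysis is in R and uses survey weights (see the Data availability statement), whereas this sketch is unweighted. The function name `two_proportion_z_test` and the counts in the usage line are hypothetical, not CES data.

```python
import math


def two_proportion_z_test(x1, n1, x2, n2):
    """Pooled two-sided z-test for H0: p1 == p2.

    x1, x2 are counts choosing the certain option; n1, n2 are group sizes.
    Returns (difference p1 - p2, z statistic, two-sided p-value).
    Unweighted: ignores the survey weights used in the published analysis.
    """
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)  # common proportion under H0
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    if se == 0:
        raise ValueError("standard error is zero; all or no respondents chose the option")
    z = (p1 - p2) / se
    # Two-sided p-value from the standard normal: P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p1 - p2, z, p_value


# Hypothetical counts, for illustration only.
diff, z, p = two_proportion_z_test(60, 100, 40, 100)
print(f"difference = {diff:.2f}, z = {z:.2f}, p = {p:.4f}")
```

Pooling the proportions reflects the null hypothesis of no framing effect; a weighted analysis would instead need design-based standard errors.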

For a continuous measure of evidence, Bayes factors for testing the equality of the proportions in the three versions (gains versus both, gains versus losses, and both versus losses) are 2.1, 0.33, and 81.93, respectively.Footnote 5 The implication is that the data provide weak evidence of an unexpected gains-both contrast, strong evidence that certainty is more preferred with the “both” frame than with the losses frame, and evidence in favor of the null (i.e. not mere absence of evidence of a difference but evidence of an absence of difference) for the gains-versus-losses framing effect that constituted the Tversky and Kahneman finding.
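The method behind the reported Bayes factors is described in Footnote 5 and is not reproduced here. As one common construction, offered only as an assumption-laden sketch, the Python function below compares H1 (each frame has its own proportion, with independent uniform Beta(1, 1) priors) to H0 (a single shared proportion, also with a uniform prior). Values above 1 favor a difference and values below 1 favor equality; a value near 0.33 would mean the data are about three times as likely under equality. Because the prior is assumed, this sketch will generally not reproduce the reported 2.1, 0.33, and 81.93, and the function names are hypothetical.

```python
import math


def log_beta(a, b):
    """Natural log of the Beta function B(a, b)."""
    return math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b)


def bayes_factor_10(x1, n1, x2, n2):
    """BF10 for H1: p1 != p2 versus H0: p1 == p2, with uniform Beta(1, 1) priors.

    The binomial coefficients appear in both marginal likelihoods and cancel,
    as does B(1, 1) = 1, leaving a ratio of Beta functions.
    """
    log_m1 = log_beta(x1 + 1, n1 - x1 + 1) + log_beta(x2 + 1, n2 - x2 + 1)
    log_m0 = log_beta(x1 + x2 + 1, n1 + n2 - x1 - x2 + 1)
    return math.exp(log_m1 - log_m0)


# Hypothetical counts, for illustration only.
print(f"BF10 = {bayes_factor_10(55, 100, 45, 100):.2f}")
```

Working on the log scale with `math.lgamma` keeps the Beta functions from underflowing at survey-sized counts.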

Even if subjects in 2020 were systematically more likely to engage with the ADE choices thoughtfully, there could be significant individual-level heterogeneity. For those skeptical that coronavirus posed an emergency, the hypothesis that disease-mitigation policy had enhanced salience might be wrong.

I considered two possible proxies for respondents’ likelihood of taking choices related to COVID policy seriously: self-reported experiences with infection and with death.Footnote 6

Separating respondents according to whether or not they reported close encounters with actual COVID infection yielded no appreciable difference. For the 476 (unweighted) who reported either having had COVID themselves or that a friend, family member, or co-worker had, proportions were almost identical to those shown in Figure 1, as were those for the 524 (unweighted) reporting no such direct contact with the disease. Tables in the Appendix document the similarity.

Given the significance of mortality to the ADE, brushes with death might better proxy motivation to wrestle with the choice. Only half of the sample was asked about connections to COVID deaths; of these, 134 individuals said that a friend, family member, or co-worker had died from the disease, while 353 said the opposite. The latter group again displayed a quite small gap in proportions choosing the certain option given gains frames (0.54) or losses frames (0.48). Against expectation, those reporting proximity to COVID deaths were a somewhat better match to the original ADE subjects, with a corresponding gap of about 0.20 (0.66 versus 0.46). However, reduced sample size conspired against statistical significance ($p \approx 0.13$).Footnote 7

The survey had another item that might capture respondents’ treatability in regard to the subjective seriousness of the crisis. Those who reported loss of sleep were given “worrying about the COVID-19 pandemic” in a small battery of possible causes. The pandemic worriers, like those saying they knew at least one person who died from the disease, somewhat better resembled the 1981 subjects, with a roughly 14-percentage-point gap in preference for the certain option, comparing gains-frame to losses-frame treatments, than did the non-worriers, whose gap was −3 percentage points. But conditioning on sleep deprivation complicates expectations, and the concomitant small $N$s again prevented statistical significance.

Other recent replications

The conclusion that the ADE result does not replicate in a climate wherein the choices it poses have added realism could be taken as a stark correction about choice contexts in which risk attitudes are malleable. It might also undercut the view that mortality is like money. The individual-level results above slightly qualify that conclusion, given that the ADE is better (if weakly) replicated among those seemingly more primed to engage. Moreover, I was not alone in trying to replicate the ADE during the pandemic.

Some parallel studies eschewed the binary-choice setup employed in the original, and so resist direct comparison (e.g. Biroli et al. 2020; Sanders et al. 2021). Others vary on multiple dimensions, including item wording. Table 1 shows that most, but not all, mimicked the original ADE’s death forecasts, but varied in whether they recast the problem as being about COVID. Some employed the exact questions of the original; others retrofitted them to the pandemic context. All studies employed the original probabilities, ${1 \over 3}$ and ${2 \over 3}$, except Hameleers (2021), which tweaked them to $0.35$ and $0.65$.

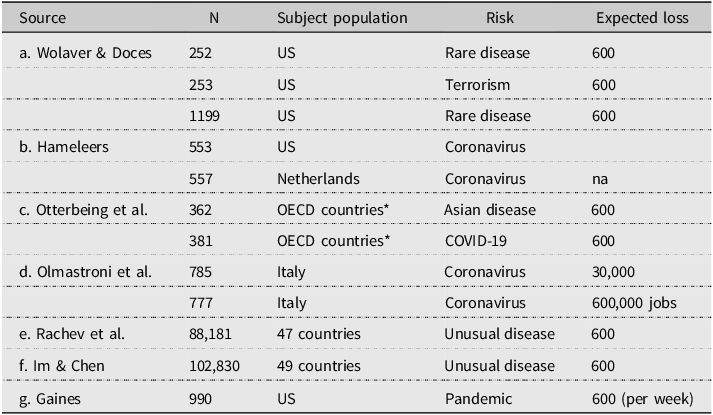

Table 1. Some details of pandemic ADE replications

Mine was thus not unique in updating the ADE to be about coronavirus rather than a purely hypothetical, possibly less scary, disease. My assumption is that direct experience of a pandemic pre-treated the respondents not to a gains or losses frame, but to prolonged discussion of public-health policy choices. One could argue that the generic (“Asian,” “rare,” or “unusual”) disease in other studies must have been understood to be COVID by nearly all 2020 subjects. On the other hand, the more obviously hypothetical the choice, the less momentous it seems. Respondents asked about a generic disease might have taken the question to have an implicit, “Forget reality and consider a world in which…” frame. Or, instead, they might have understood the choice to involve a second disease, on top of COVID, with distinct mitigation, complicating choices and interpretation thereof.

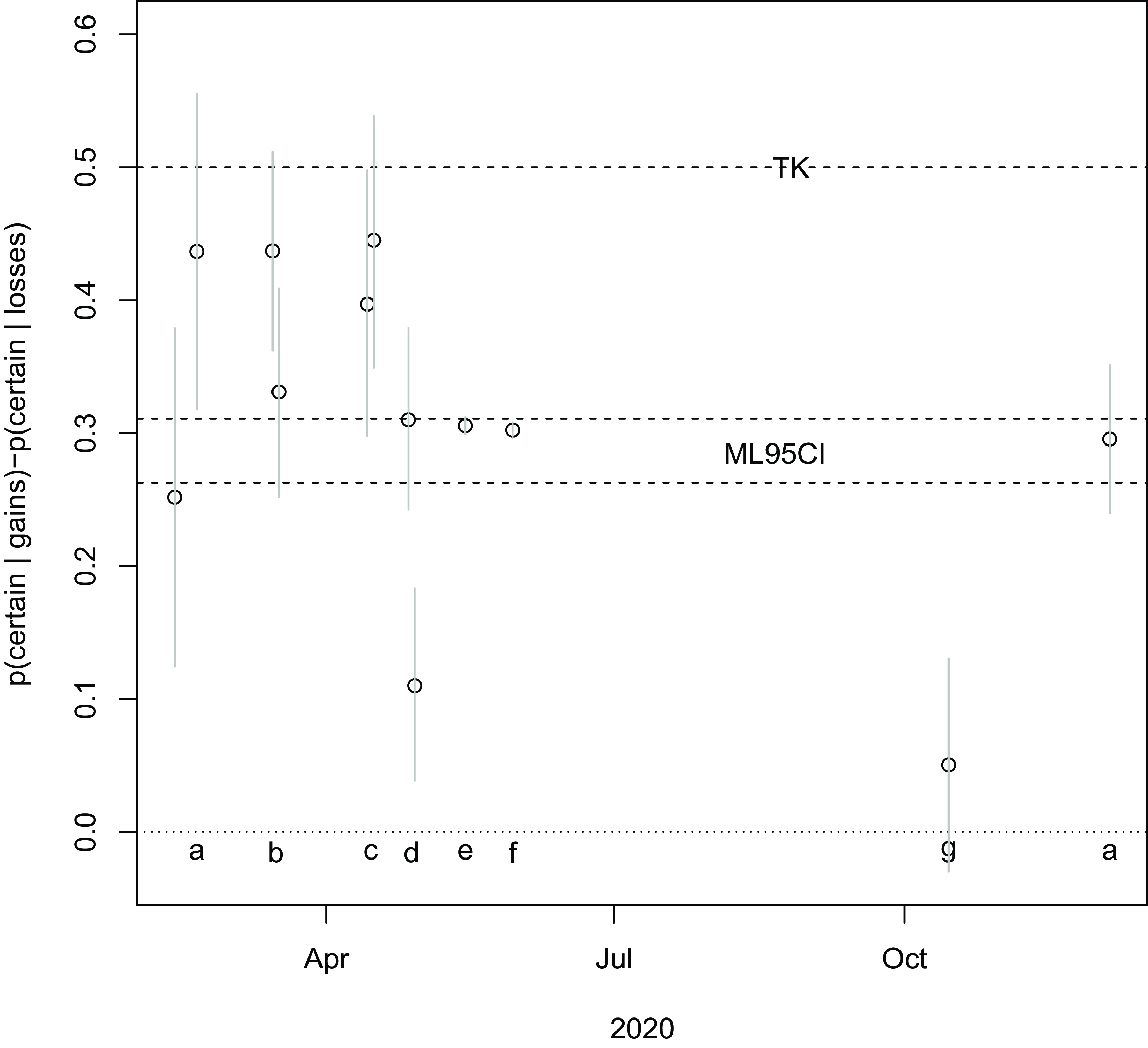

Notwithstanding differences, Figure 2 compares my overall effect, measured as the difference between proportions selecting the risk-averse option for the gains and losses frames, to 11 others from six articles reporting replications of the ADE during 2020. The lower-case letter labels correspond to markers in the Sources, identifying which study produced each estimate. My estimate is labeled “g.” The figure also shows the result reported in the original article and a 95% confidence interval for the effects detected by the 2014 “many-labs” replication.

Figure 2. Pandemic ADE replication results.

Each data point shows effect size (difference in proportions selecting the certain option for gains and losses frames) for a replication of the ADE, with an associated 95 percent confidence interval. Studies producing the replication are labeled with letters, matched to articles in Table 1 and in the Sources. “ML95CI” shows the 95-percent confidence interval for effect size from the 2014 “many-labs” replication. “TK” marks this effect size in the original Tversky-Kahneman study.
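The effect size plotted in Figure 2 is a difference in two proportions. A minimal Python sketch of such an estimate with an unpooled (Wald) 95 percent interval follows; it assumes simple random sampling and unweighted counts, and the individual studies in the figure may have computed their intervals differently. The function name `effect_with_ci` and the counts in the usage line are hypothetical.

```python
import math


def effect_with_ci(x_gain, n_gain, x_loss, n_loss, z=1.96):
    """Gains-minus-losses difference in proportions choosing the certain option,
    with an unpooled (Wald) confidence interval; z = 1.96 gives roughly 95%."""
    p_g, p_l = x_gain / n_gain, x_loss / n_loss
    diff = p_g - p_l
    # Unpooled standard error: each frame keeps its own variance.
    se = math.sqrt(p_g * (1 - p_g) / n_gain + p_l * (1 - p_l) / n_loss)
    return diff, (diff - z * se, diff + z * se)


# Hypothetical counts, for illustration only.
diff, (lo, hi) = effect_with_ci(70, 120, 60, 120)
print(f"effect = {diff:.3f}, 95% CI = ({lo:.3f}, {hi:.3f})")
```

An interval that includes zero, as the present study’s does, is consistent with no gains-versus-losses framing effect.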

This study turns out to be unusual in not having found a strong gain-versus-loss framing effect. No replication produced an estimated effect as large as the original ADE, but most other pandemic replications detected effects at least as large as the many-labs replication. Comparing Table 1 and Figure 2, there is little sign of difference according to whether the queries pertained to COVID or a generic disease. Average effects may have been larger earlier, and smaller later, although the one study with waves well separated in time (Wolaver and Doces 2021, labeled “a”) found indistinguishable effects early and late.

Replications fill out distributions of $p$-values and effect sizes for a given experiment or phenomenon, and (initial) publication biases mean that both tend gradually to shift left, to smaller values (Stroebe 2019). The ADE has followed that pattern. Collectively, however, the recent batch of pandemic replications stretches the many-labs estimates both ways.

Conclusions

That a COVID-based replication of the ADE failed to detect loss/gain framing sensitivity could reveal that sufficiently important choices are less susceptible to such asymmetry. Two important qualifications are that other similar replications matched the original and that individual-level measures of motivation suggest better, not worse, fit with higher engagement during this period of enhanced realism.

The present study was retrofitted to COVID and also tweaked the numerical presentation of predicted outcomes in the gains frame. The fact that the losses frame was most discrepant, as compared to the original, offers some reassurance that this non-replication is not primarily an artifact. Still, an obvious follow-up would be more systematic variation in the presentation of numbers, retaining loss/gains frames. Kahneman’s faith that the ADE exposes biases of perception, not computation, could be misplaced.

I did not give subjects authority for public-health policy, so this replication yet again involved only hypothetical choices. The ADE had enhanced salience in the midst of a colossal pandemic. Subjects, as compared to those confronted by its scenarios in the early 1980s, were much more likely to have been recently exposed to debate over life-and-death, cost-benefit analysis of public health. Their task was thus more apt to seem realistic. But more realistic is not real. With sufficient creativity, one might glean from observational data on public reaction to policies and framing of policies by actual decision-makers whether there are discernible echoes of the ADE in reality. Few policymakers are acquainted with the ADE, and frames for defending and debating COVID policy invoked saving and losing lives haphazardly. Diligent, careful data collection and analysis could assess whether real-world framing effects arise in public opinion, as framed by rhetorical context.

That difficult project is one possible next step, both for research on the ADE as a general result in theories of choice and for health policy. The COVID pandemic has made it very clear that the ADE touched on an aspect of public attitudes that can have great policy relevance, even as decision-makers largely miss the underlying psychology. Whether risk attitudes can be affected by mere frames, as opposed to argumentation, as most, but not all, ADE studies suggest, matters a good deal, not only to campaign consultants and students of cognitive biases but to policymakers and, in turn, good governance.

Supplementary material

The supplementary material for this article can be found at https://doi.org/10.1017/XPS.2025.7.

Data availability statement

The data and R code required to replicate all analyses in this article are available at the Journal of Experimental Political Science Dataverse within the Harvard Dataverse Network, at https://doi.org/10.7910/DVN/EJ8UZQ.

Acknowledgements

Thanks to Aleks Ksiazkiewicz, Cara Wong, anonymous referees, and Associate Editor Bert Bakker for their suggestions and advice on prior drafts.

Competing Interests

I have no conflicts of interest to report for this research.

Ethics statement

The data analyzed herein were obtained through the 2020 Cooperative Election Study, implemented by YouGov on behalf of dozens of academic teams. Respondents were opt-in panelists, compensated for their time by the awarding of points that could later be redeemed as gift cards or cash. The University of Illinois Urbana-Champaign IRB approved the module submitted by Illinois political scientists Brian Gaines, Aleks Ksiazkiewicz, and Cara Wong (21118). A copy of the approval letter has been submitted to JEPS. Our research adheres to the APSA’s Principles and Guidance for Human Subjects Research (https://connect.apsanet.org/hsr/principles-and-guidance/).