1. Introduction

The focusing nonlinear Schrödinger equation is a universal evolution equation governing the complex amplitude of a weakly nonlinear, strongly dispersive wave packet over long time scales in very general settings. In one space dimension, this equation can be written in normalized form as

\begin{align}

{\mathrm{i}} q_t + \frac{1}{2}q_{xx} + |q|^2q = 0,\quad q=q(x,t),\quad (x,t)\in\mathbb{R}^2.

\end{align}

For instance, in 1969, V. E. Zakharov studied the surface elevation of water wave packets over deep water in the classical setting of plane-parallel irrotational and incompressible (potential) flow below the free surface, which is subject to kinematic and pressure-balance boundary conditions [Reference Zakharov47]. He gave a derivation of (1.1) based on the formalism of the method of multiple scales, with the wave packet amplitude being the fundamental small parameter. This derivation has more recently been made fully rigorous [Reference Totz and Wu36]. Since the multiple-scale argument is based on Taylor expansions of nonlinear terms and of the linearized dispersion relation, the derivation of (1.1) as a model equation applies in far more settings than surface water waves [Reference Benney and Newell4]. For example, it is also a fundamental model in nonlinear optics [Reference Newell and Moloney26] and in the theory of Bose-Einstein condensation (where it is known as the Gross-Pitaevskii equation) [Reference Ueda43].

In 1983, D. H. Peregrine found a compelling exact solution of (1.1) for which $q(x,t)$ is not of constant modulus, but nonetheless decays to the exact solution $q(x,t)={\mathrm{e}}^{{\mathrm{i}} t}$ of (1.1) uniformly in all directions of space-time [Reference Peregrine31]. Peregrine’s solution is

\begin{align}

q(x,t)={\mathrm{e}}^{{\mathrm{i}} t}\left[1-4\frac{1+2{\mathrm{i}} t}{1+4x^2+4t^2}\right] = {\mathrm{e}}^{{\mathrm{i}} t}\left[1+O\left(\frac{1}{\sqrt{x^2+t^2}}\right)\right],\quad (x,t)\to\infty.

\end{align}

The error term in (1.2) is not optimal (for instance, for fixed $t$ the correction is $O(x^{-2})$ as $x\to\pm\infty$), but it is the optimal radially symmetric estimate. Since, in the setting of water waves, $q(x,t)={\mathrm{e}}^{{\mathrm{i}} t}$ is the complex amplitude of a uniform periodic wavetrain (a Stokes wave), Peregrine’s solution describes a space-time localized fluctuation of a Stokes wave, and as such it is a model for a rogue wave.
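As a quick sanity check on (1.2), the following Python sketch (our own illustration, not taken from the cited works) evaluates Peregrine’s solution, confirms the peak amplitude $|q(0,0)|=3$ and the approach to the unit background, and estimates the residual of (1.1) with second-order central differences. The names `peregrine` and `nls_residual` and the step size `h` are hypothetical choices made only for this example.

```python
import cmath


def peregrine(x: float, t: float) -> complex:
    """Peregrine's solution (1.2) of i q_t + q_xx / 2 + |q|^2 q = 0."""
    return cmath.exp(1j * t) * (1 - 4 * (1 + 2j * t) / (1 + 4 * x**2 + 4 * t**2))


def nls_residual(q, x: float, t: float, h: float = 1e-4) -> complex:
    """Approximate i q_t + q_xx / 2 + |q|^2 q at (x, t) by central differences.

    The truncation error is O(h^2), so for an exact solution the result is small.
    """
    q0 = q(x, t)
    q_t = (q(x, t + h) - q(x, t - h)) / (2 * h)
    q_xx = (q(x + h, t) - 2 * q0 + q(x - h, t)) / h**2
    return 1j * q_t + 0.5 * q_xx + abs(q0) ** 2 * q0


if __name__ == "__main__":
    print(abs(peregrine(0.0, 0.0)))      # peak amplitude at the origin
    print(abs(peregrine(100.0, 100.0)))  # far away: close to the unit background
    for x, t in [(0.0, 0.0), (0.3, -0.7), (1.5, 2.0)]:
        print(x, t, abs(nls_residual(peregrine, x, t)))
```

The finite-difference residual is only a consistency check; it is small (of order $h^2$ times higher derivatives of $q$) rather than exactly zero.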

The focusing nonlinear Schrödinger equation (1.1) was shown to be a completely integrable system in the work of Zakharov and Shabat [Reference Zakharov and Shabat46]. This means that the methods of soliton theory apply, including tools for deriving numerous exact solutions such as (1.2). These tools have a recursive nature, allowing a given exact solution to be generalized to a whole infinite family by means of iterated Bäcklund transformations. Thus the Peregrine solution (1.2) is by no means the only solution of (1.1) that has the character of a rogue wave. Indeed, for any $N\in\mathbb{N}$ there exist exact solutions of (1.1), with algebraic representations in terms of determinants, that can be viewed as rogue waves of order N. At each subsequent value of N, new parameters enter into the algebraic solution formula that affect the details of the solution without influencing its fundamental property of decay to the background $q(x,t)={\mathrm{e}}^{{\mathrm{i}} t}$. If these parameters are scaled suitably, the rogue wave of order N can resemble an array of a triangular number of distant copies of the Peregrine solution on the same background, and it has been shown [Reference Yang and Yang45] that the locations of the Peregrine peaks in space-time are correlated with the complex zeros of the Yablonskii-Vorob’ev polynomials.

However, if the parameters are chosen in a highly correlated way at each order, then the numerous peaks all combine to form a rogue wave of significantly higher amplitude, termed a fundamental rogue wave. For instance, one can see from (1.2) that the Peregrine solution, corresponding to N = 1, has an amplitude $|q(x,t)|$ that grows to a maximum value of 3 times the (unit) background level, attained at $(x,t)=(0,0)$. At the level N = 2, the maximum amplitude obtainable is 5 times the background level. As such, the N = 2 fundamental rogue wave is a better model than the Peregrine solution for the famous Draupner event [Reference Sunde34] in the North Sea, which is frequently cited as the first quantitative observation of sea-surface rogue waves.

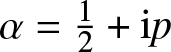

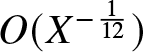

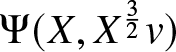

To study large-amplitude rogue waves it then becomes of some interest to allow the order N to grow and seek an asymptotic description of fundamental rogue waves as $N\to\infty$. This limit became tractable with the introduction of a modified form of the inverse scattering transform for (1.1) with nonzero boundary conditions at infinity, which yielded for the first time a Riemann–Hilbert representation of rogue wave solutions of arbitrary order [Reference Bilman and Miller9]. In [Reference Bilman, Ling and Miller8], this representation was used to analyze the fundamental rogue wave $q=q(x,t)$ of order N in the large-order/near-field limit in which $N\to\infty$ while simultaneously the independent variables are rescaled near the peak $(x,t)=(0,0)$ so that $x=2X/N$ and $t=4T/N^2$ for fixed $(X,T)\in\mathbb{R}^2$. It was found that a limiting profile $\Psi(X,T)$ of $2q/N$ exists as $N\to\infty$; this profile was called the rogue wave of infinite order, and was shown (see Theorem 1.10 below) to be a global solution of the focusing nonlinear Schrödinger (NLS) equation in the form

\begin{align}

{\mathrm{i}} \Psi_{T}+\frac{1}{2} \Psi_{X X}+|\Psi|^{2} \Psi=0.

\end{align}

It turns out that the same solution also appeared recently in the physics literature [Reference Suleimanov33], where it describes a universal dispersive regularization of an anomalously catastrophic self-focusing effect in self-focusing Kerr media, predicted by the geometrical optics approximation and noted in the 1960s by Talanov [Reference Talanov35]. There is also a rigorous proof that the solution arises in the semiclassical limit scaling of (1.1) when it is taken with real semicircle-profile initial data matching the Talanov form [Reference Buckingham, Jenkins and Miller18]. Several of the properties of $\Psi(X,T)$ that were proven in [Reference Bilman, Ling and Miller8] had also been noted independently in the paper of Suleimanov [Reference Suleimanov33].
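The near-field rescaling described above, $x=2X/N$, $t=4T/N^2$ with $\Psi=2q/N$, maps any solution of (1.1) to a solution of (1.3) for every fixed $N$, because all three terms of the equation pick up the common factor $N^3/8$. The Python sketch below checks this numerically, using Peregrine’s solution (1.2) as a stand-in for $q$; it only illustrates the scaling symmetry and says nothing about the limit $N\to\infty$ or about the rogue wave of infinite order itself. The helper names are hypothetical.

```python
import cmath


def peregrine(x: float, t: float) -> complex:
    """Peregrine's solution (1.2), used here only as a sample solution q of (1.1)."""
    return cmath.exp(1j * t) * (1 - 4 * (1 + 2j * t) / (1 + 4 * x**2 + 4 * t**2))


def near_field_rescale(q, n: float):
    """Return Psi(X, T) = (2/n) q(2X/n, 4T/n^2), the near-field rescaling of q."""
    def psi(X: float, T: float) -> complex:
        return (2.0 / n) * q(2.0 * X / n, 4.0 * T / n**2)
    return psi


def nls_residual(u, X: float, T: float, h: float = 1e-4) -> complex:
    """Central-difference estimate of i u_T + u_XX / 2 + |u|^2 u at (X, T)."""
    u0 = u(X, T)
    u_T = (u(X, T + h) - u(X, T - h)) / (2 * h)
    u_XX = (u(X + h, T) - 2 * u0 + u(X - h, T)) / h**2
    return 1j * u_T + 0.5 * u_XX + abs(u0) ** 2 * u0


if __name__ == "__main__":
    for n in (2, 5, 20):
        psi = near_field_rescale(peregrine, n)
        # |psi(0, 0)| = 6/n, and the residual of (1.3) stays small for every n.
        print(n, abs(psi(0.0, 0.0)), abs(nls_residual(psi, 0.7, -1.3)))
```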

The methodology developed in [Reference Bilman and Miller9] also allowed for a streamlined analysis of multisoliton solutions of (1.1) on the zero background, and in [Reference Bilman and Buckingham7] the soliton analogue of high-order fundamental rogue waves was analyzed in a similar near-field limit. Here one considers reflectionless potentials corresponding to a transmission coefficient with a single pole of arbitrarily high order in the upper half-plane. Unlike the case of fundamental rogue waves, two additional parameters appear in the iterated Darboux transformation that influence the shape of the limiting wave profile. Thus the rogue wave of infinite order $\Psi(X,T)$ is a special case of a more general family of special solutions of (1.3). We call these solutions general rogue waves of infinite order.

For rogue-wave solutions, the iterated Darboux transformations are applied to a “seed solution” that is the uniform plane wave $q(x,t)={\mathrm{e}}^{{\mathrm{i}} t}$, while for high-order soliton solutions the seed is instead the vacuum solution $q(x,t)=0$. In both cases, the same type of limiting object was observed to appear in the high-order/near-field limit. This observation was first generalized in [Reference Bilman and Miller12], where a family of exact solutions of (1.1) was described involving a continuous order parameter M that, when discretized in two different ways, yields both the fundamental rogue waves and the arbitrary-order solitons, with the same near-field limit appearing no matter how the continuous order is allowed to grow without bound. This suggests a type of universality of the limiting general rogue wave of infinite order. This notion of universality was fully generalized in [Reference Bilman and Miller10], where it was shown that the seed solution could be completely arbitrary (and need not represent any type of explicit solution at all), and the same family of general rogue wave solutions always appears in the high-order/near-field limit.

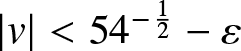

General rogue waves of infinite order also have other applications. For one thing, the initial condition for Ψ at T = 0 would be expected to generate corresponding integrable dynamics in any evolution equation that commutes with (1.3), i.e., in other equations of the same integrable hierarchy associated with the Zakharov–Shabat operator. One such system is the sharp-line Maxwell-Bloch system, and in [Reference Li and Miller24] the initial profiles of the general rogue waves of infinite order are identified with a family of self-similar solutions of the Maxwell-Bloch system that describe an important boundary-layer phenomenon. There are also analogues of general rogue waves of infinite order in the modified Korteweg-de Vries equation (some constraints on the parameters are required to ensure reality of the solution) [Reference Bilman, Blackstone, Miller and Young6], and in simultaneous solutions of arbitrarily many commuting flows in the focusing NLS hierarchy [Reference Buckingham, Jenkins and Miller18]. There is also some recent interest in general rogue waves of infinite order in the analysis community due to the fact that they lie in ![]() $L^2(\mathbb{R})$ for each fixed

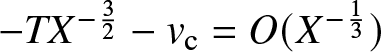

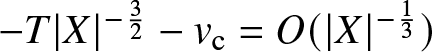

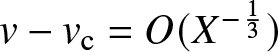

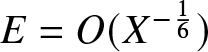

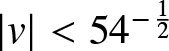

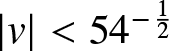

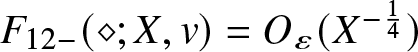

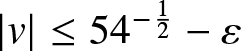

$L^2(\mathbb{R})$ for each fixed ![]() $T\in\mathbb{R}$ (see Theorem 1.9 below) and while (1.3) is globally well-posed on this space [Reference Tsutsumi42], these solutions neither generate any coherent structures (solitons) for large time T nor do they exhibit the expected

$T\in\mathbb{R}$ (see Theorem 1.9 below) and while (1.3) is globally well-posed on this space [Reference Tsutsumi42], these solutions neither generate any coherent structures (solitons) for large time T nor do they exhibit the expected  $O(T^{-\frac{1}{2}})$ decay consistent with solitonless initial data in smaller spaces such as

$O(T^{-\frac{1}{2}})$ decay consistent with solitonless initial data in smaller spaces such as ![]() $H^{1,1}(\mathbb{R})$ [Reference Borghese, Jenkins and McLaughlin15]. In fact, they decay at the anomalously slow rate of

$H^{1,1}(\mathbb{R})$ [Reference Borghese, Jenkins and McLaughlin15]. In fact, they decay at the anomalously slow rate of  $O(T^{-\frac{1}{3}})$ (see Theorem 1.22 below) and there is no reason to expect that notions such as “soliton content” from inverse-scattering theory apply (see Remark 1.21).

$O(T^{-\frac{1}{3}})$ (see Theorem 1.22 below) and there is no reason to expect that notions such as “soliton content” from inverse-scattering theory apply (see Remark 1.21).

The main purpose of this paper is to present in one place all of the important properties of the family of general rogue waves of infinite order along with related computational methods. We therefore begin by properly defining these solutions.

1.1. Mathematical definition of general rogue waves of infinite order

In what follows, we denote by $\mathbf{G}^*$ the entry-wise complex conjugate (without transpose) of a matrix $\mathbf{G}$, and we use the following standard notation for the Pauli spin matrices:

\begin{equation*}

\sigma_1 := \begin{bmatrix} 0 & 1 \\ 1 & 0\end{bmatrix},\quad \sigma_2 := \begin{bmatrix} 0 & -{\mathrm{i}} \\ {\mathrm{i}} & 0\end{bmatrix},\quad \sigma_3:=\begin{bmatrix} 1 & 0 \\ 0 & -1\end{bmatrix}.

\end{equation*}

General rogue waves of infinite order are defined in terms of the following Riemann–Hilbert problem.

Riemann–Hilbert Problem 1.

Let $(X,T)\in\mathbb{R}^2$ and B > 0 be fixed and let $\mathbf{G}$ be a $2\times 2$ matrix satisfying $\det(\mathbf{G})=1$ and $\mathbf{G}^*=\sigma_2\mathbf{G}\sigma_2$. Find a $2\times 2$ matrix $\mathbf{P}(\Lambda;X,T,\mathbf{G},{B})$ with the following properties:

• Analyticity:

$\mathbf{P}(\Lambda;X,T,\mathbf{G},{B})$ is analytic in Λ for

$\mathbf{P}(\Lambda;X,T,\mathbf{G},{B})$ is analytic in Λ for  $|\Lambda|\neq 1$, and it takes continuous boundary values on the clockwise-oriented unit circle from the interior and exterior.

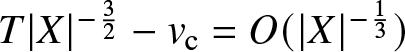

$|\Lambda|\neq 1$, and it takes continuous boundary values on the clockwise-oriented unit circle from the interior and exterior.• Jump condition: The boundary valuesFootnote 1 on the unit circle are related as follows:

\begin{align}
\mathbf{P}_+(\Lambda;X,T,\mathbf{G},{B})=\mathbf{P}_-(\Lambda;X,T,\mathbf{G},{B})
{\mathrm{e}}^{-{\mathrm{i}}(\Lambda X+\Lambda^2T+2 {B} \Lambda^{-1})\sigma_3}\mathbf{G}
{\mathrm{e}}^{{\mathrm{i}}(\Lambda X+\Lambda^2T+2{B} \Lambda^{-1})\sigma_3},\quad |\Lambda|=1. \tag{1.4}
\end{align}

• Normalization: $\mathbf{P}(\Lambda;X,T,\mathbf{G},{B})\to\mathbb{I}$ as $\Lambda\to\infty$.
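Because ${\mathrm{e}}^{\mp{\mathrm{i}}\vartheta\sigma_3}$ is diagonal, the conjugation in (1.4) leaves the diagonal entries of $\mathbf{G}$ unchanged and multiplies the $(1,2)$ and $(2,1)$ entries by ${\mathrm{e}}^{-2{\mathrm{i}}\vartheta}$ and ${\mathrm{e}}^{2{\mathrm{i}}\vartheta}$ respectively, where (our notation, introduced only here) $\vartheta:=\Lambda X+\Lambda^2T+2B\Lambda^{-1}$; in particular the jump matrix has unit determinant. A minimal Python sketch of the jump matrix, assuming $\mathbf{G}$ is supplied as a $2\times 2$ nested list, is below; `jump_matrix` is a hypothetical helper and is not part of RogueWaveInfiniteNLS.jl.

```python
import cmath
import math


def jump_matrix(lam: complex, X: float, T: float, G, B: float = 1.0):
    """Jump matrix of (1.4): exp(-i*theta*sigma3) @ G @ exp(i*theta*sigma3).

    theta = lam*X + lam**2*T + 2*B/lam, and G is a 2x2 nested list.
    Only the off-diagonal entries are modified by the diagonal conjugation.
    """
    theta = lam * X + lam**2 * T + 2 * B / lam
    return [
        [G[0][0], G[0][1] * cmath.exp(-2j * theta)],
        [G[1][0] * cmath.exp(2j * theta), G[1][1]],
    ]


def det2(M) -> complex:
    """Determinant of a 2x2 nested list."""
    return M[0][0] * M[1][1] - M[0][1] * M[1][0]


if __name__ == "__main__":
    eta = 0.3  # a real rotation has det 1 and satisfies G^* = sigma2 G sigma2
    G = [[math.cos(eta), math.sin(eta)], [-math.sin(eta), math.cos(eta)]]
    for k in range(8):
        lam = cmath.exp(2j * math.pi * k / 8)  # sample points on the unit circle
        V = jump_matrix(lam, X=1.0, T=0.5, G=G)
        print(k, abs(det2(V) - 1))
```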

In general, any matrix $\mathbf{G}$ satisfying $\det(\mathbf{G})=1$ and $\sigma_2 \mathbf{G}^* \sigma_2 = \mathbf{G}$ as in Riemann–Hilbert Problem 1 can be written as

\begin{align}

\mathbf{G}=\mathbf{G}(a,b)=\frac{1}{\sqrt{|a|^{2}+|b|^{2}}}\begin{bmatrix}

a & b^{*} \\

-b & a^{*}

\end{bmatrix}

\end{align}

for complex numbers $a,b$ not both zero.
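The parametrization (1.5) is easy to check directly: $\det\mathbf{G}(a,b)=(|a|^2+|b|^2)/(|a|^2+|b|^2)=1$, and for any $2\times 2$ matrix $\mathbf{M}$ one has $\sigma_2\mathbf{M}\sigma_2=\left[\begin{smallmatrix}M_{22}&-M_{21}\\-M_{12}&M_{11}\end{smallmatrix}\right]$, which applied to $\mathbf{G}(a,b)^*$ returns $\mathbf{G}(a,b)$. The following Python sketch, with hypothetical helper names, verifies both properties numerically for a few sample values of $a,b$, and shows that a unimodular matrix lacking the symmetry is rejected.

```python
import math

SIGMA2 = [[0, -1j], [1j, 0]]


def G_ab(a: complex, b: complex):
    """The matrix G(a, b) of (1.5); a and b must not both vanish."""
    a, b = complex(a), complex(b)
    n = math.sqrt(abs(a) ** 2 + abs(b) ** 2)
    if n == 0:
        raise ValueError("a and b must not both be zero")
    return [[a / n, b.conjugate() / n], [-b / n, a.conjugate() / n]]


def matmul(A, B):
    """Product of two 2x2 nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def conj(M):
    """Entry-wise complex conjugate (no transpose)."""
    return [[complex(z).conjugate() for z in row] for row in M]


def det2(M) -> complex:
    return M[0][0] * M[1][1] - M[0][1] * M[1][0]


def has_rhp_symmetry(M, tol: float = 1e-12) -> bool:
    """Check det(M) = 1 and sigma2 M^* sigma2 = M up to the tolerance tol."""
    S = matmul(matmul(SIGMA2, conj(M)), SIGMA2)
    same = all(abs(S[i][j] - M[i][j]) < tol for i in range(2) for j in range(2))
    return same and abs(det2(M) - 1) < tol


if __name__ == "__main__":
    for a, b in [(1, 1), (2 - 1j, 0.5j), (0, 3), (1e-3, -4 + 2j)]:
        print(a, b, has_rhp_symmetry(G_ab(a, b)))
    print(has_rhp_symmetry([[1, 1], [0, 1]]))  # det 1 but lacks the symmetry
```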

It is a consequence of the conditions on G and the analytic dependence of the jump matrix on (X, T) that the following holds.

Proposition 1.1 (Global existence)

For each $(X,T)\in\mathbb{R}^2$ there exists a unique solution to Riemann–Hilbert Problem 1, and the solution depends real-analytically on $(X,T)\in\mathbb{R}^2$ and on the real and imaginary parts of the parameters $a,b$ in the entries of $\mathbf{G}$.

This follows from Zhou’s vanishing lemma [Reference Zhou48, Theorem 9.3] and analytic Fredholm theory. The special solution $\Psi(X,T)=\Psi(X,T;\mathbf{G}, {B})$ of (1.3) is defined in terms of the solution of Riemann–Hilbert Problem 1 by

\begin{align}

\Psi(X,T;\mathbf{G},{B}):= 2{\mathrm{i}} \lim_{\Lambda\to\infty}\Lambda P_{12}(\Lambda;X,T,\mathbf{G},{B}).

\end{align}

This is in general a transcendental solution of the NLS equation; therefore its quantitative properties, the qualitative nature of its profile (for instance, what boundary conditions are satisfied as $X\to\pm\infty$), and how these depend on parameters are not immediately clear.

The function $\Psi(X,T;\mathbf{G},{B})$ was first studied by Suleimanov [Reference Suleimanov33] and independently by the authors with L. Ling [Reference Bilman, Ling and Miller8] for the special case of

\begin{align}

\mathbf{G}=\mathbf{Q}^{-1},\qquad \mathbf{Q}:=\frac{1}{\sqrt{2}}\begin{bmatrix}1& -1 \\ 1 & 1 \end{bmatrix},

\end{align}

which corresponds to the choice $a=b=1$ (or, equivalently, $a=b$ equal to any positive number) in (1.5).
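A short Python check (hypothetical helper names; standard library only) confirms that $\mathbf{Q}^{-1}$ coincides with $\mathbf{G}(1,1)$ from (1.5), and that replacing $a=b=1$ by any other positive common value leaves $\mathbf{G}(a,b)$ unchanged, because (1.5) is invariant under multiplying both $a$ and $b$ by the same positive number.

```python
import math


def G_ab(a: float, b: float):
    """G(a, b) of (1.5), restricted here to real a, b (so b* = b)."""
    n = math.hypot(a, b)
    return [[a / n, b / n], [-b / n, a / n]]


def inverse2(M):
    """Inverse of a 2x2 nested list via the adjugate formula."""
    det = M[0][0] * M[1][1] - M[0][1] * M[1][0]
    return [[M[1][1] / det, -M[0][1] / det], [-M[1][0] / det, M[0][0] / det]]


s = 1 / math.sqrt(2)
Q = [[s, -s], [s, s]]  # the matrix Q defined above

if __name__ == "__main__":
    print(inverse2(Q))
    print(G_ab(1.0, 1.0))
    print(G_ab(7.5, 7.5))
```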

In order to study properties of the special solution $\Psi(X,T;\mathbf{G},{B})$ and how they depend on parameters, three approaches come to mind: (i) investigate its exact properties, including symmetries, special values, differential equations satisfied, and equivalent representations; (ii) work in a variety of interesting asymptotic regimes to obtain rigorous approximations to $\Psi(X,T;\mathbf{G},{B})$; and (iii) compute $\Psi(X,T;\mathbf{G},{B})$ accurately in the (X, T)-plane by a suitable numerical method. In this paper, we use all three approaches.

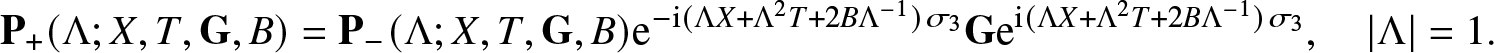

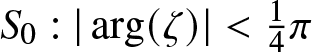

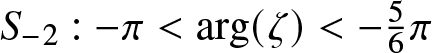

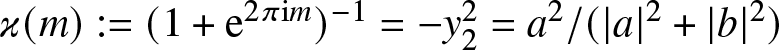

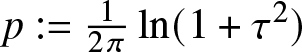

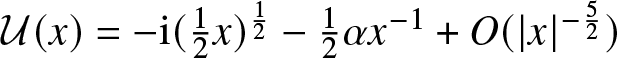

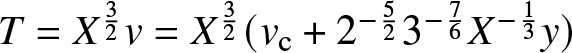

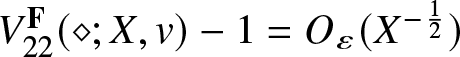

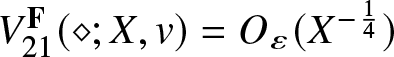

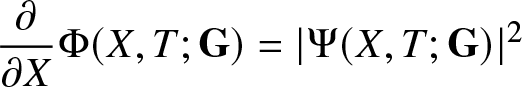

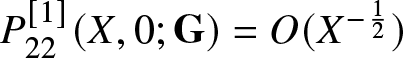

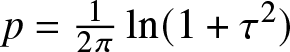

In the rest of this introduction, we summarize our results in the three areas mentioned above. To set the scene, plots of $\Psi(X,T;\mathbf{G},{B})$ computed with RogueWaveInfiniteNLS.jl with $a=b={B}=1$ are shown in Figure 1. RogueWaveInfiniteNLS.jl is a software package for the Julia programming language, developed as part of this work, that computes rogue waves of infinite order through numerical solution of suitable Riemann–Hilbert problems; see Section 1.4 below.

Figure 1. The solution $\Psi(X,T;\mathbf{G},{B})$ computed with RogueWaveInfiniteNLS.jl with $a=b={B}=1$. RogueWaveInfiniteNLS.jl is a software package developed in this work for the Julia programming language to compute rogue waves of infinite order through numerical solution of suitable Riemann–Hilbert problems.

1.2. Exact properties of Ψ

Here we describe the symmetries of $\Psi(X,T;\mathbf{G},{B})$ (Section 1.2.1), evaluate it and its derivative $\Psi_X$ at $(X,T)=(0,0)$ (Section 1.2.2), give its $L^2$-norm (Section 1.2.3), give partial and ordinary differential equations satisfied by $\Psi(X,T;\mathbf{G},{B})$ (Section 1.2.4), and give a new Fredholm determinant formula for the initial condition (Section 1.2.5).

1.2.1. Symmetries

In the setting where $\Psi(X,T;\mathbf{G},{B})$ arises from the joint near-field/high-order limit of rogue-wave solutions of (1.1), the parameter B > 0 has the interpretation of the amplitude of the background wave supporting the rogue waves. However, it is not hard to see that the dependence on B > 0 can be scaled out of Ψ by the scaling invariance $\Psi(X,T;\mathbf{G},{B}) \mapsto {B}^{-1} \Psi({B}^{-1}X, {B}^{-2} T;\mathbf{G},{B})$ of the focusing NLS equation (1.3).

Proposition 1.2 (Scaling symmetry)

Given $\mathbf{G}$ with $\det(\mathbf{G})=1$ and $\mathbf{G}^*=\sigma_2\mathbf{G}\sigma_2$, for each B > 0 and $(X,T)\in\mathbb{R}^2$, we have

\begin{align*}
\Psi(X,T;\mathbf{G},{B})={B}\,\Psi({B}X,{B}^2T;\mathbf{G},1).
\end{align*}

We give a proof in Appendix A. In this paper we make use of Proposition 1.2 and take B = 1. Accordingly, we write

\begin{align*}
\Psi(X,T;\mathbf{G}):=\Psi(X,T;\mathbf{G},1)
\end{align*}

to denote the special solution under study, and similarly we generally omit B = 1 from the argument list of $\mathbf{P}$ going forward. On the other hand, the dependence of $\Psi(X,T;\mathbf{G})$ on the $2\times 2$ matrix $\mathbf{G}$ is nontrivial.
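The scaling symmetry can be motivated directly from Riemann–Hilbert Problem 1. The following is only a formal sketch, not the proof given in Appendix A, and it glosses over the details of moving the jump contour. Substituting $\Lambda=B\lambda$ in the exponent of (1.4) gives

\begin{align*}
\Lambda X+\Lambda^2T+2B\Lambda^{-1}=\lambda(BX)+\lambda^2(B^2T)+2\lambda^{-1},
\end{align*}

so $\widetilde{\mathbf{P}}(\lambda):=\mathbf{P}(B\lambda;X,T,\mathbf{G},B)$ satisfies the jump condition of Riemann–Hilbert Problem 1 with $(X,T,B)$ replaced by $(BX,B^2T,1)$, but on the clockwise circle $|\lambda|=B^{-1}$ instead of $|\lambda|=1$. Since the jump matrix is analytic for $\lambda\in\mathbb{C}\setminus\{0\}$, the circle can be moved back to $|\lambda|=1$ without changing $\widetilde{\mathbf{P}}$ for large $|\lambda|$, and uniqueness (Proposition 1.1) then identifies $\widetilde{\mathbf{P}}$ there with $\mathbf{P}(\lambda;BX,B^2T,\mathbf{G},1)$. Inserting this into the definition of $\Psi$ as $2{\mathrm{i}}\lim_{\Lambda\to\infty}\Lambda P_{12}$ gives

\begin{align*}
\Psi(X,T;\mathbf{G},B)=2{\mathrm{i}}\lim_{\lambda\to\infty}B\lambda\,\widetilde{P}_{12}(\lambda)=B\,\Psi(BX,B^2T;\mathbf{G},1),
\end{align*}

equivalently $B^{-1}\Psi(B^{-1}X,B^{-2}T;\mathbf{G},B)=\Psi(X,T;\mathbf{G},1)$, so the scaling invariance noted above removes the dependence on $B$.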

We proceed with two observations that concern the symmetries with respect to reflections in the X variable and in the T variable.

Proposition 1.3 (Reflection in $X$)

$\Psi(X,T; \mathbf{G}(a,b)) = \Psi(-X,T; \mathbf{G}(b,a))$.

Similarly, we have

Proposition 1.4 (Reflection in $T$)

$\Psi(X,-T; \mathbf{G}(a,b)) = \Psi(X,T; \mathbf{G}(a,b)^*)^*$.

The proofs of Proposition 1.3 and Proposition 1.4 are in Appendix A. The next observation concerns a useful normalization of the parameters $a,b$; we have the following result, which is also proved in Appendix A.

Proposition 1.5 (Normalized parameters)

For all $(X,T)\in\mathbb{R}^2$ and $a,b\in\mathbb{C}$ with $ab\neq 0$,

\begin{align*}
\Psi(X,T;\mathbf{G}(a,b))={\mathrm{e}}^{-{\mathrm{i}}\arg(ab)}\Psi(X,T;\mathbf{G}(\mathfrak{a},\mathfrak{b})),
\end{align*}

where

\begin{align}

\mathfrak{a}:=\frac{|a|}{\sqrt{|a|^2+|b|^2}}\quad\text{and}\quad

\mathfrak{b}:=\frac{|b|}{\sqrt{|a|^2+|b|^2}}

\end{align}

satisfy $\mathfrak{a},\mathfrak{b} \gt 0$ with $\mathfrak{a}^2+\mathfrak{b}^2=1$.

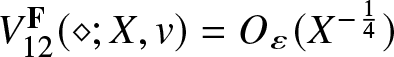

Therefore, up to a phase factor, there is just one real parameter in the family of solutions $\Psi(X,T;\mathbf{G})$ with $ab\neq 0$, which one could take as $\mathfrak{a}\in (0,1)$, or equivalently as an angle $\eta\in (0,\frac{1}{2}\pi)$ for which $\mathfrak{a}=\cos(\eta)$ and $\mathfrak{b}=\sin(\eta)$. The coordinate η was used, for example, in the analysis of [Reference Li and Miller24, Section 2.3]. Combining Proposition 1.4 and Proposition 1.5 for T = 0 shows that

\begin{align}

\begin{split}

\Psi(X,0;\mathbf{G}(a,b))&={\mathrm{e}}^{-{\mathrm{i}}\arg(ab)}\Psi(X,0;\mathbf{G}(\mathfrak{a},\mathfrak{b}))\\ &={\mathrm{e}}^{-{\mathrm{i}}\arg(ab)}\Psi(X,0;\mathbf{G}(\mathfrak{a},\mathfrak{b}))^*\\ &={\mathrm{e}}^{-2{\mathrm{i}}\arg(ab)}\Psi(X,0;\mathbf{G}(a,b))^*

\end{split}

\end{align}

where the second equality uses Proposition 1.4 at T = 0 together with the fact that $\mathbf{G}(\mathfrak{a},\mathfrak{b})$ is a real matrix, and the third uses Proposition 1.5 once more. It follows that $X\mapsto {\mathrm{e}}^{{\mathrm{i}}\arg(ab)}\Psi(X,0;\mathbf{G}(a,b))$ is a real-valued function of $X\in\mathbb{R}$.
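The reduction to normalized parameters is elementary to compute. The Python sketch below (hypothetical function name; standard library only) maps $a,b$ with $ab\neq 0$ to $\mathfrak{a}$ and $\mathfrak{b}$ as defined above, to the angle $\eta\in(0,\frac{1}{2}\pi)$ with $\mathfrak{a}=\cos(\eta)$ and $\mathfrak{b}=\sin(\eta)$, and to the phase $\arg(ab)$ appearing in Proposition 1.5. It uses the principal branch returned by `cmath.phase`; the choice of branch does not affect the factor ${\mathrm{e}}^{{\mathrm{i}}\arg(ab)}$ that makes $\Psi(X,0;\mathbf{G}(a,b))$ real.

```python
import cmath
import math


def normalized_parameters(a: complex, b: complex):
    """Return (frak_a, frak_b, eta, phase) for complex a, b with ab != 0.

    frak_a = |a| / sqrt(|a|^2 + |b|^2), frak_b = |b| / sqrt(|a|^2 + |b|^2),
    eta in (0, pi/2) with cos(eta) = frak_a and sin(eta) = frak_b,
    phase = arg(ab) on the principal branch (-pi, pi] given by cmath.phase.
    """
    if a == 0 or b == 0:
        raise ValueError("requires ab != 0")
    norm = math.hypot(abs(a), abs(b))
    frak_a, frak_b = abs(a) / norm, abs(b) / norm
    eta = math.atan2(frak_b, frak_a)
    phase = cmath.phase(a * b)
    return frak_a, frak_b, eta, phase


if __name__ == "__main__":
    print(normalized_parameters(1, 1))  # symmetric case a = b
    print(normalized_parameters(2j, -1 + 1j))
```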

The normalized parameters $\mathfrak{a} \gt 0$ and $\mathfrak{b} \gt 0$ with $\mathfrak{a}^2+\mathfrak{b}^2=1$ will be used in the proofs of our asymptotic results, described in Section 1.3 below. So that they are available later, we record here the following four standard matrix factorizations of the central factor $\mathbf{G}(\mathfrak{a},\mathfrak{b})$ in the jump matrix (1.4), each manipulated further so that a diagonal matrix is the leftmost factor:

\begin{align}
\mathbf{G}(\mathfrak{a},\mathfrak{b})=\begin{bmatrix}\mathfrak{a} & \mathfrak{b}\\-\mathfrak{b} & \mathfrak{a}\end{bmatrix}=\mathfrak{a}^{\sigma_3}\begin{bmatrix}1 & 0\\-\mathfrak{ab} & 1\end{bmatrix}\begin{bmatrix}1 & \displaystyle\frac{\mathfrak{b}}{\mathfrak{a}}\\0 & 1\end{bmatrix},\quad \text{(\unicode{x201C}LDU\unicode{x201D})},
\end{align}

\begin{align}

\mathbf{G}(\mathfrak{a},\mathfrak{b})=\begin{bmatrix}\mathfrak{a} & \mathfrak{b}\\-\mathfrak{b} & \mathfrak{a}\end{bmatrix}=\mathfrak{a}^{-\sigma_3}\begin{bmatrix}1 & \mathfrak{ab}\\0 & 1\end{bmatrix}\begin{bmatrix}1 & 0\\\displaystyle-\frac{\mathfrak{b}}{\mathfrak{a}} & 1\end{bmatrix},\quad\text{(\unicode{x201C}UDL\unicode{x201D})},

\end{align}

\begin{align}

\mathbf{G}(\mathfrak{a},\mathfrak{b})=\begin{bmatrix}\mathfrak{a} & \mathfrak{b}\\-\mathfrak{b} & \mathfrak{a}\end{bmatrix}=\mathfrak{a}^{-\sigma_3}\begin{bmatrix}1 & \mathfrak{ab}\\0 & 1\end{bmatrix}\begin{bmatrix}1 & 0\\\displaystyle-\frac{\mathfrak{b}}{\mathfrak{a}} & 1\end{bmatrix},\quad\text{(\unicode{x201C}UDL\unicode{x201D})},

\end{align} \begin{align}

\mathbf{G}(\mathfrak{a},\mathfrak{b})=\begin{bmatrix}\mathfrak{a} & \mathfrak{b}\\-\mathfrak{b} & \mathfrak{a}\end{bmatrix}=\mathfrak{a}^{\sigma_3}\begin{bmatrix}1&0\\

\displaystyle\frac{\mathfrak{a}^3}{\mathfrak{b}} & 1\end{bmatrix}

\begin{bmatrix}0&\displaystyle \frac{\mathfrak{b}}{\mathfrak{a}}\\\displaystyle -\frac{\mathfrak{a}}{\mathfrak{b}} & 0\end{bmatrix}

\begin{bmatrix}1 & 0\\\displaystyle\frac{\mathfrak{a}}{\mathfrak{b}} & 1\end{bmatrix},\quad

\text{(\unicode{x201C}LTL\unicode{x201D})},

\end{align}

\begin{align}

\mathbf{G}(\mathfrak{a},\mathfrak{b})=\begin{bmatrix}\mathfrak{a} & \mathfrak{b}\\-\mathfrak{b} & \mathfrak{a}\end{bmatrix}=\mathfrak{a}^{\sigma_3}\begin{bmatrix}1&0\\

\displaystyle\frac{\mathfrak{a}^3}{\mathfrak{b}} & 1\end{bmatrix}

\begin{bmatrix}0&\displaystyle \frac{\mathfrak{b}}{\mathfrak{a}}\\\displaystyle -\frac{\mathfrak{a}}{\mathfrak{b}} & 0\end{bmatrix}

\begin{bmatrix}1 & 0\\\displaystyle\frac{\mathfrak{a}}{\mathfrak{b}} & 1\end{bmatrix},\quad

\text{(\unicode{x201C}LTL\unicode{x201D})},

\end{align} \begin{align}

\mathbf{G}(\mathfrak{a},\mathfrak{b})=\begin{bmatrix}\mathfrak{a} & \mathfrak{b}\\-\mathfrak{b} & \mathfrak{a}\end{bmatrix}=\mathfrak{a}^{-\sigma_3}\begin{bmatrix}1 & \displaystyle -\frac{\mathfrak{a}^3}{\mathfrak{b}}\\0 & 1\end{bmatrix}

\begin{bmatrix}0 & \displaystyle\frac{\mathfrak{a}}{\mathfrak{b}}\\\displaystyle-\frac{\mathfrak{b}}{\mathfrak{a}} & 0\end{bmatrix}\begin{bmatrix}1 & \displaystyle-\frac{\mathfrak{a}}{\mathfrak{b}}\\0 & 1\end{bmatrix},\quad \text{(\unicode{x201C}UTU\unicode{x201D})}.

\end{align}
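The four factorizations can be confirmed by multiplying out the right-hand sides, using $\mathfrak{a}^{\pm\sigma_3}=\operatorname{diag}(\mathfrak{a}^{\pm1},\mathfrak{a}^{\mp1})$ and $\mathfrak{a}^2+\mathfrak{b}^2=1$. The following is a minimal numerical sketch of that check (not part of the original argument). It samples $(\mathfrak{a},\mathfrak{b})=(\cos\theta,\sin\theta)$ for a few values of $\theta$. The helper names `matmul`, `diag_pow`, `factorizations` and `max_error` are hypothetical.

```python
import math


def matmul(*ms):
    """Multiply 2x2 matrices (nested lists) from left to right."""
    out = [[1.0, 0.0], [0.0, 1.0]]
    for m in ms:
        out = [[sum(out[i][k] * m[k][j] for k in range(2)) for j in range(2)]
               for i in range(2)]
    return out


def diag_pow(a, s):
    """a**(s*sigma_3) = diag(a**s, a**(-s)) for s = +1 or -1."""
    return [[a ** s, 0.0], [0.0, a ** (-s)]]


def factorizations(a, b):
    """Right-hand sides of the LDU, UDL, LTL and UTU factorizations (a, b stand for frak-a, frak-b)."""
    return {
        "LDU": matmul(diag_pow(a, 1), [[1, 0], [-a * b, 1]], [[1, b / a], [0, 1]]),
        "UDL": matmul(diag_pow(a, -1), [[1, a * b], [0, 1]], [[1, 0], [-b / a, 1]]),
        "LTL": matmul(diag_pow(a, 1), [[1, 0], [a ** 3 / b, 1]],
                      [[0, b / a], [-a / b, 0]], [[1, 0], [a / b, 1]]),
        "UTU": matmul(diag_pow(a, -1), [[1, -a ** 3 / b], [0, 1]],
                      [[0, a / b], [-b / a, 0]], [[1, -a / b], [0, 1]]),
    }


def max_error(a, b):
    """Largest entrywise deviation of any factorization from G(a, b) = [[a, b], [-b, a]]."""
    G = [[a, b], [-b, a]]
    return max(abs(F[i][j] - G[i][j])
               for F in factorizations(a, b).values()
               for i in range(2) for j in range(2))


# Sample normalized parameters a = cos(theta), b = sin(theta) with 0 < theta < pi/2.
for theta in (0.1, 0.5, 1.0, 1.4):
    print(f"theta = {theta}: max error = {max_error(math.cos(theta), math.sin(theta)):.1e}")
```

Every deviation should be at the level of rounding error. All four identities rely on the normalization $\mathfrak{a}^2+\mathfrak{b}^2=1$, which is why the sketch samples points on the unit circle.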

1.2.2. $\Psi(X,0;\mathbf{G})$ near $X=0$

It is straightforward to solve Riemann–Hilbert Problem 1 explicitly when $(X,T)=(0,0)$. Indeed, one can verify directly that the solution is

\begin{align}

\mathbf{P}(\Lambda;0,0,\mathbf{G})

=\begin{cases}

\mathbf{G}^{-1},& |\Lambda| \lt 1,\\

\mathbf{G}^{-1}{\mathrm{e}}^{-2{\mathrm{i}}\Lambda^{-1}\sigma_3}\mathbf{G}{\mathrm{e}}^{2{\mathrm{i}}\Lambda^{-1}\sigma_3},&|\Lambda| \gt 1.

\end{cases}

\end{align}

Then, assuming $|\Lambda| \gt 1$, it follows from this formula that $\mathbf{P}(\Lambda;0,0,\mathbf{G})=\mathbb{I} + (2{\mathrm{i}}\sigma_3 - 2{\mathrm{i}}\mathbf{G}^{-1}\sigma_3\mathbf{G})\Lambda^{-1} + O(\Lambda^{-2})$ as $\Lambda\to\infty$. Therefore (1.6) yields the following.

Theorem 1.6 (Value at the origin)

\begin{align}

\Psi(0,0;\mathbf{G})=4\left(\mathbf{G}^{-1}\sigma_3\mathbf{G}\right)_{12} = 8\mathfrak{a}\mathfrak{b}{\mathrm{e}}^{-{\mathrm{i}}\arg(ab)}=\frac{8a^*b^*}{|a|^2+|b|^2}.

\end{align}
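Theorem 1.6 can be cross-checked numerically from the explicit solution (1.17). For large $|\Lambda|$, $2{\mathrm{i}}\Lambda P_{12}(\Lambda;0,0,\mathbf{G})$ should approach $4(\mathbf{G}^{-1}\sigma_3\mathbf{G})_{12}$. The sketch below assumes, because (1.5) is not restated here, that $\mathbf{G}(a,b)=(|a|^2+|b|^2)^{-1/2}\begin{bmatrix}a & b^*\\-b & a^*\end{bmatrix}$. This form is consistent with the closed forms in Theorem 1.6. The sketch also checks that ${\mathrm{e}}^{{\mathrm{i}}\arg(ab)}\Psi(0,0;\mathbf{G}(a,b))$ is real, as established above. The sample values of $a$, $b$, the cutoff $\Lambda=10^6$, and all function names are illustrative choices.

```python
import cmath
import math

SIGMA3 = [[1, 0], [0, -1]]


def matmul(*ms):
    """Multiply 2x2 matrices (nested lists) from left to right."""
    out = [[1, 0], [0, 1]]
    for m in ms:
        out = [[sum(out[i][k] * m[k][j] for k in range(2)) for j in range(2)]
               for i in range(2)]
    return out


def inv2(m):
    """Inverse of a 2x2 matrix."""
    (p, q), (r, s) = m
    det = p * s - q * r
    return [[s / det, -q / det], [-r / det, p / det]]


def G_of(a, b):
    """ASSUMED form of G(a,b): [[a, b*], [-b, a*]] / sqrt(|a|^2 + |b|^2)."""
    n = math.sqrt(abs(a) ** 2 + abs(b) ** 2)
    return [[a / n, b.conjugate() / n], [-b / n, a.conjugate() / n]]


def exp_sigma3(z):
    """exp(z * sigma_3) = diag(e^z, e^-z)."""
    return [[cmath.exp(z), 0], [0, cmath.exp(-z)]]


def psi00_from_exact_solution(G, lam=1e6):
    """2i*Lambda*P_12 at large Lambda, with P = G^{-1} e^{-2i sigma_3/Lambda} G e^{2i sigma_3/Lambda}."""
    P = matmul(inv2(G), exp_sigma3(-2j / lam), G, exp_sigma3(2j / lam))
    return 2j * lam * P[0][1]


def psi00_matrix_formula(G):
    """4 (G^{-1} sigma_3 G)_{12}, the first expression in Theorem 1.6."""
    return 4 * matmul(inv2(G), SIGMA3, G)[0][1]


a, b = 0.7 - 0.4j, 1.3 + 0.9j
closed = 8 * a.conjugate() * b.conjugate() / (abs(a) ** 2 + abs(b) ** 2)
G = G_of(a, b)
print(psi00_from_exact_solution(G), psi00_matrix_formula(G), closed)
# e^{i arg(ab)} Psi(0,0;G(a,b)) should be real and positive (it equals 8 frak-a frak-b):
print(cmath.exp(1j * cmath.phase(a * b)) * closed)
```

For $|\Lambda| \gt 1$, (1.17) in fact gives exactly $2{\mathrm{i}}\Lambda P_{12}(\Lambda;0,0,\mathbf{G})=\tfrac{1}{2}\Lambda\sin(2\Lambda^{-1}){\mathrm{e}}^{-2{\mathrm{i}}\Lambda^{-1}}\Psi(0,0;\mathbf{G})$. The relative error of the large-$\Lambda$ estimate is therefore about $2/\Lambda$.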

Following [Reference Li and Miller24, Section 2.3.3], it is then a systematic matter to calculate derivatives of $\mathbf{P}(\Lambda;X,0,\mathbf{G})$ with respect to $X$ at $X=0$. For instance, set $\mathbf{F}(\Lambda;X):=\mathbf{P}_X(\Lambda;X,0,\mathbf{G})\mathbf{P}(\Lambda;X,0,\mathbf{G})^{-1}$. Then $\Lambda\mapsto\mathbf{F}(\Lambda;X)$ is analytic for $|\Lambda|\neq 1$, and $\mathbf{F}(\Lambda;X)\to \mathbf{0}$ as $\Lambda\to\infty$. Moreover, differentiating the jump condition $\mathbf{P}_+(\Lambda;X,0,\mathbf{G})=\mathbf{P}_-(\Lambda;X,0,\mathbf{G})\mathbf{V}(\Lambda;X)$ with respect to $X$ shows that also

\begin{align}

\mathbf{F}_+(\Lambda;X)=\mathbf{F}_-(\Lambda;X)+\mathbf{P}_-(\Lambda;X,0,\mathbf{G})\mathbf{V}_X(\Lambda;X)\mathbf{V}(\Lambda;X)^{-1}\mathbf{P}_-(\Lambda;X,0,\mathbf{G})^{-1},\quad |\Lambda|=1.

\end{align}

It follows that (using the Plemelj formula and taking into account the clockwise orientation of the jump contour)

\begin{align}

\begin{split}

\mathbf{F}(\Lambda;X)&=-\frac{1}{2\pi{\mathrm{i}}}\oint_{|\mu|=1}\frac{\mathbf{P}_-(\mu;X,0,\mathbf{G})\mathbf{V}_X(\mu;X)\mathbf{V}(\mu;X)^{-1}\mathbf{P}_-(\mu;X,0,\mathbf{G})^{-1}}{\mu-\Lambda}\, \mathrm{d}\mu\\

&=\frac{1}{2\pi{\mathrm{i}}\Lambda}\oint_{|\mu|=1}\mathbf{P}_-(\mu;X,0,\mathbf{G})\mathbf{V}_X(\mu;X)\mathbf{V}(\mu;X)^{-1}\mathbf{P}_-(\mu;X,0,\mathbf{G})^{-1}\, \mathrm{d}\mu + O(\Lambda^{-2})

\end{split}

\end{align}

as $\Lambda\to\infty$. On both lines the integration contour has counterclockwise orientation, and $\mathbf{P}_-(\mu;X,0,\mathbf{G})$ refers to the boundary value taken from the interior of the unit circle. According to (1.4), the jump matrix is $\mathbf{V}(\Lambda;X):={\mathrm{e}}^{-{\mathrm{i}}(\Lambda X + 2\Lambda^{-1})\sigma_3}\mathbf{G}{\mathrm{e}}^{{\mathrm{i}} (\Lambda X+2\Lambda^{-1})\sigma_3}$, so we get that

\begin{align}

\mathbf{V}_X(\Lambda;0)\mathbf{V}(\Lambda;0)^{-1}=-{\mathrm{i}}\Lambda\sigma_3 +{\mathrm{i}}\Lambda {\mathrm{e}}^{-2{\mathrm{i}}\Lambda^{-1}\sigma_3}\mathbf{G}\sigma_3\mathbf{G}^{-1}{\mathrm{e}}^{2{\mathrm{i}}\Lambda^{-1}\sigma_3},

\end{align}

and according to (1.17) we have $\mathbf{P}_-(\Lambda;0,0,\mathbf{G})=\mathbf{G}^{-1}$. Therefore, as $\Lambda\to\infty$,

\begin{align}

\mathbf{F}(\Lambda;0)=\frac{1}{2\pi{\mathrm{i}}\Lambda}\oint_{|\mu|=1}\left[-{\mathrm{i}}\mu\mathbf{G}^{-1}\sigma_3\mathbf{G} + {\mathrm{i}}\mu \mathbf{G}^{-1}{\mathrm{e}}^{-2{\mathrm{i}}\mu^{-1}\sigma_3}\mathbf{G}\sigma_3\mathbf{G}^{-1}{\mathrm{e}}^{2{\mathrm{i}}\mu^{-1}\sigma_3}\mathbf{G}\right]\, \mathrm{d}\mu + O(\Lambda^{-2}).

\end{align}

The first term vanishes by Cauchy’s theorem. The second term can be evaluated by residues at $\mu=\infty$, using the expansion ${\mathrm{e}}^{\pm 2{\mathrm{i}}\mu^{-1}\sigma_3}=\mathbb{I} \pm 2{\mathrm{i}}\sigma_3\mu^{-1}-2\mathbb{I}\mu^{-2} + O(\mu^{-3})$ as $\mu\to\infty$. The result is that

\begin{align}

\mathbf{F}(\Lambda;0)=\left[4{\mathrm{i}}\mathbf{G}^{-1}\sigma_3\mathbf{G}\sigma_3\mathbf{G}^{-1}\sigma_3\mathbf{G}-4{\mathrm{i}}\sigma_3\right]\Lambda^{-1}+O(\Lambda^{-2}),\quad\Lambda\to\infty.

\end{align}

Differentiation of (1.6) then yields

\begin{align}

\Psi_X(0,0;\mathbf{G}) = 2{\mathrm{i}}\lim_{\Lambda\to\infty}\Lambda\frac{\partial P_{12}}{\partial X}(\Lambda;0,0,\mathbf{G}) = 2{\mathrm{i}}\lim_{\Lambda\to\infty}\Lambda F_{12}(\Lambda;0) = -8\left[\mathbf{G}^{-1}\sigma_3\mathbf{G}\sigma_3\mathbf{G}^{-1}\sigma_3\mathbf{G}\right]_{12}.

\end{align}

Explicit evaluation using (1.5) then yields the following result.

Theorem 1.7 (Derivative at the origin)

\begin{align}

\Psi_X(0,0;\mathbf{G})=32{\mathrm{e}}^{-{\mathrm{i}}\arg(ab)}\mathfrak{a}\mathfrak{b} (\mathfrak{b}^2-\mathfrak{a}^2)=32a^*b^*\frac{|b|^2-|a|^2}{(|a|^2+|b|^2)^2}.

\end{align}
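The residue computation leading to Theorem 1.7 can also be checked directly. On $|\mu|=1$ we have $\mathrm{d}\mu={\mathrm{i}}\mu\,\mathrm{d}\theta$ with $\mu={\mathrm{e}}^{{\mathrm{i}}\theta}$. Hence $\lim_{\Lambda\to\infty}\Lambda\mathbf{F}(\Lambda;0)$ is the average over $\theta\in[0,2\pi)$ of $\mu$ times the bracketed integrand in the formula for $\mathbf{F}(\Lambda;0)$. The periodic trapezoidal rule converges extremely fast for such analytic integrands. The sketch below compares the quadrature with $4{\mathrm{i}}\mathbf{G}^{-1}\sigma_3\mathbf{G}\sigma_3\mathbf{G}^{-1}\sigma_3\mathbf{G}-4{\mathrm{i}}\sigma_3$ and with the closed form in Theorem 1.7. It assumes the form $\mathbf{G}(a,b)=(|a|^2+|b|^2)^{-1/2}\begin{bmatrix}a & b^*\\-b & a^*\end{bmatrix}$, since (1.5) is not restated here. The node count $n=64$, the sample $a$, $b$, and the function names are arbitrary illustrative choices.

```python
import cmath
import math

SIGMA3 = [[1, 0], [0, -1]]


def matmul(*ms):
    """Multiply 2x2 matrices (nested lists) from left to right."""
    out = [[1, 0], [0, 1]]
    for m in ms:
        out = [[sum(out[i][k] * m[k][j] for k in range(2)) for j in range(2)]
               for i in range(2)]
    return out


def inv2(m):
    (p, q), (r, s) = m
    det = p * s - q * r
    return [[s / det, -q / det], [-r / det, p / det]]


def G_of(a, b):
    """ASSUMED form of G(a,b): [[a, b*], [-b, a*]] / sqrt(|a|^2 + |b|^2)."""
    n = math.sqrt(abs(a) ** 2 + abs(b) ** 2)
    return [[a / n, b.conjugate() / n], [-b / n, a.conjugate() / n]]


def exp_sigma3(z):
    return [[cmath.exp(z), 0], [0, cmath.exp(-z)]]


def lim_lambda_F(G, n=64):
    """(1/(2 pi i)) * counterclockwise integral over |mu| = 1 of
    -i mu G^{-1} s3 G + i mu G^{-1} e^{-2i s3/mu} G s3 G^{-1} e^{2i s3/mu} G,
    via the n-point periodic trapezoidal rule in theta (mu = e^{i theta})."""
    Gi = inv2(G)
    M = matmul(Gi, SIGMA3, G)        # G^{-1} sigma_3 G
    A = matmul(G, SIGMA3, Gi)        # G sigma_3 G^{-1}
    acc = [[0j, 0j], [0j, 0j]]
    for k in range(n):
        mu = cmath.exp(2j * math.pi * k / n)
        inner = matmul(Gi, exp_sigma3(-2j / mu), A, exp_sigma3(2j / mu), G)
        for i in range(2):
            for j in range(2):
                integrand = -1j * mu * M[i][j] + 1j * mu * inner[i][j]
                # d mu / (2 pi i) = mu d theta / (2 pi); each node carries weight 2 pi / n
                acc[i][j] += integrand * mu / n
    return acc


a, b = 0.7 - 0.4j, 1.3 + 0.9j
G = G_of(a, b)
C = lim_lambda_F(G)
M = matmul(inv2(G), SIGMA3, G)
predicted = matmul(M, SIGMA3, M)     # G^{-1} s3 G s3 G^{-1} s3 G
psi_x = 2j * C[0][1]                 # Psi_X(0,0;G) = 2i lim Lambda F_12(Lambda;0)
n2 = abs(a) ** 2 + abs(b) ** 2
closed = 32 * a.conjugate() * b.conjugate() * (abs(b) ** 2 - abs(a) ** 2) / n2 ** 2
print(psi_x, -8 * predicted[0][1], closed)
```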

1.2.3. Exceptional parameter values and $L^2$-norm

Another interesting result for the family $\Psi(X,T;\mathbf{G})$ of exact solutions of (1.3) concerns special values of the parameters.

Proposition 1.8 (Degeneration property)

If either $a=0$ or $b=0$, then $\Psi(X,T;\mathbf{G})\equiv 0$.

We give a proof of Proposition 1.8 in Appendix A. The proof relies on the fact that Riemann–Hilbert Problem 1 can be solved explicitly in either of the cases $a=0$ or $b=0$. By Proposition 1.1, the function $\Psi(X,T;\mathbf{G})$ depends continuously on the parameters $(a,b)$ for fixed $(X,T)\in\mathbb{R}^2$. Proposition 1.8 therefore also implies the pointwise limit $\Psi(X,T;\mathbf{G})\to 0$ as $a\to 0$ or $b\to 0$ for each $(X,T)\in\mathbb{R}^2$. This convergence can moreover be shown to be uniform for $(X,T)$ in any given compact subset of $\mathbb{R}^2$. To avoid trivial cases, from this point on in the paper we assume that $a,b$ are complex numbers with $ab\neq 0$.

Another result is that for general $\mathbf{G}(a,b)$ with $ab\neq 0$, $\Psi(X,T;\mathbf{G})$ lies in $L^2(\mathbb{R})$ as a function of $X$. Moreover, its $L^2(\mathbb{R})$-norm is independent of the parameter matrix $\mathbf{G}=\mathbf{G}(a,b)$. Namely, we prove the following theorem.

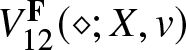

Theorem 1.9 ($L^2$-norm of $\Psi(\diamond,T;\mathbf{G})$)

Let $\mathbf{G}=\mathbf{G}(a,b)$ be as in (1.5) with $ab\neq 0$. Then $\Psi(\diamond, T; \mathbf{G})\in L^2(\mathbb{R})$ for all $T\in\mathbb{R}$, with $\| \Psi(\diamond, T;\mathbf{G} )\|_{L^2(\mathbb{R})}=\sqrt{8}$.

We prove Theorem 1.9 in Section 2.4, essentially as a corollary of Theorem 1.18 below. Combined with the degeneration property in Proposition 1.8, the independence of the $L^2$-norm of $\Psi(X,T;\mathbf{G})$ from the matrix $\mathbf{G}=\mathbf{G}(a,b)$ asserted in Theorem 1.9 leaves us with an interesting conundrum! While $\|\Psi(\diamond,T;\mathbf{G}(a,b))\|_{L^2(\mathbb{R})}=\sqrt{8}$ for any nonzero $a,b\in\mathbb{C}$, we have

\begin{align}

\lim_{a\to 0}\Psi(X,T;\mathbf{G}(a,b)) = 0\quad\text{and}\quad \lim_{b\to 0}\Psi(X,T;\mathbf{G}(a,b)) = 0

\end{align}

pointwise for any $(X,T)\in\mathbb{R}^2$. One possible mechanism for this limiting behaviour is that the $L^2$-mass of the wave packet $\Psi(X,T;\mathbf{G}(a,b))$ at any given time $T$ escapes to $X=\pm\infty$ in the limit $a\to 0$ or $b\to 0$. Another is that the wave packet spreads in the same limit, preserving the $L^2$-norm while still decaying pointwise, or perhaps even uniformly, to zero. In fact, it turns out that a combination of both mechanisms is at play. The explanation lies in a double-scaling limit in which $X\to+\infty$ while also $a\to 0$ or $b\to 0$ at suitably related rates. The details can be found in our next paper on the subject [Reference Bilman and Miller14].

1.2.4. Differential equations

It is straightforward to derive three different first-order systems of differential equations satisfied by the matrix function

\begin{align}

\mathbf{W}(\Lambda;X,T,\mathbf{G}):=\mathbf{P}(\Lambda;X,T,\mathbf{G}){\mathrm{e}}^{-{\mathrm{i}} (\Lambda X+\Lambda^2T+2\Lambda^{-1})\sigma_3}

\end{align}

We obtain them by following the procedure in [Reference Bilman, Ling and Miller8, Section 3.2.1]. That procedure was written with the special case (1.7) in mind, but it does not in fact depend on the details of the matrix $\mathbf{G}(a,b)$, and hence it applies to general rogue waves of infinite order. It follows that the matrix $\mathbf{W}(\Lambda;X,T,\mathbf{G})$ satisfies the three Lax equations

\begin{align}

\frac{\partial \mathbf{W}}{\partial X} = \mathbf{X}\mathbf{W},\quad\frac{\partial \mathbf{W}}{\partial T}=\mathbf{T}\mathbf{W},\quad\frac{\partial \mathbf{W}}{\partial\Lambda}=\boldsymbol{\Lambda}\mathbf{W},

\end{align}

in which the coefficient matrices $\mathbf{X}$, $\mathbf{T}$, and $\boldsymbol{\Lambda}$ are expressed explicitly in terms of the coefficients $\mathbf{P}^{[j]}=\mathbf{P}^{[j]}(X,T;\mathbf{G})$ in the convergent Laurent expansion

\begin{align}

\mathbf{P}(\Lambda;X,T,\mathbf{G})=\mathbb{I}+\sum_{j=1}^\infty\mathbf{P}^{[j]}(X,T;\mathbf{G})\Lambda^{-j},\quad |\Lambda| \gt 1

\end{align}

or alternatively in terms of the Taylor coefficients $\mathbf{P}_0:=\mathbf{P}(0;X,T,\mathbf{G})$, etc., of $\mathbf{P}(\Lambda;X,T,\mathbf{G})$ at $\Lambda=0$. Thus:

\begin{align}

\mathbf{X} = -{\mathrm{i}}\Lambda\sigma_3 + {\mathrm{i}} [\sigma_3,\mathbf{P}^{[1]}] = \begin{bmatrix}-{\mathrm{i}}\Lambda & \Psi\\-\Psi^* & {\mathrm{i}}\Lambda\end{bmatrix},

\end{align}

\begin{align}

\mathbf{T}=-{\mathrm{i}}\Lambda^2\sigma_3 + {\mathrm{i}} [\sigma_3,\mathbf{P}^{[1]}]\Lambda + {\mathrm{i}}[\mathbf{P}^{[1]},\sigma_3\mathbf{P}^{[1]}] + {\mathrm{i}}[\sigma_3,\mathbf{P}^{[2]}] = \begin{bmatrix}

-{\mathrm{i}}\Lambda^2 +\frac{1}{2}{\mathrm{i}} |\Psi|^2 & \Lambda\Psi + \frac{1}{2}{\mathrm{i}}\Psi_X\\

-\Lambda\Psi^* +\frac{1}{2}{\mathrm{i}}\Psi^*_X & {\mathrm{i}}\Lambda^2 -\frac{1}{2}{\mathrm{i}} |\Psi|^2\end{bmatrix},

\end{align}

and

\begin{align}

\boldsymbol{\Lambda}=\begin{bmatrix}-2{\mathrm{i}} T\Lambda -{\mathrm{i}} X +{\mathrm{i}} T|\Psi|^2\Lambda^{-1} & 2T\Psi +(X\Psi+{\mathrm{i}} T\Psi_X)\Lambda^{-1}\\-2T\Psi^*+(-X\Psi^*+{\mathrm{i}} T\Psi_X^*)\Lambda^{-1} & 2{\mathrm{i}} T\Lambda +{\mathrm{i}} X-{\mathrm{i}} T|\Psi|^2\Lambda^{-1}\end{bmatrix} + 2{\mathrm{i}} \mathbf{P}_0\sigma_3\mathbf{P}_0^{-1}\Lambda^{-2}.

\end{align}

The global existence of $\mathbf{P}(\Lambda;X,T,\mathbf{G})$ for Riemann–Hilbert Problem 1, recorded in Proposition 1.1, then guarantees that the three Lax equations (1.28) are mutually compatible. In particular, the compatibility condition $\mathbf{X}_T-\mathbf{T}_X + [\mathbf{X},\mathbf{T}]=\mathbf{0}$ implies the following basic result, which has already been mentioned.

Theorem 1.10 ($\Psi(X,T)$ solves NLS)

The function $\mathbb{R}^2\ni (X,T)\mapsto \Psi(X,T;\mathbf{G})\in\mathbb{C}$ obtained from Riemann–Hilbert Problem 1 via (1.6) is a global solution of the focusing NLS equation in the form (1.3).
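Theorem 1.10 rests on the zero-curvature identity $\mathbf{X}_T-\mathbf{T}_X+[\mathbf{X},\mathbf{T}]=\mathbf{0}$. With $\mathbf{X}$ and $\mathbf{T}$ as displayed above, a direct computation shows two things. The $(1,1)$ entry of the left-hand side vanishes identically. The $(1,2)$ entry equals $\Psi_T-\frac{1}{2}{\mathrm{i}}\Psi_{XX}-{\mathrm{i}}|\Psi|^2\Psi$, which is $-{\mathrm{i}}$ times ${\mathrm{i}}\Psi_T+\frac{1}{2}\Psi_{XX}+|\Psi|^2\Psi$.

The Python sketch below evaluates the residual of the zero-curvature identity by central differences in $X$ and $T$. It assumes that (1.3) is this normalized form, corresponding to (1.1). The test function is the standard soliton $\Psi=\operatorname{sech}(X){\mathrm{e}}^{{\mathrm{i}}T/2}$ of that equation, chosen purely for convenience: it is not one of the solutions $\Psi(X,T;\mathbf{G})$. The step size and sample points are arbitrary.

```python
import cmath
import math


def psi(x, t):
    """Test solution sech(X) e^{iT/2} of i Psi_T + Psi_XX/2 + |Psi|^2 Psi = 0 (not a rogue wave)."""
    return cmath.exp(0.5j * t) / math.cosh(x)


def psi_x(x, t):
    return -math.tanh(x) * psi(x, t)


def lax_X(lam, x, t):
    p = psi(x, t)
    return [[-1j * lam, p], [-p.conjugate(), 1j * lam]]


def lax_T(lam, x, t):
    p, px = psi(x, t), psi_x(x, t)
    m = abs(p) ** 2
    return [[-1j * lam ** 2 + 0.5j * m, lam * p + 0.5j * px],
            [-lam * p.conjugate() + 0.5j * px.conjugate(), 1j * lam ** 2 - 0.5j * m]]


def central_diff(f, s, h):
    """Entrywise central difference of a 2x2-matrix-valued function f at s."""
    fp, fm = f(s + h), f(s - h)
    return [[(fp[i][j] - fm[i][j]) / (2 * h) for j in range(2)] for i in range(2)]


def zero_curvature_residual(lam, x, t, h=1e-5):
    """Max |entry| of X_T - T_X + [X, T] at spectral parameter lam and point (x, t)."""
    XT = central_diff(lambda s: lax_X(lam, x, s), t, h)
    TX = central_diff(lambda s: lax_T(lam, s, t), x, h)
    Xm, Tm = lax_X(lam, x, t), lax_T(lam, x, t)
    comm = [[sum(Xm[i][k] * Tm[k][j] - Tm[i][k] * Xm[k][j] for k in range(2))
             for j in range(2)] for i in range(2)]
    return max(abs(XT[i][j] - TX[i][j] + comm[i][j]) for i in range(2) for j in range(2))


for lam in (0.3 + 0.2j, -1.1, 2j):
    print(lam, zero_curvature_residual(lam, 0.4, 0.7))
```

The residual should be at the level of the finite-difference error for every $\Lambda$. This reflects that the identity holds for all $\Lambda$ precisely when $\Psi$ solves the NLS equation.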

On the other hand, the compatibility condition $\mathbf{X}_\Lambda-\boldsymbol{\Lambda}_X + [\mathbf{X},\boldsymbol{\Lambda}]=\mathbf{0}$ is a system of ordinary differential equations with respect to $X$, in which $T\in\mathbb{R}$ plays the role of a parameter. In [Reference Bilman, Ling and Miller8, Section 3.2.1] this system was shown to be related to the second equation in the Painlevé-III hierarchy of Sakka [Reference Sakka32] when $T\neq 0$, and to the Painlevé-III (D6) equation itself when $T=0$. More explicitly, we have the following.

Theorem 1.11 ($\Psi(X,0)$ and the Painlevé-III (D6) equation [Reference Bilman, Ling and Miller8, Corollary 4])

The function $u(x)$ defined for $x\in\mathbb{R}\cup({\mathrm{i}}\mathbb{R})$ by

\begin{align}

u(x):=2\left(\frac{ \mathrm{d}}{ \mathrm{d} x}\log\left(x^2\Psi(-\tfrac{1}{8}x^2,0;\mathbf{G})\right)\right)^{-1}

\end{align}

is a solution of the Painlevé-III (D6) equation

\begin{align}

\frac{ \mathrm{d}^2u}{ \mathrm{d} x^2}=\frac{1}{u}\left(\frac{ \mathrm{d} u}{ \mathrm{d} x}\right)^2-\frac{1}{x}\frac{ \mathrm{d} u}{ \mathrm{d} x} + \frac{4\Theta_0 u^2 + 4(1-\Theta_\infty)}{x}+4u^3-\frac{4}{u}

\end{align}

in the case that both formal monodromy parameters vanish: $\Theta_0=\Theta_\infty=0$.

Corollary 1.12 (Behaviour of $u(x)$ near $x=0$)

The function $u(x)$ defined by (1.33) is an odd function of $x$ that is analytic at the origin, with Taylor expansion

\begin{align}

u(x)=x + \frac{u'''(0)}{3!}x^3 + O(x^5),\quad x\to 0,\quad u'''(0)=3(\mathfrak{b}^2-\mathfrak{a}^2)=3\frac{|b|^2-|a|^2}{|a|^2+|b|^2}.

\end{align}

Proof. Combining Proposition 1.1 with (1.33) shows that $u(x)$ is an odd function having a Taylor expansion about $x=0$ with $u'''(0)=\frac{3}{4}\Psi_X(0,0;\mathbf{G})/\Psi(0,0;\mathbf{G})$. Theorems 1.6 and 1.7 then yield the claimed value of $u'''(0)$.
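The proof of Corollary 1.12 uses only $\Psi(0,0;\mathbf{G})$ and $\Psi_X(0,0;\mathbf{G})$. Write $r=\Psi_X(0,0;\mathbf{G})/\Psi(0,0;\mathbf{G})$. Since $\frac{\mathrm{d}}{\mathrm{d}x}\Psi(-\tfrac{1}{8}x^2,0;\mathbf{G})=-\tfrac{x}{4}\Psi_X$, expanding (1.33) gives $u(x)=x\left(1+\tfrac{1}{8}rx^2+O(x^4)\right)$, hence $u'''(0)=\tfrac{3}{4}r$.

The sketch below illustrates this numerically using a hypothetical quadratic stand-in for $X\mapsto\Psi(X,0;\mathbf{G})$. Its value and slope at $X=0$ are taken from Theorems 1.6 and 1.7 (times an arbitrary constant phase, which the logarithmic derivative cancels), and its curvature is arbitrary. It is not the actual solution. `make_model` and `u_of_x` are illustrative names only.

```python
import cmath
import math


def make_model(fa, fb, curvature=0.7, phase=0.3):
    """HYPOTHETICAL stand-in for X -> Psi(X,0;G): value and slope at X = 0 from
    Theorems 1.6-1.7, arbitrary curvature, and a constant phase that (1.33) cancels."""
    c = cmath.exp(-1j * phase)
    p0 = 8 * fa * fb                              # Psi(0,0;G) up to the phase
    p1 = 32 * fa * fb * (fb ** 2 - fa ** 2)       # Psi_X(0,0;G) up to the phase
    value = lambda X: c * (p0 + p1 * X + curvature * X ** 2)
    slope = lambda X: c * (p1 + 2 * curvature * X)
    return value, slope


def u_of_x(x, value, slope):
    """u(x) = 2 / (d/dx) log(x^2 Psi(-x^2/8)), using d/dx Psi(-x^2/8) = -(x/4) Psi_X."""
    X = -x * x / 8
    dlog = 2 / x - (x / 4) * slope(X) / value(X)
    return 2 / dlog


fa, fb = math.cos(0.4), math.sin(0.4)                    # normalized parameters
value, slope = make_model(fa, fb)
x = 1e-3
estimate = 6 * (u_of_x(x, value, slope) - x) / x ** 3   # u = x + u'''(0) x^3/6 + O(x^5)
print(estimate, 3 * (fb ** 2 - fa ** 2))
```

Changing `curvature` alters only the $O(x^5)$ terms, so the estimate of $u'''(0)$ is insensitive to it, as the proof indicates.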

Since the value of $\Psi(0,0;\mathbf{G})$ is known from Theorem 1.6, it is straightforward to invert (1.33) and express $\Psi(X,0;\mathbf{G})$ explicitly in terms of $u$:

\begin{align}

\Psi(X,0;\mathbf{G})=\Psi(0,0;\mathbf{G})\exp\left(2\int_0^x\left[\frac{1}{u(y)}-\frac{1}{y}\right]\, \mathrm{d} y\right),\quad X=-\frac{1}{8}x^2.

\end{align}

We note that $-3 \lt u'''(0) \lt 3$, since $\mathfrak{a},\mathfrak{b} \gt 0$ and $\mathfrak{a}^2+\mathfrak{b}^2=1$. In fact, when $\Theta_0=\Theta_\infty=0$ there is, for each $\omega\in (-3,3)$, a unique solution of (1.34) analytic at the origin with $u(0)=0$, $u'(0)=1$, $u''(0)=0$, and $u'''(0)=\omega$. This family of solutions of the Painlevé-III (D6) equation has been associated with limits of sequences of solutions of the focusing NLS equation [Reference Bilman and Buckingham7, Reference Bilman, Ling and Miller8]. It has also appeared in the description of self-similar boundary layers in the sharp-line Maxwell-Bloch equations [Reference Li and Miller24].
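As a consistency check on this one-parameter family (not a proof of existence or uniqueness), substitute the odd ansatz $u(x)=x+cx^3+O(x^5)$, with $c=\omega/6$, into the right-hand side of (1.34) with $\Theta_0=\Theta_\infty=0$. In that case the term $\frac{4\Theta_0u^2+4(1-\Theta_\infty)}{x}$ reduces to $\frac{4}{x}$. Term by term,

\begin{align*}

\frac{1}{u}\left(\frac{\mathrm{d}u}{\mathrm{d}x}\right)^2 &= \frac{1}{x}+5cx+O(x^3), & -\frac{1}{x}\frac{\mathrm{d}u}{\mathrm{d}x} &= -\frac{1}{x}-3cx+O(x^3),\\

\frac{4}{x}-\frac{4}{u} &= 4cx+O(x^3), & 4u^3 &= O(x^3),

\end{align*}

so the right-hand side equals $6cx+O(x^3)$. This agrees with $\mathrm{d}^2u/\mathrm{d}x^2=6cx+O(x^3)$ for every value of $c$. At this order, therefore, (1.34) places no constraint on $u'''(0)=\omega$, in line with the existence of a family of solutions parametrized by $\omega$.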

Remark 1.13. The parametrization of solutions of (1.34) is discussed, for example, in [Reference Barhoumi, Lisovyy, Miller and Prokhorov3, Section 4.6] (see also [Reference van der Put and Saito44]). Solutions are parametrized by points on a certain monodromy manifold characterized by a cubic equation in three variables. In particular, the monodromy manifold for the Painlevé-III (D6) equation (1.34) with parameters $\Theta_0=\Theta_\infty=0$ consists of those $(x_1,x_2,x_3)\in \mathbb{C}^3$ for which

\begin{align}

x_1x_2x_3+x_1^2+x_2^2+2x_1+2x_2+1=0.

\end{align}

\begin{align}

x_1x_2x_3+x_1^2+x_2^2+2x_1+2x_2+1=0.

\end{align} This is a smooth complex surface except at two points obtained by also enforcing that the gradient of the left-hand side vanishes: ![]() $(x_1,x_2,x_3)=(0,-1,2)$ and

$(x_1,x_2,x_3)=(0,-1,2)$ and ![]() $(x_1,x_2,x_3)=(-1,0,2)$. Because the Stokes phenomenon is trivial both at

$(x_1,x_2,x_3)=(-1,0,2)$. Because the Stokes phenomenon is trivial both at ![]() $\Lambda=0$ and

$\Lambda=0$ and ![]() $\Lambda=\infty$ (nontrivial Stokes phenomenon would be evident in additional jump conditions for Riemann–Hilbert Problem 1 on two contours approaching the origin and two contours approaching

$\Lambda=\infty$ (nontrivial Stokes phenomenon would be evident in additional jump conditions for Riemann–Hilbert Problem 1 on two contours approaching the origin and two contours approaching ![]() $\Lambda=\infty$), the coordinates of the Painlevé-III (D6) solution arising for T = 0 from Riemann–Hilbert Problem 1 are determined from the matrix G alone (which plays the role of a connection matrix in the isomonodromy theory) by

$\Lambda=\infty$), the coordinates of the Painlevé-III (D6) solution arising for $T=0$ from Riemann–Hilbert Problem 1 are determined from the matrix $\mathbf{G}$ alone (which plays the role of a connection matrix in the isomonodromy theory) by $(x_1,x_2,x_3)=(G_{11}G_{22}-1,-G_{11}G_{22},2)=(\mathfrak{a}^2-1,-\mathfrak{a}^2,2)$. Since $0<\mathfrak{a}^2<1$, this is a segment of a line in $\mathbb{C}^3$ parametrized by $G_{11}G_{22}=\mathfrak{a}^2$ that is fully contained within the monodromy manifold (1.37). The line passes through both singular points of the manifold, at the parameter values $G_{11}G_{22}=\mathfrak{a}^2=0$ and $G_{11}G_{22}=\mathfrak{a}^2=1$, which are the endpoints of the relevant segment. Thus, the endpoints of the segment correspond to the normalized parameters $(\mathfrak{a},\mathfrak{b})=(0,1)$ and $(\mathfrak{a},\mathfrak{b})=(1,0)$, respectively. The singular points on the monodromy manifold therefore both correspond to the trivial solution $\Psi(X,T;\mathbf{G})\equiv 0$, according to Proposition 1.8. However, each interior point of the segment yields a distinct nontrivial solution $u(x)=u(x;\mathfrak{a})$ of the Painlevé-III (D6) equation (1.34) with $\Theta_0=\Theta_\infty=0$, related to $\Psi(X,0;\mathbf{G})$ via (1.33) and its inverse (1.36). Note that the logarithmic derivative in (1.33) cancels the constant phase factor ${\mathrm{e}}^{-{\mathrm{i}}\arg(ab)}$ in $\Psi(X,0;\mathbf{G}(a,b))$ (see (1.12)), so that while $\Psi$ depends on the parameters $(a,b)$, $u$ depends only on the normalized parameters $(\mathfrak{a},\mathfrak{b})$.
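
Evaluating this parametrization at the two endpoint values of $\mathfrak{a}^2$ locates the segment explicitly in the coordinates $(x_1,x_2,x_3)$:

\begin{align}

\mathfrak{a}^2=0:\ (x_1,x_2,x_3)=(-1,0,2),\qquad \mathfrak{a}^2=1:\ (x_1,x_2,x_3)=(0,-1,2),

\end{align}

so the segment joins these two points inside the plane $x_3=2$, along the line $x_1+x_2=-1$.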

The compatibility condition $\mathbf{T}_\Lambda-\boldsymbol{\Lambda}_T+[\mathbf{T},\boldsymbol{\Lambda}]=\mathbf{0}$ is a system of ordinary differential equations with respect to $T$ in which $X\in\mathbb{R}$ is a parameter. This system was written out in general in [Reference Bilman, Ling and Miller8, Eqn. (119)], and here we expand on a remark made in that paper concerning a symmetric special case (see also [Reference Suleimanov33]). Suppose that $b=a$. Then, according to Proposition 1.3, $X\mapsto\Psi(X,T;\mathbf{G}(a,a))$ is an even function for all $T\in\mathbb{R}$, which in light of real analyticity at $X=0$ implies also that

\begin{align}

\frac{\partial\Psi}{\partial X}(0,T;\mathbf{G}(a,a))=0,\quad T\in\mathbb{R},

\end{align}

as is consistent with the conclusion of Theorem 1.7 when also $T=0$. Thus $X=0$ is an axis of symmetry of $\Psi(X,T;\mathbf{G}(a,a))$, and one may expect some simplification of the compatibility condition $\mathbf{T}_\Lambda-\boldsymbol{\Lambda}_T+[\mathbf{T},\boldsymbol{\Lambda}]=\mathbf{0}$, yielding ordinary differential equations satisfied by $T\mapsto\Psi(0,T;\mathbf{G}(a,a))$. To see this, we remark that Proposition A.1 in Appendix A implies that $\mathbf{P}(0;0,T,\mathbf{G}(a,a))=-\sigma_3\mathbf{P}(0;0,T,\mathbf{G}(a,a))\sigma_1$, and therefore, for some function $s(T)\neq 0$, the unit-determinant matrix $\mathbf{P}_0:=\mathbf{P}(0;0,T,\mathbf{G}(a,a))$ has the form

\begin{align}

\mathbf{P}_0=\begin{bmatrix} s(T) & -s(T)\\ (2s(T))^{-1} & (2s(T))^{-1}\end{bmatrix}\implies 2{\mathrm{i}}\mathbf{P}_0\sigma_3\mathbf{P}_0^{-1}=2{\mathrm{i}}\begin{bmatrix}0 & n(T)\\n(T)^{-1} & 0\end{bmatrix},\quad n(T):=2s(T)^2.

\end{align}
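
The structure of $\mathbf{P}_0$ can be confirmed with a few lines of computer algebra. The following is a minimal sketch using SymPy (a tool choice made here for illustration, not one used in the text), in which the hypothetical symbol `s` stands for the value $s(T)$ at a fixed $T$. It checks that a matrix of this form satisfies $\mathbf{P}_0=-\sigma_3\mathbf{P}_0\sigma_1$, has unit determinant, and conjugates $\sigma_3$ into the displayed off-diagonal form with $n=2s^2$.

```python
import sympy as sp

# s stands for s(T) at a fixed T; the text assumes s(T) != 0
s = sp.symbols('s', nonzero=True)
I = sp.I
sigma1 = sp.Matrix([[0, 1], [1, 0]])
sigma3 = sp.diag(1, -1)


def is_zero(M):
    """Return True if every entry of the matrix M simplifies to 0."""
    return all(sp.simplify(e) == 0 for e in M)


# Matrix of the displayed form
P0 = sp.Matrix([[s, -s], [1/(2*s), 1/(2*s)]])

# Symmetry P0 = -sigma3 P0 sigma1 and unit determinant
assert is_zero(P0 + sigma3*P0*sigma1)
assert sp.simplify(P0.det()) == 1

# 2i P0 sigma3 P0^{-1} should equal 2i [[0, n], [1/n, 0]] with n = 2 s^2
n = 2*s**2
lhs = 2*I*P0*sigma3*P0.inv()
rhs = 2*I*sp.Matrix([[0, n], [1/n, 0]])
assert is_zero(lhs - rhs)
```

Conversely, imposing $\mathbf{P}_0=-\sigma_3\mathbf{P}_0\sigma_1$ on a general $2\times 2$ matrix forces the first row to have the form $(s,-s)$ and the second row to have equal entries, and the determinant condition then fixes those entries to be $(2s)^{-1}$.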

Using this and (1.38), and setting $X=0$, the matrix coefficients $\mathbf{T}$ and $\boldsymbol{\Lambda}$ defined in (1.31) and (1.32) respectively take the simplified form

\begin{align}

\mathbf{T}=-{\mathrm{i}}\Lambda^2\sigma_3 +\begin{bmatrix}0 & \Psi\\-\Psi^* & 0\end{bmatrix}\Lambda +\frac{1}{2}{\mathrm{i}} |\Psi|^2\sigma_3

\end{align}

and

\begin{align}

\boldsymbol{\Lambda}=-2{\mathrm{i}} T\Lambda\sigma_3 +\begin{bmatrix}0 & 2T\Psi\\-2T\Psi^* & 0\end{bmatrix}+{\mathrm{i}} T|\Psi|^2\Lambda^{-1}\sigma_3 +\frac{2{\mathrm{i}}}{\Lambda^2}\begin{bmatrix} 0 & n(T)\\n(T)^{-1} & 0\end{bmatrix},

\end{align}

where $\Psi=\Psi(T):=\Psi(0,T;\mathbf{G}(a,a))$. In [Reference Kitaev and Vartanian22] a Lax pair is presented for the partially degenerate Painlevé-III equation (D7 type, with parameters $\mathscr{A},\mathscr{B}\in\mathbb{C}$ and $\varepsilon=\pm 1$)

\begin{align}

\frac{ \mathrm{d}^2\mathfrak{u}}{ \mathrm{d} t^2}=\frac{1}{\mathfrak{u}}\left(\frac{ \mathrm{d}\mathfrak{u}}{ \mathrm{d} t}\right)^2 -\frac{1}{t}\frac{ \mathrm{d}\mathfrak{u}}{ \mathrm{d} t} + \frac{-8\varepsilon\mathfrak{u}^2+2\mathscr{A}\mathscr{B}}{t}+\frac{\mathscr{B}^2}{\mathfrak{u}},\quad\mathfrak{u}=\mathfrak{u}(t),

\end{align}

that, after a Fabry transformation, resembles the compatible linear system $\mathbf{W}_T=\mathbf{T}\mathbf{W}$ and $\mathbf{W}_\Lambda=\boldsymbol{\Lambda}\mathbf{W}$ with the coefficient matrices given in (1.40) and (1.41). Hence we may expect that $\Psi(T)$ is related to a solution of the Painlevé-III (D7) equation. To complete the correspondence, we change variables and make a gauge transformation by

\begin{align}

\lambda:= T^\frac{1}{4}\Lambda,\quad t:=T^{\frac{1}{2}},\quad \mathbf{W}=t^{\frac{1}{2}\sigma_3}\mathbf{V},

\end{align}

and then the resulting system takes the form

\begin{align}

\frac{\partial\mathbf{V}}{\partial\lambda}=\left(-2{\mathrm{i}} t\lambda\sigma_3 + 2t\begin{bmatrix}

0 & t^{-\frac{1}{2}}\Psi\\-t^\frac{3}{2}\Psi^* & 0\end{bmatrix}-\frac{1}{\lambda}\left(-{\mathrm{i}} t^2|\Psi|^2\right)\sigma_3

+\frac{1}{\lambda^2}\begin{bmatrix}0 & 2{\mathrm{i}} t^{-\frac{1}{2}}n\\

2{\mathrm{i}} t^\frac{3}{2}n^{-1} & 0\end{bmatrix}\right)\mathbf{V}

\end{align}

and

\begin{align}

\frac{\partial\mathbf{V}}{\partial t}=\left(-{\mathrm{i}}\lambda^2\sigma_3 + \lambda\begin{bmatrix}0 & t^{-\frac{1}{2}}\Psi\\

-t^\frac{3}{2}\Psi^* & 0\end{bmatrix} + \left(\frac{1}{2}{\mathrm{i}} t|\Psi|^2-\frac{1}{2t}\right)\sigma_3

-\frac{1}{2t\lambda}\begin{bmatrix}0 & 2{\mathrm{i}} t^{-\frac{1}{2}}n\\2{\mathrm{i}} t^\frac{3}{2}n^{-1} & 0\end{bmatrix}\right)\mathbf{V},

\end{align}

in which we now view $\Psi$, $\Psi^*$, and $n$ as functions of $t$. This system has exactly the form of [Reference Kitaev and Vartanian22, Eqn. (12)] provided we make the correspondences

\begin{align}

D=t^{\frac{3}{2}}\Psi^*,\quad \frac{2{\mathrm{i}} A}{\sqrt{-AB}}=t^{-\frac{1}{2}}\Psi,

\end{align}

\begin{align}

{\mathrm{i}} \mathscr{A}+\frac{1}{2}+\frac{2t AD}{\sqrt{-AB}}=-{\mathrm{i}} t^2|\Psi|^2,\quad \frac{{\mathrm{i}} \mathscr{A}}{2t}-\frac{AD}{\sqrt{-AB}}=\frac{1}{2}{\mathrm{i}} t|\Psi|^2-\frac{1}{2t},

\end{align}

\begin{align}

\widetilde{\alpha}=2{\mathrm{i}} t^{-\frac{1}{2}}n,\quad {\mathrm{i}} t B=2{\mathrm{i}} t^\frac{3}{2}n^{-1}.

\end{align}

where the notation of [Reference Kitaev and Vartanian22] is on the left-hand side (Footnote 2) and $A,B,C,D,\widetilde{\alpha}$ are functions of $t$, while $\mathscr{A}$ is a constant. Using the product of the identities in (1.46) to eliminate $AD/\sqrt{-AB}$ shows that the two equations in (1.47) actually coincide, and yield $\mathscr{A}=\frac{1}{2}{\mathrm{i}}$. Then, according to [Reference Kitaev and Vartanian22, Lemma 2.1], the second parameter $\mathscr{B}$ is determined from (1.48), up to a sign, by $\mathscr{B}=4\varepsilon$. The corresponding solution of (1.42) is then given by $\mathfrak{u}(t)=\varepsilon t\sqrt{-A(t)B(t)}$. This proves the following result, which is also easy to verify directly from the compatibility condition for the system (1.44)–(1.45).

Theorem 1.14 ($\Psi(0,T)$ for $b=a$ and the Painlevé-III (D7) equation)

Fix $a\in\mathbb{C}$ nonzero, and let $\varepsilon=\pm 1$. The function

\begin{align}

\mathfrak{u}(t):=\frac{1}{4}{\mathrm{i}}\varepsilon t\Psi\cdot\left(\Psi^*+t\frac{ \mathrm{d}\Psi^*}{ \mathrm{d} t}\right),\quad t\in\mathbb{R},\quad\Psi=\Psi(0,t^2;\mathbf{G}(a,a))

\end{align}

satisfies the Painlevé-III (D7) equation in the form (1.42) with parameters $\mathscr{A}=\frac{1}{2}{\mathrm{i}}$ and $\mathscr{B}=4\varepsilon$.
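
The passage from the coefficient matrices at $X=0$ to the system (1.44)–(1.45) uses only the chain rule for $\lambda=T^{1/4}\Lambda$, $t=T^{1/2}$ together with the gauge factor in $\mathbf{W}=t^{\sigma_3/2}\mathbf{V}$, so it can be checked mechanically. Below is a minimal SymPy sketch under two stated assumptions: $t>0$ (so that fractional powers are unambiguous), and $\Psi$, $\Psi^*$, $n$ are treated as independent symbols with the hypothetical names `Psi`, `Psi_c`, `n`. The latter is harmless here because the gauge computation never differentiates them.

```python
import sympy as sp

lam, t = sp.symbols('lambda t', positive=True)
# Psi_c stands for the complex conjugate Psi^*; none of these are differentiated below
Psi, Psi_c, n = sp.symbols('Psi Psi_c n', nonzero=True)
I, half = sp.I, sp.Rational(1, 2)
s3 = sp.diag(1, -1)
Q = sp.Matrix([[0, Psi], [-Psi_c, 0]])
N = sp.Matrix([[0, n], [1/n, 0]])
absPsi2 = Psi*Psi_c  # |Psi|^2

# Old variables in terms of new ones: T = t^2, Lambda = lambda * t^(-1/2)
T = t**2
Lam = lam/sp.sqrt(t)

# Simplified coefficient matrices at X = 0
Tmat = -I*Lam**2*s3 + Lam*Q + half*I*absPsi2*s3
Lmat = -2*I*T*Lam*s3 + 2*T*Q + I*T*absPsi2/Lam*s3 + 2*I/Lam**2*N

# Gauge factor G = t^(sigma3/2), so that W = G V
G = sp.diag(sp.sqrt(t), 1/sp.sqrt(t))
Gi = G.inv()

# d/dlambda at fixed t: W_lambda = (dLambda/dlambda) Lmat W
U_lam = Gi*(sp.diff(Lam, lam)*Lmat)*G
# d/dt at fixed lambda: W_t = (dLambda/dt) Lmat W + (dT/dt) Tmat W, then V = G^{-1} W
U_t = Gi*(sp.diff(Lam, t)*Lmat + sp.diff(T, t)*Tmat)*G - Gi*G.diff(t)

# Coefficient matrices as displayed in the lambda- and t-equations
Qt = sp.Matrix([[0, Psi/sp.sqrt(t)], [-t**sp.Rational(3, 2)*Psi_c, 0]])
Nt = sp.Matrix([[0, 2*I*n/sp.sqrt(t)], [2*I*t**sp.Rational(3, 2)/n, 0]])
target_lam = -2*I*t*lam*s3 + 2*t*Qt - (1/lam)*(-I*t**2*absPsi2)*s3 + Nt/lam**2
target_t = -I*lam**2*s3 + lam*Qt + (half*I*t*absPsi2 - 1/(2*t))*s3 - Nt/(2*t*lam)


def is_zero(M):
    """Return True if every entry of the matrix M simplifies to 0."""
    return all(sp.simplify(e) == 0 for e in M)


print(is_zero(U_lam - target_lam), is_zero(U_t - target_t))  # expected: True True
```

Note that the term $-\frac{1}{2t}\sigma_3$ in the $t$-equation comes entirely from differentiating the gauge factor, while the $\lambda^{-1}$ term there arises from the $t$-dependence of $\Lambda$ at fixed $\lambda$. This sketch checks only the change of variables; it does not check the compatibility condition, which does involve derivatives of $\Psi$.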

This result was known to Suleimanov [Reference Suleimanov33], although to extract it from his paper one must take the independent variable to be $t=T^\frac{1}{2}$ instead of $T$ and correct some constants. Another simple identity satisfied by $\Psi$ that follows from the compatibility condition for (1.44)–(1.45) is

\begin{align}

\left|\Psi+t\frac{ \mathrm{d}\Psi}{ \mathrm{d} t}\right|^2=16,\quad t\in\mathbb{R},\quad\Psi=\Psi(0,t^2;\mathbf{G}(a,a)).

\end{align}
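
Since $t$ is real, $\Psi+t\,\mathrm{d}\Psi/\mathrm{d}t$ is simply $\mathrm{d}(t\Psi)/\mathrm{d}t$, and $\Psi^*+t\,\mathrm{d}\Psi^*/\mathrm{d}t$ is its complex conjugate. As a short worked consequence, sketched under these conventions, combining the identity just displayed with the formula for $\mathfrak{u}(t)$ in Theorem 1.14 gives

\begin{align}

\left|\frac{\mathrm{d}}{\mathrm{d}t}\left(t\Psi\right)\right|=4\quad\implies\quad|\mathfrak{u}(t)|=\frac{1}{4}|t|\,|\Psi|\left|\frac{\mathrm{d}}{\mathrm{d}t}\left(t\Psi^*\right)\right|=|t|\,|\Psi(0,t^2;\mathbf{G}(a,a))|,\quad t\in\mathbb{R}.

\end{align}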

Corollary 1.15 (Behaviour of $\mathfrak{u}(t)$ near $t=0$)