1 Introduction

Item factor analysis (IFA; e.g., Bock et al., Reference Bock, Gibbons and Muraki1988; Wirth & Edwards, Reference Wirth and Edwards2007) is a powerful statistical framework used to model and measure latent variables. The latent variables can be mathematical ability (Rutkowski et al., Reference Rutkowski, von Davier and Rutkowski2013), mental disorder (Stochl et al., Reference Stochl, Jones and Croudace2012), or personality traits (Balsis et al., Reference Balsis, Ruchensky and Busch2017) that influence individuals’ responses to various items, such as test or survey questions. IFA aims to uncover these hidden factors by analyzing patterns in the responses, allowing researchers to understand the relationships between the latent variables and the items. Understanding these latent structures is crucial across fields, such as psychology and education for accurate measurement of human abilities and characteristics (Chen et al., Reference Chen, Li, Liu and Ying2021; Wirth & Edwards, Reference Wirth and Edwards2007).

Psychological and educational research now frequently involves diverse data types, including large-scale assessment, high-volume data, and process data involving unstructured and textual responses (e.g., Ma et al., Reference Ma, Ouyang, Wang and Xu2024; Weston & Laursen, Reference Weston and Laursen2015; Zhang et al., Reference Zhang, Wang, Qi, Liu and Ying2023). Traditional estimation algorithms often struggle to efficiently process and analyze these data, leading to unstable model estimates, long computation times, and excessive memory usage. Deep learning (DL) literature has much to offer to handle the above challenges. If we view IFA from the lens of generalized latent variable models (Muthén, Reference Muthén2002; Rabe-Hesketh & Skrondal, Reference Rabe-Hesketh and Skrondal2008), representation learning (Bengio et al., Reference Bengio, Courville and Vincent2013) can help model and capture intricate latent patterns from observed data. Unlike traditional IFA estimation methods, which often rely on strict parametric and distributional assumptions, representation learning leverages powerful learning algorithms and expressive models such as neural networks to generate richer and highly flexible representations when modeling data. This improves the modeling and detection of subtle patterns for high-dimensional latent variables that classical methods might underfit. Therefore, by integrating representation learning with IFA, researchers can achieve a more comprehensive understanding of latent traits.

One of the representation techniques that is being actively applied in IFA (Cho et al., Reference Cho, Wang, Zhang and Xu2021; Hui et al., Reference Hui, Warton, Ormerod, Haapaniemi and Taskinen2017; Jeon et al., Reference Jeon, Rijmen and Rabe-Hesketh2017) is variants based on variational autoencoders (VAEs; Curi et al., Reference Curi, Converse, Hajewski and Oliveira2019; Wu et al., Reference Wu, Davis, Domingue, Piech and Goodman2020). VAEs consist of two main components: an encoder and a decoder. The encoder network compresses the high-dimensional input data into a lower-dimensional latent space, effectively capturing the essential features that define the data’s underlying structure. Conversely, the decoder network reconstructs the original data from these latent representations, ensuring that the compressed information retains the critical characteristics. Urban & Bauer (Reference Urban and Bauer2021) further incorporated an importance-weighted (IW) approach (Burda et al., Reference Burda, Grosse and Salakhutdinov2015) to improve the estimation performance of VAEs in IFA. These advances have an advantage over the traditional techniques, such as Gauss–Hermite quadrature or the EM algorithm (Bock & Aitkin, Reference Bock and Aitkin1981; Cai, Reference Cai2010; Darrell Bock & Lieberman, Reference Darrell Bock and Lieberman1970) for large sample size and models with high-dimensional latent variables.

Despite the significant advancements brought by VAEs in IFA, conventional VAEs may inadequately capture the true posterior distribution due to insufficiently expressive inference models (Mescheder et al., Reference Mescheder, Nowozin and Geiger2017). Specifically, VAEs might focus on the modes of the prior distribution and fail to represent the latent variables in some local regions (Makhzani et al., Reference Makhzani, Shlens, Jaitly, Goodfellow and Frey2015). Consequently, enhancing the expressiveness of the approximate posterior is imperative for improved performance (Cremer et al., Reference Cremer, Li and Duvenaud2018).

An alternative representation paradigm, generative adversarial networks (GANs; Goodfellow et al., Reference Goodfellow, Pouget-Abadie, Mirza, Xu, Warde-Farley, Ozair, Courville and Bengio2014), a celebrated framework for estimating generative models in DL and computer vision, offer greater flexibility in modeling arbitrary probability densities and can contribute to the expressiveness. Multiple variants of GANs have achieved remarkable success in complex tasks, such as image generation (e.g., Arjovsky et al., Reference Arjovsky, Chintala and Bottou2017; Radford, Reference Radford2015; Zhu, Park, et al., Reference Zhu, Park, Isola and Efros2017). Among these models, the combination of GANs and VAEs can be traced back to adversarial autoencoders (AAEs; Makhzani et al., Reference Makhzani, Shlens, Jaitly, Goodfellow and Frey2015) and the VAE-GAN model (Larsen et al., Reference Larsen, Sønderby, Larochelle and Winther2016). Figure 1 provides an intuitive visual example demonstrating how integrating GANs and VAEs can lead to better representation learning. The figure compares ten handwritten images sampled from four models: (a) GAN, (b) WGAN (a GAN variant, Arjovsky et al., Reference Arjovsky, Chintala and Bottou2017), (c) VAE, and (d) VAE-GAN. While GAN generated only the digit 1,Footnote 1 WGAN exhibited unstable performance, and the images produced by VAE appeared blurry. In contrast, VAE-GAN generated relatively clear results for most of digits. However, vanilla GANs, VAE-GANs, and AAEs rely solely on adversarial loss, formulated as a minimax game between networks to deceive a discriminator. As a result, they are not motivated from a maximum-likelihood perspective and are therefore not directly applicable for improving marginal maximum likelihood (MML) estimation in IFA.

Figure 1 Ten handwritten images sampled from model (a) GAN, (b) WGAN, (c) VAE, and (d) VAE-GAN. Adapted from Mi et al. (Reference Mi, Shen and Zhang2018).

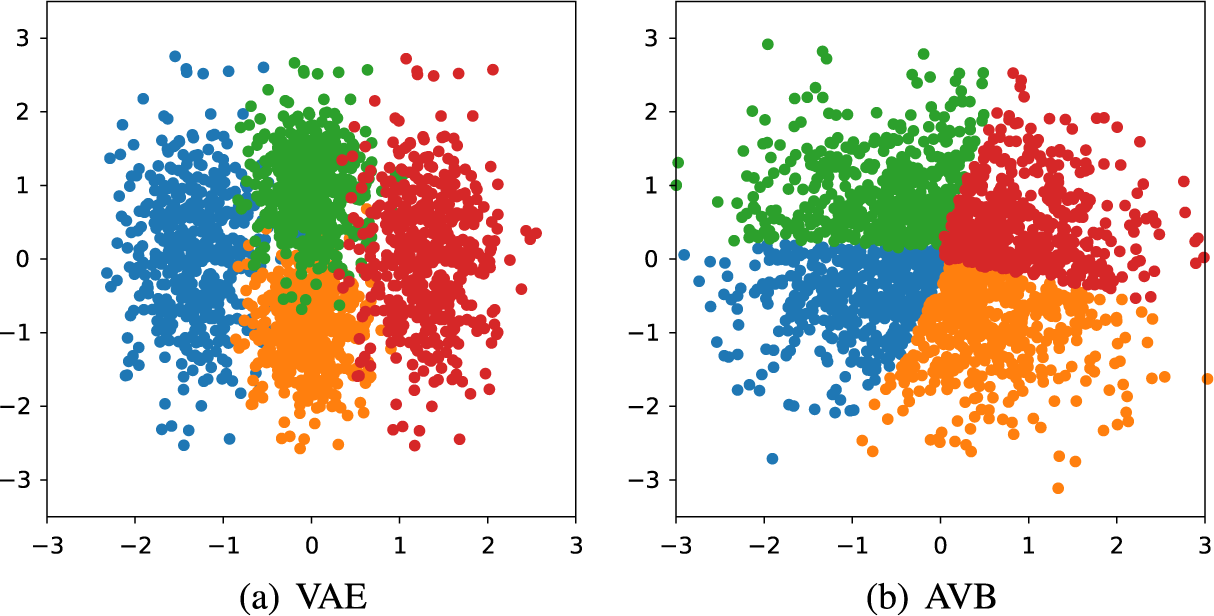

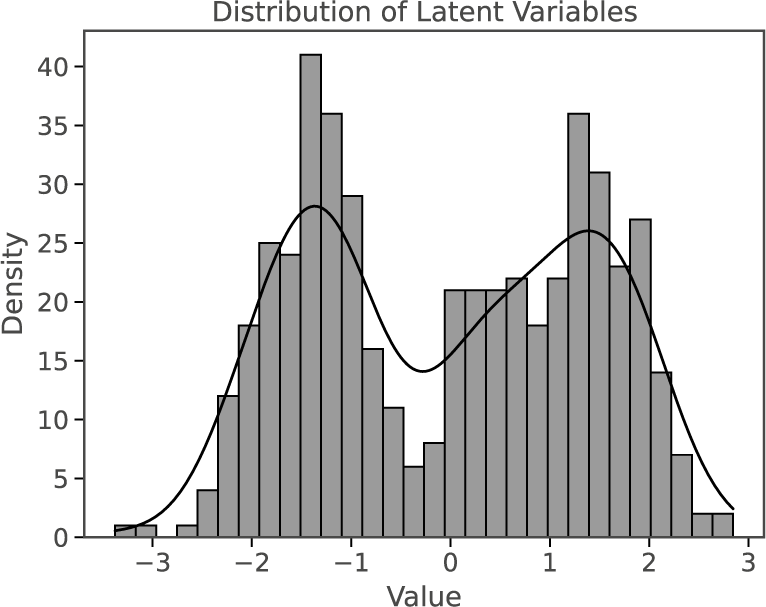

Therefore, this article focuses on an improved approach based on VAEs, adversarial variational Bayes (AVB; Mescheder et al., Reference Mescheder, Nowozin and Geiger2017), which has a flexible and general approach to construct inference models. AVB improves the traditional VAE framework by integrating elements from GANs, specifically by introducing an auxiliary discriminator network. This discriminator engages in an adversarial training process with the encoder to help the inference model produce more accurate and expressive latent variable estimates. By combining the strengths of both VAEs and GANs, AVB removes the restrictive assumption of standard normal distributions in the inference model and enables effective exploration of complex, multimodal latent spaces. Theoretical and empirical evidence has shown that AVB can achieve higher log-likelihood, lower reconstruction error, and more precise approximation of the posterior (Mescheder et al., Reference Mescheder, Nowozin and Geiger2017). For example, we consider a simple dataset containing samples from four different labels whose latent codes are uniformly distributed. Figure 2 compares the recovered latent distribution: the unallocated regions (“empty space”) and overlap between regions in VAE’s result can yield ambiguous latent codes and compromise both inference precision and the quality of generated samples, but AVB produces a posterior with well-defined boundaries around each region. This approach not only improves the flexibility and accuracy of MML estimation but also provides more potential for better handling more diverse and high-dimensional data that become increasingly common in psychological and educational research.

Figure 2 Distribution of latent variables for VAE and AVB trained on a simple synthetic dataset containing samples from four different labels.

This study proposes an innovative algorithm combining IWAE (IWAE; Burda et al., Reference Burda, Grosse and Salakhutdinov2015) with AVB to enhance the estimation of parameters for more general and complex IFA. The proposed algorithm can be regarded as an improvement of the IWAE algorithm by Urban & Bauer (Reference Urban and Bauer2021). The main contributions are two-fold: (1) the novel importance-weighted AVB (IWAVB) method is proposed for exploratory and confirmatory IFA of polytomous item response data and (2) compared with the IWAE method, which is the current state-of-the-art and was shown to be more efficient than the classical Metropolis–Hastings Robbins–Monro (MH-RM; Cai, Reference Cai2010), numerical experiment results support similarly satisfactory performance of IWAVB, with improvement when the latent variables have a multimodal distribution.

The remaining parts are organized as follows. Section 2 explains how AVB builds upon traditional VAE methods for parameter estimation for the graded response model (GRM), a general framework within IFA. Section 3 details the implementation steps of the AVB algorithm and introduces strategies for improving its overall performance. Section 4 then provides a comparative evaluation of AVB and VAE, using both empirical and simulated data. Finally, Section 5 examines potential limitations of the proposed approach and highlights directions for future development.

2 Adversarial variational Bayes for item factor analysis

In the context of IFA, given the observed responses

![]() $\boldsymbol {x}$

, the variational inference (VI) method uses an inference model

$\boldsymbol {x}$

, the variational inference (VI) method uses an inference model

![]() $q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x})$

to approximate the posterior distribution of the latent variable

$q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x})$

to approximate the posterior distribution of the latent variable

![]() ${p_{\boldsymbol {\theta }}(\boldsymbol {z}\mid \boldsymbol {x})}$

. The

${p_{\boldsymbol {\theta }}(\boldsymbol {z}\mid \boldsymbol {x})}$

. The

![]() $\boldsymbol {\phi }$

represents the parameters for the inference model, and

$\boldsymbol {\phi }$

represents the parameters for the inference model, and

![]() ${\boldsymbol {\theta }}$

includes the parameters for the posterior distributions (e.g., items’ parameters). As a specific approach to perform VI, AVB can be regraded as an improved version of VAE which constructs more expressive inference model

${\boldsymbol {\theta }}$

includes the parameters for the posterior distributions (e.g., items’ parameters). As a specific approach to perform VI, AVB can be regraded as an improved version of VAE which constructs more expressive inference model

![]() $q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x})$

, and is shown to improve the likelihood estimation of parameters for the GRM in this section.

$q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x})$

, and is shown to improve the likelihood estimation of parameters for the GRM in this section.

2.1 The graded response model

The GRM (Samejima, Reference Samejima1969) is a specific model for IFA used for analyzing ordinal response data, particularly in psychological and educational measurement. The GRM assumes responses to an item are ordered categories (e.g., Likert scale). We follow the notation as in Cai (Reference Cai2010).

Given

![]() $i=1,\dots ,N$

distinct respondents and

$i=1,\dots ,N$

distinct respondents and

![]() $j=1,\dots ,M$

items, the response for respondent i to item j in

$j=1,\dots ,M$

items, the response for respondent i to item j in

![]() $C_j$

graded categories is

$C_j$

graded categories is

![]() $x_{i,j}\in \left \{0,1,\dots ,C_j-1\right \}$

. Assuming the number of latent variables is P, the

$x_{i,j}\in \left \{0,1,\dots ,C_j-1\right \}$

. Assuming the number of latent variables is P, the

![]() $P \times 1$

vector of latent variables for respondent i is denoted as

$P \times 1$

vector of latent variables for respondent i is denoted as

![]() $\boldsymbol {z}_i$

. For item j, the

$\boldsymbol {z}_i$

. For item j, the

![]() $P \times 1$

vector of loadings is

$P \times 1$

vector of loadings is

![]() $\boldsymbol {\beta }_j$

, and the

$\boldsymbol {\beta }_j$

, and the

![]() $(C_j-1)\times 1$

vector of strictly ordered category intercepts is

$(C_j-1)\times 1$

vector of strictly ordered category intercepts is

![]() $\boldsymbol {\alpha }_j=(\alpha _{j,1},\dots ,\alpha _{j,C_j-1})^T$

. Also, the parameters of item j are denoted as

$\boldsymbol {\alpha }_j=(\alpha _{j,1},\dots ,\alpha _{j,C_j-1})^T$

. Also, the parameters of item j are denoted as

![]() $\boldsymbol {\theta }_j=(\boldsymbol {\alpha }_j,\boldsymbol {\beta }_j)$

. The GRM is defined as the boundary probability conditional on the item parameters

$\boldsymbol {\theta }_j=(\boldsymbol {\alpha }_j,\boldsymbol {\beta }_j)$

. The GRM is defined as the boundary probability conditional on the item parameters

![]() $\boldsymbol {\theta }_j$

and latent variables

$\boldsymbol {\theta }_j$

and latent variables

![]() $\boldsymbol {z}_i$

: for

$\boldsymbol {z}_i$

: for

![]() $k\in \left \{1,\dots ,C_j-1\right \}$

,

$k\in \left \{1,\dots ,C_j-1\right \}$

,

$$ \begin{align} \begin{aligned} p(x_{i,j}\geq k \mid \boldsymbol{z}_i,\boldsymbol{\theta}_j)&=\frac{1}{1+\exp\left[-(\boldsymbol{\beta}_j^T\boldsymbol{z}_i+\alpha_{j,k})\right]},\\ p(x_{i,j}\geq 0 \mid \boldsymbol{z}_i,\boldsymbol{\theta}_j)&=1,\; p(x_{i,j}\geq C_j \mid \boldsymbol{z}_i,\boldsymbol{\theta}_j)=0. \end{aligned}\end{align} $$

$$ \begin{align} \begin{aligned} p(x_{i,j}\geq k \mid \boldsymbol{z}_i,\boldsymbol{\theta}_j)&=\frac{1}{1+\exp\left[-(\boldsymbol{\beta}_j^T\boldsymbol{z}_i+\alpha_{j,k})\right]},\\ p(x_{i,j}\geq 0 \mid \boldsymbol{z}_i,\boldsymbol{\theta}_j)&=1,\; p(x_{i,j}\geq C_j \mid \boldsymbol{z}_i,\boldsymbol{\theta}_j)=0. \end{aligned}\end{align} $$

The conditional probability for a specific response

![]() $x_{i,j}=k\in \left \{0,\dots ,C_j-1\right \}$

is

$x_{i,j}=k\in \left \{0,\dots ,C_j-1\right \}$

is

2.2 Marginal maximum likelihood

MML estimates model parameters by maximizing the likelihood of the observed response data while integrating over latent variables. As a classical approach for parameter estimation in item response models, MML typically employs the expectation–maximization (EM) algorithm (e.g., Bock & Aitkin, Reference Bock and Aitkin1981; Dempster et al., Reference Dempster, Laird and Rubin1977; Johnson, Reference Johnson2007; Muraki, Reference Muraki1992; Rizopoulos, Reference Rizopoulos2007) or quadrature-based methods (e.g., Darrell Bock & Lieberman, Reference Darrell Bock and Lieberman1970; Rabe-Hesketh et al., Reference Rabe-Hesketh, Skrondal and Pickles2005). The basic concepts of MML estimation are outlined as follows.

For the respondent i and the item j, with Equation (2), the response

![]() $x_{i,j}$

follows the multinomial distribution with 1 trial and

$x_{i,j}$

follows the multinomial distribution with 1 trial and

![]() $C_j$

mutually exclusive outcomes. The probability mass function is

$C_j$

mutually exclusive outcomes. The probability mass function is ![]() , where

, where ![]() if

if

![]() $x_{i,j}=k$

and otherwise, it is 0.

$x_{i,j}=k$

and otherwise, it is 0.

Considering the response pattern

![]() $\boldsymbol {x}_i=(x_{i,j})_{j=1}^M$

of the respondent i, since each response is conditionally independent given the latent variables, the conditional probability is, for

$\boldsymbol {x}_i=(x_{i,j})_{j=1}^M$

of the respondent i, since each response is conditionally independent given the latent variables, the conditional probability is, for

![]() $\boldsymbol {\theta }=(\boldsymbol {\theta }_j)_{j=1}^M$

,

$\boldsymbol {\theta }=(\boldsymbol {\theta }_j)_{j=1}^M$

,

$$ \begin{align} p_{\boldsymbol{\theta}}(\boldsymbol{x}_i \mid \boldsymbol{z}_i)=\prod\limits_{j=1}^M p_{\boldsymbol{\theta_j}}(x_{i,j} \mid \boldsymbol{z}_i). \end{align} $$

$$ \begin{align} p_{\boldsymbol{\theta}}(\boldsymbol{x}_i \mid \boldsymbol{z}_i)=\prod\limits_{j=1}^M p_{\boldsymbol{\theta_j}}(x_{i,j} \mid \boldsymbol{z}_i). \end{align} $$

Given the prior distribution of latent variables is

![]() $p(\boldsymbol {z}_i)$

and

$p(\boldsymbol {z}_i)$

and

![]() $p_{\boldsymbol {\theta }}(\boldsymbol {x}_i \mid \boldsymbol {z}_i)$

, the marginal likelihood of

$p_{\boldsymbol {\theta }}(\boldsymbol {x}_i \mid \boldsymbol {z}_i)$

, the marginal likelihood of

![]() $\boldsymbol {x}_i$

is

$\boldsymbol {x}_i$

is

$$ \begin{align} p_{\boldsymbol{\theta}}(\boldsymbol{x}_i)=\int_{\mathbb{R}^P}\prod\limits_{j=1}^M p_{\boldsymbol{\theta_j}}(x_{i,j} \mid \boldsymbol{z}_i)p(\boldsymbol{z}_i)d\boldsymbol{z}_i. \end{align} $$

$$ \begin{align} p_{\boldsymbol{\theta}}(\boldsymbol{x}_i)=\int_{\mathbb{R}^P}\prod\limits_{j=1}^M p_{\boldsymbol{\theta_j}}(x_{i,j} \mid \boldsymbol{z}_i)p(\boldsymbol{z}_i)d\boldsymbol{z}_i. \end{align} $$

Given the full

![]() $N\times M$

responses matrix

$N\times M$

responses matrix

![]() $\boldsymbol {X}=[x_{i,j}]_{i=1,\dots ,N,\,j=1\dots ,M}$

of all the respondents, the MML estimator

$\boldsymbol {X}=[x_{i,j}]_{i=1,\dots ,N,\,j=1\dots ,M}$

of all the respondents, the MML estimator

![]() $\boldsymbol {\theta }^{\ast}$

of item parameter is achieved by maximizing the likelihood of the observed responses:

$\boldsymbol {\theta }^{\ast}$

of item parameter is achieved by maximizing the likelihood of the observed responses:

$$ \begin{align} \mathcal{L}(\boldsymbol{\theta}\mid\boldsymbol{X})=\prod_{i=1}^N p_{\boldsymbol{\theta}}(\boldsymbol{x}_i)=\prod_{i=1}^N\left[\int_{\mathbb{R}^P}\prod\limits_{j=1}^M p_{\boldsymbol{\theta_j}}(x_{i,j} \mid \boldsymbol{z}_i)p(\boldsymbol{z}_i)d\boldsymbol{z}_i\right]. \end{align} $$

$$ \begin{align} \mathcal{L}(\boldsymbol{\theta}\mid\boldsymbol{X})=\prod_{i=1}^N p_{\boldsymbol{\theta}}(\boldsymbol{x}_i)=\prod_{i=1}^N\left[\int_{\mathbb{R}^P}\prod\limits_{j=1}^M p_{\boldsymbol{\theta_j}}(x_{i,j} \mid \boldsymbol{z}_i)p(\boldsymbol{z}_i)d\boldsymbol{z}_i\right]. \end{align} $$

Since the above equation contains N integrals in the high-dimensional latent space

![]() $\mathbb {R}^P$

, directly maximizing the likelihood is computationally intensive. Instead, variational methods that the current study focuses on improving approximate

$\mathbb {R}^P$

, directly maximizing the likelihood is computationally intensive. Instead, variational methods that the current study focuses on improving approximate

![]() $\log \mathcal {L}(\boldsymbol {\theta }\mid \boldsymbol {X})$

by one lower bound and achieve efficient computation. This lower bound is derived based on the latent variable posterior

$\log \mathcal {L}(\boldsymbol {\theta }\mid \boldsymbol {X})$

by one lower bound and achieve efficient computation. This lower bound is derived based on the latent variable posterior

![]() $p_{\boldsymbol {\theta }}(\boldsymbol {z}\mid \boldsymbol {x}) = p_{\boldsymbol {\theta }}(\boldsymbol {x}\mid \boldsymbol {z})p(\boldsymbol {z})/p_{\boldsymbol {\theta }}(\boldsymbol {x})$

.

$p_{\boldsymbol {\theta }}(\boldsymbol {z}\mid \boldsymbol {x}) = p_{\boldsymbol {\theta }}(\boldsymbol {x}\mid \boldsymbol {z})p(\boldsymbol {z})/p_{\boldsymbol {\theta }}(\boldsymbol {x})$

.

2.3 Variational inference and variational autoencoder

Variational methods approximate the intractable posterior

![]() $p_{\boldsymbol {\theta }}(\boldsymbol {z}\mid \boldsymbol {x})$

with a simpler inference model

$p_{\boldsymbol {\theta }}(\boldsymbol {z}\mid \boldsymbol {x})$

with a simpler inference model

![]() $q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x})$

, and have gained increasing attention in the context of IFA (e.g., Cho et al., Reference Cho, Wang, Zhang and Xu2021; Jeon et al., Reference Jeon, Rijmen and Rabe-Hesketh2017; Urban & Bauer, Reference Urban and Bauer2021). For distributions p and q, VI aims to minimize the Kullback-Leibler (KL) divergence,

$q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x})$

, and have gained increasing attention in the context of IFA (e.g., Cho et al., Reference Cho, Wang, Zhang and Xu2021; Jeon et al., Reference Jeon, Rijmen and Rabe-Hesketh2017; Urban & Bauer, Reference Urban and Bauer2021). For distributions p and q, VI aims to minimize the Kullback-Leibler (KL) divergence,

![]() ${\text {KL}}\left (q , p\right ),$

Footnote

2

which measures the difference between p and q. This is related to the marginal log-likelihood of each response pattern

${\text {KL}}\left (q , p\right ),$

Footnote

2

which measures the difference between p and q. This is related to the marginal log-likelihood of each response pattern

![]() $\boldsymbol {x}$

, due to the following decomposition:

$\boldsymbol {x}$

, due to the following decomposition:

$$ \begin{align} \log p_{\boldsymbol{\theta}}(\boldsymbol{x}) = \text{KL}\left[q_{\boldsymbol{\phi}}(\boldsymbol{z}\mid\boldsymbol{x}) , p_{\boldsymbol{\theta}}(\boldsymbol{z}\mid\boldsymbol{x})\right] + \underbrace{\mathbb{E}_{q_{\boldsymbol{\phi}}(\boldsymbol{z}\mid\boldsymbol{x})}\left[ \log p_{\boldsymbol{\theta}}(\boldsymbol{z}, \boldsymbol{x}) - \log q_{\boldsymbol{\phi}}(\boldsymbol{z}\mid\boldsymbol{x})\right]}_{\text{ELBO}(\boldsymbol{x})}. \end{align} $$

$$ \begin{align} \log p_{\boldsymbol{\theta}}(\boldsymbol{x}) = \text{KL}\left[q_{\boldsymbol{\phi}}(\boldsymbol{z}\mid\boldsymbol{x}) , p_{\boldsymbol{\theta}}(\boldsymbol{z}\mid\boldsymbol{x})\right] + \underbrace{\mathbb{E}_{q_{\boldsymbol{\phi}}(\boldsymbol{z}\mid\boldsymbol{x})}\left[ \log p_{\boldsymbol{\theta}}(\boldsymbol{z}, \boldsymbol{x}) - \log q_{\boldsymbol{\phi}}(\boldsymbol{z}\mid\boldsymbol{x})\right]}_{\text{ELBO}(\boldsymbol{x})}. \end{align} $$

![]() $\text {KL}(q,p)$

is non-negative, so the expectation term is the evidence lower bound (ELBO). For fixed

$\text {KL}(q,p)$

is non-negative, so the expectation term is the evidence lower bound (ELBO). For fixed

![]() $\boldsymbol {\theta }$

, maximizing ELBO in

$\boldsymbol {\theta }$

, maximizing ELBO in

![]() $\boldsymbol {\phi }$

space is equivalent to minimizing

$\boldsymbol {\phi }$

space is equivalent to minimizing

![]() $\text {KL}(q,p)$

, while for fixed

$\text {KL}(q,p)$

, while for fixed

![]() $\boldsymbol {\phi }$

, increasing ELBO with respect to

$\boldsymbol {\phi }$

, increasing ELBO with respect to

![]() $\boldsymbol {\theta }$

will push the marginal likelihood

$\boldsymbol {\theta }$

will push the marginal likelihood

![]() $p_{\boldsymbol {\theta }}(\boldsymbol {x})$

higher. The best case is that if

$p_{\boldsymbol {\theta }}(\boldsymbol {x})$

higher. The best case is that if

![]() $q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x})$

exactly matches

$q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x})$

exactly matches

![]() $p_{\boldsymbol {\theta }}(\boldsymbol {z}\mid \boldsymbol {x})$

,

$p_{\boldsymbol {\theta }}(\boldsymbol {z}\mid \boldsymbol {x})$

,

![]() $\text {KL}(q,p)=0$

and the MML estimator

$\text {KL}(q,p)=0$

and the MML estimator

![]() $\boldsymbol {\theta }^{\ast}$

will also be the maximizer of ELBO. The ELBO can be further decomposed:

$\boldsymbol {\theta }^{\ast}$

will also be the maximizer of ELBO. The ELBO can be further decomposed:

The first term is the negative reconstruction loss, quantifying how accurately the estimated GRM can get the original input response

![]() $\boldsymbol {x}$

from the respondent’s latent

$\boldsymbol {x}$

from the respondent’s latent

![]() $\boldsymbol {z}$

. The second term is the KL divergence between

$\boldsymbol {z}$

. The second term is the KL divergence between

![]() $q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x})$

and

$q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x})$

and

![]() $p(\boldsymbol {z})$

, regularizing them to stay close. Considering the empirical distribution of the observed item response patterns,

$p(\boldsymbol {z})$

, regularizing them to stay close. Considering the empirical distribution of the observed item response patterns,

![]() $p_{\boldsymbol {D}}(\boldsymbol {x})$

, our objective,

$p_{\boldsymbol {D}}(\boldsymbol {x})$

, our objective,

![]() $max_{\boldsymbol {\theta },\boldsymbol {\phi }} \;\mathbb {E}_{p_{\boldsymbol {D}}(\boldsymbol {x})}\left [\text {ELBO}(\boldsymbol {x})\right ]$

, can be further derived as

$max_{\boldsymbol {\theta },\boldsymbol {\phi }} \;\mathbb {E}_{p_{\boldsymbol {D}}(\boldsymbol {x})}\left [\text {ELBO}(\boldsymbol {x})\right ]$

, can be further derived as

VAE (Kingma, Reference Kingma2013; Urban & Bauer, Reference Urban and Bauer2021) leverages VI to learn a probabilistic representation of observed variables and generate new samples. It combines ideas from DL via neural networks and statistical inference. The latent variables

![]() $\boldsymbol {z}$

are typically assumed to follow a standard normal distribution

$\boldsymbol {z}$

are typically assumed to follow a standard normal distribution

![]() $\mathcal {N}(\boldsymbol {0},\boldsymbol {I})$

, where

$\mathcal {N}(\boldsymbol {0},\boldsymbol {I})$

, where

![]() $\boldsymbol {I}$

is the identity matrix. The simpler and tractable distribution

$\boldsymbol {I}$

is the identity matrix. The simpler and tractable distribution

![]() $q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x})$

for continuous latent variables

$q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x})$

for continuous latent variables

![]() $\boldsymbol {z}$

is parameterized by a neural network as the encoder. A typical choice is the isotropic normal distribution:

$\boldsymbol {z}$

is parameterized by a neural network as the encoder. A typical choice is the isotropic normal distribution:

where

![]() $\boldsymbol {\phi }(\boldsymbol {x})=(\boldsymbol {\mu }(\boldsymbol {x}),\boldsymbol {\sigma }(\boldsymbol {x}))$

denotes the normal distribution’s configuration in the form of vector function of

$\boldsymbol {\phi }(\boldsymbol {x})=(\boldsymbol {\mu }(\boldsymbol {x}),\boldsymbol {\sigma }(\boldsymbol {x}))$

denotes the normal distribution’s configuration in the form of vector function of

![]() $\boldsymbol {x}$

for convenience;

$\boldsymbol {x}$

for convenience;

![]() $\boldsymbol {x}$

,

$\boldsymbol {x}$

,

![]() $\boldsymbol {\mu }(\boldsymbol {x})$

, and

$\boldsymbol {\mu }(\boldsymbol {x})$

, and

![]() $\boldsymbol {\sigma }(\boldsymbol {x})$

are vectors with the same dimension. Given the latent representation

$\boldsymbol {\sigma }(\boldsymbol {x})$

are vectors with the same dimension. Given the latent representation

![]() $\boldsymbol {z}$

, the decoder is the probabilistic model which reconstructs or generates data via

$\boldsymbol {z}$

, the decoder is the probabilistic model which reconstructs or generates data via

![]() $p_{\boldsymbol {\theta }}(\boldsymbol {x} \mid \boldsymbol {z})$

. However, the inference model expressed in Equation (9) might not be expressive enough to capture the true posterior (Mescheder et al., Reference Mescheder, Nowozin and Geiger2017). The KL divergence term in the ELBO requires explicit forms for both

$p_{\boldsymbol {\theta }}(\boldsymbol {x} \mid \boldsymbol {z})$

. However, the inference model expressed in Equation (9) might not be expressive enough to capture the true posterior (Mescheder et al., Reference Mescheder, Nowozin and Geiger2017). The KL divergence term in the ELBO requires explicit forms for both

![]() $q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x})$

and

$q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x})$

and

![]() $p(\boldsymbol {z})$

, which can limit the choice of posterior families. Highly expressive inference models can lead to higher log-likelihood bounds and stronger decoders making full use of the latent space (Chen et al., Reference Chen, Kingma, Salimans, Duan, Dhariwal, Schulman, Sutskever and Abbeel2016; Kingma et al., Reference Kingma, Salimans, Jozefowicz, Chen, Sutskever and Welling2016). One remedy is to replace the inference model with an implicit distribution that lacks a closed-form density and to approximate the intractable

$p(\boldsymbol {z})$

, which can limit the choice of posterior families. Highly expressive inference models can lead to higher log-likelihood bounds and stronger decoders making full use of the latent space (Chen et al., Reference Chen, Kingma, Salimans, Duan, Dhariwal, Schulman, Sutskever and Abbeel2016; Kingma et al., Reference Kingma, Salimans, Jozefowicz, Chen, Sutskever and Welling2016). One remedy is to replace the inference model with an implicit distribution that lacks a closed-form density and to approximate the intractable

![]() $\text {KL}\left [ q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x}) , p(\boldsymbol {z})\right ]$

via auxiliary networks (Shi et al., Reference Shi, Sun and Zhu2018). AVB is a representative example of this approach.

$\text {KL}\left [ q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x}) , p(\boldsymbol {z})\right ]$

via auxiliary networks (Shi et al., Reference Shi, Sun and Zhu2018). AVB is a representative example of this approach.

2.4 Adversarial variational Bayes

AVB borrows ideas from the GAN framework, which leads to remarkable results in areas, such as image generation (Goodfellow et al., Reference Goodfellow, Pouget-Abadie, Mirza, Xu, Warde-Farley, Ozair, Courville and Bengio2014) and data augmentation (Zhu, Liu, et al., Reference Zhu, Liu, Qin and Li2017). As shown in Figure 3, GANs employ adversarial training by pitting a generator against a discriminator, compelling each to enhance its performance. This competition results in highly convincing synthetic data, highlighting GANs’ power in discovering intricate patterns found in real-world samples. In the context of IFA, item response data are inputs to both the generator and discriminator. Random noise sampled from the prior distribution is also required for the generator to get “generated” latent variables, which, along with “true” samples drawn directly from the prior distribution, are then evaluated by the discriminator.

Figure 3 Schematic illustration of a standard generative adversarial network.

Note: In some GAN variants, real data serve only as true samples and are not fed into the generator. However, in the AVB application to IFA, the generator and discriminator take item response data as input, and the discriminator distinguishes between samples in the latent space.

To integrate GANs into VAE, AVB regards the inference model

![]() $q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x})$

as the generator of latent variables and replaces the explicit KL divergence computation in the VAE with an adversarially trained discriminator, allowing for more expressive inference models. To be specific, besides the Encoder network

$q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x})$

as the generator of latent variables and replaces the explicit KL divergence computation in the VAE with an adversarially trained discriminator, allowing for more expressive inference models. To be specific, besides the Encoder network

![]() $q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x})$

and the Decoder network

$q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x})$

and the Decoder network

![]() $p_{\boldsymbol {\theta }}(\boldsymbol {x} \mid \boldsymbol {z})$

, a neural network

$p_{\boldsymbol {\theta }}(\boldsymbol {x} \mid \boldsymbol {z})$

, a neural network

![]() $T_{\boldsymbol {\psi }}(\boldsymbol {x}, \boldsymbol {z})$

with parameters

$T_{\boldsymbol {\psi }}(\boldsymbol {x}, \boldsymbol {z})$

with parameters

![]() $\boldsymbol {\psi }$

as the Discriminator learns to distinguish between samples from the inference model

$\boldsymbol {\psi }$

as the Discriminator learns to distinguish between samples from the inference model

![]() $q_{\boldsymbol {\phi }}(\boldsymbol {z} \mid \boldsymbol {x})$

and the prior

$q_{\boldsymbol {\phi }}(\boldsymbol {z} \mid \boldsymbol {x})$

and the prior

![]() $p(\boldsymbol {z})$

via minimizing the binary classification loss, which can be formulated as the following objective:

$p(\boldsymbol {z})$

via minimizing the binary classification loss, which can be formulated as the following objective:

Here,

![]() $\sigma (t) := \left (1 + e^{-t}\right )^{-1}$

denotes the sigmoid-function. Intuitively,

$\sigma (t) := \left (1 + e^{-t}\right )^{-1}$

denotes the sigmoid-function. Intuitively,

![]() $T_{\boldsymbol {\psi }}(\boldsymbol {x}, \boldsymbol {z})$

tries to distinguish pairs

$T_{\boldsymbol {\psi }}(\boldsymbol {x}, \boldsymbol {z})$

tries to distinguish pairs

![]() $(\boldsymbol {x}, \boldsymbol {z})$

that are sampled independently using the distribution

$(\boldsymbol {x}, \boldsymbol {z})$

that are sampled independently using the distribution

![]() $p_{\boldsymbol {D}}(\boldsymbol {x}) p(\boldsymbol {z})$

from those that are sampled using the current inference model (i.e.,

$p_{\boldsymbol {D}}(\boldsymbol {x}) p(\boldsymbol {z})$

from those that are sampled using the current inference model (i.e.,

![]() $p_{\boldsymbol {D}}(\boldsymbol {x}) q_{\boldsymbol {\phi }}(\boldsymbol {z} \mid \boldsymbol {x})$

). Equation (10) encourages samples from

$p_{\boldsymbol {D}}(\boldsymbol {x}) q_{\boldsymbol {\phi }}(\boldsymbol {z} \mid \boldsymbol {x})$

). Equation (10) encourages samples from

![]() ${q_{\boldsymbol {\phi }}(\boldsymbol {z} \mid \boldsymbol {x})}$

but punishes samples from

${q_{\boldsymbol {\phi }}(\boldsymbol {z} \mid \boldsymbol {x})}$

but punishes samples from

![]() $p(\boldsymbol {z})$

. As shown by Mescheder et al. (Reference Mescheder, Nowozin and Geiger2017), the KL term in the ELBO can be recovered by the following theorem.

$p(\boldsymbol {z})$

. As shown by Mescheder et al. (Reference Mescheder, Nowozin and Geiger2017), the KL term in the ELBO can be recovered by the following theorem.

Theorem 1 (Mescheder et al., Reference Mescheder, Nowozin and Geiger2017).

For

![]() $p_{\boldsymbol {\theta }}(\boldsymbol {x} \mid \boldsymbol {z})$

and

$p_{\boldsymbol {\theta }}(\boldsymbol {x} \mid \boldsymbol {z})$

and

![]() $q_{\boldsymbol {\phi }}(\boldsymbol {z} \mid \boldsymbol {x})$

fixed, the optimal discriminator

$q_{\boldsymbol {\phi }}(\boldsymbol {z} \mid \boldsymbol {x})$

fixed, the optimal discriminator

![]() $T^{\ast}$

according to the Objective (10) is given by

$T^{\ast}$

according to the Objective (10) is given by

so

![]() $\text {KL}\left [ q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x}), p(\boldsymbol {z})\right ] = \mathbb {E}_{q_{\boldsymbol {\phi }}(\boldsymbol {z} \mid \boldsymbol {x})} \left (T^{\ast}(\boldsymbol {x}, \boldsymbol {z})\right )$

.

$\text {KL}\left [ q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x}), p(\boldsymbol {z})\right ] = \mathbb {E}_{q_{\boldsymbol {\phi }}(\boldsymbol {z} \mid \boldsymbol {x})} \left (T^{\ast}(\boldsymbol {x}, \boldsymbol {z})\right )$

.

On the other hand, the encoder

![]() $q_{\boldsymbol {\phi }}(\boldsymbol {z} \mid \boldsymbol {x})$

is trained adversarially to “fool” the discriminator, since if

$q_{\boldsymbol {\phi }}(\boldsymbol {z} \mid \boldsymbol {x})$

is trained adversarially to “fool” the discriminator, since if

![]() $q_{\boldsymbol {\phi }}(\boldsymbol {z} \mid \boldsymbol {x})$

is indistinguishable from

$q_{\boldsymbol {\phi }}(\boldsymbol {z} \mid \boldsymbol {x})$

is indistinguishable from

![]() $p(\boldsymbol {z})$

, then they can be regarded as similar distributions. Using the reparameterization trick, the Objective (8) can be rewritten in the form:

$p(\boldsymbol {z})$

, then they can be regarded as similar distributions. Using the reparameterization trick, the Objective (8) can be rewritten in the form:

Therefore, we need to do two optimizations for Objectives (10) and (12), summarized in Algorithm 1. Note that T ψ ( x , z ) and z ϕ ( x , ϵ ) can be implemented as neural networks, but in the context of the GRM, the decoder p θ ( x ∣ z ϕ ( x , ϵ )) corresponds directly to the likelihood definition for the GRM. As such, it is modeled by a single-layer neural network consisting of a linear function and a sigmoid activation.

2.5 Our contributions to the literature

Given that VAEs have been a powerful approach to perform exploratory IFA (Urban & Bauer, Reference Urban and Bauer2021), the innovative contribution of the AVB method is to bring richer inference model choices for the implementation by DL. Over the past decade, DL techniques have thrived (LeCun et al., Reference LeCun, Bengio and Hinton2015), and IFA has derived considerable benefits from these advances. When applying neural networks to estimate parameters in IFA, key distinctions arise in the design of the network architecture and the choice of loss function. A straightforward strategy is to feed one-hot encodingsFootnote 3 for both respondents and items into two separate feedforward neural networks (FNNs): one infers the latent variables of the respondents, while the other estimates the item parameters. This approach typically employs a variation of the joint maximum likelihood (JML) for its loss function, relying on the product of conditional probabilities in Equation (3) across all respondents (Tsutsumi et al., Reference Tsutsumi, Kinoshita and Ueno2021; Zhang & Chen, Reference Zhang and Chen2024). However, as demonstrated by Ghosh (Reference Ghosh1995), the JML solution leads to inconsistent estimates for item parameters.

An alternative that proves more statistically robust relies on the MML procedure discussed earlier (Bock & Aitkin, Reference Bock and Aitkin1981; Wirth & Edwards, Reference Wirth and Edwards2007). Drawing on these perspectives, researchers merge representation learning for latent-variable models with neural architectures by adopting autoencoder designs. Through variational methods, they approximate maximum likelihood estimation for latent variable models. A notable example in the literature is the IWAE algorithm (Urban & Bauer, Reference Urban and Bauer2021). In our study, we propose an IWAVB, refines this approach.

Building on insights from Sections 2.3 and 2.4, we observe that the major contrast between AVB and conventional VAE approaches centers on the inference model. Figure 4 visually contrasts these two approaches. While generative models demand precise techniques for latent-variable estimation, basic Gaussian assumptions might restrict the range of distributions in standard VAEs. Although researchers have attempted to design more sophisticated neural network architectures for VAE inference, these solutions may still lack the expressiveness of the black-box method employed by AVB (Mescheder et al., Reference Mescheder, Nowozin and Geiger2017).

Figure 4 Schematic comparison of the encoder and decoder designs for the AVB method and a standard VAE.

To address this limitation, the AVB framework modifies

![]() $q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x})$

into a fully black-box neural network

$q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x})$

into a fully black-box neural network

![]() $\boldsymbol {z}_{\boldsymbol {\phi }}(\boldsymbol {x}, \boldsymbol {\epsilon })$

. Rather than a standard approach where random noise is added only at the final step, AVB incorporates

$\boldsymbol {z}_{\boldsymbol {\phi }}(\boldsymbol {x}, \boldsymbol {\epsilon })$

. Rather than a standard approach where random noise is added only at the final step, AVB incorporates

![]() $\boldsymbol {\epsilon }$

as an additional input earlier in the inference process. As neural networks can almost represent any probability density in the latent space (Cybenko, Reference Cybenko1989), this design empowers the network to represent intricate probability distributions free from the Gaussian assumption, thus expanding the scope of patterns it can capture. However, such implicit

$\boldsymbol {\epsilon }$

as an additional input earlier in the inference process. As neural networks can almost represent any probability density in the latent space (Cybenko, Reference Cybenko1989), this design empowers the network to represent intricate probability distributions free from the Gaussian assumption, thus expanding the scope of patterns it can capture. However, such implicit

![]() $q_{\boldsymbol {\phi }}$

lacks a tractable density,

$q_{\boldsymbol {\phi }}$

lacks a tractable density,

![]() $\text {KL}\left [ q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x}) , p(\boldsymbol {z})\right ]$

cannot be directly computed so another discriminator neural network learns to recover

$\text {KL}\left [ q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x}) , p(\boldsymbol {z})\right ]$

cannot be directly computed so another discriminator neural network learns to recover

![]() $\text {KL}\left [ q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x}) , p(\boldsymbol {z})\right ]$

via adversarial process. The recovery of

$\text {KL}\left [ q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x}) , p(\boldsymbol {z})\right ]$

via adversarial process. The recovery of

![]() $\text {KL}\left [ q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x}) , p(\boldsymbol {z})\right ]$

imposes a prior, such as a standard normal, on

$\text {KL}\left [ q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x}) , p(\boldsymbol {z})\right ]$

imposes a prior, such as a standard normal, on

![]() $\boldsymbol {z}$

. This prior anchors the location and scale of

$\boldsymbol {z}$

. This prior anchors the location and scale of

![]() $\boldsymbol {z}$

, thereby alleviating the translational and scaling indeterminacies that cause model non-identifiability (De Ayala, Reference De Ayala2013).

$\boldsymbol {z}$

, thereby alleviating the translational and scaling indeterminacies that cause model non-identifiability (De Ayala, Reference De Ayala2013).

Through the adversarial process, the algorithm derives item parameter estimates

![]() $\boldsymbol {\theta }^{\ast}$

that optimizes the likelihood of the observed data, while also constructing a distribution

$\boldsymbol {\theta }^{\ast}$

that optimizes the likelihood of the observed data, while also constructing a distribution

![]() $q_{\boldsymbol {\phi }^{\ast}}(\boldsymbol {z}\mid \boldsymbol {x})$

that closely approximates the true posterior

$q_{\boldsymbol {\phi }^{\ast}}(\boldsymbol {z}\mid \boldsymbol {x})$

that closely approximates the true posterior

![]() $p_{\boldsymbol {\theta }^{\ast}}(\boldsymbol {z}\mid \boldsymbol {x})$

. From a theoretical perspective, Mescheder et al. (Reference Mescheder, Nowozin and Geiger2017) established that AVB can achieve performance on par with at least that of VAEs in terms of likelihood, as evidenced by the following theorem.

$p_{\boldsymbol {\theta }^{\ast}}(\boldsymbol {z}\mid \boldsymbol {x})$

. From a theoretical perspective, Mescheder et al. (Reference Mescheder, Nowozin and Geiger2017) established that AVB can achieve performance on par with at least that of VAEs in terms of likelihood, as evidenced by the following theorem.

Theorem 2 (Mescheder et al., Reference Mescheder, Nowozin and Geiger2017).

Assume that T can represent any function of

![]() $\boldsymbol {x}$

and

$\boldsymbol {x}$

and

![]() $\boldsymbol {z}$

. If

$\boldsymbol {z}$

. If

![]() $(\boldsymbol {\theta }^{\ast},\boldsymbol {\phi }^{\ast},T^{\ast})$

defines a Nash-equilibriumFootnote

4

of the two-player game defined by Equations (10) and (12), then

$(\boldsymbol {\theta }^{\ast},\boldsymbol {\phi }^{\ast},T^{\ast})$

defines a Nash-equilibriumFootnote

4

of the two-player game defined by Equations (10) and (12), then

and

![]() $(\boldsymbol {\theta }^{\ast},\boldsymbol {\phi }^{\ast})$

is a global optimum of the variational lower bound in Equation (8).

$(\boldsymbol {\theta }^{\ast},\boldsymbol {\phi }^{\ast})$

is a global optimum of the variational lower bound in Equation (8).

Under misspecification, such as inappropriate assumptions of latent variable distributions, AVB’s architecture can deliver a higher marginal likelihood theoretically. Meanwhile, by improving the marginal log-likelihood, one can reduce the reconstruction error

![]() $-\mathbb {E}_{q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x})}\left [ \log p_{\boldsymbol {\theta }}(\boldsymbol {x} \mid \boldsymbol {z}) \right ]$

, which measures the disparity between real and reconstructed data, and can be reduced by increasing marginal likelihood according to Equation (7). In the context of item response theory (IRT), AVB thus stands out by capturing a more accurate respondents’ latent traits. Research in the computer science field also highlights that the combination of GAN and VAE can extract more discriminative information and provide a more precise and clear model in the latent variable space (e.g., Hou et al., Reference Hou, Sun, Shen and Qiu2019; Makhzani et al., Reference Makhzani, Shlens, Jaitly, Goodfellow and Frey2015).

$-\mathbb {E}_{q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x})}\left [ \log p_{\boldsymbol {\theta }}(\boldsymbol {x} \mid \boldsymbol {z}) \right ]$

, which measures the disparity between real and reconstructed data, and can be reduced by increasing marginal likelihood according to Equation (7). In the context of item response theory (IRT), AVB thus stands out by capturing a more accurate respondents’ latent traits. Research in the computer science field also highlights that the combination of GAN and VAE can extract more discriminative information and provide a more precise and clear model in the latent variable space (e.g., Hou et al., Reference Hou, Sun, Shen and Qiu2019; Makhzani et al., Reference Makhzani, Shlens, Jaitly, Goodfellow and Frey2015).

3 Implementation of AVB and IWAVB

This section introduces the implementation details of the AVB method. A fundamental neural network design is provided first and to achieve better approximation and higher likelihood, the adaptive contrast (AC) and IW techniques are introduced. A summary with a detailed algorithm combining all the techniques is shown in the end.

3.1 Neural network design

Considering the schematic diagram in Figure 4(b), the encoder

![]() $\boldsymbol {z}_{\boldsymbol {\phi }}(\boldsymbol {x}, \boldsymbol {\epsilon })$

and discriminator

$\boldsymbol {z}_{\boldsymbol {\phi }}(\boldsymbol {x}, \boldsymbol {\epsilon })$

and discriminator

![]() $T_{\boldsymbol {\psi }}(\boldsymbol {x}, \boldsymbol {z})$

are designed to be multiple-layer FNNs in this study. An FNN is a type of artificial neural network where information flows in one direction, from the input layer to the output layer, through one or more hidden layers, without forming any cycles or loops. It is the simplest form of a neural network and by increasing the number of neurons in the hidden layer, any continuous function on a closed interval can be approximated at an arbitrary precision (Cybenko, Reference Cybenko1989).

$T_{\boldsymbol {\psi }}(\boldsymbol {x}, \boldsymbol {z})$

are designed to be multiple-layer FNNs in this study. An FNN is a type of artificial neural network where information flows in one direction, from the input layer to the output layer, through one or more hidden layers, without forming any cycles or loops. It is the simplest form of a neural network and by increasing the number of neurons in the hidden layer, any continuous function on a closed interval can be approximated at an arbitrary precision (Cybenko, Reference Cybenko1989).

FNN consists of layers of neurons, where each layer l is represented mathematically by the following recursive relation: given the weight matrix

![]() $\boldsymbol {W}^{(l)}$

connecting layer

$\boldsymbol {W}^{(l)}$

connecting layer

![]() $l-1$

and l, the

$l-1$

and l, the

![]() $P_{l-1}\times 1$

output vector

$P_{l-1}\times 1$

output vector

![]() $\boldsymbol {h}^{(l-1)}$

from the previous layer

$\boldsymbol {h}^{(l-1)}$

from the previous layer

![]() $l-1$

and the

$l-1$

and the

![]() $P_l\times 1$

bias vector

$P_l\times 1$

bias vector

![]() $\boldsymbol {b}^{(l)}$

for layer l, the

$\boldsymbol {b}^{(l)}$

for layer l, the

![]() $P_{l}\times 1$

output

$P_{l}\times 1$

output

![]() $\boldsymbol {h}^{(l)}$

of the neurons in layer l is defined by

$\boldsymbol {h}^{(l)}$

of the neurons in layer l is defined by

For

![]() $l=0$

,

$l=0$

,

![]() $\boldsymbol {h}^{(0)}=\boldsymbol {x}$

, the input vector to the network. If the total number of layers is L,

$\boldsymbol {h}^{(0)}=\boldsymbol {x}$

, the input vector to the network. If the total number of layers is L,

![]() $\boldsymbol {h}^{(1)},\dots ,\boldsymbol {h}^{(L-1)}$

are the hidden layers and the final output vector of the FNN is

$\boldsymbol {h}^{(1)},\dots ,\boldsymbol {h}^{(L-1)}$

are the hidden layers and the final output vector of the FNN is

![]() $\boldsymbol {h}^{(L)}$

. Therefore, the

$\boldsymbol {h}^{(L)}$

. Therefore, the

![]() $\boldsymbol {\phi }$

for encoder and

$\boldsymbol {\phi }$

for encoder and

![]() $\boldsymbol {\psi }$

for discriminator include a set of weight matrices

$\boldsymbol {\psi }$

for discriminator include a set of weight matrices

![]() $\boldsymbol {W}^{(l)}$

and bias vectors

$\boldsymbol {W}^{(l)}$

and bias vectors

![]() $\boldsymbol {b}^{(l)}$

for

$\boldsymbol {b}^{(l)}$

for

![]() $l=1,\dots ,L,$

respectively.

$l=1,\dots ,L,$

respectively.

The activation function

![]() $f(\cdot )$

applied element-wise introduces non-linearity into the network, enabling it to approximate complex functions. In this research, the Gaussian error linear unit (GELU; Hendrycks & Gimpel, Reference Hendrycks and Gimpel2016) is set as the activation functions

$f(\cdot )$

applied element-wise introduces non-linearity into the network, enabling it to approximate complex functions. In this research, the Gaussian error linear unit (GELU; Hendrycks & Gimpel, Reference Hendrycks and Gimpel2016) is set as the activation functions

![]() $f^{(1)},\dots ,f^{(L-1)}$

for the hidden layers in the encoder network and discriminator network:

$f^{(1)},\dots ,f^{(L-1)}$

for the hidden layers in the encoder network and discriminator network:

where

![]() $\Phi (x)$

is the cumulative distribution function of the standard normal distribution. GELU is continuously differentiable, which facilitates stable gradient propagation during training. Studies have demonstrated that models utilizing GELU outperform those with ReLU and exponential linear unit (ELU) activations across various tasks, including computer vision and natural language processing (Hendrycks & Gimpel, Reference Hendrycks and Gimpel2016). The final activation function for the output of the network is set to the identity function

$\Phi (x)$

is the cumulative distribution function of the standard normal distribution. GELU is continuously differentiable, which facilitates stable gradient propagation during training. Studies have demonstrated that models utilizing GELU outperform those with ReLU and exponential linear unit (ELU) activations across various tasks, including computer vision and natural language processing (Hendrycks & Gimpel, Reference Hendrycks and Gimpel2016). The final activation function for the output of the network is set to the identity function

![]() $f^{(L)}(x)=x$

.

$f^{(L)}(x)=x$

.

Parameter initialization is essential for training neural networks, as it sets initial values for weights and biases to ensure effective learning. Proper initialization prevents common training issues, such as vanishing or exploding gradients. In this study, Kaiming initialization (He et al., Reference He, Zhang, Ren and Sun2015) was chosen for the encoder and discriminator networks, as it is specifically designed for asymmetric activations such as GELU, maintaining stable variance across layers. For the decoder, viewed as a single-layer neural network with a sigmoid activation, Xavier initialization (Glorot & Bengio, Reference Glorot and Bengio2010) was applied to loading and intercept parameters to balance variance effectively.

Neural network parameters are commonly optimized using stochastic gradient descent (SGD), an iterative method that updates parameters by minimizing a loss function computed from random batches of training data (LeCun et al., Reference LeCun, Bengio and Hinton2015). Formally, parameters

![]() $\boldsymbol {\omega }_t$

(e.g.,

$\boldsymbol {\omega }_t$

(e.g.,

![]() $\boldsymbol {\theta },\boldsymbol {\phi },\boldsymbol {\psi }$

in Algorithm 1) are updated according to

$\boldsymbol {\theta },\boldsymbol {\phi },\boldsymbol {\psi }$

in Algorithm 1) are updated according to

![]() $\boldsymbol {\omega }_{t+1} = \boldsymbol {\omega }_t - \eta \nabla _{\boldsymbol {\omega }} \mathcal {L}(\boldsymbol {\omega })$

, where

$\boldsymbol {\omega }_{t+1} = \boldsymbol {\omega }_t - \eta \nabla _{\boldsymbol {\omega }} \mathcal {L}(\boldsymbol {\omega })$

, where

![]() $\eta $

is the learning rate, and

$\eta $

is the learning rate, and

![]() $\nabla _{\boldsymbol {\omega }} \mathcal {L}(\boldsymbol {\omega })$

is the gradient. Modern extensions of SGD methods, such as AdamW (Loshchilov, Reference Loshchilov2017), improve on SGD by adaptive learning rates and decoupled weight decay. AdamW has become a popular choice in optimizing neural networks due to its ability to converge faster and improve model performance by effectively mitigating overfitting caused by large parameter values (Zhuang et al., Reference Zhuang, Liu, Cutkosky and Orabona2022). Further details on initialization and AdamW optimization are provided in the Appendix.

$\nabla _{\boldsymbol {\omega }} \mathcal {L}(\boldsymbol {\omega })$

is the gradient. Modern extensions of SGD methods, such as AdamW (Loshchilov, Reference Loshchilov2017), improve on SGD by adaptive learning rates and decoupled weight decay. AdamW has become a popular choice in optimizing neural networks due to its ability to converge faster and improve model performance by effectively mitigating overfitting caused by large parameter values (Zhuang et al., Reference Zhuang, Liu, Cutkosky and Orabona2022). Further details on initialization and AdamW optimization are provided in the Appendix.

In Algorithm 1, the gradient descent step tries to force

![]() $T(\boldsymbol {x},\boldsymbol {z})$

close to

$T(\boldsymbol {x},\boldsymbol {z})$

close to

![]() $T^{\ast}(\boldsymbol {x},\boldsymbol {z})$

but the gap might fail to be sufficiently small.

$T^{\ast}(\boldsymbol {x},\boldsymbol {z})$

but the gap might fail to be sufficiently small.

![]() $T(\boldsymbol {x},\boldsymbol {z})$

tries to recover

$T(\boldsymbol {x},\boldsymbol {z})$

tries to recover

![]() $\text {KL}\left [ q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x}) , p(\boldsymbol {z})\right ]$

, but this task can be quite challenging if there’s a large difference between

$\text {KL}\left [ q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x}) , p(\boldsymbol {z})\right ]$

, but this task can be quite challenging if there’s a large difference between

![]() $q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x})$

and the prior distribution

$q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x})$

and the prior distribution

![]() $p(\boldsymbol {z})$

. Therefore, Mescheder et al. (Reference Mescheder, Nowozin and Geiger2017) also provided a technique to replace

$p(\boldsymbol {z})$

. Therefore, Mescheder et al. (Reference Mescheder, Nowozin and Geiger2017) also provided a technique to replace

![]() $\text {KL}\left [ q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x}) , p(\boldsymbol {z})\right ]$

with another KL divergence between

$\text {KL}\left [ q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x}) , p(\boldsymbol {z})\right ]$

with another KL divergence between

![]() $q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x})$

and an adaptive distribution

$q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x})$

and an adaptive distribution

![]() $r_{\alpha }(\boldsymbol {z}\mid \boldsymbol {x})$

rather than the simple, fixed Gaussian

$r_{\alpha }(\boldsymbol {z}\mid \boldsymbol {x})$

rather than the simple, fixed Gaussian

![]() $p(\boldsymbol {z})$

.

$p(\boldsymbol {z})$

.

3.2 Adaptive contrast technique

AC (Mescheder et al., Reference Mescheder, Nowozin and Geiger2017) is a technique to handle the issue of a large gap between

![]() $q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x})$

and

$q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x})$

and

![]() $p(\boldsymbol {z})$

. Instead of contrasting

$p(\boldsymbol {z})$

. Instead of contrasting

![]() $q_{\phi }(\boldsymbol {z}\mid \boldsymbol {x})$

with

$q_{\phi }(\boldsymbol {z}\mid \boldsymbol {x})$

with

![]() $p(\boldsymbol {z})$

, an auxiliary conditional distribution

$p(\boldsymbol {z})$

, an auxiliary conditional distribution

![]() $r_{\alpha }(\boldsymbol {z}\mid \boldsymbol {x})$

serves as an intermediate step to bridge their gap. Then, the Objective (8) can be rewritten as

$r_{\alpha }(\boldsymbol {z}\mid \boldsymbol {x})$

serves as an intermediate step to bridge their gap. Then, the Objective (8) can be rewritten as

Also, the

![]() $\theta $

is updating for

$\theta $

is updating for

![]() $p_{\boldsymbol {\theta }}(\boldsymbol {x} , \boldsymbol {z})$

instead of

$p_{\boldsymbol {\theta }}(\boldsymbol {x} , \boldsymbol {z})$

instead of

![]() $p_{\boldsymbol {\theta }}(\boldsymbol {x} \mid \boldsymbol {z})$

so it will also be easier to update correlation between different dimensions of latent variables.

$p_{\boldsymbol {\theta }}(\boldsymbol {x} \mid \boldsymbol {z})$

so it will also be easier to update correlation between different dimensions of latent variables.

To closely approximate the

![]() $q_{\phi }(\boldsymbol {z}\mid \boldsymbol {x})$

, a tractable density choice of

$q_{\phi }(\boldsymbol {z}\mid \boldsymbol {x})$

, a tractable density choice of

![]() $r_{\alpha }(\boldsymbol {z}\mid \boldsymbol {x})$

is a Gaussian distribution with a diagonal covariance matrix, which matches the mean

$r_{\alpha }(\boldsymbol {z}\mid \boldsymbol {x})$

is a Gaussian distribution with a diagonal covariance matrix, which matches the mean

![]() $\boldsymbol {\mu }(\boldsymbol {x})$

and variance

$\boldsymbol {\mu }(\boldsymbol {x})$

and variance

![]() $\boldsymbol {\sigma }^2(\boldsymbol {x})$

of

$\boldsymbol {\sigma }^2(\boldsymbol {x})$

of

![]() $q_{\phi }(\boldsymbol {z}\mid \boldsymbol {x})$

. Therefore,

$q_{\phi }(\boldsymbol {z}\mid \boldsymbol {x})$

. Therefore,

![]() $r_{\alpha }(\boldsymbol {z}\mid \boldsymbol {x})$

is adaptive according to updating

$r_{\alpha }(\boldsymbol {z}\mid \boldsymbol {x})$

is adaptive according to updating

![]() $\phi $

. The KL term can also be approximated by Theorem 1 and

$\phi $

. The KL term can also be approximated by Theorem 1 and

![]() $\text {KL}\left [ q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x}), r_{\alpha }(\boldsymbol {z}\mid \boldsymbol {x})\right ]$

can be much smaller than

$\text {KL}\left [ q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x}), r_{\alpha }(\boldsymbol {z}\mid \boldsymbol {x})\right ]$

can be much smaller than

![]() $\text {KL}\left [ q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x}), p(\boldsymbol {z})\right ]$

, which makes the construction of an effective discriminator easier (Mescheder et al., Reference Mescheder, Nowozin and Geiger2017). Moreover, given the distribution of the normalized vector

$\text {KL}\left [ q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x}), p(\boldsymbol {z})\right ]$

, which makes the construction of an effective discriminator easier (Mescheder et al., Reference Mescheder, Nowozin and Geiger2017). Moreover, given the distribution of the normalized vector

![]() $\tilde {\boldsymbol {z}}$

,

$\tilde {\boldsymbol {z}}$

,

![]() $\tilde {q}_{\boldsymbol {\phi }}(\tilde {\boldsymbol {z}}\mid \boldsymbol {x})$

, reparameterization trick can further simplify the KL term into

$\tilde {q}_{\boldsymbol {\phi }}(\tilde {\boldsymbol {z}}\mid \boldsymbol {x})$

, reparameterization trick can further simplify the KL term into

By using this reparameterization, we allow

![]() $T(\boldsymbol {x},\boldsymbol {z})$

to focus only on deviations of

$T(\boldsymbol {x},\boldsymbol {z})$

to focus only on deviations of

![]() $q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x})$

from a Gaussian distribution’s shape, rather than its location and scale. This refinement reduces the complexity of the adversarial task and improves the stability and accuracy of the learning process, ultimately enhancing the quality of the VI approximation. Moreover, as

$q_{\boldsymbol {\phi }}(\boldsymbol {z}\mid \boldsymbol {x})$

from a Gaussian distribution’s shape, rather than its location and scale. This refinement reduces the complexity of the adversarial task and improves the stability and accuracy of the learning process, ultimately enhancing the quality of the VI approximation. Moreover, as

![]() $p_{\boldsymbol {\theta }}(\boldsymbol {x},\boldsymbol {z}) = p_{\boldsymbol {\theta }}(\boldsymbol {x} \mid \boldsymbol {z})p(\boldsymbol {z})$

, compared to the Objective (8), the Objective (16) can simultaneously estimate the factor correlation

$p_{\boldsymbol {\theta }}(\boldsymbol {x},\boldsymbol {z}) = p_{\boldsymbol {\theta }}(\boldsymbol {x} \mid \boldsymbol {z})p(\boldsymbol {z})$

, compared to the Objective (8), the Objective (16) can simultaneously estimate the factor correlation

![]() $\boldsymbol {\Sigma }$

if

$\boldsymbol {\Sigma }$

if

![]() $\boldsymbol {\Sigma }$

can be introduced into

$\boldsymbol {\Sigma }$

can be introduced into

![]() $p(\boldsymbol {z})$

as a new parameter.

$p(\boldsymbol {z})$

as a new parameter.

3.3 Importance-weighted technique

IW variational inference (IWVI; Burda et al., Reference Burda, Grosse and Salakhutdinov2015) connects VI with the MML estimation. Instead of maximizing the ELBO in Equation (6), the amortized IWVI now maximizes the importance-weighted ELBO (IW-ELBO):

$$ \begin{align} \boldsymbol{z}_{1:R} &\sim \prod\limits_{r=1}^R q_{\boldsymbol{\phi}}(\boldsymbol{z}_r \mid \boldsymbol{x}), w_r = p_{\boldsymbol{\theta}}(\boldsymbol{z}_r,\boldsymbol{x}) / q_{\boldsymbol{\phi}}(\boldsymbol{z}_r \mid \boldsymbol{x})\nonumber\\ \text{IW-ELBO} &= \mathbb{E}_{\boldsymbol{z}_{1:R}}\left[\log \frac{1}{R}\sum\limits_{r=1}^R w_r\right] \leq \log p_{\boldsymbol{\theta}}(\boldsymbol{x}). \end{align} $$

$$ \begin{align} \boldsymbol{z}_{1:R} &\sim \prod\limits_{r=1}^R q_{\boldsymbol{\phi}}(\boldsymbol{z}_r \mid \boldsymbol{x}), w_r = p_{\boldsymbol{\theta}}(\boldsymbol{z}_r,\boldsymbol{x}) / q_{\boldsymbol{\phi}}(\boldsymbol{z}_r \mid \boldsymbol{x})\nonumber\\ \text{IW-ELBO} &= \mathbb{E}_{\boldsymbol{z}_{1:R}}\left[\log \frac{1}{R}\sum\limits_{r=1}^R w_r\right] \leq \log p_{\boldsymbol{\theta}}(\boldsymbol{x}). \end{align} $$

If the number of IW samples

![]() $R=1$

, the IW-ELBO is reduced to the ELBO. As R increases, IW-ELBO becomes more similar to the marginal likelihood than ELBO (Burda et al., Reference Burda, Grosse and Salakhutdinov2015). Therefore, optimizing IW-ELBO, which is the IW Objective (8) or (16), achieves a better approximation to the MML estimator with slightly lower computational efficiency due to more samples but GPU computation source can ensure the computation time under control and even less than the time required by traditional CPU computation.

$R=1$

, the IW-ELBO is reduced to the ELBO. As R increases, IW-ELBO becomes more similar to the marginal likelihood than ELBO (Burda et al., Reference Burda, Grosse and Salakhutdinov2015). Therefore, optimizing IW-ELBO, which is the IW Objective (8) or (16), achieves a better approximation to the MML estimator with slightly lower computational efficiency due to more samples but GPU computation source can ensure the computation time under control and even less than the time required by traditional CPU computation.

The unbiased estimator for the gradient of the IW-ELBO w.r.t.

![]() $\boldsymbol {\xi }=(\boldsymbol {\theta }^T,\boldsymbol {\phi }^T)$

can be represented as follows:

$\boldsymbol {\xi }=(\boldsymbol {\theta }^T,\boldsymbol {\phi }^T)$

can be represented as follows:

$$ \begin{align} \nabla_{\boldsymbol{\xi}}\mathbb{E}_{\boldsymbol{z}_{1:R}}\left[ \log \frac{1}{R}\sum\limits_{r=1}^R w_r\right] = \mathbb{E}_{\boldsymbol{\epsilon}_{1:R}}\left[ \sum\limits_{r=1}^R \tilde{w}_r \nabla_{\boldsymbol{\xi}} \log w_r\right]\approx \frac{1}{S}\sum\limits_{s=1}^S\left[ \sum\limits_{r=1}^R \tilde{w}_{r,s} \nabla_{\boldsymbol{\xi}} \log w_{r,s}\right], \end{align} $$

$$ \begin{align} \nabla_{\boldsymbol{\xi}}\mathbb{E}_{\boldsymbol{z}_{1:R}}\left[ \log \frac{1}{R}\sum\limits_{r=1}^R w_r\right] = \mathbb{E}_{\boldsymbol{\epsilon}_{1:R}}\left[ \sum\limits_{r=1}^R \tilde{w}_r \nabla_{\boldsymbol{\xi}} \log w_r\right]\approx \frac{1}{S}\sum\limits_{s=1}^S\left[ \sum\limits_{r=1}^R \tilde{w}_{r,s} \nabla_{\boldsymbol{\xi}} \log w_{r,s}\right], \end{align} $$

where

![]() $\boldsymbol {\epsilon }_{1:R}\sim \prod _{r=1}^R\mathcal {N}(\boldsymbol {\epsilon }_r)$

and

$\boldsymbol {\epsilon }_{1:R}\sim \prod _{r=1}^R\mathcal {N}(\boldsymbol {\epsilon }_r)$

and

![]() $\tilde {w}_r = w_r / \sum _{r'=1}^R w_{r'}$

(Urban & Bauer, Reference Urban and Bauer2021). However, increasing R might make the gradient estimator with respect to

$\tilde {w}_r = w_r / \sum _{r'=1}^R w_{r'}$

(Urban & Bauer, Reference Urban and Bauer2021). However, increasing R might make the gradient estimator with respect to

![]() $\boldsymbol {\phi }$

in the encoder network become completely random and lead to inefficient computation (Rainforth et al., Reference Rainforth, Kosiorek, Le, Maddison, Igl, Wood and Teh2018). The

$\boldsymbol {\phi }$

in the encoder network become completely random and lead to inefficient computation (Rainforth et al., Reference Rainforth, Kosiorek, Le, Maddison, Igl, Wood and Teh2018). The

![]() $\boldsymbol {\theta }$

in the decoder does not have this problem. Doubly reparameterized gradient (DReG; Tucker et al., Reference Tucker, Lawson, Gu and Maddison2018; Urban & Bauer, Reference Urban and Bauer2021) estimator can be used to change

$\boldsymbol {\theta }$

in the decoder does not have this problem. Doubly reparameterized gradient (DReG; Tucker et al., Reference Tucker, Lawson, Gu and Maddison2018; Urban & Bauer, Reference Urban and Bauer2021) estimator can be used to change

![]() $\nabla _{\boldsymbol {\phi }}\mathbb {E}_{\boldsymbol {z}_{1:R}}\left [ \log \frac {1}{R}\sum _{r=1}^R w_r\right ]$

which attains lower variance:

$\nabla _{\boldsymbol {\phi }}\mathbb {E}_{\boldsymbol {z}_{1:R}}\left [ \log \frac {1}{R}\sum _{r=1}^R w_r\right ]$

which attains lower variance:

$$ \begin{align} \begin{aligned} \nabla_{\boldsymbol{\phi}}\mathbb{E}_{\boldsymbol{z}_{1:R}}\left[ \log \frac{1}{R}\sum\limits_{r=1}^R w_r\right] &= \mathbb{E}_{\boldsymbol{\epsilon}_{1:R}}\left[ \sum\limits_{r=1}^R \tilde{w}_r^2 \frac{\partial \log w_r}{\partial \boldsymbol{z}_r}\frac{\partial\boldsymbol{z}_r}{\partial\boldsymbol{\phi}}\right]\\ &\approx \frac{1}{S}\sum\limits_{s=1}^S\left[ \sum\limits_{r=1}^R \tilde{w}_{r,s}^2 \frac{\partial \log w_{r,s}}{\partial \boldsymbol{z}_{r,s}}\frac{\partial\boldsymbol{z}_{r,s}}{\partial\boldsymbol{\phi}}\right]. \end{aligned} \end{align} $$

$$ \begin{align} \begin{aligned} \nabla_{\boldsymbol{\phi}}\mathbb{E}_{\boldsymbol{z}_{1:R}}\left[ \log \frac{1}{R}\sum\limits_{r=1}^R w_r\right] &= \mathbb{E}_{\boldsymbol{\epsilon}_{1:R}}\left[ \sum\limits_{r=1}^R \tilde{w}_r^2 \frac{\partial \log w_r}{\partial \boldsymbol{z}_r}\frac{\partial\boldsymbol{z}_r}{\partial\boldsymbol{\phi}}\right]\\ &\approx \frac{1}{S}\sum\limits_{s=1}^S\left[ \sum\limits_{r=1}^R \tilde{w}_{r,s}^2 \frac{\partial \log w_{r,s}}{\partial \boldsymbol{z}_{r,s}}\frac{\partial\boldsymbol{z}_{r,s}}{\partial\boldsymbol{\phi}}\right]. \end{aligned} \end{align} $$

One single Monte Carlo sample (i.e.,

![]() $S=1$

) is enough to attain a satisfactory approximation to the gradient estimators in practice (Burda et al., Reference Burda, Grosse and Salakhutdinov2015; Tucker et al., Reference Tucker, Lawson, Gu and Maddison2018; Urban & Bauer, Reference Urban and Bauer2021).

$S=1$

) is enough to attain a satisfactory approximation to the gradient estimators in practice (Burda et al., Reference Burda, Grosse and Salakhutdinov2015; Tucker et al., Reference Tucker, Lawson, Gu and Maddison2018; Urban & Bauer, Reference Urban and Bauer2021).

3.4 Implementation summary

In summary, Algorithm 2 shows the implementation details of the IWAVB method.

Determining an optimal neural network configuration (e.g., the number and size of hidden layers) and other hyperparameters, such as learning rates, is critical for satisfactory performance. GAN-based methods like AVB are particularly sensitive to these settings since they involve two interacting neural networks (Lucic et al., Reference Lucic, Kurach, Michalski, Gelly and Bousquet2018; Roth et al., Reference Roth, Lucchi, Nowozin and Hofmann2017). However, there is no panacea solution, as the architecture often depends on the specific application and the characteristics of the data. Empirical studies have shown that one or two hidden layers are typically sufficient to represent most continuous functions efficiently (Huang, Reference Huang2003; Huang & Babri, Reference Huang and Babri1997), and a maximum of

![]() $2n+1$

nodes can capture complex patterns (Hecht-Nielsen, Reference Hecht-Nielsen1987). In the current study, various experimental settings were explored, and hyperparameters that yielded the best results were selected. Experiments demonstrated that enhancing discriminator complexity (increased width or depth) generally improved feature learning, subsequently benefiting generator performance. However, an excessively complex discriminator negatively impacted generator learning, highlighting the necessity of careful hyperparameter tuning to maintain a proper balance.

$2n+1$

nodes can capture complex patterns (Hecht-Nielsen, Reference Hecht-Nielsen1987). In the current study, various experimental settings were explored, and hyperparameters that yielded the best results were selected. Experiments demonstrated that enhancing discriminator complexity (increased width or depth) generally improved feature learning, subsequently benefiting generator performance. However, an excessively complex discriminator negatively impacted generator learning, highlighting the necessity of careful hyperparameter tuning to maintain a proper balance.

The learning rate in the AdamW gradient update significantly impacts the model’s efficiency and effectiveness, as this hyperparameter affects the speed at which the model converges to an optimal solution. A learning rate that is too small may result in exceedingly slow learning or convergence to an undesired local minimum, while a learning rate that is too large can cause oscillations between different local minima. To address these challenges, cyclical learning rate (CLR) schedules (Smith, Reference Smith2017) have been developed to dynamically adjust the learning rate throughout the training process. Unlike traditional fixed or monotonically decreasing learning rates, CLR oscillates the learning rate within predefined bounds, enabling the model to escape local minima and explore the optima more effectively. To ensure that the discriminator can learn more rapidly and provide more informative gradients to the generator, the discriminator can be assigned a higher learning rate compared to the generator (Heusel et al., Reference Heusel, Ramsauer, Unterthiner, Nessler and Hochreiter2017). The base values for the CLR were selected from the range

![]() $\{1,2,3,4,5,6,7,8,9,10\} \times 10^{-3}$

for the discriminator and

$\{1,2,3,4,5,6,7,8,9,10\} \times 10^{-3}$

for the discriminator and

![]() $\{1,2,3,4,5,6,7,8,9,10\} \times 10^{-4}$

for the encoder, and the upper bound was set to five times the base value. Experiments were conducted to identify the optimal base values for different tasks.

$\{1,2,3,4,5,6,7,8,9,10\} \times 10^{-4}$

for the encoder, and the upper bound was set to five times the base value. Experiments were conducted to identify the optimal base values for different tasks.

To ensure the convergence of the algorithm, the IW-ELBO value for each mini-batch is recorded. Every 100 fitting iterations, the average IW-ELBO across these 100 mini-batches is calculated. If this average does not improve after 500 such calculations, the fitting process is terminated, and convergence is considered achieved.

In exploratory studies, although different optimization runs may produce varied estimates, some results can be shown to be equivalent after a suitable transformation. The equivalence is checked by comparing the loading matrices from each run, following the process suggested by Urban & Bauer (Reference Urban and Bauer2021). First, the factors are rotated using the Geomin oblique rotation method (Yates, Reference Yates1987). Next, any factor whose loadings sum to a negative value is reversed (Asparouhov & Muthén, Reference Asparouhov and Muthén2009) so that all factors point in the same direction. Then, one matrix is chosen as a reference while the columns in the other matrices are rearranged to reduce the mean-squared error between matching entries. After aligning the matrices, Tucker’s congruence coefficient is calculated (Lorenzo-Seva & Ten Berge, Reference Lorenzo-Seva and Ten Berge2006); if this value is above 0.98, the solutions are considered equivalent (MacCallum et al., Reference MacCallum, Widaman, Zhang and Hong1999). The same process of inversion and rearrangement is applied to factor correlation matrices, where both rows and columns are reordered for a direct comparison.

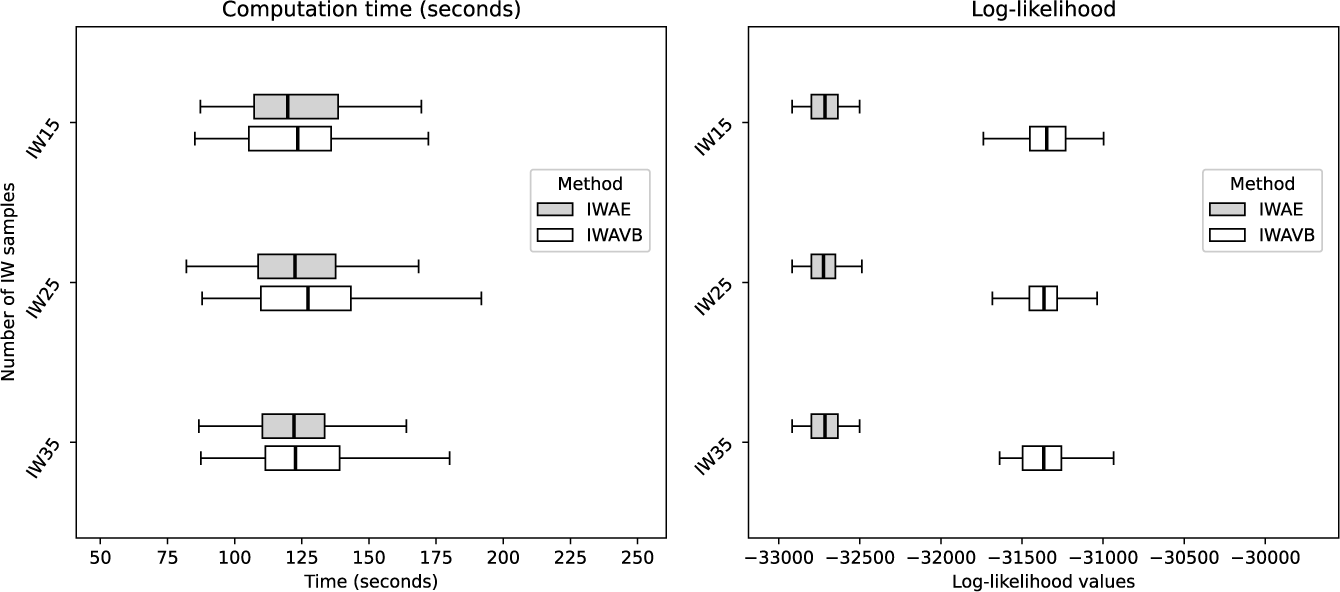

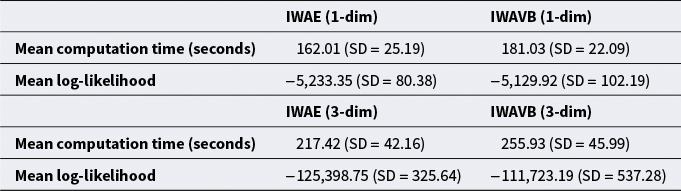

4 Experiment results of the comparison between AVB and VAE

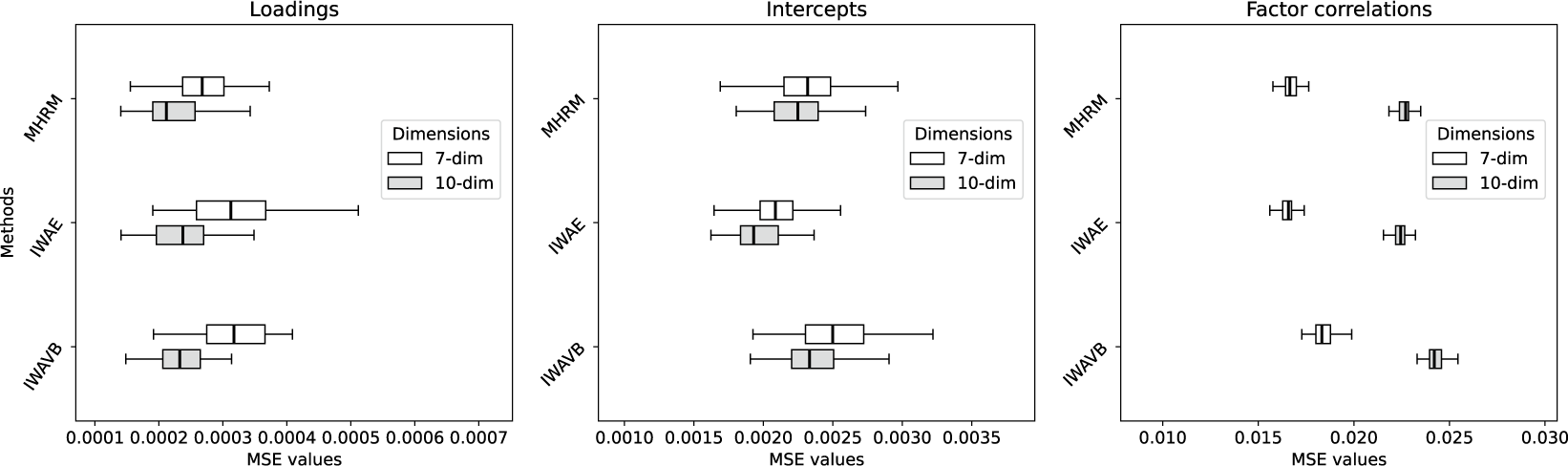

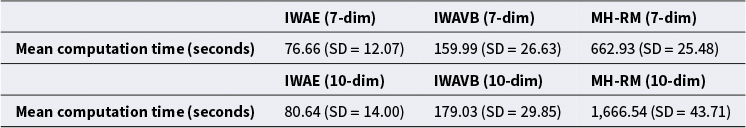

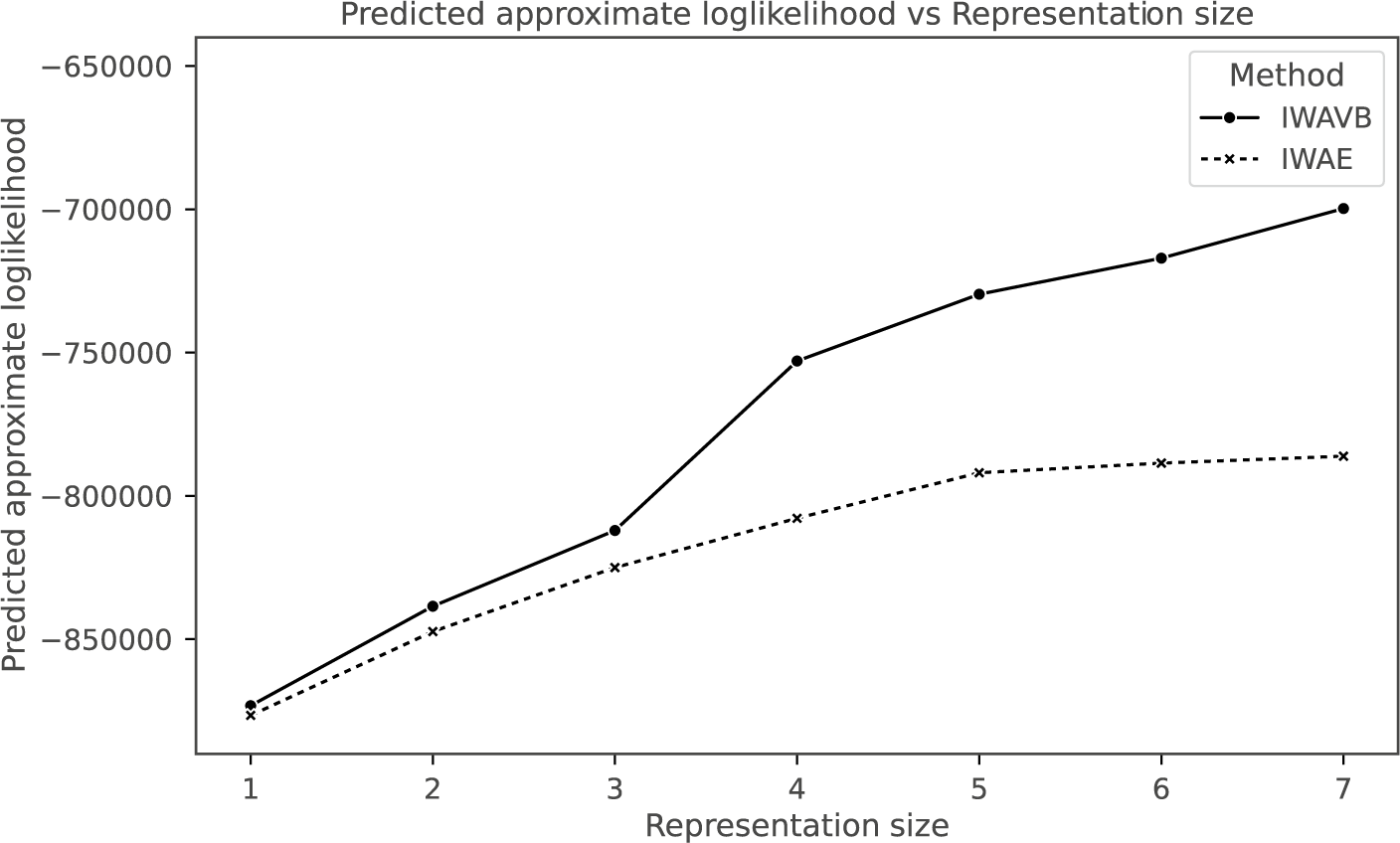

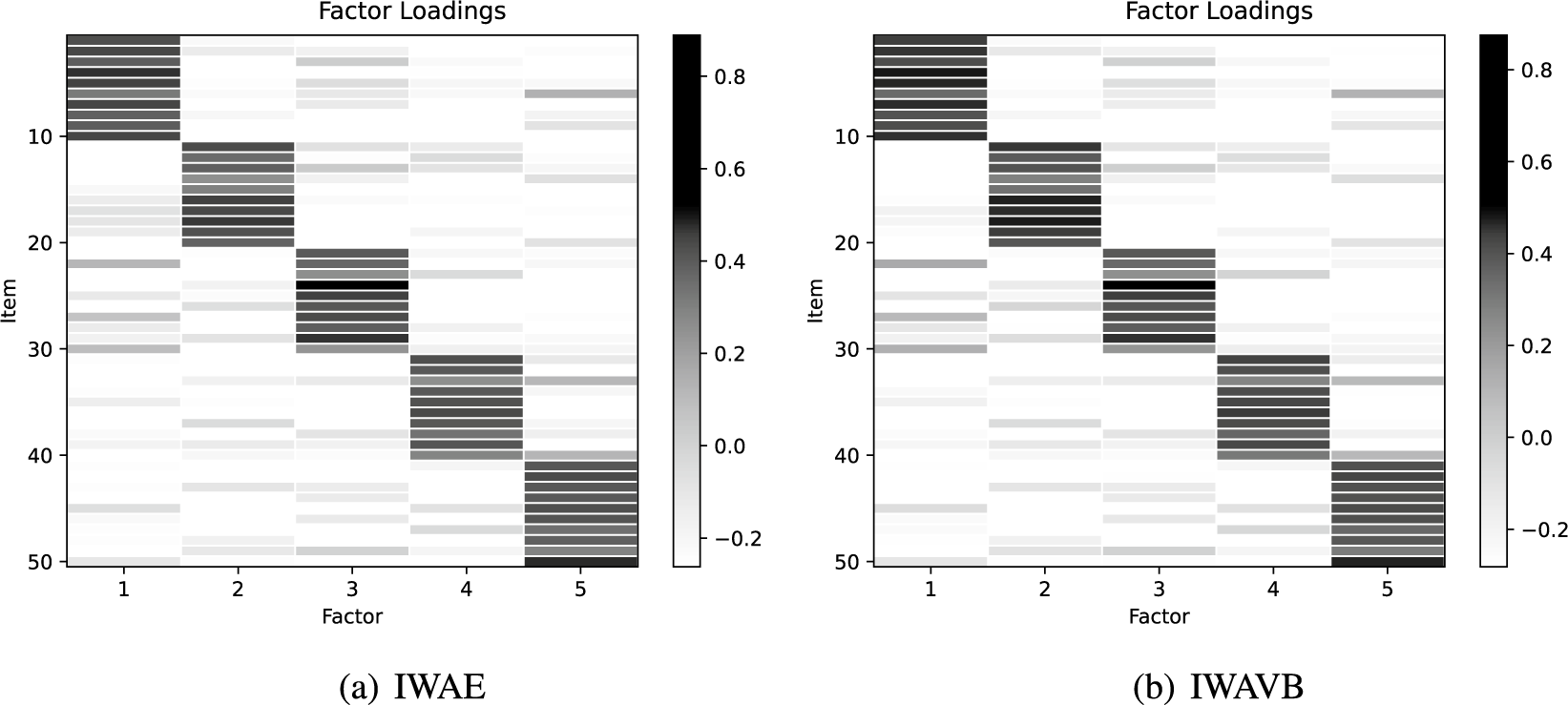

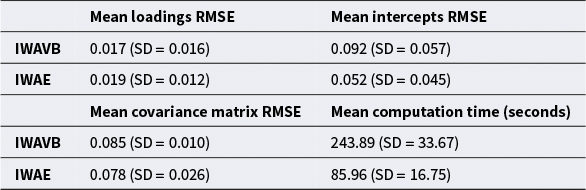

This section describes the results of the empirical study and simulation studies comparing the IWAVB and the IWAE by Urban & Bauer (Reference Urban and Bauer2021). IWAE was shown to be more efficient (Urban & Bauer, Reference Urban and Bauer2021) than the popular MH-RM algorithm (Cai, Reference Cai2010), while IWAVB achieved similar finite sample performance as IWAE in normal latent variables cases but outperformed it when handling more complex latent variables distribution. The algorithms were implemented in PyTorch 2.6.0. All experiments were conducted on a Kaggle computational environment equipped with an Intel(R) Xeon(R) CPU @ 2.00 GHz (4 vCPUs) and two Tesla T4 GPUs (each with 16 GB of memory).

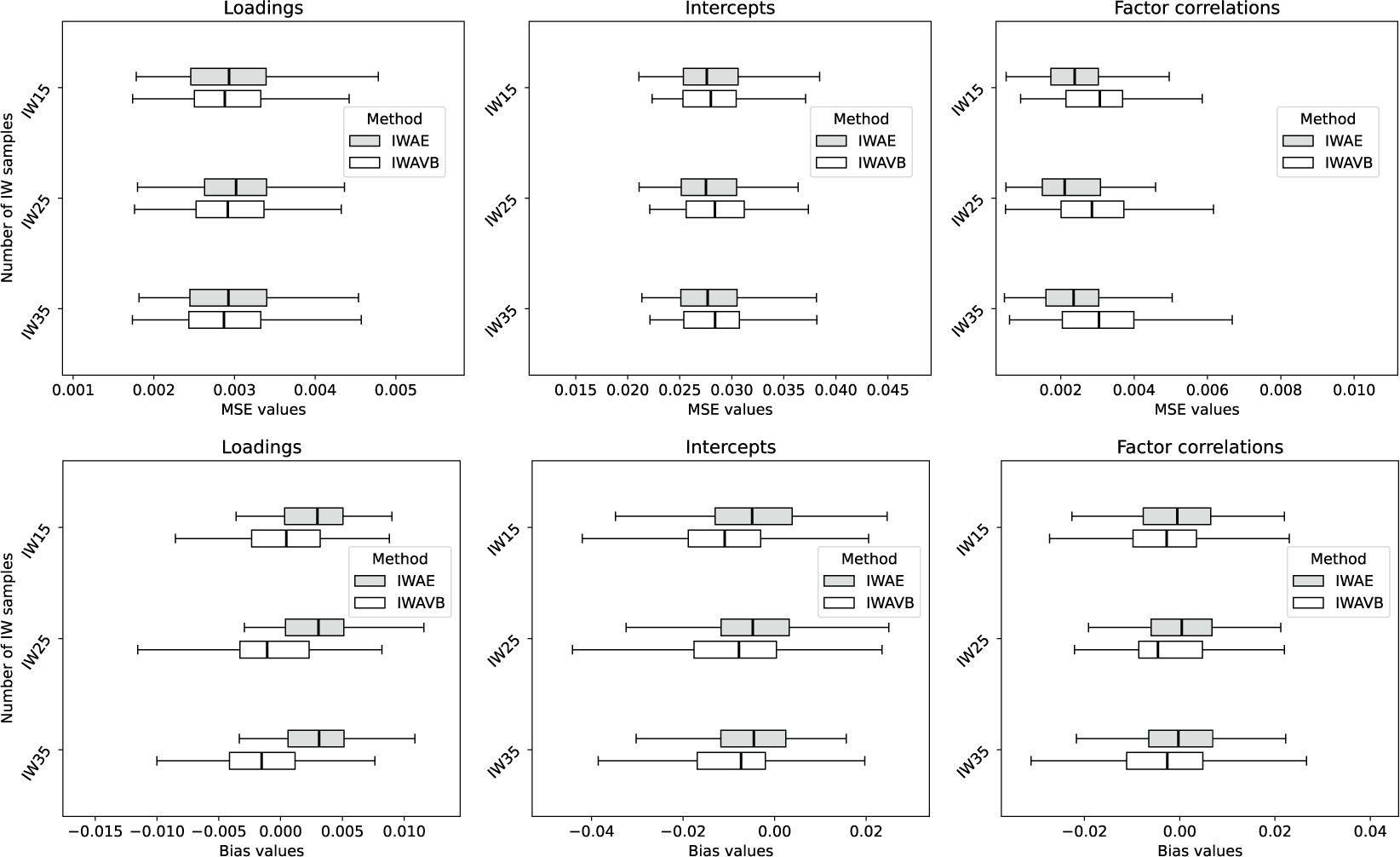

4.1 Simulation study: Latent variables following normal distribution

4.1.1 Comparison with IWAE: Importance-weighting technique