1 Introduction

Notation3 Logic (

![]() $N_3$

) is an extension of the Resource Description Framework (RDF) which allows the user to quote graphs, to express rules, and to apply built-in functions on the components of RDF triples (Woensel et al. Reference Woensel, Arndt, Champin, Tomaszuk and Kellogg2023; Berners-Lee et al. Reference Berners-Lee, Connolly, Kagal, Scharf and Hendler2008). Facilitated by reasoners like cwm (Berners-Lee Reference Berners-Lee2009), Data-Fu (Harth and Käfer Reference Harth and Käfer2018), or EYE (Verborgh and De Roo Reference Verborgh and De roo2015),

$N_3$

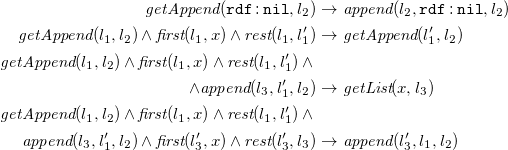

) is an extension of the Resource Description Framework (RDF) which allows the user to quote graphs, to express rules, and to apply built-in functions on the components of RDF triples (Woensel et al. Reference Woensel, Arndt, Champin, Tomaszuk and Kellogg2023; Berners-Lee et al. Reference Berners-Lee, Connolly, Kagal, Scharf and Hendler2008). Facilitated by reasoners like cwm (Berners-Lee Reference Berners-Lee2009), Data-Fu (Harth and Käfer Reference Harth and Käfer2018), or EYE (Verborgh and De Roo Reference Verborgh and De roo2015),

![]() $N_3$

rules directly consume and produce RDF graphs. This makes

$N_3$

rules directly consume and produce RDF graphs. This makes

![]() $N_3$

well-suited for rule exchange on the Web.

$N_3$

well-suited for rule exchange on the Web.

![]() $N_3$

supports the introduction of new blank nodes through rules, that is, if a blank node appears in the headFootnote

1

of a rule, each new match for the rule body produces a new instance of the rule’s head containing fresh blank nodes. This feature is interesting for many use cases – mappings between different vocabularies include blank nodes, workflow composition deals with unknown existing instances (Verborgh et al. Reference Verborgh, Arndt, Van hoecke, De roo, Mels, Steiner and Gabarró2017) – but it also impedes reasoning tasks: from a logical point of view these rules contain existentially quantified variables in their heads. Reasoning with such rules is known to be undecidable in general and very complex on decidable cases (Baget et al. Reference Baget, Leclère, Mugnier and Salvat2011; Krötzsch et al. Reference Krötzsch, Marx, Rudolph, Barceló and Calautti2019).

$N_3$

supports the introduction of new blank nodes through rules, that is, if a blank node appears in the headFootnote

1

of a rule, each new match for the rule body produces a new instance of the rule’s head containing fresh blank nodes. This feature is interesting for many use cases – mappings between different vocabularies include blank nodes, workflow composition deals with unknown existing instances (Verborgh et al. Reference Verborgh, Arndt, Van hoecke, De roo, Mels, Steiner and Gabarró2017) – but it also impedes reasoning tasks: from a logical point of view these rules contain existentially quantified variables in their heads. Reasoning with such rules is known to be undecidable in general and very complex on decidable cases (Baget et al. Reference Baget, Leclère, Mugnier and Salvat2011; Krötzsch et al. Reference Krötzsch, Marx, Rudolph, Barceló and Calautti2019).

Even though recent projects like jen3Footnote

2

or RoXi (Bonte and Ongenae Reference Bonte and Ongenae2023) aim at improving the situation, the number of fast

![]() $N_3$

reasoners fully supporting blank node introduction is low. This is different for reasoners acting on existential rules, a concept very similar to blank-node-producing rules in

$N_3$

reasoners fully supporting blank node introduction is low. This is different for reasoners acting on existential rules, a concept very similar to blank-node-producing rules in

![]() $N_3$

, but developed for databases. Sometimes it is necessary to uniquely identify data by a value that is not already part of the target database. One tool to achieve that are labeled nulls which – just as blank nodes – indicate the existence of a value. This problem from databases and the observation that rules may provide a powerful, yet declarative, means of computing has led to more extensive studies of existential rules (Baget et al. Reference Baget, Leclère, Mugnier and Salvat2011; Calì et al. Reference Calì, Gottlob, Pieris, Hitzler and Lukasiewicz2010). Many reasoners like for example VLog (Carral et al. Reference Carral, Dragoste, González, Jacobs, Krötzsch, Urbani and Ghidini2019) or Nemo (Ivliev et al. Reference Ivliev, Ellmauthaler, Gerlach, Marx, Meissner, Meusel, Krötzsch, Pontelli, Costantini, Dodaro, Gaggl, Calegari, d’Avila Garcez, Fabiano, Mileo, Russo and Toni2023) apply dedicated strategies to optimize reasoning with existential rules.

$N_3$

, but developed for databases. Sometimes it is necessary to uniquely identify data by a value that is not already part of the target database. One tool to achieve that are labeled nulls which – just as blank nodes – indicate the existence of a value. This problem from databases and the observation that rules may provide a powerful, yet declarative, means of computing has led to more extensive studies of existential rules (Baget et al. Reference Baget, Leclère, Mugnier and Salvat2011; Calì et al. Reference Calì, Gottlob, Pieris, Hitzler and Lukasiewicz2010). Many reasoners like for example VLog (Carral et al. Reference Carral, Dragoste, González, Jacobs, Krötzsch, Urbani and Ghidini2019) or Nemo (Ivliev et al. Reference Ivliev, Ellmauthaler, Gerlach, Marx, Meissner, Meusel, Krötzsch, Pontelli, Costantini, Dodaro, Gaggl, Calegari, d’Avila Garcez, Fabiano, Mileo, Russo and Toni2023) apply dedicated strategies to optimize reasoning with existential rules.

This paper aims to make existing and future optimizations on existential rules usable in the Semantic Web. We introduce a subset of

![]() $N_3$

supporting existential quantification but ignoring features of the language not covered in existential rules, like for example built-in functions or lists. We provide a mapping between this logic and existential rules: The mapping and its inverse both preserve equivalences of formulae, enabling

$N_3$

supporting existential quantification but ignoring features of the language not covered in existential rules, like for example built-in functions or lists. We provide a mapping between this logic and existential rules: The mapping and its inverse both preserve equivalences of formulae, enabling

![]() $N_3$

reasoning via existential rule technologies. We discuss how the framework can be extended to also support lists – a feature of

$N_3$

reasoning via existential rule technologies. We discuss how the framework can be extended to also support lists – a feature of

![]() $N_3$

used in many practical applications, for example to support n-ary predicates. We implement the defined mapping in python and compare the reasoning performance of the existential rule reasoners Vlog and Nemo, and the

$N_3$

used in many practical applications, for example to support n-ary predicates. We implement the defined mapping in python and compare the reasoning performance of the existential rule reasoners Vlog and Nemo, and the

![]() $N_3$

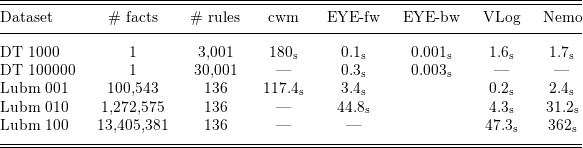

reasoners EYE and cwm for two benchmarks: one applying a fixed set of rules on a varying size of facts, and one applying a varying set of highly dependent rules to a fixed set of facts. In our tests VLog and Nemo together with our mapping outperform the traditional

$N_3$

reasoners EYE and cwm for two benchmarks: one applying a fixed set of rules on a varying size of facts, and one applying a varying set of highly dependent rules to a fixed set of facts. In our tests VLog and Nemo together with our mapping outperform the traditional

![]() $N_3$

reasoners EYE and cwm when dealing with a high number of facts while EYE is the fastest on large dependent rule sets. This is a strong indication that our implementation will be of practical use when extended by further features.

$N_3$

reasoners EYE and cwm when dealing with a high number of facts while EYE is the fastest on large dependent rule sets. This is a strong indication that our implementation will be of practical use when extended by further features.

We motivate our approach by providing examples of

![]() $N_3$

and existential rule formulae, and discuss how these are connected, in Section 2. In Section 3 we provide a more formal definition of Existential

$N_3$

and existential rule formulae, and discuss how these are connected, in Section 2. In Section 3 we provide a more formal definition of Existential

![]() $N_3$

(

$N_3$

(

![]() ${N_3}^\exists$

), introduce its semantics and discuss its properties. We then formally introduce existential rules, provide the mapping from

${N_3}^\exists$

), introduce its semantics and discuss its properties. We then formally introduce existential rules, provide the mapping from

![]() ${N_3}^\exists$

into this logic, and prove its truth-preserving properties in Section 4.

${N_3}^\exists$

into this logic, and prove its truth-preserving properties in Section 4.

![]() $N_3$

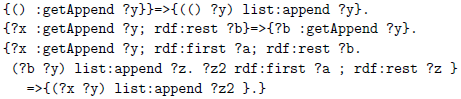

lists and the built-ins associated with them are introduced as

$N_3$

lists and the built-ins associated with them are introduced as

![]() $N_3$

primitives as well as their existential rule translations are subject to Section 5. In Section 6 we discuss our implementation and provide an evaluation of the different reasoners. Related work is presented in Section 7. We conclude our discussion in Section 8. Furthermore, the code needed for reproducing our experiments is available on GitHub (https://github.com/smennicke/n32rules).

$N_3$

primitives as well as their existential rule translations are subject to Section 5. In Section 6 we discuss our implementation and provide an evaluation of the different reasoners. Related work is presented in Section 7. We conclude our discussion in Section 8. Furthermore, the code needed for reproducing our experiments is available on GitHub (https://github.com/smennicke/n32rules).

This article is an extended and revised version of our work (Arndt and Mennicke Reference Arndt, Mennicke, Fensel, Ozaki, Roman and Soylu2023a) presented at Rules and Reasoning – 7th International Joint Conference (RuleML+RR) 2023. Compared to the conference paper, we include full proofs to all theorems and lemmas. Furthermore, we strengthen the statements of correctness of our translation (Theorem4.3 in Section 4), imposing stronger guarantees with effectively the same proofs as we had for the conference version, back then included in the technical appendix (Arndt and Mennicke Reference Arndt and Mennicke2023b) only. A discussion about the particular difference is appended to Theorem4.3. Finally, we extend our considerations by

![]() $N_3$

lists and respective built-ins (cf. Section 5).

$N_3$

lists and respective built-ins (cf. Section 5).

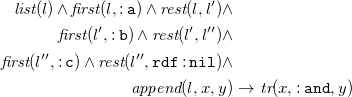

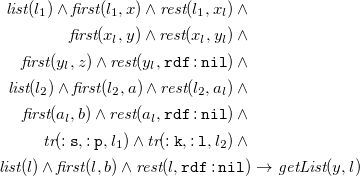

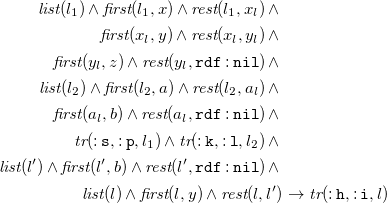

2 Motivation

![]() $N_3$

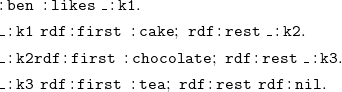

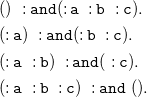

has been inroduced as a rule-based extension of RDF. As in RDF,

$N_3$

has been inroduced as a rule-based extension of RDF. As in RDF,

![]() $N_3$

knowledge is stated in triples consisting of subject, predicate, and object. In ground triples these can either be Internationalized Resource Identifiers (IRIs) or literals. The expression

$N_3$

knowledge is stated in triples consisting of subject, predicate, and object. In ground triples these can either be Internationalized Resource Identifiers (IRIs) or literals. The expression

meansFootnote

3

that “lucy knows tom.” Sets of triples are interpreted as their conjunction. Like RDF,

![]() $N_3$

supports blank nodes, usually starting with _:, which stand for (implicitly) existentially quantified variables. The statement

$N_3$

supports blank nodes, usually starting with _:, which stand for (implicitly) existentially quantified variables. The statement

means “there exists someone who is known by lucy.”

![]() $N_3$

furthermore supports implicitly universally quantified variables, indicated by a leading question mark (?), and implications which are stated using graphs, i.e., sets of triples, surrounded by curly braces ({}) as body and head connected via an arrow (= >). The formula

$N_3$

furthermore supports implicitly universally quantified variables, indicated by a leading question mark (?), and implications which are stated using graphs, i.e., sets of triples, surrounded by curly braces ({}) as body and head connected via an arrow (= >). The formula

means that “everyone known by Lucy also knows her.” Furthermore,

![]() $N_3$

allows the use of blank nodes in rules. These blank nodes are not quantified outside the rule like the universal variables, but in the rule part they occur in, that is either in its body or its head.

$N_3$

allows the use of blank nodes in rules. These blank nodes are not quantified outside the rule like the universal variables, but in the rule part they occur in, that is either in its body or its head.

means “everyone knowing Tom knows someone whose name is Tom.”

This last example shows, that

![]() $N_3$

supports rules concluding the existence of certain terms which makes it easy to express them as existential rules. An existential rule is a first-order sentence of the form

$N_3$

supports rules concluding the existence of certain terms which makes it easy to express them as existential rules. An existential rule is a first-order sentence of the form

where

![]() $\mathbf{x}, \mathbf{y}, \mathbf{z}$

are mutually disjoint lists of variables,

$\mathbf{x}, \mathbf{y}, \mathbf{z}$

are mutually disjoint lists of variables,

![]() $\varphi$

and

$\varphi$

and

![]() $\psi$

are conjunctions of atoms using only variables from the given lists, and

$\psi$

are conjunctions of atoms using only variables from the given lists, and

![]() $\varphi$

is referred to as the body of the rule while

$\varphi$

is referred to as the body of the rule while

![]() $\psi$

is called the head. Using the basic syntactic shape of (5) we go through all the example

$\psi$

is called the head. Using the basic syntactic shape of (5) we go through all the example

![]() $N_3$

formulae (1)–(4) again and represent them as existential rules. To allow for the full flexibility of

$N_3$

formulae (1)–(4) again and represent them as existential rules. To allow for the full flexibility of

![]() $N_3$

and RDF triples, we translate each RDF triple, just like the one in (1) into a first-order atom

$N_3$

and RDF triples, we translate each RDF triple, just like the one in (1) into a first-order atom

![]() ${\textit {tr}}(\mathtt {:lucy}, \mathtt {:knows}, \mathtt {:tom})$

. Here,

${\textit {tr}}(\mathtt {:lucy}, \mathtt {:knows}, \mathtt {:tom})$

. Here,

![]() $\textit {tr}$

is a ternary predicate holding subject, predicate, and object of a given RDF triple. This standard translation makes triple predicates (e.g.,

$\textit {tr}$

is a ternary predicate holding subject, predicate, and object of a given RDF triple. This standard translation makes triple predicates (e.g.,

![]() $\mathtt {:knows}$

) accessible as terms. First-order atoms are also known as facts, finite sets of facts are called databases, and (possibly infinite) sets of facts are called instances. Existential rules are evaluated over instances (cf. Section 4).

$\mathtt {:knows}$

) accessible as terms. First-order atoms are also known as facts, finite sets of facts are called databases, and (possibly infinite) sets of facts are called instances. Existential rules are evaluated over instances (cf. Section 4).

Compared to other rule languages, the distinguishing feature of existential rules is the use of existentially quantified variables in the head of rules (cf.

![]() $\mathbf{z}$

in (5)). The

$\mathbf{z}$

in (5)). The

![]() $N_3$

formula in (2) contains an existentially quantified variable and can, thus, be encoded as

$N_3$

formula in (2) contains an existentially quantified variable and can, thus, be encoded as

Rule (6) has an empty body, which means the head is unconditionally true. Rule (6) is satisfied on instances containing any fact

![]() ${\textit {tr}}(\mathtt {:lucy},\mathtt {:knows},\_)$

(e.g.,

${\textit {tr}}(\mathtt {:lucy},\mathtt {:knows},\_)$

(e.g.,

![]() ${\textit {tr}}(\mathtt {:lucy},\mathtt {:knows},\mathtt {:tim})$

so that variable

${\textit {tr}}(\mathtt {:lucy},\mathtt {:knows},\mathtt {:tim})$

so that variable

![]() $x$

can be bound to

$x$

can be bound to

![]() $\mathtt {:tim}$

).

$\mathtt {:tim}$

).

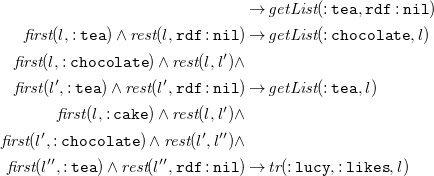

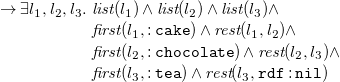

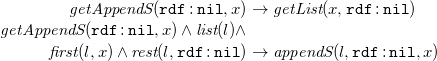

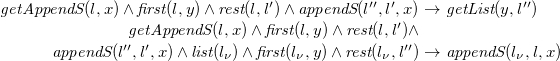

The implication of (3) has

as its (existential) rule counterpart, which does not contain any existentially quantified variables. Rule (7) is satisfied in the instance

but not in

since the only fact in

![]() ${\mathcal{K}}_1$

matches the body of the rule, but there is no fact reflecting on its (instantiated) head (i.e., the required fact

${\mathcal{K}}_1$

matches the body of the rule, but there is no fact reflecting on its (instantiated) head (i.e., the required fact

![]() ${\textit {tr}}(\mathtt {:tom},\mathtt {:knows},\mathtt {:lucy})$

is missing). Ultimately, the implication (4) with blank nodes in its head may be transferred to a rule with an existential quantifier in the head:

${\textit {tr}}(\mathtt {:tom},\mathtt {:knows},\mathtt {:lucy})$

is missing). Ultimately, the implication (4) with blank nodes in its head may be transferred to a rule with an existential quantifier in the head:

It is clear that rule (8) is satisfied in instance

However, instance

![]() ${\mathcal{K}}_1$

does not satisfy rule (8) because although the only fact satisfies the rule’s body, there are no facts jointly satisfying the rule’s head.

${\mathcal{K}}_1$

does not satisfy rule (8) because although the only fact satisfies the rule’s body, there are no facts jointly satisfying the rule’s head.

Note, for query answering over databases and rules, it is usually not required to decide for a concrete value of

![]() $y$

(in rule (8)). Many implementations, therefore, use some form of abstraction: for instance, Skolem terms. VLog and Nemo implement the standard chase which uses another set of terms, so-called labeled nulls. Instead of injecting arbitrary constants for existentially quantified variables, (globally) fresh nulls are inserted in the positions existentially quantified variables occur. Such a labeled null embodies the existence of a constant on the level of instances (just like blank nodes in RDF graphs). Let

$y$

(in rule (8)). Many implementations, therefore, use some form of abstraction: for instance, Skolem terms. VLog and Nemo implement the standard chase which uses another set of terms, so-called labeled nulls. Instead of injecting arbitrary constants for existentially quantified variables, (globally) fresh nulls are inserted in the positions existentially quantified variables occur. Such a labeled null embodies the existence of a constant on the level of instances (just like blank nodes in RDF graphs). Let

![]() $n$

be such a labeled null. Then

$n$

be such a labeled null. Then

![]() ${{\mathcal{I}}}_{2}$

can be generalized to

${{\mathcal{I}}}_{2}$

can be generalized to

on which rule (8) is satisfied, binding null

![]() $n$

to variable

$n$

to variable

![]() $y$

.

$y$

.

![]() ${{\mathcal{I}}}_{3}$

is, in fact, more general than

${{\mathcal{I}}}_{3}$

is, in fact, more general than

![]() ${{\mathcal{I}}}_{2}$

by the following observation: There is a mapping from

${{\mathcal{I}}}_{2}$

by the following observation: There is a mapping from

![]() ${{\mathcal{I}}}_{3}$

to

${{\mathcal{I}}}_{3}$

to

![]() ${{\mathcal{I}}}_{2}$

that is a homomorphism (see Subsection 4.1 for a formal introduction) but not vice versa. The homomorphism here maps the null

${{\mathcal{I}}}_{2}$

that is a homomorphism (see Subsection 4.1 for a formal introduction) but not vice versa. The homomorphism here maps the null

![]() $n$

(from

$n$

(from

![]() ${{\mathcal{I}}}_{3}$

) to the constant

${{\mathcal{I}}}_{3}$

) to the constant

![]() $\mathtt {:tom}$

(in

$\mathtt {:tom}$

(in

![]() ${\mathcal{I}}_{2}$

). Intuitively, the existence of a query answer (for a conjunctive query) on

${\mathcal{I}}_{2}$

). Intuitively, the existence of a query answer (for a conjunctive query) on

![]() ${\mathcal{I}}_{3}$

implies the existence of a query answer on

${\mathcal{I}}_{3}$

implies the existence of a query answer on

![]() ${\mathcal{I}}_{2}$

. Existential rule reasoners implementing some form of the chase aim at finding the most general instances (universal models) in this respect (Deutsch et al. Reference Deutsch, Nash, Remmel, Lenzerini and Lembo2008).

${\mathcal{I}}_{2}$

. Existential rule reasoners implementing some form of the chase aim at finding the most general instances (universal models) in this respect (Deutsch et al. Reference Deutsch, Nash, Remmel, Lenzerini and Lembo2008).

In the remainder of this paper, we further analyze the relation between

![]() $N_3$

and existential rules. First, we give a brief formal account of the two languages and then provide a correct translation function from

$N_3$

and existential rules. First, we give a brief formal account of the two languages and then provide a correct translation function from

![]() $N_3$

to existential rules.

$N_3$

to existential rules.

3 Existential N3

In the previous section we introduced essential elements of

![]() $N_3$

, namely triples and rules.

$N_3$

, namely triples and rules.

![]() $N_3$

also supports more complex constructs like lists, nesting of rules, and quotation. As these features are not covered by existential rules, we define a subset of

$N_3$

also supports more complex constructs like lists, nesting of rules, and quotation. As these features are not covered by existential rules, we define a subset of

![]() $N_3$

excluding them, called existential

$N_3$

excluding them, called existential

![]() $N_3$

(

$N_3$

(

![]() ${N_3}^\exists$

). This fragment of

${N_3}^\exists$

). This fragment of

![]() $N_3$

is still very powerful as it covers ontology mapping, one of N3’s main use cases. Many ontologies rely on patterns including auxiliary blank nodes.

$N_3$

is still very powerful as it covers ontology mapping, one of N3’s main use cases. Many ontologies rely on patterns including auxiliary blank nodes.

![]() ${N_3}^\exists$

supports the production of these.Footnote

4

In practice, these mappings are often connected with build-in functions like calculations or string operations,Footnote

5

these are not covered yet, but could be added. A more difficult feature to add would be the support of so-called rule-producing rules: In N3 it is possible to nest rules into the head of other rules. While this technique does not yield more expressivity, it is commonly used to translate from RDF datasets to N3 rules (see e.g., Arndt et al. Reference Arndt, De meester, Bonte, Schaballie, Bhatti, Dereuddre, Verborgh, Ongenae, De turck, Van de walle and Mannens2016). Such rule-producing rules can not be covered by existential rules as these only allow the derivation of facts.

${N_3}^\exists$

supports the production of these.Footnote

4

In practice, these mappings are often connected with build-in functions like calculations or string operations,Footnote

5

these are not covered yet, but could be added. A more difficult feature to add would be the support of so-called rule-producing rules: In N3 it is possible to nest rules into the head of other rules. While this technique does not yield more expressivity, it is commonly used to translate from RDF datasets to N3 rules (see e.g., Arndt et al. Reference Arndt, De meester, Bonte, Schaballie, Bhatti, Dereuddre, Verborgh, Ongenae, De turck, Van de walle and Mannens2016). Such rule-producing rules can not be covered by existential rules as these only allow the derivation of facts.

We base our definitions on so-called simple

![]() $N_3$

formulae (Arndt Reference Arndt2019, Chapter 7), these are

$N_3$

formulae (Arndt Reference Arndt2019, Chapter 7), these are

![]() $N_3$

formulae which do not allow for nesting.

$N_3$

formulae which do not allow for nesting.

3.1 Syntax

![]() ${N_3}^\exists$

relies on the RDF alphabet. As the distinction is not relevant in our context, we consider IRIs and literals together as constants. Let

${N_3}^\exists$

relies on the RDF alphabet. As the distinction is not relevant in our context, we consider IRIs and literals together as constants. Let

![]() $C$

be a set of such constants,

$C$

be a set of such constants,

![]() $U$

a set of universal variables (starting with ?), and

$U$

a set of universal variables (starting with ?), and

![]() $E$

a set of existential variables (i.e., blank nodes). If the sets

$E$

a set of existential variables (i.e., blank nodes). If the sets

![]() $C$

,

$C$

,

![]() $U$

,

$U$

,

![]() $E$

, and

$E$

, and

![]() $\{\mathtt {\{}, \mathtt {\}}, \mathtt {= \gt }, \mathtt {.}\}$

are mutually disjoint, we call

$\{\mathtt {\{}, \mathtt {\}}, \mathtt {= \gt }, \mathtt {.}\}$

are mutually disjoint, we call

![]() $\mathfrak{A}:=C \,\cup \, U \,\cup \, E \,\cup \, \{\mathtt {\{}, \mathtt {\}}, \mathtt {= \gt }, \mathtt {.}\}$

an

$\mathfrak{A}:=C \,\cup \, U \,\cup \, E \,\cup \, \{\mathtt {\{}, \mathtt {\}}, \mathtt {= \gt }, \mathtt {.}\}$

an

![]() $N_3$

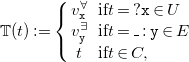

alphabet. Figure 1 provides the syntax of

$N_3$

alphabet. Figure 1 provides the syntax of

![]() ${N_3}^\exists$

over

${N_3}^\exists$

over

![]() $\mathfrak{A}$

.

$\mathfrak{A}$

.

Fig. 1. Syntax of

![]() $\operatorname {N3}^\exists$

.

$\operatorname {N3}^\exists$

.

![]() ${N_3}^\exists$

fully covers RDF. RDF formulae are conjunctions of atomic formulae. Just as generalized RDF (Cyganiak et al. Reference Cyganiak, Wood and Lanthaler2014),

${N_3}^\exists$

fully covers RDF. RDF formulae are conjunctions of atomic formulae. Just as generalized RDF (Cyganiak et al. Reference Cyganiak, Wood and Lanthaler2014),

![]() ${N_3}^\exists$

allows for literals and blank nodes to occur in subject, predicate, and object position. The same holds for universal variables which are not present in RDF. This syntactical freedom is inherited from full N3 and makes it possible to – among other things – express the rules for RDF/S (Hayes and Patel-Schneider Reference Hayes and Patel-schneider2014, Appendix A) and OWL-RL (Motik et al. Reference Motik, Cuenca grau, Horrocks, Wu, Fokoue and Lutz2009, Section 4.3) entailment via N3. As an example for that, consider the following ruleFootnote

6

for inverse properties:

${N_3}^\exists$

allows for literals and blank nodes to occur in subject, predicate, and object position. The same holds for universal variables which are not present in RDF. This syntactical freedom is inherited from full N3 and makes it possible to – among other things – express the rules for RDF/S (Hayes and Patel-Schneider Reference Hayes and Patel-schneider2014, Appendix A) and OWL-RL (Motik et al. Reference Motik, Cuenca grau, Horrocks, Wu, Fokoue and Lutz2009, Section 4.3) entailment via N3. As an example for that, consider the following ruleFootnote

6

for inverse properties:

If we apply this rule on triple (1) in combination with

we derive

Similar statements and rules can be made for triples including literals. We can for example declare that the :name from rule (4) is the owl:inverseOf of :isNameOf.Footnote 7 With rule (10) we then derive from

that

In that sense the use of generalized RDF ensures that all logical consequences we are able to produce via rules can also be stated in the language. This principle of syntactical completeness is also the reason to allow literals and blank nodes in predicate position. As universals may occur in predicate position, this also needs to be the case for all other kinds of symbols.

Currently, there is one exception to our principle: The syntax above allows rules having new universal variables in their head like for example

which results in a rule expressing “if lucy knows tom, everyone is happy.” This implication is problematic: Applied on triple (1), it yields

![]() $\mathtt {? x\;\;:is\;\;:happy.}$

which is a triple containing a universal variable. Such triples are not covered by our syntax, the rule thus introduces a fact we cannot express. Therefore, we restrict

$\mathtt {? x\;\;:is\;\;:happy.}$

which is a triple containing a universal variable. Such triples are not covered by our syntax, the rule thus introduces a fact we cannot express. Therefore, we restrict

![]() ${N_3}^\exists$

rules to well-formed implications which rely on components. Components can be seen as direct partsFootnote

8

an N3 formula consists of. Let

${N_3}^\exists$

rules to well-formed implications which rely on components. Components can be seen as direct partsFootnote

8

an N3 formula consists of. Let

![]() $f$

be a formula or an expression over an alphabet

$f$

be a formula or an expression over an alphabet

![]() $\mathfrak{A}$

. The set

$\mathfrak{A}$

. The set

![]() ${\operatorname {comp}}(f)$

of components of

${\operatorname {comp}}(f)$

of components of

![]() $f$

is defined as:

$f$

is defined as:

-

• If

$f$

is an atomic formula or a triple expression of the form

$f$

is an atomic formula or a triple expression of the form

$t_1\, t_2\, t_3.$

,

$t_1\, t_2\, t_3.$

,

${\operatorname {comp}}(f)=\{t_1,t_2,t_3\}$

.

${\operatorname {comp}}(f)=\{t_1,t_2,t_3\}$

. -

• If

$f$

is an implication of the form

$f$

is an implication of the form

$\mathtt {{e_{1}}= \gt {e_{2}}}.$

, then

$\mathtt {{e_{1}}= \gt {e_{2}}}.$

, then

${\operatorname {comp}}(f)=\{\mathtt {{e_1}, {e_2}}\}$

.

${\operatorname {comp}}(f)=\{\mathtt {{e_1}, {e_2}}\}$

. -

• If

$f$

is a conjunction of the form

$f$

is a conjunction of the form

$f_1 f_2$

, then

$f_1 f_2$

, then

${\operatorname {comp}}(f)={\operatorname {comp}}(f_1)\cup {\operatorname {comp}}(f_2)$

.

${\operatorname {comp}}(f)={\operatorname {comp}}(f_1)\cup {\operatorname {comp}}(f_2)$

.

A rule

![]() $\mathtt {\{e}_1\mathtt {\}= \gt \{e}_2\mathtt {\}} .$

is called well-formed if

$\mathtt {\{e}_1\mathtt {\}= \gt \{e}_2\mathtt {\}} .$

is called well-formed if

![]() $({\operatorname {comp}}(\mathtt {e}_2)\setminus {\operatorname {comp}}(\mathtt {e}_1))\cap U=\emptyset$

. For the remainder of this paper we assume all implications to be well-formed. Note that this definition of well-formed formulae is closely related to the idea of safety in logic programing. Well-formed rules are safe.

$({\operatorname {comp}}(\mathtt {e}_2)\setminus {\operatorname {comp}}(\mathtt {e}_1))\cap U=\emptyset$

. For the remainder of this paper we assume all implications to be well-formed. Note that this definition of well-formed formulae is closely related to the idea of safety in logic programing. Well-formed rules are safe.

3.2 Semantics

In order to define the semantics of

![]() ${N_3}^\exists$

we first note, that in our fragment of

${N_3}^\exists$

we first note, that in our fragment of

![]() $N_3$

all quantification of variables is only defined implicitly. The blank node in triple (2) is understood as an existentially quantified variable, the universal in formula (3) as universally quantified. Universal quantification spans over the whole formula – variable ?x occurring in body and head of rule (3) is universally quantified for the whole implication – while existential quantification is local – the conjunction in the head of rule (4) is existentially quantified there. Adding new triples as conjuncts to formula (4) like

$N_3$

all quantification of variables is only defined implicitly. The blank node in triple (2) is understood as an existentially quantified variable, the universal in formula (3) as universally quantified. Universal quantification spans over the whole formula – variable ?x occurring in body and head of rule (3) is universally quantified for the whole implication – while existential quantification is local – the conjunction in the head of rule (4) is existentially quantified there. Adding new triples as conjuncts to formula (4) like

leads to the new statement that “lucy knows someone who likes cake” but even though we are using the same blank node identifier _:y in both formulae, the quantification of the variables in this formula is totally seperated and the person named “Tom” is not necessarily related to the cake-liker. With the goal to deal with this locality of blank node scoping, we define substitutions which are only applied on components of formulae and leave nested elements like for example the body and head of rule (3) untouched.

A substitution

![]() $\sigma$

is a mapping from a set of variables

$\sigma$

is a mapping from a set of variables

![]() $X\subset U\cup E$

to the set of

$X\subset U\cup E$

to the set of

![]() $N_3$

terms. We apply

$N_3$

terms. We apply

![]() $\sigma$

to a term, formula or expression

$\sigma$

to a term, formula or expression

![]() $x$

as follows:

$x$

as follows:

-

•

$x \sigma = \sigma (x)$

if

$x \sigma = \sigma (x)$

if

$x\in X$

,

$x\in X$

, -

•

$(s\,p\,o) \sigma =(s\sigma )( p\sigma ) (o\sigma )$

if

$(s\,p\,o) \sigma =(s\sigma )( p\sigma ) (o\sigma )$

if

$x=s\,p\, o$

is an atomic formula or a triple expression,

$x=s\,p\, o$

is an atomic formula or a triple expression, -

•

$(f_1f_2) \sigma =(f_1\sigma )( f_2\sigma )$

if

$(f_1f_2) \sigma =(f_1\sigma )( f_2\sigma )$

if

$x=f_1f_2$

is a conjunction,

$x=f_1f_2$

is a conjunction, -

•

$x \sigma = x$

else.

$x \sigma = x$

else.

For formula

![]() $f=\mathtt {\_:x\;\;:p\;\;:o. \{\_:x\;\;:b\;\;:c\}= \gt \{\_:x\;\;:d\;\;:e\}.}$

, substitution

$f=\mathtt {\_:x\;\;:p\;\;:o. \{\_:x\;\;:b\;\;:c\}= \gt \{\_:x\;\;:d\;\;:e\}.}$

, substitution

![]() $\sigma$

and

$\sigma$

and

![]() $\mathtt {\_:x}\in \text{dom}(\sigma )$

, we get:

$\mathtt {\_:x}\in \text{dom}(\sigma )$

, we get:

![]() $f\sigma =\sigma (\mathtt {\_:x}) \mathtt {:p\;\;:o. \{\_:x\;\;:b\;\;:c\}= \gt \{\_:x\;\;:d\;\;:e\}}$

.Footnote

9

We use the substitution to define the semantics of

$f\sigma =\sigma (\mathtt {\_:x}) \mathtt {:p\;\;:o. \{\_:x\;\;:b\;\;:c\}= \gt \{\_:x\;\;:d\;\;:e\}}$

.Footnote

9

We use the substitution to define the semantics of

![]() ${N_3}^\exists$

which additionally makes use of N3 interpretations

${N_3}^\exists$

which additionally makes use of N3 interpretations

![]() $\mathfrak{I} = (\mathfrak{D},\mathfrak{a},\mathfrak{p})$

consisting of (1) a set

$\mathfrak{I} = (\mathfrak{D},\mathfrak{a},\mathfrak{p})$

consisting of (1) a set

![]() $\mathfrak{D}$

, called the domain of

$\mathfrak{D}$

, called the domain of

![]() $\mathfrak{I}$

; (2) a mapping

$\mathfrak{I}$

; (2) a mapping

![]() $\mathfrak{a}: C\rightarrow \mathfrak{D}$

, called the object function; (3) a mapping

$\mathfrak{a}: C\rightarrow \mathfrak{D}$

, called the object function; (3) a mapping

![]() $\mathfrak{p}: \mathfrak{D} \rightarrow 2^{\mathfrak{D} \times \mathfrak{D}}$

, called the predicate function.

$\mathfrak{p}: \mathfrak{D} \rightarrow 2^{\mathfrak{D} \times \mathfrak{D}}$

, called the predicate function.

Just as the function IEXT in RDF’s simple interpretations (Hayes Reference Hayes2004),

![]() $N_3$

’s predicate function maps elements from the domain of discourse to a set of pairs of domain elements and is not applied on relation symbols directly. This makes quantification over predicates possible while not exceeding first-order logic in terms of complexity. To introduce the semantics of

$N_3$

’s predicate function maps elements from the domain of discourse to a set of pairs of domain elements and is not applied on relation symbols directly. This makes quantification over predicates possible while not exceeding first-order logic in terms of complexity. To introduce the semantics of

![]() ${N_3}^\exists$

, let

${N_3}^\exists$

, let

![]() $\mathfrak{I}=(\mathfrak{D},\mathfrak{a,p})$

be an

$\mathfrak{I}=(\mathfrak{D},\mathfrak{a,p})$

be an

![]() $N_3$

interpretation. For an

$N_3$

interpretation. For an

![]() ${N_3}^\exists$

formula

${N_3}^\exists$

formula

![]() $f$

:

$f$

:

-

1. If

$W=\text{comp}(f)\cap E \neq \emptyset$

, then

$W=\text{comp}(f)\cap E \neq \emptyset$

, then

$\mathfrak{I}\models f$

iff

$\mathfrak{I}\models f$

iff

$\mathfrak{I}\models f\mu$

for some substitution

$\mathfrak{I}\models f\mu$

for some substitution

$\mu \;:\; W\rightarrow C$

.

$\mu \;:\; W\rightarrow C$

. -

2. If

$\text{comp}(f)\cap E=\emptyset$

:

$\text{comp}(f)\cap E=\emptyset$

:

-

(a) If

$f$

is an atomic formula

$f$

is an atomic formula

$t_1\, t_2\, t_3$

, then

$t_1\, t_2\, t_3$

, then

$\mathfrak{I} \models t_1\, t_2\, t_3$

. iff

$\mathfrak{I} \models t_1\, t_2\, t_3$

. iff

$(\mathfrak{a}(t_1),\mathfrak{a}(t_3))\in \mathfrak{p}(\mathfrak{a}(t_2))$

.

$(\mathfrak{a}(t_1),\mathfrak{a}(t_3))\in \mathfrak{p}(\mathfrak{a}(t_2))$

. -

(b) If

$f$

is a conjunction

$f$

is a conjunction

$f_1f_2$

, then

$f_1f_2$

, then

$\mathfrak{I}\models f_1 f_2$

iff

$\mathfrak{I}\models f_1 f_2$

iff

$\mathfrak{I}\models f_1$

and

$\mathfrak{I}\models f_1$

and

$\mathfrak{I}\models f_2$

.

$\mathfrak{I}\models f_2$

. -

(c) If

$f$

is an implication, then

$f$

is an implication, then

$\mathfrak{I} \models \mathtt {{ e_1 } = \gt {e_2}}$

iff

$\mathfrak{I} \models \mathtt {{ e_1 } = \gt {e_2}}$

iff

$\mathfrak{I} \models e_2\sigma$

if

$\mathfrak{I} \models e_2\sigma$

if

$\mathfrak{I} \models e_1\sigma$

for all substitutions

$\mathfrak{I} \models e_1\sigma$

for all substitutions

$\sigma$

on the universal variables

$\sigma$

on the universal variables

$\text{comp}(\mathtt {e}_1)\cap U$

by constants.

$\text{comp}(\mathtt {e}_1)\cap U$

by constants.

The semantics as defined above uses a substitution into the set of constants instead of a direct assignment to the domain of discourse to interpret quantified variables. This design choice inherited from

![]() $N_3$

ensures referential opacity of quoted graphs and means, in essence, that quantification always refers to named domain elements.

$N_3$

ensures referential opacity of quoted graphs and means, in essence, that quantification always refers to named domain elements.

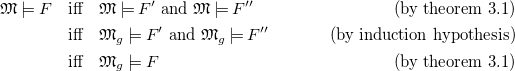

With that semantics, we call an interpretation

![]() $\mathfrak{M}$

model of a dataset

$\mathfrak{M}$

model of a dataset

![]() $\Phi$

, written as

$\Phi$

, written as

![]() $\mathfrak{M}\models \Phi$

, if

$\mathfrak{M}\models \Phi$

, if

![]() $\mathfrak{M}\models f$

for each formula

$\mathfrak{M}\models f$

for each formula

![]() $f\in \Phi$

. We say that two sets of

$f\in \Phi$

. We say that two sets of

![]() ${N_3}^\exists$

formulae

${N_3}^\exists$

formulae

![]() $\Phi$

and

$\Phi$

and

![]() $\Psi$

are equivalent, written as

$\Psi$

are equivalent, written as

![]() $\Phi \equiv \Psi$

, if for all interpretations

$\Phi \equiv \Psi$

, if for all interpretations

![]() $\mathfrak{M}$

:

$\mathfrak{M}$

:

![]() $\mathfrak{M}\models \Phi$

iff

$\mathfrak{M}\models \Phi$

iff

![]() $\mathfrak{M}\models \Psi$

. If

$\mathfrak{M}\models \Psi$

. If

![]() $\Phi =\{\phi \}$

and

$\Phi =\{\phi \}$

and

![]() $\Psi =\{\psi \}$

are singleton sets, we write

$\Psi =\{\psi \}$

are singleton sets, we write

![]() $\phi \equiv \psi$

omitting the brackets.

$\phi \equiv \psi$

omitting the brackets.

3.2.1 Piece normal form

![]() ${N_3}{}^\exists$

formulae consist of conjunctions of triples and implications. For our goal of translating such formulae to existential rules, it is convenient to consider sub-formulae seperately.

${N_3}{}^\exists$

formulae consist of conjunctions of triples and implications. For our goal of translating such formulae to existential rules, it is convenient to consider sub-formulae seperately.

Below, we therefore define the so-called Piece Normal Form (PNF) for

![]() ${N_3}{}^\exists$

formulae and show that each such formula

${N_3}{}^\exists$

formulae and show that each such formula

![]() $f$

is equivalent to a set of sub-formulae

$f$

is equivalent to a set of sub-formulae

![]() $\Phi$

(i.e.,

$\Phi$

(i.e.,

![]() $\Phi \equiv \phi$

) in PNF. We proceed in two steps. First, we separate formulae based on their blank node components. If two parts of a conjunction share a blank node component, as in formula (15), we cannot split the formula into two since the information about the co-reference would get lost. However, if conjuncts either do not contain blank nodes or only contain disjoint sets of these, we can split them into so-called pieces: Two formulae

$\Phi \equiv \phi$

) in PNF. We proceed in two steps. First, we separate formulae based on their blank node components. If two parts of a conjunction share a blank node component, as in formula (15), we cannot split the formula into two since the information about the co-reference would get lost. However, if conjuncts either do not contain blank nodes or only contain disjoint sets of these, we can split them into so-called pieces: Two formulae

![]() $f_1$

and

$f_1$

and

![]() $f_2$

are called pieces of a formula

$f_2$

are called pieces of a formula

![]() $f$

if

$f$

if

![]() $f=f_1f_2$

and

$f=f_1f_2$

and

![]() ${\operatorname {comp}}(f_1)\cap {\operatorname {comp}}(f_2)\cap E=\emptyset$

. For such formulae we know:

${\operatorname {comp}}(f_1)\cap {\operatorname {comp}}(f_2)\cap E=\emptyset$

. For such formulae we know:

Lemma 3.1 (Pieces). Let

![]() $f=f_1f_2$

be an

$f=f_1f_2$

be an

![]() ${N_3}^\exists$

conjunction and let

${N_3}^\exists$

conjunction and let

![]() ${\operatorname {comp}}(f_1)\cap {\operatorname {comp}}(f_2)\cap E=\emptyset$

, then for each interpretation

${\operatorname {comp}}(f_1)\cap {\operatorname {comp}}(f_2)\cap E=\emptyset$

, then for each interpretation

![]() $\mathfrak{I}$

,

$\mathfrak{I}$

,

![]() $\mathfrak{I}\models f \text{ iff } \mathfrak{I}\models f_1 \text{ and } \mathfrak{I}\models f_2$

.

$\mathfrak{I}\models f \text{ iff } \mathfrak{I}\models f_1 \text{ and } \mathfrak{I}\models f_2$

.

Proof.

-

1. If

${\operatorname {comp}}(f)\cap E=\emptyset$

the claim follows immediately by point 2b in the semantics definition.

${\operatorname {comp}}(f)\cap E=\emptyset$

the claim follows immediately by point 2b in the semantics definition. -

2. If

$W=\text{comp}(f)\cap E \neq \emptyset$

: (

$W=\text{comp}(f)\cap E \neq \emptyset$

: (

$\Rightarrow$

) If

$\Rightarrow$

) If

$\mathfrak{I}\models f$

then there exists a substitution

$\mathfrak{I}\models f$

then there exists a substitution

$\mu \;:\;{\operatorname {comp}}(f)\cap E\rightarrow C$

such that

$\mu \;:\;{\operatorname {comp}}(f)\cap E\rightarrow C$

such that

$\mathfrak{I}\models f\mu$

, that is

$\mathfrak{I}\models f\mu$

, that is

$\mathfrak{I}\models (f_1\mu )\,(f_2\mu )$

. According to the previous point that implies

$\mathfrak{I}\models (f_1\mu )\,(f_2\mu )$

. According to the previous point that implies

$\mathfrak{I}\models f_1\mu$

and

$\mathfrak{I}\models f_1\mu$

and

$\mathfrak{I}\models f_2\mu$

and thus

$\mathfrak{I}\models f_2\mu$

and thus

$\mathfrak{I}\models f_1$

and

$\mathfrak{I}\models f_1$

and

$\mathfrak{I}\models f_2$

. (

$\mathfrak{I}\models f_2$

. (

$\Leftarrow$

) If

$\Leftarrow$

) If

$\mathfrak{I}\models f_1$

and

$\mathfrak{I}\models f_1$

and

$\mathfrak{I}\models f_2$

, then there exist two substitutions

$\mathfrak{I}\models f_2$

, then there exist two substitutions

$\mu _1:{\operatorname {comp}}(f_1)\cap E\rightarrow C$

and

$\mu _1:{\operatorname {comp}}(f_1)\cap E\rightarrow C$

and

$\mu _2:{\operatorname {comp}}(f_2)\cap E\rightarrow C$

such that

$\mu _2:{\operatorname {comp}}(f_2)\cap E\rightarrow C$

such that

$\mathfrak{I}\models f_1\mu _1$

and

$\mathfrak{I}\models f_1\mu _1$

and

$\mathfrak{I}\models f_2\mu _2$

. As the domains of the two substitutions are disjoint (by assumption), we can define the substitution

$\mathfrak{I}\models f_2\mu _2$

. As the domains of the two substitutions are disjoint (by assumption), we can define the substitution

$\mu \;:\;{\operatorname {comp}}(f)\cap E\rightarrow C$

as follows:Then

$\mu \;:\;{\operatorname {comp}}(f)\cap E\rightarrow C$

as follows:Then \begin{align*} \mu (v) = {\begin{cases} \mu _1(v) & \text{ if } v\in {\operatorname {comp}}(f_1) \\ \mu _2(v)& \, \text{else}\\ \end{cases}} \end{align*}

\begin{align*} \mu (v) = {\begin{cases} \mu _1(v) & \text{ if } v\in {\operatorname {comp}}(f_1) \\ \mu _2(v)& \, \text{else}\\ \end{cases}} \end{align*}

$\mathfrak{I}\models f\mu$

and therefore

$\mathfrak{I}\models f\mu$

and therefore

$\mathfrak{I}\models f$

.

$\mathfrak{I}\models f$

.

If we recursively divide all pieces into sub-pieces, we get a maximal set

![]() $F=\{f_1, f_2, \ldots , f_n\}$

for each formula

$F=\{f_1, f_2, \ldots , f_n\}$

for each formula

![]() $f$

such that

$f$

such that

![]() $F\equiv \{f\}$

and for all

$F\equiv \{f\}$

and for all

![]() $1 \leq i, j \leq n$

,

$1 \leq i, j \leq n$

,

![]() $\text{comp}(f_{i})\cap \text{comp}(f_{j})\cap E \neq \emptyset$

implies

$\text{comp}(f_{i})\cap \text{comp}(f_{j})\cap E \neq \emptyset$

implies

![]() $i=j$

.

$i=j$

.

Second, we replace all blank nodes occurring in rule bodies by fresh universals. The rule

becomes

Note that both rules have the same meaning, namely “if someone likes cake, then cake is good.” We generalize that:

Lemma 3.2 (Eliminating Existentials). Let

![]() $f= \mathtt {{e_1} = \gt { e_2}}$

and

$f= \mathtt {{e_1} = \gt { e_2}}$

and

![]() $g=\mathtt {{ e'_1 } = \gt { e_2}}$

be

$g=\mathtt {{ e'_1 } = \gt { e_2}}$

be

![]() ${N_3}^\exists$

implications such that

${N_3}^\exists$

implications such that

![]() $e'_1=e_1\sigma$

for some injective substitution

$e'_1=e_1\sigma$

for some injective substitution

![]() $\sigma \;:\;{\operatorname {comp}}(e_1)\cap E\rightarrow U\setminus {\operatorname {comp}}(e_1)$

of the existential variables of

$\sigma \;:\;{\operatorname {comp}}(e_1)\cap E\rightarrow U\setminus {\operatorname {comp}}(e_1)$

of the existential variables of

![]() $e_1$

by universals. Then

$e_1$

by universals. Then

![]() $f\equiv g$

.

$f\equiv g$

.

Proof.

We first note that

![]() ${\operatorname {comp}}(f)\cap E=\emptyset$

and

${\operatorname {comp}}(f)\cap E=\emptyset$

and

![]() ${\operatorname {comp}}(g)\cap E=\emptyset$

since both formulae are implications.

${\operatorname {comp}}(g)\cap E=\emptyset$

since both formulae are implications.

(

![]() $\Rightarrow$

) We assume that

$\Rightarrow$

) We assume that

![]() $\mathfrak{M}\not \models g$

for some model

$\mathfrak{M}\not \models g$

for some model

![]() $\mathfrak{M}$

. That is, there exists a substitution

$\mathfrak{M}$

. That is, there exists a substitution

![]() $\nu \;:\; ({\operatorname {comp}}(e'_1)\cup {\operatorname {comp}}(e_2))\cap U\rightarrow C$

such that

$\nu \;:\; ({\operatorname {comp}}(e'_1)\cup {\operatorname {comp}}(e_2))\cap U\rightarrow C$

such that

![]() $\mathfrak{M}\models e'_1 \nu$

and

$\mathfrak{M}\models e'_1 \nu$

and

![]() $\mathfrak{M}\not \models e_2\nu$

. We show that

$\mathfrak{M}\not \models e_2\nu$

. We show that

![]() $\mathfrak{M}\models e_1\nu$

: As

$\mathfrak{M}\models e_1\nu$

: As

![]() $(({\operatorname {comp}}(e_1)\cup {\operatorname {comp}}(e_2))\cap U)\subset (({\operatorname {comp}}(e'_1)\cup {\operatorname {comp}}(e_2))\cap U)$

, we know that

$(({\operatorname {comp}}(e_1)\cup {\operatorname {comp}}(e_2))\cap U)\subset (({\operatorname {comp}}(e'_1)\cup {\operatorname {comp}}(e_2))\cap U)$

, we know that

![]() ${\operatorname {comp}}(e_1\nu )\cap U =\emptyset$

. With the substitution

${\operatorname {comp}}(e_1\nu )\cap U =\emptyset$

. With the substitution

![]() $\mu := \nu \circ \sigma$

for the existential variables in

$\mu := \nu \circ \sigma$

for the existential variables in

![]() $e_1\nu$

we get

$e_1\nu$

we get

![]() $\mathfrak{M}\models (e_1 \nu ) \sigma$

and thus

$\mathfrak{M}\models (e_1 \nu ) \sigma$

and thus

![]() $\mathfrak{M}\models (e_1 \nu )$

, but as

$\mathfrak{M}\models (e_1 \nu )$

, but as

![]() $\mathfrak{M}\not \models (e_2 \nu )$

we can conclude that

$\mathfrak{M}\not \models (e_2 \nu )$

we can conclude that

![]() $\mathfrak{M}\not \models f$

.

$\mathfrak{M}\not \models f$

.

(

![]() $\Leftarrow$

) We assume that

$\Leftarrow$

) We assume that

![]() $\mathfrak{M}\not \models f$

. That is, there exists a substitution

$\mathfrak{M}\not \models f$

. That is, there exists a substitution

![]() $\nu \;:\; ({\operatorname {comp}}(e_1)\cup {\operatorname {comp}}(e_2))\cap U\rightarrow C$

such that

$\nu \;:\; ({\operatorname {comp}}(e_1)\cup {\operatorname {comp}}(e_2))\cap U\rightarrow C$

such that

![]() $\mathfrak{M}\models e_1 \nu$

and

$\mathfrak{M}\models e_1 \nu$

and

![]() $\mathfrak{M}\not \models e_2\nu$

. As

$\mathfrak{M}\not \models e_2\nu$

. As

![]() $\mathfrak{M}\models e_1 \nu$

, there exists a substitution

$\mathfrak{M}\models e_1 \nu$

, there exists a substitution

![]() $\mu \;:\;{\operatorname {comp}}(e_1\nu )\cap E\rightarrow C$

such that

$\mu \;:\;{\operatorname {comp}}(e_1\nu )\cap E\rightarrow C$

such that

![]() $\mathfrak{M}\models (e_1 \nu )\mu$

. With that we define a substitution

$\mathfrak{M}\models (e_1 \nu )\mu$

. With that we define a substitution

![]() $\nu ':({\operatorname {comp}}(e_1)\cup {\operatorname {comp}}(e_2))\cap U\rightarrow C$

as follows:

$\nu ':({\operatorname {comp}}(e_1)\cup {\operatorname {comp}}(e_2))\cap U\rightarrow C$

as follows:

![]() $\nu ':U\rightarrow C$

as follows:

$\nu ':U\rightarrow C$

as follows:

\begin{equation*}\nu '(v) = \begin{cases} \mu (\sigma ^{-1}(v)) & \text{ if } v\in range(\sigma ) \\ \nu (v)& \, \text{else} \end{cases}\end{equation*}

\begin{equation*}\nu '(v) = \begin{cases} \mu (\sigma ^{-1}(v)) & \text{ if } v\in range(\sigma ) \\ \nu (v)& \, \text{else} \end{cases}\end{equation*}

With that substitution we get

![]() $\mathfrak{M}\models e'_1\nu '$

but

$\mathfrak{M}\models e'_1\nu '$

but

![]() $\mathfrak{M}\not \models e_2\nu '$

and thus

$\mathfrak{M}\not \models e_2\nu '$

and thus

![]() $\mathfrak{M}\not \models g$

.

$\mathfrak{M}\not \models g$

.

For a rule

![]() $f$

we call the formula

$f$

we call the formula

![]() $f'$

in which all existentials occurring in its body are replaced by universals following Lemma 3.2 the normalized version of the rule. We call an

$f'$

in which all existentials occurring in its body are replaced by universals following Lemma 3.2 the normalized version of the rule. We call an

![]() ${N_3}{}^{\exists }$

formula

${N_3}{}^{\exists }$

formula

![]() $f$

normalized, if all rules occurring in it as conjuncts are normalized. Combining the findings of the two previous lemmas, we introduce the Piece Normal Form:

$f$

normalized, if all rules occurring in it as conjuncts are normalized. Combining the findings of the two previous lemmas, we introduce the Piece Normal Form:

Definition 3.3 (Piece Normal Form). A finite set

![]() $\Phi = { f_1, f_2, \ldots , f_n }$

of

$\Phi = { f_1, f_2, \ldots , f_n }$

of

![]() ${N_3}{}^\exists$

formulae is in piece normal form (PNF) if all

${N_3}{}^\exists$

formulae is in piece normal form (PNF) if all

![]() $f_i \in \Phi$

(

$f_i \in \Phi$

(

![]() $1 \leq i \leq n$

) are normalized and

$1 \leq i \leq n$

) are normalized and

![]() $n \in \mathbb{N}$

is the maximal number such that for

$n \in \mathbb{N}$

is the maximal number such that for

![]() $1 \leq i,j \leq n$

,

$1 \leq i,j \leq n$

,

![]() ${\operatorname {comp}}(f_i) \cap {\operatorname {comp}}(f_j) \cap E \neq \emptyset$

implies

${\operatorname {comp}}(f_i) \cap {\operatorname {comp}}(f_j) \cap E \neq \emptyset$

implies

![]() $i = j$

. If

$i = j$

. If

![]() $f_i \in \Phi$

is a conjunction of atomic formulae, we call

$f_i \in \Phi$

is a conjunction of atomic formulae, we call

![]() $f_i$

an atomic piece.

$f_i$

an atomic piece.

We get the following result for

![]() ${N_3}^\exists$

formulae:

${N_3}^\exists$

formulae:

Theorem 3.4.

For every well-formed

![]() ${N_3}^\exists$

formula

${N_3}^\exists$

formula

![]() $f$

, there exists a set

$f$

, there exists a set

![]() $F =\{ f_{1}, f_{2}, \ldots , f_{k}\}$

of

$F =\{ f_{1}, f_{2}, \ldots , f_{k}\}$

of

![]() ${N_3}^\exists$

formulae such that

${N_3}^\exists$

formulae such that

![]() $F\equiv \{f\}$

and

$F\equiv \{f\}$

and

![]() $F$

is in piece normal form.

$F$

is in piece normal form.

Since the piece normal form

![]() $F$

of

$F$

of

![]() ${N_3}^\exists$

formula

${N_3}^\exists$

formula

![]() $f$

is obtained by only replacing variables and separating conjuncts of

$f$

is obtained by only replacing variables and separating conjuncts of

![]() $f$

into the set form, the overall size of

$f$

into the set form, the overall size of

![]() $F$

is linear in

$F$

is linear in

![]() $f$

.

$f$

.

4 From N3 to existential rules

Due to Theorem3.4, we translate sets

![]() $F$

of

$F$

of

![]() ${N_3}^\exists$

formulae in PNF (cf. Definition 3.3) to sets of existential rules

${N_3}^\exists$

formulae in PNF (cf. Definition 3.3) to sets of existential rules

![]() $\mathcal{T}(F)$

without loss of generality. As a preliminary step, we introduce the language of existential rules formally. Later on, we explain and formally define the translation function already sketched in Section 2. We close this section with a correctness argument, paving the way for existential rule reasoning for

$\mathcal{T}(F)$

without loss of generality. As a preliminary step, we introduce the language of existential rules formally. Later on, we explain and formally define the translation function already sketched in Section 2. We close this section with a correctness argument, paving the way for existential rule reasoning for

![]() ${N_3}^\exists$

formulae.

${N_3}^\exists$

formulae.

4.1 Foundations of existential rule reasoning

As for

![]() $N_3$

, we consider a first-order vocabulary, consisting of countably infinite mutually disjoint sets of constants (

$N_3$

, we consider a first-order vocabulary, consisting of countably infinite mutually disjoint sets of constants (

![]() $\mathbf{C}$

), variables (

$\mathbf{C}$

), variables (

![]() $\mathbf{V}$

), and additionally so-called (labeled) nulls (

$\mathbf{V}$

), and additionally so-called (labeled) nulls (

![]() $\mathbf{N}$

).Footnote

10

As already mentioned in Section 2, we use the same set of constants as

$\mathbf{N}$

).Footnote

10

As already mentioned in Section 2, we use the same set of constants as

![]() $N_3$

formulae, meaning

$N_3$

formulae, meaning

![]() ${\mathbf{C}} = C$

. Furthermore, let

${\mathbf{C}} = C$

. Furthermore, let

![]() $\mathbf{P}$

be a (countably infinite) set of relation names, where each

$\mathbf{P}$

be a (countably infinite) set of relation names, where each

![]() $p\in {\mathbf{P}}$

comes with an arity

$p\in {\mathbf{P}}$

comes with an arity

![]() $\mathit{ar}(p)\in \mathbb{N}$

.

$\mathit{ar}(p)\in \mathbb{N}$

.

![]() $\mathbf{P}$

is disjoint from the term sets

$\mathbf{P}$

is disjoint from the term sets

![]() $\mathbf{C}$

,

$\mathbf{C}$

,

![]() $\mathbf{V}$

, and

$\mathbf{V}$

, and

![]() $\mathbf{N}$

. We reserve the ternary relation name

$\mathbf{N}$

. We reserve the ternary relation name

![]() ${\textit {tr}}\in {\mathbf{P}}$

for our encoding of

${\textit {tr}}\in {\mathbf{P}}$

for our encoding of

![]() $N_3$

triples. If

$N_3$

triples. If

![]() $p\in {\mathbf{P}}$

and

$p\in {\mathbf{P}}$

and

![]() $t_{1},t_{2},\ldots ,t_{\mathit{ar}(p)}$

is a list of terms (i.e., each

$t_{1},t_{2},\ldots ,t_{\mathit{ar}(p)}$

is a list of terms (i.e., each

![]() $t_{i}\in {\mathbf{C}}\cup {\mathbf{N}}\cup {\mathbf{V}}$

),

$t_{i}\in {\mathbf{C}}\cup {\mathbf{N}}\cup {\mathbf{V}}$

),

![]() $p(t_{1},t_{2},\ldots ,t_{\mathit{ar}(p)})$

is called an atom. We often use

$p(t_{1},t_{2},\ldots ,t_{\mathit{ar}(p)})$

is called an atom. We often use

![]() $\mathbf{t}$

to summarize a term list like

$\mathbf{t}$

to summarize a term list like

![]() $t_{1},\ldots ,t_{n}$

(

$t_{1},\ldots ,t_{n}$

(

![]() $n\in \mathbb{N}$

), and treat it as a set whenever order is irrelevant. An atom

$n\in \mathbb{N}$

), and treat it as a set whenever order is irrelevant. An atom

![]() $p(\mathbf{t})$

is ground if

$p(\mathbf{t})$

is ground if

![]() $\mathbf{t} \subseteq {\mathbf{C}}$

. An instance is a (possibly infinite) set

$\mathbf{t} \subseteq {\mathbf{C}}$

. An instance is a (possibly infinite) set

![]() $\mathcal{I}$

of variable-free atoms and a finite set of ground atoms

$\mathcal{I}$

of variable-free atoms and a finite set of ground atoms

![]() ${\mathcal{D}}$

is called a database.

${\mathcal{D}}$

is called a database.

For a set of atoms

![]() ${\mathcal{I}}[A]$

and an instance

${\mathcal{I}}[A]$

and an instance

![]() $\mathcal{I}$

, we call a function

$\mathcal{I}$

, we call a function

![]() $h$

from the terms occurring in

$h$

from the terms occurring in

![]() ${\mathcal{I}}[A]$

to the terms in

${\mathcal{I}}[A]$

to the terms in

![]() $\mathcal{I}$

a homomorphism from

$\mathcal{I}$

a homomorphism from

![]() ${\mathcal{I}}[A]$

to

${\mathcal{I}}[A]$

to

![]() $\mathcal{I}$

, denoted by

$\mathcal{I}$

, denoted by

![]() $h \;:\; {\mathcal{I}}[A]\to {\mathcal{I}}$

, if (1)

$h \;:\; {\mathcal{I}}[A]\to {\mathcal{I}}$

, if (1)

![]() $h(c)=c$

for all

$h(c)=c$

for all

![]() $c\in {\mathbf{C}}$

(occurring in

$c\in {\mathbf{C}}$

(occurring in

![]() ${\mathcal{I}}[A]$

), and (2)

${\mathcal{I}}[A]$

), and (2)

![]() $p(\mathbf{t})\in {\mathcal{I}}[A]$

implies

$p(\mathbf{t})\in {\mathcal{I}}[A]$

implies

![]() $p(h(\mathbf{t}))\in {\mathcal{I}}$

. If any homomorphism from

$p(h(\mathbf{t}))\in {\mathcal{I}}$

. If any homomorphism from

![]() ${\mathcal{I}}[A]$

to

${\mathcal{I}}[A]$

to

![]() $\mathcal{I}$

exists, write

$\mathcal{I}$

exists, write

![]() ${\mathcal{I}}[A]\to {\mathcal{I}}$

. Please note that if

${\mathcal{I}}[A]\to {\mathcal{I}}$

. Please note that if

![]() $n$

is a null occurring in

$n$

is a null occurring in

![]() ${\mathcal{I}}[A]$

, then

${\mathcal{I}}[A]$

, then

![]() $h(n)$

may be a constant or null.

$h(n)$

may be a constant or null.

For an (existential) rule

![]() $r\colon \forall \mathbf{x}, \mathbf{y} .\ \varphi [\mathbf{x},\mathbf{y}] \rightarrow \exists \mathbf{z} .\ \psi [\mathbf{y},\mathbf{z}]$

(cf. (5)), rule body (

$r\colon \forall \mathbf{x}, \mathbf{y} .\ \varphi [\mathbf{x},\mathbf{y}] \rightarrow \exists \mathbf{z} .\ \psi [\mathbf{y},\mathbf{z}]$

(cf. (5)), rule body (

![]() ${\textsf {body}(r)} := \varphi$

) and head (

${\textsf {body}(r)} := \varphi$

) and head (

![]() ${\textsf {head}(r)} := \psi$

) will also be considered as sets of atoms for a more compact representation of the semantics. The notation

${\textsf {head}(r)} := \psi$

) will also be considered as sets of atoms for a more compact representation of the semantics. The notation

![]() $\varphi [\mathbf{x},\mathbf{y}]$

(

$\varphi [\mathbf{x},\mathbf{y}]$

(

![]() $\psi [\mathbf{y},\mathbf{z}]$

, resp.) indicates that the only variables occurring in

$\psi [\mathbf{y},\mathbf{z}]$

, resp.) indicates that the only variables occurring in

![]() $\varphi$

(

$\varphi$

(

![]() $\psi$

, resp.) are

$\psi$

, resp.) are

![]() $\mathbf{x}\cup \mathbf{y}$

(

$\mathbf{x}\cup \mathbf{y}$

(

![]() $\mathbf{y}\cup \mathbf{z}$

, resp.). A finite set of existential rules

$\mathbf{y}\cup \mathbf{z}$

, resp.). A finite set of existential rules

![]() $\Sigma$

is called an (existential) rule program.

$\Sigma$

is called an (existential) rule program.

Let

![]() $r$

be a rule and

$r$

be a rule and

![]() $\mathcal{I}$

an instance. We call a homomorphism

$\mathcal{I}$

an instance. We call a homomorphism

![]() $h \;:\; {\textsf {body}(r)} \to {\mathcal{I}}$

a match for

$h \;:\; {\textsf {body}(r)} \to {\mathcal{I}}$

a match for

![]() $r$

in

$r$

in

![]() $\mathcal{I}$

. Match

$\mathcal{I}$

. Match

![]() $h$

is satisfied for

$h$

is satisfied for

![]() $r$

in

$r$

in

![]() $\mathcal{I}$

if there is an extension

$\mathcal{I}$

if there is an extension

![]() $h^{\star }$

of

$h^{\star }$

of

![]() $h$

(i.e.,

$h$

(i.e.,

![]() $h\subseteq h^{\star }$

) such that

$h\subseteq h^{\star }$

) such that

![]() $h^{\star }({\textsf {head}(r)})\subseteq {\mathcal{I}}$

. If all matches of

$h^{\star }({\textsf {head}(r)})\subseteq {\mathcal{I}}$

. If all matches of

![]() $r$

are satisfied in

$r$

are satisfied in

![]() $\mathcal{I}$

, we say that r is satisfied in

$\mathcal{I}$

, we say that r is satisfied in

![]() $\mathcal{I}$

, denoted by

$\mathcal{I}$

, denoted by

![]() ${\mathcal{I}}\models r$

. For a rule program

${\mathcal{I}}\models r$

. For a rule program

![]() $\Sigma$

and database

$\Sigma$

and database

![]() ${\mathcal{D}}$

, instance

${\mathcal{D}}$

, instance

![]() $\mathcal{I}$

is a model of

$\mathcal{I}$

is a model of

![]() $\Sigma$

and

$\Sigma$

and

![]() ${\mathcal{D}}$

, denoted by

${\mathcal{D}}$

, denoted by

![]() ${\mathcal{I}} \models \Sigma ,{\mathcal{D}}$

, if

${\mathcal{I}} \models \Sigma ,{\mathcal{D}}$

, if

![]() ${\mathcal{D}}\subseteq {\mathcal{I}}$

and

${\mathcal{D}}\subseteq {\mathcal{I}}$

and

![]() ${\mathcal{I}}\models r$

for each

${\mathcal{I}}\models r$

for each

![]() $r\in \Sigma$

.

$r\in \Sigma$

.

Labeled nulls play the role of fresh constants without further specification, just like blank nodes in RDF or

![]() $N_3$

. The chase is a family of algorithms that soundly produces models of rule programs by continuously applying rules for unsatisfied matches. Rule heads are then instantiated and added to the instance. Existentially quantified variables are thereby replaced by (globally) fresh nulls in order to facilitate arbitrary constant injections. More formally, we call a sequence

$N_3$

. The chase is a family of algorithms that soundly produces models of rule programs by continuously applying rules for unsatisfied matches. Rule heads are then instantiated and added to the instance. Existentially quantified variables are thereby replaced by (globally) fresh nulls in order to facilitate arbitrary constant injections. More formally, we call a sequence

![]() ${\mathcal{D}}^0 {\mathcal{D}}^1 {\mathcal{D}}^2 \ldots$

a chase sequence of rule program

${\mathcal{D}}^0 {\mathcal{D}}^1 {\mathcal{D}}^2 \ldots$

a chase sequence of rule program

![]() $\Sigma$

and database

$\Sigma$

and database

![]() ${\mathcal{D}}$

if (1)

${\mathcal{D}}$

if (1)

![]() ${\mathcal{D}}^0 = {\mathcal{D}}$

and (2) for

${\mathcal{D}}^0 = {\mathcal{D}}$

and (2) for

![]() $i \gt 0$

,

$i \gt 0$

,

![]() ${\mathcal{D}}^i$

is obtained from

${\mathcal{D}}^i$

is obtained from

![]() ${\mathcal{D}}^{i-1}$

by applying a rule

${\mathcal{D}}^{i-1}$

by applying a rule

![]() $r\in \Sigma$

for match

$r\in \Sigma$

for match

![]() $h$

in

$h$

in

![]() ${\mathcal{D}}^{i-1}$

(i.e.,

${\mathcal{D}}^{i-1}$

(i.e.,

![]() $h \;:\; {\textsf {body}(r)} \to {\mathcal{D}}^{i-1}$

is an unsatisfied match and

$h \;:\; {\textsf {body}(r)} \to {\mathcal{D}}^{i-1}$

is an unsatisfied match and

![]() ${\mathcal{D}}^i = {\mathcal{D}}^{i-1} \cup \{ h^{\star }({\textsf {head}(r)}) \}$

for an extension

${\mathcal{D}}^i = {\mathcal{D}}^{i-1} \cup \{ h^{\star }({\textsf {head}(r)}) \}$

for an extension

![]() $h^{\star }$

of

$h^{\star }$

of

![]() $h$

). The chase of

$h$

). The chase of

![]() $\Sigma$

and

$\Sigma$

and

![]() ${\mathcal{D}}$

is the limit of a chase sequence

${\mathcal{D}}$

is the limit of a chase sequence

![]() ${\mathcal{D}}^0 {\mathcal{D}}^1 {\mathcal{D}}^2 \ldots$

, that is

${\mathcal{D}}^0 {\mathcal{D}}^1 {\mathcal{D}}^2 \ldots$

, that is

![]() $\bigcup _{i\geq 0} {\mathcal{D}}^0$

. Although chase sequences are not necessarily finite,Footnote

11

the chase always is a (possibly infinite) modelFootnote

12

(Deutsch et al. Reference Deutsch, Nash, Remmel, Lenzerini and Lembo2008). The described version of the chase is called standard chase or restricted chase.

$\bigcup _{i\geq 0} {\mathcal{D}}^0$

. Although chase sequences are not necessarily finite,Footnote

11

the chase always is a (possibly infinite) modelFootnote

12

(Deutsch et al. Reference Deutsch, Nash, Remmel, Lenzerini and Lembo2008). The described version of the chase is called standard chase or restricted chase.

We say that two rule programs

![]() $\Sigma _{1}$

and

$\Sigma _{1}$

and

![]() $\Sigma _{2}$

are equivalent, denoted

$\Sigma _{2}$

are equivalent, denoted

![]() $\Sigma _{1} {\mathrel {\leftrightarrows }} \Sigma _{2}$

, if for all instances

$\Sigma _{1} {\mathrel {\leftrightarrows }} \Sigma _{2}$

, if for all instances

![]() $\mathcal{I}$

,

$\mathcal{I}$

,

![]() ${\mathcal{I}}\models \Sigma _{1}$

if and only if

${\mathcal{I}}\models \Sigma _{1}$

if and only if

![]() ${\mathcal{I}}\models \Sigma _{2}$

. Equivalences of existential rules have been extensively studied in the framework of data exchange (Fagin et al. Reference Fagin, Kolaitis, Nash and Popa2008; Pichler et al. Reference Pichler, Sallinger and Savenkov2011). Our equivalence is very strong and is called logical equivalence in the data exchange literature. For an alternative equivalence relation between rule programs, we could have equally considered equality of ground models (i.e., those models that are null-free). Let us define this equivalence as follows:

${\mathcal{I}}\models \Sigma _{2}$

. Equivalences of existential rules have been extensively studied in the framework of data exchange (Fagin et al. Reference Fagin, Kolaitis, Nash and Popa2008; Pichler et al. Reference Pichler, Sallinger and Savenkov2011). Our equivalence is very strong and is called logical equivalence in the data exchange literature. For an alternative equivalence relation between rule programs, we could have equally considered equality of ground models (i.e., those models that are null-free). Let us define this equivalence as follows:

![]() $\Sigma _{1} {\mathrel {\leftrightarrows }}_{g} \Sigma _{2}$

if for each ground instance

$\Sigma _{1} {\mathrel {\leftrightarrows }}_{g} \Sigma _{2}$

if for each ground instance

![]() $\mathcal{I}$

,

$\mathcal{I}$

,

![]() ${\mathcal{I}}\models \Sigma _{1}$

if and only if

${\mathcal{I}}\models \Sigma _{1}$

if and only if

![]() ${\mathcal{I}}\models \Sigma _{2}$

. The following lemma helps simplifying the proofs concerning the correctness of our transformationFootnote

13

function later on.

${\mathcal{I}}\models \Sigma _{2}$

. The following lemma helps simplifying the proofs concerning the correctness of our transformationFootnote

13

function later on.

Lemma 4.1.

![]() $\mathrel {\leftrightarrows }$

and

$\mathrel {\leftrightarrows }$

and

![]() ${\mathrel {\leftrightarrows }}_{g}$

coincide.

${\mathrel {\leftrightarrows }}_{g}$

coincide.

Proof.

Of course,

![]() ${\mathrel {\leftrightarrows }}\subseteq {\mathrel {\leftrightarrows }}_{g}$

holds since since the set of all ground models of a rule program is a subset of all models of that program.

${\mathrel {\leftrightarrows }}\subseteq {\mathrel {\leftrightarrows }}_{g}$

holds since since the set of all ground models of a rule program is a subset of all models of that program.

Towards showing

![]() ${\mathrel {\leftrightarrows }}_{g}\subseteq {\mathrel {\leftrightarrows }}$

, assume rule programs

${\mathrel {\leftrightarrows }}_{g}\subseteq {\mathrel {\leftrightarrows }}$

, assume rule programs

![]() $\Sigma _{1}$

and

$\Sigma _{1}$

and

![]() $\Sigma _{2}$

such that

$\Sigma _{2}$

such that

![]() $\Sigma _{1}{\mathrel {\leftrightarrows }}_{g}\Sigma _{2}$

, but

$\Sigma _{1}{\mathrel {\leftrightarrows }}_{g}\Sigma _{2}$

, but ![]() . Then there is a model

. Then there is a model

![]() ${\mathcal{I}}[M]$

of

${\mathcal{I}}[M]$

of

![]() $\Sigma _{1}$

, such that

$\Sigma _{1}$

, such that ![]() (or vice versa), implying that for some rule

(or vice versa), implying that for some rule

![]() $r\in \Sigma _{2}$

there is a match

$r\in \Sigma _{2}$

there is a match

![]() $h$

in

$h$

in

![]() ${\mathcal{I}}[M]$

but for no extension

${\mathcal{I}}[M]$

but for no extension

![]() $h^{\star }$

, we get

$h^{\star }$

, we get

![]() $h^{\star }({\textsf {head}(r)})\subseteq {\mathcal{I}}[M]$

. As

$h^{\star }({\textsf {head}(r)})\subseteq {\mathcal{I}}[M]$

. As

![]() $\Sigma _{1}{\mathrel {\leftrightarrows }}_{g} \Sigma _{2}$

,

$\Sigma _{1}{\mathrel {\leftrightarrows }}_{g} \Sigma _{2}$

,

![]() ${\mathcal{I}}[M]$

cannot be a ground instance and, thus, contains at least one null.

${\mathcal{I}}[M]$

cannot be a ground instance and, thus, contains at least one null.

Claim: Because of

![]() ${\mathcal{I}}[M]$

, there is a ground instance

${\mathcal{I}}[M]$

, there is a ground instance

![]() ${\mathcal{I}}[M]_{g}$

, such that

${\mathcal{I}}[M]_{g}$

, such that

![]() ${\mathcal{I}}[M]_{g}\models \Sigma _{1}$

and

${\mathcal{I}}[M]_{g}\models \Sigma _{1}$

and ![]() . But then

. But then

![]() ${\mathcal{I}}[M]_{g}$

constitutes a counterexample to the assumption that

${\mathcal{I}}[M]_{g}$

constitutes a counterexample to the assumption that

![]() $\Sigma _{1}{\mathrel {\leftrightarrows }}_{g} \Sigma _{2}$

. Thus, the assumption

$\Sigma _{1}{\mathrel {\leftrightarrows }}_{g} \Sigma _{2}$

. Thus, the assumption ![]() would be disproven.

would be disproven.

In order to show the claim, we construct

![]() ${\mathcal{I}}[M]_{g}$

from

${\mathcal{I}}[M]_{g}$

from

![]() ${\mathcal{I}}[M]$

by replacing every null

${\mathcal{I}}[M]$

by replacing every null

![]() $n$

in

$n$

in

![]() ${\mathcal{I}}[M]$

by a (globally) fresh constant

${\mathcal{I}}[M]$

by a (globally) fresh constant

![]() $c_{n}$

. Since there might not be enough constants –

$c_{n}$