1. Introduction

Traditional auto-insurance ratemaking relies on driver and vehicle characteristics—such as driver’s age, driving experience, and vehicle specifications—for risk classification, claim prediction, and premium determination. These ‘traditional’ covariates provide a static representation of risk but fail to capture real-time driving behaviour that significantly influence accident likelihood. The rise of telematics, enabled by on-board diagnostics systems and smartphone applications, has introduced granular data on speed, acceleration, and GPS location. Such data offer a dynamic perspective on risk and are increasingly adopted by insurers to supplement traditional models, with the potential to enhance pricing accuracy and fairness (Eling and Kraft Reference Eling and Kraft2020).

However, the use of telematics data faces two primary challenges. First, identifying meaningful features from high-frequency telematics data is complex. Traditional feature engineering aggregates metrics like harsh braking, cornering events, or time spent driving on specific road types (see Paefgen et al. Reference Paefgen, Staake and Fleisch2014, Verbelen et al. Reference Verbelen, Antonio and Claeskens2018, Bian et al. Reference Bian, Yang, Zhao and Liang2018, Jin et al. Reference Jin, Deng, Jiang, Xie, Shen and Han2018, Denuit et al. (Reference Denuit, Guillen and Trufin2019), Ayuso et al. Reference Ayuso, Guillen and Nielsen2019, Huang and Meng Reference Huang and Meng2019, Sun et al. Reference Sun, Bi, Guillen and Pérez-Marn2020, Longhi and Nanni Reference Longhi and Nanni2020), but these approaches often neglect critical temporal patterns, trends, and interactions between telematics variables. Emerging methods, such as speed-acceleration heatmaps (Wüthrich Reference Wüthrich2017, Gao et al. Reference Gao, Meng and Wüthrich2019, Gao et al. Reference Gao, Wang and Wüthrich2022) and time-series modelling with neural networks (Fang et al. Reference Fang, Yang, Zhang, Xie, Wang, Yang, Zhang and Zhang2021), have been proposed to analyse raw telematics data. While promising, these techniques demand careful feature design and interpretation, particularly for insurance applications.

Second, integrating telematics into insurance models requires balancing the granular nature of telematics data with the sparsity of claims data. Accidents are rare and claims are often aggregated over policy periods, whereas telematics data are recorded continuously and at high frequency. Most existing studies address this mismatch by aggregating telematics metrics at the trip level and regressing annual claim probabilities and frequencies on these features. However, these approaches often assume stable driving behaviour over time, failing to capture the variability and evolving patterns in risk. These limitations necessitate new methods that preserve the richness of telematics data and perform risk evaluation on a more granular level.

We would like to highlight that this study is not a typical actuarial paper focused on modelling claim numbers or developing pricing frameworks. Rather, it aims to understand driving behaviour using telematics data, detect anomalies, and evaluate risk at both the trip and driver levels. While the insights gained can benefit actuarial applications such as pricing and risk classification, the primary objective is to advance methodological approaches for telematics-based risk analysis, rather than directly linking driving behaviour to claims frequency or premium determination. Moreover, all the analysis presented in this paper is based solely on telematics data—no classical covariates commonly used in Property and Casualty (P&C) insurance are considered. This is a significant contribution, as we demonstrate that meaningful insights and effective risk analysis can be achieved without relying on traditional covariates. By shifting the focus to directly observable driving behaviour, our approach provides a data-driven alternative that may potentially avoid fairness-related concerns associated with demographic characteristics (e.g., gender, race) and socioeconomic status. While fairness in insurance is often framed as a pricing problem, we emphasize that fairness begins at the risk assessment stage, which ultimately informs pricing decisions. Studies have shown that telematics data can capture behavioural differences that correlate with risk, reducing the need for demographic proxies. For example, Ayuso et al. Reference Ayuso, Guillen and Pérez-Marn2016 demonstrated that average distance travelled per day can replace gender in risk assessment. While we do not explicitly test such substitutions, our findings demonstrate that risk differentiation is possible using telematics data alone. By focusing solely on driving behaviour, our approach provides a more individualized and transparent framework for risk evaluation, independent of classical covariates. While integrating such methods into pricing models is beyond the scope of this study, future research could explore their potential role in fairer insurance pricing.

This work tackles the aforementioned challenges by addressing three specific problems. First, we would like to define an anomaly index to quantify the level of anomalousness of each trip, capturing deviations from normal driving behaviour. Driving behaviour such as aggressive driving, drunk driving, and fatigued driving are well-known contributors to accidents (Deng and Söffker, Reference Deng and Söffker2022). Existing studies on driving behaviour recognition and prediction often focus on distinguishing between specific behaviour, leveraging labelled datasets where driving conditions or behaviour are explicitly known. However, in real-world scenarios, such labels are rarely available, limiting the applicability of these methods. To overcome this, we do not target specific behaviour and instead, categorize diverse accident-prone behaviour broadly as ‘abnormal’ behaviour. This motivates the need for a generalized anomaly detection method that can operate without reliance on labelled data.

Second, by detecting anomalous driving behaviour, we aim to identify trips associated with accidents. When claims involving accidents are reported, they often include only the date of loss, leaving the specific trip unknown. Pinpointing the exact trip enhances claims investigations, uncovers contributing factors, and supports preventative measures to reduce future occurrences. Furthermore, accident detection improves insurance pricing by differentiating risk profiles, rewarding safer drivers, and pricing riskier behaviour appropriately. From a fleet management perspective, identifying accident-prone trips enables proactive strategies such as targeted driver training, route optimization, and maintenance scheduling, ultimately leading to increased operational efficiency, reduced costs, and safer roads. While our analysis in this work does not explicitly include location data, future research could extend our model to incorporate GPS coordinates, enabling route optimization based on trip duration, route length, and anomalous driving behaviour. Similarly, for maintenance scheduling, our anomaly detection framework could flag trips that are outliers, signalling potential accidents or vehicle issues. A rising trend in overall anomaly levels could also suggest the need for vehicle inspections and repairs. While these applications would benefit from additional data, such as real-time traffic conditions and vehicle characteristics, our approach provides a foundation for enhancing fleet management through data-driven insights.

Third, we would like to evaluate risk at both the trip and driver levels. Not all trips by a claimed driver are dangerous, nor are all trips by a no-claim driver safe. A more granular, trip-based risk evaluation allows for dynamic updates to risk profiles, as well as improved risk classification that are not solely based on claims history. Rather than solely identifying accident-related trips, our anomaly detection method assesses deviations from typical driving behaviour, providing a broader measure of driving risk. Importantly, a trip without an accident does not necessarily mean it was completely safe—risky manoeuvres and near-misses (e.g., Arai et al. Reference Arai, Nishimoto, Ezaka and Yoshimoto2001, Guillen et al. Reference Guillen, Nielsen, Pérez-Marn and Elpidorou2020, Guillen et al. Reference Guillen, Nielsen and Pérez-Marn2021, Sun et al. Reference Sun, Bi, Guillen and Pérez-Marn2021) can occur without resulting in a recorded crash. These events are strong indicators of increased risk and should be accounted for when evaluating driving behaviour. Moreover, risk assessment must extend beyond accident identification, as even claim-free drivers need to be ranked by their riskiness. While a simpler classification model might suffice for distinguishing accident-related trips with precise accident labels, our approach applies to all trips, regardless of whether an accident occurs. Accident-related trips are used to establish the relationship between the anomaly index and accident likelihood, allowing us to predict accident probability and assess trip riskiness for trips with no accidents. This ensures that our method provides a more comprehensive evaluation of risk, capturing both actual accidents and high-risk driving patterns that could lead to future incidents. Trip-level risk assessments can then be aggregated to the driver level by evaluating extreme behaviour and focusing on the right-tail of the risk distribution. This approach enables better identification of claim-prone individuals and more effective differentiation of risk for insurance ratemaking purposes.

We tackle these problems by proposing a flexible framework that uses continuous-time hidden Markov models (CTHMMs) to model and analyse trip-level telematics time series. By modelling multivariate telematics time series — speed, longitudinal acceleration, and lateral acceleration—the CTHMM exploits the time-series structure of telematics and captures the dependencies between different variables. This enables the detection of abnormal driving patterns and their connection to risk, providing detailed and novel insights into driver risk profiles. Importantly, the CTHMM accommodates unevenly spaced time intervals in telematics data, fully utilizing data granularity without interpolation, which could otherwise distort driving behaviour patterns.

Hidden Markov models (HMMs), as dynamic mixture models supported by extensive statistical theories, offer significant flexibility and have been applied to various domains, including speech recognition, finance, and bioinformatics. HMMs have also been widely used to study driving behaviour. Deng and Söffker (Reference Deng and Söffker2022) conducted a survey of HMM-based approaches in driving behaviour recognition and prediction, especially in the development of advanced driver assistance systems (ADAS), and we refer interested readers to an extensive lists of literature therein. However, the complex structure of real-world telematics data presents unique challenges: irregular time intervals pose difficulties for data modelling, while boundary points complicate anomaly detection. Most of the reviewed literature uses discrete-time HMMs, which struggle with these challenges, demanding modifications to the standard HMM framework.

In the context of actuarial science, the study of vehicle telematics at the trip level is relatively new. To our best knowledge, Jiang and Shi (Reference Jiang and Shi2024) is the only other work that also applied HMMs to model trip-level telematics data. However, their approach and ours differ substantially. Jiang and Shi (Reference Jiang and Shi2024) employed discrete-time HMMs to model driving behaviour that have been categorized based on predefined thresholds (e.g., hard braking, sharp turns) and analysed each trip’s (latent) state durations with assumed probabilities for claim occurrences. In contrast, our CTHMM models numerical telematics data directly in a continuous-time setting, accounting for the irregular time intervals while avoiding hard thresholds. Additionally, our analysis is based on anomaly detection, which does not require explicit state interpretations. Instead, we link claims to the proportion of anomalies across telematics dimensions using logistic models, without assuming any direct relationship between claim probabilities and latent states.

We further developed a generalized anomaly detection method using a fitted CTHMM. Traditional HMM-based anomaly detection methods rely on labelled normal sequences for training (Khreich et al. Reference Khreich, Granger, Sabourin and Miri2009, Li et al. Reference Li, Pedrycz and Jamal2017, Leon-Lopez et al. Reference Leon-Lopez, Mouret, Arguello and Tourneret2021) and identify anomalies based on low likelihoods of observations (Khreich et al. Reference Khreich, Granger, Sabourin and Miri2009, Florez-Larrahondo et al. Reference Florez-Larrahondo, Bridges and Vaughn2005, Goernitz et al. Reference Goernitz, Braun, Kloft, Bach and Blei2015). However, not only is labelled data often unavailable, defining thresholds for low likelihoods is also challenging, especially when telematics sequences vary in length. Our method overcomes these limitations by unsupervisedly learning the most prevalent transitions and observations across trips. We quantify anomaly level based on the proportion of outliers within each trip, introducing the concepts of the ‘anomaly index’ and the ‘normalized anomaly index’. Through a controlled study and a real data application, we show that the method is effective in identifying outliers and detecting deviations in driving behaviour, proving its practicality in telematics-based risk analysis.

Anomaly detection can be performed at either the driver level using an individual-specific CTHMM, or at the group level using a pooled CTHMM. The individual-specific model captures each driver’s unique, normal driving patterns and identifies anomalous trips that deviate from these norms. For instance, we find that a higher anomaly index in longitudinal acceleration correlates with aggressive driving, while increased anomaly indices in both longitudinal and lateral accelerations are strongly linked to a higher probability of accident involvement. Classification models based on these features achieve ROC-AUCs (areas under the receiver operating characteristic curve) of at least 0.8, demonstrating the effectiveness of our approach in identifying high-risk trips. To the best of our knowledge, there are no existing classification models in the actuarial science literature specifically designed to identify accident-related trips. Given this, our result is particularly notable, as values above 0.8 are generally considered to indicate excellent classification performance.

In the context of trip-level risk evaluation, Meng et al. (Reference Meng, Wang, Shi and Gao2022) proposed an alternative scoring method using telematics data. However, their approach imposes stricter preprocessing requirements, such as focusing only on speed ranges between 10 and 60 ![]() $km/h$, analysing the first five minutes of each trip, and limiting the analysis to a fixed number of trips per driver. In contrast, our methodology considers all non-zero speed intervals, evaluates entire trips, and accommodates varying trip lengths and counts, capturing richer temporal dynamics. Furthermore, the training datasets used for trip-level evaluations differ fundamentally. Meng et al. (Reference Meng, Wang, Shi and Gao2022) used Poisson generalized linear model (GLM) deviance residuals to classify drivers as risky or safe, labelling all trips from high-residual drivers as risky and those from high-exposure, claimless drivers as safe. In comparison, our individual-specific CTHMM approach targets trips associated with accidents and at-fault claims, based on analysis on accelerations and speed. While one claim occurred in each of the studied 3-day windows, we do not assume trips in these windows to be equally risky.

$km/h$, analysing the first five minutes of each trip, and limiting the analysis to a fixed number of trips per driver. In contrast, our methodology considers all non-zero speed intervals, evaluates entire trips, and accommodates varying trip lengths and counts, capturing richer temporal dynamics. Furthermore, the training datasets used for trip-level evaluations differ fundamentally. Meng et al. (Reference Meng, Wang, Shi and Gao2022) used Poisson generalized linear model (GLM) deviance residuals to classify drivers as risky or safe, labelling all trips from high-residual drivers as risky and those from high-exposure, claimless drivers as safe. In comparison, our individual-specific CTHMM approach targets trips associated with accidents and at-fault claims, based on analysis on accelerations and speed. While one claim occurred in each of the studied 3-day windows, we do not assume trips in these windows to be equally risky.

Conversely, the pooled model learns common, normal driving patterns across a population. It detects anomalous trips among drivers and facilitates the comparison and ranking of drivers based on their level of anomalousness. Our real-data analysis reveals notable differences in driving behaviour between drivers with at-fault claims and those without claims. While trips by claimed drivers exhibit lower average anomaly indices, their maximum anomaly indices attained are consistently higher—over time, drivers in the claimed group demonstrate more extreme behaviour than those in the no-claim group. Using the right tail of each driver’s anomaly index empirical distribution to classify drivers, our models achieve ROC-AUCs ranging from 0.7 to 0.78, with improved results when incorporating exposure (number of trips). These results are competitive with existing studies on policy-level claim classification incorporating telematics data, where most models report ROC-AUCs (if available) between 0.58 and 0.62 (Paefgen et al. Reference Paefgen, Staake and Fleisch2014, Baecke and Bocca Reference Baecke and Bocca2017, Bian et al. Reference Bian, Yang, Zhao and Liang2018, Jin et al. Reference Jin, Deng, Jiang, Xie, Shen and Han2018, Huang and Meng Reference Huang and Meng2019, Duval et al. Reference Duval, Boucher and Pigeon2022, Duval et al. Reference Duval, Boucher and Pigeon2023). The highest reported ROC-AUC in this domain is 0.80, achieved by Li et al. (Reference Li, Luo, Zhang, Jiang and Huang2023), which also relies solely on telematics data, but incorporates a much broader set of features, including annual mileage, number of trips, exposure to different times of the day and days of the week, and harsh events, etc. More broadly, models based only on traditional covariates generally perform the worst, followed by those relying only on telematics data, with the best results achieved when both sources are combined. These comparisons further highlight the strength of our anomaly-based approach, as it achieves comparable or superior performance using only a minimal set of telematics features. We emphasize that our risk evaluation relies exclusively on telematics data, as traditional covariates regarding the driver or vehicle are unavailable. Nevertheless, our ROC-AUCs outperform the reported industry benchmark of 0.65, demonstrating the effectiveness of our methodology.

The remainder of this paper is organized as follows. Section 2 gives an overview of the telematics dataset analysed in this work and discusses the associated data challenges which motivate the proposed modelling. Section 3 introduces the continuous-time hidden Markov model (CTHMM) framework for modelling trip-level telematics data and outlines the proposed anomaly detection method. Section 4 details the estimation algorithm. Section 5 validates the framework and methodology through a controlled study, while Section 6 applies the model to real-world data, focusing on accident-related trips and driver behaviour. Finally, Section 7 concludes the paper with a discussion of future research direction.

2. Data description and challenges

This section describes the real-world telematics dataset we will study throughout this paper and the associated challenges which have motivated our proposed modelling.

2.1. Overview of data and challenges

We analyse a rental car dataset from Australia focused on medium- to long-term rentals, with a median duration of 1 month and a mean of 2 months. For each trip, telematics information are recorded at irregular time intervals: timestamp, GPS locations (latitude and longitude) and speed. This raw telematics data allow the calculation of time lapse (time since the last observation) and lateral and longitudinal accelerations. The dataset also includes claims reported between January 1, 2019 and January 31, 2023.

The first challenge with this dataset is the lack of labels. On one hand, we do not have labels regarding the driving behaviour (e.g., normal or aggressive) nor the riskiness of each trip. On the other hand, in cases where the rental results in claims and the date of loss is known, we do not know exactly in which trip the accident or damage occurred. Therefore, we will employ unsupervised learning for trip telematics modelling.

Moreover, this dataset poses another challenge, which is the lack of traditional covariates, such as driver-specific or vehicle-specific characteristics commonly used in risk modelling. As a result, we focus solely on telematics data to assess driving risk. First, we would like to use the proposed model to study and analyse various driving behaviour. However, driving behaviour can be influenced by many factors, such as the driver’s experience, habits and the current traffic environment, leading to considerable heterogeneity. Furthermore, driving behaviour can be categorized into different driving styles, such as normal, cautious, and aggressive (Ma et al. Reference Ma, Li, Tang, Zhang and Chen2021). However, there is no unified standard to clearly distinguish these styles. Deng and Söffker (Reference Deng and Söffker2022) pointed out that in existing literature, the levels, terms, and concepts often depend on the authors’ own definitions. For this reason, instead of pre-specifying the different behaviour, we let the fitted model inform us what the various types of behaviour and the transitions between them are. This is similar to the study of animal movements (Michelot et al. Reference Michelot, Langrock and Patterson2016, Whoriskey et al. Reference Whoriskey, Auger-Méthé, Albertsen, Whoriskey, Binder, Krueger and Mills Flemming2017, Grecian et al. Reference Grecian, Lane, Michelot, Wade and Hamer2018). Second, while claims records are available for the dataset under consideration, this is not typically the case for our industry collaborator’s other datasets. Yet, telematics datasets without claims records are exactly where we would like to apply our proposed methodology for risk evaluation. The absence of claims or accidents does not imply an absence of risk, as driving risk is not constant even when no claims or accidents occur. Hence, we require a model that can learn and analyse driving behaviour without supervision and quantify the riskiness of each trip independently of claims data. Using the available claims records, we further validate that the anomaly indices are positively associated with accident involvement, demonstrating that our method can effectively assess driving risk in both settings.

There are two more challenges regarding the structure of the telematics records. First, the time series are irregular, where the sequence of data points is recorded at unevenly spaced time intervals. The reason is that our collaborator employs a patented curve logging technique in its telematics tracking system, which eliminates intermediate data points when changes are ‘insignificant’, to reduce the burden of data transmission and storage. In the dataset, this technique has been applied to both GPS locations logging and speed logging, and consequently, the telematics time series become irregular. Although this technique eases data transmission and storage burden, analysis and modelling of irregular time series are more challenging because traditional time-series methods often assume a regular time interval between observations. One of the simplest and most straight-forward solutions is to choose a regular time interval (e.g., per second) and fill in missing data via linear interpolation. However, such method conflicts with the purpose of curve logging. Moreover, while we can return to traditional time-series analysis after linear interpolation, much of the modelling and computing effort will be placed on the interpolated points which provide no new information. For these reasons, we opt against using linear interpolation.

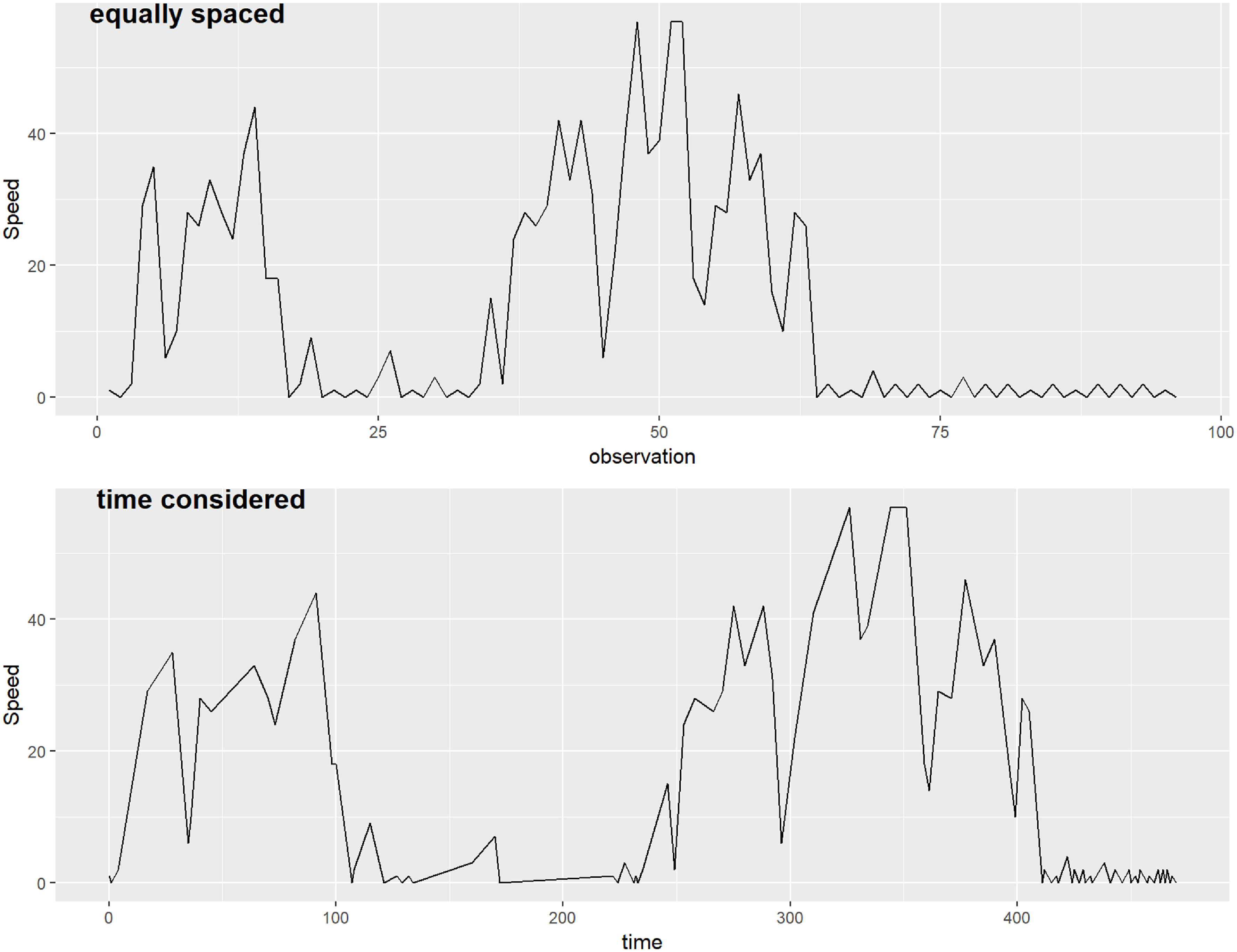

It is natural to question how important it is to consider the irregular time intervals, and how different the time series can be if these intervals are neglected. For illustrative purpose, Figure 1 shows the speed time series of a sample trip. The top sub-figure illustrates how the time series would appear if observations were assumed to be recorded at regular intervals. While the x-axis is labelled ‘observation’, it can also be interpreted as if the observations were collected at a fixed rate (e.g., one per second or at another constant interval). The bottom sub-figure represents the time series considering the actual timestamps (in seconds) of each observation, hence accounting for the irregular time intervals between observations; this reflects the true speed time series as it occurred in reality. For example, the small ‘V’ shape at the start of the trip consists of observations recorded approximately one second apart, whereas the observations centered around 200 seconds have a time gap of 50 seconds between them.

Figure 1. Speed time series (in ![]() $km/h$) of a sample trip. Top: assuming observations are recorded regularly; Bottom: considering the actual observation times (in seconds).

$km/h$) of a sample trip. Top: assuming observations are recorded regularly; Bottom: considering the actual observation times (in seconds).

We observe that the patterns are similar, since the ‘significant’ changes should have been captured by curve logging. However, the interpretation can be different, especially if we would like to study the different driving behaviour, such as the magnitudes of acceleration and braking. In this example, we observe that first, changes can seem less or more abrupt than they actually are; and second, the small fluctuations at the end of the trip appear to have a much longer duration if observation times are not considered (around 40% of the entire trip vs. less than 25% of the trip).

Another challenge with the telematics records is that the trips (and their time series) are of different lengths. A possible solution is dynamic time warping (DTW), which is a technique to align, compare and measure the similarity of time series of different lengths. However, DTW requires regular time series, hence it is not directly applicable to our data unless the time series have been linearly interpolated.

The three aforementioned challenges demand a flexible, unsupervised time series model, which can incorporate time series irregularity and of varying lengths. We propose the use of a time-homogeneous CTHMM, where different states of the latent Markov chain will represent various driving actions and can be inferred via the observed telematics data. Together, all these states will describe the prevalent driving behaviour observed in the telematics data.

2.2. Data preparation

In particular, we model the following telematics responses: time (implicitly incorporated in the hidden Markov chain transitions), speed, and acceleration, which we further decompose into longitudinal (acceleration and braking) and lateral (left and right turning) components. Longitudinal acceleration reflects forward and backward movements, capturing braking and acceleration patterns, while lateral acceleration represents side-to-side movements, associated with cornering and swerving (Rajamani Reference Rajamani2012, Gillespie Reference Gillespie2020). This decomposition provides a more detailed characterization of driving behaviour, allowing us to distinguish between different driving styles and risk factors. For example, high longitudinal acceleration may indicate aggressive driving or stop-and-go traffic, whereas high lateral acceleration could suggest sharp cornering or sudden lane changes, which may result from reckless maneuvering or defensive evasive actions. Moreover, this separation enhances anomaly detection, enabling the model to identify distinct types of risky events rather than treating all acceleration events uniformly.

For illustrative purposes, let us consider a trip starting at time ![]() $t_0$ and ending at time

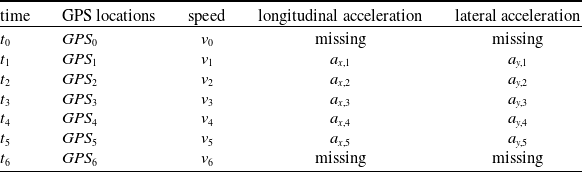

$t_0$ and ending at time ![]() $t_6$. We will employ the response alignments as shown in Table 1. To achieve the necessary structure, we first convert the GPS locations in latitude and longitude to Universal Transverse Mercator (UTM) coordinates, then we apply the following physics formula to obtain the longitudinal and lateral accelerations.

$t_6$. We will employ the response alignments as shown in Table 1. To achieve the necessary structure, we first convert the GPS locations in latitude and longitude to Universal Transverse Mercator (UTM) coordinates, then we apply the following physics formula to obtain the longitudinal and lateral accelerations.

Table 1. Telematics response alignments.

Let ![]() $\textbf{s} = (e, n)$ be the position of the vehicle at some time t, where e denotes the Easting and n denotes the Northing of the vehicle in UTM coordinates. From two consecutive UTM coordinates and the time interval in between, we calculate the velocity of the vehicle as

$\textbf{s} = (e, n)$ be the position of the vehicle at some time t, where e denotes the Easting and n denotes the Northing of the vehicle in UTM coordinates. From two consecutive UTM coordinates and the time interval in between, we calculate the velocity of the vehicle as

In the case of discrepancy between this velocity and the GPS speed, velocity is scaled such that the magnitude matches the latter speed. Hence, velocity will be missing at the start of the trip. Then from two consecutive velocities, the acceleration is given by

It is noteworthy that this acceleration is attributed to the initial time point ![]() $t_1$ as this is the (average) acceleration applied from time

$t_1$ as this is the (average) acceleration applied from time ![]() $t_1$ to

$t_1$ to ![]() $t_2$. Consequently, the acceleration values at both the start and end of the trip will be missing. Then the x-axis/longitudinal/tangential component of acceleration is

$t_2$. Consequently, the acceleration values at both the start and end of the trip will be missing. Then the x-axis/longitudinal/tangential component of acceleration is

and the y-axis/lateral/normal component of acceleration is

3. Modelling framework: Continuous-time hidden Markov models

In this section, we introduce the time-homogeneous, continuous-time hidden Markov model (CTHMM) used to model trip-level telematics data. Then, we outline an anomaly detection method to quantify the degree of deviation in driving behaviour demonstrated by each trip. Finally, we discuss some assumptions and adjustments made to ensure the CTHMM can effectively accommodate the complexities of telematics data and fulfill our analysis need.

We consider a population of M drivers. For driver i, ![]() $i = 1, 2, ..., M$, we have his/her telematics records over

$i = 1, 2, ..., M$, we have his/her telematics records over ![]() $N_i$ trips. For trip j,

$N_i$ trips. For trip j, ![]() $j = 1, ..., N_i$, the multi-dimensional telematics data

$j = 1, ..., N_i$, the multi-dimensional telematics data ![]() $\mathbf{y}_{ijl}$ are recorded at

$\mathbf{y}_{ijl}$ are recorded at ![]() $t_{ijl}$,

$t_{ijl}$, ![]() $l = 0, 1, ..., T_{ij}$, time points with irregular intervals.

$l = 0, 1, ..., T_{ij}$, time points with irregular intervals.

3.1. Model description

The CTHMM is driven by a hidden Markov chain. A continuous-time hidden Markov chain with S discrete latent states is defined by an initial state probability distribution ![]() $\pi$ and a state transition rate matrix

$\pi$ and a state transition rate matrix ![]() $\mathbf{Q}$. The elements

$\mathbf{Q}$. The elements ![]() $q_{uv}$ in

$q_{uv}$ in ![]() $\mathbf{Q}$ describe the rate the process transitions from states u to v for

$\mathbf{Q}$ describe the rate the process transitions from states u to v for ![]() $u \neq v$,

$u \neq v$, ![]() $u, v = 1,...,S$, and

$u, v = 1,...,S$, and ![]() $q_{uu} = - q_u = - \sum_{v\neq u} q_{uv}$. The chain is time homogeneous in the sense that

$q_{uu} = - q_u = - \sum_{v\neq u} q_{uv}$. The chain is time homogeneous in the sense that ![]() $q_{uv}$ are independent of time t. The probability that the process transitions from states u to v is

$q_{uv}$ are independent of time t. The probability that the process transitions from states u to v is ![]() $q_{uv}/q_u$, while the sojourn time in state u follows an exponential distribution with rate

$q_{uv}/q_u$, while the sojourn time in state u follows an exponential distribution with rate ![]() $q_u$.

$q_u$.

We assume drivers are independent, and each trip for a given driver is an independent time series generated from the CTHMM. To simplify the notation, we first consider a single trip j. At time point ![]() $t_l$, the available observed telematics data

$t_l$, the available observed telematics data ![]() $\mathbf{y}_l$ depends on the current latent state

$\mathbf{y}_l$ depends on the current latent state ![]() $Z_l$ through the state-dependent distribution

$Z_l$ through the state-dependent distribution ![]() $f(\mathbf{y}_l | Z_l = z(t_l))$, where

$f(\mathbf{y}_l | Z_l = z(t_l))$, where ![]() $z(t_l) \in \{1,2,...,S\}$ is the latent state at time

$z(t_l) \in \{1,2,...,S\}$ is the latent state at time ![]() $t_l$. Denote

$t_l$. Denote ![]() $\boldsymbol{\Phi} = (\pi, \mathbf{Q}, \boldsymbol{\Theta})$ the set of model parameters, where

$\boldsymbol{\Phi} = (\pi, \mathbf{Q}, \boldsymbol{\Theta})$ the set of model parameters, where ![]() $\pi$ is the initial state probability distribution,

$\pi$ is the initial state probability distribution, ![]() $\mathbf{Q}$ is the transition rate matrix and

$\mathbf{Q}$ is the transition rate matrix and ![]() $\boldsymbol{\Theta}$ are the parameters of the state-dependent distributions. If the continuous-time Markov model has been fully observed, meaning that one can observe every state transition time

$\boldsymbol{\Theta}$ are the parameters of the state-dependent distributions. If the continuous-time Markov model has been fully observed, meaning that one can observe every state transition time ![]() $\mathbf{T}' = (t'_0, t'_1, ..., t'_{T'})$, the corresponding states

$\mathbf{T}' = (t'_0, t'_1, ..., t'_{T'})$, the corresponding states ![]() $\mathbf{Z}' = (z'_0 = z(t'_0), z'_1 = z(t'_1), ..., z'_{T'} = z(t'_{T'}))$, the observed data

$\mathbf{Z}' = (z'_0 = z(t'_0), z'_1 = z(t'_1), ..., z'_{T'} = z(t'_{T'}))$, the observed data ![]() $\mathbf{Y} = (\mathbf{y}_0, \mathbf{y}_1, ..., \mathbf{y}_T)$ at observation time points

$\mathbf{Y} = (\mathbf{y}_0, \mathbf{y}_1, ..., \mathbf{y}_T)$ at observation time points ![]() $\mathbf{T} = (t_0, t_1, ..., t_T)$, then the complete data likelihood is

$\mathbf{T} = (t_0, t_1, ..., t_T)$, then the complete data likelihood is

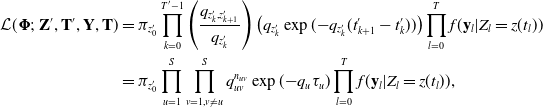

\begin{align*} \mathcal{L}(\boldsymbol{\Phi};\ \mathbf{Z}', \mathbf{T}', \mathbf{Y}, \mathbf{T}) &= \pi_{z'_0} \prod_{k=0}^{T' - 1} \left(\frac{q_{z'_{k}, z'_{k+1}}}{q_{z'_{k}}}\right)\left(q_{z'_{k}} \exp({-}q_{z'_{k}}(t'_{k+1} - t'_{k}))\right) \prod_{l=0}^T f(\mathbf{y}_l | Z_l = z(t_l)) \\ &= \pi_{z'_0} \prod_{u=1}^{S} \prod_{v=1, v\neq u}^{S} q_{uv}^{n_{uv}} \exp({-}q_u \tau_u) \prod_{l=0}^T f(\mathbf{y}_l | Z_l = z(t_l)),\end{align*}

\begin{align*} \mathcal{L}(\boldsymbol{\Phi};\ \mathbf{Z}', \mathbf{T}', \mathbf{Y}, \mathbf{T}) &= \pi_{z'_0} \prod_{k=0}^{T' - 1} \left(\frac{q_{z'_{k}, z'_{k+1}}}{q_{z'_{k}}}\right)\left(q_{z'_{k}} \exp({-}q_{z'_{k}}(t'_{k+1} - t'_{k}))\right) \prod_{l=0}^T f(\mathbf{y}_l | Z_l = z(t_l)) \\ &= \pi_{z'_0} \prod_{u=1}^{S} \prod_{v=1, v\neq u}^{S} q_{uv}^{n_{uv}} \exp({-}q_u \tau_u) \prod_{l=0}^T f(\mathbf{y}_l | Z_l = z(t_l)),\end{align*}

where ![]() $n_{uv}$ is the number of transitions from states u to v and

$n_{uv}$ is the number of transitions from states u to v and ![]() $\tau_u$ is the total time the chain remains in state u.

$\tau_u$ is the total time the chain remains in state u.

However in a realization of a CTHMM, there are two levels of hidden information. First, none of the states ![]() $\mathbf{Z}$ (at observation times) nor

$\mathbf{Z}$ (at observation times) nor ![]() $\mathbf{Z}'$ (at transition times) are directly observed in the hidden Markov chain and can only be inferred from the observed data

$\mathbf{Z}'$ (at transition times) are directly observed in the hidden Markov chain and can only be inferred from the observed data ![]() $\mathbf{Y}$. Second, the state transitions between two consecutive observations are also hidden– note the difference between state transition times

$\mathbf{Y}$. Second, the state transitions between two consecutive observations are also hidden– note the difference between state transition times ![]() $\mathbf{T}'$ and observation times

$\mathbf{T}'$ and observation times ![]() $\mathbf{T}$. In other words, the chain may have multiple transitions before reaching a state which emits an observation. As a consequence, both

$\mathbf{T}$. In other words, the chain may have multiple transitions before reaching a state which emits an observation. As a consequence, both ![]() $n_{uv}$ and

$n_{uv}$ and ![]() $\tau_u$ are also unobserved. Here we assume the observed telematics process possesses the ‘snapshot’ property, such that the observation made at time

$\tau_u$ are also unobserved. Here we assume the observed telematics process possesses the ‘snapshot’ property, such that the observation made at time ![]() $t_l$ depends only on the state active at time

$t_l$ depends only on the state active at time ![]() $t_l$, instead of the entire state trajectory over the interval

$t_l$, instead of the entire state trajectory over the interval ![]() $(t_{l-1},t_l]$ (Patterson et al., Reference Patterson, Parton, Langrock, Blackwell, Thomas and King2017).

$(t_{l-1},t_l]$ (Patterson et al., Reference Patterson, Parton, Langrock, Blackwell, Thomas and King2017).

We introduce two indicator functions: ![]() $\mathbb{1}_{\{Z_l = u\}} = 1$ if the latent state

$\mathbb{1}_{\{Z_l = u\}} = 1$ if the latent state ![]() $z_l$ at observation time

$z_l$ at observation time ![]() $t_l$ is u, and 0 otherwise; and

$t_l$ is u, and 0 otherwise; and ![]() $\mathbb{1}_{\{Z_l = u, Z_{l+1} = v\}} = 1$ if the latent states

$\mathbb{1}_{\{Z_l = u, Z_{l+1} = v\}} = 1$ if the latent states ![]() $z_l$,

$z_l$, ![]() $z_{l+1}$ at observation times

$z_{l+1}$ at observation times ![]() $t_l$ and

$t_l$ and ![]() $t_{l+1}$ are u and v, respectively, and 0 otherwise. The likelihood can be expressed instead as

$t_{l+1}$ are u and v, respectively, and 0 otherwise. The likelihood can be expressed instead as

\begin{equation*}\mathcal{L}(\boldsymbol{\Phi};\ \mathbf{Y}, \mathbf{T}) = \prod_{u=1}^S \pi_u ^{\mathbb{1}_{\{Z_0 = u\}}} \prod_{l=0}^{T - 1} \prod_{u,v=1}^S \mathbf{P}_{uv}(\Delta_{l+1})^{\mathbb{1}_{\{Z_l = u,Z_{l+1} = v\}}} \prod_{l=0}^T \prod_{u=1}^S f_u(\mathbf{y}_l)^{\mathbb{1}_{\{Z_l = u\}}},\end{equation*}

\begin{equation*}\mathcal{L}(\boldsymbol{\Phi};\ \mathbf{Y}, \mathbf{T}) = \prod_{u=1}^S \pi_u ^{\mathbb{1}_{\{Z_0 = u\}}} \prod_{l=0}^{T - 1} \prod_{u,v=1}^S \mathbf{P}_{uv}(\Delta_{l+1})^{\mathbb{1}_{\{Z_l = u,Z_{l+1} = v\}}} \prod_{l=0}^T \prod_{u=1}^S f_u(\mathbf{y}_l)^{\mathbb{1}_{\{Z_l = u\}}},\end{equation*}

where ![]() $\mathbf{P}(t) = \exp(\mathbf{Q}(t))$ with

$\mathbf{P}(t) = \exp(\mathbf{Q}(t))$ with ![]() $\Delta_l = t_l-t_{l-1}$ and matrix exponential

$\Delta_l = t_l-t_{l-1}$ and matrix exponential ![]() $\exp$, and

$\exp$, and ![]() $f_u(\mathbf{y}_l) = f(\mathbf{y}_l | Z_l = u)$.

$f_u(\mathbf{y}_l) = f(\mathbf{y}_l | Z_l = u)$. ![]() $\mathbf{P}(t)$ is a state-transition matrix with the (u,v)-th entry

$\mathbf{P}(t)$ is a state-transition matrix with the (u,v)-th entry ![]() $\mathbf{P}_{uv}(t)$, which is the probability that the (latent) Markov chain transitions from state u to state v after time t. It accounts for all possible intermediate state transitions which emit no observations happening before time t.

$\mathbf{P}_{uv}(t)$, which is the probability that the (latent) Markov chain transitions from state u to state v after time t. It accounts for all possible intermediate state transitions which emit no observations happening before time t.

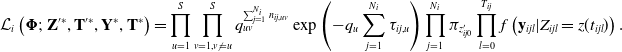

In an individual-specific model, where a distinct CTHMM is fitted for each individual, the complete data likelihood for a driver i with ![]() $N_i$ trips is given by

$N_i$ trips is given by

\begin{align*} \mathcal{L}_i \left(\boldsymbol{\Phi};\ \mathbf{Z}'^*, \mathbf{T}'^*, \mathbf{Y}^*, \mathbf{T}^* \right) &= \prod_{u=1}^{S} \prod_{v=1, v\neq u}^{S} q_{uv}^{\sum_{j=1}^{N_i} n_{ij,uv}} \exp\left({-}q_u \sum_{j=1}^{N_i} \tau_{ij,u}\right) \prod_{j=1}^{N_i} \pi_{z'_{ij0}} \prod_{l=0}^{T_{ij}} f \left(\mathbf{y}_{ijl} | Z_{ijl} = z(t_{ijl}) \right).\end{align*}

\begin{align*} \mathcal{L}_i \left(\boldsymbol{\Phi};\ \mathbf{Z}'^*, \mathbf{T}'^*, \mathbf{Y}^*, \mathbf{T}^* \right) &= \prod_{u=1}^{S} \prod_{v=1, v\neq u}^{S} q_{uv}^{\sum_{j=1}^{N_i} n_{ij,uv}} \exp\left({-}q_u \sum_{j=1}^{N_i} \tau_{ij,u}\right) \prod_{j=1}^{N_i} \pi_{z'_{ij0}} \prod_{l=0}^{T_{ij}} f \left(\mathbf{y}_{ijl} | Z_{ijl} = z(t_{ijl}) \right).\end{align*}

Alternatively, it can be represented as

\begin{equation*}\mathcal{L}_i(\boldsymbol{\Phi};\ \mathbf{Y}, \mathbf{T}) = \prod_{j=1}^{N_i} \left(\prod_{u=1}^S \pi_u ^{\mathbb{1}_{\{Z_{ij0} = u\}}} \prod_{l=0}^{T_{ij} - 1} \prod_{u,v=1}^S \mathbf{P}_{uv}(\Delta_{ij,l+1})^{\mathbb{1}_{\{Z_{ijl} = u,\;Z_{ij,l+1} = v\}}} \prod_{l=0}^{T_{ij}} \prod_{u=1}^S f_u(\mathbf{y}_{ijl})^{\mathbb{1}_{\{Z_{ijl}= u\}}}\right).\end{equation*}

\begin{equation*}\mathcal{L}_i(\boldsymbol{\Phi};\ \mathbf{Y}, \mathbf{T}) = \prod_{j=1}^{N_i} \left(\prod_{u=1}^S \pi_u ^{\mathbb{1}_{\{Z_{ij0} = u\}}} \prod_{l=0}^{T_{ij} - 1} \prod_{u,v=1}^S \mathbf{P}_{uv}(\Delta_{ij,l+1})^{\mathbb{1}_{\{Z_{ijl} = u,\;Z_{ij,l+1} = v\}}} \prod_{l=0}^{T_{ij}} \prod_{u=1}^S f_u(\mathbf{y}_{ijl})^{\mathbb{1}_{\{Z_{ijl}= u\}}}\right).\end{equation*}

In a pooled model, where a single CTHMM is trained to accommodate multiple individuals, each with ![]() $N_i$ trips for

$N_i$ trips for ![]() $i=1,...,M$, the complete data likelihood across all drivers is

$i=1,...,M$, the complete data likelihood across all drivers is

or equivalently

The rationale for choosing between the individual-specific model and the pooled model will be discussed in Section 3.3.

While our modelling framework assumes independence across both drivers and trips by the same driver, the latter requires further consideration to assess its validity and implications. Rather than modelling each trip separately, we fit a single individual-specific CTHMM per driver, allowing all trips to contribute to learning that driver’s unique driving patterns. This ensures that recurring behaviour, such as tendencies in acceleration, braking, and cornering, is reflected in the estimated state transitions. As a result, while individual trips are treated as independent realizations from the same model, the model itself captures intra-driver dependencies by learning stable behavioural tendencies over time.

Although consecutive trips within a short time window (e.g., within the same day) may exhibit stronger correlations due to persistent road, weather, or driver conditions, variations still arise due to factors such as traffic conditions, driver fatigue, and trip purpose. These sources of randomness support the assumption that trips are drawn from the same underlying distribution but are not necessarily dependent on one another. Given that our focus is on long-term behavioural patterns and anomaly detection rather than short-term trip clustering, assuming independence across trips remains a reasonable and practical simplification for risk assessment.

If strong intra-driver dependence exists beyond what is captured by the driver-specific model, it may lead to an underestimation of variability in a driver’s risk profile. Future extensions could incorporate hierarchical models to explicitly account for temporal dependencies across trips, though at the cost of increased complexity. For this study, our approach balances model complexity and interpretability while effectively capturing key aspects of driver-specific behaviour, as will be demonstrated in the applications in Sections 5 and 6.

3.2. Anomaly detection

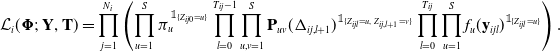

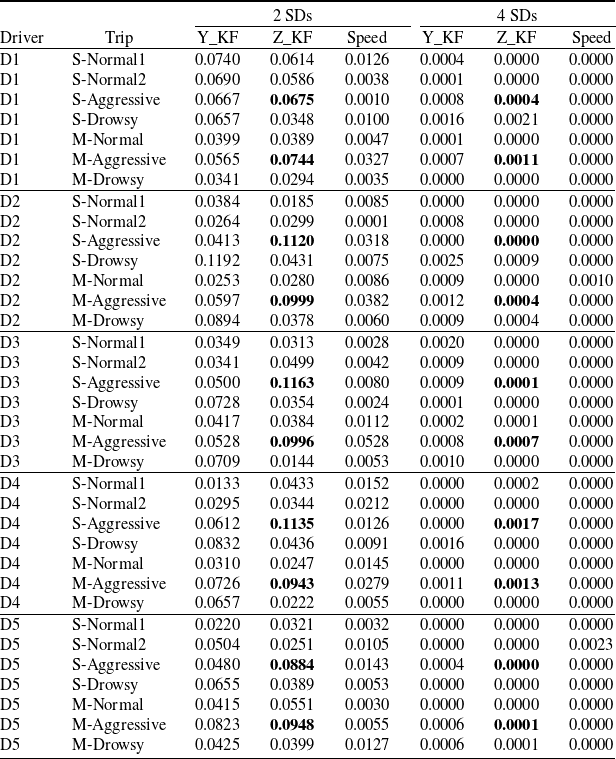

Interpreting the hidden states and their corresponding state-dependent distributions in a CTHMM can be challenging, especially when many states are needed to capture all significant combinations of the response dimensions. To illustrate this, Table 2 presents the state-dependent distributions from a 5-state individual-specific CTHMM for Driver 1 from the UAH-DriveSet (to be studied in detail in Section 5). Each row represents a latent state, and for instance, the last row (state 5) can be roughly interpreted as a driving action of ‘left turn (negative lateral acceleration) and deceleration (negative longitudinal acceleration) at a lower speed range (with the lowest mean speed of 81 ![]() $km/h$, though with a large standard deviation)’.

$km/h$, though with a large standard deviation)’.

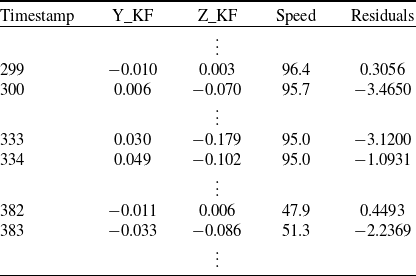

Table 2. UAH-DriveSet: Summary of state-dependent distributions from a 5-state individual-specific CTHMM for D1. SD: standard deviation.

As shown in this example, a CTHMM with just 5 latent states for 3 response dimensions already requires interpreting 15 distributions. With fewer states, the distributions exhibit large standard deviations and significant overlap, making it unclear which driving action each state represents. Furthermore, certain driving actions may not be captured, e.g., lower speed ranges appear underrepresented in this example. Conversely, with more states, similar actions may be represented by multiple distributions, making it challenging to differentiate between them. More importantly, interpreting these latent states does not directly help achieve our goal of risk assessment, as associating states with driving risk levels is even more difficult and often subjective, especially in the absence of collision data. For example, Jiang and Shi (Reference Jiang and Shi2024) assumed specific accident probabilities associated with hard braking and big angle changes and simulated accidents based on these assumptions. As explained in Section 1, instead of focusing on specific accident-prone driving behaviour, we classify them broadly as ‘abnormal’ behaviour and propose to perform anomaly detection under a (fitted) CTHMM. Our proposed method is statistically supported and does not require explicit state interpretations, yet it can effectively identify outliers—in our case, the deviating driving behaviour.

Given a fitted CTHMM, we can perform anomaly detection on both entire trips and individual data points within a trip. Specifically, we utilize the ‘forecast pseudo-residuals’ introduced in Zucchini et al. (Reference Zucchini, MacDonald and Langrock2016). For a trip j from driver i at timepoint t, the uniform forecast pseudo-residual ![]() $u_{ijt,d}$ for the d-th dimension of the response

$u_{ijt,d}$ for the d-th dimension of the response ![]() $\textbf{Y}_{ijt}$, denoted as

$\textbf{Y}_{ijt}$, denoted as ![]() $Y_{ijt,d}$, is computed under the fitted model as

$Y_{ijt,d}$, is computed under the fitted model as

In the context of HMMs, the analysis of pseudo-residuals serves two purposes: model checking and outlier detection, and we focus on the latter for this work. The forecast pseudo-residuals help identify observations that are extreme relative to the model and all preceding observations, highlighting the abrupt changes in driving behaviour. If a forecast pseudo-residual is extreme, it indicates that the corresponding observation is an outlier, or that the model no longer provides an acceptable description of the time series.

If the model fits the time series well, ![]() $u_{ijt,d}$ should follow a Uniform(0, 1) distribution, with extreme observations indicated by residuals close to 0 or 1. However, it can be hard to determine the extremity of a value under the uniform scale. For example, while a value of 0.97 might seem extreme on its own, it is less so when compared to 0.999. Consequently, Zucchini et al. (Reference Zucchini, MacDonald and Langrock2016) suggest using the normal forecast pseudo-residual

$u_{ijt,d}$ should follow a Uniform(0, 1) distribution, with extreme observations indicated by residuals close to 0 or 1. However, it can be hard to determine the extremity of a value under the uniform scale. For example, while a value of 0.97 might seem extreme on its own, it is less so when compared to 0.999. Consequently, Zucchini et al. (Reference Zucchini, MacDonald and Langrock2016) suggest using the normal forecast pseudo-residual

where ![]() $\Phi$ is the standard normal cumulative density function. If the model is an accurate representation,

$\Phi$ is the standard normal cumulative density function. If the model is an accurate representation, ![]() $z_{ijt,d}$ should follow a standard normal distribution. In our analysis, we propose to classify observations with normal residuals exceeding 3 standard deviations as outliers. The 3-standard deviation threshold is widely used in statistical outlier detection, particularly for normally distributed data (Barnett and Lewis Reference Barnett and Lewis1994, Aggarwal Reference Aggarwal2015). This threshold is based on the empirical rule (also known as the 68-95-99.7 rule) for normal distributions. Flagging observations with residuals beyond 3 standard deviations—representing a 0.3% probability—effectively identifies points that are truly extreme relative to the model and preceding observations. We further examine the impact of threshold selection on anomaly detection performance in Section 5.2. Empirical results support the use of three standard deviations as the outlier threshold, as it effectively identifies anomalous trips and driving behaviour while maintaining a clear and consistent interpretation of what constitutes an extreme deviation from normal driving patterns.

$z_{ijt,d}$ should follow a standard normal distribution. In our analysis, we propose to classify observations with normal residuals exceeding 3 standard deviations as outliers. The 3-standard deviation threshold is widely used in statistical outlier detection, particularly for normally distributed data (Barnett and Lewis Reference Barnett and Lewis1994, Aggarwal Reference Aggarwal2015). This threshold is based on the empirical rule (also known as the 68-95-99.7 rule) for normal distributions. Flagging observations with residuals beyond 3 standard deviations—representing a 0.3% probability—effectively identifies points that are truly extreme relative to the model and preceding observations. We further examine the impact of threshold selection on anomaly detection performance in Section 5.2. Empirical results support the use of three standard deviations as the outlier threshold, as it effectively identifies anomalous trips and driving behaviour while maintaining a clear and consistent interpretation of what constitutes an extreme deviation from normal driving patterns.

Although we compute forecast pseudo-residuals ![]() $u_{ijt,d}$ and

$u_{ijt,d}$ and ![]() $z_{ijt,d}$ for each dimension d separately, all response dimensions influence these values, as

$z_{ijt,d}$ for each dimension d separately, all response dimensions influence these values, as ![]() $u_{ijt,d}$ is conditioned on the history across all dimensions. Consequently, the other dimensions also impact the probability of being in a particular latent state at each timepoint t.

$u_{ijt,d}$ is conditioned on the history across all dimensions. Consequently, the other dimensions also impact the probability of being in a particular latent state at each timepoint t.

In summary, our anomaly detection approach identifies deviations from learnt normal driving behaviour without predefining specific risky states (e.g., ‘aggressive’ or ‘drowsy’ driving). Instead, the model estimates the states in which a driver is most likely to be at a given time based on the (multivariate) telematics response, such as speed, longitudinal acceleration, and lateral acceleration in our analysis. Normal forecast pseudo-residuals ![]() $z_{ijt,d}$ are computed for each dimension d of telematics observations, and residuals beyond 3 standard deviations are flagged as outliers. At the trip level, we quantify the degree of anomaly by calculating the proportion of outliers in each dimension, defining this as the ‘anomaly index’. Rather than classifying certain states as inherently risky, our approach evaluates whether an individual is in an unexpected state given their usual driving patterns. This allows us to detect a broad range of abnormal behaviour without requiring predefined labels or assumptions about specific risk types, making the method both flexible and widely applicable.

$z_{ijt,d}$ are computed for each dimension d of telematics observations, and residuals beyond 3 standard deviations are flagged as outliers. At the trip level, we quantify the degree of anomaly by calculating the proportion of outliers in each dimension, defining this as the ‘anomaly index’. Rather than classifying certain states as inherently risky, our approach evaluates whether an individual is in an unexpected state given their usual driving patterns. This allows us to detect a broad range of abnormal behaviour without requiring predefined labels or assumptions about specific risk types, making the method both flexible and widely applicable.

3.3. Adapting and applying CTHMMs for anomaly detection in telematics

We perform anomaly detection at two levels to address two distinct objectives. At the driver level, we focus on identifying anomalous behaviour and trips that deviate from a driver’s typical patterns, particularly those associated with accidents. For this purpose, we use individual-specific models to account for heterogeneity in individual driving behaviour. Deng and Söffker (Reference Deng and Söffker2022) listed five factors influencing driving behaviour, which are driving styles, fatigue driving, drunk driving, driving skills, and traffic environment. Additional factors, such as vehicle characteristics, locations, and weather conditions, also play a role but are not available for consideration in our modelling. Lefèvre et al. (Reference Lefèvre, Carvalho, Gao, Tseng and Borrelli2015) compared the results of predicted acceleration and highlighted that a personalized/individualized model always outperforms an average/general model. While prediction is not our primary focus, the forecast pseudo-residuals in our approach calculate the predictive probability that a response dimension at the next time point is less extreme than the observed value given all previous observations. For reliable anomaly detection, the CTHMM must accurately capture a driver’s typical behaviour. A pooled model trained on data from multiple drivers may obscure individual anomalies, particularly for drivers whose deviations are minor within the group. Therefore, we fit individual-specific models to each driver’s trips to detect anomalies effectively.

At the group level, we aim to identify trips that deviate from the population norm and pinpoint drivers associated with at-fault claims. For this, we use a pooled model. While individual-specific models generate forecast pseudo-residuals to detect anomalies for each driver, these residuals may not be directly comparable across individuals. A pooled model, trained on trips from multiple drivers, enables us to compare trips across drivers, identify population-level anomalies, and rank drivers by their relative anomalousness.

For driver i with ![]() $N_i$ trips, the anomaly index for response dimension d in trip j with

$N_i$ trips, the anomaly index for response dimension d in trip j with ![]() $T_{ij}+1$ observations is defined as:

$T_{ij}+1$ observations is defined as:

Note that the denominator is ![]() $T_{ij}$ only as forecast pseudo-residuals are not calculated at the first observation. To rank each driver’s trips by their level of anomalousness, we further define the normalized anomaly index, which is the ratio of each trip’s index to the maximum index for that driver:

$T_{ij}$ only as forecast pseudo-residuals are not calculated at the first observation. To rank each driver’s trips by their level of anomalousness, we further define the normalized anomaly index, which is the ratio of each trip’s index to the maximum index for that driver:

The (raw) anomaly indices for response dimension d across ![]() $N_i$ trips form an empirical distribution

$N_i$ trips form an empirical distribution ![]() $\{A_{ij,d}, j = 1,...,N_i\}$ with empirical cumulative distribution function

$\{A_{ij,d}, j = 1,...,N_i\}$ with empirical cumulative distribution function ![]() $F_{i,d}(p)$. For group-level analysis, we will focus on the right tail of this distribution, reflecting extreme anomaly levels in dimension d. In particular, the maximum and

$F_{i,d}(p)$. For group-level analysis, we will focus on the right tail of this distribution, reflecting extreme anomaly levels in dimension d. In particular, the maximum and ![]() $\alpha$-th percentiles will be of interest:

$\alpha$-th percentiles will be of interest:

Although HMM is a flexible time-series model and the anomaly detection method is statistically grounded, there are several assumptions and adjustments that we need to make to adapt the proposed framework for the complex telematics data and ensure effective analysis.

1. We assume that driving behaviour is predominately normal.

• While we aim to identify all abnormal driving behaviour, instead of focusing on just one or a few, we still assume that driving behaviour is mostly normal and safe. This means that for each driver, anomalous and risky trips are rare, and even within those risky trips (e.g., ones that result in accidents), abnormal driving instances occur infrequently. Consequently, the CTHMM, no matter individualized or generalized, is fitted to the normal and safe driving behaviour, with anomaly detection used to identify deviating ones. This assumption should be fair as accidents would no longer be rare events otherwise. As will be shown in Section 6.3, we tested pooled models with various portfolio compositions (having different claim rates), and the method proves robust regardless of the proportion of drivers with accidents the model is fitted on.

2. The CTHMM should have a reasonable number of states.

• The CTHMM should have sufficient (latent) states to capture the major combinations of response dimensions, which ensures accurate modelling of a driver’s (in individual-specific models) and a group’s (in a pooled model) normal driving behaviour. However, it is equally important to avoid an excessive number of states, which can lead to model overfitting and mistakenly fitting well to abnormal behaviour as well. As a general guideline, we suggest starting with a number of latent states greater than the number of response dimensions (e.g., at least 5 states for 3 response dimensions) and increasing the number of states incrementally. The optimal number of states can then be selected based on Akaike information criterion (AIC) or Bayesian information criterion (BIC).

3. We divide each trip into intervals of non-zero speed and assume these sub-intervals of each driver are independent time series generated from the same CTHMM.

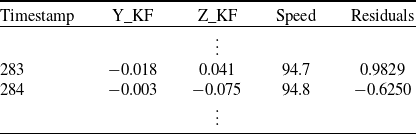

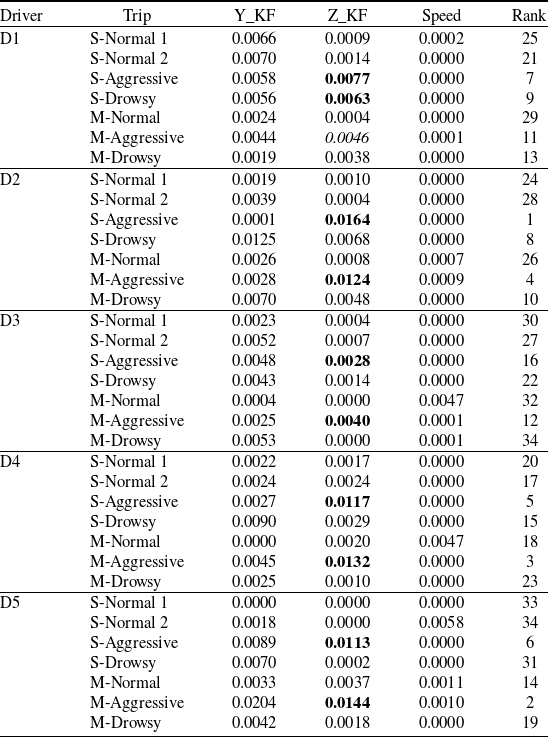

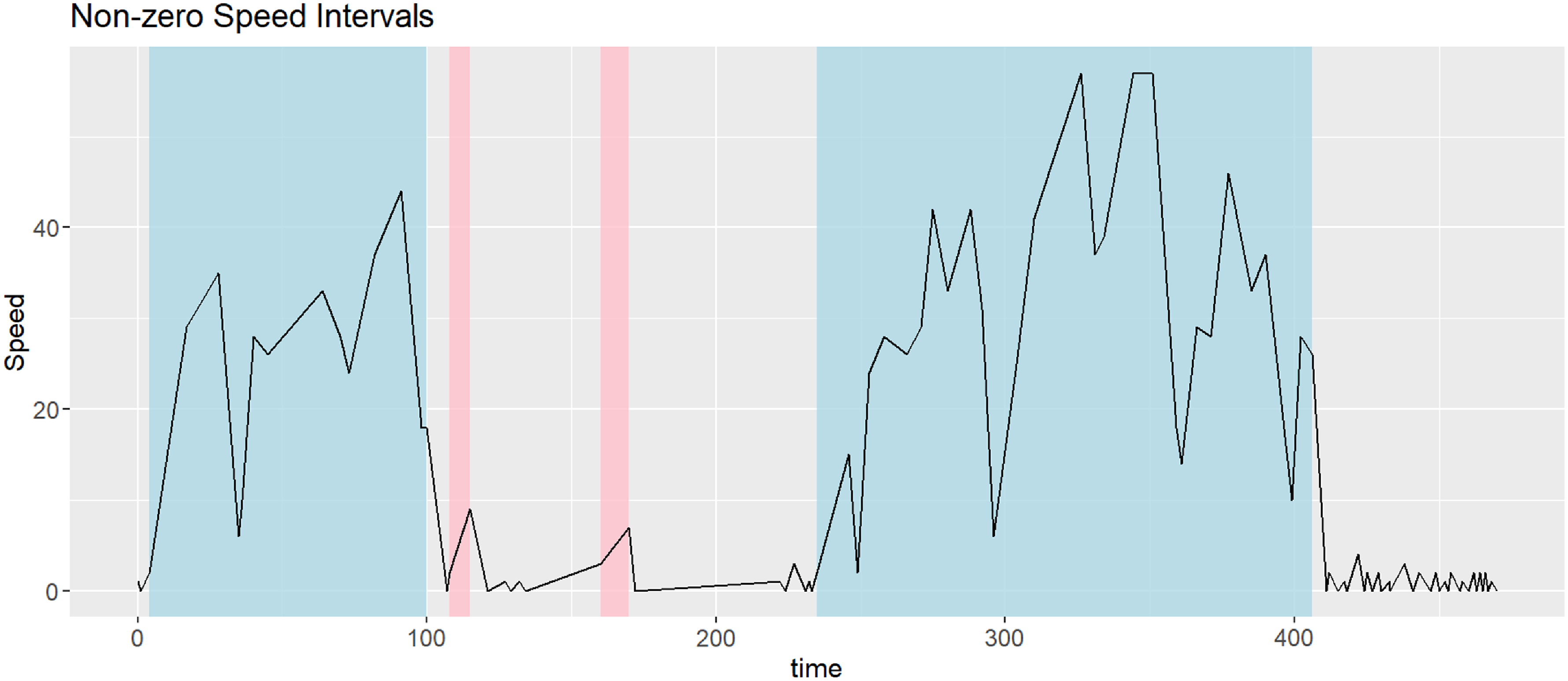

• The forecast pseudo-residuals we consider for anomaly detection are based on probability integral transform, which applies to continuous distributions. While continuity adjustments can be made for discrete distributions, boundary points still pose problems. Harte (Reference Harte2021) pointed out that pseudo-residuals are poor indicators of the model goodness-of-fit when observations are near or at the boundary of the domain. Similarly, we find that under these conditions, pseudo-residuals are also ineffective at detecting outliers. Although we can model all values of speed with a semi-continuous distribution (e.g., a mixture of a continuous distribution on the positive real line and a point mass at 0), the boundary point 0 is problematic for anomaly detection. For this reason, we exclude 0-speed observations by dividing each trip into intervals of non-zero speed and non-missing accelerations, capturing moments when the driver is actually driving and the vehicle is moving. Consequently, we assume these sub-intervals (instead of trips) of each driver are independent time series generated by the same model and the CTHMM is fitted to sub-intervals. For analysis and anomaly detection, we still evaluate on a trip basis: we first compute the pseudo-residuals for each sub-interval, then aggregate the total number of outliers across all sub-intervals to compute the anomaly index (outlier proportion) for the trip; see Figure 2 and the description that follows for an illustration. By cutting trips into shorter, non-zero speed intervals, we aim to standardize the time series and reduce trip heterogeneity due to varying trip duration, road and weather condition, etc.

• Periods of low speed (near zero) may correspond to stops at traffic lights or other circumstances beyond the driver’s control and therefore may not be relevant for identifying anomalous driving behaviour. While excluding 0-speed intervals was necessary for effective outlier detection, this adjustment also mitigates the concern about such low-speed periods. By focusing on non-zero speed intervals, representing moments when the driver is actively driving and the vehicle is moving, this approach ensures that stopping events, such as waiting at traffic lights, do not influence the anomaly detection process.

4. Estimation algorithm

The proposed CTHMM is fitted using the expectation-maximization (EM) algorithm. As discussed in Section 3.3, we fit the model to trip sub-intervals—either for individual driver i in an individual-specific model or for a group of drivers ![]() $i=1,...,M$ in a pooled model. In the following, we only present the EM algorithm for the pooled model. With the assumption that drivers are independent, we note that the individual-specific model is simply a special case where

$i=1,...,M$ in a pooled model. In the following, we only present the EM algorithm for the pooled model. With the assumption that drivers are independent, we note that the individual-specific model is simply a special case where ![]() $M=1$.

$M=1$.

4.1. E-step

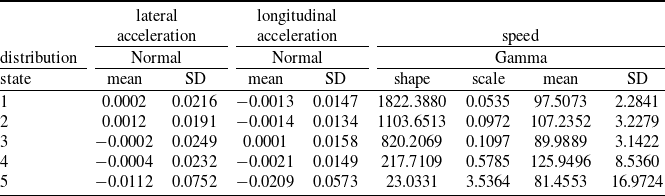

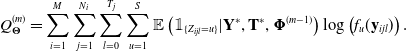

At the m-th iteration, we first calculate the expected complete data loglikelihood

\begin{equation}\begin{split} Q(\boldsymbol{\Phi};\ \mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)}) &= \mathbb{E}\left(\ell(\boldsymbol{\Phi};\ \mathbf{Z}', \mathbf{T}', \mathbf{Y}, \mathbf{T}) | \mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)}\right) \\

&= \sum_{i=1}^M \sum_{j=1}^{N_i} \sum_{u=1}^S \mathbb{E}\left(\mathbb{1}_{\{Z_{ij0} = u\}} | \mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)} \right) \log(\pi_u) \\ &\quad + \sum_{i=1}^M \sum_{j=1}^{N_i} \sum_{u=1}^S \sum_{v=1, v\neq u}^S \left\{ \log(q_{uv}) \mathbb{E}\left(n_{ij,uv}|\mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)}\right) \right. \\ &\left. \qquad - q_{u} \mathbb{E} \left(\tau_{ij,u}|\mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)} \right)\right\} \\ &\quad + \sum_{i=1}^M \sum_{j=1}^{N_i} \sum_{l=0}^T \sum_{u=1}^S \mathbb{E} \left(\mathbb{1}_{\{Z_{ijl} = u\}} | \mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)} \right) \log\left(f_u(\mathbf{y}_{ijl}) \right),\end{split}\end{equation}

\begin{equation}\begin{split} Q(\boldsymbol{\Phi};\ \mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)}) &= \mathbb{E}\left(\ell(\boldsymbol{\Phi};\ \mathbf{Z}', \mathbf{T}', \mathbf{Y}, \mathbf{T}) | \mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)}\right) \\

&= \sum_{i=1}^M \sum_{j=1}^{N_i} \sum_{u=1}^S \mathbb{E}\left(\mathbb{1}_{\{Z_{ij0} = u\}} | \mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)} \right) \log(\pi_u) \\ &\quad + \sum_{i=1}^M \sum_{j=1}^{N_i} \sum_{u=1}^S \sum_{v=1, v\neq u}^S \left\{ \log(q_{uv}) \mathbb{E}\left(n_{ij,uv}|\mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)}\right) \right. \\ &\left. \qquad - q_{u} \mathbb{E} \left(\tau_{ij,u}|\mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)} \right)\right\} \\ &\quad + \sum_{i=1}^M \sum_{j=1}^{N_i} \sum_{l=0}^T \sum_{u=1}^S \mathbb{E} \left(\mathbb{1}_{\{Z_{ijl} = u\}} | \mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)} \right) \log\left(f_u(\mathbf{y}_{ijl}) \right),\end{split}\end{equation}

where ![]() $\mathbb{E}(\cdot|\mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)})$ is the expected value given observed data

$\mathbb{E}(\cdot|\mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)})$ is the expected value given observed data ![]() $\mathbf{Y}^*$, observation times

$\mathbf{Y}^*$, observation times ![]() $ \mathbf{T}^*$ and the current estimate of model parameters

$ \mathbf{T}^*$ and the current estimate of model parameters ![]() $\boldsymbol{\Phi}^{(m-1)}$ resulted from the

$\boldsymbol{\Phi}^{(m-1)}$ resulted from the ![]() $(m-1)$-th iteration.

$(m-1)$-th iteration.

To simplify the notation, we first state the computation algorithms for a single trip sub-interval j from a driver i.

4.1.1. Conditional expectation of  $\mathbb{1}_{\{Z_{l} = u\}}$

$\mathbb{1}_{\{Z_{l} = u\}}$

To compute the terms ![]() $\mathbb{E}(\mathbb{1}_{\{Z_{l} = u\}} | \mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)})$ for

$\mathbb{E}(\mathbb{1}_{\{Z_{l} = u\}} | \mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)})$ for ![]() $l = 0,1,...,T_j$,

$l = 0,1,...,T_j$, ![]() $u = 1,...,S$, one can apply standard decoding methods—forward–backward algorithm (soft decoding) or Viterbi algorithm (hard decoding). Given the current model parameter estimates

$u = 1,...,S$, one can apply standard decoding methods—forward–backward algorithm (soft decoding) or Viterbi algorithm (hard decoding). Given the current model parameter estimates ![]() $\boldsymbol{\Phi}^{(m-1)}$, which include the initial state probabilities

$\boldsymbol{\Phi}^{(m-1)}$, which include the initial state probabilities ![]() $\pi$, transition rate matrix

$\pi$, transition rate matrix ![]() $\mathbf{Q}$, and state-dependent distributions

$\mathbf{Q}$, and state-dependent distributions ![]() $f_u(\mathbf{y}_l)$, we will utilize the state-transition matrix

$f_u(\mathbf{y}_l)$, we will utilize the state-transition matrix ![]() $\mathbf{P}(\Delta_l)$, and let

$\mathbf{P}(\Delta_l)$, and let ![]() $\mathbf{f}(\mathbf{y}_l)$ be a diagonal matrix with the u-th diagonal element

$\mathbf{f}(\mathbf{y}_l)$ be a diagonal matrix with the u-th diagonal element ![]() $f_u(\mathbf{y}_l)$.

$f_u(\mathbf{y}_l)$.

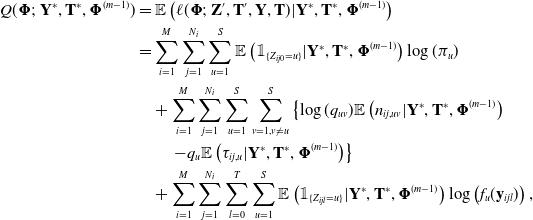

4.1.2. Soft decoding—Forward–backward algorithm

We define the forward probabilities ![]() $\boldsymbol{\alpha}_l$, backward probabilities

$\boldsymbol{\alpha}_l$, backward probabilities ![]() $\boldsymbol{\beta}_l$ and likelihood

$\boldsymbol{\beta}_l$ and likelihood ![]() $L_{T_j}$ (at time

$L_{T_j}$ (at time ![]() $T_j$) recursively as follows:

$T_j$) recursively as follows:

\begin{align*} \boldsymbol{\alpha}_0 &= \pi \mathbf{f}(\mathbf{y}_0) \\ \boldsymbol{\alpha}_l &= \boldsymbol{\alpha}_{l-1} \mathbf{P}(\Delta_l) \mathbf{f}(\mathbf{y}_l) \quad \text{for } l=1,2,...,T_j;\\ \boldsymbol{\beta}_{T_j} &= \mathbf{1} \\ \boldsymbol{\beta}'_l &= \mathbf{P}(\Delta_{l+1}) \mathbf{f}(\mathbf{y}_{l+1})\boldsymbol{\beta}'_{l+1} \quad \text{for } l=0,1,...,T_j-1;\\ L_{T_j} &= \boldsymbol{\alpha}_l \boldsymbol{\beta}'_l \quad \text{for } l=0,1,...,T_j.\end{align*}

\begin{align*} \boldsymbol{\alpha}_0 &= \pi \mathbf{f}(\mathbf{y}_0) \\ \boldsymbol{\alpha}_l &= \boldsymbol{\alpha}_{l-1} \mathbf{P}(\Delta_l) \mathbf{f}(\mathbf{y}_l) \quad \text{for } l=1,2,...,T_j;\\ \boldsymbol{\beta}_{T_j} &= \mathbf{1} \\ \boldsymbol{\beta}'_l &= \mathbf{P}(\Delta_{l+1}) \mathbf{f}(\mathbf{y}_{l+1})\boldsymbol{\beta}'_{l+1} \quad \text{for } l=0,1,...,T_j-1;\\ L_{T_j} &= \boldsymbol{\alpha}_l \boldsymbol{\beta}'_l \quad \text{for } l=0,1,...,T_j.\end{align*}

Then we can define the ‘smoother’ for ![]() $l=0,1,...,T_j$

$l=0,1,...,T_j$

where ![]() $\boldsymbol{\alpha}_l[u]$ and

$\boldsymbol{\alpha}_l[u]$ and ![]() $\boldsymbol{\beta}_l[u]$ are the u-th elements in the vectors

$\boldsymbol{\beta}_l[u]$ are the u-th elements in the vectors ![]() $\boldsymbol{\alpha}_l$ and

$\boldsymbol{\alpha}_l$ and ![]() $\boldsymbol{\beta}_l$, respectively.

$\boldsymbol{\beta}_l$, respectively.

To this end, we also define the ‘two-slice marginal’ for ![]() $l=0,1,...,T_j-1$, which will be used in the computation in Section 4.1.2,

$l=0,1,...,T_j-1$, which will be used in the computation in Section 4.1.2,

where ![]() $\mathbf{P}(\Delta_{l+1})[u,v]$ is the (u,v)-th element of

$\mathbf{P}(\Delta_{l+1})[u,v]$ is the (u,v)-th element of ![]() $\mathbf{P}(\Delta_{l+1})$.

$\mathbf{P}(\Delta_{l+1})$.

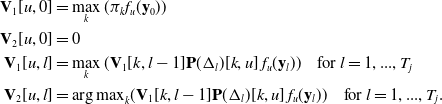

4.1.3. Hard decoding—Viterbi algorithm

We first construct two S-by-![]() $(T_j+1)$ matrices

$(T_j+1)$ matrices ![]() $\mathbf{V}_1$ and

$\mathbf{V}_1$ and ![]() $\mathbf{V}_2$ with their (u,l)-th elements as follows:

$\mathbf{V}_2$ with their (u,l)-th elements as follows:

\begin{align*} \mathbf{V}_1[u,0] &= \max_k (\pi_k f_u(\mathbf{y}_0)) \\

\mathbf{V}_2[u,0] &= 0 \\

\mathbf{V}_1[u,l] &= \max_k (\mathbf{V}_1[k,l-1] \mathbf{P}(\Delta_{l})[k,u]\, f_u(\mathbf{y}_l)) \quad \text{for } l=1,...,T_j\\

\mathbf{V}_2[u,l] &= {\arg\max}_k (\mathbf{V}_1[k,l-1] \mathbf{P}(\Delta_{l})[k,u]\, f_u(\mathbf{y}_l)) \quad \text{for } l=1,...,T_j.\\\end{align*}

\begin{align*} \mathbf{V}_1[u,0] &= \max_k (\pi_k f_u(\mathbf{y}_0)) \\

\mathbf{V}_2[u,0] &= 0 \\

\mathbf{V}_1[u,l] &= \max_k (\mathbf{V}_1[k,l-1] \mathbf{P}(\Delta_{l})[k,u]\, f_u(\mathbf{y}_l)) \quad \text{for } l=1,...,T_j\\

\mathbf{V}_2[u,l] &= {\arg\max}_k (\mathbf{V}_1[k,l-1] \mathbf{P}(\Delta_{l})[k,u]\, f_u(\mathbf{y}_l)) \quad \text{for } l=1,...,T_j.\\\end{align*}

Then we backtrack to find the most likely sequence of latent states:

We can define the ‘smoother’ for ![]() $l=0,1,...,T_j$

$l=0,1,...,T_j$

and the ‘two-slice marginal’ for ![]() $l=0,1,...,T_j-1$

$l=0,1,...,T_j-1$

4.1.4. Conditional expectations of  $n_{uv}$ and

$n_{uv}$ and  $\tau_{u}$

$\tau_{u}$

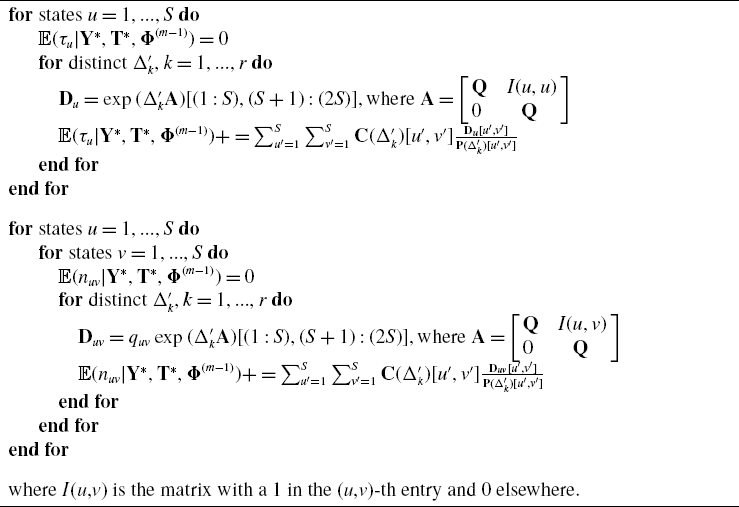

The complication in the E-step lies in the calculation of ![]() $\mathbb{E}(n_{uv}|\mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)})$ and

$\mathbb{E}(n_{uv}|\mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)})$ and ![]() $\mathbb{E}(\tau_{u}|\mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)})$. We make use of the Expm algorithm proposed by Liu et al. (Reference Liu, Li, Li, Song and Rehg2015), which provides an efficient way for computing integrals of matrix exponentials; it is restated in Algorithm 1.

$\mathbb{E}(\tau_{u}|\mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)})$. We make use of the Expm algorithm proposed by Liu et al. (Reference Liu, Li, Li, Song and Rehg2015), which provides an efficient way for computing integrals of matrix exponentials; it is restated in Algorithm 1.

The algorithm exploits the fact that the hidden Markov chain is time-homogeneous and the state-transition matrix at any timepoint ![]() $t_{l-1}$,

$t_{l-1}$, ![]() $\mathbf{P}(\Delta_l)$, only depends on the time interval between now and the next observation timepoint

$\mathbf{P}(\Delta_l)$, only depends on the time interval between now and the next observation timepoint ![]() $\Delta_l = t_l - t_{l-1}$. As a consequence, the conditional expectations of

$\Delta_l = t_l - t_{l-1}$. As a consequence, the conditional expectations of ![]() $n_{ij,uv}$ and

$n_{ij,uv}$ and ![]() $\tau_{ij,u}$ only have to be evaluated for each distinct time interval rather than at each observation time. We denote

$\tau_{ij,u}$ only have to be evaluated for each distinct time interval rather than at each observation time. We denote ![]() $\Delta'_k, k=1,...,r,$ the r distinct values of

$\Delta'_k, k=1,...,r,$ the r distinct values of ![]() $\Delta_l, l=1,...,T_j$, and create count tables

$\Delta_l, l=1,...,T_j$, and create count tables ![]() $\mathbf{C}(\Delta'_k)$ with the two-slice marginals from Equation 6 or Equation 8 as

$\mathbf{C}(\Delta'_k)$ with the two-slice marginals from Equation 6 or Equation 8 as

Algorithm 1 Expm Algorithm for Computing ![]() $\mathbb{E} \left(\tau_{u}|\mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)} \right)$ and

$\mathbb{E} \left(\tau_{u}|\mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)} \right)$ and ![]() $\mathbb{E} \left(n_{uv}|\mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)} \right)$

$\mathbb{E} \left(n_{uv}|\mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)} \right)$

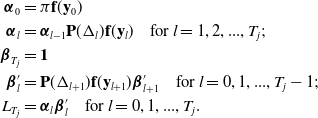

4.2. M-step

The goal of the M-step is to maximize ![]() $Q(\boldsymbol{\Phi};\ \mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)})$ with respect to

$Q(\boldsymbol{\Phi};\ \mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)})$ with respect to ![]() $\boldsymbol{\Phi}$. However, it is too computational costly to directly find the global maximum. Instead, we aim to use a computational effective algorithm to find a near-maximum to update the parameters

$\boldsymbol{\Phi}$. However, it is too computational costly to directly find the global maximum. Instead, we aim to use a computational effective algorithm to find a near-maximum to update the parameters ![]() $\boldsymbol{\Phi}^{(m)}$ such that

$\boldsymbol{\Phi}^{(m)}$ such that ![]() $Q(\boldsymbol{\Phi}^{(m)};\ \mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)}) \geq Q(\boldsymbol{\Phi}^{(m-1)};\ \mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)})$. It follows from Equation 4 that

$Q(\boldsymbol{\Phi}^{(m)};\ \mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)}) \geq Q(\boldsymbol{\Phi}^{(m-1)};\ \mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)})$. It follows from Equation 4 that ![]() $Q(\boldsymbol{\Phi};\ \mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)})$ can be decomposed into three parts

$Q(\boldsymbol{\Phi};\ \mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)})$ can be decomposed into three parts

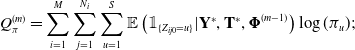

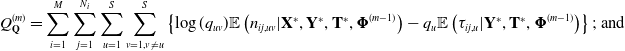

where

\begin{align} Q^{(m)}_{\pi} &= \sum_{i=1}^M \sum_{j=1}^{N_i} \sum_{u=1}^S \mathbb{E}\left(\mathbb{1}_{\{Z_{ij0} = u\}} | \mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)} \right) \log(\pi_u); \\[-24pt] \nonumber

\end{align}

\begin{align} Q^{(m)}_{\pi} &= \sum_{i=1}^M \sum_{j=1}^{N_i} \sum_{u=1}^S \mathbb{E}\left(\mathbb{1}_{\{Z_{ij0} = u\}} | \mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)} \right) \log(\pi_u); \\[-24pt] \nonumber

\end{align}

\begin{align} Q^{(m)}_{\mathbf{Q}} &= \sum_{i=1}^M \sum_{j=1}^{N_i} \sum_{u=1}^S \sum_{v=1, v\neq u}^S \left\{ \log(q_{uv}) \mathbb{E} \left(n_{ij,uv}|\mathbf{X}^*, \mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)} \right) - q_{u} \mathbb{E} \left(\tau_{ij,u}| \mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)} \right)\right\};\ \text{and} \\[-24pt] \nonumber \end{align}

\begin{align} Q^{(m)}_{\mathbf{Q}} &= \sum_{i=1}^M \sum_{j=1}^{N_i} \sum_{u=1}^S \sum_{v=1, v\neq u}^S \left\{ \log(q_{uv}) \mathbb{E} \left(n_{ij,uv}|\mathbf{X}^*, \mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)} \right) - q_{u} \mathbb{E} \left(\tau_{ij,u}| \mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)} \right)\right\};\ \text{and} \\[-24pt] \nonumber \end{align}

\begin{align} Q^{(m)}_{\boldsymbol{\Theta}} &= \sum_{i=1}^M \sum_{j=1}^{N_i} \sum_{l=0}^{T_j} \sum_{u=1}^S \mathbb{E} \left(\mathbb{1}_{\{Z_{ijl} = u\}} | \mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)}\right) \log\left(f_u(\mathbf{y}_{ijl})\right) .\end{align}

\begin{align} Q^{(m)}_{\boldsymbol{\Theta}} &= \sum_{i=1}^M \sum_{j=1}^{N_i} \sum_{l=0}^{T_j} \sum_{u=1}^S \mathbb{E} \left(\mathbb{1}_{\{Z_{ijl} = u\}} | \mathbf{Y}^*, \mathbf{T}^*, \boldsymbol{\Phi}^{(m-1)}\right) \log\left(f_u(\mathbf{y}_{ijl})\right) .\end{align}

Clearly, ![]() $Q^{(m)}_{\pi}$ depends only on

$Q^{(m)}_{\pi}$ depends only on ![]() $\pi$,

$\pi$, ![]() $Q^{(m)}_{\mathbf{Q}}$ depends only on

$Q^{(m)}_{\mathbf{Q}}$ depends only on ![]() $\mathbf{Q}$, and

$\mathbf{Q}$, and ![]() $Q^{(m)}_{\boldsymbol{\Theta}}$ depends only on

$Q^{(m)}_{\boldsymbol{\Theta}}$ depends only on ![]() $\boldsymbol{\Theta}$. As a consequence, the problem reduces to maximize the three functions separately with respect to the corresponding subset of parameters. While we can compute the E-step for each trip sub-interval separately, we have to consider all of them collectively in the M-step calculation.

$\boldsymbol{\Theta}$. As a consequence, the problem reduces to maximize the three functions separately with respect to the corresponding subset of parameters. While we can compute the E-step for each trip sub-interval separately, we have to consider all of them collectively in the M-step calculation.

4.2.1. Initial state probability distribution  $\pi$

$\pi$

We constrain ![]() $\pi_u$ summing to unity by use of a Lagrange multiplier. Then by taking the derivative of

$\pi_u$ summing to unity by use of a Lagrange multiplier. Then by taking the derivative of ![]() $Q^{(m)}_{\pi}$ with respect to

$Q^{(m)}_{\pi}$ with respect to ![]() $\pi_u$, we have the maximum likelihood estimator (MLE) in the m-th iteration as

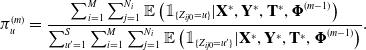

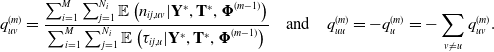

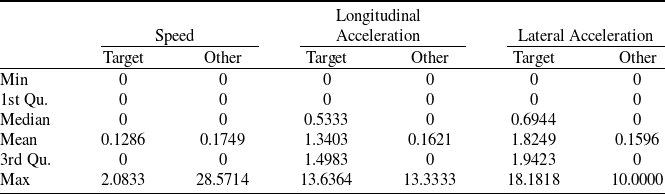

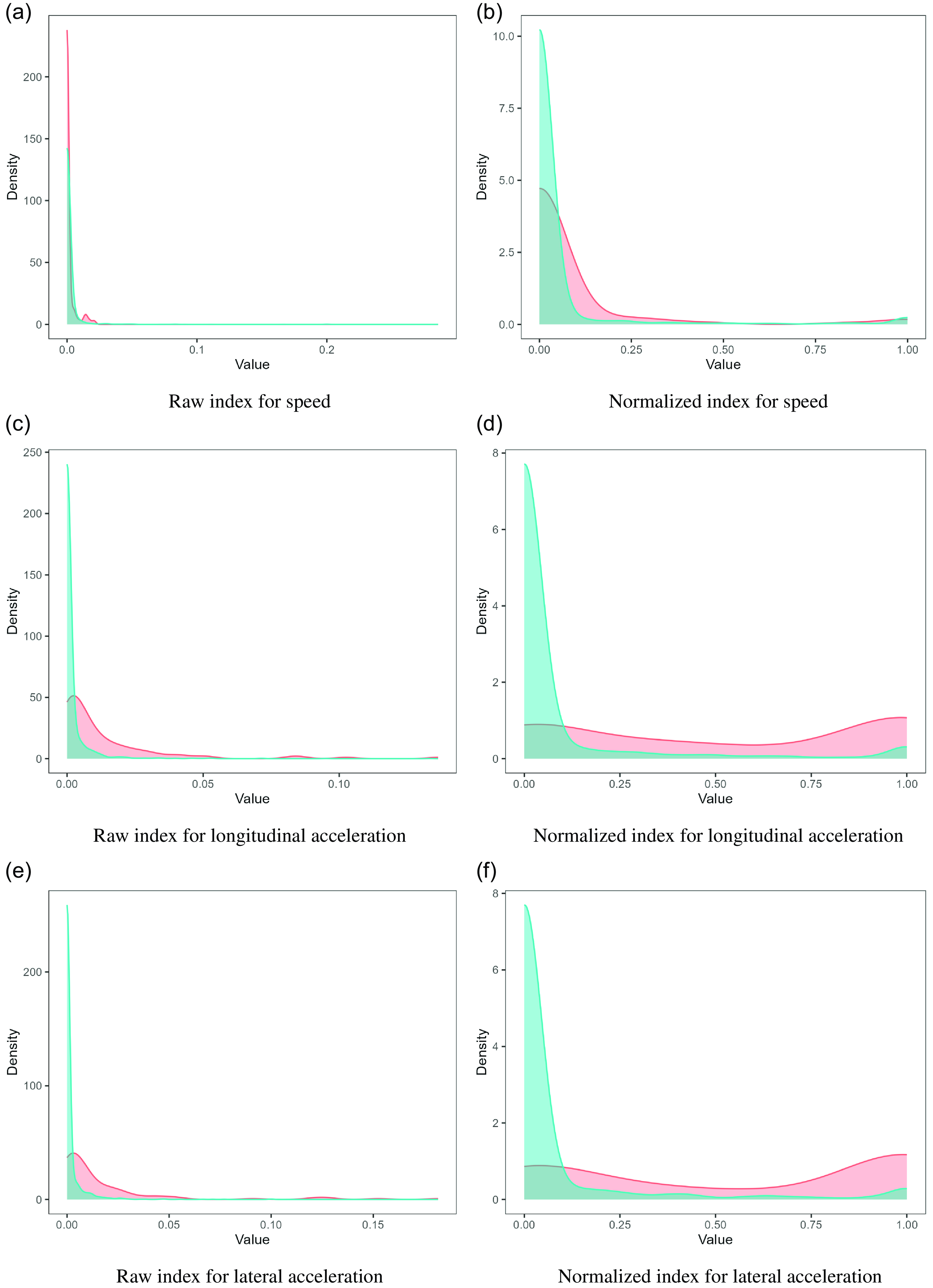

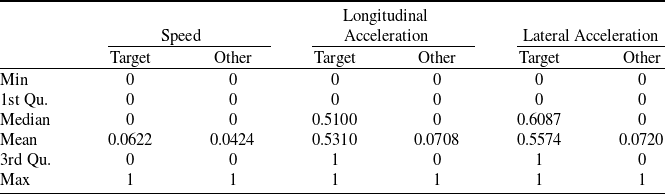

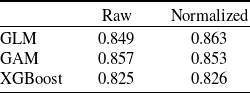

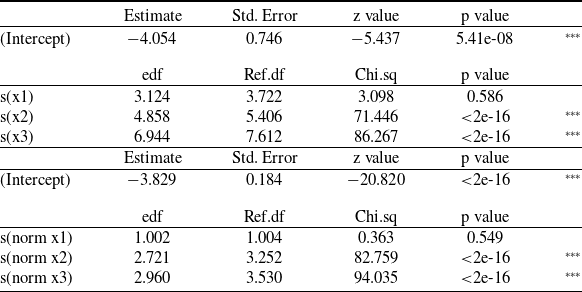

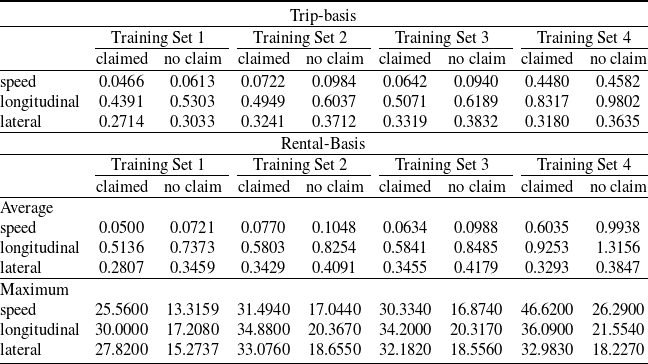

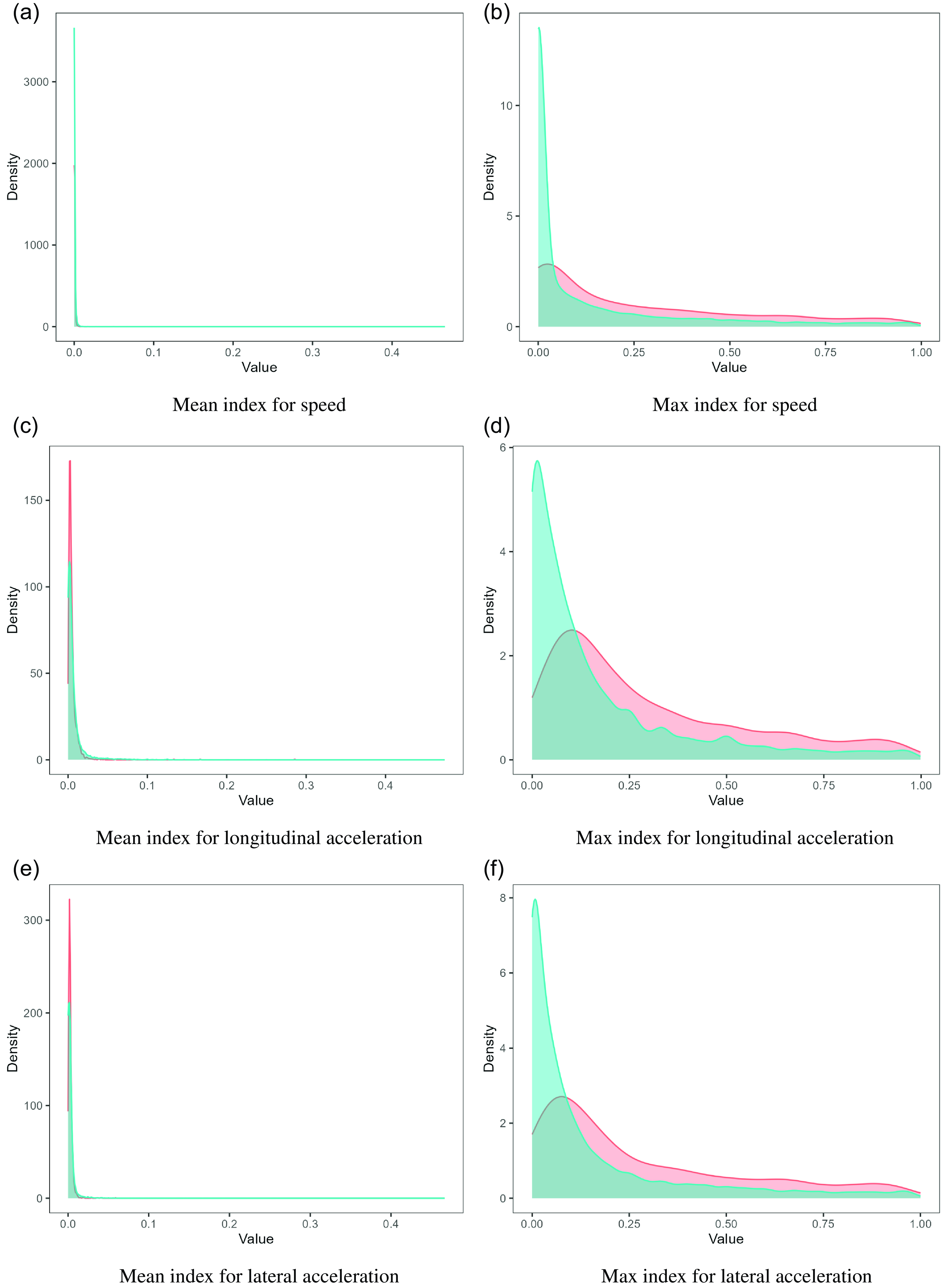

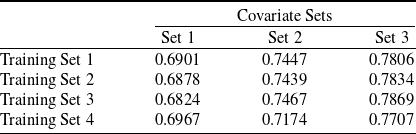

$\pi_u$, we have the maximum likelihood estimator (MLE) in the m-th iteration as