1. Introduction

Let $\{X_{i},\ i \geq 1\}$ be a sequence of non-negative continuous random variables and let $S_{d,n}$ be the partial sums $S_{d,n} = X_{1} + X_{2} + \cdots + X_{n}$, $n \geq 1$, with $S_{d,0} = 0$. Suppose that the random variable $X_{1}$ has distribution function $G(x) = 1 - \overline{G}(x)$ with $G(0) = 0$, and that $\{X_{i},\ i \geq 2\}$ is a sequence of independent and identically distributed (iid) random variables, independent of $X_{1}$, with common distribution function $F(x) = 1 - \overline{F}(x)$ with $F(0) = 0$. Furthermore, the equilibrium distribution associated with $G$, denoted by $G_e$, is defined as $G_e(t) = \mu_G^{-1} \int_{0}^{t} \overline{G}(y)\,dy$, and similarly, $F_e(t) = \mu^{-1} \int_{0}^{t} \overline{F}(y)\,dy$, where $\mu_G = \int_{0}^{\infty} y\, dG(y)$ and $\mu = \int_{0}^{\infty} y\, dF(y)$ are the mean interarrival times associated with $G$ and $F$, respectively. If $0 \leq S_{d,1} \leq S_{d,2} \leq S_{d,3} \leq \cdots$ denote the times of occurrence of some phenomenon, then $\{S_{d,n},\ n \in \mathbb{N}\}$ is called a delayed or modified renewal process. The $S_{d,n}$ are called the renewals or epochs of the delayed renewal process, and $X_{n} = S_{d,n} - S_{d,n - 1}$, $n \geq 1$, are the interarrival or waiting times between renewals.
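To make these definitions concrete, the following Python sketch generates the epochs $S_{d,0}, \ldots, S_{d,n}$ of one delayed renewal path and evaluates an equilibrium distribution such as $F_e$ by the trapezoidal rule. The function names `delayed_epochs` and `equilibrium_cdf`, the exponential choices for $G$ and $F$, and the grid size are illustrative assumptions rather than anything prescribed in this paper.

```python
import numpy as np

def delayed_epochs(sample_first, sample_rest, n, rng):
    """Return S_{d,0}, ..., S_{d,n} with X_1 ~ G and X_2, ..., X_n iid ~ F (n >= 1)."""
    x = np.empty(n)
    x[0] = sample_first(rng)                          # X_1 drawn from G
    x[1:] = sample_rest(rng, n - 1)                   # X_i, i >= 2, drawn from F
    return np.concatenate(([0.0], np.cumsum(x)))      # prepend S_{d,0} = 0

def equilibrium_cdf(survival, mean, t, points=10_001):
    """Equilibrium cdf mean^{-1} * int_0^t survival(y) dy via the trapezoidal rule."""
    y = np.linspace(0.0, t, points)
    v = survival(y)
    h = y[1] - y[0]
    return h * (v.sum() - 0.5 * (v[0] + v[-1])) / mean

# Illustrative assumption: G = Exp(rate 2), F = Exp(rate 0.5).
lam1, lam2 = 2.0, 0.5
rng = np.random.default_rng(1)
S = delayed_epochs(lambda r: r.exponential(1 / lam1),
                   lambda r, k: r.exponential(1 / lam2, k), 10, rng)
F_e3 = equilibrium_cdf(lambda y: np.exp(-lam2 * y), 1 / lam2, 3.0)
print(S)
print(F_e3, 1 - np.exp(-lam2 * 3.0))   # memoryless case: F_e coincides with F
```

For an exponential $F$ the equilibrium distribution coincides with $F$ itself, which is why the two printed numbers should agree to within the quadrature error.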

With each delayed renewal process we can associate the continuous-time stochastic process $\{N_{d}(t),\ t \geq 0\}$, with values in $\mathbb{N}$, defined by $N_{d}(t) = \sup\{n \in \mathbb{N} : S_{d,n} \leq t\}$ if $X_{1} \leq t$, and $N_{d}(t) = 0$ if $X_{1} > t$; that is, $N_{d}(t)$ is the number of renewals in $[0, t]$. Then $N_{d}(t)$ is called the associated counting process or the delayed renewal counting process; it represents the total number of renewals in $(0, t]$, and it holds that

\begin{equation*}\Pr\left( N_{d}(t) \geq n\right) = \Pr\left(S_{d,n} \leq t\right) = \left( G*F^{(n - 1)} \right)(t), \quad n \geq 1,\end{equation*}

and

\begin{equation*}\Pr\left( N_{d}(t) = n\right) = \left( G*F^{(n - 1)} \right)(t) - \left(G*F^{(n)} \right)(t), \quad n \geq 1,\end{equation*}

with $\Pr(N_{d}(t) = 0) = \overline{G}(t)$, where $F^{(n)}$ denotes the $n$-fold Lebesgue–Stieltjes convolution of the distribution function $F$ with itself. We recall that the Lebesgue–Stieltjes-type convolution of a function $g : [0, \infty) \rightarrow \mathbb{R}$ and $F$ is denoted by $g*F$ and is defined as $(g*F)(x) = \int_{0}^{x} g(x - y)\,dF(y)$. For any $n = 1, 2, 3, \ldots$, we denote by $F^{(n)}(x) = 1 - \overline{F}^{(n)}(x)$ the $n$-fold convolution of the distribution function $F$, defined by $F^{(n)}(x) = (F^{(n - 1)}*F)(x)$, with $F^{(0)}(x) = 1 - \overline{F}^{(0)}(x) = 1$ for $x \geq 0$, $F^{(0)}(x) = 1 - \overline{F}^{(0)}(x) = 0$ for $x < 0$, and $F^{(1)}(x) = F(x)$.
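The distribution of $N_d(t)$ given above can be evaluated numerically. The Python sketch below assumes that $F$ may be replaced by a lattice distribution obtained by rounding its mass to the nearest multiple of a step $h$ (a standard discretization device chosen for illustration, not a method of this paper); it then builds $(G*F^{(n)})(t)$ recursively with `numpy.convolve` and returns $\Pr(N_d(t) = n)$ for $n = 0, \ldots, n_{\max}$. The name `delayed_count_pmf` is hypothetical.

```python
import numpy as np
from math import exp, factorial

def delayed_count_pmf(G, F, t, n_max, h=1e-3):
    """Approximate Pr(N_d(t) = n), n = 0, ..., n_max, by lattice convolutions."""
    K = max(1, int(round(t / h)))
    step = t / K
    grid = np.linspace(0.0, t, K + 1)                  # 0, step, ..., t
    # Round the mass of F to the nearest grid point:
    # p_0 = F(step/2) - F(0), p_j = F((j + 1/2) step) - F((j - 1/2) step), j >= 1.
    edges = np.concatenate(([0.0], (np.arange(K + 1) + 0.5) * step))
    p = np.diff(F(edges))
    conv = G(grid)                                     # G * F^{(0)} = G on the grid
    tails = [1.0, conv[-1]]                            # Pr(N_d(t) >= 0), Pr(N_d(t) >= 1)
    for _ in range(n_max):
        conv = np.convolve(conv, p)[: K + 1]           # next convolution G * F^{(n)}
        tails.append(conv[-1])                         # Pr(N_d(t) >= n + 1)
    return np.array([tails[n] - tails[n + 1] for n in range(n_max + 1)])

# Illustrative check: G = F = Exp(1) is a Poisson process, so N(3) ~ Poisson(3).
expcdf = lambda x: 1.0 - np.exp(-x)
pmf = delayed_count_pmf(expcdf, expcdf, 3.0, 8)
poisson = [exp(-3.0) * 3.0**n / factorial(n) for n in range(9)]
print(np.round(pmf, 4))
print(np.round(poisson, 4))
```

Rounding to the nearest grid point rather than to the left or right endpoint keeps the discretization error of each convolution of order $h^2$ for smooth densities, so a moderate step already gives several correct digits.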

A primary quantity of interest is the delayed or modified renewal function, defined as $M_{d}(t) = E[N_{d}(t)]$. The function $M_{d}(t)$ represents the expected number of renewals in $[0, t]$ for $t \in [0, \infty)$, and it is well known (see, e.g., Karlin and Taylor [13], p. 198) that $M_{d}(t)$ admits the series representation

\begin{equation*}
M_{d}(t) = \sum_{n = 1}^{\infty}\left( G*F^{(n - 1)} \right)(t) = \sum_{n = 0}^{\infty}\left( G*F^{(n)} \right)(t).
\end{equation*}

From the above equation, it follows (e.g., Resnick [20], Section 3.5) that the delayed renewal function $M_{d}$ satisfies the renewal-type equation

\begin{equation*}M_{d}(t) = G(t) + \int_{0}^{t}M_{d}(t - y)\,dF(y), \quad t \geq 0. \end{equation*}
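As a numerical illustration of the renewal-type equation for $M_d$, the Python sketch below solves it on a uniform grid with a Riemann–Stieltjes scheme that replaces $M_d(t - y)$ on each cell of $dF$ by the average of its values at the cell endpoints. This scheme, the function name `delayed_renewal_function` and the step size are our own illustrative choices. For $G$ exponential with rate $\lambda_1$ and $F$ exponential with rate $\lambda_2 \neq \lambda_1$, the output can be compared with the closed form $M_d(t) = (\lambda_1 - \lambda_2)/\lambda_1 + \lambda_2 t + ((\lambda_2 - \lambda_1)/\lambda_1)e^{-\lambda_1 t}$.

```python
import numpy as np

def delayed_renewal_function(G, F, t_max, h=1e-2):
    """Solve M_d(t) = G(t) + int_0^t M_d(t - y) dF(y) on the grid 0, h, ..., t_max."""
    K = max(1, int(round(t_max / h)))
    t = np.linspace(0.0, t_max, K + 1)
    dF = np.diff(F(t))               # dF[i] = F(t_{i+1}) - F(t_i): mass of cell i
    Gt = G(t)
    M = np.zeros(K + 1)
    M[0] = Gt[0]                     # equals 0 because G(0) = 0
    for k in range(1, K + 1):
        # Cells i = 1, ..., k-1 only involve already computed values of M:
        # M_d(t_k - y) for y in cell i is replaced by the mean of M[k-1-i], M[k-i].
        i = np.arange(1, k)
        known = 0.5 * np.dot(M[k - 1 - i] + M[k - i], dF[i])
        # Cell 0 involves the unknown M[k] itself, so solve the linear equation for it.
        M[k] = (Gt[k] + 0.5 * M[k - 1] * dF[0] + known) / (1.0 - 0.5 * dF[0])
    return t, M

# Illustrative check: G = Exp(lam1), F = Exp(lam2) with lam1 != lam2.
lam1, lam2 = 2.0, 0.5
t, M = delayed_renewal_function(lambda x: 1.0 - np.exp(-lam1 * x),
                                lambda x: 1.0 - np.exp(-lam2 * x), 5.0)
exact = (lam1 - lam2) / lam1 + lam2 * t + (lam2 - lam1) / lam1 * np.exp(-lam1 * t)
print(np.max(np.abs(M - exact)))
```

The implicit treatment of the first cell avoids a one-step lag that would otherwise bias $M_d$ downward; the cost is quadratic in the number of grid points, which is adequate for moderate horizons.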

There are many practical situations in which the delayed renewal process can be used to model physical phenomena (see, e.g., Pekalp et al. [19] and Chadjiconstantinidis [4]). For example, consider an item, say an electric bulb, that is installed at time $S_{d,0} = 0$, fails at times $S_{d,1}, S_{d,2}, \ldots$, and is replaced at each failure by a new item of the same sort. Then $F$ is the lifetime distribution of a new item. If the item present at time $0$ is not new, then its lifetime need not have distribution $F$. Another example is the following. The first engine used in a vehicle generally lasts longer than its replacements (where a replacement might be the same engine after being reconditioned).

Analytical solutions for the delayed renewal function are available only for very few lifetime distributions of the interarrival times. See, for example, Pekalp et al. [19], who obtained an exact formula for $M_{d}(x)$ when $G$ is an exponential distribution and $F$ is an exponential distribution (with a different parameter) and/or an Erlang($2$) distribution. In the same paper, the authors obtained parametric plug-in estimators for the delayed renewal function and for the variance of the delayed renewal counting process. In general, if the distributions of $X_{1}$ and $X_{i}$, $i \geq 2$, belong to the rational family of distributions (e.g., if they are phase-type distributions), then $M_{d}(x)$ can be obtained analytically. For other distributions of the interarrival times, the delayed renewal equation usually has no analytical solution, and even when one exists it is difficult to obtain. For this reason, alternative approaches, such as bounds for $M_{d}(t)$, have been developed (see, e.g., Chadjiconstantinidis ([4], [5])).

If $X_{1} \triangleq X_{i}$ for every $i \geq 2$, where the symbol $\triangleq$ denotes equality in distribution, that is, if $\{X_{i},\ i \geq 1\}$ is a sequence of iid random variables, then $G(t) = F(t)$; in this case $S_{d,n}$ is usually denoted by $S_{n}$, and $N_{d}(t)$ by $N(t)$. Then $\{S_{n},\ n \in \mathbb{N}\}$ is called the ordinary renewal process and $\{N(t),\ t \geq 0\}$ the ordinary renewal counting process. Hence, $M_{d}(t)$ reduces to the ordinary renewal function, denoted by $M(t)$ and defined by $M(t) = E[N(t)] = \sum_{n = 1}^{\infty}F^{(n)}(t)$. Therefore, the delayed or modified renewal process differs from an ordinary renewal process only in that the distribution of $X_{1}$ differs from that of the subsequent $X_{i}$, $i \geq 2$; that is, if the first arrival time $X_{1}$ is allowed to have a different distribution from the other interarrival times $\{X_{2}, X_{3}, \ldots\}$, then we obtain the delayed or modified renewal process. In many situations, it is more realistic not to assume that all interarrival times have the same distribution. For this reason, in this paper, we study some quantities related to the delayed renewal process.

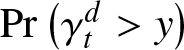

Apart from the delayed renewal function, two other quantities of primary interest in renewal theory are the forward and backward recurrence times. The backward recurrence time, also known as the age at time $t$, is denoted by $\delta_{t}^{d}$ and is defined by $\delta_{t}^{d} = t - S_{d,N_{d}(t)}$. It represents the time that has elapsed since the last renewal. The forward recurrence time at time $t$, denoted by $\gamma_{t}^{d}$, is defined by $\gamma_{t}^{d} = S_{d,N_{d}(t) + 1} - t$ and corresponds to the length of time from $t$ until the next renewal. It is also called the residual lifetime, and it occurs frequently in stochastic models arising in, for example, reliability, queueing, and inventory theory. For several applications of these quantities, see Chadjiconstantinidis and Politis [6] and the references therein.
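Given one realized path, the definitions of $N_d(t)$, $\delta_t^d$ and $\gamma_t^d$ translate directly into code. The Python helper below (the name `recurrence_times` is hypothetical) assumes that the epochs are stored in increasing order starting with $S_{d,0} = 0$ and that the path contains at least one epoch after $t$.

```python
from bisect import bisect_right

def recurrence_times(epochs, t):
    """Return (N_d(t), delta_t, gamma_t) for sorted epochs [S_{d,0}=0, S_{d,1}, ...]."""
    n = bisect_right(epochs, t) - 1        # number of epochs S_{d,k} <= t with k >= 1
    if n + 1 >= len(epochs):
        raise ValueError("path must contain an epoch after t")
    delta = t - epochs[n]                  # age: time since the last renewal (or since 0)
    gamma = epochs[n + 1] - t              # residual life: time until the next renewal
    return n, delta, gamma

print(recurrence_times([0.0, 1.2, 2.5, 4.0], 3.0))
print(recurrence_times([0.0, 1.2, 2.5, 4.0], 1.0))
```

When $X_1 > t$ the helper returns $N_d(t) = 0$ and $\delta_t^d = t$, which is the point mass of the age at $t$.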

The forward and backward recurrence times in an ordinary renewal process have been studied, and various results are available (see, e.g., Karlin and Taylor [13], Gupta [11], Gakis and Sivazlian ([9], [10]), and, more recently, Losidis and Politis [16], Losidis ([14], [15]), Losidis et al. ([17], [18]), and Chadjiconstantinidis and Politis [6]). Karlin and Taylor [13] and Janssen and Manca [12] offer some results for the survival function of the forward recurrence time $\gamma_{t}^{d}$. These are the only known results in the literature concerning quantities related to the delayed forward and/or backward recurrence times.

In this paper, therefore, we focus on the joint and marginal distributions of $\gamma_{t}^{d}$ and $\delta_{t}^{d}$. To the best of our knowledge, results for the joint distribution of $\gamma_{t}^{d}$ and $\delta_{t}^{d}$ are presented here for the first time. More precisely, we study the joint distribution of the forward and backward recurrence times $\gamma_{t}^{d}$ and $\delta_{t}^{d}$, and we obtain exact results as well as bounds for its right tail. As a consequence, we obtain exact results for the marginal distributions of $\gamma_{t}^{d}$ and $\delta_{t}^{d}$, and bounds for their right-tail probabilities.

We assume throughout this paper that the distribution functions $F$ and $G$ are absolutely continuous with densities $f$ and $g$, respectively, so that $M(t)$ and $M_{d}(t)$ also have densities, denoted by $m(t)$ and $m_{d}(t)$; these are called the renewal densities of the ordinary and the delayed renewal process, respectively.

For a distribution function $V$, we write $\overline{V} = 1 - V$ for the associated tail function. We shall use the following well-known aging classes of distributions on the nonnegative half-line. A distribution $V$ is said to have an increasing failure rate (IFR) if $\log \overline{V}$ is concave, that is, if for all $x \geq 0$ the function $\overline{V}(t + x)/\overline{V}(t)$ is decreasing in $t$ for all $t$ such that $\overline{V}(t) > 0$. Similarly, if $\log \overline{V}$ is convex, then $V$ is called a decreasing failure rate (DFR) distribution. Next, a distribution $V$ is called new better than used (NBU) if $\overline{V}(t + x) \leq \overline{V}(t)\overline{V}(x)$ for all $x, t \geq 0$, while $V$ is called new worse than used (NWU) if $\overline{V}(t + x) \geq \overline{V}(t)\overline{V}(x)$ for all $x, t \geq 0$. It is known that the IFR (resp., DFR) class is a subclass of the NBU (resp., NWU) class of distributions. Further details on these and other aging classes of distributions can be found in the books of Shaked and Shanthikumar [21] and Willmot and Lin [22].
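The NBU and NWU inequalities can be checked numerically on a grid. The Python sketch below does this for Weibull tail functions $\overline{V}(t) = \exp\{-(t/\beta)^k\}$, chosen here only as an illustration (they are not used in the paper): for shape $k \geq 1$ the Weibull law is IFR and hence NBU, while for $k \leq 1$ it is DFR and hence NWU. The function names are hypothetical, and a finite grid check of this kind can refute, but never prove, membership in a class.

```python
import numpy as np

def weibull_survival(t, shape, scale=1.0):
    """Weibull tail function exp(-(t/scale)**shape) for t >= 0."""
    return np.exp(-(np.asarray(t) / scale) ** shape)

def check_nbu_nwu(survival, grid, tol=1e-12):
    """Test the NBU and NWU inequalities on all pairs (t, x) taken from grid."""
    t, x = np.meshgrid(grid, grid)
    lhs = survival(t + x)                  # tail at t + x
    rhs = survival(t) * survival(x)        # product of the tails at t and at x
    is_nbu = bool(np.all(lhs <= rhs + tol))
    is_nwu = bool(np.all(lhs >= rhs - tol))
    return is_nbu, is_nwu

grid = np.linspace(0.0, 5.0, 101)
for k in (0.5, 1.0, 2.0):
    print(k, check_nbu_nwu(lambda s: weibull_survival(s, k), grid))
```

The exponential case $k = 1$ satisfies both inequalities with equality, reflecting the memoryless property.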

The paper is organized as follows. In the next section, we illustrate with examples from real-life applications why the joint distribution of the forward and backward recurrence times and their marginal tails are important. In Section 3, we obtain exact results for the right tail $\Pr(\delta_{t}^{d} \geq x,\ \gamma_{t}^{d} > y)$ of the joint distribution of the forward and backward recurrence times $\gamma_{t}^{d}$ and $\delta_{t}^{d}$, as well as for their marginal tails $\Pr(\delta_{t}^{d} \geq x)$ and $\Pr(\gamma_{t}^{d} > y)$. We also obtain several general bounds for all these quantities, as well as bounds based on aging classes of the interarrival-time distributions and bounds based on the delayed renewal density. Finally, in Section 4, we give several numerical examples to illustrate our results.

2. Applications of the backward and forward recurrence times

Renewal theory, particularly the analysis of forward and backward recurrence times, plays a crucial role in various real-world applications such as healthcare, reliability engineering, and queueing systems. These applications depend not only on average behavior but also on the tail properties of recurrence-time distributions, which can reveal rare but critical scenarios. The ability to model and respond to such temporal uncertainty makes renewal theory a powerful and versatile framework in time-sensitive environments.

In this section, we present an example from the aviation industry, where operational reliability and safety are vital. Predictive maintenance is critical in minimizing risks and optimizing aircraft availability. Renewal theory can be applied to model the timing of past and future maintenance events by analyzing the backward recurrence time (time since the last maintenance) and the forward recurrence time (time until the next maintenance).

In this context, the joint tail behavior of the backward and forward recurrence times provides insight into situations where both times are large, identifying potential high-risk periods of system degradation or unexpected failure. Such analysis supports condition-based maintenance strategies, where service decisions depend on actual operating conditions and event timing rather than fixed schedules.

In practical applications such as aviation maintenance, the assumption of the ordinary renewal process that all interarrival times, including the first, are identically distributed may not hold. Often, aircraft enter observation or service at arbitrary points in their operational cycle. The delayed renewal process offers a more appropriate framework, allowing the first interarrival time to follow a different distribution and thereby capturing more realistic operational dynamics.

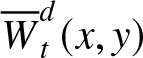

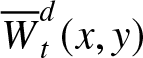

3. The joint distribution of the forward and backward recurrence times

Let ${\overline{W}}_{t}^{d}(x,y) = \Pr(\delta_{t}^{d} \geq x,\ \gamma_{t}^{d} > y)$, $0 \leq x \leq t$, $y \geq 0$, be the joint survival function (joint right tail) of the random variables $\gamma_{t}^{d}$ and $\delta_{t}^{d}$. In this notation, we have used $\delta_{t}^{d} \geq x$ rather than $\delta_{t}^{d} > x$ for notational convenience: since the distribution of $\delta_{t}^{d}$ possesses a point mass at $t$, our results then remain valid without change for $x = t$. Our first result is a formula for computing the joint right tail of the random variables $\gamma_{t}^{d}$ and $\delta_{t}^{d}$.
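Independently of any exact formula, ${\overline{W}}_{t}^{d}(x,y)$ can always be estimated by simulation. The following Python sketch is a plain Monte Carlo estimator written for illustration (it is not a method from the paper); the function name, the exponential samplers, and the fixed number `per_path` of simulated interarrival times per path are assumptions, the last of which must be large enough for every path to pass $t$. In the ordinary exponential case (a Poisson process with rate $\lambda$) the exact value is $e^{-\lambda(x+y)}$ for $0 \leq x \leq t$, which provides a check.

```python
import numpy as np

def joint_tail_mc(x, y, t, sample_first, sample_rest, reps=100_000,
                  per_path=30, seed=0):
    """Monte Carlo estimate of Pr(delta_t^d >= x, gamma_t^d > y)."""
    rng = np.random.default_rng(seed)
    X = np.empty((reps, per_path))
    X[:, 0] = sample_first(rng, reps)                     # X_1 ~ G
    X[:, 1:] = sample_rest(rng, (reps, per_path - 1))     # X_i ~ F, i >= 2
    S = np.cumsum(X, axis=1)                              # column k holds S_{d,k+1}
    n_t = (S <= t).sum(axis=1)                            # N_d(t) on each path
    if np.any(n_t >= per_path):
        raise ValueError("increase per_path: some simulated paths do not pass t")
    rows = np.arange(reps)
    last = np.where(n_t > 0, S[rows, np.maximum(n_t - 1, 0)], 0.0)   # S_{d,N_d(t)}
    nxt = S[rows, n_t]                                    # S_{d,N_d(t)+1}
    delta, gamma = t - last, nxt - t                      # age and residual life
    return float(np.mean((delta >= x) & (gamma > y)))

# Ordinary exponential case (Poisson process, rate 1): exact value exp(-(x + y)).
expo = lambda rng, size: rng.exponential(1.0, size)
est = joint_tail_mc(1.0, 0.5, 3.0, expo, expo)
print(est, np.exp(-1.5))
```

With $10^5$ replications the standard error is at most about $0.0016$, so agreement to roughly two decimal places is what one should expect.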

Proposition 1. For any $0 \leq x \leq t$ and $y \geq 0$, the joint right tail of $\gamma_{t}^{d}$ and $\delta_{t}^{d}$ is given by

\begin{equation}
{\overline{W}}_{t}^{d}(x,y) = \overline{G}(t + y) + \int_{0}^{t - x}{\overline{F}(t + y - s)dM_{d}(s)}.
\end{equation}

Proof. We have

\begin{align*}
{\overline{W}}_{t}^{d}(x,y) &= \sum_{n = 1}^{\infty}\Pr\left( S_{d,n - 1} \leq t - x,\ S_{d,n} > t + y \right)\\
&= \Pr\left( X_{1} > t + y \right) + \sum_{n = 2}^{\infty}\int_{0}^{t - x}\Pr\left( X_{n} > t + y - s \right)d\Pr\left( S_{d,n - 1} \leq s \right)\\
&= \overline{G}(t + y) + \int_{0}^{t - x}\overline{F}(t + y - s)\,d\sum_{n = 2}^{\infty}\left(G*F^{(n - 2)}\right)(s)\\
&= \overline{G}(t + y) + \int_{0}^{t - x}\overline{F}(t + y - s)\,dM_{d}(s).
\end{align*}

Here the events in the first sum are disjoint, because $S_{d,n - 1} \leq t - x \leq t < S_{d,n}$ forces $N_{d}(t) = n - 1$, and the last step uses $\sum_{n = 2}^{\infty}G*F^{(n - 2)} = \sum_{n = 0}^{\infty}G*F^{(n)} = M_{d}$.

Therefore, if an exact formula for the delayed renewal function $M_{d}(t)$ is available, Proposition 1 yields an exact representation for ${\overline{W}}_{t}^{d}(x,y)$ as well. For example, Chadjiconstantinidis [5] proved that if the random variable $X_{1}$ follows an exponential distribution with parameter $\lambda_{1} > 0$, and the iid random variables $X_{i}$, $i \geq 2$, also follow an exponential distribution with parameter $\lambda_{2} > 0$, with $\lambda_{1} \neq \lambda_{2}$, then

\begin{equation*}M_{d}(t) = \frac{\lambda_{1} - \lambda_{2}}{\lambda_{1}} + \lambda_{2}t + \frac{\lambda_{2} - \lambda_{1}}{\lambda_{1}}e^{- \lambda_{1}t},~ t \geq 0.\end{equation*}

Therefore, since $dM_{d}(s) = \left\lbrack \lambda_{2} + (\lambda_{1} - \lambda_{2})e^{- \lambda_{1}s} \right\rbrack ds$, Proposition 1 gives the following exact formula for ${\overline{W}}_{t}^{d}(x,y)$:

\begin{equation*}{\overline{W}}_{t}^{d}(x,y) = e^{- \lambda_{1}(t + y)} + \left\lbrack 1 - e^{- \lambda_{1}(t - x)} \right\rbrack e^{- \lambda_{2}(x + y)}, 0 \leq x \leq t, y \geq 0.\end{equation*}
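This closed form can be cross-checked numerically. The Python sketch below, with hypothetical function names and arbitrary illustrative parameters, evaluates the integral in Proposition 1 by the trapezoidal rule using the renewal density $m_d(s) = \lambda_2 + (\lambda_1 - \lambda_2)e^{-\lambda_1 s}$ of the exponential example, and compares the result with the exact expression.

```python
import numpy as np

def w_exact(x, y, t, lam1, lam2):
    """Closed-form joint tail for G = Exp(lam1), F = Exp(lam2)."""
    return np.exp(-lam1 * (t + y)) + (1 - np.exp(-lam1 * (t - x))) * np.exp(-lam2 * (x + y))

def w_proposition1(x, y, t, lam1, lam2, points=20_001):
    """Proposition 1 evaluated by the trapezoidal rule on [0, t - x]."""
    s = np.linspace(0.0, t - x, points)
    m_d = lam2 + (lam1 - lam2) * np.exp(-lam1 * s)        # renewal density m_d(s)
    integrand = np.exp(-lam2 * (t + y - s)) * m_d         # tail of F times m_d
    h = s[1] - s[0]
    integral = h * (integrand.sum() - 0.5 * (integrand[0] + integrand[-1]))
    return np.exp(-lam1 * (t + y)) + integral

for x, y in [(0.0, 0.0), (1.0, 0.5), (2.5, 2.0), (3.0, 1.0)]:
    print(x, y, w_exact(x, y, 3.0, 2.0, 0.5), w_proposition1(x, y, 3.0, 2.0, 0.5))
```

At $x = y = 0$ both expressions equal $1$, since $\delta_t^d \geq 0$ and $\gamma_t^d > 0$ almost surely.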

Another representation for the evaluation of ${\overline{W}}_{t}^{d}(x,y)$ is given in the following.

Proposition 2. For any $0 \leq x \leq t$ and $y \geq 0$, it holds that

\begin{equation}
{\overline{W}}_{t}^{d}(x,y) = 1 - M_{d}(t + y) + M_{d}(t - x)\overline{F}(x + y) + \int_{0}^{x + y}{M_{d}(t + y - s)dF(s)}.
\end{equation}

Proof. First, integrating by parts, we calculate the integral $\int_{0}^{x}M_{d}(t - s)\,dF(s)$ as follows:

\begin{align*}
\int_{0}^{x}M_{d}(t - s)\,dF(s) &= M_{d}(t) - M_{d}(t - x)\overline{F}(x) - \int_{0}^{x}m_{d}(t - s)\overline{F}(s)\,ds\\
&= M_{d}(t) - M_{d}(t - x)\overline{F}(x) - \int_{t - x}^{t}\overline{F}(t - s)\,dM_{d}(s).
\end{align*}

Setting $x = y = 0$ in (1), noting that ${\overline{W}}_{t}^{d}(0,0) = 1$, and splitting the resulting integral over $[0, t]$ at $t - x$ yields

\begin{equation*}\overline{G}(t) + \int_{0}^{t - x}\overline{F}(t - s)\,dM_{d}(s) + \int_{t - x}^{t}\overline{F}(t - s)\,dM_{d}(s) = 1,\end{equation*}

and then

\begin{equation*}\int_{0}^{x}M_{d}(t - s)\,dF(s) = M_{d}(t) - M_{d}(t - x)\overline{F}(x) - G(t) + \int_{0}^{t - x}\overline{F}(t - s)\,dM_{d}(s).\end{equation*}

Replacing $x$ by $x + y$ and $t$ by $t + y$ in the above (note that $(t + y) - (x + y) = t - x$) gives

\begin{align*}
\int_{0}^{t - x}\overline{F}(t + y - s)\,dM_{d}(s) &= G(t + y) - M_{d}(t + y) + M_{d}(t - x)\overline{F}(x + y)\\ &\quad + \int_{0}^{x + y}M_{d}(t + y - s)\,dF(s).
\end{align*}

Inserting the last equation into (1) completes the proof.
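Proposition 2 can be checked numerically in the exponential example that follows Proposition 1. The Python sketch below, with hypothetical function names and illustrative rates $\lambda_1 = 2$, $\lambda_2 = 0.5$, evaluates the right-hand side of (2) using the closed form of $M_d$ for $G$ exponential with rate $\lambda_1$ and $F$ exponential with rate $\lambda_2$, computes $\int_0^{x+y} M_d(t+y-s)\,dF(s)$ by the trapezoidal rule, and compares the result with the closed form of ${\overline{W}}_{t}^{d}(x,y)$.

```python
import numpy as np

LAM1, LAM2 = 2.0, 0.5   # illustrative rates: G = Exp(LAM1), F = Exp(LAM2)

def delayed_renewal_fn(t):
    """Closed-form delayed renewal function for exponential G and F (LAM1 != LAM2)."""
    return (LAM1 - LAM2) / LAM1 + LAM2 * t + (LAM2 - LAM1) / LAM1 * np.exp(-LAM1 * t)

def w_proposition2(x, y, t, points=20_001):
    """Right-hand side of (2), with the integral done by the trapezoidal rule."""
    s = np.linspace(0.0, x + y, points)
    integrand = delayed_renewal_fn(t + y - s) * LAM2 * np.exp(-LAM2 * s)   # M_d(t+y-s) f(s)
    h = s[1] - s[0]
    integral = h * (integrand.sum() - 0.5 * (integrand[0] + integrand[-1]))
    F_bar = np.exp(-LAM2 * (x + y))
    return 1.0 - delayed_renewal_fn(t + y) + delayed_renewal_fn(t - x) * F_bar + integral

def w_exact(x, y, t):
    return np.exp(-LAM1 * (t + y)) + (1 - np.exp(-LAM1 * (t - x))) * np.exp(-LAM2 * (x + y))

for x, y in [(0.0, 0.0), (1.0, 0.5), (2.0, 1.5), (3.0, 0.0)]:
    print(x, y, w_proposition2(x, y, 3.0), w_exact(x, y, 3.0))
```

The case $x = t$, $y = 0$ is a useful sanity check: by the renewal-type equation for $M_d$, the right-hand side of (2) then reduces to $\overline{G}(t)$.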

The results in Propositions 1 and 2 are also useful for obtaining bounds for the joint right tail ${\overline{W}}_{t}^{d}(x,y)$. A general two-sided bound in terms of the delayed renewal function $M_{d}(t)$ is given in the following theorem.

Theorem 1. For any ![]() $0 \leq x \leq t$ and

$0 \leq x \leq t$ and ![]() $y \geq 0$, it holds

$y \geq 0$, it holds

\begin{equation}

\overline{G}(t + y) + \overline{F}(t + y)M_{d}(t - x) \leq {\overline{W}}_{t}^{d}(x,y) \leq 1 - \left\lbrack M_{d}(t + y) - M_{d}(t - x) \right\rbrack\overline{F}(x + y).

\end{equation}

\begin{equation}

\overline{G}(t + y) + \overline{F}(t + y)M_{d}(t - x) \leq {\overline{W}}_{t}^{d}(x,y) \leq 1 - \left\lbrack M_{d}(t + y) - M_{d}(t - x) \right\rbrack\overline{F}(x + y).

\end{equation}
Proof. For $0 \leq s \leq t - x$ we have $t + y - s \leq t + y$, and since $\overline{F}$ is a non-increasing function, it holds that $\overline{F}(t + y - s) \geq \overline{F}(t + y)$. Hence, from Proposition 1, we get

\begin{equation*}{\overline{W}}_{t}^{d}(x,y) \geq \overline{G}(t + y) + \overline{F}(t + y)\int_{0}^{t - x}{dM_{d}(s)},\end{equation*}and since $\int_{0}^{t - x}{dM_{d}(s)} = M_{d}(t - x)$ (note that $M_{d}(0) = 0$ because $G(0) = 0$), the lower bound in (3) follows immediately.

Also, for $s \geq 0$, since the delayed renewal function $M_{d}(t)$ is non-decreasing in $t \geq 0$, it holds that $M_{d}(t + y - s) \leq M_{d}(t + y)$, and hence from Proposition 2 we obtain

\begin{align*}

{\overline{W}}_{t}^{d}(x,y) &\leq 1 - M_{d}(t + y) + M_{d}(t - x)\overline{F}(x + y) + M_{d}(t + y)\int_{0}^{x + y}{dF(s)}\\ &= 1 - M_{d}(t + y) + M_{d}(t - x)\overline{F}(x + y) + M_{d}(t + y)F(x + y)\\ &= 1 - M_{d}(t + y)\overline{F}(x + y) + M_{d}(t - x)\overline{F}(x + y).

\end{align*}This is the upper bound in (3).

The usefulness of the bounds in (3) is that suitable known lower and upper bounds for $M_{d}(t)$ can be inserted into (3) to obtain bounds for ${\overline{W}}_{t}^{d}(x,y)$, provided that the resulting bounds lie in the interval $[0, 1]$. In particular, a lower bound for $M_{d}(t)$, say $A(t)$, yields a lower bound for ${\overline{W}}_{t}^{d}(x,y)$, provided that $A(t - x) \leq \frac{G(t + y)}{\overline{F}(t + y)}$ for $0 \leq x \leq t$, $y \geq 0$; this condition ensures that $\overline{G}(t + y) + \overline{F}(t + y)A(t - x) \leq 1$. Likewise, a lower bound for the difference $M_{d}(t + y) - M_{d}(t - x)$, say $B_{t}(x,y)$, yields an upper bound for ${\overline{W}}_{t}^{d}(x,y)$, provided that $B_{t}(x,y) \leq \frac{1}{\overline{F}(x + y)}$ for $0 \leq x \leq t$, $y \geq 0$, which ensures that $1 - B_{t}(x,y)\overline{F}(x + y) \geq 0$. For example, Chadjiconstantinidis [5] obtained the following lower bound for $M_{d}(t)$:

\begin{equation}

M_{d}(t) \geq \frac{t - \mu_{G}G_{e}(t)}{\mu F_{e}(t)}, t \geq 0,

\end{equation} provided that $0 \lt \mu \lt \infty$ and $0 \lt \mu_{G} \lt \infty$. Inserting this bound into the lower bound of (3), we immediately obtain

\begin{equation*}{\overline{W}}_{t}^{d}(x,y) \geq \overline{G}(t + y) + \frac{t - x - \mu_{G}G_{e}(t - x)}{\mu F_{e}(t - x)}\overline{F}(t + y), \quad 0 \leq x \lt t,~ y \geq 0.\end{equation*}Chadjiconstantinidis [5] also obtained the following upper bound for the delayed renewal function:

\begin{equation*}M_{d}(t) \leq U(t) - \frac{\mu_{G}}{\mu} \frac{G_{e}(t)}{F_{e}(t)},~ t \geq 0,\end{equation*} and combining this with Lorden's well-known upper bound for $U(t)$, namely

\begin{equation*}U(t) \leq \frac{t}{\mu} + \frac{\mu_{2}}{\mu^{2}},\end{equation*}we get

\begin{equation}

M_{d}(t) \leq \frac{t}{\mu} + \frac{\mu_{2}}{\mu^{2}} - \frac{\mu_{G}}{\mu} \frac{G_{e}(t)}{F_{e}(t)},~ t \geq 0.

\end{equation}A weaker but simpler lower bound than (4) is

\begin{equation*}M_{d}(t) \geq \frac{t - \mu_{G}}{\mu},~ t \geq 0.\end{equation*}Now, using this and (5) we obtain

\begin{align*}

M_{d}(t + y) - M_{d}(t - x) &\geq \frac{t + y - \mu_{G}}{\mu} - \frac{t - x}{\mu} - \frac{\mu_{2}}{\mu^{2}} + \frac{\mu_{G}}{\mu} \frac{G_{e}(t - x)}{F_{e}(t - x)}\\ &=\frac{x + y}{\mu} - \frac{\mu_{2}}{\mu^{2}} - \frac{\mu_{G}}{\mu}\left\lbrack 1 - \frac{G_{e}(t - x)}{F_{e}(t - x)} \right\rbrack,

\end{align*}and inserting this lower bound into the right-hand side of (3), we get

\begin{equation*}{\overline{W}}_{t}^{d}(x,y) \leq 1 - \left\{\frac{x + y}{\mu} - \frac{\mu_{2}}{\mu^{2}} - \frac{\mu_{G}}{\mu}\left\lbrack 1 - \frac{G_{e}(t - x)}{F_{e}(t - x)} \right\rbrack \right\}\overline{F}(x + y).\end{equation*} Similarly, other upper and lower bounds for $M_{d}(t)$ and for the difference $M_{d}(t + x) - M_{d}(t)$ obtained by Chadjiconstantinidis ([4], [5]) can be combined with Theorem 1 to obtain bounds for the joint right-tail ${\overline{W}}_{t}^{d}(x,y)$. The details are omitted.
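To see how much room Theorem 1 leaves, the two sides of (3) can be tabulated in a case where everything is explicit. The sketch below again assumes, only for illustration, that $G$ and $F$ are exponential (hypothetical rates `ALPHA` and `LAM`), so that $M_{d}$ and the joint tail $\Pr(\delta_{t}^{d} \geq x, \gamma_{t}^{d} \gt y)$ have closed forms; `theorem1_bounds` is our own helper name.

```python
import math

# Hypothetical exponential model: G = Exp(ALPHA), F = Exp(LAM).
ALPHA, LAM = 2.0, 1.0

def G_bar(u):
    return math.exp(-ALPHA * u)

def F_bar(u):
    return math.exp(-LAM * u)

def M_d(u):
    # M_d(u) = G(u) + LAM * int_0^u G(s) ds, valid for exponential F only.
    G_u = 1.0 - G_bar(u)
    return G_u + LAM * (u - G_u / ALPHA)

def joint_tail(t, x, y):
    # Pr(delta_t >= x, gamma_t > y) in this model.
    return G_bar(t + y) + F_bar(x + y) * (1.0 - G_bar(t - x))

def theorem1_bounds(t, x, y):
    """Lower and upper bounds of Theorem 1, i.e. of (3)."""
    lower = G_bar(t + y) + F_bar(t + y) * M_d(t - x)
    upper = 1.0 - (M_d(t + y) - M_d(t - x)) * F_bar(x + y)
    return lower, upper

for t, x, y in [(1.0, 0.0, 0.5), (2.0, 0.5, 0.5), (4.0, 1.0, 2.0)]:
    lo, hi = theorem1_bounds(t, x, y)
    print(f"t={t} x={x} y={y}: {lo:.4f} <= {joint_tail(t, x, y):.4f} <= {hi:.4f}")
```

Note that both sides of (3) automatically lie in $[0, 1]$ because $M_{d}$ is non-negative and non-decreasing; the range condition discussed above only matters once $M_{d}$ is replaced by an approximation.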

By letting $x = 0$ (respectively, $y = 0$) in (1) and (2), we get formulae for the right-tail distribution of the marginal random variable $\gamma_{t}^{d}$ (respectively, $\delta_{t}^{d}$). Hence, we have the following corollary.

Corollary 1. (i) For any $y \geq 0$ it holds that

\begin{equation}

\Pr\left( \gamma_{t}^{d} \gt y \right) = \overline{G}(t + y) + \int_{0}^{t}{\overline{F}(t + y - s)dM_{d}(s)},

\end{equation}and

\begin{equation}

\Pr\left( \gamma_{t}^{d} \gt y \right) = 1 - M_{d}(t + y) + M_{d}(t)\overline{F}(y) + \int_{0}^{y}{M_{d}(t + y - s)dF(s)}.

\end{equation} (ii) For any $0 \leq x \leq t$ it holds that

\begin{equation}

\Pr\left( \delta_{t}^{d} \geq x \right) = \overline{G}(t) + \int_{0}^{t - x}{\overline{F}(t - s)dM_{d}(s)},

\end{equation}and

\begin{equation}

\Pr\left( \delta_{t}^{d} \geq x \right) = 1 - M_{d}(t) + M_{d}(t - x)\overline{F}(x) + \int_{0}^{x}{M_{d}(t - s)dF(s)}.

\end{equation} Using Theorem 1 (or, alternatively, Corollary 1 together with arguments similar to those in the proof of Theorem 1), we directly obtain two-sided bounds for $\Pr\left( \gamma_{t}^{d} \gt y \right)$ and $\Pr\left( \delta_{t}^{d} \geq x \right)$. Thus, we have the following corollary.

Corollary 2. (i) For any $y \geq 0$ it holds that

\begin{equation*}\overline{G}(t + y) + \overline{F}(t + y)M_{d}(t) \leq \Pr\left( \gamma_{t}^{d} \gt y \right) \leq 1 - \left\lbrack M_{d}(t + y) - M_{d}(t) \right\rbrack\overline{F}(y).\end{equation*} (ii) For any $0 \leq x \leq t$ it holds that

\begin{equation*}\overline{G}(t) + \overline{F}(t)M_{d}(t - x) \leq \Pr\left( \delta_{t}^{d} \geq x \right) \leq 1 - \left\lbrack M_{d}(t) - M_{d}(t - x) \right\rbrack\overline{F}(x).\end{equation*} Therefore, as before, known upper and lower bounds for $M_{d}$ can be used to obtain bounds for the right-tails $\Pr\left( \gamma_{t}^{d} \gt y \right)$ and $\Pr\left( \delta_{t}^{d} \geq x \right)$.
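When $F$ is not exponential, Corollaries 1 and 2 involve $M_{d}$, which rarely has a closed form, so simulated reference values can be useful. The sketch below is a minimal, unoptimised Monte Carlo estimator for a delayed renewal process. It assumes ${\overline{W}}_{t}^{d}(x,y) = \Pr(\delta_{t}^{d} \geq x, \gamma_{t}^{d} \gt y)$, uses Python's standard `random` and `bisect` modules, and treats the samplers `draw_first` (for $G$) and `draw_next` (for $F$) as hypothetical user-supplied functions.

```python
import bisect
import math
import random

def renewal_epochs(horizon, draw_first, draw_next, rng):
    """Epochs S_{d,1} < S_{d,2} < ... up to the first epoch beyond `horizon`."""
    epochs = [draw_first(rng)]                       # S_{d,1} = X_1 ~ G
    while epochs[-1] <= horizon:
        epochs.append(epochs[-1] + draw_next(rng))   # X_i ~ F for i >= 2
    return epochs

def recurrence_times(epochs, t):
    """Return (delta_t, gamma_t, N_d(t)) for one path, with S_{d,0} = 0."""
    n = bisect.bisect_right(epochs, t)               # number of epochs <= t
    last = epochs[n - 1] if n > 0 else 0.0
    return t - last, epochs[n] - t, n

def estimate_tails(t, x, y, draw_first, draw_next, n_paths=20000, seed=1):
    """Monte Carlo estimates of W_bar(x, y), Pr(gamma_t > y), Pr(delta_t >= x), M_d(t)."""
    rng = random.Random(seed)
    joint = fwd = bwd = renewals = 0
    for _ in range(n_paths):
        epochs = renewal_epochs(t, draw_first, draw_next, rng)
        delta, gamma, n = recurrence_times(epochs, t)
        joint += (delta >= x and gamma > y)
        fwd += gamma > y
        bwd += delta >= x
        renewals += n
    return joint / n_paths, fwd / n_paths, bwd / n_paths, renewals / n_paths

# Hypothetical choices: G = Exp(2), F = Exp(1), so the estimates can be compared
# with the closed forms available in the exponential case.
draw_g = lambda r: r.expovariate(2.0)
draw_f = lambda r: r.expovariate(1.0)
print(estimate_tails(2.0, 0.5, 0.5, draw_g, draw_f))
```

With other samplers (for instance `r.weibullvariate(scale, shape)` for $F$), the same estimates give empirical reference values against which the two-sided bounds of Corollary 2 can be compared, keeping in mind the Monte Carlo error of order $1/\sqrt{n}$.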

In an ordinary renewal process, Chen [7] proves that if the forward recurrence time is stochastically decreasing (increasing) in $t \geq 0$, then the inter-arrival distribution is new better than used (new worse than used). In the following result, we show that a stochastic decrease (increase) of $\gamma_{t}^{d}$ is linked with the new better than used (new worse than used) class for the distribution of the first inter-arrival time.

Proposition 3. If $\gamma_{t}^{d}$ is stochastically decreasing (increasing) in $t \geq 0$, then the distribution function $G$ of the first inter-arrival time is new better than used (new worse than used).

Proof. Karlin and Taylor ([13], p. 200) proved that

\begin{equation*}\Pr\left( \gamma_{t}^{d} \gt y \right) = \overline{G}(t + y) + \int_{0}^{t}{\Pr\left( \gamma_{t - s}^{d} \gt y \right)dG(s)}.\end{equation*} Assume that $\gamma_{t}^{d}$ is stochastically decreasing (increasing) in $t \geq 0$. Since $0 \leq t - s \leq t$ for $0 \leq s \leq t$, it holds that $\Pr\left( \gamma_{t - s}^{d} \gt y \right) \geq ( \leq ) \Pr\left( \gamma_{t}^{d} \gt y \right)$, and hence from the above equation we get

\begin{align*}

\Pr\left( \gamma_{t}^{d} \gt y \right) &\geq ( \leq )\overline{G}(t + y) + \int_{0}^{t}{\Pr\left( \gamma_{t}^{d} \gt y \right)dG(s)}\\ & = \overline{G}(t + y) + \Pr\left( \gamma_{t}^{d} \gt y \right)G(t),

\end{align*}implying that

\begin{equation}

\Pr\left( \gamma_{t}^{d} \gt y \right)\overline{G}(t) \geq ( \leq )\overline{G}(t + y).

\end{equation} Also, for $t \geq 0$ it holds that $\Pr\left( \gamma_{0}^{d} \gt y \right) \geq ( \leq ) \Pr\left( \gamma_{t}^{d} \gt y \right)$. By letting $t = 0$ in (6) it follows that $\Pr\left( \gamma_{0}^{d} \gt y \right) = \overline{G}(y)$, and thus $\overline{G}(y) \geq ( \leq ) \Pr\left( \gamma_{t}^{d} \gt y \right)$. Multiplying this inequality by $\overline{G}(t)$ and combining it with (10), we get that $\overline{G}(t + y) \leq ( \geq ) \overline{G}(t)\overline{G}(y)$, implying that the distribution function $G$ is new better than used (new worse than used).
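The conclusion of Proposition 3 is the new better than used inequality $\overline{G}(t + y) \leq \overline{G}(t)\overline{G}(y)$ or its reverse. A grid-based check such as the sketch below can help when experimenting with candidate distributions; it only tests finitely many points, so it can refute but not prove either property. The Weibull family is used as a hypothetical example: it is NBU for shape at least 1 and NWU for shape at most 1, and the function names are our own.

```python
import math

def is_nbu(survival, grid, tol=1e-12):
    """Grid check of S(t + y) <= S(t) S(y) (new better than used)."""
    return all(survival(t + y) <= survival(t) * survival(y) + tol
               for t in grid for y in grid)

def is_nwu(survival, grid, tol=1e-12):
    """Grid check of S(t + y) >= S(t) S(y) (new worse than used)."""
    return all(survival(t + y) >= survival(t) * survival(y) - tol
               for t in grid for y in grid)

def weibull_survival(shape, scale=1.0):
    # Hypothetical example family: S(u) = exp(-(u / scale) ** shape).
    return lambda u: math.exp(-((u / scale) ** shape))

grid = [0.1 * k for k in range(41)]   # 0, 0.1, ..., 4.0
for shape in (0.5, 1.0, 2.0):
    S = weibull_survival(shape)
    print(shape, is_nbu(S, grid), is_nwu(S, grid))
```

The exponential case (shape 1) passes both checks, reflecting that it is the boundary between the two classes.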

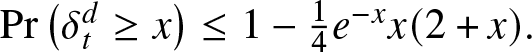

3.1. Right-tail bounds for recurrence times under aging properties

In this subsection, we give bounds for the joint right-tail ${\overline{W}}_{t}^{d}(x,y)$, as well as for $\Pr\left( \gamma_{t}^{d} \gt y \right)$ and $\Pr\left( \delta_{t}^{d} \geq x \right)$, which are based on several reliability classes of the common distribution function $F$ of $\{X_{i}, i \geq 2\}$. Our first bound for ${\overline{W}}_{t}^{d}(x,y)$ is expressed in terms of the right-tail probability of the backward recurrence time.

Corollary 3. If the distribution function $F$ is new worse than used (new better than used), then

\begin{equation}

{\overline{W}}_{t}^{d}(x,y) \geq ( \leq )\overline{G}(t + y) - \overline{F}(y)\overline{G}(t) + \overline{F}(y)\Pr\left( \delta_{t}^{d} \geq x \right),

\end{equation}and

\begin{equation}

\Pr\left( \gamma_{t}^{d} \gt y \right) \geq ( \leq ) \overline{G}(t + y) + \overline{F}(y)G(t).

\end{equation}Proof. If the distribution function $F$ is new worse than used (new better than used), then for $0 \leq s \leq t - x \leq t$ it holds that $\overline{F}(t + y - s) \geq ( \leq ) \overline{F}(y)\overline{F}(t - s)$, and hence from (1) and (8) we get

\begin{align*}

{\overline{W}}_{t}^{d}(x,y) &\geq ( \leq )\overline{G}(t + y) + \overline{F}(y)\int_{0}^{t - x}{\overline{F}(t - s)dM_{d}(s)}\\ &= \overline{G}(t + y) + \overline{F}(y)\left\lbrack \Pr\left( \delta_{t}^{d} \geq x \right) - \overline{G}(t) \right\rbrack,

\end{align*} which gives the bound in (11). By letting $x = 0$ in (11) and noting that $\Pr\left( \delta_{t}^{d} \geq 0 \right) = 1$, we immediately obtain the bound in (12).

By letting $y = 0$ in (11), we get that $\Pr\left( \delta_{t}^{d} \geq x \right) \geq ( \leq ) \overline{G}(t)$ for all $0 \leq x \leq t$, if the distribution function $F$ is new worse than used (new better than used).
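Bound (12) can be compared with a simulated value of $\Pr(\gamma_{t}^{d} \gt y)$. In the sketch below, $G$ is assumed exponential with mean 1 and $F$ Weibull with unit scale (shape 2 gives an NBU law, shape 0.5 an NWU law); these choices, the sample size, the seed, and the function names are illustrative assumptions. By (12), the estimate should not exceed the bound in the NBU case and should not fall below it in the NWU case, up to Monte Carlo error.

```python
import math
import random

def forward_recurrence(t, draw_first, draw_next, rng):
    """gamma_t^d for one simulated path of the delayed renewal process."""
    s = draw_first(rng)            # S_{d,1} = X_1 ~ G
    while s <= t:
        s += draw_next(rng)        # X_i ~ F for i >= 2
    return s - t

def tail_gamma_mc(t, y, draw_first, draw_next, n_paths=40000, seed=7):
    """Monte Carlo estimate of Pr(gamma_t^d > y)."""
    rng = random.Random(seed)
    hits = sum(forward_recurrence(t, draw_first, draw_next, rng) > y
               for _ in range(n_paths))
    return hits / n_paths

def bound_12(t, y, G_bar, F_bar):
    """Right-hand side of (12): G_bar(t + y) + F_bar(y) * G(t)."""
    return G_bar(t + y) + F_bar(y) * (1.0 - G_bar(t))

# Hypothetical choices: G = Exp(1); F = Weibull(scale 1, shape k).
G_bar = lambda u: math.exp(-u)
draw_g = lambda r: r.expovariate(1.0)
for k in (2.0, 0.5):
    F_bar = lambda u, k=k: math.exp(-(u ** k))
    draw_f = lambda r, k=k: r.weibullvariate(1.0, k)
    print(k, tail_gamma_mc(2.0, 0.5, draw_g, draw_f), bound_12(2.0, 0.5, G_bar, F_bar))
```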

In the following, we give an upper (lower) bound for ${\overline{W}}_{t}^{d}(x,y)$ in terms of the delayed renewal function $M_{d}$, under the assumption that the inter-arrival distribution $F$ is new better than used (new worse than used). An upper bound for ${\overline{W}}_{t}^{d}(x,y)$ when the distribution function $F$ is new worse than used will be given in Corollary 9 below.

Proposition 4. Let $0 \leq x \leq t$ and $y \geq 0$.

(i) If the distribution function $F$ is new better than used, then

\begin{equation*}{\overline{W}}_{t}^{d}(x,y) \leq \overline{G}(t + y) + \overline{F}(y)\left\{G(t) + \left\lbrack M_{d}(t - x) - M_{d}(t) \right\rbrack\overline{F}(x) \right\}.\end{equation*} (ii) If the distribution function $F$ is new worse than used, then

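\begin{equation*}{\overline{W}}_{t}^{d}(x,y) \geq \overline{G}(t + y) + \overline{F}(y)\left\{G(t) + M_{d}(t - x) - M_{d}(t) \right\}.\end{equation*}Proof. (i) Since $F$ is new better than used, $\overline{F}(t + y - s) \leq \overline{F}(y)\overline{F}(t - s)$ for $0 \leq s \leq t - x$. Integrating by parts (recall that $M_{d}(0) = 0$) and using the renewal equation $M_{d}(t) = G(t) + \int_{0}^{t}{M_{d}(t - s)dF(s)}$, we get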

\begin{align}

\int_{0}^{t - x}{\overline{F}(t + y - s)dM_{d}(s)} &\leq \overline{F}(y)\int_{0}^{t - x}{\overline{F}(t - s)dM_{d}(s)}\cr

&= \overline{F}(y)\left\{\overline{F}(x)M_{d}(t - x) - \int_{0}^{t - x}{f(t - s)M_{d}(s)ds} \right\}\cr

&= \overline{F}(y)\left\{\overline{F}(x)M_{d}(t - x) - \int_{x}^{t}{M_{d}(t - s)dF(s)} \right\}\cr

&= \overline{F}(y)\left\{\overline{F}(x)M_{d}(t - x) - \int_{0}^{t}{M_{d}(t - s)dF(s)} \right.\cr

&\left. \quad + \int_{0}^{x}{M_{d}(t - s)dF(s)} \right\}\cr

&= \overline{F}(y)\left\{\overline{F}(x)M_{d}(t - x) + G(t) - M_{d}(t) \right.\ \cr

&\left. \quad + \int_{0}^{x}{M_{d}(t - s)dF(s)} \right\}

\end{align} Since $t - x \leq t - s \leq t$ for $0 \leq s \leq x \leq t$ and $M_{d}$ is non-decreasing, we have $M_{d}(t - x) \leq M_{d}(t - s) \leq M_{d}(t)$. Hence, using $M_{d}(t - s) \leq M_{d}(t)$ in the last integral of (13), we get

\begin{align*}

\int_{0}^{t - x}{\overline{F}(t + y - s)dM_{d}(s)} &\leq \overline{F}(y)\left\{\overline{F}(x)M_{d}(t - x) + G(t) - M_{d}(t) + M_{d}(t)\int_{0}^{x}{dF(s)} \right\}\\ &= \overline{F}(y)\left\{\overline{F}(x)M_{d}(t - x) + G(t) - \overline{F}(x)M_{d}(t) \right\}\\ &= \overline{F}(y)\left\{G(t) + \left\lbrack M_{d}(t - x) - M_{d}(t) \right\rbrack\overline{F}(x) \right\},

\end{align*}and hence the result follows from (1).

(ii) If the distribution function $F$ is new worse than used, then the inequality in the first line of (13) is reversed, and using $M_{d}(t - s) \geq M_{d}(t - x)$ for $0 \leq s \leq x$, we get that

\begin{align*}

\int_{0}^{t - x}{\overline{F}(t + y - s)dM_{d}(s)} &\geq \overline{F}(y)\left\{\overline{F}(x)M_{d}(t - x) + G(t) - M_{d}(t)+ \int_{0}^{x}{M_{d}(t - s)dF(s)} \right\}\\ &\geq \overline{F}(y)\left\{\overline{F}(x)M_{d}(t - x) + G(t) - M_{d}(t) + M_{d}(t - x)\int_{0}^{x}{dF(s)} \right\}\\ &= \overline{F}(y)\left\{G(t) + M_{d}(t - x) - M_{d}(t) \right\},

\end{align*}and thus, again from (1) we obtain the required lower bound.
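Proposition 4 can be explored by simulation as well, estimating $M_{d}(t - x)$ and $M_{d}(t)$ from the renewal counts on the same paths used for ${\overline{W}}_{t}^{d}(x,y)$. This is a rough sketch under hypothetical choices ($G$ exponential with mean 1; $F$ Weibull with unit scale, shape 2 for NBU and shape 0.5 for NWU); because the bounds are built from estimated renewal functions, comparisons are only meaningful up to Monte Carlo error. The function names are our own.

```python
import bisect
import math
import random

def path_stats(t, x, draw_first, draw_next, rng):
    """One path: (delta_t, gamma_t, N_d(t - x), N_d(t)), with S_{d,0} = 0."""
    epochs = [draw_first(rng)]                        # S_{d,1} = X_1 ~ G
    while epochs[-1] <= t:
        epochs.append(epochs[-1] + draw_next(rng))    # X_i ~ F for i >= 2
    n_t = bisect.bisect_right(epochs, t)
    n_tx = bisect.bisect_right(epochs, t - x)
    last = epochs[n_t - 1] if n_t > 0 else 0.0
    return t - last, epochs[n_t] - t, n_tx, n_t

def prop4_bounds(t, x, y, G_bar, F_bar, draw_first, draw_next, n_paths=40000, seed=3):
    """Monte Carlo W_bar(x, y) with the bounds of Proposition 4, where M_d(t - x)
    and M_d(t) are replaced by their Monte Carlo estimates."""
    rng = random.Random(seed)
    joint = sum_tx = sum_t = 0
    for _ in range(n_paths):
        delta, gamma, n_tx, n_t = path_stats(t, x, draw_first, draw_next, rng)
        joint += (delta >= x and gamma > y)
        sum_tx += n_tx
        sum_t += n_t
    w_bar = joint / n_paths
    diff = (sum_tx - sum_t) / n_paths                 # estimate of M_d(t - x) - M_d(t)
    G_t = 1.0 - G_bar(t)
    nbu_upper = G_bar(t + y) + F_bar(y) * (G_t + diff * F_bar(x))   # Proposition 4 (i)
    nwu_lower = G_bar(t + y) + F_bar(y) * (G_t + diff)              # Proposition 4 (ii)
    return w_bar, nbu_upper, nwu_lower

G_bar = lambda u: math.exp(-u)                # hypothetical G = Exp(1)
draw_g = lambda r: r.expovariate(1.0)
# F = Weibull(scale 1, shape 2) is NBU, so the upper bound (i) is the relevant one.
print(prop4_bounds(2.0, 0.5, 0.5, G_bar, lambda u: math.exp(-(u ** 2.0)),
                   draw_g, lambda r: r.weibullvariate(1.0, 2.0)))
```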

By letting $x = 0$ in the bounds of Proposition 4, we recover the result in (12). Also, by letting $y = 0$ in the bounds of Proposition 4, we get the following corollary.

Corollary 4. For any $0 \leq x \leq t$, if the distribution function $F$ is new better than used, then

\begin{equation*}\Pr\left( \delta_{t}^{d} \geq x \right) \leq 1 - \left\lbrack M_{d}(t) - M_{d}(t - x) \right\rbrack\overline{F}(x),\end{equation*} and if $F$ is new worse than used, then

\begin{equation*}\Pr\left( \delta_{t}^{d} \geq x \right) \geq 1 - \left\lbrack M_{d}(t) - M_{d}(t - x) \right\rbrack.\end{equation*} Well-known bounds for the difference $M_{d}(t) - M_{d}(t - x)$ (see, e.g., Chadjiconstantinidis [4]) can be used to obtain bounds for ${\overline{W}}_{t}^{d}(x,y)$ via Proposition 4, and bounds for $\Pr\left( \delta_{t}^{d} \geq x \right)$ via Corollary 4. For example, if the distribution function $F$ has a decreasing failure rate, Chadjiconstantinidis [5] proved that

\begin{equation*}G(t) + \frac{\mu_{G}F(t)G_{e}(t)}{\mu F_{e}(t)} \leq M_{d}(t) \leq \frac{G(t)}{\overline{F}(t)},\end{equation*}from which we get that

\begin{equation*}M_{d}(t - x) - M_{d}(t) \geq G(t - x) + \frac{\mu_{G}F(t - x)G_{e}(t - x)}{\mu F_{e}(t - x)} - \frac{G(t)}{\overline{F}(t)},~0 \leq x \lt t.\end{equation*} If the distribution function $F$ has a decreasing failure rate, it is also new worse than used, and hence from Proposition 4(ii) we get that

\begin{equation*}{\overline{W}}_{t}^{d}(x,y) \geq \overline{G}(t + y) + \overline{F}(y)\left\{G(t) + G(t - x) + \frac{\mu_{G}F(t - x)G_{e}(t - x)}{\mu F_{e}(t - x)} - \frac{G(t)}{\overline{F}(t)} \right\},~0 \leq x \lt t.\end{equation*} Also, from Corollary 4 we obtain that if the distribution function $F$ has a decreasing failure rate, then

\begin{equation*}\Pr\left( \delta_{t}^{d} \geq x \right) \geq 1 + G(t - x) + \frac{\mu_{G}F(t - x)G_{e}(t - x)}{\mu F_{e}(t - x)} - \frac{G(t)}{\overline{F}(t)},~0 \leq x \lt t.\end{equation*} A two-sided bound for ${\overline{W}}_{t}^{d}(x,y)$ when the distribution function $F$ has a decreasing failure rate is given in the next proposition. Note that the lower bound in (14) below differs from the one obtained above.

Let ${\overline{F}}_{t}(y) = \Pr\left( X_{t} \gt y \right)$ denote the right-tail of the residual lifetime random variable $X_{t} = \left( X - t \mid X \gt t \right)$, where $X$ has distribution function $F$.
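The proof of Proposition 5 rests on the monotonicity of ${\overline{F}}_{t}(y) = \overline{F}(t + y)/\overline{F}(t)$ in $t$: non-decreasing for DFR and non-increasing for IFR distributions. The short sketch below evaluates this ratio for two Weibull survival functions (a hypothetical example family; shape 0.5 is DFR and shape 2 is IFR) and checks the direction of monotonicity on a grid. The helper names are our own.

```python
import math

def residual_survival(F_bar, t, y):
    """F_bar_t(y) = Pr(X - t > y | X > t) = F_bar(t + y) / F_bar(t)."""
    return F_bar(t + y) / F_bar(t)

def weibull_bar(shape, scale=1.0):
    # Hypothetical example family: exp(-(u / scale) ** shape).
    return lambda u: math.exp(-((u / scale) ** shape))

def is_monotone(values, increasing=True):
    pairs = list(zip(values, values[1:]))
    if increasing:
        return all(a <= b for a, b in pairs)
    return all(a >= b for a, b in pairs)

ts = [0.25 * k for k in range(17)]   # t = 0, 0.25, ..., 4
dfr_curve = [residual_survival(weibull_bar(0.5), t, 1.0) for t in ts]  # shape < 1: DFR
ifr_curve = [residual_survival(weibull_bar(2.0), t, 1.0) for t in ts]  # shape > 1: IFR
print(dfr_curve[0], dfr_curve[-1], ifr_curve[0], ifr_curve[-1])
```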

Proposition 5. (i) If the distribution function $F$ has a decreasing failure rate, then for any $0 \leq x \leq t$ and $y \geq 0$ it holds that

\begin{equation}

\overline{G}(t + y) + {\overline{F}}_{x}(y)\left\lbrack \Pr\left( \delta_{t}^{d}\geq x \right) - \overline{G}(t) \right\rbrack \leq {\overline{W}}_{t}^{d}(x,y)\leq \overline{G}(t + y) + {\overline{F}}_{t}(y)\left\lbrack \Pr\left( \delta_{t}^{d} \geq x \right) - \overline{G}(t) \right\rbrack

\end{equation} (ii) If the distribution function $F$ has an increasing failure rate (IFR), then for any $0 \leq x \leq t$ and $y \geq 0$ it holds that

\begin{equation}

\overline{G}(t + y) + {\overline{F}}_{t}(y)\left\lbrack \Pr\left( \delta_{t}^{d} \geq x \right) - \overline{G}(t) \right\rbrack \leq {\overline{W}}_{t}^{d}(x,y)\leq \overline{G}(t + y) + {\overline{F}}_{x}(y)\left\lbrack \Pr\left( \delta_{t}^{d} \geq x \right) - \overline{G}(t) \right\rbrack.

\end{equation}Proof. (i) The right-tail of the residual lifetime is given by ${\overline{F}}_{t}(y) = \frac{\overline{F}(t + y)}{\overline{F}(t)}$, and thus the tail $\overline{F}(t + y - s)$ can be written as
\begin{equation*}\overline{F}(t + y - s) = {\overline{F}}_{t - s}(y)\overline{F}(t - s),\end{equation*}
and hence from Proposition 1 we get that ${\overline{W}}_{t}^{d}(x,y)$ also satisfies the equation

\begin{equation}

{\overline{W}}_{t}^{d}(x,y) = \overline{G}(t + y) + \int_{0}^{t - x}{{\overline{F}}_{t - s}(y)\overline{F}(t - s)dM_{d}(s)}.

\end{equation} For $0 \leq s \leq t - x$ we also have $x \leq t - s \leq t$, and since the survival function ${\overline{F}}_{t}(y)$ is non-decreasing in $t \geq 0$ when $F$ has a decreasing failure rate, it holds that
\begin{equation*}{\overline{F}}_{x}(y) \leq {\overline{F}}_{t - s}(y) \leq {\overline{F}}_{t}(y), \quad 0 \leq s \leq t - x.\end{equation*}
Therefore, we have that

\begin{align*}

\int_{0}^{t - x}{{\overline{F}}_{x}(y)\overline{F}(t - s)dM_{d}(s)} &\leq \int_{0}^{t - x}{{\overline{F}}_{t - s}(y)\overline{F}(t - s)dM_{d}(s)}\\ & \leq \int_{0}^{t - x}{{\overline{F}}_{t}(y)\overline{F}(t - s)dM_{d}(s)},

\end{align*}and using (8) the above relationship yields

\begin{align*}

{\overline{F}}_{x}(y)\left\lbrack \Pr\left( \delta_{t}^{d} \geq x \right) - \overline{G}(t) \right\rbrack &\leq \int_{0}^{t - x}{{\overline{F}}_{t - s}(y)\overline{F}(t - s)dM_{d}(s)}\\ &\leq {\overline{F}}_{t}(y)\left\lbrack \Pr\left( \delta_{t}^{d} \geq x \right) - \overline{G}(t) \right\rbrack

\end{align*}

Now, the two-sided bound in (14) follows immediately from the last relationship and (16).

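(ii) The proof of (15) is similar to that of (i), with the inequalities reversed, since ${\overline{F}}_{t}(y)$ is a non-increasing function of $t \geq 0$ when $F$ is increasing failure rate (IFR).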
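The key step in both (i) and (ii) is the monotonicity of ${\overline{F}}_{t}(y)$ in $t$. The Python sketch below checks it numerically on a grid for two Weibull laws chosen only for illustration (shape $0.5$, which is DFR, and shape $2$, which is IFR); the helper names are hypothetical and not part of the paper.

```python
import math
from functools import partial


def weibull_sf(x: float, shape: float, scale: float = 1.0) -> float:
    """Weibull survival function exp(-(x / scale) ** shape)."""
    return math.exp(-((x / scale) ** shape))


def residual_sf(t: float, y: float, sf) -> float:
    """Residual-life survival F̄_t(y) = F̄(t + y) / F̄(t)."""
    return sf(t + y) / sf(t)


def monotone_in_t(y: float, sf, ts, non_decreasing: bool, tol: float = 1e-12) -> bool:
    """Check whether t -> F̄_t(y) is non-decreasing (or non-increasing) on the grid ts."""
    vals = [residual_sf(t, y, sf) for t in ts]
    if non_decreasing:
        return all(a <= b + tol for a, b in zip(vals, vals[1:]))
    return all(a + tol >= b for a, b in zip(vals, vals[1:]))


ts = [0.1 * k for k in range(51)]          # grid t = 0.0, 0.1, ..., 5.0
dfr_sf = partial(weibull_sf, shape=0.5)    # shape < 1: decreasing failure rate
ifr_sf = partial(weibull_sf, shape=2.0)    # shape > 1: increasing failure rate

print(monotone_in_t(0.7, dfr_sf, ts, non_decreasing=True))   # True, the DFR case in (i)
print(monotone_in_t(0.7, ifr_sf, ts, non_decreasing=False))  # True, the IFR case in (ii)
```

For these Weibull laws the exponent $(t + y)^{k} - t^{k}$ is decreasing in $t$ for $k < 1$ and increasing for $k > 1$, which is exactly the monotonicity the grid check reports.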

By letting $x = 0$ in (14) and (15), and noting that $\Pr(\delta_{t}^{d} \geq 0) = 1$ and ${\overline{F}}_{0}(y) = \overline{F}(y)$, we immediately obtain the following two-sided bounds for the right-tail probability $\Pr(\gamma_{t}^{d} \gt y)$.

Corollary 5. If the distribution function $F$ is decreasing (increasing) failure rate, DFR (IFR), then for any $y \geq 0$ it holds that

\begin{equation*}\overline{G}(t + y) + \overline{F}(y)G(t) \leq ( \geq )\Pr(\gamma_{t}^{d} \gt y) \leq ( \geq )\overline{G}(t + y) + {\overline{F}}_{t}(y)G(t).\end{equation*}

The bounds in Proposition 5 and Corollary 5 are exact for $x = 0$ and $y = 0$, respectively, implying that for small values of $x$ and $y$ these bounds are good approximations for ${\overline{W}}_{t}^{d}(x,y)$ and $\Pr(\gamma_{t}^{d} \gt y)$.
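A Monte Carlo sanity check of Corollary 5 in the DFR case can be sketched as follows. It assumes, purely for illustration, $G = \text{Exp}(1)$ and $F$ Weibull with scale $1$ and shape $0.5$ (a DFR law); these distributions, the seed, the sample size and the helper names are hypothetical choices, not the paper's.

```python
import math
import random


def excess_life(t, draw_first, draw_next, rng):
    """Simulate gamma_t^d = S_{d,N(t)+1} - t for one path of the delayed renewal process."""
    s = draw_first(rng)            # S_{d,1} = X_1 ~ G
    while s <= t:
        s += draw_next(rng)        # X_i ~ F for i >= 2
    return s - t


# Assumed example: G = Exp(1), F = Weibull(scale 1, shape 0.5), which is DFR.
def g_sf(x):
    return math.exp(-x)


def f_sf(x):
    return math.exp(-math.sqrt(x))


def draw_g(rng):
    return rng.expovariate(1.0)


def draw_f(rng):
    return rng.weibullvariate(1.0, 0.5)   # arguments are (scale, shape)


def corollary5_bounds(t, y):
    """DFR case of Corollary 5: lower and upper bounds for Pr(gamma_t^d > y)."""
    G_t = 1.0 - g_sf(t)
    lower = g_sf(t + y) + f_sf(y) * G_t
    upper = g_sf(t + y) + f_sf(t + y) / f_sf(t) * G_t
    return lower, upper


t, y, n = 2.0, 0.5, 100_000
rng = random.Random(12345)
estimate = sum(excess_life(t, draw_g, draw_f, rng) > y for _ in range(n)) / n
lower, upper = corollary5_bounds(t, y)
print(f"{lower:.4f} <= {estimate:.4f} <= {upper:.4f}")
```

The estimate carries sampling error of order $n^{-1/2}$, so the check is only meaningful up to that tolerance.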

Another two-sided bound for ${\overline{W}}_{t}^{d}(x,y)$ when the distribution function $F$ is IFR is given in Corollary 15 below.

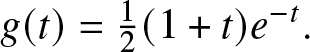

Theorem 2. If $A(y) = 1 - \overline{A}(y)$ is a distribution function on $(0,\infty)$ satisfying

\begin{equation}
{\overline{F}}_{t}(y) \leq \overline{A}(y) \quad \text{for all } t \geq 0 \text{ and } y \geq 0,
\end{equation}

then for any $0 \leq x \leq t$ and $y \geq 0$,

\begin{equation*}{\overline{W}}_{t}^{d}(x,y) \leq \left\{\frac{\overline{G}(t + y)}{\overline{G}(t)} + \overline{A}(y) \right\} \Pr(\delta_{t}^{d} \geq x).\end{equation*}

Proof. Since $0 \leq s \leq t - x \leq t$ implies $t - s \geq 0$, it follows from (17) that ${\overline{F}}_{t - s}(y) \leq \overline{A}(y)$. Therefore, from (16) and (8) we get

\begin{align*}

\Pr(\gamma_{t}^{d} \gt y \mid \delta_{t}^{d} \geq x)& = \frac{{\overline{W}}_{t}^{d}(x,y)}{\Pr(\delta_{t}^{d} \geq x)} = \frac{\overline{G}(t + y) + \int_{0}^{t - x}{{\overline{F}}_{t - s}(y)\overline{F}(t - s)dM_{d}(s)}}{\overline{G}(t) + \int_{0}^{t - x}{\overline{F}(t - s)dM_{d}(s)}}\\ & \leq \frac{\overline{G}(t + y) + \overline{A}(y)\int_{0}^{t - x}{\overline{F}(t - s)dM_{d}(s)}}{\overline{G}(t) + \int_{0}^{t - x}{\overline{F}(t - s)dM_{d}(s)}}\\ & \leq \frac{\overline{G}(t + y)}{\overline{G}(t)} + \frac{\overline{A}(y)\int_{0}^{t - x}{\overline{F}(t - s)dM_{d}(s)}}{\int_{0}^{t - x}{\overline{F}(t - s)dM_{d}(s)}}\\ &= \frac{\overline{G}(t + y)}{\overline{G}(t)} + \overline{A}(y),

\end{align*}

and hence the result follows.
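For completeness, the second inequality in the above chain is the elementary bound below, valid for $a, b \geq 0$ and $c, d > 0$. It is applied with $a = \overline{G}(t + y)$, $b = \overline{A}(y)I$, $c = \overline{G}(t)$ and $d = I$, where $I = \int_{0}^{t - x}{\overline{F}(t - s)dM_{d}(s)}$; when $I = 0$ the ratio reduces directly to $\overline{G}(t + y)/\overline{G}(t)$ and the bound is immediate.

\begin{equation*}
\frac{a + b}{c + d} = \frac{a}{c + d} + \frac{b}{c + d} \leq \frac{a}{c} + \frac{b}{d}.
\end{equation*}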

In the following, we give some applications of Theorem 2.

Corollary 6. If the distribution function $F$ is IFR with failure rate $\lambda(t)$, then for any $0 \leq x \leq t$ and $y \geq 0$,

\begin{equation*}{\overline{W}}_{t}^{d}(x,y) \leq \left\{\frac{\overline{G}(t + y)}{\overline{G}(t)} + e^{- \lambda(0)y} \right\} \Pr(\delta_{t}^{d} \geq x).\end{equation*}

Proof. Under the assumption of IFR inter-arrival times, it holds that $\lambda(t) \geq \lambda(0)$ for $t \geq 0$, and hence

\begin{equation*}{\overline{F}}_{t}(y) = \frac{\overline{F}(t + y)}{\overline{F}(t)} = e^{- \int_{t}^{t + y}{\lambda(s)ds}}\leq e^{- \int_{t}^{t + y}{\lambda(0)ds}} = e^{- \lambda(0)y}.\end{equation*}

Thus, condition (17) of Theorem 2 holds with $\overline{A}(y) = e^{- \lambda(0)y}$, and the result follows.
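As a concrete illustration (our own, not taken from the paper), a linear failure rate $\lambda(s) = a + bs$ with $a, b > 0$ is IFR with $\lambda(0) = a$, and ${\overline{F}}_{t}(y) = e^{-(a + bt)y - by^{2}/2} \leq e^{-ay}$. The sketch below checks condition (17) with $\overline{A}(y) = e^{-\lambda(0)y}$ on a grid; the parameter values are hypothetical.

```python
import math

A, B = 0.5, 0.3   # hypothetical parameters of the failure rate lambda(s) = A + B * s


def lfr_sf(x):
    """Survival function of the linear failure rate law: exp(-(A x + B x^2 / 2))."""
    return math.exp(-(A * x + 0.5 * B * x * x))


def residual_sf(t, y):
    """Residual-life survival F̄_t(y) = F̄(t + y) / F̄(t)."""
    return lfr_sf(t + y) / lfr_sf(t)


def abar_cor6(y):
    """Ā(y) = exp(-lambda(0) y) from Corollary 6, with lambda(0) = A."""
    return math.exp(-A * y)


grid = [0.25 * k for k in range(41)]   # t, y in {0, 0.25, ..., 10}
condition_17 = all(
    residual_sf(t, y) <= abar_cor6(y) * (1 + 1e-12)
    for t in grid
    for y in grid
)
print(condition_17)  # True
```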

In Corollary 3, we derived an upper bound for the joint right-tail ${\overline{W}}_{t}^{d}(x,y)$ under the assumption that the distribution function $F$ is new better than used (NBU). Using Theorem 2, under the same assumption, we give another upper bound.

Corollary 7. If the distribution function $F$ is new better than used, then for any $0 \leq x \leq t$ and $y \geq 0$,

\begin{equation*}{\overline{W}}_{t}^{d}(x,y) \leq \left\{\frac{\overline{G}(t + y)}{\overline{G}(t)} + \overline{F}(y) \right\} \Pr(\delta_{t}^{d} \geq x).\end{equation*}

Proof. If the distribution function $F$ is new better than used, then $\overline{F}(t + y) \leq \overline{F}(t)\overline{F}(y)$ for all $t, y \geq 0$, so that

\begin{equation*}{\overline{F}}_{t}(y) = \frac{\overline{F}(t + y)}{\overline{F}(t)} \leq \frac{\overline{F}(t)\overline{F}(y)}{\overline{F}(t)} = \overline{F}(y),\end{equation*}

and thus condition (17) of Theorem 2 holds with $\overline{A}(y) = \overline{F}(y)$.
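When $F$ is IFR it is also NBU, and $\overline{F}(y) = e^{-\int_{0}^{y}\lambda(s)ds} \leq e^{-\lambda(0)y}$, so for IFR $F$ the factor in Corollary 7 is never larger than the one in Corollary 6. The sketch below checks the NBU inequality $\overline{F}(t + y) \leq \overline{F}(t)\overline{F}(y)$ and this ordering on a grid, again for a hypothetical linear failure rate $\lambda(s) = a + bs$.

```python
import math

A, B = 0.5, 0.3   # hypothetical parameters of lambda(s) = A + B * s


def lfr_sf(x):
    """Survival function exp(-(A x + B x^2 / 2)) of the linear failure rate law."""
    return math.exp(-(A * x + 0.5 * B * x * x))


grid = [0.25 * k for k in range(41)]   # {0, 0.25, ..., 10}

# NBU property: F̄(t + y) <= F̄(t) F̄(y) for all t, y >= 0.
nbu = all(
    lfr_sf(t + y) <= lfr_sf(t) * lfr_sf(y) * (1 + 1e-12)
    for t in grid
    for y in grid
)

# Corollary 7 factor F̄(y) versus Corollary 6 factor exp(-lambda(0) y).
tighter = all(lfr_sf(y) <= math.exp(-A * y) for y in grid)
print(nbu, tighter)  # True True
```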

By letting $x = 0$ in Corollaries 6 and 7, so that $\Pr(\delta_{t}^{d} \geq 0) = 1$, we immediately obtain the following result.

Corollary 8. (i) If the distribution function $F$ is IFR, then for any $y \geq 0$,

\begin{equation*}\Pr(\gamma_{t}^{d} \gt y) \leq \frac{\overline{G}(t + y)}{\overline{G}(t)} + e^{- \lambda(0)y}.\end{equation*}

(ii) If the distribution function $F$ is new better than used, then for any $y \geq 0$,

\begin{equation*}\Pr(\gamma_{t}^{d} \gt y) \leq \frac{\overline{G}(t + y)}{\overline{G}(t)} + \overline{F}(y).\end{equation*}
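A Monte Carlo sketch of Corollary 8, under the assumed choices $G = \text{Exp}(1)$ and a linear failure rate $F$ with $\lambda(s) = a + bs$ (which is IFR, hence NBU, so both parts apply). Inter-arrival times from $F$ are drawn by inverting the cumulative hazard, $u = (-a + \sqrt{a^{2} + 2bE})/b$ with $E \sim \text{Exp}(1)$. The parameters, seed and sample size are arbitrary illustrative values.

```python
import math
import random

A, B = 0.5, 0.3   # hypothetical parameters of lambda(s) = A + B * s


def lfr_sf(x):
    """Survival function exp(-(A x + B x^2 / 2)) of F."""
    return math.exp(-(A * x + 0.5 * B * x * x))


def draw_lfr(rng):
    """Solve A u + B u^2 / 2 = E for u, with E ~ Exp(1) (inverse cumulative hazard)."""
    e = rng.expovariate(1.0)
    return (-A + math.sqrt(A * A + 2.0 * B * e)) / B


def excess_life(t, rng):
    """One draw of gamma_t^d with X_1 ~ G = Exp(1) and X_i ~ F for i >= 2."""
    s = rng.expovariate(1.0)
    while s <= t:
        s += draw_lfr(rng)
    return s - t


t, y, n = 3.0, 1.0, 100_000
rng = random.Random(2024)
estimate = sum(excess_life(t, rng) > y for _ in range(n)) / n

g_ratio = math.exp(-(t + y)) / math.exp(-t)   # Ḡ(t + y) / Ḡ(t) for G = Exp(1)
bound_i = g_ratio + math.exp(-A * y)          # Corollary 8 (i), lambda(0) = A
bound_ii = g_ratio + lfr_sf(y)                # Corollary 8 (ii)
print(f"estimate={estimate:.4f}, bound (ii)={bound_ii:.4f}, bound (i)={bound_i:.4f}")
```

In this example the NBU bound (ii) is the sharper of the two, consistent with $\overline{F}(y) \leq e^{-\lambda(0)y}$ for IFR $F$.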

Now, in the following proposition, we give some other simple but useful representations for ${\overline{W}}_{t}^{d}(x,y)$.

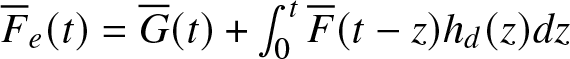

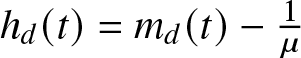

Proposition 6. For any $0 \leq x \leq t$ and $y \geq 0$, the joint right-tail ${\overline{W}}_{t}^{d}(x,y)$ admits the following representations:

\begin{equation}

{\overline{W}}_{t}^{d}(x,y) = 1 - \int_{t - x}^{t + y}{\overline{F}(t + y - s)}dM_{d}(s),

\end{equation}

\begin{equation}

{\overline{W}}_{t}^{d}(x,y) = {\overline{F}}_{e}(x + y) - \int_{0}^{x + y}\overline{F}(s)h_{d}(t + y - s)ds,

\end{equation}

and

\begin{equation}
\begin{split}
{\overline{W}}_{t}^{d}(x,y) = {} & \overline{G}(t + y) + \mu\left\lbrack {\overline{F}}_{e}(x + y)m_{d}(t - x) - {\overline{F}}_{e}(t + y)g(0) \right\rbrack\\
& - \mu\int_{0}^{t - x}{{\overline{F}}_{e}(t + y - s)dm_{d}(s)},
\end{split}
\end{equation}

where $h_{d}(t) = m_{d}(t) - \frac{1}{\mu}$.
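Representation (19) is easy to test in the equilibrium case $G = F_{e}$, where it is a standard renewal-theory fact that $m_{d}(t) \equiv 1/\mu$, so $h_{d} \equiv 0$ and (19) reduces to ${\overline{W}}_{t}^{d}(x,y) = {\overline{F}}_{e}(x + y)$. The Monte Carlo sketch below assumes $F = \text{Gamma}(2,1)$, for which $\mu = 2$, ${\overline{F}}_{e}(u) = (u + 2)e^{-u}/2$, and $F_{e}$ is an equal mixture of $\text{Exp}(1)$ and $\text{Gamma}(2,1)$; the age is $\delta_{t}^{d} = t - S_{d,N(t)}$ with $S_{d,0} = 0$. All numerical choices are illustrative.

```python
import math
import random


def draw_f(rng):
    """F = Gamma(shape 2, scale 1), mean mu = 2."""
    return rng.gammavariate(2.0, 1.0)


def draw_fe(rng):
    """Equilibrium law F_e with density (1 + u) e^{-u} / 2 = 0.5 Exp(1) + 0.5 Gamma(2, 1)."""
    return rng.expovariate(1.0) if rng.random() < 0.5 else draw_f(rng)


def age_and_excess(t, rng):
    """Return (delta_t^d, gamma_t^d) for one path with X_1 ~ F_e and X_i ~ F."""
    last = 0.0                      # S_{d,0} = 0
    s = draw_fe(rng)
    while s <= t:
        last, s = s, s + draw_f(rng)
    return t - last, s - t


def fe_sf(u):
    """Equilibrium survival function F̄_e(u) = (u + 2) e^{-u} / 2."""
    return (u + 2.0) * math.exp(-u) / 2.0


t, x, y, n = 5.0, 1.0, 0.5, 100_000
rng = random.Random(7)
hits = 0
for _ in range(n):
    delta, gamma = age_and_excess(t, rng)
    if delta >= x and gamma > y:
        hits += 1
estimate = hits / n
print(f"MC estimate {estimate:.4f} vs F_e-bar(x + y) = {fe_sf(x + y):.4f}")
```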

Proof. Integrating by parts yields

\begin{align*}

\int_{0}^{x + y}{M_{d}(t + y - s)dF(s)} &= - \int_{0}^{x + y}{M_{d}(t + y - s){\overline{F}}^{'}(s)ds}\\ &= - M_{d}(t - x)\overline{F}(x + y) + M_{d}(t + y)\\ &- \int_{0}^{x + y}{m_{d}(t + y - s)}\overline{F}(s)ds\\ &= M_{d}(t + y) - M_{d}(t - x)\overline{F}(x + y)\\ &- \int_{t - x}^{t + y}{\overline{F}(t + y - s)}dM_{d}(s),

\end{align*}

and now the result in (18) follows immediately from (2).
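The integration by parts above holds for any smooth non-decreasing $M_{d}$ with density $m_{d}$, so it can be checked numerically with stand-in functions. The sketch assumes, purely for illustration, $\overline{F}(s) = (1 + s)e^{-s}$ (so $dF(s) = se^{-s}ds$) and the hypothetical $M(s) = s/2 + (1 - e^{-2s})/4$, and compares both sides using composite Simpson quadrature.

```python
import math


def simpson(f, a, b, n=2000):
    """Composite Simpson rule on [a, b] with an even number n of sub-intervals."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = f(a) + f(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * f(a + k * h)
    return total * h / 3


def f_sf(s):   # F̄(s) = (1 + s) e^{-s}, i.e. Gamma(2, 1)
    return (1.0 + s) * math.exp(-s)


def f_pdf(s):  # dF(s)/ds = s e^{-s}
    return s * math.exp(-s)


def M(s):      # hypothetical stand-in for the renewal function M_d
    return 0.5 * s + 0.25 * (1.0 - math.exp(-2.0 * s))


def m(s):      # its density m_d = M_d'
    return 0.5 + 0.5 * math.exp(-2.0 * s)


t, x, y = 3.0, 1.0, 0.5
lhs = simpson(lambda s: M(t + y - s) * f_pdf(s), 0.0, x + y)
rhs = (M(t + y) - M(t - x) * f_sf(x + y)
       - simpson(lambda s: f_sf(t + y - s) * m(s), t - x, t + y))
print(abs(lhs - rhs) < 1e-9)  # True
```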

Also, substituting $s \mapsto t + y - s$ in (18) and writing $m_{d}(u) = h_{d}(u) + \frac{1}{\mu}$, we can rewrite (18) as

\begin{align*}

{\overline{W}}_{t}^{d}(x,y) &= 1 - \int_{0}^{x + y}{\overline{F}(s)}m_{d}(t + y - s)ds\\ & = 1 - \int_{0}^{x + y}{\overline{F}(s)}\left\{h_{d}(t + y - s) + \frac{1}{\mu} \right\} ds\\ & = 1 - F_{e}(x + y) - \int_{0}^{x + y}\overline{F}(s)h_{d}(t + y - s)ds,

\end{align*}

which yields (19).

Finally, from (3), we obtain

\begin{align*}

{\overline{W}}_{t}^{d}(x,y) &= \overline{G}(t + y) + \int_{0}^{t - x}{\overline{F}(t + y - s)m_{d}(s)ds} = \overline{G}(t + y) + \mu\int_{0}^{t - x}{F_{e}^{'}(t + y - s)m_{d}(s)ds}\\ & = \overline{G}(t + y) + \mu\left\lbrack {\overline{F}}_{e}(x + y)m_{d}(t - x) - {\overline{F}}_{e}(t + y)m_{d}(0) \right\rbrack\\ & - \mu\int_{0}^{t - x}{{\overline{F}}_{e}(t + y - s)m_{d}^{'}(s)ds},

\end{align*}

\begin{align*}