Making decisions regarding risk is an integral part of clinical mental health work (Flewett 2010). A plethora of tools and approaches (Quinsey 1998; Otto 2000; Douglas 2010a) now exists to assist clinical judgement when undertaking this task in the context of risk of violence. The tremendous empirical advances in the field over the past two decades (Douglas 2010a) have brought a welcome increase in emphasis on systematic, structured approaches. In tandem with these developments, however, the role of ‘clinical intuition’ has been marginalised or even denigrated (Quinsey 1998). This article will argue that the intuitive mode of thought has considerable value for clinicians charged with violence risk assessment, provided it is applied in a thoughtful and systematic way. It will outline practical guidelines for such application, derived from the work of the cognitive psychologist Robin Hogarth.

Contemporary models of intuition

Psychologists have long distinguished between two modes of thinking, referred to here as ‘deliberative thinking’ and ‘intuitive thinking’ (intuition). The main differences between the two are shown in Table 1. Betsch (2008: p. 4) defines intuition as:

‘a process of thinking. The input to this process is mostly provided by knowledge stored in long-term memory that has been primarily acquired via associative learning. The input is processed automatically and without conscious awareness. The output of the process is a feeling that can serve as a basis for judgement and decisions’ [my italics].

TABLE 1 Characteristics of ‘intuitive’ and ‘deliberative’ thinking processes

This definition does not explicitly include instinctive behaviours, although other theorists accept that intuitive responses can involve ‘a mix of innate and learned behaviour’ (Hogarth 2008).

Central to this definition is the concept of a long-term knowledge store, built from a person’s experience of exposure to a variety of stimuli and their corresponding responses and outcomes. These associations are acquired experientially via observational learning and instrumental and classical conditioning (Epstein 2008). Such learning rests on two corresponding principles (Hogarth 2008):

• observation of the frequencies of events and objects in the environment and the extent to which they co-vary;

• the principle of reinforcement: that is, the positive (reward) or negative (cost) value of environmental phenomena.

Importantly, the output of the intuitive system is a feeling rather than a verbal proposition. Often this will involve at least a ‘faint whisper’ of emotion (Slovic 2004), indicating a specific quality such as goodness, badness or riskiness. However, less emotionally charged feelings, such as the ‘feeling of knowing’ (Liu 2007), can also occur. Such feelings are believed to help people navigate quickly and efficiently through the complex and sometimes risky environments of everyday life. They have been given a variety of names, such as ‘vibes’, defined as ‘vague feelings such as disquietude and agitation’ (Epstein 2008).

An influential neurobiological model of decision-making, the ‘somatic marker hypothesis’ (Damasio 1994), similarly proposes that feelings arising from a long-term knowledge store help to guide behaviour. This model posits a knowledge store that links new stimuli to ‘somatic markers’ (internal mental representations marked by positive or negative feelings and linked to distinctive ‘somatic’ visceral states), which guide decisions advantageously and mostly unconsciously.

Research in cognitive psychology has confirmed that, depending on the predispositions of the person and the characteristics of the task at hand, some decisions are made following a predominantly effortful thinking process and others tend to be reached with more reliance on rapid, emotionally coloured ‘gut feelings’ (Deutsch 2008).

Most decision-making theorists now accept that optimal decision-making requires an integration of both deliberative and intuitive modes (Baumeister 2007; Plessner 2008). The two systems appear to operate in parallel and to depend on each other for guidance (Slovic 2004), with the relative contribution of each varying according to the situational demands and the person making the decision (Epstein 2008). In practice, we can have reflective thoughts about our feelings and intuitive feelings about our thoughts, so that the two systems interact both simultaneously and sequentially (Epstein 2008). Various terms have been coined for the proper integration of both modes of thought to produce truly ‘rational’ decision-making, including ‘affective rationality’ (Peters 2006) and ‘the dance of affect and reason’ (Finucane 2003).

Problems with intuition in risk assessment

Progress in violence risk assessment in clinical practice reflects the realisation that both modes of thinking have potential value. Methodologies were previously limited either to purely unstructured approaches, which gave clinical intuition free rein (Monahan 1984), or to hyperrational, actuarially based models, which sought to minimise the role of intuitive clinical judgements (Quinsey 1998). However, there is a third way, generally called ‘structured professional judgement’, which attempts to integrate the best features of each approach and has been shown to be both reliable and valid (Douglas 2010b).

The emerging consensus in the decision-making literature is that integrated models incorporating both deliberative and intuitive reasoning are most appropriate to meet real-world challenges. However, the precise role of the intuitive system remains underdeveloped in the context of violence risk assessment. There are several possible reasons for this (Box 1).

BOX 1 Key problems with using intuition in violence risk assessment

• Validity and reliability: research suggests that the intuitive system lacks validity and reliability in at least some assessment contexts (e.g. longer-term assessments)

• Opacity: assessments based on intuition are opaque to evaluation by others

• Heuristic biases: human judgements are subject to various heuristic biases, such as the affect heuristic, racial prejudice, groupthink and cognitive dissonance

Concerns about validity and reliability

The first generation of empirical research on the capacity of clinicians to predict the long-term ‘dangerousness’ of patients is notable for its finding that even experienced clinicians could do little better than chance in projecting the long-term outcomes of patients with a history of violence (Monahan 1984). Given that different clinicians are likely to have had vastly different experiences, and hence to have different knowledge stores, this is unsurprising (footnote a).

Opacity

Meehl (1954) described opacity as the aspect of so-called clinical expertise that is most ‘irritating to non clinicians […] when asked for the evidence [the clinician] states simply that he feels intuitively that such and such is the case’. Such invidious use of clinical experience has led to its denigration as a ‘prestigious synonym for anecdotal evidence’ (Grove 1996). In violence risk assessment, when opinions must be defended before tribunals or courts, such opacity leaves expert opinions open to challenge on grounds of fairness and validity.

Heuristic biases

The vast research base on the propensity of human judgement to show certain predictable biases has now been extended to the clinical realm (Ruscio 2007), including violence risk assessment (Slovic 2000). Decisions made without significant input from the deliberative system appear to be prone to bias. Various classes of such bias exist, but the ‘affect heuristic’ (Finucane 2000; Slovic 2004) may be particularly relevant to violence risk assessment. The essence of this bias is that, just as imaginability, memorability and similarity can serve as misleading cues for probability judgements (the ‘availability’ and ‘representativeness’ heuristics), so affective signals can mislead judgements of both the probability and the consequences of particular outcomes. For example, even experienced forensic mental health clinicians have been shown to make more conservative assessments of risk when information is presented in a format that encourages concrete images (which are more likely to engage the affective system) rather than in the abstract format of percentages (Slovic 2000). Racial prejudice has also been shown to produce misleading judgements of risk of violence (McNiel 1995; Wittenbrink 1997). In addition, interpersonal influences may bias decisions, particularly in mental health contexts where team-working is the norm (as previously explored in this journal: see Carroll 2009), through mechanisms such as ‘groupthink’ (Janis 1982) and ‘cognitive dissonance’ (Festinger 1957).

Guidelines for using intuition

The intuitive system can lead to dangerously biased and prejudicial decisions. It is therefore unsurprising that purely unstructured clinical intuition is now rarely advocated as best practice for violence risk assessment in mental health. However, the widely advocated approach of ‘structured professional judgement’ (Webster 2007) must perforce incorporate the professional’s intuitive functioning. The question is therefore not ‘Can intuition play a role?’ but ‘How can intuition best be used?’.

Ideally, deliberative and intuitive modes should be carefully synthesised, with the relative emphasis on each suited to the decision or task at hand. Preclinical laboratory-based research suggests that ‘either system can out-perform the other depending on the nature of the problem at issue’ (Epstein 2008). The task, therefore, is to apply reason to temper the strong emotions engendered by some risk issues and to infuse ‘needed doses of feeling’ into circumstances where lack of experience may result in decision-making that is too ‘coldly rational’ (Slovic 2004).

The structured professional judgement literature (e.g. Webster 1997; Douglas 2001; Webster 2004) and associated training workshops (e.g. Ogloff 2011) tend to focus largely on the ‘structured’ aspect (the operationalising and rating of empirically derived risk factors) but provide less guidance on the ‘professional judgement’ aspect. The following section attempts to provide such guidance, based on proposals developed by Hogarth (2001), a cognitive psychologist who has extensively researched the functions and limitations of intuitive thinking. In essence, his proposals comprise a set of guidelines by which the deliberative system may be used systematically to guide the intuitive system, helping to optimise overall decision-making. Each guideline (Box 2) will be considered in the context of violence risk assessment.

BOX 2 Guiding the intuitive system in decision-making

Consider the learning structure

• ‘Kind’ structures: large, representative samples; rapid feedback; outcomes tightly linked to decisions

• ‘Wicked’ structures: small, potentially biased samples; delayed feedback; outcomes affected by multiple other variables

Use your own emotions as data

• Interpersonal dynamics

• Calibration of your own estimates

Impose ‘circuit breakers’

• Cost–benefit analyses

• Structured professional judgement tools

Tell stories

• Develop formulations with:

  • plausible hypotheses

  • individualised theories

(After Hogarth 2001)

Guideline 1: Consider the learning structure

Laboratory-based research suggests that the intuitive system can yield accurate judgements for a specific decisional task, provided that the prior sample of experiences on which the relevant knowledge base is founded is representative for that task (Betsch 2008). Hence, the context in which relevant information is learnt is critical to the quality of subsequent related intuitive judgements. Hogarth (2008) refers to these contexts as ‘learning structures’ and proposes that ‘the validity of intuitions depends on the learning structures prevailing when these were acquired’. He distinguishes between ‘kind’ learning structures, which provide feedback on learners’ errors and so lead to accurate intuitions, and ‘wicked’ learning structures, which fail to correct learners’ errors and so lead to inaccurate intuitions.

Kind learning contexts have the following characteristics: large sample sizes (plenty of relevant experience of decisions and outcomes), reasonably immediate feedback, outcomes that are tightly linked to the judgements made, and experience of representative rather than biased samples. When feedback is relevant to the validity of the judgements made, accurate learning follows automatically; but where feedback is irrelevant, usually because it is affected by multiple confounding factors, intuitive learning is not to be trusted (Einhorn 1980).

Medium- to long-term risk assessment: a ‘wicked’ learning structure

With respect to the task of predicting violence, there is evidence that even very experienced clinicians tend to do poorly at predicting the likelihood of violence by mental health patients in the medium to long term when using unstructured approaches to judgement (Cocozza 1976; Monahan 1981). This is unsurprising if we consider the relevant learning structure: clinical intuition about medium-term (weeks to months) risk of violence in the community is likely to be acquired in a ‘wicked’ learning context. Consider the clinician who decides to discharge a patient on the basis of an assessment of the likelihood of violence over the following 3 months. Given the staffing models that prevail in most mental health services, the clinician may well not be privy to information about any subsequent violence by that patient. In addition, variables such as the patient’s social context in the community mean that the relationship between the discharge decision and violent behaviour is confounded by multiple factors beyond the clinician’s control. Furthermore, clinicians inevitably act on their judgements (for example, they may not release patients who are considered to pose a very high risk), so the learning environment is inevitably biased: clinicians cannot learn what would have happened had such patients been released. The learning structure is ‘wicked’; an awareness of this fact will steer the clinician towards greater emphasis on deliberative analytic approaches rather than reliance on clinical intuition (even that of experienced staff) in making judgements.
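The selection effect described above can be made concrete with a small calculation. The following Python sketch is purely illustrative: the risk probabilities, the discharge threshold and the function name `observed_vs_cohort_rate` are hypothetical, and it assumes, generously, that the clinician hears about every outcome among discharged patients. Even so, the only outcomes that can feed the clinician's knowledge store come from a sample that excludes the patients judged to be at highest risk.

```python
# Hypothetical illustration of a 'wicked' learning structure: the clinician
# only ever receives feedback on patients they chose to discharge.

def observed_vs_cohort_rate(risks, release_threshold):
    """Return (expected violence rate among discharged patients,
    expected violence rate across the whole cohort).

    risks: hypothetical probabilities of violence, one per patient.
    release_threshold: patients at or above this risk are not discharged,
    so their community outcomes never become feedback.
    """
    released = [r for r in risks if r < release_threshold]
    if not released:
        raise ValueError("no patients released, so there is no feedback at all")
    observed_rate = sum(released) / len(released)
    cohort_rate = sum(risks) / len(risks)
    return observed_rate, cohort_rate


# Eight hypothetical patients; the two highest-risk patients are kept in hospital.
cohort = [0.05, 0.05, 0.10, 0.10, 0.20, 0.30, 0.60, 0.80]
observed_rate, cohort_rate = observed_vs_cohort_rate(cohort, release_threshold=0.5)
print(f"learnable from feedback: {observed_rate:.3f}; whole cohort: {cohort_rate:.3f}")
```

Here the expected rate among discharged patients (about 0.13) is roughly half that of the whole cohort (0.275), and nothing in the feedback the clinician receives reveals the gap. Incomplete feedback and confounding by social context would only add noise to this already unrepresentative sample.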

Institutional violence risk assessment: a ‘kinder’ learning structure

The task of assessing the risk of institutional violence, particularly in the short term, involves rather different learning structures. Given how common violence is within hospitals (Bowers 2009), clinicians with reasonable experience of busy in-patient work are likely to have acquired a fairly large sample of violent outcomes from which to construct their knowledge base. Feedback on violent incidents is likely to reach the clinician, because it will usually arrive within hours or days of an assessment. These features indicate a relatively ‘kind’ learning structure. Correspondingly, there is some evidence that unstructured clinical judgements can work reasonably well for assessing violence in institutional settings (McNiel 1995; Fuller 1999; Hoptman 1999). However, they may usefully be augmented by simple tools that impose some structure on clinicians’ intuitive sense of patients’ likelihood of imminent violence (Ogloff 2006; Barry-Walsh 2009). Qualitative research also suggests that nursing response styles that are intuitive and ‘emergent’, and that depend on understandings ‘garnered from numerous clinical situations and varied patterns of escalations’, are most effective in assessing, and indeed managing, acute risk of violence in the in-patient context (Finfgeld-Connett 2009). Hence, there may be legitimate grounds for placing more emphasis on the intuitive judgement of experienced staff when determining the likelihood of imminent violence within an institutional context.

Guideline 2: Use your own emotions as a source of data

Hogarth (2008) asserts that feelings or ‘vibes’ generated by the intuitive system can usefully be treated simply as part of the informational matrix to be considered in any decisional context: ‘rather than ignoring or trusting one’s emotions blindly, I believe it is best to treat emotions as data [that are] just part of the data that should be considered’.

In the context of violence risk assessment, it is useful to consider two distinct kinds of such data to which the clinician will have access: emotions about patients that arise in the context of the therapeutic relationship; and emotions about the accuracy or otherwise of the clinician’s own risk assessment. These will be considered in turn.

Interpersonal dynamics in therapeutic relationships

A basic tenet of psychodynamic therapeutic practice is that clinicians can develop a deeper understanding of their patients by using their own emotions about the patient as a guide. Such emotions are often considered under the rubric of ‘countertransference’, and awareness of the transference/countertransference relationship ‘allows reflection and thoughtful response rather than unthinking reaction from the doctor’, as this journal has reported (Hughes 2000).

The academic psychologist Paul Meehl, best remembered for his strong advocacy of actuarial methods of decision-making, nonetheless recognised that determining the significance of the interpersonal dynamics evident in a clinical relationship is a task inevitably handled principally by the intuitive rather than the deliberative system (Meehl 1954):

‘In a therapeutic handling of the case, it is impossible for the clinician to get up in the middle of the interview, saying to the patient, “leave yourself in suspended animation for 48 hours. Before I respond to your last remark it is necessary for me to do some work on my calculating machine” […] at the moment of action in the clinical interview the appropriateness of the behaviour will depend in part upon things which are learnable only by a multiplicity of concrete experiences and not by formal didactic exposition’ [my italics] (pp. 81–82).

Thus, he recognised that informed clinical experience is essential to a sophisticated understanding and management of interpersonal dynamics in the therapeutic context.

Interpersonal style

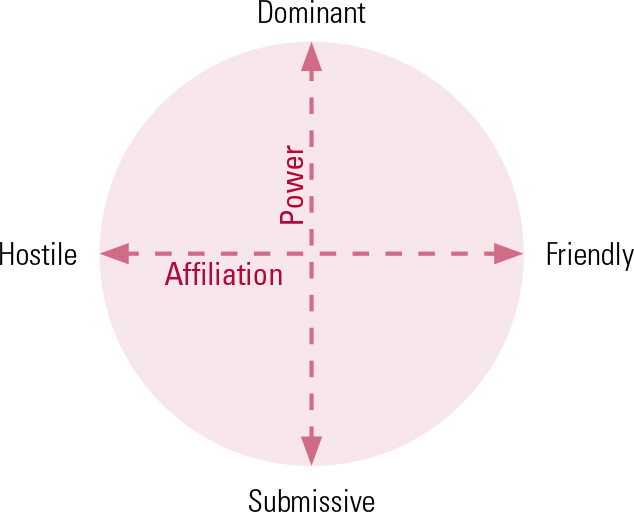

Empirical consideration of the role of clinicians’ emotions as data, however, requires a theoretical framework on which to base an operational definition of such emotions. The concept of ‘interpersonal style’, based on Kiesler’s theory of interpersonal behaviour (Kiesler 1997), may provide such a framework. Interpersonal style refers to how individuals characteristically relate to each other and how people perceive themselves in relation to others. Although it is considered a characteristic of the individual, people react to their interpersonal environment and in turn influence the relationships that others have with them. Such interactions can be understood in relation to two core dimensions: ‘power’ or ‘status’ (ranging from dominance to submission) and ‘affiliation’ (ranging from hostility to friendliness) (Fig. 1) (Leary 1957; Kiesler 1997). Behaviour generally elicits a corresponding response on the affiliation dimension (i.e. friendliness elicits friendliness and hostility elicits hostility) but a reciprocal response on the control dimension (i.e. dominance elicits submission and vice versa).

FIG 1 The interpersonal circumplex (after Kiesler 1997).
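The complementarity principle described above (correspondence on affiliation, reciprocity on control) can be stated as a simple rule. In the Python sketch below, the numeric coordinates from -1 to +1 and the names `InterpersonalAct` and `complementary_response` are illustrative assumptions; real instruments do not necessarily score interpersonal style this way.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InterpersonalAct:
    """A point on the interpersonal circumplex (hypothetical -1 to +1 scale).

    control: -1 = fully submissive, +1 = fully dominant
    affiliation: -1 = fully hostile, +1 = fully friendly
    """
    control: float
    affiliation: float


def complementary_response(act: InterpersonalAct) -> InterpersonalAct:
    """Response an act tends to 'pull' from the other person:
    the same position on affiliation, the opposite position on control."""
    return InterpersonalAct(control=-act.control, affiliation=act.affiliation)


hostile_dominant = InterpersonalAct(control=0.8, affiliation=-0.6)
print(complementary_response(hostile_dominant))
# InterpersonalAct(control=-0.8, affiliation=-0.6)
```

For the clinician, the output is the ‘pull’ to watch for: faced with hostile dominance, the complementary response is hostile submission, and noticing that feeling in oneself is the kind of emotional data this guideline describes.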

An understanding of interpersonal style and of the corresponding countertransference feelings can help clinicians to avoid the overly reactive decision-making that is sometimes prompted by emotional responses to patients. Such reactivity is generally recognised as an inefficient decision-making strategy in interpersonal settings (Baumeister 2007).

The value of emotional responses

A careful consideration by clinicians of the informational value of their own emotional responses can improve clinical judgement and decision-making. In violence risk assessment, understanding a patient’s interpersonal style (by tapping into the clinician’s own emotional reactions in the interpersonal encounter) may help to indicate the likelihood of future interpersonal conflict. It may also give clues to particular dynamic ‘risk signatures’ (as has been explored in this journal; Reiss 2009) or to the ‘relevance’ (Douglas 2010b) of specific interpersonal interactions for a particular person. For example, a person with narcissistic traits, whose interpersonal style is marked by dominance, may be dangerously sensitive to even minor slights, which are perceived as intensely humiliating (Nestor 2002).

Research tools

The Chart of Interpersonal Reactions in Closed Living Environments (CIRCLE; Blackburn 1998) and the Impact Message Inventory – Circumplex (IMI–C; Kiesler 2006) are empirical measures, comprising structured questionnaires completed by clinicians, that rate a patient’s interpersonal style on the basis of the clinician’s emotional responses to the patient. Assessment of interpersonal style using these tools shows good interrater reliability (Blackburn 1998; Kiesler 2006), and research suggests that interpersonal style can indeed add to the predictive validity of violence risk assessment, at least in institutional settings. A study that used the CIRCLE to evaluate forensic in-patients (predominantly with psychotic illnesses) found that dominant, hostile and coercive interpersonal styles were associated with a higher risk of violent behaviour over the following 3 months, whereas a compliant interpersonal style was protective against subsequent violence (Doyle 2006). Another forensic in-patient study using the CIRCLE (Daffern 2010a) found that a coercive interpersonal style was associated with increased risk of aggression and self-harm during the following 6 months. A study of patients admitted to acute psychiatric units found that a hostile, dominant interpersonal style measured using the IMI–C predicted violence during the subsequent in-patient stay more strongly than did either acute psychiatric symptoms or patients’ perception of being coerced (Daffern 2010b).

Utilising emotions

The systematic use of a clinician’s own emotions as a data source may represent something of a rapprochement between psychodynamic and more mainstream approaches to risk assessment. This does not, however, legitimise a return to a purely unstructured approach. Some psychodynamically oriented critics of systematic, empirically grounded approaches to risk assessment have wrongly seen the emphasis on structure as having a defensive quality that militates against genuine understanding of the human interactions involved (Murphy 2002; Doctor 2004). Others, however, have recognised that there is in fact no such conflict and that ‘with experience, clinicians begin to recognise countertransferential responses in certain types of clinical situations and, as the likelihood of acting on them reduces, these responses can be utilised as tools both in helping to make the diagnosis, but also to assist in exploring the meaning of the risk’ (Flewett 2010).

Meehl (1954) draws a crucial distinction between the relevant facts and the methods used to combine them. The facts may include ‘immediate impressionistic clinical judgements’, in which the intuitive, subjective clinical impression is treated as a type of fact (the clinician being ‘a testing instrument of a sort’); the methods of combination, he asserts, can be ‘actuarial’ even for such emotionally based data. He argues that the value of such facts in predicting outcomes is an empirical question to be answered scientifically: ‘it is still an open question whether the fact that the patient acts hostile or dominant ought to be given the weight that the clinician gives it at arriving at his predictions’ [my italics]. Studies such as those described above are beginning, half a century after Meehl was writing, to address this question empirically.
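Meehl's separation of facts from their combination can be illustrated with a fixed weighting rule in which the clinician's impression is simply one rated input. This Python sketch is hypothetical throughout: the item names, the 0 to 2 rating scale, the weights and the function `mechanical_combination` are invented for illustration and are not taken from any validated instrument.

```python
# Hypothetical sketch of Meehl's distinction between facts and their combination.
# The clinician's impressionistic judgement enters as one rated input; the
# combination rule is fixed in advance and applied identically to every case.
# Item names and weights are invented for illustration only.

HYPOTHETICAL_WEIGHTS = {
    "history_of_violence": 2.0,        # structured item, rated 0-2
    "current_symptoms": 1.0,           # structured item, rated 0-2
    "clinician_rated_hostility": 1.5,  # clinician's impression as a 'fact', rated 0-2
}


def mechanical_combination(ratings):
    """Combine item ratings with fixed weights (a simple actuarial-style rule)."""
    missing = set(HYPOTHETICAL_WEIGHTS) - set(ratings)
    if missing:
        raise KeyError(f"missing ratings: {sorted(missing)}")
    return sum(weight * ratings[item] for item, weight in HYPOTHETICAL_WEIGHTS.items())


case = {"history_of_violence": 1, "current_symptoms": 2, "clinician_rated_hostility": 2}
print(mechanical_combination(case))  # 2.0*1 + 1.0*2 + 1.5*2 = 7.0
```

Meehl's open question then becomes concrete: whether 1.5, or any other value, is the right weight for the hostility impression is something to be estimated from outcome data, not adjusted by the clinician case by case.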

Emotions about accuracy of judgements

Clinicians also have an emotional sense of the likely accuracy of their own judgements, that is, a level of confidence in their own estimates. ‘Calibration’ refers to the relationship between a clinician’s confidence and the subsequent accuracy of their assessments: clinicians who are highly confident about violence risk assessments that turn out to be accurate, but less confident about those that turn out to be inaccurate, are well calibrated. Research in this area is limited. US studies have shown positive relationships between accuracy and confidence for both unstructured (McNiel 1998) and structured (Douglas 2003) professional judgement approaches. However, other studies, such as that by De Vogel & De Ruiter (2004) in the Netherlands, have found that treating clinicians may be overconfident and less accurate in risk assessments involving their own patients. A Canadian study using the Short-Term Assessment of Risk and Treatability (START; Webster 2004), a structured professional judgement tool, failed to show significant correlations between confidence and accuracy in violence risk assessment (Desmarais 2010). This will be an important area for further research in risk assessment.
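The studies above express calibration largely as an association between confidence and accuracy. A minimal Python sketch of that calculation follows, together with a simple overconfidence index (mean confidence minus the proportion of correct judgements). The data and function names are hypothetical, and the overconfidence index is an illustrative addition rather than the measure used in the cited studies.

```python
from math import sqrt


def confidence_accuracy_correlation(confidence, correct):
    """Pearson correlation between confidence ratings (e.g. 0-100) and accuracy
    (1 = the risk judgement proved correct, 0 = it did not). Because accuracy
    is binary, this is the point-biserial correlation."""
    n = len(confidence)
    if n != len(correct) or n < 2:
        raise ValueError("need two equal-length lists with at least two judgements")
    mean_c = sum(confidence) / n
    mean_a = sum(correct) / n
    cov = sum((c - mean_c) * (a - mean_a) for c, a in zip(confidence, correct))
    var_c = sum((c - mean_c) ** 2 for c in confidence)
    var_a = sum((a - mean_a) ** 2 for a in correct)
    if var_c == 0 or var_a == 0:
        raise ValueError("correlation undefined when confidence or accuracy is constant")
    return cov / sqrt(var_c * var_a)


def overconfidence(confidence, correct):
    """Mean confidence (rescaled from 0-100 to 0-1) minus the proportion correct.
    Positive values indicate overconfidence."""
    return sum(confidence) / (100 * len(confidence)) - sum(correct) / len(correct)


# Two hypothetical clinicians, four judgements each.
clinician_a = ([90, 80, 40, 30], [1, 1, 0, 0])  # confident when right, unsure when wrong
clinician_b = ([90, 90, 85, 85], [1, 0, 1, 0])  # uniformly confident, right half the time

for name, (conf, corr) in [("A", clinician_a), ("B", clinician_b)]:
    r = confidence_accuracy_correlation(conf, corr)
    print(name, round(r, 2), round(overconfidence(conf, corr), 3))
```

Clinician A is well calibrated in the correlational sense (r close to 1), whereas clinician B's confidence carries no information about accuracy (r = 0) and exceeds the hit rate by 0.375, the pattern of overconfidence described for treating clinicians.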

Guideline 3: Impose ‘circuit breakers’

Hogarth asserts that the potentially deleterious effects of heuristic biases can be minimised by the explicit and deliberate use of ‘circuit breakers’: processes that stop automatic decisional processing in its tracks and encourage the decision maker to bring in deliberative reasoning. One such circuit breaker is the well-established ‘cost–benefit analysis’, which systematically weighs the possible costs and benefits of each option to determine which is optimal.

When assessing and managing clinical risk, there is commonly an array of competing factors to be considered in this way (Miller 2000), such as:

• How will this look if the worst happens?

• What are the likely outcomes in the longer term as opposed to the short term?

• What is the scientific evidence base and how similar is this patient to patients in that evidence base?

• What resources are available?

• What are the legal and ethical issues involved?

It is immediately apparent that these competing considerations span several domains, including the clinical, legal, ethical and political. It has been proposed (Montague 2002; Peters 2006) that the human decision-making system, when weighing pros and cons from such a panoply of domains, uses affect as a kind of ‘common currency’. This allows us to compare the values of very different decision options: when comparing ‘apples with oranges’, each is first translated into a certain number of ‘affective units’ of positive or negative valence. Peters (2006) describes this process as follows:

‘by translating more complex thoughts into simpler affective evaluations, decision makers can compare and integrate good and bad feelings rather than attempt to make sense out of a multitude of conflicting logical reasons. This function is thus an extension of the affect-as-information function into more complex decisions that require integration of information’.

Hence, the cost–benefit matrix (see Carroll 2009), a deceptively simple tool, represents an integration of two approaches: the deliberative (systematically ensuring consideration of all logically relevant data) and the intuitive (facilitating the translation of the informational matrix into an overall affective output, favouring one decision pathway over another).

As an example, Table 2 shows a simplified cost–benefit matrix for the clinical dilemma of whether to compulsorily admit to hospital a man with schizophrenia who has disclosed the emergence of violent command hallucinations. A structured, evidence-based approach is used to ensure that all relevant factors are considered. For the purposes of illustration, each consideration has been given an arbitrary score in ‘affective units’. In this example, the clinician’s overall ‘feel’ is in favour of admission to hospital.

TABLE 2 Simplified cost–benefit matrix for deciding whether to compulsorily admit a man with schizophrenia
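The arithmetic behind such a matrix is simple: each consideration carries a signed score in ‘affective units’, and the scores for each option are summed. The Python sketch below illustrates this under stated assumptions: the considerations and scores are hypothetical and are not the entries of Table 2, and simple summation is only one plausible way of modelling the overall ‘feel’.

```python
# Hypothetical cost-benefit matrix: each consideration gets a signed score in
# arbitrary 'affective units' (+ favours the option, - counts against it).
# These considerations and scores are illustrative, not the entries of Table 2.

options = {
    "compulsory admission": {
        "reduced short-term risk of acting on command hallucinations": +4,
        "access to treatment": +2,
        "loss of liberty and autonomy": -3,
        "possible damage to therapeutic alliance": -1,
    },
    "continued community care": {
        "preserves autonomy": +3,
        "preserves therapeutic alliance": +1,
        "higher short-term risk of violence": -5,
        "limited resources for intensive follow-up": -1,
    },
}


def net_affect(considerations):
    """Sum the signed affective scores for one option."""
    return sum(considerations.values())


totals = {name: net_affect(c) for name, c in options.items()}
preferred = max(totals, key=totals.get)
print(totals, "->", preferred)
```

The value lies less in the arithmetic than in the deliberative step of listing every consideration; the signed scores stand in for the clinician's intuitive evaluation of each one, and the totals make explicit which way the overall ‘feel’ leans.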

Violence risk assessment tools

Another circuit breaker is the violence risk assessment tool. The superiority of such tools over unstructured, purely intuitive clinical approaches has now been comprehensively demonstrated (Douglas 2010b). There are, no doubt, various reasons for this, not least that they force the clinician to focus on items of predictive validity and to ignore invalid factors. However, they are generally seen not as wholesale replacements for clinical judgement but as decision support tools (Monahan 2001). Risk assessment tools can therefore appropriately be used in conjunction with cost–benefit analyses. The complexity of real-world risk management decisions means that simply allocating patients to a category of low, medium or high risk is generally only the start of the decisional task and will rarely be determinative in itself: the pros and cons of the various management options (such as coercive psychiatric care) still need to be systematically considered. This nuanced approach to risk assessment and management is now recognised in the more sophisticated critiques of the state of the field (Mossman 2006) but is unfortunately absent from some polemics (Ryan 2010).

Guideline 4: Tell stories

Another way in which the intuitive mode of thought may be harnessed is by the conscious and deliberate use of narrative to ‘make connections that would not be suggested by more logical modes of thought’ (Hogarth 2001). The development of coherent narratives to better make sense of patients’ predicaments, although hardly a new approach, is enjoying a renaissance in clinical psychiatry (Lewis 2011). This task is generally performed under the broad rubric of formulation: ‘distilling a clinical case into an explanatory summary with a high “signal to noise” ratio’ (Reilly 2011). There are multiple models for clinical formulation (Weerasekera 1993; Ward 2000; Summers 2003; Sim 2005), but an element common to all is to ‘highlight possible linkages or connections between different aspects of the case’ with the aim that ‘the focus upon these inter-relationships adds something new’ (Royal Australian and New Zealand College of Psychiatrists 2011).

High-quality formulations invariably involve speculation and the creative generation of hypotheses that draw on clinical intuition: which elements of the patient’s narrative ‘feel’ to be central elements in the story and which ‘feel’ more peripheral? Distinguishing ‘signal’ from ‘noise’ is often a challenge and should draw on the clinician’s intuitive sense of the case: a good formulation ‘feels right’ for that particular patient.

Create plausible hypotheses

Such intuitive thinking, however, needs to be complemented by rigorous and systematic use of deliberative reasoning in at least two ways. First, in drawing up the initial formulation, the hypotheses need at least to be plausible and grounded in evidence: for example, drawing links between developmental trauma or genetic background and current psychopathology is acceptable, whereas speculation linking the patient’s astrological star sign to their personality features is not. Thus, the task requires the application of empirical knowledge (derived from group-based data) to make sense of the predicament of the individual patient. Second, the formulation needs to be tested for explanatory and predictive power in clinical work with the patient and revised accordingly. This iterative process, drawing on both intuitive and deliberative processes, mirrors the essence of the ‘scientific method’ in general (Medawar 1984).

Meehl (1954) supported the role of clinical intuition in this sense when discussing the creation of psychotherapeutic interpretations. He viewed these as preliminary hypotheses to be tested, asserting:

‘it is dangerous to require that, in the process of hypotheses creation, i.e., in the context of discovery, a set of rules or principles […] is a necessary condition for rationality. What should be required is that a hypothesis, once formulated, should be related to the facts in an explicit, although perhaps very probabilistic way’ (p. 73).

Individualised theories

In a structured professional judgement (SPJ) risk assessment, it is at the formulation stage that the rather prosaically derived set of risk factors is put together into a meaningful story – a unique pattern with individualised meaning for that patient and for that particular risk assessment challenge. The individual’s life and pattern of violent behaviour – the relevant aspects of their story – may be considered in terms of plots and themes that are common in those with a propensity for violent behaviour. For example, themes of abandonment as a young child may be related to a later pattern of aggressive, over-controlling behaviour in relationships. Within this narrative, some risk factors will be more relevant to a particular individual than others. Thus, ‘the SPJ process encourages decision makers to build “individual theories” of violence for each person they evaluate. It may facilitate the identification of “configural relations” between a set of risk factors and violence, one in which risk factors might not firmly interact with one other, but may transact with one another, and with violence’ (Douglas 2010b: p. 174). Such ‘individual theories’ will guide the individualised management plan that should follow on from any risk assessment.

Conclusions

Although sometimes portrayed as a purely analytical process, the optimal assessment and management of risk of violence in psychiatry also draws on more emotionally laden ‘intuitive’ modes of thought. Clinicians have much to gain from playing to the different strengths of the intuitive and deliberative systems while avoiding their respective pitfalls. Simple awareness of these pitfalls, although necessary, is not sufficient (Plous 1993). Hogarth’s guidelines for ‘educating intuition’, although not specifically developed with clinical tasks in mind, provide useful pointers for clinicians when employing their intuitive skills.

When assessing risk of violence, psychiatrists would do well to heed Hogarth’s advice that professional decision makers learn to ‘manage their thought processes actively’ (Hogarth 2001). This requires clinicians to invest time and effort in understanding their own thinking and feeling processes, as previously examined in this journal (Reiss 2009). Importantly, this is not a ‘one-off’ learning exercise, but should be at the heart of an ongoing reflective learning process. Clinicians need to heed the words of a psychodynamic psychiatrist working in the National Health Service:

‘Emotional literacy can be developed but it can also be lost, particularly when subject to the cumulative psychic assaults that are quotidian in mental health work. Emotional literacy involves continuing self reflection and this can be eroded by the demands of functioning in a system which calls more for action than for thought’ (Johnston 2010).

MCQs

Select the single best option for each question stem

1 Epstein describes the intuitive mode of thinking as:
a rational
b slow
c resistant to change
d more highly differentiated nuanced thinking
e abstract.

2 ‘Kind’ learning contexts have the following characteristics:
a smaller sample sizes
b outcomes that are tightly linked to the judgemental decisions
c delayed feedback
d unrepresentative samples
e low calibration.

3 A dominant interpersonal style on the part of a patient:
a is related to a lower risk of violent behaviour
b elicits corresponding feelings of dominance in the clinician
c can be measured using the Short-Term Assessment of Risk and Treatability (START)
d is a measure of affiliation
e may indicate a specific ‘risk signature’.

4 Structured professional judgement approaches to violence risk assessment:
a attempt to negate the role of clinical judgement
b have been shown to have good reliability and validity
c make cost–benefit analyses superfluous
d always require the Historical-Clinical-Risk Management–20 (HCR–20) to be completed
e indicate whether coercive treatment is indicated.

5 When carrying out a violence risk assessment, countertransference:
a is impossible to test empirically
b should be ignored, to assure maximum reliability and validity
c may be a useful source of data
d is reliably measured by the HCR–20
e should be quickly acted on by the clinician.

MCQ answers

1 c; 2 b; 3 e; 4 b; 5 c
