The vast worldwide investment in biomedical research during the past 50 years has led to important advances in healthcare. Yet despite the large quantity of evidence available from biomedical and clinical studies, a gap often exists between the optimal practice suggested by that evidence and routine patient care. It can take more than 20 years for even the most important scientific advances to be integrated into clinical practice. Many of the factors responsible for this failure of knowledge transfer are remediable.

Systematic literature review is a fundamental scientific activity. It is important that clinical decisions in psychiatry are not based solely on one or two studies without taking account of the whole range of information available on a particular topic.

The Cochrane Collaboration has revolutionised the way evidence is summarised, through the preparation and dissemination of systematic reviews. These overviews seek to empower clinicians by quickly providing them with the review evidence most relevant to a specific clinical question. The aim is to shift the centre of gravity of clinical decision-making towards a speedy, explicit consideration of high-quality evidence.

Reviews of summarised evidence have become an essential tool for psychiatrists who want to keep up to date with the wide-ranging and fast-accumulating evidence in their field. As it is impossible to read every research article, overviews have become a quick and efficient way to keep pace with recent developments. They make large quantities of information palatable and make sense of scientific chaos.

Evidence-based medicine is a form of information management in which high-quality knowledge is produced and quickly disseminated. The systematic review is an essential part of this process.

What is a systematic review?

A systematic review is essentially an observational study of the evidence that allows large quantities of information to be speedily refined and reduced to a manageable size.

A systematic review starts from a clearly formulated question and uses explicit methods to identify, critically appraise and analyse data from all of the relevant research on a problem such as the treatment of depression. A meta-analysis is simply one statistical technique that can be used in an overview to integrate the results of the included studies; it is essentially a form of statistical pooling.

A review need not include a meta-analysis. However, if the review can pool the data from each of the included studies, it can greatly increase statistical power by treating the studies, after weighting them, as if they were one large sample (Deeks 2008). This greater power increases the chance that a real treatment effect, if one exists, will be detected as statistically significant.

In the treatment of schizophrenia, for instance, only 3% of studies were found to be large enough to detect an important improvement (Thornley 1998). The assumption underlying meta-analysis is that such a ‘superstudy’ will provide a more reliable and precise overall result than any of the smaller individual studies (Bowers 2008). It is hoped that reviews may go some way to settling controversies arising from apparently conflicting studies, for instance on the relative effectiveness of medication and psychotherapy.

Other advantages of systematic reviews are that their results can be generalised to a wider patient population and a broader range of settings than the results of a single study. Overviews can also shorten the time lag between medical developments and their implementation.

The increasing importance of systematic reviews has created a need to understand their development and then acquire the skills necessary to critically appraise them.

Karl Pearson

Knowledge synthesis is not a new concept. In the 17th century, the idea began to evolve that combining data might be better than simply choosing between different data-sets (Chalmers 2002). Systematic efforts to compile summaries of research began in the 18th century. However, the science of research synthesis did not begin in earnest until the 20th century, with Pearson’s 1904 review in the British Medical Journal of 11 studies dealing with typhoid vaccine. For this reason, the distinguished statistician Karl Pearson is often regarded as the first medical researcher to use formal techniques to combine data from different studies.

In the latter part of the 20th century, the Scottish physician Archie Cochrane highlighted the importance of systematic reviews in making informed clinical decisions. It is better to choose a treatment for schizophrenia on the basis of a summary of the evidence than on intuition. Because they provide the most powerful and useful evidence available, systematic reviews have become extremely important to clinicians and patients, and they now attract a very high citation rate (Tyrer 2008).

However, despite their reputed advantages, systematic reviews have so far received a mixed reception. Some fear that systematic reviews can mislead when the review is of poor quality or when not all of the relevant studies are identified. A review with these limitations may overestimate the effectiveness of a given intervention. If the results of a review that combines several small studies are compared with those of a large randomised controlled trial (RCT), the conclusions may differ. Meta-analyses have also been criticised for moving too far away from the individual patient (Seers 2005). Many still prefer the traditional narrative review.

Numbers and narrative

Oxman & Guyatt (1988) drew attention to the poor quality of traditional narrative reviews. These reviews can be subjective, and the majority do not specify the source of their information or assess its quality. Selective inclusion of studies that support prevailing opinion is common.

In controversial areas, the conclusions drawn from a given body of research may be associated more with the specialty of the reviewer, be they social worker, psychologist or psychiatrist, than with the available evidence (Lang 2006). By contrast, a systematic review of the literature is governed by formal rules that are established in advance for each step. Consequently, bias is reduced, the results are reproducible and the validity of the overview can be enhanced.

Unlike a traditional literature review, a systematic review of interventions involves an exhaustive literature search followed by a synthesis of studies of the same or similar treatments. Its purpose is to summarise a large and complex body of literature on a particular topic, and its essential aim is to give a more accurate reflection of reality. The first step in this process is to formulate the review question.

Defining the question

An idea for a review usually arises when a gap in knowledge has been identified. Suppose the primary objective of our systematic review is to assess the effects of psychodynamic psychotherapy relative to medication in out-patients with depression. Developing a clear and concise question is important because it guides the whole review process. The types of participants, interventions and outcomes are all specified in advance. The question should be broad enough to capture a diversity of studies, but narrow enough that a meaningful answer can be obtained when all of the studies are considered together.

A structured way to formulate a question is to specify the population, intervention, comparator and outcome (the PICO format). This approach may not fit all questions but is a useful guideline for treatment studies. A key component of a well-formulated question is that the common or core features of the interventions, such as medication and psychotherapy, are defined and their variations described. Box 1 contains an example of how a review question on the management of difficult-to-treat depression is addressed.

BOX 1 An example of a systematic review

-

• First, review all randomised controlled trials that assess either pharmacological or non-pharmacological interventions for treatment-refractory depression

-

• Next, assess the methodological quality and generalisability of the trials in this area

-

• Finally, perform a synthesis and a meta-analysis (if appropriate) of the evidence in the area
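Because each PICO element is fixed before the search begins, a review question can be thought of as a small structured record. The Python below is only an illustrative sketch: the class and method names are hypothetical, the population, intervention and comparator follow the psychotherapy v. medication example in the text, and the outcome is an assumption added for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PicoQuestion:
    """Hypothetical record of a review question in PICO format."""

    population: str
    intervention: str
    comparator: str
    outcome: str

    def as_question(self) -> str:
        # Join the four pre-specified elements into one explicit question.
        return (
            f"In {self.population}, what are the effects of {self.intervention} "
            f"compared with {self.comparator} on {self.outcome}?"
        )


# Population, intervention and comparator follow the example in the text;
# the outcome is an illustrative assumption.
question = PicoQuestion(
    population="out-patients with depression",
    intervention="psychodynamic psychotherapy",
    comparator="medication",
    outcome="depressive symptoms",
)
print(question.as_question())
```

Making the record immutable (`frozen=True`) mirrors the idea that the question should not drift once the review is under way.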

Certain study designs are more appropriate than others for answering particular questions. As our question is about the effects of a treatment, psychotherapy v. medication, we can expect the focus of the systematic review to be on RCTs and other controlled clinical trials. Box 2 sets out the typical stages in an overview.

BOX 2 Steps in a systematic review

-

1 Specification of evidence sought

-

2 Identification of evidence

-

3 Critical appraisal of evidence

-

4 Synthesis of evidence

Search strategy

Next, we need to look at the ways in which the studies were searched for in the review. Conducting a thorough and transparent search to identify relevant studies is a key factor in minimising bias in the review process.

There is no agreed standard of what constitutes an acceptable search in terms of the number of databases used. The selection of electronic databases to be searched depends on the review topic. For healthcare interventions, MEDLINE and Embase are the databases most commonly used to identify trials. Importantly, the Cochrane Central Register of Controlled Trials (CENTRAL) already contains records drawn from a wide range of bibliographic databases and from other published and unpublished sources. Some databases have a narrow focus: PsycINFO, for example, deals with psychology and psychiatry, whereas CINAHL covers nursing and allied health professions.

Restricting the search to a small number of databases might unintentionally introduce bias into the review. Wider searching is needed to identify research results circulated as reports, discussion articles and conference proceedings.

The search strategy used in a review to identify primary studies needs to be comprehensive. Searching a single electronic database is insufficient, as many relevant articles may be missed: searching MEDLINE alone may yield only 30% of known RCTs (Lefebvre 2008).
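Because the same trial is often indexed in several databases, the results of a multi-database search have to be merged and duplicates removed before screening. The Python sketch below illustrates the idea with invented records; matching on normalised titles alone is a simplifying assumption, and practical de-duplication would usually also compare identifiers such as DOIs, authors and publication years.

```python
def merge_search_results(*database_results):
    """Merge record lists from several databases, keeping the first copy of each.

    Each record is assumed to be a dict with a 'title' key. Titles are
    lower-cased and whitespace-normalised so that trivial formatting
    differences between databases do not hide duplicates.
    """
    seen = set()
    merged = []
    for records in database_results:
        for record in records:
            key = " ".join(record["title"].lower().split())
            if key not in seen:
                seen.add(key)
                merged.append(record)
    return merged


# Invented search results from three sources.
medline = [{"title": "Trial A"}, {"title": "Trial B"}]
embase = [{"title": "trial  b"}, {"title": "Trial C"}]
central = [{"title": "Trial C"}, {"title": "Trial D"}]
combined = merge_search_results(medline, embase, central)
print(f"MEDLINE alone: {len(medline)} records; all sources: {len(combined)}")
```

In this toy example MEDLINE finds only half of the unique trials, echoing the point that a single database is not enough.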

It is important to search for both published and unpublished studies, in all languages and not just English. An extensive search reduces the impact of publication bias. This bias arises because trials with statistically significant results in favour of the intervention are more likely to be published, to be cited, to appear in English-language journals and to be indexed in MEDLINE. Reviewers relying on a narrow search can therefore miss important reports with non-significant results.

After outlining their search strategy, reviewers should state their predetermined method for assessing the eligibility and quality of the studies they have decided to include in the overview (Box 3).

BOX 3 What to look for in overviews

-

• Whether the review found and included all good-quality studies

-

• How it extracted and pooled all good-quality studies

-

• Whether it made sense to combine the studies

Selecting studies and assessing bias

Systematic reviewers generally separate the study selection process into two stages: first, a broad screen of the titles and abstracts retrieved from the search; and second, a strict screen of full-text articles to select the final included studies.

Two or more reviewers usually carry out the selection, applying eligibility criteria based on the review question.
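Comparing two reviewers' decisions at each screening stage is mechanical enough to sketch. The Python below is illustrative only: the record IDs and decisions are invented, and the rule that records both reviewers include go forward while disagreements are set aside for discussion is an assumption about how a review team might work. The same function could be reused at the full-text stage.

```python
def reconcile(decisions_a, decisions_b):
    """Compare two reviewers' include (True) / exclude (False) decisions.

    Both dicts are assumed to cover the same record IDs. Returns the IDs
    that both reviewers included and the IDs on which they disagreed.
    """
    agreed = sorted(r for r, keep in decisions_a.items() if keep and decisions_b[r])
    disputed = sorted(r for r, keep in decisions_a.items() if keep != decisions_b[r])
    return agreed, disputed


# Stage 1: broad screen of titles and abstracts (hypothetical decisions).
reviewer_a = {"rec1": True, "rec2": True, "rec3": False, "rec4": True}
reviewer_b = {"rec1": True, "rec2": False, "rec3": False, "rec4": True}
included, to_discuss = reconcile(reviewer_a, reviewer_b)
print("Forward to full-text screen:", included)
print("Resolve by discussion:", to_discuss)
```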

Critical appraisal is simply the application of critical thinking to the evaluation of clinical evidence; its key points are outlined in Box 4. The quality of an overview depends on the quality of the selected primary studies, and the validity of its results depends on the risk of bias in the individual studies. A ‘risk of bias assessment’ of the primary studies can be completed using scales or checklists. Crucially, every systematic review should include a risk of bias assessment of its included studies.

BOX 4 Questions in critical appraisal of primary research

-

• Were the included patients representative of the target population?

-

• Was the intervention allocation concealed before randomisation?

-

• Were the groups comparable at the beginning of the study?

-

• Was the comparable nature of the groups under investigation maintained through equal management and sufficient follow-up?

-

• Were the outcomes measured with ‘masked’ patients and objective outcome measures?

In primary studies, the investigators select and collect data from individual patients. In systematic reviews, they select and collect data from primary studies. Analysis of these primary studies may be narrative, such as using a structured summary, or quantitative, such as conducting a statistical analysis. Meta-analysis, the statistical combination of results from two or more studies, is the most commonly used statistical method (Box 5).

BOX 5 The two steps of meta-analysis

-

• 1 A summary statistic, such as a risk ratio or mean difference, is calculated for each primary study

-

• 2 The pooled, overall treatment effect is calculated as a weighted average of these summary statistics

So the next step in the review process involves determining whether a statistical synthesis such as a meta-analysis is possible or appropriate. If there is excessive variation or heterogeneity between studies then a statistical synthesis of this sort may not be suitable.

The meta-analysis revolution

An important step in a systematic review is a thoughtful consideration of whether it is appropriate to combine the numerical results of all, or some, of the studies. A meta-analysis is a particular type of statistical technique performed, if appropriate, after a systematic review. It focuses on numerical results. A narrative synthesis may be used if meta-analysis is not sensible because of major differences between, for instance, the treatments used.

A meta-analysis yields an overall statistic, and a confidence interval, that summarises the efficacy of the experimental intervention compared with the control intervention. The contrast between the outcomes of two groups treated differently is known as the ‘treatment effect’. The main aim in a meta-analysis is to combine the results from all of the individual studies included in the review to produce an estimate of the overall effect of interest.

Meta-analyses focus on pair-wise comparisons of interventions, such as a treatment and a control intervention. Meta-analysis is essentially a method whereby outcome measures from many studies are standardised to make them comparable. Large numbers of primary studies can then be combined statistically to form a generalised conclusion.

It must be remembered that using a statistical method does not guarantee that the results of a review are correct. Like any tool, a statistical method can be misused, and reviewers certainly do not want to compound an error.

Main steps

Meta-analysis is typically a two-stage process. In the first stage, a summary statistic is calculated for each study, to describe the observed intervention effect in that primary study. For example, the summary statistic may be a risk ratio if the data are dichotomous (recovered v. non-recovered) or a difference between means if the data are continuous (using scales such as the Beck Depression Inventory).

In the second stage, a summary or pooled intervention effect estimate is calculated as a (weighted) average of the intervention effects estimated in the individual studies. The weights are chosen to reflect the amount of information that each individual study contains. Finally, the findings are interpreted and a conclusion is reached about how much confidence should be placed in the overall result.
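One common way of choosing weights that reflect the amount of information in each study is inverse-variance weighting: each study is weighted by 1/SE², so more precise (usually larger) studies count for more. The Python sketch below assumes a fixed-effect model and takes the first-stage results (effect estimates and their standard errors) as given; the numbers are invented. For ratio measures such as risk ratios, pooling is normally done on the log scale.

```python
import math


def inverse_variance_pool(estimates, standard_errors):
    """Second stage of a fixed-effect meta-analysis.

    Returns the inverse-variance weighted average of the study estimates
    and the standard error of that pooled estimate.
    """
    weights = [1 / se ** 2 for se in standard_errors]
    total_weight = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, estimates)) / total_weight
    return pooled, math.sqrt(1 / total_weight)


# Invented mean differences (e.g. on a depression rating scale) and SEs.
estimates = [-3.0, -1.0, -2.5]
standard_errors = [1.0, 1.0, 2.0]
pooled, pooled_se = inverse_variance_pool(estimates, standard_errors)
print(f"Pooled mean difference {pooled:.2f} (SE {pooled_se:.2f})")
```

The third study has twice the standard error of the others, so it receives only a quarter of their weight.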

Summary statistics

Summary statistics for each individual study included in the review may be risk ratios or mean differences. Risk is the probability that an event will happen. It is calculated by dividing the number of ‘events’ by the number of ‘people at risk’.

Risk ratios

Risk ratios are used in one in six papers (Taylor 2008) to investigate the effect of a treatment. They are calculated by dividing the risk in the treated group by the risk in the control group. A risk ratio of 1 indicates no difference between the groups; a risk ratio greater than 1 means that the risk of the event is higher in the treatment group than in the control group. Risk ratios are frequently given with their confidence intervals (CIs). If the 95% confidence interval does not contain 1 (no difference in risk), the result is statistically significant at the 5% level (see discussion of forest plots below).
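The calculations for risk and the risk ratio can be made concrete with a short Python sketch. The trial counts are invented, and the confidence interval uses the usual large-sample standard error of the log risk ratio, an approximation that breaks down when any group has zero events.

```python
import math


def risk(events, at_risk):
    """Risk: number of events divided by number of people at risk."""
    return events / at_risk


def risk_ratio(events_t, n_t, events_c, n_c, z=1.96):
    """Risk ratio (treated v. control) with an approximate 95% CI.

    The interval is built on the log scale using the large-sample
    standard error of ln(RR); it is not valid if any group has zero events.
    """
    rr = risk(events_t, n_t) / risk(events_c, n_c)
    se_log_rr = math.sqrt(1 / events_t - 1 / n_t + 1 / events_c - 1 / n_c)
    lower = math.exp(math.log(rr) - z * se_log_rr)
    upper = math.exp(math.log(rr) + z * se_log_rr)
    return rr, lower, upper


# Invented trial: 30/100 recovered on treatment, 15/100 on control.
rr, lower, upper = risk_ratio(30, 100, 15, 100)
print(f"RR {rr:.2f} (95% CI {lower:.2f} to {upper:.2f})")
```

Here the event is recovery, so a risk ratio above 1 favours treatment; because the whole interval lies above 1, the result is statistically significant at the 5% level.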

Mean difference

The mean difference is another standard summary statistic that can be used for each individual study in the review. It measures the absolute difference between the mean values in two groups in a clinical trial and estimates the amount by which the experimental intervention changes the outcome on average compared with the control group. It can be used as a summary statistic in meta-analysis when outcome measurements in all studies are made on the same scale such as the Beck Depression Inventory. In a clinical trial comparing two treatments, the summary statistic used in the meta-analysis may be the difference in treatment means, with a zero difference implying no treatment effect.
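The calculation for a single trial with a continuous outcome can be sketched in Python. The scores below are invented, and the normal-approximation confidence interval is an assumption that suits moderately large trials; small trials would usually use the t distribution instead.

```python
import math


def mean_difference(mean_t, sd_t, n_t, mean_c, sd_c, n_c, z=1.96):
    """Mean difference (treatment minus control) with an approximate 95% CI.

    Uses the standard error sqrt(sd_t^2/n_t + sd_c^2/n_c) and a normal
    approximation.
    """
    md = mean_t - mean_c
    se = math.sqrt(sd_t ** 2 / n_t + sd_c ** 2 / n_c)
    return md, md - z * se, md + z * se


# Invented end-of-treatment depression scores (lower is better).
md, lower, upper = mean_difference(14.0, 8.0, 50, 18.0, 8.0, 50)
print(f"MD {md:.1f} (95% CI {lower:.2f} to {upper:.2f})")
```

A negative difference means lower (better) scores in the treatment group, and because the interval excludes zero the difference is statistically significant at the 5% level.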

Heterogeneity

Next, the reviewer must check that it makes sense to combine the primary studies. Heterogeneity occurs when there is genuine variation between the different studies, beyond what would be expected by chance alone. If we are not satisfied that the clinical and methodological characteristics of the studies are sufficiently similar, we can decide that it is not valid to combine the data. If there is evidence of significant heterogeneity, reviewers should proceed cautiously and investigate the reasons for its presence.
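Statistical heterogeneity is commonly quantified with Cochran's Q, the weighted sum of squared deviations of each study from the pooled estimate, and the I² statistic derived from it. Neither measure is named in the text, so treat the Python below as one standard approach rather than the method of any particular review; the study results are invented.

```python
def heterogeneity(estimates, standard_errors):
    """Cochran's Q and I^2 (as a percentage) for a set of study estimates."""
    weights = [1 / se ** 2 for se in standard_errors]
    pooled = sum(w * y for w, y in zip(weights, estimates)) / sum(weights)
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, estimates))
    df = len(estimates) - 1
    # I^2: share of total variation attributed to between-study differences
    # rather than chance; truncated at zero when Q is smaller than its df.
    i2 = 100 * (q - df) / q if q > df else 0.0
    return q, df, i2


similar = heterogeneity([-2.2, -1.8, -2.0], [1.0, 1.0, 1.0])
conflicting = heterogeneity([-4.0, 2.0, -1.0], [1.0, 1.0, 1.0])
print("similar: Q=%.2f, df=%d, I2=%.0f%%" % similar)
print("conflicting: Q=%.2f, df=%d, I2=%.0f%%" % conflicting)
```

The first set of studies agrees closely and gives an I² of zero; the second points in different directions and gives a high I², which would be a signal to investigate before pooling.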

The evidence pipeline

Next, the ‘average’ effect of interest and its confidence interval are calculated from the individual study summary statistics (risk ratios or mean differences). This overall ‘pooled effect’ is then tested for statistical significance.
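Checking whether a pooled effect is significant often amounts to asking whether its 95% confidence interval excludes the value of no effect. The Python sketch below assumes a normal approximation and invented pooled values; for ratio measures it works on the log scale, where no effect corresponds to 0 rather than 1.

```python
import math


def pooled_interval(pooled, se, null=0.0, z=1.96):
    """Approximate 95% CI for a pooled estimate, and whether it excludes the null.

    For ratio measures, pass the pooled log ratio and its SE (null = 0);
    exponentiate the limits afterwards to return to the ratio scale.
    """
    lower, upper = pooled - z * se, pooled + z * se
    excludes_null = not (lower <= null <= upper)
    return lower, upper, excludes_null


# Invented pooled mean difference and SE.
print(pooled_interval(-2.0, 0.7))
# Invented pooled log risk ratio and SE, converted back to the ratio scale.
lower_log, upper_log, significant = pooled_interval(math.log(1.1), 0.1)
print(f"RR 1.10 (95% CI {math.exp(lower_log):.2f} to {math.exp(upper_log):.2f}), "
      f"significant: {significant}")
```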

Last, the reviewer interprets the result and presents the findings. It is helpful to summarise the results from each trial in a table, detailing the sample size, effect of interest (such as the risk ratio or mean difference) and the related confidence intervals for each.

It is often difficult to digest a long list of means and confidence intervals. To make this easier, some papers also present the table of individual study results graphically. The most common display is the ‘forest plot’.

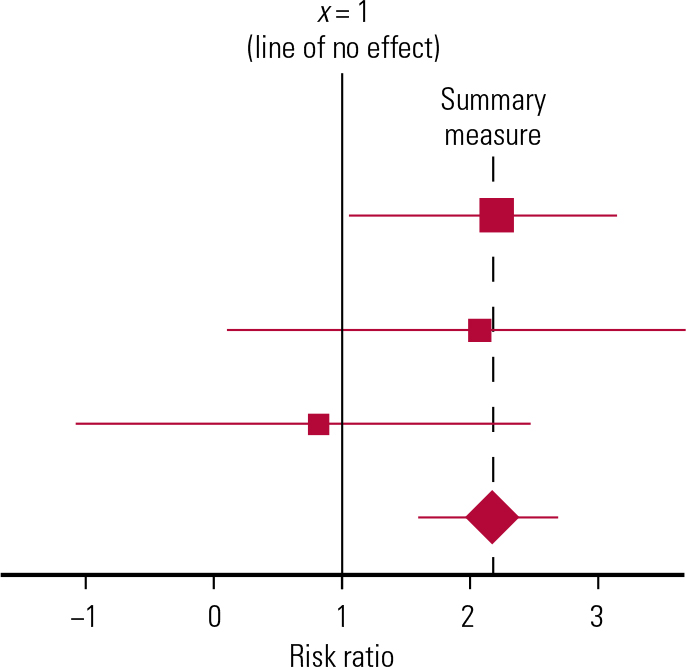

A forest plot is a diagrammatic representation of the results of each study in a meta-analysis (Glasziou 2001), displaying treatment effect estimates and their confidence intervals (Fig. 1). Each study is represented by a block at its point estimate of intervention effect; the area of the block indicates the weight assigned to that study in the meta-analysis, and the horizontal line passing through the block depicts its confidence interval. The summary estimate is shown as a diamond whose width spans its confidence interval. If the diamond does not cross the line of no effect (no difference in risk), as in Fig. 1, the pooled result is statistically significant (Heneghan 2006).

FIG 1 An example forest plot. See text for explanation.

The forest plot allows immediate visual assessment of the significance of the treatment effects from each individual study by simply observing whether the confidence interval around each study result crosses the ‘line of no effect’. The overall ‘combined estimate’ from all of the individual trials may also be shown in the shape of a diamond.
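The layout of a forest plot can be mimicked in plain text, which may help make the reading rules concrete. This Python sketch is a simplification: the numbers are invented, block size (study weight) is not shown, and a row of ‘=’ stands in for the pooled diamond. A study whose interval extends on both sides of the ‘|’ column crosses the line of no effect.

```python
def forest_row(label, estimate, lower, upper, fill="-",
               xmin=-6.0, xmax=6.0, width=49, null=0.0):
    """Draw one row of a text forest plot.

    The fill character spans the confidence interval, 'o' marks the point
    estimate and '|' marks the line of no effect.
    """
    def column(x):
        # Map an effect size onto a character position, clamped to the axis.
        x = min(max(x, xmin), xmax)
        return round((x - xmin) / (xmax - xmin) * (width - 1))

    row = [" "] * width
    for c in range(column(lower), column(upper) + 1):
        row[c] = fill
    row[column(null)] = "|"
    row[column(estimate)] = "o"
    return f"{label:<10}" + "".join(row)


# Invented mean differences with 95% CIs.
studies = [("Study 1", -3.0, -4.96, -1.04),
           ("Study 2", -1.0, -2.96, 0.96),
           ("Study 3", -2.5, -6.42, 1.42)]
for label, est, lo_ci, hi_ci in studies:
    print(forest_row(label, est, lo_ci, hi_ci))
print(forest_row("Pooled", -2.06, -3.36, -0.75, fill="="))
```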

Combining the different studies narrows the confidence interval around the pooled effect or combined estimate, giving a more precise estimate of the true treatment effect (Taylor 2008).
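Under a fixed-effect, inverse-variance model the pooled standard error is always smaller than that of any single study, which is why the pooled interval is narrower. The Python sketch below uses invented standard errors. Under a random-effects model, which allows for heterogeneity between studies, the narrowing is generally less marked.

```python
import math


def ci_width(se, z=1.96):
    """Width of an approximate 95% confidence interval."""
    return 2 * z * se


# Invented standard errors for three studies of the same outcome.
study_ses = [1.0, 1.2, 0.9]
# Fixed-effect (inverse-variance) pooled SE: 1 / sqrt(sum of weights).
pooled_se = 1 / math.sqrt(sum(1 / se ** 2 for se in study_ses))

for se in study_ses:
    print(f"study CI width {ci_width(se):.2f}")
print(f"pooled CI width {ci_width(pooled_se):.2f}")
```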

P-values

Confidence intervals and P-values are statistics that indicate how much confidence reviewers can place in a result. More than four out of five research papers give P-values (Taylor 2008). A P-value is calculated on the assumption that a hypothesis is true; this is usually the null hypothesis that there is no difference between treatments. The P-value gives the probability of observing a difference at least as large as the one found if that null hypothesis were true, that is, if chance alone were at work. When a result is quoted as statistically significant, such a difference would be unlikely to arise by chance alone: P = 0.05 means that, if there were truly no difference between treatments, a difference at least this large would be expected in 1 study in 20. The lower the P-value, the harder it is to attribute the observed difference between treatments to chance, and so the greater the significance of the result.
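For an estimate with a standard error, such as a pooled treatment effect, a P-value is often obtained from a z-test using the normal approximation. The Python below is a sketch under that assumption; `math.erfc(|z|/√2)` gives the two-tailed area of the standard normal distribution beyond |z|.

```python
import math


def two_sided_p(estimate, se, null=0.0):
    """Two-sided P-value from a z-test of an estimate against the null value.

    It is the probability, if the null hypothesis were true, of a z-statistic
    at least as far from zero as the one observed (normal approximation).
    """
    z = (estimate - null) / se
    return math.erfc(abs(z) / math.sqrt(2))


print(round(two_sided_p(1.96, 1.0), 3))    # z = 1.96
print(round(two_sided_p(-4.0, 1.6), 4))    # invented mean difference, z = -2.5
```

A z-statistic of 1.96 corresponds to the familiar P = 0.05 threshold, which is also why a 95% confidence interval that just touches the null value corresponds to P = 0.05.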

Confidence intervals

The results of any study are estimates of what might happen if the treatment were given to the entire population of interest; the result is an estimate of the ‘true treatment effect’ for the entire population.

Confidence intervals are reported in three-quarters of published papers (Taylor 2008). Loosely speaking, the 95% confidence interval of our estimate is the range within which we can be 95% confident that the true population treatment effect lies.

A confidence interval indicates how precise the measured treatment effect is: the wider the interval, the less precise the estimate. The width of a confidence interval is related to the sample size of the study, with larger studies having narrower confidence intervals.
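Because the standard error shrinks in proportion to 1/√n, quadrupling the sample size halves the width of the confidence interval. The Python sketch below illustrates this for a mean difference, assuming two equal-sized groups with a common (invented) standard deviation of 8 points.

```python
import math


def md_ci_width(sd, n_per_group, z=1.96):
    """Approximate 95% CI width for a mean difference between two equal groups
    that share the same standard deviation."""
    se = sd * math.sqrt(2 / n_per_group)
    return 2 * z * se


for n in (25, 100, 400):
    print(n, "per group -> CI width", round(md_ci_width(8.0, n), 2))
```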

Systematic reviews often report an overall, combined effect from the different studies by using meta-analysis. The direction and magnitude of this average effect of interest, together with a consideration of its associated confidence interval, can be used to make decisions about the therapy under investigation, such as psychotherapy or medication. Box 6 shows examples of currently available systematic review questions and protocols (protocols are sometimes withdrawn).

BOX 6 Current examples of Cochrane protocols

Leucht C, Huhn M, Leucht S (2011) Amitriptyline versus placebo for major depressive disorder (Protocol). Cochrane Database of Systematic Reviews, issue 5: CD009138 (doi: 10.1002/14651858.CD009138).

van Marwijk H, Bax A (2008) Alprazolam for depression (Protocol). Cochrane Database of Systematic Reviews, issue 2: CD007139 (doi: 10.1002/14651858.CD007139).

As with other publications, the reporting quality of systematic reviews varies, limiting readers’ ability to assess their strengths and weaknesses. Guidance is available on which items to report in systematic reviews and meta-analyses: the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA; Moher 2009) statement aims to guide and improve the reporting of overviews (Box 7).

BOX 7 PRISMA statement

-

• Reviews should have a protocol and any protocol changes should be reported

-

• Assessment of risk of bias should be carried out on each primary study

-

• The reliability of the data for each important outcome should be evaluated

-

• Selective reporting of complete studies or publication bias should be addressed

-

• Selective reporting of certain outcomes and not others should be identified

The rise of evidence synthesis

According to Akobeng (2005), systematic reviews occupy the highest position in the hierarchy of evidence for papers on the effectiveness of treatments. But it should not be assumed that a study is obviously worthwhile simply because it is called a ‘systematic review’.

One should never be misled by the quantitative nature of meta-analysis into viewing the exercise as possessing greater logical force than is warranted. An overview is simply a review of the extant literature: perhaps a more thorough and fairer exercise than a narrative review, but a review nevertheless.

Improper use of overviews can lead to erroneous conclusions regarding treatment effectiveness. Publication bias especially needs to be investigated, because if the review contains only published papers, this will favour the incorporation of statistically significant findings over studies with non-significant findings.

It is often appropriate to systematically review a particular body of data, but it may be inappropriate and misleading to statistically pool results from the separate studies because of excessive heterogeneity or differences between the primary studies. It is vital to acknowledge the limitations of meta-analysis and indeed, in some situations, to resist the temptation to combine the individual studies statistically.

Applying the results to psychiatrists’ own patients

The reviewers should outline whether the evidence identified allows a robust conclusion regarding the objectives of the review (Higgins 2008c). A psychiatrist then has to decide whether the valid results of a study are applicable to their individual patient: good evidence may be available but may not be relevant to the patient in question. It is important for psychiatrists to decide whether the participants in the study being appraised are similar enough to their patients to allow application of the conclusions of the review to an individual’s problem.

A specific treatment may be shown to be efficacious in an overview, but its side-effects may outweigh the benefits. The psychiatrist, in conjunction with the patient, may decide that it is best not to offer the treatment despite the evidence for its efficacy. The patient’s views, expectations and values may weigh against the treatment, and, whatever the evidence, the cost may be prohibitive or the resource may not be available locally.

How science takes stock

It is part of the mission and a basic principle of the Cochrane Collaboration to promote the accessibility of systematic reviews of the effects of healthcare interventions to anyone wanting to make a decision about health issues. Simplicity and clarity are central, so it is important that the review is succinct and readable (Higgins 2008c).

Before the era of systematic reviews, clinicians deferred to experts whose allegiances were unknown and whose word was taken as sufficient. Systematic reviews, however, attempt to take account, in an explicit fashion, of the whole range of relevant findings on a particular topic. They try to provide a summary of all studies addressing a particular question. They also place the individual studies in context while separating the salient from the redundant.

A meta-analysis may accompany the systematic review, pooling the results of the different primary studies. This may increase the power and precision of estimates of overall treatment effects and give an improved reflection of reality.

Conclusions

It is important to develop the ability to critically appraise the methodology of review articles, but also to appraise the applicability of the results to individual patients. These reviews can induce humility by highlighting the inadequacy of the existing evidence.

Every systematic review is derivative and can make few claims for originality. It is a significant but interim station on the ‘evidence journey’. The systematic review is often not the final destination (Tyrer 2008) and overviews may not be the pinnacle of the evidence-based table. Research in any one area may be patchy, so evidence synthesis will often highlight important knowledge gaps.

Systematic reviews allow clinicians to rise above the evidence, survey the terrain and examine the many pieces of a complex puzzle. Overviews can guide difficult clinical decision-making. They can also channel the stream of clinical research towards relevant horizons (Egger 2001). In psychiatry, the evidence from quality systematic reviews can be combined with clinical experience and the patient’s preferences to help a vulnerable and disadvantaged patient group.

MCQs

Select the single best option for each question stem

-

1 Systematic reviews should:

-

a always contain a meta-analysis

-

b include all studies, regardless of quality

-

c search the smallest number of electronic sources

-

d avoid English language studies

-

e always assess bias in included studies.

-

-

2 Systematic reviews should:

-

a take second place to narrative reviews

-

b avoid critical appraisal of included studies

-

c avoid focused questions

-

d include studies with non-significant results

-

e avoid specific databases, such as PsycInfo.

-

-

3 Traditional narrative reviews:

-

a now never appear

-

b are never popular

-

c often use informal, subjective methods to collect and interpret studies

-

d are consistently of high quality

-

e never report a personal view.

-

-

4 Critical appraisal:

-

a never addresses the validity of trial methodology

-

b is a form of critical thinking

-

c never assesses whether the right type of study was included in overviews

-

d never looks at bias assessment

-

e is not applicable to systematic reviews.

-

-

5 Meta-analysis is:

-

a a one-stage process

-

b a qualitative, rather than a quantitative, technique

-

c a way of decreasing statistical power

-

d never displayed in graphic form as a forest plot

-

e a statistical technique in which weighted data from individual studies are pooled.

-

MCQ answers

| Question | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| Answer | e | d | c | b | e |
