Implications

Our aim is to establish vocalisation as an innovative parameter for oestrus detection in dairy cows. We present a combined hardware and software solution for recording individual vocalisations in group-housed cattle, for use as an additional oestrus indicator on dairy farms. This could be of interest to researchers, farmers and the farm equipment industry. We show that individual vocalisations can be detected automatically and callers correctly identified, even in freely moving group-housed cows. These results are promising for automatic oestrus detection systems and, beyond that, for further applications in monitoring animal health and welfare status.

Introduction

Oestrus detection is an essential component of successful reproduction management for dairy cattle. In recent decades, automatic devices have been developed to assist farmers in this task. The parameter most frequently acquired by automatic oestrus detection devices is an increase in physical activity, measured by pedometers attached to the animal’s leg or by other devices worn at its neck (Rutten et al., 2013). Another behaviour currently monitored in the context of oestrus detection is rumination (Reith et al., 2012), with the main parameters being the duration of rumination and changes in the time spent ruminating during certain daytime intervals. Further commercially available devices detect mounting behaviour as part of oestrus behaviour (Walker et al., 1996). These mounting detectors are attached to the cow’s back and register mounting by herd members, thereby identifying cows that show standing heat behaviour, that is, cows that tolerate being mounted by a potential mating partner. However, although these parameters have been implemented in commercially available automatic oestrus detection devices, farmers still face significant economic losses due to undetected oestrus. Beyond oestrus detection, commercially available devices typically also use physical activity and rumination for health monitoring.

Another behavioural parameter of farm animals that is monitored, albeit rarely, is vocal behaviour. Vocalisations convey not only semantic information (e.g., ‘I am here’ in contact calls) but also contextual information about, for example, body size, sex, identity, state of the sexual cycle, rank and exhaustion (Fischer et al., 2004; Mielke and Zuberbühler, 2013; Pitcher et al., 2014). Pigs produce context-specific distress calls (screams) when confronted with acute, severe stressors (Puppe et al., 2005; Düpjan et al., 2008), which can be detected automatically to monitor their welfare (Schön et al., 2001; Schön et al., 2004), but they also show subtle variations in vocalisation indicative of their affective state (Leliveld et al., 2016) and emotional reactivity (Leliveld et al., 2017). In cattle, vocalisation rates are known to increase on the day of oestrus (Schön et al., 2007; Dreschel et al., 2014), and the vocalisation climax occurs shortly before or at the climax of oestrus behaviour (Röttgen et al., 2018). Considering that endogenous hormone secretion is likewise associated with oestrus behaviour (Lyimo et al., 2000; Aungier et al., 2015), vocalisation can serve as an indirect indicator of endogenous hormonal changes and therefore as a potential additional parameter for automatic oestrus detection.

There are some examples of successful acoustic monitoring of farm animals, for instance, a commercial device for cough detection in pigs. The device records and analyses incoming sounds via stationary microphones, identifies coughs and locates, by triangulation, the housing compartment from which they originate (Silva et al., 2008). Meen et al. (2015) proposed a similar system to monitor animal welfare in cattle. However, while assigning a call to a specific individual (henceforth termed ‘caller identification’) is not essential for cough detection in pigs, it is essential for oestrus detection. Yajuvendra et al. (2013) attempted to solve the problem of caller identification by using individual structural characteristics of vocalisations. Similar techniques have been used in wildlife species (e.g., in blue monkeys: Mielke and Zuberbühler, 2013). However, these techniques require high-quality vocalisation samples from each individual to establish its individual call characteristics. For vocalisation to be used in on-farm automatic oestrus detection devices, the system must be easy to use and caller identification must be accurate. A system based on individual samples therefore seems unlikely to be feasible. Another possibility that has been investigated is to equip each animal with a microphone (as tested in chipmunks: Couchoux et al., 2015). In group-housed animals, however, caller identification might be inaccurate, as group mates can vocalise close to another individual’s microphone (but see Gill et al., 2016, for a technical solution in zebra finches).

Automatic detection of specific behaviours at the individual level is the first step towards implementing such detection in technical devices that support farmers. A very challenging task, beyond vocalisation detection itself, is to develop algorithms that make the collected data easy to interpret on farms. Such algorithms must compensate for and adapt to individual variation in order to accurately identify a large percentage of animals without manual adjustment. They must therefore be based on fundamental knowledge of individual variation, the detection errors of the system and the timing of the behaviours relative to the event (e.g., oestrus) they are intended to detect.

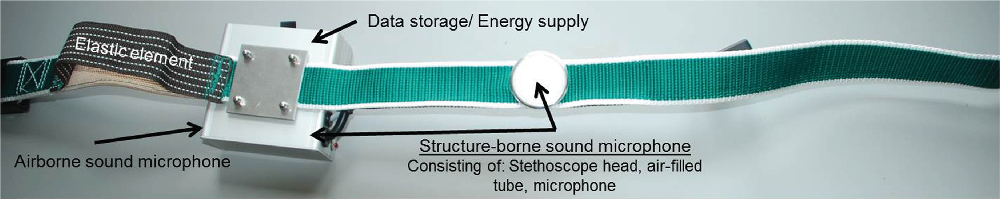

If calls can be detected and the caller correctly identified with sufficient sensitivity and specificity, future developments can use these data to detect peaks in individual vocalisation rates and thereby assist in oestrus detection. We therefore developed a collar-based cattle call monitor (CCM) with a structure-borne sound microphone (SBM), an airborne sound microphone (ABM) and a recording unit, combined with a postprocessing algorithm that identifies the caller by matching the information from both microphones. The aim of this paper is first to present the developed system and then to present a pilot study validating its functionality, in which the automatic detection of calls from individual heifers was compared with a video-based assessment of these calls by a trained human observer, a technique that has so far been considered the ‘gold standard’.

Materials and methods

Development of the Cattle Call Monitor

Collar

The CCM was based on a regular cattle neck strap with a plastic locking system (see Figure 1). To ensure proper contact with the animal’s neck, an elastic band was inserted close to the lock. A box for the technical equipment was mounted on the collar next to the elastic band. This box contained an ABM (Electret-Condenser-Microphone EMY-9765P, EKULIT Elektrotechnik, Ostfildern, Germany; frequency range: 30 to 16 000 Hz), an SBM for recording structure-borne sounds (MicW i456 Cardioid Recording Microphone, MicW Audio, Beijing, China; frequency range: 100 to 10 000 Hz), a power supply, a microSD card (Verbatim 8 GB microSDHC Class 10; Verbatim Ltd., Charlotte, NC, USA) as the recording unit and a processing unit (TMS320C5515 eZdsp; Spectrum Digital, Inc., Stafford, TX, USA). To capture structure-borne sounds, a stethoscope head was mounted on the inside of the strap and connected to the SBM in the box via an air-filled plastic tube. The stethoscope head was positioned on the left side of the animal’s neck over the cleido-occipital muscle and the cervical part of the trapezius muscle. This area allows firm contact between the stethoscope head and the animal’s body and vibrates noticeably when the animal vocalises. Audio data were sampled via the stereo line-in of the processing unit, with one channel used for the SBM and the other for the ABM. Both channels were sampled at 22 048 Hz to convert the analogue sound signal into digital data. Data were saved on the microSD card in files of a fixed size of 44 112 KB. Every day, the collars were detached from the animals so that the data could be read out and stored in a central location.

Figure 1 Picture of the collar-based cattle call monitor (CCM). The essential components and their location at the collar are marked either with arrows or written on the component itself.

Machine-aided vocalisation detection

The stored data were analysed on a stationary computer using an algorithm implemented in custom-built software (see Supplementary Figure S1) coded in LabVIEW™ (LabVIEW 2014, Service Pack 1, Version 14.0.1f3; National Instruments, Austin, TX, USA). In general, automatic recording of vocalisations requires separating sound events from silent periods and then identifying animal calls among these sound events. As the exact start and end of a sound can hardly be detected automatically under on-farm recording conditions, the incoming microphone signal has to be analysed in segments called ‘windows’. We set the window size to 1024 data points, which at our sampling rate of 22 048 Hz (i.e., 22 048 data points per second) corresponds to a duration of 46.4 ms. Windows exceeding the amplitude threshold of 1% of the maximum voltage qualified as part of a sound event; they were temporarily saved to the buffer and merged with all adjacent windows that also passed this criterion. A sound event ended as soon as a window fell below the amplitude threshold. This event-oriented procedure for recording animal calls was previously developed by our research group (Schön et al., 2004). The corresponding SBM signals were saved to the buffer together with the ABM signals.
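The window arithmetic and the event segmentation described above can be sketched in a few lines. This is a minimal illustration, not the LabVIEW implementation used in the study: the function names are hypothetical, and we assume that the 1% threshold is compared with the peak absolute amplitude of each window and that a trailing partial window is ignored.

```python
from typing import List, Tuple

WINDOW_SIZE = 1024          # data points per window (from the text)
SAMPLE_RATE = 22_048        # sampling rate in Hz (from the text)
THRESHOLD_FRACTION = 0.01   # amplitude threshold: 1% of the maximum voltage


def window_duration_ms(window_size: int = WINDOW_SIZE,
                       sample_rate: int = SAMPLE_RATE) -> float:
    """Duration of one analysis window in milliseconds."""
    return 1000.0 * window_size / sample_rate


def segment_sound_events(signal: List[float], max_voltage: float,
                         window_size: int = WINDOW_SIZE) -> List[Tuple[int, int]]:
    """Split a signal into sound events made of consecutive loud windows.

    Returns (first_window, end_window) pairs with exclusive ends. Assumption:
    a window is 'loud' if its peak absolute amplitude exceeds the threshold.
    """
    threshold = THRESHOLD_FRACTION * max_voltage
    n_windows = len(signal) // window_size  # a trailing partial window is ignored
    events: List[Tuple[int, int]] = []
    start = None
    for w in range(n_windows):
        window = signal[w * window_size:(w + 1) * window_size]
        loud = max(abs(x) for x in window) > threshold
        if loud and start is None:
            start = w                      # a new sound event begins
        elif not loud and start is not None:
            events.append((start, w))      # the first quiet window ends the event
            start = None
    if start is not None:                  # event still open at the end of the signal
        events.append((start, n_windows))
    return events


print(f"{window_duration_ms():.1f} ms")  # 46.4 ms
```

Merging adjacent loud windows into one event mirrors the buffering step in the text; in the study, the SBM channel would be buffered over the same window range.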

To qualify as a potential call and be stored permanently, a recorded sound event had to meet three criteria (see Figure 2): (1) the ABM signal had to lie in the frequency range of 50 to 2000 Hz; (2) the ABM sound event had to last between 0.5 and 10 s; and (3) the corresponding SBM signal had to show, on average, more than five zero crossings per window. These criteria were implemented to record vocalisations from the focus animal only and to prevent false recordings. Criterion (1) prevented the recording of low-frequency background noise, for example, noise from heavy farm machinery such as feed mixing vehicles. Criterion (2) excluded short-duration background noise of normal to high amplitude, for example, from rattling equipment. Criterion (3) ensured that both microphones (ABM and SBM) were active and should therefore have excluded calls from group mates (see Figure 3). If all three criteria (frequency range, duration and zero crossings) were met, the signals from both microphones were stored in a separate file together with a timestamp. Because of the windowing of the data, a single vocalisation could be split into two separate ‘calls’ (split call) if one window in the middle failed the criteria but the rest of the vocalisation met them again. A human observer then screened these preselected vocalisations for remaining ambient sounds, which were deleted manually (18% of the original CCM detections).
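The three storage criteria can be expressed as a single predicate. The sketch below is a hypothetical illustration rather than the study's code: the function names are ours, the bounds are treated as inclusive, zero crossings are counted as sign changes between successive non-zero samples, and criterion (1) is assumed to refer to the dominant ABM frequency, which is passed in as already computed.

```python
from typing import List

# Criterion limits taken from the text; inclusive bounds are an assumption.
FREQ_RANGE_HZ = (50.0, 2000.0)
DURATION_RANGE_S = (0.5, 10.0)
MIN_MEAN_ZERO_CROSSINGS = 5


def zero_crossings(window: List[float]) -> int:
    """Count sign changes between successive non-zero samples."""
    count = 0
    previous = None
    for x in window:
        if x == 0:
            continue                       # exact zeros carry no sign
        if previous is not None and (x > 0) != (previous > 0):
            count += 1
        previous = x
    return count


def qualifies_as_call(abm_peak_hz: float, abm_duration_s: float,
                      sbm_windows: List[List[float]]) -> bool:
    """Apply the three storage criteria to one buffered sound event."""
    # (1) frequency: here the dominant ABM frequency, computed elsewhere (assumption)
    in_band = FREQ_RANGE_HZ[0] <= abm_peak_hz <= FREQ_RANGE_HZ[1]
    # (2) duration of the ABM sound event
    right_length = DURATION_RANGE_S[0] <= abm_duration_s <= DURATION_RANGE_S[1]
    # (3) the SBM must average more than five zero crossings per window
    if not sbm_windows:
        return False
    mean_zc = sum(zero_crossings(w) for w in sbm_windows) / len(sbm_windows)
    return in_band and right_length and mean_zc > MIN_MEAN_ZERO_CROSSINGS
```

In this sketch, a group mate's call that reaches the ABM but leaves the focus animal's SBM flat (few zero crossings) is rejected, which corresponds to scenario (b) in Figure 3.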

Figure 2 Process of call recording. Program flow chart of the developed algorithm for caller identification in dairy cattle (Bos taurus).

Figure 3 Visualisation of caller identification. The picture shows the time signals of the airborne sound microphone (ABM, top row) and the structure-borne sound microphone (SBM, bottom row) for three possible scenarios in dairy cattle (Bos taurus): (a) a correctly detected call of the focus animal (match of ABM and SBM), (b) a correctly undetected call emitted by a group mate (mismatch of ABM and SBM) and (c) noise (correctly undetected; mismatch of ABM and SBM).

Validation of the Cattle Call Monitor (pilot study)

Animals and housing

Recordings were conducted at the Experimental Facility for Cattle at the Leibniz Institute for Farm Animal Biology in Dummerstorf, Germany. We observed five CCM-wearing German Holstein heifers from three groups of four animals each (group 1: three focus animals, i.e., heifers in oestrus or perioestrus; group 2: one focus animal and group 3: one focus animal). The observed animals were randomly selected from a larger group of heifers at the institute. The groups were successively housed in a 5- by 10-m pen with wood shavings as the bedding material. The pen was equipped with a self-locking feeding fence and three freely accessible drinking bowls. The animals were fed once a day with a total mixed ration ad libitum.

All procedures were approved by the federal state of Mecklenburg-Western Pomerania (LALLF M-V/TSD/7221.3-2.1-021/13).

Video observation

Audiovisual data were recorded using two cameras (EverFocus HDTV, EverFocus Co. Ltd., New Taipei City, Taiwan) positioned on the left and right of the pen and a microphone (Sennheiser MKE600; Sennheiser Electronic GmbH & Co. KG, Wedemark, Germany) positioned under the ceiling above the heifers, all of which were attached to a digital video recorder (EDR HD-4H4, EverFocus Co. Ltd., New Taipei City, Taiwan). Video recording was performed for 24 h on all analysed days. To synchronise the CCM data with the video data, a vocal timestamp was included on the audio stream shortly before the collars were reattached to the heifers (i.e., the experimenter announced the date, hour, minute and second, as given in the video live stream, and the subject’s identification number).

Video analysis covering 5 days of the perioestrus and oestrus periods was performed with The Observer XT 10.1 software (Noldus Information Technology, Wageningen, the Netherlands). Every vocalisation was coded as a point event, and the caller was identified (as the focus animal v. the group mates) based on characteristic head movements and/or exhalation of the focus animal synchronous with the vocalisation (Röttgen et al., 2018). These video-observed vocalisations served as the gold standard against which the machine-detected vocalisations were compared.

Comparing video observation to machine-aided detection

The machine-detected and the video-observed vocalisations were first compared based on the timestamps in order to find the matching pairs. To do this, the CCM data were imported into the video observation files as external data.

Events recorded by the CCM and in the videos were classified into the following categories: (1) true positive: vocalisation of the focus animal was identified in the video and correctly detected by the CCM; (2) true negative: vocalisation by a group mate was identified in the video and was (correctly) not assigned to the focus animal by the CCM; (3) false positive: no vocalisation of the focus animal was identified in the video, but vocalisation was incorrectly detected by the CCM and (4) false negative: vocalisation of the focus animal was identified in the video but was not identified by the CCM.
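The timestamp matching and classification steps described above could be sketched as follows. This is a hypothetical illustration, not the procedure used in the study (which imported the CCM data into The Observer XT): the function `classify_events`, the greedy nearest-neighbour pairing and the 2-s matching tolerance are all assumptions. Any CCM detection left without a video counterpart, such as the second part of a split call, is counted as a false positive; here the part nearest to the video timestamp is taken as the match.

```python
from bisect import bisect_left
from collections import Counter
from typing import List, Tuple


def classify_events(video: List[Tuple[float, str]], ccm_times: List[float],
                    tolerance_s: float = 2.0) -> Counter:
    """Pair video-coded calls with CCM detections and count TP/FN/FP/TN.

    video: (time in s, caller) with caller 'focus' or 'mate'.
    ccm_times: times (s) of calls the CCM assigned to the focus animal.
    tolerance_s: hypothetical maximum timestamp offset for a match.
    """
    ccm = sorted(ccm_times)
    used = [False] * len(ccm)
    counts = Counter({"TP": 0, "FN": 0, "FP": 0, "TN": 0})
    for t, caller in sorted(video):
        # nearest still-unused CCM detection within the tolerance window
        best = None
        i = bisect_left(ccm, t - tolerance_s)
        while i < len(ccm) and ccm[i] <= t + tolerance_s:
            if not used[i] and (best is None or abs(ccm[i] - t) < abs(ccm[best] - t)):
                best = i
            i += 1
        if best is not None:
            used[best] = True
        if caller == "focus":
            counts["TP" if best is not None else "FN"] += 1
        else:  # group mate: a match means the call was wrongly assigned
            counts["FP" if best is not None else "TN"] += 1
    # CCM detections with no video vocalisation (e.g. second part of a split call)
    counts["FP"] += used.count(False)
    return counts
```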

Statistical analysis

Vocalisations that occurred during collar changes (n=37) or during a CCM malfunction in which the microSD card was corrupted and its data could not be accessed (n=53) were excluded.

The following indices were calculated: sensitivity, specificity, positive predictive value (PPV) and negative predictive value (NPV).
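These indices presumably follow the conventional diagnostic-test definitions. In terms of the four categories defined above, with TP, TN, FP and FN denoting the numbers of true positives, true negatives, false positives and false negatives, they can be written as:

$$
\text{Sensitivity} = \frac{TP}{TP + FN}, \qquad
\text{Specificity} = \frac{TN}{TN + FP},
$$

$$
\text{PPV} = \frac{TP}{TP + FP}, \qquad
\text{NPV} = \frac{TN}{TN + FN}.
$$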

For split calls (see the ‘Machine-aided vocalisation detection’ section), the first ‘call’ was counted as a true positive and the second ‘call’ as a false positive.

Results

In total, 2171 vocalisations were detected from the five focus animals and their group mates. Of these, 1404 vocalisations were assigned to the focus animals by video observation and 721 vocalisations were emitted by their group mates (Table 1).

Table 1 Total number of vocalisations. Overview of the number of calls detected in dairy cattle (Bos taurus) by video observation and cattle call monitor (CCM) across subjects and the derived indices

The CCM operated continuously for 24 h between power supply changes and functioned throughout the pilot study. The system recorded 576 h of audio data, which were analysed by the algorithm; only 24 h had to be omitted from the analyses because of the CCM malfunction. Technical problems encountered while testing the prototype were the limited buffer capacity of the processing unit and the limited writing speed of the microSD cards. These problems caused an offset between the audio file timestamps and the video timestamps, as well as data gaps within and between files. The gaps between files accounted for approximately 1% of the focus animals’ vocalisations going undetected by the CCM.

During the observation period, analysis of 576 h of audiovisual recordings yielded 1404 vocalisations from the five heifers, and the algorithm detected 1266 vocalisations in the same period (Table 1). Of the 1404 vocalisations, 1220 (87%) were detected by the CCM with correct caller identification (true positives). The remaining 184 vocalisations were not detected by the CCM (false negatives). Of these, 82 were clearly visible and audible in the video but were nevertheless absent from the automatic detection data, 81 were ‘moo’-type vocalisations that were (too) short and/or (too) quiet, and 6 were throaty. In the remaining 15 cases, the vocalisations occurred in a gap between two data blocks.

The CCM detected 46 vocalisations that were not emitted by the focus heifer (false positives). Out of these vocalisations, 19 were vocalisations by group mates, 5 were probably vocalisations by group mates but occurred simultaneously with farm equipment noise, and the remaining 22 were split calls (see above).

These findings resulted in a sensitivity of 87%, a specificity of 94%, a negative predictive value of 80% and a positive predictive value of 96%.
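As an arithmetic check, the sketch below recomputes the four indices from the counts reported above (TP = 1220, FN = 184, FP = 46). The true-negative count is not stated explicitly in this section; taking TN as the 721 group-mate vocalisations (an assumption) reproduces the rounded values given here. The function name `diagnostic_indices` is hypothetical.

```python
def diagnostic_indices(tp: int, fn: int, fp: int, tn: int) -> dict:
    """Standard diagnostic-test indices from confusion-matrix counts."""
    return {
        "sensitivity": tp / (tp + fn),   # share of focus calls detected
        "specificity": tn / (tn + fp),   # share of non-focus events rejected
        "ppv": tp / (tp + fp),           # P(focus call | CCM detection)
        "npv": tn / (tn + fn),           # P(no focus call | no CCM detection)
    }


# TP, FN and FP as reported above; TN = 721 (all group-mate calls) is an assumption
indices = diagnostic_indices(tp=1220, fn=184, fp=46, tn=721)
for name, value in indices.items():
    print(f"{name}: {100 * value:.0f}%")
# sensitivity: 87%
# specificity: 94%
# ppv: 96%
# npv: 80%
```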

The detection rates varied between individual heifers, ranging from 60% to 100% (Table 2).

Table 2 Individual detection rates and main detection errors. Individual call numbers, detection rates and the main detection errors (percentages unless indicated otherwise) of the five focus heifers (Bos taurus)

CCM=cattle call monitor.

Discussion

In this study, a collar-based system for individual vocalisation detection in cattle was developed and validated. The results of the pilot study show that vocalisations during perioestrus and oestrus of individual animals can be detected with a sensitivity of 87% and a positive predictive value of 96%. The specificity was 94%, and the negative predictive value was 80%.

Most of the undetected vocalisations were short, quiet moos that most likely failed criteria 2 (ABM signal duration between 0.5 and 10 s) and 3 (more than five zero crossings per window in the SBM signal). These sounds are produced with a closed mouth and minimal change in posture. While such vocalisations might be of interest in the context of animal welfare, they might be irrelevant for oestrus detection. Schön et al. (2007) found a tendency towards more nonharmonic vocalisations on the day of oestrus than on dioestrus days, and these nonharmonic calls differ in structure and length from short, quiet moos. The developed algorithm appears to have had difficulty detecting moos, which is also apparent in the individual detection rates: for example, the animal that produced more moos than the others had the lowest detection rate (60%). However, the duration of detected calls must be restricted to suppress the detection of ambient sounds. During development of the prototype, metallic sounds close to the SBM (e.g., caused by the self-locking feeding fence) were found to have a strong effect on that microphone, with amplitudes above the threshold (1% of the maximal voltage of the ABM). Because duration is the only criterion that discriminates these sound events from calls, the duration restriction, which also excludes short moos, is likely to have lowered the negative predictive value. This index gives the probability that a ‘no vocalisation’ readout from the CCM is correct. In contrast, the positive predictive value, which gives the probability that a registered vocalisation was indeed produced by the focus animal, was high. Therefore, since eliminating noise is crucial and undetected moos may not affect oestrus detection (as nonharmonic calls seem to be more important for detecting oestrus in cows), we see no need to alter the algorithm.

The second large group of undetected vocalisations comprised vocalisations that were clearly visible/audible and assignable to the focus animal. These vocalisations may have been missed by the CCM because the SBM was not in proper contact with the heifer’s body or because a data gap occurred while data were being written to the memory card at the time of the vocalisation. Contact between the heifer’s neck and the SBM was sufficient in most cases, but it can be impaired when specific neck muscles contract. This problem could only be solved by mounting the SBM permanently and directly on the skin or by using a much tighter-fitting collar. Permanent mounting would require implanting or stitching the microphone to the animal, which is not feasible for on-farm use. A tighter-fitting collar would probably cause mechanical problems during feed intake through constriction of the oesophagus, and it might also cause venous stasis in the jugular vein and mechanical skin erosion. Hence, given the high overall sensitivity and the considerable side effects of the possible solutions, which could impair animal welfare, we consider this inaccuracy negligible.

The results showed two kinds of data gaps: gaps within files and gaps between files. Gaps within files were difficult to reconstruct on an accurate timeline, so vocalisations missing from the automatic detection data as a result of such gaps were counted as mismatches. Gaps between files, however, were easy to reconstruct, and vocalisations emitted during these gaps were excluded. Solving the problem of data gaps requires technical improvements. One approach could be to integrate the algorithm into the collar-mounted processing unit itself and to analyse the audio data stream directly, without saving it first. This would also be considerable progress towards a system applicable on farms.

Some vocalisations were divided into two parts because one window failed to match the recording criteria, but a subsequent window during that vocalisation fulfilled the criteria. This caused a split recording of these vocalisations. One potential adjustment to the algorithm could be to make it end the recording only if two or more consecutive windows fail the criteria.
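The proposed adjustment, ending a recording only after two or more consecutive failing windows, is equivalent to merging events separated by a single failing window. The sketch below illustrates this as a post-processing step on window-indexed events; the function name and the `max_gap_windows` parameter are hypothetical, and this is not part of the published algorithm.

```python
from typing import List, Tuple


def merge_split_events(events: List[Tuple[int, int]],
                       max_gap_windows: int = 1) -> List[Tuple[int, int]]:
    """Merge events separated by at most max_gap_windows failing windows.

    events: (first_window, end_window) pairs with exclusive ends. With the
    default of 1, a recording ends only after two or more consecutive
    failing windows.
    """
    merged: List[Tuple[int, int]] = []
    for start, end in sorted(events):
        if merged and start - merged[-1][1] <= max_gap_windows:
            # gap too short to end the call: extend the previous event
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged


print(merge_split_events([(1, 3), (4, 6), (9, 10)]))  # [(1, 6), (9, 10)]
```

A merged event would still need to be re-checked against the duration criterion (0.5 to 10 s), since merging lengthens it.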

Once these technical problems are solved, the next step is to develop algorithms for oestrus detection. These algorithms need to identify peaks in individual vocalisation rates from the CCM data and must therefore be robust against considerable individual variation in vocal behaviour. Our focus animals varied considerably in their individual vocalisation rates, but our data do not indicate that this variation affects the reliability of call detection (the highest detection rate, 100%, occurred in an animal with 54 vocalisations, and the second highest, 89%, in an animal with 1135 detected calls). One way to deal with such individual variation is an adaptive phase, in which the CCM data are analysed and the mean vocalisation rate per time interval is calculated for each cow. Significant increases above this mean could then indicate oestrus in that particular animal. Vocalisation rates also increase in stressful situations (Stookey et al., 1996; Grandin, 2001; Green et al., 2018). Such events should also be considered in the algorithm, for example, by comparing the data with the date of the last oestrus event (to check for an adequate time span) and/or by cross-checking the data against those of herd members during raw data processing. This would exclude increases in vocalisation that occur during routine events affecting the whole herd, such as regrouping or routine treatments (e.g., hoof trimming).
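The adaptive phase and the herd cross-check could be combined roughly as sketched below. This is a hypothetical illustration only: the function name, the per-interval counts, the baseline length, the mean-plus-k-SD rule, the SD floor and the herd fraction are all assumptions rather than validated values, and the comparison with the date of the last oestrus is not shown. The baseline period is assumed to be free of oestrus, and the herd cross-check only makes sense for groups of several cows.

```python
from statistics import mean, pstdev
from typing import Dict, List


def flag_oestrus_candidates(counts: Dict[str, List[int]], baseline_len: int = 24,
                            k: float = 3.0, min_sd: float = 1.0,
                            herd_fraction: float = 0.5) -> Dict[str, List[int]]:
    """Flag intervals in which a cow's call count rises above her own baseline.

    counts[cow][i] is the number of calls of that cow in interval i (e.g. hourly).
    All parameters are hypothetical defaults, not validated values.
    """
    n = min(len(series) for series in counts.values())
    limits = {}
    for cow, series in counts.items():
        base = series[:baseline_len]              # adaptive phase (assumed oestrus-free)
        sd = max(pstdev(base), min_sd)            # floor avoids a zero-width baseline
        limits[cow] = mean(base) + k * sd
    flags: Dict[str, List[int]] = {cow: [] for cow in counts}
    for i in range(baseline_len, n):
        above = [cow for cow in counts if counts[cow][i] > limits[cow]]
        # a rise shared by much of the herd (e.g. regrouping, hoof trimming)
        # is treated as a herd event rather than oestrus
        if len(above) >= herd_fraction * len(counts):
            continue
        for cow in above:
            flags[cow].append(i)
    return flags
```

Using each cow's own baseline lets cows with very different call rates be handled by the same rule, which is the point of the adaptive phase described above.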

Various technical approaches have been used to assess the vocalisations of animals, including farm animals. Yajuvendra et al. (2013) identified individual cows by applying speech analysis techniques to samples of cattle vocalisations. This approach requires a set of sample vocalisations from each animal from which the selected parameters can be extracted. During routine daily farm work, such samples are hard to obtain: cattle vocalise rarely, and there are many ambient sounds on a farm throughout the day, such as those emitted by other animals, farm equipment and personnel. Obtaining vocalisation samples for each cow would also be time-consuming and therefore not economically viable. In chipmunks, Couchoux et al. (2015) attached microphones to the animals’ necks and were able to record their vocal behaviour throughout the day. This approach does not require a set of individual vocalisation samples and can record all vocalisations of the focus animal. However, in a farm environment, many animals of the same species share a limited space, so a single microphone per animal is insufficient, and unlike in zebra finches (Gill et al., 2016), amplitude differences cannot be used for caller identification in dairy cattle. Techniques developed for free-ranging, solitary-living animals or songbirds would therefore need to be adapted for on-farm application. The CCM relies neither on individual vocalisation samples nor on isolated individual housing, which increases its on-farm applicability.

A technical acoustic solution for monitoring coughing in pigs uses multiple microphones, which enables the compartment in which a cough was emitted to be located by triangulation (Silva et al., 2008). Meen et al. (2015) suggested a similar system for monitoring vocalisations in cows. However, in the context of oestrus detection, locating the housing compartment is not sufficient, as the individual cow must be identified, for example, for artificial insemination. A further problem with this approach is the lack of a specific call indicating oestrus in cows. It has only been shown that the vocalisation rate rises on the day of oestrus (Schön et al., 2007; Dreschel et al., 2014; Röttgen et al., 2018), and individual vocalisation rates vary considerably both within and between animals. Even if further studies identify a specific vocalisation or a specific change in vocalisation patterns during oestrus, individual assignment would remain a challenge.

The CCM could also be integrated into or combined with existing oestrus detection devices that measure other behaviours, for example, physical activity or rumination. Monitoring more than one behavioural parameter simultaneously would likely increase the accuracy of oestrus detection in cattle (Reith and Hoy, 2018) and might be especially effective in cases where current methods fail (e.g., ‘silent heat’). Analysing the sequence of behaviours during oestrus might also enable improvements. For example, the climax of the vocalisation rate is almost synchronous with the climax of oestrus behaviour (Röttgen et al., 2018), and both are synchronous with the oestradiol peak (Lyimo et al., 2000), whereas the climax of physical activity occurs 6 to 12 h after the oestradiol peak (Aungier et al., 2015). Monitoring vocalisation could therefore give farmers early information that can be substantiated by later observations of increased physical activity.

Conclusion

Overall, the CCM has the potential to record cattle vocalisations and to correctly identify the calling individual in commercial housing environments. Automatically assigning vocalisations to an individual animal and a specific time point will reduce the time needed to search video recordings for rare cattle vocalisations and will therefore advance efforts to decode specific vocalisations. Such findings could in turn be implemented in the CCM to further improve its on-farm applicability. These results are promising for the future use of vocalisation in automatic oestrus detection systems and for further applications in monitoring animal health and welfare status.

Supplementary material

To view supplementary material for this article, please visit https://doi.org/10.1017/S1751731119001733

Acknowledgements

The authors thank the staff of the experimental facility for cattle of the Leibniz Institute for Farm Animal Biology and the technicians of the Institute of Behavioural Physiology and the Institute of Reproductive Biology for their admirable patience and support during development. The funding of this project by the European Social Fund Mecklenburg-Western Pomerania (V-630-F-124-2011/150 V-630-S-124-2011/151) is gratefully acknowledged. Two anonymous reviewers helped to improve the clarity of the manuscript with their valuable input.

Declaration of interest

The authors have no conflicting interests to disclose.

Ethics statement

The experiments were approved by the federal state of Mecklenburg-Western Pomerania (LALLF M-V/TSD/7221.3-2.1-021/13) according to German regulations.

Software and data repository resources

All raw data can be provided on request.