1. Introduction

Actuarial reserving amounts to forecasting future claim costs from incurred claims that the insurer is unaware of and from claims known to the insurer that may lead to future claim costs. The predictor commonly used is an expectation of future claim costs computed with respect to a parametric model, conditional on the currently observed data, where the unknown parameter vector is replaced by a parameter estimator. A natural question is how to calculate an estimate of the conditional mean squared error of prediction, MSEP, given the observed data, so that this estimate is a fair assessment of the accuracy of the predictor. The main question is how the variability of the predictor due to estimation error should be accounted for and quantified.

Mack’s seminal paper, Mack (1993), addressed this question for the chain ladder reserving method. Given a set of model assumptions, referred to as Mack’s distribution-free chain ladder model, Mack justified the use of the chain ladder reserve predictor and, more importantly, provided an estimator of the conditional MSEP for the chain ladder predictor. Another significant contribution to measuring variability in reserve estimation is England and Verrall (1999), which introduced bootstrap techniques to actuarial science. For other approaches to assessing the effect of estimation error in claims reserving, see, for example, Buchwalder et al. (2006), Gisler (2006), Wüthrich and Merz (2008b), Röhr (2016), Diers et al. (2016) and the references therein.

Even though Mack (1993) provided an estimator of conditional MSEP for the chain ladder predictor of the ultimate claim amount, the motivation for the approximations in the derivation of the conditional MSEP estimator is somewhat opaque, something commented upon in, for example, Buchwalder et al. (2006). Moreover, the references above make clear that there is no general agreement on how estimation error should be accounted for when assessing prediction error.

Many of the models underlying commonly encountered reserving methods, such as Mack’s distribution-free chain ladder model, have an inherent conditional or autoregressive structure. This conditional structure makes the observed data not only a basis for parameter estimation, but also a basis for prediction. More precisely, expected future claim amounts are functions, expressed in terms of observed claim amounts, of the unknown model parameters. These functions form the basis for prediction. Predictors are obtained by replacing the unknown model parameters by their estimators. In particular, the same data are used as the basis for prediction and for parameter estimation. In order to estimate prediction error in terms of conditional MSEP, it is necessary to account for the fact that the parameter estimates differ from the unknown parameter values. As demonstrated in Mack (1993), not doing so will make the effect of estimation error vanish in the conditional MSEP estimation.

We start by considering assessment of a prediction method without reference to a specific model. Given a random variable $X$ to be predicted and a predictor $\widehat{X}$, the conditional MSEP, conditional on the available observations, is defined as

$${\rm MSEP}_{{\cal F}_0}(X,\widehat{X}) \,:= \mathbb{E}\big[(X-\widehat{X})^2 \mid {\cal F}_0\big] = {\rm Var}(X\mid{\cal F}_0) + \mathbb{E}\big[(\widehat{X} - \mathbb{E}[X \mid {\cal F}_0])^2 \mid {\cal F}_0\big]. \tag{1}$$

The variance term is usually referred to as the process variance and the expected value is referred to as the estimation error. Notice that ${\rm MSEP}_{{\cal F}_0}(X,\widehat{X})$ is the optimal predictor of the squared prediction error $(X-\widehat{X})^2$ in the sense that it minimises $\mathbb{E}[((X-\widehat{X})^2-V)^2]$ over all ${\cal F}_0$-measurable random variables $V$ having finite variance. However, ${\rm MSEP}_{{\cal F}_0}(X,\widehat{X})$ typically depends on unknown parameters. In a time-series setting, we may consider a time series $(S_t)$ depending on an unknown parameter vector $\boldsymbol{\theta}$ and the problem of assessing the accuracy of a predictor $\widehat{X}$ of $X = S_t$ for some fixed $t > 0$ given that $(S_t)_{t \leq 0}$ has been observed. The claims reserving applications we have in mind are more involved and put severe restrictions on the amount of data available for prediction assessment based on out-of-sample performance.
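The decomposition in (1) can be checked numerically. The following Python sketch is only an illustration under assumed inputs: the three-point conditional distribution of $X$ given ${\cal F}_0$, the value of the predictor and the function names are hypothetical and not taken from the paper. Since $\widehat{X}$ is ${\cal F}_0$-measurable, it is treated as a constant under the conditional expectation.

```python
from fractions import Fraction


def conditional_msep(outcomes, probs, x_hat):
    """E[(X - x_hat)^2 | F_0] for a discrete conditional law of X given F_0."""
    return sum(p * (x - x_hat) ** 2 for x, p in zip(outcomes, probs))


def process_variance_and_estimation_error(outcomes, probs, x_hat):
    """Return (Var(X | F_0), (x_hat - E[X | F_0])^2)."""
    mean = sum(p * x for x, p in zip(outcomes, probs))
    variance = sum(p * (x - mean) ** 2 for x, p in zip(outcomes, probs))
    return variance, (x_hat - mean) ** 2


# Hypothetical conditional distribution of X given F_0 (an assumption).
outcomes = [Fraction(90), Fraction(100), Fraction(130)]
probs = [Fraction(1, 4), Fraction(1, 2), Fraction(1, 4)]
x_hat = Fraction(110)  # F_0-measurable predictor, a constant given F_0

msep = conditional_msep(outcomes, probs, x_hat)
variance, estimation_error = process_variance_and_estimation_error(
    outcomes, probs, x_hat
)
print(msep, variance, estimation_error)  # 250 225 25
```

Exact rational arithmetic is used so that the identity between the two sides of (1) holds without rounding error in this toy case.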

Typically, the predictor $\widehat{X}$ is taken as the plug-in estimator of the conditional expectation $\mathbb{E}[X \mid {\cal F}_0]$: if $X$ has a probability distribution with a parameter vector $\boldsymbol{\theta}$, then we may write

$$h(\boldsymbol{\theta};\,{\cal F}_0) \,:= \mathbb{E}[X \mid {\cal F}_0], \qquad \widehat{X} \,:= h(\widehat{\boldsymbol{\theta}};\,{\cal F}_0),$$

where $z \mapsto h(z;\,{\cal F}_0)$ is an ${\cal F}_0$-measurable function and $\widehat{\boldsymbol{\theta}}$ an ${\cal F}_0$-measurable estimator of $\boldsymbol{\theta}$. (Note that this definition of a plug-in estimator, i.e. the estimator obtained by replacing an unknown parameter $\boldsymbol{\theta}$ with an estimator $\widehat{\boldsymbol{\theta}}$ of the parameter, is not to be confused with the so-called plug-in principle, see e.g. Efron and Tibshirani (1994: Chapter 4.3), where the estimator is based on the empirical distribution function.) Since the plug-in estimator of

$$\mathbb{E}\big[(\widehat{X} - \mathbb{E}[X \mid {\cal F}_0])^2 \mid {\cal F}_0\big] = \big(h(\widehat{\boldsymbol{\theta}};\,{\cal F}_0) - h(\boldsymbol{\theta};\,{\cal F}_0)\big)^2$$

is equal to 0, it is clear that the plug-in estimator of ${\rm MSEP}_{{\cal F}_0}(X,\widehat{X})$ coincides with the plug-in estimator of ${\rm Var}(X\mid{\cal F}_0)$, which fails to account for estimation error and underestimates ${\rm MSEP}_{{\cal F}_0}(X,\widehat{X})$. We emphasise that ${\rm MSEP}_{{\cal F}_0}(X,\widehat{X})$ and ${\rm Var}(X\mid{\cal F}_0)$ can be seen as functions of the unknown parameter $\boldsymbol{\theta}$, and that ${\rm MSEP}_{{\cal F}_0}(X,\widehat{X})(\widehat{\boldsymbol{\theta}})$ and ${\rm Var}(X\mid{\cal F}_0)(\widehat{\boldsymbol{\theta}})$ are to be interpreted as the functions $z \mapsto {\rm MSEP}_{{\cal F}_0}(X,\widehat{X})(z)$ and $z \mapsto {\rm Var}(X\mid{\cal F}_0)(z)$ evaluated at $z = \widehat{\boldsymbol{\theta}}$. This notational convention will be used throughout the paper for other quantities as well.
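To make the failure of the plug-in estimator concrete, the sketch below uses a hypothetical one-step model in the style of the chain ladder: given ${\cal F}_0$, the conditional mean of $X$ is assumed to be $f c$ and the conditional variance $\sigma^2 c$, where $c$ is the latest observed claim amount. The model, the numerical values and all function names are assumptions made for illustration only.

```python
def h(z, c_latest):
    """Basis of prediction: conditional mean of X as a function of z."""
    return z * c_latest


def conditional_msep(f, sigma2, f_hat, c_latest):
    """Process variance plus squared estimation error, seen as a function
    of the parameter (f, sigma2), with the predictor h(f_hat) held fixed."""
    process_variance = sigma2 * c_latest
    estimation_error = (h(f_hat, c_latest) - h(f, c_latest)) ** 2
    return process_variance + estimation_error


c_latest = 100.0                # latest observed claim amount (part of F_0)
f_true, sigma2_true = 1.5, 2.0  # unknown in practice; fixed here for illustration
f_hat, sigma2_hat = 1.25, 1.75  # hypothetical F_0-measurable estimates

# Conditional MSEP evaluated at the true parameter.
true_msep = conditional_msep(f_true, sigma2_true, f_hat, c_latest)

# Plug-in estimator: the same function evaluated at the estimate, so the
# estimation-error term becomes (h(f_hat) - h(f_hat))^2 = 0.
plug_in_msep = conditional_msep(f_hat, sigma2_hat, f_hat, c_latest)

print(true_msep, plug_in_msep)  # 825.0 175.0
```

In this toy case the plug-in value equals the plug-in process variance alone, whatever the distance between the estimate and the true parameter.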

In the present paper, we suggest a simple general approach to estimate conditional MSEP. The basis of this approach is as follows. Notice that (1) may be written as

$${\rm MSEP}_{{\cal F}_0}(X,\widehat{X}) = \mathbb{E}\big[(X-h(\widehat{\boldsymbol{\theta}};\,{\cal F}_0))^2 \mid {\cal F}_0\big],$$

whose plug-in estimator, as demonstrated above, is flawed. Consider a random variable $\widehat{\boldsymbol{\theta}}^{\,*}$ such that $\widehat{\boldsymbol{\theta}}^{\,*}$ and $X$ are conditionally independent, given ${\cal F}_0$. Let

$${\rm MSEP}^*_{{\cal F}_0}(X,\widehat{X}) \,:= \mathbb{E}\big[(X-h(\widehat{\boldsymbol{\theta}}^{\,*};\,{\cal F}_0))^2 \mid {\cal F}_0\big] = {\rm Var}(X\mid{\cal F}_0) + \mathbb{E}\big[(h(\widehat{\boldsymbol{\theta}}^{\,*};\,{\cal F}_0) - h(\boldsymbol{\theta};\,{\cal F}_0))^2 \mid {\cal F}_0\big].$$

The definition of ${\rm MSEP}^*_{{\cal F}_0}(X,\widehat{X})$ is about disentangling the basis of prediction $z \mapsto h(z;\,{\cal F}_0)$ and the parameter estimator $\widehat{\boldsymbol{\theta}}$ that together form the predictor $\widehat{X}$. Both are expressions in terms of the available noisy data generating ${\cal F}_0$, the “statistical basis” in the terminology of Norberg (1986).

The purpose of this paper is to demonstrate that a straightforward estimator of ${\rm MSEP}^*_{{\cal F}_0}(X,\widehat{X})$ is a good estimator of ${\rm MSEP}_{{\cal F}_0}(X,\widehat{X})$ that coincides with estimators that have been proposed in the literature for specific models and methods, with Mack’s distribution-free chain ladder method as the canonical example. If $\widehat{\boldsymbol{\theta}}^{\,*}$ is chosen as $\widehat{\boldsymbol{\theta}}^{\perp}$, an independent copy of $\widehat{\boldsymbol{\theta}}$ independent of ${\cal F}_0$, then ${\rm MSEP}^*_{{\cal F}_0}(X,\widehat{X})$ coincides with Akaike’s final prediction error (FPE) in the conditional setting; see, for example, Remark 1 for details. Akaike’s FPE is a well-studied quantity used for model selection in time-series analysis; see Akaike (1969), Akaike (1970), and further elaborations and analysis in Bhansali and Downham (1977) and Speed and Yu (1993).

The random variable $\widehat{\boldsymbol{\theta}}^{\,*}$ should be chosen to reflect the variability of the parameter estimator $\widehat{\boldsymbol{\theta}}$. Different choices of $\widehat{\boldsymbol{\theta}}^{\,*}$ may be justified and we will in particular consider choices that make the quantity ${\rm MSEP}^*_{{\cal F}_0}(X,\widehat{X})$ computationally tractable. In Diers et al. (2016), “pseudo-estimators” are introduced as a key step in the analysis of prediction error in the setting of the distribution-free chain ladder model. Upon identifying the vector of “pseudo-estimators” with $\widehat{\boldsymbol{\theta}}^{\,*}$, the approach in Diers et al. (2016) and the one presented in the present paper coincide in the setting of the distribution-free chain ladder model. Moreover, the approaches considered in Buchwalder et al. (2006) are compatible with the general approach of the present paper for the special case of the distribution-free chain ladder model when assessing the prediction error of the ultimate claim amount.

When considering so-called distribution-free models, that is, models only defined in terms of a set of (conditional) moments, analytical calculation of ${\rm MSEP}^*_{{\cal F}_0}(X,\widehat{X})$ requires the first-order approximation

$$h(\widehat{\boldsymbol{\theta}}^{\,*};\,{\cal F}_0) \approx h^{\nabla}(\widehat{\boldsymbol{\theta}}^{\,*};\,{\cal F}_0) \,:= h(\boldsymbol{\theta};\,{\cal F}_0) + \nabla h(\boldsymbol{\theta};\,{\cal F}_0)^{\prime}(\widehat{\boldsymbol{\theta}}^{\,*}-\boldsymbol{\theta}),$$

where $\nabla h(\boldsymbol{\theta};\,{\cal F}_0)$ denotes the gradient of $z \mapsto h(z;\,{\cal F}_0)$ evaluated at $\boldsymbol{\theta}$. However, this is the only approximation needed. The use of this kind of linear approximation is very common in the literature analysing prediction error. For instance, it appears naturally in the error propagation argument used for assessing prediction error in the setting of the distribution-free chain ladder model in Röhr (2016), although the general approach taken in Röhr (2016) is different from the one presented here.

Before proceeding with the general exposition, one can note that, as pointed out above, Akaike’s original motivation for introducing FPE was as a device for model selection in autoregressive time-series modelling. In section 4, a class of conditional, autoregressive, reserving models is introduced for which the question of model selection is relevant. This topic will not be pursued any further, but it is worth noting that the techniques and methods discussed in the present paper allow for “distribution-free” model selection.

In section 2, we present in detail the general approach to estimation of conditional MSEP briefly summarised above. Moreover, in section 2, we illustrate how the approach applies to the situation with run-off triangle-based reserving when we are interested in calculating conditional MSEP for the ultimate claim amount and the claims development result (CDR). We emphasise the fact that the conditional MSEP given by (1) is the standard (conditional) $L^2$ distance between a random variable and its predictor. The MSEP quantities considered in Wüthrich et al. (2009) in the setting of the distribution-free chain ladder model are not all conditional MSEP in the sense of (1).

In section 3, we put the quantities introduced in the general setting in section 2 in the specific setting where data emerging during a particular time period (calendar year) form a diagonal in a run-off triangle (trapezoid).

In section 4, development-year dynamics for the claim amounts are given by a sequence of general linear models. Mack’s distribution-free chain ladder model is a special case, but the model structure is more general and includes, for example, development-year dynamics given by sequences of autoregressive models. Given the close connection between our proposed estimator of conditional MSEP and Akaike’s FPE, our approach naturally lends itself to model selection within a set of models.

In section 5, we show that we retrieve Mack’s famous conditional MSEP estimator for the ultimate claim amount and demonstrate that our approach coincides with the approach in Diers et al. (2016) to estimation of conditional MSEP for the ultimate claim amount for Mack’s distribution-free chain ladder model. We also argue that conditional MSEP for the CDR is simply a special case, choosing CDR as the random variable of interest instead of, for example, the ultimate claim amount. In section 5, we show agreement with certain CDR expressions obtained in Wüthrich et al. (2009) for the distribution-free chain ladder model, while noting that the estimation procedure is different from those used in, for example, Wüthrich et al. (2009) and Diers et al. (2016).

Although Mack’s distribution-free chain ladder model and the associated estimators/predictors provide canonical examples of the claim amount dynamics and estimators/predictors of the kind considered in section 4, analysis of the chain ladder method is not the purpose of the present paper. In section 6, we demonstrate that the general approach to estimation of conditional MSEP presented here applies naturally to non-sequential models such as the overdispersed Poisson chain ladder model. Moreover, for the overdispersed Poisson chain ladder model we derive a (semi-)analytical MSEP approximation which turns out to coincide with the well-known estimator from Renshaw (1994).

2. Estimation of Conditional MSEP in a General Setting

We will now formalise the procedure briefly described in section 1. All random objects are defined on a probability space $(\Omega,{\cal F},\mathbb{P})$. Let ${\cal T}=\{\underline{t},\underline{t}+1,\dots,\overline{t}\}$ be an increasing sequence of integer times with $\underline{t}<0<\overline{t}$ and $0 \in {\cal T}$ representing current time. Let $((S_t,S^{\perp}_t))_{t \in {\cal T}}$ be a stochastic process generating the relevant data. $(S_t)_{t \in {\cal T}}$ and $(S^{\perp}_t)_{t \in {\cal T}}$ are independent and identically distributed stochastic processes, where the former represents outcomes over time in the real world and the latter represents outcomes in an imaginary parallel universe. Let $({\cal F}_t)_{t \in {\cal T}}$ denote the filtration generated by $(S_t)_{t \in {\cal T}}$. It is assumed that the probability distribution of $(S_t)_{t \in {\cal T}}$ is parametrised by an unknown parameter vector $\boldsymbol{\theta}$. Consequently, the same applies to $(S^{\perp}_t)_{t \in {\cal T}}$. The problem considered in this paper is the assessment of the accuracy of the prediction of a random variable $X$ that may be expressed as some functional applied to $(S_t)_{t \in {\cal T}}$, given the currently available information represented by ${\cal F}_0$. The natural object to consider as the basis for predicting $X$ is

$$h(\boldsymbol{\theta};\,{\cal F}_0) \,:= \mathbb{E}[X \mid {\cal F}_0],$$

which is an ${\cal F}_0$-measurable function evaluated at $\boldsymbol{\theta}$. The corresponding predictor is then obtained as the plug-in estimator

$$\widehat{X} \,:= h(\widehat{\boldsymbol{\theta}};\,{\cal F}_0),$$

where $\widehat{\boldsymbol{\theta}}$ is an ${\cal F}_0$-measurable estimator of $\boldsymbol{\theta}$. We define

$${\rm MSEP}_{{\cal F}_0}(X,\widehat{X}) \,:= \mathbb{E}\big[(X-\widehat{X})^2 \mid {\cal F}_0\big] = {\rm Var}(X\mid{\cal F}_0) + \mathbb{E}\big[(\widehat{X} - \mathbb{E}[X \mid {\cal F}_0])^2 \mid {\cal F}_0\big] \tag{5}$$

and notice that

$$\begin{aligned}{\rm MSEP}_{{\cal F}_0}(X,\widehat{X}) &= {\rm Var}(X\mid{\cal F}_0) + \mathbb{E}\big[(h(\widehat{\boldsymbol{\theta}};\,{\cal F}_0)-h(\boldsymbol{\theta};\,{\cal F}_0))^2 \mid {\cal F}_0\big]\\ &= {\rm Var}(X\mid{\cal F}_0)(\boldsymbol{\theta}) + (h(\widehat{\boldsymbol{\theta}};\,{\cal F}_0)-h(\boldsymbol{\theta};\,{\cal F}_0))^2.\end{aligned}$$

We write

$${\rm MSEP}_{{\cal F}_0}(X,\widehat{X})(\boldsymbol{\theta}) = {\rm Var}(X\mid{\cal F}_0)(\boldsymbol{\theta}) + (h(\widehat{\boldsymbol{\theta}};\,{\cal F}_0)-h(\boldsymbol{\theta};\,{\cal F}_0))^2$$

to emphasise that ${\rm MSEP}_{{\cal F}_0}(X,\widehat{X})$ can be seen as an ${\cal F}_0$-measurable function of $\boldsymbol{\theta}$. Consequently, the plug-in estimator of ${\rm MSEP}_{{\cal F}_0}(X,\widehat{X})$ is given by

$${\rm MSEP}_{{\cal F}_0}(X,\widehat{X})(\widehat{\boldsymbol{\theta}}) = {\rm Var}(X\mid{\cal F}_0)(\widehat{\boldsymbol{\theta}}),$$

which coincides with the plug-in estimator of the process variance, leading to a likely underestimation of ${\rm MSEP}_{{\cal F}_0}(X,\widehat{X})$. This problem was highlighted already in Mack (1993) in the context of prediction/reserving using the distribution-free chain ladder model. The analytical MSEP approximation suggested for the chain ladder model in Mack (1993) is, in essence, based on replacing the second term on the right-hand side in (5), relating to estimation error, by another term based on certain conditional moments, conditioning on $\sigma$-fields strictly smaller than ${\cal F}_0$. These conditional moments are natural objects and straightforward to calculate due to the conditional structure of the distribution-free chain ladder claim-amount dynamics. This approach to estimate conditional MSEP was motivated heuristically as “average over as little as possible”; see Mack (1993: 219). In the present paper, we present a conceptually clear approach to quantifying the variability due to estimation error that is not model specific. The resulting conditional MSEP estimator for the ultimate claim amount is found to coincide with that found in Mack (1993) for the distribution-free chain ladder model; see section 5. This is further illustrated by applying the same approach to non-sequential, unconditional, models; see section 6, where it is shown that the introduced method can provide an alternative motivation of the estimator from Renshaw (1994) for the overdispersed Poisson chain ladder model.

With the aim of finding a suitable estimator of ${\rm MSEP}_{{\cal F}_0}(X,\widehat{X})$, notice that the predictor $\widehat{X} \,:= h(\widehat{\boldsymbol{\theta}};\,{\cal F}_0)$ is obtained by evaluating the ${\cal F}_0$-measurable function $z \mapsto h(z;\,{\cal F}_0)$ at $\widehat{\boldsymbol{\theta}}$. The chosen model and the stochastic quantity of interest, $X$, together form the function $z \mapsto h(z;\,{\cal F}_0)$ that is held fixed. This function may be referred to as the basis of prediction. However, the estimator $\widehat{\boldsymbol{\theta}}$ is a random variable whose observed outcome may differ substantially from the unknown true parameter value $\boldsymbol{\theta}$. In order to obtain a meaningful estimator of ${\rm MSEP}_{{\cal F}_0}(X,\widehat{X})$, the variability in $\widehat{\boldsymbol{\theta}}$ should be taken into account. Towards this end, consider the random variable $\widehat{\boldsymbol{\theta}}^{\,*}$, which is not ${\cal F}_0$-measurable and which is constructed to share key properties with $\widehat{\boldsymbol{\theta}}$. Based on $\widehat{X}^* \,:= h(\widehat{\boldsymbol{\theta}}^{\,*};\,{\cal F}_0)$, we will introduce versions of conditional MSEP from which estimators of conditional MSEP in (5) will follow naturally.

Assumption 2.1. $\widehat{\boldsymbol{\theta}}^{\,*}$ and $X$ are conditionally independent, given ${\cal F}_0$.

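Definition 2.1. Define ${\rm MSEP}_{{\cal F}_0}^*(X,\widehat{X})$ by

$${\rm MSEP}^*_{{\cal F}_0}(X,\widehat{X}) \,:= \mathbb{E}\big[(X-h(\widehat{\boldsymbol{\theta}}^{\,*};\,{\cal F}_0))^2 \mid {\cal F}_0\big].$$

Definition 2.1 and Assumption 2.1 together immediately yield

$${\rm MSEP}^*_{{\cal F}_0}(X,\widehat{X}) = {\rm Var}(X\mid{\cal F}_0) + \mathbb{E}\big[(h(\widehat{\boldsymbol{\theta}}^{\,*};\,{\cal F}_0) - h(\boldsymbol{\theta};\,{\cal F}_0))^2 \mid {\cal F}_0\big].$$

In general, evaluation of the second term on the right-hand side above requires full knowledge about the model. Typically, we want only to make weaker moment assumptions. The price paid is the necessity to consider the approximation

$$h(\widehat{\boldsymbol{\theta}}^{\,*};\,{\cal F}_0) \approx h^{\nabla}(\widehat{\boldsymbol{\theta}}^{\,*};\,{\cal F}_0) \,:= h(\boldsymbol{\theta};\,{\cal F}_0) + \nabla h(\boldsymbol{\theta};\,{\cal F}_0)^{\prime}(\widehat{\boldsymbol{\theta}}^{\,*}-\boldsymbol{\theta}),$$

where $\nabla h(\boldsymbol{\theta};\,{\cal F}_0)$ denotes the gradient of $z \mapsto h(z;\,{\cal F}_0)$ evaluated at $\boldsymbol{\theta}$.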

Notice that if ${\rm \mathbb E}[\,\widehat{\boldsymbol{\theta}}^{\,*} \mid \cal F_0] = \boldsymbol{\theta}$ and ${\rm Cov}(\widehat{\boldsymbol{\theta}}^{\,*} \mid \cal F_0)$ exists finitely a.s., then

$$\begin{aligned}{\rm \mathbb E}[(h^{\nabla}(\widehat{\boldsymbol{\theta}}^{\,*};\,\cal F_0) - h(\boldsymbol{\theta};\,\cal F_0))^2 \mid \cal F_0]&=\nabla h(\boldsymbol{\theta};\,\cal F_0)^{\prime}{\rm \mathbb E}[(\widehat{\boldsymbol{\theta}}^{\,*}-\boldsymbol{\theta})(\widehat{\boldsymbol{\theta}}^{\,*}-\boldsymbol{\theta})^{\prime} \mid \cal F_0]\nabla h(\boldsymbol{\theta};\,\cal F_0)\\&=\nabla h(\boldsymbol{\theta};\,\cal F_0)^{\prime}{\rm Cov}(\widehat{\boldsymbol{\theta}}^{\,*}\mid\cal F_0)\nabla h(\boldsymbol{\theta};\,\cal F_0)\end{aligned}$$
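To make the quadratic form concrete, the following minimal sketch evaluates $\nabla h(\boldsymbol{\theta};\,\cal F_0)^{\prime}{\rm Cov}(\widehat{\boldsymbol{\theta}}^{\,*}\mid\cal F_0)\nabla h(\boldsymbol{\theta};\,\cal F_0)$ for a hypothetical chain-ladder-type predictor $h(\boldsymbol{\theta};\,\cal F_0)=c\,\theta_1\theta_2$, where $c$ stands for the latest observed cumulative amount. The function `h`, all numerical values and the diagonal covariance matrix are illustrative assumptions and are not taken from the paper.

```python
# Hypothetical example: h(theta; F_0) = c * theta_1 * theta_2, with c the
# latest observed cumulative claim amount (an F_0-measurable constant).

def h(theta, c):
    return c * theta[0] * theta[1]

def grad_h(theta, c):
    # Analytic gradient of z -> h(z; F_0), evaluated at theta.
    return [c * theta[1], c * theta[0]]

def h_linear(z, theta, c):
    # First-order approximation h^nabla(z; F_0) around theta.
    g = grad_h(theta, c)
    return h(theta, c) + sum(g[k] * (z[k] - theta[k]) for k in range(len(theta)))

def quadratic_form(g, cov):
    # g' * cov * g for a gradient g and a covariance matrix cov.
    n = len(g)
    return sum(g[i] * cov[i][j] * g[j] for i in range(n) for j in range(n))

theta = [1.5, 1.1]            # assumed development factors
c = 1000.0                    # assumed latest cumulative payment
cov = [[0.010, 0.0],          # assumed Cov(theta* | F_0), diagonal in this toy case
       [0.0, 0.004]]

g = grad_h(theta, c)
estimation_error = quadratic_form(g, cov)   # second term of MSEP^{*,nabla}
```

With these assumed inputs the gradient is approximately (1100, 1500), so the estimation-error term is close to 1100² · 0.010 + 1500² · 0.004 = 21100. Only the covariance matrix of $\widehat{\boldsymbol{\theta}}^{\,*}$ enters, not its full distribution.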

Assumption 2.2. ${\rm \mathbb E}[\,\widehat{\boldsymbol{\theta}}^{\,*} \mid \cal F_0] = \boldsymbol{\theta}$ and ${\rm Cov}(\widehat{\boldsymbol{\theta}}^{\,*} \mid \cal F_0)$ exists finitely a.s.

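Definition 2.2. Define ${\rm MSEP}_{\cal F_0}^{*,\nabla}(X,\widehat{X})$ by

$${\rm MSEP}_{\cal F_0}^{*,\nabla}(X,\widehat{X})\,:={\rm Var}\!(X \mid \cal F_0)(\boldsymbol{\theta})+\nabla h(\boldsymbol{\theta};\,\cal F_0)^{\prime}{\rm Cov}(\widehat{\boldsymbol{\theta}}^{\,*} \mid \cal F_0)\nabla h(\boldsymbol{\theta};\,\cal F_0)$$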

Notice that ${\rm MSEP}_{\cal F_0}^*(X,\widehat{X})={\rm MSEP}_{\cal F_0}^{*,\nabla}(X,\widehat{X})$ if $h^{\nabla}(\widehat{\boldsymbol{\theta}}^{\,*} ;\,\cal F_0) = h(\widehat{\boldsymbol{\theta}}^{\,*} ;\,\cal F_0)$.

Remark 1. Akaike presented, in Akaike (Reference Akaike1969, Reference Akaike1970), the quantity FPE (final prediction error) for assessment of the accuracy of a predictor, intended for model selection by rewarding models that give rise to small prediction errors. Akaike demonstrated the merits of FPE when used for order selection among autoregressive processes.

Akaike’s FPE assumes a stochastic process ${(S_t)_{t \in \cal T}}$ of interest and an independent copy ${(S^{\perp}_t)_{t \in \cal T}}$ of that process. Let ${\cal F_0}$ be the σ-field generated by ${(S_t)_{t \in \cal T,t\leq 0}}$ and let $X$ be the result of applying some functional to ${(S_t)_{t \in \cal T}}$ such that $X$ is not ${\cal F_0}$-measurable. If ${(S_t)_{t \in \cal T}}$ is one-dimensional, then $X = S_t$, for some $t > 0$, is a natural example. Let ${h(\boldsymbol{\theta};\,\cal F_0)\,:={\rm \mathbb E}[X\mid\cal F_0]}$ and let ${h(\widehat{\boldsymbol{\theta}};\,\cal F_0)}$ be the corresponding predictor of $X$ based on the ${\cal F_0}$-measurable parameter estimator ${\widehat{\boldsymbol{\theta}}}$. Let ${\cal F_0^{\perp}}$, $X^{\perp}$, ${h(\boldsymbol{\theta};\,\cal F_0^{\perp})}$ and ${\widehat{\boldsymbol{\theta}}^{\perp}}$ be the corresponding quantities based on ${(S^{\perp}_t)_{t \in \cal T}}$. FPE is defined as

$${\rm FPE}(X,\widehat{X})\,:={\rm \mathbb E}\big[(X^{\perp}-h(\widehat{\boldsymbol{\theta}};\,\cal F_0^{\perp}))^2\big]$$

and it is clear that the roles of ${(S_t)_{t \in \cal T}}$ and ${(S^{\perp}_t)_{t \in \cal T}}$ may be interchanged to get

$${\rm FPE}(X,\widehat{X}) = {\rm \mathbb E}\big[(X^{\perp}-h(\widehat{\boldsymbol{\theta}};\,\cal F_0^{\perp}))^2\big]={\rm \mathbb E}\big[(X-h(\widehat{\boldsymbol{\theta}}^{\perp};\,\cal F_0))^2\big]$$

Naturally, we may consider the conditional version of FPE which gives

$${\rm FPE}_{\cal F_0}(X,\widehat{X}) = {\rm \mathbb E}\big[(X^{\perp}-h(\widehat{\boldsymbol{\theta}};\,\cal F_0^{\perp}))^2\mid\cal F_0^{\perp}\big]={\rm \mathbb E}\big[(X-h(\widehat{\boldsymbol{\theta}}^{\perp};\,\cal F_0))^2\mid\cal F_0\big]$$


Clearly, $\widehat{\boldsymbol{\theta}}^{\,*}=\widehat{\boldsymbol{\theta}}^{\perp}$ gives

$${\rm MSEP}_{\cal F_0}^{*}(X,\widehat{X})={\rm FPE}_{\cal F_0}(X,\widehat{X})$$

If $h^{\nabla}(\widehat{\boldsymbol{\theta}}^{\,*};\,\cal F_0)=h(\widehat{\boldsymbol{\theta}}^{\,*} ;\,\cal F_0)$, then choosing $\widehat{\boldsymbol{\theta}}^{\,*}=\widehat{\boldsymbol{\theta}}^{\perp}$ gives

$$\begin{aligned}{\rm MSEP}_{\cal F_0}^{*,\nabla}(X,\widehat{X})&\,:={\rm Var}\!(X \mid \cal F_0)(\boldsymbol{\theta})+\nabla h(\boldsymbol{\theta};\,\cal F_0)^{\prime}{\rm Cov}(\widehat{\boldsymbol{\theta}}^{\,*} \mid \cal F_0)\nabla h(\boldsymbol{\theta};\,\cal F_0)\\&= {\rm Var}\!(X \mid \cal F_0)(\boldsymbol{\theta})+\nabla h(\boldsymbol{\theta};\,\cal F_0)^{\prime}{\rm Cov}(\widehat{\boldsymbol{\theta}})\nabla h(\boldsymbol{\theta};\,\cal F_0)\\&={\rm FPE}_{\cal F_0}(X,\widehat{X})\end{aligned}$$

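Since ${\rm Cov}(\widehat{\boldsymbol{\theta}}^{\,*} \mid \cal F_0)$ is an $\cal F_0$-measurable function of $\boldsymbol{\theta}$, we may write

$${\rm MSEP}_{\cal F_0}^{*,\nabla}(X,\widehat{X})={\rm Var}\!(X \mid \cal F_0)(\boldsymbol{\theta})+\nabla h(\boldsymbol{\theta};\,\cal F_0)^{\prime}\boldsymbol{\Lambda}(\boldsymbol{\theta};\,\cal F_0)\nabla h(\boldsymbol{\theta};\,\cal F_0)$$

where

$$\boldsymbol{\Lambda}(\boldsymbol{\theta};\,\cal F_0)\,:={\rm Cov}(\widehat{\boldsymbol{\theta}}^{\,*} \mid \cal F_0)$$

We write

$$H^*(\boldsymbol{\theta};\,\cal F_0)\,:={\rm MSEP}_{\cal F_0}^*(X,\widehat{X}),\qquad H^{*,\nabla}(\boldsymbol{\theta};\,\cal F_0)\,:={\rm MSEP}_{\cal F_0}^{*,\nabla}(X,\widehat{X})$$

to emphasise that ${\rm MSEP}_{\cal F_0}^*(X,\widehat{X})$ and ${\rm MSEP}_{\cal F_0}^{*,\nabla}(X,\widehat{X})$ are $\cal F_0$-measurable functions of $\boldsymbol{\theta}$.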

The plug-in estimator $H^*(\widehat{\boldsymbol{\theta}};\,\cal F_0)$ of ${\rm MSEP}_{\cal F_0}^*(X,\widehat{X})$ may appear to be a natural estimator of ${\rm MSEP}_{\cal F_0}(X,\widehat{X})$. However, in most situations there will not be sufficient statistical evidence to motivate specifying the full distribution of $\widehat{\boldsymbol{\theta}}^{\,*}$. Therefore, $H^*(\widehat{\boldsymbol{\theta}};\,\cal F_0)$ is not likely to be an attractive estimator of ${\rm MSEP}_{\cal F_0}(X,\widehat{X})$. The plug-in estimator $H^{*,\nabla}(\widehat{\boldsymbol{\theta}};\,\cal F_0)$ of ${\rm MSEP}_{\cal F_0}^{*,\nabla}(X,\widehat{X})$ is more likely to be a computable estimator of ${\rm MSEP}_{\cal F_0}(X,\widehat{X})$, requiring only the covariance matrix $\boldsymbol{\Lambda}(\boldsymbol{\theta};\,\cal F_0)\,:={\rm Cov}(\widehat{\boldsymbol{\theta}}^{\,*} \mid \cal F_0)$ as a matrix-valued function of the parameter $\boldsymbol{\theta}$ instead of the full distribution of $\widehat{\boldsymbol{\theta}}^{\,*}$. We will henceforth focus solely on the estimator $H^{*,\nabla}(\widehat{\boldsymbol{\theta}};\,\cal F_0)$.

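Definition 2.3. The estimator of the conditional MSEP is given by

$$\widehat{{\rm MSEP}}_{\cal F_0}(X,\widehat{X})\,:={\rm Var}\!(X\mid\cal F_0)(\widehat{\boldsymbol{\theta}})+\nabla h(\widehat{\boldsymbol{\theta}};\,\cal F_0)^{\prime}\boldsymbol{\Lambda}(\widehat{\boldsymbol{\theta}};\,\cal F_0)\nabla h(\widehat{\boldsymbol{\theta}};\,\cal F_0)$$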

We emphasise that the estimator we suggest in Definition 2.3 relies on one approximation and one modelling choice. The approximation refers to

$$h(\widehat{\boldsymbol{\theta}}^{\,*};\,\cal F_0)\approx h^{\nabla}(\widehat{\boldsymbol{\theta}}^{\,*};\,\cal F_0)$$

and no other approximations will appear. The modelling choice refers to deciding on how the estimation error should be accounted for in terms of the conditional covariance structure ${\rm Cov}(\widehat{\boldsymbol{\theta}}^{\,*} \mid \cal F_0)$, where $\widehat{\boldsymbol{\theta}}^{\,*}$ satisfies the requirement ${\rm \mathbb E}[\,\widehat{\boldsymbol{\theta}}^{\,*} \mid \cal F_0] = \boldsymbol{\theta}$.

Before proceeding further with the specification of $\widehat{\boldsymbol{\theta}}^{\,*}$, one can note that in many situations it will be natural to structure data according to, for example, accident year. In these situations, it will be possible to express $X$ as $X=\sum_{i\in\cal I}X_i$ and consequently also $h(\boldsymbol\theta;\,\cal F_0)=\sum_{i\in\cal I} h_i(\boldsymbol\theta;\,\cal F_0)$. This immediately implies that the estimator (7) of conditional MSEP can be expressed in a way that simplifies computations.

Lemma 2.1. Given that $X=\sum_{i\in\cal I}X_i$ and $h(\boldsymbol\theta;\,\cal F_0)=\sum_{i\in\cal I} h_i(\boldsymbol\theta;\,\cal F_0)$, the estimator (7) takes the form

$$\begin{aligned}\widehat{{\rm MSEP}}_{\cal F_0}(X,\widehat{X})&=\sum_{i\in\cal I} \widehat{{\rm MSEP}}_{\cal F_0}(X_i,\widehat{X}_i)\\&\quad + 2\sum_{i,j\in{\cal I},\, i < j} \Big({\rm Cov}(X_i, X_j \mid\cal F_0)(\widehat{\boldsymbol{\theta}})+Q_{i,j}(\widehat{\boldsymbol{\theta}} ;\,\cal F_0)\Big)\end{aligned}$$

where

$$\begin{aligned}\widehat{{\rm MSEP}}_{\cal F_0}(X_i,\widehat{X}_i) &\,:= {\rm Var}\!(X_i\mid\cal F_0)(\widehat{\boldsymbol{\theta}})+Q_{i,i}(\widehat{\boldsymbol{\theta}} ;\,\cal F_0)\\Q_{i,j}(\widehat{\boldsymbol{\theta}} ;\,\cal F_0)&\,:=\nabla h_i(\widehat{\boldsymbol{\theta}} ;\,\cal F_0)^{\prime} \boldsymbol{\Lambda}(\widehat{\boldsymbol{\theta}};\,\cal F_0) \nabla h_j(\widehat{\boldsymbol{\theta}} ;\,\cal F_0)\end{aligned}$$
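The decomposition in Lemma 2.1 can be checked numerically. The sketch below compares the aggregate estimator, computed from the gradient of $h=\sum_i h_i$, with the sum of per-accident-year terms and cross terms. The two accident years, the gradients, the matrix `lam` (playing the role of $\boldsymbol{\Lambda}(\widehat{\boldsymbol{\theta}};\,\cal F_0)$) and the process covariances are all hypothetical numbers chosen for illustration.

```python
# Hypothetical inputs: two accident years (indexed 0 and 1), two parameters.
grads = [[2.0, 0.0],        # assumed gradient of h_0 at theta_hat
         [1.0, 3.0]]        # assumed gradient of h_1 at theta_hat
lam = [[0.5, 0.1],          # assumed Lambda(theta_hat; F_0), symmetric
       [0.1, 0.2]]
proc_cov = [[4.0, 1.0],     # assumed Cov(X_i, X_j | F_0)(theta_hat)
            [1.0, 9.0]]

def q(gi, gj):
    # Q_{i,j} = grad h_i' * Lambda * grad h_j
    p = len(gi)
    return sum(gi[a] * lam[a][b] * gj[b] for a in range(p) for b in range(p))

n = len(grads)

# Aggregate estimator: Var(X | F_0) + grad h' Lambda grad h, with h = sum_i h_i.
grad_total = [sum(g[a] for g in grads) for a in range(len(lam))]
var_total = sum(proc_cov[i][j] for i in range(n) for j in range(n))
msep_direct = var_total + q(grad_total, grad_total)

# Lemma 2.1: per-year estimators plus twice the cross terms for i < j.
msep_lemma = sum(proc_cov[i][i] + q(grads[i], grads[i]) for i in range(n))
msep_lemma += 2 * sum(proc_cov[i][j] + q(grads[i], grads[j])
                      for i in range(n) for j in range(i + 1, n))
```

Both routes give the same value (about 23.1 for these inputs), which is just the expansion of the quadratic form; the per-year form is convenient when accident-year results are reported separately.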

The proof of Lemma 2.1 follows from expanding the original quadratic form in the obvious way; see Appendix C. Even though Lemma 2.1 is trivial, it will be used repeatedly in later sections when the introduced methods are illustrated using, for example, different models for the data-generating process.

Assumption 2.3. ${\rm \mathbb E}[\,\widehat{\boldsymbol{\theta}}] = \boldsymbol{\theta}$ and ${\rm Cov}(\widehat{\boldsymbol{\theta}})$ exists finitely.

Given Assumption 2.3, one option is to choose $\widehat{\boldsymbol{\theta}}^{\,*}$ as an independent copy $\widehat{\boldsymbol\theta}^{\perp}$ of ${\widehat{\boldsymbol\theta}}$, based entirely on ${(S^{\perp}_t)_{t \in \cal T}}$ and hence independent of ${\cal F_0}$. An immediate consequence of this choice is

$${\rm \mathbb E}[\,\widehat{\boldsymbol{\theta}}^{\,*} \mid \cal F_0]={\rm \mathbb E}[\,\widehat{\boldsymbol{\theta}}]=\boldsymbol{\theta},\qquad {\rm Cov}(\widehat{\boldsymbol{\theta}}^{\,*} \mid \cal F_0)={\rm Cov}(\widehat{\boldsymbol{\theta}})$$

Since the specification $\widehat{\boldsymbol{\theta}}^{\,*}\,:=\widehat{\boldsymbol{\theta}}^{\perp}$ implies that ${\rm Cov}(\widehat{\boldsymbol{\theta}}^{\,*} \mid \cal F_0)$ does not depend on $\cal F_0$, we refer to $\widehat{\boldsymbol{\theta}}^{\perp}$ as the unconditional specification of $\widehat{\boldsymbol{\theta}}^{\,*}$. In this case, as described in Remark 1, ${\rm MSEP}^{*}_{\cal F_0}(X,\widehat{X})$ coincides with Akaike’s FPE in the conditional setting. Moreover,

$${\rm MSEP}_{\cal F_0}^{*,\nabla}(X,\widehat{X})={\rm Var}\!(X \mid \cal F_0)(\boldsymbol{\theta})+\nabla h(\boldsymbol{\theta};\,\cal F_0)^{\prime}{\rm Cov}(\widehat{\boldsymbol{\theta}})\nabla h(\boldsymbol{\theta};\,\cal F_0)$$

For some models for the data-generating process ${(S_t)_{t \in \cal T}}$, such as the conditional linear models investigated in section 4, computation of the unconditional covariance matrix ${{\rm Cov}(\widehat{\boldsymbol{\theta}})}$ is not feasible. Moreover, it may be argued that observed data should be considered also in the specification of $\widehat{\boldsymbol{\theta}}^{\,*}$, although there is no statistical principle justifying this argument. The models investigated in section 4 are such that $\boldsymbol{\theta} = (\boldsymbol{\theta}_1, \dots, \boldsymbol{\theta}_p)$ and there exist nested σ-fields $\cal G_1\subseteq \dots \subseteq \cal G_p \subseteq \cal F_0$ such that ${\rm \mathbb E}[\,\widehat{\boldsymbol{\theta}}_k \mid \cal G_k] = \boldsymbol\theta_k$ for $k = 1, \dots, p$ and $\widehat{\boldsymbol{\theta}}_k$ is $\cal G_{k+1}$-measurable for $k = 1, \dots, p - 1$. The canonical example of such a model within a claims reserving context is the distribution-free chain ladder model from Mack (Reference Mack1993). Consequently, ${\rm Cov}(\widehat{\boldsymbol{\theta}}_j,\widehat{\boldsymbol{\theta}}_k \mid \cal G_j,\cal G_k)=0$ for $j \neq k$. If further the covariance matrices ${\rm Cov}(\widehat{\boldsymbol{\theta}}_k \mid \cal G_k)$ can be computed explicitly, as demonstrated in section 4, then we may choose $\widehat{\boldsymbol{\theta}}^{\,*}\,:=\widehat{\boldsymbol\theta}^{\,*,c}$ such that ${\rm \mathbb E}[\,\widehat{\boldsymbol{\theta}}_k^* \mid \cal F_0]={\rm \mathbb E}[\,\widehat{\boldsymbol{\theta}}_k \mid \cal G_k]$, ${\rm Cov}(\widehat{\boldsymbol{\theta}}_k^* \mid \cal F_0)={\rm Cov}(\widehat{\boldsymbol{\theta}}_k \mid \cal G_k)$ for $k=1, \dots, p$ and ${\rm Cov}(\widehat{\boldsymbol{\theta}}_j^*,\widehat{\boldsymbol{\theta}}_k^* \mid \cal F_0)=0$ for $j \neq k$. These observations were used already in Mack’s original derivation of the conditional MSEP; see Mack (Reference Mack1993). Since the specification $\widehat{\boldsymbol{\theta}}^{\,*}\,:=\widehat{\boldsymbol{\theta}}^{*,c}$ implies that ${\rm Cov}(\widehat{\boldsymbol{\theta}}^{\,*} \mid \cal F_0)$ depends on $\cal F_0$, we refer to $\widehat{\boldsymbol{\theta}}^{*,c}$ as the conditional specification of $\widehat{\boldsymbol{\theta}}^{\,*}$. In this case,

Notice that if $\widehat{\boldsymbol{\theta}}^{*,u}\,:=\widehat{\boldsymbol{\theta}}^{\perp}$ and $\widehat{\boldsymbol{\theta}}^{*,c}$ denote the unconditional and conditional specifications of $\widehat{\boldsymbol{\theta}}^{\,*}$, respectively, then covariance decomposition yields

$$\begin{aligned}{\rm Cov}(\widehat{\boldsymbol{\theta}}^{*,u}_k\mid\cal F_0)&={\rm Cov}(\widehat{\boldsymbol{\theta}}_k)\\&= {\rm \mathbb E}[\!{\rm Cov}(\widehat{\boldsymbol{\theta}}_k\mid\cal G_k)]+{\rm Cov}({\rm \mathbb E}[\,\widehat{\boldsymbol{\theta}}_k\mid\cal G_k])\\&= {\rm \mathbb E}[\!{\rm Cov}(\widehat{\boldsymbol{\theta}}_k\mid\cal G_k)]\\&= {\rm \mathbb E}[\!{\rm Cov}(\widehat{\boldsymbol{\theta}}^{*,c}_k\mid\cal F_0)]\end{aligned}$$
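The covariance decomposition can be illustrated exactly on a small discrete model. The sketch below assumes a scalar estimator $\widehat{\theta}_k = Y/C$ with $Y = \theta C + \sigma\sqrt{C}\,\varepsilon$, where the exposure $C$ plays the role of the $\cal G_k$-measurable information and $\varepsilon$ is independent noise with mean 0 and variance 1. This toy model, its parameter values and its probability tables are assumptions made for illustration only.

```python
from fractions import Fraction as F

theta, sigma = F(3, 2), F(1)     # assumed parameter and volatility
# Assumed law of the G_k-measurable exposure C and exact square roots of its values.
c_law = {F(1): F(1, 2), F(4): F(1, 3), F(9): F(1, 6)}
root = {F(1): F(1), F(4): F(2), F(9): F(3)}
# Assumed noise law: mean 0, variance 1, independent of C.
eps_law = {F(-1): F(1, 2), F(1): F(1, 2)}

def theta_hat(c, eps):
    # theta_hat = Y / C with Y = theta*C + sigma*sqrt(C)*eps.
    return theta + sigma * eps / root[c]

def cond_moments(c):
    # Conditional mean and variance of theta_hat given C = c.
    mean = sum(p * theta_hat(c, e) for e, p in eps_law.items())
    var = sum(p * (theta_hat(c, e) - mean) ** 2 for e, p in eps_law.items())
    return mean, var

mean_total = sum(pc * cond_moments(c)[0] for c, pc in c_law.items())
var_total = sum(pc * pe * (theta_hat(c, e) - mean_total) ** 2
                for c, pc in c_law.items() for e, pe in eps_law.items())
expected_cond_var = sum(pc * cond_moments(c)[1] for c, pc in c_law.items())
var_of_cond_mean = sum(pc * (cond_moments(c)[0] - mean_total) ** 2
                       for c, pc in c_law.items())
```

Because the estimator is conditionally unbiased, the variance of the conditional mean vanishes and the unconditional variance equals the expected conditional variance, here the exact fraction 65/108. The conditional variance $\sigma^2/C$ itself varies with the observed exposure, which is the sense in which the conditional specification depends on the data.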

Further, since

$${\rm Cov}(\widehat{\boldsymbol{\theta}}^{*,u}_j,\widehat{\boldsymbol{\theta}}^{*,u}_k\mid\cal F_0)={\rm Cov}(\widehat{\boldsymbol{\theta}}_j,\widehat{\boldsymbol{\theta}}_k)=0={\rm Cov}(\widehat{\boldsymbol{\theta}}^{*,c}_j,\widehat{\boldsymbol{\theta}}^{*,c}_k\mid\cal F_0),\qquad j\neq k$$

it directly follows that ${\rm Cov}(\widehat{\boldsymbol{\theta}}^{*,c}\mid\cal F_0)$ is an unbiased estimator of ${\rm Cov}(\widehat{\boldsymbol{\theta}}^{*,u}\mid\cal F_0)$.

The estimators of conditional MSEP for the distribution-free chain ladder model given in Buchwalder et al. (Reference Buchwalder, Bühlmann, Merz and Wüthrich2006) and, more explicitly, in Diers et al. (Reference Diers, Linde and Hahn2016) are essentially based on the conditional specification of $\widehat{\boldsymbol{\theta}}^{\,*}$. We refer to section 5 for details.

2.1 Selection of estimators of conditional MSEP

As noted in the Introduction, ${\rm MSEP}_{\cal F_0}(X,\widehat{X})$ is the optimal predictor of the squared prediction error ${(X \,{-}\,\widehat{X})^2}$ in the sense that it minimises ${\rm \mathbb E}[((X\,{-}\,\widehat{X})^2\,{-}\,V)^2]$ over all $\cal F_0$-measurable random variables $V$ having finite variance. Therefore, given a set of estimators $\widehat{V}$ of ${\rm MSEP}_{\cal F_0}(X,\widehat{X})$, the best estimator is the one minimising ${\rm \mathbb E}[((X-\widehat{X})^2-\widehat{V})^2]$. Write $V\,:={\rm MSEP}_{\cal F_0}(X,\widehat{X})$ and $\widehat{V}\,:=\ V+\Delta V$ and notice that

Since

$$\begin{aligned}{\rm \mathbb E}[(X - \widehat X)^2] &= {\rm \mathbb E}[{\rm \mathbb E}[(X - \widehat X)^2 \mid \cal F_0]]= {\rm \mathbb E}[V]\\{\rm \mathbb E}[(X - \widehat X)^2 \Delta V] &= {\rm \mathbb E}[{\rm \mathbb E}[(X - \widehat X)^2 \Delta V \mid \cal F_0]]= {\rm \mathbb E}[{\rm \mathbb E}[(X - \widehat X)^2 \mid \cal F_0]\Delta V]= {\rm \mathbb E}[V\Delta V]\end{aligned}$$

we find that

$$\begin{aligned}{\rm \mathbb E}[((X-\widehat{X})^2-\widehat{V})^2]&={\rm Var}\!((X-\widehat{X})^2)+{\rm \mathbb E}[(X-\widehat{X})^2]^2-2{\rm \mathbb E}[(X-\widehat{X})^2(V+\Delta V)]+{\rm \mathbb E}[(V+\Delta V)^2]\\&={\rm Var}\!((X-\widehat{X})^2)+{\rm \mathbb E}[V]^2-2\big({\rm \mathbb E}[V^2]+{\rm \mathbb E}[(X-\widehat{X})^2\Delta V]\big)+{\rm \mathbb E}[V^2]+{\rm \mathbb E}[\Delta V^2]+2{\rm \mathbb E}[V\Delta V]\\&={\rm Var}\!((X-\widehat{X})^2)-{\rm Var}\!(V)+{\rm \mathbb E}[\Delta V^2]\end{aligned}$$
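The identity above can be verified exactly on a finite probability space. In the sketch below, a binary variable `z` is assumed to generate $\cal F_0$, the predictor `x_hat` and the error `delta_v` are arbitrary functions of `z` (hence $\cal F_0$-measurable), and all probabilities and values are hypothetical.

```python
from fractions import Fraction as F

# Hypothetical toy model: each outcome is (probability, z, x); z generates F_0.
outcomes = [
    (F(1, 4), 0, F(1)),
    (F(1, 4), 0, F(3)),
    (F(1, 6), 1, F(2)),
    (F(1, 3), 1, F(8)),
]
x_hat = {0: F(5, 2), 1: F(5)}      # assumed F_0-measurable predictor
delta_v = {0: F(1, 2), 1: F(-3)}   # assumed F_0-measurable error of the MSEP estimator

def expect(f):
    return sum(p * f(z, x) for p, z, x in outcomes)

def cond_expect(f, z0):
    # E[f | z = z0] by direct enumeration.
    pz = sum(p for p, z, _ in outcomes if z == z0)
    return sum(p * f(z, x) for p, z, x in outcomes if z == z0) / pz

def sq_err(z, x):
    return (x - x_hat[z]) ** 2

v = {z0: cond_expect(sq_err, z0) for z0 in (0, 1)}     # V = MSEP given F_0
v_hat = {z0: v[z0] + delta_v[z0] for z0 in (0, 1)}     # V_hat = V + Delta V

lhs = expect(lambda z, x: (sq_err(z, x) - v_hat[z]) ** 2)
var_sq_err = expect(lambda z, x: sq_err(z, x) ** 2) - expect(sq_err) ** 2
var_v = expect(lambda z, x: v[z] ** 2) - expect(lambda z, x: v[z]) ** 2
rhs = var_sq_err - var_v + expect(lambda z, x: delta_v[z] ** 2)
```

Since the first two terms on the right-hand side do not involve $\Delta V$, ranking candidate estimators $\widehat{V}$ amounts to ranking them by ${\rm \mathbb E}[\Delta V^2]$.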

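Recall from (5) that

$${\rm MSEP}_{\cal F_0}(X,\widehat{X})={\rm Var}\!(X\mid\cal F_0)+(h(\widehat{\boldsymbol{\theta}};\,\cal F_0)-h(\boldsymbol{\theta};\,\cal F_0))^2$$

and from (7) that

$$\widehat{{\rm MSEP}}_{\cal F_0}(X,\widehat{X})={\rm Var}\!(X\mid\cal F_0)(\widehat{\boldsymbol{\theta}})+\nabla h(\widehat{\boldsymbol{\theta}};\,\cal F_0)^{\prime}\boldsymbol{\Lambda}(\widehat{\boldsymbol{\theta}};\,\cal F_0)\nabla h(\widehat{\boldsymbol{\theta}};\,\cal F_0)$$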

Recall also that $\boldsymbol{\Lambda}(\boldsymbol{\theta};\,\cal F_0)={\rm Cov}(\widehat{\boldsymbol{\theta}}^{\,*}\mid\cal F_0)$ depends on the specification of $\widehat{\boldsymbol{\theta}}^{\,*}$. Therefore, we may in principle search for the optimal specification of $\widehat{\boldsymbol{\theta}}^{\,*}$. However, it is unlikely that any specification will enable explicit computation of ${\rm \mathbb E}[\Delta V^2]$. Moreover, for so-called distribution-free models defined only in terms of certain (conditional) moments, the required moments appearing in the computation of ${\rm \mathbb E}[\Delta V^2]$ may be unspecified.

We may consider the approximations

$$\begin{aligned}V={\rm MSEP}_{\cal F_0}(X,\widehat{X})&\approx {\rm Var}\!(X\mid\cal F_0)+\nabla h(\boldsymbol{\theta} ;\,\cal F_0)^{\prime} (\widehat{\boldsymbol{\theta}}-\boldsymbol{\theta}) (\widehat{\boldsymbol{\theta}}-\boldsymbol{\theta})^{\prime}\nabla h(\boldsymbol{\theta} ;\,\cal F_0)\\\widehat{V}\approx {\rm MSEP}_{\cal F_0}^{*,\nabla}(X,\widehat{X})&={\rm Var}\!(X\mid\cal F_0)+\nabla h(\boldsymbol{\theta} ;\,\cal F_0)^{\prime} \boldsymbol{\Lambda}(\boldsymbol{\theta};\,\cal F_0)\nabla h(\boldsymbol{\theta} ;\,\cal F_0)\end{aligned}$$

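which yield

$$\Delta V\approx\nabla h(\boldsymbol{\theta} ;\,\cal F_0)^{\prime}\Big(\boldsymbol{\Lambda}(\boldsymbol{\theta};\,\cal F_0)-(\widehat{\boldsymbol{\theta}}-\boldsymbol{\theta}) (\widehat{\boldsymbol{\theta}}-\boldsymbol{\theta})^{\prime}\Big)\nabla h(\boldsymbol{\theta} ;\,\cal F_0)$$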

Therefore, the specification of ${\widehat{\boldsymbol{\theta}}^{\,*}}$ should be such that ${(\widehat{\boldsymbol{\theta}}-\boldsymbol{\theta}) (\widehat{\boldsymbol{\theta}}-\boldsymbol{\theta})^{\prime}}$ and ${\boldsymbol{\Lambda}(\boldsymbol{\theta};\,\cal F_0)}$ are close, and $\boldsymbol{\Lambda}(\widehat{\boldsymbol{\theta}};\,\cal F_0)$ is computable.

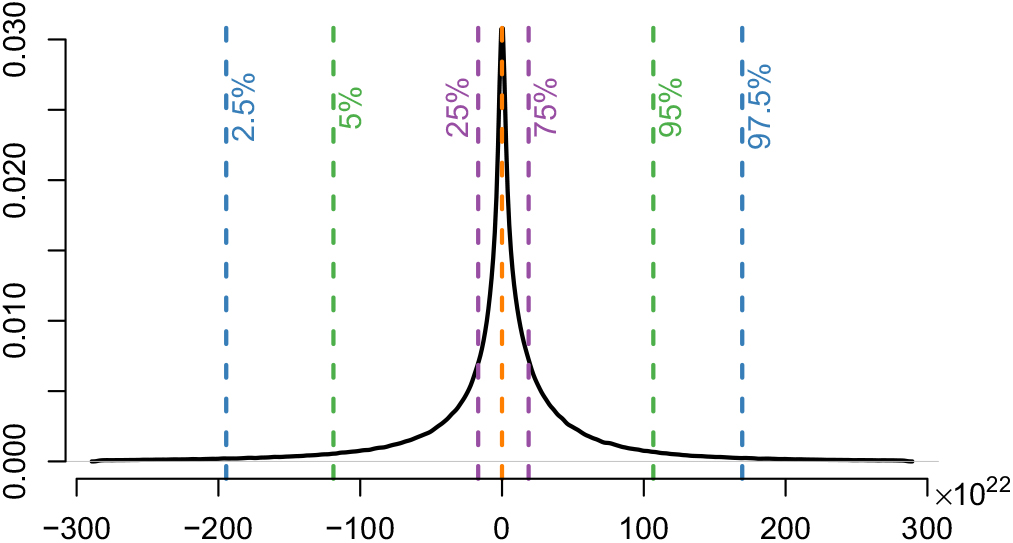

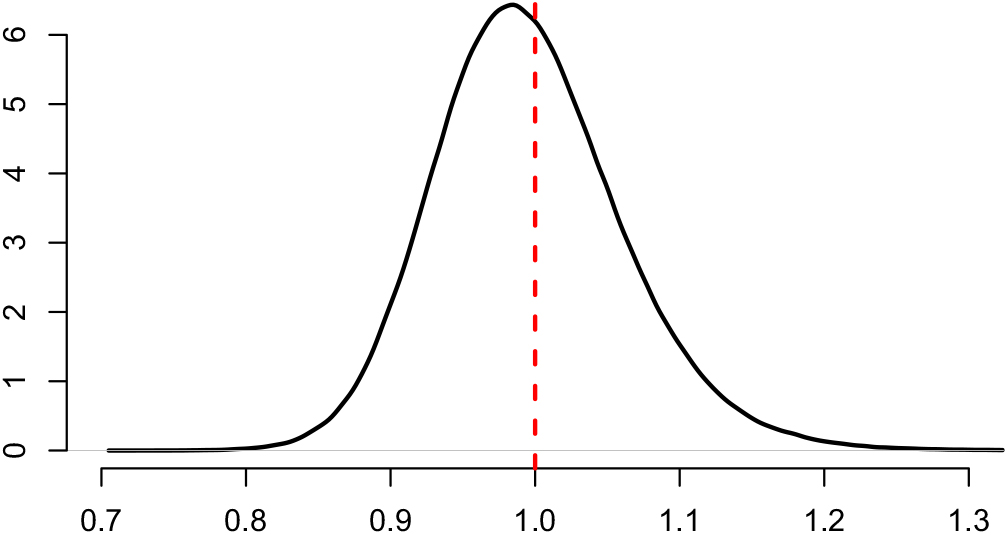

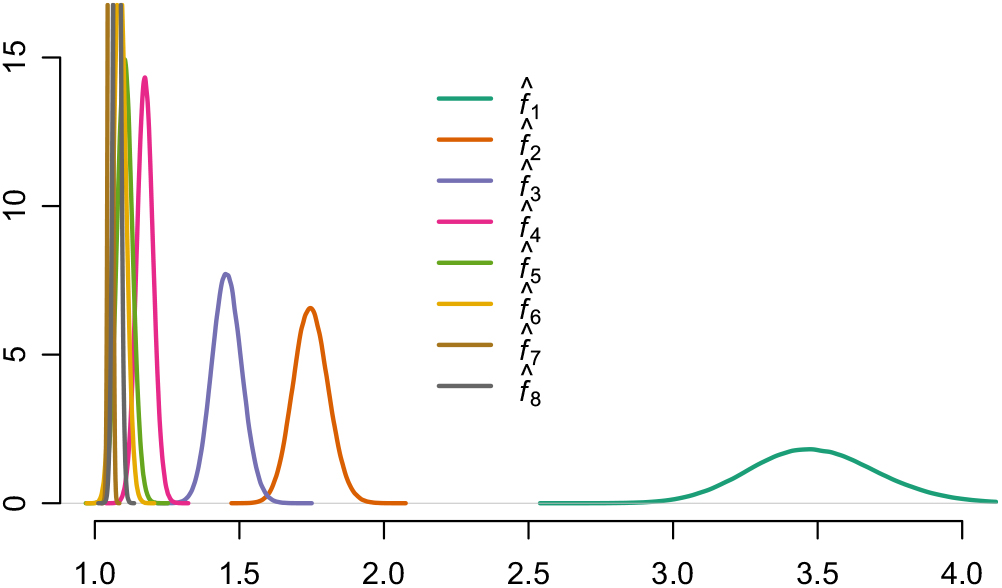

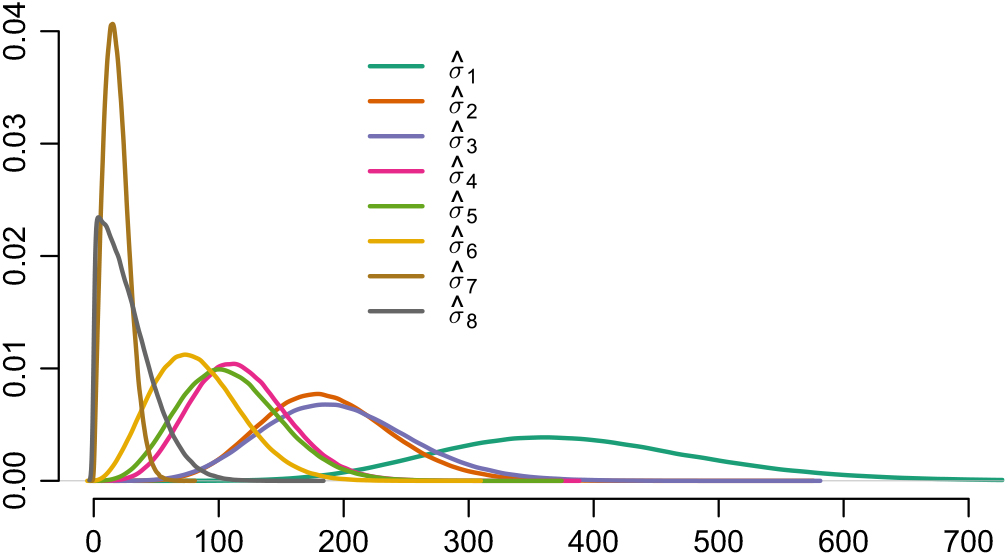

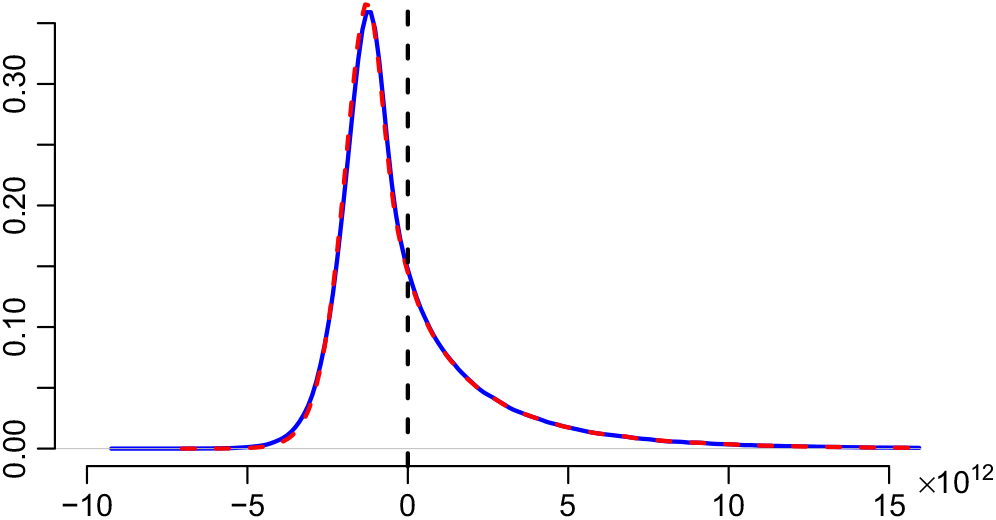

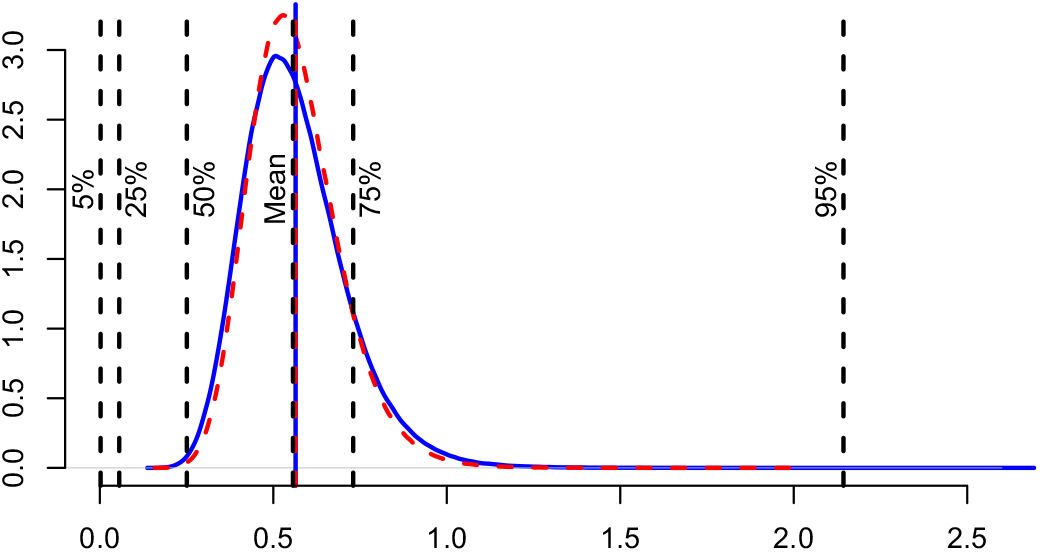

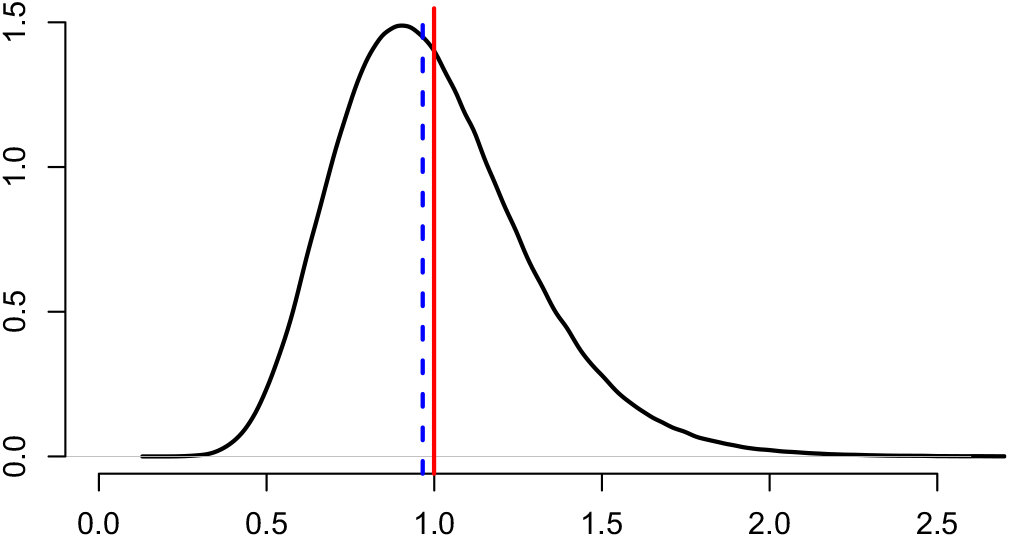

Appendix D compares the two estimators of conditional MSEP based on the unconditional and the conditional specification of $\widehat{\boldsymbol{\theta}}^{\,*}$, respectively, in the setting of Mack’s distribution-free chain ladder model. No significant difference between the two estimators can be found. However, in the setting of Mack’s distribution-free chain ladder model, only the estimator based on the conditional specification of $\widehat{\boldsymbol{\theta}}^{\,*}$ is computable.

3. Data in the Form of Run-off Triangles

One of the main objectives of this paper is the estimation of the precision of reserving methods when the data in the form of run-off triangles (trapezoids), explained in the following, have conditional development-year dynamics of a certain form. Mack’s chain ladder model, see, for example, Mack (Reference Mack1993), will serve as the canonical example.

Let $I_{i,j}$ denote the incremental claims payments during development year $j\in \{1,\dots,J\}=:\,\mathcal{J}$ and from accidents during accident year $i\in \{i_0,\dots,J\}=:\,\mathcal{I}$, where $i_0 \leq 1$. This corresponds to the indexation used in Mack (Reference Mack1993); that is, $j = 1$ corresponds to the payments that are made during a particular accident year. Clearly, the standard terminology accident and development year used here could refer to any other appropriate time unit. The observed payments as of today, at time 0, constitute what is called a run-off triangle or run-off trapezoid:

$$D_0\,:=\{I_{i,j}\,{:}\,(i,j)\in \mathcal{I}\times \mathcal{J}, i+j\leq J+1\}$$

and let $\mathcal{F}_0\,:=\sigma(D_0)$. Notice that accident years $i \leq 1$ are fully developed. Notice also that in the often considered special case $i_0 = 1$, the run-off trapezoid takes the form of a triangle. Instead of incremental payments $I_{i,j}$, we may of course equivalently consider cumulative payments $C_{i,j}\,:=\sum_{k = 1}^{j} I_{i,k}$, noticing that $\mathcal{F}_0=\sigma(\{C_{i,j}\,{:}\,(i,j)\in \mathcal{I}\times \mathcal{J}, i+j\leq J+1\})$.
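The indexing above can be illustrated with a minimal sketch, assuming the triangle case $i_0 = 1$ and simulated (hypothetical) payments; the array names `incremental`, `observed`, `D0` and `C0` are illustrative and not part of the paper. It marks the cells with $i+j\leq J+1$ as observed and checks that the observed incremental payments can be recovered from the observed cumulative payments, which is why both generate the same σ-field.

```python
import numpy as np

J = 4  # number of development years; assumes i_0 = 1 (a triangle)
rng = np.random.default_rng(0)

# Hypothetical fully developed incremental payments I_{i,j}, stored 0-based.
incremental = rng.gamma(shape=2.0, scale=100.0, size=(J, J))

# 1-based accident-year and development-year indices for every cell.
i_idx, j_idx = np.indices((J, J)) + 1
observed = (i_idx + j_idx) <= J + 1  # cells known at time 0

# D_0 as an array: unobserved cells are masked with NaN.
D0 = np.where(observed, incremental, np.nan)

# Cumulative payments C_{i,j} = sum_{k<=j} I_{i,k}, masked the same way.
C = np.cumsum(incremental, axis=1)
C0 = np.where(observed, C, np.nan)

# Differencing the observed cumulative payments recovers D_0.
recovered = np.diff(C0, axis=1, prepend=0.0)
print(np.allclose(recovered[observed], D0[observed]))
```

Observed cells form a prefix of each row, so the differences never mix observed and unobserved values on the observed part of the array.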

The incremental payments that occur between (calendar) time $t-1$ and $t$ correspond to the following diagonal in the run-off triangle of incremental payments:

Consequently the filtration $(\mathcal{F}_t)_{t \in \mathcal{T}}$ is given by

Let

$$B_k\,:=\{I_{i,j}\,{:}\,(i,j)\in \mathcal{I}\times \mathcal{J}, j\leq k, i+j\leq J+1\},$$

that is, the subset of $D_0$ corresponding to claim amounts up to and including development year $k$, and notice that $\mathcal{G}_k\,:=\sigma(B_k)\subset \mathcal{F}_0$, $k = 1, \dots, J$, form an increasing sequence of σ-fields. Conditional expectations and covariances with respect to these σ-fields appear naturally when estimating conditional MSEP in the distribution-free chain ladder model, see Mack (1993), and also in the more general setting considered here when $\widehat{\boldsymbol{\theta}}^{\,*}$ is chosen according to the conditional specification. We refer to section 4 for details.

3.1 Conditional MSEP for the ultimate claim amount

The outstanding claims reserve $R_i$ for accident year $i$ that is not yet fully developed, that is, the future payments stemming from claims incurred during accident year $i$, and the total outstanding claims reserve $R$ are given by

$$R_i \,:= \sum_{j=J-i+2}^J I_{i,j}=C_{i, J} - C_{i, J-i+1}, \quad R \,:= \sum_{i=2}^J R_i$$

The ultimate claim amount $U_i$ for accident year $i$ that is not yet fully developed, that is, the future and past payments stemming from claims incurred during accident year $i$, and the ultimate claim amount $U$ are given by

$$U_i \,:= \sum_{j=1}^J I_{i,j}=C_{i, J}, \quad U \,:= \sum_{i=2}^J U_i$$

Similarly, the amount of paid claims $P_i$ for accident year $i$ that is not yet fully developed, that is, the past payments stemming from claims incurred during accident year $i$, and the total amount of paid claims $P$ are given by

$$P_i \,:= \sum_{j=1}^{J-i+1} I_{i,j}=C_{i, J-i+1}, \quad P \,:= \sum_{i=2}^J P_i$$

Obviously, $U_i = P_i + R_i$ and $U = P + R$.
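As a small numerical check of these definitions, the following sketch computes $P_i$, $R_i$ and $U_i$ for accident years $i = 2,\dots,J$ from a simulated, fully developed array of payments. The data and variable names are hypothetical; in practice $R_i$ and $U_i$ are not observable at time 0, and the full array is used here only to verify the index ranges.

```python
import numpy as np

J = 4
rng = np.random.default_rng(1)

# Hypothetical fully developed incremental payments (unknown in practice).
incremental = rng.gamma(shape=2.0, scale=100.0, size=(J, J))
C = np.cumsum(incremental, axis=1)  # cumulative payments C_{i,j}

# Accident years i = 2..J (1-based) are not yet fully developed.
years = np.arange(2, J + 1)

# Paid so far: C_{i, J-i+1}, i.e. 0-based column J - i.
P_i = np.array([C[i - 1, J - i] for i in years])
# Ultimate: C_{i, J}, i.e. 0-based column J - 1.
U_i = np.array([C[i - 1, J - 1] for i in years])
# Reserve: sum of I_{i,j} for j = J-i+2..J, i.e. 0-based columns J-i+1 onwards.
R_i = np.array([incremental[i - 1, J - i + 1:].sum() for i in years])

P, U, R = P_i.sum(), U_i.sum(), R_i.sum()
print(np.allclose(U_i, P_i + R_i), np.isclose(U, P + R))
```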

We are interested in calculating the conditional MSEP of $U$, and we can start by noticing that if the $\mathcal{F}_0$-measurable random variable $P$ is added to the random variable $R$ to be predicted, then the same applies to its predictor: $\widehat{U}=P+\widehat{R}$. Therefore,

$${\rm MSEP}_{\mathcal{F}_0}(U,\widehat{U})={\rm MSEP}_{\mathcal{F}_0}(R,\widehat{R}).$$

Further, in order to be able to compute the conditional MSEP estimators from Definition 2.1, and in particular the final plug-in estimator given by (7), we need to specify the basis of prediction, that is, $z\mapsto h(z;\,\mathcal{F}_0)$, which is given by

$$h(\boldsymbol{\theta};\,\mathcal{F}_0)\,:={\rm \mathbb E}[U\mid\mathcal{F}_0](\boldsymbol{\theta}),$$

as well as specify the choice of $\widehat{\boldsymbol{\theta}}^{\,*}$. In sections 4–6, we discuss how this can be done for specific models using Lemma 2.1.

3.2 Conditional MSEP for the CDR

In section 3.1, we described an approach for estimating the conditional MSEP for the ultimate claim amount. Another quantity which has received considerable attention is the conditional MSEP for the claims development result (CDR), that is, the difference between the ultimate claim amount predictor and its update based on one more year of observations. For the chain ladder method, an estimator of the variability of CDR is provided in Wüthrich and Merz (2008a). We will now describe how this may be done consistently in terms of the general approach for estimating the conditional MSEP described in section 2. As will be seen, there is no conceptual difference compared to the calculations for the ultimate claim amount; all steps follow verbatim from section 2. For more on the estimator in Wüthrich and Merz (2008a) for the distribution-free chain ladder model, see section 5.

Let

$${\rm CDR}\,:=h^{(0)}(\widehat{\boldsymbol{\theta}}^{\,(0)};\,\mathcal{F}_0)-h^{(1)}(\widehat{\boldsymbol{\theta}}^{\,(1)};\,\mathcal{F}_1),$$

where

$$h^{(0)}(\boldsymbol{\theta};\,\mathcal{F}_0)\,:={\rm \mathbb E}[U\mid\mathcal{F}_0](\boldsymbol{\theta}), \quad h^{(1)}(\boldsymbol{\theta};\,\mathcal{F}_1)\,:={\rm \mathbb E}[U\mid\mathcal{F}_1](\boldsymbol{\theta}),$$

and $\widehat{\boldsymbol{\theta}}^{\,(0)}$ and $\widehat{\boldsymbol{\theta}}^{\,(1)}$ are $\mathcal{F}_0$- and $\mathcal{F}_1$-measurable estimators of $\boldsymbol{\theta}$, based on the observations at times 0 and 1, respectively. Hence, CDR is simply the difference between the predictor at time 0 of the ultimate claim amount and that at time 1. Thus, given the above, it follows by choosing

$$h(\boldsymbol{\theta};\,\mathcal{F}_0)\,:={\rm \mathbb E}[{\rm CDR}\mid\mathcal{F}_0](\boldsymbol{\theta})=h^{(0)}(\widehat{\boldsymbol{\theta}}^{\,(0)};\,\mathcal{F}_0)-{\rm \mathbb E}[h^{(1)}(\widehat{\boldsymbol{\theta}}^{\,(1)};\,\mathcal{F}_1)\mid\mathcal{F}_0](\boldsymbol{\theta})$$

that we may again estimate ${\rm MSEP}_{\mathcal{F}_0}({\rm CDR},\widehat{{\rm CDR}})$ using Definitions 2.1 and 2.2; in particular, we may calculate the plug-in estimator given by (7). Note, from the definition of CDR, regardless of the specification of $\widehat{\boldsymbol{\theta}}^{\,*}$, that it directly follows that

$$\begin{aligned}{\rm MSEP}_{\mathcal{F}_0}({\rm CDR},\widehat{{\rm CDR}}) &={\rm MSEP}_{\mathcal{F}_0}\Big({\rm \mathbb E}[U\mid\mathcal{F}_1](\widehat{\boldsymbol{\theta}}^{\,(1)}),{\rm \mathbb E}[{\rm \mathbb E}[U\mid\mathcal{F}_1](\widehat{\boldsymbol{\theta}}^{\,(1)})\mid\mathcal{F}_0](\widehat{\boldsymbol{\theta}}^{\,(0)})\Big) \\ &={\rm MSEP}_{\mathcal{F}_0}\Big(h^{(1)}(\widehat{\boldsymbol{\theta}}^{\,(1)};\,\mathcal{F}_1), {\rm \mathbb E}[h^{(1)}(\widehat{\boldsymbol{\theta}}^{\,(1)};\,\mathcal{F}_1) \mid \mathcal{F}_0](\widehat{\boldsymbol{\theta}}^{\,(0)})\Big)\end{aligned}$$

where the $\mathcal{F}_0$-measurable term $h^{(0)}(\widehat{\boldsymbol{\theta}}^{\,(0)};\,\mathcal{F}_0)$ cancels out when taking the difference between CDR and $\widehat{{\rm CDR}}$. Thus, from the above definition of $h(\boldsymbol{\theta};\,\mathcal{F}_0)$ together with the definition of ${\rm MSEP}^{*}$, Definition 2.1, it is clear that the estimation error will only correspond to the effect of perturbing $\boldsymbol{\theta}$ in ${\rm \mathbb E}[h^{(1)}(\widehat{\boldsymbol{\theta}}^{\,(1)};\,\mathcal{F}_1) \mid \mathcal{F}_0](\boldsymbol\theta)$. Moreover, the notion of conditional MSEP and the suggested estimation procedure for the CDR are in complete analogy with those for the ultimate claim amount. This estimation procedure is, however, different from the ones used in, for example, Wüthrich and Merz (2008a), Wüthrich et al. (2009), Röhr (2016) and Diers et al. (2016) for the distribution-free chain ladder model. For Mack’s distribution-free chain ladder model,

$${\rm \mathbb E}[h^{(1)}(\widehat{\boldsymbol{\theta}}^{\,(1)};\,\mathcal{F}_1) \mid \mathcal{F}_0](\widehat{\boldsymbol{\theta}}^{\,(0)})=h^{(0)}(\widehat{\boldsymbol{\theta}}^{\,(0)};\,\mathcal{F}_0),$$

and therefore ${\rm MSEP}_{\mathcal{F}_0}({\rm CDR},\widehat{{\rm CDR}})={\rm MSEP}_{\mathcal{F}_0}({\rm CDR},0)$. This is, however, not true in general for other models. More details on CDR calculations for the distribution-free chain ladder model are found in section 5.
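The zero predicted CDR for the chain ladder method can be illustrated numerically. The sketch below is not the paper's procedure: it assumes the standard volume-weighted chain ladder development factors, a triangle ($i_0 = 1$) and simulated data, and the helper names `chain_ladder_factors` and `ultimates` are hypothetical. It replaces the next diagonal by its plug-in conditional mean and re-estimates the factors. Using the plug-in mean in place of the full conditional expectation is justified here only because each time-1 predictor is a product of terms that are each linear in a single new diagonal entry, and these entries belong to different accident years, which are independent under Mack's assumptions.

```python
import numpy as np

def chain_ladder_factors(C, n_diagonals):
    """Volume-weighted factors using cells with i + j <= n_diagonals (0-based)."""
    n_rows, J = C.shape
    f = np.ones(J - 1)
    for j in range(J - 1):
        # Accident years for which both C[i, j] and C[i, j + 1] are observed.
        rows = [i for i in range(n_rows) if i + j + 1 <= n_diagonals]
        f[j] = C[rows, j + 1].sum() / C[rows, j].sum()
    return f

def ultimates(C, f, n_diagonals):
    """Chain ladder predictors of the ultimate claim amount per accident year."""
    n_rows, J = C.shape
    U = np.empty(n_rows)
    for i in range(n_rows):
        last = min(n_diagonals - i, J - 1)  # latest observed column
        U[i] = C[i, last] * np.prod(f[last:])
    return U

J = 5
rng = np.random.default_rng(2)
# Hypothetical cumulative payments; only cells with i + j <= J - 1 are used at time 0.
C = np.cumsum(rng.gamma(shape=2.0, scale=100.0, size=(J, J)), axis=1)

f0 = chain_ladder_factors(C, J - 1)
U0 = ultimates(C, f0, J - 1)  # predictors at time 0

# Overwrite the next diagonal with its plug-in conditional mean under f0.
C1 = C.copy()
for i in range(1, J):
    j = J - 1 - i  # latest observed column for accident year i at time 0
    C1[i, j + 1] = C[i, j] * f0[j]

f1 = chain_ladder_factors(C1, J)
U1 = ultimates(C1, f1, J)  # predictors at time 1 given the expected diagonal

cdr_hat = U0[1:].sum() - U1[1:].sum()
print(np.allclose(f1, f0), abs(cdr_hat) < 1e-6 * U0.sum())
```

In this sketch the re-estimated factors coincide with the time-0 factors, so the predicted CDR vanishes up to floating-point error. For models in which the time-1 predictor is not of this product form, the same shortcut need not apply.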