Sign language research has significantly informed basic and applied sciences, yielding practical implications for both. For example, research on Swedish Sign Language, Svenskt teckenspråk (henceforth, STS), contributed to the recognition of STS as a language by the Swedish parliament in 1981 (Proposition 1980/1981:100) and the consequent implementation of sign bilingualism in the national educational curriculum. Swedish Sign Language has been a part of deaf bilingual education for nearly 40 years, both as the language of instruction for deaf pupils in classrooms, and as a school subject with its own curriculum in Swedish deaf schools (Mahshie, 1995; Svartholm, 2010). The 2009 Swedish Language Act proclaimed STS as equal to other minority languages used in Sweden and emphasized society’s responsibility toward STS (SFS, 2009:600). This study describes the development of the first Sentence Repetition Task (SRT)-based STS proficiency test, which provides a score that can be used in future studies as a continuous variable.

Psychometrically sound sign language proficiency assessments have been developed for different signed languages for first language learners (see Enns et al., 2016; Paludnevičiene et al., 2012 for a review) and second language learners (see Landa & Clark, 2019; Schönström et al., in press for a review). Hauser et al. (2008) developed the ASL-SRT for American Sign Language (ASL), based on an English oral repetition test (Hammill et al., 1994) that required participants to listen to English sentences of increasing length and morphosyntactic complexity and immediately repeat them correctly with 100% accuracy. They chose to use the SRT approach because it can be administered both to children and adults, and because past studies have shown it to be a good measure of language proficiency. Some have described the SRT as a global proficiency measure because it involves sentence processing, reconstruction, and reproduction (Haug et al., 2020; Jessop et al., 2007), while others have described it as a test of grammatical processing (Hammill et al., 1994; Spada et al., 2015) and have used it to study children’s syntactic development (e.g., Kidd et al., 2007).

Spoken language SRTs are sensitive to the developmental proficiency of first language learners (e.g., Devescovi & Caselli, 2007; Klem et al., 2015) and second language learners (e.g., Erlam, 2006; Gaillard & Tremblay, 2016; Spada et al., 2015). Implicit long-term linguistic knowledge (that is, language proficiency) enhances SRT performance. When native speakers were asked to repeat ungrammatical sentences, they unconsciously applied their linguistic knowledge and corrected the grammar 91% of the time when they repeated the sentences (Erlam, 2006). This happens because individuals’ language knowledge helps them to scaffold sentences in working memory so they can hold more information than just rote memory allows. Relying on rote memory alone, individuals have difficulty with sentences of more than four words (see Haug et al., 2020 and Polišenská et al., 2015 for discussion). Correct sentence repetitions have been found to positively correlate with 2–4-year-old children’s mean length of utterances in free speech and verbal memory span (Devescovi & Caselli, 2007). Further, the accuracy of sentence repetitions of 4–5-year-old children depended more on their familiarity with morphosyntax and lexical phonology than on their familiarity with semantics or prosody (Polišenská et al., 2015).

Studies with both language and memory measures have shown that SRTs measure an underlying unitary language construct rather than working memory because they draw upon a wide range of language processing skills (e.g., Gaillard & Tremblay, 2016; Klem et al., 2015; Tomblin & Zhang, 2006). Sentence Repetition Tests have been claimed to be the best single test for identifying children with Specific Language Impairment (SLI) due to their high specificity and sensitivity (Archibald & Joanisse, 2009; Conti-Ramsden et al., 2001; Fleckstein et al., 2016; Stokes et al., 2006). Individuals with SLI perform poorly on SRTs because the test heavily recruits linguistic processing abilities (Leclercq et al., 2014), although some have argued that SRTs test memory more than language proficiency (Brownell, 1988; Henner et al., 2018). It has been claimed that individuals with developing language skills or SLI have less accurate sentence repetitions because they do not have available linguistic knowledge to scaffold sentences in episodic memory (Alptekin & Erçetin, 2010; Coughlin & Tremblay, 2013; Van den Noort et al., 2006).

Hauser et al. (2008) administered the ASL-SRT to deaf and hearing children and adults and found that native signers more accurately repeated the ASL sentences than non-native signers. Supalla et al. (2014) analyzed the ASL-SRT error patterns of native signers and found that fluent signers made more semantic types of errors, suggesting top-down scaffolding mechanisms are used in working memory to succeed in the task, whereas less fluent signers made errors that were motoric imitations of signs (similar phonology) that were ungrammatical or lacked meaning. In a case study of a deaf adult native ASL signer with SLI, the participant performed poorly on the ASL-SRT compared to peers, including non-native deaf signers (Quinto-Pozos et al., 2017). The results cited above support the claim that the SRT measures sign language proficiency as well as it does spoken language proficiency.

The ASL-SRT has been adapted to several languages, including German Sign Language (DGS, Kubus et al., 2015), British Sign Language (BSL, Cormier et al., 2012), and Swiss German Sign Language (DSGS, Haug et al., 2020), among others. Other sign language tests are also sentence repetition task-based, for example, those assessing Italian Sign Language (LIS, Rinaldi et al., 2018) and French Sign Language (LSF, Bogliotti et al., 2020), but those follow a different methodological framework and different development, administration, and coding procedures. This article describes how the STS-SRT was developed and outlines its psychometric properties.

Method

STS-SRT development

Translated, corpus, and novel sentences

The STS-SRT began with 60 sentences from 3 different sources: 20 were translated from the ASL-SRT (Hauser et al., 2008), 20 were from the Swedish Sign Language Corpus (STSC, Mesch & Wallin, 2012), and 20 novel sentences were developed for this project. For the first source, the original 20 ASL sentences were translated into STS by a deaf native STS signer proficient in ASL along with a trained linguist proficient in both languages. There were two reasons for translating the ASL sentences rather than making all sentences novel: the sentences had already been developed and proofed for the ASL-SRT, and reusing them keeps open the possibility of future cross-linguistic comparisons. For the second source, the team extracted 20 authentic sentences from the STSC (Mesch & Wallin, 2012). The STSC consists of naturally occurring, authentic STS productions between deaf people. The motivation behind using STSC as a resource was to enhance the authenticity of the sentences. Only sentences from native STS users were selected. Efforts were made to select authentic sentences that could work context-free. Some of the sentences relate to typical topics within the deaf community; that is, basic cultural knowledge associated with being deaf. For example, one sentence contains elements from the history of oral deaf education, and another sentence describes the signing skills of teachers of the deaf. For the third source, the team created 20 novel items. We varied items by length (short, 3–4 signs; intermediate, 5–6 signs; and long, 7–10 signs), lexical classification (e.g., lexical, fingerspelled, and classifier signs), and morphological (e.g., numeral incorporation, modified signs) and syntactic (e.g., determiners, main and subordinate clauses) complexity.

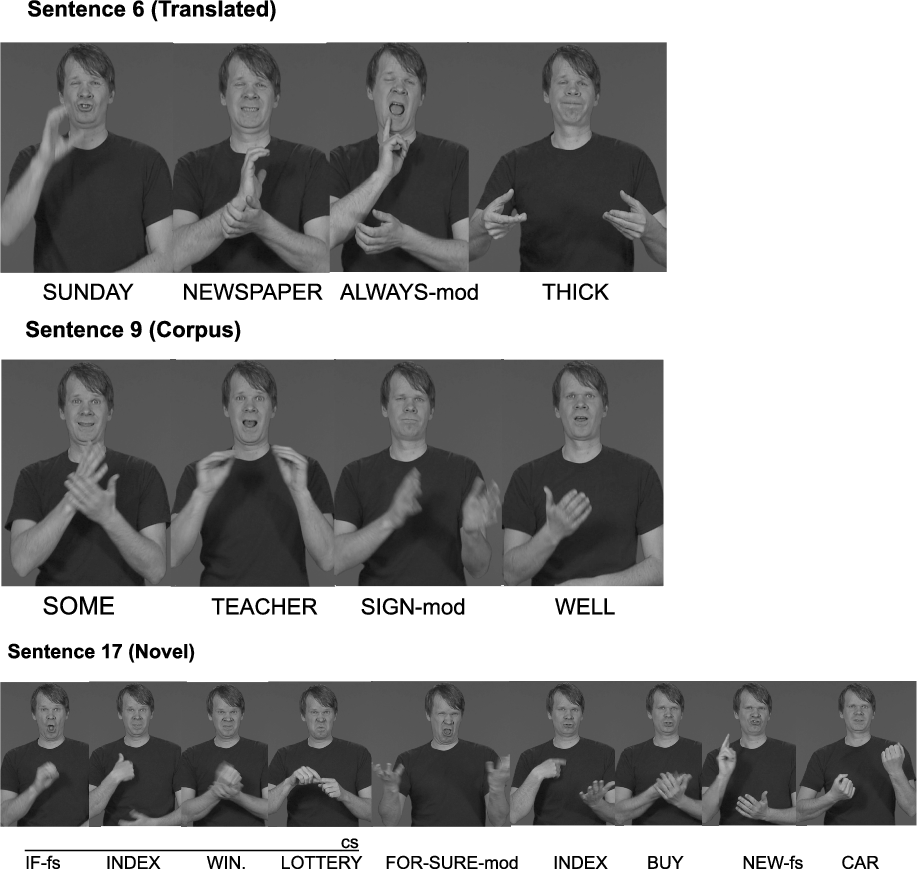

A deaf native signer was filmed signing the sentences in a natural manner and tempo against a dark studio background. Care was taken to reproduce the signs precisely as produced by the authentic signers in the original ASL or STSC sentences, that is, using the same variants of signs, the same syntactic order, the same speed, etc. The 60 sentences were later presented to 3 deaf native signers for review. A criterion based on 2/3 exclusion was applied; that is, if two of the three reviewers rejected a sentence as inauthentic, inexplicit, non-fluent, or even ungrammatical, the sentence was removed. This review process left 40 sentences, of which 16 were translated sentences, 11 sentences were corpus sentences, and 13 were novel sentences (Figure 1).

Figure 1. Three examples of STS-SRT sentences: Translated, Corpus, and Novel.

Test administration procedures

A QuickTime video file was created with the STS-SRT instructions, practice sentences, and test sentences. Every sentence video clip was followed by a gray background for 7–9 s, depending on the sentence length; see Figure 2. There were 10–12 frames at the beginning of each sentence before the signing start time to give participants enough time to prepare themselves after pushing the space button to play the clip. Five frames were also added at the signing end time to eliminate any negative effect on memory and to give participants time to start imitating the sentences as fast as possible. The STS-SRT was presented on an Apple MacBook Pro 15 with a 15-inch screen and a built-in camera to record repetitions, in a quiet room without visual distractions. The laptop was placed on a table with a deaf research assistant sitting beside the participant.

Figure 2. Format of STS-SRT sentence delivery.

The test begins with instructions in which the deaf signing research assistant describes the test and the procedure to be followed, that is, that the participant is going to see some sentences and will be told to repeat the sentences. Participants are instructed to repeat the sentences as seen, and are explicitly asked to use the sign produced by the signer rather than a synonym. The instructions conclude by announcing that there will be three practice sentences before the actual test begins. During the practice, the research assistant checks understanding and corrects any mistakes. During testing, the research assistant can use the space bar to pause the sentences as necessary, for example, to ensure that the participant answers within the allotted response time (7–9 s), that is, before the start of the next item. After completion, the recording is saved for later scoring.

Scoring procedures

The STS-SRT scoring procedures follow the same principles and basic instructions as the ASL-SRT (Hauser et al., 2008), that is, each correct response is scored 1 point, and each incorrect response is scored 0 points. A sentence that is not produced with the same phonology, morphology, or syntax as the stimulus sentence is considered an error and is marked as 0. Raters use a scoring protocol and mark signs in the sentences that were repeated incorrectly or omitted. The STS-SRT scoring instructions (Figure 3) include examples of errors based on phonological (e.g., different handshape used), morphological (e.g., omission of reduplication forms), and syntactic (e.g., different word order) differences. Omissions, replacements, or changes of lexical items were counted as errors. Metathesis and altered direction of signing were considered acceptable as long as they did not affect meaning.

Figure 3. Two example sentences from the Scoring Instruction Manual, Original with English Translation in parentheses.

The scoring protocol focused on manual features only (that is, the signs) and not on nonmanual features such as eye gaze and eyebrow movements used for grammatical, prosodic, and pragmatic purposes, with a few exceptions. One exception was the use of a mouthing with a completely different meaning in an otherwise ambiguous sign pair. For example, the manual sign for FUNGERA (working) and FABRIK (factory) is the same, and the two are distinguished only by mouthing; the mouthing /fa/, as in FABRIK, was therefore not accepted where the appropriate mouthing for the target item FUNGERA would have been /fu/. Additionally, nonmanual markers such as headshaking were required for the accurate production of a negative utterance.

The scoring instructions describe the point system, correct versus error responses (e.g., omission, replacement, change in word order), and examples of acceptable/unacceptable response variants for each item. It is important that the raters are fluent STS signers and have basic knowledge of sign language linguistics in order to accurately rate the responses. We created test administration instructions aimed at test instructors, as well as scoring instructions and sheets for the raters to use. It is recommended that new raters practice with experienced raters to ensure confidence about the procedure.
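The all-or-nothing point system described above can be sketched in a few lines. This is a minimal illustration rather than the actual scoring sheet: the function names and the representation of a rating as one true/false error mark per target sign are our assumptions, and the linguistic judgment about what counts as an error remains with the trained rater.

```python
from typing import Sequence


def sentence_score(sign_errors: Sequence[bool]) -> int:
    """Score one repeated sentence.

    `sign_errors` holds one mark per sign in the TARGET sentence
    (hypothetical representation): True if the rater judged that sign
    to be repeated incorrectly or omitted, False otherwise.
    The sentence earns 1 point only if no sign is marked as an error.
    """
    return 0 if any(sign_errors) else 1


def total_score(responses: Sequence[Sequence[bool]]) -> int:
    """Sum the 0/1 sentence scores over all items in the test."""
    return sum(sentence_score(marks) for marks in responses)
```

Because a single marked sign sets the whole sentence to 0, the total is simply the number of sentences repeated without any marked error.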

STS-SRT pilot testing

The purpose of pilot testing was to review the sentences and to practice some testing in a real-life situation to examine the test’s functionality. In the pilot testing, we also observed some recurring phonological variants in signs to use in the scoring instructions, that is, a list of acceptable phonological variants on signs. A purposeful sample of 10 participants was chosen according to different background variables: four participants were deaf adults of deaf signing parents, five participants were deaf adults of hearing parents, and one was a hearing skilled signer of hearing parents. The parental hearing status of the adult participants is important because, in the absence of proficiency tests, researchers have identified deaf individuals as native signers if they were raised by deaf signing parents; they are classified as non-native signers if they have hearing parents (see Humphries et al., 2014 for discussion on the linguistic experience of deaf children of hearing parents). The pilot sample included both native and non-native signers.

Data from the pilot study helped to adjust the acceptable phonological variants according to STS linguistic rules and usage. For example, the movement of the sign LÄSA (read) in sentence number 12 in Figure 3 was shown to vary widely between signers, that is, alternating between a variant with movement with contact (which was the target form as produced by the signer) and a variant without. The variant was considered acceptable if more than 40% of signers in the pilot test group exhibited it.
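The 40% criterion for accepting a phonological variant can be expressed as a simple check. The sketch below is illustrative only; the function and parameter names are hypothetical, and the comparison is strict because the criterion is stated as "more than 40%".

```python
def is_acceptable_variant(signers_using_variant: int,
                          signers_total: int,
                          threshold_percent: int = 40) -> bool:
    """Return True if strictly more than `threshold_percent` of the pilot
    signers produced the variant. Integer arithmetic avoids any
    floating-point edge case exactly at the threshold."""
    if signers_total <= 0:
        raise ValueError("signers_total must be positive")
    return signers_using_variant * 100 > threshold_percent * signers_total
```

With a pilot group of 10 signers, for instance, a variant produced by 5 signers would pass this check, whereas one produced by exactly 4 signers would not.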

STS-SRT final version

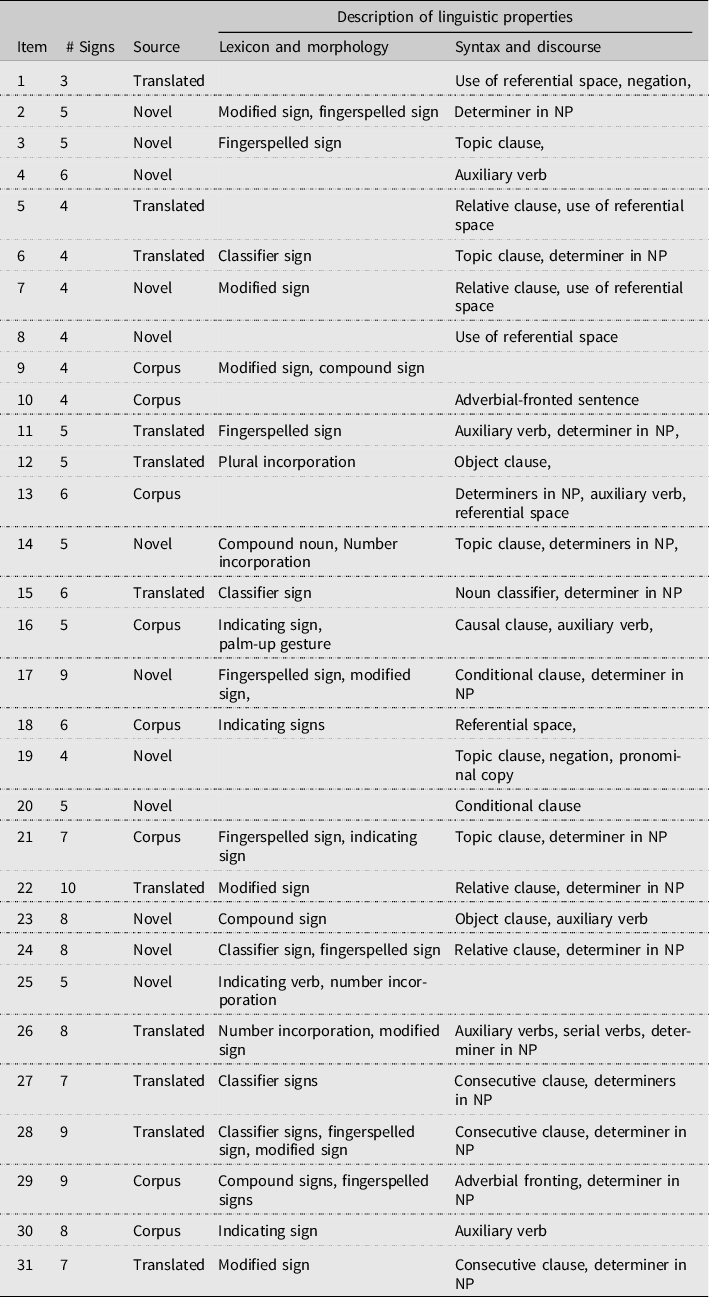

The number of sentences was reduced from 40 to 31 based on the pilot testing results. In the final version of the STS-SRT, 11 were translated sentences, 8 were corpus sentences, and 12 were novel sentences. Table 1 provides an overview of the 31 sentences and their linguistic properties. It was important that the remaining sentences included a wide variety of different linguistic properties. For lexicon and morphology, different types of signs such as lexical signs (including fingerspelled signs) and classifier signs were included. We also included numeral incorporations, as well as signs modified to indicate plurality, aspect, and spatial reference. For syntax, we aimed to have a variety of main and subordinate clauses, as well as different kinds of phrasal structures (e.g., determiners in noun phrases, verb phrases with and without auxiliary verbs). Subordinate clause types included relative, conditional, object, consecutive, as well as causal. In addition, clauses were presented in various word orders depending on information structure, and included such components as topic clauses, adverbial fronting, etc. Some sentences were constructed with simple syntax and simple lexical and morphological content, whereas some sentences have a complex combination of both, or a combination of both simple and complex constructions.

Table 1. Linguistic properties of the 31 STS-SRT sentences

Sentences which no one repeated correctly in the pilot version were omitted, as were some simple sentences that everyone repeated correctly. However, anticipating a possible application to children, we decided to keep a few more of the simple sentences in order not to make the test too difficult. In total, one simple sentence, two intermediate sentences, and six complex sentences were omitted. The sentences in the final version were ordered beginning with the items most of the pilot participants repeated correctly and ending with the items that the fewest repeated correctly. Correct sentence repetitions are awarded one point, allowing for a maximum STS-SRT score of 31. The test administration time is 15 min per participant, and rating time varies between 15 and 20 min.
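The ordering of items by pilot difficulty can be sketched as follows. This is a simplified illustration under our own assumptions: the function name and the dictionary of pilot counts are hypothetical, and only the mechanical rule (drop items nobody repeated correctly, then sort from most to fewest correct repetitions) is modeled. The decision about which easy items to keep involved judgment and is not represented here.

```python
from typing import Dict, List


def order_items_by_pilot_difficulty(pilot_correct: Dict[str, int]) -> List[str]:
    """Order item IDs from easiest to hardest.

    `pilot_correct` maps an item ID to the number of pilot participants
    who repeated that item correctly (hypothetical input format).
    Items that no one repeated correctly are dropped.
    """
    kept = {item: n for item, n in pilot_correct.items() if n > 0}
    # sorted() is stable, so items with equal counts keep their input order.
    return sorted(kept, key=lambda item: -kept[item])
```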

STS-SRT psychometric testing

Sample

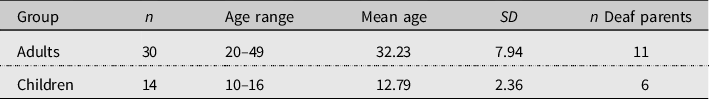

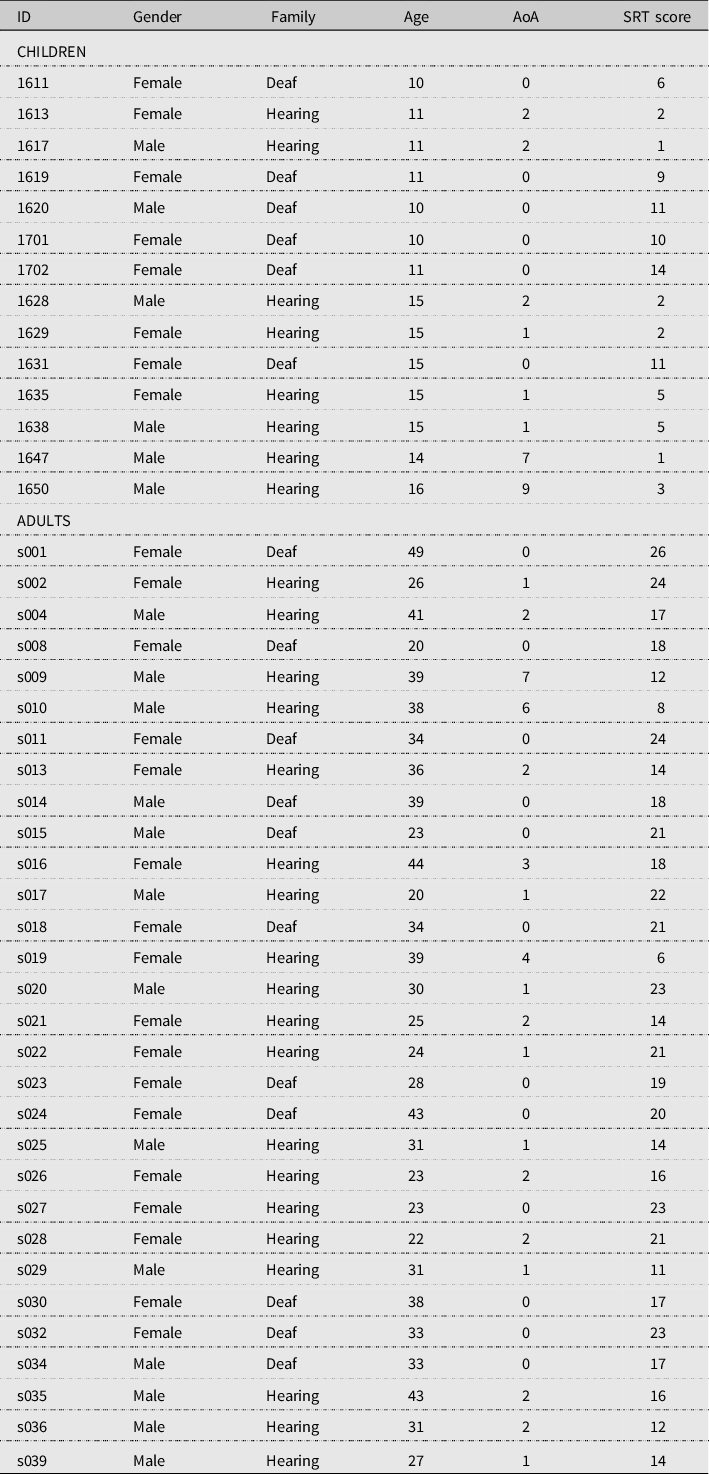

There were 44 participants in the psychometric testing sample, including 14 deaf children (8 females and 6 males) and 30 deaf adults (17 females and 13 males); see Tables 2 and 3 for descriptive statistics and overview of the participants. Adult participants were asked to fill out a background questionnaire in which they answered questions about their language, identity, education, hearing status, parents’ hearing status and language background, and age they were first exposed to STS (Age of Acquisition [AoA]). Background questionnaires of child participants were filled out by their parents. The study procedures were approved by the Swedish Ethical Review Board.

Table 2. Descriptive statistics of test participants

Table 3. Participants’ gender, parent hearing status, Age, Age of Acquisition, and STS-SRT score

Note: AoA = Age of Acquisition.

Results

Reliability evidence

A deaf fluent signer, who is a trained linguist, rated all of the 44 participants’ STS-SRT repetitions and a second rater, also a deaf fluent signer and trained linguist, independently rated the repetitions of a subsample of 29 participants to determine inter-rater reliability. Inter-rater reliability was computed between the raters using the Intraclass Correlation Coefficient (ICC) with a two-way mixed-effects model for single rater protocols (Koo & Li, 2016). Intraclass Correlation Coefficient values between 0.60 and 0.74 are good and values between 0.75 and 1.0 are excellent (Cicchetti, 1994). The ICC for the STS-SRT raters was 0.900 (95% confidence interval [CI] = .787, .953, p < .001), which is in the excellent range of inter-rater reliability. Cronbach’s alpha was used to determine the STS-SRT’s internal reliability. Analyses revealed that the test has an alpha of 0.915 (95% CI = .875, .948, p < .001), suggesting that there is excellent internal consistency between the items. As an additional analysis and comparison, internal consistency was measured for the three sentence sources, Translated (n = 11), Corpus (n = 8), and Novel (n = 12), yielding Cronbach’s alpha of 0.774 (95% CI = [.661, .862], p < .001), 0.714 (95% CI = [.565, .826], p < .001), and 0.828 (95% CI = [.742, .894], p < .001), respectively.
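For readers who wish to compute comparable reliability statistics on their own data, the two coefficients can be sketched in plain Python. This is an illustration, not the software or exact model specification used in this study. In particular, the text does not state whether the ICC was defined by consistency or absolute agreement; the sketch assumes the consistency definition for a two-way mixed-effects, single-rater model, and all function names are hypothetical.

```python
from statistics import mean, variance
from typing import List, Sequence


def icc_3_1_consistency(ratings: Sequence[Sequence[float]]) -> float:
    """ICC for a two-way mixed-effects model, single rater, consistency
    definition: (MS_rows - MS_error) / (MS_rows + (k - 1) * MS_error).
    `ratings` has one row per participant and one column per rater."""
    n, k = len(ratings), len(ratings[0])
    grand = mean(v for row in ratings for v in row)
    row_means = [mean(row) for row in ratings]
    col_means = [mean(row[j] for row in ratings) for j in range(k)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((v - grand) ** 2 for row in ratings for v in row)
    ss_error = ss_total - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (ms_rows + (k - 1) * ms_error)


def cronbach_alpha(item_scores: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha: k/(k-1) * (1 - sum of item variances / variance
    of total scores). `item_scores` has one row per participant and one
    column per test item (here, 0/1 sentence scores)."""
    k = len(item_scores[0])
    item_vars: List[float] = [
        variance([row[j] for row in item_scores]) for j in range(k)
    ]
    total_var = variance([sum(row) for row in item_scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)
```

Under the consistency definition, a constant offset between two raters does not lower the ICC; an absolute-agreement definition would penalize such an offset.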

Validity evidence

Validity support for the claim that the STS-SRT results can be interpreted as a deaf test taker’s language competency in STS can be provided by demonstrating that those with greater language mastery (i.e., adults or those with exposure to the language from birth) perform better on the test than those who are expected to have lesser language mastery (i.e., children or those who have experienced language deprivation). Some factors were introduced by the design of the test: the sentences originated from three different sources (ASL, STS, Corpus) and varied in the Number of Signs (2–4, 5–6, and 7+). A linear mixed model, with Modified Forward Selection, was used to capture the fixed main effects and interaction between Source and Number of Signs, with the percent correct within each Source/Number of Signs combination as the response variable. The independent variables comprised a mix of random effects and fixed effects. Participant ID was used to account for the multiple measurements on each participant, and was a factor with random effects. Source, Number of Signs, Child_Adult (Child, Adult), Family (Deaf family, Hearing family), and AoA Group (0, 1, 2, or 3+ years) were the factors with fixed effects, and Age, AoA, and Years of Signing were the covariates. See Tables 4 and 5 for raw scores and Table 6 for mean percent correct repetitions.

Table 4. Participants’ STS-SRT raw scores by children or adult and parental hearing status

Table 5. Participants’ STS-SRT raw scores by Age of Acquisition Group

Note: AoA = Age of Acquisition.

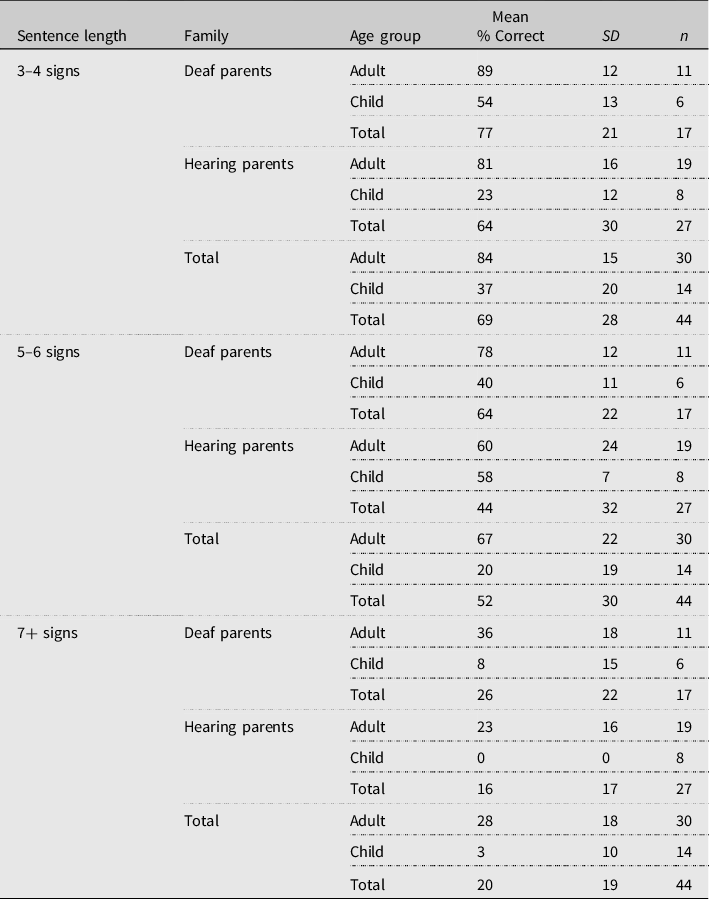

Table 6. Percent correct repetitions based on sentence length by family and age group

The Mixed-Effects ANOVA Analysis in Minitab was able to run the full model by using a restricted maximum likelihood method of variance estimation. However, this model suffered from multicollinearity. Therefore, the Akaike Information Criterion corrected for small sample size (AICc) was used to build the appropriate model in order to evaluate the effects of a base set of variables related to STS-SRT performance, while accounting for subject variance and avoiding multicollinearity. Modified Forward Selection is a model-building technique in which a base set of variables (Source, Number of Signs, Source × Number of Signs) appears in each model, and additional variables are considered one at a time, starting with Child_Adult, Family, Age, AoA, AoA_Group, and Years_Signing in Round 1. Child_Adult had the lowest AICc (92.24) and, hence, was added to the base set of variables for Round 2. In Round 2, among all potential models with at most one additional variable over the base set of variables, the model with AoA_Group had the lowest AICc (80.64) and was added to the base set of variables for Round 3. In Round 3, the model with no additional variables had the lowest AICc (80.64), so Model 3 was used in the following analyses to determine the main effects and coefficients of the variables.
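The selection logic can be illustrated with a short sketch. It is not the Minitab procedure itself: the function names are hypothetical, and the model-fitting step is abstracted into a `score` callable that returns the AICc of a model containing a given set of variables. The AICc formula used is the standard small-sample correction, AICc = −2 log L + 2k + 2k(k + 1)/(n − k − 1).

```python
from typing import Callable, FrozenSet, List, Sequence, Tuple


def aicc(neg2_loglik: float, k: int, n: int) -> float:
    """Small-sample corrected AIC, given -2 log likelihood, the number
    of estimated parameters k, and the number of observations n."""
    return neg2_loglik + 2 * k + (2 * k * (k + 1)) / (n - k - 1)


def modified_forward_selection(
    base: Sequence[str],
    candidates: Sequence[str],
    score: Callable[[FrozenSet[str]], float],
) -> Tuple[List[str], float]:
    """Keep `base` in every model and add candidates one at a time.

    In each round, every remaining candidate is tried as a single
    addition; the one with the lowest score (AICc) is kept only if it
    beats the current model. Selection stops when no addition helps.
    """
    selected = list(base)
    remaining = list(candidates)
    best = score(frozenset(selected))
    while remaining:
        trial_score, trial_var = min(
            (score(frozenset(selected + [c])), c) for c in remaining
        )
        if trial_score >= best:
            break  # the model with no additional variable is preferred
        selected.append(trial_var)
        remaining.remove(trial_var)
        best = trial_score
    return selected, best


# Consistency check under two assumptions that are ours, not stated in
# the text: k = 2 estimated variance parameters and n = 396 observations
# (44 participants x 9 Source/Number of Signs combinations).
model3_aicc = aicc(76.612, k=2, n=396)  # approximately 80.64
```

Under those assumed values of k and n, the −2 log likelihood of 76.612 reported for Model 3 yields an AICc of about 80.64, in line with the reported value.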

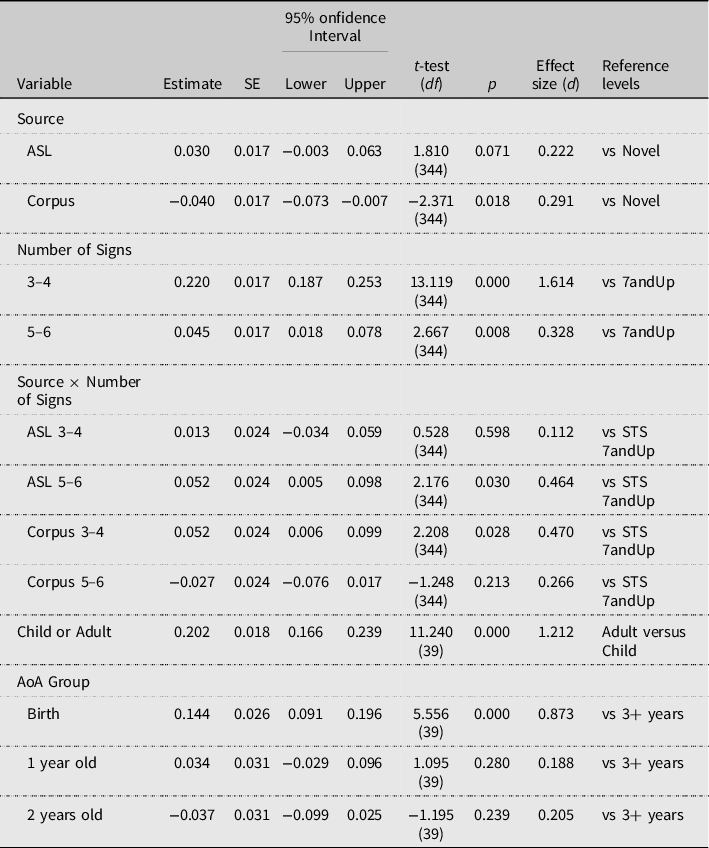

With a −2 log likelihood of 76.612, it was found that ID accounted for 9.93% of the estimated subjects’ variance (z = 2.169, p = .015), leaving a substantial amount of between-subject variability, 90.07% (z = 13.115, p < .001). Main effects were found for all fixed effects: Source F(2, 344) = 3.07, p = .048, ηp² = 0.018; Number of Signs F(2, 344) = 142.80, p < .001, ηp² = 0.454; Source × Number of Signs F(4, 344) = 4.61, p < .001, ηp² = 0.051; Child_Adult F(1, 39) = 126.33, p < .001, ηp² = 0.764; and AoA_Group F(3, 39) = 12.11, p < .001, ηp² = 0.482. Model 3 accounted for 65.47% of the residual variance (S = 0.236, AIC = 80.64, BIC = 88.51). See Table 7 for a summary of the results. Partial eta-squared is the variance explained by a given variable of the variance remaining after excluding variance explained by other predictors.
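The partial eta-squared values can be recovered from the reported F statistics and degrees of freedom through the standard identity ηp² = (F × df_effect) / (F × df_effect + df_error). The short sketch below (with a hypothetical function name) is offered as a reader's check rather than as part of the original analysis.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta-squared computed from an F statistic and its
    numerator (effect) and denominator (error) degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Values taken from the F tests reported above.
reported = {
    "Source": (3.07, 2, 344),
    "Number of Signs": (142.80, 2, 344),
    "Source x Number of Signs": (4.61, 4, 344),
    "Child_Adult": (126.33, 1, 39),
    "AoA_Group": (12.11, 3, 39),
}
effect_sizes = {
    name: round(partial_eta_squared(*stats), 3)
    for name, stats in reported.items()
}
```

Applied to the five effects, the identity reproduces the reported values to three decimals (0.018, 0.454, 0.051, 0.764, and 0.482).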

Table 7. Coefficients for percent correct STS-SRT repetitions

Adults scored 20.2% higher than children, and earlier AoA was associated with higher scores. With the base level for AoA Group being 3+ years, participants who were exposed to STS from birth performed 14.4% better than those who acquired STS when they were 3 years old or older. Those who acquired STS around the age of 1 or 2 years old did not have significantly more correct repetitions than those who acquired the language later. With the base level for Source being STS, participants performed 3.0% better on the sentences that were translated from the ASL-SRT than on the STS sentences that the team had developed. However, correct reproduction was 4.0% less on the sentences developed from the STS corpus than on items developed by the team. The results also indicated that participants performed 22.0% better on items of 3–4 signs than on items of 7+ signs, but only 4.5% better on items of 5–6 signs.

Discussion

Similar to the ASL-SRT (Hauser et al., 2008), the STS-SRT finds that adults produce more correct repetitions than children, and those with early AoA produce more correct repetitions than those with delayed STS acquisition. The authors utilize the argument-based validation framework (Chapelle, 2020; Knoch & Chapelle, 2018) to describe the validity of STS-SRT interpretation. This framework requires test developers to be explicit about the claims and inferences they make about the test, and about its interpretation and use. Here, we make evaluation inferences (Kane, 2006) that the STS-SRT is a good measure of STS proficiency both because of how it was developed and because of its results. The sentences were carefully crafted by qualified individuals with extensive STS linguistic knowledge, and demonstrated excellent internal consistency. The scoring protocol supported this with excellent inter-rater reliability. The inference that the test is good was further supported by results demonstrating that the translated, corpus, and novel sentences did not impact sentence repetition differently. This is an explanation inference, about why the test scores represent an individual’s language proficiency. It supports past research suggesting that the SRT measures language, not memory per se. This inference was supported by results demonstrating that native signers perform better than non-native signers and that adults perform better than children. The sign span results also provide evidence to support explanation inferences about the results because this study demonstrated that longer sentences cannot be reproduced with rote memory alone, providing support that implicit linguistic knowledge is needed to correctly repeat sentences.

Age of Acquisition accounted for 14.4% of the variance of the STS-SRT participants’ correct repetitions. It is unclear what explains the remaining variance, although in the USA, Stone et al. (2015) found that AoA explained only 15.2% of the variance in ASL-SRT correct repetitions. Our hypothesis is that there are fewer individuals in Sweden who experience language acquisition delays compared to in other countries because Sweden has a relatively long history with an infrastructure of sign language intervention for hearing parents. This intervention includes teaching STS to hearing parents of deaf children during the children’s first years. Many deaf children of hearing parents have therefore been exposed to STS from early on and have access to STS at home. As can be seen in the AoA distribution of the participants of this study, there are relatively few participants who have had first exposure to STS after the age of 3. In fact, most deaf children will have been exposed to STS through their entire childhood up to adult years. Nevertheless, it is hard to track the degree of exposure, and one would expect more variability in STS proficiency within that group compared to among deaf children of deaf parents, who are expected to have been exposed to STS from birth.

One limitation of the study is the small sample size. There is no official record of the size of the deaf (since childhood) population in Sweden, but one recurring estimate is 8,000–10,000 people in total (Parkvall, 2015). The deaf population is thus much smaller than in some other countries such as the USA. This makes it difficult to have a large sample size of subgroups within the deaf community such as native signers, who typically represent only 5–10% of the deaf community (Mitchell & Karchmer, 2004). Regardless, no test should be considered valid based on a single psychometric property or publication. Under the argument-based validity framework, we can justify the claim that STS-SRT scores can reveal how well a person is able to comprehend and reproduce STS sentences compared to others. However, more studies are needed to add to the overall construct validity of the STS-SRT as a test of STS proficiency. Future studies of the STS-SRT are needed to determine, for example, test–retest reliability and how claims and inferences about the test’s interpretation and use are validated.

Another possible limitation pertains to the test scoring system itself. There is rigorous research on the importance of nonmanual components for the structure of sign languages, as well as for the emergence of the same in stages of sign language development. However, ASL-SRT inter-rater reliability was poor when the test included the scoring of nonmanual components. The gradual variation of nonmanual components among the signers was highly variable, which made it hard for the raters to detect and rate them. STS-SRT followed the same scoring protocol as ASL-SRT, but added a limited set of nonmanual components in the scoring protocol, namely mouthing and headshaking for grammatical functions, in order to increase the efficiency of rating. It is advisable, however, that future tests include the scoring of the nonmanual components in order to capture sign language proficiency more holistically.

Most importantly, the results of this study support the Critical Period Hypothesis for language acquisition (see Newport et al., 2001 for discussion). The compromised performance by deaf individuals with delayed language exposure, and/or those raised in inaccessible, language-impoverished environments, is a concern because language deprivation is prevalent in the deaf community (Hall, 2017; Mayberry, 2010; Mayberry, Lock & Kazmi, 2002). Research has demonstrated that the early acquisition of sign language is important for cognitive (e.g., Courtin, 2000; Hall et al., 2018; Hauser, Lukomski & Hillman, 2008) and literacy development (e.g., Clark et al., 2016; Stone et al., 2015). The results of this study provide evidence not only of the validity of the STS-SRT, but also that delayed sign language acquisition is happening in Sweden as it is elsewhere.

Conclusion

On the one hand, this study provides straightforward implications for sign language testing; on the other hand, it widens our understanding of language testing more generally. This study proposes an STS test that has excellent reliability and validity, and which can be used by researchers and practitioners to document child and adult STS proficiency. The STS-SRT score can be used as a continuous variable in theoretical, clinical, and applied psycholinguistics and other language and behavioral sciences, as well as by educators and other specialists tracking language development.

Acknowledgments

First of all, we would like to express our gratitude to the following people for their assistance in the STS-SRT development process: Magnus Ryttervik, Joel Bäckström, Mats Jonsson, Pia Simper-Allen, and Thomas Björkstrand. We would also like to thank Okan Kubus and Christian Rathmann for sharing their valuable experience of the development of DGS-SRT. Thanks also to Carol Marchetti and Robert Parody for statistics assistance and Susan Rizzo and Angela Terrill for proofreading the paper. We also express our gratitude to the three anonymous reviewers for their valuable comments on earlier drafts of this paper. Finally, we would like to thank our incredible test takers for participating in the study.

Conflict of Interest

No conflicts were reported.

Ethical Consideration

This STS test development study was approved by the Swedish Ethical Review Board (EPN 2013/1220-31/5). Child data were obtained from another study also approved by the Swedish Ethical Review Board (EPN 2016/469-31/5).