1. Introduction

Bilingualism is intrinsically a multifaceted and heterogeneous construct, relevant to many scientific and applied fields, including linguistics, psychology, neuroscience, education, and speech-language pathology. While these fields might focus on different aspects of bilingualism, their mutual relevance requires a common understanding of how bilingualism is operationalised: interdisciplinary research needs some objective common ground. Furthermore, within each field, research findings need to be replicable, an essential condition for robust science. One example of how the lack of such common ground may complicate our understanding of research findings is the prolific debate on whether bilingualism confers cognitive benefits (e.g., Adesope, Lavin, Thompson & Ungerleider, 2010; Lehtonen, Soveri, Laine, Järvenpää, de Bruin & Antfolk, 2018). Some recent opinion papers have tried to account for the conflicting results and suggested that diverging approaches to the multidimensional nature of bilingualism might be partly responsible for the lack of replicability in findings (Valian, 2015; Bak, 2016). Marian and Hayakawa (2020) and Kremin and Byers-Heinlein (2020) have convincingly argued that bilingualism research would benefit from greater transparency regarding the measures used to operationalise the variables of interest.

Many studies rely on questionnaires to identify the bilingualism profiles of their participants or to estimate how bilingual they are. In the case of children, the information tends to be obtained from parents/caregivers, teachers, and to a lesser extent from the children themselves. While it is now widely acknowledged that several components of bilingualism vary along a continuum (e.g., language proficiency, age of first exposure), data obtained from questionnaires are regularly used to characterise bilinguals through discrete categories, such as simultaneous vs. sequential bilinguals, (child/adult) second language learners, speakers of additional languages, and heritage speakers. In some cases, assignment to a category depends on a relatively arbitrary cut-off point. It is, however, unclear to what extent different questionnaires tap into the same constructs, even when they use identical labels (e.g., language proficiency, language exposure, language mixing).

The variability in documenting bilingualism has been observed in a number of recent reviews. For instance, Surrian and Luk (2017) reviewed 186 studies from 165 empirical articles published between 2005 and 2015. They noted that specific components of bilingual experience were measured to different degrees, and they offered several explanations for this. For instance, whether a given variable was asked about differed depending on the geographic region where the study was conducted. In particular, the language of schooling was reported in all of the studies from Asia, Australia, and Africa, but in only 46% of studies from the US, most likely because the language of schooling was assumed to be English. Similarly, the inclusion of information on language history depended on the age of participants (children vs. adults): language history was reported in 56% of child studies and in 83% of adult studies, which the authors suggested was probably because adults have a longer history to report.

Variability in the documentation of bilingual experience was also observed by Li, Sepanski, and Zhao (2006). In a review of 41 studies, Li et al. (2006) identified the dimensions that most researchers measured through language background questionnaires. Although their review included mostly studies conducted with adults, it informed their creation of a freely available questionnaire (the Language History Questionnaire), which can be adapted for use with children. This questionnaire has since been adopted in many studies, enabling the use of common scales to measure various components of bilingualism, and it has been updated twice (see Li, Zhang, Tsai & Puls, 2014; Li, Zhang, Yu & Zhao, 2019). While Li and colleagues observed that some components of bilingualism were documented less often than others (e.g., writing ability in 36.6% of studies, frequency of speaking L1 at home in 7.3% of studies), they did not review or discuss differences in how these dimensions were operationalised across studies. For instance, reading and speaking ability were each documented in 41.5% of the studies surveyed, but no further information was provided about the comparability of the scales used across studies.

De Bruin (2019) discusses the importance of describing the measures used to quantify bilingualism in relation to the following components: age of acquisition, proficiency, language use, language switching and language context. Her review focuses on a number of questionnaires designed for use with adults (Language Experience and Proficiency Questionnaire, LEAP-Q, Marian, Blumenfeld & Kaushanskaya, 2007; Language and Social Background Questionnaire, LSBQ, Anderson, Mak, Keyvani Chahi & Bialystok, 2018; Bilingual Switching Questionnaire, BSWQ, Rodriguez-Fornells, Krämer, Lorenzo-Seva, Festman & Münte, 2012) and on specific studies (e.g., for age of acquisition: Luk, De Sa & Bialystok, 2011; Pelham & Abrams, 2014; Paap, Johnson & Sawi, 2014; Tao, Marzecová, Taft, Asanowicz & Wodniecka, 2011). While focusing on how the documentation of these components could be improved, de Bruin (2019) illustrates the variability in their operationalisation, especially regarding age of acquisition and proficiency. For instance, age of acquisition can be documented differently across studies: as the start of language acquisition/learning, as the age of arrival in the new country (for immigrants), as the age of fluency in the second language, as the age at which bilinguals started using their languages actively on a daily basis, as the age of first exposure to a language, or as the age at which formal classroom instruction began. Similarly, when proficiency is estimated from questionnaires, the resulting scales can vary widely (e.g., 1–7-point scales, 1–10-point scales).

In sum, previous reviews have considered how frequently specific components of bilingual experience are operationalised or how much variability there is in the operationalisation of particular components of bilingual experience. What is currently missing is a comprehensive review of the components of bilingual experience documented across questionnaires, considering the commonality of the components included as well as how they are operationalised. We aim to fill that gap.

Our study focuses on questionnaires used to document bilingualism in children (0–18 years), as estimating their bilingualism poses a specific set of challenges. These include practical issues, such as generally having to rely on carers’ reports because children are often unable to complete reports themselves. Nevertheless, while we might rely on different informants and while different components of bilingual experience might be documented for children as opposed to adults (see Surrian & Luk, 2017), we expect substantial overlap. As such, our findings will also be relevant to research on adults. The use of questionnaires with adult participants faces similar issues, such as determining the most felicitous ways of grouping bilinguals into specific categories or using questionnaire data to create composite scores that characterise bilinguals.

Our aim is not to argue for or against the use of composite measures, such as language entropy (Gullifer & Titone, 2020) or the continuous factor score method advocated by Anderson et al. (2018). Furthermore, while acknowledging the multidimensional nature of bilingualism, our purpose is neither to discuss the relative importance of each dimension, such as language proficiency (Hulstijn, 2012) or child-level and context-level variables (Byers-Heinlein, Esposito, Winsler, Marian, Castro & Luk, 2019a), nor to argue about the relative merits or the comparability of categorical vs. continuous approaches to bilingualism (Kremin & Byers-Heinlein, 2020). Rather, we will focus on the raw measures used to operationalise bilingualism in order to:

1. provide a list of available questionnaires used to quantify bilingual experience in children;

2. identify the components of bilingual experience that are documented across questionnaires;

3. discuss the comparability of the measures used to operationalise these components.

2. Methodology

2.1 A systematic review

Our initial aim was to conduct a systematic review. To this end, in October 2019, we searched PsycINFO, Embase Classic + Embase, ERIC, Web of Science, and Scopus by using a list of key terms. These were: Key terms 1 [bilingual* OR multilingual*] AND Key terms 2 [questionnaire* OR assess* OR tool* OR measur* OR report* OR estimat* OR rating* OR instrument* OR quantif* OR survey*] AND Key terms 3 [dominance OR proficiency OR ‘length of exposure’ OR ‘length of residency’ OR impairment* OR codeswitching OR code-switching OR code-mixing OR ses OR ‘parental education’ OR ‘input quality’ OR ‘input quantity’ OR ‘diversity of linguistic environment’] AND Key terms 4 [child* OR pupil* OR kid*]. This yielded a large number of papers across databases: PsycINFO (1,098), Embase Classic + Embase (418), ERIC (1,442), Web of Science (1,026), Scopus (655).
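The search string above combines terms with OR within each bracketed group and with AND across groups. The minimal Python sketch below reproduces that composition from the four term lists and sums the database hits reported above. It follows the bracket notation used in this section; real database interfaces differ in syntax (e.g., parentheses, phrase quoting, truncation symbols), so the output is an illustration rather than a query ready to paste. The raw sum counts records retrieved from several databases, so duplicates across databases are not removed.

```python
# Key-term groups as listed in Section 2.1 (bracket notation, not a database's native syntax).
KEY_TERMS = [
    ["bilingual*", "multilingual*"],
    ["questionnaire*", "assess*", "tool*", "measur*", "report*", "estimat*",
     "rating*", "instrument*", "quantif*", "survey*"],
    ["dominance", "proficiency", "'length of exposure'", "'length of residency'",
     "impairment*", "codeswitching", "code-switching", "code-mixing", "ses",
     "'parental education'", "'input quality'", "'input quantity'",
     "'diversity of linguistic environment'"],
    ["child*", "pupil*", "kid*"],
]

# Records retrieved per database, as reported in Section 2.1.
HITS = {
    "PsycINFO": 1098,
    "Embase Classic + Embase": 418,
    "ERIC": 1442,
    "Web of Science": 1026,
    "Scopus": 655,
}


def build_query(groups):
    """Join terms with OR inside each group, then join the groups with AND."""
    return " AND ".join("[" + " OR ".join(terms) + "]" for terms in groups)


query = build_query(KEY_TERMS)
total_hits = sum(HITS.values())  # raw sum, duplicates across databases included

print(query)
print(f"Records retrieved across databases (not de-duplicated): {total_hits:,}")
```

Running it prints the combined string and a raw total of 4,639 records.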

Several reasons prevented us from further pursuing the systematic review, however. First, questionnaires quantifying bilingual experience were often not the focus or the main topic of research articles. Their use was therefore rarely mentioned in the abstract, so identifying them required scanning entire papers. Second, the very large range of research topics associated with the operationalisation of bilingualism made it extremely challenging (if not impossible) to capture them all with a single search string. Third, even among studies reporting the use of questionnaires to quantify bilingual experience, the questionnaires themselves were often neither included in the papers nor otherwise available. As an illustration, in the review by Li et al. (2006), only 7 of the 41 studies which used questionnaires included them in the publication. These challenges led us to adopt an alternative approach to identifying relevant questionnaires.

2.2 An alternative approach

Thirteen questionnaires were already available to us at the beginning of the review process (these questionnaires are marked with an asterisk in section A of the supplementary material). We created a Google Form survey open to all bilingualism researchers, asking them whether they had used any of these 13 questionnaires, modifications of these questionnaires, or any other questionnaires designed by themselves or by anyone else. Respondents were also asked to email us any questionnaires or questionnaire modifications that they were familiar with and that were not on our original list. This survey was advertised on social media (Facebook, Twitter, LinkedIn), via specialist websites, groups and mailing lists (The LINGUIST List, Info-CHILDES, Bi-SLI COST Action) and at the Dutch national research meeting on language development in children (Amsterdam, 20 November 2019). At the same time, we conducted a non-systematic search for available questionnaires across the bilingualism literature, including a Google search using some of the key terms listed in section 2.1, and through the resources available at the UCLA National Heritage Language Resource Center. Finally, several researchers familiar with our review project emailed us their own questionnaires. The collection process ran from October 2019 to June 2020. The number of relevant questionnaires identified, as well as the exclusion criteria, are provided in the results section.

2.3 Analytic strategy

To identify the components of bilingual experience that the questionnaires were designed to document, we adopted an inductive method, allowing categories to emerge from the list of raw measures documented by the questionnaires. Using this method, we identified (a) overarching constructs, (b) specific components of these overarching constructs, and (c) how each component was operationalised. An illustration is provided in Table 1. The coding procedure was as follows: the first author examined each questionnaire and classified the questions into the emerging categories. The first author then repeated the process to check for any inconsistencies in coding. Questions which were difficult to categorise were flagged and discussed between the first and the last author until consensus was reached. In the list of overarching constructs and their components (see supplements, section B), the first author noted any peculiarities in classification and added explanations for components that might not be straightforward to understand. All authors reviewed these notes and commented where further clarification was necessary; the first author then addressed these comments.

Table 1. An example of an overarching construct, its components and operationalisations

In total, 32 overarching constructs were identified, as well as 194 components, each operationalised in a variety of ways. A detailed list of overarching constructs and their components can be found in section B in the supplementary materials. Where necessary, an explanation of what each component embodies is provided.
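The three-level coding scheme (overarching construct, component, operationalisation) maps naturally onto a nested data structure. The Python sketch below is a hypothetical illustration, not the authors’ coding tool: the class names are invented, and the sample entry is taken from the description of ‘frequency of exposure (relative)’ in Section 3.2 rather than from Table 1.

```python
from dataclasses import dataclass, field


@dataclass
class Component:
    """A specific component and the ways questionnaires operationalised it."""
    name: str
    operationalisations: list = field(default_factory=list)


@dataclass
class Construct:
    """An overarching construct grouping related components."""
    name: str
    components: list = field(default_factory=list)


def count_components(constructs):
    """Total number of components across all constructs (194 in this review)."""
    return sum(len(c.components) for c in constructs)


# Hypothetical entry; wording drawn from Section 3.2, not from Table 1.
exposure = Construct(
    name="Language exposure",
    components=[
        Component(
            name="Frequency of exposure (relative)",
            operationalisations=["percentages", "frequency adverbs (never ... always)"],
        ),
    ],
)

print(count_components([exposure]))
```

Keeping operationalisations as a list under each component, rather than as separate components, mirrors the decision to count each component once per questionnaire regardless of how many ways it was operationalised.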

In order to compare questionnaires, we had to adopt a common set of terms, even though these do not necessarily reflect the circumstances of all the communities the questionnaires were intended for. We chose to use the labels home language (HL) and societal language (SL) to refer to the languages of bilinguals. This enabled us to capture a variety of labels in the questionnaires (e.g., mother tongue, L1-L4, language A/B/C, other language, target language, additional language, country language, language X/Y, etc.). Whenever the questionnaire included specific language names (e.g., English and Spanish), we assigned them the label HL or SL depending on the context. For instance, if a questionnaire was created by a US team of researchers and it was aimed at Spanish–English bilinguals in the US, Spanish was labelled as the HL, and English as the SL. While the HL/SL labels are not universally adequate, they provide a practical solution to identify the child's languages for the purpose of this review. For the questionnaires documenting more than two languages, we mapped the two main ones onto HL and SL, and noted that information on one or more other languages was also collected.

Finally, in order to address our third research aim (i.e., discuss the comparability of the measures used to operationalise identified components), we looked into the ways in which questionnaires documented components of the overarching constructs. Due to space limitations, and by way of illustration, we focus on a few overarching constructs which are common across questionnaires: exposure and use, activities, and current skills in HL and SL.

3. Results

3.1 Questionnaires

The first research aim was to provide a list of available questionnaires used to quantify bilingual experience in children. We identified 81 questionnaires, 33 of which had to be excluded from analysis (as shown in Figure 1). Reasons for exclusion included: being designed for quantification of bilingualism in adults (n = 25), being designed for use with foreign language learners (n = 2), inquiring mostly about a specific bilingual school (n = 1), extreme brevity (n = 1; this questionnaire included only three language-related questions), focussing on something other than language (n = 1), focus on speech and language problems rather than bilingual language experience (n = 1), the impossibility of translating the questionnaire (from German) within our timeframe (n = 1), being a duplicate of another questionnaire (n = 1). Before the coding procedure started, all authors agreed that questionnaires designed for use with adults would be excluded, even though in practice they might be adapted for use with children. Other reasons for exclusion were raised by the first author after reading through the questionnaires (e.g., whether to exclude the questionnaire for foreign language learners or the one focusing on a specific bilingual school). These decisions were then discussed and approved by all authors. The remaining 48 questionnaires were included in the analysis.

Figure 1. Number of questionnaires included in and excluded from the review

Of these 48 questionnaires, most were in English (n = 42), 3 had a version in both English and Russian, 1 was in both Spanish and English, 1 was in Spanish only, and 1 was in Dutch only. Note that in practice, some of these questionnaires have translations in other languages; here we report the breakdown of the versions collected in our dataset. Five questionnaires were to be administered to teachers, 4 to children themselves, and the other 39 to parents/caregivers. See section A of the supplementary materials for a complete list of questionnaires (Supplementary Materials).
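The counts reported in this section are internally consistent, which can be checked with simple arithmetic. The snippet below re-adds the reported figures; the dictionary keys are shortened labels of our own, not the wording used in Figure 1.

```python
IDENTIFIED = 81

# Exclusion reasons and counts reported in Section 3.1 (labels abbreviated).
EXCLUSIONS = {
    "designed for adults": 25,
    "designed for foreign language learners": 2,
    "mostly about a specific bilingual school": 1,
    "extreme brevity": 1,
    "focus other than language": 1,
    "focus on speech and language problems": 1,
    "not translatable (German) within timeframe": 1,
    "duplicate": 1,
}

# Language versions collected and intended informants of the included questionnaires.
VERSIONS = {"English": 42, "English and Russian": 3, "Spanish and English": 1,
            "Spanish": 1, "Dutch": 1}
INFORMANTS = {"teachers": 5, "children": 4, "parents/caregivers": 39}

excluded = sum(EXCLUSIONS.values())
included = IDENTIFIED - excluded

# Every breakdown of the included set should account for all included questionnaires.
for label, breakdown in [("versions", VERSIONS), ("informants", INFORMANTS)]:
    assert sum(breakdown.values()) == included, label

print(f"excluded={excluded}, included={included}")
```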

3.2 Documented components across the questionnaires

The second aim of this review was to identify the components of bilingual experience documented across questionnaires. We identified 32 overarching constructs and their 194 components. Given the large number of constructs and components, space limitations prevent us from outlining all of them in the paper. We therefore present those constructs that, in our judgement, are the most informative of an individual's level of bilingualism. These include language exposure and language use (Table 2), current skills in HL and SL (Table 3), and activities in each language (Table 4). Our decision to focus on these particular constructs was also informed by previous work in the field. Among the variables that emerged in Li et al.'s (2006) review of 41 studies that used language history questionnaires, apart from demographics (e.g., age and years of residence), most were related to exposure, use or language skills. Furthermore, input quantity (operationalised through exposure- and use-related variables) and input quality (often estimated through involvement in particular activities) have been shown to play a significant role in language outcomes, depending on the child's age and the context (see Unsworth, 2016).

Table 2. Exposure and use variables documented across 48 questionnaires (number and percentage of questionnaires documenting each component)

Note.

a This component includes operationalisations of exposure up to a certain point in early life, with the exception of one questionnaire, which inquired about exposure until the child's 12th year (which we did not consider early life). The same applies to ‘Early use’ (see below in the same table).

b Most frequent language or interlocutor.

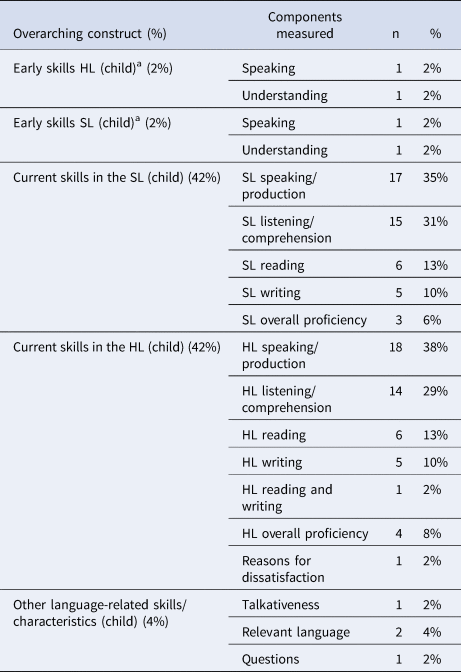

Table 3. Child proficiency variables documented across 48 questionnaires (number and percentage of questionnaires documenting each component)

Note. a Up to a certain point in life.

Table 4. Variables related to activities and context quality documented across 48 questionnaires (number and percentage of questionnaires documenting each component)

Section B of the supplementary material contains a complete list of the overarching constructs and their components, as well as more comprehensive clarifications regarding what each documented component embodies (Supplementary Materials; see Footnote 1). Section C of the supplementary material (Tables S1–S7) outlines the frequency of constructs/components not discussed here (Supplementary Materials). These constructs nonetheless merit attention in future work, as they may prove relevant to specific research questions.

In the first column of each table, next to the name of each overarching construct, we specified what percentage of questionnaires documented that particular construct in at least one way. The second column lists the components of that construct, while the numbers and percentages in the third and the fourth column show how many out of the 48 questionnaires documented each component. Note that the tables do not indicate in how many different ways each component was operationalised, as this varied widely across questionnaires and it would be impossible to visualise in a comprehensive way. No matter in how many different ways a questionnaire operationalised a certain component, it was counted only once. For instance, the frequency of relative exposure was documented in 29 out of 48 questionnaires (see Table 2). However, in some questionnaires, it might be documented in one way only (e.g., with two main caregivers), while in others it might be estimated in several ways (e.g., with household members, in school and outside of school, while reading or while listening, etc.).
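The counting rule described above (a component counts once per questionnaire, however many operationalisations it has) can be made explicit in code. In this Python sketch the coded data are hypothetical toy entries; only the 29-out-of-48 figure comes from Table 2. Percentages are rounded half up with integer arithmetic, an assumption on our part, because Python's built-in `round()` rounds exact halves to the nearest even number.

```python
# Hypothetical coded data: questionnaire ID -> list of (component, operationalisation).
CODED = {
    "Q01": [("frequency of exposure (relative)", "two main caregivers")],
    "Q02": [("frequency of exposure (relative)", "household members"),
            ("frequency of exposure (relative)", "in school"),
            ("frequency of exposure (relative)", "while reading")],
    "Q03": [("language used with interlocutors", "siblings as a group")],
}


def questionnaires_documenting(coded, component):
    """Count questionnaires with at least one operationalisation of `component`."""
    return sum(
        any(name == component for name, _ in items) for items in coded.values()
    )


def as_percentage(count, total):
    """Whole-number percentage, rounded half up using integer arithmetic."""
    return (200 * count + total) // (2 * total)


n = questionnaires_documenting(CODED, "frequency of exposure (relative)")
print(n)                      # -> 2: Q02's three operationalisations count once
print(as_percentage(29, 48))  # -> 60: relative exposure in Table 2
```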

Table 2 relates to exposure and use. While these two constructs are presented separately in the table, the distinction was not always made explicit across questionnaires. Where no (clear) distinction was made between exposure and use, the question was counted under the construct ‘exposure’. Furthermore, we avoided introducing components such as ‘cumulative exposure/use’ or ‘weighted exposure/use’, as the ways in which these composite measures were calculated varied substantially across tools. Rather, we considered raw estimates only, that is, the precise information gathered by the questionnaires. For instance, the component ‘frequency of exposure (relative)’ embodies all questions about the target child's language exposure documented in relative terms (e.g., using percentages, or frequency adverbs such as ‘never’, ‘sometimes’, ‘often’, ‘always’). In some cases, questionnaires asked about the overall relative exposure to a language; in other cases, they did so with respect to a particular type of language experience (e.g., reading). Both were classified here under ‘frequency of exposure (relative)’.

Table 3 shows the components documenting the target child's language skills in the HL and SL (leaving aside other potential languages).

Table 4 relates to the target child's activities, as well as explicit questions about the quality of the child's language context.

As shown in Tables 2–4, as well as Tables S1–S7 in the supplementary materials (see section C, Supplementary Materials), there is substantial variability in which constructs were documented across the 48 questionnaires surveyed. Excluding the demographics of children (in 94% of questionnaires) and the demographics of parents/caregivers/informants (in 75% of questionnaires), only the following constructs were included in more than 50% of reviewed tools: language exposure (96%), language use (73%), type of bilingualism (60%), socioeconomic status (58%), and developmental issues/concerns (56%). Constructs documented in 40–50% of questionnaires include activities (44%), children's current skills in SL and HL (both in 42% of questionnaires), information about siblings (42%), and SL skills/quality of the first caregiver (42%). All other constructs were included in fewer than 40% of questionnaires.

We now turn to the third research aim: estimating the comparability of the measures used to operationalise key components of bilingual experience.

3.3 Comparability of measures used across questionnaires

As justified above, we focus on the components presented in Tables 2 through 4 only: language exposure and language use, current skills in HL and SL, and activities in each language. Our discussion of the components related to language exposure and language use is organised thematically below: relative frequency of exposure and use; language used with interlocutors, in contexts and during activities; age/period of exposure in relation to interlocutors/contexts; time-unit-based measures of exposure and use; language mixing; other exposure- and use-related components. Operationalisations of any components other than these, presented in the tables in section C of the supplementary materials, are referred to where relevant (Supplementary Materials).

Exposure and use

Most questionnaires documented language exposure, but not all documented language use (see Table 2). Furthermore, when language use was documented, it was not always separated from language exposure. For instance, informants might be asked to estimate how frequently the child “hears or speaks” each of their languages. In some cases, although the questionnaire appeared to document language use only, exposure and use were conflated in the response scale. For instance, in the Teacher Questionnaire by Gutiérrez-Clellen and Kreiter (2003), respondents are asked to document the child's ‘language use’, clearly defined as how much the child uses each language. However, the response scale contains descriptors which refer to both speaking and hearing: “0 = Never uses the indicated language. Never hears it.”, “1 = Never uses the indicated language. Hears it very little.”, “2 = Uses the indicated language a little. Hears it sometimes.”, “3 = Uses the indicated language sometimes. Hears it most of the time.”, “4 = Uses the indicated language all of the time. Hears it all of the time.”, “DK = Don't know”. This overlap potentially compromises the accuracy of such measures, as it is unclear which dimension informants will have considered. In turn, this can affect the comparability of measures collected with different questionnaires. In this review, we only classified under ‘language use’ those questions which explicitly referred to the child's output, and only if exposure was documented separately. In other words, when a question about ‘use’ was contrasted with a question about ‘exposure’, we assumed that ‘use’ could be interpreted as a synonym of ‘speak’. When a questionnaire contained a single question targeting ‘use’, with no separate question on exposure, we assumed that the questionnaire designer intended it to mean ‘interact’. In this latter case, the relevant questions were classified under ‘language exposure’.
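The coding rule for separating ‘use’ from ‘exposure’ is a small decision procedure. The sketch below encodes it as we read it from the paragraph above; the function and argument names are hypothetical, and conflated items such as “hears or speaks” fall through to ‘language exposure’ because they do not refer solely to the child's output.

```python
def classify_question(refers_to_child_output: bool,
                      exposure_documented_separately: bool) -> str:
    """Return the construct a question is coded under.

    A question counts as 'language use' only if it explicitly targets the
    child's own output AND the questionnaire documents exposure separately.
    Everything else, including a lone 'use' question (read as 'interact')
    and items conflating hearing and speaking, is coded as exposure.
    """
    if refers_to_child_output and exposure_documented_separately:
        return "language use"
    return "language exposure"


# A 'use' question contrasted with a separate exposure question.
print(classify_question(True, True))    # language use
# A lone 'use' question with no separate exposure question.
print(classify_question(True, False))   # language exposure
# An item such as "hears or speaks": not solely about output.
print(classify_question(False, True))   # language exposure
```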

Relative frequency of exposure and use

When a questionnaire asked about language exposure or use, it was usually in relative terms, that is, comparing the frequency of exposure to, or use of, one language with that of the other. This was the case in 60% of questionnaires for exposure, and in 40% for use. Relative estimates were based on different ways of apprehending language experience: as overall exposure/use (e.g., an estimation of overall exposure to each language), as exposure/use with particular interlocutors (e.g., “Mark how often mother uses each language with the child”), in specific contexts (e.g., exposure to each language at school), or during certain activities (e.g., frequency of reading in each language).

Within each of these approaches to language experience, there was also substantial variability in quantification. For instance, relative exposure to languages in interactions with specific interlocutors was documented in a number of ways. These included 3- to 6-point scales using quantifying adverbs/descriptors, percentage-based questions (e.g., caregiver 1 to child: HL 100% and SL 0%, HL 90% and SL 10%, etc.), a combination of frequency adverbs and percentage scales (e.g., father to child: hardly ever SL and almost always HL = 0% SL, seldom SL and usually HL = 25% SL, etc.), or open-ended questions (e.g., “How often does a sibling speak each language to the target child?”). Even when scales included an identical number of points (e.g., 5-point scales), they varied in how the points were labelled (e.g., De Cat, 2020: always, usually, half the time, rarely, never; Wilson, 2017: never, rarely, sometimes, usually, always; Gunning & Klepousniotou [child questionnaire], n.d.: never, rarely/a bit, half and half, usually/a lot, always). In some cases, these label differences affected the precision of the scale. For instance, the comparability of scales (1) and (2) could be limited across studies, as the highest point in (1) is distributed over the highest two points in (2), leaving the second-highest point in (1) without an equivalent in (2).

(1) never, rarely, sometimes, usually, very often/always (PaBiQ, Tuller, 2015)

(2) never, rarely, sometimes, quite often, always (Parent Questionnaire, Arredondo, 2017)
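The mismatch between scales (1) and (2) can be made concrete with a crosswalk. In the Python sketch below, the crosswalk encodes only the correspondence stated in the text (identical lower three points; the top point of (1) spread over the top two points of (2); no counterpart for ‘usually’). Coding both scales naively as 1 to 5 would treat ‘usually’ and ‘quite often’ as equivalent, which the crosswalk shows is not justified. The names are hypothetical.

```python
SCALE_1 = ["never", "rarely", "sometimes", "usually", "very often/always"]  # (1) PaBiQ
SCALE_2 = ["never", "rarely", "sometimes", "quite often", "always"]         # (2) Arredondo

# Points of scale (2) covered by each point of scale (1), per the reading in the text.
CROSSWALK_1_TO_2 = {
    "never": {"never"},
    "rarely": {"rarely"},
    "sometimes": {"sometimes"},
    "usually": set(),                          # no equivalent in (2)
    "very often/always": {"quite often", "always"},
}


def naive_code(label, scale):
    """Position-based 1-5 coding that ignores what the labels mean."""
    return scale.index(label) + 1


def one_to_one_points(crosswalk):
    """Points of scale (1) that map onto exactly one point of scale (2)."""
    return [point for point, targets in crosswalk.items() if len(targets) == 1]


# Naive coding gives both labels the value 4 ...
print(naive_code("usually", SCALE_1), naive_code("quite often", SCALE_2))
# ... but only the lower three points actually align one-to-one.
print(one_to_one_points(CROSSWALK_1_TO_2))
```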

Potential issues with data comparability also arose with percentage scales, which in principle would be expected to ensure more equivalence between tools. First, the numerical distance between the points on the scale varied across questionnaires (e.g., 10% or 20% in Lyutykh, 2012, vs. 25% in Unsworth, 2013, or in Cattani, Abbot-Smith, Farag, Krott, Arreckx, Dennis & Floccia, 2014). Second, some scales combined percentages and adverbs (Unsworth, 2013; Cattani et al., 2014), while others did not (Lyutykh, 2012). Third, as questions about each of the child's languages were usually kept separate, the percentages could sum to more than 100% (of total language experience). For instance, in documenting the languages used between the child and a caregiver, the caregiver might list 50% for one language, 30% for another and 30% for a third, a total of 110%. Some questionnaires avoid this by combining the two languages in the scale (e.g., Lyutykh, 2012, uses the following scale: HL 100% and SL 0%, HL 90% and SL 10%, etc.), or by automatically calculating one value on the basis of the other (e.g., Unsworth, 2013). Some explicitly state that the percentages of language exposure for each interlocutor should add up to 100% (e.g., Blume & Lust, 2017). The same observations also applied to estimates of the relative frequency of language use.
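The three design options mentioned above (free percentages per language, a paired HL/SL scale, and deriving one value from the other) can be contrasted in a short sketch. The function names are hypothetical, the step sizes (10%, 25%) are taken from the scales cited above, and the 50/30/30 example reproduces the illustration in the text; none of this reproduces the actual questionnaire forms.

```python
def sums_to_100(percentages):
    """Check that per-language percentages for one interlocutor total 100%."""
    return sum(percentages.values()) == 100


def paired_scale(step):
    """Combined HL/SL response options: HL 100%/SL 0%, then HL decreasing by `step`."""
    return [(hl, 100 - hl) for hl in range(100, -1, -step)]


def derive_hl(sl_percent):
    """Two-language case: compute HL from SL so the pair always sums to 100%."""
    return 100 - sl_percent


# Languages asked about separately: nothing prevents a 110% total.
caregiver = {"language A": 50, "language B": 30, "language C": 30}
print(sum(caregiver.values()), sums_to_100(caregiver))  # 110 False

# Paired options make an over-100% answer impossible by construction.
print(paired_scale(10)[:3])   # [(100, 0), (90, 10), (80, 20)]
print(paired_scale(25))       # [(100, 0), (75, 25), (50, 50), (25, 75), (0, 100)]
print(derive_hl(25))          # 75
```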

Finally, variability across questionnaires increased even further when we considered how relative exposure and/or use was operationalised in different contexts (e.g., in school, at home) or during certain activities (e.g., playing games, watching TV, reading or being read to), rather than with specific interlocutors alone.

Language used with interlocutors, in contexts and during activities

Another frequently documented component was the language(s) used in interactions with interlocutors, in specific contexts, and during specific activities (documented for exposure in 65% of questionnaires and for use in 35%). This component differed from the previous one in that it was not estimated in relative terms: for each interlocutor, context or activity, the informant had to specify which language or languages were used. In principle, this component was therefore documented more consistently across the questionnaires. There are, however, two notable points of divergence. The first is that the number of interlocutors, contexts and activities is either pre-specified (e.g., de Diego-Lázaro, 2019; De Houwer, 2009) or open-ended (e.g., DeAnda, Bosch, Poulin-Dubois, Zesiger & Friend, 2016). While the pre-specified approach offers more comparability between datasets collected with the same tool, the open-ended approach offers more flexibility, and consequently less comparability. The second difference relates to how the languages used with or by interlocutors were documented, in particular whether interlocutors were considered separately or as a group. For instance, De Houwer (2009) documents the language that each sibling speaks to the child, while De Cat (2020) asks about the language used with siblings as a group. Similarly, for language use (i.e., the languages the child uses when communicating with interlocutors), Marinis ([parent questionnaire], 2012) asks about siblings as a group, whereas de Diego-Lázaro (2019) allows a separate entry for each sibling.
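The difference between documenting siblings individually and as a group is essentially a difference in data granularity: individual entries can be collapsed into a group answer, but a group answer cannot be split back into individuals. The sketch below illustrates this with hypothetical entries; the aggregation rule (taking the union of languages) is our assumption, not a procedure from any of the questionnaires.

```python
# Hypothetical per-sibling answers: languages each sibling speaks to the child.
per_sibling = {
    "sibling 1": {"HL"},
    "sibling 2": {"HL", "SL"},
    "sibling 3": {"SL"},
}


def collapse_to_group(entries):
    """Union of languages across individuals (assumed aggregation rule)."""
    group = set()
    for languages in entries.values():
        group |= languages
    return group


group_answer = collapse_to_group(per_sibling)
print(sorted(group_answer))  # ['HL', 'SL']
# The group answer alone cannot recover that sibling 1 uses only the HL
# or that sibling 3 uses only the SL.
```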

Age/Period of exposure in relation to interlocutors/contexts

Just over a third of questionnaires documented children's language experience history (or part of it) in relation to specific interlocutors and/or contexts. This was in addition to the 29% of questionnaires that asked about children's language experience history as a whole (see Table S2, ‘Date/Age of exposure/acquisition’). The way in which age/periods of exposure to languages are operationalised in relation to interlocutors and contexts shows diverse levels of granularity. A large set of questionnaires asked about the length of exposure (across different interlocutors or contexts) from a specific age, which is either pre-specified or determined by the informant (Antonijevic-Elliott, n.d.; Antonijevic-Elliott, Lyons, O’ Malley, Meir, Haman, Banasik, Carroll, McMenamin, Rodden & Fitzmaurice, 2020; Scharff-Rethfeldt, 2012a; Arredondo, 2017; Gagarina, Klassert & Topaj [questionnaires for preschool and school children], 2010; Prentza, Kaltsa, Tsimpli & Papadopoulou [questionnaires for children and parents], 2017; De Houwer, 2002; Byers-Heinlein, Schott, Gonzalez-Barrero, Brouillard, Dubé, Laoun-Rubsenstein, Morin-Lessard, Mastroberardino, Jardak, Pour Iliaei, Salama-Siroishka & Tamayo, 2019b; Blumenthal & Julien, 2000; de Diego-Lázaro, 2019).
Another set of questionnaires documented language experience separately for each year of life, with the important difference that the number of years covered varies across questionnaires (the first eight years of life in Peña, Gutiérrez-Clellen, Iglesias, Goldstein & Bedore [BESA:BIOS, Parent], 2018; every year until the child's age at the time of testing in Unsworth, 2013; three years of preschool and five years of primary school in Cohen, 2015a). Finally, other questionnaires asked for this information using age bands (e.g., from birth until 1, between 1 and 3, etc.), either specified by the informant (DeAnda et al., 2016; Blume & Lust, 2017) or pre-specified (De Houwer, 2002; Prentza et al. [parent questionnaire], 2017). In addition to these diverse levels of granularity, information about age/period of exposure was not always documented for all of the child's languages, nor with the same interlocutors or in identical contexts across questionnaires.
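To make the granularity issue concrete, the sketch below converts both a per-year report and an age-band report into a single cumulative figure (full-time-equivalent years of exposure to a language). It is a minimal illustration under stated assumptions, not a procedure taken from any of the questionnaires reviewed: the function names and example values are hypothetical, and age-band reports are assumed to apply uniformly across each band.

```python
# Minimal sketch (hypothetical names and invented values): cumulative exposure
# to one language, in "full-time-equivalent years", from two report formats.

def cumulative_from_yearly(yearly_proportions):
    """Per-year format: one proportion (0-1) of exposure for each year of life."""
    for p in yearly_proportions:
        if not 0.0 <= p <= 1.0:
            raise ValueError("proportions must lie between 0 and 1")
    return sum(yearly_proportions)  # each entry covers exactly one year


def cumulative_from_bands(bands):
    """Age-band format: (start_age, end_age, proportion) tuples.

    Assumes the reported proportion holds uniformly over the whole band.
    """
    total = 0.0
    for start, end, p in bands:
        if end <= start or not 0.0 <= p <= 1.0:
            raise ValueError(f"invalid band: {(start, end, p)}")
        total += (end - start) * p
    return total


# The same (invented) child reported in the two formats.
yearly = [1.0, 1.0, 0.5, 0.5, 0.5, 0.5]           # ages 0-1, 1-2, ..., 5-6
bands = [(0, 1, 1.0), (1, 3, 0.75), (3, 6, 0.5)]  # coarser age bands

print(cumulative_from_yearly(yearly))
print(cumulative_from_bands(bands))
```

Both reports give 4.0 years here, but only because the informant averaged the two years within the 1-3 band; the band format also hides the drop in exposure at age 2, so any measure sensitive to the timing of exposure cannot be recovered from it.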

Time-unit-based measures of exposure and use

Questionnaires varied substantially in the time units they used. For instance, language exposure could be documented as hours per day (in 42% of questionnaires; see footnote 2), hours per week (in 33% of questionnaires) or weeks per year (in 2% of questionnaires). The average number of waking hours was rarely documented (in 13% of questionnaires). Questionnaires also varied as to whether they asked about the time that the child spends with specific interlocutors (e.g., Gutiérrez-Clellen & Kreiter [parent questionnaire], 2003), the time that the child is in contact with a specific language (e.g., Cohen, 2015a, 2015b), or the time spent in each language with an interlocutor or doing an activity (e.g., de Diego-Lázaro, 2019). Furthermore, daily estimates were documented in different ways. Some questionnaires ask about each day of the week (e.g., Gutiérrez-Clellen & Kreiter [parent questionnaire], 2003; Blume & Lust, 2017), while others further distinguish term-time weeks from holiday weeks (e.g., Cohen, 2015a, 2015b). One questionnaire documents hours per day for each day of the week, but each day is recorded in a separate week (De Houwer & Bornstein, 2003). Other daily estimates included hours per day on an average weekday and on an average weekend day (e.g., De Cat, 2020), or average hours per day spent on an activity (e.g., watching TV) on school days and, separately, at weekends (e.g., De Houwer, 2002).
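Because exposure is reported in different time units, data pooled across tools first have to be converted to a common unit. The sketch below is a hypothetical illustration, not the scoring procedure of any questionnaire cited: it converts the formats described above into hours per week. The 5 + 2 weekday/weekend split, the 39 term-time weeks per year and the waking-hours figure are assumptions chosen for the example.

```python
# Hypothetical harmonisation of time-unit-based exposure reports into hours per
# week. The constants below are illustrative assumptions, not standards.
WEEKDAYS, WEEKEND_DAYS = 5, 2
WEEKS_PER_YEAR = 52


def weekly_from_daily(hours_per_day):
    """'Hours per day' format: assumes every day of the week is alike."""
    return hours_per_day * (WEEKDAYS + WEEKEND_DAYS)


def weekly_from_weekday_weekend(weekday_hours, weekend_hours):
    """Separate estimates for an average weekday and an average weekend day."""
    return weekday_hours * WEEKDAYS + weekend_hours * WEEKEND_DAYS


def weekly_from_each_day(hours_by_day):
    """One estimate for each day of the week (seven values)."""
    if len(hours_by_day) != 7:
        raise ValueError("expected one value per day of the week")
    return sum(hours_by_day)


def weekly_from_term_and_holiday(term_weekly, holiday_weekly, term_weeks=39):
    """Year-round weekly average when term-time and holiday weeks are reported
    separately; 39 term weeks is an assumed, school-system-specific value."""
    holiday_weeks = WEEKS_PER_YEAR - term_weeks
    return (term_weekly * term_weeks + holiday_weekly * holiday_weeks) / WEEKS_PER_YEAR


def share_of_waking_time(weekly_hours, waking_hours_per_day):
    """Proportion of waking time; only computable if waking hours are known."""
    return weekly_hours / (waking_hours_per_day * 7)


print(weekly_from_daily(3))                         # 21
print(weekly_from_weekday_weekend(2, 6))            # 22
print(weekly_from_each_day([2, 2, 2, 2, 2, 6, 6]))  # 22
print(weekly_from_term_and_holiday(20, 33))         # 23.25
print(share_of_waking_time(21, 12))                 # 0.25
```

Note that the last step, expressing exposure as a share of the child's day, depends on the number of waking hours, which few questionnaires collect.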

Language mixing

We adopt a broad interpretation of the term ‘language mixing’, encompassing what is variously referred to as ‘code-switching’, ‘code-mixing’, ‘language mixing’, ‘language switching’, ‘borrowing’, etc. Although language mixing is receiving increasing attention in the child bilingualism literature, only 13% of questionnaires asked about it with reference to children's language exposure, and 17% with reference to their language use. How it was documented varied substantially across questionnaires.

For exposure, this component was documented through questions targeting: interlocutors’ mixing when speaking with the child (each interlocutor separately; Blume & Lust, 2017), parents using both languages in conversation with the child (De Houwer, 2017), the relative frequency of code-switching by each parent with the child (Wilson, 2017), mixing rules in the home (Read, Contreras, Rodriguez & Jara, 2020), the caregiver's frequency of borrowing, switching or translating a word/phrase when with the child (Read et al., 2020), the caregiver responding in a language different from the one in which the child speaks (De Houwer, 2017; Prentza et al. [parent questionnaire], 2017), or the frequency with which the caregiver switches languages within a sentence, mixes languages in general, and borrows a word from the other language (Byers-Heinlein, 2013).

For language use, there was even more variability in how language mixing was documented. Questions targeted: language mixing with each interlocutor separately (Blume & Lust, 2017), types of language mixing (Blume & Lust, 2017; Scharff-Rethfeldt, 2012a), the relative frequency of the child code-switching with each parent separately (Wilson, 2017), the child mixing languages when speaking (Özturk, n.d.), the frequency of children's mixing in speaking and writing (Lyutykh, 2012), the child mixing in the company of bilinguals or monolinguals (Scharff-Rethfeldt, 2012a), starting a sentence in one language and finishing it in another (Gunning & Klepousniotou [child questionnaire], n.d.), the frequency of using both languages in a single sentence (De Houwer, 2017), the ease of language switching (De Houwer, 2017), the frequency of responding in one language when addressed in another (in both speaking and writing; Lyutykh, 2012), the frequency of translating words from one language to another (Lyutykh, 2012), the frequency of using words from one language when speaking another (Prentza et al. [parent questionnaire], 2017), and the child not using the language that the parents speak to them (De Houwer, 2017).

Other exposure- and use-related components

A number of additional components relating to language exposure and use were also documented. Some of these were relatively frequent, such as the following exposure-related components: number of interlocutors (31%), early exposure (19%) and changes in exposure (25%). Questionnaires varied in the range of interlocutors targeted: for example, interlocutors in the home and at (pre)school (Gagarina et al. [questionnaire for school children], 2010), or frequent interlocutors in other settings (e.g., Blume & Lust, 2017). Questions about early exposure differed mostly in terms of the age up to which information was documented: for instance, before the age of 4 (e.g., DeAnda et al., 2016; Tuller, 2015), before preschool (e.g., Gagarina et al. [questionnaires for preschool and school children], 2010), within the first two years (e.g., Scharff-Rethfeldt, 2012a) or until the age of eight (e.g., Gutiérrez-Clellen & Kreiter [parent questionnaire], 2003). Changes in exposure were also documented in a variety of ways, such as changes over the years in the languages used by the adults or children in the household (e.g., Antonijevic-Elliott et al., 2020), changes in the language situation before entry into preschool (e.g., Gagarina et al. [questionnaires for preschool and school children], 2010), or languages that the child no longer hears or needs (e.g., Scharff-Rethfeldt, 2012a).

Finally, several components of exposure and use were documented less frequently. For language exposure, these included changes in the number of interlocutors (2%), nativeness of interlocutors (4%), dialect of interlocutors/exposure (10%), and other interlocutor-related questions, mostly about friends’ demographics (6%). For language use, they included questions about early use (2%), changes in use (2%), and the language the child speaks most or the interlocutor the child speaks to most (4%).

In the following section, we present components related to the documentation of activities, some of which have already been included in the exposure/use section.

Activities

Under the overarching construct ‘activities’, nine different components emerged. As Table 4 illustrates, these components were operationalised in only 2-25% of questionnaires. These low percentages are mostly due to our approach to classifying activity-related questions. Specifically, as explained in a previous section, given that involvement in activities often reflects a child's exposure and use, many activity-related questions were classified under exposure- and use-related components. This was done whenever the frequency of doing a specific activity was operationalised in one of the following ways: in relative terms (e.g., in HL always, in SL never), in hours per day or hours per week, or in terms of the language used during the activity (with an interlocutor or in a context). These patterns of documenting frequency emerged quite clearly under the constructs of exposure and use during the inductive coding procedure, so we classified activity-related questions of this kind under those categories. The purpose of this approach was to classify measures consistently in their raw form (i.e., in the manner in which they were documented or asked about), rather than relying on whether a specific questionnaire treated involvement in a given activity as part of the exposure/use quantification (in one of the ways listed above) or as an estimate of another domain (e.g., exposure/use richness, exposure/use quality). The operationalisations listed under the activity-related components in Table 4 therefore include (a) questions that queried the frequency of activities in a way different from the operationalisations under the constructs of exposure and use (listed above), and (b) any other activity-related questions, such as the language of the written material in the house, attendance at HL/SL classes, preferred activities, etc.

The most commonly documented activity component was ‘literacy and other activities in HL and/or SL’ (in 25% of questionnaires). Operationalisations encountered under this component included: the number of times per week of reading, using a computer, watching TV, watching movies, storytelling or singing songs, separately for SL and HL (Paradis, 2011); the languages in which written material (e.g., books, newspapers, periodicals) is available at home (Blume & Lust, 2017); the number of books in the home (if any) for SL and HL separately (either fewer than 10 or more than 10; McKendry & Murphy, 2011); the frequency of parents borrowing or buying books in each language, rated on a scale of never, a few times a year, 1-2 times a month, 1-2 times a week, 4-5 times a week, usually daily (Cohen, 2015a, 2015b); using computers outside school for games, for staying in touch with family or with friends, or for looking at websites (McKendry & Murphy, 2011); and the alphabet used when texting or emailing in HL (HL alphabet, Latin alphabet, both; Prentza et al. [child questionnaire], 2017). In addition, other frequency-related questions used diverse scales, asking about the number of times per week, month or year (or a combination of these) that the activity was conducted. There were also various operationalisations concerning the written material in the home.

Reading in HL and/or SL was the next most frequently documented activity (in 17% of questionnaires). Questions included asking parents to rate their agreement with the statement that they read to their child at least twice a week (completely agree, more or less agree, not quite agree, entirely disagree; De Houwer, 2017), or about the frequency of reading activities in each language separately (never or almost never, at least once a week, every day; Tuller, 2015). Sometimes the frequency of reading was documented separately for the child reading alone and for parents/caregivers reading with the child (e.g., Cohen, 2015a, 2015b, on a 6-point scale; Arredondo, 2017, on a 4-point scale). There were also questions about who reads most with the child and whether the child's comments/questions during a story depend on the language of reading (Read et al., 2020). Other examples included questions about where the child started learning to read in each language (Arredondo, 2017); whether anyone reads to the child, from what age, and how often during the preschool years (De Houwer, 2002); and whether the child is learning to read in HL, who teaches them and how often (McKendry & Murphy, 2011). In one case, informants chose between five age bands to indicate when they started and stopped reading to the child (language not specified; Arredondo, 2017).

Courses/classes and travel/holidays were documented in 15% of the questionnaires. Operationalisations of courses/classes mostly included questions about instruction in HL/SL, or about any other instruction or classes that the child attends. For instance, Lyutykh (2012) asked about SL classes/courses at school, gifted/advanced placement classes, weekend school, formal instruction in HL reading, writing or language study, textbooks used in HL study, benefits of HL weekend school, reasons for not attending HL weekend school, attendance at any weekend, ethnic or religious school other than the HL one, and aspects of the HL weekend school that parents disliked. Other questions targeted: whether there was a place of formal instruction in HL (Arredondo, 2017), reasons for choosing additional HL or SL lessons (Prentza et al. [parent questionnaire], 2017), whether children attended a bilingual school and parents’ opinions of this (Özturk, n.d.), the person teaching the child a specific language for religious purposes, as well as the frequency of this activity (McKendry & Murphy, 2011), or whether children attended an HL school (Antonijevic-Elliott, n.d.). Travel/holidays were mostly operationalised as the frequency of visits to the HL country or of visitors from the HL country. It should be noted that one holiday-related operationalisation (in Wilson, 2017) was classified under the construct ‘Socioeconomic status’ (SES), as this question was part of the child's SES estimation (see Table S4).

Several other activity-related components were documented across the questionnaires, but with such low frequency that we do not discuss them in detail here. These included preferred activities (4%), ease of learning new things (2%), activity patterns (2%), extra-curricular activities (6%) and other activities (4%).

To sum up, the various components documenting a child's activities were operationalised in diverse ways. This illustrates how difficult it is to compare data collected with different tools. This diversity further increases when we factor in the various ways in which activities classified under the constructs ‘exposure’ and ‘use’ were operationalised (see section Exposure and use).

Current skills in HL and SL

As shown in Table 3, when it comes to children's current skills in HL and in SL (each estimated by 42% of questionnaires), the most frequently documented components included speaking/production (in 35% of questionnaires for SL, and in 38% for HL) and listening/comprehension (in 31% of questionnaires for SL, and in 29% for HL). Speaking/production was assessed on a variety of scales across the questionnaires. These ranged from 3-point scales to 10-point scales for both HL and SL, and they also included binary questions such as the one in Blume and Lust (2017) about whether the production/comprehension in all languages was appropriate. This diversity makes it highly unlikely that the data collected with these tools will be readily comparable.

Even when scales of the same length were used, the point descriptors could vary widely between tools. This can be illustrated by comparing the 5-point scales used to assess SL speaking/production. Some scales were based on general qualifiers of proficiency (e.g., de Diego-Lázaro, 2019: very poor, poor, acceptable, good, very good; Prentza et al. [parent questionnaire], 2017: not at all, a little, adequately, well, very well) and could therefore be considered rather similar. However, data collected with these tools are much less comparable with the SL speaking proficiency documented by Özturk's (n.d.) questionnaire, which used the following descriptors, each illustrated with real-life examples: not fluent, limited fluency, somewhat fluent, quite fluent, very fluent. Apart from questions about speaking/production skills in general, this component also included questions targeting more specific skills, such as vocabulary proficiency, speech proficiency and grammatical proficiency (Peña et al. [BESA:ITALK at school and at home], 2018), the ability to produce sentences of a certain complexity (De Houwer, 2017), or expressive language skills in relation to specific interlocutor groups or specific contexts (Restrepo, 1998).
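A common first step when pooling ratings from scales of different lengths is linear (min-max) rescaling onto a 0-1 range. The hypothetical sketch below shows why this is not sufficient on its own: it aligns the scale endpoints, but it maps ‘acceptable’ (de Diego-Lázaro, 2019) and ‘somewhat fluent’ (Özturk, n.d.) to exactly the same value, although nothing guarantees that informants interpret these descriptors equivalently. The function and variable names are illustrative only.

```python
# Hypothetical illustration: linear rescaling of ordinal ratings onto [0, 1].
# Descriptor lists are the 5-point SL speaking scales discussed above.

DE_DIEGO_LAZARO = ["very poor", "poor", "acceptable", "good", "very good"]
OZTURK = ["not fluent", "limited fluency", "somewhat fluent",
          "quite fluent", "very fluent"]


def rescale(rating, n_points):
    """Map a rating on a 1..n_points scale linearly onto [0, 1]."""
    if n_points < 2 or not 1 <= rating <= n_points:
        raise ValueError("rating must lie between 1 and n_points (n_points >= 2)")
    return (rating - 1) / (n_points - 1)


def rescale_label(label, descriptors):
    """Rescale a verbal descriptor by its position on its own scale."""
    return rescale(descriptors.index(label) + 1, len(descriptors))


# Different descriptors, identical rescaled values:
print(rescale_label("acceptable", DE_DIEGO_LAZARO))  # 0.5
print(rescale_label("somewhat fluent", OZTURK))      # 0.5
# Scales of different lengths: the midpoint of a 3-point scale is also 0.5,
# whereas no point of a 10-point scale maps to 0.5 at all.
print(rescale(2, 3))                                 # 0.5
print(rescale(5, 10), rescale(6, 10))
```

Rescaling makes the numbers commensurable, but whether the points themselves correspond is an empirical question that the arithmetic cannot settle.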

When it comes to SL listening/comprehension, most operationalisations involved rating understanding skills in general. However, there were also examples of rating understanding or receptive language in specific situations or contexts, or with certain interlocutor groups (e.g., Blume & Lust, 2017; Prentza et al. [parent questionnaire], 2017; Marinis [parent questionnaire], 2012; Restrepo, 1998). The points regarding variability illustrated above also apply to the assessment of HL speaking/production and HL listening/comprehension.

Reading and writing skills were rarely documented: for each language separately, reading was documented in 13% of questionnaires and writing in only 10%. One further operationalisation asked jointly about reading and writing in HL. Although only a few questionnaires documented these skills, the assessment scales varied considerably, including 3- to 6-point scales as well as binary questions, such as whether the child can read/write.

Finally, 6% of questionnaires asked about overall SL proficiency (4- to 6-point scales), while 8% documented overall HL proficiency (4- to 6-point scales). In addition, one questionnaire contained an open-ended question about the reasons for parental dissatisfaction with a child's HL and why the child might differ from children in the HL country (Paradis, Emmerzael & Sorenson Duncan, 2010); this question was classified under a separate component, ‘reasons for dissatisfaction’.

It is important to mention that some of the operationalisations of the component ‘language problems’ (classified under the construct ‘developmental issues/concerns’, see Table S5) included questions about children's current language skills. Examples include questions about how often children struggled with reading/writing/listening/speaking activities (Gunning & Klepousniotou [teacher questionnaire], n.d.), difficulties in understanding the child because of the way he or she says words (Antonijevic-Elliott, n.d.; Antonijevic-Elliott et al., 2020), and concerns about and descriptions of the way in which the child talks (Peña et al. [BESA:ITALK home], 2018), among others. However, as these operationalisations focused on language-related issues, delays, struggles, worries, concerns, frustration, problems, difficulties, or diagnosis of language impairment/delay, they were classified under language problems rather than under current skills. Nevertheless, they can provide useful insight into a child's language abilities or lack thereof.

4. Discussion

In this paper, we have reviewed the extent to which the descriptive tools used in bilingualism research tap into the same constructs and yield comparable measures, focusing on questionnaires designed to document bilingualism in children (age range: 0-18 years). This is the first comprehensive review of the components of bilingual experience across questionnaires that has considered the commonality of the components documented as well as how they are operationalised. As per our three aims, we have (i) surveyed available questionnaires used to quantify bilingual experience in children; (ii) identified (and classified) the components of bilingual experience that are documented across questionnaires; and (iii) discussed the comparability of the measures used to operationalise some of the most important components.

Most of the tools surveyed have been used to inform research in developmental psycholinguistics. Given the practical impossibility of a truly systematic literature review to inform our survey, and in spite of our attempts to be fully comprehensive, the sample of questionnaires we were able to find may therefore be biased towards this field. The importance attributed to language exposure and use in our comparability analysis might reflect such a bias. Nevertheless, we trust that our review will be beneficial for bilingualism research in its broadest definition.

With these caveats in mind, our review has highlighted the components most frequently documented across questionnaires (expressed as percentages in our summary tables). This revealed that, in spite of the different research aims across studies, there is broad common ground in which manifestations of bilingualism are documented. Of particular note are the quantification of language exposure and use and (albeit less frequently) the documentation of language difficulties the child may experience. Many factors may help explain the considerable variability in how these components are documented, including the broad age range the various questionnaires are intended for and the different levels of heterogeneity of the populations targeted. Furthermore, the reviewed questionnaires were of different lengths. This suggests that the constructs and components included most frequently across tools (even in the shorter ones) might be those that questionnaire designers consider most important.

Other components of bilingualism are less frequently documented across questionnaires, but we do not think that this can be meaningfully interpreted. Such differences do not necessarily reflect how frequently components are documented across studies, as some questionnaires are used more frequently than others. Importantly, given the potential bias in questionnaire selection pointed out above, we do not mean to argue for any ranking of measures. Furthermore, the tools used to document bilingualism evolve along with research questions in the field, and components such as ‘language mixing’ (see Byers-Heinlein, 2013) or ‘the quality of language exposure’ (see, for instance, the double special issue on this topic in the Journal of Child Language; Blom & Soderstrom, 2020) might become more prevalent in the future.

Importantly, this review has uncovered differences in the operationalisation of apparently similar constructs. Such differences can jeopardise the comparability of the resulting measures (Marian & Hayakawa, 2020). For instance, careful scrutiny has revealed the difficulty of documenting language exposure and language use separately: even when a questionnaire is designed to document one of them, the relevant questions may be insufficiently clear in their formulation, making the resulting measure a conflation of exposure and use. Another case in point is the considerable variability in the way questionnaires collect language exposure measures: not all break this construct down by interlocutor and/or by context, and the number of interlocutors and contexts considered also varies. Furthermore, the amount of time spent with each interlocutor or in each context is not always documented. Importantly, there is no consensus on how to deal with overlap between interlocutors. Is overall language exposure split between interlocutors, and if so, should each interlocutor be given equal weight? What if different languages are used by different interlocutors present at the same time? There are also differences in the types of response scales used, in terms of measure (e.g., percentages, frequency adverbs) and granularity (e.g., number of points on a Likert scale), and these choices may affect the degree of measurement error. The precision of the scale should in principle affect the precision of the resulting measure, but we have to bear in mind that what is being documented is based on recollections, filtered through the respondent's generalisation and quantification abilities. The impact of scale precision on the degree of measurement error is an empirical question we aim to address in future research.
For now, we agree with Kremin and Byers-Heinlein (2020) that the field of bilingualism could benefit from advances in psychometric research methods. Models such as the factor mixture model or the grade-of-membership model might offer a better way of defining bilingualism. As explained by Kremin and Byers-Heinlein (2020), the factor mixture model captures variation within categories such as bilingual and monolingual, while the grade-of-membership model allows individuals to belong to different categories to varying degrees. Based on the work presented here, we argue that the building blocks of those models (i.e., the raw measures) require further scrutiny.
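One such building block is the per-interlocutor exposure estimate, and the open question of how to combine such estimates can be made concrete. The sketch below contrasts two plausible aggregation rules for one invented child: an unweighted mean, and a mean weighted by the hours spent with each interlocutor. The interlocutor labels, proportions and hours are hypothetical, and the time-weighted rule silently assumes that the reported hours do not overlap, which is precisely what is unclear when several interlocutors are present at once.

```python
# Hypothetical per-interlocutor data for one child: proportion of input in the
# home language (HL) and hours per week spent with each interlocutor.
hl_share = {"mother": 0.9, "father": 0.2, "sibling": 0.1}
hours_per_week = {"mother": 30, "father": 10, "sibling": 20}


def equal_weight_hl(shares):
    """Every interlocutor counts the same, regardless of time spent together."""
    return sum(shares.values()) / len(shares)


def time_weighted_hl(shares, hours):
    """Weight each interlocutor by hours spent with the child.

    Assumes the hours are non-overlapping; if two interlocutors are present at
    the same time, their shared hours are counted twice.
    """
    total_hours = sum(hours[name] for name in shares)
    return sum(shares[name] * hours[name] for name in shares) / total_hours


print(round(equal_weight_hl(hl_share), 3))                   # 0.4
print(round(time_weighted_hl(hl_share, hours_per_week), 3))  # 0.517
```

The same reports thus yield an HL share of 40% or about 52% depending on the aggregation rule alone.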

Not all components of bilingualism are quantifiable, and in some cases what is crucial is not the quantity or frequency of a component but simply its presence, alongside other relevant components. This information can be used to derive composite indices that serve as predictors in a particular field of enquiry. For instance, language impairment is strongly associated with a constellation of indicators (including, among others, early language milestones and parental concerns), which can be translated into a composite score expressing the level of risk of atypical development (Tuller, 2015). The richness of the language environment (e.g., Paradis, 2011) is another dimension that might be best captured by a composite index. The set of components on which such composite indices are based currently varies across studies. For instance, the factors informing the level of ‘richness of the language environment’ may include, among others, the number of interlocutors in each language, whether the child has older siblings, the number and types of activities in each language, levels of literacy, etc. Composite scores also weight their components, explicitly or implicitly (e.g., if early language milestones are scored out of 4 and parental concerns on a scale from 0 to 5, a simple cumulative score based on these measures automatically gives more weight to parental concerns), and these weightings can differ across studies. If, in addition, the components themselves are documented differently, this adds a further, hidden level of variability.
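The implicit weighting in the milestones/concerns example can be checked with a few lines of arithmetic. In the hypothetical sketch below, a raw sum lets parental concerns contribute up to 5/9 of the maximum score and milestones only 4/9; rescaling each component to 0-1 before combining makes the weights explicit. The component names, the direction of scoring and the equal default weights are assumptions for illustration, not a scoring scheme from any of the studies cited.

```python
# Hypothetical risk composite with the two components from the example above.
# Assumes higher scores mean higher risk on both components.
RANGES = {"milestones": 4, "concerns": 5}  # maximum score of each component


def raw_composite(scores):
    """Simple sum: a component with a wider range implicitly weighs more."""
    return sum(scores.values())


def implicit_weights(ranges):
    """Share of the maximum raw total that each component can contribute."""
    total = sum(ranges.values())
    return {name: maximum / total for name, maximum in ranges.items()}


def normalised_composite(scores, ranges, weights=None):
    """Rescale each component to 0-1, then apply explicit weights (equal by default)."""
    if weights is None:
        weights = {name: 1 / len(ranges) for name in ranges}
    return sum(weights[name] * scores[name] / ranges[name] for name in ranges)


child = {"milestones": 2, "concerns": 5}
print(implicit_weights(RANGES))             # milestones about 0.44, concerns about 0.56
print(raw_composite(child))                 # 7
print(normalised_composite(child, RANGES))  # 0.75
```

Making the weights an explicit parameter also makes them reportable, which is one way of surfacing the hidden variability described above.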

Reviewing the manifestations of bilingualism documented across questionnaires required us to classify them as components of overarching constructs. This classification raised a number of challenges. One was the choice of terminology. We aimed to be strictly descriptive and neutral, but this was not always possible. For instance, our choice of ‘home language’ vs. ‘societal language’ to label the child's languages is not adequate for all types of bilingualism (such as households featuring two majority languages), but we judged that these labels would translate easily into the realities of most bilingual children, and that they were more broadly encompassing and less controversial than ‘heritage language’ or ‘minority/majority languages’. Another challenge arose from mapping questions onto components of bilingualism. We adopted the principle that any one question could be mapped onto only one component (and hence one overarching construct). For instance, in one questionnaire a scale documenting the relative frequency of exposure included an option about language mixing. We classified this item under relative frequency of exposure only, as that was the main aim of the question, rather than also including it under the component of language mixing.

While we focused on questionnaires used with children, we expect our findings to extend to the adult population. Specifically, the overarching constructs discussed in more detail (exposure and use, activities, and current skills in HL and SL) are likely to be relevant for adults as well. Other constructs (not discussed in this paper but outlined in section C of the Supplementary Materials) could also be pertinent, depending on the aims of individual studies. We would also expect questionnaires for adults to show variability similar to that found in questionnaires for children in how specific variables are operationalised. At the same time, a number of differences between adults and children call for alternative approaches with adults. The most obvious difference is that adult questionnaires will typically be completed by the participants themselves rather than by their caregivers or teachers. Furthermore, some contexts of language exposure and use, such as (pre)school, do not apply to adults, whereas other contexts, such as college/university and the work environment, are more relevant to them. Adults’ own education, occupation or income might be used to estimate their socioeconomic status. The home environment is also likely to differ for adults who do not live with their caregivers or siblings. These differences affect how child questionnaires can be adapted for use with adults (or vice versa), and their implications should be considered when designing or choosing questionnaires to be used with both populations.

In practical terms, bilingualism can only be measured indirectly, and its operationalisation necessarily builds on (a combination of) several phenomena or dimensions. Our aim was not to address the operationalisation of bilingualism itself (as a latent construct), but to assess the comparability of the ‘building blocks’ researchers use to derive the measure of their choice. Unless these building blocks are directly comparable, studies cannot be compared, even when they rely on the same formula for an overall bilingualism score (e.g., language entropy, see Gullifer & Titone, 2020).
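Language entropy (Gullifer & Titone, 2020) is the Shannon entropy of the proportions of each language, H = -Σ p_i log2(p_i). The Python sketch below uses hypothetical weekly hours for a single child to show that the same formula yields different values depending on which building blocks feed it: home exposure alone, or home and school pooled. The function names and figures are illustrative assumptions, not a procedure taken from any of the questionnaires reviewed.

```python
import math


def proportions(hours_by_language):
    """Convert hours per language into proportions that sum to 1."""
    total = sum(hours_by_language.values())
    return {lang: h / total for lang, h in hours_by_language.items()}


def language_entropy(props):
    """Shannon entropy in bits: H = -sum(p * log2(p)), with 0*log2(0) taken as 0."""
    return -sum(p * math.log2(p) for p in props.values() if p > 0)


def combine(*contexts):
    """Pool hours per language across several contexts (e.g. home and school)."""
    pooled = {}
    for context in contexts:
        for lang, hours in context.items():
            pooled[lang] = pooled.get(lang, 0) + hours
    return pooled


# Hypothetical weekly hours of exposure for one child.
home = {"HL": 32, "SL": 8}
school = {"HL": 3, "SL": 27}

h_home = language_entropy(proportions(home))
h_all = language_entropy(proportions(combine(home, school)))
print(f"home only: {h_home:.3f} bits; home + school: {h_all:.3f} bits")
# home only: 0.722 bits; home + school: 1.000 bits
```

Exposure at home is strongly skewed towards the HL (about 0.722 bits), whereas pooling home and school hours yields perfectly balanced exposure (1 bit, the maximum for two languages). Two studies that both report ‘language entropy’ could therefore assign different scores to the same child.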

To ascertain the extent to which there is consensus regarding which components of bilingualism are desirable or essential to document, our team has recently carried out an international Delphi Consensus Survey (De Cat et al., 2021). Its results will inform the creation of a modular questionnaire and an associated calculator of language exposure and use.

5. Concluding remarks

We agree with Marian and Hayakawa (2020) that the field of bilingualism is in urgent need of greater transparency in how we operationalise bilingualism, as well as greater comparability of measures. We hope that the taxonomy delineated in this paper will provide a framework within which researchers can work towards a consensus on a core set of measures to report across studies, in order to enhance transparency and reproducibility. Ideally, such a consensus would be reached across bilingualism researchers, leading to the adoption of a common set of tools. This does not preclude the use of different core sets of measures in different subfields of enquiry. Moreover, because bilingualism research is a fast-moving field, the tools we use will necessarily have to adapt to particular research aims and novel discoveries. At a minimum, however, we believe that all studies should report transparently how the relevant components of bilingualism were measured, and publish the relevant questionnaire(s) in online supplements. Ultimately, no matter which questionnaires are used or devised, a common understanding of the raw measures (i.e., building blocks) should remain a basic requirement across studies.

Methods in bilingualism research are evolving fast, and the time is ripe for a more critical approach to the documentation of bilingualism. This includes the validation of questionnaires and their methods of administration, and the critical appraisal of fitness-for-purpose when selecting a tool to inform research or practice.

Acknowledgements

We are thankful to the colleagues who shared their questionnaires with us, as well as to those who directed us to tools which they used or adapted. We are also thankful to the reviewers whose comments improved the quality of this paper. Finally, we thank the Economic and Social Research Council for funding this project (grant reference: ES/S010998/1).

Supplementary Material

For supplementary material accompanying this paper, visit http://dx.doi.org/10.1017/S1366728921000390

Competing interests

The authors declare none.