1. Introduction

Actuaries must understand the mortality characteristics of portfolios they work on, and a key goal of any model is that it must reflect reality. This has two facets. First, a model must not make assumptions that contradict the data. In particular, the analyst should not have to discard data because it is inconvenient for the model; a model should match the data, not the other way around. Second, a model should be capable of reproducing real-world features of the risk. As we will see, continuous-time methods have specific advantages over discrete-time models in both respects.

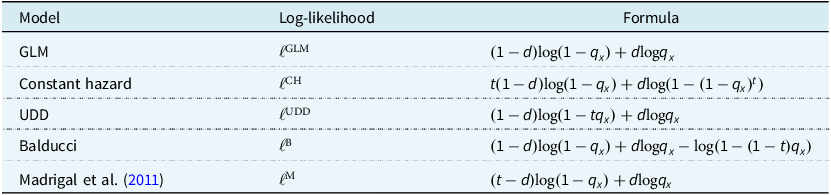

In their landmark paper, Forfar et al. (Reference Forfar, McCutcheon and Wilkie1988) considered three different metrics for modelling mortality: (i) the discrete-time probability of death,

![]() ${q_x}$

, (ii) the central mortality rate,

${q_x}$

, (ii) the central mortality rate,

![]() ${m_x}$

, and (iii) the continuous-time hazard rate,

${m_x}$

, and (iii) the continuous-time hazard rate,

![]() ${\mu _x}$

. Of the three,

${\mu _x}$

. Of the three,

![]() ${m_x}$

has fallen somewhat into disuse, in part because it does not arise as a parameter in a statistical model. The probability

${m_x}$

has fallen somewhat into disuse, in part because it does not arise as a parameter in a statistical model. The probability

![]() ${q_x}$

retains an important pedagogical role for students – there is nothing simpler than the idea of mortality viewed as a binomial trial over a fixed period like a year. However, this simplicity often collides with the messier reality of business processes. This paper explores some practical reasons why

${q_x}$

retains an important pedagogical role for students – there is nothing simpler than the idea of mortality viewed as a binomial trial over a fixed period like a year. However, this simplicity often collides with the messier reality of business processes. This paper explores some practical reasons why

![]() ${\mu _x}$

is usurping

${\mu _x}$

is usurping

![]() ${q_x}$

in actuarial work. The companion paper, Macdonald and Richards (Reference Macdonald and Richards2025), provides some theoretical underpinning.

${q_x}$

in actuarial work. The companion paper, Macdonald and Richards (Reference Macdonald and Richards2025), provides some theoretical underpinning.

The plan of the rest of this paper is as follows. In Section 2 we state assumptions and define some terms. Section 3 compares the ability of discrete- and continuous-time models to represent the data produced by various business processes. Section 4 looks at a variety of mortality features that require greater granularity than a one-year, discrete-time view of risk. Section 5 illustrates the greater flexibility with investigation period offered by continuous-time methods. Section 6 explores the analysis of competing decrements. Section 7 considers the uses of some non- and semi-parametric techniques for data-quality checking, while Section 8 discusses their application for real-time management information. Section 9 discusses the results and Section 10 concludes. Appendix A contains numerous case studies based on actual business experience. Appendix B details the difficulties that discrete-time models have in dealing with fractional periods of exposure. Appendix C considers the bias introduced when discarding data. Appendix D provides a primer in non- and semi-parametric methods for actuaries, while Appendix E outlines some features of grouped-count models.

2. Assumptions and Terminology

We assume that we are primarily interested in the analysis of mortality, although many of the comments apply to other decrements. Mortality is most naturally described in a continuous-time settingFootnote 1 . That said, the most granular measure of time recorded in an administration system is typically a calendar date. This means that “continuous time” is for practical purposes synonymous with “daily.” However, while an individual can die on any date, this information may not always be available for analysis – Case Study A.10 describes an example where only the month of death was available, while in Case Study A.17 only the year of death was available (see Appendix A). Where the date of death is not known exactly, deaths are said to be interval censored; the event is only known to have occurred between two points. In Case Study A.10, the interval is a calendar month, whereas in Case Study A.17 it is a year.

An event is an occurrence at a point in time that is of financial interest, such as a death or policy lapse. Other events are deemed irrelevantFootnote 2 . Events can occur in any order consistent with physical or procedural possibility, e.g. a pension-scheme member cannot retire after dying. Simultaneous events are ruled out by assumption. Note that there is a distinction between an event occurring and when (or if) that event is reported to the insurer or pension administrator; see the discussion of late-reported deaths in Section 4.3.

A discrete-time model for mortality assumes that the life in question exists at the start of a fixed period, such as one year, and is either alive (

![]() $d = 0$

) or dead (

$d = 0$

) or dead (

![]() $d = 1$

) at the end of this period. No other event is permitted, such as an earlier exit. No information is available on the time of the event during the fixed period; we only know whether the life died, not when. Discrete-time models are typically parameterized by

$d = 1$

) at the end of this period. No other event is permitted, such as an earlier exit. No information is available on the time of the event during the fixed period; we only know whether the life died, not when. Discrete-time models are typically parameterized by

![]() $q$

or

$q$

or

![]() ${q_x}$

. We will call such models “

${q_x}$

. We will call such models “

![]() $q$

-type models.”

$q$

-type models.”

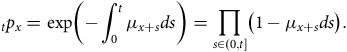

A continuous-time model for mortality assumes that the life in question is aged

![]() $x \ge 0$

at the start of observation and is observed for

$x \ge 0$

at the start of observation and is observed for

![]() $t \gt 0$

years. At age

$t \gt 0$

years. At age

![]() $x + t$

the life is either dead (

$x + t$

the life is either dead (

![]() $d = 1$

) or was alive when last observed (

$d = 1$

) or was alive when last observed (

![]() $d = 0$

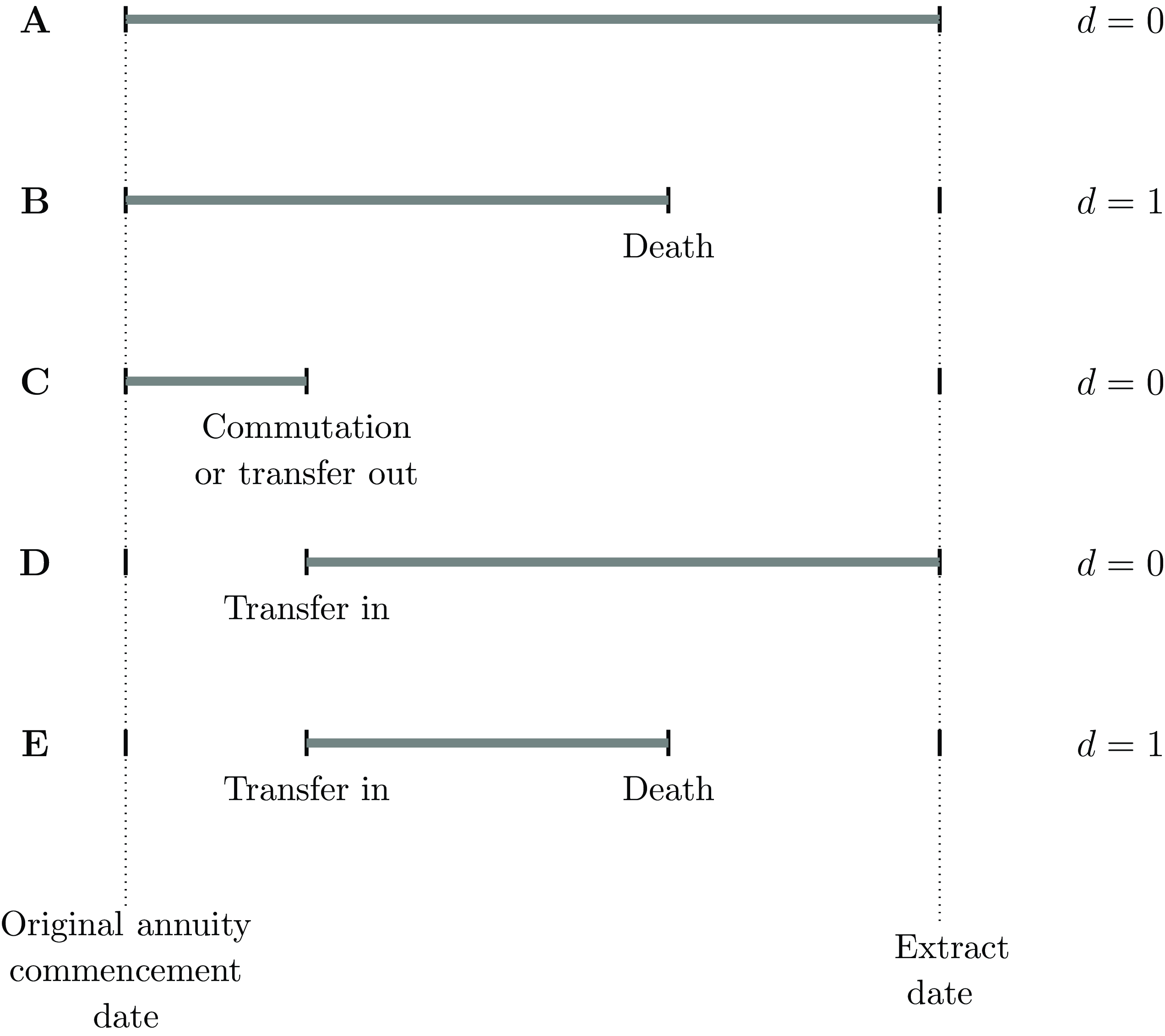

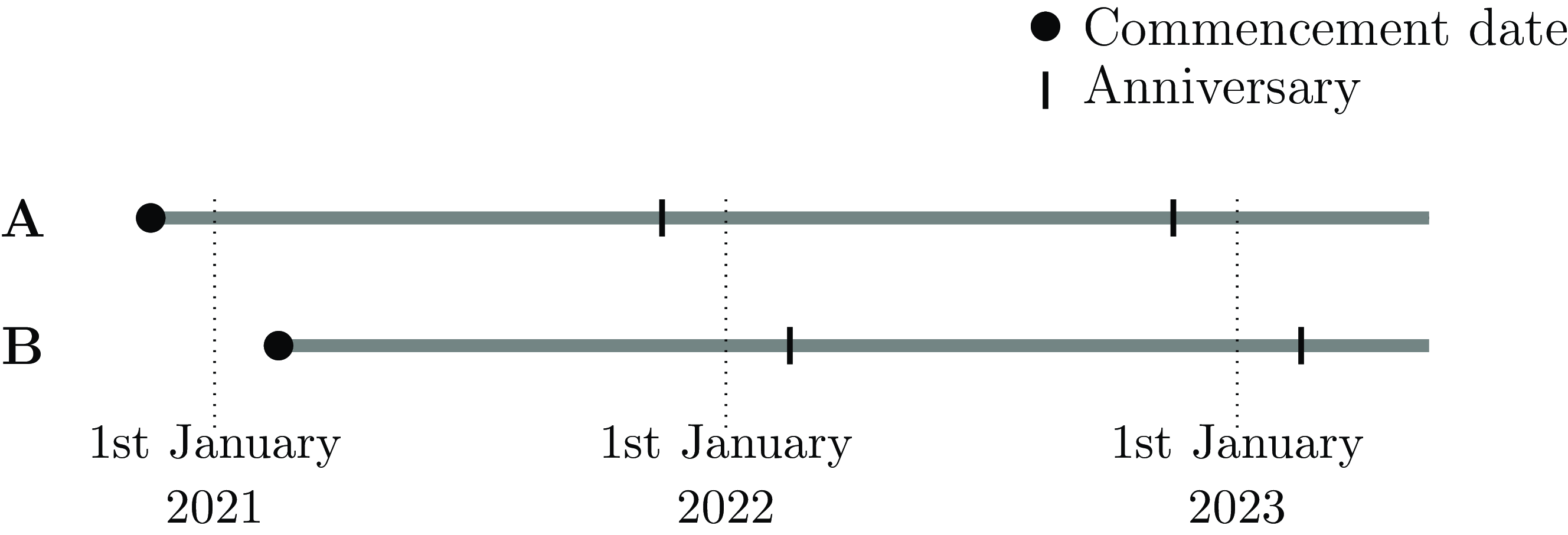

); see Cases A and B in Figure 1. If other events are accommodated by ceasing observation at age

$d = 0$

); see Cases A and B in Figure 1. If other events are accommodated by ceasing observation at age

![]() $x + t$

, then the observation is regarded as censored with respect to the decrement of interest, i.e. death. A crucial distinction from discrete-time models is that we know when the event of interest took place. Continuous-time models typically denote the risk by

$x + t$

, then the observation is regarded as censored with respect to the decrement of interest, i.e. death. A crucial distinction from discrete-time models is that we know when the event of interest took place. Continuous-time models typically denote the risk by

![]() $\mu $

or

$\mu $

or

![]() ${\mu _x}$

.

${\mu _x}$

.

![]() ${\mu _x}$

was known to actuaries as the force of mortality, and to engineers as the failure rate. However, we prefer the term hazard rate, as used by statisticians (Collett, Reference Collett2003, Section 1.3). We will refer to such models as “

${\mu _x}$

was known to actuaries as the force of mortality, and to engineers as the failure rate. However, we prefer the term hazard rate, as used by statisticians (Collett, Reference Collett2003, Section 1.3). We will refer to such models as “

![]() $\mu $

-type models.”

$\mu $

-type models.”

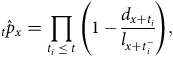

Figure 1. Timelines for various annuitant cases with usable exposure time,

![]() $t$

, marked in grey. Case A is a survivor administered on the system from the annuity commencement date to the extract date. Case B is an annuity administered on the system from outset, but where death has occurred prior to the extract date. Case C represents an annuity administered on the system until either commutation, transfer to another system or transfer to another insurer. Cases D and E are annuities that were set up on another system or insurer at outset and subsequently transferred onto the current system. Cases with

$t$

, marked in grey. Case A is a survivor administered on the system from the annuity commencement date to the extract date. Case B is an annuity administered on the system from outset, but where death has occurred prior to the extract date. Case C represents an annuity administered on the system until either commutation, transfer to another system or transfer to another insurer. Cases D and E are annuities that were set up on another system or insurer at outset and subsequently transferred onto the current system. Cases with

![]() $d = 0$

are all right-censored with respect to mortality.

$d = 0$

are all right-censored with respect to mortality.

The most common type of censoring is where all that can be said is that death had not been reported at the last point of observation, known as right-censoring. Examples of right-censoring points include the date of extract, the date of transfer out or the date of trivial commutation; in each case, the life under observation was not reported to have died at the point of last observation. Cases A, C and D in Figure 1 are examples of right-censored observations. There are two sub-types of right-censoring: (i) “obligate” right-censoring, where observation ceases at a point in time known in advance, and (ii) random right-censoring, where observation ceases at a point in time not known in advance. The distinction becomes important when the analyst decides to discard data; see Appendix C.

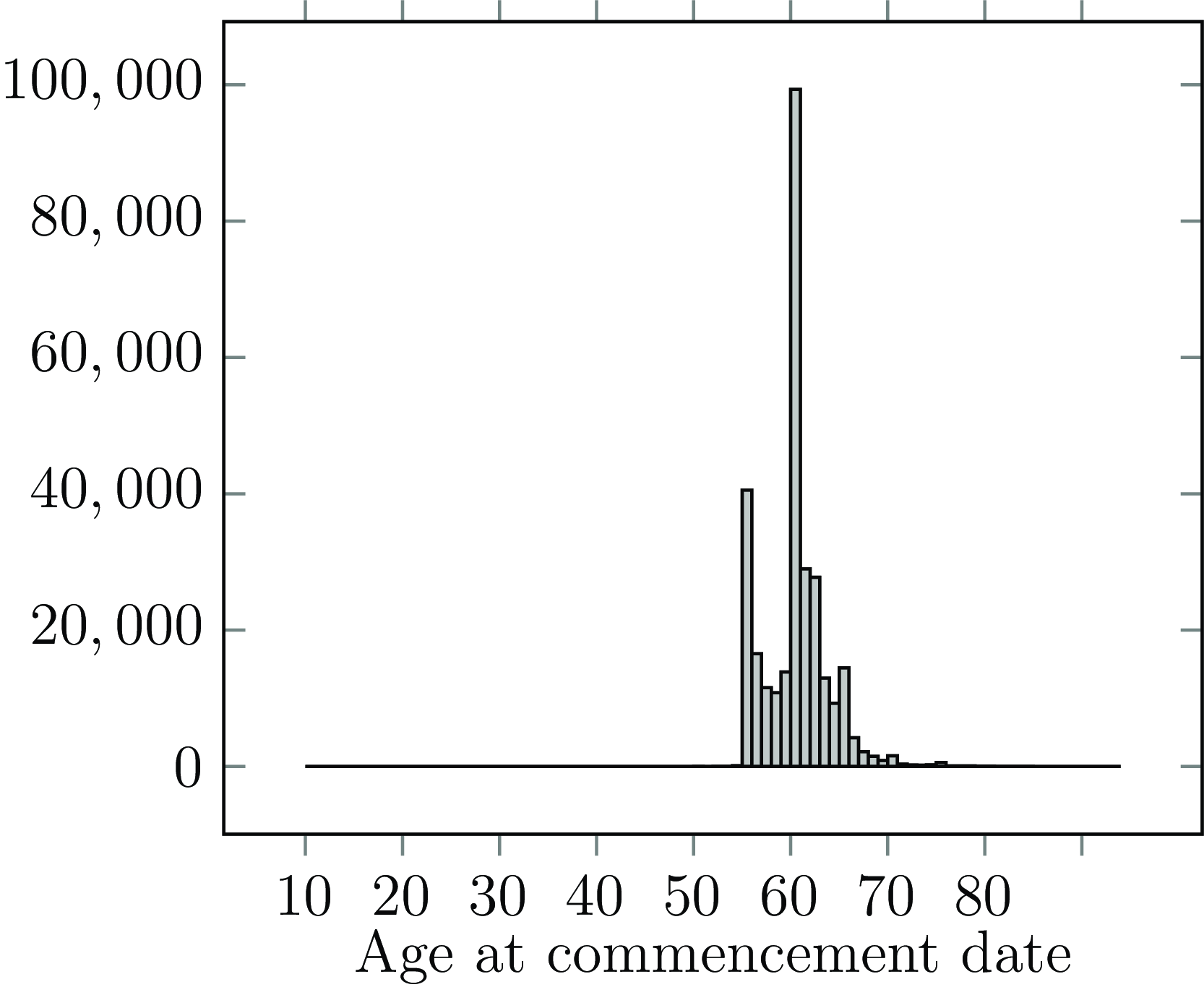

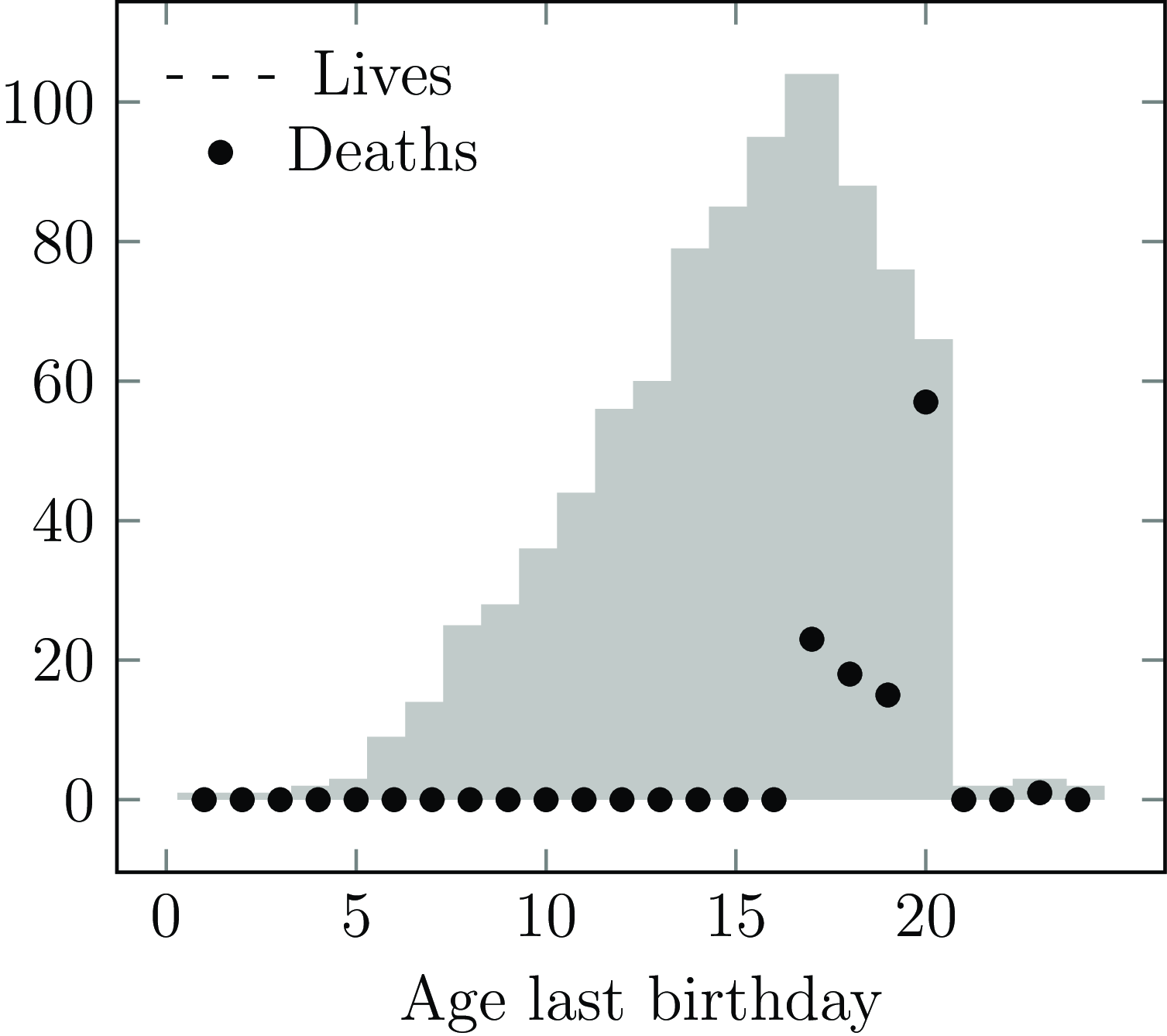

The start of an observation period for a life is qualitatively different from the end of the observation period. In actuarial work, most policyholders only become known to the portfolio well into their adult life; Figure 2 shows the distribution of age at commencement for annuities set up with a French insurer, where almost nobody enters observation before age 55 (an exception is shown in Figure A2). This is left truncation, where the mortality of those not entering observation is by definition unknown. Left truncation is a central feature of actuarial survival analysis, in contrast to, say, medical analysis (Collett, Reference Collett2003). Left truncation arises naturally, such as when a policy first commences or retirement commences (Section 3.2). However, left truncation can also be introduced by business processes (such as the system migrations discussed in Section 3.3) and deliberate analyst decisions (such as described in Section 5).

Figure 2. Histogram of age last birthday at annuity commencement date.

Source: 298,906 annuities for a French insurer.

We assume that we have data on individuals. If we have data on benefits or policies, we will first need to create records for individual lives through a process of deduplication; see Macdonald et al. (Reference Macdonald, Richards and Currie2018, Section 2.5). Deduplication is not merely a prerequisite for the independence assumption underlying statistical modelling, but it also results in a better understanding of risk and can even save money; see Case Study A.1 in Appendix A for an example. Note that having data on individual lives does not presuppose that we must model their risk individually, although this is desirable. However, extracting individual records does enable detailed validation and checking (Macdonald et al., Reference Macdonald, Richards and Currie2018, Sections 2.3 and 2.4).

The preceding descriptions of

![]() $q$

-type and

$q$

-type and

![]() $\mu $

-type models assume the modelling of individuals for simplicity. However, the analyst also has the option to model grouped counts by allocating individuals to groups and computing appropriate aggregate death counts and exposure measures; see Macdonald and Richards (Reference Macdonald and Richards2025), Appendix A, especially Figure 4, for the details of the splitting of the individual observations and assigning them to rate intervals. The advantages and disadvantages of grouped-count modelling are discussed in Macdonald et al. (Reference Macdonald, Richards and Currie2018, Section 1.7).

$\mu $

-type models assume the modelling of individuals for simplicity. However, the analyst also has the option to model grouped counts by allocating individuals to groups and computing appropriate aggregate death counts and exposure measures; see Macdonald and Richards (Reference Macdonald and Richards2025), Appendix A, especially Figure 4, for the details of the splitting of the individual observations and assigning them to rate intervals. The advantages and disadvantages of grouped-count modelling are discussed in Macdonald et al. (Reference Macdonald, Richards and Currie2018, Section 1.7).

Time periods are often expressed in years. A practical issue is that the difference between two dates is a number of days, but calendar years do not always have the same number of days. Macdonald et al. (Reference Macdonald, Richards and Currie2018, p.38) standardize by dividing the number of days between two dates by 365.242 to account for leap years (Richards (Reference Richards, Urban and Seidelman2012, Table 15.1) gives a value of 365.24219 days for the astronomical cycle for the year 2000).

One question is how to handle dates at the start and end of a period of time. We adopt a policy of assuming that an interval starts at mid-day on the start date and ends at mid-day on the end date. For example, this means that the interval from 1st-31st January 2024 is taken to be 30 days long (

![]() $30 = 31 - 1$

) and not 31 days. This allows us to subdivide intervals without double-counting, e.g. the interval from 1st-16th January 2024 is 15 days long and the interval from 16th-31st January 2024 is 15 days long. Intervals that start and end on the same date have zero length and are excluded from analysis. A death at the end date is assumed to happen at mid-day. Thus, if the exposure period is 1st-31st January 2024 and

$30 = 31 - 1$

) and not 31 days. This allows us to subdivide intervals without double-counting, e.g. the interval from 1st-16th January 2024 is 15 days long and the interval from 16th-31st January 2024 is 15 days long. Intervals that start and end on the same date have zero length and are excluded from analysis. A death at the end date is assumed to happen at mid-day. Thus, if the exposure period is 1st-31st January 2024 and

![]() $d = 1$

, then we have 30 days of exposure terminated with a death.

$d = 1$

, then we have 30 days of exposure terminated with a death.

3. Reflecting Reality: Business Processes

A fundamental assumption of a

![]() $q$

-type model is that it is a Bernoulli trial, i.e. we observe each individual at the start and end of a complete year only. Otherwise, the complete year may not be interrupted by any right-censoring events, such as lapsing or the end of the investigation.

$q$

-type model is that it is a Bernoulli trial, i.e. we observe each individual at the start and end of a complete year only. Otherwise, the complete year may not be interrupted by any right-censoring events, such as lapsing or the end of the investigation.

Pensions and annuities in payment are nominally a single-decrement risk, i.e. the only way they are supposed to cease is through the death of the recipient. On the face of it, this matches the

![]() $q$

-type model in theory. However, in the following examples we will see that

$q$

-type model in theory. However, in the following examples we will see that

![]() $q$

-type models often do not match the reality of business practices, and that

$q$

-type models often do not match the reality of business practices, and that

![]() $\mu $

-type modelling is a more natural choice.

$\mu $

-type modelling is a more natural choice.

3.1. Bulk Transfers Out of a Portfolio

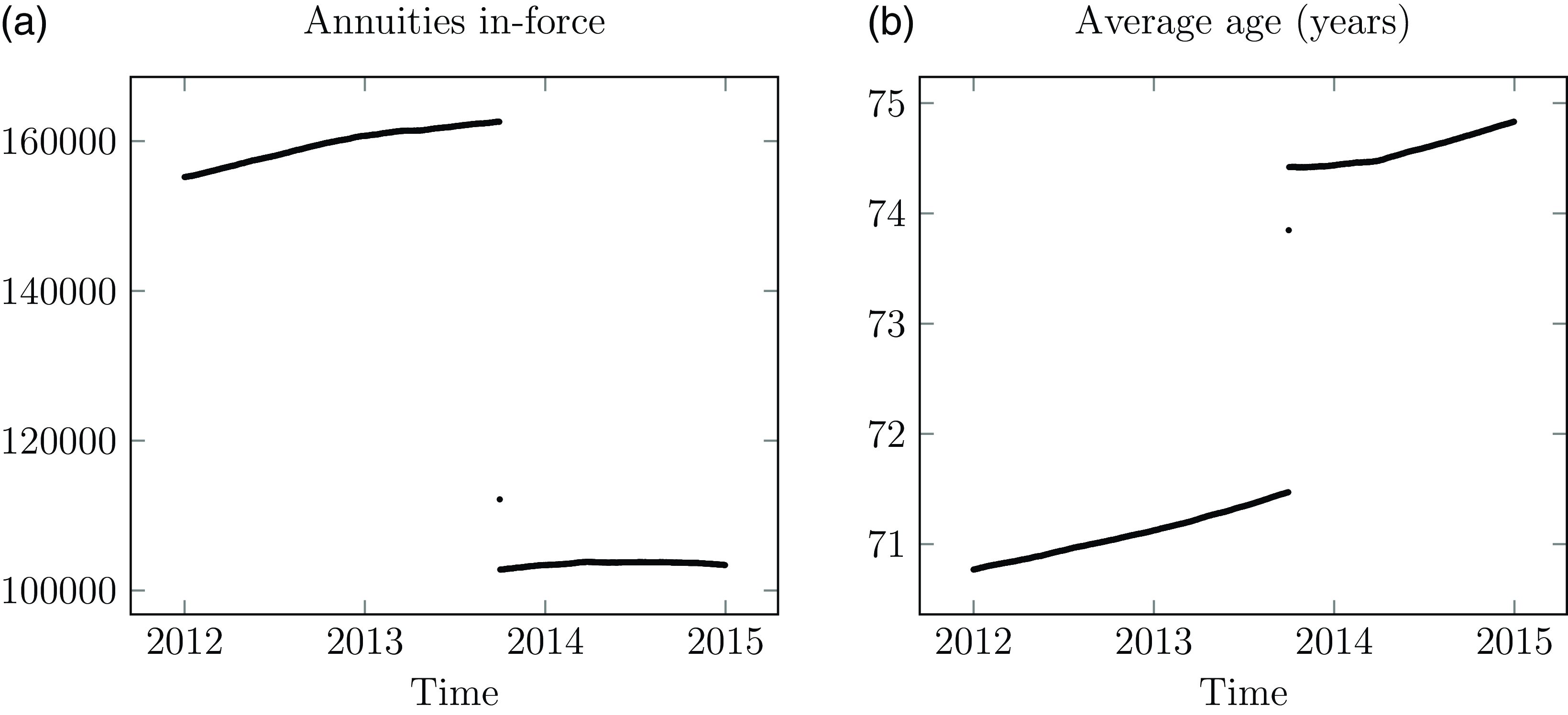

Portfolios can experience mid-year transfers out en masse. Examples include a transfer of annuities to another insurer, the purchase of buy-out annuities in a pension scheme or transfer to another administration system. Figure 3 shows an example for a UK annuity portfolio, where a large block of annuity contracts was moved to another insurer in a Part VII transfer (FSM, 2000, PartVII).

Figure 3. (a) Number of in-force annuities and (b) average age of in-force annuities at each date for a UK insurer. The discontinuities in late 2013 are caused by a transfer of liabilities to another insurer. New annuities are set up on any day, hence the seemingly continuous nature of the in-force annuity count at other times.

Source: Own calculations using experience data for all ages, January 2012–December 2014.

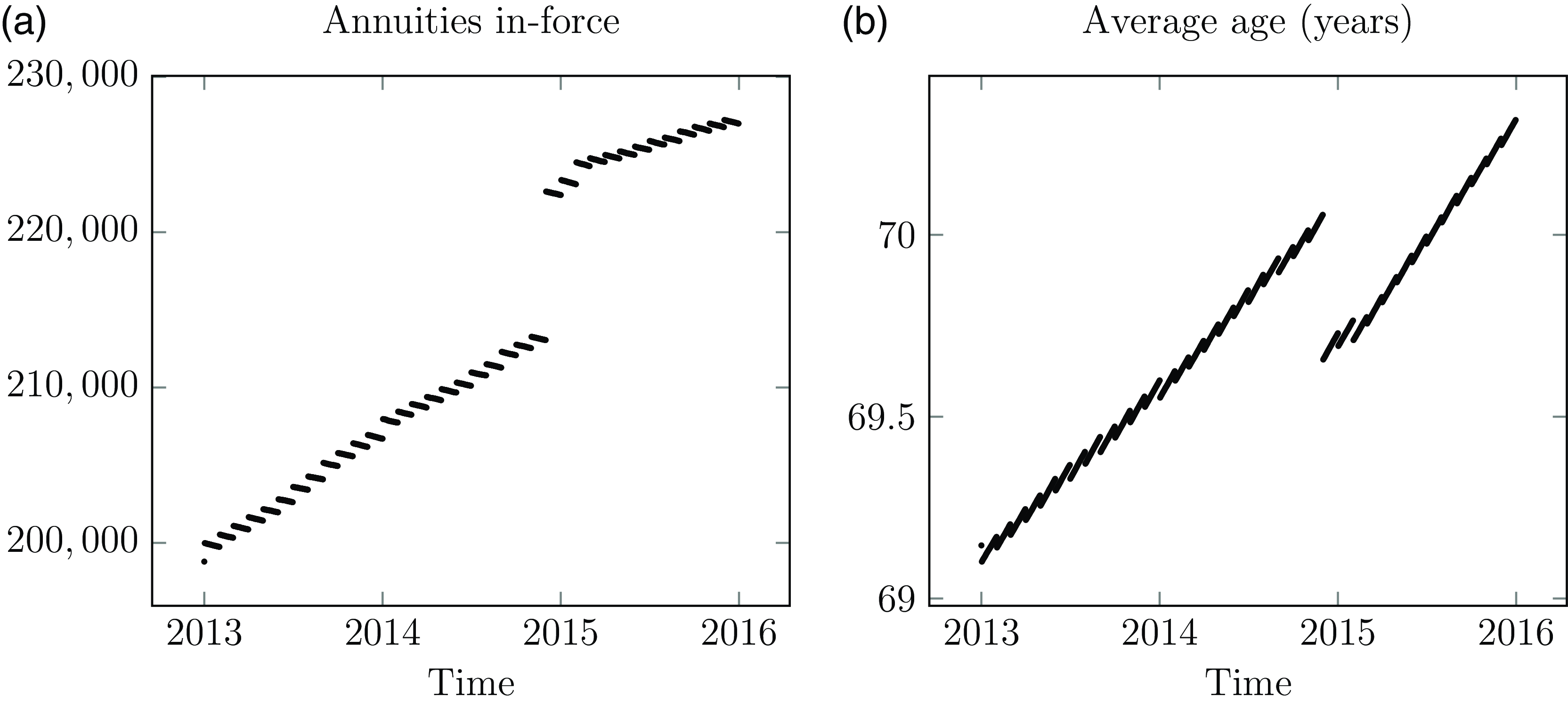

Figure 4. (a) Number of in-force annuities and (b) average age of in-force annuities at each date for a French insurer. The discontinuity in December 2014 was caused by a surge in new business. New annuities are set up on the first of the month, hence the stepped pattern of the annuity in-force count.

Source: Experience data for all ages, January 2013–December 2015.

Mid-year exits like in Figure 3(a) are a problem for

![]() $q$

-type models. They violate the assumption that there is no other possible event besides death or survival to the end of the year. In contrast, mid-year exits pose no problem for modelling in continuous time – the transfer out date is the date of last observation, and the indicator variable is set

$q$

-type models. They violate the assumption that there is no other possible event besides death or survival to the end of the year. In contrast, mid-year exits pose no problem for modelling in continuous time – the transfer out date is the date of last observation, and the indicator variable is set

![]() $d = 0$

to signify that the life was alive at that date. See Case C in Figure 1, and Case Studies A.1 and A.4 in Appendix A.

$d = 0$

to signify that the life was alive at that date. See Case C in Figure 1, and Case Studies A.1 and A.4 in Appendix A.

3.2. New Business

In addition to mid-year exits, most pension schemes and annuity portfolios also add new liabilities. These can be due to new retirements or spouse’s annuities set up on death of the first life. It is also possible to have a mass influx of new pensions, such as a pension scheme seeing a wave of early retirements due to corporate restructuring. Figure 4 shows an annuity portfolio where new cases are added at the start of each month and where there was a surge in new business in December 2014.

If an analyst is modelling

![]() $q$

-type rates aligned on calendar years, then any new records added during the year require special handling because a complete year of exposure is not possible. In contrast, all new business records can be used in a continuous-time model in the same way as in-force records; see Case Study A.8 in Appendix A.

$q$

-type rates aligned on calendar years, then any new records added during the year require special handling because a complete year of exposure is not possible. In contrast, all new business records can be used in a continuous-time model in the same way as in-force records; see Case Study A.8 in Appendix A.

3.3. Bulk Transfers into a Portfolio

For every bulk transfer out of one portfolio in Section 3.1 there will be a corresponding bulk transfer in to another portfolio. Examples include an insurer receiving a Part VII transfer, an insurer writing a large bulk annuity, or an administration system receiving migrated records from another administration system. This is like the new business in Section 3.2, but with the added complication that the receiving system will be missing deaths between annuity commencement and the transfer-in date. This is of course a challenge for

![]() $q$

-type models in the same way as for new business, but it is not a problem for continuous-time methods – see Cases D and E in Figure 1 and Case Study A.13 in Appendix A.

$q$

-type models in the same way as for new business, but it is not a problem for continuous-time methods – see Cases D and E in Figure 1 and Case Study A.13 in Appendix A.

3.4. Commutations and Temporary Pensions

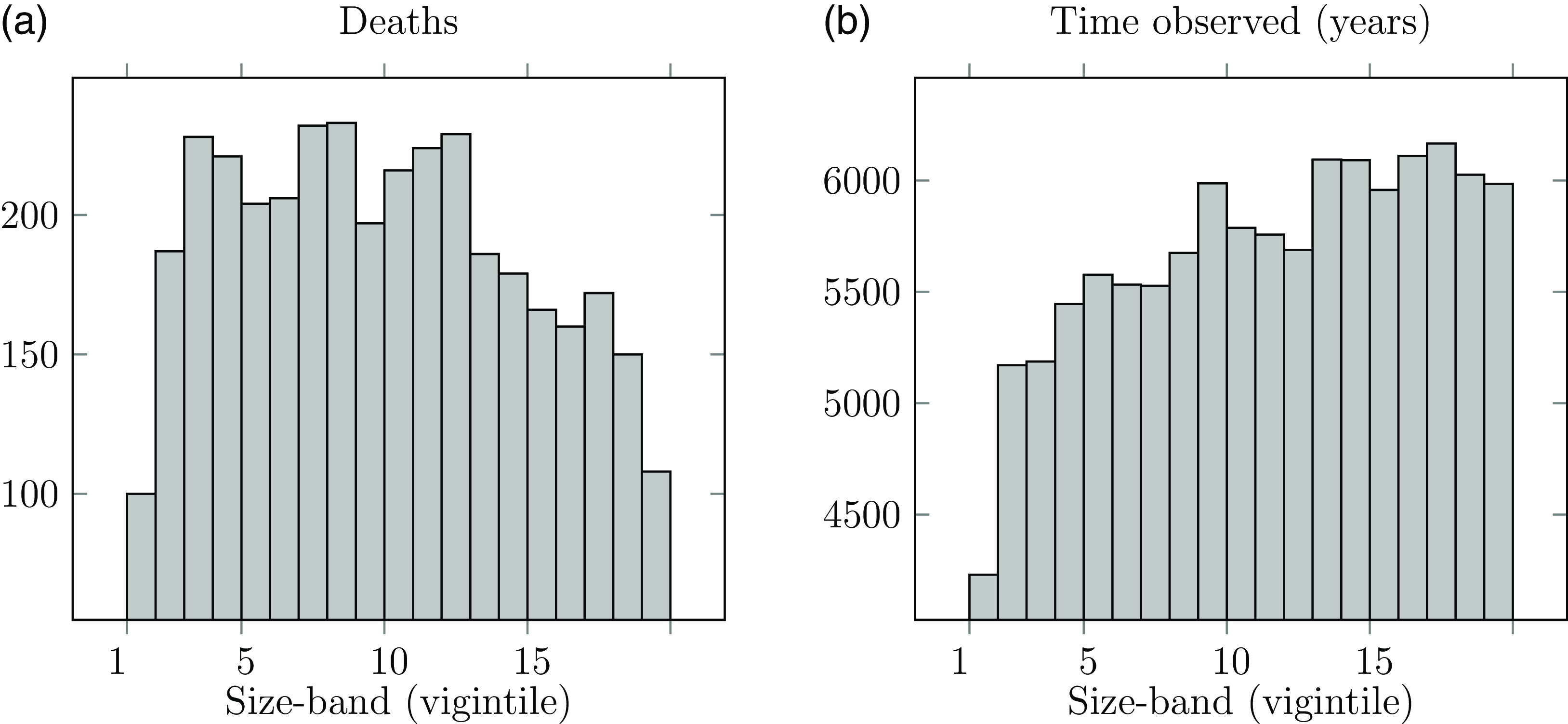

Even if a portfolio experiences no mass transfer out, it is still possible for individual liabilities to cease for a reason other than death. In the UK, pension benefits below a certain size can be commuted into a taxable lump sum. Administration expenses are largely fixed in nature, so the facility to commute small pensions saves disproportionate costs. However, this again means that the pension ceases for a reason other than the death of the recipient. Figure 5 shows the impact of this – although the number of lives in each size-band is the same, the time lived is much reduced for the smallest size-band due to non-death early exits. The number of observed deaths is correspondingly much reduced, as post-commutation deaths are of course unknown to the insurer.

Figure 5. (a) Deaths and (b) time observed in each pension size-band, showing the reduced exposure for the smallest pensions.

Source: Own calculations using experience data for Scottish pension scheme, ages 50–105, years 2000–2009. Pensioner records were sorted by pension size and grouped into twenty bands of equal numbers of lives (vigintiles). Size-band 1 represents the 5% of pensioners receiving the lowest pensions, while size-band 20 represents the 5% of pensioners receiving the largest amounts.

There are other ways for pensions to cease for a reason other than death. In Richards et al. (Reference Richards, Kaufhold and Rosenbusch2013) a multi-employer pension administrator in Germany had (i) temporary widow(er) pensions, (ii) widow(er) pensions ceasing on remarriage and (iii) disability pensions ceasing due to a return to work. In the Chilean national pension system, spouses’ pensions also cease upon remarriage. Some UK pension schemes grant pensions to “surviving adults” that can be terminated on remarriage or cohabitation with another person (TPS, 2010, 94(6)).

Commutations and temporary pensions pose a problem for

![]() $q$

-style models of mortality. One option is to exclude such cases, but this depends on them being clearly identified as such; see Case Study A.9 in Appendix A. Another issue is the need to exclude any corresponding deaths – trivial commutations are not always processed immediately, which means it is possible for a commutation-eligible pensioner to die first. Appendix C shows how discarding lapsed cases leads to a bias in mortality estimation. Discarding such data is therefore to be avoided.

$q$

-style models of mortality. One option is to exclude such cases, but this depends on them being clearly identified as such; see Case Study A.9 in Appendix A. Another issue is the need to exclude any corresponding deaths – trivial commutations are not always processed immediately, which means it is possible for a commutation-eligible pensioner to die first. Appendix C shows how discarding lapsed cases leads to a bias in mortality estimation. Discarding such data is therefore to be avoided.

In contrast, commutations and temporary pensions do not pose any kind of problem for models in continuous time – the commutation date is the date of last observation, and the indicator variable is set

![]() $d = 0$

to signify that the life was alive at that date. See Case C in Figure 1 and Case Studies A.1 and A.4 in Appendix A.

$d = 0$

to signify that the life was alive at that date. See Case C in Figure 1 and Case Studies A.1 and A.4 in Appendix A.

3.5. Representation of Data

In all the foregoing examples, non-death exits and mid-year entries are handled more easily by modelling in continuous time. This allows for simpler data preparation and corresponds more closely to the realities of the business processes than

![]() $q$

-type models. To illustrate, we assume that we have a data extract from a pension or annuity administration system. For each record we calculate the data pair

$q$

-type models. To illustrate, we assume that we have a data extract from a pension or annuity administration system. For each record we calculate the data pair

![]() $\left\{ {{d_i},{t_i}} \right\}$

as follows.

$\left\{ {{d_i},{t_i}} \right\}$

as follows.

![]() ${t_i}$

is the real-valued time observed in years between the on-risk date and the off-risk date. The on-risk date is the latest of: (i) the annuity commencement date, and (ii) the transfer-in date (if any). If the transfer-in date is not known exactly, a suitable proxy date can be used, such as the date of first payment made on the system; see Case Study A.13 in Appendix A. The off-risk date is the earliest of: (i) the date of extract, (ii) the date of death (if dead), (iii) the date of commutation (if commuted), and (iv) the date of transfer out (if any). If the transfer-out date is not known exactly, a suitable proxy can be used, such as the date of last payment made on the system; see Case Study A.4 in Appendix A. The indicator variable is set

${t_i}$

is the real-valued time observed in years between the on-risk date and the off-risk date. The on-risk date is the latest of: (i) the annuity commencement date, and (ii) the transfer-in date (if any). If the transfer-in date is not known exactly, a suitable proxy date can be used, such as the date of first payment made on the system; see Case Study A.13 in Appendix A. The off-risk date is the earliest of: (i) the date of extract, (ii) the date of death (if dead), (iii) the date of commutation (if commuted), and (iv) the date of transfer out (if any). If the transfer-out date is not known exactly, a suitable proxy can be used, such as the date of last payment made on the system; see Case Study A.4 in Appendix A. The indicator variable is set

![]() ${d_i} = 1$

if the off-risk date is the date of death and

${d_i} = 1$

if the off-risk date is the date of death and

![]() ${d_i} = 0$

otherwise. This is depicted graphically in Figure 1.

${d_i} = 0$

otherwise. This is depicted graphically in Figure 1.

Macdonald et al. (Reference Macdonald, Richards and Currie2018, Section 2.9.1) give an expanded consideration to include analyst-specified limits, such as minimum and maximum ages, modelling start and end dates and rules for excluding suspicious records. All of these are more easily handled in

![]() $\mu $

-type models than with

$\mu $

-type models than with

![]() $q$

-type models.

$q$

-type models.

4. Reflecting Reality: Risk Features

![]() $q$

-type methods are by definition unable to model mortality phenomena that take place over a shorter time frame than the fixed period they span. In contrast, continuous-time methods can better reflect rapidly changing phenomena, as demonstrated in the following sub-sections.

$q$

-type methods are by definition unable to model mortality phenomena that take place over a shorter time frame than the fixed period they span. In contrast, continuous-time methods can better reflect rapidly changing phenomena, as demonstrated in the following sub-sections.

4.1. Mortality Shocks

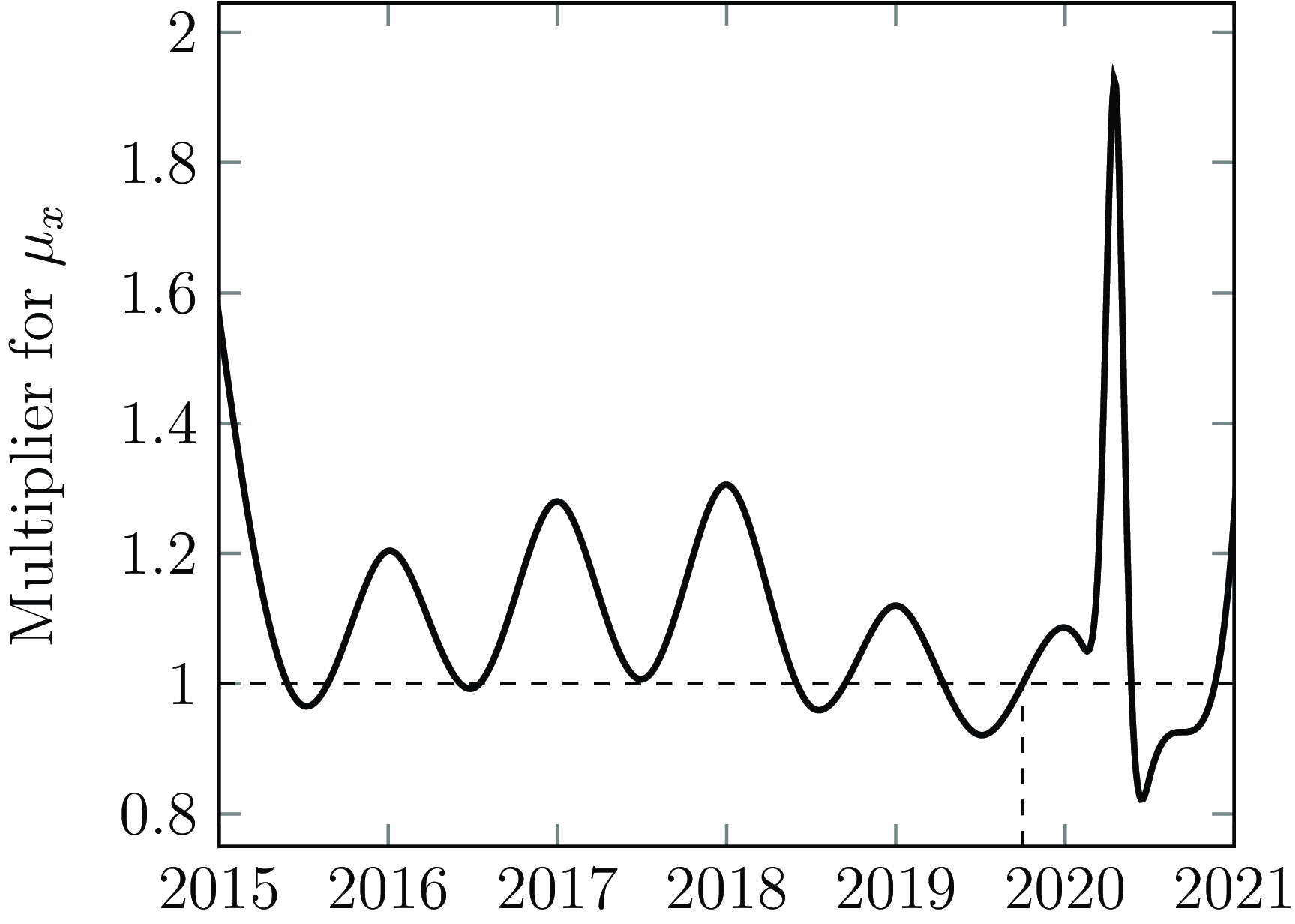

The Covid-19 pandemic (The Novel Coronavirus Pneumonia Emergency Response Epidemiology Team, 2020) produced intense spikes in mortality in a number of countries. This is reflected in the portfolio data that actuaries analyse; see the examples for annuity portfolios in France, the UK and the USA in Richards (Reference Richards2022b). The first Covid-19 shock in the UK was in April & May 2020, and the intensity of this two-month mortality shock cannot be captured with annual or even quarterly

![]() $q$

-type rates. In contrast, continuous-time models can reveal rich detail. Figure 6 shows an example, where the first Covid-19 shock is detected in April & May 2020 with seasonal variation in the years beforehand. Underlying Figure 6 is a

$q$

-type rates. In contrast, continuous-time models can reveal rich detail. Figure 6 shows an example, where the first Covid-19 shock is detected in April & May 2020 with seasonal variation in the years beforehand. Underlying Figure 6 is a

![]() $B$

-spline basis with knots at half-year intervals to pick up the substantial seasonal variation. In addition, a priori knowledge of population mortality shocks led to the addition of further

$B$

-spline basis with knots at half-year intervals to pick up the substantial seasonal variation. In addition, a priori knowledge of population mortality shocks led to the addition of further

![]() $B$

-spline knots in the first half of 2020, thus giving a basis of varying flexibility over time (there is no requirement for a

$B$

-spline knots in the first half of 2020, thus giving a basis of varying flexibility over time (there is no requirement for a

![]() $B$

-spline basis to always have equally spaced knots; see Kaishev et al. (Reference Kaishev, Dimitrova, Haberman and Verrall2016)). The result is a model for

$B$

-spline basis to always have equally spaced knots; see Kaishev et al. (Reference Kaishev, Dimitrova, Haberman and Verrall2016)). The result is a model for

![]() ${\mu _x}$

that allows mortality to vary smoothly over time, with periods of faster and slower change. Such detail is impossible with an annual

${\mu _x}$

that allows mortality to vary smoothly over time, with periods of faster and slower change. Such detail is impossible with an annual

![]() $q$

-type model.

$q$

-type model.

Figure 6. Mortality level over time for a UK annuity portfolio, where modelling in continuous time allows rich detail to be identified. The mortality level is standardized at 1 in October 2019.

Source: Richards (Reference Richards2022c).

4.2. Period Effects and Selection

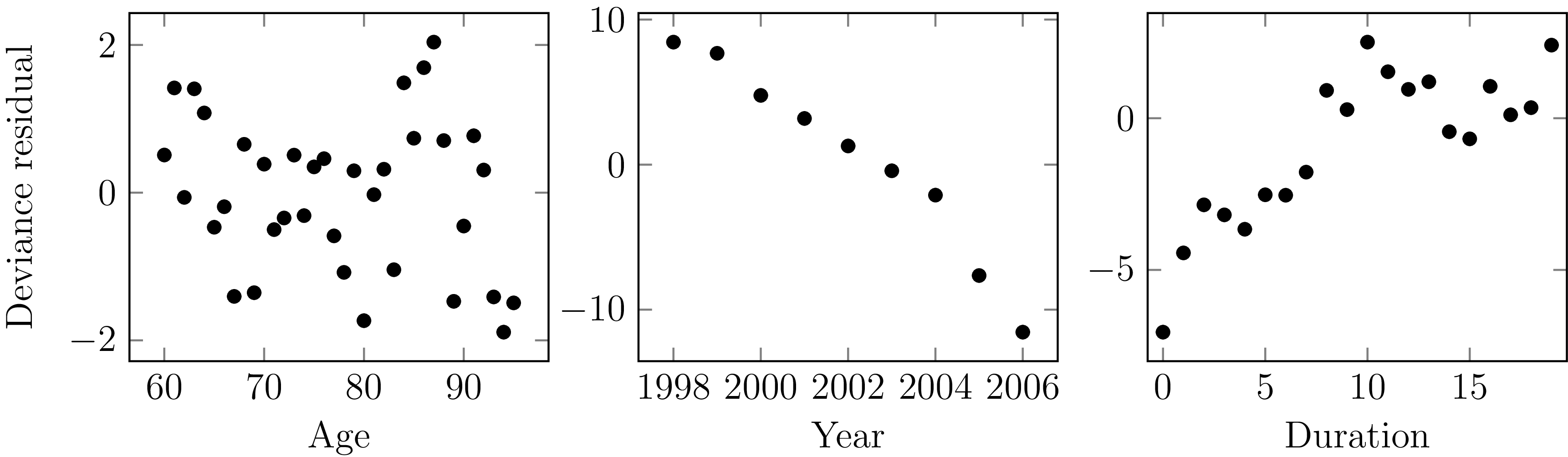

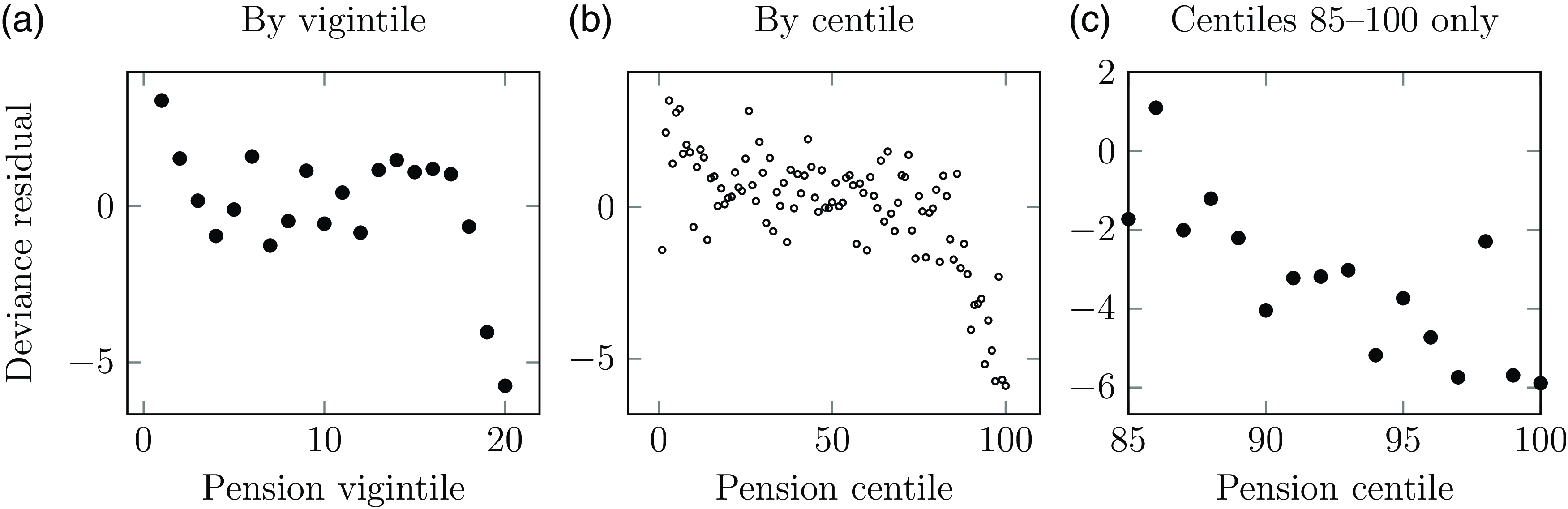

Figure 7 shows the deviance residuals (McCullagh and Nelder, Reference McCullagh and Nelder1989, Section 2.4.3) for a second portfolio of UK annuities. The model includes age and sex, and the random pattern of residuals by age suggests that the model is an acceptable fit for age-related mortality. However, the non-random patterns of residuals in time and duration suggest that it is also important to allow for both period effects and selection effects.

Figure 7. Deviance residuals by age, year and duration since commencement. The systematic pattern of residuals in the centre and right panels show that both time and duration are important risk factors for mortality, and should both be included in a model.

Source: own calculations for model for age and sex for second UK annuity portfolio, ages 60–95, years 1998–2006.

A drawback of a one-year

![]() $q$

-type model is that it is limited in how it can handle time-varying risk, especially when there are two or more time dimensions. This is due to the requirement to align the one-year intervals, as shown in Figure 8 for period effects and duration effects. This limitation of

$q$

-type model is that it is limited in how it can handle time-varying risk, especially when there are two or more time dimensions. This is due to the requirement to align the one-year intervals, as shown in Figure 8 for period effects and duration effects. This limitation of

![]() $q$

-type models improves a little if one of those risk factors is handled continuously (usually age). To see this, assume for simplicity that the basic mortality model is parametric, for example

$q$

-type models improves a little if one of those risk factors is handled continuously (usually age). To see this, assume for simplicity that the basic mortality model is parametric, for example

![]() ${q_x} = {e^{\alpha + \beta x}}/\left( {1 + {e^{\alpha + \beta x}}} \right)$

or

${q_x} = {e^{\alpha + \beta x}}/\left( {1 + {e^{\alpha + \beta x}}} \right)$

or

![]() ${\mu _x} = {e^{\alpha + \beta x}}$

. In each case we have a function defined for any real age,

${\mu _x} = {e^{\alpha + \beta x}}$

. In each case we have a function defined for any real age,

![]() $x$

, not just integer ages. We can also align the period effects on the calendar years, say by assuming that

$x$

, not just integer ages. We can also align the period effects on the calendar years, say by assuming that

![]() $\alpha $

is a function of calendar time, for example piecewise constant on calendar years. Since we have a function for mortality at any real age,

$\alpha $

is a function of calendar time, for example piecewise constant on calendar years. Since we have a function for mortality at any real age,

![]() $x$

, the fact that lives are at different ages during each year is not an issue for either the

$x$

, the fact that lives are at different ages during each year is not an issue for either the

![]() ${q_x}$

or the

${q_x}$

or the

![]() ${\mu _x}$

model.

${\mu _x}$

model.

Figure 8. When benefits can start at any point during the year,

![]() ${q_x}$

models can either include annual period effects or selection effects, but not both. For example, a period effect for 2021 requires a full year of exposure for survivors, so only Case A can contribute. However, the splitting of the exposure at annual boundaries means that different curtate durations are exposed, thus excluding the modelling of selection effects as well. The converse also applies.

${q_x}$

models can either include annual period effects or selection effects, but not both. For example, a period effect for 2021 requires a full year of exposure for survivors, so only Case A can contribute. However, the splitting of the exposure at annual boundaries means that different curtate durations are exposed, thus excluding the modelling of selection effects as well. The converse also applies.

However, how can the

![]() ${q_x}$

model accommodate selection effects for annuities commencing in the middle of the year? A one-year interval can either be aligned for annual period effects, or it can be aligned for selection effects, but it cannot be aligned for both. This is shown in Figure 8. In contrast, the

${q_x}$

model accommodate selection effects for annuities commencing in the middle of the year? A one-year interval can either be aligned for annual period effects, or it can be aligned for selection effects, but it cannot be aligned for both. This is shown in Figure 8. In contrast, the

![]() ${\mu _x}$

model can simultaneously model period and duration effects without such restriction. Of course, one could reduce the period covered by the

${\mu _x}$

model can simultaneously model period and duration effects without such restriction. Of course, one could reduce the period covered by the

![]() ${q_x}$

model to, say quarter-years. But if the answer is to reduce the fixed period covered by a

${q_x}$

model to, say quarter-years. But if the answer is to reduce the fixed period covered by a

![]() $q$

-type model, why not go all the way and reduce the interval to daily modelling?

$q$

-type model, why not go all the way and reduce the interval to daily modelling?

The problem of multivariate time scales is covered in detail in Andersen et al. (Reference Andersen, Borgan, Gill and Keiding1993), Chapter X. See also Case Study A.8 in Appendix A.

4.3. Occurred-but-not-Reported (OBNR) Deaths

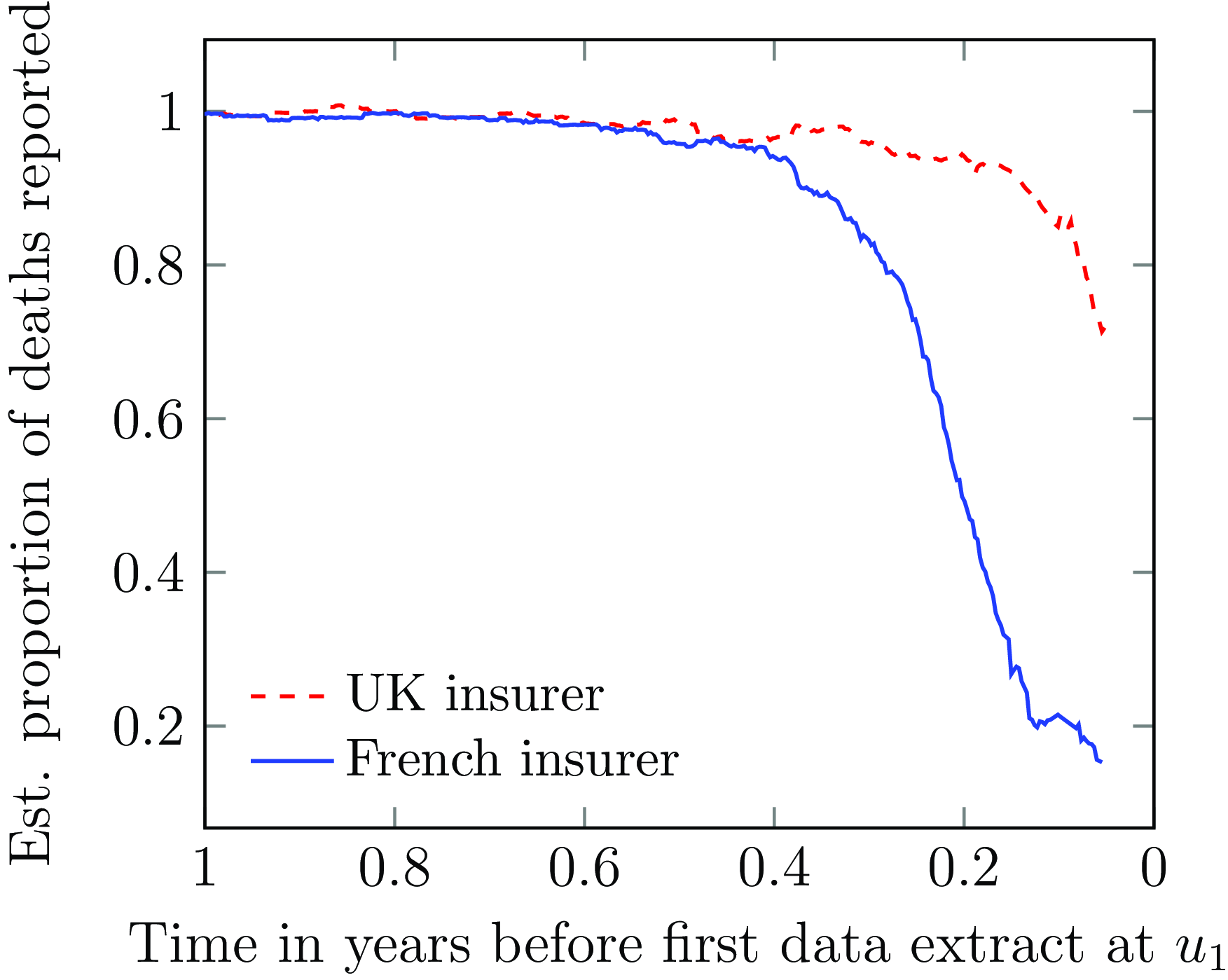

One other useful aspect of continuous-time analysis is the ability to examine delays in the reporting of deaths. Lawless (Reference Lawless1994) describes this as the problem of occurred-but-not-reported (OBNR) events, and Kalbfleisch and Lawless (Reference Kalbfleisch and Lawless1991) consider parametric models for estimating the distribution of delays. Section D.5 in Appendix D describes the calculation of a semi-parametric estimator of the portfolio-level hazard in continuous time. Assume that we have two estimates of the mortality hazard at the same point in time

![]() $y + t$

. These two estimates are labelled

$y + t$

. These two estimates are labelled

![]() ${\hat \mu _y}\left( {t,{u_1}} \right)$

and

${\hat \mu _y}\left( {t,{u_1}} \right)$

and

![]() ${\hat \mu _y}\left( {t,{u_2}} \right)$

, and they differ only in that they have been calculated using data extracts taken at two different times

${\hat \mu _y}\left( {t,{u_2}} \right)$

, and they differ only in that they have been calculated using data extracts taken at two different times

![]() ${u_1} \lt {u_2}$

. Then

${u_1} \lt {u_2}$

. Then

![]() ${\hat \mu _y}\left( {t,{u_1}} \right)$

will be more affected by delays in the reporting of deaths than

${\hat \mu _y}\left( {t,{u_1}} \right)$

will be more affected by delays in the reporting of deaths than

![]() ${\hat \mu _y}\left( {t,{u_2}} \right)$

, with the effect decreasing the further

${\hat \mu _y}\left( {t,{u_2}} \right)$

, with the effect decreasing the further

![]() $y + t$

lies before

$y + t$

lies before

![]() ${u_1}$

. Figure 9 shows the ratio of

${u_1}$

. Figure 9 shows the ratio of

![]() ${\hat \mu _y}\left( {t,{u_1}} \right)/{\hat \mu _y}\left( {t,{u_2}} \right)$

as an estimate of the proportion of deaths that have not been reported. The two portfolios have minimal OBNR cases half a year or more before time

${\hat \mu _y}\left( {t,{u_1}} \right)/{\hat \mu _y}\left( {t,{u_2}} \right)$

as an estimate of the proportion of deaths that have not been reported. The two portfolios have minimal OBNR cases half a year or more before time

![]() ${u_1}$

, but have very different OBNR rates in the handful of months leading up to the extract. This level of insight is not possible with annual

${u_1}$

, but have very different OBNR rates in the handful of months leading up to the extract. This level of insight is not possible with annual

![]() $q$

-type analysis.

$q$

-type analysis.

Figure 9. Estimated proportion of deaths reported for annuity portfolios in France and UK. The horizontal axis is reversed, as it measures the time before the first extract at calendar time

![]() ${u_1}$

.

${u_1}$

.

Source: Richards (Reference Richards2022b, Section 4).

Continuous-time methods go further than the identification of reporting delays in Figure 9. Including a period affected by OBNR deaths will naturally tend to under-state mortality levels, which is one reason to discard the exposure period immediately prior to the extract date; Figure 9 suggests that six months should be discarded for the French annuity portfolio and three months for the UK one. However, continuous-time models make it possible to model these OBNR deaths and thus use the full experience data, even up to the date of extract itself. This OBNR allowance can be either parametric (Richards, Reference Richards2022b, Section 5) or semi-parametric (Richards, Reference Richards2022c, Section 10). Richards (Reference Richards2022b, Section 7) showed how the use of daily data and continuous-time methods allowed the “nowcasting” of current mortality affected by late-reported deaths. These sorts of techniques are not possible with annual

![]() $q$

-type approaches. See also Case Study A.15 in Appendix A.

$q$

-type approaches. See also Case Study A.15 in Appendix A.

4.4. Season

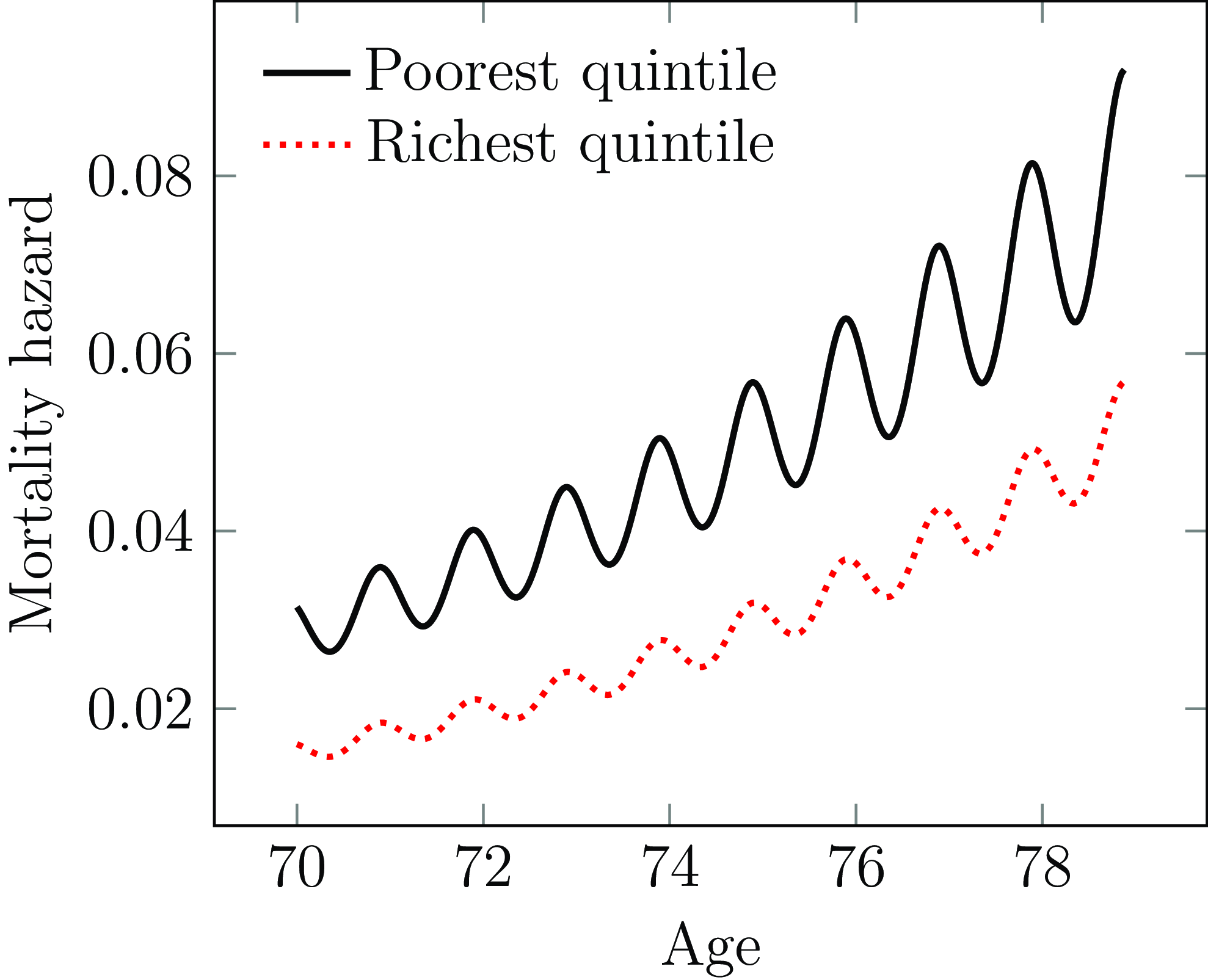

Annual

![]() $q$

-type rates are by definition unable to model the seasonal variation shown in Figure 10. The mortality hazard rises overall as the lives age, but with fluctuations as the lives pass through the seasons of each year. The amplitude of these fluctuations grows larger with increasing age.

$q$

-type rates are by definition unable to model the seasonal variation shown in Figure 10. The mortality hazard rises overall as the lives age, but with fluctuations as the lives pass through the seasons of each year. The amplitude of these fluctuations grows larger with increasing age.

As a periodic factor, season has the unusual distinction of being both highly statistically significant in explaining mortality variation, and yet of low financial significance for long-term business (Richards, Reference Richards2020, Section 9). Nevertheless, accounting for seasonality can be useful in separating out the impact of pandemic shocks, and can help quantify the extent to which a heavy winter flu experience is unusual. Seasonal modelling has applications for public-policy work, such as the observation that poorer annuitants not only have higher mortality, but also experience stronger seasonal variation, as shown in Figure 10. Models of seasonal variation can also be useful in commercial situations, such as Case Studies A.5, A.7 and A.12 in Appendix A.

Figure 10. Modelled cohort mortality hazard for males initially aged 70 at 1st January by selected income quintile.

Source: model calibrated to mortality experience of large UK pension scheme in Richards et al. (Reference Richards, Ramonat, Vesper and Kleinow2020).

5. Investigation Period

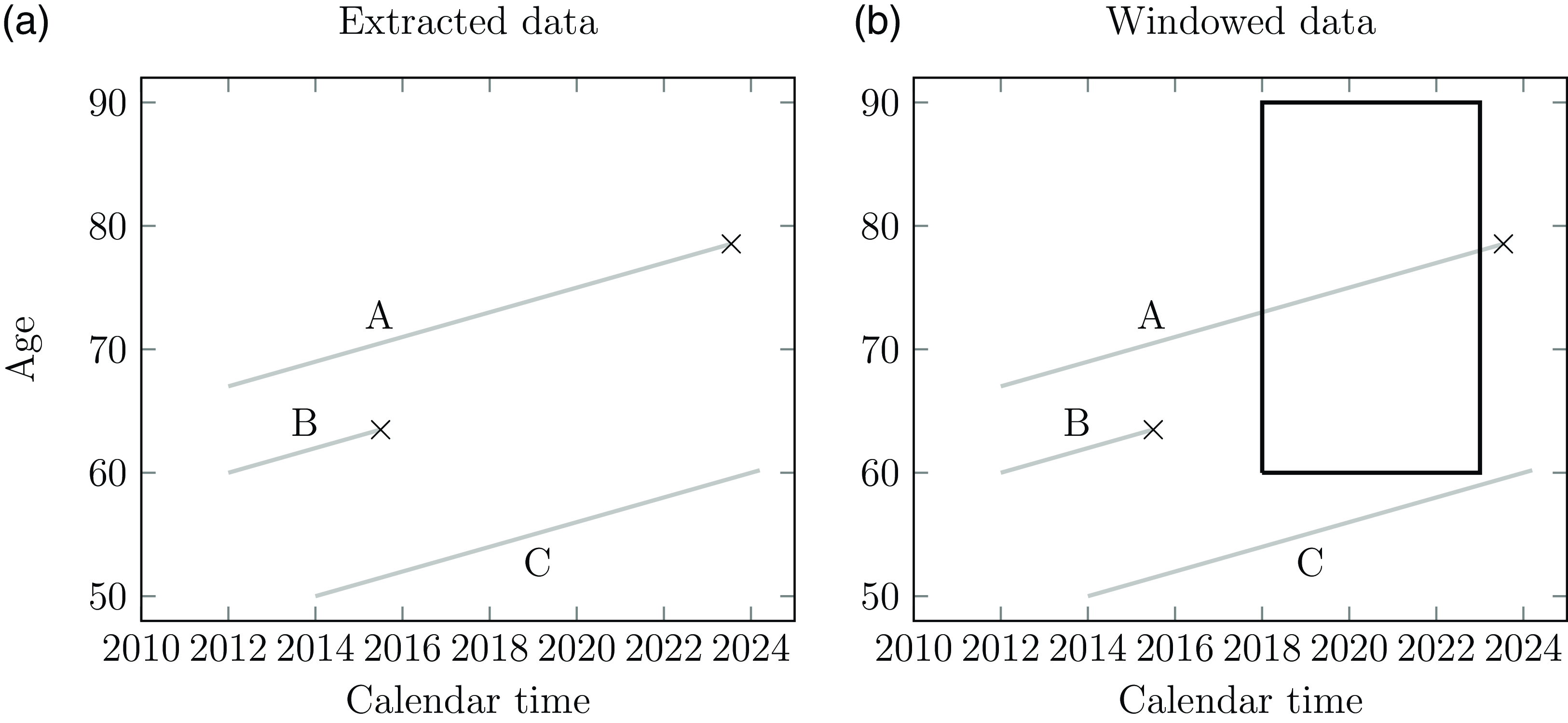

The investigation period will be set by the analyst, and there may be specific organizational policies for this. In setting the investigation period, the analyst needs to balance the benefits of statistical power (from using a longer period) against relevance (such as when mortality levels have been falling or the portfolio mix is rapidly changing); see Benjamin and Pollard (Reference Benjamin and Pollard1986), page 49. For example, ONS (2024) use population experience data over a three-year period to create the UK National Life Tables, while some life offices have a policy of using five-year period to set bases. Other investigations use longer periods of exposure still.

Specifying an investigation window causes additional left truncation and right-censoring, as shown in Figure 11; in addition to the life only becoming known to the insurer at an adult age at policy outset (standard left truncation) the analyst further discards the experience from policy outset to the start of the investigation window. Case Study A.18 in Appendix A concerns an annuity portfolio with uninterrupted experience data on the same administration system since outset. Despite the availability of decades of experience, the analyst would most likely only use the more recent data due to reasons of relevance. However, the length of the investigation period may also be limited by the data itself – Case Studies A.5 and A.7 in Appendix A are examples where the analyst has no scope for using a five-year investigation period.

Figure 11. An analyst wants to fit a Gompertz model of mortality to the experience between ages 60–90 over the period 1st January 2018–1st January 2023. An extract is taken of the data on 14th March 2024 and some specimen cases are shown in this Lexis diagram with exposure times in grey and deaths are marked with

![]() $ \times $

. (a) Raw experience data and (b) experience data within the analyst’s window superimposed. Individual A died on 20th July 2023, but is right-censored at the end of the investigation period and so is regarded as alive in the analysis. Individual A’s exposure time to the left of the window is also left-truncated. Individual B died before the investigation window opened and is excluded from the analysis. Individual C is also excluded from the analysis because they never reach the age range within the investigation period.

$ \times $

. (a) Raw experience data and (b) experience data within the analyst’s window superimposed. Individual A died on 20th July 2023, but is right-censored at the end of the investigation period and so is regarded as alive in the analysis. Individual A’s exposure time to the left of the window is also left-truncated. Individual B died before the investigation window opened and is excluded from the analysis. Individual C is also excluded from the analysis because they never reach the age range within the investigation period.

Most investigation periods also span a whole number of years, in part to balance out the seasonal fluctuations in mortality; see Figure 10 and years 2016–2019 in Figure 6. However, if a model includes an explicit seasonal term (Richards et al., Reference Richards, Ramonat, Vesper and Kleinow2020), then there is no need to worry about any bias caused by unbalanced numbers of summers and winters. This permits

![]() $\mu $

-type models to have an investigation period of a non-integer number of years if a seasonal component is included; see Case Studies A.5 and A.7 in Appendix A.

$\mu $

-type models to have an investigation period of a non-integer number of years if a seasonal component is included; see Case Studies A.5 and A.7 in Appendix A.

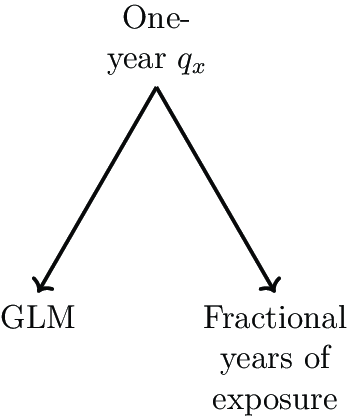

The ability to use fractional numbers of years in an investigation extends particularly to transactions such as bulk annuities. Pension schemes in the UK sometimes change administrators, which invariably leads to the loss of mortality data prior to the switchover. If a pension scheme can only supply 2.5 years of mortality experience, then the insurer will not want throw away a fifth of the information just because a GLM for

![]() ${q_x}$

can only use whole periods (although a

${q_x}$

can only use whole periods (although a

![]() $q$

-type model is still possible if one does not use a GLM; see Appendix B). Any concerns about delays in reporting of deaths can be addressed in a

$q$

-type model is still possible if one does not use a GLM; see Appendix B). Any concerns about delays in reporting of deaths can be addressed in a

![]() $\mu $

-type model by including an explicit occurred-but-not-reported (OBNR) term; see Richards (Reference Richards2022b), Section 5.

$\mu $

-type model by including an explicit occurred-but-not-reported (OBNR) term; see Richards (Reference Richards2022b), Section 5.

At the time of writing, most portfolios will still have recent mortality experience affected by Covid-19; see the spike in mortality in April and May 2020 in Figure 6.

![]() $\mu $

-type models can allow for such spikes in the experience (Richards, Reference Richards2022c) in a way that

$\mu $

-type models can allow for such spikes in the experience (Richards, Reference Richards2022c) in a way that

![]() $q$

-type models cannot. However, another alternative to avoiding bias might be to exclude the affected period, although this presupposes that the Covid-affected experience is an additive outlier with no relevance to later mortality. For a

$q$

-type models cannot. However, another alternative to avoiding bias might be to exclude the affected period, although this presupposes that the Covid-affected experience is an additive outlier with no relevance to later mortality. For a

![]() ${q_x}$

GLM this involves discarding an entire year’s worth of data, despite the fact that the shock in Figure 6 only lasts around two months (although, as before, a

${q_x}$

GLM this involves discarding an entire year’s worth of data, despite the fact that the shock in Figure 6 only lasts around two months (although, as before, a

![]() $q$

-type model is still possible if one does not use a GLM). In contrast,

$q$

-type model is still possible if one does not use a GLM). In contrast,

![]() $\mu $

-type models can more easily handle the ad hoc exclusion of short periods of experience, if the analyst feels that this is truly necessary. Macdonald and Richards (Reference Macdonald and Richards2025), Section 4.3 show how both left truncation and right-censoring can be handled by setting an exposure indicator function,

$\mu $

-type models can more easily handle the ad hoc exclusion of short periods of experience, if the analyst feels that this is truly necessary. Macdonald and Richards (Reference Macdonald and Richards2025), Section 4.3 show how both left truncation and right-censoring can be handled by setting an exposure indicator function,

![]() $Y\left( x \right)$

, to zero to “switch off” the contribution of exposure time and observed deaths to estimation.

$Y\left( x \right)$

, to zero to “switch off” the contribution of exposure time and observed deaths to estimation.

![]() $Y\left( x \right)$

is a multiplier of

$Y\left( x \right)$

is a multiplier of

![]() ${\mu _x}$

, and to exclude the contribution of Covid-affected experience, one could also set

${\mu _x}$

, and to exclude the contribution of Covid-affected experience, one could also set

![]() $Y\left( x \right) = 0$

for the period of any mortality shocks.

$Y\left( x \right) = 0$

for the period of any mortality shocks.

6. Competing Decrements

A limitation of

![]() $q$

-type models is that they assume that only two events are possible: survival to the end of the observation interval, or death during it. Even with notionally single-decrement business like pensions or annuities in payment, Section 3 shows that the business reality is that there are often other decrements like administrative transfers or trivial commutations.

$q$

-type models is that they assume that only two events are possible: survival to the end of the observation interval, or death during it. Even with notionally single-decrement business like pensions or annuities in payment, Section 3 shows that the business reality is that there are often other decrements like administrative transfers or trivial commutations.

However, the situation for

![]() $q$

-type models becomes worse with classes of business that have multiple modes of exit by design. Consider the data in Case Study A.32, where there are two decrements of interest: death and entry into long-term care.

$q$

-type models becomes worse with classes of business that have multiple modes of exit by design. Consider the data in Case Study A.32, where there are two decrements of interest: death and entry into long-term care.

![]() $n = 42$

lives start exposure at age 88, with

$n = 42$

lives start exposure at age 88, with

![]() ${d^{{\rm{mort}}}} = 5$

dying and

${d^{{\rm{mort}}}} = 5$

dying and

![]() ${d^{{\rm{care}}}} = 3$

entering long-term care before reaching age 89. Due to these exits and other censoring points there are

${d^{{\rm{care}}}} = 3$

entering long-term care before reaching age 89. Due to these exits and other censoring points there are

![]() ${E^c} = 36.9039$

years of life observed in the age interval

${E^c} = 36.9039$

years of life observed in the age interval

![]() $\left( {88,89} \right)$

. With continuous-time modelling, the number of deaths and care events can be modelled as separate pseudo-Poisson variables (Macdonald and Richards, Reference Macdonald and Richards2025), which lead to the same inference as assuming that:

$\left( {88,89} \right)$

. With continuous-time modelling, the number of deaths and care events can be modelled as separate pseudo-Poisson variables (Macdonald and Richards, Reference Macdonald and Richards2025), which lead to the same inference as assuming that:

where

![]() ${\mu ^{{\rm{mort}}}}$

and

${\mu ^{{\rm{mort}}}}$

and

![]() ${\mu ^{{\rm{care}}}}$

are the hazards for mortality and long-term care, respectively. In contrast,

${\mu ^{{\rm{care}}}}$

are the hazards for mortality and long-term care, respectively. In contrast,

![]() $q$

-type modelling has little theoretical support beyond binomial trials, which don’t permit multiple types of event. The classical actuarial approach is to try to create an artificial single-decrement equivalence by reducing the number of lives:

$q$

-type modelling has little theoretical support beyond binomial trials, which don’t permit multiple types of event. The classical actuarial approach is to try to create an artificial single-decrement equivalence by reducing the number of lives:

where

![]() ${q^{{\rm{mort}}}}$

and

${q^{{\rm{mort}}}}$

and

![]() ${q^{{\rm{care}}}}$

are the probabilities of mortality and long-term care, respectively.

${q^{{\rm{care}}}}$

are the probabilities of mortality and long-term care, respectively.

![]() ${n^{{\rm{mort}}}}$

and

${n^{{\rm{mort}}}}$

and

![]() ${n^{{\rm{care}}}}$

are pseudo-life-counts, both less than

${n^{{\rm{care}}}}$

are pseudo-life-counts, both less than

![]() $n$

. It is traditional to use an approximation like the following from Benjamin and Pollard (Reference Benjamin and Pollard1986), equation (2.1):

$n$

. It is traditional to use an approximation like the following from Benjamin and Pollard (Reference Benjamin and Pollard1986), equation (2.1):

The lack of rigour with the

![]() $q$

-type approach to competing decrements can be seen when we apply it to our example:

$q$

-type approach to competing decrements can be seen when we apply it to our example:

But what is 0.5 of a binomial trial? Half a person? The binomial likelihood can still be evaluated and maximized for non-integral values for the number of trials, but the

![]() $q$

-type models in Equations (3) and (4) clearly do not correspond to reality.

$q$

-type models in Equations (3) and (4) clearly do not correspond to reality.

7. Data-quality Checks

Continuous-time methods also offer better checks of data quality. We look at how data issues can be revealed by a non-parametric estimate of survival by age, and a semi-parametric estimate of mortality hazard in time.

7.1. Non-Parametric Survival Curve by Age

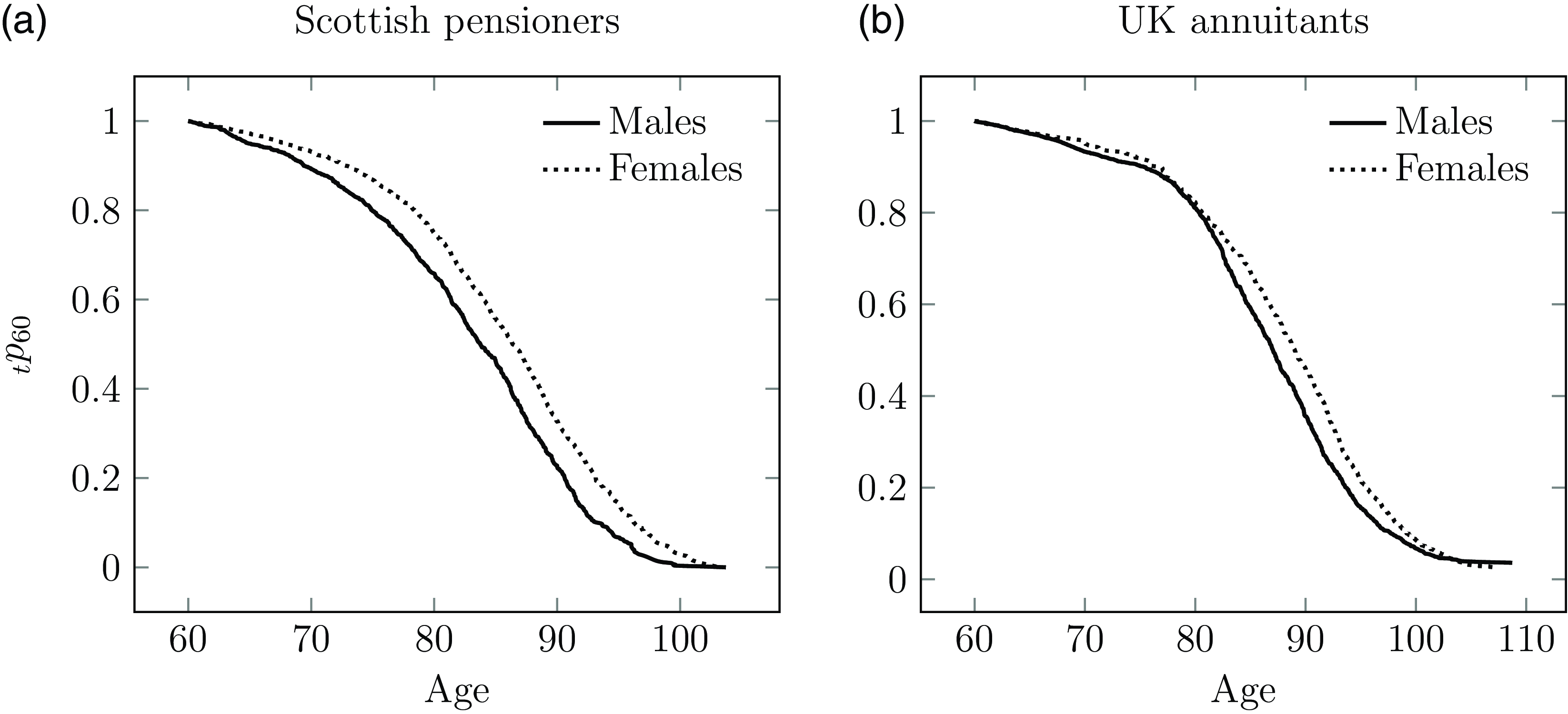

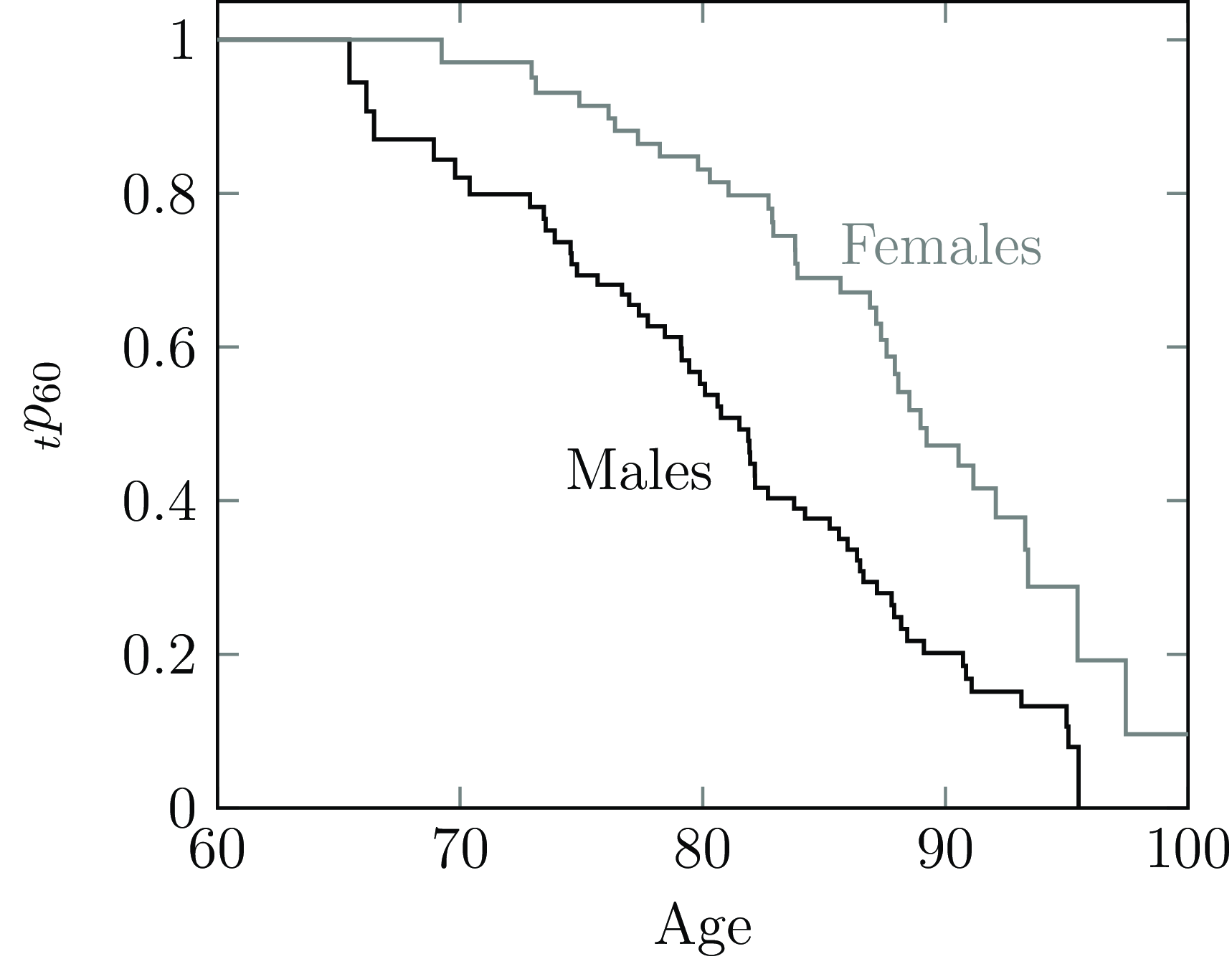

The estimator from Kaplan and Meier (Reference Kaplan and Meier1958) estimates the survival curve at intervals determined by the time between deaths (the definition from Fleming and Harrington (Reference Fleming and Harrington1991) does something very similar). In the case of many actuarial portfolios, this interval is often as small as one day, making the Kaplan-Meier estimate effectively a continuous-time measure; see Section D.1 in Appendix D. An example is shown in Figure 12(a).

Figure 12. Kaplan-Meier survival curves from age 60 for (a) the Scottish pension scheme in Figure 5 and (b) a UK annuity portfolio. The odd shape of the survival curve for the UK annuitants, and the lack of a male-female differential between ages 60–80, suggests a data-corruption problem like the one described in Case Study A.6 in Appendix A.

Source: Macdonald et al. (Reference Macdonald, Richards and Currie2018), Figure 2.8.

The value of the Kaplan-Meier estimate as a data-quality check is shown in Figure 12(b), where the odd shape and the lack of a male-female differential between ages 60 and 80 suggests a data-quality problem. Case Study A.6 in Appendix A describes a possible cause. Note that this sort of problem is trickier to detect with a traditional comparison of age-grouped mortality against a standard table. The visual nature of the Kaplan-Meier estimate is also a major advantage when communicating with non-specialists. See also Case Studies A.2 and A.3 in Appendix A.

7.2. Semi-Parametric Hazard Over Time

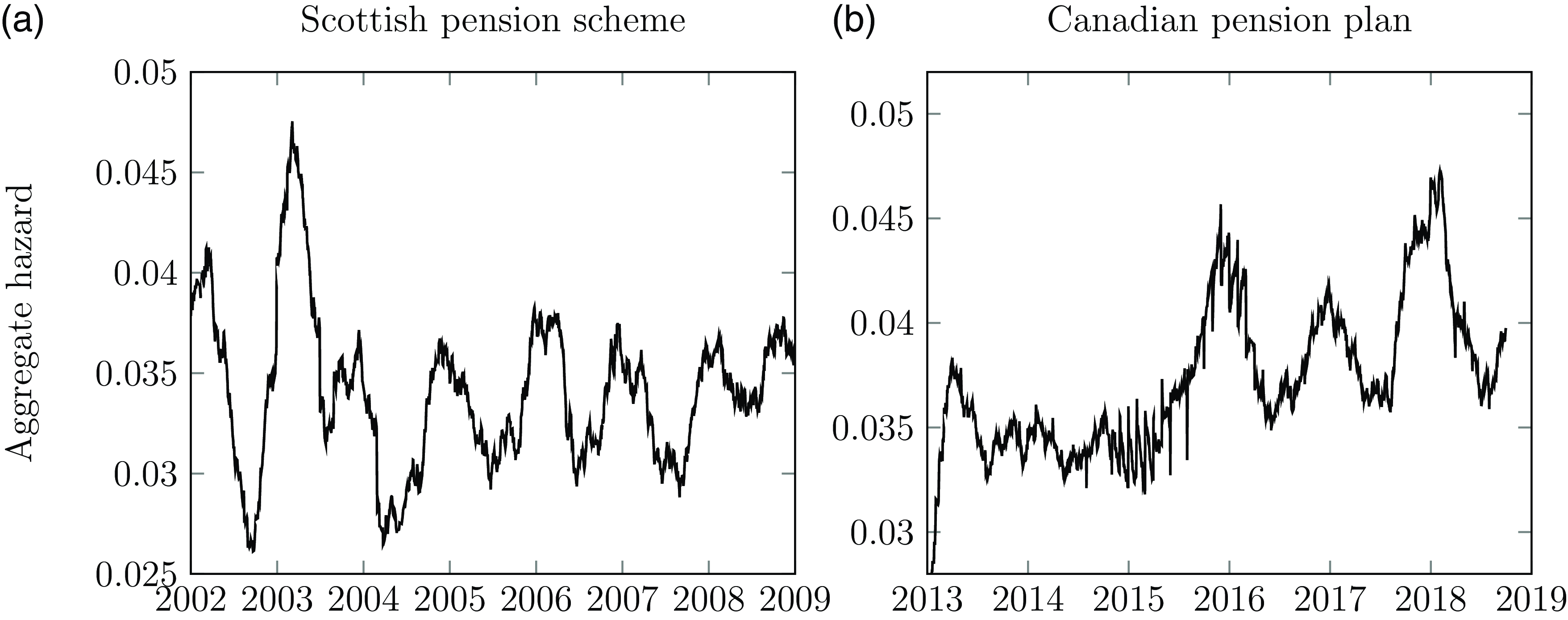

Richards (Reference Richards2022b) proposed a semi-parametric estimate for the mortality hazard of a portfolio over time, and the details of its calculation are given in Section D.5 in Appendix D. Figure 13(a) shows the seasonal variation in a Scottish pension scheme, with particularly high mortality in the winter of 2002–2003. In contrast, the much larger Canadian pension plan in Figure 13(b) shows seasonal variation only from 2016 onwards, raising questions about the data quality in 2014 and 2015. Closer inspection of the Canadian mortality reveals that deaths are recorded continuously throughout the year, but that the first of each month has disproportionately more deaths than other times during the same month. This suggests an inconsistent approach to the recording of the date of death; see also Case Study A.15 in Appendix A. Detailed inspection showed that this data anomaly is present throughout the entire period shown in Figure 13(b), but it is more dominant in 2014 and 2015 and thus more visually apparent.

Figure 13. Estimated aggregate hazard in time for (a) the Scottish pension scheme in Figure 5 and (b) a Canadian pension plan with more lives and deaths. The Canadian pension plan is missing the expected seasonal pattern in 2014 and 2015, raising questions over the accuracy of the experience data during that period.

Source: Adapted from Richards (Reference Richards2022b), Figure A.2.

The mortality spike in the winter of 2002–2003 in Figure 13(a) raises a question: why would one exclude Covid mortality spikes, when such seasonal spikes have typically been left in?

8. Management Information

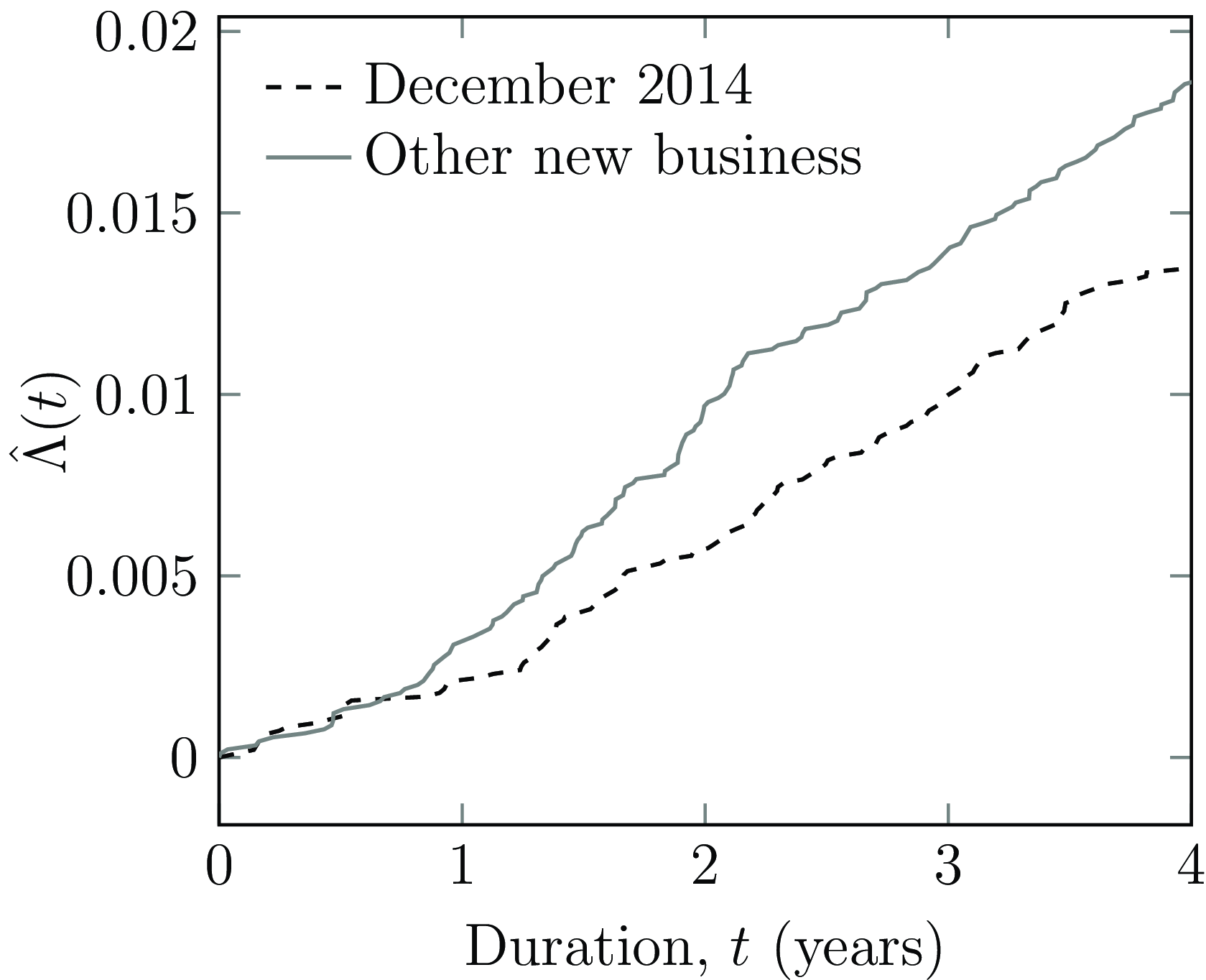

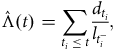

A major advantage of continuous-time methods is their ability to give timely and ongoing answers to management questions. For example, consider the surge in new business shown in December 2014 in Figure 4(a). The average age at commencement of the 9,570 new annuitants in December 2014 is 60.75 years. This is close to the average age at commencement of 61.34 years for the 9,033 new annuitants in the six months before and after December 2014. Although the commencement ages are very close, a natural management question is whether the mortality of the December 2014 annuitants is different from other recent new business? Figure 14 shows that this is indeed the case, with the December 2014 annuitants experiencing lighter mortality than their contemporaries. The statistic used here is the Nelson-Aalen estimate of Equation (D2) (see Section D.2), which can be updated continuously without waiting for a complete year of exposure as with a

![]() $q$

-type analysis; Figure 14 could be updated weekly, for example. This is a critical advantage when entering a new market; see Case Study A.8 in Appendix A.

$q$

-type analysis; Figure 14 could be updated weekly, for example. This is a critical advantage when entering a new market; see Case Study A.8 in Appendix A.

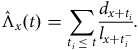

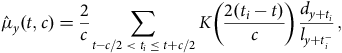

Figure 14. Nelson-Aalen estimate,

![]() $\hat \Lambda \left( t \right)$

, of cumulative mortality rate for new business written (i) in December 2014, and (ii) in the six months on each side of December 2014.

$\hat \Lambda \left( t \right)$

, of cumulative mortality rate for new business written (i) in December 2014, and (ii) in the six months on each side of December 2014.

Source: Own calculations using new annuities written by the French insurer in Figure 4.

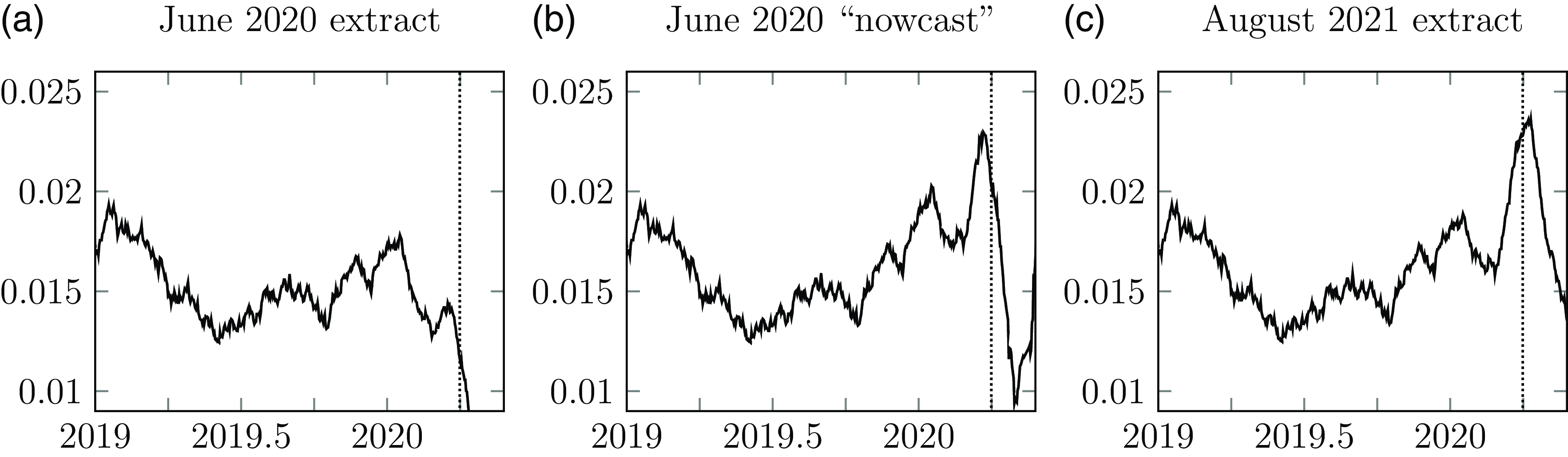

Of course, the phenomenon of OBNR deaths discussed in Section 4.3 is a challenge for any methods using current data. However, as shown in Figure 9, continuous-time semi-parametric methods can also be used to estimate the timing and incidence of OBNR. If need be, graphs such as Figure 14 can be adjusted to allow for OBNR. This is analogous to the econometric techniques of “nowcasting” (Bańbura et al., Reference Bańbura, Giannone, Modugno, Reichlin, Elliott and Timmermann2013), where the most recently available information is used to estimate the current value of a statistic that won’t be fully known until sufficient time has elapsed. This is illustrated in Figure 15(a), where the June 2020 data extract shows no sign of the pandemic mortality expected in April & May 2020. An allowance for OBNR allowed a “nowcast” of mortality, as shown in Figure 15(b). A later data extract used in Figure 15(c) shows that the OBNR-adjusted “nowcast” in panel (b) was accurate enough for business planning at the time it was made.

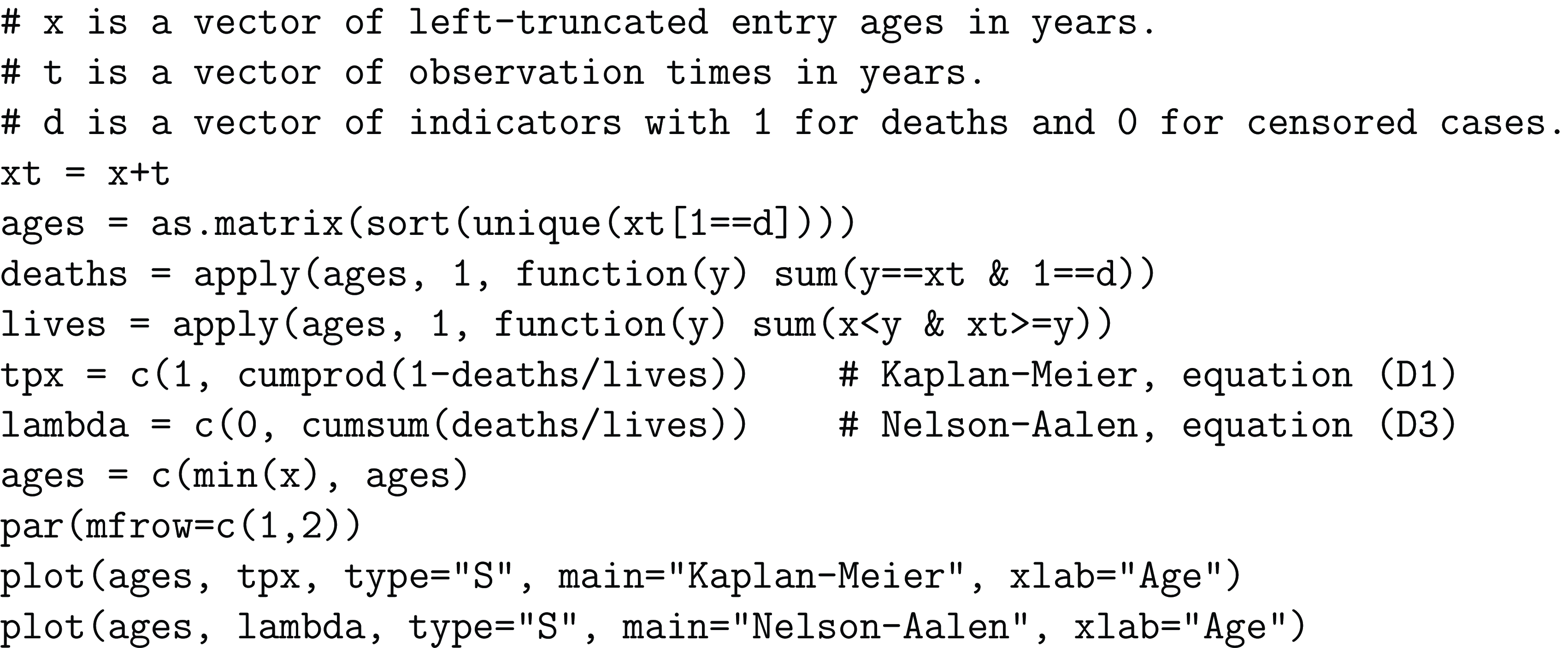

Figure 15.

![]() ${\hat \mu _{2019}}\left( t \right)$

for French annuity portfolio with smoothing parameter

${\hat \mu _{2019}}\left( t \right)$

for French annuity portfolio with smoothing parameter

![]() $c = 0.1$

: (a) calculated using June 2020 extract; (b) nowcast using Gaussian OBNR function estimated from June 2020 extract; (c) calculated using August 2021 extract. The vertical dotted line in each panel is at 1st April 2020.

$c = 0.1$

: (a) calculated using June 2020 extract; (b) nowcast using Gaussian OBNR function estimated from June 2020 extract; (c) calculated using August 2021 extract. The vertical dotted line in each panel is at 1st April 2020.

9. Discussion

The problems of single-decrement

![]() $q$

-type models in Section 3 can be partially solved by discarding some records and editing others. However, throwing away data is a luxury that not every analyst can afford; see Case Studies A.5 and A.7 in Appendix A. Furthermore, modifying the data to suit the needs of the model is surely the wrong direction of causality – a model should be picked that suits the data, not the other way around. As shown in Section 3, continuous-time methods are a better match to the reality of business processes on most administration systems.

$q$

-type models in Section 3 can be partially solved by discarding some records and editing others. However, throwing away data is a luxury that not every analyst can afford; see Case Studies A.5 and A.7 in Appendix A. Furthermore, modifying the data to suit the needs of the model is surely the wrong direction of causality – a model should be picked that suits the data, not the other way around. As shown in Section 3, continuous-time methods are a better match to the reality of business processes on most administration systems.

The challenges of

![]() $q$

-type models in Section 4 can be addressed by reducing the fixed observation period to a quarter-year, or even a month. However, if the answer is to increase granularity, why not use the full detail afforded by the data, namely daily intervals? Administration systems hold lots of dates, which are a natural fit for continuous-time methods.

$q$

-type models in Section 4 can be addressed by reducing the fixed observation period to a quarter-year, or even a month. However, if the answer is to increase granularity, why not use the full detail afforded by the data, namely daily intervals? Administration systems hold lots of dates, which are a natural fit for continuous-time methods.

Alternatively,

![]() $q$

-type models can be fitted using bespoke likelihoods for fractional years of exposure, as covered in Appendix B. However, if the analyst is able to do this, then a

$q$

-type models can be fitted using bespoke likelihoods for fractional years of exposure, as covered in Appendix B. However, if the analyst is able to do this, then a

![]() $\mu $

-type model will be more flexible and require less data preparation.

$\mu $

-type model will be more flexible and require less data preparation.

Individual records and exact dates allow the use of insightful non- and semi-parametric methods, as shown in Section 7. The Kaplan-Meier curve is a particularly useful tool for detecting subtle data-quality problems. And if one is anyway extracting individual records for data-checking, it makes sense to continue by modelling individual lifetimes if one can. The continuous-time representation attempts to describe these left-truncated, right-censored lifetimes as they really are (and presumably good business data processes share that aim to some extent). In contrast, the discrete-time representation for

![]() $q$

-type models works the other way, and attempts to force nature (and the data) to look like a life table.

$q$

-type models works the other way, and attempts to force nature (and the data) to look like a life table.

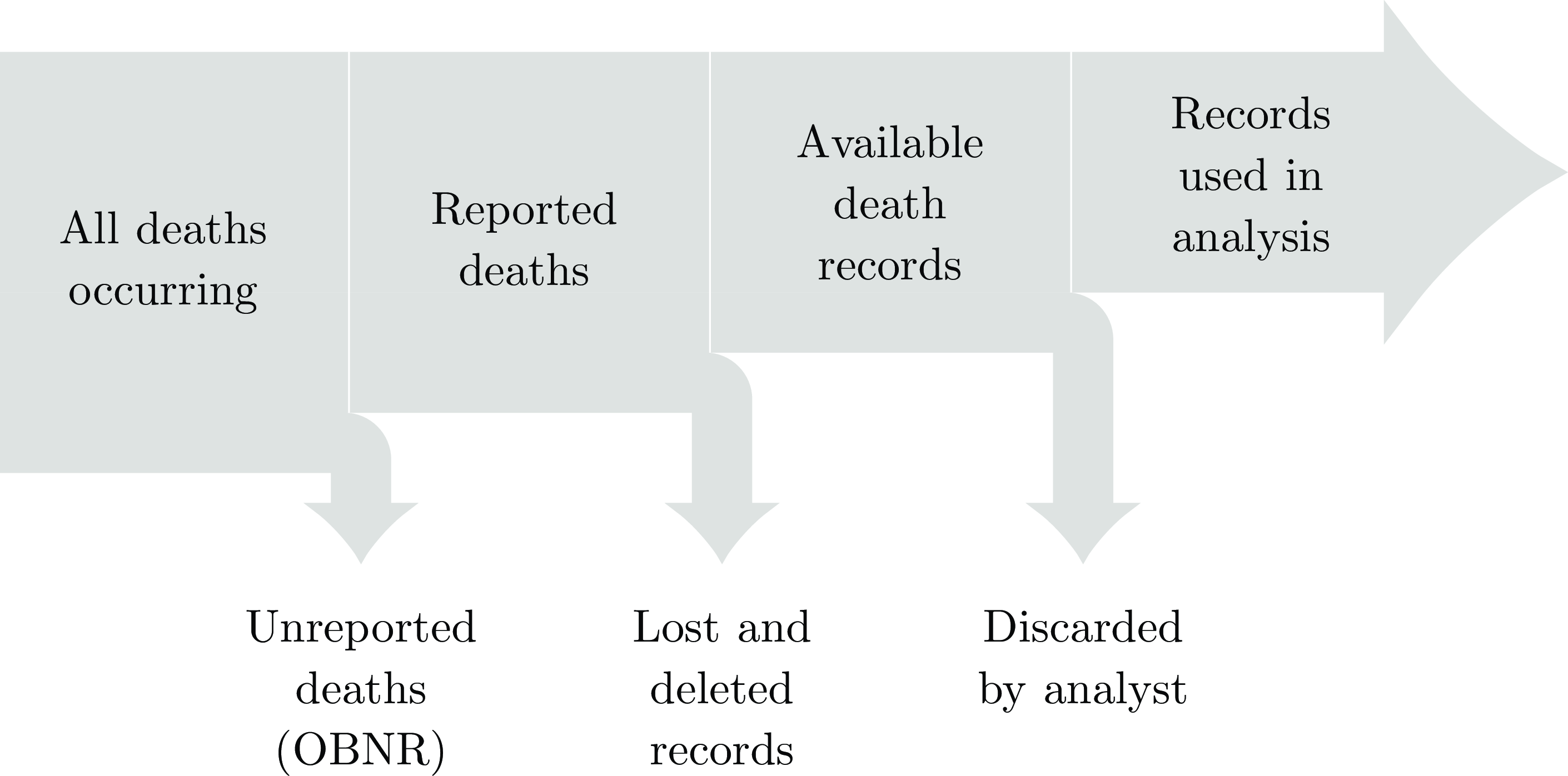

Figure 16 depicts the main categories of data loss between reality on the left and what the analyst has to work with on the right. There is not much that can be done about the middle sources of data loss: if records have been deleted or destroyed, they are unavailable to any type of model. However,

![]() $\mu $

-type models can deal with OBNR deaths better than

$\mu $

-type models can deal with OBNR deaths better than

![]() $q$

-type models, and less data is discarded with continuous-time methods than discrete-time ones.

$q$

-type models, and less data is discarded with continuous-time methods than discrete-time ones.

Figure 16. Cumulative loss of mortality data in a portfolio.

10. Conclusions

Methods operating in continuous time, or in daily intervals, offer substantial advantages for actuaries. Chief among these are the ability to reflect the reality of everyday business processes, and the ability to handle rapid changes in risk level. In contrast,

![]() $q$

-type models, especially those operating over a one-year interval, make assumptions about the data that are hard to justify, and often require data to be discarded if using standard GLMs. In addition, annual

$q$

-type models, especially those operating over a one-year interval, make assumptions about the data that are hard to justify, and often require data to be discarded if using standard GLMs. In addition, annual

![]() $q$

-type models are unable to reflect rapid intra-year movements in risk.

$q$

-type models are unable to reflect rapid intra-year movements in risk.

Continuous-time methods are also the more practical solution to certain modelling problems. Examples include risks varying in more than one time dimension and modelling risks with competing decrements. In contrast, annual

![]() $q$

-type models within a GLM framework are not capable of simultaneously modelling time- and duration-varying risk. For competing risks,

$q$

-type models within a GLM framework are not capable of simultaneously modelling time- and duration-varying risk. For competing risks,

![]() $q$

-type models force the pretence that the number of lives is smaller than it really is.

$q$

-type models force the pretence that the number of lives is smaller than it really is.

Continuous-time methods also offer useful data-quality checks. The visual nature of non- and semi-parametric methods make them particularly useful for communication with non-specialists.

There are occasional circumstances when daily information is unavailable, or even deliberately withheld, but actuaries should strive wherever possible to collect full dates and use continuous-time methods of analysis.

Acknowledgements

The authors thank Gavin Ritchie, Stefan Ramonat, Patrick Kelliher, Kai Kaufhold and Ross Ainslie for comments on earlier drafts. Any errors in the paper are the sole responsibility of the authors. The authors also thank the owner of the home-reversion portfolio in Case Study A.32, who wishes to remain anonymous. This document was typeset in LaTeX. Graphs were created with tikz (Tantau, Reference Tantau2024) and pgfplots (Feuersänger, Reference Feuersänger2015).

Appendices

A. Case Studies

The following are case studies are based on actual experience. In most case studies, continuous-time methods provided important benefits over

![]() $q$

-type models.

$q$

-type models.

Case Study A.1

The Pension Benefit Guaranty Corporation (PBGC) in the United States charges a flat annual fee of $37 per participant in a multi-employer pension plan in 2024, rising to $52 in 2031 (PBG, 2024, Table M-16). For single-employer plans the flat part of the annual fee is $101 per participant in 2024, with an additional variable fee of up to $686 per participant for unfunded vested liabilities (PBG, 2024, Table S-39). To minimize payments to the PBGC, a pension plan therefore buys annuities to close out the liabilities for the individuals with the smallest pensions. The pensions that are bought out are treated like commutations, with the observation end date being the date of annuity purchase.

A continuous-time model can include the bought-out annuities, as they will be like Case C in Figure 1. Note that the PBGC fee is per participant, so there is a financial benefit from identifying multiple records relating to the same person among retained cases; see the discussion of deduplication in Macdonald et al. (Reference Macdonald, Richards and Currie2018), Section 2.5.

Case Study A.2

A consulting actuary is asked by a reinsurer to set a pricing basis for a longevity swap. However, the Kaplan-Meier curves for males and females exhibit a pattern like that of Figure 12(b), suggesting that the data are corrupted. The consultant informs the reinsurer, which asks the cedant for better-quality data. The cedant refuses, and the consultant recommends that the reinsurer walks away from the transaction, which it does.

Case Study A.3

A consulting actuary is asked by the sponsoring employer of a pension scheme to validate the mortality basis used for funding calculations. The consulting actuary calculates the Kaplan-Meier curves for males and females and, like Case Study A.2, finds a pattern like that of Figure 12(b), suggesting that the data are corrupted. The third-party pension-scheme administrator is ordered to implement a data clean-up programme, which produces better data and thus allows a reliable basis to be derived.

Case Study A.4

The insurer of the annuities in Figure 3 needs to set a mortality basis for the year-end valuation in 2015. A simple multi-year comparison against a standard table is inappropriate, as Figure 3(b) shows that the transferred annuities are not a random sample of the overall portfolio. A

![]() $q$

-type analysis is complicated by the exit from observation of 60,000 annuities during 2013. A better solution is a

$q$

-type analysis is complicated by the exit from observation of 60,000 annuities during 2013. A better solution is a

![]() $\mu $

-type analysis, using the date of the last annuity payment on the system as a proxy for the date of right-censoring. This allows both transferred and remaining annuities to receive the same treatment as Cases A and B in Figure 1. Such a solution works best for annuities paid relatively frequently, e.g. monthly.

$\mu $

-type analysis, using the date of the last annuity payment on the system as a proxy for the date of right-censoring. This allows both transferred and remaining annuities to receive the same treatment as Cases A and B in Figure 1. Such a solution works best for annuities paid relatively frequently, e.g. monthly.

Case Study A.5

An actuary is tasked with setting a mortality basis for a large annuity portfolio. Eighteen months before the actuary’s appointment, annuities in payment were migrated to a new administration platform and records of prior deaths were lost. The actuary only has one-and-a-half years of experience data. Annuities set up before the migration are therefore like Cases D and E in Figure 1, whereas newer annuities set up post-migration are like Cases A and B.

The portfolio has scale in terms of the number of annuities, but it has no depth in terms of historic data. Using an annual

![]() $q$

-type GLM makes this depth problem even worse: the requirement for a complete year of potential exposure means discarding a third of what little historical data is available. The most recent half-year of experience would be discarded in order not to have mortality levels under-stated due to delays in reporting of deaths. Much of the new business written would therefore also be excluded from

$q$

-type GLM makes this depth problem even worse: the requirement for a complete year of potential exposure means discarding a third of what little historical data is available. The most recent half-year of experience would be discarded in order not to have mortality levels under-stated due to delays in reporting of deaths. Much of the new business written would therefore also be excluded from

![]() $q$

-type analysis, robbing the actuary of information on possible selection effects.

$q$

-type analysis, robbing the actuary of information on possible selection effects.

In contrast, a continuous-time solution is to model

![]() ${\mu _x}$

and use all eighteen months of data, including the new business. Delays in reporting of recent deaths could be handled either by using a parametric OBNR term (Richards, Reference Richards2022b) or using the approach of Richards (Reference Richards2022c), as illustrated in Figure A1. In the latter case, the level of mortality to use for a long-term purpose like valuation would have to be decided by judgement, say by picking the modelled (and thus smoothed) mortality level mid-way between a winter peak and a summer trough.

${\mu _x}$

and use all eighteen months of data, including the new business. Delays in reporting of recent deaths could be handled either by using a parametric OBNR term (Richards, Reference Richards2022b) or using the approach of Richards (Reference Richards2022c), as illustrated in Figure A1. In the latter case, the level of mortality to use for a long-term purpose like valuation would have to be decided by judgement, say by picking the modelled (and thus smoothed) mortality level mid-way between a winter peak and a summer trough.

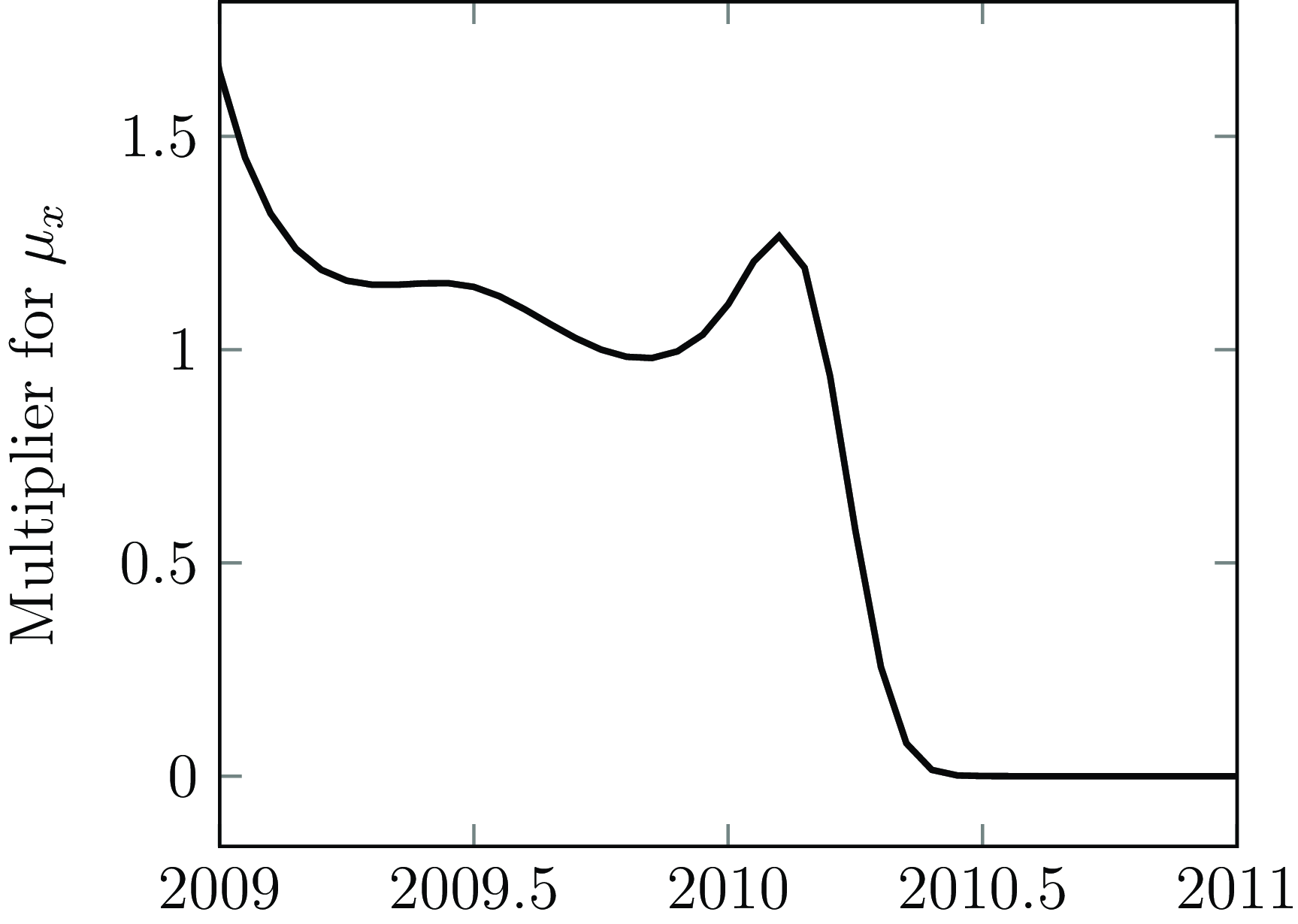

Figure A1. Mortality multiplier over time for French annuity portfolio, with multipliers standardized at 1 for October 2009. The data extract was taken in mid-2010, and the impact of reporting delays (OBNR) is very pronounced.

Source: Own calculations using flexible B-spline basis for mortality levels described in Richards (Reference Richards2022c).

Case Study A.6

A pricing analyst is looking for risk factors to use for annuitant mortality. One of the data fields available is whether an annuity has an attaching spouse’s benefit, i.e. a contingent annuity that commences on death of the first life. Statistical modelling suggests that the presence or absence of a spouse’s benefit is highly significant, and has a mortality effect on a par with the sex of the annuitant. The direction of the effect is plausible, with single-life annuitants exhibiting higher mortality (Ben-Shlomo et al., Reference Ben-Shlomo, Smith, Shipley and Marmot1993).

However, the analyst is aware of the potential for record corruption from Case Study A.2. Specifically, if the main annuitant dies and the spouse is then discovered to have pre-deceased them, the record of the contingent benefit might be removed during death processing. This would make the annuity look like a single-life annuity from outset, and thus distort the apparent mortality differential. The analyst emails the IT department with his concerns and is told that they are unfounded. Sceptical, he makes a further telephone enquiry and is again assured that the feared corruption is not present.

Believing that he may have found an important new risk factor for pricing, the analyst writes a note to the chief actuary. The analyst then remembers that these quality assurances come from the same IT department that lost all the mortality data in Case Study A.5. The analyst performs a third investigation, and discovers that his original suspicions were correct. The excess mortality for single-life annuitants has been grossly exaggerated by the deletion of pre-deceased spouse records.

Case Study A.7

An insurer with a large annuity portfolio has an administration system that automatically deletes records of annuitants who died more than two years in the past. Annuities are set up like Cases A and B in Figure 1, but after two years a Case A annuity becomes like Case D, while Case B annuities simply disappear from the administration system entirely. An urgent request is made to stop this rolling deletion of valuable data.

Similar to Case Study A.5, an annual

![]() $q$

-type GLM only permits the use of a single year of data – the most recent six months are ignored due to late-reported deaths, leaving 1.5 years of data, but a further half-year of unaffected data also has to be discarded to meet the requirement of only using complete years.

$q$

-type GLM only permits the use of a single year of data – the most recent six months are ignored due to late-reported deaths, leaving 1.5 years of data, but a further half-year of unaffected data also has to be discarded to meet the requirement of only using complete years.

As in Case Study A.5, a continuous-time solution is to model

![]() ${\mu _x}$

and use all two years of data. Delays in reporting of recent deaths could be handled either by using a parametric OBNR term (Richards, Reference Richards2022b) or using the approach of Richards (Reference Richards2022c), as illustrated in Figures 6 and A1. As in Case Study A.5, the level of mortality would be chosen by judgement, such as a modelled value from spring or autumn.

${\mu _x}$

and use all two years of data. Delays in reporting of recent deaths could be handled either by using a parametric OBNR term (Richards, Reference Richards2022b) or using the approach of Richards (Reference Richards2022c), as illustrated in Figures 6 and A1. As in Case Study A.5, the level of mortality would be chosen by judgement, such as a modelled value from spring or autumn.

Case Study A.8