If I dared, I would say we must have a theory – the word “theory” is so much disliked by so many Englishmen, and is considered by them so “unpractical”, that I avoid it all I can; though I cannot see, myself, that it is very “practical” to do things without knowing the theory of how to do them.

Wintringham & Blashford-Snell (Reference Wintringham and Blashford-Snell1973)1. Introduction

1.1. In Search of a Continuous-time Model of Mortality

Richards & Macdonald (Reference Richards and Macdonald2025) set out some practical benefits of using “continuous-time” models of mortality. This expository paper asks what we mean by a “continuous-time” model of mortality. As we seek an answer, in the theoretical basis of actuarial mortality modelling, we provide the language and notation to keep actuaries abreast of some fairly recent developments. The purpose of the paper is not to provide novel results, but to demonstrate how an actuary can see familiar objects in novel ways.

In fact, the idea of a “continuous-time” model of mortality is not clear-cut or self-contained, and it leads us to consider two contrasts, which we may think of as modelling phenomena on a “micro” scale versus phenomena on a “macro” scale. These are:

-

(a) very informally, the choice of infinitesimal time unit

$dx$

versus a discrete time unit, which we take to be a year; and

$dx$

versus a discrete time unit, which we take to be a year; and -

(b) models based on individual lives versus models based on collectives of lives, including, inter alia, the collection of data based on individual lives versus collection of age-grouped data.

1.2. Inspiration from the Life Table

The life table is an obvious source of inspiration. Indeed, in the past some have viewed the whole subject as being the construction of life tables, see for example Batten (Reference Batten1978). A life table is a model of a cohort of identical and independent individuals, followed from some initial selection event at integer age

![]() ${x_0} \ge 0$

(such as birth, with

${x_0} \ge 0$

(such as birth, with

![]() ${x_0} = 0$

) until mortality has extinguished the whole cohort. It is typically represented by the function

${x_0} = 0$

) until mortality has extinguished the whole cohort. It is typically represented by the function

![]() ${l_x}$

, interpreted as the expected number left alive at integer ages

${l_x}$

, interpreted as the expected number left alive at integer ages

![]() $x \ge {x_0}$

. The two key features are:

$x \ge {x_0}$

. The two key features are:

-

(a) the focus on the collective rather than the individual; and

-

(b) the time unit of a year

which are both “macro” properties. If we model the number of deaths between integer ages

![]() $x$

and

$x$

and

![]() $x + 1$

as a random variable

$x + 1$

as a random variable

![]() ${D_x}$

, then this formulation of the life table immediately suggests the binomial distribution as a model for

${D_x}$

, then this formulation of the life table immediately suggests the binomial distribution as a model for

![]() ${D_x}$

, see Section 2.3.

${D_x}$

, see Section 2.3.

A slightly different view of the life table inspires a different model. Allow

![]() ${l_x}$

to range over all real

${l_x}$

to range over all real

![]() $x \ge {x_0}$

, not just integer ages, and interpret the ratio

$x \ge {x_0}$

, not just integer ages, and interpret the ratio

![]() ${l_{x + n}}/{l_x}$

as the probability that an individual alive at age

${l_{x + n}}/{l_x}$

as the probability that an individual alive at age

![]() $x$

survives to age

$x$

survives to age

![]() $x + n$

(

$x + n$

(

![]() $x \ge {x_0},n \ge 0$

). This leads to further ideas, namely:

$x \ge {x_0},n \ge 0$

). This leads to further ideas, namely:

-

(a) a model in which death is possible at any moment of time; and

-

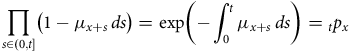

(b) the force of mortality or hazard rate (our preferred term)

${\mu _x}$

at age

${\mu _x}$

at age

$x$

as a measure of the instantaneous risk of death;

$x$

as a measure of the instantaneous risk of death;

which are both “micro” properties. However, observation is still “macro,” of the collective rather than of the individual. This setup suggests a Poisson model for

![]() ${D_x}$

, see Section 2.4.

${D_x}$

, see Section 2.4.

1.3. Models Based on Individuals: The Pseudo-Poisson Model

More recent introductions to the subject begin with the definition of the future lifetime of a person age

![]() $x$

as a non-negative random variable, denoted by

$x$

as a non-negative random variable, denoted by

![]() ${T_x}$

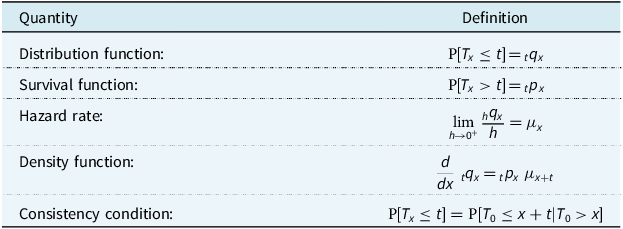

. For brevity and completeness, we compress the definitions of related quantities into Table 1, see Dickson et al. (Reference Dickson, Hardy and Waters2020) or Macdonald et al. (Reference Macdonald, Richards and Currie2018) for details. Of course, the actuarial symbols

${T_x}$

. For brevity and completeness, we compress the definitions of related quantities into Table 1, see Dickson et al. (Reference Dickson, Hardy and Waters2020) or Macdonald et al. (Reference Macdonald, Richards and Currie2018) for details. Of course, the actuarial symbols

![]() ${{\rm{\;}}_t}{q_x}{, {}_t}{p_x}$

and

${{\rm{\;}}_t}{q_x}{, {}_t}{p_x}$

and

![]() ${\mu _x}$

would be defined, based on the life table, in the process of obtaining the binomial and Poisson models in Section 1.2, but the point is that they are now defined by their rôles in the distribution of

${\mu _x}$

would be defined, based on the life table, in the process of obtaining the binomial and Poisson models in Section 1.2, but the point is that they are now defined by their rôles in the distribution of

![]() ${T_x}$

.

${T_x}$

.

Table 1. Definitions of quantities based on

![]() ${T_x}$

, the random future lifetime at age

${T_x}$

, the random future lifetime at age

![]() $x$

. The consistency condition assumes that

$x$

. The consistency condition assumes that

![]() ${x_0} = 0$

, and ensures that calculations based on the distribution of

${x_0} = 0$

, and ensures that calculations based on the distribution of

![]() ${T_x}$

will never contradict calculations based on the distribution of

${T_x}$

will never contradict calculations based on the distribution of

![]() ${T_y}$

(

${T_y}$

(

![]() $y \ne x$

)

$y \ne x$

)

If we define

![]() $T_0^i$

to be the random lifetime of the

$T_0^i$

to be the random lifetime of the

![]() $i$

th individual under observation, this model focuses attention on:

$i$

th individual under observation, this model focuses attention on:

-

(a) the individual rather than the collective; and

-

(b) events happening instantaneously, meaning during a short time period

$h$

as we let

$h$

as we let

$h \to {0^ + }$

;

$h \to {0^ + }$

;

which are both “micro” properties. The most important idea is expressed in the heuristic:

Knowing the density function of each

![]() $T_x^i$

(Table 1), we can write down the probability of any observations, hence a likelihood, and that leads to the following explanation of why the Poisson model of Section 2.4 works so well. Suppose we assume a constant hazard rate at each age, we observe

$T_x^i$

(Table 1), we can write down the probability of any observations, hence a likelihood, and that leads to the following explanation of why the Poisson model of Section 2.4 works so well. Suppose we assume a constant hazard rate at each age, we observe

![]() $M$

individuals and there are

$M$

individuals and there are

![]() $D$

deaths. Then:

$D$

deaths. Then:

-

(a) the model based on individual random lifetimes gives us, correctly, non-zero probabilities only of observing

$0,1, \ldots, M$

deaths, while

$0,1, \ldots, M$

deaths, while -

(b) a Poisson distribution would give us, incorrectly, non-zero probabilities also of observing

$M + 1,M + 2, \ldots $

deaths.

$M + 1,M + 2, \ldots $

deaths.

Moreover, unless the total time exposed-to-risk is a deterministic constant, fixed in advance by the observational scheme, the number of deaths cannot be Poisson (Macdonald, Reference Macdonald1996). However, both models above have the same likelihood, as if they were Poisson. It follows that likelihood-based inference will be identical under either model. This leads us to call the model based on age-grouped data (and a constant hazard) the pseudo-Poisson model (Section 3.7). We continue to seek the proper foundations of a mortality model at the “micro” level in the model of individual lifetimes.

1.4. Dynamic Life History Models I: Truncation and Censoring

The individual life history model lets us write down exact probabilities of observed events, if we know the hazard rates. It also lets us deal with incomplete observation, in particular:

-

(a) left-truncation: an individual enters observation having already survived to some age

${x_a} \gt 0$

; and

${x_a} \gt 0$

; and -

(b) right-censoring: the individual leaves observation while still alive, so we observe only that

${T_{{x_a}}} \gt {x_b - x_a}$

for some age

${T_{{x_a}}} \gt {x_b - x_a}$

for some age

${x_b} \gt {x_a}$

.

${x_b} \gt {x_a}$

.

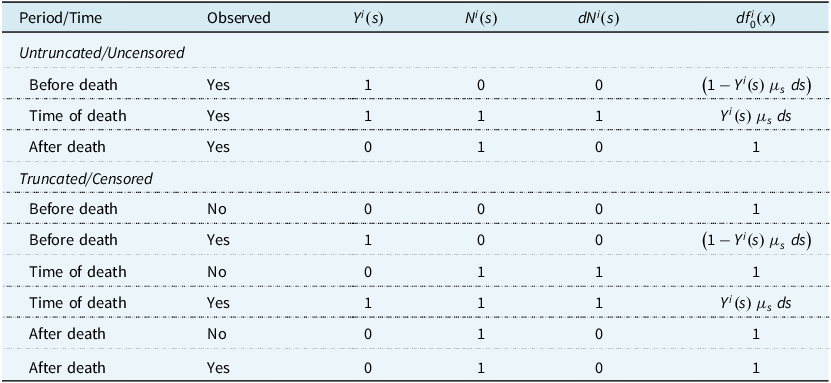

A neat device allows us to avoid the complication of keeping track of ages

![]() ${x_a}$

and

${x_a}$

and

![]() ${x_b}$

when writing expressions such as likelihoods. Define a process:

${x_b}$

when writing expressions such as likelihoods. Define a process:

(age

![]() ${x^ - }$

means “just before age

${x^ - }$

means “just before age

![]() $x$

” and is a technicality). The “under observation” condition takes care of left-truncation and right-censoring. Then, for example, the integrated hazard rate over the time spent under observation by the

$x$

” and is a technicality). The “under observation” condition takes care of left-truncation and right-censoring. Then, for example, the integrated hazard rate over the time spent under observation by the

![]() $i$

th individual (an important quantity in many calculations), can be written:

$i$

th individual (an important quantity in many calculations), can be written:

We see that the process

![]() ${Y^i}\left( x \right){\rm{\;}}{\mu _x}$

acts as a dynamic or stochastic hazard rate tailored to the

${Y^i}\left( x \right){\rm{\;}}{\mu _x}$

acts as a dynamic or stochastic hazard rate tailored to the

![]() $i$

th individual, and greatly simplifies expressions involving integrals, since all integrals can now be now taken over

$i$

th individual, and greatly simplifies expressions involving integrals, since all integrals can now be now taken over

![]() $\left( {0,\infty } \right]$

(Section 4.6).

$\left( {0,\infty } \right]$

(Section 4.6).

1.5. Dynamic Life History Models II: Back to Bernoulli

In a utilitarian sense the job was finished with Section 1.3, but the heuristic (1) suggests more to come. For, if

![]() ${{\rm{\;}}_h}{q_x} \approx h{\rm{\,}}{\mu _x}$

then

${{\rm{\;}}_h}{q_x} \approx h{\rm{\,}}{\mu _x}$

then

![]() ${{\rm{\;}}_h}{p_x} \approx 1 - h{\rm{\,}}{\mu _x}$

, and if we let

${{\rm{\;}}_h}{p_x} \approx 1 - h{\rm{\,}}{\mu _x}$

, and if we let

![]() ${\delta _x}$

be an indicator, equal to 1 if death occurs at age

${\delta _x}$

be an indicator, equal to 1 if death occurs at age

![]() $x$

, and 0 otherwise, then what is observed “during” time

$x$

, and 0 otherwise, then what is observed “during” time

![]() $h$

is the outcome of a Bernoulli trial with parameter

$h$

is the outcome of a Bernoulli trial with parameter

![]() $h{\rm{\,}}{\mu _x}$

and probability:

$h{\rm{\,}}{\mu _x}$

and probability:

We would like to take all such consecutive “instantaneous” Bernoulli trials while the individual is alive and under observation, and multiply their probabilities (4) together. In all of probability theory, there is nothing simpler than a Bernoulli trial, so we really would have reduced a probability in a mortality model to its constituent “atoms”; the ultimate “micro” level. That is what we describe in Section 4. To do so we introduce two ideas, which give us the notation needed to write down a product of Bernoulli probabilities like (4) in a rigorous way.

-

(a) Counting processes: A counting process

${N^i}\left( x \right)$

starts at

${N^i}\left( x \right)$

starts at

${N^i}\left( 0 \right) = 0$

and jumps to 1 at time

${N^i}\left( 0 \right) = 0$

and jumps to 1 at time

$T_0^i$

if the

$T_0^i$

if the

$i$

th individual is then under observation. Then its increment

$i$

th individual is then under observation. Then its increment

$d{N^i}\left( x \right)$

indicates an observed death, and is a rigorous version of the informal

$d{N^i}\left( x \right)$

indicates an observed death, and is a rigorous version of the informal

${\delta _x}$

in (4). Between them,

${\delta _x}$

in (4). Between them,

${N^i}\left( x \right)$

and

${N^i}\left( x \right)$

and

${Y^i}\left( x \right)$

let us write the Bernoulli trial probability (4) formally as:

${Y^i}\left( x \right)$

let us write the Bernoulli trial probability (4) formally as:

and this allows for left-truncation and right-censoring.

-

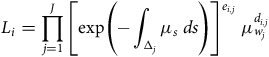

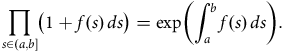

(b) Product-integral: The product-integral is the device that lets us multiply all the infinitesimal Bernoulli trial probabilities. We defer further description to Section 4.2 and Appendix B and just give the final form of the likelihood contributed by the

$i$

th individual, denoted by

$i$

th individual, denoted by

${L_i}$

:

${L_i}$

:

The product-integral is identified by a product over all values of an interval (

![]() $x \in \left( {0,\infty } \right]$

here) and the presence of the variable of integration (

$x \in \left( {0,\infty } \right]$

here) and the presence of the variable of integration (

![]() $dx$

here) in the integrand.

$dx$

here) in the integrand.

1.6. What is a “Continuous-time” Model?

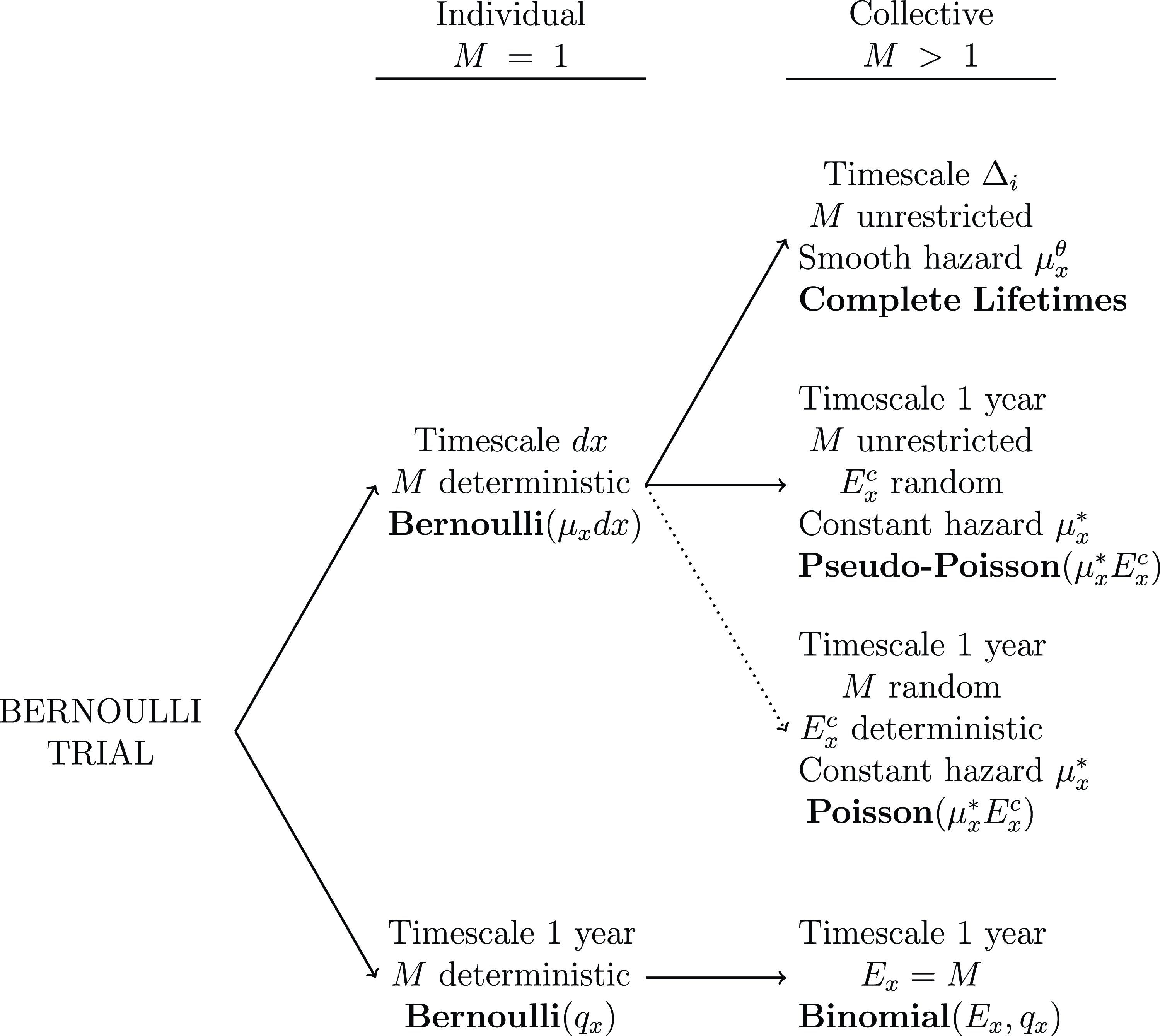

We started out by trying to pin down what we meant by the vague term “continuous-time mortality model.” Our answer is: it is the class of models with Poisson-like likelihoods built up, by product integration, out of infinitesimal Bernoulli trials (see Section 4.8 and Figure 2). Any model in this class has the following properties.

-

(a) It is irreducible, in the sense that it is composed of (infinitesimal) Bernoulli trials.

-

(b) It is based on behaviour at the “micro” time scale.

-

(c) It is based on individual lives.

-

(d) Aggregated, over time and over individuals, it explains the Poisson-like nature of likelihoods, therefore estimation based on the collective at the “macro” time scale.

-

(e) It allows for left-truncation and right-censoring.

-

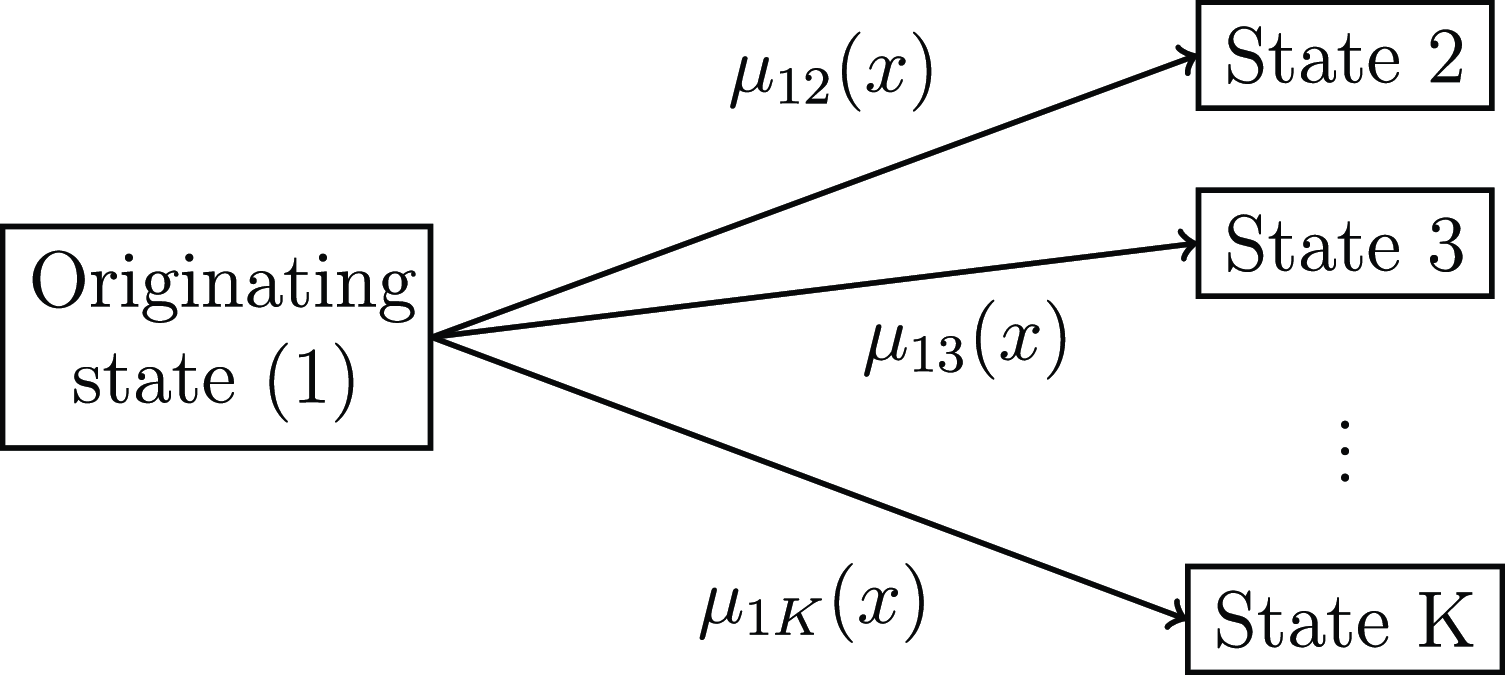

(f) It is easily extended to multiple-decrement and multiple-state models.

This class includes, as a special case, true Poisson distributions, but these are always associated with an improbable observational plan. Note that our endpoint is just the starting point for the modern statistical study of survival models (Section 4.10), see Andersen et al. (Reference Andersen, Borgan, Gill and Keiding1993).

1.7. Plan of this Paper

We start in Section 2 with Forfar et al. (Reference Forfar, McCutcheon and Wilkie1988), a definitive account of graduation using binomial and Poisson models, which we call mortality models at the “macro” scale. Then in Section 3 we turn to models at the “micro” scale based on individual lifetimes, and find the origins of Poisson-like behaviour at the “macro” scale. In Section 4 we bring together models of individual lifetimes and models based on behaviour over small intervals

![]() $h$

as

$h$

as

![]() $h \to {0^ + }$

, and find that all probabilities in a mortality model arise as a product of consecutive (infinitesimal) Bernoulli trials. Section 5 concludes.

$h \to {0^ + }$

, and find that all probabilities in a mortality model arise as a product of consecutive (infinitesimal) Bernoulli trials. Section 5 concludes.

2. Binomial and Poisson Models

2.1. Forfar et al. (Reference Forfar, McCutcheon and Wilkie1988)

In a landmark paper, Forfar et al. (Reference Forfar, McCutcheon and Wilkie1988) gave comprehensive accounts of two models for survival data, namely the binomial and Poisson models. These defined: (a) the random variable

![]() ${D_x}$

, to be the number of deaths observed at age

${D_x}$

, to be the number of deaths observed at age

![]() $x$

; and (b) a suitable measure of exposure to risk at age

$x$

; and (b) a suitable measure of exposure to risk at age

![]() $x$

, assumed to be non-random, that we will call

$x$

, assumed to be non-random, that we will call

![]() ${V_x}$

. Then the occurrence-exposure rate

${V_x}$

. Then the occurrence-exposure rate

![]() ${D_x}/{V_x}$

was shown to be an estimate of the model parameter, a mortality rate

${D_x}/{V_x}$

was shown to be an estimate of the model parameter, a mortality rate

![]() $\hat q$

in the binomial model, and a hazard rate

$\hat q$

in the binomial model, and a hazard rate

![]() $\hat \mu $

in the Poisson modelFootnote

1

.

$\hat \mu $

in the Poisson modelFootnote

1

.

Forfar et al. (Reference Forfar, McCutcheon and Wilkie1988) helpfully located the old subject of parametric graduation in a modern statistical setting, including model specification, likelihood, score function and information, covariance matrix, model selection and parametric bootstrapping. The treatment was heavily influenced by the authors’ work for the Continuous Mortality Investigation Bureau (the CMIB, now CMI) particularly in respect of data collection. The advance it represented may be gauged by comparison with contemporary texts such as Batten (Reference Batten1978) and Benjamin and Pollard (Reference Benjamin and Pollard1980).

Both binomial and Poisson models are rooted in simple thought-experiments, require no statistics beyond a first course in data analysis, and can, with qualifications, be implemented in standard statistical packages such as R (R Core Team, 2021). This gives them considerable staying power.

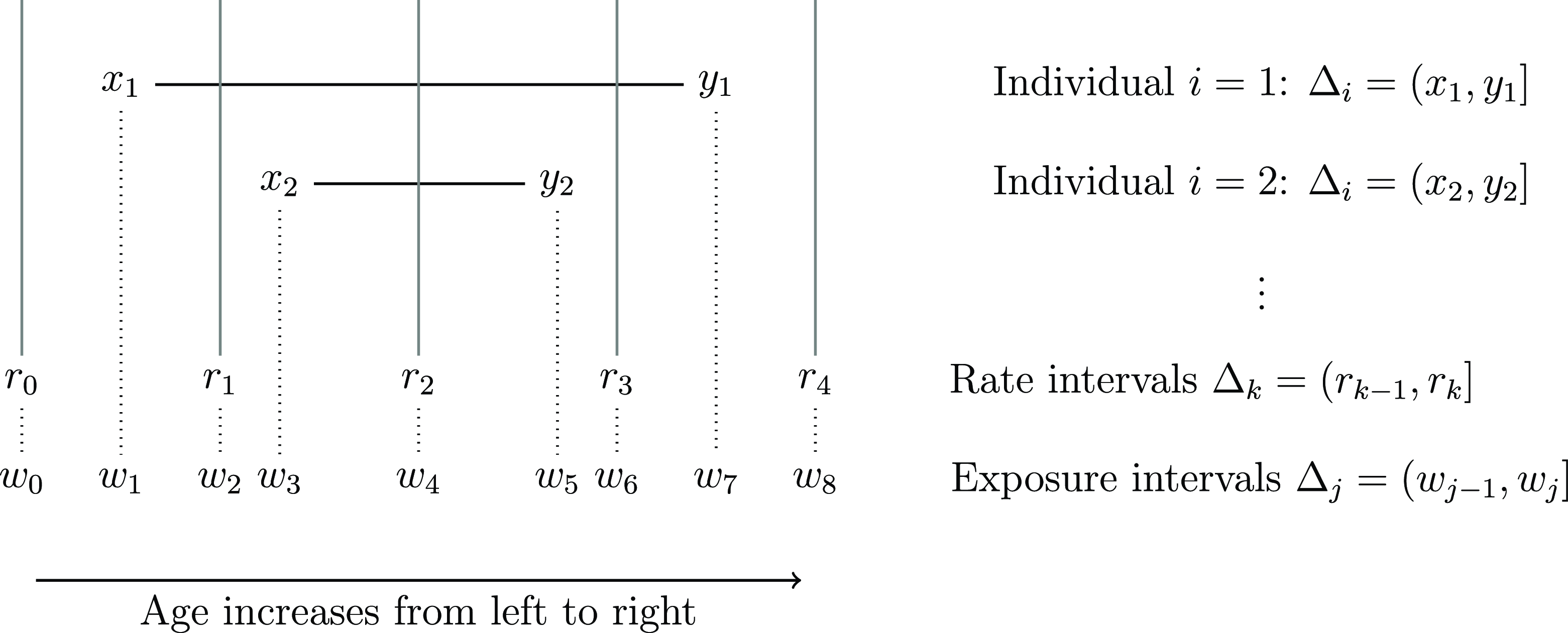

2.2. The Rate Interval and

${{\bf{\Delta }}_k}$

Notation

${{\bf{\Delta }}_k}$

Notation

The rate interval is an interval of age (or calendar time) on which an individual is assigned a given age label. It is the means of assigning an age label to an individual exposed to the risk of death and at the time of death. Note that rate intervals are only needed with age-grouped data, not models based on individual lives. They are treated in detail in texts such as Benjamin and Pollard (Reference Benjamin and Pollard1980). We assume that the rate interval, when we need one, is the year of age

![]() $\left( {x,x + 1} \right]$

defined by “age last birthday.” The CMI, for another example, use the year of age

$\left( {x,x + 1} \right]$

defined by “age last birthday.” The CMI, for another example, use the year of age

![]() $\left( {x - 1/2,x + 1/2} \right]$

defined by “age nearest birthday.”

$\left( {x - 1/2,x + 1/2} \right]$

defined by “age nearest birthday.”

We assume that the data are covered by

![]() $K$

rate intervals and that in the abstract these may be denoted by

$K$

rate intervals and that in the abstract these may be denoted by

![]() ${{\rm{\Delta }}_k}$

${{\rm{\Delta }}_k}$

![]() $\left( {k = 1,2, \ldots, K} \right)$

; and that a sum over all rate intervals may be denoted by

$\left( {k = 1,2, \ldots, K} \right)$

; and that a sum over all rate intervals may be denoted by

![]() $\mathop \sum \nolimits_k $

, and a product likewise by

$\mathop \sum \nolimits_k $

, and a product likewise by

![]() $\mathop \prod \nolimits_k $

.

$\mathop \prod \nolimits_k $

.

2.3. Binomial Models

The binomial model is based on the following thought-experiment: take

![]() ${E_x}$

lives at the start of a year, all alive at age

${E_x}$

lives at the start of a year, all alive at age

![]() $x$

and assumed to be “statistically independent” in respect of their mortality risk. Then

$x$

and assumed to be “statistically independent” in respect of their mortality risk. Then

![]() ${E_x}$

is the measure of exposure referred to as

${E_x}$

is the measure of exposure referred to as

![]() ${V_x}$

in Section 2.1, usually here called the initial exposed-to-risk. Define

${V_x}$

in Section 2.1, usually here called the initial exposed-to-risk. Define

![]() ${D_x}$

to be the number who are dead at the end of the year, and

${D_x}$

to be the number who are dead at the end of the year, and

![]() ${q_x}$

to be the probability that a life alive at age

${q_x}$

to be the probability that a life alive at age

![]() $x$

dies not later than age

$x$

dies not later than age

![]() $x + 1$

. Then the following are easily shown.

$x + 1$

. Then the following are easily shown.

-

(a)

${D_x}$

has a

${D_x}$

has a

${\rm{\;binomial}}\left( {{E_x},{q_x}} \right)$

distribution, with first two moments

${\rm{\;binomial}}\left( {{E_x},{q_x}} \right)$

distribution, with first two moments

${\rm{\;E\;}}\left[ {{D_x}} \right] = {E_x}{\rm{\;}}{q_x}$

and

${\rm{\;E\;}}\left[ {{D_x}} \right] = {E_x}{\rm{\;}}{q_x}$

and

${\rm{\;Var\;}}\left[ {{D_x}} \right] = {E_x}{\rm{\;}}{q_x}{\rm{\;}}\left( {1 - {q_x}} \right)$

.

${\rm{\;Var\;}}\left[ {{D_x}} \right] = {E_x}{\rm{\;}}{q_x}{\rm{\;}}\left( {1 - {q_x}} \right)$

. -

(b) As a function of parameter

${q_x}$

, the data

${q_x}$

, the data

$\left( {{D_x},{E_x}} \right)$

has likelihood function:

$\left( {{D_x},{E_x}} \right)$

has likelihood function:

leading to the maximum likelihood estimate (MLE)

![]() ${\hat q_x} = {D_x}/{E_x}$

which is asymptotically unbiased (

${\hat q_x} = {D_x}/{E_x}$

which is asymptotically unbiased (

![]() ${\rm{E}}\left[ {{{\hat q}_x}} \right] = {q_x}$

) with variance

${\rm{E}}\left[ {{{\hat q}_x}} \right] = {q_x}$

) with variance

![]() ${\rm{\;Var\;}}\left[ {{{\hat q}_x}} \right] = {q_x}{\rm{}}\left( {1 - {q_x}} \right)/{E_x}$

.

${\rm{\;Var\;}}\left[ {{{\hat q}_x}} \right] = {q_x}{\rm{}}\left( {1 - {q_x}} \right)/{E_x}$

.

-

(c) The estimate

${\hat q_x}$

is an estimate of

${\hat q_x}$

is an estimate of

${q_x}$

, that is, the function value at start of the rate interval

${q_x}$

, that is, the function value at start of the rate interval

$\left( {x,x + 1} \right]$

.

$\left( {x,x + 1} \right]$

.

![]() ${D_x}$

can be viewed as the number of successes out of

${D_x}$

can be viewed as the number of successes out of

![]() ${E_x}$

independent Bernoulli trials, each with probability of success (death) equal to

${E_x}$

independent Bernoulli trials, each with probability of success (death) equal to

![]() ${q_x}$

. The idea of the Bernoulli trial as the fundamental “atom” of mortality risk appears again in Section 4.5.

${q_x}$

. The idea of the Bernoulli trial as the fundamental “atom” of mortality risk appears again in Section 4.5.

2.4. Poisson Models

The Poisson model depends on a different thought-experiment. An unspecified number of individuals is observed, alive during the relevant rate interval of age

![]() $\left( {x,x + 1} \right]$

, such that the total time alive and under observation is a non-random quantity

$\left( {x,x + 1} \right]$

, such that the total time alive and under observation is a non-random quantity

![]() $E_x^c$

. Now

$E_x^c$

. Now

![]() $E_x^c$

is the measure of exposure referred to as

$E_x^c$

is the measure of exposure referred to as

![]() ${V_x}$

in Section 2.1, usually here called the central exposed-to-risk. A constant force of mortality

${V_x}$

in Section 2.1, usually here called the central exposed-to-risk. A constant force of mortality

![]() $\mu $

is assumed at all ages in the rate interval

$\mu $

is assumed at all ages in the rate interval

![]() $\left( {x,x + 1} \right]$

. Define

$\left( {x,x + 1} \right]$

. Define

![]() ${D_x}$

to be the number of observed deaths. Then the following can be shown.

${D_x}$

to be the number of observed deaths. Then the following can be shown.

-

(a)

${D_x}$

has a

${D_x}$

has a

${\rm{\;Poisson}}\left( {\mu {\rm{\,}}E_x^c} \right)$

distribution with first two moments

${\rm{\;Poisson}}\left( {\mu {\rm{\,}}E_x^c} \right)$

distribution with first two moments

${\rm{E}}\left[ {{D_x}\left] { = {\rm{Var}}} \right[{D_x}} \right] = \mu {\rm{\,}}E_x^c$

.

${\rm{E}}\left[ {{D_x}\left] { = {\rm{Var}}} \right[{D_x}} \right] = \mu {\rm{\,}}E_x^c$

. -

(b) As a function of parameter

$\mu $

, the data

$\mu $

, the data

$\left( {{D_x},E_x^c} \right)$

has likelihood function:

$\left( {{D_x},E_x^c} \right)$

has likelihood function: $$L\left( \mu \right) = {(\mu {\rm{\,}}E_x^c)^{{D_x}}}{\rm{\;exp}}\left( { - \mu {\rm{\,}}E_x^c} \right)/{D_x}!$$

(8)

$$L\left( \mu \right) = {(\mu {\rm{\,}}E_x^c)^{{D_x}}}{\rm{\;exp}}\left( { - \mu {\rm{\,}}E_x^c} \right)/{D_x}!$$

(8) $${\propto {\rm{exp}}\left( { - \mu {\rm{\,}}E_x^c} \right){\rm{\;}}{\mu ^{{D_x}}},}$$

$${\propto {\rm{exp}}\left( { - \mu {\rm{\,}}E_x^c} \right){\rm{\;}}{\mu ^{{D_x}}},}$$

leading to the MLE

$\hat \mu = {D_x}/E_x^c$

which is asymptotically unbiased (

$\hat \mu = {D_x}/E_x^c$

which is asymptotically unbiased (

${\rm{E\;}}\left[ {\hat \mu } \right] = {\mu _x}$

) with variance

${\rm{E\;}}\left[ {\hat \mu } \right] = {\mu _x}$

) with variance

${\rm{Var}}\left[ {\hat \mu } \right] = \mu /E_x^c$

.

${\rm{Var}}\left[ {\hat \mu } \right] = \mu /E_x^c$

. -

(c) Assuming a relatively even distribution of exposure over the rate interval

$\left( {x,x + 1} \right]$

, the MLE

$\left( {x,x + 1} \right]$

, the MLE

$\hat \mu $

estimates

$\hat \mu $

estimates

${\mu _{x + 1/2}}$

.

${\mu _{x + 1/2}}$

.

2.5. Terminology

Binomial and Poisson models may be described in different ways. The binomial model admits of no conceivable time other than its own time unit; it is unambiguously a discrete-time model. It may also be called a

![]() $q$

-type model in honour of its conventional parameter. The Poisson model is a candidate for a continuous-time model, although it turns out to be an extreme representative of a whole class, see Sections 3 et seq. It may also be called a

$q$

-type model in honour of its conventional parameter. The Poisson model is a candidate for a continuous-time model, although it turns out to be an extreme representative of a whole class, see Sections 3 et seq. It may also be called a

![]() $\mu $

-type model in honour of its conventional parameter. See Richards and Macdonald (Reference Richards and Macdonald2025) for both terminologies.

$\mu $

-type model in honour of its conventional parameter. See Richards and Macdonald (Reference Richards and Macdonald2025) for both terminologies.

2.6. Assessment of the Binomial and Poisson Models

2.6.1. Feasibility of the Thought-Experiment: Binomial Model

To carry out the binomial thought-experiment we would need a homogeneous sample of

![]() ${E_x}$

individuals age

${E_x}$

individuals age

![]() $x$

, observed to be alive or dead at age

$x$

, observed to be alive or dead at age

![]() $x + 1$

. This contrasts with observation of (say) members of a pension scheme or life office policyholders. Real data often includes exits for reasons other than death and not under the modeller’s control, see Richards and Macdonald (Reference Richards and Macdonald2025), Section 3 and Appendix for examples. The requirements of the binomial experiment will not be met by: (a) individuals entering observation between ages

$x + 1$

. This contrasts with observation of (say) members of a pension scheme or life office policyholders. Real data often includes exits for reasons other than death and not under the modeller’s control, see Richards and Macdonald (Reference Richards and Macdonald2025), Section 3 and Appendix for examples. The requirements of the binomial experiment will not be met by: (a) individuals entering observation between ages

![]() $x$

and

$x$

and

![]() $x + 1$

; and (b) individuals leaving observation between ages

$x + 1$

; and (b) individuals leaving observation between ages

![]() $x$

and

$x$

and

![]() $x + 1$

, for reasons other than death.

$x + 1$

, for reasons other than death.

Thus we are led to ask, what is the probability of surviving over any fraction of the rate interval? For example, an individual joining at age

![]() $x - 1/2$

and surviving to age

$x - 1/2$

and surviving to age

![]() $x$

requires the calculation of

$x$

requires the calculation of

![]() ${{\rm{\;}}_{1/2}}{p_{x - 1/2}}$

. The binomial model gives no satisfactory answer. Strictly, the question lies outside the bounds of the model. Even if we could implement the thought-experiment, the model posits only the number of lives observed at the start and end of the rate interval.

${{\rm{\;}}_{1/2}}{p_{x - 1/2}}$

. The binomial model gives no satisfactory answer. Strictly, the question lies outside the bounds of the model. Even if we could implement the thought-experiment, the model posits only the number of lives observed at the start and end of the rate interval.

Nevertheless, an answer may be demanded, because individuals can and do join or leave an investigation in the middle of the rate interval, see (Richards and Macdonald, Reference Richards and Macdonald2025, Section 3) for numerous examples in practice. The analyst is obliged to make some assumption about mortality between ages

![]() $x$

and

$x$

and

![]() $x + 1$

, for which the binomial model gives no guidance. Three popular assumptions have been:

$x + 1$

, for which the binomial model gives no guidance. Three popular assumptions have been:

-

(a) a uniform distribution of deaths;

-

(b) the Balducci hypothesis; and

-

(c) a constant hazard rate.

See Macdonald (Reference Macdonald1996) or Richards and Macdonald (Reference Richards and Macdonald2025) for a discussion of these. Here we just remark that (c), a constant hazard rate, is mathematically the simplest, fully consistent with the Poisson model, and also consistent with modelling individual lifetimes as in Section 3.

2.6.2. Feasibility of the Thought-Experiment: Poisson Model

The Poisson thought-experiment is not troubled by fractions of the rate interval. Since the hazard rate is assumed to be a constant

![]() $\mu $

during the rate interval

$\mu $

during the rate interval

![]() $\left( {x,x + 1} \right]$

, the probability of dying during the sub-interval

$\left( {x,x + 1} \right]$

, the probability of dying during the sub-interval

![]() $\left( {x + a,x + b} \right]$

(given alive at age

$\left( {x + a,x + b} \right]$

(given alive at age

![]() $x + a$

, for

$x + a$

, for

![]() $0 \le a \lt b \le 1$

) is

$0 \le a \lt b \le 1$

) is

![]() $1 - {\rm{exp}}\left( { - \mu {\rm{\,}}\left( {b - a} \right)} \right)$

.

$1 - {\rm{exp}}\left( { - \mu {\rm{\,}}\left( {b - a} \right)} \right)$

.

The Poisson thought-experiment is not met in practice, however, for different reasons. The distribution of

![]() ${D_x}$

is Poisson only if the exposed-to-risk

${D_x}$

is Poisson only if the exposed-to-risk

![]() $E_x^c$

is non-random, for example pre-determined. This is not the case if: (a) the population being sampled is finite, with known maximum size

$E_x^c$

is non-random, for example pre-determined. This is not the case if: (a) the population being sampled is finite, with known maximum size

![]() $M$

individuals, say, because then

$M$

individuals, say, because then

![]() ${D_x} \le M$

, but

${D_x} \le M$

, but

![]() ${\rm{\;P\;}}[{D_x} \gt M] \gt 0$

under any Poisson distribution; or (b) the exposure times of the individuals in the sampled population are not known in advance, because then

${\rm{\;P\;}}[{D_x} \gt M] \gt 0$

under any Poisson distribution; or (b) the exposure times of the individuals in the sampled population are not known in advance, because then

![]() $E_x^c$

is random. Moreover,

$E_x^c$

is random. Moreover,

![]() ${D_x}$

is usually a component of the bivariate random variable

${D_x}$

is usually a component of the bivariate random variable

![]() $\left( {{D_x},E_x^c} \right)$

. In such cases we call

$\left( {{D_x},E_x^c} \right)$

. In such cases we call

![]() ${D_x}$

pseudo-Poisson, see Section 3. For estimation purposes, however, it behaves as a true Poisson random variable would, see Section 3.7.

${D_x}$

pseudo-Poisson, see Section 3. For estimation purposes, however, it behaves as a true Poisson random variable would, see Section 3.7.

2.6.3. Occurrence-exposure Rates, Age-grouped Data and Graduation

The estimates

![]() ${\hat q_x} = {D_x}/{E_x}$

and

${\hat q_x} = {D_x}/{E_x}$

and

![]() ${\hat \mu _x} = {D_x}/E_x^c$

are examples of occurrence-exposure rates. Both they and their sample variances (Sections 2.3 and 2.4) require only the age-grouped totals

${\hat \mu _x} = {D_x}/E_x^c$

are examples of occurrence-exposure rates. Both they and their sample variances (Sections 2.3 and 2.4) require only the age-grouped totals

![]() ${D_x}$

and

${D_x}$

and

![]() ${E_x}$

or

${E_x}$

or

![]() $E_x^c$

to be reported to the analyst, rather than data on each individual. Such totals may easily be extracted from ordinary data files used in the business; they greatly reduce the volume of data required (which used to matter a lot); and they reduce the risk of accidentally breaching data-protection rules (which matters now). On the other hand they do not allow the level of checking and cleaning of the data that is possible with individual data (Macdonald et al., Reference Macdonald, Richards and Currie2018, Chapter 2).

$E_x^c$

to be reported to the analyst, rather than data on each individual. Such totals may easily be extracted from ordinary data files used in the business; they greatly reduce the volume of data required (which used to matter a lot); and they reduce the risk of accidentally breaching data-protection rules (which matters now). On the other hand they do not allow the level of checking and cleaning of the data that is possible with individual data (Macdonald et al., Reference Macdonald, Richards and Currie2018, Chapter 2).

If age-grouped data are prepared by someone other than the analyst, the modelling is wholly dependent on the thoroughness and diligence of the source provider. This is a material concern for risk transfer transactions, such as reinsurance, bulk annuities and portfolio transfers. If a model is to be used to price a risk transfer, the analyst should always insist on individual records, regardless of whether the intent is to use models based on individuals or age-grouped counts.

Occurrence-exposure rates

![]() ${\hat q_x}$

or

${\hat q_x}$

or

![]() ${\hat \mu _x}$

are normally smoothed or graduated for practical use. For this purpose a likelihood may be calculated as the product of the likelihoods for each rate interval, using age-grouped data. Other, non-likelihood methods may also be used (Forfar et al., Reference Forfar, McCutcheon and Wilkie1988).

${\hat \mu _x}$

are normally smoothed or graduated for practical use. For this purpose a likelihood may be calculated as the product of the likelihoods for each rate interval, using age-grouped data. Other, non-likelihood methods may also be used (Forfar et al., Reference Forfar, McCutcheon and Wilkie1988).

2.7. Generalized Linear Models (GLMs)

To the list of properties in Sections 2.3 and 2.4 we could have added “(d) Leads to a simple Generalized Linear Model (GLM) for graduating age-grouped mortality data.”

GLMs were introduced by Nelder and Wedderburn (Reference Nelder and Wedderburn1972), and contain three elements: (a) a random component,

![]() ${Y_x}$

; (b) a systematic component,

${Y_x}$

; (b) a systematic component,

![]() ${\eta _x}$

; and (c) a link function,

${\eta _x}$

; and (c) a link function,

![]() $g$

. A GLM connects the expectation of

$g$

. A GLM connects the expectation of

![]() ${Y_x}$

to

${Y_x}$

to

![]() ${\eta _x}$

via

${\eta _x}$

via

![]() $g$

as follows:

$g$

as follows:

The component

![]() ${\eta _x}$

is the linear predictor; in mortality work it is a linear function of age,

${\eta _x}$

is the linear predictor; in mortality work it is a linear function of age,

![]() $x$

, and a corresponding covariate vector,

z

$x$

, and a corresponding covariate vector,

z

![]() ${{\rm{\;\!}}_x}$

. Let

θ

be the vector of parameters to estimate, and let

X

be the corresponding model matrix. Each observation

${{\rm{\;\!}}_x}$

. Let

θ

be the vector of parameters to estimate, and let

X

be the corresponding model matrix. Each observation

![]() ${Y_x}$

has a corresponding row in

X

. For a binomial GLM we have:

${Y_x}$

has a corresponding row in

X

. For a binomial GLM we have:

For a Poisson GLM with the link function

![]() $g\left( x \right) = {\rm{log}}\left( x \right)$

we have:

$g\left( x \right) = {\rm{log}}\left( x \right)$

we have:

where

![]() $\left[ {x,{\rm{\;}}} \right]$

selects the row for the observation corresponding to age

$\left[ {x,{\rm{\;}}} \right]$

selects the row for the observation corresponding to age

![]() $x$

.

$x$

.

The link function,

![]() $g$

, is chosen by the analyst. The canonical link for the binomial GLM is the logit, but other link functions can be used, such as the probit link. The canonical link for the Poisson GLM is the logarithm, but other link functions have been used for mortality work, such as the logit link; see Currie (Reference Currie2016), Appendix A for implementation details of the logit link for Poisson GLMs.

$g$

, is chosen by the analyst. The canonical link for the binomial GLM is the logit, but other link functions can be used, such as the probit link. The canonical link for the Poisson GLM is the logarithm, but other link functions have been used for mortality work, such as the logit link; see Currie (Reference Currie2016), Appendix A for implementation details of the logit link for Poisson GLMs.

GLMs have a link with “classical” actuarial modelling since one of the simplest choices of fitted

![]() ${\hat \eta _x}$

is a Gompertz function, but this does not extend to other members of the Gompertz-Makeham family (see Forfar et al. (Reference Forfar, McCutcheon and Wilkie1988)).

${\hat \eta _x}$

is a Gompertz function, but this does not extend to other members of the Gompertz-Makeham family (see Forfar et al. (Reference Forfar, McCutcheon and Wilkie1988)).

GLMs are popular because they are flexible, have nice statistical properties and are linear in the covariates. The binomial and Poisson error structures arise naturally for “count” data, and age-grouped deaths are examples of “counts” so these GLMs are, in a sense, natural candidates as mortality models. However, linear dependence on covariates, and the canonical link functions associated with the exponential family, are restrictive, and for large experiences we will often find better-fitting models that are not GLMs (see, for example, the range of models included in Cairns et al. (Reference Cairns, Blake, Dowd, Coughlan, Epstein, Ong and Balevich2009)). In addition GLMs bring us no closer to any foundational concept of a “mechanism” generating mortality data, so we do not consider them further.

3. Modelling Individual Lifetimes: The Pseudo-Poisson Model

3.1. Observation of an Individual

Suppose the

![]() $i$

th individual is observed from age

$i$

th individual is observed from age

![]() ${x_i}$

until age

${x_i}$

until age

![]() ${y_i}$

for total time

${y_i}$

for total time

![]() ${v_i} = {y_i} - {x_i}$

. Denote the interval

${v_i} = {y_i} - {x_i}$

. Denote the interval

![]() $\left( {{x_i},{y_i}} \right]$

by

$\left( {{x_i},{y_i}} \right]$

by

![]() ${{\rm{\Delta }}_i}$

. Observation ends because of either death or right-censoring at age

${{\rm{\Delta }}_i}$

. Observation ends because of either death or right-censoring at age

![]() ${y_i}$

. Define the indicator:

${y_i}$

. Define the indicator:

Then the random variable observed is the bivariate

![]() $\left( {{d_i},{v_i}} \right)$

, and the total contribution to the likelihood of these observations, denoted by

$\left( {{d_i},{v_i}} \right)$

, and the total contribution to the likelihood of these observations, denoted by

![]() ${L_i}$

, is:

${L_i}$

, is:

see Table 1, or Macdonald et al. (Reference Macdonald, Richards and Currie2018), Chapter 5.

3.2. Age Intervals and

${{\rm{\Delta }}_i}$

Notation

${{\rm{\Delta }}_i}$

Notation

The definition of the interval

![]() ${{\rm{\Delta }}_i}$

depends on the observational plan and the method of investigation. The important point is that it is time under observation of a single individual, the

${{\rm{\Delta }}_i}$

depends on the observational plan and the method of investigation. The important point is that it is time under observation of a single individual, the

![]() $i$

th of

$i$

th of

![]() $M$

individuals. Some examples are the following.

$M$

individuals. Some examples are the following.

-

(a) The interval may be the entire period for which the

$i$

th individual was observed, potentially spanning many years.

$i$

th individual was observed, potentially spanning many years. -

(b) The interval may be that part of a rate interval (for example, year of age) for which the

$i$

th individual was under observation.

$i$

th individual was under observation. -

(c) The interval may be an interval of age on which the hazard rate is assumed to be constant.

Therefore the likelihood (13) based on

![]() ${{\rm{\Delta }}_i}$

may constitute the whole of the

${{\rm{\Delta }}_i}$

may constitute the whole of the

![]() $i$

th individual’s contribution to the total likelihood, or only part of it. We will call a contribution to a likelihood of the form (13) a survival model likelihood, whether it forms all or part of the

$i$

th individual’s contribution to the total likelihood, or only part of it. We will call a contribution to a likelihood of the form (13) a survival model likelihood, whether it forms all or part of the

![]() $i$

th individual’s contribution, and whether or not hazard rates are assumed to be piecewise-constant.

$i$

th individual’s contribution, and whether or not hazard rates are assumed to be piecewise-constant.

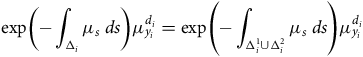

3.3. Multiplication and Factorization of Survival Model Likelihoods

Survival model likelihoods in respect of the same individual over contiguous intervals, multiplied together, give another survival model likelihood. In reverse, a survival model likelihood may be factorized into as many factors of like kind as we please. To see the multiplicative property, suppose the

![]() $i$

th individual is observed on the contiguous intervals

$i$

th individual is observed on the contiguous intervals

![]() ${\rm{\Delta }}_i^1 = \left( {{x_i},{z_i}} \right]$

and

${\rm{\Delta }}_i^1 = \left( {{x_i},{z_i}} \right]$

and

![]() ${\rm{\Delta }}_i^2 = \left( {{z_i},{y_i}} \right]$

, and that indicators of death

${\rm{\Delta }}_i^2 = \left( {{z_i},{y_i}} \right]$

, and that indicators of death

![]() $d_i^{\left( 1 \right)}$

at time

$d_i^{\left( 1 \right)}$

at time

![]() ${z_i}$

and

${z_i}$

and

![]() $d_i^{\left( 2 \right)}$

at time

$d_i^{\left( 2 \right)}$

at time

![]() ${y_i}$

are defined analagously to

${y_i}$

are defined analagously to

![]() ${d_i}$

above. Then if

${d_i}$

above. Then if

![]() ${{\rm{\Delta }}_i} = \left( {{x_i},{y_i}} \right]$

as before:

${{\rm{\Delta }}_i} = \left( {{x_i},{y_i}} \right]$

as before:

$$\hskip-100pt{\rm{exp}}\left( { - \mathop \int \nolimits_{{{\rm{\Delta }}_i}} {\mu _s}{\rm{\;}}ds} \right)\mu _{{y_i}}^{{d_i}} = {\rm{exp}}\left( { - \mathop \int \nolimits_{{\rm{\Delta }}_i^1 \cup {\rm{\Delta }}_i^2} {\mu _s}{\rm{\;}}ds} \right)\mu _{{y_i}}^{{d_i}}$$

$$\hskip-100pt{\rm{exp}}\left( { - \mathop \int \nolimits_{{{\rm{\Delta }}_i}} {\mu _s}{\rm{\;}}ds} \right)\mu _{{y_i}}^{{d_i}} = {\rm{exp}}\left( { - \mathop \int \nolimits_{{\rm{\Delta }}_i^1 \cup {\rm{\Delta }}_i^2} {\mu _s}{\rm{\;}}ds} \right)\mu _{{y_i}}^{{d_i}}$$

$$ \hskip 70pt= {\rm{exp}}\left( { - \mathop \int \nolimits_{{\rm{\Delta }}_i^1} {\mu _s}{\rm{\;}}ds} \right)\mu _{{z_i}}^{d_i^{\left( 1 \right)}}{\rm{\;exp}}\left( { - \mathop \int \nolimits_{{\rm{\Delta }}_i^2} {\mu _s}{\rm{\;}}ds} \right)\mu _{{y_i}}^{d_i^{\left( 2 \right)}}$$

$$ \hskip 70pt= {\rm{exp}}\left( { - \mathop \int \nolimits_{{\rm{\Delta }}_i^1} {\mu _s}{\rm{\;}}ds} \right)\mu _{{z_i}}^{d_i^{\left( 1 \right)}}{\rm{\;exp}}\left( { - \mathop \int \nolimits_{{\rm{\Delta }}_i^2} {\mu _s}{\rm{\;}}ds} \right)\mu _{{y_i}}^{d_i^{\left( 2 \right)}}$$

since necessarily

![]() $d_i^{\left( 1 \right)} = 0$

and

$d_i^{\left( 1 \right)} = 0$

and

![]() $d_i^{\left( 2 \right)} = {d_i}$

if events on

$d_i^{\left( 2 \right)} = {d_i}$

if events on

![]() ${{\rm{\Delta }}_2}$

are not trivially null. Whether we regard this as factorizing a likelihood on

${{\rm{\Delta }}_2}$

are not trivially null. Whether we regard this as factorizing a likelihood on

![]() ${{\rm{\Delta }}_i}$

, or multiplying two likelihoods on

${{\rm{\Delta }}_i}$

, or multiplying two likelihoods on

![]() ${\rm{\Delta }}_i^1$

and

${\rm{\Delta }}_i^1$

and

![]() ${\rm{\Delta }}_i^2$

, does not matter for our purposes.

${\rm{\Delta }}_i^2$

, does not matter for our purposes.

3.4. Rate Intervals, Piecewise-constant Hazards and Age-grouped Data

Recall from Section 2.2 that a rate interval is denoted by

![]() ${{\rm{\Delta }}_k}$

. Here let

${{\rm{\Delta }}_k}$

. Here let

![]() ${{\rm{\Delta }}_k}$

be the rate interval from integer age

${{\rm{\Delta }}_k}$

be the rate interval from integer age

![]() $k$

to age

$k$

to age

![]() $k + 1$

, that is,

$k + 1$

, that is,

![]() ${{\rm{\Delta }}_k} = \left( {k,k + 1} \right]$

. Age-grouped data may then be denoted by total deaths

${{\rm{\Delta }}_k} = \left( {k,k + 1} \right]$

. Age-grouped data may then be denoted by total deaths

![]() ${d_k}$

and total person-years exposure

${d_k}$

and total person-years exposure

![]() $E_k^c$

falling within rate interval

$E_k^c$

falling within rate interval

![]() ${{\rm{\Delta }}_k}$

.

${{\rm{\Delta }}_k}$

.

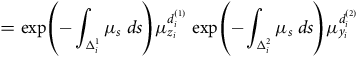

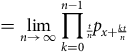

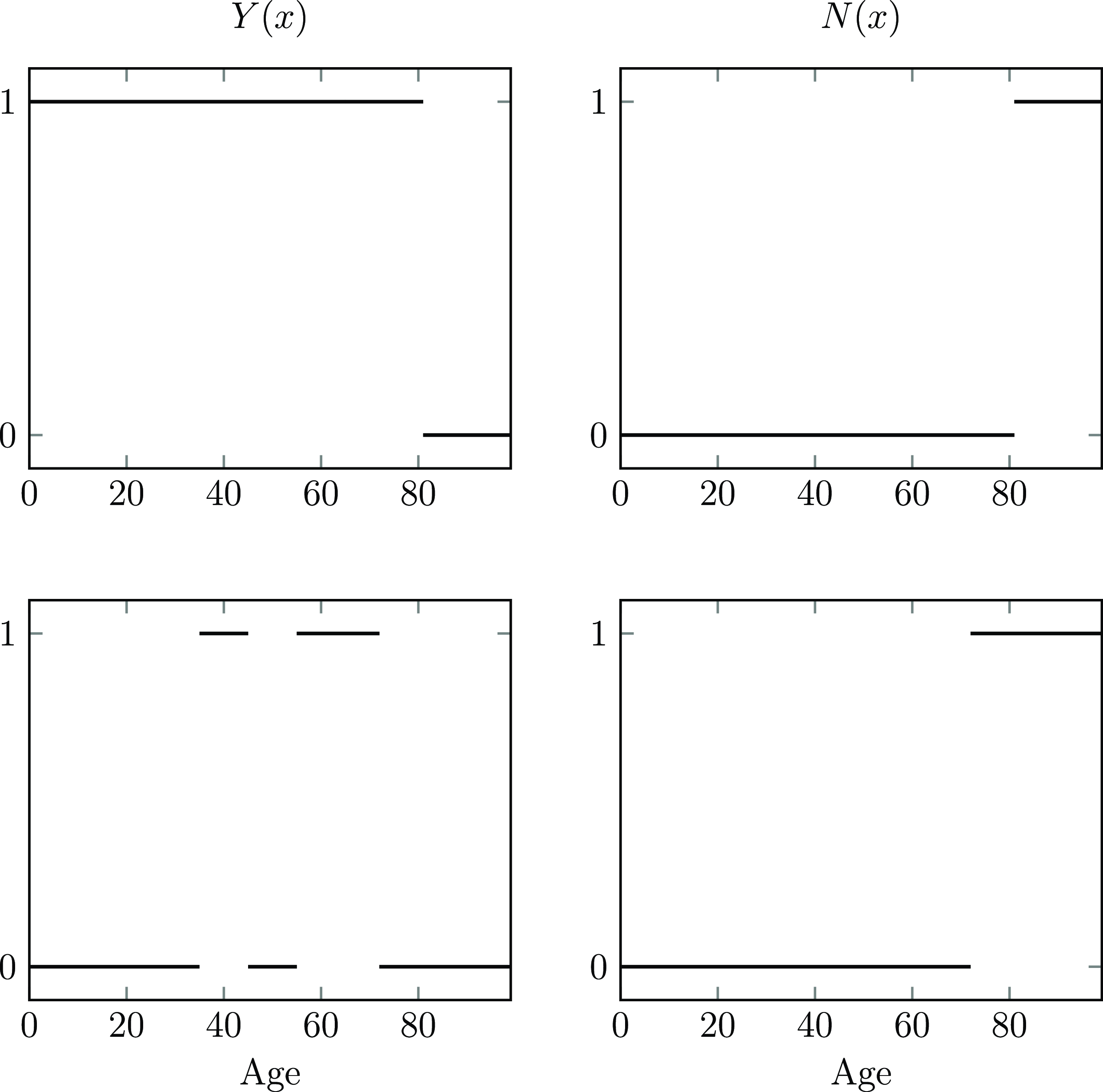

It is instructive to group data on individual lives to reproduce age-grouped data, and to compare the resulting likelihoods. This is aided by Table 2, which shows the contributions to likelihoods of individual data for two individuals, treated three ways. The

![]() $i$

th individual is observed from age 47 until right-censored at age 50. The

$i$

th individual is observed from age 47 until right-censored at age 50. The

![]() $j$

th individual is observed from age 47.6 until dying at age 49.3. We list contributions to the likelihood under three combinations of observational plan and model:

$j$

th individual is observed from age 47.6 until dying at age 49.3. We list contributions to the likelihood under three combinations of observational plan and model:

-

(a) Rate intervals

${{\rm{\Delta }}_k}$

, and a constant hazard rate on each rate interval, denoted by

${{\rm{\Delta }}_k}$

, and a constant hazard rate on each rate interval, denoted by

$\mu _k^{\rm{*}}$

.

$\mu _k^{\rm{*}}$

. -

(b) Rate intervals

${{\rm{\Delta }}_k}$

, and a smooth hazard rate parametrized by

${{\rm{\Delta }}_k}$

, and a smooth hazard rate parametrized by

$\theta $

, denoted by

$\theta $

, denoted by

$\mu _x^\theta $

(for example, a Gompertz-Makeham function).

$\mu _x^\theta $

(for example, a Gompertz-Makeham function). -

(c) Observation of complete lifetimes on age interval

${{\rm{\Delta }}_i}$

, and a smooth hazard rate parametrized by

${{\rm{\Delta }}_i}$

, and a smooth hazard rate parametrized by

$\theta $

, also denoted by

$\theta $

, also denoted by

$\mu _x^\theta $

.

$\mu _x^\theta $

.

Table 2. Contributions to likelihoods of the

![]() $i$

th individual, under observation from age 47 until right-censored at age 50, and the

$i$

th individual, under observation from age 47 until right-censored at age 50, and the

![]() $j$

th individual, under observation from age 47.6 until death at age 49.3, under three observational plans and assumptions: (a) annual rate interval

$j$

th individual, under observation from age 47.6 until death at age 49.3, under three observational plans and assumptions: (a) annual rate interval

![]() ${{\rm{\Delta }}_k}$

, piecewise-constant hazard rates; (b) annual rate interval

${{\rm{\Delta }}_k}$

, piecewise-constant hazard rates; (b) annual rate interval

![]() ${{\rm{\Delta }}_k}$

, smooth hazard rate parametrized by

${{\rm{\Delta }}_k}$

, smooth hazard rate parametrized by

![]() $\theta $

; and (c) observation of complete lifetime age interval

$\theta $

; and (c) observation of complete lifetime age interval

![]() ${{\rm{\Delta }}_i}$

, smooth hazard rate parametrized by

${{\rm{\Delta }}_i}$

, smooth hazard rate parametrized by

![]() $\theta $

$\theta $

The contributions are shown in Table 2. In obvious notation, we may denote the contributions to the likelihoods under (a), (b) and (c) above by

![]() $L_{i,k}^{\rm{*}},L_{i,k}^\theta $

and

$L_{i,k}^{\rm{*}},L_{i,k}^\theta $

and

![]() $L_i^\theta = \mathop \prod \nolimits_k L_{i,k}^\theta $

respectively. Likewise, collecting all contributions to rate interval

$L_i^\theta = \mathop \prod \nolimits_k L_{i,k}^\theta $

respectively. Likewise, collecting all contributions to rate interval

![]() ${{\rm{\Delta }}_k}$

in columns (a) and (b), we may define the total likelihood contributed by

${{\rm{\Delta }}_k}$

in columns (a) and (b), we may define the total likelihood contributed by

![]() ${{\rm{\Delta }}_k}$

by

${{\rm{\Delta }}_k}$

by

![]() $L_k^{\rm{*}} = \mathop \prod \nolimits_i L_{i,k}^{\rm{*}}$

and

$L_k^{\rm{*}} = \mathop \prod \nolimits_i L_{i,k}^{\rm{*}}$

and

![]() ${L_k^{\theta}} = \mathop \prod \nolimits_i {L_{i,k}^{\theta}}$

respectively. This leads to the following observations.

${L_k^{\theta}} = \mathop \prod \nolimits_i {L_{i,k}^{\theta}}$

respectively. This leads to the following observations.

-

(a) It is obvious from columns (b) and (c) in Table 2 that for any individual, the likelihood over the complete lifetime is the product of the likelihoods over each rate interval, see Section 3.3. In fact we have incorporated this in the notation,

$L_i^\theta = \mathop \prod \nolimits_k L_{i,k}^\theta $

. It makes no difference if we split the the individual lives data and present them by rate interval. But this does not lead to any simplification, and age-grouped totals

$L_i^\theta = \mathop \prod \nolimits_k L_{i,k}^\theta $

. It makes no difference if we split the the individual lives data and present them by rate interval. But this does not lead to any simplification, and age-grouped totals

${d_k}$

and

${d_k}$

and

$E_k^c$

play no part, because of the smooth hazard rate in the integrands.

$E_k^c$

play no part, because of the smooth hazard rate in the integrands. -

(b) Each entry in column (a) of Table 2 can be regarded as approximating its partner in column (b). For example in the third and sixth lines, we approximate

$\mu _{49 + s}^\theta $

by

$\mu _{49 + s}^\theta $

by

$\mu _{49}^{\rm{*}}$

for

$\mu _{49}^{\rm{*}}$

for

$0 \lt s \le 1$

; we show the sixth line below:(15)

$0 \lt s \le 1$

; we show the sixth line below:(15) $${\rm{exp}}\left( { - \mathop \int \nolimits_{0.0}^{0.3} \mu _{49 + s}^\theta {\rm{\;}}ds} \right)\mu _{49.3}^\theta \approx {\rm{exp}}\left( { - \mathop \int \nolimits_{0.0}^{0.3} \mu _{49}^{\rm{*}}{\rm{\;}}ds} \right)\mu _{49}^{\rm{*}} = {\rm{exp}}\left( { - 0.3\mu _{49}^{\rm{*}}} \right)\mu _{49}^{\rm{*}}.$$

$${\rm{exp}}\left( { - \mathop \int \nolimits_{0.0}^{0.3} \mu _{49 + s}^\theta {\rm{\;}}ds} \right)\mu _{49.3}^\theta \approx {\rm{exp}}\left( { - \mathop \int \nolimits_{0.0}^{0.3} \mu _{49}^{\rm{*}}{\rm{\;}}ds} \right)\mu _{49}^{\rm{*}} = {\rm{exp}}\left( { - 0.3\mu _{49}^{\rm{*}}} \right)\mu _{49}^{\rm{*}}.$$

Collecting together all such terms in

$\mu _{49}^{\rm{*}}$

we get the total likelihood:(16)

$\mu _{49}^{\rm{*}}$

we get the total likelihood:(16) $$L_{49}^{\rm{*}}\left( {\mu _{49}^{\rm{*}}} \right) = {\rm{exp}}\left( { - \mu _{49}^{\rm{*}}{\rm{\;}}E_{49}^c} \right){(\mu _{49}^{\rm{*}})^{{d_{49}}}}$$

$$L_{49}^{\rm{*}}\left( {\mu _{49}^{\rm{*}}} \right) = {\rm{exp}}\left( { - \mu _{49}^{\rm{*}}{\rm{\;}}E_{49}^c} \right){(\mu _{49}^{\rm{*}})^{{d_{49}}}}$$

in which the age-grouped totals do appear. Comparing equations (16) and (8), we see that the former is functionally identical to the likelihood from the Poisson model, and yet no assumption about Poisson random variables or distributions has been made in this section. In other words, the Poisson-like nature of the likelihood arises from the fundamental nature of modelling individual lifetimes.

3.5. Individual versus Age-Grouped Data for Multiple Lives

Table 2 illustrates how the individual lifetime model is related to age-grouped data, based on rate intervals, exactly without using any approximations. Indeed, columns (b) and (c) show that labelling the data by individual

![]() $i$

or by rate interval

$i$

or by rate interval

![]() $k$

is merely a rearrangement. Specifically, the

$k$

is merely a rearrangement. Specifically, the

![]() $i$

th individual contributes

$i$

th individual contributes

![]() $L_{i,k}^\theta $

, possibly null, to the likelihood in rate interval

$L_{i,k}^\theta $

, possibly null, to the likelihood in rate interval

![]() $k$

(column (b)). The outer form of the total likelihood, denoted by

$k$

(column (b)). The outer form of the total likelihood, denoted by

![]() ${L^\theta }$

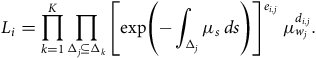

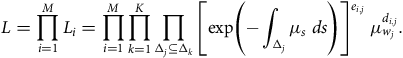

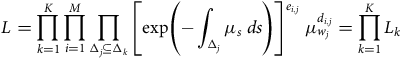

, then depends simply on the order in which we take products, as the following identities show:

${L^\theta }$

, then depends simply on the order in which we take products, as the following identities show:

This informal statement based on Table 2 (“proof-by-example”) of course needs to be demonstrated properly. Doing so with the notation to hand is surprisingly detailed, though elementary, and is delegated to Appendix A. A much simpler proof will be shown when the notation of Section 4 is available (Section 4.7).

3.6. The Rôle of Occurrence-exposure Rates

We may arrive at the likelihood based on the age-grouped data

![]() $\left( {{d_k},E_k^c} \right)$

in two different ways.

$\left( {{d_k},E_k^c} \right)$

in two different ways.

-

(a) We could use the Poisson model with parameter

$\mu _k^{\rm{*}}{\rm{\,}}E_k^c$

(Section 2.4) for rate interval

$\mu _k^{\rm{*}}{\rm{\,}}E_k^c$

(Section 2.4) for rate interval

${{\rm{\Delta }}_k}$

.

${{\rm{\Delta }}_k}$

. -

(b) Within the individual lives model, we could assume that the hazard rate is piecewise-constant with value

$\mu _k^{\rm{*}}$

on rate interval

$\mu _k^{\rm{*}}$

on rate interval

${{\rm{\Delta }}_k}$

. This means assuming that the parameter

${{\rm{\Delta }}_k}$

. This means assuming that the parameter

$\theta $

is the vector of hazard rates

$\theta $

is the vector of hazard rates

$\mu _k^{\rm{*}}$

.

$\mu _k^{\rm{*}}$

.

In either case, on rate interval

![]() ${{\rm{\Delta }}_k}$

, we have a single parameter, which we denote by

${{\rm{\Delta }}_k}$

, we have a single parameter, which we denote by

![]() $\mu _k^{\rm{*}}$

, and a likelihood that we denote by

$\mu _k^{\rm{*}}$

, and a likelihood that we denote by

![]() $L_k^{\rm{*}}\left( {\mu _k^{\rm{*}}} \right) = {\rm{exp}}\left( { - \mu _k^{\rm{*}}{\rm{\,}}E_k^c} \right){\rm{\;}}{(\mu _k^{\rm{*}})^{{d_k}}}$

. In total we have a

$L_k^{\rm{*}}\left( {\mu _k^{\rm{*}}} \right) = {\rm{exp}}\left( { - \mu _k^{\rm{*}}{\rm{\,}}E_k^c} \right){\rm{\;}}{(\mu _k^{\rm{*}})^{{d_k}}}$

. In total we have a

![]() $K$

-parameter model with likelihood

$K$

-parameter model with likelihood

![]() $\mathop \prod \nolimits_k L_k^{\rm{*}}\left( {\mu _k^{\rm{*}}} \right)$

, from which the parameters are estimated independently by the occurrence-exposure rates

$\mathop \prod \nolimits_k L_k^{\rm{*}}\left( {\mu _k^{\rm{*}}} \right)$

, from which the parameters are estimated independently by the occurrence-exposure rates

![]() ${d_k}/E_k^c$

, which we denote by

${d_k}/E_k^c$

, which we denote by

![]() $\hat \mu _k^{\rm{*}}$

. That is as far as the probabilistic model takes us.

$\hat \mu _k^{\rm{*}}$

. That is as far as the probabilistic model takes us.

In traditional actuarial terminology, the

![]() $\hat \mu _k^{\rm{*}}$

are “crude” rates which require to be smoothed or graduated, using no more than the available age-grouped data (Benjamin & Pollard, Reference Benjamin and Pollard1980). A convenient way of doing so is to use: (a) the likelihood function

$\hat \mu _k^{\rm{*}}$

are “crude” rates which require to be smoothed or graduated, using no more than the available age-grouped data (Benjamin & Pollard, Reference Benjamin and Pollard1980). A convenient way of doing so is to use: (a) the likelihood function

![]() $\mathop \prod \nolimits_k L_k^{\rm{*}}\left( {\mu _k^{\rm{*}}} \right)$

; (b) a parametric function

$\mathop \prod \nolimits_k L_k^{\rm{*}}\left( {\mu _k^{\rm{*}}} \right)$

; (b) a parametric function

![]() $\mu _x^\theta $

for the hazard rate, of much lower dimension than

$\mu _x^\theta $

for the hazard rate, of much lower dimension than

![]() $K$

; and (c) to connect the two with an assumption that

$K$

; and (c) to connect the two with an assumption that

![]() $\hat \mu _k^{\rm{*}}$

estimates

$\hat \mu _k^{\rm{*}}$

estimates

![]() $\mu _{{x_k}}^\theta $

, for some

$\mu _{{x_k}}^\theta $

, for some

![]() ${x_k} \in {{\rm{\Delta }}_k}$

, for example

${x_k} \in {{\rm{\Delta }}_k}$

, for example

![]() $\hat \mu _k^{\rm{*}}$

estimates

$\hat \mu _k^{\rm{*}}$

estimates

![]() $\mu _{k + 1/2}^\theta $

. Note that this smoothing procedure is not part of the probabilistic model, despite its use of the likelihood function. Forfar et al. (Reference Forfar, McCutcheon and Wilkie1988) show that it is approximately equivalent to the much older minimum-

$\mu _{k + 1/2}^\theta $

. Note that this smoothing procedure is not part of the probabilistic model, despite its use of the likelihood function. Forfar et al. (Reference Forfar, McCutcheon and Wilkie1988) show that it is approximately equivalent to the much older minimum-

![]() ${\chi ^2}$

method.

${\chi ^2}$

method.

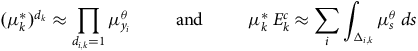

In either case, again, the age-grouped quantities approximate exact quantities as follows:

$${(\mu _k^{\rm{*}})^{{d_k}}} \approx \mathop \prod \limits_{{d_{i,k}} = 1} \mu _{{y_i}}^\theta {\rm{\;\;\;\;\;\;\;\;\;and\;\;\;\;\;\;\;\;\;}}\mu _k^{\rm{*}}{\rm{\,}}E_k^c \approx \mathop \sum \limits_i \mathop \int \nolimits_{{{\rm{\Delta }}_{i,k}}} \mu _s^\theta {\rm{\;}}ds$$

$${(\mu _k^{\rm{*}})^{{d_k}}} \approx \mathop \prod \limits_{{d_{i,k}} = 1} \mu _{{y_i}}^\theta {\rm{\;\;\;\;\;\;\;\;\;and\;\;\;\;\;\;\;\;\;}}\mu _k^{\rm{*}}{\rm{\,}}E_k^c \approx \mathop \sum \limits_i \mathop \int \nolimits_{{{\rm{\Delta }}_{i,k}}} \mu _s^\theta {\rm{\;}}ds$$

where

![]() ${d_{i,k}}$

is the number of deaths (0 or 1) befalling the

${d_{i,k}}$

is the number of deaths (0 or 1) befalling the

![]() $i$

th individual in rate interval

$i$

th individual in rate interval

![]() ${{\rm{\Delta }}_k}$

, and

${{\rm{\Delta }}_k}$

, and

![]() ${{\rm{\Delta }}_{i,k}} = {{\rm{\Delta }}_i} \cap {{\rm{\Delta }}_k}$

(possibly

${{\rm{\Delta }}_{i,k}} = {{\rm{\Delta }}_i} \cap {{\rm{\Delta }}_k}$

(possibly

![]() $\emptyset $

). Therefore, inference based upon age-grouped data is close, but not identical, to inference based upon the individual lives data.

$\emptyset $

). Therefore, inference based upon age-grouped data is close, but not identical, to inference based upon the individual lives data.

The crude hazard rates

![]() $\hat \mu _k^{\rm{*}}$

, or more accurately the expected deaths based upon them,

$\hat \mu _k^{\rm{*}}$

, or more accurately the expected deaths based upon them,

![]() $\hat \mu _k^{\rm{*}}{\rm{\,}}E_k^c$

, may be used in forming statistics such as deviances, used in testing the fit of a graduation (Benjamin & Pollard, Reference Benjamin and Pollard1980; Forfar et al., Reference Forfar, McCutcheon and Wilkie1988; Macdonald et al., Reference Macdonald, Richards and Currie2018).

$\hat \mu _k^{\rm{*}}{\rm{\,}}E_k^c$

, may be used in forming statistics such as deviances, used in testing the fit of a graduation (Benjamin & Pollard, Reference Benjamin and Pollard1980; Forfar et al., Reference Forfar, McCutcheon and Wilkie1988; Macdonald et al., Reference Macdonald, Richards and Currie2018).

3.7. Pseudo-Poisson Models

The various likelihoods that appear in this section, see Table 2, are all Poisson-like, and if a piecewise-constant hazard rate is assumed, indistinguishable from a true Poisson likelihood, an observation that goes back to the earliest work on inference in Markov models, see for example Sverdrup (Reference Sverdrup1965); Waters (Reference Waters1984). However, there are no Poisson random variables. In the likelihood (13): (a)

![]() ${d_i}$

is either 0 or 1, and does not range over the non-negative integers; (b)

${d_i}$

is either 0 or 1, and does not range over the non-negative integers; (b)

![]() ${v_i}$

is random, not deterministic; and (c)

${v_i}$

is random, not deterministic; and (c)

![]() ${d_i}$

and

${d_i}$

and

![]() ${v_i}$

are not independent, the random variable is the bivariate

${v_i}$

are not independent, the random variable is the bivariate

![]() $\left( {{d_i},{v_i}} \right)$

. Many authors suppose, as we did in Section 2.4, that the number of deaths in some model has a Poisson distribution, but without ensuring, as we did, that the exposure times would be non-random; more often the observation of random death times ensures the opposite. This conceptual error is almost always immaterial for inference, precisely because the Poisson likelihood is of the correct form for a survival model, although the survival model is not Poisson. Where it matters is in misdirecting us when we come to extend the survival model, including allowing for: (a) truncation and censoring; (b) more complicated life histories, including multiple decrements; (c) calculating residuals when the expected number of deaths is small; and (d) statistics for multiple lives, see Section 3.5 and Appendix A.

$\left( {{d_i},{v_i}} \right)$

. Many authors suppose, as we did in Section 2.4, that the number of deaths in some model has a Poisson distribution, but without ensuring, as we did, that the exposure times would be non-random; more often the observation of random death times ensures the opposite. This conceptual error is almost always immaterial for inference, precisely because the Poisson likelihood is of the correct form for a survival model, although the survival model is not Poisson. Where it matters is in misdirecting us when we come to extend the survival model, including allowing for: (a) truncation and censoring; (b) more complicated life histories, including multiple decrements; (c) calculating residuals when the expected number of deaths is small; and (d) statistics for multiple lives, see Section 3.5 and Appendix A.

We suggest it would be clearer and less confusing if the term pseudo-Poisson was adopted, to describe the great majority of models for death counts that appear in the literature.

3.8. Covariates

Covariates may be introduced by defining a vector

![]() ${{\bf{z}}^i}$

of covariates for the

${{\bf{z}}^i}$

of covariates for the

![]() $i$

th individual and letting the hazard rate be a function

$i$

th individual and letting the hazard rate be a function

![]() $\mu (x,$

z

$\mu (x,$

z

![]() ${^i})$

of age and covariates. A common way to introduce such a dependency is to define a vector

β

of regression coefficients such that the hazard rate is a function

${^i})$

of age and covariates. A common way to introduce such a dependency is to define a vector

β

of regression coefficients such that the hazard rate is a function

![]() $\mu (x,{\rm{\;}}{\beta ^T}$

z

$\mu (x,{\rm{\;}}{\beta ^T}$

z

![]() ${^i})$

of age and a linear combination of the covariates. Further simplification is achieved if the hazard rate factorizes as

${^i})$

of age and a linear combination of the covariates. Further simplification is achieved if the hazard rate factorizes as

![]() $\mu (x,$

$\mu (x,$

![]() $z{{\rm}^i}) = {\mu _x} \times g($

z

$z{{\rm}^i}) = {\mu _x} \times g($

z

![]() ${^i})$

, the product of an age dependent hazard rate

${^i})$

, the product of an age dependent hazard rate

![]() ${\mu _x}$

(called the baseline hazard) and some function

${\mu _x}$

(called the baseline hazard) and some function

![]() $g$

of the covariates; then the hazard rates of any two individuals of the same age are always in the same proportion, called proportional hazards. Finally, the most common choice of

$g$

of the covariates; then the hazard rates of any two individuals of the same age are always in the same proportion, called proportional hazards. Finally, the most common choice of

![]() $g$

is an exponential function of a linear combination of the covariates,

$g$

is an exponential function of a linear combination of the covariates,

![]() $\mu (x,$

z

$\mu (x,$

z

![]() ${^i}) = {\mu _x} \times {\rm{exp}}({\beta ^T}$

z

${^i}) = {\mu _x} \times {\rm{exp}}({\beta ^T}$

z

![]() ${^i})$

, which has proportional and non-negative hazards as well as a log-linear dependence on covariates. These steps in adding structure to the hazard rate are summarized in Table 3.

${^i})$

, which has proportional and non-negative hazards as well as a log-linear dependence on covariates. These steps in adding structure to the hazard rate are summarized in Table 3.

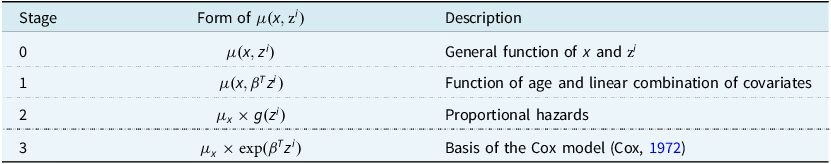

Table 3. Three stages in adding structure to a hazard rate that is a function

![]() $\mu (x,{{\rm z}^i})$

of age

$\mu (x,{{\rm z}^i})$

of age

![]() $x$

and a vector

$x$

and a vector

![]() ${{\rm z}^i}$

of covariates for the

${{\rm z}^i}$

of covariates for the

![]() $i$

th individual. Each stage is increasingly restrictive, from the most flexible model in Stage 0 to the most restrictive in Stage 3

$i$

th individual. Each stage is increasingly restrictive, from the most flexible model in Stage 0 to the most restrictive in Stage 3

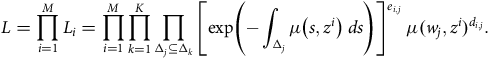

The last hazard structure in Table 3 is popular in medical statistics, where it is known as the Cox model, because the baseline hazard can be ignored and only the regression coefficients need to be estimated, by the procedure known as partial likelihood (see Andersen et al. (Reference Andersen, Borgan, Gill and Keiding1993)). However, actuaries usually wish to estimate the whole model, baseline hazard included, whatever the form of the hazard rate. Then the full likelihood (46) from Appendix A becomes:

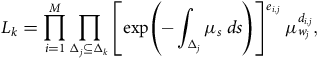

$$L = \mathop \prod \limits_{i = 1}^M {L_i} = \mathop \prod \limits_{i = 1}^M \mathop \prod \limits_{k = 1}^K \mathop \prod \limits_{{{\rm{\Delta }}_j} \subseteq {{\rm{\Delta }}_k}} {\left[ {{\rm{exp}}\left( { - \mathop \int \nolimits_{{{\rm{\Delta }}_j}} \mu \left( {s{,z^i}} \right){\rm{\;}}ds} \right)} \right]^{{e_{i,j}}}}{\rm{\;}}\mu {({w_j}{,z^i})^{{d_{i,j}}}}.$$

$$L = \mathop \prod \limits_{i = 1}^M {L_i} = \mathop \prod \limits_{i = 1}^M \mathop \prod \limits_{k = 1}^K \mathop \prod \limits_{{{\rm{\Delta }}_j} \subseteq {{\rm{\Delta }}_k}} {\left[ {{\rm{exp}}\left( { - \mathop \int \nolimits_{{{\rm{\Delta }}_j}} \mu \left( {s{,z^i}} \right){\rm{\;}}ds} \right)} \right]^{{e_{i,j}}}}{\rm{\;}}\mu {({w_j}{,z^i})^{{d_{i,j}}}}.$$

Clearly any of the hazard rates in Table 3 may be substituted into the likelihood (19). However inspection of the innermost elements of (19), integrals over intervals

![]() ${{\rm{\Delta }}_j} \subseteq {{\rm{\Delta }}_k}$

, shows that, even if the hazard rate factorizes as in Stages 2 and 3 of Table 3, these factors cannot be collected together to form likelihoods

${{\rm{\Delta }}_j} \subseteq {{\rm{\Delta }}_k}$

, shows that, even if the hazard rate factorizes as in Stages 2 and 3 of Table 3, these factors cannot be collected together to form likelihoods

![]() ${L_k}$

over rate intervals. See also the written comments by A. D. Wilkie in the discussion of Richards (Reference Richards2008).

${L_k}$

over rate intervals. See also the written comments by A. D. Wilkie in the discussion of Richards (Reference Richards2008).

4. Dynamic Life History Models

4.1. The Anatomy of a Survival Probability

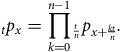

We begin with a closer examination of the multiplicative property of survival probabilities, usually expressed as:

We can apply this repeatedly to factorize

![]() ${{\rm{\;}}_t}{p_x}$

, with

${{\rm{\;}}_t}{p_x}$

, with

![]() $n$

a positive integer, as follows:

$n$

a positive integer, as follows:

$$_t{p_x} = {\mathop {\prod \limits_{k = 0}^{n - 1}}{_{t \over n}}}\,{p_{x + {{kt} \over n}}}.$$

$$_t{p_x} = {\mathop {\prod \limits_{k = 0}^{n - 1}}{_{t \over n}}}\,{p_{x + {{kt} \over n}}}.$$

This motivates the first of two questions: what happens as

![]() $n \to \infty $

? The second (related) question is: how can we express or represent events in the life history as a function of passing time? We have a compact notation (

$n \to \infty $

? The second (related) question is: how can we express or represent events in the life history as a function of passing time? We have a compact notation (

![]() ${{\rm}_t}{p_x}$

,

${{\rm}_t}{p_x}$

,

![]() ${{\rm{\;}}_t}{q_x}$

and so on) for the probabilities of events in the life history, but no such notation for the events themselves; generally we must express events somewhat clumsily in words. We consider these questions in turn in the next two sections.

${{\rm{\;}}_t}{q_x}$

and so on) for the probabilities of events in the life history, but no such notation for the events themselves; generally we must express events somewhat clumsily in words. We consider these questions in turn in the next two sections.

4.2. The Product-integral Representation of a Survival Probability

From the heuristic

![]() ${{\rm{\;}}_h}{p_x} \approx 1 - {\mu _x}{\rm{\,}}h \approx {\rm{exp}}\left( { - {\mu _x}{\rm{\,}}h} \right)$

, for small

${{\rm{\;}}_h}{p_x} \approx 1 - {\mu _x}{\rm{\,}}h \approx {\rm{exp}}\left( { - {\mu _x}{\rm{\,}}h} \right)$

, for small

![]() $h$

, we have the important product-integral representation as

$h$

, we have the important product-integral representation as

![]() $n \to \infty $

and

$n \to \infty $

and

![]() $1/n \to {0^ + }$

:

$1/n \to {0^ + }$

:

$$ \hskip -1pt= \mathop {{\rm{lim}}}\limits_{n \to \infty } {\mathop {\prod \limits_{k = 0}^{n - 1}} {_{t \over n}}}{p_{x + {{kt} \over n}}}$$

$$ \hskip -1pt= \mathop {{\rm{lim}}}\limits_{n \to \infty } {\mathop {\prod \limits_{k = 0}^{n - 1}} {_{t \over n}}}{p_{x + {{kt} \over n}}}$$

see Appendix B or, for example, Andersen et al. (Reference Andersen, Borgan, Gill and Keiding1993). The product-integral has the same

![]() ${\rm{\Pi }}$

symbol as an ordinary product over a finite or countable number of terms, but is distinguished (here) by the presence of

${\rm{\Pi }}$

symbol as an ordinary product over a finite or countable number of terms, but is distinguished (here) by the presence of

![]() $ds$

in the integrand and by the variable

$ds$

in the integrand and by the variable

![]() $s$

ranging over an interval of the real line,

$s$

ranging over an interval of the real line,

![]() $s \in \left( {0,t} \right]$

. Then by differentiation of

$s \in \left( {0,t} \right]$

. Then by differentiation of

![]() ${{\rm{\;}}_t}{p_x}$

, the density function of the random future lifetime

${{\rm{\;}}_t}{p_x}$

, the density function of the random future lifetime

![]() ${T_x}$

, denoted by

${T_x}$

, denoted by

![]() ${f_x}\left( t \right)$

, is:

${f_x}\left( t \right)$

, is: