Public opinion data collected in the Arab Middle East and North Africa (MENA) remain scarce relative to the survey data available from most other world regions, and these data are often accompanied by concerns about reliability. A majority of Arab states are non-democratic (Bellin 2004; Bellin 2012), and this closed political environment is a major source both of the obstacles facing survey practitioners working in the region and of reservations among consumers of Arab survey data (Benstead 2018). The authoritarian character of most MENA states limits where scientific surveys can be conducted, who can administer them, what kinds of samples can be drawn and what types of questions can be asked (Clark 2006; Pollock 1992; Pollock 2008; Tessler 1987; Tessler 2011). Such limitations have in turn fueled concerns about the representativeness of polls (Farah 1987), about respondents’ ability and willingness to give accurate and truthful answers (Pollock 2008; Tessler and Jamal 2006), and even about the ethical implications of carrying out surveys in settings where research may be monitored (Carapico 2006).

Yet despite the scope and persistence of questions about MENA survey quality, producers and users of Arab opinion data still have a limited understanding of exactly how the region's political climate may influence participation in survey research. Previous studies have sought to detect and account for bias introduced by specific characteristics of surveys, such as the survey sponsor (Corstange 2014; Corstange 2016; Gordoni and Schmidt 2010), the observable attributes of survey enumerators (Benstead 2014a; Benstead 2014b; Blaydes and Gillum 2013), and the presence of third parties during the interview (Diop, Le, and Traugott 2015; Mneimneh et al. 2015). However, theories of survey-taking behavior (for example, Hox, de Leeuw and Vorst 1995; Loosveldt and Storms 2008) suggest a more pervasive possibility: that political conditions in the Arab world may instill negative perceptions of the entire survey research enterprise – whatever the characteristics of an individual survey – and that these general attitudes toward surveys may influence participation and response behavior in ways that produce misleading results.

This article extends prior research on the nature and effects of survey attitudes to the Arab world, both geographically and substantively. In Europe and North America, general attitudes toward surveys have been found to predict a multitude of respondent behaviors, including non-response and refusal, panel attrition and participation intentions. Moreover, this literature has identified conceptually distinct and empirically separable dimensions of survey attitudes, which allows us to test specific mechanisms linking elements of the MENA survey climate to Arab survey behavior. For instance, if Arabs do indeed tend to view surveys negatively, to what extent is this because survey results are seen as irrelevant to policy making and thus of no societal value? Or is survey research viewed as an unreliable method in light of political censorship and a limited history of scientific polling? Or, yet again, are negative evaluations of survey research due to the association of opinion polls with political surveillance or manipulation?

In what follows, we seek to answer these and other questions using data from a nationally representative face-to-face survey conducted in the Arab Gulf state of Qatar, a highly diverse society that permits comparison of attitudes across cultural–geographical groupings within a single, non-democratic polity. Our analysis proceeds in several steps. We first use factor analysis to assess the extent to which the individual components of survey attitudes in Qatar match those previously identified in Western settings. Next, we test whether citizen and expatriate Arabs in Qatar hold attitudes toward public opinion surveys that differ from those held by non-Arabs in Qatar, and we conduct these tests for each of the attitudinal dimensions we identify. Finally, we report on two novel experiments designed to gauge the impact of survey attitudes on survey participation, as well as cross-group differences in such effects. A conjoint experiment evaluates the impact of objective survey attributes alongside subjective survey attitudes on intentions to participate in a hypothetical survey. A screening experiment estimates a respondent's likelihood of completing our survey and the degree to which this is a function of survey attitudes. To our knowledge, our study represents the first assessment of survey attitudes in an Arab country, and one of the very few such studies in a non-Western setting.Footnote 1

Context and Literature Review

Trends and Challenges in the Arab World

Until the 2000s, the investigation of individual attitudes, values and behaviors was the missing dimension in political science research on the Arab world. Such research was limited with respect to the countries where surveys could be conducted, the degree to which representative national samples could be drawn, and the extent to which sensitive questions about society and politics could be asked. Complaints about this situation date to the 1970s.Footnote 2

Although parts of the Arab world remain inhospitable to survey research, significant changes have occurred in the last two decades. First, a number of Arab academic research institutions dedicated to conducting social scientific surveys have emerged, such as the Center for Strategic Studies at the University of Jordan, the Palestine Center for Policy and Survey Research, and the Social and Economic Survey Research Institute at Qatar University. Second, global interest in Arab public opinion has increased dramatically. This is reflected in such international, multi-country projects as the World Values Survey (WVS) and the Arab Barometer. Until its third wave, in 2000–2004, the WVS had not been carried out in a single Arab country. In its latest (sixth) wave, in 2010–2014, the WVS surveyed citizens in twelve Arab states, more than in all previous waves combined. The Arab Barometer, since its founding in 2006, has conducted thirty-nine surveys in fifteen different countries and interviewed over 45,000 individuals.Footnote 3 International polling agencies, such as the Pew Research Center and Gallup, have also become more active in the Arab world. During 2009–2011, for example, Gallup conducted between two and nine polls in each of six Arab states (Gallup 2012).

The Arab world today thus looks very different with respect to public opinion research than it did less than twenty years ago. However, not all of the news is good. The trend toward more openness also has created opportunities for those willing to cut corners on methodology and/or shade their findings to support a political agenda. Some opinion polls in the Arab world, like some polls elsewhere, are not transparent about their methods or report details that belie their representativeness.Footnote 4 In addition, data frequently are not made available for replication and secondary analysis, and data falsification in surveys of Arab countries also has drawn attention lately (Bohannon 2016; Kuriakose and Robbins 2016). These issues are not unique to Arab societies, of course. Nor are they limited to research involving surveys and other quantitative methodologies. But they do represent cautions and concerns that have accompanied the expansion of survey research in the MENA region.

Survey Attitudes and the Survey-Taking Climate

This proliferation of surveys – scientific and unscientific – in Arab countries gives new impetus to questions about how ordinary people in the region view survey research, and how these views might affect survey behavior and data reliability. Such questions, generally described as pertaining to the survey climate, are well known to researchers working in the United States and Europe (Kim et al. 2011). Beginning with Sjoberg's (1955) ‘questionnaire on questionnaires’, a large literature has suggested that ‘a positive survey-taking climate in a population’ is an important precondition for effective survey administration (Loosveldt and Storms 2008, 74; Lyberg and Dean 1992). This consensus is rooted in behavioral theories of survey taking and other forms of action that view respondent cooperation as shaped by generalized norms and attitudes (Ajzen and Fishbein 1980; Stocké and Langfeldt 2004), as well as by cognitive limitations (Krosnick 1991; Krosnick 1999), rather than by purely rational calculation. Individual attitudes toward surveys are thus conceived as ‘the expression of the subjective experience of the survey climate’, representing ‘the link between the survey climate at the societal level and the decision to participate [in a survey] at the individual level’ (Loosveldt and Joye 2016, 73).

In Western settings,Footnote 5 survey attitudes have been found to predict a broad range of respondent behaviors, including non-response and refusal (Groves and Couper 1998; Groves, Presser, and Dipko 2004; Groves, Singer, and Corning 2000; Groves et al. 2001; Hox, de Leeuw, and Vorst 1995; Stocké 2006; Weisberg 2005), panel attrition, following survey instructions, timeliness of response and willingness to participate in surveys (Loosveldt and Storms 2008; Rogelberg et al. 2001; Stocké and Langfeldt 2004; Stoop 2005). Studies spanning a multitude of populations and techniques report a consistent conclusion: more positive views of surveys are associated with greater respondent cooperation, and this in turn improves data reliability by reducing error stemming from non-response, satisficing or socially desirable reporting, motivated under-reporting or other behaviors. These findings are generally accepted despite recognition that survey attitude studies are susceptible to selection effects that may bias assessments of surveys in a positive direction (Goldman 1944; Vannieuwenhuyze, Loosveldt and Molenberghs 2012).

Yet the extent to which insights from existing scholarship on survey attitudes can guide understanding of the MENA survey climate is limited by two important factors – one empirical and one theoretical. First, while extant research has broadly differentiated between general attitudes toward surveys and attitudes toward specific questionnaire formats and modes (Hox, de Leeuw and Vorst 1995), studies of survey attitudes in the West have not produced a consensus on the best way to measure and classify survey attitudes. Scholars have commonly used exploratory and sometimes confirmatory factor analysis to map the structure of survey attitudes, but this has not led to agreement about the number and character of individual attitude dimensions. For instance, Goyder (1986) reduces twelve questions about surveys to four underlying attitudinal factors. Rogelberg et al. (2001) employ six items to arrive at two dimensions. Stocké (2001), meanwhile, constructs an eight-item ‘Attitudes towards Surveys Scale’, the components of which load highly on a single factor.

Nor have meta-analyses led to agreement. Loosveldt and Storms (2008) identify from previous studies five different factors that affect individuals’ willingness to participate in a survey. They argue that a respondent's decision about participation:

[W]ill be positive when he or she considers an interview a pleasant activity (survey enjoyment), which produces useful (survey value) and reliable (survey reliability) results and when the perceived cost of cooperation in the interview (time and cognitive efforts; = survey cost) and impact on privacy (survey privacy) are minimal (Loosveldt and Storms 2008, 77).

But more recent work by de Leeuw et al. (2010) retains only three of these factors in its ‘Survey Attitude Scale’. Thus, after six decades of investigation, and despite general agreement that more positive attitudes induce greater cooperation and therefore better data, survey researchers working in Western settings with long histories of opinion polling still have not arrived at a common understanding of the elements that constitute survey attitudes.

The second factor limiting the relevance for MENA of existing research on survey attitudes is its theoretical, as opposed to merely geographical, grounding in the Western political context. Indeed, the very first survey on surveys, conducted in 1944, aimed to assess public views about ‘the role of polls in democracy’ (Loosveldt and Joye 2016, 69). This conceptual connection between opinion polling and participatory politics, notably elections, underlies extant studies of survey attitudes, and it raises the question of whether and how the nature and effects of survey attitudes uncovered in the West might differ in countries and world regions with very dissimilar political institutions. Additionally, the democratic lens through which scholars have tended to view survey attitudes suggests that previous work may have overlooked other, context-driven concerns of people residing in non-Western and non-democratic settings. These include the use of surveys for purposes of political surveillance and manipulation rather than political participation, and the politically charged association of survey research with the Western world.

Survey Climate and Political Climate in the MENA Region

It is perhaps natural, then, to ask whether the authoritarian political climate of the Arab world might create an especially inhospitable survey climate. As Sadiki (2009, 252) observes in his wide-ranging study of autocratic regimes in the Arab world, ‘[i]t is no exaggeration to say that “public opinion” has not had any presence to speak of in the Arabic political vocabulary’. Accordingly, he suggests, the fact that Arab publics are largely excluded from decision making might lead people to value surveys less than do the citizens of more democratic societies, in which public opinion has a clearer impact on policy. Other aspects of the Arab world's political environment may also make the region's survey climate inhospitable. A weak capacity for scientific surveys, combined with official restrictions on polling, may make MENA publics more skeptical of survey reliability than publics elsewhere. Privacy concerns surrounding surveys may also be amplified in places, such as the Middle East, where social science research may be subject to surveillance.

In these and other ways, it is possible that the MENA political landscape fosters negative attitudes toward survey research for reasons that are unrelated to its Western connotations. Yet we also theorize that, in the Middle East and perhaps elsewhere, an important and until now neglected dimension of attitudes towards survey research does in fact stem from its multifaceted association with the West. This association has both an epistemological and a political aspect, in addition to the fact that survey research was developed and is most widely used in Western countries. With respect to epistemology, survey research is part of a data collection and analysis toolkit that is centrally concerned with measuring and accounting for variance, particularly at the individual level of analysis. With respect to politics, survey research represents, at least for some in the Arab world, a methodology that has been employed to produce information that can support Western imperial interests in the region.

That such concerns color the MENA survey-taking climate is supported by several recent survey experiments undertaken in Arab countries. These have shown that Arab citizens with higher a priori levels of hostility toward the West are less likely to take part in surveys sponsored by Western governments (Corstange 2014; Corstange 2016), and, similarly, that citizens report more negative views of policies (Bush and Jamal 2015) and political candidates (Corstange and Marinov 2012) when they are endorsed by the United States. We hypothesize and test for a similar but more general mechanism: that viewing surveys as inherently in the service of Western scientific or state interests may dampen survey response and increase the likelihood of early termination. The latter effect may occur if participants form negative judgments about a survey's purpose only after they have started the interview and begun answering questions.

It is also possible that latent attitudes toward survey research may interact with the objective attributes of surveys to produce conditional effects on survey behavior. In particular, worry over the possible misuses of surveys in the Arab political context may generate a kind of mistrust or suspicion, but these attitudes may be activated and impact participation only in combination with specific survey characteristics, such as a survey sponsor or survey topic that Arab respondents are inclined to distrust. This proposition is consistent with experimental results from other authoritarian settings, including Latin America, where respondents have been seen to use the observable attributes of survey enumerators as a heuristic for judging the political intentions of a survey (Bischoping and Schuman 1992). We test for such conditionalities in the analysis to follow.

Data and Case Selection

Our contribution is based on data from the first systematic assessment of survey attitudes in an Arab country. This original and nationally representative survey interviewed 751 citizens and 934 non-citizen residents of the Gulf state of Qatar. Findings from an investigation in any one Arab country cannot, of course, be assumed to characterize all Arab countries, and so caution is necessary when reflecting on the broader applicability of our results. Nevertheless, the case of Qatar offers key methodological and theoretical advantages for a study of survey attitudes in the Arab world.

First, Qatar's extreme diversity allows comparison across cultural–geographical groupings within a single survey setting. A small, resource-exporting monarchy, Qatar is home to 2.7 million residents, of whom around 300,000 are Qatari citizens (Snoj 2017). The remaining population consists of Arab and non-Arab expatriate workers from around the world. More than fifty countries, including seventeen of the twenty-two member states of the Arab League, are represented in our survey.Footnote 6 Moreover, this expatriate population in Qatar, as elsewhere in the Gulf region, is highly transient. The median length of residence in Qatar among expatriates in our sample is seven years, so individuals can for the most part be expected to retain the values, traditions and experiences of their home countries, including those related to survey research.

Qatar is also a fitting case from a conceptual standpoint, as it exhibits the country-level features commonly cited as barriers to obtaining reliable survey data from the Arab region. These include a lack of democracy and the absence of a survey tradition, coupled with a recent proliferation of surveys. Qatar is a hereditary monarchy rated as having the lowest possible level of democracy for all years since independence according to the widely used Polity IV measure of regime type (Marshall, Jaggers and Gurr 2002). It is also a newcomer to public opinion polling, both in general and relative to most other Arab states. Independent opinion surveys were absent in the country until a decade ago. Qatar therefore closely fits Sadiki's description, quoted earlier: public opinion traditionally has not had any presence to speak of in the nation's political vocabulary.

However, Qatar has in the last few years moved to the forefront of Arab countries with respect to the quantity and quality of systematic and scientific survey research. In 2008, a survey research institute was established at the national Qatar University and soon began conducting rigorous and nationally representative surveys of both Qatari citizens and the country's expatriate population. Further, given the country's small population, the likelihood of being selected for a survey, or knowing someone who has been selected, is relatively high. Qatar's citizens and resident expatriates have thus had opportunities during the last few years to experience surveys, either directly or indirectly, which has probably led them to form opinions about surveys.

For these reasons, Qatar represents a very appropriate setting in which to observe attitudes toward surveys and the impact of these orientations on survey behavior.

Our survey was conducted face to face in May 2017 by the Social and Economic Survey Research Institute at Qatar University. Households were selected randomly from a comprehensive frame via proportionate stratified sampling, using Qatar's administrative zones as strata. Within households, individuals were selected through software randomization, with gender pre-specified (Le et al. 2014). The survey was conducted in Arabic or English by bilingual enumerators. The response rate, following the American Association for Public Opinion Research (AAPOR) definition RR3, was 35.2 per cent, and the margin of sampling error was 3.1 per cent. Importantly, there was no significant difference in participation rates between Arabs and non-Arabs that might confound analysis of group-based variation in survey attitudes.Footnote 7
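The sampling and response-rate quantities above can be made concrete with a short sketch. It is not the institute's procedure: the stratum sizes, case-disposition counts and eligibility rate `e` are hypothetical inputs, the largest-remainder rounding rule is our assumption, and `margin_of_error` uses the textbook normal approximation with an optional design effect `deff`. The RR3 formula follows the AAPOR Standard Definitions, in which only complete interviews enter the numerator and cases of unknown eligibility are discounted by `e`.

```python
import math


def proportionate_allocation(stratum_sizes, n):
    """Allocate a total sample of n across strata in proportion to stratum size.

    stratum_sizes: hypothetical household counts per stratum (e.g. per zone).
    Largest-remainder rounding (an assumption) makes the allocations sum to n.
    """
    total = sum(stratum_sizes)
    quotas = [n * size / total for size in stratum_sizes]
    alloc = [math.floor(q) for q in quotas]
    # Hand out units lost to flooring, largest fractional remainder first.
    leftover = n - sum(alloc)
    order = sorted(range(len(quotas)), key=lambda h: quotas[h] - alloc[h], reverse=True)
    for h in order[:leftover]:
        alloc[h] += 1
    return alloc


def aapor_rr3(I, P, R, NC, O, UH, UO, e):
    """AAPOR Response Rate 3.

    I: complete interviews, P: partial interviews, R: refusals and break-offs,
    NC: non-contacts, O: other eligible non-interviews, UH/UO: cases of unknown
    eligibility, e: estimated share of unknown-eligibility cases that are eligible.
    """
    return I / ((I + P) + (R + NC + O) + e * (UH + UO))


def margin_of_error(n, p=0.5, deff=1.0, z=1.96):
    """Half-width of a normal-approximation interval for a proportion,
    inflated by a (hypothetical) design effect."""
    return z * math.sqrt(deff * p * (1 - p) / n)
```

Under simple random sampling with n = 1,685 (751 citizens plus 934 non-citizens) and p = 0.5, `margin_of_error(1685)` comes to roughly 0.024. The text does not say how its 3.1 per cent figure was computed, so the `deff` argument is included only to show how clustering or weighting would widen the interval.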

Methods

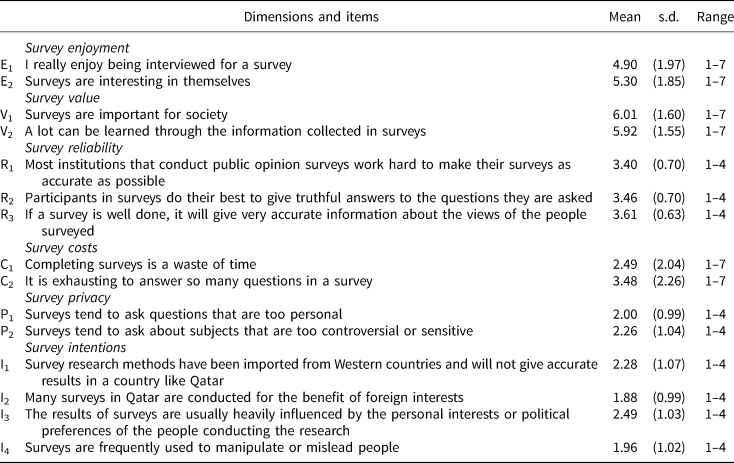

The survey was designed to capture respondent attitudes toward survey research and to offer behavioral measures of the effects of these attitudes. The interview schedule contained items borrowed or adapted from previous investigations, and it also included original questions developed by the authors to explore aspects of surveys that relate to conditions prevailing in the MENA region and thus might not be captured adequately in batteries developed for Western contexts (see Table 1).

Table 1. Items for the six dimensions of survey attitudes in Qatar

Note: E1, E2, V1, V2, C1 and C2 are from the Survey Attitude Scale of de Leeuw et al. (2010); P1 and R2 are from Loosveldt and Storms (2008); R1 and R3 are similar to other items used by Loosveldt and Storms (2008); and P2, I1, I2, I3 and I4 were created by the authors. Response code 1 corresponds to ‘strongly disagree’ and the maximum category (4 or 7, depending on the item) to ‘strongly agree’. Missing values are omitted from the analysis.

In line with previous studies, we utilize factor analysis to explore how items cluster in order to develop a conceptual map of respondent perceptions of surveys. We use exploratory rather than confirmatory factor analysis, both because prior studies have not resulted in an agreed factor structure, and because our theory predicts one or more new attitudinal dimensions connected to the MENA survey climate. Items with high loadings on a common factor after rotationFootnote 8 measure the same underlying concept and, hence, constitute a unidimensional measure. Unidimensionality offers evidence of item reliability, a basis for inferring validity, and justification for combining items into a multi-item index. Due to the number of items in the measurement model and limited subsample sizes, we group all respondents together in the factor analysis. We create variables from predicted factor scores to measure each of the identified dimensions of survey attitudes.Footnote 9 We first use the variables generated from factor analysis as dependent variables to evaluate cultural–geographical group differences in attitudes. Thereafter, we use them as independent variables to investigate the effects of attitudes on survey behavior, conditional on group.
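Footnote 8 specifies the rotation the authors used. Purely as an illustration, the sketch below assumes an orthogonal varimax rotation and implements Kaiser's pairwise version in plain Python. It omits the extraction step and Kaiser row normalization, and it takes an already-extracted loading matrix (a list of rows, one per item) as input. Each pass rotates every pair of factor columns by the closed-form angle that maximizes the variance of squared loadings, which pushes each item toward a high loading on one factor and near-zero loadings on the others.

```python
import math


def varimax_criterion(L):
    """Raw varimax criterion: summed variance of squared loadings per factor."""
    p, k = len(L), len(L[0])
    total = 0.0
    for j in range(k):
        sq = [L[i][j] ** 2 for i in range(p)]
        mean = sum(sq) / p
        total += sum((s - mean) ** 2 for s in sq) / p
    return total


def varimax(L, max_sweeps=100, tol=1e-10):
    """Orthogonal varimax rotation of a p x k loading matrix (list of rows).

    Kaiser's pairwise method without row normalization (an assumption):
    each factor pair is rotated by the angle that maximizes the raw
    criterion, sweeping until no pair moves by more than tol radians.
    """
    L = [list(row) for row in L]
    p, k = len(L), len(L[0])
    for _ in range(max_sweeps):
        largest = 0.0
        for j in range(k - 1):
            for m in range(j + 1, k):
                u = [L[i][j] ** 2 - L[i][m] ** 2 for i in range(p)]
                v = [2 * L[i][j] * L[i][m] for i in range(p)]
                A, B = sum(u), sum(v)
                C = sum(a * a - b * b for a, b in zip(u, v))
                D = 2 * sum(a * b for a, b in zip(u, v))
                # Optimal angle: tan(4*phi) = (D - 2AB/p) / (C - (A^2 - B^2)/p).
                phi = math.atan2(D - 2 * A * B / p, C - (A * A - B * B) / p) / 4
                largest = max(largest, abs(phi))
                c, s = math.cos(phi), math.sin(phi)
                for i in range(p):
                    x, y = L[i][j], L[i][m]
                    L[i][j] = x * c + y * s
                    L[i][m] = -x * s + y * c
        if largest < tol:
            break
    return L
```

After rotation, each item can be assigned to the factor on which its absolute loading is largest. Because the rotation is orthogonal, it only redistributes common variance among factors, so each item's communality (the row sum of squared loadings) is unchanged.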

Because we do not observe the survey attitudes of non-participants, we cannot assess the impact of attitudes, either alone or in combination with manipulated survey attributes (cf. Corstange 2014; Corstange 2016), on participation in our survey. Therefore, we embed within our survey two experiments that afford behavioral measures of the effects of the attitude dimensions identified in the factor analysis.

Our first measure comes from a conjoint experiment in which respondents were presented with a description of a hypothetical survey and then asked to rate their likelihood of participating. The conjoint technique simulates the complex nature of the survey participation decision, in which respondents must weigh a combination of pertinent factors. The hypothetical survey was randomly assigned four objective attributes that the literature suggests may affect participation: survey sponsor, topic, mode and length. The survey's sponsor was given as either a Qatari government institution, a university in Qatar, a private company in Qatar or an international agency. The survey's topic was cultural, economic or political. Its mode was either face to face or telephone. Its length was either 10 or 20 minutes for a telephone survey, and 30 or 60 minutes for face-to-face interviews.Footnote 10 The selection of treatments was informed both by the relevant scholarly literature, which is based primarily on research in developed democracies, and by our intuition that other, context-driven concerns about surveys might also influence participation in the Arab world. The inclusion of an international sponsor and a political topic reflects the latter consideration. As is typical, respondents were asked to assess three different survey profiles in sequence, in each case rating their intention of taking part as ‘very likely’, ‘somewhat likely’, ‘somewhat unlikely’ or ‘very unlikely’.
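The randomization of the hypothetical survey profiles can be sketched as follows. The attribute levels come from the text; the equal probabilities for each level, the independent draws across a respondent's three profiles, and the variable names are assumptions made for illustration. The one structural constraint the text does state, that permissible interview lengths depend on mode, is built into `draw_profile`.

```python
import random

# Attribute levels as listed in the text; label strings and variable names
# are illustrative, not the survey's actual coding.
SPONSORS = [
    "Qatari government institution",
    "University in Qatar",
    "Private company in Qatar",
    "International agency",
]
TOPICS = ["Cultural", "Economic", "Political"]
# Permissible interview lengths (minutes) depend on the randomized mode.
LENGTHS_BY_MODE = {"Face to face": [30, 60], "Telephone": [10, 20]}


def draw_profile(rng):
    """Draw one hypothetical survey profile; equal level probabilities are assumed."""
    mode = rng.choice(list(LENGTHS_BY_MODE))
    return {
        "sponsor": rng.choice(SPONSORS),
        "topic": rng.choice(TOPICS),
        "mode": mode,
        "length_min": rng.choice(LENGTHS_BY_MODE[mode]),
    }


def profiles_for_respondent(rng, n_profiles=3):
    """Independently draw the profiles one respondent rates in sequence (assumed)."""
    return [draw_profile(rng) for _ in range(n_profiles)]


if __name__ == "__main__":
    rng = random.Random(2017)  # fixed seed only so the example is reproducible
    for profile in profiles_for_respondent(rng):
        print(profile)
```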

Our design is based on the well-known conjoint approach of Hainmueller, Hopkins and Yamamoto (2014) and Hainmueller and Hopkins (2015). Variables were left in their native metric, and the average conditional interactive effects (ACIEs) reported in the following section reflect the effects of treatments in the metric of the dependent variable, conditional on respondent cultural–geographical category. Because all survey characteristics were randomly assigned, the ACIEs indicate which attributes have larger effects than others.
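A simple way to see what a subgroup-conditional effect measures is a difference in mean ratings. The sketch below assumes ratings coded 1 (‘very unlikely’) to 4 (‘very likely’) and rows with hypothetical keys `group` and `rating` plus one key per attribute. It is not the regression estimator of Hainmueller, Hopkins and Yamamoto, which also accounts for the clustering of three ratings within each respondent; for an attribute randomized uniformly and independently of the others, however, the difference in means targets the same average effect. Length is the exception: its levels are nested within mode, so a naive difference in means for length would mix in the effect of mode.

```python
from statistics import mean

# Hypothetical numeric coding of the four-point intention scale.
RATING_CODES = {
    "very unlikely": 1,
    "somewhat unlikely": 2,
    "somewhat likely": 3,
    "very likely": 4,
}


def conditional_effect(rows, attribute, level, baseline, group):
    """Difference in mean rating between profiles showing `level` and profiles
    showing `baseline` for one attribute, among respondents in `group`.

    rows: dicts with hypothetical keys 'group', 'rating' (coded 1-4) and one
    key per attribute. Point estimate only; no standard error is computed.
    """
    treated = [r["rating"] for r in rows
               if r["group"] == group and r[attribute] == level]
    control = [r["rating"] for r in rows
               if r["group"] == group and r[attribute] == baseline]
    return mean(treated) - mean(control)
```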

Unlike in most conjoint analyses, however, our interest lies not only in the effects of the experimental treatments, but also in the impact of generalized attitudes that we expect to predict behavior separately from, or in combination with, the treatments. Thus, after considering the attribute-only model of survey participation, we insert the attitude factors as additional independent variables. We also test theoretically motivated interactions between survey attitudes and politically salient survey characteristics.
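The attitude-by-attribute specification can be illustrated with a hypothetical interaction between an international-sponsor dummy and a respondent's survey-intentions factor score. This is a minimal ordinary-least-squares sketch using the normal equations, written in plain Python so that it is self-contained; the variable names and the choice of interaction are assumptions, and a real analysis would use a statistics package, include the other attributes and cluster standard errors by respondent.

```python
def ols(X, y):
    """Ordinary least squares via the normal equations (X'X) b = X'y,
    solved by Gauss-Jordan elimination with partial pivoting."""
    k = len(X[0])
    XtX = [[sum(row[a] * row[b] for row in X) for b in range(k)] for a in range(k)]
    Xty = [sum(row[a] * yi for row, yi in zip(X, y)) for a in range(k)]
    M = [XtX[a] + [Xty[a]] for a in range(k)]  # augmented matrix
    for col in range(k):
        # Move the row with the largest pivot into place for stability.
        piv = max(range(col, k), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        # Eliminate this column from every other row.
        for r in range(k):
            if r != col:
                f = M[r][col] / M[col][col]
                M[r] = [a - f * b for a, b in zip(M[r], M[col])]
    return [M[a][k] / M[a][a] for a in range(k)]


def design_row(intl_sponsor, intentions_score):
    """Hypothetical regressors: intercept, international-sponsor dummy (0/1),
    survey-intentions factor score, and their product (the interaction)."""
    return [1.0, float(intl_sponsor), intentions_score, intl_sponsor * intentions_score]
```

In this parameterization the sponsor coefficient is the effect of an international sponsor for a respondent whose factor score is zero, and the interaction coefficient shows how that effect changes per unit of the attitude score.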

The second experiment builds on the first by gauging the impact of survey attitudes on actual respondent behavior within our survey, rather than on hypothetical survey participation. Devised by the authors, the experiment uses a transparent screening question to give respondents an easy option of exiting the interview. Respondents were advised that, because of its length, the final section of the survey would be completed by only half of the respondents, and that the basis for this random selection was their birthday: only respondents whose birthday fell in the previous six months would be asked to continue. Since the distribution of birthdays throughout the year is approximately uniform (McKinney 1966), we interpret a proportion of continuing respondents below the expected value of 0.5 as evidence of deliberate falsification, or ‘motivated underreporting’ (Eckman et al. 2014; Tourangeau, Kreuter and Eckman 2012), designed to cut the interview short. We estimate the effects of the survey attitude dimensions on the respondent's likelihood of finishing the survey, as well as differences in these effects across cultural–geographical categories.
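The aggregate comparison with 0.5 can be expressed as a one-sided exact binomial test. If birthdays split evenly across the two halves of the year, the number of respondents who report a birthday in the previous six months follows a Binomial(n, 0.5) distribution, and an unusually small count points to motivated underreporting. The sketch covers only this aggregate check, under that uniformity assumption; the analysis described above goes further and models how the attitude dimensions predict finishing the survey.

```python
from math import comb


def lower_tail_binomial_p(k, n, p=0.5):
    """P(X <= k) for X ~ Binomial(n, p).

    Read as a one-sided exact p-value: k of n respondents said their birthday
    fell in the previous six months (and so continued), against an expected
    share p under uniform birthdays.
    """
    return sum(comb(n, i) * p ** i * (1 - p) ** (n - i) for i in range(k + 1))
```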

In the results sections that follow, we first map the dimensions of survey attitudes among citizens and non-citizen residents of Qatar, and assess how these dimensions correspond to those identified in prior studies of Western populations. We then examine subgroup differences along these dimensions, with respondents divided into five cultural–geographical categories: Qatari (n = 751), Arab non-Qatari (n = 392), South Asian (Indian subcontinent; n = 285), Southeast Asian (Philippines, Malaysia, Indonesia; n = 42) and Western (United States, Canada, Europe, Australia; n = 28).Footnote 11 We next use the results of these mapping operations to determine whether and how survey attitudes influence respondent behavior.

Results: Measuring and Predicting Survey Attitudes

Mapping Survey Attitudes in an Arab Country

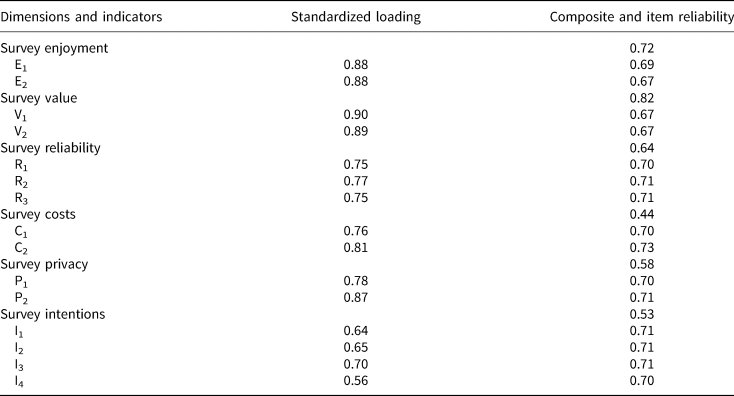

We measure survey attitudes in Qatar using fifteen items from the interview schedule. Factor analysis yields a six-factor solution that largely matches the findings obtained in other contexts but also reveals one additional dimension not identified in previous studies. Table 1 presents the items for these six dimensions with means and standard deviations, while Table 2 gives the results of the factor analysis. For each dimension, the two to four items specified as its measures have high standardized loadings on that dimension. All loadings in the model are significant (p < 0.001), and, except for one dimension, all loadings are above 0.7. This supports the convergent validity of the model. Correlations between the latent factors are low, the highest being between survey enjoyment and survey value (0.56). The highest correlation between any two other factors is 0.27.Footnote 12 This indicates that each dimension measures a different aspect of attitudes toward surveys and supports the model's discriminant validity. Internal-consistency reliability (Cronbach's α) is acceptable at 0.67 or above, although some of the composite reliability values are lower.Footnote 13
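The two reliability statistics mentioned above can be computed as follows. This is a sketch: `cronbach_alpha` uses the standard variance-ratio formula, and `composite_reliability` uses a commonly used formula for standardized loadings that assumes uncorrelated measurement errors; the text does not specify exactly how its composite reliability values were obtained. Population variances are used throughout; because alpha depends only on a ratio of variances, using sample variances consistently gives the same result.

```python
from statistics import pvariance


def cronbach_alpha(items):
    """Cronbach's alpha for k items, each a list of scores from the same respondents.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of summed scale)
    """
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # each respondent's scale score
    item_var = sum(pvariance(scores) for scores in items)
    return k / (k - 1) * (1 - item_var / pvariance(totals))


def composite_reliability(loadings):
    """Composite reliability from standardized loadings, assuming uncorrelated
    errors: (sum of loadings)^2 / ((sum of loadings)^2 + sum of (1 - loading^2))."""
    s = sum(loadings)
    error = sum(1 - lam ** 2 for lam in loadings)
    return s ** 2 / (s ** 2 + error)
```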

Table 2. Results of a factor analysis of survey attitudes in Qatar

Note: right-hand column reports Cronbach's α.

As noted, the factors identified in the model largely match those of previous studies conducted in very different settings, most closely those of Loosveldt and Storms (2008), who worked in Belgium. Beyond the notable implication that the dimensions of survey attitudes may not differ much across populations, this result also lends additional confidence to our measurement model. We follow previous work in labeling five of our attitude dimensions: survey enjoyment, survey value, survey reliability, survey costs and survey privacy. We label the sixth factor, which has not been identified previously, ‘survey intentions’. This dimension concerns the purpose of surveys, whether well intentioned or ill intentioned. Taken together, these six factors provide a conceptual map of the survey climate as perceived by individuals in Qatar.

It is instructive that the factor analysis reveals three distinct dimensions of attitudes associated with survey burden: one associated with the cognitive or time cost of surveys (survey costs), another related to the invasiveness of surveys (survey privacy), and a third related to the possible manipulative or politicized purposes of some surveys (survey intentions). Whereas de Leeuw et al. (2010) found that concerns about time and privacy load on a single factor, and hence constitute a single dimension of survey burden, respondents in Qatar judge these two separately. One possible reason is the high volume of surveys conducted in Qatar over the previous decade relative to the country's small population. This suggests the proposition that the time burden of surveys becomes a separate consideration under conditions of high survey exposure.

The survey value and survey reliability factors have also been observed elsewhere and do not require extensive discussion, except to note that they are conceptually distinct from the other dimensions identified. In Qatar, finding surveys burdensome is a separate consideration from questioning their accuracy or honesty, or from doubting that the information they provide is useful. Similarly, one might have expected the skepticism reflected in the survey intentions factor to form a common dimension with negative judgments about the reliability or value of surveys. Somewhat surprisingly, this is not the case.

A final observation concerns the attitudinal dimension exhibited by our respondents in Qatar but not found by studies mapping survey attitudes in Western societies. This is the survey intentions factor, on which the items with high loadings pertain both to foreign methods and interests and to the motivations of those conducting surveys. These items involve a degree of distrust, or at least skepticism, toward surveys, and it is worth pondering the conditions under which attitudes about Western influences and researcher motivation are strongly interrelated and define a dimension of the survey-taking climate that has not been observed in Western contexts.

This finding about the survey intentions factor may stem from Qatar's vast expatriate population, in which case it would apply in countries with similar demographic characteristics. A more likely, and also more instructive, explanation is that Qatar is an Arab country. The Arab world's relationship with the West has been complex and frequently problematic, and this has often given rise to suspicions about Western, and especially American, activities in the MENA region (for example, Blaydes and Linzer 2012; Jamal 2012; Katzenstein and Keohane 2007). Previous surveys conducted in Qatar and other Gulf states have revealed citizen worries over Western interference in domestic affairs (Gengler 2012; Gengler 2017). If these are indeed the relevant scope conditions, similar findings should be expected in other Arab countries and perhaps in other societies with similar attitudes toward the West.

Cross-Cultural Differences in Survey Attitudes

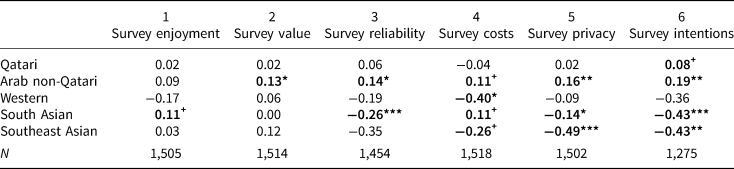

We now consider the extent to which these six dimensions of survey attitudes vary across the distinct cultural–geographical groupings represented in Qatar. We employ an ordinary least squares (OLS) method to estimate differences according to group while controlling for respondent gender, age and education.Footnote 14 The baseline subgroup category is set to Arab expatriates, so that coefficients in Table 3 report differences in attitudes relative to the average of a diverse cross-section of the Arab world. This test matches our theoretical interest in understanding whether Arabs as a broad category of respondents possess systematically different orientations toward surveys than other publics. Here and elsewhere, we treat Qatari citizens separately both because they constitute a distinct population that is sampled separately from expatriates, and to capture possible differences in attitudes based on citizenship status or on Qataris' particular social and political experiences.

Table 3. Survey attitudes of cultural-geographical groups in Qatar, relative to Arab non-Qatari baseline

Notes: estimates by OLS regression; p-values in parentheses; + p < 0.10, * p < 0.05, ** p < 0.01, *** p < 0.001; results of demographic controls and ‘Other’ group category not reported; sampling probability weights utilized.
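The specification behind Table 3 can be sketched as a weighted least squares regression of each standardized factor score on group dummies (Arab expatriates omitted as the reference category) plus demographic controls. The sketch below is an assumption-laden illustration rather than the authors' code: the group labels, the column layout and the use of plain NumPy are ours, the ‘Other’ category is omitted, and it returns point estimates only (the p-values in Table 3 would additionally require design-appropriate standard errors, which are not shown).

```python
import numpy as np

# Hypothetical labels; the "Other" category and the real control variables
# (gender, age, education) are stand-ins, not the authors' coding.
GROUPS = ["Arab expat", "Qatari", "Western", "South Asian", "Southeast Asian"]
BASELINE = "Arab expat"


def design_matrix(group, controls, baseline=BASELINE, groups=GROUPS):
    """Intercept, one 0/1 dummy per non-baseline group, then control columns."""
    group = np.asarray(group)
    controls = np.asarray(controls, dtype=float)
    levels = [g for g in groups if g != baseline]
    dummies = np.column_stack([(group == g).astype(float) for g in levels])
    X = np.column_stack([np.ones(len(group)), dummies, controls])
    names = ["const"] + levels + [f"control_{j}" for j in range(controls.shape[1])]
    return X, names


def weighted_ols(y, X, w):
    """Weighted least squares point estimates: minimize sum_i w_i (y_i - x_i'b)^2."""
    sw = np.sqrt(np.asarray(w, dtype=float))
    y = np.asarray(y, dtype=float)
    beta, *_ = np.linalg.lstsq(X * sw[:, None], y * sw, rcond=None)
    return beta
```

Each non-baseline coefficient is then read as the difference between that group's expected attitude score and that of Arab expatriates with the same gender, age and education.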

The results show that, far from being more negatively oriented toward surveys, both citizen and expatriate Arabs in Qatar hold substantially more positive views of surveys than do individuals from Western, South Asian and Southeast Asian nations. This applies to five of the six attitude dimensions. For survey value and enjoyment there is little subgroup variation, although respondents from Western countries seem to enjoy surveys less than Arabs do (p = 0.07). By contrast, on survey reliability all subgroups except Qataris report more negative evaluations than Arab expatriates. Similarly, on the three dimensions of survey burden, Arab respondents perceive surveys as less burdensome than do Western and Asian residents of Qatar. Western, Southeast Asian and even Qatari respondents report more negative attitudes than Arab expats regarding the time and cognitive costs of survey participation; the two Asian subgroups express greater concern than Arabs over the privacy implications of surveys; and South Asian, Southeast Asian and, ironically, Western respondents are more likely than Arabs to view surveys as serving partisan and foreign interests.

Table 3 shows how the survey attitudes of Arabs differ from those of other respondent types. A related but separate question is whether the attitudes of Arabs and the other cultural–geographical groupings are substantively positive or negative across the six attitudinal dimensions. That is, the findings reported in Table 3 demonstrate that Arab attitudes toward surveys do indeed differ from those of most other groups in Qatar – albeit not in the direction one might have predicted – but the question remains whether one should characterize Arabs or others as being positively or negatively oriented toward surveys. The findings on this question are presented in Table 4, which reports post-regression predicted values by subgroup for all six survey attitude dimensions. Since the factor variables that represent the dimensions are normalized with a mean of 0 and standard deviation of 1, a predicted value different from 0 can be interpreted as representing positive/high or negative/low survey attitudes, depending on its sign. Predicted values are directly interpretable in standard deviation terms, such that a value of 0.5 corresponds to attitudes that are half a standard deviation more positive than average, for example.

Table 4. Predicted values on survey attitude dimensions, by cultural-geographical group

Notes: columns report post-OLS predicted values; + p < 0.10, * p < 0.05, ** p < 0.01, *** p < 0.001; sampling probability weights utilized.
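Table 4's predicted values can be illustrated with predictive margins: every respondent is assigned to a given group while keeping his or her observed controls, and the weighted mean prediction is taken. This is one common definition of post-regression predicted values and an assumption on our part; predictions evaluated at covariate means would be an alternative. The sketch assumes the coefficient vector is ordered as intercept, non-baseline group dummies, then controls (the function name is hypothetical).

```python
import numpy as np


def predictive_margins(beta, controls, groups, baseline, w=None):
    """Average predicted value for each group, assigning every respondent to
    that group while keeping their observed controls (a 'predictive margin')."""
    beta = np.asarray(beta, dtype=float)
    controls = np.asarray(controls, dtype=float)
    n = controls.shape[0]
    levels = [g for g in groups if g != baseline]
    margins = {}
    for g in groups:
        # Dummy pattern for group g (all zeros for the baseline group).
        row = np.array([1.0 if g == lev else 0.0 for lev in levels])
        X = np.column_stack([np.ones(n), np.tile(row, (n, 1)), controls])
        margins[g] = float(np.average(X @ beta, weights=w))
    return margins
```

Because the outcome is a standardized factor score, a margin of, say, 0.5 reads as half a standard deviation above the sample average.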

Table 4 shows that the Arab residents of Qatar are not only more positively inclined toward surveys than other cultural–geographical groups; they also report substantively positive attitudes on each survey dimension. Meanwhile, the attitudes of Qatari citizens are substantively neutral on all but one dimension. The exception is survey intentions, on which Qataris report more positive views than average.

Conversely, the survey attitudes of Westerners in Qatar are negative across all dimensions except survey value, although only one of these results (survey costs) is statistically significant at conventional levels. This is due, at least in part, to the limited size of the Western subsample. South Asian respondents report enjoying surveys and hold more positive views than average about the time and effort required of survey participants, yet they hold negative perceptions about survey reliability, privacy and intentions. Finally, members of the Southeast Asian subgroup, like other respondents, appear to attach positive value to surveys yet may consider them unreliable (neither of these two results is statistically significant), and they find surveys burdensome across all three measured dimensions. In short, Arab residents of Qatar have broadly positive views of surveys, Qatari citizens are mostly neutral, South Asian respondents are mixed, and individuals from Southeast Asian and, to a lesser extent, Western countries have generally negative orientations toward surveys.

Results: The Behavioral Impacts of Survey Attitudes

Survey Attitudes, Attributes and Participation

But how do these attitudes influence Arabs and non-Arabs in Qatar when they are asked to participate in surveys? Here we estimate the behavioral impacts of survey attitudes via our two embedded experiments. We begin with a conjoint model of survey participation that includes only objective survey characteristics: survey mode, length, topic and sponsor. We estimate treatment effects conditional on cultural–geographical category, with all non-Arab respondents grouped together due to insufficient subsample sizes for some groups. We next add to the model the six survey attitude dimensions to examine the extent to which variance in participation is explained by generalized attitudes about surveys compared to the specific attributes of the hypothetical surveys in our experiment. We conclude this analysis by considering how the politically salient survey attributes manipulated in the experiment – topic and sponsor – interact with survey attitudes to affect participation. In particular, we are interested in understanding the possible conditionalities associated with the attitude dimension that has not been identified in previous studies but is an important consideration of survey takers in Qatar, namely survey intentions.
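For readers less familiar with conjoint analysis, the attribute-only model can be sketched as a linear regression of the participation outcome on dummy-coded survey attributes, whose coefficients estimate average marginal component effects (AMCEs) relative to each attribute's baseline level. Because each respondent evaluates several hypothetical surveys, standard errors are clustered by respondent. The sketch below is a hypothetical illustration: attribute names and levels are placeholders, sampling weights are ignored, and CR0 cluster-robust errors are used without a small-sample correction.

```python
import numpy as np


def dummy_code(values, levels, baseline):
    """0/1 columns for every level except the baseline (reference) level."""
    values = np.asarray(values)
    return np.column_stack([(values == lev).astype(float)
                            for lev in levels if lev != baseline])


def ols_cluster_robust(y, X, clusters):
    """OLS coefficients with CR0 cluster-robust standard errors
    (sandwich estimator, no small-sample correction)."""
    y = np.asarray(y, dtype=float)
    clusters = np.asarray(clusters)
    bread = np.linalg.inv(X.T @ X)
    beta = bread @ X.T @ y
    resid = y - X @ beta
    meat = np.zeros((X.shape[1], X.shape[1]))
    for c in np.unique(clusters):
        idx = clusters == c
        score = X[idx].T @ resid[idx]  # summed score contribution of one respondent
        meat += np.outer(score, score)
    vcov = bread @ meat @ bread
    return beta, np.sqrt(np.diag(vcov))
```

In use, the design matrix stacks an intercept with `dummy_code(sponsor, ["university", "government", "private company", "international"], "university")` and the analogous topic, mode and length columns, and `ols_cluster_robust` is fitted separately within each respondent group to obtain group-conditional effects.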

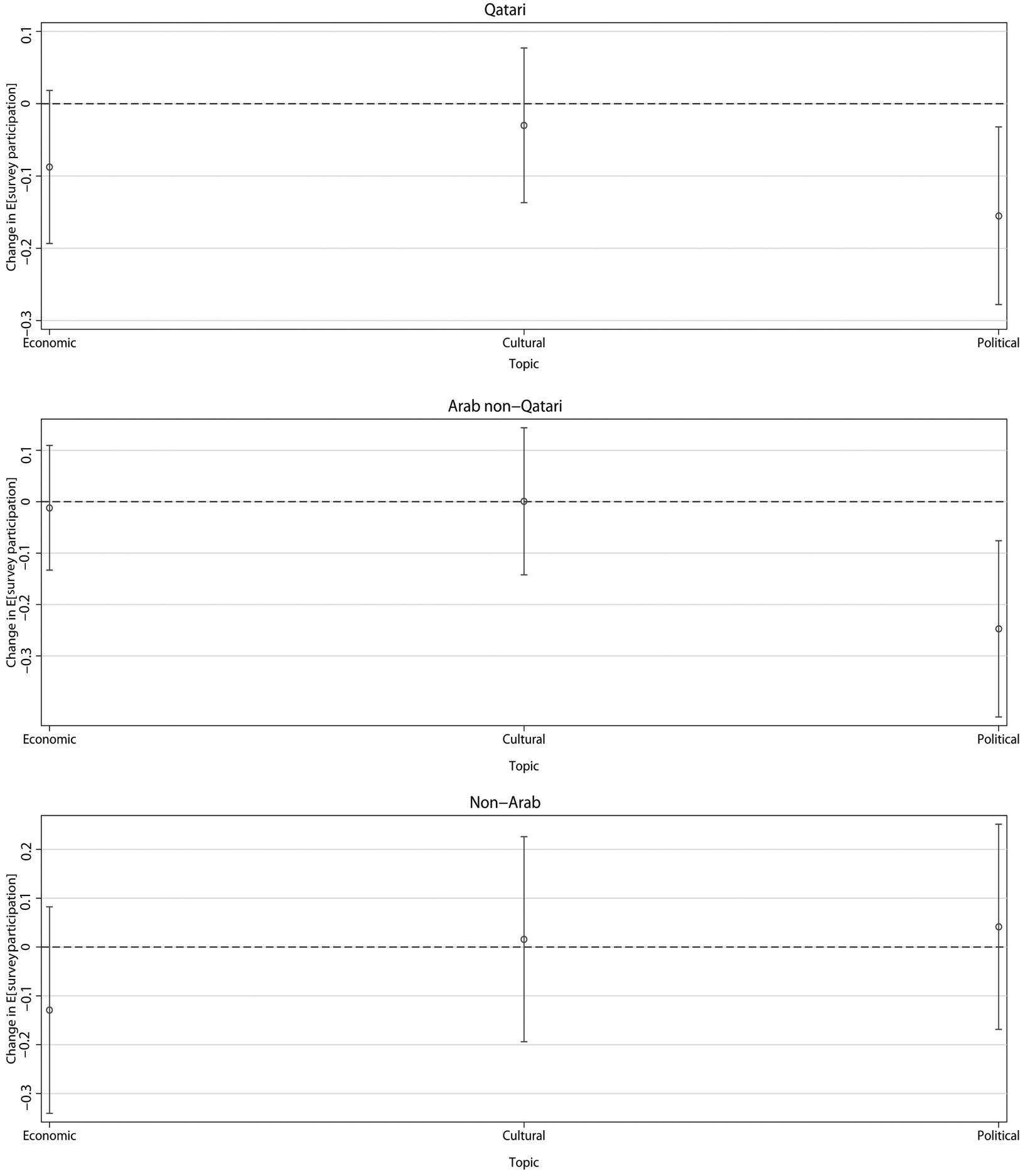

The findings of the attribute-only model, visualized in Figure 1, reveal robust treatment effects across all four survey attributes considered, as well as notable similarities and differences across respondent types.Footnote 15 All subgroups prefer shorter surveys. Arab residents and Qatari citizens prefer telephone surveys to face-to-face interviews, whereas the estimated effect of face-to-face mode on participation among non-Arab respondents is also negative but not statistically significant. Compared to the baseline of an economically focused survey, all population groups are substantially less likely to participate if the survey topic is political in nature. Yet the size of this effect is twice as great among Arab and non-Arab expatriates, among whom it is indeed the strongest of any treatment effect. Perhaps unexpectedly, then, citizens in Qatar appear to be less disinclined than Arab non-citizens to participate in political surveys (p = 0.065). This suggests that the negative treatment effects witnessed among non-citizen respondents may be at least partially due to a lack of interest or engagement in local politics, rather than to apprehensions over divulging political opinions in a non-democratic environment.

Figure 1. Effects of survey attributes on participation intentions

A more qualitative disparity emerges in the case of survey sponsor. Non-Arab respondents have no perceptible preferences about who sponsors a survey: they are equally willing to participate whether a survey is commissioned by a university, state institution, local private company or international organization. Conversely, relative to the baseline of a university sponsor, both Qatari and non-Qatari Arabs report a much lower willingness to participate in surveys for private companies, and an international sponsor also dampens participation among Qatari respondents. Finally, Qataris are no less likely to participate when a survey is sponsored by a government entity, while Arab expatriates are possibly more likely to participate in such surveys (p = 0.109). We thus find no evidence of a general fear among Qatar's citizens or residents of participating in a survey connected to the state.Footnote 16 Rather, it is surveys commissioned by companies and/or foreign entities that are associated with a lower willingness to participate among Arab populations in Qatar.

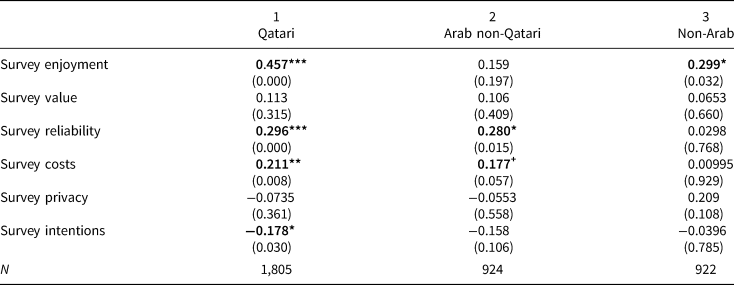

However, the decision to take part in a survey is not an isolated response to the parameters of a given participation request; it is also shaped by overall feelings about surveys. For one who dislikes pizza, as it were, the choice of toppings is irrelevant. To better understand the relative importance of survey attitudes and objective survey attributes in influencing participation, we now add our six survey attitude dimensions to the conjoint model of participation. These are introduced into the model sequentially to show how coefficient and error estimates change with additional regressors. As before, the effects are estimated conditional on respondent type. Table 5 reports the results of this analysis in the form of post-estimation marginal effects.Footnote 17 To make the effects substantively interpretable, columns report estimated changes in participation resulting from a change in survey attitudes from substantively negative (−1 standard deviation) to positive (+1 standard deviation) on that dimension.

Table 5. Marginal effects of survey attitudes on participation, by respondent group

Notes: p-values in parentheses; + p < 0.1, * p < 0.05, ** p < 0.01, *** p < 0.001; columns report estimated marginal effects on participation evaluated at −1 and +1 standard deviations of the respective survey attitude dimension; standard errors clustered by respondent; sampling weights utilized.
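With the attitude dimensions entered linearly, the −1 to +1 SD contrast reported in Table 5 can be computed by predicting participation for every observation with the attitude fixed at each value and averaging the difference. A minimal sketch, assuming a hypothetical function name and an attitude that enters as a single column with no interaction terms:

```python
import numpy as np


def attitude_contrast(beta, X, col, low=-1.0, high=1.0, w=None):
    """Average change in predicted participation when the attitude in column
    `col` moves from `low` to `high` (in SD units), other columns as observed."""
    beta = np.asarray(beta, dtype=float)
    X_lo = np.array(X, dtype=float)
    X_hi = np.array(X, dtype=float)
    X_lo[:, col] = low
    X_hi[:, col] = high
    return float(np.average(X_hi @ beta - X_lo @ beta, weights=w))
```

In a purely additive linear model such as this sketch assumes, the contrast reduces to (high − low)·β, that is 2β; models with interaction terms would require recomputing those columns too, which this sketch does not do.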

The findings are notable in several respects. First, the effects of the attitude dimensions on participation are on par with those of the most impactful experimental treatments manipulating survey topic and sponsor. Second, including survey attitudes in the model of participation substantially improves overall fit in the Arab and especially the Qatari models, but not in the model of participation for non-Arabs. The full specification that includes all survey attributes and attitudes achieves an adjusted R² of 0.132 for Qataris, for instance, compared to 0.054 in the attribute-only model. Among non-Arabs, the difference in fit is a mere 0.007.
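The adjusted R² used to compare specifications is 1 − (1 − R²)(n − 1)/(n − k − 1), where k counts regressors other than the intercept, so adding the six attitude dimensions is penalized and an increase in fit is not purely mechanical. A minimal unweighted sketch (hypothetical helper; the published models also use sampling weights):

```python
import numpy as np


def adjusted_r2(y, X):
    """Unweighted OLS adjusted R^2; X must include an intercept column."""
    y = np.asarray(y, dtype=float)
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    centered = y - y.mean()
    r2 = 1.0 - (resid @ resid) / (centered @ centered)
    n, p = X.shape  # p counts the intercept, so n - p equals n - k - 1
    return 1.0 - (1.0 - r2) * (n - 1) / (n - p)
```

Comparing `adjusted_r2(y, X_attributes)` with `adjusted_r2(y, X_attributes_and_attitudes)` mirrors the comparison reported in the text.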

This disparity highlights another key result: like objective survey attributes, the effects of survey attitudes on participation vary across the three cultural–geographical groupings. Most notably, whereas the perceived reliability of surveys strongly predicts participation for both Arab subgroups irrespective of survey attributes, it exerts no effect among non-Arabs. Participation is also more likely for Qatari citizens and Arab expats when they hold more positive views about the time and cognitive costs of surveys, whereas this consideration does not affect non-Arabs independently of the survey attribute treatments. Compared to Arabs, participation by non-Arabs is more contingent upon the perceived privacy implications of surveys. Indeed, the direction and magnitude of the coefficient estimates suggest only two patterns that are consistent across groups: a positive impact of survey enjoyment, and a null effect of perceived survey value.

Finally, the survey intentions dimension is unique in being a negative predictor of participation among citizens and, with a lower degree of statistical confidence, Arab expats (p = 0.106) in Qatar. That is, more positive views about the intentions of surveys are associated with a reduced likelihood of participation. To help elucidate this result, and more generally to understand the possible conditionalities associated with our new attitude dimension not found in previous studies, we investigate the interaction between perceived survey intentions and survey attribute treatments that could signal a negative political purpose. Figures 2 and 3 give the effects of the survey intentions factor conditional on survey sponsor and topic, respectively. In each case, the findings reveal that the intentions dimension depresses participation among Arabs only for surveys whose topics (politics) and sponsors (polling companies and foreign organizations) they tend to disfavor, whereas the intentions dimension never affects participation among non-Arabs.

Figure 2. The impact of survey intentions, by survey sponsor and respondent group

Figure 3. The impact of survey intentions, by survey topic and respondent group
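The conditional effects in Figures 2 and 3 correspond to an interaction model: the slope of survey intentions under a given sponsor (or topic) equals its baseline slope plus the relevant interaction coefficient. A minimal OLS sketch with hypothetical names, ignoring weights, controls and standard errors:

```python
import numpy as np


def conditional_slopes(y, intentions, sponsor, levels, baseline):
    """Fit y ~ intentions * sponsor by OLS and return the slope of
    `intentions` within each sponsor level (baseline slope + interaction)."""
    y = np.asarray(y, dtype=float)
    intentions = np.asarray(intentions, dtype=float)
    sponsor = np.asarray(sponsor)
    others = [s for s in levels if s != baseline]
    D = np.column_stack([(sponsor == s).astype(float) for s in others])
    # Columns: intercept, intentions, sponsor dummies, intentions x dummies.
    X = np.column_stack([np.ones(len(y)), intentions, D, D * intentions[:, None]])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    slopes = {baseline: float(beta[1])}
    for j, s in enumerate(others):
        slopes[s] = float(beta[1] + beta[2 + len(others) + j])
    return slopes
```

Replacing sponsor with topic (for example, economics as baseline and politics as the alternative) gives the topic version.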

The generally favorable attitudes toward surveys observed among Arabs in Qatar thus turn to unfavorable attitudes under certain conditions, namely when the survey topic or sponsor makes people suspicious of its purpose. If views of survey intentions are negative to begin with, suspicious sponsorship is not required to make Arabs distrustful and dampen participation. But if one is predisposed to be positive, as the majority of men and women in our sample are, that changes only when the sponsor or topic is distrusted, reflecting a sort of dissonance between preconceived attitudes and contradictory feelings prompted by a specific request for survey participation.Footnote 18 That it is sponsorship by private polling firms and international entities, rather than the government, that signals negative intentions and so contributes to lowering participation in Qatar accords with the negative effects of these two treatments among Qatari and non-Qatari Arabs observed in Figure 1.

Survey Attitudes and Early Termination

We conclude our study by assessing the effects of survey attitudes on actual rather than hypothetical respondent behavior. Because we lack data on non-participants, our design does not allow us to estimate the impact of survey attitudes on participation in the survey itself. Instead, we examine respondents' willingness to complete the entire interview schedule when offered an easy exit via a simple filter question. The introduction to the final section of the interview informed respondents that, to shorten the survey administration time, half of them would be randomly selected into the final module: those whose birthday fell in the past six months would be asked to continue, and for the others the survey would conclude. Having clearly spelled out the purpose and implications of answering this screening question, we expected that respondents whose birthday occurred within the past six months but who wished to terminate the survey might lie about when they were born, thereby ending the survey. The survey did not collect information about the respondent's birth date, so respondents did not need to fear being caught in a lie.

Because birthdays are distributed roughly evenly across the calendar year, we expected that, absent falsification, approximately 50 per cent of respondents would continue into the final survey section. However, only 39 per cent of respondents reported a birthday in the past six months and thus completed the full schedule. This proportion varies by respondent type: 35 per cent of Qataris, 39 per cent of Arab expats and 45 per cent of non-Arabs finished the entire survey. We take this result as an indication that some respondents lied in order to exit the survey. To understand the determinants of such motivated under-reporting, we consider the extent to which survey attitudes predict the likelihood of drop-out.
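The gap between the expected and observed continuation rates can be translated into a rough falsification rate. Under the simplifying assumptions that exactly half of respondents are eligible and that no ineligible respondent falsely claims a recent birthday in order to continue, the share of eligible respondents who denied their birthday is (0.5 − observed)/0.5. This back-of-the-envelope calculation is ours, not the authors', and it ignores sampling error:

```python
def implied_denial_share(observed, expected=0.5):
    """Share of truly eligible respondents who must have denied a recent
    birthday, assuming no ineligible respondent falsely claimed one."""
    if not 0.0 < expected <= 1.0:
        raise ValueError("expected must be in (0, 1]")
    return max(0.0, (expected - observed) / expected)


# Continuation rates reported in the text.
for label, rate in [("All", 0.39), ("Qataris", 0.35),
                    ("Arab expats", 0.39), ("Non-Arabs", 0.45)]:
    print(f"{label}: {implied_denial_share(rate):.0%}")
```

Under these assumptions the reported rates imply that roughly 22 per cent of eligible respondents overall (30 per cent of Qataris, 22 per cent of Arab expats and 10 per cent of non-Arabs) ended the interview early by misreporting.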

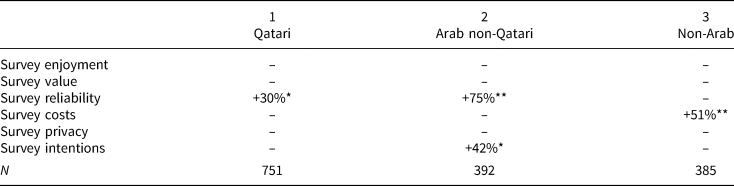

Our general hypothesis was that respondents with more negative survey attitudes would be more likely to terminate the interview. In practice, however, three attitude dimensions are unrelated to willingness to continue the survey: survey enjoyment, survey value and survey privacy. As shown in Table 6, none of these is associated with continuation (birthday) for any respondent group. The three remaining factors (survey reliability, survey costs and survey intentions) do have significant predictive power, but only among particular respondent groups.Footnote 19

Table 6. Marginal effects of survey attitudes on continuation (birthday), by respondent group

Notes: columns report post-logit marginal effects as percentage changes in the predicted probability of continuation (a birthday in the past six months) evaluated at −1 and +1 standard deviations of the respective survey attitude dimension; * p < 0.05, ** p < 0.01, *** p < 0.001; sampling probability weights utilized.
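The quantities in Table 6 are relative changes in a predicted probability from a logit model. One way to compute them is sketched below, under our own assumptions: hypothetical names, the attitude entered as one column, and predictions averaged over the sample.

```python
import numpy as np


def logistic(z):
    """Inverse logit."""
    return 1.0 / (1.0 + np.exp(-z))


def logit_relative_change(beta, X, col, low=-1.0, high=1.0, w=None):
    """Average predicted probability with the attitude in column `col` set to
    `low` and to `high`, and the relative (per cent) change between them."""
    beta = np.asarray(beta, dtype=float)
    X_lo = np.array(X, dtype=float)
    X_hi = np.array(X, dtype=float)
    X_lo[:, col] = low
    X_hi[:, col] = high
    p_lo = float(np.average(logistic(X_lo @ beta), weights=w))
    p_hi = float(np.average(logistic(X_hi @ beta), weights=w))
    return p_lo, p_hi, 100.0 * (p_hi / p_lo - 1.0)
```

The last value is a relative change, not a difference in percentage points: moving from a 0.25 to a 0.75 probability is a 200 per cent increase but a 50-point difference.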

Among Qatari respondents, survey reliability is the only dimension related to survey completion: Qataris are 30 per cent more likely to enter the final module if they view surveys as being reliable. Survey reliability also predicts the likelihood of continuation among non-Qatari Arabs, who are a striking 75 per cent more likely to finish the interview if they possess high rather than low values on this dimension. Survey intentions also has predictive power among non-Qatari Arab expats, who are 42 per cent more likely to continue if they have positive views of survey intentions. These Arab expatriates are the only respondent category for which more than one survey attitude is related to the willingness to complete the survey.Footnote 20 Finally, among non-Arab respondents, only the survey costs dimension predicts motivated under-reporting. For this group, the likelihood of continuation increases by 51 per cent when views of the time and cognitive burden of surveys are positive rather than negative. We had expected that any respondent who views surveys as burdensome would be more likely to seek to shorten the survey duration, but in fact this is not the case among the Arab citizens and residents of Qatar.

The effect of attitudes about survey reliability on continuation, conditional on respondent social category, is visualized in Figure 4. Beyond depicting the disparate impacts of this attitude dimension across respondent groups, the figure shows that the higher drop-out rates observed among the two Arab categories reflect the strong impact of their survey attitudes on actual survey behavior, rather than a general propensity toward falsification. Whereas Qatari and non-Qatari Arab respondents who hold negative views of survey reliability (that is, evaluated at −1) have only around a 25 per cent probability of finishing the survey, non-Arab respondents with negative views are nearly twice as likely to do so, at an estimated 45 per cent. As indicated by the flat line denoting non-Arab respondents, survey continuation is unrelated to perceptions of survey reliability for this group (p = 0.597). Meanwhile, for both categories of Arab respondents who view surveys as highly reliable, the estimated probability of continuation is indistinguishable from the corresponding likelihood among non-Arabs.

Figure 4. The effect of survey reliability on continuation (birthday), by respondent group

The opposite result obtains in the case of survey costs. Pessimistic views of the time and cognitive burden of surveys do not depress the likelihood of continuation for Qataris or other Arabs in Qatar, yet these views strongly predict interview completion among non-Arabs. Non-Arab respondents have an estimated 54 per cent probability of finishing the survey if they perceive survey costs positively, compared to 36 per cent if they perceive them negatively. The latter probability is statistically indistinguishable from the probability of continuation among Qataris and Arab non-Qataris, irrespective of where they fall on the costs dimension. (Visualization not shown.)

Conclusion

The expansion of survey research in the Arab world has brought with it questions about the region's survey-taking climate. Do authoritarian political institutions and publics lacking experience with opinion polls undermine the validity of the growing number of surveys being conducted in MENA countries? Findings from this study suggest that these apprehensions are misplaced. In Qatar, a country that typifies these environmental conditions, both citizens and expatriates from a disparate set of Arab countries are actually more positively oriented toward surveys than people from other cultural–geographical regions.

Nevertheless, experimental evidence suggests that ensuring data quality in Arab contexts may entail some challenges. Despite being more positively oriented toward surveys on average, our results show that Arab survey takers are disproportionately sensitive to their subjective impressions about the reliability and intentions of surveys, whereas the survey behavior of non-Arab respondents depends on their enjoyment of survey taking and a survey's perceived cognitive and time burden. Notable, too, is the partial discrepancy between the impacts of survey attitudes on hypothetical willingness to participate in a survey, as measured in the conjoint experiment, and on actual survey behavior, as measured in the birthday experiment. This suggests a promising and potentially very productive avenue for future research: that different considerations may influence the decision to participate and the decision to continue a survey to completion. With respect to our findings from Qatar, it may be that respondents form and update opinions about the reliability, intentions and burden of a survey after it has begun, so these factors predict both participation and drop-out, whereas survey enjoyment and privacy concerns are less connected to a particular survey and so are more closely related to participation.

It is also noteworthy that Qatari citizens are less sensitive to political topics than non-citizen respondents and are not averse to government sponsors, even on political topics. For citizens and resident Arabs, the least acceptable survey sponsors are private and international organizations. Our findings on interaction effects suggest that these sponsors may signal negative intentions; if so, hesitation to participate may stem from worries that survey results will be manipulated or used for nefarious purposes, rather than from the type of sponsor or topic per se. This helps explain why the effects of survey sponsorship differ by cultural–geographical category, and suggests that they may also differ across countries.

Finally, these results highlight the importance of measuring and understanding the impact of attitudes about the perceived purpose of surveys, distinct from their value or reliability. To the authors' knowledge, this dimension has not been identified, or even searched for, in previous quantitative studies of survey attitudes. Of course, public skepticism about the purposes of surveys is not limited to the MENA region, and indeed our findings show that respondents from various other parts of the world tend to hold more negative views along this dimension. Nevertheless, especially given the challenges posed by declining interest in survey participation across many contexts, the effects of this and other survey attitudes merit further study.

Supplementary material

Data replication sets are available in Harvard Dataverse at: https://doi.org/10.7910/DVN/QBPIVL and online appendices at: https://doi.org/10.1017/S0007123419000206.

Acknowledgements

The authors would like to thank the anonymous referees for their helpful feedback and suggestions that greatly contributed to improving the final version of this article. They would also like to thank the Editors for their generous support during the review process.