Approximate discrete entropy monotonicity for log-concave sums

Published online by Cambridge University Press: 13 November 2023

Abstract

It is proven that a conjecture of Tao (2010) holds true for log-concave random variables on the integers: For every  $n \geq 1$, if

$n \geq 1$, if  $X_1,\ldots,X_n$ are i.i.d. integer-valued, log-concave random variables, then

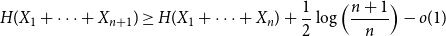

$X_1,\ldots,X_n$ are i.i.d. integer-valued, log-concave random variables, then \begin{equation*} H(X_1+\cdots +X_{n+1}) \geq H(X_1+\cdots +X_{n}) + \frac {1}{2}\log {\Bigl (\frac {n+1}{n}\Bigr )} - o(1) \end{equation*}

\begin{equation*} H(X_1+\cdots +X_{n+1}) \geq H(X_1+\cdots +X_{n}) + \frac {1}{2}\log {\Bigl (\frac {n+1}{n}\Bigr )} - o(1) \end{equation*} $H(X_1) \to \infty$, where

$H(X_1) \to \infty$, where  $H(X_1)$ denotes the (discrete) Shannon entropy. The problem is reduced to the continuous setting by showing that if

$H(X_1)$ denotes the (discrete) Shannon entropy. The problem is reduced to the continuous setting by showing that if  $U_1,\ldots,U_n$ are independent continuous uniforms on

$U_1,\ldots,U_n$ are independent continuous uniforms on  $(0,1)$, then

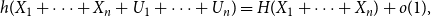

$(0,1)$, then \begin{equation*} h(X_1+\cdots +X_n + U_1+\cdots +U_n) = H(X_1+\cdots +X_n) + o(1), \end{equation*}

\begin{equation*} h(X_1+\cdots +X_n + U_1+\cdots +U_n) = H(X_1+\cdots +X_n) + o(1), \end{equation*} $H(X_1) \to \infty$, where

$H(X_1) \to \infty$, where  $h$ stands for the differential entropy. Explicit bounds for the

$h$ stands for the differential entropy. Explicit bounds for the  $o(1)$-terms are provided.

$o(1)$-terms are provided.
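As a numerical illustration only (not a proof, and not the paper's method), the sketch below checks both displayed statements for one log-concave family. Assumptions: $X_i \sim \mathrm{Binomial}(m, 1/2)$, which is log-concave on the integers and has $H(X_1) \to \infty$ as $m \to \infty$; entropies are in nats; and all helper names (`binomial_pmf`, `tao_gaps`, `smoothed_entropy`, etc.) are hypothetical. The first check rests on the heuristic $H(X_1+\cdots+X_n) \approx \frac12\log(2\pi e\, n\sigma^2)$ for large variance $\sigma^2$, so consecutive differences should approach $\frac12\log\frac{n+1}{n}$. The second writes the density of $S = X_1+\cdots+X_n$ plus $U_1+\cdots+U_n$ as a mixture of shifted Irwin-Hall densities and integrates $-f\log f$ with a midpoint rule of step $1/M$.

```python
import math

import numpy as np


def entropy(pmf):
    """Discrete Shannon entropy H (natural log) of a probability vector."""
    p = pmf[pmf > 0]
    return float(-np.sum(p * np.log(p)))


def binomial_pmf(m, q=0.5):
    """Binomial(m, q) pmf on {0, ..., m}: one example of a log-concave law on the integers."""
    return np.array([math.comb(m, i) * q**i * (1 - q) ** (m - i) for i in range(m + 1)])


def sum_pmf(pmf, n):
    """pmf of X_1 + ... + X_n for i.i.d. X_i with the given pmf on {0, 1, 2, ...}."""
    out = np.array([1.0])
    for _ in range(n):
        out = np.convolve(out, pmf)
    return out


def tao_gaps(pmf, n_max):
    """H(S_{n+1}) - H(S_n) - 0.5*log((n+1)/n) for n = 1, ..., n_max."""
    H = [entropy(sum_pmf(pmf, n)) for n in range(1, n_max + 2)]  # H[i] = H(S_{i+1})
    return [H[n] - H[n - 1] - 0.5 * math.log((n + 1) / n) for n in range(1, n_max + 1)]


def irwin_hall_pdf(t, n):
    """Density of U_1 + ... + U_n for i.i.d. uniforms on (0, 1), for t in (0, n)."""
    out = np.zeros_like(t)
    for j in range(n + 1):
        # (t - j)_+^(n-1); when n == 1 this is the indicator of t > j
        out += (-1) ** j * math.comb(n, j) * np.where(t > j, (t - j) ** (n - 1), 0.0)
    return out / math.factorial(n - 1)


def smoothed_entropy(pmf_sum, n, M=200):
    """Differential entropy h(S + U_1 + ... + U_n), S with pmf `pmf_sum` on {0, 1, ...}.

    Midpoint rule with step 1/M; exact up to rounding when n == 1, because the
    density is then constant on each interval [k, k + 1).
    """
    t = (np.arange(n * M) + 0.5) / M  # midpoints of a grid on [0, n]
    g = irwin_hall_pdf(t, n)
    f = np.zeros((len(pmf_sum) - 1 + n) * M)  # density of S + V on [0, K + n]
    for k, pk in enumerate(pmf_sum):
        f[k * M:(k + n) * M] += pk * g  # Irwin-Hall density shifted to start at k
    f = f[f > 0]
    return float(-np.sum(f * np.log(f)) / M)


if __name__ == "__main__":
    X = binomial_pmf(50)  # larger m means larger H(X_1)
    print("gaps:", [round(gap, 4) for gap in tao_gaps(X, 4)])
    for n in (1, 2, 3):
        S = sum_pmf(X, n)
        print(n, entropy(S), smoothed_entropy(S, n))
```

With $n = 1$ the smoothed density is piecewise constant, so $h$ and $H$ agree exactly, which is a convenient sanity check on the integration; for $n \geq 2$ the two values should differ only slightly once $H(X_1)$ is large. The explicit bounds on the $o(1)$-terms are not reproduced here.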

Information

- Type: Paper
- Copyright: © The Author(s), 2023. Published by Cambridge University Press

Footnotes

L.G. has received funding from the European Union’s Horizon 2020 research and innovation program under the Marie Sklodowska-Curie grant agreement No 101034255.
