The Bernoulli clock: probabilistic and combinatorial interpretations of the Bernoulli polynomials by circular convolution

Published online by Cambridge University Press: 16 November 2023

Abstract

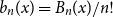

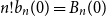

The factorially normalized Bernoulli polynomials  $b_n(x) = B_n(x)/n!$ are known to be characterized by

$b_n(x) = B_n(x)/n!$ are known to be characterized by  $b_0(x) = 1$ and

$b_0(x) = 1$ and  $b_n(x)$ for

$b_n(x)$ for  $n \gt 0$ is the anti-derivative of

$n \gt 0$ is the anti-derivative of  $b_{n-1}(x)$ subject to

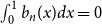

$b_{n-1}(x)$ subject to  $\int _0^1 b_n(x) dx = 0$. We offer a related characterization:

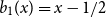

$\int _0^1 b_n(x) dx = 0$. We offer a related characterization:  $b_1(x) = x - 1/2$ and

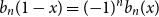

$b_1(x) = x - 1/2$ and  $({-}1)^{n-1} b_n(x)$ for

$({-}1)^{n-1} b_n(x)$ for  $n \gt 0$ is the

$n \gt 0$ is the  $n$-fold circular convolution of

$n$-fold circular convolution of  $b_1(x)$ with itself. Equivalently,

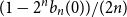

$b_1(x)$ with itself. Equivalently,  $1 - 2^n b_n(x)$ is the probability density at

$1 - 2^n b_n(x)$ is the probability density at  $x \in (0,1)$ of the fractional part of a sum of

$x \in (0,1)$ of the fractional part of a sum of  $n$ independent random variables, each with the beta

$n$ independent random variables, each with the beta $(1,2)$ probability density

$(1,2)$ probability density  $2(1-x)$ at

$2(1-x)$ at  $x \in (0,1)$. This result has a novel combinatorial analog, the Bernoulli clock: mark the hours of a

$x \in (0,1)$. This result has a novel combinatorial analog, the Bernoulli clock: mark the hours of a  $2 n$ hour clock by a uniformly random permutation of the multiset

$2 n$ hour clock by a uniformly random permutation of the multiset  $\{1,1, 2,2, \ldots, n,n\}$, meaning pick two different hours uniformly at random from the

$\{1,1, 2,2, \ldots, n,n\}$, meaning pick two different hours uniformly at random from the  $2 n$ hours and mark them

$2 n$ hours and mark them  $1$, then pick two different hours uniformly at random from the remaining

$1$, then pick two different hours uniformly at random from the remaining  $2 n - 2$ hours and mark them

$2 n - 2$ hours and mark them  $2$, and so on. Starting from hour

$2$, and so on. Starting from hour  $0 = 2n$, move clockwise to the first hour marked

$0 = 2n$, move clockwise to the first hour marked  $1$, continue clockwise to the first hour marked

$1$, continue clockwise to the first hour marked  $2$, and so on, continuing clockwise around the Bernoulli clock until the first of the two hours marked

$2$, and so on, continuing clockwise around the Bernoulli clock until the first of the two hours marked  $n$ is encountered, at a random hour

$n$ is encountered, at a random hour  $I_n$ between

$I_n$ between  $1$ and

$1$ and  $2n$. We show that for each positive integer

$2n$. We show that for each positive integer  $n$, the event

$n$, the event  $( I_n = 1)$ has probability

$( I_n = 1)$ has probability  $(1 - 2^n b_n(0))/(2n)$, where

$(1 - 2^n b_n(0))/(2n)$, where  $n! b_n(0) = B_n(0)$ is the

$n! b_n(0) = B_n(0)$ is the  $n$th Bernoulli number. For

$n$th Bernoulli number. For  $ 1 \le k \le 2 n$, the difference

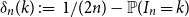

$ 1 \le k \le 2 n$, the difference  $\delta _n(k)\,:\!=\, 1/(2n) -{\mathbb{P}}( I_n = k)$ is a polynomial function of

$\delta _n(k)\,:\!=\, 1/(2n) -{\mathbb{P}}( I_n = k)$ is a polynomial function of  $k$ with the surprising symmetry

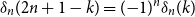

$k$ with the surprising symmetry  $\delta _n( 2 n + 1 - k) = ({-}1)^n \delta _n(k)$, which is a combinatorial analog of the well-known symmetry of Bernoulli polynomials

$\delta _n( 2 n + 1 - k) = ({-}1)^n \delta _n(k)$, which is a combinatorial analog of the well-known symmetry of Bernoulli polynomials  $b_n(1-x) = ({-}1)^n b_n(x)$.

$b_n(1-x) = ({-}1)^n b_n(x)$.
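The Bernoulli clock described above can be checked by brute force for small $n$. The following Python sketch is an illustration only, not the authors' method: it enumerates every marking of the $2n$ hours, walks the clock, and tabulates the exact law of $I_n$. The function names `bernoulli_number` and `clock_distribution` are hypothetical, and the walk assumes that "the first hour marked $j$" is searched strictly clockwise from the current hour, wrapping around if needed.

```python
from fractions import Fraction
from itertools import permutations
from math import comb, factorial


def bernoulli_number(n):
    """Return B_n(0) exactly via the standard recurrence (so B_1 = -1/2)."""
    B = [Fraction(1)]
    for m in range(1, n + 1):
        # sum_{k=0}^{m} C(m+1, k) B_k = 0, solved for B_m
        B.append(-sum(comb(m + 1, k) * B[k] for k in range(m)) / (m + 1))
    return B[n]


def clock_distribution(n):
    """Exact law of I_n as a dict {k: P(I_n = k)} for k = 1..2n.

    Enumerates all (2n)! orderings of the labels 1,1,...,n,n. The two
    copies of each label are treated as distinct, so every marking of
    the clock is counted 2^n times and the result is still uniform.
    """
    hours = 2 * n
    labels = [j for j in range(1, n + 1) for _ in range(2)]
    counts = [0] * (hours + 1)
    total = 0
    for marks in permutations(labels):
        pos = 0  # hour 0 is identified with hour 2n
        for j in range(1, n + 1):
            # Assumption: step strictly clockwise until an hour marked j.
            pos = pos % hours + 1
            while marks[pos - 1] != j:
                pos = pos % hours + 1
        counts[pos] += 1
        total += 1
    return {k: Fraction(counts[k], total) for k in range(1, hours + 1)}


if __name__ == "__main__":
    for n in range(1, 5):
        law = clock_distribution(n)
        b_n0 = bernoulli_number(n) / factorial(n)  # b_n(0) = B_n(0) / n!
        predicted = (1 - 2**n * b_n0) / (2 * n)
        print(n, law[1], predicted)
```

Exact rational arithmetic via `fractions.Fraction` keeps the comparison with $(1 - 2^n b_n(0))/(2n)$ free of rounding error. The enumeration grows like $(2n)!$, so this approach is only practical for very small $n$.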

Information

- Type: Paper

- Copyright: © The Author(s), 2023. Published by Cambridge University Press