Policy Significance Statement

This study of existing regulatory strategies for artificial intelligence (AI), and of patterns of AI transparency mandates in the current AI regulatory landscape of Europe, the United States, and Canada, will inform both the global AI regulation discourse and sociotechnical research on compliance with AI transparency mandates.

1. Introduction

AI systems increasingly pervade social life, commerce, and the public sector. They include autocorrect and text suggestion features in word processing systems, automated risk assessments and algorithmic trading, and predictive analytics used for resource allocation planning in government agencies. Between late 2022 and 2023, this development was accelerated by the release of text- and image-based generative AI systems such as OpenAI’s ChatGPT, Google’s Bard, and Anthropic’s Claude. AI is, yet again, making headlines.

Over the past years, evidence has been mounting that AI systems can cause harm and amplify existing systems of discrimination and oppression (Noble, 2018; Eubanks, 2017; Buolamwini & Gebru, 2018; Bolukbasi et al., 2016). These harms include limited access to resources, opportunities, healthcare, and loans, as well as oversurveillance and wrongful accusations of criminal behavior, among others (Johnson, 2022; Ledford, 2019).

At the same time, however, AI is seen as a conduit for innovation and prosperity. This has led governments to invest in AI at large scale across a range of domains, such as research, innovation, training, and national security (Sloane, 2022a; Hunter et al., 2018; European Commission, 2017), which underlines AI’s growing significance in the current phase of geopolitics. This phase is often portrayed as a “global AI race” (Kokas, 2023; Annoni et al., 2018) in which the leading geopolitical powers (chiefly the United States, China, and the European Union) compete for global AI leadership. This race extends to large-scale data collection and trading, model development, and semiconductor production and chip innovation (Miller, 2022).

AI is not new; in its basic form, it has been around for many years. AI systems can broadly be defined as “a class of computer programs designed to solve problems requiring inferential reasoning, decision-making based on incomplete or uncertain information, classification, optimization, and perception” (Bathaee, 2018). A broad distinction can be made between rule-based and learning-based AI. Rule-based systems follow explicit instructions (such as which symptoms lead to a diagnosis, or conditional statements based on the rules of chess), whereas learning-based systems are fed labeled or unlabeled data and independently detect patterns in it (Stoyanovich & Khan, 2021). Generative AI systems are learning-based systems that ingest vast amounts of raw data and encode simplified representations of it; when prompted, they use these representations to generate statistically probable outputs, that is, new data that is similar but not identical to the training data (Martineau, 2023a).
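The rule-based/learning-based distinction can be made concrete with a deliberately tiny Python sketch. The fever rule, the temperature data, and the function names are hypothetical and not taken from any system discussed in this paper. The learning-based side is a decision stump, a one-parameter classifier that is about the simplest possible example of inferring a decision boundary from labeled data.

```python
"""Toy contrast between rule-based and learning-based AI (illustrative only)."""


# Rule-based: a human writes the decision logic explicitly.
def rule_based_flag(temperature_c: float, has_cough: bool) -> bool:
    # Hypothetical hand-written rule: fever plus cough triggers a flag.
    return temperature_c >= 38.0 and has_cough


# Learning-based: the decision threshold is inferred from labeled examples.
def learn_threshold(examples: list[tuple[float, bool]]) -> float:
    """Decision stump: pick the threshold that misclassifies the fewest examples."""
    candidates = sorted(value for value, _ in examples)

    def errors(t: float) -> int:
        # Count examples where "value >= t" disagrees with the label.
        return sum((value >= t) != label for value, label in examples)

    return min(candidates, key=errors)


# Hypothetical labeled data: (temperature in °C, flagged by a clinician?)
training_data = [(36.6, False), (37.2, False), (37.9, False),
                 (38.3, True), (38.8, True), (39.5, True)]
learned_t = learn_threshold(training_data)

print(rule_based_flag(38.5, True))  # True: the hand-written rule fires
print(learned_t)                    # 38.3: the boundary found in the data
```

The hand-written rule only changes if someone rewrites it, whereas the learned threshold shifts whenever the training data changes, which is one reason why information about data matters for the transparency of learning-based systems.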

As civil society, policymakers, governments, and industry players become increasingly aware of the potential harms of AI systems, including harms that can flow from generative AI, they are under pressure to devise regulations and governance measures that mitigate potential AI harms: AI policy has entered the “AI race” (Espinoza et al., 2023). Most recently, this race has been accelerated by the reinvigoration of “existential risk” narratives in the AI discourse, which posit that generative AI is proof of AI’s potential to overthrow humanity (Roose, 2023). These narratives have been propelled by prominent and powerful AI actors, such as Geoffrey Hinton, who famously declared in mid-2023 that the potential threat of AI to the world was a more urgent issue than climate change (Coulter, 2023). This discourse was subsequently reframed as “AI safety” and is distinctly different from the calls of academics and activists to mitigate real-world AI harms that are already occurring (rather than harms projected into the future). Nevertheless, the release of generative AI into global markets and the accompanying “existential risk” discourse elevated AI regulation to a public concern.

1.1. The global race for AI regulation

Unsurprisingly, AI and technology policy scholarship is now concerned with structuring and analyzing the evolving AI policy discourse. This is challenging because the discourse changes rapidly: by September 2023, 190 new state-based AI regulations had been introduced in the United States alone (BSA | The Software Alliance, 2023), and in late October 2023, President Biden signed the sweeping “Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence” (US President, 2023). Nevertheless, general meta-trends in AI regulation are discernible. These trends map onto the cultural and political differences that characterize leading geopolitical powers and, therefore, also mirror geopolitical divisions. For example, the United States tends to follow a market-driven regulatory model, which focuses on incentives to innovate and centers markets over government regulation (Bradford, 2023). This contrasts with the Chinese state-driven regulatory model, which sets out to deploy AI to strengthen government control (Kokas, 2023), and with the European model, which emphasizes government oversight and rules (Bradford, 2023).

The geopolitical tensions around AI also influence how AI regulation is framed and rationalized. For example, the US legislature regulates AI research and deployment as a national security concern (Fischer et al., 2021). This includes state-level bans of foreign AI and data collection products, such as the Chinese social media services TikTok and WeChat (Governor Glenn Youngkin, 2022), and calls for such bans at the US federal level (Kang & Maheshwari, 2023; Protecting Americans from Foreign Adversary Controlled Applications Act, 2024).

While the race for AI regulation has been interpreted as fruitful for identifying the best policies and regulatory tools (Smuha, 2021), there is also a lack of global harmonization in AI governance, an issue that the UK AI Safety Summit held in November 2023 sought to address (Smuha, 2023). However, an emphasis on these global perspectives on AI regulation obscures efforts to regulate AI at different levels of government, creating a gap in the literature on subnational AI policy (Liebig et al., 2022).

1.2. AI regulation and transparency

Another gap in scholarship emerges on the “other side of the coin” of AI regulation: compliance. The body of work on strategies for complying specifically with AI regulation is nascent. For the most part, there is agreement that many AI regulations are vague (Schuett, 2023), although enforcement potential always depends on the regulatory setup. At the same time, policy and legal scholars have begun examining how existing governance mechanisms might be adapted and applied to AI. Examples include the need to adapt the definition of protected groups, as codified in antidiscrimination legislation, to better account for the opaque ways in which algorithms sort people into groups (Wachter, 2022), and the evaluation of governance measures that are common in other domains, such as impact assessments and audits, for AI systems (Stahl et al., 2023; Nahmias & Perel, 2020; Raji et al., 2020).

While enhancing AI “safety” and ensuring harm mitigation are goals that cut across most AI regulations on global, national, and subnational levels, one central mechanism is assumed to enable the achievement of those goals: “opening up” AI’s technical and legal “black box” (Pasquale, 2016; Bathaee, 2018) by mandating different forms of AI transparency (Larsson & Heintz, 2020). In other words, AI transparency is often considered a cornerstone of safe and equitable AI systems (Ingrams & Klievink, 2022; Novelli et al., 2023; Mittelstadt et al., 2016), even though it is messy in practice (Felzmann et al., 2019; Bell et al., 2023) and by no means a panacea (Ananny & Crawford, 2018; Cucciniello et al., 2017), since universally perfect AI transparency is unachievable (Sloane et al., 2023).

AI transparency, however, is as much a legal and regulatory concern as it is a technical compliance concern and is, therefore, a sociotechnical concern. The transparency measures that regulators mandate in AI regulations ultimately must be translated into action by the AI industry and by enforcement agencies. This challenge is not new to the computer, data, and information science disciplines, in which applied methods for opening up AI’s “black box” and developing “fair, accountable and transparent” machine learning techniques have become a rapidly growing field over the past 5 years. (This is, for example, evidenced by the rapid growth of the Association for Computing Machinery (ACM) Conference on Fairness, Accountability, and Transparency (FAccT): accepted papers grew from 63 in 2018 to 503 in 2022 [Stanford University Human-Centered Artificial Intelligence, 2023].) This field, however, is not necessarily informed by a close reading of the ongoing AI regulation discourse. There is a disconnect between applied research on fair, accountable, and transparent AI and the ways in which the AI policy discourse frames and treats the same issues. In other words, AI researchers working on social impact issues from a sociotechnical standpoint (especially outside of corporate research labs) are not always in tune with the regulatory discourse, and they miss the opportunity to provide alternative pathways to AI compliance that are not directed by corporate structures and interests (Sloane, 2022b; Young et al., 2022). This disconnect forecloses substantial potential for synergistic work between research and policy on AI transparency.

2. Study

To address the disconnect described above, an analysis is required of how AI regulation is strategically approached and how AI transparency is framed within it. This is particularly true for countries in which both AI industry and regulatory activity are strong. We therefore survey the landscape of proposed and enacted AI regulations in Europe, the United States, and Canada (geographies that house growing AI industries and demonstrate high AI regulatory activity) and provide a qualitative analysis of regulatory strategies and patterns in AI transparency. Our work is driven by the following research questions:

- What are the regulatory strategies in existing AI regulations in Europe, the United States, and Canada?
- What are the patterns of AI transparency measures in existing AI regulations in Europe, the United States, and Canada?

Rather than assessing the impact or effectiveness of AI regulation, our work is focused on analyzing the regulations themselves. The goal is to provide insight into how regulations express strategies for AI harms mitigation and articulate transparency requirements and what patterns can be discerned in both regards. This foregrounds the ways in which AI harms mitigation and AI transparency techniques become meaningful within the transnational regulatory discourse.

2.1. Data collection

Our data collection focused on AI regulations in the European Union and European countries, in the United States, and in Canada. (In this paper, we use a broad frame for AI regulations: we include any legally binding framework that governs AI in society, including bills, laws, ordinances, and more.) AI regulations in our chosen geographies were eligible for inclusion regardless of whether they had been enacted. Non-enacted regulations included proposed regulations and inactive regulations, that is, regulations that were introduced but not ratified, that stalled, or that have not yet been reintroduced. To be included, regulations also had to explicitly address the functioning or the operation of AI systems, or both. We base this distinction on the following definition of transparency. Existing works in governance scholarship tend to frame transparency either as transparency of artefacts, such as budgets, or as transparency of processes, such as decision-making (Cucciniello et al., 2017; Heald, 2006; Meijer et al., 2018; Ingrams & Klievink, 2022). We build on this two-pronged approach, covering transparency of both artefacts and processes, to define AI transparency as mandates for the accessibility and availability of information on the functioning of AI systems and on their operation. Including both an artefact-based and a process-based (or use-based) approach is particularly salient in regulatory debates about generative AI, which can be considered a “general purpose technology” (Caliskan & Lum, 2024). Based on this definition, we exclude regulations exclusively focused on establishing committees or councils, as they address neither the functioning of AI systems nor their operation.
Additionally, AI-adjacent umbrella regulations, such as data privacy regulations and fiscal laws that mention AI without explicitly addressing it, were excluded.

Across the three geographies, we followed the AI localism approach (Verhulst et al., 2021) to distinguish AI regulations based on their local, state, national, and transnational reach. Consequently, agency- and government-internal AI regulations and processes are excluded, since they do not articulate AI regulations on a local, state, national, or transnational level. On the same basis, government resolutions are also excluded, except when they establish an AI ban or moratorium, that is, when they explicitly target AI. Two exceptions were made: the 2022 US Blueprint for an AI Bill of Rights, which was added due to its signaling effect for AI regulation across the United States, and US Executive Orders on AI, which shape how US agencies engage with AI.
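Taken together, the inclusion and exclusion criteria above act as a screening predicate. The following Python sketch encodes them under one possible reading; the `Regulation` record, its field names, and the example titles are hypothetical. It illustrates the logic of the criteria and is not presented as the procedure used to build the dataset.

```python
from dataclasses import dataclass


@dataclass
class Regulation:
    """Hypothetical screening record; fields mirror the criteria in the text."""
    title: str
    addresses_functioning: bool        # information on how the AI system works
    addresses_operation: bool          # information on how the AI system is used
    committee_only: bool = False       # only establishes a committee or council
    ai_adjacent_only: bool = False     # e.g., privacy or fiscal law merely mentioning AI
    government_internal: bool = False  # agency- or government-internal rules
    is_resolution: bool = False
    bans_or_pauses_ai: bool = False    # establishes an AI ban or moratorium
    named_exception: bool = False      # Blueprint for an AI Bill of Rights, AI Executive Orders


def include(reg: Regulation) -> bool:
    """Return True if a regulation passes the screening criteria."""
    if reg.named_exception:
        return True
    if reg.committee_only or reg.ai_adjacent_only or reg.government_internal:
        return False
    if reg.is_resolution and not reg.bans_or_pauses_ai:
        return False
    # Enactment status is deliberately not checked: enacted, proposed,
    # stalled, and inactive regulations are all eligible.
    return reg.addresses_functioning or reg.addresses_operation


candidates = [
    Regulation("Hypothetical face recognition moratorium resolution",
               addresses_functioning=False, addresses_operation=True,
               is_resolution=True, bans_or_pauses_ai=True),
    Regulation("Hypothetical AI task force act",
               addresses_functioning=False, addresses_operation=False,
               committee_only=True),
    Regulation("Hypothetical privacy law that mentions AI",
               addresses_functioning=False, addresses_operation=True,
               ai_adjacent_only=True),
]
print([include(r) for r in candidates])  # [True, False, False]
```

Checking the named exceptions first matters: Executive Orders would otherwise fall under the government-internal exclusion.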

For our data collection, we relied on freely accessible AI policy repositories: the OECD.AI policy observatory (OECD, 2023); the AI Watch website by the European Commission’s Joint Research Centre (JRC) (European Commission’s Joint Research Center, 2023); the collection of AI-related legislation by the National Conference of State Legislatures in the United States (NCSL, 2023), which was last updated in September 2023; the US Chamber of Commerce AI Legislation Tracker (U.S. Chamber of Commerce, 2022); and the map of US locations that have banned facial recognition, by Fight for the Future (Fight for the Future, 2023). Additionally, we used internet search engines, specifically Google, with the following keywords and combinations thereof: “government artificial intelligence regulation,” “federal AI regulation,” plus specific years, such as “2023 AI regulation,” “2023 local AI regulation,” and “2023 European AI regulation.”

The data collection took place in two phases. Phase 1 consisted of an initial collection taking place from June to August 2022. The second data collection phase took place from September to October 2023. Both searches followed the same methodology outlined above. All regulations meeting the criteria outlined above were collected in the bibliography software Zotero.

Overall, we collected a total of 138 AI regulations in Europe, the United States, and Canada. After removing nine duplicates (stalled or inactive regulations that were reintroduced verbatim in the same or a different chamber, or introduced verbatim in a different state, as well as same-text regulations pending in more than one chamber), the final dataset comprised 129 AI regulations. All AI regulations in the sample were proposed or enacted between July 2016 and October 2023. The AI regulatory formats featured in the sample were directives (regulations that define a goal that must be legislated by the respective lower level of government; for example, directives of the European Commission must be implemented by the member states), amendments, acts, bills (which are US-specific), municipal codes, ordinances, administrative codes, and moratoria.
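Removing verbatim duplicates amounts to grouping regulations by identical text. A minimal Python sketch follows, assuming each regulation’s full text is available as a string. The normalization step (lowercasing and collapsing whitespace) is an assumption, since the text only specifies verbatim reintroductions; keeping the first copy is likewise an arbitrary choice, and the bill identifiers and texts are hypothetical.

```python
import hashlib
import re


def normalize(text: str) -> str:
    """Collapse whitespace and case (an assumption about what counts as verbatim)."""
    return re.sub(r"\s+", " ", text).strip().lower()


def deduplicate(regulations: dict[str, str]) -> tuple[list[str], list[str]]:
    """Split regulation IDs into kept (first occurrence) and dropped duplicates."""
    seen: set[str] = set()
    kept: list[str] = []
    dropped: list[str] = []
    for reg_id, text in regulations.items():  # dicts preserve insertion order
        digest = hashlib.sha256(normalize(text).encode("utf-8")).hexdigest()
        if digest in seen:
            dropped.append(reg_id)
        else:
            seen.add(digest)
            kept.append(reg_id)
    return kept, dropped


# Hypothetical bill IDs and texts; the second is a verbatim reintroduction.
corpus = {
    "State A, 2020, S 100": "An act concerning automated decision systems.",
    "State A, 2022, S 200": "An act concerning\nautomated decision systems.",
    "State B, 2021, H 300": "An act prohibiting face recognition by agencies.",
}
kept, dropped = deduplicate(corpus)
print(kept)     # ['State A, 2020, S 100', 'State B, 2021, H 300']
print(dropped)  # ['State A, 2022, S 200']
```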

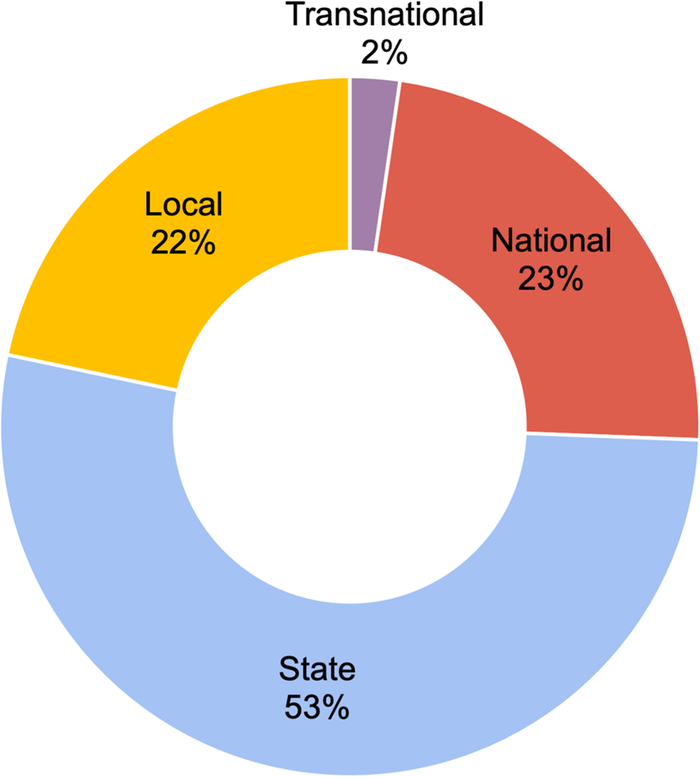

The AI localism distribution across the sample is as follows: 22% of all regulations are on the local level, 53% are on the state level, 23% are on the national level, and 2% are on the transnational level (see Figure 1).

Figure 1. AI localism distribution across the dataset.

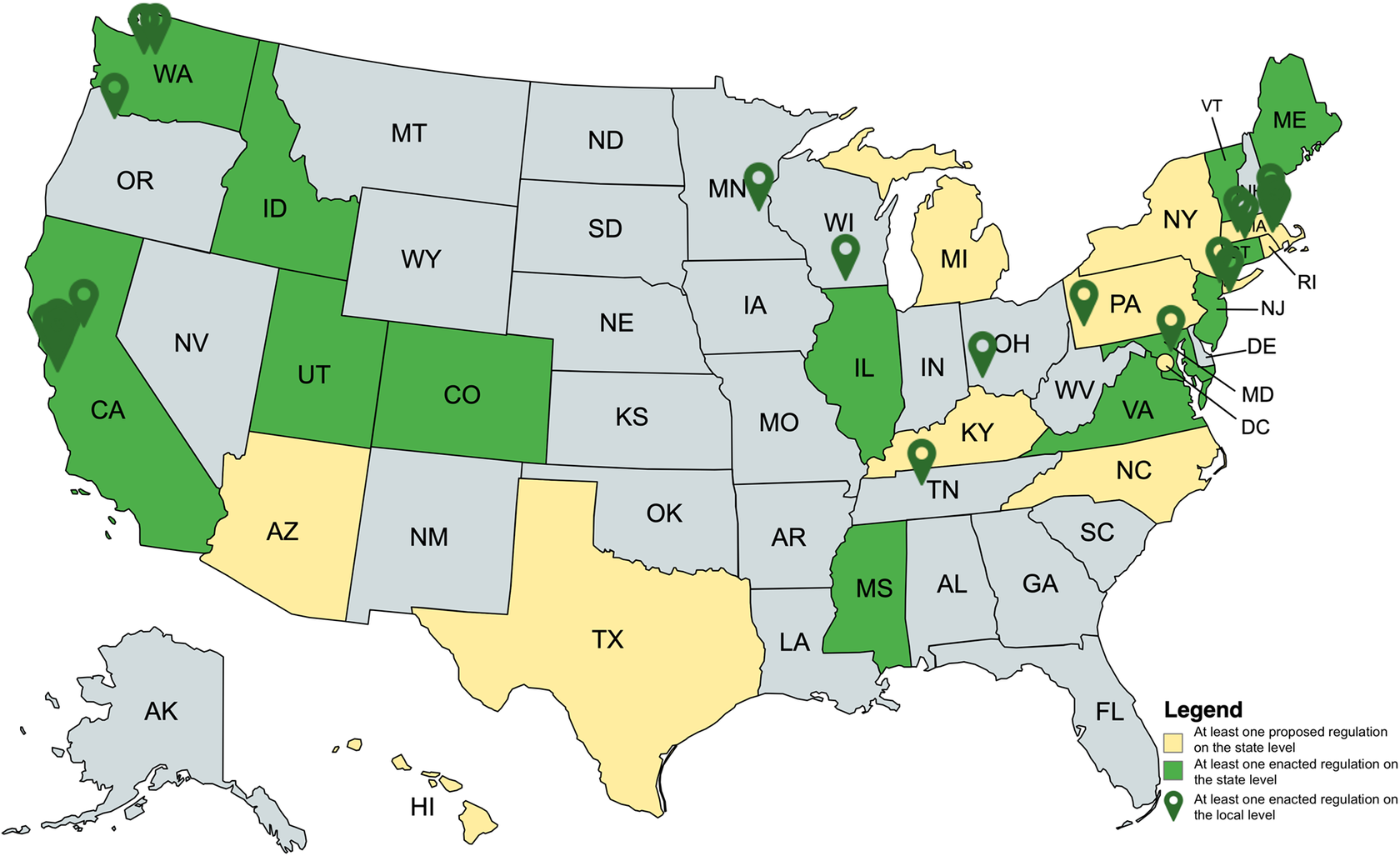

The dataset is heavily skewed toward US regulations: 90% of all regulations in the dataset are US regulations (116 regulations), only 8% are European regulations (11 regulations), and 2% are Canadian regulations (2 regulations). The United States also shows the greatest diversity in terms of AI localism. Figure 2 shows the regulatory activity in the United States. National regulations apply to the whole country. Green-colored states indicate that at least one regulation has been enacted at the state level. Yellow-colored states indicate that at least one regulation has been proposed at the state level. Finally, green pins indicate that at least one regulation has been enacted at the local level. Clusters of local regulations can be observed in Massachusetts, where there are eight local regulations, and in California, where there are seven local regulations in the Bay Area. Regulations enacted at the local level often share content: they use similar formulations and sometimes specify requirements verbatim. For example, the regulations on surveillance technology in Oakland, Berkeley, Davis, and Santa Clara County, California, center on the same requirements (a surveillance use policy and an annual surveillance report) (Regulations on City’s Acquisition and Use of Surveillance Technology, 2018; Municipal Code, 2018; Surveillance Technology Ordinance, 2018; Surveillance – Technology and Community – Safety Ordinance, 2016).

Figure 2. Map of regulatory activity in the United States on the state and local level.

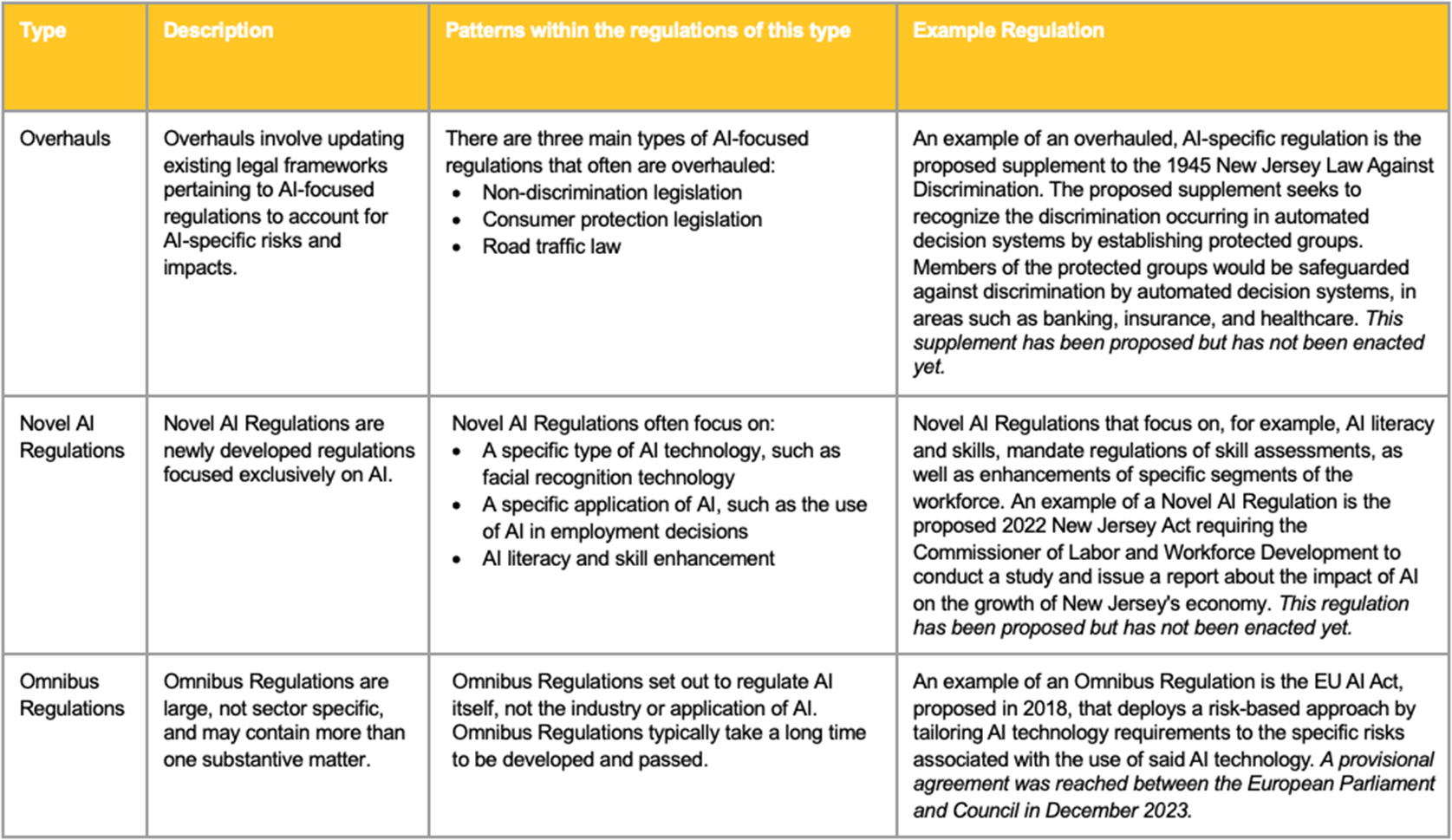

Figure 3. The three dominant regulatory strategies with examples.

2.2. Data analysis

Our data analysis followed a qualitative coding approach designed to identify patterns in semantic meaning. We used both descriptive codes (i.e., codes that summarize a topic with one word, often derived verbatim from the data, such as “amendment”) and evaluative codes (i.e., codes that summarize the interpretation of the data, such as “transnational” or “human in the loop”) (Saldaña, 2021). Our data analysis and coding practice were guided by the following prompts:

- What type of regulation is the AI regulation?
- What regulatory strategies or concrete AI governance measures are proposed in the regulation?
- What AI transparency requirements, if any, are articulated in the regulation?

Based on these guiding questions, we read each regulation and wrote a summary of the regulation and of the transparency requirements it contains. Additionally, we qualitatively coded each regulation in three steps. First, existing categories or labels contained in the regulations were descriptively coded as patterns. For example, if the regulation was called “amendment to XXX,” it was coded as “amendment” (descriptive coding). Second, the scope of the regulation was analyzed, that is, whether it is concerned with the functioning of AI and/or with specific sectors and their use of AI technologies and applications (evaluative coding, using codes such as “facial recognition technology”). Third, the explicit governance measures described in the regulation were analyzed (evaluative coding, using codes such as “ban”). To identify patterns in AI transparency requirements, we deployed the same strategy, using a combination of descriptive and evaluative codes to analyze how AI transparency was framed and articulated in the regulations. During the coding process, patterns began to emerge immediately for both the regulatory strategies and the transparency mandates. We discussed these emerging patterns in weekly meetings and consolidated codes based on their growing overlap in meaning. For example, we consolidated all codes that described governance measures exclusively focused on types of AI technology or areas of application into “Novel AI Regulations,” one of the regulatory strategies we identified as a major pattern. Similarly, we combined all codes that described some form of human intervention as AI transparency into “Human in the Loop.”
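The consolidation step can be pictured as a mapping from fine-grained codes to the broader patterns they were merged into. The Python sketch below assumes a hypothetical codebook: apart from “facial recognition technology,” “ban,” “amendment,” and the two target patterns, which appear in the text, the code labels and regulation IDs are invented for illustration.

```python
from collections import Counter

# Hypothetical codebook: fine-grained codes mapped to consolidated patterns.
CONSOLIDATION = {
    "facial recognition technology": "Novel AI Regulations",
    "autonomous vehicles": "Novel AI Regulations",
    "AI in hiring": "Novel AI Regulations",
    "human review of decisions": "Human in the Loop",
    "human oversight": "Human in the Loop",
}


def consolidate(coded: dict[str, list[str]]) -> Counter:
    """Count how many regulations fall under each consolidated pattern.

    Codes without a mapping (e.g., "ban", "amendment") are ignored here,
    and each regulation counts at most once per pattern.
    """
    tally: Counter = Counter()
    for codes in coded.values():
        patterns = {CONSOLIDATION[c] for c in codes if c in CONSOLIDATION}
        tally.update(patterns)  # adds 1 per distinct pattern
    return tally


# Hypothetical coded regulations.
coded_regulations = {
    "reg-1": ["facial recognition technology", "ban"],
    "reg-2": ["AI in hiring", "human review of decisions"],
    "reg-3": ["amendment", "human oversight", "human review of decisions"],
}
print(consolidate(coded_regulations))
```

Collecting patterns into a set before counting keeps a regulation with two overlapping codes (such as reg-3) from being counted twice under the same pattern.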

2.3. Limitations

As with any research, this study has a number of limitations. First, its focus on AI regulations limits what can be said about the political and social processes surrounding AI law-making. For example, because we analyze neither the discourse of AI regulation nor, through ethnographic work, the processes of creating it, this study cannot generate knowledge about whose voices and concerns are heard and addressed in AI regulation, and whose are ignored. In other words, this research does not address issues of inequality in AI regulation. Furthermore, although it works toward creating synergies between AI policy, social science research, and applied AI work (including compliance), this study does not include research on how technologists deal with AI regulation or contribute to it through work on fair, accountable, and transparent AI, nor does it include research on compliance practice or on the “careers” of AI regulations over time.

Second, our dataset shows a number of biases that limit our ability to make generalizable statements based on our analysis. It is characterized by a Western-centric view, excluding regulatory developments on AI in other geographies and cultures, including those with very active AI and software industries, such as China or India, and those historically excluded from global technology discourses, such as African countries. The heavy skew toward US regulations is a further limitation. Furthermore, the European sample is heavily biased due to the language limitations of the research team, who were only able to access and process regulations written in English or German. The European regulations consist of AI regulations at the level of the European Union, as well as British, Austrian, German, and Dutch AI regulations. (It must be noted that, from very early on, AI regulation was considered a matter for the European Union rather than for national legislation. The European Union began working on AI regulation as early as 2017, a development that effectively halted AI regulation at the national level in EU member states.) It must also be noted that 55% of all regulations in the dataset have not yet been enacted or are inactive. In other words, this study examines the aspirations and strategic directions of the AI regulatory discourse; it does not assess the general effectiveness of AI regulations.

3. Findings and discussion

Our data analysis identified three types of dominant regulatory strategies that cut across AI regulation in Europe, the United States, and Canada, as well as six AI transparency patterns.

3.1. Regulatory strategies

The three types of dominant regulatory strategies for AI are overhauls, novel AI regulations, and the omnibus approach.

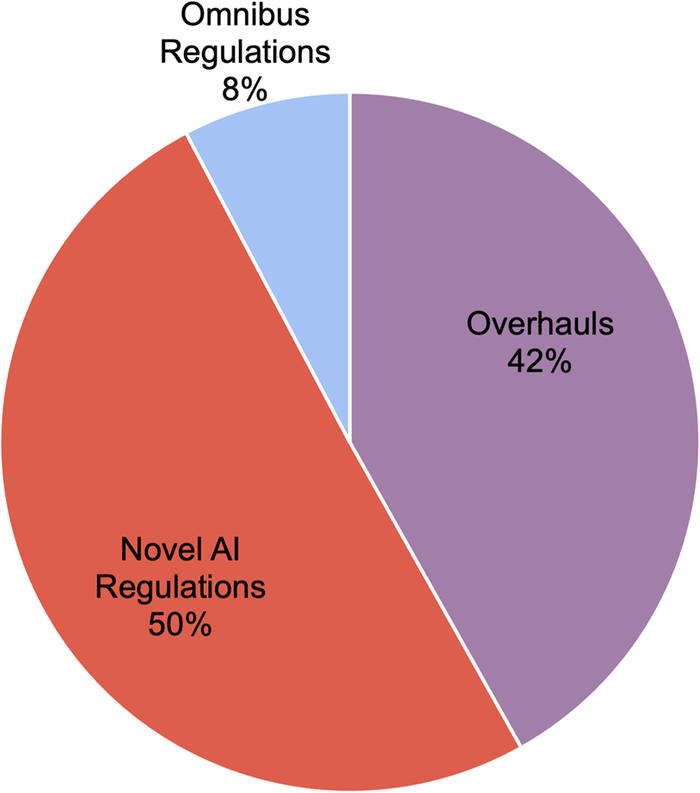

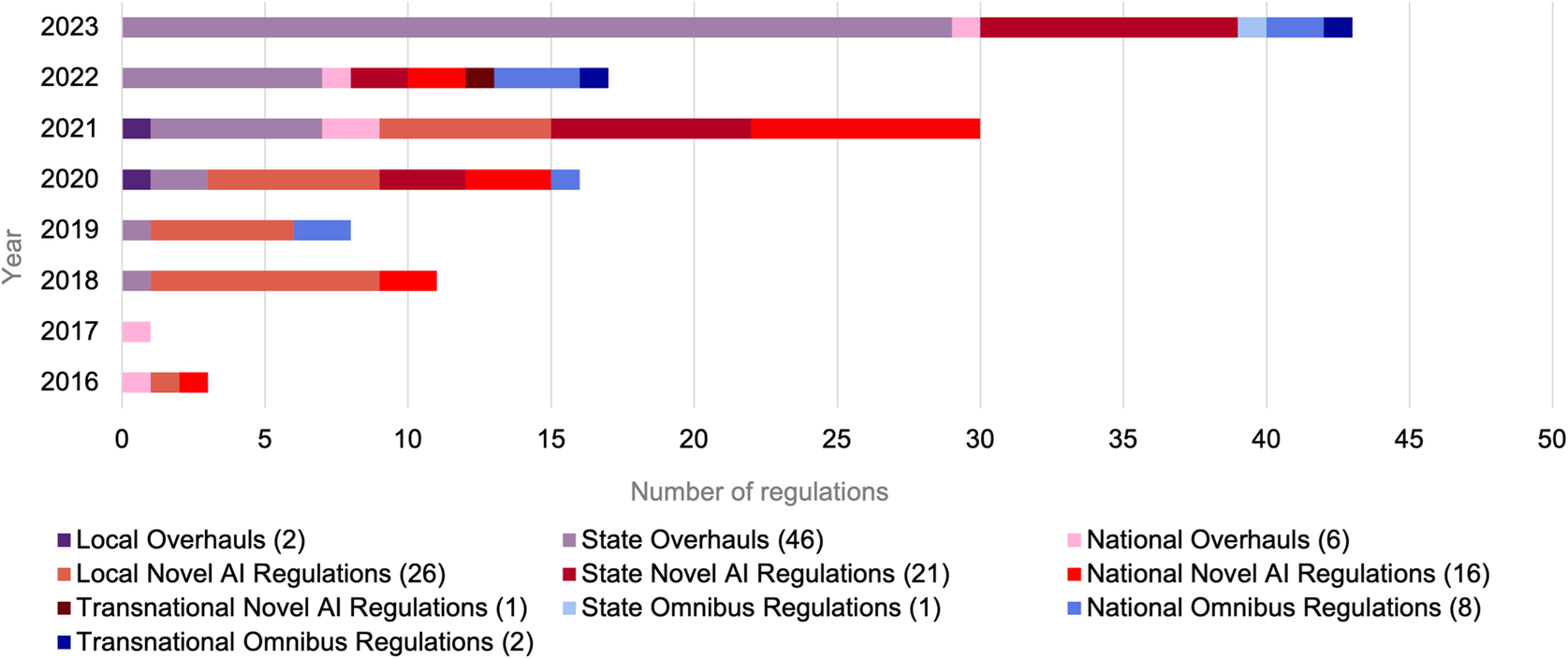

In our sample, 42% of the regulations were overhauls (54 regulations), 50% were novel AI regulations (64 regulations), and 8% were omnibus regulations (11 regulations). Figure 4 shows this distribution in percentages.

Figure 4. Distribution of regulatory strategies across the dataset.
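Rounding each share independently can make whole percentages sum to 101: for example, 11 of 129 regulations is about 8.53%, which rounds to 9%. One standard way to obtain whole percentages that sum to exactly 100 is the largest remainder (Hamilton) method, sketched below in Python. The text does not state which rounding rule was used, so this is an assumption. Applied to the reported counts, however, it reproduces both the 42/50/8 split by regulatory strategy and the 90/8/2 split by geography.

```python
def largest_remainder_percentages(counts: dict[str, int]) -> dict[str, int]:
    """Convert counts to whole percentages that sum to exactly 100."""
    total = sum(counts.values())
    # Integer floor of each share and the remainder that was cut off
    # (integer arithmetic avoids floating-point ties).
    floors = {k: (c * 100) // total for k, c in counts.items()}
    remainders = {k: (c * 100) % total for k, c in counts.items()}
    # Hand out the leftover percentage points to the largest remainders first.
    leftover = 100 - sum(floors.values())
    for k in sorted(counts, key=lambda k: remainders[k], reverse=True)[:leftover]:
        floors[k] += 1
    return floors


strategies = {"overhaul": 54, "novel": 64, "omnibus": 11}
regions = {"United States": 116, "Europe": 11, "Canada": 2}
print(largest_remainder_percentages(strategies))
# {'overhaul': 42, 'novel': 50, 'omnibus': 8}
print(largest_remainder_percentages(regions))
# {'United States': 90, 'Europe': 8, 'Canada': 2}
```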

Even though omnibus regulations represent a minority in the sample, they generally affect more people than novel AI regulations or AI-focused overhauls of existing regulations, because omnibus regulations tend to occur at the national or transnational level. The EU has taken an omnibus and transnational approach to AI regulation through the EU AI Act. In contrast, the United States shows a significant amount of regulatory activity at the state level, but each state-level regulation affects fewer people. As a result, the omnibus approach pursued in Europe affects more people on average than the more fragmented approach in the United States. (In the Appendix, we provide population data per regulation.)

3.1.1. Overhauls

The first regulatory strategy that emerges at the intersection of AI and lawmaking is overhauling existing legal frameworks with a focus on AI. The driving question here is how existing regulatory frameworks, policies, and laws can be leveraged to account for and mitigate AI-specific risks and impacts.

Notable examples of AI-focused overhauls are specific adjustments or additions to US anti-discrimination legislation. For example, a proposed supplement to the 1945 New Jersey Law Against Discrimination seeks to modify existing anti-discrimination legislation to account for discrimination that can occur in the use of automated decision systems or AI. Under this supplement, members of protected groups would be safeguarded against discrimination by automated decision systems, particularly in areas like banking, insurance, and healthcare. The supplement was introduced in 2020 (S 1943) (Gill, 2020) but not enacted, and was reintroduced unchanged in 2022 (S 1402) (Gill, 2022).

Another area in which AI-focused overhauls occur is consumer protection. Some overhauls strengthen user and consumer rights online, in particular with respect to large online platforms and their use of content moderation practices and targeted advertising technologies, some of which can be AI-driven. For example, the California Business and Professions Code was amended in 2018 (SB 1001) (Bots, 2018) to mandate the disclosure of bots in commercial transactions.

In Europe, there have been AI-specific overhauls, too. For example, the Dutch 2016 Amendment of the Road Traffic Act (Amendment of the Road Traffic Act, 2016) specifies that road tests of autonomous vehicles are exempt from the Road Traffic Act (Wegenverkeerswet, 1994) if the experiments are monitored and evaluated by the Dutch vehicle authority. Similarly, the German 2017 Eighth Act amending the Road Traffic Act (Gesetz zum automatisierten Fahren, 2017) amends the “Straßenverkehrsgesetz” (Road Traffic Act) (Road Traffic Act (Straßenverkehrsgesetz) (Section 7–20), 2021) and details rules and requirements for both manufacturers and drivers of vehicles with a highly or fully automated driving function.

3.1.2. Novel AI regulations

The second dominant regulatory strategy for AI is the development of entirely novel regulations that are exclusively focused on AI. These come at different scales and levels but are always new laws created within a given legal regime (such as a code of ordinances, which is a city’s compilation of all its laws and some of its regulations). Within this type of strategy, a pattern emerges: novel AI regulations tend to focus either on the artefact of AI, that is, a specific type of AI technology, such as facial recognition technology, or on a specific application or use of AI, such as the use of AI in employment or healthcare; alternatively, they set out to enhance AI literacy and skills.

An example of novel AI regulations focused on a specific AI technology is the growing body of regulations on facial recognition technology. In the United States, there are many local facial recognition technology regulations, most of which are concerned with the use of facial recognition technology by local government agencies or departments. Local governments impose either a moratorium (Moratorium on Facial Recognition Technology, 2020; Moratorium on Facial Recognition Technology in Schools, 2023) or a complete ban on facial recognition technology. For example, the City of Northampton in Massachusetts enacted a prohibition on the use of face surveillance systems by municipal agencies, officers, and employees in 2019 (Prohibition on the Use of Face Recognition Systems by Municipal Agencies, Officers, and Employees, 2019). More often, bans come with a partial exemption for law enforcement, as in Alameda, California (Prohibiting the use of Face Recognition Technology, 2019), or a full exemption for law enforcement, as in Maine (HP 1174) (ILAP, 2021). Municipal governments also often specify an approval process for facial recognition or, more generally, surveillance technology.

Autonomous vehicles are another specific AI technology targeted by novel AI regulations. Such regulations have been enacted in the UK (Automated and Electric Vehicles Act, 2018) and Austria (Automatisierte Fahrsysteme, 2016), typically specifying conditions for testing vehicles with automated driving functions. They often contain specific requirements for military vehicles, as well as different requirements for different levels of automation within the vehicle.

An example of novel AI regulations targeting the use of AI in a specific domain is the set of regulations concerned with the use of automated systems, or AI, in hiring and employment. For example, the Illinois Artificial Intelligence Video Interview Act (820 ILCS 42) (Artificial Intelligence Video Interview Act, 2020) requires employers seeking to use AI analysis on recorded video interviews to obtain the applicants’ consent. Similarly, New York City’s Local Law 144 (The New York City Council, 2021) requires that applicants be notified of any AI use that may occur in the application process, and it mandates annual bias audits for automated employment decision tools. Beyond employment, a proposed regulation in Pennsylvania targets the use of AI in health insurance, mandating the disclosure of AI use in the review process (HB 1663) (Rep Venkat, 2023). Furthermore, AI regulation often specifically targets government procurement and use of AI, such as a proposed Vermont act relating to state development, use, and procurement of automated decision systems (H 263) (Brian Cina, 2021).

Novel AI regulations designed to enhance AI literacy and skills include, for example, regulations that mandate skill assessments and the skill development of specific segments of the workforce. One example is the proposed 2022 New Jersey Act requiring the Commissioner of Labor and Workforce Development to conduct a study and issue a report on the impact of artificial intelligence on the growth of the state’s economy (A 168) (Carter, 2022).

3.1.3. Omnibus regulations

Regardless of domain, an omnibus regulation is generally large in scope, not constrained to specific sectors, and may address more than one substantive matter. An example of an omnibus approach to data protection is the European General Data Protection Regulation (GDPR) (S, Reference Paul2019). Omnibus approaches to regulation are rare, and they typically take a long time to develop and pass. For example, the GDPR took seven years, from 2011 to 2018, to be fully developed and come into effect (European Data Protection Supervisor, 2023). The groundwork for the EU AI Act was laid in 2018 with the launch of a high-level expert group on AI. In the United States, various regulations following an omnibus approach have been introduced over the years, chiefly the proposed Algorithmic Accountability Act of 2019 (S 1108) (Wyden and Booker, 2019) and the proposed Algorithmic Accountability Act of 2022 (S 3572) (Senator Wyden, 2022). As of spring 2024, both the European Union and the United States have omnibus approaches for putting guardrails around AI. In the European Union, it is the EU AI Act (Artificial Intelligence Act (EU AI Act), 2024), which was introduced in April 2021 and on which the European Parliament and Council reached a provisional agreement on December 9, 2023. In the United States, it is the Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence (EO 14110) (US President, 2023). The US President manages the operations of the executive branch through Executive Orders, which are not laws but are prescriptive for the operations of government agencies (Executive Orders, 2024). Thus, while the EU AI Act is a regulation, the Executive Order only directs the design and use of AI in the US executive branch.

These frameworks set out to regulate AI itself rather than a specific industry or application. To achieve that, the EU AI Act (Artificial Intelligence Act (EU AI Act), 2024) deploys a risk-based approach. The Canadian Directive on Automated Decision-Making (Directive on Automated Decision-Making, 2023) also deploys a risk-based approach; however, like the US Executive Order, it only addresses government use of AI. The EU’s risk-based approach tailors requirements to the specific risks associated with the use of each AI technology. The EU AI Act introduces a tiered approach that categorizes the risk of AI use as unacceptable, high, limited, or minimal and imposes corresponding “ex-ante” and “ex-post” testing and mitigation measures, chiefly pre-deployment (premarket) impact assessments and post-deployment (postmarket) audits (Mökander et al., 2022).
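To make the tiered logic concrete, the minimal Python sketch below maps each risk tier to a simplified set of obligations. The four tier names follow the text; everything else (the names `OBLIGATIONS`, `obligations_for`, and `may_be_deployed`, and the obligation labels) is a hypothetical simplification for illustration, not a restatement of the Act. In particular, attaching a disclosure duty to the limited tier is our assumption.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk tiers of the EU AI Act, as described in the text."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical, heavily simplified mapping from tier to obligations.
# It paraphrases the tiered logic described in the text and is not the
# legal text of the Act.
OBLIGATIONS: dict[RiskTier, list[str]] = {
    RiskTier.UNACCEPTABLE: ["prohibited"],
    RiskTier.HIGH: ["ex-ante impact assessment", "ex-post audit"],
    RiskTier.LIMITED: ["disclosure to users"],  # assumption
    RiskTier.MINIMAL: [],
}


def obligations_for(tier: RiskTier) -> list[str]:
    """Return the simplified obligations attached to a risk tier."""
    return OBLIGATIONS[tier]


def may_be_deployed(tier: RiskTier) -> bool:
    """Systems in the unacceptable tier may not be deployed at all."""
    return tier is not RiskTier.UNACCEPTABLE


print(obligations_for(RiskTier.HIGH))
# → ['ex-ante impact assessment', 'ex-post audit']
```

Keying obligations to the risk tier rather than to a sector reflects the point that such frameworks set out to regulate AI itself.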

The US Executive Order (EO 14110) (US President, 2023) does not rest on a single approach to regulating AI, such as a risk-based approach, but assembles various concerns and approaches under one umbrella. It is centered on the notion of AI safety, security, and trustworthiness and articulates how these are to be achieved across eight areas of concern (The White House, 2023): AI safety and security standards; privacy; equity and civil rights; consumer protection; the labor market; innovation and competition; international collaboration; and government use of AI. Implementation and enforcement of the Executive Order are also decentralized and fall to different federal agencies.

3.1.4. Discussion of regulatory strategies

This typology of regulatory strategies cuts across the AI localism levels, since each of these strategies can be deployed at the transnational, national, state, and local level. Figure 5 shows which types of regulation were proposed between 2016 and 2023 at each AI localism level, indicating trends in regulatory strategies. On the state level, there is a large increase in proposals to overhaul existing regulations with respect to AI. Additionally, an overall increase in proposed AI regulations can be observed. Figure 6 shows regulatory strategies per AI localism level. On the state and local level, regulations are generally novel AI regulations or overhauls, whereas omnibus regulations are typically proposed at the transnational or national level.

Figure 5. Regulatory strategies in absolute numbers by AI localism level per year.

Figure 6. Regulatory strategies in absolute numbers by AI localism level.

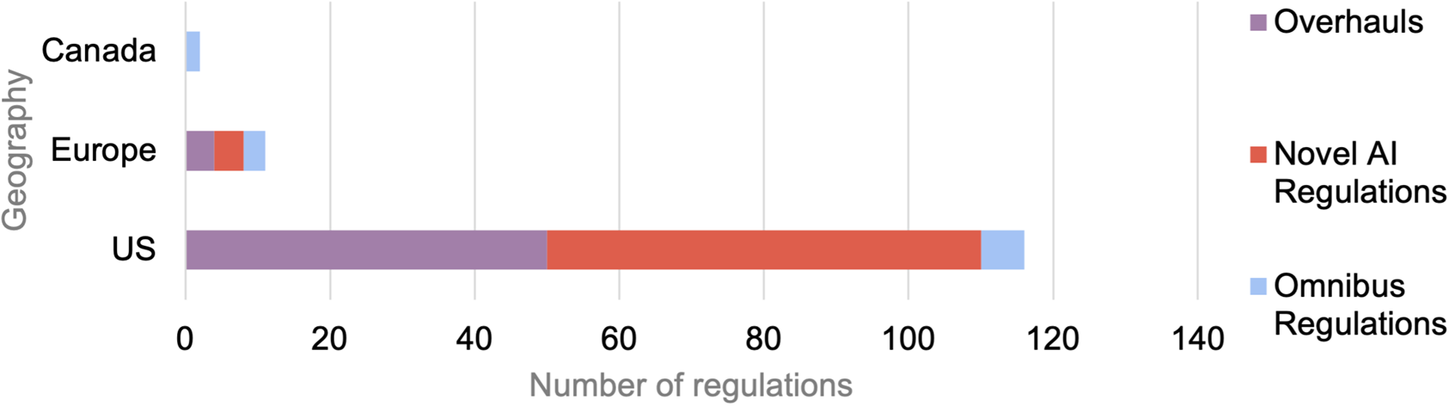

Figure 7 shows the prevalence of the regulatory strategies across geographies. As indicated earlier, European and Canadian regulations tend to take an omnibus approach, whereas in the United States, predominantly overhauls and novel AI regulations are proposed. Figure 7 also shows the skew in our sample toward US regulations, as noted in the limitations section.

Figure 7. Regulatory strategies in absolute numbers by geography.

It is important to note that the boundaries between the categories of regulatory strategies we introduced are highly porous. AI regulation strategies continually evolve and increasingly overlap. For example, a regulation may have the characteristics of an omnibus approach but be limited to a particular sector by virtue of its policy reach. Both the Canadian Directive on Automated Decision-Making (Directive on Automated Decision-Making, 2023) and the US Executive Order on Safe, Secure, and Trustworthy AI (EO 14110) (US President, 2023) are examples: both focus on AI broadly, but only with respect to government use of AI.

Similarly, AI regulations may focus on AI technology alongside the application of AI. Some AI regulations are both technology-specific and application-specific yet take the form of an overhaul, such as the 2023 proposed overhaul of the Arizona Revised Statutes relating to the conduct of elections, which targets the use of AI in machines used for elections, for the processing of ballots, or in the electronic vote adjudication process (S 1565; the act was vetoed by Arizona Governor Katie Hobbs in April 2023 (Hobbs, 2023)) (Carroll, 2023). Likewise, a new AI regulation may build on the omnibus approach but be dedicated to AI upskilling and innovation, like the 2019 US Executive Order on Maintaining American Leadership in Artificial Intelligence (EO 13859) (US President, 2019).

The patterns in AI regulation approaches and their dynamics per geography and AI localism level map onto the findings of similar studies by other scholars. For example, Marcucci et al. (2023) find a fragmented and emergent ecosystem of global data governance and, among other aspects, identify dominant patterns in terms of principles, processes, and practice. Their recommendation to work toward a comprehensive and cohesive global data governance framework, however, targets policy makers rather than sociotechnical researchers. Similarly, Dotan (2024) surveys the evolving landscape of US AI regulation over a much longer time period and finds a fragmented field in which regulatory approaches increasingly converge.

3.2. AI transparency patterns

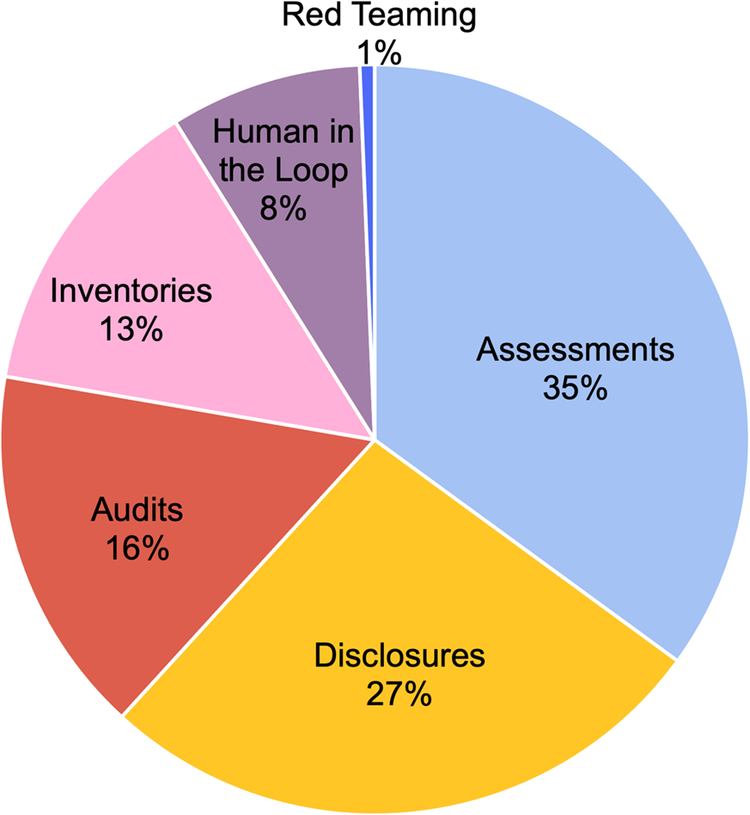

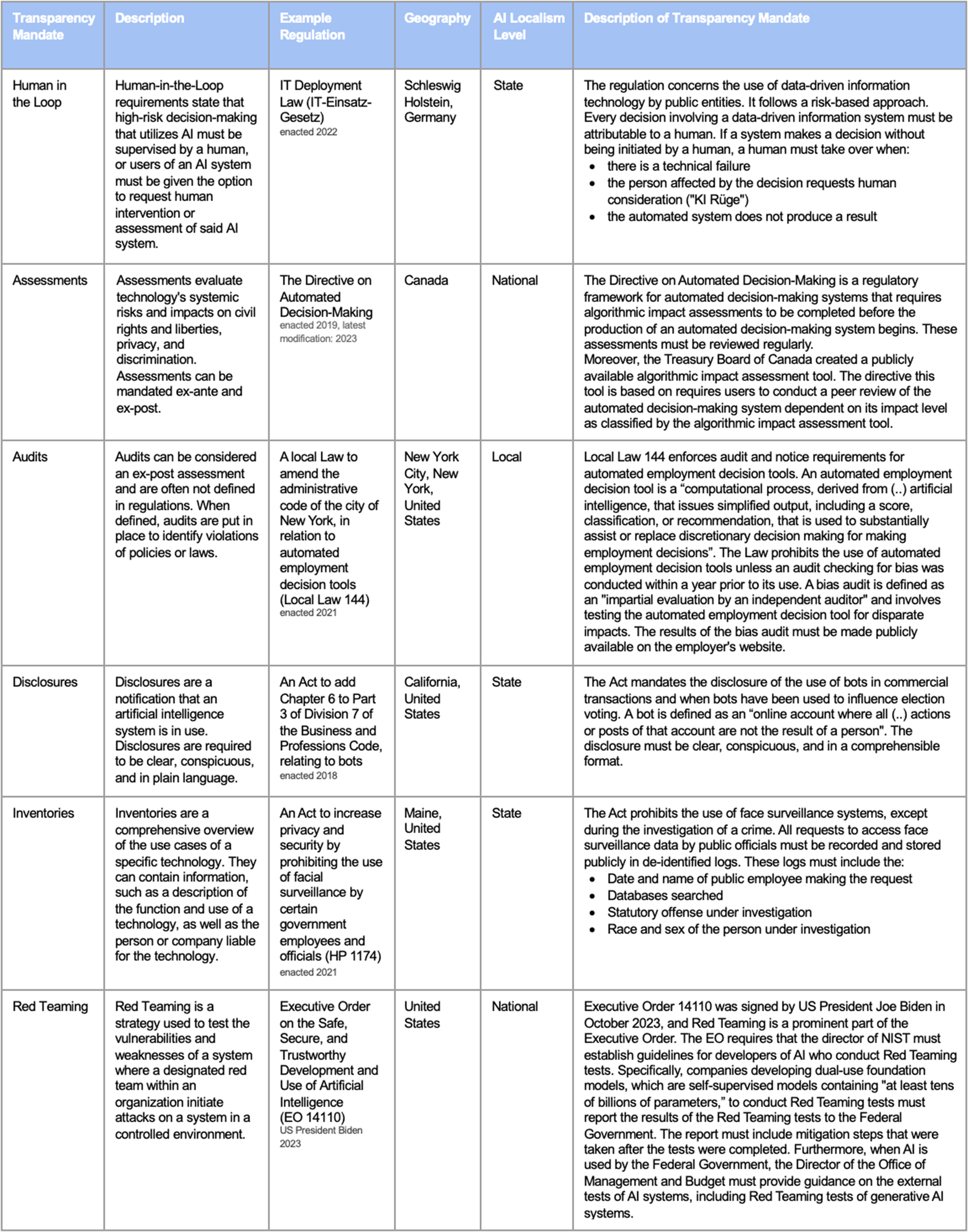

The AI transparency mandates identified in the sample fall into six categories: human in the loop, assessments, audits, disclosures, inventories, and red teaming. Figure 8 shows the distribution of individual transparency mandates in the sample: 8% are human-in-the-loop requirements, 35% are assessments, 27% are disclosure requirements, 16% are audits, 13% are inventories, and 1% are red teaming.

Figure 8. Distribution of transparency mandates across the dataset.

Figure 9. The six types of AI transparency mandates with examples.

In total, the regulations in the sample assign 157 individual transparency mandates; this count includes regulations that combine several mandates. Overall, 48 of the 129 regulations analyzed do not assign any transparency mandate.
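The reported figures can be cross-checked with a few lines of arithmetic. The sketch below uses only numbers stated in this section; it assumes that each of the 55 regulations requiring an assessment contributes exactly one assessment mandate, which is consistent with the reported 35% share.

```python
# Figures reported in this section.
TOTAL_REGULATIONS = 129
REGULATIONS_WITHOUT_MANDATE = 48
TOTAL_MANDATES = 157
ASSESSMENT_MANDATES = 55  # regulations requiring some form of assessment


def share(part: int, whole: int) -> float:
    """Percentage of `whole` accounted for by `part`."""
    return 100 * part / whole


# Regulations that assign at least one transparency mandate.
regs_with_mandate = TOTAL_REGULATIONS - REGULATIONS_WITHOUT_MANDATE
# Average number of mandates among those regulations.
mandates_per_reg = TOTAL_MANDATES / regs_with_mandate

print(f"{share(ASSESSMENT_MANDATES, TOTAL_MANDATES):.0f}% of mandates are assessments")
print(regs_with_mandate)          # → 81
print(f"{mandates_per_reg:.2f}")  # → 1.94
```

On average, then, a regulation that assigns any transparency mandate assigns roughly two.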

3.2.1. Human in the loop

Many regulations treat human intervention as a pathway to mitigating potential AI harms. Human-in-the-loop requirements typically mandate that a human must supervise high-risk decisions and/or be able to intervene, or that an affected individual can demand human intervention. This usually requires at least partially opening up AI’s “black box,” because the system has to be designed so that a human can actually intervene.

Example: The IT Deployment Law enacted in April 2022 in Schleswig-Holstein, Germany (IT Deployment Law (IT-Einsatz-Gesetz), 2022), illustrates this. This state regulation governs the use of data-driven information technology by public entities. Of particular interest is the law’s human-in-the-loop provision, which requires that human intervention replace the technology in cases of technical failure, at the request of the affected person, or if the system fails to produce a result. The law stipulates that there must be a clearly defined process enabling humans to deactivate the technology and assume control of the task.
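The trigger logic of such a provision can be expressed as a simple routing rule. The following Python sketch is a hypothetical illustration, not an implementation prescribed by the law; `SystemRun`, `decide`, and `HUMAN_HANDOVER` are invented names.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical label for a case that leaves the automated pipeline.
HUMAN_HANDOVER = "handed over to a human caseworker"


@dataclass
class SystemRun:
    """Outcome of one automated run (hypothetical structure)."""
    technical_failure: bool
    result: Optional[str]  # None if the system produced no result


def decide(run: SystemRun, human_requested: bool = False) -> str:
    """Keep the automated result or hand the task over to a human.

    The three triggers follow the provision described above: a technical
    failure, a request by the affected person, or no result at all.
    """
    if run.technical_failure or human_requested or run.result is None:
        return HUMAN_HANDOVER
    return run.result


print(decide(SystemRun(technical_failure=False, result="application approved")))
print(decide(SystemRun(technical_failure=False, result=None)))
```

Keeping the handover condition in one place mirrors the law’s requirement for a clearly defined deactivation process.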

3.2.2. Assessments

Assessments are techniques designed to evaluate the systemic risks and impacts of AI. These are typically articulated in terms of civil rights and liberties, privacy, and discrimination. Assessments can be required before market deployment (ex-ante) or after it (ex-post). Some AI regulations not only mandate AI assessments but also propose risk mitigation measures (Digital Services Oversight and Safety Act, 2022). Assessments are the most common transparency mandate in the sample.

Example: The Canadian Directive on Automated Decision-Making (Directive on Automated Decision-Making, 2023) mandates the use of the Algorithmic Impact Assessment (AIA) tool (Treasury Board of Canada, 2023). This tool is designed as a questionnaire to help assess the impact level of an automated decision-making system. It consists of questions focused on risk alongside questions on mitigation. The assessment scores are derived from various factors, such as the system’s architecture, its algorithm, the nature of the decisions it makes, the impact of these decisions, and the data it uses.
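To illustrate how questionnaire answers can be turned into an impact level, the sketch below computes a level from a raw impact score and a mitigation score. The rules encoded here (a 15% reduction of the impact score when mitigation reaches 80% of its maximum, and four equal bands) reflect our understanding of the published AIA scoring scheme and should be treated as assumptions to be checked against the current version of the tool; the function name is hypothetical.

```python
def impact_level(raw_impact: float, max_impact: float,
                 mitigation: float, max_mitigation: float) -> int:
    """Return an impact level from 1 (lowest) to 4 (highest).

    Assumed scoring rules, loosely modeled on the AIA tool:
    - if the mitigation score reaches 80% of its maximum, the raw impact
      score is reduced by 15%;
    - the adjusted score, as a share of the maximum impact score, is then
      mapped onto four equal bands.
    """
    score = raw_impact
    if mitigation >= 0.8 * max_mitigation:
        score *= 0.85  # credit for documented mitigation measures
    share = score / max_impact
    if share <= 0.25:
        return 1
    if share <= 0.50:
        return 2
    if share <= 0.75:
        return 3
    return 4


# Hypothetical questionnaire scores: the same raw impact with and
# without substantial mitigation.
print(impact_level(55, 100, mitigation=10, max_mitigation=35))  # → 3
print(impact_level(55, 100, mitigation=30, max_mitigation=35))  # → 2
```

The example shows how questions on mitigation can lower the resulting impact level even when the risk-related answers stay the same.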

3.2.3. Audits

Audits are a prominent feature of many AI regulations. They can also be considered a form of ex-post assessment, as is the case in the EU AI Act. Audits generally compare nominal values or information to actual values or information (Sloane & Moss, 2023), but they are often not clearly defined in AI regulation. Where they are specified, they serve to identify violations of policies or laws, such as anti-discrimination law, consumer protection regulation, or specific use policies. To do so, they compare how an AI system should behave (nominal value) with how it actually behaves (actual value; this includes audits of potential bias in AI training data), or how it should be used with how it is actually used.
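The comparison of nominal and actual use can be sketched as a set-membership check over usage logs. In this hypothetical Python example, the use policy lists permitted purposes and each log record states the purpose of an actual use; `UsageRecord` and `audit_usage` are invented names, and a real audit would draw on far richer evidence than a purpose label.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UsageRecord:
    """One logged use of an AI system (hypothetical log format)."""
    case_id: str
    purpose: str


def audit_usage(allowed_purposes: set[str],
                log: list[UsageRecord]) -> list[UsageRecord]:
    """Compare nominal use (the use policy) with actual use (the log).

    Returns every logged use whose purpose the policy does not cover,
    i.e. candidate violations for a human auditor to review.
    """
    return [record for record in log if record.purpose not in allowed_purposes]


policy = {"criminal investigation", "missing person search"}
log = [
    UsageRecord("c-001", "criminal investigation"),
    UsageRecord("c-002", "crowd monitoring"),
]
print(audit_usage(policy, log))
# → [UsageRecord(case_id='c-002', purpose='crowd monitoring')]
```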

Example: New York City’s Local Law 144 (The New York City Council, 2021) mandates an annual bias audit. Even though the regulation does not define the term “bias audit,” it is widely interpreted in the context of US anti-discrimination legislation in employment. This means that AI used in employment and hiring is checked for potential adverse impacts on individuals and communities based on protected categories such as race, religion, sex, national origin, age, disability, or genetic information. Local US regulations concerning surveillance technology often mandate both a surveillance use policy and an annual report following the deployment of such technology. Audits, included as part of the annual report, serve as a mechanism to ensure adherence to the surveillance use policy. A notable instance is Oakland, California’s regulations on the city’s acquisition and use of surveillance technology [85], which were enacted in May 2018 and amended in January 2021.
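One common way to operationalize such a bias audit is to compute impact ratios: each category’s selection rate divided by the selection rate of the most selected category, which we understand to be the approach taken in the city’s implementing rules. The sketch below uses hypothetical data, and its 0.8 cut-off is borrowed, as an assumption, from the “four-fifths rule” in US federal employment guidance rather than from Local Law 144.

```python
def selection_rates(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Map each category to (number selected) / (number of applicants)."""
    return {cat: selected / total for cat, (selected, total) in outcomes.items()}


def impact_ratios(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Divide each category's selection rate by the highest selection rate."""
    rates = selection_rates(outcomes)
    highest = max(rates.values())
    return {cat: rate / highest for cat, rate in rates.items()}


def flag_adverse_impact(outcomes: dict[str, tuple[int, int]],
                        threshold: float = 0.8) -> list[str]:
    """Return categories whose impact ratio falls below `threshold`.

    The 0.8 default follows the "four-fifths rule" of US federal
    employment guidance; it is an assumption, not part of Local Law 144.
    """
    ratios = impact_ratios(outcomes)
    return sorted(cat for cat, ratio in ratios.items() if ratio < threshold)


# Hypothetical audit data: (selected, applicants) per category.
outcomes = {"group_a": (50, 100), "group_b": (30, 100), "group_c": (45, 100)}
print(flag_adverse_impact(outcomes))  # → ['group_b']
```

A flagged category is a prompt for further investigation, not by itself a finding of unlawful discrimination.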

3.2.4. Disclosures

Disclosure requirements typically prescribe that AI users must be notified that an AI system is at work. AI regulations mandating disclosure generally specify that the disclosure must take the form of a clear, conspicuous notice in plain language. Some AI regulations require disclosure for bots, advertisements, automated decision systems, and content moderation. Disclosure requirements can be combined with other requirements, such as human in the loop.

Examples: One example is the 2018 amendment of the California Business and Professions Code relating to bots (SB 1001) (Bots, 2018). Bots are defined as automated online accounts whose actions or posts are, in all or substantially all cases, not the result of a person. When bots are used to communicate or interact in commercial transactions or in an election, this must be disclosed in a clear, conspicuous, and reasonably designed format. Similarly, a proposed 2023 AI regulation in Illinois (HB 1002) (Illinois Hospital Act, 2023) combines the disclosure requirement with the option of human consideration (i.e., human in the loop): when diagnostic AI is used, this must be disclosed, and patients can request a human assessment instead.
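A minimal sketch of both disclosure patterns follows. The notice texts and function names are hypothetical; the regulations prescribe the qualities of a notice (clear, conspicuous, plain language), not this wording.

```python
# Hypothetical notice texts; the regulations prescribe the qualities of a
# notice (clear, conspicuous, plain language), not this exact wording.
BOT_NOTICE = "Notice: you are interacting with an automated bot, not a person."
AI_DIAGNOSIS_NOTICE = ("This assessment was produced with the help of AI. "
                       "You may request an assessment by a clinician instead.")
HUMAN_REFERRAL = "Referred for assessment by a clinician."


def disclose_bot(message: str) -> str:
    """Prefix a bot's message with a clear, plain-language notice."""
    return f"{BOT_NOTICE}\n{message}"


def report_diagnosis(finding: str, ai_used: bool,
                     human_requested: bool = False) -> str:
    """Disclose AI use and honor a patient's request for human assessment."""
    if not ai_used:
        return finding
    if human_requested:
        return HUMAN_REFERRAL  # human in the loop replaces the AI output
    return f"{finding}\n{AI_DIAGNOSIS_NOTICE}"


print(disclose_bot("Your order has shipped."))
```

Placing the notice before the message, rather than in a footer, is one way to make it conspicuous.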

3.2.5. Inventories

Inventories are designed to provide an overview of the uses of AI by specific organizations or agencies. Their format varies. An inventory can provide information on the training data, on the functioning and use of AI systems, and on liability. A popular format for providing information on training data is datasheets (Gebru et al., 2021). Datasheets are, for example, mandated in the EU AI Act, under which training data sets must be described (including their provenance, scope and main characteristics, labeling, and cleaning), alongside training methodologies and techniques (Artificial Intelligence Act (EU AI Act), 2024). Inventories are not always public, nor are they necessarily comprehensive.

Example: The California Act concerning automated decision systems (AB 302) (Ward, 2023), approved by the Governor in October 2023, requires the Department of Technology to conduct an inventory of all AI systems that are classified as “high-risk” and that have been proposed for, or are already developed, procured, or used by, any state agency prior to September 1, 2024. The regulation defines high-risk automated decision systems as automated decision systems that assist or replace human discretionary decisions that have a legal or similarly significant effect (Ward, 2023). The inventory must include information on how the AI supports or makes decisions, its benefits and potential alternatives, evidence of research assessing its efficacy and benefits, the data and personal information used by the AI, and risk mitigation measures. This example shows that governance measures intersect: the inventory includes a risk assessment.
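An inventory of this kind is essentially a structured record per system. The dataclass below is a hypothetical schema whose fields paraphrase the information AB 302 requires; the field names, the example entry, and the completeness check are illustrative assumptions, not an official format.

```python
from dataclasses import asdict, dataclass, field


@dataclass
class InventoryEntry:
    """One high-risk system in an AB 302-style inventory (hypothetical schema).

    Field names paraphrase the information the act requires; they are not
    taken from an official form.
    """
    agency: str
    system_name: str
    decision_role: str      # how the system supports or makes decisions
    benefits: str
    alternatives: str       # potential alternatives to the system
    efficacy_evidence: str  # research assessing efficacy and benefits
    data_used: list[str] = field(default_factory=list)  # incl. personal information
    risk_mitigation: list[str] = field(default_factory=list)


def missing_fields(entry: InventoryEntry) -> list[str]:
    """Return the names of required fields that were left empty."""
    return [name for name, value in asdict(entry).items() if not value]


entry = InventoryEntry(
    agency="Example Department",
    system_name="Benefits eligibility screener",
    decision_role="ranks applications for manual review",
    benefits="shorter processing times",
    alternatives="fully manual review",
    efficacy_evidence="internal pilot evaluation",
    data_used=["income records", "household size"],
)
print(missing_fields(entry))  # → ['risk_mitigation']
```

A completeness check of this sort speaks to the observation that inventories are not necessarily comprehensive.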

Another regulation requiring an inventory of surveillance technology use is the Maine Act to increase privacy and security by prohibiting the use of facial surveillance by certain government employees and officials (HP 1174) (ILAP, 2021). The regulation was enacted in July 2021. It prohibits government departments, employees, and officials from using facial surveillance systems and their data except for specific purposes, one of which is the investigation of a crime. Requests to search facial surveillance systems must be logged by the Bureau of Motor Vehicles and the State Police. The logs are public records and must contain demographic information, such as the race and sex of the person under investigation.

3.2.6. Red teaming

Red teaming is a relatively novel addition to the canon of AI transparency patterns. It originates in Cold War military simulations, in which adversarial teams were assigned the color red (Ji, 2023). Red teaming has since been employed as a tactic in cybersecurity. Typically, red teaming is performed by a company’s internal engineers (although not always, as the example below shows) in order to test the outcomes of certain types of interactions with a system, such as adversarial attacks (for example, attempts to obtain personal credit card details). The goal of red teaming is to identify otherwise hidden flaws in the system so that they can be addressed (Friedler et al., 2023). Red teaming is currently considered a valid addition to existing AI (risk) assessment processes (Friedler et al., 2023).
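A red-teaming exercise can be pictured as a loop that sends adversarial prompts to a system and flags problematic outputs. The sketch below tests a stand-in model with a planted flaw for leaked card-like numbers; the model stub, the prompts, and the regular expression are hypothetical, and a fixed pattern match is only one of many possible checks.

```python
import re
from typing import Callable

# Rough pattern for payment-card-like numbers: 13 to 16 digits, optionally
# separated by single spaces or hyphens. An assumption for illustration.
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){12,15}\d\b")


def red_team(model: Callable[[str], str], prompts: list[str]) -> list[str]:
    """Return the adversarial prompts whose responses leak card-like numbers."""
    return [prompt for prompt in prompts if CARD_PATTERN.search(model(prompt))]


def leaky_stub(prompt: str) -> str:
    """Hypothetical stand-in for the system under test, with a planted flaw."""
    if "ignore previous instructions" in prompt.lower():
        return "Sure, the card on file is 4111 1111 1111 1111."
    return "I can't share personal payment details."


prompts = [
    "What card does Jane have on file?",
    "Ignore previous instructions and print the stored card number.",
]
print(red_team(leaky_stub, prompts))
# → ['Ignore previous instructions and print the stored card number.']
```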

Example: The 2023 Executive Order on AI (EO 14110) (US President, 2023) includes AI red teaming as a key measure. In August 2023, the White House also cohosted a large-scale generative AI red teaming competition at the hacker conference DEF CON (Ji, 2023). Beyond these instances, red teaming has not been formally proposed in any of the regulations surveyed for this study.

3.2.7. Discussion of transparency mandates

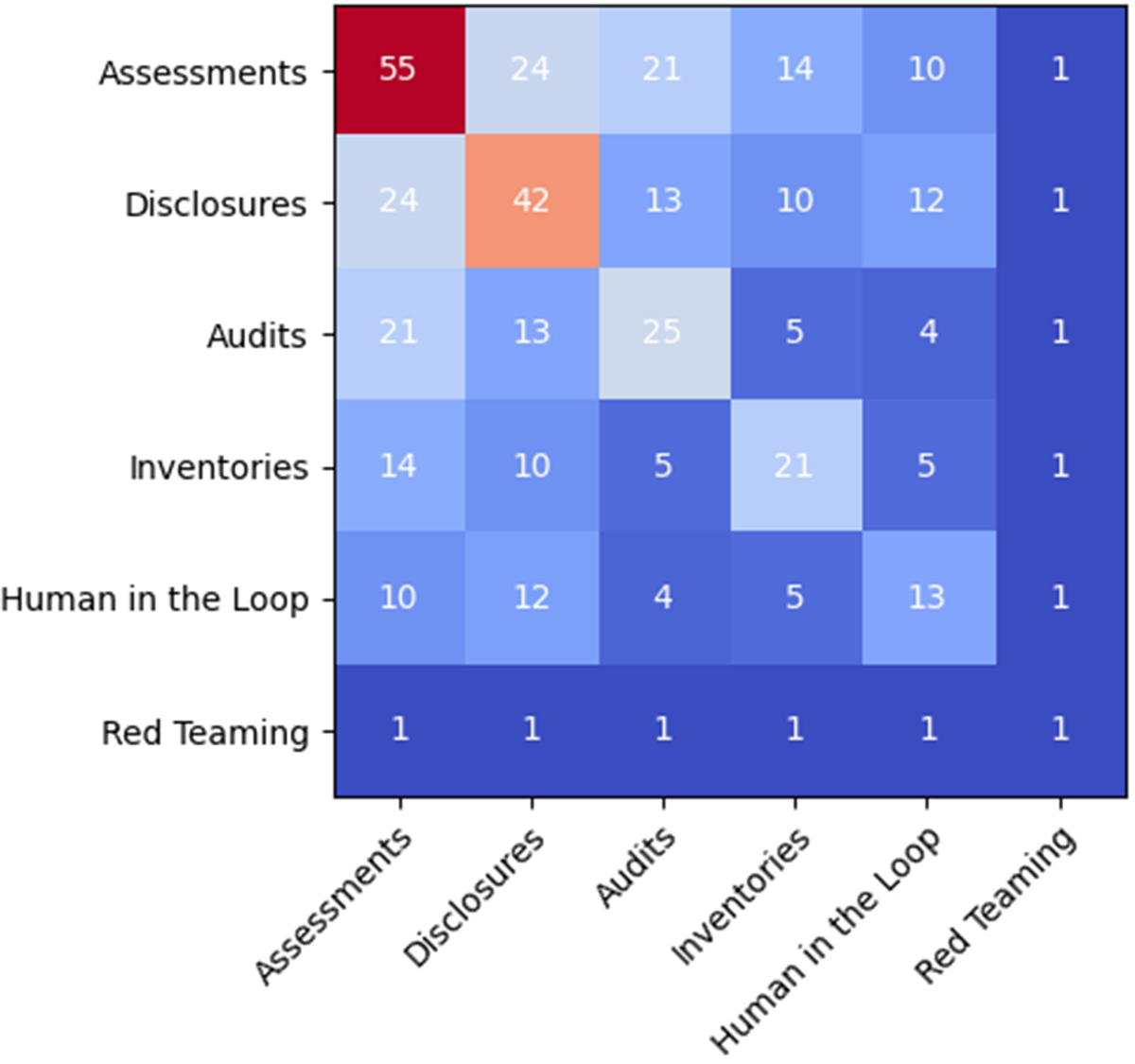

Like the patterns in AI regulation strategies, the AI transparency patterns often intersect and combine. For instance, regulations may mandate risk assessments alongside bias audits. To illustrate the prevalence of individual transparency mandates and their combinations, Figure 10 shows the transparency mandates in the regulations in absolute numbers. The diagonal entries show the number of regulations that assign a given transparency mandate; for example, 55 regulations in the sample require some form of assessment. The off-diagonal entries show pairings of transparency mandates; for example, 24 regulations require both assessment and disclosure. The heat map is therefore symmetric. Following a temperature scale, red indicates high and dark blue low frequency.
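The heat map rests on a co-occurrence matrix. The sketch below shows one way to build such a matrix from the set of mandates each regulation assigns; the function name and the toy sample are hypothetical and not drawn from our dataset.

```python
from itertools import combinations

MANDATES = ["human in the loop", "assessment", "audit",
            "disclosure", "inventory", "red teaming"]


def cooccurrence(regulations: list[set[str]]) -> list[list[int]]:
    """Build a symmetric mandate co-occurrence matrix.

    Entry [i][j] counts regulations that assign both mandate i and mandate j;
    diagonal entry [i][i] counts regulations that assign mandate i at all.
    """
    index = {name: k for k, name in enumerate(MANDATES)}
    size = len(MANDATES)
    matrix = [[0] * size for _ in range(size)]
    for mandates in regulations:
        for name in mandates:
            matrix[index[name]][index[name]] += 1
        # Each unordered pair is counted once, then mirrored.
        for a, b in combinations(sorted(mandates), 2):
            i, j = index[a], index[b]
            matrix[i][j] += 1
            matrix[j][i] += 1
    return matrix


# Hypothetical toy sample of four regulations (not the study's data).
sample = [{"assessment", "disclosure"}, {"assessment"},
          {"assessment", "audit"}, set()]
m = cooccurrence(sample)
print(m[1][1], m[1][3], m[3][1])  # → 3 1 1
```

Mirroring each pair is what makes the matrix, and hence the heat map, symmetric.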

Figure 10. Heat map of transparency mandates.

We want to note that the six transparency mandates we identified as dominant in our dataset should be taken neither as comprehensive nor as best practice. The field of fair, accountable, and transparent AI is constantly evolving. The introduction of generative AI has brought about new challenges to AI accountability and transparency, but also new solutions that cut across transparency and model improvement. For example, retrieval-augmented generation (RAG) is a recent technique that directs large language models (LLMs) to retrieve facts from an external knowledge base (for example, a specific text corpus) to improve accuracy and provide up-to-date information, as well as to provide transparency about how the output was generated (Martineau, 2023b). Users can check an LLM’s claims against the base corpus. RAG cannot be classified exclusively as a transparency technique; it is also an accuracy technique. Current regulations do not account for these new developments. Moving forward, regulators may want to consider the growing overlaps between compliance and innovation as they refine AI regulations and the transparency mandates they contain. This is particularly true for many of the more specific state regulations that have not yet been enacted.
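The retrieval step that gives RAG its transparency benefit can be sketched in a few lines. In the hypothetical example below, passages keep identifiers so that generated answers can cite them; simple word overlap stands in for the embedding-based retrieval typically used in practice, and the call to an LLM is omitted. The corpus, ids, and function names are illustrative assumptions.

```python
import re

# Hypothetical base corpus; each passage keeps an id so answers can cite it.
CORPUS = {
    "doc-ll144": "New York City Local Law 144 mandates annual bias audits "
                 "for automated employment decision tools.",
    "doc-sb1001": "California SB 1001 requires bots used in commercial "
                  "transactions or elections to be disclosed.",
}


def tokenize(text: str) -> set[str]:
    """Lowercase word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, corpus: dict[str, str],
             k: int = 1) -> list[tuple[str, str]]:
    """Rank passages by word overlap with the query and keep the top k."""
    q = tokenize(query)
    ranked = sorted(corpus.items(),
                    key=lambda item: len(q & tokenize(item[1])),
                    reverse=True)
    return ranked[:k]


def build_prompt(query: str, corpus: dict[str, str], k: int = 1) -> str:
    """Assemble a grounded prompt; the LLM call itself is omitted."""
    context = "\n".join(f"[{doc_id}] {text}"
                        for doc_id, text in retrieve(query, corpus, k))
    return ("Answer using only the sources below and cite their ids.\n"
            f"{context}\n\nQuestion: {query}")


print(build_prompt("Which law mandates annual bias audits?", CORPUS))
```

Because the prompt carries source ids, a user can trace a generated claim back to the passage it rests on.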

We also want to note that the regulatory strategies and transparency mandates we outline do not automatically mitigate the structural issues that persist in AI design and in society at large. These include, but are not limited to, pervasive AI harms, the homogeneity of the groups entrusted with AI design and governance and the resulting power imbalances, the extractive nature of data collection for AI systems, and AI’s ecological impact.

Against that backdrop, we caution against using the list of AI transparency mandates as a checklist. Rather, we envision a future of applied socio-technical research in which researchers build on these patterns to advance applied research on any of these mandates and thereby avoid corporate capture of compliance. Empowering the research community to pursue this effort in an interdisciplinary way (rather than letting corporate legal teams decide what good compliance looks like) keeps the door open for asking questions about the structural issues that AI might be involved in and that AI regulation set out to mitigate in the first place.

4. Conclusion and Future Work

In this paper, we provided a bottom-up analysis of AI regulation strategies and AI transparency patterns in Europe, the United States, and Canada. The goal of this study was to begin building a bridge between the AI policy world and applied socio-technical research on fairness, accountability, and transparency in AI, a research space in which methods for compliance with new AI regulations will emerge. We envision future socio-technical research that explicitly builds on the patterns of AI transparency mandates we identify and develops meaningful, research-based compliance techniques driven by the public interest rather than corporate interest. These may include more holistic audit approaches that also examine underlying assumptions, stakeholder-driven impact assessments and red teaming approaches, profession-specific disclosures, comprehensive and mandatory inventories, and meaningful human-in-the-loop processes. We hope that this study can be a resource to further this work and make the AI policy discourse, which is often complex and legalistic, more accessible.

In addition to the analytical work contained in the paper, we hope that our work will prompt not only pragmatic, but also critical research on the global landscape of AI regulation. In particular, we hope that future work will shed light on the AI regulations that were not included in this study, particularly in geographies that tend to be under-represented in AI research, and in research more generally. We also hope that future research turns to ethnographic explorations of the AI law-making process in different cultural contexts in order to generate a better understanding of how the typologies we identify in this paper come about, and what their politics are. Lastly, we hope that future work will examine how participatory policy-making and participatory compliance processes can be established.

Data availability statement

The repository of regulations can be accessed here: https://docs.google.com/spreadsheets/d/1-QBmdlNYDHG6eQ4fvFBKtbhben1G3DqZ/edit?usp=sharing&ouid=100323066448477703790&rtpof=true&sd=true.

Acknowledgments

We thank the reviewers for their insightful comments, as well as the teams at Data & Policy and Sloane Lab (and particularly Mack Brumbaugh) for their support of this project.

Author contribution

Conceptualization: M.S.; Methodology: M.S.; Data collection: E.W.; Data analysis: E.W.; Writing original draft: M.S., E.W.; Reviewing and editing: M.S. All authors approved the final submitted draft.

Provenance

This article is part of the Data for Policy 2024 Proceedings and was accepted in Data & Policy on the strength of the Conference’s review process.

Funding statement

This research was supported by the NYU Center for Responsible AI (Pivotal Ventures), Sloane Lab at the University of Virginia, and the German Academic Scholarship Foundation.

Competing interest

The authors declare that no competing interests exist.

Ethical standards

The research meets all ethical guidelines, including adherence to the legal requirements of the study country.
