Impact Statement

Information on the hydrological cycle is imprinted onto the isotopic composition of precipitation, which subsequently is preserved in natural climate archives like speleothems or glaciers. Some climate models, so-called isotope-enabled General Circulation Models (iGCMs), simulate isotopes explicitly and, thus, allow comparing climate model output under paleoclimate scenarios to samples taken from natural climate archives. However, isotopes are not included in most climate simulations due to computational constraints and the complexity of their implementation. We test the possibility of using machine learning methods to infer the isotopic composition from surface temperature and precipitation amounts, which are standard outputs for a wide range of climate models.

1. Introduction

Reliable analysis of current climate change, as well as robust prediction of future Earth system behavior, has become a crucial foundation for all endeavors to protect humanity’s prosperity, mitigate ecological disasters, or formulate plans for adaptation (IPCC, 2023). This analysis hinges on an accurate understanding and modeling of complex mechanisms in the climate system, which in turn relies on knowledge of the system’s past behavior. To analyze past climatic conditions outside the comparatively short period of instrumental measurements, we depend on environmental processes recording and preserving information on the climate system in natural “climate archives.” One way to recover past climate information from such archives is to measure the relative abundance of isotopes, particularly of the isotopes of the constituents of water molecules (Mook and Rozanski, Reference Mook2000). Due to differences in mass, molecules with varying isotopic compositions, so-called isotopologues, differ in their behavior in chemical reactions and phase transitions. For the special case of water, molecules containing heavy

![]() $ {}^{18}\mathrm{O} $

atoms, in the following denoted heavy isotopes, evaporate slower but condensate faster than ones containing the lighter

$ {}^{18}\mathrm{O} $

atoms, in the following denoted heavy isotopes, evaporate slower but condensate faster than ones containing the lighter

![]() $ {}^{16}\mathrm{O} $

. These effects are imprinted on the global hydrological cycle. The resulting patterns of the isotopic composition of precipitation depend on many variables such as precipitation amount, temperature, relative humidity, and the circulation of the atmosphere (Dansgaard, Reference Dansgaard1964). This makes heavy isotopes in water an important tracer of the hydrological cycle and consequently a valuable proxy for past climatic changes.

$ {}^{16}\mathrm{O} $

. These effects are imprinted on the global hydrological cycle. The resulting patterns of the isotopic composition of precipitation depend on many variables such as precipitation amount, temperature, relative humidity, and the circulation of the atmosphere (Dansgaard, Reference Dansgaard1964). This makes heavy isotopes in water an important tracer of the hydrological cycle and consequently a valuable proxy for past climatic changes.

Isotopic abundances are canonically expressed in the delta notation. For stable oxygen isotopes

![]() $ {}^{18}\mathrm{O} $

and

$ {}^{18}\mathrm{O} $

and

![]() $ {}^{16}\mathrm{O} $

, this is given by

$ {}^{16}\mathrm{O} $

, this is given by

Here the ratio of concentrations of the isotopic species in a given sample is compared to a defined reference standard. For

![]() $ {\delta}^{18}\mathrm{O} $

of precipitation, this standard is an artificially created sample with an isotopic composition that is typical for ocean surface water (Baertschi, Reference Baertschi1976).

$ {\delta}^{18}\mathrm{O} $

of precipitation, this standard is an artificially created sample with an isotopic composition that is typical for ocean surface water (Baertschi, Reference Baertschi1976).

One important task in paleoclimatology is to test whether hypotheses about the past climate are compatible with proxy data like

![]() $ {\delta}^{18}\mathrm{O} $

measured in natural climate archives (e.g. Bühler et al., Reference Bühler, Axelsson, Lechleitner, Fohlmeister, LeGrande, Midhun, Sjolte, Werner, Yoshimura and Rehfeld2022). To compare simulations of hypothetical climate states to those measurements, a special sub-type of climate models, so-called isotope-enabled General Circulation Models (iGCMs), was developed. They explicitly simulate isotopic compositions by following the isotopic water species through the hydrological cycle (Yoshimura et al., Reference Yoshimura, Kanamitsu, Noone and Oki2008; Tindall et al., Reference Tindall, Valdes and Sime2009; Colose et al., Reference Colose, LeGrande and Vuille2016; Werner et al., Reference Werner, Haese, Xu, Zhang, Butzin and Lohmann2016; Brady et al., Reference Brady, Stevenson, Bailey, Liu, Noone, Nusbaumer, Otto-Bliesner, Tabor, Tomas, Wong, Zhang and Zhu2019). However, many climate models and climate model simulations exist that do not include information on water isotopologues. Simulating

$ {\delta}^{18}\mathrm{O} $

measured in natural climate archives (e.g. Bühler et al., Reference Bühler, Axelsson, Lechleitner, Fohlmeister, LeGrande, Midhun, Sjolte, Werner, Yoshimura and Rehfeld2022). To compare simulations of hypothetical climate states to those measurements, a special sub-type of climate models, so-called isotope-enabled General Circulation Models (iGCMs), was developed. They explicitly simulate isotopic compositions by following the isotopic water species through the hydrological cycle (Yoshimura et al., Reference Yoshimura, Kanamitsu, Noone and Oki2008; Tindall et al., Reference Tindall, Valdes and Sime2009; Colose et al., Reference Colose, LeGrande and Vuille2016; Werner et al., Reference Werner, Haese, Xu, Zhang, Butzin and Lohmann2016; Brady et al., Reference Brady, Stevenson, Bailey, Liu, Noone, Nusbaumer, Otto-Bliesner, Tabor, Tomas, Wong, Zhang and Zhu2019). However, many climate models and climate model simulations exist that do not include information on water isotopologues. Simulating

![]() $ {\delta}^{18}\mathrm{O} $

is costly because it typically requires duplicating large parts of the water cycle for each simulated water species (Tindall et al., Reference Tindall, Valdes and Sime2009). In light of recent advances in data science, the question arises whether this isotopic output can instead be emulated using machine learning (ML) models that infer the

$ {\delta}^{18}\mathrm{O} $

is costly because it typically requires duplicating large parts of the water cycle for each simulated water species (Tindall et al., Reference Tindall, Valdes and Sime2009). In light of recent advances in data science, the question arises whether this isotopic output can instead be emulated using machine learning (ML) models that infer the

![]() $ {\delta}^{18}\mathrm{O} $

at each location from other climate variables after a model run is finished. We thus call this approach “offline-emulation.” Conducting the emulation “offline,” that is, not coupled to the climate simulation, is possible because isotopes are passive tracers of the hydrological cycle that reflect climatic variations, but have no feedback onto the climate system. Exploratory work in this direction has been conducted by Fiorella et al. (Reference Fiorella, Siler, Nusbaumer and Noone2021), who used random forest regression to infer isotope ratios in precipitation. Their study assessed whether and to what extent potential climate effects on the isotopic composition can be verified in data simulated by an isotope-enabled climate model. There is an important difference between their study and our work: while our ML methods use standard output variables of climate models as inputs, Fiorella et al. (Reference Fiorella, Siler, Nusbaumer and Noone2021) relied on tracers being implemented for the inputs to the random forest regression. While this is a suitable choice for their study design and research question, it limits the utility of their random forest model as an emulator.

$ {\delta}^{18}\mathrm{O} $

at each location from other climate variables after a model run is finished. We thus call this approach “offline-emulation.” Conducting the emulation “offline,” that is, not coupled to the climate simulation, is possible because isotopes are passive tracers of the hydrological cycle that reflect climatic variations, but have no feedback onto the climate system. Exploratory work in this direction has been conducted by Fiorella et al. (Reference Fiorella, Siler, Nusbaumer and Noone2021), who used random forest regression to infer isotope ratios in precipitation. Their study assessed whether and to what extent potential climate effects on the isotopic composition can be verified in data simulated by an isotope-enabled climate model. There is an important difference between their study and our work: while our ML methods use standard output variables of climate models as inputs, Fiorella et al. (Reference Fiorella, Siler, Nusbaumer and Noone2021) relied on tracers being implemented for the inputs to the random forest regression. While this is a suitable choice for their study design and research question, it limits the utility of their random forest model as an emulator.

Within this study, we narrow the broad task of “offline-emulation” by making a number of choices for the learned isotope emulation. The first choice is to only emulate the isotopic composition of precipitation, neglecting subsequent processes that might disturb the signal until it is stored in a climate archive (see e.g., Casado et al., Reference Casado, Landais, Picard, Münch, Laepple, Stenni, Dreossi, Ekaykin, Arnaud, Genthon, Touzeau, Masson-Delmotte and Jouzel2018). Systematic observations of oxygen isotopes in precipitation did not begin until the 1960s (IAEA/WMO, 2020), and data are spatially sparse. A line of research (Bowen and Revenaugh, Reference Bowen and Revenaugh2003; Bowen, Reference Bowen2010; Vachon et al., Reference Vachon, Welker, White and Vaughn2010; Terzer et al., Reference Terzer, Wassenaar, Araguás-Araguás and Aggarwal2013) constructs so-called iso-scapes (isotopic landscapes) for

![]() $ {\delta}^{18}\mathrm{O} $

from observation data (e.g., IAEA/WMO, 2020). These studies often address climatological rather than meteorological questions (Bowen, Reference Bowen2010), and provide, for instance, multi-year averages of annual and monthly mean

$ {\delta}^{18}\mathrm{O} $

from observation data (e.g., IAEA/WMO, 2020). These studies often address climatological rather than meteorological questions (Bowen, Reference Bowen2010), and provide, for instance, multi-year averages of annual and monthly mean

![]() $ {\delta}^{18}\mathrm{O} $

. In contrast, we exclusively utilize simulation data in our experiments and aim to learn and emulate the relationship between a given atmospheric state and the related spatial distribution of

$ {\delta}^{18}\mathrm{O} $

. In contrast, we exclusively utilize simulation data in our experiments and aim to learn and emulate the relationship between a given atmospheric state and the related spatial distribution of

![]() $ {\delta}^{18}\mathrm{O} $

.

$ {\delta}^{18}\mathrm{O} $

.

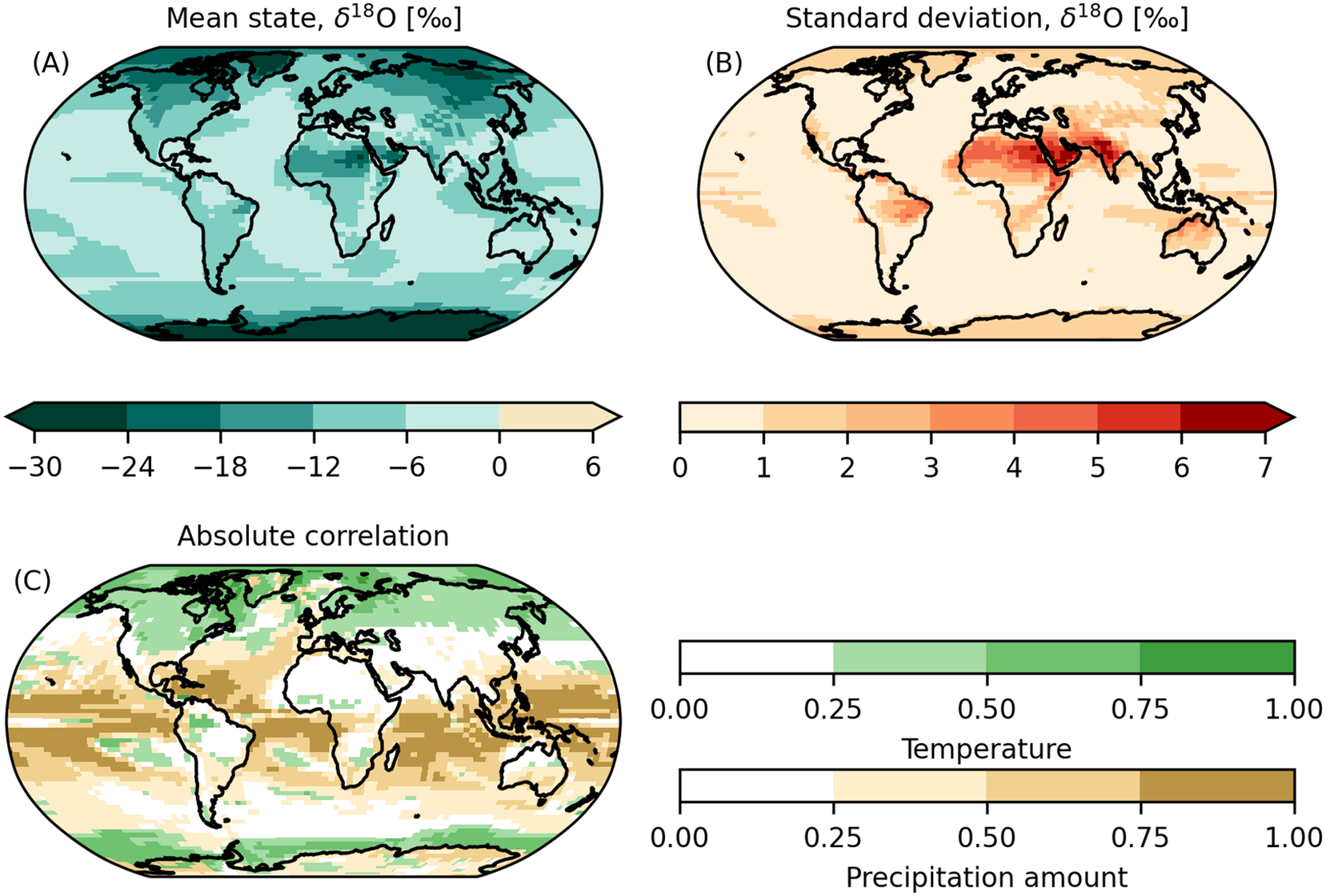

We limit ourselves to using surface temperature and precipitation amount as the two fundamental predictor variables, since these variables possess strong correlations to

![]() $ {\delta}^{18}\mathrm{O} $

that are well known experimentally (Dansgaard, Reference Dansgaard1964) and from simulations (see Figure 2c) and are frequently simulated in climate models. We decided to emulate yearly

$ {\delta}^{18}\mathrm{O} $

that are well known experimentally (Dansgaard, Reference Dansgaard1964) and from simulations (see Figure 2c) and are frequently simulated in climate models. We decided to emulate yearly

![]() $ {\delta}^{18}\mathrm{O} $

data from the last millennium (850 CE to 1849 CE) climate simulations. This is motivated by the combination of the high data availability of simulation runs of sufficient length, and the archiving resolution of paleoclimate records during this time period which is typically between monthly and sub-decadal. We also contrast the yearly emulation results with experiments using monthly resolution.

$ {\delta}^{18}\mathrm{O} $

data from the last millennium (850 CE to 1849 CE) climate simulations. This is motivated by the combination of the high data availability of simulation runs of sufficient length, and the archiving resolution of paleoclimate records during this time period which is typically between monthly and sub-decadal. We also contrast the yearly emulation results with experiments using monthly resolution.

As a measure of emulator performance, we will use the

![]() $ {R}^2 $

score, which measures the fraction of explained temporal variance, as detailed in Section 2.2.5. While we use ML methods that exploit spatial correlations in the data by design, we leave explicit incorporation of temporal correlations largely to future investigation.

$ {R}^2 $

score, which measures the fraction of explained temporal variance, as detailed in Section 2.2.5. While we use ML methods that exploit spatial correlations in the data by design, we leave explicit incorporation of temporal correlations largely to future investigation.

Working within these constraints, our article presents the following contributions:

-

• We train a deep neural network to estimate stable oxygen isotopes in precipitation (

$ {\delta}^{18}\mathrm{O} $

), given surface temperature and precipitation, and compare to common regression baselines.

$ {\delta}^{18}\mathrm{O} $

), given surface temperature and precipitation, and compare to common regression baselines. -

• To respect the underlying geometry of the climate model data, we investigate the performance of a spherical network architecture.

-

• We present cross-model results, where a regressor trained on simulated data from one climate model is used to emulate

$ {\delta}^{18}\mathrm{O} $

in a run from a different model.

$ {\delta}^{18}\mathrm{O} $

in a run from a different model.

2. Data and Methodology

Our approach to emulating

![]() $ {\delta}^{18}\mathrm{O} $

is sketched in Figure 1. For each time step, we start with variables that we know to be statistically related to

$ {\delta}^{18}\mathrm{O} $

is sketched in Figure 1. For each time step, we start with variables that we know to be statistically related to

![]() $ {\delta}^{18}\mathrm{O} $

, namely surface temperature and precipitation amount. All variables are standardized pixel-wise, that is, we subtract the mean and divide by the standard deviation, both calculated on the training set. We then estimate the standardized spatial field of

$ {\delta}^{18}\mathrm{O} $

, namely surface temperature and precipitation amount. All variables are standardized pixel-wise, that is, we subtract the mean and divide by the standard deviation, both calculated on the training set. We then estimate the standardized spatial field of

![]() $ {\delta}^{18}\mathrm{O} $

from the predictor variables by training a machine learning (ML) regression model. Subsequently, the standardization is inverted for the inferred

$ {\delta}^{18}\mathrm{O} $

from the predictor variables by training a machine learning (ML) regression model. Subsequently, the standardization is inverted for the inferred

![]() $ {\delta}^{18}\mathrm{O} $

, resulting in our estimate for the isotopic composition.

$ {\delta}^{18}\mathrm{O} $

, resulting in our estimate for the isotopic composition.

Figure 1. Our approach to the emulation of

![]() $ {\delta}^{18}\mathrm{O} $

in precipitation: for each time step, we use surface temperature and precipitation amount as predictor variables. Subsequently, the data is standardized pixel-wise by subtracting the mean and dividing it by the standard deviation at each pixel (top right). Means and standard deviations are based on the training set of the investigated climate model simulation. We use a machine learning emulation model (ML Regressor) to obtain a standardized estimate for

$ {\delta}^{18}\mathrm{O} $

in precipitation: for each time step, we use surface temperature and precipitation amount as predictor variables. Subsequently, the data is standardized pixel-wise by subtracting the mean and dividing it by the standard deviation at each pixel (top right). Means and standard deviations are based on the training set of the investigated climate model simulation. We use a machine learning emulation model (ML Regressor) to obtain a standardized estimate for

![]() $ {\delta}^{18}\mathrm{O} $

. The emulator output (bottom right) is then de-standardized using the training set mean and standard deviation of

$ {\delta}^{18}\mathrm{O} $

. The emulator output (bottom right) is then de-standardized using the training set mean and standard deviation of

![]() $ {\delta}^{18}\mathrm{O} $

at every pixel, to arrive at the final emulation result (bottom left). When applying the ML model to data from climate models other than the one that was used for training (e.g., in the cross-comparison experiment in Section 3.4), we use the mean and standard deviation from the training set of the new model.

$ {\delta}^{18}\mathrm{O} $

at every pixel, to arrive at the final emulation result (bottom left). When applying the ML model to data from climate models other than the one that was used for training (e.g., in the cross-comparison experiment in Section 3.4), we use the mean and standard deviation from the training set of the new model.

2.1. Data

We use data from the isotope-enabled version of the Hadley Center Climate Model version 3 (hereafter iHadCM3, Tindall et al., Reference Tindall, Valdes and Sime2009). iHadCM3 is a fully coupled atmosphere–ocean general circulation model (AOGCM). The horizontal resolution of iHadCM3 is 3.75° in the longitudinal direction, and 2.5° in the latitudinal direction. We exclude −90° and 90° from the latitudinal values because

![]() $ {\delta}^{18}\mathrm{O} $

is not simulated at these latitudes. We focus on the last millennium (850 CE to 1849 CE), which is characterized by a stable climate with variability on interannual-to-centennial timescales, but no major trends (Jungclaus et al., Reference Jungclaus, Bard, Baroni, Braconnot, Cao, Chini, Egorova, Evans, González-Rouco, Goosse, Hurtt, Joos, Kaplan, Khodri, Klein Goldewijk, Krivova, LeGrande, Lorenz, Luterbacher, Man, Maycock, Meinshausen, Moberg, Muscheler, Nehrbass-Ahles, Otto-Bliesner, Phipps, Pongratz, Rozanov, Schmidt, Schmidt, Schmutz, Schurer, Shapiro, Sigl, Smerdon, Solanki, Timmreck, Toohey, Usoskin, Wagner, Wu, Yeo, Zanchettin, Zhang and Zorita2017). Additionally, the last millennium is well documented in climate archives and observations (Morice et al., Reference Morice, Kennedy, Rayner and Jones2012; PAGES2k-Consortium, 2019; Comas-Bru et al., Reference Comas-Bru, Rehfeld, Roesch, Amirnezhad-Mozhdehi, Harrison, Atsawawaranunt, Ahmad, Brahim, Baker, Bosomworth, Breitenbach, Burstyn, Columbu, Deininger, Demény, Dixon, Fohlmeister, Hatvani, Hu, Kaushal, Kern, Labuhn, Lechleitner, Lorrey, Martrat, Novello, Oster, Pérez-Mejías, Scholz, Scroxton, Sinha, Ward, Warken and Zhang2020; Konecky et al., Reference Konecky, McKay, Churakova (Sidorova), Comas-Bru, Dassié, KL, Falster, Fischer, Jones, Jonkers, Kaufman, Leduc, Managave, Martrat, Opel, Orsi, Partin, Sayani, Thomas, Thompson, Tyler, Abram, Atwood, Conroy, Kern, Porter, Stevenson and von Gunten2020).

$ {\delta}^{18}\mathrm{O} $

is not simulated at these latitudes. We focus on the last millennium (850 CE to 1849 CE), which is characterized by a stable climate with variability on interannual-to-centennial timescales, but no major trends (Jungclaus et al., Reference Jungclaus, Bard, Baroni, Braconnot, Cao, Chini, Egorova, Evans, González-Rouco, Goosse, Hurtt, Joos, Kaplan, Khodri, Klein Goldewijk, Krivova, LeGrande, Lorenz, Luterbacher, Man, Maycock, Meinshausen, Moberg, Muscheler, Nehrbass-Ahles, Otto-Bliesner, Phipps, Pongratz, Rozanov, Schmidt, Schmidt, Schmutz, Schurer, Shapiro, Sigl, Smerdon, Solanki, Timmreck, Toohey, Usoskin, Wagner, Wu, Yeo, Zanchettin, Zhang and Zorita2017). Additionally, the last millennium is well documented in climate archives and observations (Morice et al., Reference Morice, Kennedy, Rayner and Jones2012; PAGES2k-Consortium, 2019; Comas-Bru et al., Reference Comas-Bru, Rehfeld, Roesch, Amirnezhad-Mozhdehi, Harrison, Atsawawaranunt, Ahmad, Brahim, Baker, Bosomworth, Breitenbach, Burstyn, Columbu, Deininger, Demény, Dixon, Fohlmeister, Hatvani, Hu, Kaushal, Kern, Labuhn, Lechleitner, Lorrey, Martrat, Novello, Oster, Pérez-Mejías, Scholz, Scroxton, Sinha, Ward, Warken and Zhang2020; Konecky et al., Reference Konecky, McKay, Churakova (Sidorova), Comas-Bru, Dassié, KL, Falster, Fischer, Jones, Jonkers, Kaufman, Leduc, Managave, Martrat, Opel, Orsi, Partin, Sayani, Thomas, Thompson, Tyler, Abram, Atwood, Conroy, Kern, Porter, Stevenson and von Gunten2020).

Diagnostics of the iHadCM3 data set are visualized in Figure 2. As can be seen from Figure 2b, the standard deviation of the simulated

![]() $ {\delta}^{18}\mathrm{O} $

is large over dry regions like the Sahara desert or the Arabian peninsula. This is partly related to the way

$ {\delta}^{18}\mathrm{O} $

is large over dry regions like the Sahara desert or the Arabian peninsula. This is partly related to the way

![]() $ {\delta}^{18}\mathrm{O} $

is computed in the climate models: in these regions, the abundances of

$ {\delta}^{18}\mathrm{O} $

is computed in the climate models: in these regions, the abundances of

![]() $ {}^{18}\mathrm{O} $

and

$ {}^{18}\mathrm{O} $

and

![]() $ {}^{16}\mathrm{O} $

are both small because of generally low precipitation amounts, leading to numerically unstable ratios and missing values on the monthly time scale. Overall, 0.3% of the

$ {}^{16}\mathrm{O} $

are both small because of generally low precipitation amounts, leading to numerically unstable ratios and missing values on the monthly time scale. Overall, 0.3% of the

![]() $ {\delta}^{18}\mathrm{O} $

values are missing on the monthly timescale, with a strong clustering in the regions with numerical instabilities described above (compare Supplementary Figure A.10). We take this into account by adapting the loss we use to train our ML methods to deal with missing values, as described in Section 2.2.3.

$ {\delta}^{18}\mathrm{O} $

values are missing on the monthly timescale, with a strong clustering in the regions with numerical instabilities described above (compare Supplementary Figure A.10). We take this into account by adapting the loss we use to train our ML methods to deal with missing values, as described in Section 2.2.3.

Figure 2. Statistical properties of the iHadCM3

![]() $ {\delta}^{18}\mathrm{O} $

data: (a) mean state of isotopic composition (

$ {\delta}^{18}\mathrm{O} $

data: (a) mean state of isotopic composition (

![]() $ {\delta}^{18}\mathrm{O} $

) in precipitation and (b) standard deviation of

$ {\delta}^{18}\mathrm{O} $

) in precipitation and (b) standard deviation of

![]() $ {\delta}^{18}\mathrm{O} $

on an annual timescale; (c) absolute correlations of

$ {\delta}^{18}\mathrm{O} $

on an annual timescale; (c) absolute correlations of

![]() $ {\delta}^{18}\mathrm{O} $

with temperature (green) and precipitation amount (brown) on interannual timescale—for each grid cell only the stronger of the two is shown.

$ {\delta}^{18}\mathrm{O} $

with temperature (green) and precipitation amount (brown) on interannual timescale—for each grid cell only the stronger of the two is shown.

To test the extrapolation and robustness of our emulator, we use last-millennium simulations of three other climate models: Scripps Experimental Climate Prediction Center’s Global Spectral Model (hereafter isoGSM, Yoshimura et al., Reference Yoshimura, Kanamitsu, Noone and Oki2008), iCESM version 1.2 (hereafter iCESM, Brady et al., Reference Brady, Stevenson, Bailey, Liu, Noone, Nusbaumer, Otto-Bliesner, Tabor, Tomas, Wong, Zhang and Zhu2019), and ECHAM5/MPI-OM (hereafter ECHAM5-wiso, Werner et al., Reference Werner, Haese, Xu, Zhang, Butzin and Lohmann2016). While iCESM and ECHAM5-wiso are fully coupled AOGCMs, isoGSM is an atmospheric GCM forced by sea-surface temperatures and sea-ice distributions of a last millennium run with the CCSM4 climate model (Landrum et al., Reference Landrum, Otto-Bliesner, Wahl, Conley, Lawrence, Rosenbloom and Teng2013). We re-grid the data of the other climate model simulations (

![]() $ {\delta}^{18}\mathrm{O} $

, surface temperature, precipitation amount) to the iHadCM3 grid using bilinear interpolation from the CDO tool set (Schulzweida, Reference Schulzweida2020).

$ {\delta}^{18}\mathrm{O} $

, surface temperature, precipitation amount) to the iHadCM3 grid using bilinear interpolation from the CDO tool set (Schulzweida, Reference Schulzweida2020).

All datasets are freely available at https://doi.org/10.5281/zenodo.7516327 and described in detail in Bühler et al. (Reference Bühler, Axelsson, Lechleitner, Fohlmeister, LeGrande, Midhun, Sjolte, Werner, Yoshimura and Rehfeld2022).Footnote 1

2.1.1. Pre-processing

We apply the following pre-processing steps to the climate simulation data:

-

• We set valid ranges for all variables, thereby excluding implausibly large or small values, using the following choices: surface temperature range:

$ \left[\mathrm{173,373}\right] $

K,

$ \left[\mathrm{173,373}\right] $

K,

$ {\delta}^{18}\mathrm{O} $

range:

$ {\delta}^{18}\mathrm{O} $

range:

$ \left[-\mathrm{100,100}\right] $

, precipitation amount:

$ \left[-\mathrm{100,100}\right] $

, precipitation amount:

$ \left[-1,10000\right]\frac{\mathrm{mm}}{\mathrm{month}} $

. Wide ranges are chosen because we aim to exclude only implausible values that might deteriorate emulator performance without artificially removing model deficiencies. Thus, we also keep small negative precipitation values that climate models might produce due to numerical inaccuracies in rare occasions.

$ \left[-1,10000\right]\frac{\mathrm{mm}}{\mathrm{month}} $

. Wide ranges are chosen because we aim to exclude only implausible values that might deteriorate emulator performance without artificially removing model deficiencies. Thus, we also keep small negative precipitation values that climate models might produce due to numerical inaccuracies in rare occasions. -

• Time steps with missing values in the predictor variables are excluded from the dataset. This leads to the exclusion of 31 of the 12,000 monthly time steps of iHadCM3.

-

• We form yearly averages from monthly data. Missing

$ {\delta}^{18}\mathrm{O} $

data points are omitted in the yearly averaging. We argue that this does not impact our results negatively, because the invalid 0.3% of

$ {\delta}^{18}\mathrm{O} $

data points are omitted in the yearly averaging. We argue that this does not impact our results negatively, because the invalid 0.3% of

$ {\delta}^{18}\mathrm{O} $

values cluster in regions, where due to numerical instabilities in the “ground truth” iHadCM3 simulation, learning a physically consistent emulation would not have been possible anyway (compare Supplementary Figure A.10).

$ {\delta}^{18}\mathrm{O} $

values cluster in regions, where due to numerical instabilities in the “ground truth” iHadCM3 simulation, learning a physically consistent emulation would not have been possible anyway (compare Supplementary Figure A.10). -

• We re-grid the yearly datasets to the irregular grid on which the investigated spherical network operates (see Section 2.2) using a first-order conservative remapping scheme (Schulzweida, Reference Schulzweida2020).

-

• We split the data into test and training sets. We use 850–1750 CE for training and 1751–1849 CE for testing. The data are split chronologically instead of randomly to make the test and training set as independent as possible, and prevent the network from exploiting auto-correlations from previous or subsequent time steps. If a validation set (used for making choices of ML hyperparameters) is needed, we split off 10% of the training set randomly unless specified otherwise.

-

• Before the ML methods are applied, the data are standardized pixel-wise by subtracting the training set mean and dividing by the standard deviation of the corresponding climate model, as visualized in Figure 1.

2.2. Methodology

To obtain a spatially consistent emulation, and to utilize the fact that the local statistical relations between

![]() $ {\delta}^{18}\mathrm{O} $

and the predictor variables are similar in many grid boxes on the Earth’s surface, we choose two approaches based on convolutional neural networks (CNNs). Both utilize the successful UNet architecture (Ronneberger et al., Reference Ronneberger, Fischer and Brox2015), whose multi-scale analysis can simultaneously capture fine structure variations and utilize large-scale contextual information. UNet architectures have been successfully applied in a climate science context before (e.g., Kadow et al., Reference Kadow, Hall and Ulbrich2020). The first of our two approaches treats data on the latitude-longitude grid as a flat image. The second explicitly incorporates the spherical geometry of the data.

$ {\delta}^{18}\mathrm{O} $

and the predictor variables are similar in many grid boxes on the Earth’s surface, we choose two approaches based on convolutional neural networks (CNNs). Both utilize the successful UNet architecture (Ronneberger et al., Reference Ronneberger, Fischer and Brox2015), whose multi-scale analysis can simultaneously capture fine structure variations and utilize large-scale contextual information. UNet architectures have been successfully applied in a climate science context before (e.g., Kadow et al., Reference Kadow, Hall and Ulbrich2020). The first of our two approaches treats data on the latitude-longitude grid as a flat image. The second explicitly incorporates the spherical geometry of the data.

2.2.1. Flat network

Because our data naturally lie on the surface of a sphere, distortions arise when treating the equally spaced longitude-latitude grid as a flat image using, for example, a plate carrée projection (lat/lon projection). We test if we can still obtain reasonable results with this naive setup. Furthermore, we try to partially remedy the effects of the distortions within the “flat” approach, by modifying the standard UNet architecture in three ways:

-

• We use area-weighted loss functions.

-

• We use periodic padding in the longitudinal direction, that is, we append the rightmost column to the very left of the plate carrée map (and vice versa) before computing convolutions. Thereby, we assure continuity along the 0°–360° coordinate discontinuity.

-

• We incorporate CoordConv (Liu et al., Reference Liu, Lehman, Molino, Such, Frank, Sergeev, Yosinski, Bengio, Wallach, Larochelle, Grauman, Cesa-Bianchi and Garnett2018), a tweak to convolutional layers that appends the coordinates to the features input into each convolution, thus allowing networks to learn to break translational symmetry if necessary.

2.2.2. Spherical network

As a more sophisticated technique, a multitude of approaches to directly incorporate the spherical nature of data into a neural network architecture has been proposed (Cohen et al., Reference Cohen, Geiger, Koehler and Welling2018; Coors et al., Reference Coors, Condurache, Geiger, Ferrari, Hebert, Sminchisescu and Weiss2018; Cohen et al., Reference Cohen, Weiler, Kicanaoglu, Welling, Chaudhuri and Salakhutdinov2019; Defferrard et al., Reference Defferrard, Milani, Gusset and Perraudin2020; Esteves et al., Reference Esteves, Allen-Blanchette, Makadia and Daniilidis2020; Lam et al., Reference Lam, Sanchez-Gonzalez, Willson, Wirnsberger, Fortunato, Pritzel, Ravuri, Ewalds, Alet, Eaton-Rosen, Hu, Merose, Hoyer, Holland, Stott, Vinyals, Mohamed and Battaglia2022). We reproduce the approach of Cohen et al. (Reference Cohen, Weiler, Kicanaoglu, Welling, Chaudhuri and Salakhutdinov2019), where the network operates on an icosahedral grid, with grid boxes centered on the vertices. Using the icosahedron offers a straightforward way to increase or decrease resolution for a UNet-like design, as we can recursively subdivide each of its triangles into four smaller triangles, projecting all newly created vertices onto the sphere again. We denote the number of recursive refinements of the grid as

![]() $ r $

, with

$ r $

, with

![]() $ r=0 $

identifying the grid containing only the 12 vertices of the regular icosahedron. As the refined icosahedral grid is locally very similar to a flat hexagonal grid, we can use an appropriately adapted implementation of the usual efficient way to compute convolutions. Additionally, the architecture of Cohen et al. (Reference Cohen, Weiler, Kicanaoglu, Welling, Chaudhuri and Salakhutdinov2019) is equivariant to a group of symmetry transformations, meaning that if the input to the CNN is transformed by an element of the symmetry group, the output transforms accordingly. This fits well with the approximate symmetries present in the Earth system, like symmetry to reflections on the equatorial plane or rotations around the polar axis. We validate our implementation of the method on a toy problem described by Cohen et al. (Reference Cohen, Weiler, Kicanaoglu, Welling, Chaudhuri and Salakhutdinov2019): the classification of handwritten digits projected onto a spherical surface. We obtain results that are comparable to those reported by Cohen et al. (Reference Cohen, Weiler, Kicanaoglu, Welling, Chaudhuri and Salakhutdinov2019); see Supplementary Appendix A.1.1 for more details.

$ r=0 $

identifying the grid containing only the 12 vertices of the regular icosahedron. As the refined icosahedral grid is locally very similar to a flat hexagonal grid, we can use an appropriately adapted implementation of the usual efficient way to compute convolutions. Additionally, the architecture of Cohen et al. (Reference Cohen, Weiler, Kicanaoglu, Welling, Chaudhuri and Salakhutdinov2019) is equivariant to a group of symmetry transformations, meaning that if the input to the CNN is transformed by an element of the symmetry group, the output transforms accordingly. This fits well with the approximate symmetries present in the Earth system, like symmetry to reflections on the equatorial plane or rotations around the polar axis. We validate our implementation of the method on a toy problem described by Cohen et al. (Reference Cohen, Weiler, Kicanaoglu, Welling, Chaudhuri and Salakhutdinov2019): the classification of handwritten digits projected onto a spherical surface. We obtain results that are comparable to those reported by Cohen et al. (Reference Cohen, Weiler, Kicanaoglu, Welling, Chaudhuri and Salakhutdinov2019); see Supplementary Appendix A.1.1 for more details.

2.2.3. Loss function

To train our UNet architectures for isotope emulation, we use a weighted mean squared error loss between the standardized

![]() $ {\delta}^{18}\mathrm{O} $

ground truth

$ {\delta}^{18}\mathrm{O} $

ground truth

![]() $ Y $

and the predicted values

$ Y $

and the predicted values

![]() $ \hat{Y} $

:

$ \hat{Y} $

:

$$ L\left(Y,\hat{Y}\right)=\frac{1}{b}\sum \limits_{i=1}^b\frac{1}{\mid {\mathcal{G}}_i\mid}\sum \limits_{j\in {\mathcal{G}}_i}^{\mid {\mathcal{G}}_i\mid }{w}_j{\left({Y}_{i,j}-{\hat{Y}}_{i,j}\right)}^2, $$

$$ L\left(Y,\hat{Y}\right)=\frac{1}{b}\sum \limits_{i=1}^b\frac{1}{\mid {\mathcal{G}}_i\mid}\sum \limits_{j\in {\mathcal{G}}_i}^{\mid {\mathcal{G}}_i\mid }{w}_j{\left({Y}_{i,j}-{\hat{Y}}_{i,j}\right)}^2, $$

where the loss is averaged over a batch of size

![]() $ b $

and the set of valid grid boxes

$ b $

and the set of valid grid boxes

![]() $ {\mathcal{G}}_i $

at time step

$ {\mathcal{G}}_i $

at time step

![]() $ i $

. A grid box is valid if the simulated ground truth data has no missing value at this time step in this grid box.

$ i $

. A grid box is valid if the simulated ground truth data has no missing value at this time step in this grid box.

![]() $ \mid {\mathcal{G}}_i\mid $

denotes the cardinality of

$ \mid {\mathcal{G}}_i\mid $

denotes the cardinality of

![]() $ {\mathcal{G}}_i $

, and

$ {\mathcal{G}}_i $

, and

![]() $ {w}_j $

are weighting coefficients. For the convolutional UNet working on the plate carrée projection, we choose

$ {w}_j $

are weighting coefficients. For the convolutional UNet working on the plate carrée projection, we choose

![]() $ {w}_j $

to be proportional to the cosine of the latitude of the center of grid cell

$ {w}_j $

to be proportional to the cosine of the latitude of the center of grid cell

![]() $ j $

, which is an approximation of the area of the grid cell. We rescale the weights, such that they sum to the total number of grid boxes. For the icosahedral UNet, all grid boxes are of approximately equal size. Therefore, no weighting is applied and

$ j $

, which is an approximation of the area of the grid cell. We rescale the weights, such that they sum to the total number of grid boxes. For the icosahedral UNet, all grid boxes are of approximately equal size. Therefore, no weighting is applied and

![]() $ {w}_j $

is a constant independent of

$ {w}_j $

is a constant independent of

![]() $ j $

.

$ j $

.

2.2.4. Baselines

In addition to the UNet models, we implement three simple baseline models to assess the relative benefit of complex and deep models in our emulation problem. These baselines are as follows:

-

• Grid-box-wise linear regression, the simplest conceivable model: regress

$ {\delta}^{18}\mathrm{O} $

on temperature and precipitation amount in a separate model for each grid box.

$ {\delta}^{18}\mathrm{O} $

on temperature and precipitation amount in a separate model for each grid box. -

• Grid-box-wise random forest regression model: in contrast to the linear regression baseline, we train a single random forest (Breiman, Reference Breiman2001) to make predictions on all grid boxes. To allow the model to learn spatially varying relationships, we include the coordinates as predictor variables.Footnote 2

-

• Grid-to-grid approach (PCA regression): relations between

$ {\delta}^{18}\mathrm{O} $

and other climatic variables tend to behave similarly over large areas (see Figure 2c), justifying a dimension reduction of the input and output spaces before applying a multivariate linear regression. This is implemented by computing the principal components of the input and output spaces. Schematically, the computation goes as follows:

$ {\delta}^{18}\mathrm{O} $

and other climatic variables tend to behave similarly over large areas (see Figure 2c), justifying a dimension reduction of the input and output spaces before applying a multivariate linear regression. This is implemented by computing the principal components of the input and output spaces. Schematically, the computation goes as follows:

$ X\overset{{\mathrm{PCA}}_X}{\mapsto }{C}_X\overset{\mathrm{lin}.\mathrm{reg}.}{\mapsto }{\hat{C}}_Y\overset{{\mathrm{PCA}}_Y^{-1}}{\mapsto}\hat{Y} $

. Approximately optimal numbers of principal components are obtained as follows: we iterate over a

$ X\overset{{\mathrm{PCA}}_X}{\mapsto }{C}_X\overset{\mathrm{lin}.\mathrm{reg}.}{\mapsto }{\hat{C}}_Y\overset{{\mathrm{PCA}}_Y^{-1}}{\mapsto}\hat{Y} $

. Approximately optimal numbers of principal components are obtained as follows: we iterate over a

$ 50\times 50 $

logarithmically spaced grid of candidate values for the number of input and output principal components. For each configuration, the emulation model is trained and its performance is measured on a held-out validation set. We then select the combination of numbers of input and output principal components which yields the best results on the validation set. As a last step, the selected model is retrained, now including the validation set data. Principal component analysis can be performed on arbitrary grids, which makes it equally applicable to the projected 2D data and the icosahedral representation.

$ 50\times 50 $

logarithmically spaced grid of candidate values for the number of input and output principal components. For each configuration, the emulation model is trained and its performance is measured on a held-out validation set. We then select the combination of numbers of input and output principal components which yields the best results on the validation set. As a last step, the selected model is retrained, now including the validation set data. Principal component analysis can be performed on arbitrary grids, which makes it equally applicable to the projected 2D data and the icosahedral representation.

2.2.5. Metrics

The metric we use for evaluating emulation approaches is the

![]() $ {R}^2 $

score, also called the “coefficient of determination,” which quantifies what fraction of the temporal variance in the test set is explained by the ML estimate in each grid box. The

$ {R}^2 $

score, also called the “coefficient of determination,” which quantifies what fraction of the temporal variance in the test set is explained by the ML estimate in each grid box. The

![]() $ {R}^2 $

score compares the

$ {R}^2 $

score compares the

![]() $ {\delta}^{18}\mathrm{O} $

ground truth

$ {\delta}^{18}\mathrm{O} $

ground truth

![]() $ {Y}_j $

and an estimate

$ {Y}_j $

and an estimate

![]() $ {\hat{Y}}_j $

in a given grid box

$ {\hat{Y}}_j $

in a given grid box

![]() $ j $

as

$ j $

as

$$ {R}^2\left({Y}_j,{\hat{Y}}_j\right)=1-\frac{\mathrm{MSE}\left({Y}_j,{\hat{Y}}_j\right)}{\sigma_j^2}, $$

$$ {R}^2\left({Y}_j,{\hat{Y}}_j\right)=1-\frac{\mathrm{MSE}\left({Y}_j,{\hat{Y}}_j\right)}{\sigma_j^2}, $$

where

![]() $ \mathrm{MSE}\left({Y}_j,{\hat{Y}}_j\right) $

is the mean squared error and

$ \mathrm{MSE}\left({Y}_j,{\hat{Y}}_j\right) $

is the mean squared error and

![]() $ {\sigma}_j^2 $

the variance of the test set ground truth, both taken over the time axis at grid box

$ {\sigma}_j^2 $

the variance of the test set ground truth, both taken over the time axis at grid box

![]() $ j $

. A value of

$ j $

. A value of

![]() $ {R}^2=1 $

indicates perfect emulation.

$ {R}^2=1 $

indicates perfect emulation.

![]() $ {R}^2=0 $

can, for instance, be obtained by a trivial baseline model that returns the true temporal mean at every time step. The score can become arbitrarily negative.

$ {R}^2=0 $

can, for instance, be obtained by a trivial baseline model that returns the true temporal mean at every time step. The score can become arbitrarily negative.

Additionally, we compute the Pearson correlation coefficient between the true and emulated time series at selected grid boxes. To choose time steps in which a method’s performance is particularly strong or weak, we calculate the anomaly correlation coefficient (ACC) between emulation and ground truth. ACC is defined as the Pearson correlation coefficient between the true and emulated anomaly patterns for a given time step. Anomalies are computed with respect to the training set mean.

If error intervals on performance metrics are given, they are

![]() $ 1\sigma $

intervals computed over a set of 10 runs, unless stated otherwise. Thus, the uncertainties only account for the uncertainty of the stochastic aspects of the ML model parameter optimization, disregarding any uncertainty that is related to the data.

$ 1\sigma $

intervals computed over a set of 10 runs, unless stated otherwise. Thus, the uncertainties only account for the uncertainty of the stochastic aspects of the ML model parameter optimization, disregarding any uncertainty that is related to the data.

Implementation details for training and configuration of the ML methods are provided in Supplementary Appendix A.1, and the code to reproduce our experiments is freely available at https://github.com/paleovar/isoEm/releases/v1.0.

3. Results

We structure the Results section as follows. First, we give a detailed spatiotemporal overview to illustrate the characteristics of the ML-based emulation results. To this purpose, we use the best-performing emulation method as an example. Subsequently, we compare emulation methods amongst each other, contrasting deep architectures and baselines as well as “flat” and “spherical” approaches. We follow up with a range of sensitivity experiments and conclude by conducting a cross-model experiment, that is, we train an ML model on data from one climate model and then use the trained model to emulate

![]() $ {\delta}^{18}\mathrm{O} $

in other climate model simulations.

$ {\delta}^{18}\mathrm{O} $

in other climate model simulations.

3.1. Spatiotemporal overview of emulation results

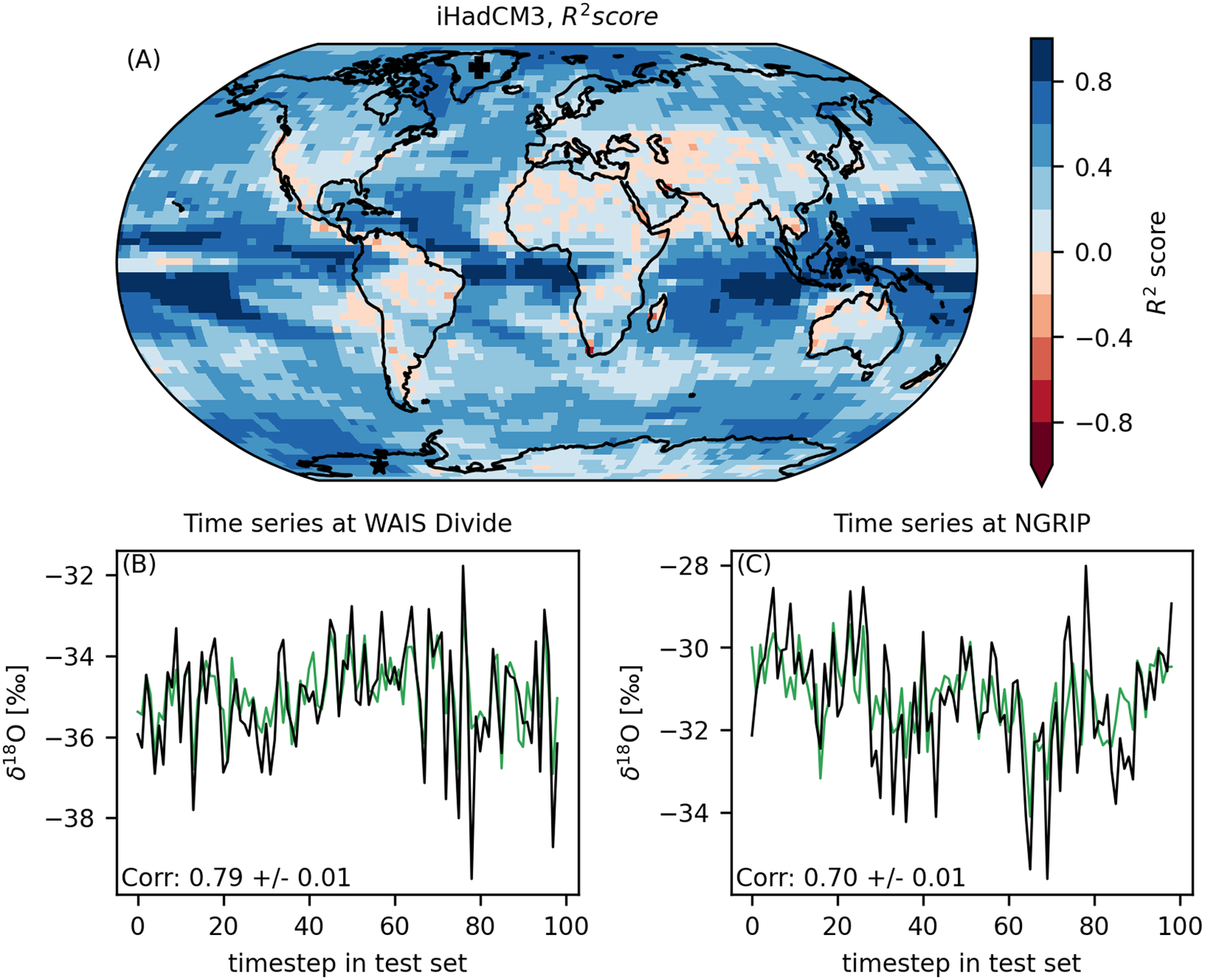

In Section 3.3, we will discover that the best-performing ML emulation method, a deeper version of the flat UNet architecture, reaches an average

![]() $ {R}^2 $

score of

$ {R}^2 $

score of

![]() $ 0.389\pm 0.006 $

on the plate carrée grid. This means that in the global average, almost

$ 0.389\pm 0.006 $

on the plate carrée grid. This means that in the global average, almost

![]() $ 40\% $

of the temporal variance in the test set is explained by our emulation on the interannual timescale. We use this best ML method to introduce spatial and temporal characteristics of the emulation.

$ 40\% $

of the temporal variance in the test set is explained by our emulation on the interannual timescale. We use this best ML method to introduce spatial and temporal characteristics of the emulation.

The prediction quality varies spatially, as shown in Figure 3a.

![]() $ {R}^2 $

scores of

$ {R}^2 $

scores of

![]() $ 0.6 $

or larger are reached in

$ 0.6 $

or larger are reached in

![]() $ 18.5\% $

of the grid cells, and

$ 18.5\% $

of the grid cells, and

![]() $ {R}^2\le 0 $

for only

$ {R}^2\le 0 $

for only

![]() $ 5.4\% $

of grid cells. The best results are achieved over tropical oceans, which are regions with strong correlations of

$ 5.4\% $

of grid cells. The best results are achieved over tropical oceans, which are regions with strong correlations of

![]() $ {\delta}^{18}\mathrm{O} $

and precipitation amounts. Performance is good over large parts of the Arctic and over western Antarctica as well, which is important because these regions are especially relevant for the comparison with

$ {\delta}^{18}\mathrm{O} $

and precipitation amounts. Performance is good over large parts of the Arctic and over western Antarctica as well, which is important because these regions are especially relevant for the comparison with

![]() $ {\delta}^{18}\mathrm{O} $

measurements from ice cores. We illustrate the performance in these regions by comparing emulated and ground truth time series in the grid boxes closest to two ice core drilling sites in panels b and c of Figure 3: the North Greenland Ice Core Project (“NGRIP,” 75.1° N, 42.3° W, North Greenland Ice Core Project Members, 2004) and the West Antarctic Ice Sheet Divide ice core project (“WAIS Divide,” 79.5° S, 112.1° W, Buizert et al., Reference Buizert, Cuffey, Severinghaus, Baggenstos, Fudge, Steig, Markle, Winstrup, Rhodes and Brook2015). For these drilling sites, the correlation between our emulation and the exact output time series of the isotope-enabled climate model exceeds

$ {\delta}^{18}\mathrm{O} $

measurements from ice cores. We illustrate the performance in these regions by comparing emulated and ground truth time series in the grid boxes closest to two ice core drilling sites in panels b and c of Figure 3: the North Greenland Ice Core Project (“NGRIP,” 75.1° N, 42.3° W, North Greenland Ice Core Project Members, 2004) and the West Antarctic Ice Sheet Divide ice core project (“WAIS Divide,” 79.5° S, 112.1° W, Buizert et al., Reference Buizert, Cuffey, Severinghaus, Baggenstos, Fudge, Steig, Markle, Winstrup, Rhodes and Brook2015). For these drilling sites, the correlation between our emulation and the exact output time series of the isotope-enabled climate model exceeds

![]() $ 0.7 $

.

$ 0.7 $

.

Figure 3. Test set emulation performance of the best ML emulation method. The bluer the colors, the better the emulation. Blue colors indicate regions in which the performance is better than a trivial baseline model that returns the correct test set mean at every time step. This plot displays the average of the

![]() $ {R}^2 $

scores over 10 runs. Additionally, we show the time series of the ML emulation (green, mean over ten runs) and the true simulation data (black) for grid boxes next to two ice core drilling sites. Panel (b) “NGRIP” (Greenland). Panel (c) “WAIS Divide” (West Antarctica).

$ {R}^2 $

scores over 10 runs. Additionally, we show the time series of the ML emulation (green, mean over ten runs) and the true simulation data (black) for grid boxes next to two ice core drilling sites. Panel (b) “NGRIP” (Greenland). Panel (c) “WAIS Divide” (West Antarctica).

In general, spatial variations in performance follow the correlation structure between

![]() $ {\delta}^{18}\mathrm{O} $

and the predictor variables (Figure 2c): in regions with strong absolute correlations between

$ {\delta}^{18}\mathrm{O} $

and the predictor variables (Figure 2c): in regions with strong absolute correlations between

![]() $ {\delta}^{18}\mathrm{O} $

and surface temperature or precipitation amount, the

$ {\delta}^{18}\mathrm{O} $

and surface temperature or precipitation amount, the

![]() $ {R}^2 $

scores are higher than in regions where none of the predictor variables is strongly correlated with

$ {R}^2 $

scores are higher than in regions where none of the predictor variables is strongly correlated with

![]() $ {\delta}^{18}\mathrm{O} $

. Thus, performance is worse over landmasses, especially in the low and mid-latitudes. Next, we visualize emulation and climate model output for individual time steps. For a year with typical emulator performanceFootnote

3, we plot emulated (panel a) and simulated (panel b) anomalies in Figure 4. We can see that the large-scale patterns match well between emulation and simulation: there are strong positive anomalies over the Arctic, related to positive temperature anomalies in this time step, and the large-scale structure over the Pacific is captured as well. Strong negative anomalies over parts of South America and northern India and Pakistan are reproduced. Emulation and ground truth simulation differ in their fine-scale structure: the ground truth is generally less smooth than the emulation and seems particularly noisy over some dry regions like the Sahara and the Arabic deserts. In these regions, there is a potential for numerical inaccuracies in the isotopic component of climate models, due to small abundances of each isotopic species, and it is hard to untangle which parts of the “noisy” signal have a climatic origin and which parts are simulation artifacts. A part of the overall smoother nature of the UNet regression results can be attributed to the MSE Loss giving a large (quadratic) penalty for strong deviations from the true values, thus, priming the network against predicting values in the tails of the distribution. Supplementary Figure A.4 compares emulation and simulation for three additional time steps: time steps in which the emulation works particularly well or poorly, and a climatically interesting year—1816 CE, the “year without a summer” (Luterbacher and Pfister, Reference Luterbacher and Pfister2015), which is caused by a volcanic eruption included in the volcanic forcing of the iHadCM3 simulation. For 1816 CE, we observe that the emulator reproduces a strong negative

$ {\delta}^{18}\mathrm{O} $

. Thus, performance is worse over landmasses, especially in the low and mid-latitudes. Next, we visualize emulation and climate model output for individual time steps. For a year with typical emulator performanceFootnote

3, we plot emulated (panel a) and simulated (panel b) anomalies in Figure 4. We can see that the large-scale patterns match well between emulation and simulation: there are strong positive anomalies over the Arctic, related to positive temperature anomalies in this time step, and the large-scale structure over the Pacific is captured as well. Strong negative anomalies over parts of South America and northern India and Pakistan are reproduced. Emulation and ground truth simulation differ in their fine-scale structure: the ground truth is generally less smooth than the emulation and seems particularly noisy over some dry regions like the Sahara and the Arabic deserts. In these regions, there is a potential for numerical inaccuracies in the isotopic component of climate models, due to small abundances of each isotopic species, and it is hard to untangle which parts of the “noisy” signal have a climatic origin and which parts are simulation artifacts. A part of the overall smoother nature of the UNet regression results can be attributed to the MSE Loss giving a large (quadratic) penalty for strong deviations from the true values, thus, priming the network against predicting values in the tails of the distribution. Supplementary Figure A.4 compares emulation and simulation for three additional time steps: time steps in which the emulation works particularly well or poorly, and a climatically interesting year—1816 CE, the “year without a summer” (Luterbacher and Pfister, Reference Luterbacher and Pfister2015), which is caused by a volcanic eruption included in the volcanic forcing of the iHadCM3 simulation. For 1816 CE, we observe that the emulator reproduces a strong negative

![]() $ {\delta}^{18}\mathrm{O} $

anomaly in regions where

$ {\delta}^{18}\mathrm{O} $

anomaly in regions where

![]() $ {\delta}^{18}\mathrm{O} $

is primarily influenced by temperature, namely in the Arctic, northern North America, and Siberia.

$ {\delta}^{18}\mathrm{O} $

is primarily influenced by temperature, namely in the Arctic, northern North America, and Siberia.

Figure 4. Typical emulation results on iHadCM3 dataset: we show anomalies as they are output by the ML emulator (“Emulation”) and the “true” result in the simulation data set (“Ground truth”). The anomalies are computed with respect to the training set mean. For the selected time step, the anomaly correlation coefficient (ACC) reaches its median value.

3.2. Comparing machine learning methods

The ML emulation models (UNet architectures and simpler baselines) differ in the quality of their emulation. In the following, we compare the methods amongst each other. For details on the training procedures, network architectures, and method implementations, see Supplementary Appendix A.1. We also address the question of whether using an inherently spherical approach is beneficial over treating the latitude-longitude grid as “flat.” However, the comparison is not trivial: the approaches are developed for data on different grids (plate carrée and icosahedral) and the necessary interpolations may deteriorate performance. Thus, we compute performances on both grids, interpolating the predictions from one grid to another. Results for the globally averaged

![]() $ {R}^2 $

scores are shown in Table 1. The best model in the comparison is the “modified” version of the flat UNet that includes the three modifications described in Section 2.2 (area-weighted loss, adapted padding, CoordConv). The effects of the individual modifications are detailed in Supplementary Table A.2 and Supplementary Figure A.6.

$ {R}^2 $

scores are shown in Table 1. The best model in the comparison is the “modified” version of the flat UNet that includes the three modifications described in Section 2.2 (area-weighted loss, adapted padding, CoordConv). The effects of the individual modifications are detailed in Supplementary Table A.2 and Supplementary Figure A.6.

Table 1. Globally averaged

![]() $ {R}^2 $

scores for the different ML emulation methods.

$ {R}^2 $

scores for the different ML emulation methods.

Note. Bold indicates the best performing methods (highest R 2 values) on each model grid (= each column). Results are calculated for the icosahedral grid that the method of Cohen et al. (Reference Cohen, Weiler, Kicanaoglu, Welling, Chaudhuri and Salakhutdinov2019) operates on and the plate carrée grid. When a method works with data on the other grid, the emulated data is interpolated. “Flat UNet, unmodified” and “Flat UNet, modified” refer to the flat network architecture described in Section 2.2.1, either not applying or applying the modifications to remedy projection artifacts described in that chapter.

All UNet architectures outperform all baseline architectures that operate on the same grid. The best UNet method explains

![]() $ 7\% $

more of the test set variance than the best baseline model, PCA regression. The other baseline models perform worse. In particular, it seems that the random forest baseline, which regresses on a pixel-to-pixel level is not able to capture the spatially varying relationships between

$ 7\% $

more of the test set variance than the best baseline model, PCA regression. The other baseline models perform worse. In particular, it seems that the random forest baseline, which regresses on a pixel-to-pixel level is not able to capture the spatially varying relationships between

![]() $ {\delta}^{18}\mathrm{O} $

and the predictor variables surface temperature and precipitation amount well enough, even when including coordinates as additional inputs. The spatial performance differences between the UNet methods and the best baselines are visualized in Supplementary Figure A.5. The improvements by the UNets are largest over oceans.

$ {\delta}^{18}\mathrm{O} $

and the predictor variables surface temperature and precipitation amount well enough, even when including coordinates as additional inputs. The spatial performance differences between the UNet methods and the best baselines are visualized in Supplementary Figure A.5. The improvements by the UNets are largest over oceans.

On the icosahedral grid, the icosahedral UNet and the modified flat UNet achieve

![]() $ {R}^2 $

scores that are not significantly different. On the plate carrée grid, however, the results of the icosahedral UNet are much worse. This drop can largely be attributed to the interpolation method (see Supplementary Figure A.7): on the plate carrée grid, neither training data nor results of the flat UNet are interpolated, while interpolations are necessary in both cases for the icosahedral UNet.

$ {R}^2 $

scores that are not significantly different. On the plate carrée grid, however, the results of the icosahedral UNet are much worse. This drop can largely be attributed to the interpolation method (see Supplementary Figure A.7): on the plate carrée grid, neither training data nor results of the flat UNet are interpolated, while interpolations are necessary in both cases for the icosahedral UNet.

3.3. Sensitivity experiments

We conduct a range of sensitivity experiments, to test (a) the influence of each predictor variable on the results, (b) whether we can further improve the performance of our ML method, and (c) whether emulation quality varies with timescale.

First, we use the modified flat UNet architecture as employed in Section 3.2 and test how the results differ if we exclude one of the predictor variables. The globally averaged

![]() $ {R}^2 $

score on the plate carrée grid drops from

$ {R}^2 $

score on the plate carrée grid drops from

![]() $ 0.377\pm 0.005 $

if both precipitation and temperature are used to

$ 0.377\pm 0.005 $

if both precipitation and temperature are used to

![]() $ 0.327\pm 0.006 $

when using only precipitation and to

$ 0.327\pm 0.006 $

when using only precipitation and to

![]() $ 0.251\pm 0.004 $

when only using temperature. The spatial differences in emulation quality follow the large-scale behavior of the correlation structure in panel c of Figure 2. When precipitation is excluded, the performance decreases most over low latitudes, while the

$ 0.251\pm 0.004 $

when only using temperature. The spatial differences in emulation quality follow the large-scale behavior of the correlation structure in panel c of Figure 2. When precipitation is excluded, the performance decreases most over low latitudes, while the

![]() $ {R}^2 $

score drops over polar regions without temperature. This is visualized in Supplementary Figure A.8.

$ {R}^2 $

score drops over polar regions without temperature. This is visualized in Supplementary Figure A.8.

To potentially improve the emulation results even further, we create variations of the modified flat UNet architecture: a “wider” version in which the number of computed features per network layer is doubled (

![]() $ {R}^2=0.386\pm 0.008 $

, plate carrée grid), and a “deeper” version with six additional network layersFootnote

4, which obtains

$ {R}^2=0.386\pm 0.008 $

, plate carrée grid), and a “deeper” version with six additional network layersFootnote

4, which obtains

![]() $ {R}^2=0.389\pm 0.006 $

(plate carrée grid), both improving over the default choice by roughly

$ {R}^2=0.389\pm 0.006 $

(plate carrée grid), both improving over the default choice by roughly

![]() $ 0.01 $

. Additionally, we test whether results could be improved by tuning the learning rate of the employed optimizer by testing a grid of 20 logarithmically spaced values between

$ 0.01 $

. Additionally, we test whether results could be improved by tuning the learning rate of the employed optimizer by testing a grid of 20 logarithmically spaced values between

![]() $ {10}^{-4} $

and

$ {10}^{-4} $

and

![]() $ {10}^{-1} $

. The performance is best for learning rates between

$ {10}^{-1} $

. The performance is best for learning rates between

![]() $ {10}^{-3} $

and

$ {10}^{-3} $

and

![]() $ {10}^{-2} $

. However, no substantial improvements over the default parameter choice were reached in the limited range of tested values.

$ {10}^{-2} $

. However, no substantial improvements over the default parameter choice were reached in the limited range of tested values.

The monthly timescale differs from the interannual scale by a pronounced seasonal cycle of

![]() $ {\delta}^{18}\mathrm{O} $

in many regions. Thus, even a simple climatology can explain a part of the variability in

$ {\delta}^{18}\mathrm{O} $

in many regions. Thus, even a simple climatology can explain a part of the variability in

![]() $ {\delta}^{18}\mathrm{O} $

. To exclude this trivially explainable part from the computation of the

$ {\delta}^{18}\mathrm{O} $

. To exclude this trivially explainable part from the computation of the

![]() $ {R}^2 $

score, we compute the score separately for each month. Results are similar to the results on the interannual scale with roughly

$ {R}^2 $

score, we compute the score separately for each month. Results are similar to the results on the interannual scale with roughly

![]() $ 40\% $

of variance explained. The higher time resolution suggests exploring whether the emulation can profit from taking the temporal context into account. We test this by including the temperature and precipitation of not only the current time step but also the previous month as inputs to the emulation of

$ 40\% $

of variance explained. The higher time resolution suggests exploring whether the emulation can profit from taking the temporal context into account. We test this by including the temperature and precipitation of not only the current time step but also the previous month as inputs to the emulation of

![]() $ {\delta}^{18}\mathrm{O} $

. Results do not improve strongly, however, possibly because the investigated timescale is still larger than the average atmospheric moisture residence time (Trenberth, Reference Trenberth1998).

$ {\delta}^{18}\mathrm{O} $

. Results do not improve strongly, however, possibly because the investigated timescale is still larger than the average atmospheric moisture residence time (Trenberth, Reference Trenberth1998).

3.4. Cross-model comparison

For practical applicability, it is essential that an emulator’s performance is robust under varying climatic conditions and under potential biases of the climate model that produces the training data for the emulator. We address these questions by testing how well our emulation generalizes to data generated with different climate models (iCESM, ECHAM5-wiso, isoGSM). To do so, we train the best model architecture so far, the deeper modified flat UNet, on data from iHadCM3. Subsequently, the trained network is used to emulate

![]() $ {\delta}^{18}\mathrm{O} $

for the test sets of the other climate model datasets. Results of the emulation are visualized in Figure 5. For all datasets the mean

$ {\delta}^{18}\mathrm{O} $

for the test sets of the other climate model datasets. Results of the emulation are visualized in Figure 5. For all datasets the mean

![]() $ {R}^2 $

score is positive, meaning that in the global average, the emulation is preferable to predicting the mean state of the corresponding training set. The

$ {R}^2 $

score is positive, meaning that in the global average, the emulation is preferable to predicting the mean state of the corresponding training set. The

![]() $ {R}^2 $

score is highest for the ECHAM5-wiso simulation and lowest for isoGSM, where

$ {R}^2 $

score is highest for the ECHAM5-wiso simulation and lowest for isoGSM, where

![]() $ 80\% $

less variance is explained than on iHadCM3.

$ 80\% $

less variance is explained than on iHadCM3.

Figure 5. Results for the cross-prediction task: a UNet is trained on the iHadCM3 training data set. The performance is then evaluated on the test set of various climate models; shown

![]() $ {R}^2 $

scores are averages over 10 runs.

$ {R}^2 $

scores are averages over 10 runs.

In all three cross-prediction cases, the performance drops strongly in the Pacific Ocean west of South America, a region that is important for the El Niño–Southern Oscillation (ENSO). This might hint at inter-model differences in the spatial pattern of ENSO variability. For isoGSM, the emulation quality over Antarctica is considerably worse than for all other models. The Antarctic in isoGSM is much less depleted in

![]() $ {\delta}^{18}\mathrm{O} $

(less negative

$ {\delta}^{18}\mathrm{O} $

(less negative

![]() $ {\delta}^{18}\mathrm{O} $

) than in the other models while showing similar equator-to-pole temperature gradients (Bühler et al., Reference Bühler, Axelsson, Lechleitner, Fohlmeister, LeGrande, Midhun, Sjolte, Werner, Yoshimura and Rehfeld2022). This can potentially impact the relationship between the temporal variations of temperature and

$ {\delta}^{18}\mathrm{O} $

) than in the other models while showing similar equator-to-pole temperature gradients (Bühler et al., Reference Bühler, Axelsson, Lechleitner, Fohlmeister, LeGrande, Midhun, Sjolte, Werner, Yoshimura and Rehfeld2022). This can potentially impact the relationship between the temporal variations of temperature and

![]() $ {\delta}^{18}\mathrm{O} $

.

$ {\delta}^{18}\mathrm{O} $

.

For isoGSM and iCESM,

![]() $ {R}^2 $

is negative over large areas of the mid-latitude oceans. As synoptic-scale variability of moisture transport pathways might be an important factor for

$ {R}^2 $

is negative over large areas of the mid-latitude oceans. As synoptic-scale variability of moisture transport pathways might be an important factor for

![]() $ {\delta}^{18}\mathrm{O} $

in the mid-latitudes, adding predictor variables that encode information on the atmospheric circulation in the respective models could improve the results. The independence of the isoGSM and iCESM runs in these regions must be assessed carefully: isoGSM is forced by sea-surface temperatures and sea-ice distributions of a last-millennium run with CCSM4, which is a predecessor model of iCESM. Therefore, characteristics of iCESM might also be present in the isoGSM results.

$ {\delta}^{18}\mathrm{O} $

in the mid-latitudes, adding predictor variables that encode information on the atmospheric circulation in the respective models could improve the results. The independence of the isoGSM and iCESM runs in these regions must be assessed carefully: isoGSM is forced by sea-surface temperatures and sea-ice distributions of a last-millennium run with CCSM4, which is a predecessor model of iCESM. Therefore, characteristics of iCESM might also be present in the isoGSM results.

We also test how well the baseline ML models generalize when employed to estimate

![]() $ {\delta}^{18}\mathrm{O} $

for other climate models. The very simplistic pixel-wise linear regression yields better results than the PCA regression baseline. Supplementary Figure A.9 shows the cross-model performance of the linear regression baseline. While the

$ {\delta}^{18}\mathrm{O} $

for other climate models. The very simplistic pixel-wise linear regression yields better results than the PCA regression baseline. Supplementary Figure A.9 shows the cross-model performance of the linear regression baseline. While the

![]() $ {R}^2 $

score for iHadCM3 itself is significantly smaller than for the UNet model, the loss of performance when doing cross-prediction is much smaller. For iCESM, the results are even better than those obtained with the UNet model. Especially over mid-latitude oceans, the

$ {R}^2 $

score for iHadCM3 itself is significantly smaller than for the UNet model, the loss of performance when doing cross-prediction is much smaller. For iCESM, the results are even better than those obtained with the UNet model. Especially over mid-latitude oceans, the

![]() $ {R}^2 $

scores of the linear regression are better than the ones obtained with the UNet.

$ {R}^2 $

scores of the linear regression are better than the ones obtained with the UNet.

4. Discussion

In a first step toward data-driven emulation of water-isotope variability in precipitation from standard climate model output variables, we show that in a simulated dataset

![]() $ 40\% $

of the interannual

$ 40\% $

of the interannual

![]() $ {\delta}^{18}\mathrm{O} $

variance can be explained by ML models. The emulation quality follows patterns of the correlation between

$ {\delta}^{18}\mathrm{O} $

variance can be explained by ML models. The emulation quality follows patterns of the correlation between

![]() $ {\delta}^{18}\mathrm{O} $

and the predictor variables, precipitation amount and surface temperature. This hints at the possibility of further improving the emulation by including other variables that are statistically connected to

$ {\delta}^{18}\mathrm{O} $

and the predictor variables, precipitation amount and surface temperature. This hints at the possibility of further improving the emulation by including other variables that are statistically connected to

![]() $ {\delta}^{18}\mathrm{O} $

as predictors.

$ {\delta}^{18}\mathrm{O} $

as predictors.

![]() $ {\delta}^{18}\mathrm{O} $

composition depends on atmospheric moisture transport, which in turn, depends on atmospheric circulation. Thus, variables encoding information on atmospheric circulation, such as sea-level pressure, are promising candidates which should be explored in future research. This could be particularly relevant in the mid-latitudes, where the comparably poor performance of the emulators might be due to synoptic-scale moisture transport variability which is not well captured by annual or monthly means of precipitation and temperature. In addition, relative humidity seems a promising candidate as it is important for the evolution of

$ {\delta}^{18}\mathrm{O} $

composition depends on atmospheric moisture transport, which in turn, depends on atmospheric circulation. Thus, variables encoding information on atmospheric circulation, such as sea-level pressure, are promising candidates which should be explored in future research. This could be particularly relevant in the mid-latitudes, where the comparably poor performance of the emulators might be due to synoptic-scale moisture transport variability which is not well captured by annual or monthly means of precipitation and temperature. In addition, relative humidity seems a promising candidate as it is important for the evolution of

![]() $ {\delta}^{18}\mathrm{O} $

during the evaporation process.

$ {\delta}^{18}\mathrm{O} $

during the evaporation process.

It should be noted that correlation structures between predictor variables and

![]() $ {\delta}^{18}\mathrm{O} $

are likely timescale dependent. Our results suggest that temperature, precipitation, and atmospheric circulation variations due to internal variability in the climate system and short-scale external forcing such as volcanic eruptions and solar variability are the most important factors controlling interannual

$ {\delta}^{18}\mathrm{O} $

are likely timescale dependent. Our results suggest that temperature, precipitation, and atmospheric circulation variations due to internal variability in the climate system and short-scale external forcing such as volcanic eruptions and solar variability are the most important factors controlling interannual

![]() $ {\delta}^{18}\mathrm{O} $

variability. On the other hand, changes in long-term external forcings such as greenhouse gas concentrations and Earth’s orbital configuration, and variations in oceanic circulation have been found to explain

$ {\delta}^{18}\mathrm{O} $

variability. On the other hand, changes in long-term external forcings such as greenhouse gas concentrations and Earth’s orbital configuration, and variations in oceanic circulation have been found to explain

![]() $ {\delta}^{18}\mathrm{O} $

changes on millennial and orbital (10,000 years and longer) timescales (He et al., Reference He, Liu, Otto-Bliesner, Brady, Zhu, Tomas, Clark, Zhu, Jahn, Gu, Zhang, Nusbaumer, Noone, Cheng, Wang, Yan and Bao2021). This varying importance of factors controlling climate variations can also result in timescale-dependent relationships between the predictor variables surface temperature and precipitation amount (Rehfeld and Laepple, Reference Rehfeld and Laepple2016), which limits the generalization of emulators between timescales. Meanwhile, on timescales from hours to weeks, the memory in the atmosphere is higher. Thus, taking into account previous time steps and explicitly tracking moisture pathways, for example, in tropical or extratropical cyclones could improve the emulation performance. On these timescales, ML methods to model sequences of data, like long short-term memory (LSTM), recurrent neural networks (RNNs), or transformer models could be good alternatives.

$ {\delta}^{18}\mathrm{O} $

changes on millennial and orbital (10,000 years and longer) timescales (He et al., Reference He, Liu, Otto-Bliesner, Brady, Zhu, Tomas, Clark, Zhu, Jahn, Gu, Zhang, Nusbaumer, Noone, Cheng, Wang, Yan and Bao2021). This varying importance of factors controlling climate variations can also result in timescale-dependent relationships between the predictor variables surface temperature and precipitation amount (Rehfeld and Laepple, Reference Rehfeld and Laepple2016), which limits the generalization of emulators between timescales. Meanwhile, on timescales from hours to weeks, the memory in the atmosphere is higher. Thus, taking into account previous time steps and explicitly tracking moisture pathways, for example, in tropical or extratropical cyclones could improve the emulation performance. On these timescales, ML methods to model sequences of data, like long short-term memory (LSTM), recurrent neural networks (RNNs), or transformer models could be good alternatives.

A tested spherical CNN architecture shows no clear benefit over a modified version of the standard flat UNet for our task of emulating

![]() $ {\delta}^{18}\mathrm{O} $

in precipitation globally. We suppose that this is partly due to the strong latitudinal dependence of the statistical relationships between

$ {\delta}^{18}\mathrm{O} $

in precipitation globally. We suppose that this is partly due to the strong latitudinal dependence of the statistical relationships between

![]() $ {\delta}^{18}\mathrm{O} $