Part I Introduction

1 Statement of results and history

Let $k \geqslant 3$ be a positive integer. Write $w(3,k)$ (sometimes written $w(2;3,k)$) for the smallest N such that the following is true: however $[N] = \{1,\dots , N\}$ is coloured blue and red, there is either a blue 3-term arithmetic progression or a red k-term arithmetic progression. The celebrated theorem of van der Waerden implies that $w(3,k)$ is finite; the best upper bound currently known is due to Schoen [Reference Schoen19], who proved that for large k, one has $w(3,k) < e^{k^{1 - c}}$ for some constant $c> 0$. This also follows from the celebrated recent work of Bloom and Sisask [Reference Bloom and Sisask4] on bounds for Roth’s theorem.

There is some literature on lower bounds for $w(3,k)$. Brown, Landman and Robertson [Reference Brown, Landman and Robertson5] showed that $w(3,k) \gg k^{2 - \frac {1}{\log \log k}}$, and this was subsequently improved by Li and Shu [Reference Li and Shu16] to $w(3,k) \gg (k/\log k)^2$, the best bound currently in the literature. Both of these papers use probabilistic arguments based on the Lovász Local Lemma.

Computation or estimation of ![]() $w(3,k)$ for small values of k has attracted the interest of computationally inclined mathematicians. In [Reference Brown, Landman and Robertson5], one finds, for instance, that

$w(3,k)$ for small values of k has attracted the interest of computationally inclined mathematicians. In [Reference Brown, Landman and Robertson5], one finds, for instance, that ![]() $w(3,10) = 97$, whilst in Ahmed, Kullmann and Snevily [Reference Ahmed, Kullmann and Snevily1], one finds the lower bound

$w(3,10) = 97$, whilst in Ahmed, Kullmann and Snevily [Reference Ahmed, Kullmann and Snevily1], one finds the lower bound ![]() $w(3,20) \geqslant 389$ (conjectured to be sharp) as well as

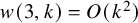

$w(3,20) \geqslant 389$ (conjectured to be sharp) as well as ![]() $w(3,30) \geqslant 903$. This data suggests a quadratic rate of growth, and indeed Li and Shu state as an open problem to prove or disprove that

$w(3,30) \geqslant 903$. This data suggests a quadratic rate of growth, and indeed Li and Shu state as an open problem to prove or disprove that ![]() $w(3,k) \geqslant c k^2$, whilst in [Reference Ahmed, Kullmann and Snevily1] it is conjectured that

$w(3,k) \geqslant c k^2$, whilst in [Reference Ahmed, Kullmann and Snevily1] it is conjectured that ![]() $w(3,k) = O(k^2)$. Brown, Landman and Robertson are a little more circumspect and merely say that it is ‘of particular interest whether or not there is a polynomial bound for

$w(3,k) = O(k^2)$. Brown, Landman and Robertson are a little more circumspect and merely say that it is ‘of particular interest whether or not there is a polynomial bound for ![]() $w(3,k)$’. I should also admit that I suggested the plausibility of a quadratic bound myself [Reference Green12, Problem 14].

$w(3,k)$’. I should also admit that I suggested the plausibility of a quadratic bound myself [Reference Green12, Problem 14].
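For very small k the definition of $w(3,k)$ can be checked by exhaustive search. The Python sketch below is an illustration only (the function names `has_good_colouring` and `w3` are hypothetical, and the search is far too slow for values such as $w(3,10)$): it colours $1, 2, \dots$ in turn, backtracking whenever a colour would complete a blue 3-term or red k-term progression whose largest term is the current point. For k = 3, 4 it should reproduce the classical values $w(3,3) = 9$ and $w(3,4) = 18$.

```python
def has_good_colouring(n: int, k: int) -> bool:
    """Return True if [n] has a blue/red colouring with no blue 3-AP and no red k-AP."""
    colour = [""] * (n + 1)  # colour[i] is "B" or "R"; index 0 is unused

    def completes_ap(i: int, c: str, length: int) -> bool:
        # Would colouring i with c complete a monochromatic progression of the
        # given length whose largest term is i? (Points 1..i-1 are already coloured.)
        d = 1
        while i - (length - 1) * d >= 1:
            if all(colour[i - j * d] == c for j in range(1, length)):
                return True
            d += 1
        return False

    def extend(i: int) -> bool:
        # Try to colour i, i+1, ..., n, backtracking on failure.
        if i > n:
            return True
        for c, length in (("B", 3), ("R", k)):
            if not completes_ap(i, c, length):
                colour[i] = c
                if extend(i + 1):
                    return True
        colour[i] = ""
        return False

    return extend(1)


def w3(k: int) -> int:
    """Smallest N such that every blue/red colouring of [N] has a blue 3-AP or a red k-AP."""
    n = 1
    while has_good_colouring(n, k):
        n += 1
    return n


for k in (3, 4):
    print(f"w(3,{k}) = {w3(k)}")
```

Because points are coloured in increasing order, it suffices to check progressions ending at the newly coloured point, which keeps each step cheap.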

The main result in this paper shows that, in fact, there is no such bound.

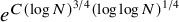

Theorem 1.1. There is a blue-red colouring of ![]() $[N]$ with no blue 3-term progression and no red progression of length

$[N]$ with no blue 3-term progression and no red progression of length ![]() $e^{C(\log N)^{3/4}(\log \log N)^{1/4}}$. Consequently, we have the bound

$e^{C(\log N)^{3/4}(\log \log N)^{1/4}}$. Consequently, we have the bound ![]() $w(3,k) \geqslant k^{b(k)}$, where

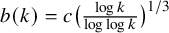

$w(3,k) \geqslant k^{b(k)}$, where ![]() $b(k) = c \big ( \frac {\log k}{\log \log k} \big )^{1/3}$.

$b(k) = c \big ( \frac {\log k}{\log \log k} \big )^{1/3}$.

Update, June 2022: Nine months after the arXiv version of this paper was made public, Zachary Hunter [Reference Hunter14] was able to simplify parts of the argument and at the same time improve the lower bound to $w(3,k) \geqslant k^{b'(k)}$, where $b'(k) = c \frac {\log k}{\log \log k}$.

2 Overview and structure of the paper

2.1 Discussion and overview

I first heard the question of whether or not $w(3,k) = O(k^2)$ from Ron Graham in around 2004. My initial reaction was that surely this must be false, for the following reason: take a large subset of $[N]$ free of 3-term progressions, and colour it blue. Then the complement of this set probably does not have overly long red progressions. However, by considering the known examples of large sets free of 3-term progressions, one swiftly becomes less optimistic about this strategy.

Example 1. If we take the blue points to be the folklore example $\{ \sum _i a_i 3^i : a_i \in \{0,1\}\} \cap [N]$, then the set of red points contains a progression of linear length, namely $\{ n \equiv 2 (\operatorname {mod}\, 3)\}$.
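A quick numerical check of Example 1 (a sketch; `ternary_01_set` and `has_3ap` are hypothetical helpers). The blue set has no 3-term progression because adding two numbers whose base-3 digits lie in $\{0,1\}$ produces no carries, and every blue point has last ternary digit 0 or 1, so the whole residue class $2 \pmod 3$ is red.

```python
def ternary_01_set(N: int) -> set[int]:
    """Elements of [N] whose base-3 digits are all 0 or 1."""
    def ok(n: int) -> bool:
        while n:
            if n % 3 == 2:
                return False
            n //= 3
        return True
    return {n for n in range(1, N + 1) if ok(n)}


def has_3ap(S: set[int]) -> bool:
    """Brute-force search for a, b, 2b - a in S with a < b."""
    elems = sorted(S)
    return any(2 * b - a in S for i, a in enumerate(elems) for b in elems[i + 1:])


N = 3 ** 7
blue = ternary_01_set(N)
red_ap = range(2, N + 1, 3)  # the residue class 2 mod 3 inside [N]
print(has_3ap(blue), blue.isdisjoint(red_ap), len(red_ap))
```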

Example 2. If we take the blue points to be the Salem-Spencer set [Reference Salem and Spencer18] or the Behrend set [Reference Behrend3], then one runs into similar issues. Both sets consist of points $a_1 + a_2 (2d-1) + \dots + a_n (2d - 1)^{n-1}$ with all $a_i \in \{0,1,\dots , d-1\}$, and for such sets the red set will contain progressions such as $\{ n \equiv d (\operatorname {mod}\, 2d-1)\}$. If $N := d(2d - 1)^n$, so that the set is contained in $[N]$, the length of such a red progression is $\sim N/d$. In the Behrend case, one takes $\log d \sim \sqrt {\log N}$, so this is very long.
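The same two facts can be checked for a small Behrend-type set. This is a sketch with the hypothetical helper `behrend_set`, which takes the largest ‘sphere’ $\sum_i a_i^2 = \text{const}$ in the digit box for the illustrative choice d = 3, n = 4. Digits below d add without carries in base $2d-1$, which is why a sphere has no 3-term progressions, and every element is congruent to its first digit $a_1 \leqslant d-1$ modulo $2d-1$, so the class $d \pmod{2d-1}$ is entirely red.

```python
from itertools import product


def behrend_set(d: int, n: int) -> set[int]:
    """Largest sphere sum(a_i^2) = R among a_1 + a_2 (2d-1) + ... + a_n (2d-1)^(n-1), 0 <= a_i < d."""
    base = 2 * d - 1
    spheres: dict[int, set[int]] = {}
    for digits in product(range(d), repeat=n):
        value = sum(a * base ** i for i, a in enumerate(digits))
        spheres.setdefault(sum(a * a for a in digits), set()).add(value)
    return max(spheres.values(), key=len)


def has_3ap(S: set[int]) -> bool:
    """Brute-force search for a, b, 2b - a in S with a < b."""
    elems = sorted(S)
    return any(2 * b - a in S for i, a in enumerate(elems) for b in elems[i + 1:])


d, n = 3, 4
N = d * (2 * d - 1) ** n
blue = behrend_set(d, n)
red_ap = range(d, N + 1, 2 * d - 1)  # the residue class d mod 2d-1 inside [N]
print(len(blue), has_3ap(blue), blue.isdisjoint(red_ap), len(red_ap))
```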

Example 3. One runs into an apparently different kind of obstacle (although it is closely related) when considering the variant of Behrend’s construction due to Julia Wolf and myself [Reference Green and Wolf11]. (The bound in [Reference Green and Wolf11] was previously obtained by Elkin [Reference Elkin7], but the method of construction in [Reference Green and Wolf11] was different.) Roughly speaking, this construction proceeds as follows. Pick a dimension D, and consider the torus $\mathbf {T}^D = \mathbf {R}^D/\mathbf {Z}^D$. In this torus, consider a thin annulus, the projection $\pi (A)$ of the set $A = \{ x \in \mathbf {R}^D : \frac {1}{4} - N^{-4/D} \leqslant \Vert x \Vert _2 \leqslant \frac {1}{4}\}$ under the natural map. Pick a rotation $\theta \in \mathbf {T}^D$ at random, and define the blue points to be $\{ n \in [N] : \theta n \in \pi (A)\}$. By simple geometry, one can show that the only 3-term progressions in A are those with very small common difference v, $\Vert v \Vert _2 \ll N^{-2/D}$: if $x - v, x, x + v \in A$, then the parallelogram law gives $2\Vert v \Vert_2^2 = \Vert x + v \Vert_2^2 + \Vert x - v \Vert_2^2 - 2\Vert x \Vert_2^2 \leqslant 2\big(\frac{1}{4}\big)^2 - 2\big(\frac{1}{4} - N^{-4/D}\big)^2 \leqslant N^{-4/D}$. This property transfers to $\pi (A)$, essentially because by choosing $\frac {1}{4}$ as the radius of the annulus, one eliminates ‘wraparound effects’, which could have generated new progressions under the projection $\pi $. Due to the choice of parameters, it turns out that with very high probability, there are no blue 3-term progressions at all.

Let us now consider red progressions. Suppose that $\theta = (\theta _1,\dots , \theta _D)$, and think of D as fixed, with N large. By Dirichlet’s theorem, there is some $d \leqslant \sqrt {N}$ such that $\Vert \theta _1 d \Vert _{\mathbf {T}} \leqslant 1/\sqrt {N}$, where $\Vert \cdot \Vert _{\mathbf {T}}$ denotes the distance to the nearest integer. Since $\theta $ is chosen randomly, the sequence $(\theta n)_{n = 1}^{\infty }$ will be highly equidistributed, and one certainly expects to find an $n_0 = O_D(1)$ such that $\theta n_0 \approx (\frac {1}{2},\dots , \frac {1}{2})$. If one then considers the progression $P = \{ n_0 + nd : n \leqslant \sqrt {N}/10\}$ (say), one sees that $P \subset [N]$ and $\Vert \theta _1 (n_0 + nd) - \frac {1}{2} \Vert _{\mathbf {T}} < \frac {1}{4}$ for all $n \leqslant \sqrt {N}/10$. That is, for every $m \in P$ the point $\theta m$ avoids the annulus $\pi (A)$ (and in fact the whole ball of radius $\frac {1}{4}$), since its first coordinate is confined to a narrow interval about $\frac {1}{2}$. Therefore, P is coloured entirely red.
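The red progression of Example 3 can be reproduced numerically. The sketch below assumes D = 4, $N = 10^6$ and a pseudo-random θ seeded for reproducibility; `is_blue` and `dirichlet_red_progression` are hypothetical names. It finds $d \leqslant \sqrt{N}$ by brute force rather than by continued fractions, and picks the smallest $n_0$ with $\Vert \theta_1 n_0 - \frac12 \Vert_{\mathbf T} \leqslant \frac{1}{10}$, since only the first coordinate matters for the argument.

```python
import math
import random


def dist_to_int(x: float) -> float:
    """||x||_T: distance from x to the nearest integer."""
    return abs(x - round(x))


def is_blue(n: int, theta: list[float], N: int) -> bool:
    """True if theta*n lies in the projected annulus 1/4 - N^(-4/D) <= |x|_2 <= 1/4."""
    D = len(theta)
    # Representative of theta*n in [-1/2, 1/2]^D; the ball of radius 1/4 sits
    # inside this cube, so membership can be read off from this representative.
    rep = [t * n - round(t * n) for t in theta]
    radius = math.sqrt(sum(c * c for c in rep))
    return 0.25 - N ** (-4 / D) <= radius <= 0.25


def dirichlet_red_progression(theta: list[float], N: int) -> list[int]:
    """The progression {n0 + j d : 0 <= j < sqrt(N)/10} from the Example 3 argument."""
    Q = math.isqrt(N)
    # Dirichlet: some 1 <= d <= Q has ||theta_1 d||_T < 1/(Q+1) <= 1/sqrt(N).
    d = min(range(1, Q + 1), key=lambda m: dist_to_int(theta[0] * m))
    # A small n0 with theta_1 n0 within 1/10 of 1/2 (mod 1).
    n0 = next(m for m in range(1, N + 1) if dist_to_int(theta[0] * m - 0.5) <= 0.1)
    # Then ||theta_1 (n0 + j d) - 1/2||_T < 1/10 + 1/10 < 1/4 for every j used here.
    return [n0 + j * d for j in range(Q // 10)]


rng = random.Random(1)
N = 10 ** 6
theta = [rng.random() for _ in range(4)]
P = dirichlet_red_progression(theta, N)
print(len(P), any(is_blue(m, theta, N) for m in P))
```

The first coordinate of $\theta m$ stays more than $\frac14$ from 0 in $\mathbf{T}$, so the whole progression misses the annulus, exactly as in the argument above.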

This last example does rather better than Examples 1 and 2, and it is the point of departure for the construction in this paper. Note that, in Example 3, the progression P of length $\gg \sqrt {N}$ that we found is not likely to be the only one. One could instead apply Dirichlet’s theorem to any of the other coordinates $\theta _2,\dots , \theta _D$. Moreover, one could also apply it to $\theta _1 + \theta _2$, noting that points $x \in \pi (A)$ satisfy $\Vert x_1 + x_2 \Vert _{\mathbf {T}} \leqslant \frac {\sqrt {2}}{4}$ and so avoid a narrow ‘strip’ $\Vert x_1 + x_2 - \frac {1}{2} \Vert _{\mathbf {T}} < \frac {1}{2} - \frac {\sqrt {2}}{4}$, or to $\theta _1 + \theta _2 + \theta _3$, noting that points $x \in \pi (A)$ satisfy $\Vert x_1 + x_2 + x_3 \Vert _{\mathbf {T}} \leqslant \frac {\sqrt {3}}{4}$ and so avoid a narrow strip $\Vert x_1 + x_2 + x_3 - \frac {1}{2} \Vert _{\mathbf {T}} < \frac {1}{2} - \frac {\sqrt {3}}{4}$. However, this no longer applies to $\theta _1 + \theta _2 + \theta _3 + \theta _4$, since the relevant strip has zero width.
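The bounds on $x_1 + \dots + x_m$ used here come from Cauchy–Schwarz. Writing x for the representative of a point of $\pi(A)$ with $\Vert x \Vert_2 \leqslant \frac14$:

```latex
\begin{align*}
|x_1 + \dots + x_m| &\leqslant \sqrt{m}\,\Vert x \Vert_2 \leqslant \tfrac{\sqrt{m}}{4}, \\
\Big\Vert x_1 + \dots + x_m - \tfrac12 \Big\Vert_{\mathbf{T}}
  &\geqslant \tfrac12 - \Vert x_1 + \dots + x_m \Vert_{\mathbf{T}}
  \geqslant \tfrac12 - \tfrac{\sqrt{m}}{4}.
\end{align*}
```

The avoided strip therefore has positive half-width $\frac12 - \frac{\sqrt m}{4}$ for m = 1, 2, 3 (namely $0.25$, about $0.146$ and about $0.067$), and zero width for m = 4.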

This discussion suggests the following key idea: instead of one annulus $\pi (A)$, we could try taking several, in such a way that every strip like the ones just discussed intersects at least one of these annuli. This then blocks all the ‘obvious’ ways of making red progressions of length $\sim \sqrt {N}$. Of course, one then runs the risk of introducing blue 3-term progressions. However, by shrinking the radii of the annuli to some $\rho \lll 1$, a suitable construction may be achieved by picking the annuli to be random translates of a fixed one.

At this point we have an annulus $A = \{ x \in \mathbf {R}^D : \rho - N^{-4/D} \leqslant \Vert x \Vert _2 \leqslant \rho \}$ together with a union of translates $S := \bigcup _{i=1}^M (x_i + \pi (A)) \subset \mathbf {T}^D$. Pick $\theta \in \mathbf {T}^D$ at random, and colour blue those $n \leqslant N$ for which $\theta n \in S$. As we have stated, it is possible to show that (with a suitable choice of $\rho $, and for random translates $x_1,\dots , x_M$ with M chosen correctly) there are likely to be no blue 3-term progressions. Moreover, the obvious examples of red progressions of length $\sim \sqrt {N}$ coming from Dirichlet’s theorem are blocked.
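A sketch of the colouring just described, for concreteness. The centres $x_i$, the radius ρ and θ are supplied by the caller (the actual choices are made in Section 4 and are not reproduced here), the helper names are hypothetical, and we assume $\rho < \frac12$, so that membership in $x_i + \pi(A)$ can be read off from the torus distance between the point and $x_i$.

```python
import math


def torus_dist(y: list[float], x: list[float]) -> float:
    """l^2 distance from y to x in T^D = R^D / Z^D (nearest lift in each coordinate)."""
    diff = [(a - b) - round(a - b) for a, b in zip(y, x)]
    return math.sqrt(sum(c * c for c in diff))


def in_S(y: list[float], centres: list[list[float]], rho: float, width: float) -> bool:
    """Is y in S = union of x_i + pi(A), where A = {x : rho - width <= |x|_2 <= rho}?"""
    return any(rho - width <= torus_dist(y, x) <= rho for x in centres)


def blue_points(N: int, theta: list[float], centres: list[list[float]], rho: float) -> list[int]:
    """The n <= N with theta*n in S, using the annulus width N^(-4/D) from the text."""
    width = N ** (-4 / len(theta))
    return [n for n in range(1, N + 1)
            if in_S([t * n % 1.0 for t in theta], centres, rho, width)]
```

For instance, with centres $(0,0)$ and $(\frac12,\frac12)$, ρ = 0.1 and width 0.01, the point $(0.905, 0)$ lies in S because it wraps around to distance 0.095 from the origin.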

Now one may also apply Dirichlet’s theorem to pairs of frequencies, for instance producing $d \leqslant N^{2/3}$ such that $\Vert \theta _1 d\Vert _{\mathbf {T}}, \Vert \theta _2 d \Vert _{\mathbf {T}} \leqslant N^{-1/3}$ and thereby potentially creating red progressions of length $\sim N^{1/3}$ unless they too are blocked by the union of annuli. To obstruct these, one needs to consider ‘strips’ of codimension $2$, for instance given by conditions such as $x_1, x_2 \approx \frac {1}{2}$. Similarly, to avoid progressions of length $\sim N^{1/4}$, one must ensure that strips of codimension $3$ are blocked, and so on.
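The simultaneous version of Dirichlet’s theorem behind these progressions is a pigeonhole argument: among the $Q^m + 1$ points $(\theta_1 d, \dots, \theta_m d)$, $0 \leqslant d \leqslant Q^m$, two land in the same box of side $1/Q$, so some $1 \leqslant d \leqslant Q^m$ has $\Vert \theta_i d \Vert_{\mathbf T} < 1/Q$ for all i. With $N = Q^{m+1}$ this gives $d \leqslant N^{m/(m+1)}$ and a red progression of length about $N^{1/(m+1)}$ unless the corresponding codimension-m strip is blocked. The brute-force search below is a sketch; the name `simultaneous_dirichlet` is hypothetical.

```python
def dist_to_int(x: float) -> float:
    """||x||_T: distance from x to the nearest integer."""
    return abs(x - round(x))


def simultaneous_dirichlet(thetas: list[float], Q: int) -> int:
    """Return 1 <= d <= Q**m minimising max_i ||theta_i d||_T, where m = len(thetas).

    By the pigeonhole argument the minimum is < 1/Q.
    """
    m = len(thetas)
    return min(range(1, Q ** m + 1),
               key=lambda d: max(dist_to_int(t * d) for t in thetas))


d = simultaneous_dirichlet([0.41421356, 0.73205081], 10)  # m = 2, so d <= 100
print(d, [dist_to_int(t * d) for t in (0.41421356, 0.73205081)])
```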

Using these ideas, one can produce, for arbitrarily large values of r, a red–blue colouring of $[N]$ with no blue 3-term progression and no obvious way to make a red progression of length $N^{1/r}$. Of course, this is by no means a proof that there are no such red progressions!

Let us now discuss a further difficulty that arises when one tries to show that there are no long red progressions. Consider the most basic progression $P = \{ 1, 2,\dots , X\}$, $X = N^{1/r}$, together with the task of showing it has at least one blue point. Suppose that $x_1$ (the centre of the first annulus in S) is equal to $0 \in \mathbf {T}^D$. Then, since P is ‘centred’ on $0$, it is natural to try to show that $\{ \theta , 2\theta ,\dots , X \theta \}$ intersects the annulus $\pi (A)$ with centre $x_1 = 0$, or in other words to show that there is $n \leqslant X$ such that

$$\rho - N^{-4/D} \leqslant \Vert n \theta \Vert \leqslant \rho, \qquad (2.1)$$

where $\Vert y \Vert$ denotes the $\ell^2$-distance from $y \in \mathbf{T}^D$ to $0$.

The general flavour of this problem is to show that a certain ‘quadratic form’ takes at least one value in a rather small interval. However, what we have is not a bona fide quadratic form. To make it look like one, we apply standard geometry of numbers techniques to put a multidimensional structure on the Bohr set of n such that $\Vert n \theta _1 \Vert _{\mathbf {T}},\dots , \Vert n \theta _D \Vert _{\mathbf {T}} \leqslant \frac {1}{10}$ (say). This gives, inside the set of such n, a multidimensional progression $\{ \ell _1 n_1 + \dots + \ell _{D+1} n_{D+1} : 0 \leqslant \ell _i < L_i\}$ for certain $n_i$ and certain lengths $L_i$. The size $L_1 \cdots L_{D+1}$ of this progression is comparable to X.

In this ‘basis’, the task in equation (2.1) then becomes to show that there are $\ell _1,\dots , \ell _{D+1}$, $0 \leqslant \ell _i < L_i$, such that

$$(\rho - N^{-4/D})^2 \leqslant q(\ell _1,\dots , \ell _{D+1}) \leqslant \rho ^2,$$

where q is a certain quadratic form depending on $n_1,\dots , n_{D+1}$ and $\theta $. A representative case (but not the only one we need to consider) would be $L_1 \approx \dots \approx L_{D+1} \approx L = X^{1/(D+1)}$, with the coefficients of q having size $\sim L^{-2}$ so that q is bounded in size by $O(1)$. Note that $N^{-4/D} \approx L^{-4r}$. Thus we have a problem of roughly the following type: given a quadratic form $q : \mathbf {Z}^{D+1} \rightarrow \mathbf {R}$ with coefficients of size $\sim L^{-2}$, show that on the box $[L]^{D+1}$, it takes at least one value in some given interval of length $L^{-4r}$.
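The relation $N^{-4/D} \approx L^{-4r}$ is a one-line computation in the representative case $L_1 \approx \dots \approx L_{D+1} \approx L$, using $X = N^{1/r}$:

```latex
\begin{align*}
L = X^{1/(D+1)} = N^{1/(r(D+1))}
\quad\Longrightarrow\quad
N^{-4/D} = L^{-4r(D+1)/D} = \big(L^{-4r}\big)^{1 + 1/D}.
\end{align*}
```

So the two quantities agree up to the factor $1 + 1/D$ in the exponent, which is the sense of $\approx$ here.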

Without further information, this is unfortunately a hopeless situation because of the possibility that, for instance, the coefficients of q lie in $\frac {1}{Q} \mathbf {Z}$ for some $Q> L^2$. In this case, the values taken by q are $\frac {1}{Q}$-separated and hence, for moderate values of Q, not likely to lie in any particular interval of length $L^{-4r}$.
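A tiny exact illustration of this obstruction, with hypothetical parameters chosen only for the example: three variables, L = 4, $Q = 17 > L^2$, coefficients $\pm 1/Q$, and the box $0 \leqslant \ell_i < L$. Every value of q is a multiple of $1/Q$, so an interval lying strictly between 0 and $1/Q$ is never hit. Exact rational arithmetic avoids any rounding question.

```python
from fractions import Fraction
from itertools import product


def form_values(coeffs: dict[tuple[int, int], Fraction], L: int, dim: int) -> list[Fraction]:
    """All values of q(l) = sum_{i <= j} a_ij l_i l_j for l in {0, ..., L-1}^dim."""
    return [sum((a * l[i] * l[j] for (i, j), a in coeffs.items()), Fraction(0))
            for l in product(range(L), repeat=dim)]


L, dim, Q = 4, 3, 17  # Q > L^2 = 16
# Illustrative coefficients in (1/Q)Z, each of absolute value 1/Q <= L^(-2).
coeffs = {(i, j): Fraction((-1) ** (i + j), Q) for i in range(dim) for j in range(i, dim)}
values = form_values(coeffs, L, dim)

# The interval [1/(4Q), 3/(4Q)] has length 1/(2Q) and lies strictly between 0 and 1/Q.
lo, hi = Fraction(1, 4 * Q), Fraction(3, 4 * Q)
hits = [v for v in values if lo <= v <= hi]
print(len(values), len(hits))
```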

A small amount of hope is offered by the fact that q is not a fixed quadratic form – it depends on the random choice of $\theta $. However, the way in which random $\theta $ correspond to quadratic forms is not at all easy to analyse, and moreover we also need to consider similar problems for $2\theta , 3 \theta ,\dots $ corresponding to potential red progressions with common difference $d = 2,3,\dots $.

Our way around this issue, and the second key idea in the paper, is to introduce a large amount of extra randomness elsewhere, in the definition of the annuli. Instead of the standard $\ell ^2$-norm $\Vert x \Vert _2$, we consider a perturbation $\Vert (I + E) x \Vert _2$, where E is a random $D \times D$ matrix with small entries, so that $I+E$ is invertible with operator norm close to $1$. Such ellipsoidal annuli are just as good as spherical ones for the purposes of our construction. The choice of E comes with a massive $D(D+1)/2$ degrees of freedom (not $D^2$, because matrices $I + E$ that differ by left multiplication by an orthogonal matrix give the same norm). The quadratic form q then becomes a random quadratic form $q_E$.
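The count of $D(D+1)/2$ degrees of freedom reflects the identity $\Vert (I+E)x \Vert_2^2 = x^T G x$ with $G = (I+E)^T(I+E)$ symmetric: the norm depends on $I+E$ only through G, and replacing $I+E$ by $U(I+E)$ with U orthogonal leaves G unchanged. The pure-Python sketch below checks this numerically; the model for E (independent entries uniform in $[-\varepsilon, \varepsilon]$) and all helper names are assumptions for illustration, not the distribution used in Section 4.

```python
import math
import random


def mat_vec(M, x):
    return [sum(m * v for m, v in zip(row, x)) for row in M]


def mat_mul(A, B):
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]


def norm2(v):
    return math.sqrt(sum(c * c for c in v))


def random_perturbation(D, eps, rng):
    """M = I + E, with E having independent entries uniform in [-eps, eps] (assumed model)."""
    return [[(1.0 if i == j else 0.0) + rng.uniform(-eps, eps) for j in range(D)]
            for i in range(D)]


def ellipsoidal_norm(M, x):
    """||M x||_2 with M = I + E."""
    return norm2(mat_vec(M, x))


def gram(M):
    """G = M^T M, so that ||M x||_2^2 = x^T G x; G is symmetric."""
    Mt = [list(col) for col in zip(*M)]
    return mat_mul(Mt, M)


rng = random.Random(0)
D = 4
M = random_perturbation(D, 0.01, rng)
x = [rng.uniform(-1, 1) for _ in range(D)]
c, s = math.cos(0.3), math.sin(0.3)
U = [[c, -s, 0, 0], [s, c, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]  # an orthogonal matrix
print(ellipsoidal_norm(M, x), ellipsoidal_norm(mat_mul(U, M), x), norm2(x))
```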

With considerable effort, the distribution of $q_E$, E random, can be shown to be somewhat related (for typical $\theta $) to the distribution of a truly random quadratic form $q_a(\ell _1,\dots , \ell _{D+1}) = \sum _{i\leqslant j} a_{ij} \ell _i \ell _j$, with the coefficients chosen uniformly from $|a_{ij}| \leqslant L^{-2}$. One is then left with the task of showing that a uniformly random quadratic form $q_a$ takes values in a very short interval of length $L^{-4r}$. Moreover, this is required with a very strong bound on the exceptional probability, suitable for taking a union bound over the $\sim N^{1 - 1/r}$ possible choices of the common difference d.

A natural tool for studying gaps in the values of quadratic forms is the Hardy-Littlewood circle method, and indeed it turns out that the Davenport-Heilbronn variant of the method can be applied to give what we require. The application is not direct, and it additionally requires a novel amplification argument using lines in the projective plane over a suitable $\mathbf {F}_p$ to get the strong bound on the exceptional probability that we need.
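The amplification argument itself is given in Part V and is not reproduced here. For orientation only, the sketch below builds the projective plane over $\mathbf{F}_p$ (p prime is assumed; the helper name is hypothetical) and checks its basic incidence properties: there are $p^2 + p + 1$ points and as many lines, each line has $p + 1$ points, any two lines meet in exactly one point, and any two points lie on exactly one line.

```python
from itertools import product


def projective_plane(p: int):
    """Points and lines of the projective plane over F_p (p prime assumed).

    Points are nonzero vectors of F_p^3 up to scaling, normalised so that the
    first nonzero coordinate is 1. By duality, each such vector (a, b, c) also
    names the line {x : a x_0 + b x_1 + c x_2 = 0}.
    """
    points = [v for v in product(range(p), repeat=3)
              if any(v) and next(c for c in v if c) == 1]
    lines = [frozenset(x for x in points
                       if sum(a * b for a, b in zip(coeffs, x)) % p == 0)
             for coeffs in points]
    return points, lines


points, lines = projective_plane(5)
print(len(points), len(lines), {len(line) for line in lines})
```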

The above sketch omitted at least one significant detail, namely how to handle ‘uncentred’ progressions P starting at points other than $0$. For these, one must use the particular choice of the centres $x_i$. One can show that P enters inside at least one of the balls $x_i + \pi (B_{\rho /10}(0))$, and starting from here one can proceed much as in the centred case.

2.2 Structure of the paper

With that sketch of the construction complete, let us briefly describe the structure of the paper. As the above discussion suggests, it is natural to introduce a parameter r and consider the following equivalent form of Theorem 1.1.

Theorem 2.1. Let r be an integer, and suppose that $N> e^{Cr^4 \log r}$. Then there is a red/blue colouring of $[N]$ with no blue 3-term progression and no red progression of length $N^{1/r}$.

Taking $r = c \big ( \frac {\log N}{\log \log N} \big )^{1/4}$ for suitable c, we recover Theorem 1.1. While Theorems 1.1 and 2.1 are equivalent, it is much easier to think about Theorem 2.1 and its proof by imagining that r is fixed and N is very large compared to r. We will, of course, keep track of just how large N needs to be as we go along, but this is somewhat secondary to understanding the key concepts of the argument. For the rest of the paper, r will denote the parameter appearing in Theorem 2.1, and we will always assume (as we clearly may) that it is sufficiently large.
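The arithmetic behind this choice of r, as a sketch with unoptimised constants. Write $C_0$ for the constant in Theorem 2.1 (to avoid a clash with the C of Theorem 1.1), assume $c \leqslant 1$ and $N \geqslant e^e$, and let $k = N^{1/r}$ be the forbidden red length:

```latex
\begin{align*}
N^{1/r} &= \exp\Big(\frac{\log N}{r}\Big)
         = \exp\Big(c^{-1} (\log N)^{3/4} (\log\log N)^{1/4}\Big), \\
C_0\, r^4 \log r &\leqslant C_0\, c^4 \frac{\log N}{\log\log N} \cdot \frac{\log\log N}{4}
         = \frac{C_0 c^4}{4} \log N < \log N \qquad (c^4 < 4/C_0), \\
\frac{\log N}{\log k} &= r = c\Big(\frac{\log N}{\log\log N}\Big)^{1/4}
         \asymp \Big(\frac{\log k}{\log\log k}\Big)^{1/3}.
\end{align*}
```

The first line is the red length in Theorem 1.1 (with $C = 1/c$), the second shows that the hypothesis of Theorem 2.1 holds, and the third uses $\log\log k = (\frac34 + o(1))\log\log N$. Since $[N]$ then has a colouring with no blue 3-term progression and no red progression of length k, we get $w(3,k) > N = k^{r} \geqslant k^{b(k)}$ for a suitable constant in $b(k)$.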

In Section 3, we summarise some key notation and conventions in force for the rest of the paper. In Section 4, we turn to the details of our construction, in particular constructing the translates $x_1,\dots , x_M$ of our annuli, and introducing the notion of a random ellipsoidal annulus properly. In Section 5, we describe the red/blue colouring itself and divide the task of showing that there are no blue 3-term progressions or red $N^{1/r}$-term progressions into three parts (the blue progressions, and what we call steps 1 and 2 for the red progressions). In Section 6, we handle the blue 3-term progressions. Section 7 is then devoted to a technical ‘diophantine’ condition on $\theta \in \mathbf {T}^D$ that will be in force for the rest of the paper. In Section 8, we handle step 1 of the treatment of red progressions.

At this point we are only one third of the way through the paper. The remaining discussion is devoted to the treatment of step 2 for the red progressions, which involves the material on the geometry of numbers and on gaps in random quadratic forms outlined above. We devote Section 9 to a more detailed technical overview of the argument that reduces it to three key propositions. The proofs of these propositions are then handled in Parts IV and V of the paper. Part IV contains, roughly speaking, the relevant geometry of numbers arguments, whilst Part V contains the arguments pertaining to gaps in quadratic forms. These parts may be read independently of one another and of the rest of the paper.

2.3 Further comments

The discussion around the application of Dirichlet’s theorem above suggests that there are certain ‘phase changes’ in the problem as one goes from ruling out red progressions of length $\sim N^{1/2}$ to ruling out progressions of length $\sim N^{1/3}$, and so on. Indeed, this is why we formulate our main result in the equivalent form of Theorem 2.1. I consider it quite plausible that such phase changes are not merely an artefact of our argument but rather a feature of the problem as a whole, and that the apparently strong numerical evidence for quadratic behaviour of $w(3,k)$ reflects the fact that in the regime $k \leqslant 40$, one is only seeing the first phase, in which it is more efficient to take just one large annulus as in the construction of Julia Wolf and myself, at the expense of having to allow strips of codimension 1 that admit red progressions of length $\sim N^{1/2}$. It would be interesting to see whether the ideas of this paper could be used to produce, computationally, an example with $w(3,k) \sim k^3$.

I have worked quite hard to try to optimise the exponent in Theorem 1.1, and it seems to represent the limit of the method for several different reasons, as discussed in a little more detail in Section 3 below. These limitations seem to be a mix of fundamental ones and artefacts of our analysis. I would expect that the true value of $w(3,k)$ lies somewhere between the bound of Theorem 1.1 and something like $k^{c\log k}$, which is what a Behrend construction of the blue points would give if only the complement of such a set ‘behaved randomly’. Ron Graham [Reference Graham10] established a lower bound of this type for a restricted version of the problem in which one only forbids red progressions with common difference $1$.

Finally, we remark that [Reference Fox and Pohoata9] is an earlier example in which random unions of structured objects are used to understand a problem related to arithmetic progressions.

3 Notation and conventions

3.1 Fourier transforms

We use the standard notation $e(t) := e^{2\pi i t}$ for $t \in \mathbf {R}$.

We will take Fourier transforms of functions on $\mathbf {R}^k, \mathbf {Z}^k, \mathbf {T}^k$ for various integers k. We will use the same hat symbol for all of these and define them as follows:

• If $f : \mathbf {R}^k \rightarrow \mathbf {C}$, $\hat {f}(\gamma ) = \int _{\mathbf {R}^k} f(x) e(-\langle \gamma , x\rangle ) dx$ for $\gamma \in \mathbf {R}^k$;

• If $f : \mathbf {Z}^k \rightarrow \mathbf {C}$, $\hat {f}(\theta ) = \sum _{n \in \mathbf {Z}^k} f(n) e(-n \cdot \theta )$ for $\theta \in \mathbf {T}^k$;

• If $f : \mathbf {T}^k \rightarrow \mathbf {C}$, $\hat {f}(\xi ) = \int _{\mathbf {T}^k} f(x) e(-\xi \cdot x) dx$ for $\xi \in \mathbf {Z}^k$.
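As a small numerical illustration of the conventions for $\mathbf{Z}$ and $\mathbf{T}$ (with $k = 1$), the sketch below computes $\hat f$ for a finitely supported $f : \mathbf{Z} \rightarrow \mathbf{C}$ and recovers $f(n) = \int_{\mathbf{T}} \hat f(\theta) e(n\theta)\, d\theta$. The function names and the equally spaced quadrature are our own choices, not anything from the paper; the quadrature is exact up to rounding here because $\hat f$ is a trigonometric polynomial of low degree.

```python
import numpy as np

def fourier_Z(f, theta):
    """hat f(theta) = sum_n f(n) e(-n theta), for f: Z -> C given as a dict {n: f(n)}.
    theta may be any real representative of a point of T = R/Z."""
    return sum(v * np.exp(-2j * np.pi * n * theta) for n, v in f.items())

def invert_on_T(f, n, K=256):
    """Approximate int_T hat f(theta) e(n theta) d theta by averaging over theta = j/K.
    Exact up to rounding when K exceeds |n - m| for every m in supp(f)."""
    thetas = np.arange(K) / K
    values = np.array([fourier_Z(f, t) for t in thetas])
    return (values * np.exp(2j * np.pi * n * thetas)).mean()

f = {-2: 1.0, 0: 3.0, 5: -1.5}
for n in (-2, 0, 1, 5):
    print(n, round(invert_on_T(f, n).real, 9))
```

Since $\hat f$ is 1-periodic, `fourier_Z` may be evaluated at any real representative of $\theta$.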

The use of the notation $\langle x, y\rangle $ for $\sum _i x_i y_i$ in $\mathbf {R}^k$, but $x \cdot y$ in $\mathbf {Z}^k$ and $\mathbf {T}^k$, is merely cultural and is supposed to reflect the fact that our arguments in the former space will be somewhat geometric in flavour.

We will only be using the Fourier transform on smooth, rapidly decaying functions, for which convergence issues do not arise. Note in particular that the normalisation of the Fourier transform on $\mathbf {R}^k$ (with the phase multiplied by $2\pi $) is just one of the standard options, but a convenient one in this paper. With this normalisation, Fourier inversion states that $f(x) = \int _{\mathbf {R}^k} \hat {f}(\gamma ) e(\langle \gamma , x\rangle ) d\gamma $.
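With the $2\pi$-in-the-phase normalisation, the Gaussian $f(x) = e^{-\pi x^2}$ on $\mathbf{R}$ is its own Fourier transform, which gives a quick sanity check of the convention. The sketch below is our own one-dimensional illustration, using a plain Riemann sum on a truncated interval as a stand-in for the integral; it compares $\hat f(\gamma)$ with $f(\gamma)$.

```python
import numpy as np

def fourier_R(f, gamma, L=10.0, num=20001):
    """Riemann-sum approximation to hat f(gamma) = int_R f(x) e(-gamma x) dx,
    truncated to [-L, L]; adequate only for rapidly decaying f."""
    x = np.linspace(-L, L, num)
    dx = x[1] - x[0]
    return np.sum(f(x) * np.exp(-2j * np.pi * gamma * x)) * dx

def gaussian(x):
    return np.exp(-np.pi * np.asarray(x) ** 2)

for gamma in (0.0, 0.5, 1.3):
    err = abs(fourier_R(gaussian, gamma) - gaussian(gamma))
    print(f"gamma = {gamma}: |hat f - f| = {err:.1e}")
```

Under the other common convention (no $2\pi$ in the phase), the same Gaussian would instead pick up a rescaling, which is one way to see which normalisation is in force.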

3.2 Convention on absolute constants

It would not be hard to write in explicit constants throughout the paper. They would get quite large, but not ridiculously so. However, we believe it makes the presentation neater, and the dependencies between parameters easier to understand, if we leave the larger ones unspecified and adopt the following convention:

• $C_1$ is a sufficiently large absolute constant;

• $C_2$ is an even larger absolute constant, how large it needs to be depending on the choice of $C_1$;

• $C_3$ is a still larger constant, large enough in terms of $C_1, C_2$.

To clarify, no matter which $C_1$ we choose (provided it is sufficiently big), there is an appropriate choice of $C_2$, and in fact all sufficiently large $C_2$ work. No matter which $C_2$ we choose, all sufficiently large $C_3$ work. There are many constraints on how large $C_1$ needs to be throughout the paper, and it must be chosen to satisfy all of them; similarly for $C_2, C_3$.

3.3 Key parameters

The most important global parameters in the paper are the following:

• N: the interval $[N]$ is the setting for Theorem 2.1.

• r: a positive integer, always assumed to be sufficiently large. $N^{1/r}$ is the length of red progressions we are trying to forbid.

• D: a positive integer dimension. The torus $\mathbf {T}^D = \mathbf {R}^D/\mathbf {Z}^D$ will play a key role in the paper.

Throughout the paper, we will assume that

$$D = C_3 r^2 \quad \text{and} \quad N > D^{C_2 D^2}.$$

Several lemmas and propositions do not require such strong assumptions. However, two quite different results in the paper (Proposition 4.1 and the application of Proposition 9.3 during the proof of Proposition 5.4 in Section 9) require a condition of the form $D \gg r^2$. The condition $N> D^{C_2 D^2}$ comes up in the proof of Proposition 5.4, in fact in no fewer than three different ways in the last displayed equation of Section 9. For these reasons, it seems as if our current mode of argument cannot possibly yield anything stronger than Theorem 2.1.

A number of other parameters and other nomenclature feature in several sections of the paper:

• X: shorthand for $N^{1/r}$.

• $\theta $: an element of $\mathbf {T}^D$, chosen uniformly at random, and later in the paper always taken to lie in the set $\Theta $ of diophantine elements (Section 7).

• $\rho $: a small radius (of annuli in $\mathbf {T}^D$), from Section 5 onwards fixed to be $D^{-4}$.

• $\mathbf {e}$: a uniform random element of $[-\frac {1}{D^4} , \frac {1}{D^4} ]^{D(D+1)/2}$ (used to define random ellipsoids). Usually we will see $\sigma (\mathbf {e})$, which is a symmetric matrix formed from $\mathbf {e}$ in an obvious way (see Section 4 for the definition).

• d: invariably the common difference of a progression, with $d \leqslant N/X$.

The letter Q is reserved for a ‘complexity’ parameter (bounding the allowed size of coefficients, or of matrix entries) in various different contexts.

3.4 Notation

$[N]$ always denotes $\{1,\dots , N\}$.

If $x \in \mathbf {T}$, then we write $\Vert x \Vert _{\mathbf {T}}$ for the distance from x to the nearest integer. If $x = (x_1,\dots , x_D) \in \mathbf {T}^D$, then we write $\Vert x \Vert _{\mathbf {T}^D} = \max _i \Vert x_i \Vert _{\mathbf {T}}$.
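Both norms can be computed from any real representative of a point of $\mathbf{T}$ or $\mathbf{T}^D$. A minimal Python sketch (the function names are ours, chosen for illustration):

```python
import numpy as np

def norm_T(x):
    """||x||_T: distance from the real number x to the nearest integer."""
    return abs(x - round(x))

def norm_TD(x):
    """||x||_{T^D} = max_i ||x_i||_T for x = (x_1, ..., x_D)."""
    x = np.asarray(x, dtype=float)
    return float(np.max(np.abs(x - np.round(x))))

print(norm_T(2.75))  # 0.25
print(norm_TD([0.1, 0.9, -0.45]))
```

The result does not depend on the representative chosen, since shifting any coordinate by an integer does not change its distance to the nearest integer.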

We identify the dual $\hat {\mathbf {T}}^D$ with $\mathbf {Z}^D$ via the map $\xi \mapsto (x \mapsto e(\xi \cdot x))$, where $\xi \cdot x = \xi _1 x_1 + \cdots + \xi _D x_D$. In this setting, we always write $|\xi | := \max _i |\xi _i|$ instead of the more cumbersome $\Vert \xi \Vert _{\infty }$.

Apart from occasional instances where it denotes $3.141592\dots $, $\pi $ is the natural projection homomorphism $\pi : \mathbf {R}^D \rightarrow \mathbf {T}^D$. Clearly $\pi $ is not invertible, but nonetheless we abuse notation by writing $\pi ^{-1}(x)$ for the unique element $y \in (-\frac {1}{2}, \frac {1}{2}]^D$ with $\pi (y) = x$.
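Concretely, $\pi^{-1}(x)$ can be computed coordinatewise as $y_i = t_i - \lceil t_i - \frac12 \rceil$ from any real representative $(t_1, \dots, t_D)$ of x, and then $\Vert x \Vert_{\mathbf{T}^D} = \max_i |y_i|$. The sketch below is our own illustration; the name `lift` is hypothetical. The ceiling (rather than rounding) is what places the half-integers at $+\frac12$, matching the half-open box $(-\frac12, \frac12]^D$.

```python
import math

def lift(x):
    """pi^{-1}(x) in the sense of the text: the unique y in (-1/2, 1/2]^D with
    y congruent to x coordinatewise mod 1. Input: any real representatives."""
    return [t - math.ceil(t - 0.5) for t in x]

def norm_TD(x):
    """||x||_{T^D} is the sup-norm of the lift."""
    return max(abs(y) for y in lift(x))

print(lift([0.5, -0.5, 0.7, 1.25]))
```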

If R is a $D \times D$ matrix over $\mathbf {R}$, then we write $\Vert R \Vert $ for the $\ell ^2$-to-$\ell ^2$ operator norm, that is to say the smallest constant such that $\Vert Rx \Vert _{2} \leqslant \Vert R \Vert \Vert x \Vert _2$ for all $x \in \mathbf {R}^D$. Equivalently, $\Vert R \Vert $ is the largest singular value of R.
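The equivalence of the two descriptions of $\Vert R \Vert$ is easy to check numerically. The sketch below is our own illustration with NumPy, using an arbitrary random $6 \times 6$ matrix. It verifies the defining inequality on random vectors and shows that equality is attained at the top right-singular vector.

```python
import numpy as np

def operator_norm(R):
    """l2-to-l2 operator norm of a real matrix: its largest singular value."""
    return np.linalg.svd(R, compute_uv=False)[0]   # singular values come sorted, largest first

rng = np.random.default_rng(1)
R = rng.standard_normal((6, 6))
op = operator_norm(R)

# Agrees with NumPy's matrix 2-norm, which is defined as the largest singular value.
assert np.isclose(op, np.linalg.norm(R, 2))

# ||Rx||_2 <= ||R|| ||x||_2 for random x (rows of xs), with equality at the
# top right-singular vector.
xs = rng.standard_normal((1000, 6))
assert np.all(np.linalg.norm(xs @ R.T, axis=1) <= op * np.linalg.norm(xs, axis=1) + 1e-12)
_, _, Vt = np.linalg.svd(R)
assert np.isclose(np.linalg.norm(R @ Vt[0]), op)
```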

Part II A red/blue colouring of $ [\boldsymbol{N}]$

4 Random ellipsoidal annuli

In this section, we prepare the ground for describing our red/blue colouring of $[N]$, which we will give in Section 5 by specifying the points in $[N]$ to be coloured blue. The torus $\mathbf {T}^D$ (and Euclidean space $\mathbf {R}^D$) plays a fundamental role in our construction; here $D = C_3 r^2$.

4.1 A well-distributed set of centres

As outlined in Section 2, an important part of our construction is the random selection of a certain set of points $x_1,\dots , x_M$ in $\mathbf {T}^D$. Later, we will fix $\rho := D^{-4}$, but the following proposition makes no assumption on $\rho $ beyond $\rho < D^{-1}$.

Proposition 4.1. Suppose that $D = C_3 r^2$ and $\rho < \frac {1}{D}$. There are $x_1,\dots , x_M \in \mathbf {T}^D$ such that the following hold:

1. Whenever $i_1, i_2, i_3$ are not all the same, $\Vert x_{i_1} - 2 x_{i_2} + x_{i_3} \Vert _{\mathbf {T}^D} \geqslant 10 \rho $.

2. Whenever $V \leqslant \mathbf {Q}^D$ is a subspace of dimension at most $4r$ and $x \in \mathbf {T}^D$, there is some j such that $\Vert \xi \cdot (x_j - x) \Vert _{\mathbf {T}} \leqslant \frac {1}{100}$ for all $\xi \in V \cap \mathbf {Z}^D$ with $|\xi | \leqslant \rho ^{-3}$.

Remark. With reference to the outline in Section 2, condition (2) here is saying that any ‘slice’ of codimension at most $4r$ contains one of the $x_i$; this is what obstructs a simple construction of red progressions of length $N^{1/r}$ using Dirichlet’s theorem. Item (1) will allow us to guarantee that by taking a union of translates of annuli, rather than just one, we do not introduce new blue 3-term progressions.
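Condition (1) of Proposition 4.1 can be tested for a given finite configuration by brute force over the $M^3$ index triples. The sketch below is our own illustration with hypothetical function names. It is only practical for small M and does not reproduce the probabilistic construction used in the proof.

```python
import itertools
import numpy as np

def torus_norm(v):
    """||v||_{T^D}: max over coordinates of the distance to the nearest integer."""
    v = np.asarray(v, dtype=float)
    return float(np.max(np.abs(v - np.round(v))))

def min_second_difference(points):
    """min ||x_a - 2 x_b + x_c||_{T^D} over index triples (a, b, c) not all equal."""
    pts = np.asarray(points, dtype=float)
    best = np.inf
    for a, b, c in itertools.product(range(len(pts)), repeat=3):
        if a == b == c:
            continue
        best = min(best, torus_norm(pts[a] - 2 * pts[b] + pts[c]))
    return best

def satisfies_condition_1(points, rho):
    """Condition (1): every such second difference has torus norm >= 10 rho."""
    return min_second_difference(points) >= 10 * rho

print(satisfies_condition_1([[0.0], [0.25]], rho=0.02))
print(satisfies_condition_1([[0.0], [0.1], [0.2]], rho=0.01))  # 0, 0.1, 0.2 is a 3-term progression
```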

Proof. Set $M = \lceil \rho ^{-D/4}\rceil $, and pick $x_1,\dots , x_M \in \mathbf {T}^D$ independently and uniformly at random. For any triple $(i_1, i_2, i_3)$ with not all the indices the same, $x_{i_1} - 2x_{i_2} + x_{i_3}$ is uniformly distributed on $\mathbf {T}^D$. Therefore, $\mathbb {P} ( \Vert x_{i_1} - 2 x_{i_2} + x_{i_3} \Vert _{\mathbf {T}^D} \leqslant 10 \rho ) \leqslant (20 \rho )^{D}$. Summing over all $< M^3$ choices of indices gives an upper bound of $M^3 (20 \rho )^D < \frac {1}{4}$ on the probability that (1) fails (since D is sufficiently large).
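For concreteness, here is one way (our own bookkeeping, not taken from the paper) to see that $M^3(20\rho)^D < \frac14$ once D is large. Since $\rho^{-D/4} \geqslant 1$ we have $M \leqslant 2\rho^{-D/4}$, and since $\rho < 1/D$,

$$\begin{align*} M^3 (20\rho)^D \leqslant 8 \rho^{-3D/4} (20 \rho)^D = 8 \big(20 \rho^{1/4}\big)^D < 8 \big(20 D^{-1/4}\big)^D \leqslant 8 \cdot 2^{-D}, \end{align*}$$

where the last step assumes $D \geqslant 40^4$, so that $20 D^{-1/4} \leqslant \frac12$. The right-hand side is then far below $\frac14$.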

For (2), we may assume that V is spanned (over $\mathbf {Q}$) by vectors $\xi \in \mathbf {Z}^D$ with $|\xi | \leqslant \rho ^{-3}$ (otherwise, pass from V to the subspace of V spanned by such vectors). There are at most $4r (3 \rho ^{-3})^{4r D} < \rho ^{-14rD}$ such V (choose the dimension $m \leqslant 4r$, and then m basis elements $\xi \in \mathbf {Z}^D$ with $|\xi | \leqslant \rho ^{-3}$).
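The count of subspaces can be checked as follows (again our own bookkeeping). Since $\rho^{-3} \geqslant 1$, the number of $\xi \in \mathbf{Z}^D$ with $|\xi| \leqslant \rho^{-3}$ is $(2\lfloor \rho^{-3}\rfloor + 1)^D \leqslant (3\rho^{-3})^D$, so

$$\begin{align*} \#\{V\} \leqslant \sum_{m=1}^{4r} (3\rho^{-3})^{mD} \leqslant 4r\, (3\rho^{-3})^{4rD} = 4r \cdot 81^{rD} \rho^{-12rD} < \rho^{-14rD}, \end{align*}$$

where the final inequality follows from $\rho^{-2rD} > (D^2)^{rD} \geqslant (4 \cdot 81)^{rD} > 4r \cdot 81^{rD}$, which is valid as soon as $D \geqslant 18$.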

Fix such a V. By Lemma 1.2, $V \cap \mathbf {Z}^D$ is a free $\mathbf {Z}$-module generated by some $\xi _1,\dots , \xi _m$, $m \leqslant 4r$, such that every element $\xi \in V \cap \mathbf {Z}^D$ with $|\xi | \leqslant \rho ^{-3}$ can be written as $\xi = n_1 \xi _1+ \cdots + n_m \xi _m$ with $|n_i| \leqslant m! (2\rho ^{-3})^m \leqslant \frac {1}{400r}\rho ^{-14 r}$. Thus, to satisfy our requirement, we need only show that for any $x \in \mathbf {T}^D$ there is $x_j$ such that

$$\begin{align}\Vert \xi_i \cdot (x_j - x) \Vert_{\mathbf{T}} \leqslant \rho^{14r} \quad \text{for } i = 1,\dots, m, \tag{4.1}\end{align}$$

since then

$$\begin{align*}\Vert \xi \cdot (x_j - x) \Vert_{\mathbf{T}} \leqslant \rho^{14r}\sum_{i = 1}^m |n_i| \leqslant \frac{1}{100}.\end{align*}$$

Divide $\mathbf {T}^m$ into $\rho ^{- 14 rm} \leqslant \rho ^{-56r^2}$ boxes of side length $\rho ^{14 r}$; it is enough to show that each such box B contains at least one point $(\xi _1 \cdot x_j, \dots , \xi _m \cdot x_j)$. The following fact will also be needed later, so we state it as a separate lemma.

Lemma 4.2. Let $\xi _1,\dots , \xi _m \in \mathbf {Z}^D$ be linearly independent. Then as x ranges uniformly over $\mathbf {T}^D$, $(\xi _1 \cdot x, \dots , \xi _m \cdot x)$ ranges uniformly over $\mathbf {T}^m$.

Proof. Let $f(t) = e(\gamma \cdot t)$ be a nontrivial character on $\mathbf {T}^{m}$, so that $\gamma \in \mathbf {Z}^m \setminus \{0\}$. Then

$$\begin{align*}\int_{\mathbf{T}^D} f(\xi_1 \cdot x, \dots, \xi_m \cdot x)\, dx = \int_{\mathbf{T}^D} e\big((\gamma_1 \xi_1 + \dots + \gamma_m \xi_m) \cdot x\big)\, dx = 0 = \int_{\mathbf{T}^m} f(t)\, dt,\end{align*}$$

since $\gamma _1 \xi _1 + \dots + \gamma _m \xi _m \neq 0$ by linear independence. Since linear combinations of characters are dense in $L^1(\mathbf {T}^m)$, the result follows.
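The proof reduces Lemma 4.2 to the vanishing of $\int_{\mathbf{T}^D} e(k \cdot x)\, dx$ for the nonzero integer vector $k = \gamma_1 \xi_1 + \dots + \gamma_m \xi_m$. The sketch below is our own illustration, with an arbitrarily chosen pair $\xi_1, \xi_2 \in \mathbf{Z}^2$; it checks this for all small nonzero $\gamma$. Averaging over the grid $\{0, 1/K, \dots, (K-1)/K\}^D$ computes these integrals exactly (up to rounding) whenever every $|k_i| < K$.

```python
import itertools
import numpy as np

def haar_mean_of_character(k, K=64):
    """Average of e(k . x) over the grid {0, 1/K, ..., (K-1)/K}^D in T^D.
    For integer k with all |k_i| < K this equals int_{T^D} e(k . x) dx:
    1 if k = 0 and 0 otherwise."""
    D = len(k)
    axes = [np.arange(K) / K] * D
    grid = np.stack(np.meshgrid(*axes, indexing="ij"), axis=-1)   # shape (K, ..., K, D)
    return np.exp(2j * np.pi * (grid @ np.asarray(k, dtype=float))).mean()

# Two linearly independent vectors in Z^2 (determinant 1*1 - 2*3 = -5).
xis = np.array([[1, 2], [3, 1]])

worst = 0.0
for gamma in itertools.product(range(-3, 4), repeat=2):
    if gamma == (0, 0):
        continue
    k = np.asarray(gamma) @ xis                 # gamma_1 xi_1 + gamma_2 xi_2
    assert np.any(k != 0)                       # guaranteed by linear independence
    worst = max(worst, abs(haar_mean_of_character(k)))
print(f"largest |integral| over nonzero gamma: {worst:.1e}")
```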

Returning to the proof of Proposition 4.1, Lemma 4.2 implies that for each fixed j,

$$\begin{align*}\mathbb{P} \big( (\xi_1 \cdot x_j, \dots , \xi_m \cdot x_j) \in B \big) = \rho^{14rm}.\end{align*}$$

By independence,

$$ \begin{align*} \mathbb{P} ( (\xi_1 \cdot x_j, \dots , \xi_m \cdot x_j) \notin & \; B \; \mbox{for } \ j = 1,\dots, M ) \\ & = (1 - \rho^{14rm})^M \leqslant e^{-\rho^{14rm} M} \leqslant e^{-\rho^{-D/8}}. \end{align*} $$

Here we critically use that $D = C_3 r^2$; $C_3 \geqslant 448$ is sufficient here, but it will need to be larger than this in later arguments. Summing over the boxes B, we see that the probability of even one empty box is $\leqslant \rho ^{-56 r^2} e^{-\rho ^{-D/8}}$. This bounds the probability that equation (4.1) fails for this particular V. Summing over the $\leqslant \rho ^{-14 D r}$ choices for V, the probability that equation (4.1) fails to hold for some V is

$$\begin{align*}\leqslant \rho^{-14Dr} \rho^{-56r^2} e^{-\rho^{-D/8}}.\end{align*}$$

For D large, this will be $< \frac {1}{4}$ as well, uniformly in $\rho $. (To see this, write $X = 1/\rho> D$; then this function is bounded by $X^{D^2} e^{-X^{D/8}}$, which is absolutely tiny on the range $X> D$.)
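The two numerical claims in this part of the proof can be unpacked as follows (our own bookkeeping). Using $m \leqslant 4r$, $\rho < 1$ and $M \geqslant \rho^{-D/4}$,

$$\begin{align*} \rho^{14rm} M \geqslant \rho^{56r^2}\rho^{-D/4} = \rho^{-(D/4 - 56r^2)} \geqslant \rho^{-D/8} \quad \text{whenever } D \geqslant 448 r^2, \end{align*}$$

since $D/4 - 56r^2 \geqslant D/8$ is equivalent to $D \geqslant 448r^2$. Similarly, with $X = 1/\rho$ the final bound equals $X^{14Dr + 56r^2} e^{-X^{D/8}}$, and $14Dr + 56r^2 \leqslant D^2$ because $D = C_3 r^2$ with $C_3 \geqslant 448$ gives both $14Dr \leqslant D^2/2$ and $56r^2 \leqslant D^2/2$.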

It follows that, with probability $> \frac {1}{2}$ in the choice of $x_1,\dots , x_M$, both (1) and (2) hold.

4.2 Random ellipsoidal annuli

Our construction is based on annuli centred on the points $x_1,\dots , x_M$ just constructed. As outlined in Section 2, so as to introduce a source of randomness into the problem, we consider random ellipsoidal annuli rather than just spherical ones.

To specify the ellipsoids, here and throughout the paper we identify $\mathbf {R}^{D(D+1)/2}$ with the space of all tuples $x = (x_{ij})_{1 \leqslant i \leqslant j \leqslant D}$. Let $\mathbf {e}$ be a random tuple sampled uniformly from $[-\frac {1}{D^4} , \frac {1}{D^4} ]^{D(D+1)/2} \subset \mathbf {R}^{D(D+1)/2}$.

To any tuple $x \in \mathbf {R}^{D(D+1)/2}$, we associate a symmetric matrix $\sigma (x) \in \operatorname {Sym}_D(\mathbf {R})$ (the space of $D \times D$ symmetric matrices over $\mathbf {R}$) as follows: $(\sigma (x))_{ii} = x_{ii}$, $(\sigma (x))_{ij} = \frac {1}{2}x_{ij}$ for $i < j$, and $(\sigma (x))_{ij} = \frac {1}{2}x_{ji}$ for $i> j$.
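With the factor $\frac12$ off the diagonal, the quadratic form of $\sigma(x)$ can be read off from the tuple directly: $y^{T} \sigma(x) y = \sum_{i \leqslant j} x_{ij} y_i y_j$. The sketch below is our own illustration; the dictionary representation of tuples, the 0-based indices and the function names are assumptions made for the example. It builds $\sigma(\mathbf{e})$ for a random $\mathbf{e}$ and checks this identity numerically.

```python
import numpy as np

def sigma(e, D):
    """Symmetric matrix sigma(e) from a tuple e = {(i, j): e_ij, 0 <= i <= j < D}:
    diagonal entries e_ii, off-diagonal entries e_ij / 2 in both positions (i, j), (j, i)."""
    S = np.zeros((D, D))
    for (i, j), value in e.items():
        if i == j:
            S[i, i] = value
        else:
            S[i, j] = S[j, i] = value / 2
    return S

def quadratic_form(e, y):
    """sum_{i <= j} e_ij y_i y_j."""
    return sum(value * y[i] * y[j] for (i, j), value in e.items())

rng = np.random.default_rng(2)
D = 4
e = {(i, j): rng.uniform(-D**-4, D**-4) for i in range(D) for j in range(i, D)}
S = sigma(e, D)
y = rng.standard_normal(D)
assert np.allclose(S, S.T)
assert np.isclose(y @ S @ y, quadratic_form(e, y), rtol=1e-10, atol=1e-15)
```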

The ellipsoidal annuli we consider will then be of the form $\pi (A_{\mathbf {e}})$, where $\pi : \mathbf {R}^D \rightarrow \mathbf {T}^D$ is the natural projection and

It is convenient to fix, for the rest of the paper,

$$\rho := D^{-4};$$

the choice is somewhat arbitrary, and any sufficiently large power of $1/D$ would lead to essentially the same bounds in our final result. With this choice, the parameter M in Proposition 4.1 (that is, the number of points $x_1,\dots , x_M$) is $\lceil \rho ^{-D/4} \rceil = D^D$.

Now, writing $\Vert \cdot \Vert $ for the $\ell ^2$-to-$\ell ^2$ operator norm on matrices, we have $\Vert \sigma (e) \Vert \leqslant D \Vert e \Vert _{\infty } \leqslant \frac {1}{2}$, since every entry of $\sigma (e)$ has absolute value at most $\Vert e \Vert _{\infty }$ and the operator norm of a $D \times D$ matrix is at most D times its largest entry in absolute value. Therefore

Remark. Taking $\sigma (\mathbf {e})$ to be symmetric is natural in view of the polar decomposition of real matrices: premultiplying $I + \sigma (\mathbf {e})$ by an orthogonal matrix makes no difference to $\Vert (I + \sigma (\mathbf {e}) ) x\Vert _2$.
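Two facts used here are easy to check numerically: the bound $\Vert \sigma(\mathbf{e}) \Vert \leqslant D \Vert \mathbf{e} \Vert_\infty$, and the identity $\Vert U(I + \sigma(\mathbf{e}))x \Vert_2 = \Vert (I + \sigma(\mathbf{e}))x \Vert_2$ for orthogonal U. The sketch below is our own illustration with the arbitrary small choice $D = 5$. The orthogonal matrix is taken from a QR factorisation of a random Gaussian matrix, a standard way of producing one.

```python
import numpy as np

rng = np.random.default_rng(3)
D = 5

# sigma(e) for e uniform in [-1/D^4, 1/D^4]^{D(D+1)/2}: entries e_ii on the
# diagonal and e_ij / 2 off it, so every entry is at most max|e_ij| in size.
e_upper = np.triu(rng.uniform(-D**-4, D**-4, size=(D, D)))
S = np.diag(np.diag(e_upper)) + (np.triu(e_upper, 1) + np.triu(e_upper, 1).T) / 2
e_sup = np.max(np.abs(e_upper))

op_norm = np.linalg.norm(S, 2)            # largest singular value
assert op_norm <= D * e_sup <= 0.5

# An orthogonal matrix U from the QR factorisation of a Gaussian matrix.
U, _ = np.linalg.qr(rng.standard_normal((D, D)))
A = np.eye(D) + S
x = rng.standard_normal(D)
assert np.isclose(np.linalg.norm(U @ A @ x), np.linalg.norm(A @ x))
```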

5 The colouring. Outline proof of the main theorem

We are now in a position to describe our red/blue colouring of $[N]$. Once again let $r, D$ be integers with r sufficiently large and $D = C_3 r^2$. Set $\rho := D^{-4}$, and let $x_1,\dots , x_M \in \mathbf {T}^D$ be points as constructed in Proposition 4.1 for this value of $\rho $. Pick $\mathbf {e} \in [-\frac {1}{D^4} , \frac {1}{D^4} ]^{D(D+1)/2} \subset \mathbf {R}^{D(D+1)/2}$ uniformly at random, and consider the random ellipsoidal annulus

Pick $\theta \in \mathbf {T}^D$ uniformly at random, let $\pi : \mathbf {R}^D \rightarrow \mathbf {T}^D$ be the natural projection, and define a red/blue colouring by

$$ \begin{align} \operatorname{Blue}_{\mathbf{e},\theta} := \{ n \in [N] : \theta n \in \bigcup_{j=1}^M (x_j + \pi(A_{\mathbf{e}})) \}, \end{align} $$

with every other point of $[N]$ coloured red, that is, $\operatorname{Red}_{\mathbf{e},\theta} := [N] \setminus \operatorname{Blue}_{\mathbf{e},\theta}$.

Suppose henceforth that $N> D^{C_2 D^2}$, this being stronger than needed for some results but necessary in the worst case. We claim that with high probability there is no blue progression of length $3$.
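To make the definition of $\operatorname{Blue}_{\mathbf{e},\theta}$ concrete, here is a small Python sketch of the membership test. The precise definition of $A_{\mathbf{e}}$ is not reproduced in this section, so the sketch takes, purely as a hypothetical stand-in, $A = \{y \in \mathbf{R}^D : r_{\mathrm{in}} \leqslant \Vert (I+S)y \Vert_2 \leqslant r_{\mathrm{out}}\}$ for a symmetric matrix S and radii $r_{\mathrm{in}}, r_{\mathrm{out}}$ supplied by the user. It also assumes that A lies inside $(-\frac12, \frac12)^D$, so that whether $\theta n \in x_j + \pi(A)$ can be tested on the single lift $\pi^{-1}(\theta n - x_j)$. The function names are ours, and the toy parameters are far from those of the actual construction.

```python
import itertools
import numpy as np

def lift(v):
    """Representative of v mod 1 in (-1/2, 1/2]^D (coordinatewise)."""
    return v - np.ceil(v - 0.5)

def blue_set(N, theta, centres, S, r_in, r_out):
    """{n in [N] : theta*n in some x_j + pi(A)} for the HYPOTHETICAL annulus
    A = {y : r_in <= ||(I + S) y||_2 <= r_out}, assumed to lie in (-1/2, 1/2)^D."""
    theta = np.asarray(theta, dtype=float)
    T = np.eye(len(theta)) + np.asarray(S, dtype=float)
    blue = []
    for n in range(1, N + 1):
        for x_j in np.asarray(centres, dtype=float):
            radius = np.linalg.norm(T @ lift(theta * n - x_j))
            if r_in <= radius <= r_out:
                blue.append(n)
                break
    return blue

def has_3ap(A):
    """True if the set A contains a, a + d, a + 2d for some d >= 1."""
    A = set(A)
    return any(2 * b - a in A for a, b in itertools.combinations(sorted(A), 2))

# Toy example in D = 2 with one centre and S = 0.
blue = blue_set(20, [0.1, 0.0], [[0.0, 0.0]], np.zeros((2, 2)), 0.15, 0.25)
print(blue, has_3ap(blue))
```

In this toy example, $\theta n$ lands in the annulus exactly when $0.1n$ lies within $[0.15, 0.25]$ of an integer, that is, for $n \in \{2, 8, 12, 18\}$, a set with no 3-term progression.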

Proposition 5.1. Suppose that $N> D^{C_2D^2}$. Then

In dealing with the progressions in the red points, we introduce a specific set $\Theta $ of rotations $\theta \in \mathbf {T}^D$ that we wish to consider. We call $\Theta $ the set of diophantine $\theta $: the terminology is not standard, but the word diophantine is used in similar ways in other contexts. The precise definition of $\Theta $ is given in Section 7 below. Roughly, $\theta $ is disqualified from $\Theta $ if the orbit $\{ \theta n : n \leqslant N\}$ exhibits certain pathological behaviours, such as being highly concentrated near $0$ or having long subprogressions almost annihilated by a large set of characters on $\mathbf {T}^D$. For our discussion in this section, the important fact about $\Theta $ is that diophantine elements are (highly) generic in the sense that

This is proven in Section 7, specifically Proposition 7.1, where the definition of $\Theta $ is given.

Now we claim that, conditioned on the event that $\theta $ is diophantine, with high probability there is no red progression of length $N^{1/r}$.

Proposition 5.2. Suppose that $N> D^{C_2D^2}$. Then

where $\Theta \subset \mathbf {T}^D$ denotes the set of diophantine elements.

The proof of Proposition 5.1 is relatively straightforward and is given in Section 6. The proof of Proposition 5.2 is considerably more involved and occupies the rest of the paper.

Let us now show how Theorem 2.1 follows essentially immediately from Propositions 5.1 and 5.2.

Proof of Theorem 2.1.

(assuming Propositions 5.1 and 5.2) First observe that, with $D = C_3 r^2$, the conditions required in Propositions 5.1 and 5.2 will be satisfied if $N> e^{C r^4 \log r}$ for a sufficiently large C. Also, in proving Theorem 2.1, we may clearly assume that r is sufficiently large.
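To see the first observation concretely (our own bookkeeping, assuming in addition that $r \geqslant C_3$): with $D = C_3 r^2$ we have $\log D = \log C_3 + 2\log r \leqslant 3 \log r$, and hence

$$\begin{align*} D^{C_2 D^2} = \exp\big(C_2 C_3^2\, r^4 \log D\big) \leqslant \exp\big(3 C_2 C_3^2\, r^4 \log r\big), \end{align*}$$

so any $C \geqslant 3C_2C_3^2$ works.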

Next, note that by Proposition 5.2 and equation (5.3), we have

This and Proposition 5.1 imply that there is some choice of $\theta , \mathbf {e}$ (in fact, a random choice works with very high probability) for which simultaneously $\operatorname {Blue}_{\mathbf {e}, \theta }$ has no 3-term progression and $\operatorname {Red}_{\mathbf {e}, \theta }$ has no $N^{1/r}$-term progression. This completes the proof of Theorem 2.1.

Let us consider the task of proving Proposition 5.2 in a little more detail. Let

$$X := N^{1/r},$$

a notational convention we will retain throughout the paper.

It suffices to show that if $N > D^{C_2D^2}$ and $\theta \in \Theta$ is diophantine, then for each fixed progression $P = \{n_0 + dn : n \leqslant X\} \subset [N]$ of length X,

Indeed, there are fewer than $N^2$ choices of $n_0$ and d, the start point and common difference of P, so Proposition 5.2 follows from equation (5.5) by the union bound.
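The counting step can be checked mechanically. In the sketch below we make our own assumptions: the index n runs over $0 \leqslant n < X$ so that P has exactly X terms, $X \geqslant 2$ and $d \geqslant 1$; `count_progressions` and `union_bound` are hypothetical helper names. The code counts the admissible pairs $(n_0, d)$ and shows there are fewer than $N^2$ of them. Consequently, if each fixed P fails with probability at most p, the probability that some P fails is less than $N^2 p$.

```python
def count_progressions(N: int, X: int) -> int:
    """Count pairs (n0, d), d >= 1, with {n0 + d*n : 0 <= n < X} inside [N] = {1, ..., N}.

    Assumption (ours, for illustration): n runs over 0 <= n < X, so each
    progression has exactly X terms; we require X >= 2.
    """
    if X < 2:
        raise ValueError("need X >= 2")
    total = 0
    for d in range(1, N + 1):
        # The largest term is n0 + d*(X - 1), so n0 ranges over 1..N - d*(X - 1).
        total += max(0, N - d * (X - 1))
    return total


def union_bound(N: int, X: int, p_single: float) -> float:
    """Bound on P(some progression fails) if each fixed one fails w.p. <= p_single."""
    return count_progressions(N, X) * p_single


if __name__ == "__main__":
    N, X = 10, 3
    print(count_progressions(N, X))  # 20, which is less than N**2 = 100
```

For example, a per-progression failure probability of $O(N^{-3})$ would give $O(N^{-1})$ overall.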

The task, then, is to show that (with very high probability) $\theta P \subset \mathbf{T}^D$ intersects one of the annuli $x_j + \pi(A_{\mathbf{e}})$. To achieve this, we proceed in two distinct stages. Denoting by $P_{\operatorname{init}} = \{n_0 + dn : n \leqslant X/2\}$ the first half of P, we show that $\theta P_{\operatorname{init}}$ at some point enters the interior of some ball $x_j + \pi(B_{\rho/10}(0))$, where $B_{\varepsilon}(0) \subset \mathbf{R}^D$ is the Euclidean ball of radius $\varepsilon$ and, as usual, $\pi : \mathbf{R}^D \rightarrow \mathbf{T}^D$ is the projection. We call this the first step.

Proposition 5.3 (First step)

Suppose that $N > D^{C_2 D^2}$. Let $\theta \in \Theta$ be diophantine. Let $d \leqslant N/X$, and consider a progression $P_{\operatorname{init}} = \{n_0 + dn : n \leqslant X/2\}$. Then $\theta P_{\operatorname{init}}$ intersects $x_j + \pi(B_{\rho/10}(0))$ for some $j \in \{1,\dots, M\}$.

Once we have a point of $\theta P_{\operatorname{init}}$ in $x_j + \pi(B_{\rho/10}(0))$, we use the remaining half of P to intersect the annulus $x_j + \pi(A_{\mathbf{e}})$. We call this the second step.

Proposition 5.4 (Second step)

Suppose that $N \geqslant D^{C_2D^2}$. Let $d \leqslant N/X$. Suppose that $\theta \in \Theta$ is diophantine, and consider a progression $\dot{P} = \{dn : n \leqslant X/2\}$. Then

Together, Propositions 5.3 and 5.4 imply equation (5.5) and hence, as explained above, Proposition 5.2. Indeed, Proposition 5.3 implies that there is some $n_0 + n_1 d \in P_{\operatorname{init}}$ (that is, some $n_1 \leqslant X/2$) such that $\theta(n_0 + n_1 d) \in x_j + \pi(B_{\rho/10}(0))$ for some $j \in \{1,\dots, M\}$. Now apply Proposition 5.4, taking $y = \theta(n_0 + n_1 d) - x_j$. With probability $1 - O(N^{-3})$ in the choice of $\mathbf{e}$, this provides some $n_2 d \in \dot{P}$ (that is, some $n_2 \leqslant X/2$) such that $y + \theta n_2 d \in \pi(A_{\mathbf{e}})$.

If $n_1, n_2$ can both be found (which happens with probability $1 - O(N^{-3})$ in the choice of $\mathbf{e}$), then

$$\theta(n_0 + (n_1 + n_2)d) = x_j + y + \theta n_2 d \in x_j + \pi(A_{\mathbf{e}}),$$

which means that $n_0 + (n_1 + n_2)d$, an element of P since $n_1 + n_2 \leqslant X$, is coloured blue. This establishes equation (5.5).
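The two-step scheme, and the identity that glues the steps together, can be illustrated by a small search. This is a toy sketch under loudly stated assumptions: a spherical shell $\{0.8\rho \leqslant \Vert z \Vert_2 \leqslant \rho\}$ stands in for the ellipsoidal annulus $\pi(A_{\mathbf{e}})$; the centres and $\theta$ are arbitrary hypothetical choices, not the points $x_j$ of Proposition 4.1 or an element of $\Theta$; and the search is deterministic, whereas Propositions 5.3 and 5.4 concern every diophantine $\theta$ and a random $\mathbf{e}$. The names `two_step`, `torus_rep`, `torus_l2` and `orbit` are ours.

```python
import math
from typing import Optional, Sequence, Tuple

Vec = Tuple[float, ...]


def torus_rep(z: Sequence[float]) -> Vec:
    """Representative of z modulo Z^D in [-1/2, 1/2)^D, a concrete choice of pi^{-1}."""
    return tuple(t - math.floor(t + 0.5) for t in z)


def torus_l2(z: Sequence[float]) -> float:
    """Euclidean length of the representative of z in [-1/2, 1/2)^D."""
    return math.sqrt(sum(t * t for t in torus_rep(z)))


def orbit(theta: Sequence[float], m: int) -> Vec:
    """The point theta * m of T^D, reduced to [0, 1)^D."""
    return tuple((t * m) % 1.0 for t in theta)


def two_step(theta: Sequence[float], centres: Sequence[Sequence[float]],
             n0: int, d: int, X: int, rho: float,
             inner: float) -> Optional[Tuple[int, int, int]]:
    """Toy version of the two-step search; returns (n1, n2, j) or None.

    Hypothetical model: the annulus around each centre is the spherical
    shell inner <= |z|_2 <= rho, standing in for pi(A_e).
    """
    half = X // 2
    for n1 in range(1, half + 1):
        p = orbit(theta, n0 + n1 * d)
        for j, x in enumerate(centres):
            # y = theta(n0 + n1 d) - x_j, taken as its small representative
            y = torus_rep([a - b for a, b in zip(p, x)])
            if torus_l2(y) < rho / 10:
                # First step succeeded; second step: walk along theta * n2 * d from y.
                for n2 in range(1, half + 1):
                    q = [a + b for a, b in zip(y, orbit(theta, n2 * d))]
                    if inner <= torus_l2(q) <= rho:
                        return n1, n2, j
                return None
    return None


if __name__ == "__main__":
    theta = (math.sqrt(2) - 1, math.sqrt(3) - 1)
    centres = [(0.25, 0.25), (0.25, 0.75), (0.75, 0.25), (0.75, 0.75)]
    print(two_step(theta, centres, n0=1, d=1, X=20000, rho=0.2, inner=0.16))
```

Whatever $n_1, n_2$ the search returns, $\theta(n_0 + (n_1+n_2)d) - x_j$ agrees with $y + \theta n_2 d$ modulo $\mathbf{Z}^D$, which is exactly why the point $n_0 + (n_1+n_2)d$ lands in the annulus around $x_j$.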

The remaining tasks in the paper are therefore as follows.

• Establish Proposition 5.1 (blue 3-term progressions). This is relatively straightforward and is covered in Section 6.

• Give the full definition of $\Theta$, the set of diophantine $\theta$, and prove equation (5.3). This is carried out in Section 7.

• Prove Proposition 5.3, the ‘first step’ for the red progressions. This is carried out in Section 8.

• Prove Proposition 5.4, the ‘second step’ for the red progressions.

The first three tasks, as well as an outline of the fourth, are carried out in Part III of the paper (Sections 6, 7, 8 and 9, respectively).

The fourth task (proving Proposition 5.4) is very involved. We give a technical outline in Section 9, which reduces it to the task of proving three further propositions: Propositions 9.1, 9.2 and 9.3. These propositions are established in Parts IV and V of the paper.

Part III Monochromatic progressions

6 No blue 3-term progressions

In this section, we establish Proposition 5.1. Let us recall the statement.

Proposition 5.1. Suppose that $N > D^{C_2D^2}$. Then

Recall that the definition of $\operatorname{Blue}_{\theta, \mathbf{e}}$ is given in equation (5.1). The following lemma is a quantitative version, for ellipsoidal annuli, of Behrend’s observation that no three points on a sphere lie in arithmetic progression. The spherical version of this was already used in [Reference Green and Wolf11].

Lemma 6.1. Fix $e \in [-\frac{1}{D^4}, \frac{1}{D^4}]^{D(D+1)/2}$. Suppose that $u, u + v, u + 2v$ all lie in $A_{e}$, where the ellipsoidal annulus $A_{e}$ is defined as in equation (4.2), but with e fixed. Then $\Vert v \Vert_2 \leqslant \frac{1}{2}N^{-2/D}$.

Proof. We have the parallelogram law

Applying this with $x = (1 + \sigma(e))u$ and $y = (1 + \sigma(e))v$ gives

The result now follows from equation (4.4).
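The parallelogram law enters through the identity $\Vert u \Vert_2^2 - 2\Vert u+v \Vert_2^2 + \Vert u+2v \Vert_2^2 = 2\Vert v \Vert_2^2$ (our rendering of how it is used; the displayed form in the proof may differ). If $u, u+v, u+2v$ all have norm in $[r_{\mathrm{in}}, r_{\mathrm{out}}]$, the left-hand side is at most $2(r_{\mathrm{out}}^2 - r_{\mathrm{in}}^2)$, so $\Vert v \Vert_2 \leqslant (r_{\mathrm{out}}^2 - r_{\mathrm{in}}^2)^{1/2}$; when $r_{\mathrm{in}} = r_{\mathrm{out}}$ this is Behrend’s observation. The Python sketch below checks both facts numerically for a spherical annulus. The spherical setting is a simplification of ours (Lemma 6.1 concerns the ellipsoidal annulus $A_e$, reached via the substitution $x = (1+\sigma(e))u$), and the function names are hypothetical.

```python
import math
import random
from typing import Sequence


def norm_sq(v: Sequence[float]) -> float:
    """Squared Euclidean norm."""
    return sum(t * t for t in v)


def second_difference(u: Sequence[float], v: Sequence[float]) -> float:
    """|u|^2 - 2|u + v|^2 + |u + 2v|^2; by the parallelogram law this equals 2|v|^2."""
    u1 = [a + b for a, b in zip(u, v)]
    u2 = [a + 2 * b for a, b in zip(u, v)]
    return norm_sq(u) - 2 * norm_sq(u1) + norm_sq(u2)


def max_step_in_annulus(r_in: float, r_out: float) -> float:
    """If u, u + v, u + 2v all have norm in [r_in, r_out], then |v| <= this value."""
    return math.sqrt(r_out ** 2 - r_in ** 2)


if __name__ == "__main__":
    rng = random.Random(0)
    u = [rng.uniform(-1, 1) for _ in range(4)]
    v = [rng.uniform(-1, 1) for _ in range(4)]
    # The two printed numbers agree up to floating-point rounding.
    print(second_difference(u, v), 2 * norm_sq(v))
    # Exact sphere (r_in == r_out): no nontrivial 3-term progression fits.
    print(max_step_in_annulus(1.0, 1.0))
```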

Condition on the event that $\mathbf{e} = e$, and let $\theta \in \mathbf{T}^D$ be chosen uniformly at random. Suppose that $n, n + d, n + 2d$ are all coloured blue. Then for some $i, j, k \in \{1,\dots, M\}$ we have $\theta n \in x_i + \pi(A_{e})$, $\theta(n+d) \in x_j + \pi(A_{e})$ and $\theta(n+2d) \in x_k + \pi(A_{e})$. Since $\theta(n+2d) - 2\theta(n+d) + \theta n = 0$, we have $x_i - 2x_j + x_k \in \pi(A_{e}) - 2\pi(A_{e}) + \pi(A_{e})$, so $\Vert x_i - 2x_j + x_k \Vert_{\mathbf{T}^D} \leqslant 4\rho$, since every $x \in \pi(A_{e})$ has $\Vert x \Vert_{\mathbf{T}^D} = \Vert \pi^{-1} x \Vert_{\infty} \leqslant \Vert \pi^{-1} x \Vert_2 \leqslant \rho$. By the construction of the points $x_1,\dots, x_M$ (specifically, Proposition 4.1 (1)), it follows that $i = j = k$.
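The bound $4\rho$ is just the triangle inequality on the torus. Writing, in notation introduced here for convenience, $\theta n = x_i + a_i$, $\theta(n+d) = x_j + a_j$ and $\theta(n+2d) = x_k + a_k$ with $a_i, a_j, a_k \in \pi(A_e)$, and using that $\Vert \cdot \Vert_{\mathbf{T}^D}$ is symmetric and subadditive:

```latex
\begin{align*}
0 = \theta(n+2d) - 2\theta(n+d) + \theta n
  &= (x_i - 2x_j + x_k) + (a_i - 2a_j + a_k), \\
\Vert x_i - 2x_j + x_k \Vert_{\mathbf{T}^D}
  = \Vert a_i - 2a_j + a_k \Vert_{\mathbf{T}^D}
  &\leqslant \Vert a_i \Vert_{\mathbf{T}^D} + 2\Vert a_j \Vert_{\mathbf{T}^D}
     + \Vert a_k \Vert_{\mathbf{T}^D}
  \leqslant 4\rho.
\end{align*}
```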

We apply Lemma 6.1 with $u = \pi^{-1}(\theta n - x_i)$ and $v = \pi^{-1}(\theta d)$, both of which lie in $B_{2\rho}(0) \subset B_{1/10}(0)$. Since $\pi(u + \lambda v) = \theta(n + \lambda d) - x_i$ for $\lambda \in \{0,1,2\}$, we see that $\pi(u + \lambda v) \in \pi(A_{e})$. Therefore, since $u + \lambda v \in B_{1/5}(0)$ and $A_{e} \subset B_{1/5}(0)$, and two points of $B_{1/5}(0)$ with the same image under $\pi$ differ by an integer vector of length less than $2/5$ and hence coincide, we have $u + \lambda v \in A_{e}$.

It follows from Lemma 6.1 that $\Vert v \Vert_2 \leqslant \frac{1}{2}N^{-2/D}$. Therefore, $\Vert \theta d \Vert_{\mathbf{T}^D} = \Vert \pi^{-1}(\theta d) \Vert_{\infty} \leqslant \Vert \pi^{-1}(\theta d) \Vert_2 \leqslant \frac{1}{2}N^{-2/D}$.

If $d \neq 0$ then, with $\theta \in \mathbf{T}^D$ chosen uniformly at random, $\theta d$ is uniformly distributed on $\mathbf{T}^D$. Therefore, the probability that there is any blue 3-term progression $(n, n+d, n+2d)$ with common difference d is bounded above by the probability that $\theta d$ lies in the box $\{x \in \mathbf{T}^D : \Vert x \Vert_{\mathbf{T}^D} \leqslant \frac{1}{2}N^{-2/D}\}$, a cube of side $N^{-2/D}$ and hence of volume $N^{-2}$.

Therefore, for any fixed $e \in [-\frac{1}{D^4}, \frac{1}{D^4}]^{D(D+1)/2}$, summing over the at most N possible choices for d, we have

from which Proposition 5.1 follows immediately by removing the conditioning on $\mathbf{e} = e$.

This completes the proof of Proposition 5.1. Note that the randomness of $\theta$ was vital, but the ability to choose $\mathbf{e}$ randomly was irrelevant here, since the analysis works for any fixed $\mathbf{e} = e$.
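The volume computation in this proof can be sanity-checked numerically. The sketch below is ours (`torus_sup_norm` and `estimate_box_probability` are hypothetical names): it samples $\theta$ uniformly from $\mathbf{T}^D$, fixes a nonzero d, and estimates the probability that $\theta d$ lands in the box $\{\Vert x \Vert_{\mathbf{T}^D} \leqslant \frac{1}{2}N^{-2/D}\}$. The exact value is $(N^{-2/D})^D = N^{-2}$, and summing over at most N values of d gives $N \cdot N^{-2} = N^{-1}$. Only the randomness of $\theta$ enters, in line with the remark above.

```python
import random
from typing import Sequence


def torus_sup_norm(z: Sequence[float]) -> float:
    """||z||_{T^D}: sup-norm distance from z to the nearest point of Z^D."""
    return max(abs(t - round(t)) for t in z)


def estimate_box_probability(D: int, N: int, d: int, trials: int, seed: int = 0) -> float:
    """Monte Carlo estimate of P(||theta * d||_{T^D} <= N^{-2/D} / 2), theta uniform on T^D."""
    if d == 0:
        raise ValueError("d must be nonzero")
    rng = random.Random(seed)
    half_side = 0.5 * N ** (-2.0 / D)
    hits = 0
    for _ in range(trials):
        theta = [rng.random() for _ in range(D)]
        # theta * d reduced mod 1; for integer d != 0 this is again uniform on T^D
        if torus_sup_norm([(t * d) % 1.0 for t in theta]) <= half_side:
            hits += 1
    return hits / trials


if __name__ == "__main__":
    D, N, d = 2, 10, 7
    exact = N ** -2.0  # volume of a cube of side N^{-2/D} in T^D
    print(estimate_box_probability(D, N, d, 100_000), exact)
```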

7 Diophantine conditions

We turn now to the definition of $\Theta$, the set of ‘diophantine’ rotations $\theta \in \mathbf{T}^D$. Here (as usual) $X = N^{1/r}$.

Proposition 7.1. Suppose that $D = C_3 r^2$ and $N \geqslant D^{D^2}$. Define