

Hausdorff dimension of sets defined by almost convergent binary expansion sequences

Part of:

Probabilistic theory: distribution modulo $1$; metric theory of algorithms

Classical measure theory

Published online by Cambridge University Press: 13 March 2023

Abstract

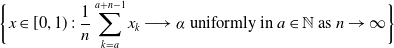

In this paper, we study the Hausdorff dimension of sets defined by almost convergent binary expansion sequences. More precisely, the Hausdorff dimension of the following set
\begin{align*} \bigg\{x\in[0,1)\;:\;\frac{1}{n}\sum_{k=a}^{a+n-1}x_{k}\longrightarrow\alpha\textrm{ uniformly in }a\in\mathbb{N}\textrm{ as }n\rightarrow\infty\bigg\} \end{align*}
is determined for any $ \alpha\in[0,1] $, where $x_k$ denotes the $k$th digit in the binary expansion of $x$. This completes the answer to a question considered by Usachev [Glasg. Math. J. 64 (2022), 691–697], where only the dimension for rational $ \alpha $ was given.
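The phrase "uniformly in $a$" means that $\sup_{a\in\mathbb{N}}\big|\frac{1}{n}\sum_{k=a}^{a+n-1}x_k-\alpha\big|\to 0$ as $n\to\infty$. In other words, every block of $n$ consecutive binary digits must have an average close to $\alpha$, wherever the block starts. The Python sketch that follows illustrates this condition only. It is not the paper's method, and a finite computation cannot certify a limit. It computes the largest window deviation over a finite prefix of digits. The helper names `max_window_deviation` and `growing_runs` are hypothetical. Two example sequences are compared:

- The periodic digits of $x=1/3=0.0101\ldots_2$, where the deviation from $\alpha=1/2$ is at most $1/(2n)$.
- Alternating runs of 1s and 0s of doubling length, where windows lying inside a single long run have average 1 or 0, so the deviation stays at $1/2$.

```python
from fractions import Fraction
from typing import List, Sequence


def max_window_deviation(digits: Sequence[int], n: int, alpha: Fraction) -> Fraction:
    """Largest |(1/n) * (x_a + ... + x_{a+n-1}) - alpha| over all windows of
    length n that fit inside the finite prefix `digits` (digits[0] is x_1).

    Only a finite illustration of the sup over a; it cannot prove a limit."""
    if not 1 <= n <= len(digits):
        raise ValueError("window length must be between 1 and len(digits)")
    window = sum(digits[:n])  # sum of the first window x_1, ..., x_n
    worst = abs(Fraction(window, n) - alpha)
    for start in range(1, len(digits) - n + 1):
        # Slide the window one step: drop digits[start-1], add digits[start+n-1].
        window += digits[start + n - 1] - digits[start - 1]
        worst = max(worst, abs(Fraction(window, n) - alpha))
    return worst


def growing_runs(length: int) -> List[int]:
    """Hypothetical test sequence: runs 1, 00, 1111, 0^8, ... of doubling length."""
    digits: List[int] = []
    bit, run = 1, 1
    while len(digits) < length:
        digits.extend([bit] * run)
        bit, run = 1 - bit, 2 * run
    return digits[:length]


if __name__ == "__main__":
    half = Fraction(1, 2)
    periodic = [0, 1] * 500  # first 1000 binary digits of 1/3 = 0.0101..._2
    print(max_window_deviation(periodic, 10, half))  # 0
    print(max_window_deviation(periodic, 11, half))  # 1/22
    print(max_window_deviation(growing_runs(1000), 100, half))  # 1/2
```

The window sum is updated in constant time per step rather than recomputed. `Fraction` keeps the averages exact, so the deviations compare without rounding error.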

Information

- Type: Research Article

- Copyright: © The Author(s), 2023. Published by Cambridge University Press on behalf of Glasgow Mathematical Journal Trust

References

Besicovitch, A., On the sum of digits of real numbers represented in the dyadic system, Math. Ann. 110 (1935), 321–330.

Borel, É., Les probabilités dénombrables et leurs applications arithmétiques, Rend. Circ. Mat. Palermo 26 (1909), 247–271.

Connor, J., Almost none of the sequences of 0’s and 1’s are almost convergent, Int. J. Math. Math. Sci. 13 (1990), 775–777.

Esi, A. and Çatalbaş, M. N., Almost convergence of triple sequences, Global J. Math. A 2 (2014), 6–10.

Falconer, K., Fractal Geometry: Mathematical Foundations and Applications, 2nd edition (John Wiley & Sons, Hoboken, NJ, 2003).

Lorentz, G., A contribution to the theory of divergent sequences, Acta Math. 80 (1948), 167–190.

Mohiuddine, S., An application of almost convergence in approximation theorems, Appl. Math. Lett. 24 (2011), 1856–1860.

Usachev, A., Hausdorff dimension of the set of almost convergent sequences, Glasg. Math. J. 64 (2022), 691–697.