Introduction

Recruitment of participants into clinical studies is critical to the success of any research study; poor recruitment raises study costs and jeopardizes study completion. It is widely understood among the research community that a large number of research studies fail to meet their recruitment goals [1,2]. In addition, many studies suffer from a lack of diversity among participants [3,4]. It is important that studies recruit a diverse population in order to ensure that study results can be applied to real-world patients of varying age, gender, race, and ethnicity.

The Clinical and Translational Science Awards (CTSA) Program (https://ncats.nih.gov/ctsa) is supported by the National Center for Advancing Translational Sciences (NCATS) with the purpose of improving clinical and translational research and has a commitment to cultivating innovative tools and methodologies for recruitment and retention. Specific medical research institutions act as CTSA hubs to carry out functions to support clinical and translational research across the country. Recruitment and retention of research participants are essential elements of clinical research, and CTSAs work to facilitate these important activities. Each hub provides recruitment and retention support in unique ways through a variety of services, and the network of CTSAs provides a rich source of knowledge and information.

CTSAs have been addressing recruitment challenges by developing a portfolio of research recruitment strategies within their individual institutions and through a national centralized clinical research support infrastructure [5]. While the structure and populations supported by these institutions differ, the recruitment challenges faced by investigators at the CTSA institutions overlap substantially. These commonalities present important opportunities to share innovations and expertise with one another and to collaborate.

Understanding both the unique and common elements of CTSA recruitment programs was an essential step to fostering recruitment collaborations across CTSA institutions; therefore, it was important to understand what recruitment-related services and resources institutions are currently offering to their research investigators. To assess this, we conducted an inventory of participant recruitment resources by asking each CTSA to complete a survey of what they currently offer related to recruitment support.

Methods

The CTSA Recruitment and Retention working group, established in 2015, developed an electronic survey to collect information about resources and processes in the area of participant recruitment. In 2016, a similar survey was created to summarize recruitment services that CTSA institutions offered, with the goal of sharing the results among the Recruitment and Retention working group. This survey was developed by members of this working group and pilot tested at a few CTSA institutions before launching. The REDCap survey was distributed to members of the working group and sent to CTSA administrators.

The current survey was designed by the working group and pilot tested with colleagues at three CTSA institutions; feedback led to further questionnaire refinement before deployment. The survey was estimated to take 10–20 minutes to complete and included many of the same questions from the 2016 survey related to recruitment registry use, recruitment feasibility assessment tools, clinical trial listings, experience recruiting different populations (e.g., older adults, LGBTQ+ [lesbian, gay, bisexual, transgender, and queer sexual orientations], rural, patients, and children), resources and services, and operations. Additional questions regarding workforce development, program evaluation, and specific questions about the use of electronic health records (EHRs) were included in the current survey. The final survey instrument (see Supplemental Digital Appendix 1) was distributed to CTSA Hubs between May and July 2019. Each CTSA was asked to submit one non-anonymized response; however, one CTSA submitted separate responses for four affiliated institutions since each had an independent recruitment and retention program. Study data were collected and managed using REDCap electronic data capture tools hosted at Indiana University [6,7]. REDCap (Research Electronic Data Capture) is a secure, web-based software platform designed to support data capture for research studies, providing (1) an intuitive interface for validated data capture; (2) audit trails for tracking data manipulation and export procedures; (3) automated export procedures for seamless data downloads to common statistical packages; and (4) procedures for data integration and interoperability with external sources. The Institutional Review Board (IRB) at Indiana University determined that the survey met criteria for Exempt Review and approved the survey’s Request for Exemption on 05/15/2019 (IRB #1904520178).

The survey was distributed by sharing the REDCap survey link on the Zoho platform that the working group uses to communicate, which is supported by Vanderbilt University. The survey was also distributed to the administrators at each CTSA. The survey announcement was pinned on the Zoho platform page, and a reminder was sent to this group approximately one month later.

For closed-ended responses, descriptive statistics were tabulated and reported. Content thematic coding examined patterns or themes in participants’ open-ended survey responses. The aim of the content thematic coding process was to present key themes regarding participants’ responses. Codes were created to identify recurrent concepts that represented the range of topics, views, experiences, or beliefs voiced by participants [8]. Themes were coded independently by three research team members. The thematic codes were then reviewed to ensure that they represented a coherent thematic concept. Any discrepancies were discussed and resolved by consensus [9–11].

Results

Characteristics of Respondents

The survey was sent to 60 CTSAs. One CTSA had four affiliates that completed the survey, for a total of 64 potential responses. Of these, 40 (63%) completed the survey, with a mix of small, medium, and large hubs based on 2018 funding dollars. There were small differences in the characteristics of the institutions that did and did not respond to the survey. For example, 27% of institutions that did not respond to the survey were large institutions, while 18% of those that did respond were considered to be large. The average age of CTSA institutions that did not respond to the survey was 10.7 years versus 10.2 years for those that did respond.

Tables and figures representing quantitative survey data are compiled in Supplemental Digital Appendix 2. The majority of the 40 institutions (83%) reported having a website where information about their recruitment services/resources was available (Fig. 1). Eighty-five percent reported having a website where recruiting clinical trials were listed. Only 33% shared the link to a website where potential participants could register to be contacted about research participation.

Fig. 1. Online presence of CTSA institutions. Percent of institutions reporting online resources for recruitment and retention (N = 40 institutions).

General Information on the Recruitment Program

Recruitment programs varied in structure, functions, and staffing within institutions. The number of full-time employees (FTEs) within each institution’s recruitment program ranged from 0 to 7 with a median of 1.25. Thirty percent of institutions had one FTE and 18% had two FTEs. Seventy-two percent of all programs collaborate with community organizations including public agencies, faith-based organizations, community businesses, patient advocacy groups, grassroots organizations, and community health centers. Most institutions (87%) provided data on which stakeholders they work with. These institutions reported that they work with investigators (85%) and study coordinators (85%) more often than with patients (50%), community organizations (48%), or research volunteers (45%).

Fifty-five percent of institutions do not charge research teams a fee for their recruitment and retention services. Presentations, referrals, word of mouth, and websites represented the most popular ways research teams learn about a recruitment program’s services (reported by >80% of institutions). Less popular methods included newsletters and emails (>60% of institutions), promotion through offices outside of the CTSA (43%), and webinars (33%). Attending events within institutions or in the community was another way to promote recruitment services.

Recruitment Services Offered

Respondents indicated which recruitment resources and services they provide to their investigators (Fig. 2). The most frequently offered services were individual consultation with a recruitment specialist, use of EHRs to facilitate recruitment, and recruitment feasibility assessments. These were followed closely by designing recruitment plans and helping with study advertisements. Less common services were screening participants or scheduling study visits, and direct recruitment of participants on behalf of investigators. Least common was working directly with commercial companies that offer recruitment and retention support (10%). We asked institutions to indicate which companies they work with. These companies included advertisers (newspaper companies, radio advertisers, and television companies), companies that provide feasibility assessment tools (TriNetX), recruiters (BBK Worldwide, ThreeWire), and the Center for Information and Study on Clinical Research Participation, a non-profit recruitment research institute that assisted with creating research education materials. Twenty-eight percent of institutions also listed additional services as described in Table 1. There appears to be a relationship between the number of full-time staff within a recruitment program and the number of services that each program offers, as displayed in Fig. 3.

Fig. 2. Summary of recruitment services offered by CTSA institutions. Percent of institutions reporting offering different recruitment and retention services to researchers (N = 40 institutions).

Table 1. Recruitment and retention resources listed as “other” (N = 11)

Respondents were asked to select recruitment and retention services offered at their institutions. Any resources that did not fit into a provided category were listed as “other” and displayed here.

Fig. 3. Comparison of the number of FTEs dedicated to recruitment services and the number of services offered. Comparison of the number of recruitment and retention resources/services versus the number of full-time employees (FTEs) dedicated to recruitment services.

The survey also asked if CTSAs were considering any future additional services, to which 58% of institutions provided responses (see Table 2). Future services included user-friendly methods for listing clinical trials and inviting participants to registries, collaboration with community organizations, using the EHR to facilitate patient recruitment, evaluation of ongoing recruitment strategies and research participant experiences, social media advertising support, and training in recruitment and consent methods, particularly in special populations.

Table 2. Potential future recruitment and retention services (N = 23)

Participants were asked if there were any recruitment resources or services their institutions were considering for the future, which are categorized and displayed here.

Populations and Areas of Expertise

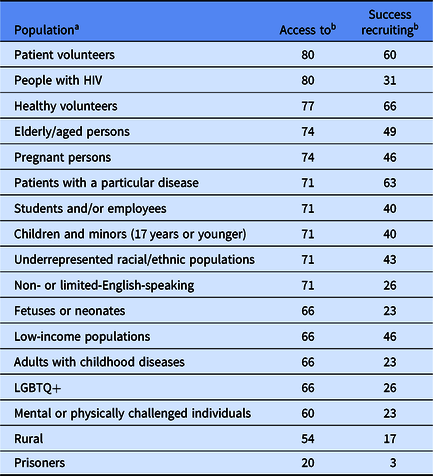

CTSA sites were surveyed about which populations they perceived as accessible for recruitment as well as the ease of recruiting from these populations (Table 3), and 35 institutions provided responses. Institutions reported the greatest access to patient volunteers (80%), people with HIV (80%), healthy volunteers (77%), pregnant women (74%), and elderly persons (74%). Sites reported having the least access to prisoners (20%) and rural populations (54%). The populations in which institutions observed the most recruitment success were healthy volunteers (66%), patient volunteers (60%), and patients with a particular disease (63%). While a majority of sites reported having access to persons with HIV, only 31% of institutions reported success recruiting this population. When asked specifically about which populations were most difficult to recruit, 40% of institutions selected non- or limited-English-speaking persons, and 31% selected rural populations.

Table 3. Self-reported institutional recruitment success of different special populations (N = 35 institutions)

Institutions were asked about their perceptions of access and success with recruiting various populations.

a Populations are ranked in order of percent of institutions reporting perceived access to that population.

b Percent of all institutions reporting perceived access to and success recruiting.

LGBTQ+, lesbian, gay, bisexual, transgender, and queer sexual orientations.

Registries and Web Listings of Clinical Trials

Institutions can facilitate study recruitment by listing studies currently open to enrollment and by providing a way for the public to indicate research interest or join a registry of potential participants. All sites stated they used at least one type of registry to recruit participants into studies. ResearchMatch was the most widely used registry (83%); institutional registries were the second most popular (55%), followed closely by disease-specific registries (48%). Although ResearchMatch was used by the most sites, institutional registries were more commonly used as a primary registry over ResearchMatch (40% vs. 33%). The reasons given for preferring institutional registries over ResearchMatch include that institutional registries “are faster and easier for participants to join”, can be linked with EHR and include Health Insurance Portability and Accountability Act authorization (allowing researchers to recruit and contact based on medical records rather than self-report), allow for face-to-face outreach to enroll people without Internet access, provide a larger percentage of participants from the local population, and better serve investigators interested in “treatment, prevention and genetic studies” (as opposed to ResearchMatch which respondents described as “better for observational and quality of life studies”). While 55% of CTSA institutions have a local registry, only 28% of these registries were managed through the CTSA award. Numbers of registrants in the institutional registries ranged from 291 to 200,000, with a median of 8,905 registrants.

Most (85%) CTSA institutions listed their clinical studies on an institutional website. About half used information from clinicaltrials.gov to populate these websites (45%), while about a third used a clinical trial management system (35%) or relied on information provided by the research team (33%).

Electronic Health Records

Most (85%) CTSA sites offer feasibility services to determine numbers of possibly eligible participants. This process includes searching the EHR itself (70%) and using tools such as Informatics for Integrating Biology and the Bedside (i2b2), which allows researchers to query medical records at a particular institution (70%), and the Accrual to Clinical Trials network, which allows researchers to search medical records across multiple institutions (53%).

Fifty-three percent of institutions reported having a program where patients can give authorization to be contacted about studies for which they are eligible. Of those with this program, 56% use an opt-in method, while the rest use an opt-out method. Overall, EHRs were used to identify and recruit patients in 85% of sites; of these 34 sites, 56% use EPIC software to manage medical records. Forty-seven percent of CTSAs directly contact patients about research participation using their EHR system, and 29% plan to develop this process. The most common methods for contacting patients about research participation included mailings (74%), phone calls (65%), email (47%), and the patient portal (41%). CTSAs cited the following difficulties with this process: too many medical record systems leading to decentralized patient information, lack of efficient methods for screening health records, and requirements involving provider and IRB approvals prior to contact.

Advertising and Social Media

Outside of medical record systems, CTSAs facilitated recruitment by assisting with social media advertising. Most CTSAs (73%) provided assistance with the design, creation, and placement of study advertisements. The social media channel most often used for advertising was Facebook (94%), while Twitter (50%) and Instagram (28%) were used less frequently (Fig. 4). While 61% of CTSAs were willing to share social media expertise with other CTSAs, only 34% of CTSAs reported feeling somewhat or very comfortable with using social media for recruitment, and 17% reported being very uncomfortable with using social media. Available training materials for social media recruitment ranged from institutional rules for social media posting to in-depth handbooks for creating and targeting ads to specific populations using various social media platforms.

Fig. 4. What social media channels have you used for participant recruitment? Percent of institutions that reported using each social media channel for recruitment and retention (N = 18 institutions).

Program Evaluation

To evaluate their recruitment programs, 80% of CTSA institutions track who uses their services, and 60% track the status of the projects receiving recruitment assistance. About a third (30%) of CTSAs currently evaluate their studies’ accrual and recruitment/retention practices, and 15% of CTSAs specifically evaluate the recruitment and retention of underrepresented populations. Other CTSAs track the number of studies conducted and the number of participants, along with the number of investigator consultations and service refusals. Some (35%) CTSAs collect satisfaction data, which include surveys for both study teams and research participants to evaluate staff knowledge, professionalism, and usefulness. Thirty percent of CTSAs also collect data for the Accrual Index, a metric to evaluate the timeliness of accrual, which is calculated by dividing the accrual target by the projected time to accrual completion [12].
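
As an illustration of how a timeliness metric of this kind might be computed, the sketch below assumes one plausible reading of the Accrual Index: the fraction of the accrual target reached so far, divided by the fraction of the projected accrual time that has elapsed. This normalized form is an assumption, not a formula taken from the survey, and the function name, parameter names, and example numbers are hypothetical.

```python
def accrual_index(enrolled, accrual_target, days_elapsed, projected_days):
    """Hypothetical helper: fraction of target accrued / fraction of projected time elapsed.

    This normalized form is an assumed reading of the metric, used for illustration only.
    """
    if accrual_target <= 0 or projected_days <= 0 or days_elapsed <= 0:
        raise ValueError("accrual_target, projected_days, and days_elapsed must be positive")
    fraction_accrued = enrolled / accrual_target      # share of the target enrolled so far
    fraction_elapsed = days_elapsed / projected_days  # share of the planned accrual period used
    return fraction_accrued / fraction_elapsed


# Made-up study: 50 of 100 participants enrolled after 180 of 360 projected days.
on_schedule = accrual_index(enrolled=50, accrual_target=100, days_elapsed=180, projected_days=360)
```

Under this reading, a value near 1 would suggest that enrollment is keeping pace with the projection, while values below 1 would suggest that accrual is lagging.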

Workforce Development

Currently, 68% of CTSAs report having courses, workshops, online trainings, brown bag events, round tables, and seminars offered to researchers as training in recruitment and retention. Respondents further described these offerings as monthly forums, coordinator training, professional and lay presentations, in-person workshops, grand rounds, trainings for special populations (e.g., rural and HIV community), and personalized recruitment program training.

Qualitative research analytic methods were used with all open-ended survey questions in order to provide contextual information that complements and extends the quantitative survey findings and to identify areas of recruitment and retention that may benefit from workforce development programs. Recurring phrases were categorized into themes for further interpretation across the entire range of participant responses as well as within targeted domains. Common themes included: patient-initiated research contact; option to “opt out” of medical records research; networking and community connections; recruitment knowledge services; community outreach; online resources; special populations; and user-friendly tools. These themes point to areas of workforce development that could be addressed through new training programs and resources. Further research and evaluation could refine these channels and facilitate practical recommendations to improve the experience of research teams and ultimately the recruitment of participants.

We compared these 2019 data with a similar CTSA survey we conducted in 2016, to which 21 of 64 institutions responded. The range of FTEs dedicated to recruitment and retention services was similar in both years. Compared to 2016, the percentage of institutions that reported offering recruitment services and resources has increased. For example, in 2016, 48% of institutions offered recruitment consultations to researchers, which increased in 2019 to 85%. Also, in 2016, 19% of institutions offered social media campaigns/postings, while in 2019, 45% of institutions provided social media advertising services. The percentage of institutions reporting the use of the EHR for recruitment and retention also increased (14% in 2016 versus 85% in 2019). There was a small increase in the percentage of institutions that charge for their services, from 25% in 2016 to 33% in 2019. Survey data do not capture the reasons for the increase in fee-for-service. CTSA institutions described other support for recruitment services to include National Institutes of Health grant funding, institutional support, and vouchers through clinical research organizations. Institutions described plans to re-evaluate fees once future recruitment services are developed. The percentage of institutions that reported using ResearchMatch as their primary volunteer registry decreased (71% in 2016 vs. 33% in 2019), as did the percentage of institutions primarily using an institutional registry (57% in 2016 vs. 40% in 2019). Finally, there was a similar percentage of institutions that have a website where clinical trials are posted (90% in 2016 vs. 85% in 2019). Any observed differences between the two survey results may be due to differences in the number of participating institutions but can also indicate a shift in the methods and tools used for recruitment.

Discussion

A variety of recruitment and retention resources exist across a sample of 40 CTSA institutions, and this inventory serves as a way to compile a summary of recruitment services currently being offered and reveal the direction that clinical research is headed. Research institutions can use this information to identify areas where their recruitment resources may be lacking, thus creating opportunities for collaboration in the development of these services.

Analyzing qualitative responses yielded common themes across institutions including the use of online resources such as websites for registries or clinical trial directories, and the focus on a participant-initiated approach, where potential research participants were responsible for opting in or out of joining registries or allowing contact about research participation. Continuing to make recruitment resources such as registries and clinical trial listings publicly accessible online could facilitate recruitment and encourage patients and community members to initiate engagement in clinical research.

This survey also compiled an extensive list of recruitment services that institutions considered offering in the future, including methods for listing currently enrolling studies, community outreach, social media advertising, utilizing the EHR, and developing recruitment operations and training. Specifically, institutions mentioned the need for user-friendly tools for public-facing clinical trial listings and a need for more community engagement through community advisory boards and networking with local community organizations to assess needs and assist with recruitment. Connections to the community could also assist with recruitment of special populations, such as rural communities or non-English-speaking populations, which many institutions described as difficult to recruit. Institutions that have successfully established community connections or work closely with their community engagement programs could collaborate with other institutions that need assistance in this area.

Some institutions had familiarity with social media advertising and even shared detailed handbooks providing guidance in these areas, while others reported much less comfort with using social media platforms. Sixty-one percent of institutions were willing to share their social media expertise, though only 17% reported being very comfortable with social media advertising; this gap suggests that sharing expertise could help institutions where social media experience is lacking.

Sharing expertise in the realm of EHR utilization would also be beneficial. Some institutions had innovative recruitment practices using the EHR, while almost a third of respondents were in the process of developing these methods. Institutions could also examine the structure and staffing of their recruitment programs to compare the variety of resources offered and the number of staff required. Training these staff, and the researchers they support, in recruitment, retention, and consent practices, as some institutions described, would be beneficial to recruitment overall.

Comparing the 2016 survey with the current 2019 survey shows some increases in frequency of recruitment consultations, EHR usage, social media advertising, and changes in how registries are used. As institutions have established recruitment services, the capacity to provide recruitment consultations has increased. Technological advances have likely driven the increase in EHR tools and social media advertising. The decrease in the primary use of ResearchMatch and institutional registries could be due to an increase in department-specific or disease-specific registries or an increase in EHR usage to allow for a more targeted pool of research participants.

While the information collected was an important first step to understanding the recruitment resources available to institutions, there are limitations to the methodology used in this study. The survey may not fully represent a comprehensive view of recruitment and retention services at CTSA institutions because it sampled only about two-thirds of the institutions. The completeness of the data we collected also depended on survey respondents’ awareness of all available services, which may have varied with their roles in their programs. Although qualitative questions were analyzed when available, the survey design limited many responses to short answer and multiple-choice selections, which may not have captured the depth of the information we requested, and some respondents may not have been willing or able to elaborate when asked.

The data from this report reveal the need for a future survey to compare the improvements CTSAs have made to their recruitment programs and the breadth of services offered. Highlighting data from this report and any collaborations that resulted from it, while also increasing reminders and advertisements of the next survey, could encourage higher response rates in the future. Efforts would be made to more fully understand the breadth of recruitment services that CTSAs provide by asking for examples of the recruitment and retention requests that CTSAs have fulfilled for their investigators. Adding more questions concentrating on specific resources and tools and how they were developed will allow institutions to further understand how to recruit hard-to-reach populations, better use EHRs for recruitment, and improve social media advertising for research. This survey and the data that are shared from it should encourage communication between institutions by highlighting areas where research recruitment could benefit from the collaboration and sharing of successful recruitment techniques and tools. Communities of CTSA representatives such as the CTSA Recruitment and Retention working group are examples of platforms where these collaborations can begin.

Supplementary material

To view supplementary material for this article, please visit https://doi.org/10.1017/cts.2020.44.

Acknowledgements

The authors want to thank research team members Manpreet Kaur and Astghik Baghinyan for participating in qualitative thematic coding and Neal Dickert for providing manuscript edits. The authors would also like to thank all the CTSA-awarded institutions that participated in this grant. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

The project described in this publication was supported by the University of Rochester CTSA award number UL1 TR002001, the Georgia CTSA award number UL1 TR002378, the Indiana University CTSA award number UL1 TR002529, the Georgetown-Howard Universities CTSA UL1TR001409, and the Northwestern University Clinical and Translational Science Institute (NUCATS) UL1TR001422 from the NCATS of the National Institutes of Health.

Disclosures

The authors have no conflicts of interest to declare.