1. Introduction

Despite the growth of available computational resources and the development of high-fidelity methods, industrial computational fluid dynamics (CFD) simulations still rely predominantly on Reynolds-averaged Navier–Stokes (RANS) solvers with turbulence models. This is expected to remain so in the decades to come, particularly for outer loop applications such as design optimization and uncertainty quantification (Slotnick et al. Reference Slotnick, Khodadoust, Alonso, Darmofal, Gropp, Lurie and Mavriplis2014). Therefore, it is still of practical interest to develop more accurate and robust turbulence models.

Most of the models used currently are linear eddy viscosity models such as the ![]() $k$–

$k$–![]() $\varepsilon$ model (Launder & Sharma Reference Launder and Sharma1974) and Spalart–Allmaras model (Spalart & Allmaras Reference Spalart and Allmaras1992), which are based on two major assumptions (Pope Reference Pope2000): (1) the weak equilibrium assumption, i.e. only the non-equilibrium in the magnitude of the Reynolds stress is accounted for through the transport equations, while its anisotropy is modelled based on local strain rate; and (2) the Boussinesq assumption, i.e. the Reynolds stress anisotropy is assumed to be aligned with the strain rate tensor. Reynolds stress transport models (also referred to as differential stress models) have been developed in the past few decades to address the shortcomings caused by the weak equilibrium assumption (Launder, Reece & Rodi Reference Launder, Reece and Rodi1975; Speziale, Sarkar & Gatski Reference Speziale, Sarkar and Gatski1991; Eisfeld, Rumsey & Togiti Reference Eisfeld, Rumsey and Togiti2016). As to the second assumption, various nonlinear eddy viscosity and explicit algebraic stress models have been developed (Spalart Reference Spalart2000; Wallin & Johansson Reference Wallin and Johansson2000), and some have even achieved dramatic successes in specialized flows (e.g. those with secondary flows or rotation). However, these complex models face challenges from the lack of robustness, increased computational costs and implementation complexity, and the difficulty of generalizing to a broader range of flows. Consequently, turbulence modellers and CFD practitioners often face a compromise between predictive performance and practical usability (Xiao & Cinnella Reference Xiao and Cinnella2019).

$\varepsilon$ model (Launder & Sharma Reference Launder and Sharma1974) and Spalart–Allmaras model (Spalart & Allmaras Reference Spalart and Allmaras1992), which are based on two major assumptions (Pope Reference Pope2000): (1) the weak equilibrium assumption, i.e. only the non-equilibrium in the magnitude of the Reynolds stress is accounted for through the transport equations, while its anisotropy is modelled based on local strain rate; and (2) the Boussinesq assumption, i.e. the Reynolds stress anisotropy is assumed to be aligned with the strain rate tensor. Reynolds stress transport models (also referred to as differential stress models) have been developed in the past few decades to address the shortcomings caused by the weak equilibrium assumption (Launder, Reece & Rodi Reference Launder, Reece and Rodi1975; Speziale, Sarkar & Gatski Reference Speziale, Sarkar and Gatski1991; Eisfeld, Rumsey & Togiti Reference Eisfeld, Rumsey and Togiti2016). As to the second assumption, various nonlinear eddy viscosity and explicit algebraic stress models have been developed (Spalart Reference Spalart2000; Wallin & Johansson Reference Wallin and Johansson2000), and some have even achieved dramatic successes in specialized flows (e.g. those with secondary flows or rotation). However, these complex models face challenges from the lack of robustness, increased computational costs and implementation complexity, and the difficulty of generalizing to a broader range of flows. Consequently, turbulence modellers and CFD practitioners often face a compromise between predictive performance and practical usability (Xiao & Cinnella Reference Xiao and Cinnella2019).

In the past few years, data-driven methods have emerged as a promising alternative for developing more generalizable and robust turbulence models. For example, non-local models based on vector-cloud neural networks have been proposed to emulate Reynolds stress transport equations (Han, Zhou & Xiao Reference Han, Zhou and Xiao2022; Zhou, Han & Xiao Reference Zhou, Han and Xiao2022). While this line of research is still in an early stage, it has the potential of leading to more robust and flexible non-equilibrium Reynolds stress models without solving the tensorial transport equations. Alternatively, data-driven nonlinear eddy viscosity models have achieved much more success. Researchers have used machine learning to discover data-driven turbulence models or corrections thereto, which are nonlinear mappings from the strain rate and rotation rate to Reynolds stresses learned from data. Such functional mappings can be in the form of symbolic expressions (Weatheritt & Sandberg Reference Weatheritt and Sandberg2016; Schmelzer, Dwight & Cinnella Reference Schmelzer, Dwight and Cinnella2020), tensor basis neural networks (Ling, Kurzawski & Templeton Reference Ling, Kurzawski and Templeton2016), and random forests (Wang, Wu & Xiao Reference Wang, Wu and Xiao2017; Wu, Michelén-Ströfer & Xiao Reference Wu, Michelén-Ströfer and Xiao2019a), among others. The data-driven nonlinear eddy viscosity models are a major improvement over their traditional counterparts in that they can leverage calibration data more systematically and explore a much larger functional space of stress–strain-rate mappings. However, they have some major shortcomings. First, as with their traditional counterparts, these data-driven models addressed only the Boussinesq assumption of the linear models as their strain–stress relations are still local, and thus they cannot address the weak equilibrium assumption described above. This is in contrast to the data-driven non-local Reynolds stress models (Han et al. Reference Han, Zhou and Xiao2022; Zhou et al. Reference Zhou, Han and Xiao2022), which emulate the Reynolds stress transport equations and fully non-equilibrium models. Second, the training of such models often requires full-field Reynolds stresses (referred to as direct data hereafter), which are rarely available except from high-fidelity simulations such as direct numerical simulations (DNS) and wall-resolved large eddy simulations (LES) (Yang & Griffin Reference Yang and Griffin2021). This would inevitably constrain the training flows to those accessible for DNS and LES, i.e. flows with simple configurations at low Reynolds numbers. It is not clear whether the data-driven models trained with such data would be applicable to practical industrial flows. Finally, the training of data-driven models is often performed in an a priori manner, i.e. without involving RANS solvers in the training process. Consequently, the trained model may have poor predictions of the mean velocity in a posteriori tests where the trained turbulence model is coupled with the RANS solvers. This is caused by the inconsistency between the training and prediction environments (Duraisamy Reference Duraisamy2021). Specifically, even small errors in the Reynolds stress can be amplified dramatically in the predicted velocities due to the intrinsic ill-conditioning of the RANS operator (Wu et al. Reference Wu, Xiao, Sun and Wang2019b; Brener et al. Reference Brener, Cruz, Thompson and Anjos2021). Such ill-conditioning is particularly prominent in high-Reynolds-number flows; even an apparently simple flow such as a plane channel flow can be extremely ill-conditioned (Wu et al. Reference Wu, Xiao, Sun and Wang2019b). Such an inconsistency can be more severe in learning dynamic models such as subgrid-scale models of LES, since the training environment is static while the prediction environment is dynamic. Moreover, the model with the best a posterior performance may not necessarily excel in a priori evaluations (Park & Choi Reference Park and Choi2021). In view of the drawbacks in a priori training of turbulence models with direct data (Reynolds stress), it is desirable to leverage indirect observation data (e.g. sparse velocities and drag) to train data-driven turbulence models in the prediction environments by involving the RANS solvers in the training process. These indirect data are often available from experiments at high Reynolds numbers. Such a strategy is referred to as ‘model-consistent learning’ in the literature (Duraisamy Reference Duraisamy2021).

Model-consistent learning amounts to finding the turbulence model that, when embedded in the RANS solvers, produces outputs in the best agreement with the training data. Specifically, in incompressible flows, these outputs include the velocity and pressure as well as their post-processed or sparsely observed quantities. Assuming that the turbulence model is represented by a neural network to be trained with the stochastic gradient descent method, every iteration in the training process involves solving the RANS equations and finding the sensitivity of the discrepancy between the observed and predicted velocities with respect to the neural network weights. This is in stark contrast to the traditional method of training neural networks that learns from direct data (output of the neural network, i.e. Reynolds stresses in this case), where the gradients can be obtained directly from back-propagation. In model-consistent training, one typically uses adjoint solvers to obtain the RANS-solver-contributed gradient (i.e. the sensitivity of velocity with respect to the Reynolds stress), as the full model consists of both the neural network and the RANS solver (Holland, Baeder & Duraisamy Reference Holland, Baeder and Duraisamy2019; Michelén-Ströfer & Xiao Reference Michelén-Ströfer and Xiao2021). The adjoint sensitivity is then multiplied by the neural network gradient according to the chain rule to yield the full gradient. Similar efforts of combining adjoint solvers and neural network gradients have been made in learning subgrid-scale models in LES (MacArt, Sirignano & Freund Reference MacArt, Sirignano and Freund2021). These adjoint-based methods have been demonstrated to learn models with good posterior velocity predictions. Moreover, for turbulence models represented as symbolic expressions, model-consistent learning is performed similarly by combining the model with the RANS solver in the learning processes (Zhao et al. Reference Zhao, Akolekar, Weatheritt, Michelassi and Sandberg2020; Saïdi et al. Reference Saïdi, Schmelzer, Cinnella and Grasso2022), although the chain-rule-based gradient evaluation is no longer needed in gradient-free optimizations such as genetic optimization.

In view of the extra efforts in developing adjoint solvers, particularly for legacy codes and multi-physics coupled solvers, Michelén-Ströfer, Zhang & Xiao (Reference Michelén-Ströfer, Zhang and Xiao2021b) explored ensemble-based gradient approximation as an alternative to the adjoint solver used in Michelén-Ströfer & Xiao (Reference Michelén-Ströfer and Xiao2021) to learn turbulence models from indirect data. Such a gradient is combined with that from the neural network via the chain rule, and then used in an explicit gradient-descent training. They found that the learned model was less accurate than that learned by using adjoint solvers in the prediction of Reynolds stress and velocity. This is not surprising, because the ensemble-based gradient approximation is less accurate than the analytic gradient from the adjoint solvers (Evensen Reference Evensen2018). Therefore, instead of using an ensemble to approximate gradients in optimization, it can be advantageous to use ensemble Kalman methods directly for training neural networks (Chen et al. Reference Chen, Chang, Meng and Zhang2019; Kovachki & Stuart Reference Kovachki and Stuart2019). This is because such ensemble methods do not merely perform explicit, first-order gradient-descent optimization as is typically done in neural network training (deep learning). Rather, they use implicitly the Hessian matrix (second-order gradient) along with the Jacobian (first-order gradient) to accelerate convergence. Indeed, ensemble-based learning has gained significant success recently (Schneider, Stuart & Wu Reference Schneider, Stuart and Wu2020a,Reference Schneider, Stuart and Wub), but the applications focused mostly on learning from direct data. They have not been used to learn from indirect data, where physical models such as RANS solvers become an integral part of the learning process.

In this work, we propose using an iterative ensemble Kalman method to train a neural-network-based turbulence model by using indirect observation data. To the authors’ knowledge, this is the first such attempt in turbulence modelling. Moreover, in view of the strong nonlinearity of the problem, we adjust the step size adaptively in the learning process (Luo et al. Reference Luo, Stordal, Lorentzen and Nævdal2015), which serves a similar purpose to that of the learning-rate scheduling in deep learning. Such an algorithmic modification is crucial for accelerating convergence and improving robustness of the learning, which can make an otherwise intractable learning problem with the adjoint method computationally feasible with the ensemble method. A comparison is performed between the present method and the continuous adjoint method based on our particular implementation (Michelén-Ströfer & Xiao Reference Michelén-Ströfer and Xiao2021). We show that by incorporating Hessian information with adaptive stepping, the ensemble Kalman method exceeds the performance of the adjoint-based learning in both accuracy and robustness. Specifically, the present method successfully learned a generalizable nonlinear eddy viscosity model for the separated flows over periodic hills (§ 4), which the adjoint method was not able to achieve due to the lack of robustness. We emphasize that all these improvements are achieved at a much lower computational cost (measured in wall time) and with a significantly lower implementation effort compared to the adjoint method. Both methods used the same representation of Reynolds stresses based on the tensor basis neural network (Ling et al. Reference Ling, Kurzawski and Templeton2016).

In summary, the present framework of ensemble-based learning from indirect data has three key advantages. First, compared to methods that learn from direct data, the present framework relaxes the data requirements and needs only the measurable flow quantities, e.g. sparse measurements of the mean velocities or integral quantities such as drag and lift, rather than full-field Reynolds stresses. Second, the model is trained in the prediction environment, thereby alleviating the ill-condition of the explicit data-driven RANS equation and avoiding the inconsistency between training and prediction. Finally, the ensemble method is non-intrusive and thus very straightforward to implement for any solvers. In particular, it does not require adjoint solvers, which allows different quantities to be used in the objective function without additional code redevelopments.

The rest of this paper is organized as follows. The architecture of the neural network and the model-consistent training algorithm are presented in § 2. The case set-up for testing the performance of the proposed non-intrusive model-consistent training workflow is detailed in § 3. The training results are presented and analysed in § 4. The parallelization and the flexibility of the proposed method are discussed in § 5. Finally, conclusions are provided in § 6.

2. Reynolds stress representation and model-consistent training

The objective is to develop a data-driven turbulence modelling framework that meets the following requirements.

(i) The Reynolds stress representation will be frame invariant and sufficiently flexible in expressive power to represent a wide range of flows.

(ii) The model will be trained in the prediction environment for robustness.

(iii) The model will be able to incorporate sparse and potentially noisy observation data as well as Reynolds stress data.

To this end, we choose the tensor basis neural networks (Ling et al. Reference Ling, Kurzawski and Templeton2016) to represent the mapping from the mean velocities to the Reynolds stresses. This representation has the merits of the embedded Galilean invariance and the flexibility to model complicated nonlinear relationships. Furthermore, we use the ensemble Kalman method to learn the neural-network-based model in a non-intrusive, model-consistent manner.

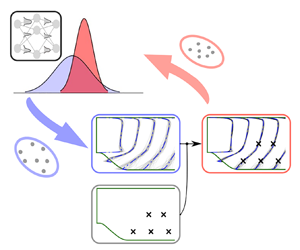

The proposed workflow for training the tensor basis neural networks with indirect observation data is illustrated schematically in figure 1. Traditionally, ensemble Kalman methods have been used in data assimilation applications to infer the state of the system (e.g. velocities and pressures of a flow field). However, in our application, we aim to learn a turbulence model represented by a neural network. Therefore, the parameters (weight vector ![]() $\boldsymbol {w}$) of the network are the quantities to be inferred. The iterative ensemble Kalman method adopted for model learning consists of the following steps.

$\boldsymbol {w}$) of the network are the quantities to be inferred. The iterative ensemble Kalman method adopted for model learning consists of the following steps.

(i) Sample the parameters (neural network weight vector

$\boldsymbol {w}$) based on the initial prior distribution (figure 1a). The initial parameters are obtained by pre-training based on a baseline model.

$\boldsymbol {w}$) based on the initial prior distribution (figure 1a). The initial parameters are obtained by pre-training based on a baseline model.(ii) Construct the Reynolds stress field from the mean velocity field by evaluating the neural-network-based turbulence model (figure 1b). The initial velocity field is obtained from the prediction with the baseline model. For a given mean velocity field

$\boldsymbol {u}(\boldsymbol {x})$, each of the sample

$\boldsymbol {u}(\boldsymbol {x})$, each of the sample  $\boldsymbol {w}_j$ (with

$\boldsymbol {w}_j$ (with  $j$ being the sample index) implies a different turbulence model and thus a different Reynolds stress field, leading to an ensemble of Reynolds stress fields in the whole computational domain.

$j$ being the sample index) implies a different turbulence model and thus a different Reynolds stress field, leading to an ensemble of Reynolds stress fields in the whole computational domain.(iii) Propagate each Reynolds stress field in the ensemble to velocity field by solving the RANS equations (figure 1c), based on which the observations can be obtained via post-processing (e.g. extracting velocities at specific points or integrating surface pressure to obtain drag).

(iv) Update the parameters (network weights

$\boldsymbol {w}$) through statistical analysis of the predicted observable quantities (e.g. velocities or drag) and comparison with observation data (figure 1d).

$\boldsymbol {w}$) through statistical analysis of the predicted observable quantities (e.g. velocities or drag) and comparison with observation data (figure 1d).

Steps (ii)–(iv) are repeated until convergence is achieved. The implementation details are provided in Appendix A.

Figure 1. Schematic of the ensemble-based learning with sparse velocity data, consisting of the following four steps: (a) sample the weights of the tensor basis neural network (NN); (b) construct the Reynolds stress by evaluating the neural-network-based turbulence model; (c) propagate the constructed Reynolds stress tensor to velocity by solving the RANS equations; (d) update the neural network weights by incorporating observation data.

In this section, we introduce the Reynolds stress representation based on the tensor basis neural network and the ensemble-based learning algorithm. The latter is compared to other learning algorithms in the literature.

2.1. Embedded neural network for Reynolds stress representation

For constant-density incompressible turbulent flows, the mean flow can be described by the RANS equation

\begin{equation} \left.\begin{gathered} \boldsymbol{\nabla}\boldsymbol{\cdot} \boldsymbol{u} = 0, \\ \boldsymbol{u} \boldsymbol{\cdot}\boldsymbol{\nabla} \boldsymbol{u} ={-} \boldsymbol{\nabla} p + \nu\,\nabla^2 \boldsymbol{u} - \boldsymbol{\nabla}\boldsymbol{\cdot} \boldsymbol{\tau} {,} \end{gathered}\right\} \end{equation}

\begin{equation} \left.\begin{gathered} \boldsymbol{\nabla}\boldsymbol{\cdot} \boldsymbol{u} = 0, \\ \boldsymbol{u} \boldsymbol{\cdot}\boldsymbol{\nabla} \boldsymbol{u} ={-} \boldsymbol{\nabla} p + \nu\,\nabla^2 \boldsymbol{u} - \boldsymbol{\nabla}\boldsymbol{\cdot} \boldsymbol{\tau} {,} \end{gathered}\right\} \end{equation}

where ![]() $p$ denotes mean pressure normalized by the constant flow density, and the Reynolds stress

$p$ denotes mean pressure normalized by the constant flow density, and the Reynolds stress ![]() $\boldsymbol {\tau }$ indicates the effects of the small-scale turbulence on the mean flow quantities, which are required to be modelled. The Reynolds stress can be decomposed into a deviatoric part (

$\boldsymbol {\tau }$ indicates the effects of the small-scale turbulence on the mean flow quantities, which are required to be modelled. The Reynolds stress can be decomposed into a deviatoric part (![]() $\boldsymbol {a}$) and a spherical part as

$\boldsymbol {a}$) and a spherical part as

where ![]() $k$ is the turbulence kinetic energy, and

$k$ is the turbulence kinetic energy, and ![]() ${\boldsymbol {I}}$ is the second-order identity tensor. Different strategies have been developed to represent the deviatoric part of the Reynolds stress, and here we use the tensor basis neural network (Ling et al. Reference Ling, Kurzawski and Templeton2016).

${\boldsymbol {I}}$ is the second-order identity tensor. Different strategies have been developed to represent the deviatoric part of the Reynolds stress, and here we use the tensor basis neural network (Ling et al. Reference Ling, Kurzawski and Templeton2016).

The neural network represents the deviatoric part of Reynolds stress with the scalar invariants and the tensor bases of the turbulence field. Specifically, the neural network is used to represent the mapping between the scalar invariants and coefficients of the tensor bases. Further, the output of the neural network is combined with the tensor bases to construct the Reynolds stress field such that the framework has the embedded Galilean invariance. The deviatoric part of the Reynolds stress (![]() $\boldsymbol {a}$) can be constructed as (Pope Reference Pope1975)

$\boldsymbol {a}$) can be constructed as (Pope Reference Pope1975)

\begin{gather} \boldsymbol{a} = 2 k \sum_{i=1}^{10} g^{(i)} \boldsymbol{T}^{(i)}, \end{gather}

\begin{gather} \boldsymbol{a} = 2 k \sum_{i=1}^{10} g^{(i)} \boldsymbol{T}^{(i)}, \end{gather}

where ![]() ${\boldsymbol {T}}$ and

${\boldsymbol {T}}$ and ![]() $\boldsymbol {\theta }$ are the tensor basis and scalar invariant of the input tensors, and

$\boldsymbol {\theta }$ are the tensor basis and scalar invariant of the input tensors, and ![]() $\boldsymbol{g}$ is the scalar coefficient functions to be learned. There are

$\boldsymbol{g}$ is the scalar coefficient functions to be learned. There are ![]() $10$ independent tensors that give the most general form of eddy viscosity. The first four tensors are given as

$10$ independent tensors that give the most general form of eddy viscosity. The first four tensors are given as

\begin{equation} \left.\begin{gathered} \boldsymbol{T}^{(1)} = \boldsymbol{S}, \quad \boldsymbol{T}^{(2)} = \boldsymbol{S W} - \boldsymbol{W S} \\ \boldsymbol{T}^{(3)} = \boldsymbol{S}^2 - \tfrac{1}{3}\{\boldsymbol{S}^2\} \boldsymbol{I}, \quad \boldsymbol{T}^{(4)} = \boldsymbol{W}^2-\tfrac{1}{3}\{\boldsymbol{W}^2\}\boldsymbol{I}, \end{gathered}\right\} \end{equation}

\begin{equation} \left.\begin{gathered} \boldsymbol{T}^{(1)} = \boldsymbol{S}, \quad \boldsymbol{T}^{(2)} = \boldsymbol{S W} - \boldsymbol{W S} \\ \boldsymbol{T}^{(3)} = \boldsymbol{S}^2 - \tfrac{1}{3}\{\boldsymbol{S}^2\} \boldsymbol{I}, \quad \boldsymbol{T}^{(4)} = \boldsymbol{W}^2-\tfrac{1}{3}\{\boldsymbol{W}^2\}\boldsymbol{I}, \end{gathered}\right\} \end{equation}

where the curly brackets ![]() $\{\cdot \}$ indicate the trace of a matrix. The first two scalar invariants are

$\{\cdot \}$ indicate the trace of a matrix. The first two scalar invariants are

Both the symmetric tensor ![]() ${\boldsymbol {S}}$ and the anti-symmetric tensor

${\boldsymbol {S}}$ and the anti-symmetric tensor ![]() ${\boldsymbol {W}}$ are normalized by the turbulence time scale

${\boldsymbol {W}}$ are normalized by the turbulence time scale ![]() ${k}/{\varepsilon }$ as

${k}/{\varepsilon }$ as ![]() ${\boldsymbol {S}}=\frac {1}{2} {k}/{\varepsilon } [\boldsymbol {\nabla } \boldsymbol {u} + (\boldsymbol {\nabla } \boldsymbol {u})^\textrm {T}]$ and

${\boldsymbol {S}}=\frac {1}{2} {k}/{\varepsilon } [\boldsymbol {\nabla } \boldsymbol {u} + (\boldsymbol {\nabla } \boldsymbol {u})^\textrm {T}]$ and ![]() ${\boldsymbol {W}}=\frac {1}{2} {k}/{\varepsilon } [\boldsymbol {\nabla } \boldsymbol {u} - (\boldsymbol {\nabla } \boldsymbol {u})^\textrm {T}]$. The time scale

${\boldsymbol {W}}=\frac {1}{2} {k}/{\varepsilon } [\boldsymbol {\nabla } \boldsymbol {u} - (\boldsymbol {\nabla } \boldsymbol {u})^\textrm {T}]$. The time scale ![]() ${k}/{\varepsilon }$ is obtained from the turbulence quantities solved from the transport equations for turbulence kinetic energy

${k}/{\varepsilon }$ is obtained from the turbulence quantities solved from the transport equations for turbulence kinetic energy ![]() $k$ and dissipation rate

$k$ and dissipation rate ![]() $\varepsilon$. For a two-dimensional flow, only two scalar invariants are non-zero, and the first three tensor bases are linearly independent (Pope Reference Pope1975). Further, for incompressible flows, the components of the third tensor have

$\varepsilon$. For a two-dimensional flow, only two scalar invariants are non-zero, and the first three tensor bases are linearly independent (Pope Reference Pope1975). Further, for incompressible flows, the components of the third tensor have ![]() $\boldsymbol {T}^{(3)}_{11} = \boldsymbol {T}^{(3)}_{22}$ and

$\boldsymbol {T}^{(3)}_{11} = \boldsymbol {T}^{(3)}_{22}$ and ![]() $\boldsymbol {T}^{(3)}_{12}=\boldsymbol {T}^{(3)}_{21}=0$. Hence the third tensor basis can be incorporated into the pressure term in the RANS equation, leaving only two tensor functions and two scalar invariants. In the turbulence transport equation, the turbulence production term is modified to account for the expanded formulation of Reynolds stress

$\boldsymbol {T}^{(3)}_{12}=\boldsymbol {T}^{(3)}_{21}=0$. Hence the third tensor basis can be incorporated into the pressure term in the RANS equation, leaving only two tensor functions and two scalar invariants. In the turbulence transport equation, the turbulence production term is modified to account for the expanded formulation of Reynolds stress ![]() $\mathcal {P} = -\boldsymbol {\tau } : \boldsymbol {S}$, where

$\mathcal {P} = -\boldsymbol {\tau } : \boldsymbol {S}$, where ![]() $:$ denotes double contraction of tensors. For details of the implementation, readers are referred to Michelén-Ströfer & Xiao (Reference Michelén-Ströfer and Xiao2021). Note that the representation of the Reynolds stress is based on the following three hypotheses: (1) the Reynolds stress can be described locally with the scalar invariants and the independent tensors; (2) the coefficients of the tensor bases can be represented by a neural network with the scalar invariants as input features; (3) a universal model form exists for flows having similar distributions of scalar invariants in the feature space. Admittedly, the nonlinear eddy viscosity model is essentially still under the weak equilibrium assumption. Here, we choose the nonlinear eddy viscosity model as a base model, mainly due to the following considerations. First, it is a more general representation of the Reynolds stress tensor compared to the linear eddy viscosity model. It utilizes ten tensor bases formed by the strain-rate tensor and rotation-rate tensor to represent the Reynolds stress, while the linear eddy viscosity model uses only the first tensor basis. Second, the nonlinear eddy viscosity model uses uniform model inputs with Galilean invariance, i.e. scalar invariants, without requiring feature selections based on physical knowledge of specific flow applications. Finally, the model expresses the Reynolds stress in an algebraic form, and no additional transport equation is solved. From a practical perspective, the model is straightforward to implement and computationally more efficient than the Reynolds stress transport models.

$:$ denotes double contraction of tensors. For details of the implementation, readers are referred to Michelén-Ströfer & Xiao (Reference Michelén-Ströfer and Xiao2021). Note that the representation of the Reynolds stress is based on the following three hypotheses: (1) the Reynolds stress can be described locally with the scalar invariants and the independent tensors; (2) the coefficients of the tensor bases can be represented by a neural network with the scalar invariants as input features; (3) a universal model form exists for flows having similar distributions of scalar invariants in the feature space. Admittedly, the nonlinear eddy viscosity model is essentially still under the weak equilibrium assumption. Here, we choose the nonlinear eddy viscosity model as a base model, mainly due to the following considerations. First, it is a more general representation of the Reynolds stress tensor compared to the linear eddy viscosity model. It utilizes ten tensor bases formed by the strain-rate tensor and rotation-rate tensor to represent the Reynolds stress, while the linear eddy viscosity model uses only the first tensor basis. Second, the nonlinear eddy viscosity model uses uniform model inputs with Galilean invariance, i.e. scalar invariants, without requiring feature selections based on physical knowledge of specific flow applications. Finally, the model expresses the Reynolds stress in an algebraic form, and no additional transport equation is solved. From a practical perspective, the model is straightforward to implement and computationally more efficient than the Reynolds stress transport models.

In this work, the tensor basis neural network is embedded into the RANS equation during the training process. Specifically, the RANS equation is solved to propagate the Reynolds stress to the velocity by coupling with the neural-network-based model, and the propagated velocity and the indirect observations are analysed to train the neural network through model learning algorithms. We use an ensemble Kalman method to train the neural-network-based turbulence model embedded in the RANS equations, which is elaborated in § 2.2. More detailed comparisons between the proposed method and other related schemes are presented in § 2.3.

2.2. Ensemble-based model-consistent training

The goal of the model-consistent training is to reduce the model prediction error by optimizing the weights ![]() $\boldsymbol {w}$ of the neural network. The corresponding cost function can be formulated as (Zhang, Michelén-Ströfer & Xiao Reference Zhang, Michelén-Ströfer and Xiao2020a)

$\boldsymbol {w}$ of the neural network. The corresponding cost function can be formulated as (Zhang, Michelén-Ströfer & Xiao Reference Zhang, Michelén-Ströfer and Xiao2020a)

where ![]() $\|\cdot \|_{ {\mathsf {A}}}$ indicates weighted norm (defined as

$\|\cdot \|_{ {\mathsf {A}}}$ indicates weighted norm (defined as ![]() $\| \boldsymbol {v} \|_{ {\mathsf {A}}}^2 = \boldsymbol {v}^\textrm {T} { {\mathsf {A}}}^{-1} \boldsymbol {v}$ for a vector

$\| \boldsymbol {v} \|_{ {\mathsf {A}}}^2 = \boldsymbol {v}^\textrm {T} { {\mathsf {A}}}^{-1} \boldsymbol {v}$ for a vector ![]() $\boldsymbol {v}$ with weight matrix

$\boldsymbol {v}$ with weight matrix ![]() ${ {\mathsf {A}}}$),

${ {\mathsf {A}}}$), ![]() ${ {\mathsf {P}}}$ is the model error covariance matrix indicating the uncertainties of the initial weights,

${ {\mathsf {P}}}$ is the model error covariance matrix indicating the uncertainties of the initial weights, ![]() ${ {\mathsf {R}}}$ is the observation error covariance matrix, and

${ {\mathsf {R}}}$ is the observation error covariance matrix, and ![]() ${ {\mathsf {y}}}$ is the training data which are subjected to the Gaussian noise

${ {\mathsf {y}}}$ is the training data which are subjected to the Gaussian noise ![]() $\epsilon \sim \mathcal {N}(0, { {\mathsf {R}}})$. For simplicity, we introduce the operator

$\epsilon \sim \mathcal {N}(0, { {\mathsf {R}}})$. For simplicity, we introduce the operator ![]() $\mathcal {H}$, which is a composition of the RANS solver and the associated post-processing (observation). It maps the weights

$\mathcal {H}$, which is a composition of the RANS solver and the associated post-processing (observation). It maps the weights ![]() $\boldsymbol {w}$ to the observation space (e.g. velocity or drag coefficient). The first term in (2.7) is introduced to regularize the updated weights

$\boldsymbol {w}$ to the observation space (e.g. velocity or drag coefficient). The first term in (2.7) is introduced to regularize the updated weights ![]() $\boldsymbol {w}$ by penalizing large deviations from their initial values

$\boldsymbol {w}$ by penalizing large deviations from their initial values ![]() $\boldsymbol {w}^0$. The second term describes the discrepancy between the model prediction

$\boldsymbol {w}^0$. The second term describes the discrepancy between the model prediction ![]() $\mathcal {H}[\boldsymbol {w}]$ and the observation

$\mathcal {H}[\boldsymbol {w}]$ and the observation ![]() ${ {\mathsf {y}}}$. The training of the neural network is equivalent to minimization of the cost function (2.7) by optimizing the weights

${ {\mathsf {y}}}$. The training of the neural network is equivalent to minimization of the cost function (2.7) by optimizing the weights ![]() $\boldsymbol {w}$. Note that the cost function can be modified to include other observation quantities such as friction coefficient and transition location.

$\boldsymbol {w}$. Note that the cost function can be modified to include other observation quantities such as friction coefficient and transition location.

In this work, we use the iterative ensemble Kalman method with adaptive stepping (Luo et al. Reference Luo, Stordal, Lorentzen and Nævdal2015) to train the neural network framework. This algorithm is a variant of the ensemble-based method where the observation error covariance matrix ![]() ${ {\mathsf {R}}}$ is inflated such that the step size is adjusted adaptively at each iteration step. The corresponding cost function involves the regularization based on the difference from the last iteration, i.e.

${ {\mathsf {R}}}$ is inflated such that the step size is adjusted adaptively at each iteration step. The corresponding cost function involves the regularization based on the difference from the last iteration, i.e.

where ![]() $l$ is the iteration index,

$l$ is the iteration index, ![]() $j$ is the sample index, and

$j$ is the sample index, and ![]() $\gamma$ is a scaling parameter. The weight-update scheme of the iterative ensemble Kalman method is formulated as

$\gamma$ is a scaling parameter. The weight-update scheme of the iterative ensemble Kalman method is formulated as

The square-root matrices ![]() ${ {\mathsf {S}}}_w$ and

${ {\mathsf {S}}}_w$ and ![]() ${ {\mathsf {S}}}_y$ can be estimated from the ensemble at each iteration. See step (vi) and (A1) of the detailed implementation in Appendix A.

${ {\mathsf {S}}}_y$ can be estimated from the ensemble at each iteration. See step (vi) and (A1) of the detailed implementation in Appendix A.

Note that the Kalman gain matrix above has a slightly different form than the more common formulation ![]() ${ {\mathsf {K}}} = { {\mathsf {P}}}{ {\mathsf {H}}}^\textrm {T}({ {\mathsf {H}}}{ {\mathsf {P}}}{ {\mathsf {H}}}^\textrm {T} + \gamma ^l { {\mathsf {R}}} )^{-1}$. This is because we have written the terms associated with the model error covariance matrix

${ {\mathsf {K}}} = { {\mathsf {P}}}{ {\mathsf {H}}}^\textrm {T}({ {\mathsf {H}}}{ {\mathsf {P}}}{ {\mathsf {H}}}^\textrm {T} + \gamma ^l { {\mathsf {R}}} )^{-1}$. This is because we have written the terms associated with the model error covariance matrix ![]() ${ {\mathsf {P}}}$ by using the square-root matrix

${ {\mathsf {P}}}$ by using the square-root matrix ![]() ${ {\mathsf {S}}}_w$ and its projection

${ {\mathsf {S}}}_w$ and its projection ![]() ${ {\mathsf {S}}}_y$ to the observation space, i.e.

${ {\mathsf {S}}}_y$ to the observation space, i.e.

where ![]() ${ {\mathsf {H}}}$ is the local gradient of the observation operator

${ {\mathsf {H}}}$ is the local gradient of the observation operator ![]() $\mathcal {H}$ with respect to the parameter

$\mathcal {H}$ with respect to the parameter ![]() $\boldsymbol {w}$. The equivalence between the two formulations is illustrated in Appendix B.

$\boldsymbol {w}$. The equivalence between the two formulations is illustrated in Appendix B.

The Kalman gain matrix in (2.9b) contains implicitly the inverse of the approximated second-order derivatives (Hessian matrix) as well as the gradient (Jacobian) of the cost function (both with respect to the weights ![]() $\boldsymbol {w})$. This can be seen from the derivations presented in Appendix B. Including both the gradient and the Hessian information significantly accelerates the convergence of the iteration process and thus improves the learning efficiency. This is in stark contrast to using only the gradient in typical training procedures of deep learning. Moreover, this is done in ensemble Kalman methods economically, without significant overhead in computational costs or memory footprint.

$\boldsymbol {w})$. This can be seen from the derivations presented in Appendix B. Including both the gradient and the Hessian information significantly accelerates the convergence of the iteration process and thus improves the learning efficiency. This is in stark contrast to using only the gradient in typical training procedures of deep learning. Moreover, this is done in ensemble Kalman methods economically, without significant overhead in computational costs or memory footprint.

The inflation parameter ![]() $\gamma ^l$ in (2.9b) can be considered a coefficient for adjusting the relative weight between the prediction discrepancies and the regularization terms. As such, we let

$\gamma ^l$ in (2.9b) can be considered a coefficient for adjusting the relative weight between the prediction discrepancies and the regularization terms. As such, we let

where ![]() $\beta ^l$ is a scalar coefficient whose value also changes over the iteration process. The detailed algorithm for scheduling

$\beta ^l$ is a scalar coefficient whose value also changes over the iteration process. The detailed algorithm for scheduling ![]() $\beta ^l$ (and thus

$\beta ^l$ (and thus ![]() $\gamma ^l$) is presented in step (vii) of the detailed implementation in Appendix A.

$\gamma ^l$) is presented in step (vii) of the detailed implementation in Appendix A.

The ensemble-based method has the following three practical advantages. First, it produces an ensemble of weights of the neural network, based on which uncertainty quantification can be conducted for the model prediction similarly to the Bayesian neural network (Sun & Wang Reference Sun and Wang2020). Second, unlike the adjoint-based method, the ensemble-based method is non-intrusive and derivative-free, which means that it can be applied to black-box systems without the need for modifying the underlying source code. This feature makes it convenient to implement the ensemble-based method in practice, and promotes the generalizability of the implemented ensemble-based method to different problems. Finally, to reduce the consumption of computer memory, commonly used training algorithms, such as stochastic gradient descent, typically involve the use of only gradients of an objective function to update the weights of a neural network, while the ensemble-based method incorporates the information of a low-rank approximated Hessian without a substantial increment of computer memory. Utilizing the Hessian information significantly improves convergence, as discussed above. In addition, the method can be used to train the model jointly with data from different flow configurations. In such scenarios, the observation vector and the corresponding error covariance matrix would contain different quantities, e.g. the velocity and drag coefficient. The ensemble-based learning method interacts with the prediction environment during the training process, which is similar to the reinforcement learning in this sense. However, the reinforcement learning usually learns a control policy for a dynamic scenario (e.g. Novati, de Laroussilhe & Koumoutsakos Reference Novati, de Laroussilhe and Koumoutsakos2021; Bae & Koumoutsakos Reference Bae and Koumoutsakos2022), while the present work learns a closure model with supervised learning. Moreover, the reinforcement learning approach uses particular policy gradient algorithms to update the policy, while the ensemble Kalman method uses the ensemble-based gradient and Hessian to find the minimum of the underlying objective function.

An open-source platform OpenFOAM (The OpenFOAM Foundation 2021) is used in this work to solve the RANS equations with turbulence models. Specifically, the built-in solver simpleFoam is applied to solve the RANS equation coupling with the specialized neural network model. Moreover, the DAFI code (Michelén-Ströfer, Zhang & Xiao Reference Michelén-Ströfer, Zhang and Xiao2021a) is used to implement the ensemble-based training algorithm. A fully connected neural network is used in this work, and the detailed architecture for each case will be explained later. The rectified linear unit (ReLU) activation function is used for the hidden layers, and the linear activation function is used for the output layer. The machine learning library TensorFlow (Abadi et al. Reference Abadi2015) is employed to construct the neural network. The code developed for this work is publicly available on Github (Zhang et al. Reference Zhang, Xiao, Luo and He2022).

2.3. Comparison to other learning methods

Various approaches have been proposed for data-driven turbulence modelling, such as the direct training method (Ling et al. Reference Ling, Kurzawski and Templeton2016), the adjoint-based differentiable method (Holland et al. Reference Holland, Baeder and Duraisamy2019; MacArt et al. Reference MacArt, Sirignano and Freund2021; Michelén-Ströfer & Xiao Reference Michelén-Ströfer and Xiao2021), the ensemble gradient method (Michelén-Ströfer et al. Reference Michelén-Ströfer, Zhang and Xiao2021b), and the ensemble Kalman inversion (Kovachki & Stuart Reference Kovachki and Stuart2019). Here, we present an algorithmic comparison of the proposed method with other model learning strategies in a unified perspective.

Conventional methods use the Reynolds stress of DNS to train the model in the a priori manner, with the goal of minimizing the discrepancy between the output of a neural network and the training data based on the back-propagation technique. This concept can be formulated as a corresponding minimization problem (with the proposed solution), as follows:

\begin{equation} \left.\begin{gathered} \mathop{\arg\min}\limits_{\boldsymbol{w}} J = \| \boldsymbol{\tau}(\boldsymbol{w}, \tilde{\boldsymbol{S}}, \tilde{\boldsymbol{W}}) - \boldsymbol{\tau}^{DNS} \|^2 {,}\\ \boldsymbol{w}^{l+1} = \boldsymbol{w}^{l} - \beta\,\frac{\partial \boldsymbol{\tau}(\boldsymbol{w}, \tilde{\boldsymbol{S}}, \tilde{\boldsymbol{W}})}{\partial \boldsymbol{w}}\left[\boldsymbol{\tau}(\boldsymbol{w}, \tilde{\boldsymbol{S}}, \tilde{\boldsymbol{W}}) - \boldsymbol{\tau}^{DNS} \right], \end{gathered}\right\} \end{equation}

\begin{equation} \left.\begin{gathered} \mathop{\arg\min}\limits_{\boldsymbol{w}} J = \| \boldsymbol{\tau}(\boldsymbol{w}, \tilde{\boldsymbol{S}}, \tilde{\boldsymbol{W}}) - \boldsymbol{\tau}^{DNS} \|^2 {,}\\ \boldsymbol{w}^{l+1} = \boldsymbol{w}^{l} - \beta\,\frac{\partial \boldsymbol{\tau}(\boldsymbol{w}, \tilde{\boldsymbol{S}}, \tilde{\boldsymbol{W}})}{\partial \boldsymbol{w}}\left[\boldsymbol{\tau}(\boldsymbol{w}, \tilde{\boldsymbol{S}}, \tilde{\boldsymbol{W}}) - \boldsymbol{\tau}^{DNS} \right], \end{gathered}\right\} \end{equation}

where the input features ![]() $\tilde {\boldsymbol {S}}$ and

$\tilde {\boldsymbol {S}}$ and ![]() $\tilde {\boldsymbol {W}}$ are processed from the DNS results. Further, the trained neural network is coupled with the RANS solver for the posterior tests in similar configurations. It is obvious that inconsistency exists between the training and prediction environments. Specifically, during the training process, the model inputs are post-processed from the DNS data, while the learned model uses the RANS prediction to construct the input features. Besides, the training process aims to minimize the cost function associated with the Reynolds stress, while the prediction aims to achieve the least discrepancies in the velocity. This inconsistency would lead to unsatisfactory prediction due to the ill-conditioning issue of the RANS equation (Wu et al. Reference Wu, Xiao, Sun and Wang2019b). To tackle this problem, model-consistent training is required to construct the input features and the cost function with respect to more appropriate predicted quantities, e.g. the velocity.

$\tilde {\boldsymbol {W}}$ are processed from the DNS results. Further, the trained neural network is coupled with the RANS solver for the posterior tests in similar configurations. It is obvious that inconsistency exists between the training and prediction environments. Specifically, during the training process, the model inputs are post-processed from the DNS data, while the learned model uses the RANS prediction to construct the input features. Besides, the training process aims to minimize the cost function associated with the Reynolds stress, while the prediction aims to achieve the least discrepancies in the velocity. This inconsistency would lead to unsatisfactory prediction due to the ill-conditioning issue of the RANS equation (Wu et al. Reference Wu, Xiao, Sun and Wang2019b). To tackle this problem, model-consistent training is required to construct the input features and the cost function with respect to more appropriate predicted quantities, e.g. the velocity.

For model-consistent training, the corresponding minimization problem (together with its solution) is changed to

\begin{equation} \left.\begin{gathered} \mathop{\arg\min}\limits_{\boldsymbol{w}} J = \| \boldsymbol{u}^{DNS} - \boldsymbol{u}(\boldsymbol{\tau}(\boldsymbol{w}, \boldsymbol{S}, \boldsymbol{W})) \|^2 {,} \\ \boldsymbol{w}^{l+1} = \boldsymbol{w}^{l} - \beta\,\frac{\partial J}{\partial \boldsymbol{w}}, \end{gathered}\right\} \end{equation}

\begin{equation} \left.\begin{gathered} \mathop{\arg\min}\limits_{\boldsymbol{w}} J = \| \boldsymbol{u}^{DNS} - \boldsymbol{u}(\boldsymbol{\tau}(\boldsymbol{w}, \boldsymbol{S}, \boldsymbol{W})) \|^2 {,} \\ \boldsymbol{w}^{l+1} = \boldsymbol{w}^{l} - \beta\,\frac{\partial J}{\partial \boldsymbol{w}}, \end{gathered}\right\} \end{equation}

where the input features ![]() $\boldsymbol {S}$ and

$\boldsymbol {S}$ and ![]() $\boldsymbol {W}$ are processed from the RANS prediction. Both the input feature and the objective function used for training are consistent with the prediction environment. Different approaches can be used to train the model, such as the adjoint-based differentiable method, the ensemble-based gradient method, and the ensemble Kalman inversion method. Specifically, the adjoint-based differentiable framework (Michelén-Ströfer et al. Reference Michelén-Ströfer, Zhang and Xiao2021b) decomposes the gradient of the cost function into

$\boldsymbol {W}$ are processed from the RANS prediction. Both the input feature and the objective function used for training are consistent with the prediction environment. Different approaches can be used to train the model, such as the adjoint-based differentiable method, the ensemble-based gradient method, and the ensemble Kalman inversion method. Specifically, the adjoint-based differentiable framework (Michelén-Ströfer et al. Reference Michelén-Ströfer, Zhang and Xiao2021b) decomposes the gradient of the cost function into ![]() ${\partial J}/{\partial \boldsymbol {\tau } }$ and

${\partial J}/{\partial \boldsymbol {\tau } }$ and ![]() ${\partial \boldsymbol {\tau }}/{\partial \boldsymbol {w}}$ by using the chain rule. The weight-update scheme can be written as

${\partial \boldsymbol {\tau }}/{\partial \boldsymbol {w}}$ by using the chain rule. The weight-update scheme can be written as

The gradient ![]() ${\partial J}/{\partial \boldsymbol {\tau }}$ is computed using the adjoint-based method, and the gradient

${\partial J}/{\partial \boldsymbol {\tau }}$ is computed using the adjoint-based method, and the gradient ![]() ${\partial \boldsymbol {\tau }}/{\partial \boldsymbol {w}}$ is computed based on the back-propagation method. The ensemble-based gradient method applies the Monte Carlo technique to draw samples from a Gaussian distribution. Moreover, the data noise is taken into account by weighting the cost function with the observation error covariance matrix

${\partial \boldsymbol {\tau }}/{\partial \boldsymbol {w}}$ is computed based on the back-propagation method. The ensemble-based gradient method applies the Monte Carlo technique to draw samples from a Gaussian distribution. Moreover, the data noise is taken into account by weighting the cost function with the observation error covariance matrix ![]() ${ {\mathsf {R}}}$. Further, the cross-covariance matrix computed by the ensemble-based method can be used to approximate the adjoint-based gradient as

${ {\mathsf {R}}}$. Further, the cross-covariance matrix computed by the ensemble-based method can be used to approximate the adjoint-based gradient as

The above-mentioned training approach employs the readily available analytic gradient of the neural network based on the back-propagation method. Further, the gradient of the cost function can be constructed by coupling with adjoint- or ensemble-based sensitivity of the RANS equation.

The ensemble Kalman inversion method (Kovachki & Stuart Reference Kovachki and Stuart2019) adds a regularization term into the cost function and approximates the gradient of the cost function with respect to the weights of the neural network based on implicit linearization. The minimization problem and the corresponding weight-update scheme are

\begin{equation} \left.\begin{gathered} \mathop{\arg\min}\limits_{\boldsymbol{w}} J = \| \boldsymbol{w}^{l+1} - \boldsymbol{w}^l \|^2_{{\mathsf{P}}} + \| \boldsymbol{u}^{DNS} - \boldsymbol{u} \|^2_{{\mathsf{R}}}, \\ \boldsymbol{w}_j^{l+1} = \boldsymbol{w}_j^l + {{\mathsf{S}}}_w^l \left({{\mathsf{S}}}_y^l \right)^{\rm T} \left({{\mathsf{S}}}_y^l \left({{\mathsf{S}}}_y^l \right)^{\rm T} + {{\mathsf{R}}} \right)^{{-}1} \left({{\mathsf{y}}}_j - \mathcal{H}[\boldsymbol{w}^l]\right) {.} \end{gathered}\right\} \end{equation}

\begin{equation} \left.\begin{gathered} \mathop{\arg\min}\limits_{\boldsymbol{w}} J = \| \boldsymbol{w}^{l+1} - \boldsymbol{w}^l \|^2_{{\mathsf{P}}} + \| \boldsymbol{u}^{DNS} - \boldsymbol{u} \|^2_{{\mathsf{R}}}, \\ \boldsymbol{w}_j^{l+1} = \boldsymbol{w}_j^l + {{\mathsf{S}}}_w^l \left({{\mathsf{S}}}_y^l \right)^{\rm T} \left({{\mathsf{S}}}_y^l \left({{\mathsf{S}}}_y^l \right)^{\rm T} + {{\mathsf{R}}} \right)^{{-}1} \left({{\mathsf{y}}}_j - \mathcal{H}[\boldsymbol{w}^l]\right) {.} \end{gathered}\right\} \end{equation}Note that this method involves the Hessian of the cost function (Evensen Reference Evensen2018; Luo Reference Luo2021) and provides quantified uncertainties based on Bayesian analysis (Zhang et al. Reference Zhang, Xiao, Gomez and Coutier-Delgosha2020b). Similar to the ensemble gradient method, the ensemble Kalman inversion method also approximates the sensitivity of velocity to neural network weights based on the ensemble cross-covariance matrix, without involving the analytic gradient of the neural network. However, the ensemble Kalman inversion method includes the approximated Hessian in the weight-update scheme, which is missing in the ensemble gradient method. The present algorithm can be considered a variant of the ensemble Kalman inversion method, which inherits the advantages of ensemble-based methods in terms of non-intrusiveness and quantified uncertainty. Moreover, the present method adjusts the relative weights of the prediction discrepancy and the regularization terms at each iteration step, which helps to speed up the convergence of the iteration process and enhance the robustness of the weight-update scheme. For convenience of comparison, the training algorithms of different model-consistent data-driven turbulence modelling frameworks are summarized in table 1.

Table 1. Summary of different approaches for learning turbulence models in terms of the cost function and update schemes. We compared the ensemble Kalman method with adaptive stepping (Luo et al. Reference Luo, Stordal, Lorentzen and Nævdal2015; Kovachki & Stuart Reference Kovachki and Stuart2019) with other related methods, including learning from direct data, i.e. the Reynolds stresses (Ling et al. Reference Ling, Kurzawski and Templeton2016), adjoint-based learning (Holland et al. Reference Holland, Baeder and Duraisamy2019; MacArt et al. Reference MacArt, Sirignano and Freund2021; Michelén-Ströfer & Xiao Reference Michelén-Ströfer and Xiao2021) and ensemble gradient learning (Michelén-Ströfer et al. Reference Michelén-Ströfer, Zhang and Xiao2021b). The DNS mean velocities are used as example indirect data.

The performance of the aforementioned methods in two applications, i.e. flow in a square duct and flow over periodic hills, is summarized in table 2. The square duct case is a synthetic case to assess the capability of the methods in learning underlying model functions, where the prediction with Shih's quadratic model (Shih Reference Shih1993) is used as the training data. For this reason, learning from direct data (referred to as the ‘direct learning method’ hereafter) can construct the synthetic model function accurately, and the results are omitted for brevity. We present the results of the direct learning method for the periodic hill case in § 4.2, where the DNS data are used as the training data. The direct learning method is able to learn a model that improves the estimation of both velocity and Reynolds stress. However, when generalized to configurations with varying slopes, the learned model lacks robustness and leads to large discrepancies, as shown in figure 12. The adjoint-based learning method reconstructs accurately both the velocity and Reynolds stress fields in the square duct case, as shown in § 4.1. However, the method failed to learn a nonlinear eddy viscosity model in the periodic hill case – it diverged during the training as reported in Michelén-Ströfer & Xiao (Reference Michelén-Ströfer and Xiao2021). The ensemble gradient method was not able to recover the underlying model function in the synthetic square duct case (Michelén-Ströfer et al. Reference Michelén-Ströfer, Zhang and Xiao2021b), and also diverged in the periodic hill case. In contrast, the present method is capable of learning the functional mapping in both cases. Moreover, the learned model is generalized well to similar configurations with varying slopes, as shown in figure 12.

Table 2. Summary of the performance of different approaches for learning turbulence models in two different cases, i.e. flow in a square duct and flow over periodic hills. We compare the present method (Luo et al. Reference Luo, Stordal, Lorentzen and Nævdal2015; Kovachki & Stuart Reference Kovachki and Stuart2019) with other related methods, including learning from direct data (Ling et al. Reference Ling, Kurzawski and Templeton2016), adjoint-based learning (Holland et al. Reference Holland, Baeder and Duraisamy2019; MacArt et al. Reference MacArt, Sirignano and Freund2021; Michelén-Ströfer & Xiao Reference Michelén-Ströfer and Xiao2021) and ensemble gradient learning (Michelén-Ströfer et al. Reference Michelén-Ströfer, Zhang and Xiao2021b). The square duct case uses the prediction from Shih's quadratic model (Shih Reference Shih1993) as training data, while the periodic hill case uses the DNS results as training data.

3. Case set-up

We use two test cases to show the performance of the proposed method for learning turbulence models: (1) flow in a square duct, and (2) separated flows over periodic hills. Both are classical test cases that are well-known to be challenging for linear eddy viscosity models (Xiao & Cinnella Reference Xiao and Cinnella2019). We aim to learn neural-network-represented nonlinear eddy viscosity models from velocity data by using the ensemble method. The learned models are evaluated by comparing to the ground truth for the square duct case and assessing its generalization performance in the separated flows over periodic hills. The results are also compared to those of the adjoint-based method. Details of the case set-up are discussed below.

3.1. Secondary flows in a square duct

The first case is the flow in a square duct, where the linear eddy viscosity model is not able to capture the in-plane secondary flow. The nonlinear eddy viscosity model, e.g. Shih's quadratic model (Shih Reference Shih1993), is able to simulate the secondary flows. Furthermore, Shih's quadratic model provides an explicit formula for the mapping between the scalar invariant ![]() $\boldsymbol {\theta }$ and the function

$\boldsymbol {\theta }$ and the function ![]() $\boldsymbol{g}$, which serves as an ideal benchmark for evaluating the accuracy of the trained model functions. In Shih's quadratic model, the

$\boldsymbol{g}$, which serves as an ideal benchmark for evaluating the accuracy of the trained model functions. In Shih's quadratic model, the ![]() $\boldsymbol{g}$ function of the scalar invariant

$\boldsymbol{g}$ function of the scalar invariant ![]() $\boldsymbol {\theta }$ is written as

$\boldsymbol {\theta }$ is written as

\begin{equation} \left.\begin{gathered} g^{(1)}\left(\theta_{1}, \theta_{2}\right)=\frac{-2 / 3}{1.25+\sqrt{2 \theta_{1}}+0.9 \sqrt{-2 \theta_{2}}}, \\ g^{(2)}\left(\theta_{1} , \theta_{2}\right)=\frac{7.5}{1000+\left(\sqrt{2 \theta_{1}}\right)^{3}}, \\ g^{(3)}\left(\theta_{1} , \theta_{2}\right)=\frac{1.5}{1000+\left(\sqrt{2 \theta_{1}}\right)^{3}}, \\ g^{(4)}\left(\theta_{1}, \theta_{2}\right)=\frac{-9.5}{1000+\left(\sqrt{2 \theta_{1}}\right)^{3}} {.} \end{gathered}\right\} \end{equation}

\begin{equation} \left.\begin{gathered} g^{(1)}\left(\theta_{1}, \theta_{2}\right)=\frac{-2 / 3}{1.25+\sqrt{2 \theta_{1}}+0.9 \sqrt{-2 \theta_{2}}}, \\ g^{(2)}\left(\theta_{1} , \theta_{2}\right)=\frac{7.5}{1000+\left(\sqrt{2 \theta_{1}}\right)^{3}}, \\ g^{(3)}\left(\theta_{1} , \theta_{2}\right)=\frac{1.5}{1000+\left(\sqrt{2 \theta_{1}}\right)^{3}}, \\ g^{(4)}\left(\theta_{1}, \theta_{2}\right)=\frac{-9.5}{1000+\left(\sqrt{2 \theta_{1}}\right)^{3}} {.} \end{gathered}\right\} \end{equation}Hence we use the velocity results from Shih's quadratic model as the synthetic truth and show that the method is able to reveal the underlying relationship between the scalar invariant and the tensor basis when the model exists in the form of the tensor bases. Moreover, we aim to compare the adjoint-based and the present ensemble-based methods in terms of the training accuracy and efficiency in this case.

The flow in a square duct is fully developed, and only one cell is used in the streamwise direction. Moreover, one-quarter of the domain is used due to the symmetry, and the mesh grid is ![]() $50 \times 50$. As for the architecture of the neural network in this case, two scalar invariants are used as input features, and four

$50 \times 50$. As for the architecture of the neural network in this case, two scalar invariants are used as input features, and four ![]() $g$ functions

$g$ functions ![]() $g^{(1-4)}$ are used in the output layer. The input features of the synthetic truth are shown in figure 2. Since the streamwise velocity

$g^{(1-4)}$ are used in the output layer. The input features of the synthetic truth are shown in figure 2. Since the streamwise velocity ![]() $u_x$ is dominant, the first two scalar invariants are approximately equal in magnitude but with opposite signs. The slight difference between the scalar invariants

$u_x$ is dominant, the first two scalar invariants are approximately equal in magnitude but with opposite signs. The slight difference between the scalar invariants ![]() $\theta _1$ and

$\theta _1$ and ![]() $\theta _2$ is caused by the secondary flow in the plane. We also provide the plot of

$\theta _2$ is caused by the secondary flow in the plane. We also provide the plot of ![]() $|\theta _1| - |\theta _2|$, which indicates the relative importance of the strain rate and the vorticity. The streamwise velocity gradient is relatively small near the centre of the duct, leading to the negligible scalar invariant

$|\theta _1| - |\theta _2|$, which indicates the relative importance of the strain rate and the vorticity. The streamwise velocity gradient is relatively small near the centre of the duct, leading to the negligible scalar invariant ![]() $\theta _1$. Moreover, the shear strain rate is dominant near the duct centre, while there is a pair of vortexes indicating the strong rotation rate. Also, it can be seen that the range of the input features is from

$\theta _1$. Moreover, the shear strain rate is dominant near the duct centre, while there is a pair of vortexes indicating the strong rotation rate. Also, it can be seen that the range of the input features is from ![]() $0$ to approximately

$0$ to approximately ![]() $7$. We draw

$7$. We draw ![]() $50$ samples of the neural network weights in this case. In the neural network, we use

$50$ samples of the neural network weights in this case. In the neural network, we use ![]() $2$ hidden layers with five neurons per layer. A sensitivity study of the training algorithm to the neural network architecture and the observation data is provided in Appendix C.

$2$ hidden layers with five neurons per layer. A sensitivity study of the training algorithm to the neural network architecture and the observation data is provided in Appendix C.

Figure 2. Contour plots of input features of the reference data for the square duct case and periodic hill case. (a) Square duct, ![]() $\theta _1$. (b) Square duct,

$\theta _1$. (b) Square duct, ![]() $|\theta _1|-|\theta _2|$. (c) Periodic hills,

$|\theta _1|-|\theta _2|$. (c) Periodic hills, ![]() $\theta _1$. (d) Periodic hills,

$\theta _1$. (d) Periodic hills, ![]() $\theta _2$. (e) Periodic hills,

$\theta _2$. (e) Periodic hills, ![]() $|\theta _1|-|\theta _2|$.

$|\theta _1|-|\theta _2|$.

3.2. Separated flow over periodic hills

The flow over periodic hills is a canonical separated flow for the numerical investigation of turbulence models. There is no ground truth for the model function that is able to capture the flow characteristics accurately. Here, we use the DNS results (Xiao et al. Reference Xiao, Wu, Laizet and Duan2020) as the training data, and learn the neural-network-based model by using the ensemble-based method. Further, we validate the generalizability of the learned model in similar configurations with varying slopes (Xiao et al. Reference Xiao, Wu, Laizet and Duan2020). Specifically, the hill geometry is parametrized with the slope coefficient ![]() $\alpha$. The separation extent decreases as the slope

$\alpha$. The separation extent decreases as the slope ![]() $\alpha$ increases from

$\alpha$ increases from ![]() $0.5$ to

$0.5$ to ![]() $1.5$. The case with slope parameter

$1.5$. The case with slope parameter ![]() $\alpha =1$ is used as the training case, and the cases with other slopes,

$\alpha =1$ is used as the training case, and the cases with other slopes, ![]() $\alpha = 0.5, 0.8, 1.2, 1.5$, are used to test the generalizability of the learned model in the scenarios having different levels of flow separation. The mesh is set as

$\alpha = 0.5, 0.8, 1.2, 1.5$, are used to test the generalizability of the learned model in the scenarios having different levels of flow separation. The mesh is set as ![]() $149$ cells in the streamwise direction, and

$149$ cells in the streamwise direction, and ![]() $99$ cells in the normal direction after grid-independence tests. We use the

$99$ cells in the normal direction after grid-independence tests. We use the ![]() $k$–

$k$–![]() $\varepsilon$ model (Launder & Sharma Reference Launder and Sharma1974) as the baseline model. The model learned from direct data is also provided for comparison. The implementation of the direct learning method is illustrated in Appendix D.

$\varepsilon$ model (Launder & Sharma Reference Launder and Sharma1974) as the baseline model. The model learned from direct data is also provided for comparison. The implementation of the direct learning method is illustrated in Appendix D.

For the two-dimensional incompressible flow, there are only the first two scalar invariants and independent tensors after merging the third tensor basis into the pressure term in the RANS equation (Michelén-Ströfer & Xiao Reference Michelén-Ströfer and Xiao2021). The input features of the DNS data are shown in figure 2, scaled with the RANS predicted time scale. The plot of the first scalar invariant ![]() $\theta _1$ indicates the large strain rate in the free shear layer and the windward side of the hill. The second scalar invariant

$\theta _1$ indicates the large strain rate in the free shear layer and the windward side of the hill. The second scalar invariant ![]() $\theta _2$ shows the vorticity mainly in the flow separation region at the leeward side of the hill. From the plot of

$\theta _2$ shows the vorticity mainly in the flow separation region at the leeward side of the hill. From the plot of ![]() $|\theta _1| - |\theta _2|$, it can be seen that the magnitude of the first two scalars is equivalent in most areas. The strong vorticity in the downhill region is caused by the flow separation, while near the uphill region, the shear strain rate is dominant due to the channel contraction. Compared to the square duct case, the separated flow over periodic hills has a wider range in the magnitude of the input features, which is from

$|\theta _1| - |\theta _2|$, it can be seen that the magnitude of the first two scalars is equivalent in most areas. The strong vorticity in the downhill region is caused by the flow separation, while near the uphill region, the shear strain rate is dominant due to the channel contraction. Compared to the square duct case, the separated flow over periodic hills has a wider range in the magnitude of the input features, which is from ![]() $0$ to about

$0$ to about ![]() $100$. That is because in the square duct case, the magnitude of the scalar invariant is determined mainly by the streamwise velocity

$100$. That is because in the square duct case, the magnitude of the scalar invariant is determined mainly by the streamwise velocity ![]() $u_x$, while in the periodic hill case, both

$u_x$, while in the periodic hill case, both ![]() $u_x$ and

$u_x$ and ![]() $u_y$ have considerable effects on the input features. Moreover, the magnitude of the time scale in the periodic hill is much larger than that in the square duct flow. Concretely, the maximum value for the periodic hill case is about

$u_y$ have considerable effects on the input features. Moreover, the magnitude of the time scale in the periodic hill is much larger than that in the square duct flow. Concretely, the maximum value for the periodic hill case is about ![]() $490$, while that for the square duct case is about

$490$, while that for the square duct case is about ![]() $10$. Hence we use a deeper neural network of

$10$. Hence we use a deeper neural network of ![]() $10$ hidden layers with

$10$ hidden layers with ![]() $10$ neurons per layer compared to the square duct case based on the sensitivity analysis of the neural network architecture as shown in Appendix C. We draw

$10$ neurons per layer compared to the square duct case based on the sensitivity analysis of the neural network architecture as shown in Appendix C. We draw ![]() $50$ samples of the neural network weights in this case. The training data set is summarized in table 3.

$50$ samples of the neural network weights in this case. The training data set is summarized in table 3.

Table 3. Summary of the configurations and the training data.

4. Results

4.1. Flow in a square duct: learning underlying closure functions

We first use the proposed ensemble-based method to train the turbulence model for flows in a square duct, and the results show that the predicted Reynolds stress has a good agreement with the synthetic ground truth (3.1). Plots of the velocity and the Reynolds stress are presented in figures 3 and 4, with comparison to the adjoint-based method and the ground truth. The contour lines for ![]() $u_y$ are indicated in the velocity vector plot to clearly show similar patterns among the ground truth, the adjoint-based method and the ensemble-based method. The contour plots of the Reynolds stress in

$u_y$ are indicated in the velocity vector plot to clearly show similar patterns among the ground truth, the adjoint-based method and the ensemble-based method. The contour plots of the Reynolds stress in ![]() $\tau _{xy}$,

$\tau _{xy}$, ![]() $\tau _{yz}$, and

$\tau _{yz}$, and ![]() $\tau _{yy}$ are used to demonstrate the ability of the ensemble-based method in discovering the underlying Reynolds stress model given velocity data. The in-plane velocity is driven by the Reynolds normal stresses imbalance

$\tau _{yy}$ are used to demonstrate the ability of the ensemble-based method in discovering the underlying Reynolds stress model given velocity data. The in-plane velocity is driven by the Reynolds normal stresses imbalance ![]() $\tau _{yy}-\tau _{zz}$, which is evident from the vorticity transport equation (Launder & Sandham Reference Launder and Sandham2002). As such, the imbalance

$\tau _{yy}-\tau _{zz}$, which is evident from the vorticity transport equation (Launder & Sandham Reference Launder and Sandham2002). As such, the imbalance ![]() $\tau _{yy}-\tau _{zz}$ is also presented in figure 4, demonstrating that the Reynolds stress field is learned accurately from the in-plane velocities. The learned model with the proposed method achieves results in both the velocity and Reynolds stress similar to those of the adjoint-based method. The error contours are provided to show the error distribution of the adjoint-based and ensemble-based methods in the estimation of velocity and Reynolds stress. It is noticeable that the adjoint-based method can achieve better velocity estimation than the ensemble-based method. As for the Reynolds stress, the adjoint-based and ensemble-based methods lead to similar results. It is noted that in this case, the entire field is used as the training data. By using fewer observations, e.g. only velocity data on the anti-diagonal line (upper right corner to lower left corner), the full velocity field can also be recovered, and the Reynolds stresses are learned correctly, but the errors are larger, especially in velocity. This is presented in Appendix C. The results demonstrate that the proposed method is able to learn the underlying turbulence model, which in turn provides good estimations of velocities and Reynolds stresses.

$\tau _{yy}-\tau _{zz}$ is also presented in figure 4, demonstrating that the Reynolds stress field is learned accurately from the in-plane velocities. The learned model with the proposed method achieves results in both the velocity and Reynolds stress similar to those of the adjoint-based method. The error contours are provided to show the error distribution of the adjoint-based and ensemble-based methods in the estimation of velocity and Reynolds stress. It is noticeable that the adjoint-based method can achieve better velocity estimation than the ensemble-based method. As for the Reynolds stress, the adjoint-based and ensemble-based methods lead to similar results. It is noted that in this case, the entire field is used as the training data. By using fewer observations, e.g. only velocity data on the anti-diagonal line (upper right corner to lower left corner), the full velocity field can also be recovered, and the Reynolds stresses are learned correctly, but the errors are larger, especially in velocity. This is presented in Appendix C. The results demonstrate that the proposed method is able to learn the underlying turbulence model, which in turn provides good estimations of velocities and Reynolds stresses.

Figure 3. Plots of the velocity vector and components ![]() $u_{x}$ and

$u_{x}$ and ![]() $u_y$ in the square duct predicted from the models learned by the adjoint (d–g) and ensemble (h–k) methods, compared against the ground truth (a–c). The velocity vectors are plotted along with contours of the in-plane velocity

$u_y$ in the square duct predicted from the models learned by the adjoint (d–g) and ensemble (h–k) methods, compared against the ground truth (a–c). The velocity vectors are plotted along with contours of the in-plane velocity ![]() $u_y$ scaled by a factor of 1000. The error contour is plotted based on

$u_y$ scaled by a factor of 1000. The error contour is plotted based on ![]() $\|\boldsymbol {u} - \boldsymbol {u}^{truth}\|$ normalized by the maximum magnitude of

$\|\boldsymbol {u} - \boldsymbol {u}^{truth}\|$ normalized by the maximum magnitude of ![]() $\boldsymbol {u}^{truth}$.

$\boldsymbol {u}^{truth}$.

Figure 4. Plots of Reynolds shear stresses ![]() $\tau _{xy}$ and

$\tau _{xy}$ and ![]() $\tau _{yz}$, normal stress

$\tau _{yz}$, normal stress ![]() $\tau _{yy}$, and normal stresses imbalance

$\tau _{yy}$, and normal stresses imbalance ![]() $\tau _{yy}-\tau _{zz}$, in the square duct predicted from the models learned by the adjoint (e–i) and ensemble (j–n) methods, compared against the ground truth (a–d). The error contour is plotted based on

$\tau _{yy}-\tau _{zz}$, in the square duct predicted from the models learned by the adjoint (e–i) and ensemble (j–n) methods, compared against the ground truth (a–d). The error contour is plotted based on ![]() $\|\boldsymbol {\tau } - \boldsymbol {\tau }^{truth}\|$ normalized by the maximum magnitude of

$\|\boldsymbol {\tau } - \boldsymbol {\tau }^{truth}\|$ normalized by the maximum magnitude of ![]() $\boldsymbol {\tau }^{truth}$.

$\boldsymbol {\tau }^{truth}$.

To show clearly the performance of the trained model, we provide the error in the estimation of velocity and Reynolds stress. The error over the computational domain is defined as

The comparison between the adjoint- and ensemble-based methods in the error of velocity and Reynolds stress, as well as the training efficiency, is provided in table 4. The results confirm that both adjoint- and ensemble-based methods are able to achieve satisfactory agreements in the velocities and to predict the Reynolds stresses well. By contrast, the adjoint-based method provides slightly better estimation than the ensemble-based method. Specifically, the errors in velocity and Reynolds stress with the adjoint-based method are ![]() $0.1\,\%$ and

$0.1\,\%$ and ![]() $4.5\,\%$, respectively, while those for the ensemble-based method are

$4.5\,\%$, respectively, while those for the ensemble-based method are ![]() $0.47\,\%$ and

$0.47\,\%$ and ![]() $5.8\,\%$, respectively.

$5.8\,\%$, respectively.

Table 4. Comparison of the estimation error and time cost between adjoint-based and ensemble-based learning.

As for the training efficiency, the adjoint-based method is more time-consuming compared to the ensemble-based method, as shown in table 4. Specifically, the adjoint-based method requires approximately ![]() $1000$ iterations, which significantly increase the wall time to about

$1000$ iterations, which significantly increase the wall time to about ![]() $133$ h in this case. In contrast, the ensemble-based method is efficient to obtain comparable results within

$133$ h in this case. In contrast, the ensemble-based method is efficient to obtain comparable results within ![]() $3.6$ h. To achieve error reduction

$3.6$ h. To achieve error reduction ![]() $\mathcal {E}(\boldsymbol {u}) < 0.005$, the adjoint-based method requires

$\mathcal {E}(\boldsymbol {u}) < 0.005$, the adjoint-based method requires ![]() $238$ steps and wall time

$238$ steps and wall time ![]() $32$ h, while the ensemble-based method can reach the same error within only

$32$ h, while the ensemble-based method can reach the same error within only ![]() $0.6$ h. That is likely due to the use of Hessian information and the covariance inflation factor