1 Introduction

We consider a structure-preserving numerical implementation of the Vlasov–Maxwell system, which is a system of kinetic equations describing the dynamics of charged particles in a plasma, coupled to Maxwell’s equations, describing electrodynamic phenomena arising from the motion of the particles as well as from externally applied fields. While the design of numerical methods for the Vlasov–Maxwell (and Vlasov–Poisson) system has attracted considerable attention since the early 1960s (see Sonnendrücker 2017 and references therein), the systematic development of structure-preserving or geometric numerical methods started only recently.

The Vlasov–Maxwell system exhibits a rather large set of structural properties, which should be considered in the discretization. Most prominently, the Vlasov–Maxwell system features a variational (Low 1958; Ye & Morrison 1992; Cendra et al. 1998) as well as a Hamiltonian (Morrison 1980; Weinstein & Morrison 1981; Marsden & Weinstein 1982; Morrison 1982) structure. This implies a range of conserved quantities, which by Noether’s theorem are related to symmetries of the Lagrangian and the Hamiltonian, respectively. In addition, the degeneracy of the Poisson brackets in the Hamiltonian formulation implies the conservation of several families of so-called Casimir functionals (see e.g. Morrison 1998 for a review).

Maxwell’s equations have a rich structure themselves. The various fields and potentials appearing in these equations are most naturally described as differential forms (Bossavit 1990; Baez & Muniain 1994; Warnick, Selfridge & Arnold 1998; Warnick & Russer 2006) (see also Darling 1994; Morita 2001; Dray 2014). The spaces of these differential forms constitute what is called a deRham complex. This implies certain compatibility conditions between the spaces, which essentially boil down to the identities from vector calculus, $\text{curl}\,\text{grad}=0$ and $\text{div}\,\text{curl}=0$. It has been realized that it is of utmost importance to preserve this complex structure in the discretization in order to obtain stable numerical methods. This goes hand in hand with preserving two more structural properties provided by the constraints on the electromagnetic fields, namely that the divergence of the magnetic field $\boldsymbol{B}$ vanishes, $\text{div}\,\boldsymbol{B}=0$, and Gauss’ law, $\text{div}\,\boldsymbol{E}=\rho$, stating that the divergence of the electric field $\boldsymbol{E}$ equals the charge density $\rho$.
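The discrete counterparts of these identities hold exactly when the discrete operators are built compatibly. The following minimal sketch assumes a two-dimensional periodic grid and plain forward differences. This is a mimetic finite-difference analogue chosen for illustration, not the spline-based FEEC spaces used in this paper, and the grid size and function names are hypothetical. It shows that the discrete $\text{curl}\,\text{grad}$ and $\text{div}\,\text{curl}$ vanish up to round-off for an arbitrary grid function, because the difference operators in $x$ and $y$ commute. In two dimensions the curl of a vector field is a scalar (`rot` below) and the curl of a scalar is a vector (`curl` below).

```python
import random

N = 16          # hypothetical number of grid points per direction (periodic)
h = 1.0 / N     # mesh width

def dx(a):
    """Forward difference in x on a periodic N x N grid."""
    return [[(a[(i + 1) % N][j] - a[i][j]) / h for j in range(N)] for i in range(N)]

def dy(a):
    """Forward difference in y on a periodic N x N grid."""
    return [[(a[i][(j + 1) % N] - a[i][j]) / h for j in range(N)] for i in range(N)]

def grad(phi):
    """Discrete gradient of a scalar grid function."""
    return dx(phi), dy(phi)

def rot(ux, uy):
    """Scalar curl of a 2D vector field: d_x u_y - d_y u_x."""
    a, b = dx(uy), dy(ux)
    return [[a[i][j] - b[i][j] for j in range(N)] for i in range(N)]

def curl(psi):
    """Vector curl of an out-of-plane scalar field: (d_y psi, -d_x psi)."""
    return dy(psi), [[-v for v in row] for row in dx(psi)]

def div(ux, uy):
    """Discrete divergence: d_x u_x + d_y u_y."""
    a, b = dx(ux), dy(uy)
    return [[a[i][j] + b[i][j] for j in range(N)] for i in range(N)]

def max_abs(a):
    return max(abs(v) for row in a for v in row)

random.seed(0)
phi = [[random.random() for _ in range(N)] for _ in range(N)]

print(max_abs(dx(phi)))          # O(1/h): the gradient itself is not small
print(max_abs(rot(*grad(phi))))  # discrete curl grad: round-off level only
print(max_abs(div(*curl(phi))))  # discrete div curl: round-off level only
```

If, for instance, the gradient and the curl were discretized on grids that do not share the same difference operators, the two compositions would in general no longer cancel. This is the discrete form of the compatibility requirement described above.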

The compatibility problem of discrete Vlasov–Maxwell solvers, which must be addressed to obtain exact charge conservation, has been widely discussed in the particle-in-cell (PIC) literature (Eastwood 1991; Villasenor & Buneman 1992; Esirkepov 2001; Umeda et al. 2003; Barthelmé & Parzani 2005; Yu et al. 2013). An alternative is to modify Maxwell’s equations by adding Lagrange multipliers to relax the constraint (Boris 1970; Marder 1987; Langdon 1992; Munz et al. 1999, 2000). For a more geometric perspective on charge conservation based on Whitney forms, one can refer to Moon, Teixeira & Omelchenko (2015). Even though it has attracted less interest, the problem also exists for grid-based discretizations of the Vlasov equations, and the same recipes apply there, as discussed in Sircombe & Arber (2009) and Crouseilles, Navaro & Sonnendrücker (2014). Note also that the infinite-dimensional kernel of the curl operator has made it particularly hard to find good discretizations for Maxwell’s equations, especially for the eigenvalue problem (Caorsi, Fernandes & Raffetto 2000; Hesthaven & Warburton 2004; Boffi 2006, 2010; Buffa & Perugia 2006).

Geometric Eulerian (grid-based) discretizations for the Vlasov–Poisson system have been proposed based on spline differential forms (Back & Sonnendrücker 2014) as well as variational integrators (Kraus, Maj & Sonnendrücker, in preparation; Kraus 2013). While the former guarantees exact local conservation of important quantities like mass, momentum, energy and the $L^{2}$ norm of the distribution function after a semi-discretization in space, the latter retains these properties even after the discretization in time. Recently, various discretizations based on discontinuous Galerkin methods have also been proposed, both for the Vlasov–Poisson system (de Dios, Carrillo & Shu 2011, 2012; de Dios & Hajian 2012; Heath et al. 2012; Cheng, Gamba & Morrison 2013; Madaule, Restelli & Sonnendrücker 2014) and for the Vlasov–Maxwell system (Cheng, Christlieb & Zhong 2014a,b; Cheng et al. 2014c). Even though these are usually not based on geometric principles, they tend to show good long-time conservation properties with respect to momentum and/or energy.

First attempts to obtain geometric semi-discretizations for particle-in-cell methods for the Vlasov–Maxwell system were made by Lewis (1970, 1972). In his works, Lewis presents a fairly general framework for discretizing Low’s Lagrangian (Low 1958) in space. After fixing the Coulomb gauge and applying a simple finite difference approximation to the fields, he obtains semi-discrete, energy- and charge-conserving Euler–Lagrange equations. For integration in time, the leapfrog method is used. In a similar way, Evstatiev, Shadwick and Stamm performed a variational semi-discretization of Low’s Lagrangian in space, using standard finite difference and finite element discretizations of the fields and an explicit symplectic integrator in time (Evstatiev & Shadwick 2013; Shadwick, Stamm & Evstatiev 2014; Stamm & Shadwick 2014). On the semi-discrete level, energy is conserved exactly, but momentum and charge are only conserved in an average sense.

The first semi-discretization of the noncanonical Poisson bracket formulation of the Vlasov–Maxwell system (Morrison 1980; Weinstein & Morrison 1981; Marsden & Weinstein 1982; Morrison 1982) can be found in the work of Holloway (1996). Spatial discretizations based on Fourier–Galerkin, Fourier collocation and Legendre–Gauss–Lobatto collocation methods are considered. The semi-discrete system is automatically guaranteed to be gauge invariant, as it is formulated in terms of the electromagnetic fields instead of the potentials. The different discretization approaches are shown to have varying properties regarding the conservation of momentum maps and Casimir invariants, but none preserves the Jacobi identity. It was already noted by Morrison (1981a) and Scovel & Weinstein (1994), however, that grid-based discretizations of noncanonical Poisson brackets do not appear to inherit a Poisson structure from the continuous problem, and Scovel & Weinstein suggested that one should turn to particle-based discretizations instead. In fact, for the vorticity equation it was shown by Morrison (1981b) that using discrete vortices leads to a semi-discretization that retains the Hamiltonian structure. Such an integrator for the Vlasov–Ampère Poisson bracket was first presented by Evstatiev & Shadwick (2013), based on a mixed semi-discretization in space, using particles for the distribution function and a grid-based discretization for the electromagnetic fields. However, this work lacks a proof of the Jacobi identity for the semi-discrete bracket, which is crucial for a Hamiltonian integrator.

The first fully discrete geometric particle-in-cell method for the Vlasov–Maxwell system was proposed by Squire, Qin & Tang (2012), applying a fully discrete action principle to Low’s Lagrangian and discretizing the electromagnetic fields via discrete exterior calculus (DEC; Hirani 2003; Desbrun, Kanso & Tong 2008; Stern et al. 2014). This leads to gauge-invariant variational integrators that satisfy exact charge conservation in addition to approximate energy conservation. Xiao et al. (2015) suggest a Hamiltonian discretization using Whitney form interpolants for the fields. Their integrator is obtained from a variational principle, so that the Jacobi identity is satisfied automatically. Moreover, the Whitney form interpolants preserve the deRham complex structure of the involved spaces, so that the algorithm is also charge conserving. Qin et al. (2016) use the same interpolants to directly discretize the canonical Vlasov–Maxwell bracket (Marsden & Weinstein 1982) and integrate the resulting finite-dimensional system with the symplectic Euler method. He et al. (2016) introduce a discretization of the noncanonical Vlasov–Maxwell bracket, based on first-order finite elements, which is a special case of our framework. The system of ordinary differential equations obtained from the semi-discrete bracket is integrated in time using the splitting method developed by Crouseilles, Einkemmer & Faou (2015) with a correction provided by He et al. (2015) (see also Qin et al. 2015). The authors prove the Jacobi identity of the semi-discrete bracket but skip over the Casimir invariants, which also need to be conserved for the semi-discrete system to be Hamiltonian.

In this work, we unify many of the preceding ideas in a general, flexible and rigorous framework based on finite element exterior calculus (FEEC) (Monk 2003; Arnold, Falk & Winther 2006, 2010; Christiansen, Munthe-Kaas & Owren 2011). We provide a semi-discretization of the noncanonical Vlasov–Maxwell Poisson structure which preserves the defining properties of the bracket, namely anti-symmetry and the Jacobi identity, as well as its Casimir invariants, implying that the semi-discrete system is still a Hamiltonian system. Due to the generality of our framework, these conservation properties are guaranteed independently of a particular choice of the finite element basis, as long as the corresponding finite element spaces satisfy certain compatibility conditions. In particular, this includes the spline spaces presented in § 3.4. In order to ensure that these properties are also conserved by the fully discrete numerical scheme, the semi-discrete bracket is used in conjunction with Poisson time integrators provided by the previously mentioned splitting method (Crouseilles et al. 2015; He et al. 2015; Qin et al. 2015) and higher-order compositions thereof. A semi-discretization of the noncanonical Hamiltonian structure of the relativistic Vlasov–Maxwell system with spin, and one for the gyrokinetic Vlasov–Maxwell system, have recently been described by Burby (2017).
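The principle of building a Poisson integrator from exactly solvable sub-flows, and raising its order by composition, can be sketched on a toy problem. The example below is an illustration only. It assumes the harmonic oscillator $H=p^{2}/2+q^{2}/2$ split into $T(p)=p^{2}/2$ and $V(q)=q^{2}/2$, which is not the Vlasov–Maxwell splitting discussed later. It composes the exact sub-flows into Lie–Trotter and Strang steps and then applies the standard "triple-jump" composition of Strang steps (of Yoshida/Suzuki type) to reach fourth order. Function names and step sizes are hypothetical.

```python
import math

def flow_T(q, p, t):
    """Exact flow of T(p) = p**2 / 2 for time t: q drifts, p is unchanged."""
    return q + t * p, p

def flow_V(q, p, t):
    """Exact flow of V(q) = q**2 / 2 for time t: p is kicked, q is unchanged."""
    return q, p - t * q

def lie(q, p, h):
    """Lie-Trotter splitting (symplectic Euler), first order."""
    q, p = flow_V(q, p, h)
    return flow_T(q, p, h)

def strang(q, p, h):
    """Strang splitting V(h/2) T(h) V(h/2), second order and time-symmetric."""
    q, p = flow_V(q, p, h / 2)
    q, p = flow_T(q, p, h)
    return flow_V(q, p, h / 2)

def triple_jump(q, p, h):
    """Fourth-order composition of three Strang steps with sub-steps g1*h, g2*h, g1*h."""
    g1 = 1.0 / (2.0 - 2.0 ** (1.0 / 3.0))
    g2 = 1.0 - 2.0 * g1          # negative: one backward sub-step
    for g in (g1, g2, g1):
        q, p = strang(q, p, g * h)
    return q, p

def error_at(step, h, t_end=1.0):
    """Distance to the exact solution q = cos t, p = -sin t at t_end."""
    q, p = 1.0, 0.0
    for _ in range(round(t_end / h)):
        q, p = step(q, p, h)
    return math.hypot(q - math.cos(t_end), p + math.sin(t_end))

orders = {}
for name, step in [("lie", lie), ("strang", strang), ("triple_jump", triple_jump)]:
    orders[name] = math.log2(error_at(step, 0.01) / error_at(step, 0.005))
    print(name, "observed order ~", round(orders[name], 2))
```

Each sub-flow is the exact flow of a Hamiltonian, so every composed step is symplectic. The composition only changes the order of accuracy, not this structural property.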

It is worth emphasizing that the aim and use of preserving the Hamiltonian structure in the course of discretization is not limited to good energy and momentum conservation properties. These are merely by-products, not the goal of the effort. Furthermore, from a practical point of view, the significance of global energy or momentum conservation by some numerical scheme for some Hamiltonian partial differential equation should not be overestimated. Of course, these are important properties of any Hamiltonian system and should be preserved within suitable error bounds in any numerical simulation. However, when performing a semi-discretization in space, the resulting finite-dimensional system of ordinary differential equations usually has millions or billions of degrees of freedom. Conserving only a very small number of invariants hardly restricts the numerical solution of such a large system: one can conserve the total energy of a system in a simulation and still obtain false or even unphysical results. It is much more useful to preserve local conservation laws like the local energy and momentum balance or multi-symplecticity (Reich 2000; Moore & Reich 2003), since these pose much more severe restrictions on the numerical solution than just conserving the total energy of the system. A symplectic or Poisson integrator, on the other hand, preserves the whole hierarchy of Poincaré integral invariants of the finite-dimensional system (Channell & Scovel 1990; Sanz-Serna & Calvo 1993). For a Hamiltonian system of ordinary differential equations with $n$ degrees of freedom, e.g. obtained from a semi-discrete Poisson bracket, these are $n$ invariants. In addition, such integrators often preserve Noether symmetries and the associated local conservation laws as well as Casimir invariants.
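The difference between conserving a single invariant and preserving phase-space structure is already visible for the harmonic oscillator $H=(p^{2}+q^{2})/2$. In the sketch below, which is an illustration with a hypothetical step size and not a method from this paper, explicit Euler multiplies phase-space area by $1+h^{2}$ in every step. Symplectic Euler, a Lie–Trotter splitting of $H$, preserves that area, which is the simplest Poincaré integral invariant. Both maps are linear, so their Jacobian determinant can be read off from the images of the unit vectors. Over many steps, the energy of explicit Euler grows exponentially while that of symplectic Euler stays close to its initial value.

```python
def explicit_euler(q, p, h):
    return q + h * p, p - h * q

def symplectic_euler(q, p, h):
    p_new = p - h * q            # kick with the old position
    return q + h * p_new, p_new  # drift with the new momentum

def jacobian_det(step, h):
    """Determinant of a linear one-step map, from its action on the unit vectors."""
    a, c = step(1.0, 0.0, h)   # image of (q, p) = (1, 0): first column
    b, d = step(0.0, 1.0, h)   # image of (q, p) = (0, 1): second column
    return a * d - b * c

def energy_after(step, h, n):
    q, p = 1.0, 0.0
    for _ in range(n):
        q, p = step(q, p, h)
    return 0.5 * (q * q + p * p)

h = 0.1
print(jacobian_det(explicit_euler, h))          # 1 + h**2: area grows every step
print(jacobian_det(symplectic_euler, h))        # 1 up to round-off: area preserved
print(energy_after(explicit_euler, h, 1000))    # grows like 0.5 * (1 + h**2)**1000
print(energy_after(symplectic_euler, h, 1000))  # stays within O(h) of 0.5
```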

We proceed as follows. In § 2, we provide a short review of the Vlasov–Maxwell system and its Poisson bracket formulation, including a discussion of the Jacobi identity, Casimir invariants and invariants commuting with the specific Vlasov–Maxwell Hamiltonian. In § 3, we introduce the finite element exterior calculus framework using the example of Maxwell’s equations, including the deRham complex and finite element spaces of differential forms. The actual discretization of the Poisson bracket is performed in § 4, where we prove the discrete Jacobi identity and the conservation of discrete Casimir invariants, including the discrete Gauss’ law. In § 5, we introduce a splitting for the Vlasov–Maxwell Hamiltonian, which leads to an explicit time stepping scheme. Various compositions are used in order to obtain higher-order methods, and backward error analysis is used to study the long-time energy behaviour. In § 6, we apply the method to the Vlasov–Maxwell system in 1d2v (one spatial and two velocity dimensions) using splines for the discretization of the fields. Section 7 concludes the paper with numerical experiments, using nonlinear Landau damping and the Weibel instability to verify the favourable properties of our scheme.

2 The Vlasov–Maxwell system

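The non-relativistic Vlasov equation for a particle species $s$ of charge $q_{s}$ and mass $m_{s}$ reads

$$\frac{\partial f_{s}}{\partial t}+\boldsymbol{v}\boldsymbol{\cdot}\boldsymbol{\nabla}_{\boldsymbol{x}}f_{s}+\frac{q_{s}}{m_{s}}\left(\boldsymbol{E}+\boldsymbol{v}\times\boldsymbol{B}\right)\boldsymbol{\cdot}\boldsymbol{\nabla}_{\boldsymbol{v}}f_{s}=0, \tag{2.1}$$

and couples nonlinearly to the Maxwell equations,

$$\frac{\partial\boldsymbol{E}}{\partial t}-\text{curl}\,\boldsymbol{B}=-\boldsymbol{J}, \tag{2.2}$$

$$\frac{\partial\boldsymbol{B}}{\partial t}+\text{curl}\,\boldsymbol{E}=0, \tag{2.3}$$

$$\text{div}\,\boldsymbol{E}=\rho, \tag{2.4}$$

$$\text{div}\,\boldsymbol{B}=0. \tag{2.5}$$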

These equations are to be solved with suitable initial and boundary conditions. Here, $(\boldsymbol{x},\boldsymbol{v})$ denotes the phase space coordinates, $f_{s}$ is the phase space distribution function of particle species $s$, $\boldsymbol{E}$ is the electric field and $\boldsymbol{B}$ is the magnetic flux density (or induction), which we will refer to as the magnetic field, as is prevalent in the plasma physics literature. We have scaled the variables, but retained the mass $m_{s}$ and the signed charge $q_{s}$ to distinguish species. Observe that we use $\text{grad}$, $\text{curl}$, $\text{div}$ to denote $\boldsymbol{\nabla}_{\boldsymbol{x}}$, $\boldsymbol{\nabla}_{\boldsymbol{x}}\times$, $\boldsymbol{\nabla}_{\boldsymbol{x}}\boldsymbol{\cdot}$, respectively, when they act on variables depending only on $\boldsymbol{x}$. The sources for the Maxwell equations, the charge density $\rho$ and the current density $\boldsymbol{J}$, are obtained from the distribution functions $f_{s}$ by

$$\rho=\mathop{\sum}_{s}q_{s}\int f_{s}\,\text{d}\boldsymbol{v},\qquad\boldsymbol{J}=\mathop{\sum}_{s}q_{s}\int f_{s}\,\boldsymbol{v}\,\text{d}\boldsymbol{v}. \tag{2.6}$$

Taking the divergence of Ampère’s equation (2.2) and using Gauss’ law (2.4) gives the continuity equation for charge conservation,

$$\frac{\partial\rho}{\partial t}+\text{div}\,\boldsymbol{J}=0. \tag{2.7}$$

Equation (2.7) serves as a compatibility condition for Maxwell’s equations, which are ill posed when (2.7) is not satisfied. Moreover, it can be shown that if the divergence constraints (2.4) and (2.5) are satisfied at the initial time, they remain satisfied for all times by the solution of Ampère’s equation (2.2) and Faraday’s law (2.3), which have a unique solution by themselves provided adequate initial and boundary conditions are imposed. This follows directly from the fact that the divergence of the curl vanishes and from (2.7). The continuity equation itself follows from the Vlasov equation by integration over velocity space and using the definitions of charge and current densities. However, this does not necessarily remain true when the charge and current densities are approximated numerically. The problem for numerical methods is then to find discrete sources which satisfy a discrete continuity equation compatible with the discrete divergence and curl operators. Another option is to modify the Maxwell equations, so that they are well posed independently of the sources, by introducing two additional scalar unknowns that can be seen as Lagrange multipliers for the divergence constraints. These should become arbitrarily small when the continuity equation is close to being satisfied.
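The compatibility argument can be checked numerically on a toy problem. The sketch below assumes a one-dimensional periodic staggered grid with hypothetical parameters. In one dimension $(\text{curl}\,\boldsymbol{B})_{x}=0$, so the $x$-component of Ampère’s equation reduces to $\partial_{t}E=-J$. The charge density $\rho$ is stored at the nodes, and $E$ and $J$ at the cell midpoints. For an arbitrary current, advancing $\rho$ with a discrete continuity equation that uses the same discrete divergence as Gauss’ law keeps the Gauss residual at round-off level. Using a different stencil for the charge update lets the residual drift.

```python
import random

N = 32            # hypothetical number of cells (periodic 1D grid)
h = 1.0 / N       # cell width
dt = 0.01         # hypothetical time step

def div_compatible(a):
    """(a_{i+1/2} - a_{i-1/2}) / h: a[i] lives at x_{i+1/2}, result at node x_i."""
    return [(a[i] - a[i - 1]) / h for i in range(N)]    # a[-1] wraps periodically

def div_incompatible(a):
    """A different (wider) stencil, (a_{i+1/2} - a_{i-3/2}) / (2h), for contrast."""
    return [(a[i] - a[i - 2]) / (2 * h) for i in range(N)]

def gauss_residual(E, rho):
    """max_i |(div E)_i - rho_i|, using the compatible divergence."""
    dE = div_compatible(E)
    return max(abs(dE[i] - rho[i]) for i in range(N))

def run(charge_div, steps=200, seed=1):
    rng = random.Random(seed)
    E = [rng.uniform(-1.0, 1.0) for _ in range(N)]
    rho = div_compatible(E)                              # Gauss' law holds at t = 0
    for _ in range(steps):
        J = [rng.uniform(-1.0, 1.0) for _ in range(N)]   # arbitrary current at x_{i+1/2}
        E = [E[i] - dt * J[i] for i in range(N)]         # 1D Ampère: dE/dt = -J
        dJ = charge_div(J)
        rho = [rho[i] - dt * dJ[i] for i in range(N)]    # discrete continuity equation
    return gauss_residual(E, rho)

print(run(div_compatible))    # round-off level: Gauss' law is preserved
print(run(div_incompatible))  # O(1): Gauss' law is violated
```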

2.1 Non-canonical Hamiltonian structure

The Vlasov–Maxwell system possesses a noncanonical Hamiltonian structure. The system of equations (2.1)–(2.3) can be obtained from the following Poisson bracket, a bilinear, anti-symmetric bracket that satisfies Leibniz’ rule and the Jacobi identity:

$$\begin{eqnarray}\displaystyle \left\{{\mathcal{F}},{\mathcal{G}}\right\}[\,f_{s},\boldsymbol{E},\boldsymbol{B}] & = & \displaystyle \mathop{\sum }_{s}\int {\displaystyle \frac{f_{s}}{m_{s}}}\left[\frac{\delta{\mathcal{F}}}{\delta f_{s}},\frac{\delta{\mathcal{G}}}{\delta f_{s}}\right]\,\text{d}\boldsymbol{x}\,\text{d}\boldsymbol{v}\nonumber\\ \displaystyle & & \displaystyle +\mathop{\sum }_{s}{\displaystyle \frac{q_{s}}{m_{s}}}\int f_{s}\left(\boldsymbol{\nabla}_{\boldsymbol{v}}\frac{\delta{\mathcal{F}}}{\delta f_{s}}\boldsymbol{\cdot }\frac{\delta{\mathcal{G}}}{\delta\boldsymbol{E}}-\boldsymbol{\nabla}_{\boldsymbol{v}}\frac{\delta{\mathcal{G}}}{\delta f_{s}}\boldsymbol{\cdot }\frac{\delta{\mathcal{F}}}{\delta\boldsymbol{E}}\right)\text{d}\boldsymbol{x}\,\text{d}\boldsymbol{v}\nonumber\\ \displaystyle & & \displaystyle +\mathop{\sum }_{s}{\displaystyle \frac{q_{s}}{m_{s}^{2}}}\int f_{s}\,\boldsymbol{B}\boldsymbol{\cdot }\left(\boldsymbol{\nabla}_{\boldsymbol{v}}\frac{\delta{\mathcal{F}}}{\delta f_{s}}\times \boldsymbol{\nabla}_{\boldsymbol{v}}\frac{\delta{\mathcal{G}}}{\delta f_{s}}\right)\text{d}\boldsymbol{x}\,\text{d}\boldsymbol{v}\nonumber\\ \displaystyle & & \displaystyle +\,\int \left(\text{curl}\,\frac{\delta{\mathcal{F}}}{\delta\boldsymbol{E}}\boldsymbol{\cdot }\frac{\delta{\mathcal{G}}}{\delta\boldsymbol{B}}-\text{curl}\,\frac{\delta{\mathcal{G}}}{\delta\boldsymbol{E}}\boldsymbol{\cdot }\frac{\delta{\mathcal{F}}}{\delta\boldsymbol{B}}\right)\text{d}\boldsymbol{x},\end{eqnarray}$$

where $[f,g]=\boldsymbol{\nabla}_{\boldsymbol{x}}f\boldsymbol{\cdot}\boldsymbol{\nabla}_{\boldsymbol{v}}g-\boldsymbol{\nabla}_{\boldsymbol{x}}g\boldsymbol{\cdot}\boldsymbol{\nabla}_{\boldsymbol{v}}f$. This bracket was introduced in Morrison (1980), with a term corrected in Marsden & Weinstein (1982) (see also Weinstein & Morrison 1981; Morrison 1982), and its limitation to divergence-free magnetic fields was first pointed out in Morrison (1982). See also Chandre et al. (2013) and Morrison (2013), where the latter contains the details of the direct proof of the Jacobi identity, which states that for all functionals ${\mathcal{F}}$, ${\mathcal{G}}$, ${\mathcal{K}}$,

$$\left\{\left\{{\mathcal{F}},{\mathcal{G}}\right\},{\mathcal{K}}\right\}+\left\{\left\{{\mathcal{G}},{\mathcal{K}}\right\},{\mathcal{F}}\right\}+\left\{\left\{{\mathcal{K}},{\mathcal{F}}\right\},{\mathcal{G}}\right\}=0.$$
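Anti-symmetry, the Jacobi identity and Casimirs arising from degeneracy can be made tangible on a finite-dimensional noncanonical bracket. As a hedged illustration that is not part of the Vlasov–Maxwell formulation, the sketch below uses the Lie–Poisson (rigid body) bracket on $\mathbb{R}^{3}$, $\{F,G\}(\boldsymbol{m})=-\boldsymbol{m}\boldsymbol{\cdot}(\boldsymbol{\nabla}F\times\boldsymbol{\nabla}G)$. Its cross-product structure resembles the magnetic term $\boldsymbol{B}\boldsymbol{\cdot}(\boldsymbol{\nabla}_{\boldsymbol{v}}\,\delta{\mathcal{F}}/\delta f_{s}\times\boldsymbol{\nabla}_{\boldsymbol{v}}\,\delta{\mathcal{G}}/\delta f_{s})$ of the bracket above. For a bracket of the form $\boldsymbol{\nabla}F\boldsymbol{\cdot}\mathsf{J}(\boldsymbol{m})\boldsymbol{\nabla}G$, the Jacobi identity holds for all functions as soon as it holds for linear (coordinate) functions, so the check uses linear $F=\boldsymbol{a}\boldsymbol{\cdot}\boldsymbol{m}$ and so on, with integer data for exact arithmetic. The degeneracy of the bracket shows up as the Casimir $C=|\boldsymbol{m}|^{2}/2$, which commutes with every function. All names are hypothetical.

```python
import random

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

def bracket(m, grad_f, grad_g):
    """Lie-Poisson bracket on R^3: {F, G}(m) = -m . (grad F x grad G)."""
    return -dot(m, cross(grad_f, grad_g))

def grad_of_bracket_linear(a, b):
    """For linear F = a.m and G = b.m, {F, G} is linear with gradient -(a x b)."""
    return tuple(-c for c in cross(a, b))

def check(seed):
    rng = random.Random(seed)
    m, a, b, c = (tuple(rng.randint(-9, 9) for _ in range(3)) for _ in range(4))
    antisymmetry = bracket(m, a, b) + bracket(m, b, a)
    jacobi = (bracket(m, grad_of_bracket_linear(a, b), c)
              + bracket(m, grad_of_bracket_linear(b, c), a)
              + bracket(m, grad_of_bracket_linear(c, a), b))
    casimir = bracket(m, m, a)   # C(m) = |m|^2 / 2 has grad C = m
    return antisymmetry, jacobi, casimir

results = [check(seed) for seed in range(100)]
print(all(r == (0, 0, 0) for r in results))   # exact integer arithmetic
```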

The time evolution of any functional ${\mathcal{F}}[\,f_{s},\boldsymbol{E},\boldsymbol{B}]$ is given by

$$\frac{\text{d}{\mathcal{F}}}{\text{d}t}=\left\{{\mathcal{F}},{\mathcal{H}}\right\}, \tag{2.10}$$

with the Hamiltonian ${\mathcal{H}}$ given as the sum of the kinetic energy of the particles and the electric and magnetic field energies,

$${\mathcal{H}}=\mathop{\sum}_{s}\frac{m_{s}}{2}\int\left|\boldsymbol{v}\right|^{2}f_{s}\,\text{d}\boldsymbol{x}\,\text{d}\boldsymbol{v}+\frac{1}{2}\int\left(\left|\boldsymbol{E}\right|^{2}+\left|\boldsymbol{B}\right|^{2}\right)\text{d}\boldsymbol{x}.$$

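In order to obtain the Vlasov equations, we consider the functional

$${\mathcal{F}}[\,f_{s}]=\int f_{s}(t,\boldsymbol{x}^{\prime},\boldsymbol{v}^{\prime})\,\delta(\boldsymbol{x}-\boldsymbol{x}^{\prime})\,\delta(\boldsymbol{v}-\boldsymbol{v}^{\prime})\,\text{d}\boldsymbol{x}^{\prime}\,\text{d}\boldsymbol{v}^{\prime}=f_{s}(t,\boldsymbol{x},\boldsymbol{v}),$$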

for which the equations of motion (2.10) are computed as

$$\begin{eqnarray}\displaystyle \frac{\partial f_{s}}{\partial t}(t,\boldsymbol{x},\boldsymbol{v}) & = & \displaystyle \int \delta(\boldsymbol{x}-\boldsymbol{x}^{\prime })\,\delta(\boldsymbol{v}-\boldsymbol{v}^{\prime })\left[\frac{1}{2}\left|\boldsymbol{v}^{\prime }\right|^{2},f_{s}(t,\boldsymbol{x}^{\prime },\boldsymbol{v}^{\prime })\right]\,\text{d}\boldsymbol{x}^{\prime }\text{d}\boldsymbol{v}^{\prime }\nonumber\\ \displaystyle & & \displaystyle -\,{\displaystyle \frac{q_{s}}{m_{s}}}\int \delta(\boldsymbol{x}-\boldsymbol{x}^{\prime })\,\delta(\boldsymbol{v}-\boldsymbol{v}^{\prime })\left(\boldsymbol{\nabla}_{\boldsymbol{v}}f_{s}(t,\boldsymbol{x}^{\prime },\boldsymbol{v}^{\prime })\right)\boldsymbol{\cdot }\boldsymbol{E}(t,\boldsymbol{x}^{\prime })\,\text{d}\boldsymbol{x}^{\prime }\text{d}\boldsymbol{v}^{\prime }\nonumber\\ \displaystyle & & \displaystyle -\,{\displaystyle \frac{q_{s}}{m_{s}}}\int \delta(\boldsymbol{x}-\boldsymbol{x}^{\prime })\,\delta(\boldsymbol{v}-\boldsymbol{v}^{\prime })\left(\boldsymbol{\nabla}_{\boldsymbol{v}}f_{s}(t,\boldsymbol{x}^{\prime },\boldsymbol{v}^{\prime })\right)\boldsymbol{\cdot }\left(\boldsymbol{v}^{\prime }\times \boldsymbol{B}(t,\boldsymbol{x}^{\prime })\right)\text{d}\boldsymbol{x}^{\prime }\text{d}\boldsymbol{v}^{\prime }\nonumber\\ \displaystyle & = & \displaystyle -\boldsymbol{v}\boldsymbol{\cdot }\boldsymbol{\nabla}_{\boldsymbol{x}}f_{s}(t,\boldsymbol{x},\boldsymbol{v})-{\displaystyle \frac{q_{s}}{m_{s}}}(\boldsymbol{E}(t,\boldsymbol{x})+\boldsymbol{v}\times \boldsymbol{B}(t,\boldsymbol{x}))\boldsymbol{\cdot }\boldsymbol{\nabla}_{\boldsymbol{v}}f_{s}(t,\boldsymbol{x},\boldsymbol{v}).\end{eqnarray}$$
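The Vlasov equation transports $f_{s}$ along its characteristics, $\text{d}\boldsymbol{x}/\text{d}t=\boldsymbol{v}$ and $\text{d}\boldsymbol{v}/\text{d}t=(q_{s}/m_{s})(\boldsymbol{E}+\boldsymbol{v}\times\boldsymbol{B})$. As a minimal sketch, assuming $\boldsymbol{E}=0$ and a uniform $\boldsymbol{B}$ (the values below are hypothetical), the velocity equation can be solved exactly as a rotation with Rodrigues’ formula. This makes explicit that the magnetic term changes the direction of $\boldsymbol{v}$ but not $|\boldsymbol{v}|$, i.e. it does no work. The function names are illustrative only.

```python
import math

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def rotate_in_B(v, B, q_over_m, t):
    """Exact solution of dv/dt = (q/m) v x B for uniform B (Rodrigues' rotation).

    Writing dv/dt = Omega x v with Omega = -(q/m) B, v rotates about Omega
    with angular speed |Omega|.
    """
    omega = tuple(-q_over_m * b for b in B)
    w = math.sqrt(sum(o * o for o in omega))
    if w == 0.0:
        return v
    k = tuple(o / w for o in omega)              # unit rotation axis
    th = w * t
    kxv = cross(k, v)
    kv = sum(ki * vi for ki, vi in zip(k, v))
    return tuple(v[i] * math.cos(th) + kxv[i] * math.sin(th)
                 + k[i] * kv * (1.0 - math.cos(th)) for i in range(3))

def speed(u):
    return math.sqrt(sum(c * c for c in u))

v0, B, qm = (1.0, 0.5, -0.2), (0.0, 0.3, 2.0), -1.0   # hypothetical electron-like values
v1 = rotate_in_B(v0, B, qm, 3.7)
print(speed(v0), speed(v1))   # equal up to round-off: the magnetic force does no work

# Consistency check: a tiny step reproduces the right-hand side (q/m) v x B.
dt = 1e-6
rhs = tuple(qm * c for c in cross(v0, B))
fd = tuple((a - b) / dt for a, b in zip(rotate_in_B(v0, B, qm, dt), v0))
print(max(abs(a - b) for a, b in zip(rhs, fd)))   # small, O(dt)
```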

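For the electric field, we consider

$${\mathcal{F}}[\boldsymbol{E}]=\int\boldsymbol{E}(t,\boldsymbol{x}^{\prime})\,\delta(\boldsymbol{x}-\boldsymbol{x}^{\prime})\,\text{d}\boldsymbol{x}^{\prime}=\boldsymbol{E}(t,\boldsymbol{x}),$$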

so that from (2.10) we obtain Ampère’s equation,

$$\begin{eqnarray}\displaystyle \frac{\unicode[STIX]{x2202}\boldsymbol{E}}{\unicode[STIX]{x2202}t}(t,\boldsymbol{x}) & = & \displaystyle \int \bigg(\text{curl}\,\boldsymbol{B}(t,\boldsymbol{x}^{\prime })-\mathop{\sum }_{s}q_{s}f_{s}(t,\boldsymbol{x}^{\prime },\boldsymbol{v}^{\prime })\,\boldsymbol{v}^{\prime }\bigg)\,\unicode[STIX]{x1D6FF}(\boldsymbol{x}-\boldsymbol{x}^{\prime })\,\text{d}\boldsymbol{x}^{\prime }\text{d}\boldsymbol{v}^{\prime }\nonumber\\ \displaystyle & = & \displaystyle \text{curl}\,\boldsymbol{B}(t,\boldsymbol{x})-\boldsymbol{J}(t,\boldsymbol{x}),\end{eqnarray}$$


where the current density $\boldsymbol{J}$ is given by

And for the magnetic field, we consider

and obtain the Faraday equation,

Our aim is to preserve this noncanonical Hamiltonian structure and its features at the discrete level. This can be done by taking only a finite number of initial positions for the particles instead of a continuum, and by restricting the electromagnetic fields to finite-dimensional subspaces of the original function spaces. A good candidate for such a discretization is the finite element particle-in-cell framework. In order to satisfy the continuity equation as well as the identities from vector calculus, and thereby preserve Gauss’ law and the divergence-free property of the magnetic field, the finite element spaces for the different fields cannot be chosen independently. The appropriate framework is provided by FEEC.

Before describing this framework in more detail, we briefly discuss some conservation laws of the Vlasov–Maxwell system. In Hamiltonian systems, there are two kinds of conserved quantities: Casimir invariants and momentum maps.

2.2 Invariants

One family of conserved quantities consists of the Casimir invariants (Casimirs), which originate from the degeneracy of the Poisson bracket. Casimirs are functionals ${\mathcal{C}}(f_{s},\boldsymbol{E},\boldsymbol{B})$ that Poisson commute with every other functional ${\mathcal{G}}(f_{s},\boldsymbol{E},\boldsymbol{B})$, i.e. $\{{\mathcal{C}},{\mathcal{G}}\}=0$. For the Vlasov–Maxwell bracket, there are several such Casimirs (Morrison Reference Morrison1987; Morrison & Pfirsch Reference Morrison and Pfirsch1989; Chandre et al. Reference Chandre, Guillebon, Back, Tassi and Morrison2013). First, the integral of any real function $h_{s}$ of each distribution function $f_{s}$ is preserved, i.e.

This family of Casimirs is a manifestation of Liouville’s theorem and corresponds to conservation of phase space volume. Furthermore, there are two Casimirs related to Gauss’ law (2.4) and to the divergence-free property of the magnetic field (2.5),

where $h_{E}$ and $h_{B}$ are arbitrary real functions of $\boldsymbol{x}$. The latter functional, ${\mathcal{C}}_{B}$, is not a true Casimir and should rather be referred to as a pseudo-Casimir. It acts like a Casimir in that it Poisson commutes with any other functional, but the Jacobi identity is only satisfied when $\text{div}\,\boldsymbol{B}=0$ (see Morrison Reference Morrison1982, Reference Morrison2013).

A second family of conserved quantities are momentum maps $\unicode[STIX]{x1D6F7}$, which arise from symmetries that preserve the particular Hamiltonian ${\mathcal{H}}$, and therefore also the equations of motion. This means that the Hamiltonian is constant along the flow of $\unicode[STIX]{x1D6F7}$, i.e.

From Noether’s theorem it follows that the generators $\unicode[STIX]{x1D6F7}$ of the symmetry are preserved by the time evolution, i.e.

If the symmetry condition (2.22) holds, this follows directly from the antisymmetry of the Poisson bracket, since

Therefore $\unicode[STIX]{x1D6F7}$ is a constant of motion if and only if $\{\unicode[STIX]{x1D6F7},{\mathcal{H}}\}=0$.
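The criterion $\{\unicode[STIX]{x1D6F7},{\mathcal{H}}\}=0$ can be checked numerically on a finite-dimensional toy model. The sketch below is an assumption-laden illustration, not the noncanonical Vlasov–Maxwell bracket. It uses the canonical bracket for $n$ particles on a line, a hypothetical translation-invariant Hamiltonian `H` with pair potential $\cos(x_{i}-x_{j})$, the total momentum `P` (generator of translations), and, for contrast, the sum of positions `X`, which is not conserved. Gradients are taken by central finite differences.

```python
import numpy as np


def gradient(F, z, eps=1e-6):
    """Central finite-difference gradient of a scalar function F at z."""
    g = np.zeros_like(z)
    for k in range(z.size):
        dz = np.zeros_like(z)
        dz[k] = eps
        g[k] = (F(z + dz) - F(z - dz)) / (2.0 * eps)
    return g


def canonical_bracket(F, G, z, n):
    """{F, G} = sum_i dF/dx_i dG/dp_i - dF/dp_i dG/dx_i, z = (x_1..x_n, p_1..p_n)."""
    gF, gG = gradient(F, z), gradient(G, z)
    return gF[:n] @ gG[n:] - gF[n:] @ gG[:n]


n = 4


def H(z):
    """Kinetic energy plus pair potential cos(x_i - x_j); invariant under x_i -> x_i + a."""
    x, p = z[:n], z[n:]
    i, j = np.triu_indices(n, k=1)
    return 0.5 * np.sum(p**2) + np.sum(np.cos(x[i] - x[j]))


def P(z):
    """Total momentum, the generator of the translations x_i -> x_i + a."""
    return np.sum(z[n:])


def X(z):
    """Sum of positions; generates momentum shifts and is not a symmetry of H."""
    return np.sum(z[:n])


z = np.random.default_rng(1).standard_normal(2 * n)
bracket_PH = canonical_bracket(P, H, z, n)   # ~ 0 up to finite-difference error
bracket_XH = canonical_bracket(X, H, z, n)   # equals sum_i p_i, nonzero in general
print(bracket_PH, bracket_XH, np.sum(z[n:]))
```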

The complete set of constants of motion, the algebra of invariants, will be discussed elsewhere. As an example of a momentum map, we consider here the total momentum

By direct computation, assuming periodic boundary conditions, it can be shown that

defining $Q(\boldsymbol{x}):=\unicode[STIX]{x1D70C}-\text{div}\,\boldsymbol{E}$, which is a local version of the Casimir ${\mathcal{C}}_{E}$. Therefore, if $Q\equiv 0$ at $t=0$, then, $Q$ being a local Casimir and hence conserved, it vanishes for all times and momentum is conserved. If $Q\not \equiv 0$ at $t=0$, then momentum is not conserved and changes in accordance with (2.26). For a multi-species plasma, $Q\equiv 0$ is equivalent to the physical requirement that Poisson’s equation be satisfied. If for some reason it is not exactly satisfied, momentum conservation is violated.

For a single-species plasma (say, electrons) with a neutralizing positive background charge $\unicode[STIX]{x1D70C}_{B}(\boldsymbol{x})$ (say, ions), Poisson’s equation is

The Poisson bracket for this case has the local Casimir

and the bracket does not account for the background charge. Because the background is stationary, the total momentum is

and it satisfies

We will verify this relation in the numerical experiments of § 7.5.

3 Finite element exterior calculus

FEEC is a mathematical framework for mixed finite element methods, which uses geometrical and topological ideas to systematically analyse the stability and convergence of finite element discretizations of partial differential equations. This proved to be a particularly difficult problem for Maxwell’s equations, which we will use in the following as an example to review this framework.

3.1 Maxwell’s equations

When Maxwell’s equations are used in a material medium, they are best understood by introducing two additional fields. The electromagnetic properties are then defined by the electric and magnetic fields, usually denoted by $\boldsymbol{E}$ and $\boldsymbol{B}$, the displacement field $\boldsymbol{D}$ and the magnetic intensity $\boldsymbol{H}$. For simple materials, the electric field is related to the displacement field and the magnetic field to the magnetic intensity by

where $\unicode[STIX]{x1D73A}$ and $\unicode[STIX]{x1D741}$ are the permittivity and permeability tensors reflecting the material properties. In vacuum they become the scalars $\unicode[STIX]{x1D700}_{0}$ and $\unicode[STIX]{x1D707}_{0}$, which are unity in our scaled variables, while for more complicated media such as plasmas they can be nonlinear operators (Morrison Reference Morrison2013). The Maxwell equations with the four fields read

The mathematical interpretation of these fields becomes clearer when they are interpreted as differential forms: $\boldsymbol{E}$ and $\boldsymbol{H}$ are 1-forms, $\boldsymbol{D}$ and $\boldsymbol{B}$ are 2-forms. The charge density $\unicode[STIX]{x1D70C}$ is a 3-form and the current density $\boldsymbol{J}$ a 2-form. Moreover, the electrostatic potential $\unicode[STIX]{x1D719}$ is a 0-form and the vector potential $\boldsymbol{A}$ a 1-form. The $\text{grad}$, $\text{curl}$ and $\text{div}$ operators represent the exterior derivative applied respectively to 0-forms, 1-forms and 2-forms.

To be more precise, there are two kinds of differential forms, depending on the orientation. Straight differential forms have an intrinsic (or inner) orientation, whereas twisted differential forms have an outer orientation, defined by the ambient space. Faraday’s equation and $\text{div}\,\boldsymbol{B}=0$ are naturally inner oriented, whereas Ampère’s equation and Gauss’ law are outer oriented. This knowledge can be used to define a natural discretization of Maxwell’s equations. For finite difference approximations, a dual mesh is needed for the discretization of twisted forms; this can already be found in Yee’s scheme (Yee Reference Yee1966). In the finite element context, only one mesh is used, but dual operators are used for the twisted forms. As a consequence, the charge density $\unicode[STIX]{x1D70C}$ will be treated as a 0-form and the current density $\boldsymbol{J}$ as a 1-form, instead of a (twisted) 3-form and a (twisted) 2-form, respectively. Another consequence is that Ampère’s equation and Gauss’ law are treated weakly, while Faraday’s equation and $\text{div}\,\boldsymbol{B}=0$ are treated strongly. A detailed description of this formalism can be found, e.g., in Bossavit’s lecture notes (Bossavit Reference Bossavit2006).
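The benefit of treating Faraday’s equation and $\text{div}\,\boldsymbol{B}=0$ strongly can be illustrated with a Yee-type finite-difference sketch. This is an assumption, not the spline FEEC discretization used later. The sketch uses a periodic tensor-product grid with unit spacing and forward-difference incidence matrices with entries $0,\pm 1$, and the function names are hypothetical. Because the partial differences commute, the discrete operators satisfy curl∘grad = 0 and div∘curl = 0 exactly. A discrete Faraday update $\boldsymbol{b}\leftarrow \boldsymbol{b}-\Delta t\,\mathbb{C}\boldsymbol{e}$ therefore never changes the discrete divergence of $\boldsymbol{b}$.

```python
import numpy as np


def periodic_difference(n):
    """1D periodic forward difference (D u)_i = u_{i+1} - u_i, entries 0 and +-1."""
    return np.roll(np.eye(n), 1, axis=1) - np.eye(n)


def incidence_matrices(nx, ny, nz):
    """Discrete grad, curl and div on a periodic nx x ny x nz grid (unit spacing).

    Dx, Dy, Dz are Kronecker products of 1D differences and commute pairwise.
    """
    Ix, Iy, Iz = np.eye(nx), np.eye(ny), np.eye(nz)
    Dx = np.kron(np.kron(periodic_difference(nx), Iy), Iz)
    Dy = np.kron(np.kron(Ix, periodic_difference(ny)), Iz)
    Dz = np.kron(np.kron(Ix, Iy), periodic_difference(nz))
    Z = np.zeros_like(Dx)
    G = np.vstack([Dx, Dy, Dz])          # nodes -> edges (0-forms -> 1-forms)
    C = np.block([[Z, -Dz, Dy],
                  [Dz, Z, -Dx],
                  [-Dy, Dx, Z]])         # edges -> faces (1-forms -> 2-forms)
    D = np.hstack([Dx, Dy, Dz])          # faces -> cells (2-forms -> 3-forms)
    return G, C, D


G, C, D = incidence_matrices(3, 4, 5)
print(np.abs(C @ G).max(), np.abs(D @ C).max())  # both exactly 0.0

# Discrete Faraday steps b <- b - dt * C e with arbitrary edge values e:
rng = np.random.default_rng(0)
b = C @ rng.standard_normal(C.shape[1])  # curl of a discrete vector potential, so D b = 0
for _ in range(10):
    e = rng.standard_normal(C.shape[1])
    b = b - 0.1 * (C @ e)
print(np.abs(D @ b).max())  # remains zero up to round-off
```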

3.2 Finite element spaces of differential forms

The full mathematical theory for the finite element discretization of differential forms is due to Arnold et al. (Reference Arnold, Falk and Winther2006, Reference Arnold, Falk and Winther2010) and is called finite element exterior calculus (see also Monk Reference Monk2003; Christiansen et al. Reference Christiansen, Munthe-Kaas and Owren2011). Most finite element spaces appearing in this theory were known before, but their connection in the context of differential forms had not been made explicit. The first building block of FEEC is the following commuting diagram:

where $\unicode[STIX]{x1D6FA}\subset \mathbb{R}^{3}$, $\unicode[STIX]{x1D6EC}^{k}(\unicode[STIX]{x1D6FA})$ is the space of $k$-forms on $\unicode[STIX]{x1D6FA}$, which we endow with the inner product $\langle \unicode[STIX]{x1D6FC},\unicode[STIX]{x1D6FD}\rangle =\int \unicode[STIX]{x1D6FC}\wedge \star \unicode[STIX]{x1D6FD}$, $\star$ is the Hodge operator and $\unicode[STIX]{x1D625}$ is the exterior derivative that generalizes the gradient, curl and divergence. Then we define

and the Sobolev spaces of differential forms

Since the exterior derivative of a 3-form vanishes on a three-dimensional manifold, $H\unicode[STIX]{x1D6EC}^{3}(\unicode[STIX]{x1D6FA})=L^{2}(\unicode[STIX]{x1D6FA})$. This diagram can also be expressed using the standard vector calculus formalism:

The first row of (3.9) represents the sequence of function spaces involved in Maxwell’s equations. Such a sequence is called a complex if, at each node, the image of the previous operator is contained in the kernel of the next operator, i.e. $\text{Im}(\text{grad})\subseteq \text{Ker}(\text{curl})$ and $\text{Im}(\text{curl})\subseteq \text{Ker}(\text{div})$. The power of the conforming finite element framework is that this complex can be reproduced at the discrete level by choosing appropriate finite-dimensional subspaces $V_{0}$, $V_{1}$, $V_{2}$, $V_{3}$. The order of the approximation is dictated by the choice made for $V_{0}$ and the requirement of having a complex at the discrete level. The projection operators $\unicode[STIX]{x1D6F1}_{i}$ are the finite element interpolants, which have the property that the diagram commutes. This means, for example, that the $\text{grad}$ of the projection onto $V_{0}$ is identical to the projection onto $V_{1}$ of the $\text{grad}$, i.e. $\text{grad}\,\unicode[STIX]{x1D6F1}_{0}\unicode[STIX]{x1D719}=\unicode[STIX]{x1D6F1}_{1}\,\text{grad}\,\unicode[STIX]{x1D719}$. As proven by Arnold, Falk and Winther, their choice of finite elements naturally leads to stable discretizations.
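The commuting property $\text{grad}\,\unicode[STIX]{x1D6F1}_{0}=\unicode[STIX]{x1D6F1}_{1}\,\text{grad}$ can be checked on the lowest-order one-dimensional analogue, sketched below under the following assumptions. The domain is the periodic interval $[0,1)$. $V_{0}$ is spanned by piecewise-linear hat functions with $\unicode[STIX]{x1D6F1}_{0}$ the nodal interpolant. $V_{1}$ is spanned by piecewise constants with $\unicode[STIX]{x1D6F1}_{1}$ the cell average (histopolation). The test function `f` and all helper names are hypothetical. The two sides agree up to quadrature error, which reflects the fundamental theorem of calculus on each cell.

```python
import numpy as np

N = 16                      # number of cells on the periodic interval [0, 1)
h = 1.0 / N
nodes = h * np.arange(N)    # x_0, ..., x_{N-1}; x_N is identified with x_0


def f(x):
    return np.sin(2 * np.pi * x) + 0.3 * np.cos(6 * np.pi * x)


def df(x):
    return 2 * np.pi * np.cos(2 * np.pi * x) - 1.8 * np.pi * np.sin(6 * np.pi * x)


def pi0(func):
    """Interpolation onto periodic P1 hats: the coefficients are the nodal values."""
    return func(nodes)


def pi1(func, n_gauss=8):
    """Histopolation onto piecewise constants: the coefficients are cell averages."""
    xg, wg = np.polynomial.legendre.leggauss(n_gauss)
    x = nodes[:, None] + 0.5 * h * (xg[None, :] + 1.0)
    return (func(x) * (0.5 * wg)[None, :]).sum(axis=1)


def grad_h(c):
    """Exact derivative of the P1 interpolant, written in the piecewise-constant basis."""
    return (np.roll(c, -1) - c) / h


lhs = grad_h(pi0(f))   # grad of the projection onto V_0
rhs = pi1(df)          # projection onto V_1 of the grad
print(np.max(np.abs(lhs - rhs)))  # only quadrature and round-off error remain
```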

There are many known sequences of finite element spaces that fit this diagram. The sequences proposed by Arnold, Falk and Winther are based on well-known finite element spaces. On tetrahedra these are the $H^{1}$-conforming $\mathbb{P}_{k}$ Lagrange finite elements for $V_{0}$, the $H(\text{curl})$-conforming Nédélec elements for $V_{1}$, the $H(\text{div})$-conforming Raviart–Thomas elements for $V_{2}$ and discontinuous Galerkin elements for $V_{3}$. A similar sequence can be defined on hexahedra based on the $H^{1}$-conforming $\mathbb{Q}_{k}$ Lagrange finite elements for $V_{0}$.

Other sequences that satisfy the complex property are available. Let us in particular cite the mimetic spectral elements (Kreeft, Palha & Gerritsma Reference Kreeft, Palha and Gerritsma2011; Gerritsma Reference Gerritsma2012; Palha et al. Reference Palha, Rebelo, Hiemstra, Kreeft and Gerritsma2014) and the spline finite elements (Buffa, Sangalli & Vázquez Reference Buffa, Sangalli and Vázquez2010; Buffa et al. Reference Buffa, Rivas, Sangalli and Vázquez2011; Ratnani & Sonnendrücker Reference Ratnani and Sonnendrücker2012), which we shall use in this work, as splines are generally favoured in PIC codes because their smoothness helps to reduce noise.
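A standard B-spline identity is one reason spline spaces of degrees $p$ and $p-1$ fit together in a discrete complex. For uniform knots with spacing $h$, the derivative of a degree-$p$ B-spline is a difference of two neighbouring degree-$(p-1)$ B-splines, $\frac{\text{d}}{\text{d}x}N_{i}^{p}=(N_{i}^{p-1}-N_{i+1}^{p-1})/h$. The sketch below checks this with SciPy’s `BSpline.basis_element`. The spacing `h`, the degree `p`, and the restriction to uniform knots are illustrative assumptions.

```python
import numpy as np
from scipy.interpolate import BSpline

h = 0.25   # uniform knot spacing (illustrative)
p = 3      # degree of the V_0 spline basis (illustrative)

# N^p_0 has knots 0, h, ..., (p+1)h; its degree-(p-1) neighbours are
# N^{p-1}_0 (knots 0..p h) and N^{p-1}_1 (knots h..(p+1)h)
knots = h * np.arange(p + 2)
N_p = BSpline.basis_element(knots, extrapolate=False)
N_left = BSpline.basis_element(knots[:-1], extrapolate=False)
N_right = BSpline.basis_element(knots[1:], extrapolate=False)

x = np.linspace(0.0, knots[-1], 401)[1:-1]   # interior points of the support

# With extrapolate=False, points outside a basis element's support give NaN;
# the B-spline itself is zero there, hence nan_to_num
dN = N_p.derivative()(x)
rhs = (np.nan_to_num(N_left(x)) - np.nan_to_num(N_right(x))) / h
print(np.max(np.abs(dN - rhs)))   # agreement to round-off
```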

3.3 Finite element discretization of Maxwell’s equations

This framework is sufficient to express discrete relations between all the straight (or primal) forms, i.e. $\boldsymbol{E}$, $\boldsymbol{B}$, $\boldsymbol{A}$ and $\unicode[STIX]{x1D719}$. The commuting diagram yields a direct expression of the discrete Faraday equation. Indeed, projecting all the components of the equation onto $V_{2}$ yields

which is equivalent, due to the commuting diagram property, to

Denoting the discrete fields with a subscript $h$, i.e. $\boldsymbol{B}_{h}=\unicode[STIX]{x1D6F1}_{2}\boldsymbol{B}$ and $\boldsymbol{E}_{h}=\unicode[STIX]{x1D6F1}_{1}\boldsymbol{E}$, this yields the discrete Faraday equation,

In the same way, thanks to the compatible finite element spaces, the discrete electric and magnetic fields are defined from the discrete potentials exactly as in the continuous case,

so that we automatically obtain

On the other hand, Ampère’s equation and Gauss’ law relate expressions involving twisted differential forms. In the finite element framework, these should be expressed on the dual complex to (3.9). However, since the dual of an operator in $L^{2}(\unicode[STIX]{x1D6FA})$ can be identified with its $L^{2}$ adjoint via an inner product, the discrete dual spaces are such that $V_{0}^{\ast }=V_{3}$, $V_{1}^{\ast }=V_{2}$, $V_{2}^{\ast }=V_{1}$ and $V_{3}^{\ast }=V_{0}$, so that the dual operators and spaces are not explicitly needed. They are handled most naturally by retaining the weak formulation of the corresponding equations. The weak form of Ampère’s equation is found by taking the dot product of (2.2) with a test function $\bar{\boldsymbol{E}}\in H(\text{curl},\unicode[STIX]{x1D6FA})$, integrating over $\unicode[STIX]{x1D6FA}$ and applying a Green identity. Assuming periodic boundary conditions, the weak solution $(\boldsymbol{E},\boldsymbol{B})\in H(\text{curl},\unicode[STIX]{x1D6FA})\times H(\text{div},\unicode[STIX]{x1D6FA})$ of Ampère’s equation is characterized by

The discrete version is obtained by replacing the continuous spaces by their finite-dimensional subspaces. The approximate solution $(\boldsymbol{E}_{h},\boldsymbol{B}_{h})\in V_{1}\times V_{2}$ is characterized by

In the same way, the weak solution of Gauss’ law with $\boldsymbol{E}\in H(\text{curl},\unicode[STIX]{x1D6FA})$ is characterized by

its discrete version for $\boldsymbol{E}_{h}\in V_{1}$ being characterized by

The last step of the finite element discretization is to define a basis for each of the finite-dimensional spaces $V_{0},V_{1},V_{2},V_{3}$ and to find equations relating the coefficients in these bases. Let us denote by $\{\unicode[STIX]{x1D6EC}_{i}^{0}\}_{i=1\ldots N_{0}}$ and $\{\unicode[STIX]{x1D6EC}_{i}^{3}\}_{i=1\ldots N_{3}}$ bases of $V_{0}$ and $V_{3}$, respectively, which are spaces of scalar functions (so that $\dim V_{k}=N_{k}$ for $k=0,3$), and by $\{\unicode[STIX]{x1D726}_{i,\unicode[STIX]{x1D707}}^{1}\}_{i=1\ldots N_{1},\unicode[STIX]{x1D707}=1\ldots 3}$ a basis of $V_{1}\subset H(\text{curl},\unicode[STIX]{x1D6FA})$ and $\{\unicode[STIX]{x1D726}_{i,\unicode[STIX]{x1D707}}^{2}\}_{i=1\ldots N_{2},\unicode[STIX]{x1D707}=1\ldots 3}$ a basis of $V_{2}\subset H(\text{div},\unicode[STIX]{x1D6FA})$, which are spaces of vector-valued functions (so that $\dim V_{k}=3N_{k}$ for $k=1,2$),

$$\begin{eqnarray}\unicode[STIX]{x1D726}_{i,1}^{k}=\left(\begin{array}{@{}c@{}}\unicode[STIX]{x1D6EC}_{i}^{k,1}\\ 0\\ 0\end{array}\right),\quad \unicode[STIX]{x1D726}_{i,2}^{k}=\left(\begin{array}{@{}c@{}}0\\ \unicode[STIX]{x1D6EC}_{i}^{k,2}\\ 0\end{array}\right),\quad \unicode[STIX]{x1D726}_{i,3}^{k}=\left(\begin{array}{@{}c@{}}0\\ 0\\ \unicode[STIX]{x1D6EC}_{i}^{k,3}\end{array}\right),\quad k=1,2.\end{eqnarray}$$

Let us note that the restriction to a basis of this form is not strictly necessary, and the generalization to more general bases is straightforward. However, for didactic reasons we stick to this form of the basis, as it simplifies some of the computations and thus helps to clarify the following derivations. For conciseness, and with a slight abuse of notation, we introduce vectors of basis functions $\unicode[STIX]{x1D726}^{k}=(\unicode[STIX]{x1D726}_{1,1}^{k},\unicode[STIX]{x1D726}_{1,2}^{k},\ldots ,\unicode[STIX]{x1D726}_{N_{k},3}^{k})^{\text{T}}$ for $k=1,2$, which are indexed by $I=3(i-1)+\unicode[STIX]{x1D707}=1\ldots 3N_{k}$ with $i=1\ldots N_{k}$ and $\unicode[STIX]{x1D707}=1\ldots 3$, and $\unicode[STIX]{x1D6EC}^{k}=(\unicode[STIX]{x1D6EC}_{1}^{k},\unicode[STIX]{x1D6EC}_{2}^{k},\ldots ,\unicode[STIX]{x1D6EC}_{N_{k}}^{k})^{\text{T}}$ for $k=0,3$, which are indexed by $i=1\ldots N_{k}$.
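The flat index $I=3(i-1)+\unicode[STIX]{x1D707}$ interleaves the three vector components of each basis function. The sketch below shows the map, its inverse, and the equivalent 0-based NumPy layout. The helper names and the placeholder coefficient vector are hypothetical. Since $I$ runs fastest over $\unicode[STIX]{x1D707}$, a coefficient vector of length $3N_{k}$ is a row-major array of shape $(N_{k},3)$.

```python
import numpy as np


def flat_index(i, mu):
    """Map (i, mu), i = 1..N_k, mu = 1..3, to the flat index I = 3(i-1) + mu."""
    return 3 * (i - 1) + mu


def split_index(I):
    """Inverse map: recover (i, mu) from the 1-based flat index I."""
    i0, mu0 = divmod(I - 1, 3)
    return i0 + 1, mu0 + 1


N1 = 4
# Round trip over all I = 1..3 N_1
print(all(flat_index(*split_index(I)) == I for I in range(1, 3 * N1 + 1)))

# 0-based storage: the same ordering is a row-major (N_1, 3) reshape
e = np.arange(1.0, 3 * N1 + 1)       # placeholder values e_1, ..., e_{3 N_1}
e_by_component = e.reshape(N1, 3)    # entry [i-1, mu-1] holds e_{i,mu}
print(e_by_component[1, 2] == e[flat_index(2, 3) - 1])
```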

We shall also need the dual basis of each basis, which in finite element terminology corresponds to the degrees of freedom. For the bases $\unicode[STIX]{x1D6EC}_{i}^{k}$, $k=0,3$, and $\unicode[STIX]{x1D726}_{I}^{k}$, $k=1,2$, the dual bases are denoted by $\unicode[STIX]{x1D6F4}_{i}^{k}$ and $\unicode[STIX]{x1D72E}_{I}^{k}$, respectively, and defined by

for the scalar-valued bases $\unicode[STIX]{x1D6EC}_{i}^{k}$, and

for the vector-valued bases $\unicode[STIX]{x1D726}_{I}^{k}$, where $\left<\boldsymbol{\cdot },\boldsymbol{\cdot }\right>$ denotes the $L^{2}$ inner product in the appropriate space and $\unicode[STIX]{x1D6FF}_{IJ}$ is the Kronecker symbol, whose value is unity for $I=J$ and zero otherwise. We introduce the linear functionals $L^{2}\unicode[STIX]{x1D6EC}^{k}(\unicode[STIX]{x1D6FA})\rightarrow \mathbb{R}$, which are denoted by $\unicode[STIX]{x1D70E}_{i}^{k}$ for $k=0,3$ and by $\unicode[STIX]{x1D748}_{I}^{k}$ for $k=1,2$, respectively. On the finite element space they are represented by the dual basis functions $\unicode[STIX]{x1D6F4}_{i}^{k}$ and $\unicode[STIX]{x1D72E}_{I}^{k}$ and defined by

and

so that $\unicode[STIX]{x1D70E}_{i}^{k}(\unicode[STIX]{x1D6EC}_{j}^{k})=\unicode[STIX]{x1D6FF}_{ij}$ and $\unicode[STIX]{x1D748}_{I}^{k}(\unicode[STIX]{x1D726}_{J}^{k})=\unicode[STIX]{x1D6FF}_{IJ}$ for the appropriate $k$. Elements of the finite-dimensional spaces can be expanded in their respective bases, e.g. elements of $V_{1}$ and $V_{2}$, respectively, as

denoting by $\boldsymbol{e}=(e_{1,1},\,e_{1,2},\ldots ,e_{N_{1},3})^{\text{T}}\in \mathbb{R}^{3N_{1}}$ and $\boldsymbol{b}=(b_{1,1},\,b_{1,2},\ldots ,b_{N_{2},3})^{\text{T}}\in \mathbb{R}^{3N_{2}}$ the corresponding degrees of freedom with $e_{i,\unicode[STIX]{x1D707}}=\unicode[STIX]{x1D748}_{i,\unicode[STIX]{x1D707}}^{1}(\boldsymbol{E}_{h})$ and $b_{i,\unicode[STIX]{x1D707}}=\unicode[STIX]{x1D748}_{i,\unicode[STIX]{x1D707}}^{2}(\boldsymbol{B}_{h})$, respectively.
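One way to make the dual basis concrete is to note that biorthogonality in the $L^{2}$ inner product, $\langle \unicode[STIX]{x1D6F4}_{i},\unicode[STIX]{x1D6EC}_{j}\rangle =\unicode[STIX]{x1D6FF}_{ij}$, is achieved by $\unicode[STIX]{x1D6F4}_{i}=\sum _{k}(\mathbb{M}^{-1})_{ik}\unicode[STIX]{x1D6EC}_{k}$, where $\mathbb{M}$ is the mass matrix. The degrees of freedom of an element of the space are then recovered by an $L^{2}$ solve. The sketch below assumes a scalar 1D space of periodic piecewise-linear hat functions on $[0,1)$ with Gauss–Legendre quadrature. The helper names are hypothetical. The vector-valued case proceeds the same way, component by component.

```python
import numpy as np


def gauss_points(N, n_gauss=3):
    """Gauss-Legendre nodes and weights on a uniform periodic grid of [0, 1)."""
    h = 1.0 / N
    xg, wg = np.polynomial.legendre.leggauss(n_gauss)
    left = h * np.arange(N)
    x = (left[:, None] + 0.5 * h * (xg[None, :] + 1.0)).ravel()
    w = np.tile(0.5 * h * wg, N)
    return x, w


def periodic_hats(x, N):
    """Values of the N periodic hat functions at x, shape (len(x), N)."""
    h = 1.0 / N
    d = np.abs(x[:, None] - h * np.arange(N)[None, :])
    d = np.minimum(d, 1.0 - d)               # periodic distance on [0, 1)
    return np.clip(1.0 - d / h, 0.0, None)


N = 8
x, w = gauss_points(N)
Lam = periodic_hats(x, N)                   # Lam[q, j] = Lambda_j(x_q)
M = Lam.T @ (w[:, None] * Lam)              # mass matrix M_kj = <Lambda_k, Lambda_j>

# Dual basis Sigma_i = sum_k (M^{-1})_{ik} Lambda_k, evaluated at quadrature points
Sigma = Lam @ np.linalg.inv(M).T
gram = Sigma.T @ (w[:, None] * Lam)         # <Sigma_i, Lambda_j>, should be the identity

# Degrees of freedom of f_h = sum_j c_j Lambda_j: sigma_i(f_h) = <Sigma_i, f_h>
c = np.random.default_rng(0).standard_normal(N)
f_h = Lam @ c
dofs = Sigma.T @ (w * f_h)
print(np.allclose(gram, np.eye(N)), np.allclose(dofs, c))
```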

Denoting the elements of $\boldsymbol{e}$ by $\boldsymbol{e}_{I}$ and the elements of $\boldsymbol{b}$ by $\boldsymbol{b}_{I}$, we have $\boldsymbol{e}_{I}=\unicode[STIX]{x1D748}_{I}^{1}(\boldsymbol{E}_{h})$ and $\boldsymbol{b}_{I}=\unicode[STIX]{x1D748}_{I}^{2}(\boldsymbol{B}_{h})$, respectively, and can re-express (3.25) as

Henceforth we will use both notations in parallel, choosing whichever is more practical at any given time.

Due to the complex property we have that $\text{curl}\,\boldsymbol{E}_{h}\in V_{2}$ for all $\boldsymbol{E}_{h}\in V_{1}$, so that $\text{curl}\,\boldsymbol{E}_{h}$ can be expressed in the basis of $V_{2}$ by

$$\text{curl}\,\boldsymbol{E}_{h}=\sum_{I=1}^{3N_{2}}\boldsymbol{\sigma}_{I}^{2}(\text{curl}\,\boldsymbol{E}_{h})\,\boldsymbol{\Lambda}_{I}^{2}.$$

Let us also denote by $\boldsymbol{c}=(c_{1,1},\,c_{1,2},\ldots ,c_{N_{2},3})^{\text{T}}$ the degrees of freedom of $\text{curl}\,\boldsymbol{E}_{h}$, i.e. $c_{I}=\boldsymbol{\sigma}_{I}^{2}(\text{curl}\,\boldsymbol{E}_{h})$, so that $\text{curl}\,\boldsymbol{E}_{h}$ can also be written as

$$\text{curl}\,\boldsymbol{E}_{h}=\sum_{I=1}^{3N_{2}}c_{I}\,\boldsymbol{\Lambda}_{I}^{2}.$$

On the other hand

$$\begin{eqnarray}\left.\begin{array}{@{}c@{}}\displaystyle \text{curl}\,\boldsymbol{E}_{h}=\text{curl}\left(\mathop{\sum }_{I=1}^{3N_{1}}\boldsymbol{e}_{I}\,\boldsymbol{\Lambda}_{I}^{1}\right)=\mathop{\sum }_{I=1}^{3N_{1}}\boldsymbol{e}_{I}\,\text{curl}\,\boldsymbol{\Lambda}_{I}^{1},\\ \displaystyle \boldsymbol{\sigma}_{I}^{2}(\text{curl}\,\boldsymbol{E}_{h})=\mathop{\sum }_{J=1}^{3N_{1}}\boldsymbol{e}_{J}\,\boldsymbol{\sigma}_{I}^{2}(\text{curl}\,\boldsymbol{\Lambda}_{J}^{1}).\end{array}\right\}\end{eqnarray}$$
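The second line of this display states that $\boldsymbol{\sigma}_{I}^{2}(\text{curl}\,\boldsymbol{E}_{h})$ depends linearly on the coefficients of $\boldsymbol{E}_{h}$, with weights $\boldsymbol{\sigma}_{I}^{2}(\text{curl}\,\boldsymbol{\Lambda}_{J}^{1})$; hence column $J$ of the discrete curl matrix is the degree-of-freedom vector of $\text{curl}\,\boldsymbol{\Lambda}_{J}^{1}$. A minimal sketch of assembling such a matrix column by column, assuming a hypothetical routine `curl_dofs` that returns $\boldsymbol{\sigma}^{2}(\text{curl}\,\boldsymbol{E}_{h})$ from the coefficient vector. Here `curl_dofs` is a lowest-order periodic finite-difference stand-in (with $N_1=N_2=n^3$), not the B-spline operator of § 3.4.

```python
import numpy as np


def curl_dofs(e, n):
    """Hypothetical stand-in for e -> sigma^2(curl E_h) on a periodic n^3 grid.

    Uses backward differences (d f)_i = f_i - f_{i-1} along each axis and the
    interleaved ordering of e, in which the component index mu runs fastest.
    """
    E = e.reshape(n, n, n, 3)

    def d(f, axis):
        return f - np.roll(f, 1, axis=axis)

    Ex, Ey, Ez = E[..., 0], E[..., 1], E[..., 2]
    c = np.stack([d(Ez, 1) - d(Ey, 2),   # x-component of the curl
                  d(Ex, 2) - d(Ez, 0),   # y-component
                  d(Ey, 0) - d(Ex, 1)],  # z-component
                 axis=-1)
    return c.reshape(-1)


def assemble(op, ncols):
    """Matrix of a linear map, built column by column: column J = op(unit vector J)."""
    return np.column_stack([op(np.eye(1, ncols, J).ravel()) for J in range(ncols)])


n = 3
C = assemble(lambda v: curl_dofs(v, n), 3 * n**3)
e = np.random.default_rng(0).standard_normal(3 * n**3)
c = C @ e  # degrees of freedom of curl E_h, i.e. c = C e
```

Assembling from unit vectors works for any linear degree-of-freedom map; for large grids one would store the result in a sparse format instead of a dense array.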

Denoting by $\mathbb{C}$ the discrete curl matrix,

$$(\mathbb{C})_{IJ}=\boldsymbol{\sigma}_{I}^{2}(\text{curl}\,\boldsymbol{\Lambda}_{J}^{1}),\qquad 1\leqslant I\leqslant 3N_{2},\quad 1\leqslant J\leqslant 3N_{1},$$

the degrees of freedom of $\text{curl}\,\boldsymbol{E}_{h}$ in $V_{2}$ are related to the degrees of freedom of $\boldsymbol{E}_{h}$ in $V_{1}$ by $\boldsymbol{c}=\mathbb{C}\boldsymbol{e}$. In the same way we can define the discrete gradient matrix $\mathbb{G}$ and the discrete divergence matrix $\mathbb{D}$, given by

$$(\mathbb{G})_{Ij}=\boldsymbol{\sigma}_{I}^{1}(\text{grad}\,\Lambda_{j}^{0}),\qquad (\mathbb{D})_{iJ}=\sigma_{i}^{3}(\text{div}\,\boldsymbol{\Lambda}_{J}^{2}),$$

respectively. Denoting by $\boldsymbol{\phi}=(\varphi_{1},\ldots ,\varphi_{N_{0}})^{\text{T}}$ and $\boldsymbol{a}=(a_{1,1},\,a_{1,2},\,\ldots ,\,a_{N_{1},3})^{\text{T}}$ the degrees of freedom of the potentials $\phi_{h}$ and $\boldsymbol{A}_{h}$, with $\varphi_{i}=\sigma_{i}^{0}(\phi_{h})$ for $1\leqslant i\leqslant N_{0}$ and $\boldsymbol{a}_{I}=\boldsymbol{\sigma}_{I}^{1}(\boldsymbol{A}_{h})$ for $1\leqslant I\leqslant 3N_{1}$, the relation (3.13) between the discrete fields (3.25) and the potentials can be written using only the degrees of freedom as

$$\boldsymbol{e}=-\mathbb{G}\boldsymbol{\phi}-\frac{\text{d}\boldsymbol{a}}{\text{d}t},\qquad \boldsymbol{b}=\mathbb{C}\boldsymbol{a}.$$

Finally, we need to define the so-called mass matrices in each of the discrete spaces $V_{i}$, which define the discrete Hodge operator linking the primal complex with the dual complex. We denote by $(\mathbb{M}_{0})_{ij}=\int _{\Omega}\Lambda_{i}^{0}(\boldsymbol{x})\,\Lambda_{j}^{0}(\boldsymbol{x})\,\text{d}\boldsymbol{x}$ with $1\leqslant i,j\leqslant N_{0}$ and $(\mathbb{M}_{1})_{IJ}=\int _{\Omega}\boldsymbol{\Lambda}_{I}^{1}(\boldsymbol{x})\boldsymbol{\cdot }\boldsymbol{\Lambda}_{J}^{1}(\boldsymbol{x})\,\text{d}\boldsymbol{x}$ with $1\leqslant I,J\leqslant 3N_{1}$ the mass matrices in $V_{0}$ and $V_{1}$, respectively, and similarly by $\mathbb{M}_{2}$ and $\mathbb{M}_{3}$ the mass matrices in $V_{2}$ and $V_{3}$. Using these definitions as well as $\boldsymbol{\varrho}=(\varrho_{1},\ldots ,\varrho_{N_{0}})^{\text{T}}$ and $\boldsymbol{j}=(j_{1,1},\,j_{1,2},\ldots ,j_{N_{1},3})^{\text{T}}$ with $\varrho_{i}=\sigma_{i}^{0}(\rho_{h})$ for $1\leqslant i\leqslant N_{0}$ and $\boldsymbol{j}_{I}=\boldsymbol{\sigma}_{I}^{1}(\boldsymbol{J}_{h})$ for $1\leqslant I\leqslant 3N_{1}$ (recall that the charge density $\rho$ is treated as a 0-form and the current density $\boldsymbol{J}$ as a 1-form), we obtain a system of ordinary differential equations for each of the continuous equations, namely

It is worth emphasizing that $\text{div}\,\boldsymbol{B}=0$ is satisfied in strong form, which is important for the Jacobi identity of the discretized Poisson bracket (cf. § 4.4). The complex properties can also be expressed at the matrix level. The primal sequence is

$$\mathbb{R}^{N_{0}}\xrightarrow{\;\mathbb{G}\;}\mathbb{R}^{3N_{1}}\xrightarrow{\;\mathbb{C}\;}\mathbb{R}^{3N_{2}}\xrightarrow{\;\mathbb{D}\;}\mathbb{R}^{N_{3}},$$

with $\text{Im}\,\mathbb{G}\subseteq \text{Ker}\,\mathbb{C}$ and $\text{Im}\,\mathbb{C}\subseteq \text{Ker}\,\mathbb{D}$, and the dual sequence is

$$\mathbb{R}^{N_{3}}\xrightarrow{\;\mathbb{D}^{\text{T}}\;}\mathbb{R}^{3N_{2}}\xrightarrow{\;\mathbb{C}^{\text{T}}\;}\mathbb{R}^{3N_{1}}\xrightarrow{\;\mathbb{G}^{\text{T}}\;}\mathbb{R}^{N_{0}},$$

with $\text{Im}\,\mathbb{D}^{\text{T}}\subseteq \text{Ker}\,\mathbb{C}^{\text{T}}$ and $\text{Im}\,\mathbb{C}^{\text{T}}\subseteq \text{Ker}\,\mathbb{G}^{\text{T}}$.
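These inclusions can be checked numerically on a small example. The sketch below is a stand-in under stated assumptions rather than the B-spline matrices of § 3.4: it builds hypothetical matrices $\mathbb{G}$, $\mathbb{C}$ and $\mathbb{D}$ on a periodic $n\times n\times n$ grid from a one-dimensional periodic backward-difference matrix via Kronecker products, and it orders the degrees of freedom component by component (all $x$-components first), which differs from the interleaved ordering of $\boldsymbol{e}$ only by a permutation. Because the Kronecker factors commute, $\mathbb{C}\mathbb{G}=0$ and $\mathbb{D}\mathbb{C}=0$, and transposing gives the dual inclusions.

```python
import numpy as np


def periodic_diff(n):
    """1D periodic backward-difference matrix: (d f)_i = f_i - f_{i-1}."""
    eye = np.eye(n)
    return eye - np.roll(eye, -1, axis=1)


def discrete_complex(n):
    """Hypothetical G, C, D on a periodic n^3 grid (component-major ordering)."""
    d, eye = periodic_diff(n), np.eye(n)
    Dx = np.kron(np.kron(d, eye), eye)  # difference along x (slowest grid index)
    Dy = np.kron(np.kron(eye, d), eye)  # difference along y
    Dz = np.kron(np.kron(eye, eye), d)  # difference along z (fastest grid index)
    Z = np.zeros_like(Dx)
    G = np.vstack([Dx, Dy, Dz])                               # V0 -> V1
    C = np.block([[Z, -Dz, Dy], [Dz, Z, -Dx], [-Dy, Dx, Z]])  # V1 -> V2
    D = np.hstack([Dx, Dy, Dz])                               # V2 -> V3
    return G, C, D


G, C, D = discrete_complex(4)
a = np.random.default_rng(1).standard_normal(C.shape[1])
b = C @ a                   # "magnetic" degrees of freedom from a potential
div_b = D @ b               # vanishes up to round-off since D C = 0
```

In particular, any $\boldsymbol{b}=\mathbb{C}\boldsymbol{a}$ satisfies $\mathbb{D}\boldsymbol{b}=0$, the matrix-level counterpart of $\text{div}\,\boldsymbol{B}=0$ holding in strong form.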

3.4 Example: B-spline finite elements

In the following, we will use so-called basic splines, or B-splines, as bases for the finite element function spaces. B-splines are piecewise polynomials. The points where two polynomials connect are called knots. The $j$th basic spline (B-spline) of degree $p$ can be defined recursively by

$$N_{j}^{p}(x)=w_{j}^{p}(x)\,N_{j}^{p-1}(x)+\bigl(1-w_{j+1}^{p}(x)\bigr)\,N_{j+1}^{p-1}(x),$$

where

$$w_{j}^{p}(x)=\frac{x-x_{j}}{x_{j+p}-x_{j}}$$

and

$$N_{j}^{0}(x)=\begin{cases}1, & x\in [x_{j},x_{j+1}),\\ 0, & \text{otherwise},\end{cases}$$

with the knot vector $\Xi=\{x_{i}\}_{1\leqslant i\leqslant N+k}$ being a non-decreasing sequence of points. The knot vector can also contain repeated knots, in which case fractions with a vanishing denominator are set to zero. If a knot $x_{i}$ has multiplicity $m$, then the B-spline is $C^{p-m}$ continuous at $x_{i}$. The derivative of a B-spline of degree $p$ can easily be computed as the difference of two B-splines of degree $p-1$,

$$\frac{\text{d}}{\text{d}x}N_{j}^{p}(x)=\frac{p}{x_{j+p}-x_{j}}\,N_{j}^{p-1}(x)-\frac{p}{x_{j+p+1}-x_{j+1}}\,N_{j+1}^{p-1}(x).$$
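The recursion and the derivative formula described above can be sketched in a few lines of Python, assuming 0-based knot indices and the convention that fractions with a vanishing denominator are zero; the function names are illustrative. As a usage example, the same routine assembles a one-dimensional analogue of the mass matrix $\mathbb{M}_{0}$ defined earlier, using Gauss–Legendre quadrature on each knot interval (a choice made here, not taken from the text): $p+1$ points integrate the degree-$2p$ products exactly.

```python
import numpy as np


def bspline(j, p, knots, x):
    """Evaluate N_j^p(x) (0-based j) with the Cox-de Boor recursion.

    N_j^0 is the indicator of [x_j, x_{j+1}); terms with a zero
    denominator (repeated knots) are taken to be zero.
    """
    x = np.asarray(x, dtype=float)
    if p == 0:
        return np.where((knots[j] <= x) & (x < knots[j + 1]), 1.0, 0.0)

    def w(i):  # w_i^p(x) = (x - x_i) / (x_{i+p} - x_i)
        den = knots[i + p] - knots[i]
        return (x - knots[i]) / den if den > 0 else np.zeros_like(x)

    return (w(j) * bspline(j, p - 1, knots, x)
            + (1.0 - w(j + 1)) * bspline(j + 1, p - 1, knots, x))


def bspline_deriv(j, p, knots, x):
    """d/dx N_j^p as the difference of two scaled degree-(p-1) B-splines."""
    x = np.asarray(x, dtype=float)

    def term(i):  # p N_i^{p-1}(x) / (x_{i+p} - x_i), zero for coinciding knots
        den = knots[i + p] - knots[i]
        return p * bspline(i, p - 1, knots, x) / den if den > 0 else np.zeros_like(x)

    return term(j) - term(j + 1)


def mass_matrix_1d(p, knots):
    """(M)_ij = integral of N_i^p N_j^p via Gauss-Legendre on each knot interval."""
    nb = len(knots) - p - 1                          # number of B-splines
    gx, gw = np.polynomial.legendre.leggauss(p + 1)  # exact up to degree 2p + 1
    M = np.zeros((nb, nb))
    for a, b in zip(knots[:-1], knots[1:]):
        if b <= a:
            continue                                 # zero-length interval
        x = 0.5 * (b - a) * gx + 0.5 * (a + b)       # map nodes to [a, b]
        wq = 0.5 * (b - a) * gw
        vals = np.array([bspline(i, p, knots, x) for i in range(nb)])
        M += (vals * wq) @ vals.T
    return M


# Usage: cubic splines on a clamped knot vector over [0, 3].
knots_c = np.array([0, 0, 0, 0, 1, 2, 3, 3, 3, 3], dtype=float)
M0 = mass_matrix_1d(3, knots_c)
```

Since clamped B-splines form a partition of unity on $[0,3)$, the entries of `M0` sum to the length of the interval, which is a convenient sanity check. The recursive evaluation is written for clarity rather than speed.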

For convenience, we introduce the following shorthand notation for differentials,

$$D_{j}^{p}(x)=\frac{p}{x_{j+p}-x_{j}}\,N_{j}^{p-1}(x).$$

In the case of an equidistant grid with grid step size $\Delta x=x_{j+1}-x_{j}$, this simplifies to

$$D_{j}^{p}(x)=\frac{1}{\Delta x}\,N_{j}^{p-1}(x).$$

Using $D_{j}^{p}$, the recursion formula (3.39) becomes

$$\frac{\text{d}}{\text{d}x}N_{j}^{p}(x)=D_{j}^{p}(x)-D_{j+1}^{p}(x).$$

In more than one dimension, we can define tensor-product B-spline basis functions, e.g. for three dimensions as

$$N_{ijl}^{p}(x,y,z)=N_{i}^{p}(x)\,N_{j}^{p}(y)\,N_{l}^{p}(z).$$

The bases of the differential form spaces will be tensor products of the basis functions

![]() $N_{i}^{p}$

and the differentials

$N_{i}^{p}$

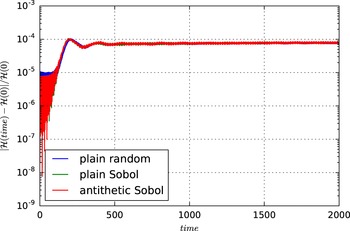

and the differentials