1. Introduction

Laser-accelerated ions have great potential for various applications, such as compact medical accelerators [Reference Katayama, Kako, Yamaguchi, Michizono, Umemori and Kondo1–Reference Linz and Alonso4], neutron sources [Reference Katayama, Kako, Yamaguchi, Michizono, Umemori and Kondo1, Reference Favalli, Guler and Henzlova5–Reference Roth, Jung and Falk7], or as injectors for conventional accelerators [Reference Aymar, Becker and Boogert8]. These applications require a high repetition rate to overcome the drawback of the exponential energy distribution, typical for target normal sheath acceleration. However, conventional solid-state targets cannot achieve high repetition rates due to engineering difficulties and target supply [Reference Zimmer9] (chapter 4.2). For this reason, different targets such as gas [Reference Huebl, Rehwald and Obst-Huebl10] or liquid-based targets [Reference Berglund, Rymell, Hertz and Wilhein11, Reference Wieland, Wilhein, Faubel, Ellert, Schmidt and Sublemontier12] are currently being developed.

In this work, we investigate a liquid leaf target [Reference George, Morrison and Feister13] currently under development at TU Darmstadt. This target is a major step towards achieving reproducible, high repetition rate ion bunches from laser-plasma interactions, which is necessary for any kind of application. This new system allows for the operation of a repetitive target with arbitrary H2O/D2O ratios for the first time, which we investigate in this work.

In particular, we aim to train a surrogate model for a liquid leaf target in a target normal sheath acceleration (TNSA) experiment to understand the characteristics of the liquid leaf and its composition, predict ideal operating points, and understand how multiple ion species interact with each other. The first aim for the target at TU Darmstadt is the creation of a viable compact neutron source, which requires proton energies larger than the production threshold of neutrons (> 1.7MeV) [Reference Kleinschmidt, Bagnoud and Deppert6].

Previous attempts at modeling laser-plasma acceleration experiments have been made [Reference Djordjević, Kemp and Kim14–Reference Djordjevic, Kemp, Kim, Haefner and Awwal17]. With our contributions, presented in this work, we expand on the state-of-the-art by taking more parameters of the experimental setup into account and providing a surrogate for the theory of intricate effects a multispecies target can have on the energy spectrum of the accelerated ions.

Huebl et al. [Reference Huebl, Rehwald and Obst-Huebl10] have found a strong influence of the mixture ratio of multiple species on the resulting energy spectra in hydrogen-deuterium targets. In Section 2.1, we expand on their idea and provide indicators for the importance of this effect.

Furthermore, while attempts at modeling a laser-plasma acceleration experiment using neural networks have been made [Reference Djordjević, Kemp and Kim14], we extend previous work with a vast number of Particle-In-Cell simulations to train our models. The chosen approach via deep learning also ensures that expansion (transfer learning) of the model with experimental data is possible. We demonstrate our surrogate model’s high performance and utility by optimizing an example laser-plasma acceleration experiment, leveraging nontrivial relationships between the experimental parameters not yet understood by theory (see Section 3).

2. Plasma Target Models

The following section details our contributions related to the considered multispecies target experiment as well as the creation of our simulation datasets and the training of our surrogate model.

For this work, we carried out various PIC simulations to generate our datasets. The bulk of the simulations was computed on the Virgo high-performance computing cluster [18] at GSI Helmholtzzentrum, Darmstadt. For these simulations, we used the Smilei [Reference Derouillat, Beck and Pérez19] PIC code. We determined the resulting surrogate by training an artificial neural network from the simulated data.

2.1. Multispecies Target Considerations

We are considering a liquid leaf target which consists of multiple different atom species. These ion species differ in their charge-to-mass ratio qi/mi, and an ion can have up to Z different charge states. Taking water, for example, one can have up to 8 ionization states of oxygen and an additional one for the hydrogen component.

Water occurs naturally with different isotopes of hydrogen. Taking into account regular water (H2O) and heavy water (D2O), an additional degree of freedom—the mixtures between the two—must be considered. Since several ion species are present, this can be denoted as an n-species plasma, where n is the number of ion states in the plasma.

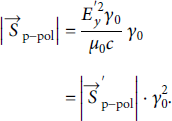

The final nonrelativistic kinetic energy of species i accelerated in a constant electric field E 0 scales as follows:

Therefore, species with a higher

![]() ratio will gain more energy. Provided that the initial densities are similar, species with higher

ratio will gain more energy. Provided that the initial densities are similar, species with higher

![]() will deplete most of the available field energy. This energy is then split between different particle species and limits the acceleration efficiency of a single species.

will deplete most of the available field energy. This energy is then split between different particle species and limits the acceleration efficiency of a single species.

Several species interact with each other, leading to a deformation of the particle spectrum. Faster particles take electrons from the sheath and screen the acceleration field for the following heavier particles. These heavier particles are then accelerated in the screened field and hence have less kinetic energy per nucleon and a lower velocity than expected from the assumption mentioned above. Mid-energy lighter particles are accelerated by the following heavier particle front due to the Coulomb force, getting compressed in the momentum space. This compression causes plateaus and quasi-monoenergetic features to form.

This effect is described in detail and analytically calculated for the asymptotic case for two particle species (deuterium and hydrogen gas) by Huebl et al. [Reference Huebl, Rehwald and Obst-Huebl10]. We applied their solutions for 2 species, since we assumed fully ionized plasma in our simulations to reduce the degrees of freedom inside the plasma.

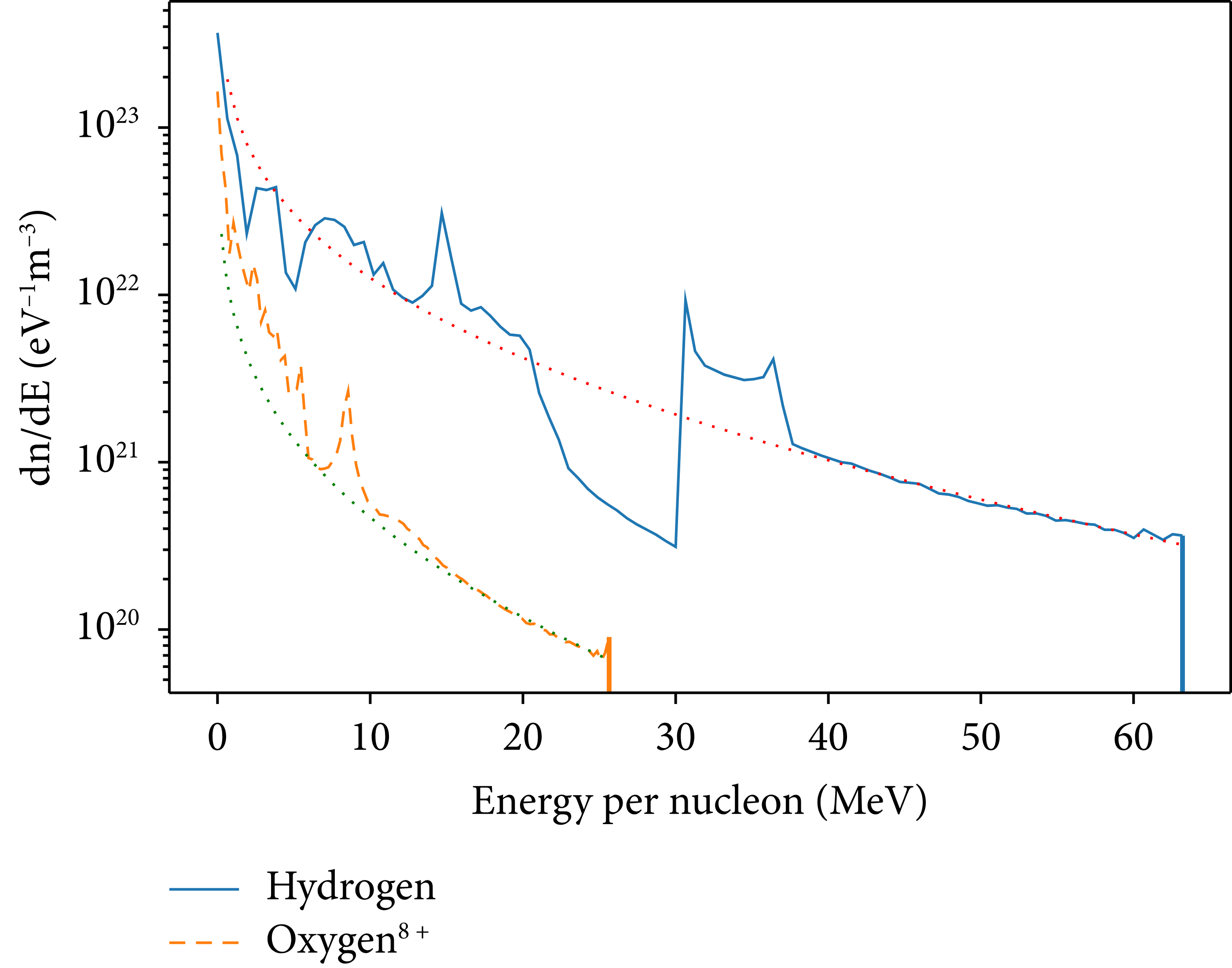

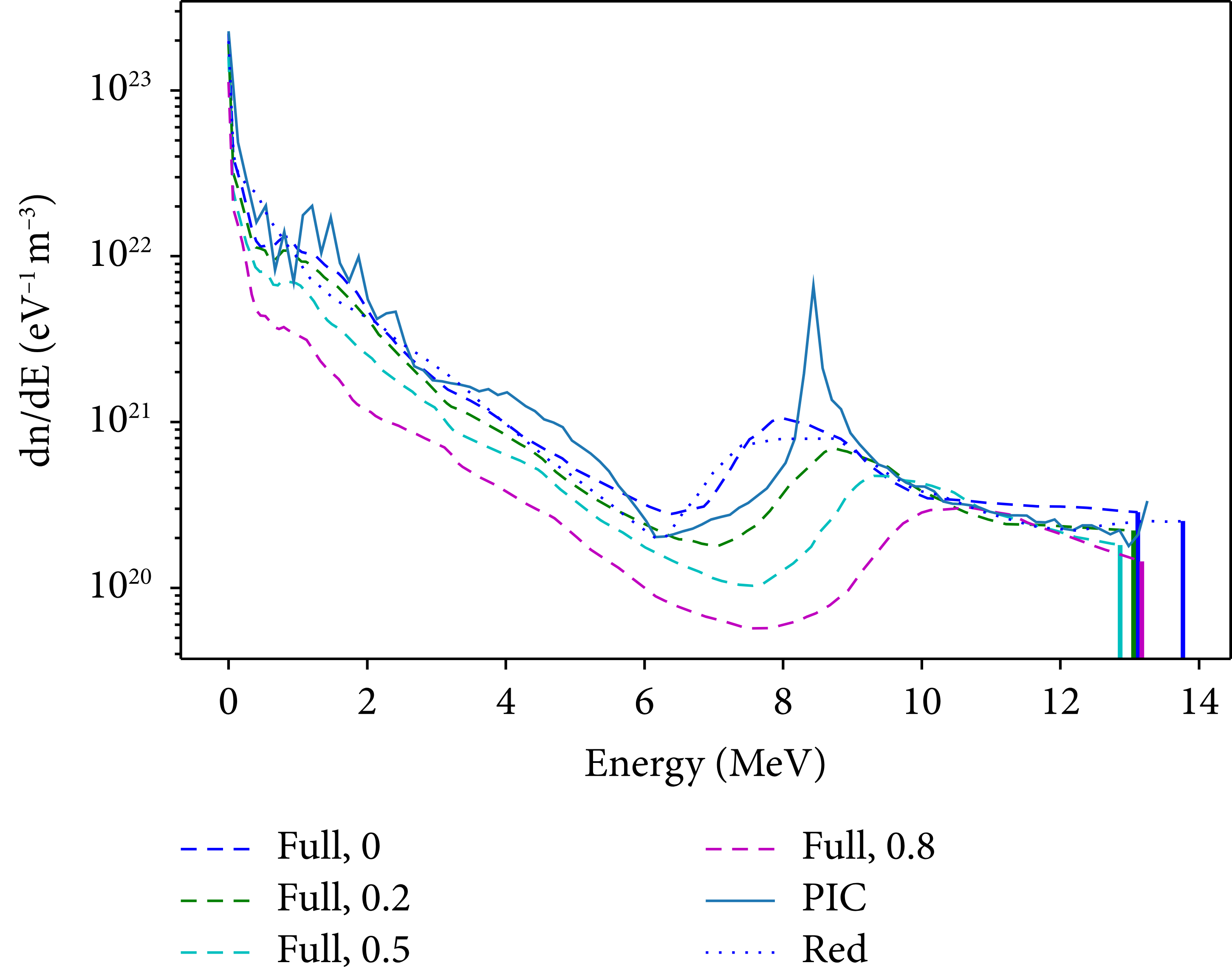

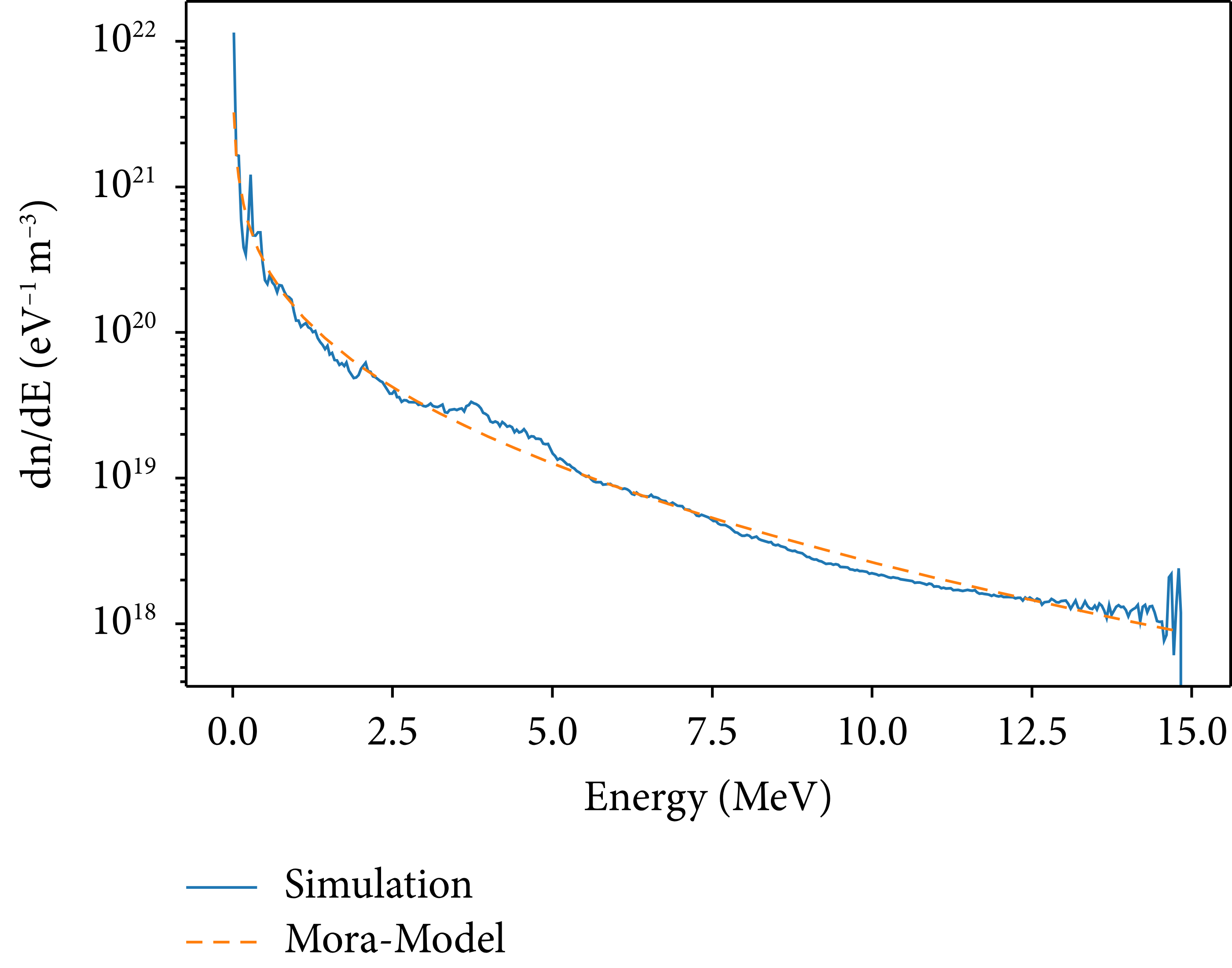

The compression effect on the lighter particle spectrum is visualized in Figure 1 using a PIC simulation for regular H2O. Deviations from the ideal Mora [Reference Huebl, Rehwald and Obst-Huebl10, Reference Mora20] can be seen.

Figure 1: Particle energy spectra of hydrogen and oxygen after TNSA PIC simulation of liquid leaf water target. The dotted lines are the corresponding Mora [Reference Mora20] fits for the displayed spectrum. Large deviations from the spectrum (30 MeV and above) can be explained by the multispecies effect. The simulation setup is described in Section 2.2. The investigated features are sharp and their shape varies. One-dimensional simulations have a sharper profile, while higher dimensional ones and real-life experiments are smoother [Reference Huebl, Rehwald and Obst-Huebl10, Reference Alejo, Kar and Ahmed21].

We can make two observations: firstly, the lower energy part of the spectrum, in this case until half of the maximum energy, is coarser than the corresponding higher energy part of the spectrum. There is also a peak at around 10 MeV in both spectra which makes it possible to compare the spectra against each other. These peaks are shifted by the same amount as the oxygen cut-off is shifted from the hydrogen plateau, which can be assumed to be a correlation due to the particle interaction in this energy range.

Secondly, there is a plateau in the hydrogen spectrum starting at around 30 MeV. This plateau and the corresponding dip before it deviate fairly strongly from the established Mora theory for TNSA (indicated by the dotted lines). This drop/increase combination is explainable by the previously introduced multispecies effect, and we want to investigate, predict, and leverage this behavior.

If we can describe and predict this effect, we can optimize our ion beam for specific applications. To do this, we need to find a surrogate model for the full spectrum problem. Further insights gained from considering multiple species become evident in Section 2.2.6. Fully ionized oxygen has the same qi/mi ratio as deuterium, for example, which reduces the efficiency of deuterium acceleration. In this work, we only modeled the proton part of the spectrum because our data are ambiguous for the oxygen/deuterium combination part. However, expanding the dataset to include a sweep of the oxygen’s charge number would resolve this ambiguity and yield clearer modeling results for deuterium.

2.2. Particle-In-Cell Simulations Setup

The simulations reflect a real experiment in reduced dimensions. To sample a larger parameter space in a reasonable time, we reduced the dimensions of the simulation to 1.5D. This means simulating one space and three momentum components. The fields are also sampled in three dimensions.

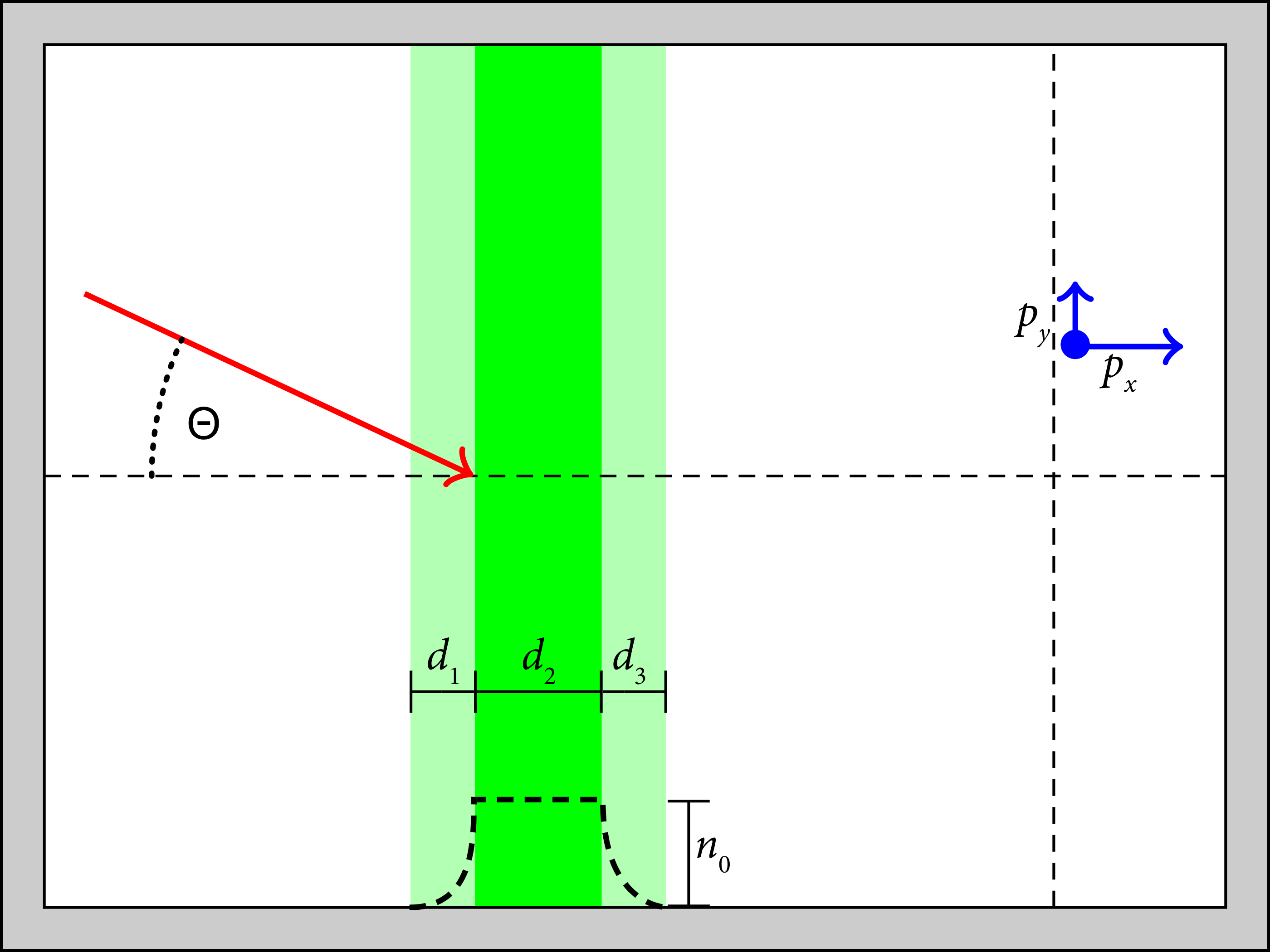

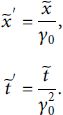

We further applied an additional method to account for angle dependency by applying a transverse Lorentz boost to the system. Details on both the Lorentz boost and the method itself can be found in Appendix C. A sketch of the full setup is displayed in Figure 2.

Figure 2: Overview of the simulation setup. Green marks the plasma target. The lighter green areas indicate the preplasma and the skirt implemented. The laser, indicated by the red arrow, hits the plasma under an angle Θ‐relative to the target normal. After the acceleration time, the momenta of the accelerated particles, given in blue, are registered. For the liquid leaf target, d 1 is assumed to be equal to d 3.

2.2.1. Plasma Target

The target in the conducted simulation models a liquid leaf target under development at TU Darmstadt Institute of Nuclear Physics and which is similar to the work by George et al. [Reference George, Morrison and Feister13]. The liquid leaf target’s width is some cm, while the typical irradiation size of a laser is in the order of µm. We assume that the surface roughness is negligible and the plasma surface is therefore considered to be planar.

When the target is only dependent on one coordinate, it can be described fully by its particle density profile. Thus, the simulation only allows movement along the x coordinate and is independent of y and z. We also assume that the plasma is expanded when the main pulse hits the target, and that the preplasma and skirt follow an exponential profile. We chose the scale length for the exponential profile as 0.4 μm so as to be longer than a comparable setup with a cryogenic (i.e., less evaporative) jet target [Reference Obst, Göde and Rehwald22] while still ensuring a well-defined plasma border. The exponential profile thus takes the shape.

where ls = 0.4 μm, and x front is the location of the target front. The skirt has identical functional shape for the backside of the target.

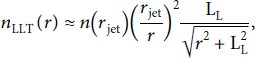

Since a liquid leaf target evaporates, we superimposed the typical vapor density distribution for a liquid leaf target, given by

where n(r jet is the water vapor density at the liquid jet surface, LL ≈ 3 cm is the liquid jet length, and r jet is the liquid jet radius [Reference Cappa, Drisdell, Smith, Saykally and Cohen23]. Note that the second term in the abovementioned expression has been squared as we expect a faster drop-off of the liquid leaf density in our proposed experimental setup.

The assumed particle densities are

![]() and

and

![]() stemming from the liquid density of water and the density estimated at the saturation vapor pressure at 0°C [Reference Cappa, Drisdell, Smith, Saykally and Cohen23]. We also introduced a cut-off of the profile 4 μm before and after the target, which washes out by approximately 1.4 μm by the time the laser hits the target. This cut-off is only introduced to optimize the simulation’s performance.

stemming from the liquid density of water and the density estimated at the saturation vapor pressure at 0°C [Reference Cappa, Drisdell, Smith, Saykally and Cohen23]. We also introduced a cut-off of the profile 4 μm before and after the target, which washes out by approximately 1.4 μm by the time the laser hits the target. This cut-off is only introduced to optimize the simulation’s performance.

We chose to investigate multispecies effects resulting from a combination of different ion species inside the target. We simulated regular water, heavy water, and a potential mixture between the two. This mixture is indicated by the mixture parameter listed in Table 1, which we varied in discrete steps. The simulation thus consists of up to four species: electrons (e−), hydrogen (H+), deuterium (D+), and oxygen (On+). As the ionization of oxygen is of importance to the model, this was varied as well. All particle species follow the same distribution function defined above.

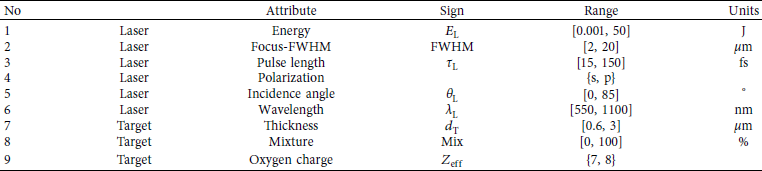

Table 1: Table of the physical parameters that were used for sampling of the input files to the 1.5D PIC simulations. Mixture defines the percentage of hydrogen substituted by deuterium.

The ion species are initialized cold while the electrons received an initial temperature of 30 keV to simulate interaction with a prepulse. We used Smilei’s defaults for particle initialization, including no ionization or radiation model [24]. The length of one cell is the Debye length at the initial electron temperature, around 5 nm. For the time resolution, a CFL number of 0.98 was used. The interpolation order of the particle shape functions is set to four and the particle per cell count for each species is 800.

2.2.2. Laserpulse

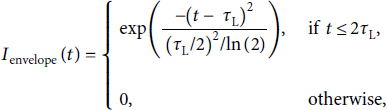

In a 1.5D simulation, the laser pulse is given by its time profile only. We assumed a Gaussian time profile, using Smilei’s Gaussian profile with the following shape:

where τ L is the main laser pulse duration. In this work, we deal with lasers that have a pulse duration τL < 1 ps and an a 0 > 1. The laser energy E L, pulse length τ L, polarization, incident angle θ L, wavelength λ L, and the target thickness d T are variable and are uniformly sampled from the defined intervals in Table 1. Our thought process in choosing exactly these parameters was that we needed to cover the full system, which required the use of 9 parameters. These parameters were chosen based on two different, sometimes contradictory, paradigms: one was to allow the similarity equations to take full effects, while the other was to enable experimental validation of the model (see also Appendix A and Appendix B).

2.2.3. Simulation Output Quantities

The diagnostics recorded are the particles’ x-coordinate, all components of the momentum

![]() , and the macroparticle weight w at the acceleration time.

, and the macroparticle weight w at the acceleration time.

where c s is the ion-acoustic velocity. Lécz [Reference Lécz25] has found that this is a suitable acceleration time after which an isothermal plasma expansion model no longer holds. From these recorded values, we reconstruct the energy spectrum of the particles in the lab frame by using equation (C5). Since all energy spectra have an individual shape and cut-off energy, the spectra were each normalized to the energy range [0, 1], counted into 100 bins, and stored as a list together with their respective cut-off energies. In order to keep the numbers more practical, we took the logarithm. An entry for the results of a simulation thus has the following shape:

![]() . Exponentiating and rescaling by E max restores the original energy spectrum accordingly. This same recording scheme is used for all four species for all simulations.

. Exponentiating and rescaling by E max restores the original energy spectrum accordingly. This same recording scheme is used for all four species for all simulations.

We chose that the parameters in Table 1 are uniformly sampled with exception of the laser energy E L, which we sampled following a square root scale and the mixture was varied in discrete steps. This type of sampling results in significantly more simulations with low a 0 than with high a 0. To deal with this, we forced additional simulations onto dedicated intervals of a 0. Although the laser focus-FWHM is technically not relevant in the 1D case, we sampled it nonetheless such that together with the sampled laser energy and pulse length, the correct a 0 was written in the input file. This also ensures comparability with higher-order simulations and experimental data.

2.2.4. Simulation Statistics

We used the setup described above to create a dataset of simulations for our subsequent surrogate model. All parameters were stochastically sampled and their combination can be thought of as a sparse grid. The Virgo cluster employs the Simple Linux Utility for Resource Management (SLURM) [Reference Yoo, Jette and Grondona26] to schedule incoming jobs where up to 10 000 jobs can be added to the queue simultaneously. The jobs were queued using a script to sample a certain number of parameter combinations and then start a simulation job for each of them. The number of simulations varies between the different species. There were 508 200 simulations for hydrogen and 762 426 simulations for deuterium, resulting in a total of 1 270 626 simulations. However, the precise number of simulations is not crucial, as long as the number of simulations is in a similar order of magnitude, the results should be comparable. The reduced model, which utilizes only the pure H2O data without D2O component, was trained on a subset of the full dataset with 68 973 entries accordingly.

2.2.5. Limitations of 1.5D PIC

We used 1.5D simulations as mentioned earlier. These low-dimensional simulations do have some drawbacks. While they, together with our introduced transversal Lorentz boost method (Appendix C), are capable of describing several effects, some are not possible. The main limitation is created by the expansion of the plasma behind the target. In one spatial dimension, no transversal drift of the particles is possible, therefore also no decay of space charge effects exists. The expansion continues until infinity if it is not stopped. Even though we introduced an effective acceleration time t acc, this problem persists. Since we keep both setup and method constant, the relative behavior of the cut-off energies can still be taken into account, but the absolute value is overestimated. This overestimation is predictable and when applied makes the models directly comparable. Lécz et al. [Reference Lécz, Boine-Frankenheim and Kornilov27] have shown that the acceleration time t acc cuts off the spectrum, such that it is a good approximation of 2D simulations. The simulations have been verified with experiments as well, which have shown that the bias can be mitigated. Furthermore, Sinigardi et al. [Reference Sinigardi, Babaei and Turchetti28] have shown further scalings between 2D and 3D cut-off energies. Taking both arguments into account, we can deduce that there is a constant scaling factor from 1D to real-world experiments and also to 3D simulations. Similarly, because of the lack of transversal particle movement, we cannot evaluate divergence opening angles in a 1D simulation.

2.2.6. Data Discussion by Example

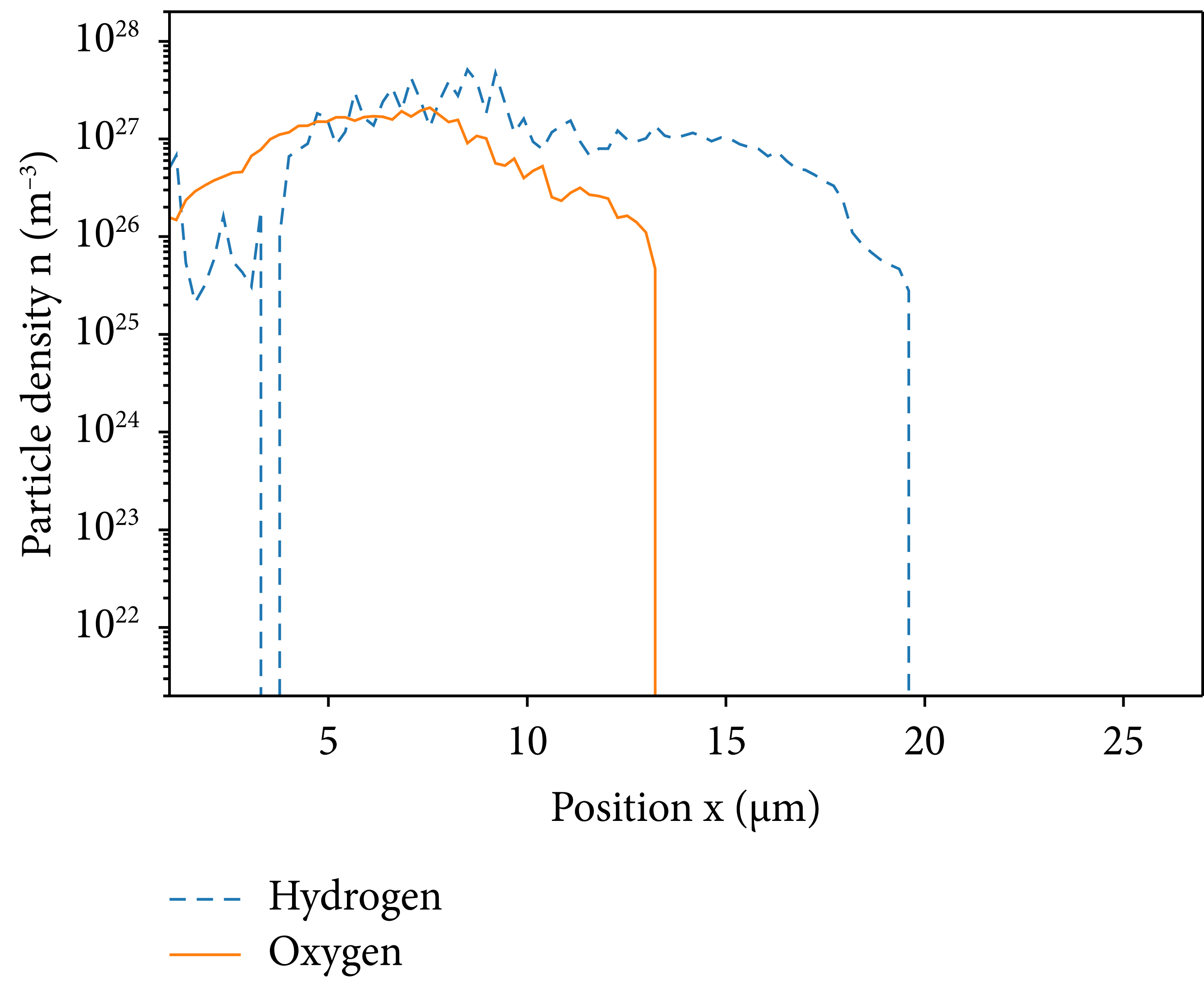

We display an example of the spatial distribution from the simulations in Figure 3. An example of the energy spectrum is already displayed in Figure 1.

Figure 3: Example of PIC simulation of water leaf target TNSA experiment. The plot shows the particle distribution at the previously proposed acceleration time t acc (equation (5)).

Firstly, in this simulation, we assumed that the target consists of regular water and is fully ionized by the implied laser prepulse. Thus, the three species (e−, H+, and O8+) are initialized with a density ratio of 10: 2: 1, respectively, such that overall neutrality is conserved. We display the species’ positions at t = t acc in Figure 3.

In this simulation, the laser incidence angle is 0°, the target thickness is 2 μm, and the dimensionless laser amplitude is a 0 = 20. We observe the two ion species, H+ and O8+, at t = t acc. The figures show that the species have different positions at the measured time, which means that the species are accelerated separately by the sheath field.

The ion front position at the acceleration time for different species varies due to the different charge and mass values as mentioned in Section 2.1. Calculating the expected variation, following the relation from Huebl et al., for only fully ionized oxygen and hydrogen present, yields a scaling factor of

![]() . The corresponding factor from Figure 3 is

. The corresponding factor from Figure 3 is

![]() , where the uncertainty results from the binning.

, where the uncertainty results from the binning.

We can see that the general TNSA mechanism is still applicable. Although the dynamics of the different particle species with each other are more complex, as we will see later, the general behavior appears to follow classical TNSA theory. This is supported by the kinetic energy spectra of the ion species after acceleration, an example of which is shown in Figure 1. The figure shows the energy spectra of hydrogen and oxygen ions, along with Mora’s predicted ideal curve.

2.3. Deep Learning Application

We have to correlate the different simulations to each other and find relations and interpolations to allow for an optimization of the full setup. We decided to use a neural network approach with fully connected feedforward topologies and built them in Keras [Reference Chollet29] running inside of Tensorflow 2 [Reference Abadi, Agarwal and Barham30]. For hyperparameter tuning, we used the Keras Tuner module [Reference O’Malley, Bursztein, Long, Chollet, Jin and Invernizzi31].

2.3.1. Model Training

To predict a particle spectrum, two models are needed. The spectrum model continuously maps

![]() onto

onto

![]() while a second cut-off model only predicts the maximum energy (i.e., when to cut off the continuous spectrum from the first model). We trained a reduced model pair, not taking deuterons into account for regular H2O, and a full model pair containing different ratios between H2O and D2O. The dedicated features of the PIC simulation can be seen better with the reduced model. We assume that this is a result of the lower number of input dimensions and therefore of the deviating degrees of generalization.

while a second cut-off model only predicts the maximum energy (i.e., when to cut off the continuous spectrum from the first model). We trained a reduced model pair, not taking deuterons into account for regular H2O, and a full model pair containing different ratios between H2O and D2O. The dedicated features of the PIC simulation can be seen better with the reduced model. We assume that this is a result of the lower number of input dimensions and therefore of the deviating degrees of generalization.

We essentially think of the energy spectrum as the graph of a continuous function f, the first model maps {x, [system parameters]} to f(x) while the second model predicts the point x at which the graph gets cut off. Details about the training parameters and the procedure are given in Appendix E. The reduced spectrum model has 6 hidden layers (x⟶320⟶288⟶288⟶256⟶256⟶320⟶1) while the full spectrum model has 11 hidden layers with 460 neurons each. The cut-off models both have 8 hidden layers (x⟶320⟶284⟶288⟶512⟶32⟶480⟶512⟶32⟶1. It is worth noting that the input dimension of the reduced spectrum model is one less than the full spectrum model since it does not include the mix parameter. All networks were fully connected architectures with ReLU activations on their hidden layers. We will now briefly discuss and evaluate the trained models:

(1) Reduced Model Pair. The precision of the cut-off models, which attempt to map {[physical parameters]} onto E max can be estimated rather easily. For the reduced problem, the model achieved a mean squared error of 8.93 MeV2 on validation data (confer to Appendix E), meaning the average error on the prediction of the hydrogen spectrum’s maximum energy is projected to be around ±2.99 MeV.

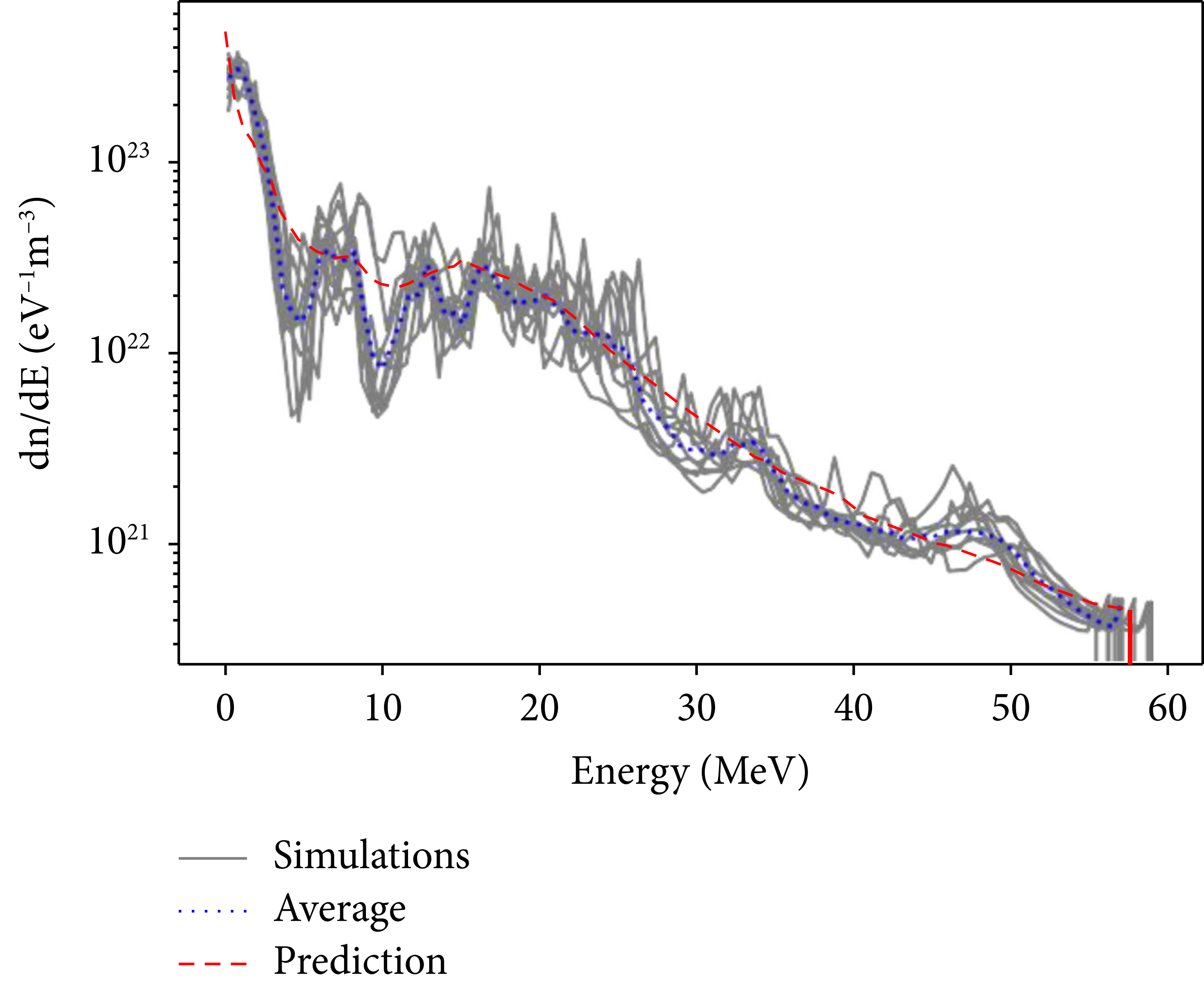

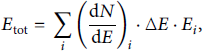

To more intuitively evaluate the reduced spectrum model’s predicting capabilities and potential shortcomings, ten simulations with equal parameters (except for random seed) were computed such that their hydrogen ion energy spectra could be compared to the predicted spectrum of the model. A plot of all the spectra is shown in Figure 4.

Figure 4: Reconstructed hydrogen ion energy spectra of ten simulations differing only in their random seed. The energy spectrum prediction by the trained neural network model is indicated with a red dashed line, while the average of the simulations is indicated by the blue dotted line. The curve is obtained from the reduced continuous model and is cut off at the maximum energy determined by the maximum energy model.

The overall agreement of the model with the simulations is evident. The maximum energy predicted by the cut-off model falls centrally between the maximum energies of the ten simulations, only differing from the simulation average by 0.2 MeV. Looking at more intricate features of the simulation spectra, however, it is clear that the model possibly generalized slightly too much. At around 10 MeV, a dip, possibly due to multispecies effects, can be observed in most of the simulations and yet is barely present in the model prediction. Generally, the fluctuations in the simulation spectra are greatly reduced in the neural network predicted spectrum. A reason for this is likely the sheer vastness of differing spectra, the model was trained on. Since the parameter space for the training simulations was so large, the model had to generalize too many very different output spectra.

(2) Full Model Pair. The full model pair were trained exactly the same as the reduced model pair but with an additional parameter and a larger dataset. The full cut-off model converged with a mean squared error of 7.25 MeV2 on validation data resulting in a prediction error of ±2.7 MeV for the maximum energy of the hydrogen spectrum (confer to Appendix E).

Again, as given above, the sensitivity of the spectrum model is more complicated to estimate. In Figure 5, we can see that both numerical models, the full and the reduced model do deviate from one another slightly, even if the mixture is set to zero. This is expected behavior since there is a statistical variation in the training of neural networks. Important to note is the deviation in the spectra for different mixture ratios. An influence of the mixture parameter on the spectrum is visible and it can be used to tune the spectrum. The behavior for the full spectrum model is the same as the one for the reduced spectrum model presented in Figure 4, the model is generalizing to a specific degree and has an uncertainty of few MeV for the cut-off energy.

Figure 5: Model comparison for hydrogen spectra with reference PIC simulation. Dashed lines give the result for the full model, the number indicates the value for the mixture parameter. The PIC reference (for mixture = 0) is displayed with a solid line and the reduced model with a dotted line.

2.3.2. Model Efficiency

Calling the models in a Python code environment is similar to calling any other function and takes around 20 ms on a personal laptop. This time is in stark contrast to the four hours on 16 CPUs taken to run a similar 1D PIC simulation on the HPC cluster. To put this in perspective, we can run inference on the models roughly 720 000 times, while one PIC simulation calculates.

We made other attempts at fitting the regression problem using various kernel combinations and Gaussian process regression [Reference Bishop32], however, they never produced energy spectrum predictions that even came close to the neural network prediction seen in Figure 4, usually being off from simulations by orders of magnitude. As expected, the adaptability of modern unparameterized machine learning methods such as neural network models stand out from other regressors.

3. Application of the Model

With a trained surrogate in hand, we were able to take advantage of the model to perform a numerical optimization of an experiment as well as evaluate our models’ interpretability.

3.1. Optimization of Parameters for Laser Plasma Interactions

In this section, we optimize a TNSA experiment with a water leaf target. We aim at an ideal set of laser and target parameters and apply the previously obtained reduced machine learning model pair.

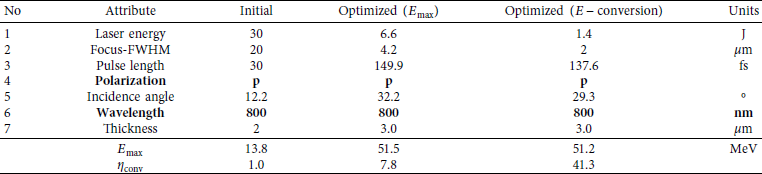

We chose some base parameters that have a proven repetition rate of at least 1 Hz: Ti:Sa lasers with a central wavelength of 800 nm and p-polarized laser light. Exemplary systems would be the VEGA-3 laser at the Centro De Laseres Pulsados in Salamanca, Spain, (CLPU) [Reference Vega33] or DRACO laser at the Helmholt-Zentrum Dresden-Rossendorf [Reference Schramm, Bussmann and Irman34]. Following the procedure in this section, the model can also be applied to any other system, if its parameters are inside the minimal and maximal physical parameters of our model (see Table 1). If the system’s parameters are not included, our model could be expanded by retraining with additional data, using transfer learning [Reference Spears, Brase and Bremer35], or other modern domain adaptation methods [Reference Wang and Deng36]. The assumed initial parameters of the laser system are stated in Table 2.

Table 2: Table of the physical parameters to be optimized for the laser system. Both initial and optimized values are shown. Rows in bold remained fixed during optimization. The dimensionless laser amplitude a 0 also remained fixed during optimization to encourage the convergence towards nontrivial parameter combinations. η conv is a measure for energy conversion efficiency (see equation (6)), normalized to the initial parameter case.

In this section, we investigate two different optimization goals. The first goal is to find the maximum cut-off energy, while the second goal is to maximize the laser energy deposition into the plasma.

As mentioned, we assumed polarization and central laser wavelength as fixed but otherwise allowed all parameters to change, as long as they stayed in the given physical constraints. Since the obvious solution to maximizing output energy is to maximize input energy, the optimizations were computed under the constraint of a constant dimensionless laser amplitude a 0. This ensures optimization by exploiting complicated relationships between the physical parameters of the system; a task that can only be feasibly solved with a rapidly callable model.

We implemented the optimization utilizing the SciPy Python library [Reference Virtanen, Gommers and Oliphant37] and the Byrd–Omojokun algorithm [Reference Lalee, Nocedal and Plantenga38] included in its scipy optimize minimize routine. The Byrd–Omojokun algorithm allows us to include both, boundary conditions according to Table 1 as well as the equality constraint of constant a 0, to leverage the aforementioned nontrivialities of the system.

The optimized parameters are displayed in Table 2. The optimizer seems to have taken advantage of incidence angle-dependent absorption effects such as resonance absorption. In addition, by dramatically increasing laser focus while simultaneously decreasing laser power (energy over time), the maximum ion energy could be optimized without changing the dimensionless laser amplitude a 0. Overall, the optimizer was able to increase the maximum ion output energy by a factor of roughly 4. The hydrogen energy spectra for these optimized parameters as well as for the initial parameters are depicted in Figure 6.

Figure 6: Energy spectra of H-ions for a TNSA water leaf target experiment using both initial VEGA-3 as well as optimized parameters with respect to the maximum ion energy. Predicted spectra by the neural network model and spectra from a 1D PIC simulation are shown. The parameters are given in Table 2. (a) Initial parameter selection. (b) Optimized parameter selection with respect to maximum ion energy.

A more intricate measure of a TNSA experimental system is the laser-ion energy conversion efficiency, i.e., the measure of how much of the laser’s input energy gets transported into the accelerated particles. We thus consider the optimization of the ratio of the total kinetic energy of the ions E H to the laser pulse energy E L:

where

![]() and E max are given by the neural network models, and {params} is the set of all parameter combinations within the ranges specified in Table 1. It is important to note that the Smilei output gives

and E max are given by the neural network models, and {params} is the set of all parameter combinations within the ranges specified in Table 1. It is important to note that the Smilei output gives

![]() which has to be scaled by a unit volume V to arrive at the expression needed. For further explanation of how to arrive at the abovementioned integral term, we refer to Appendix D. Here, we also allow the variation of laser energy E L, increasing the complexity of the problem. The optimization described in equation (6) was carried out by solving the numerical integral using the composite trapezoidal rule and once again employing the Byrd–Omojokun algorithm.

which has to be scaled by a unit volume V to arrive at the expression needed. For further explanation of how to arrive at the abovementioned integral term, we refer to Appendix D. Here, we also allow the variation of laser energy E L, increasing the complexity of the problem. The optimization described in equation (6) was carried out by solving the numerical integral using the composite trapezoidal rule and once again employing the Byrd–Omojokun algorithm.

As seen in Table 2, despite having slightly lower maximum ion energy than the first optimization task, the calculated energy conversion efficiency is more than five times greater. This gives a strong indication that laser coupling into the target in a laser-plasma experiment depends on the physical parameters of the system in a highly nontrivial way.

3.2. Sensitivity Analysis

Artificial neural networks are generally difficult to interpret, which is a drawback we have to accept. Nevertheless, the importance of specific parameters for a model can be evaluated. One way to quantify the impact of a model’s input parameters on its output is to use variance-based global sensitivity analysis, also known as the Sobol’ method. The corresponding sensitivity metrics are known as Sobol’ indices [Reference Sobol39–Reference Saltelli, Annoni, Azzini, Campolongo, Ratto and Tarantola41]. The Sobol’ indices are calculated by Monte Carlo sampling of parameters and corresponding model outputs. This method is used to apportion the variance of the output to the inputs and their combinations. The number of evaluations of our model is

![]() , where D is the number of input features and n is the number of samples drawn. n is ideally selected as a power of 2, where we selected 218 = 2 62 144 drawn samples.

, where D is the number of input features and n is the number of samples drawn. n is ideally selected as a power of 2, where we selected 218 = 2 62 144 drawn samples.

We used the PAWN method [Reference Pianosi and Wagener42] for a second sensitivity analysis to complement the Sobol’ method due to its shortcomings for the higher order of the input features. The PAWN method uses a different approach for when the variance might not be a good measure for the outcome of a system. It utilizes the traits of the cumulative distribution functions with similar Monte Carlo sampling as for the Sobol’ indices, giving a different approach to determine the sensitivity of a model. A combination of these two methods was also proposed by Baroni et al. [Reference Baroni and Francke43].

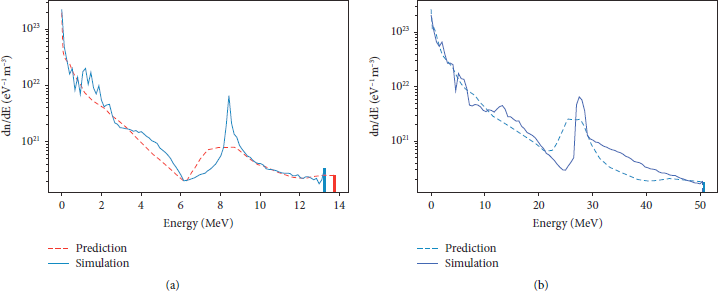

3.2.1. Reduced Model

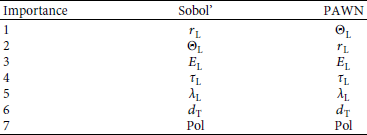

The reduced cut-off model has 7 input features which are mapped to 1 output prediction for the maximal energy. Our results of the Sobol’ analysis for the reduced H2O-only model are given in Figure 7. The larger the value of a Sobol’ index is, the more influence the independent parameter has on the result. The total Sobol’ indices, normally referred to as S T give a measure of the total importance of the given features. The total Sobol’ indices can neither describe how much of the variance is attributed to which combination of parameters nor are they normalized for the total expression. This is due to multiple counting of effects, e.g., if there is a second-order contribution for ΘL and r L, then this contribution is added to both of the values in the total representation. It doubles the counting for the second order, triples for the third order, and so on.

Figure 7: Sobol’ sensitivity analysis results showing the influence of various physical parameters on the cut-off energy of H-ions for a TNSA water leaf target experiment, for the reduced model utilizing only the H2O data. Errors are given in the 95% confidence level. (a) Total variation which explains the cut-off energy variation. (b) Matrix of dependencies to explain the cut-off energy variation. The diagonal gives first-order Sobol’ indices, while the lower gives the second-order Sobol’ indices for the corresponding variables. The upper line is the numerical value, and the lower line gives the corresponding error.

Due to this complication, the determination of higher-order dependencies makes it necessary to display the first- and second-order Sobol’ indices, as done in Figure 7(b). The values are displayed in a matrix, such that the interaction between (xi, yj) can be displayed. The first-order Sobol’ indices are shown on the main diagonal (xi = yj). It is evident from the plot that the sum does not add up to 1, leaving approximately 21% of data variance unexplained. The consequence of this is that even higher-order interactions are necessary to fully explain the variation in our model. Full calculation of higher orders has been omitted as it was deemed unfeasible due to the extreme computational cost for higher dimensions.

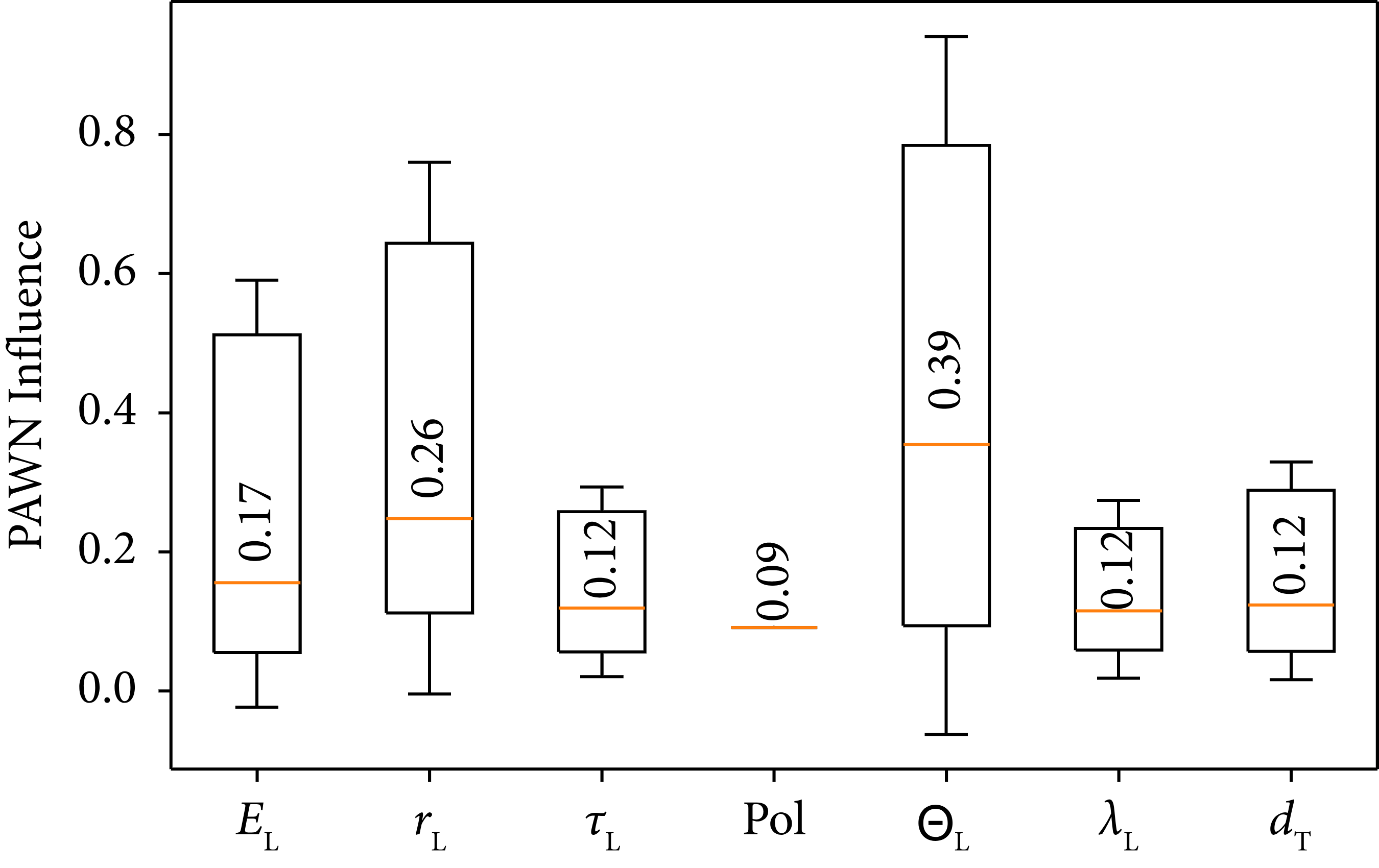

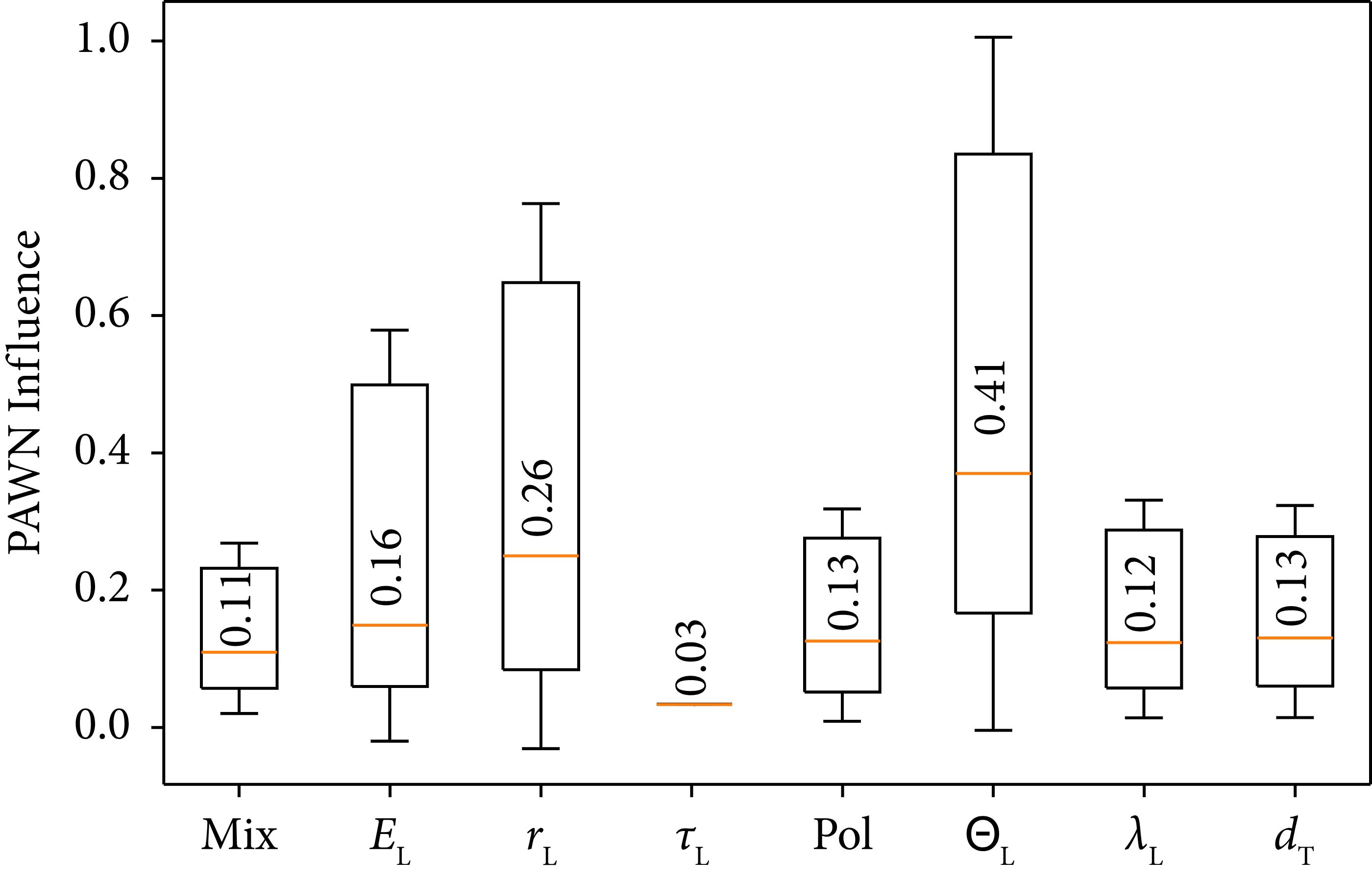

The results of the PAWN method are displayed in Figure 8. PAWN can only give us a measure of the full importance of the individual parameters. A subsequent division into main effects and higher order is not possible. The importance ranking from PAWN does not entirely match the order found by the Sobol’ method but is rather close. Both are listed in Table 3. If not the total, but the sum of first- and second-order Sobol’ is taken, then the first two features change places.

Figure 8: PAWN indices for the reduced model as a measure for parameter importance. Boxes consist of uncertainty value, minimum, median, maximum, and upper uncertainty value. The numerical value given is the median.

Table 3: Importance ranking of the model parameters as calculated by the Sobol’ and PAWN methods.

The sensitivity analyses thus suggest that higher-order interactions are important in this model and a simple optimization (e.g., maximizing only one quantity) is not sufficient. Our previously presented optimizations take this implicitly into account. Furthermore, the incidence angle and the irradiation area appear to be important. The angle ΘL’s high influence is expected, considering laser-ion absorption mechanisms, and is faithfully implemented into the 1D simulation space using the Lorentz boosted geometry (see Appendix C). While the third quantity, the laser energy, directly scales the laser’s dimensionless amplitude a 0; the irradiation radius r L’s influence is more difficult to understand. The irradiation area is not directly represented in a 1.5D PIC simulation. However, since a 0 is dependent on r L, an indirect influence is included.

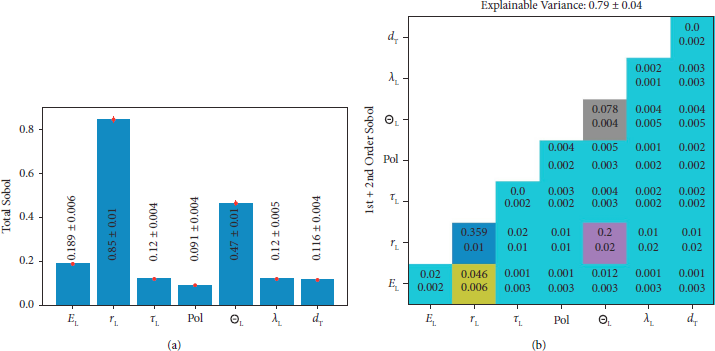

3.2.2. Full Model

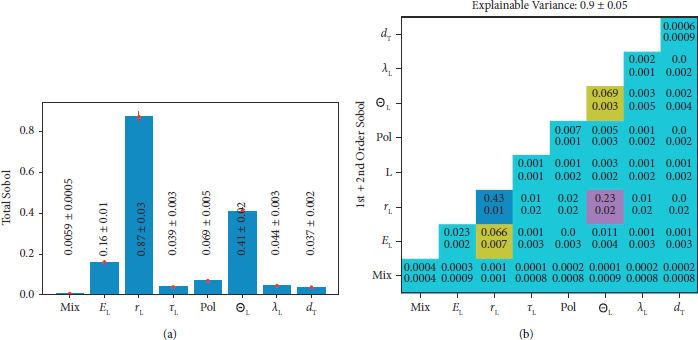

Running the same analysis for the full cut-off model including the mixture parameters yields the results given in Figures 9 and 10. As can be seen in the display of the data, the mixture has, according to the Sobol’ analysis, minimal if not zero influence on the maximum energy of the hydrogen component, while the PAWN analysis gives a higher influence. Furthermore, the variance of the output can be better explained in this model, than from the reduced model although the geometry did not change.

Figure 9: Sobol’ sensitivity analysis results showing the influence of various physical parameters on the cut-off energy of H-ions for a TNSA water leaf target experiment, for the full model utilizing only the H2O data. Errors are given in the 95% confidence level. (a) Total variation which explains the cut-off energy variation. (b) Matrix of dependencies to explain the cut-off energy variation. The diagonal gives first-order Sobol’ indices, while the lower gives the second-order Sobol’ indices for the corresponding variables. The upper line is the numerical value, and the lower line gives the corresponding error.

Figure 10: PAWN indices for the full model as a measure for parameter importance. Boxes consist of uncertainty value, minimum, median, maximum, and upper uncertainty value. The numerical value given is the median.

3.2.3. Sensitivity Analysis Discussion

We performed a sensitivity analysis on our models and were able to evaluate the importance of the different parameters. We found evidence that the model describing the laser-plasma system is highly nonlinear. It should be noted that a deep learning model approximates the physical system very well. It does not, however, provide a closed-form solution for the underlying physics, which would require further theoretical work.

The models apply regression for the simulated data and as such are able to reproduce a mean curve for the data, which is for example displayed in Figure 6 or in Figure 4. The spectrum models take the energy bin value into account and predict the continuum of accelerated ions. This means, that the dependency on the bin’s energy would be included as well. An explainable analysis of taking all energy bin values into account then becomes infeasible as each bin would require a separate Sobol/PAWN analysis.

The cut-off energy of a TNSA spectrum is the main parameter investigated in the literature, which is for example analyzed by Zimmer et al. [Reference Zimmer, Scheuren and Ebert15]. We have seen that we can get similar results to Zimmer et al. for the cut-off energy dependencies. We see from the Sobol’ analysis, that several parameters are of importance, therefore having only a single parameter to describe the cut-off is not sufficient. Since second-order Sobol’ indices are not zero, we have to take them into account as well.

Since neither model is explaining the cut-off variation close to 100%, when only 1st and 2nd-order variations are taken into account, we can conclude that the calculated models require consideration of even higher order variations to describe an additional 10%–20% of the cut-off variation. Such a large reliance on higher-order interactions implies that simple scaling models are unideal for optimizations since these effects are not taken into account. A model capable of approximating highly nonlinear effects, such as our neural network models, should thus be preferred.

The Sobol’ indices decompose the function into a unique space [Reference Sobol39], this could be used to construct a polynomial chaos expansion [Reference Ghanem and Spanos44] polynomial from it. This polynomial can describe the same amount of variation as indicated by first- and second-order Sobol’. It is therefore neither a complete representation of our network-based models nor is it physically interpretable.

3.3. Interpretations

Two major observations from the numerical study are of interest for the understanding of the modeled system.

The first observation is the deviation from the exponential Mora-like shape towards the plateau-like features as presented in Figure 1. An explanation of this effect is the particle-particle interaction inside the expanding plasma. The driver of this effect is the higher-mass particle species (heavy ions), which is accelerated later than the lower-mass particle species (protons). Heavy ions, with their higher inertia copropagate with lower energy protons and interact via the Coulomb force. Due to the higher inertia, the protons are pushed away from the heavy ions, being accelerated as a result. This effect is especially highlighted by 1D PIC simulations since no transverse particle movement is allowed. For higher-order dimensions [Reference Huebl, Rehwald and Obst-Huebl10] or experimental data [Reference Alejo, Kar and Ahmed21], the effect is less dominant and the transitions are smoother. If the particles are accelerated purely in the longitudinal direction, the divergence, which is given by the quotient of transversal and longitudinal momentum, can be reduced as well [Reference Schmitz, Metternich and Boine-Frankenheim45] (equation (2)).

The second observation is the high increase in energy absorption. The fraction of the energy passed onto the protons increases by a factor of about 42. As shown in the validation for the angular Lorentz boost scheme in Appendix C, a large increase in the absorption efficiency is a result of the angle-dependent resonance absorption. Indications for this are displayed in Figures 11 and 12 The optimization algorithm exploits this behavior directly and therefore finds ideal angle values. However, there are at least two sides to this coin. The goal of the approach was to describe the TNSA process in a model which allows for the optimization of the output depending on the input. Approaching this directly and analytically is not possible. The time development of the governing Maxwell–Vlasov system, which already is a simplification using the collision-free case, cannot be solved in closed form. No true relations for the cut-off energy, for example, have been derived so far. To get as close to this ground truth as possible, and to become able to extract it at a later point (with sufficient experimental data), a complex numerical model must be used. In our case, we adopted an artificial neural network approach. Artificial neural networks have desirable properties as they have been shown to be universal function approximators [Reference Leshno, Lin, Pinkus and Schocken48]. However, the explainability of such complex models has been a critical point in their analysis for some time. The sensitivity analyses shown in Section 3.2 were used as a way to mitigate the complexity and gain some explainability of the model. The Sobol’ indices method, or global variance-based method, underlines that the interaction of the different parameters is of importance. First- and second-order can only explain 79% of the models’ variance (Figure 7(b)). This means that higher-order dependencies of the input parameters are necessary to explain a significant part (21%) of the variance. The model cannot explain which higher-order effect, i.e., which combination of input quantities, is exactly responsible. The model’s goal is to allow engineering optimization of the TNSA process. As a result of this optimization, this higher-order dependency was found.

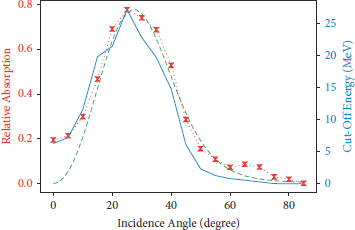

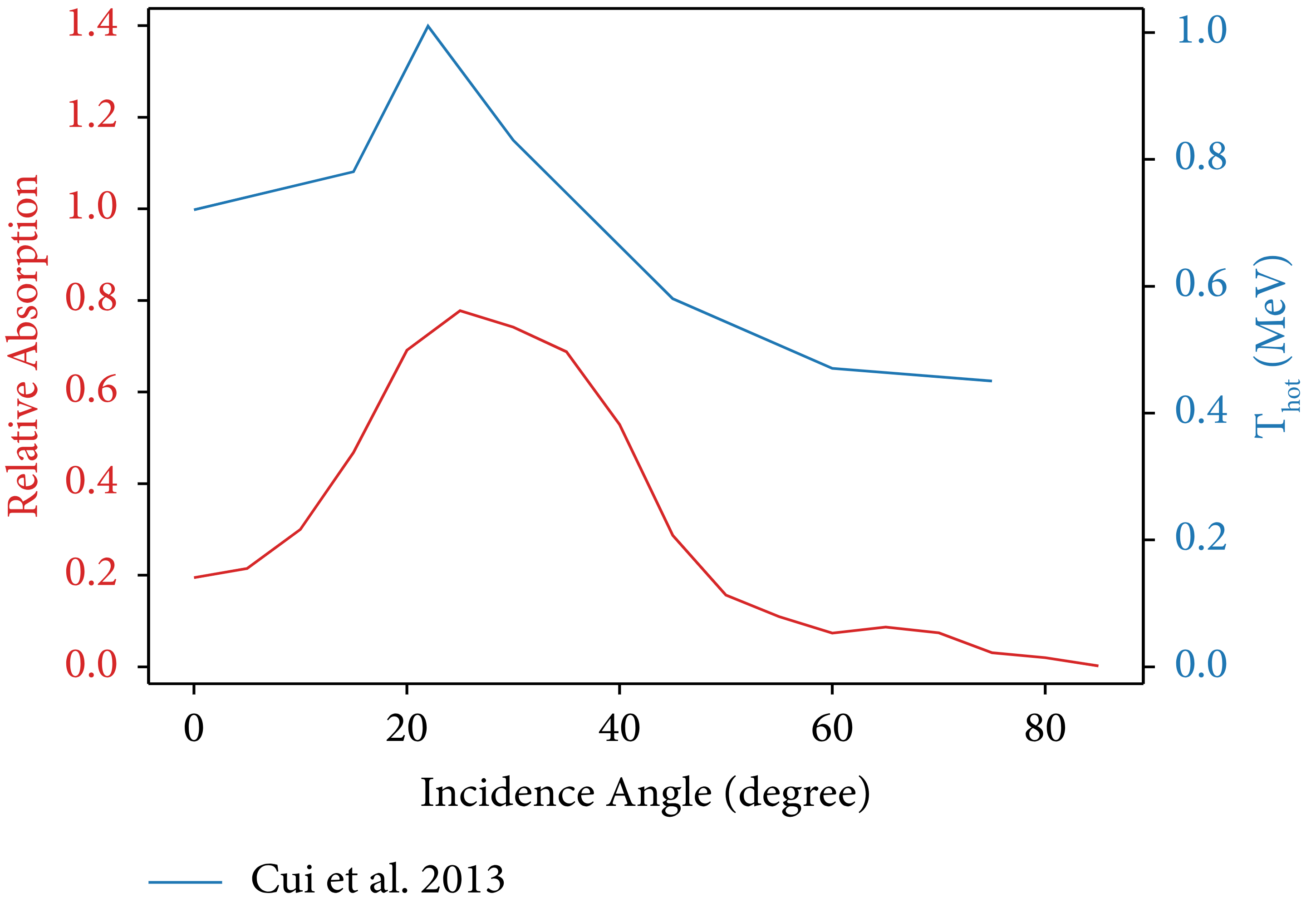

Figure 11: Simulation of laser incidence angles between 0° and 85°. The plot shows the incidence angle versus the relative absorption of a laser into a hydrogen plasma target (dotted red line) as well as the maximum proton kinetic energy behind the target (blue solid line). The laser impinges on the target with p-polarized fields. Classical resonance absorption, also known as the desinov curve [Reference Gibbon46], is shown as a dashed line.

Figure 12: Plot of the absorption of energy in the Lorentz-boosted simulation in comparison to the data from Cui et al. [Reference Cui, Wang, Sheng, Li and Zhang47].

4. Conclusion

In this study, we modeled and optimized a possible TNSA experiment using a liquid leaf target by employing a combination of Particle-In-Cell simulations and deep learning. In agreement with previous studies [Reference Huebl, Rehwald and Obst-Huebl10, Reference Alejo, Kar and Ahmed21], we have seen that the accelerated spectra from a multispecies target behave untypical in comparison to regular one-species TNSA which is described by Mora.

We developed surrogate models that replicate computationally costly PIC simulations using a deep learning approach. Deep learning is well-suited for optimizing complex systems. To take advantage of the trained models’ inference speed, we used the Byrd–Omojokun algorithm to find an optimal parameter configuration for the system. This yielded a set of parameters that resulted in optimal maximum hydrogen energy (8 times greater than the initial parameters), and a set of parameters that resulted in optimal laser energy conversion efficiency (41 times greater than the initial parameters). We verified these findings with additional PIC simulations.

We applied sensitivity analysis methods to evaluate the influence of the different parameters and successfully identified the relevant ones. We showed that such sensitivity analysis methods bear great potential for the understanding and quantification of physical dependencies when a closed-form solution is not known.

The data-based model that we developed can be extended in the future to improve predictions and better understand the system. This can be achieved by incorporating future experimental data for the liquid jet.

5. Disclosure

The results presented here are based on simulations, which were performed on the Virgo HPC cluster at the GSI Helmholtzzentrum für Schwerionenforschung, Darmstadt (Germany). DK was affiliated to TU Darmstadt while participating in this work.

Appendix

A. Units and Dimensionality

The simulations in this work were done using the Particle-In-Cell (PIC) method [Reference Birdsall and Langdon49]. In the following section, we discuss the units and dimensions of the underlying Maxwell Vlasov system and extract a lower number of relevant parameters which give valuable physical insight. This is important to understand why 9 parameters were used in our model. The basis we construct in this chapter can be represented by the physical parameters we sampled for the simulation part of our study.

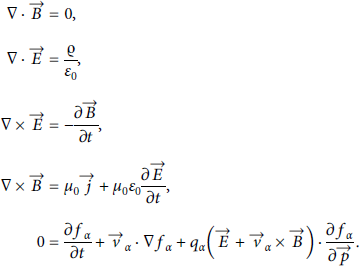

A.1. Basis Maxwell Vlasov System and Normalization

TNSA requires a high-intensity laser pulse to heat plasma electrons up to MeV temperatures. We assume that the mean free path is larger than the target thickness and the whole process can therefore be assumed as collision free [Reference Mulser and Bauer50, Reference Arber, Bennett and Brady51]. If the process is collision free, it can be characterized by the Maxwell–Vlasov system of partial differential equations.

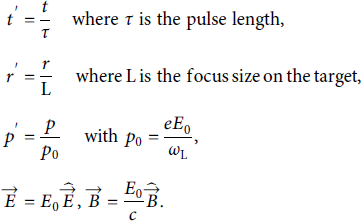

Solving these coupled equations efficiently with numerical methods makes it important to simplify relations. A normalization towards reference quantities is the first step:

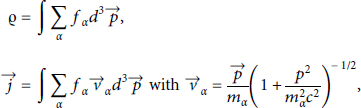

The charge distribution and the current can further be expressed by

where f α denotes the charge density. These can be normalized to a reference quantity as well by modifying f α accordingly shifting all dimensions into the new n α.

A.2. Similitude Relations and Dimensional Reduction

A system of equation can be simplified, by applying the Buckingham Π theorem [Reference Buckingham52]. This theorem allows us to take the dimensional quantities of a problem into account and find underlying dimensionless quantities which reflect the actual physical meaning.

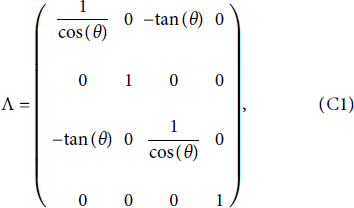

If the boundary and initial conditions are similar, then fewer dimensionless parameters than dimensional parameters can be found to fully represent this equation system. This implies that the shape of the electromagnetic wave, defined by

![]() and

and

![]() , and the normalized charge density

, and the normalized charge density

![]() , have to be similar. Similar in this case means, that the governing function is the same except for some parameters which themselves can be derived using the Buckingham Π theorem as well.

, have to be similar. Similar in this case means, that the governing function is the same except for some parameters which themselves can be derived using the Buckingham Π theorem as well.

To ensure similarity in this work, a Gaussian profile was assumed for the electromagnetic wave, leaving the laser frequency ω L, the pulse length τ L, and the corresponding electric peak field E 0 as variable quantities. The initial plasma distribution is defined as a homogeneous slab with particle density n 0 and a thickness d T with exponential decaying preplasma and skirt. The exact relations are given in Section 2.2.

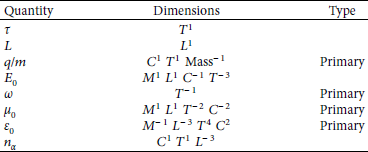

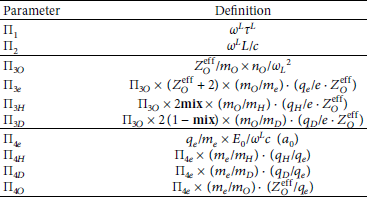

Keeping these initial conditions fixed allows us to apply the Buckingham Π theorem to the Maxwell–Vlasov system of equations. This results in dimensionless quantities Πi which are capable of describing all dimensional quantities inside the equation system. The dimensional quantities are given in Table 4.

Table 4: Overview of the dimensional quantities of the Maxwell–Vlasov EQS. Dimensions are listed in SI base dimensions. Buckingham Π parameters are calculated by defining primary quantities which are used multiplicatively in each parameter.

T: time; L: length; C: current; M: mass.

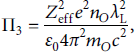

Using these dimensional quantities, the following Πi are determined:

The theorem also states that the number of resulting dimensionless parameters is lower than the number of dimensional parameters, reducing the complexity of the model.

A.3. Interpretation of the Dimensionless Quantities

These quantities are sufficient to describe and condition the EQS from a mathematical standpoint. From a physical standpoint, this also creates valuable insight. Π1 gives the number of

![]() -oscillations in the laser pulse and Π2 the irradiation size of the laser. Π3 correlates the laser with the target since it is the ratio of the particle density to the critical plasma density defined by the laser. Π4 describes the particle dynamic inside the laser’s amplitude for each species. For electrons, Π4 is identical to the dimensionless quiver velocity a 0. The meaning is equivalent for the different ion species.

-oscillations in the laser pulse and Π2 the irradiation size of the laser. Π3 correlates the laser with the target since it is the ratio of the particle density to the critical plasma density defined by the laser. Π4 describes the particle dynamic inside the laser’s amplitude for each species. For electrons, Π4 is identical to the dimensionless quiver velocity a 0. The meaning is equivalent for the different ion species.

Writing down the EQS and substituting the Π parameters makes their importance apparent:

If these parameters are constant, then the equations are all the same and therefore behave the same. This results in the same time development of the system and therefore yields the same results. One can say that for constant Πi areas of iso-dynamics exist, finally simplifying any model approaches by reducing the dimensions to be examined. Models therefore only need these 4 parameters to precisely determine a system.

A.4. Correlating Dimensionless Parameters and Simulation Input

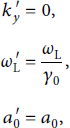

The system, therefore, has a dedicated number of Π parameters which have to be taken into account: Π1 and Π2 are laser-relevant quantities and therefore particle species independent. Π3 and Π4 describe quantities of the particle species, therefore introducing a multiplicity in the parameter, denoted by α. In the case investigated here, the multiplicity is 4: electrons, oxygen, hydrogen, and deuterium. The system is initialized with the same spatial distribution function

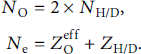

![]() for each species. To mimic ionization and mixture, some conditions apply:

for each species. To mimic ionization and mixture, some conditions apply:

Taking these assumptions into account resolves the multiplicity, and the corresponding Πs can be expressed with a multiplicative factor. The construction, including the multiplicative factors and the needed parameters, are given in Table 5.

Table 5: Construction of Π parameters.

A.5. Mapping Physical to Dimensionless Parameters

As stated in the main body of this work, several physical input quantities are used. They are chosen based on keeping datasets consistent and comparable. Therefore, some parameters are sampled which do not exist in 1D. This also ensures that the data can be broken down into the Πi with the following relations. It is important to note that from the Π4 possibilities, only the electron variant (equivalent to a 0) has to be passed to the PIC code.

This culminates in a needed dimensionality of 9 for the list of parameters: 4 Πi, 2 parameters to deal with the ambiguity

![]() and the mixture parameters, 1 parameter for the plasma slab d T (particle density is fixed), and 2 for dealing with laser’s polarization: Selection whether p/s linear polarization and to make a difference, variation of the incidence angle ΘL.

and the mixture parameters, 1 parameter for the plasma slab d T (particle density is fixed), and 2 for dealing with laser’s polarization: Selection whether p/s linear polarization and to make a difference, variation of the incidence angle ΘL.

Taking the mapping into account (equations (A8)–(A11)), a proper physical sampling includes the following:

-

(1) Ionization of oxygen

-

(2) Mixtures (deuterium vs. hydrogen)

-

(3) Laser polarization

-

(4) Laser energy/joule

-

(5) Laser pulse time/second

-

(6) Laser irradiation size/micron

-

(7) Laser wavelength/meter

-

(8) Laser incidence angle/degree (to the plasma normal)

-

(9) Plasma slab thickness

These 9 parameters have the same dimensionality as the parameter space calculated by the Πi which is necessary since the construction of dedicated quantities (especially the electric field of the laser) cannot be determined easily and a composition of these parameters has to be taken into account.

B. Parameter Ranges

As mentioned in Section 2.2.2, two paradigms are relevant for the selection of the parameter ranges.

For this work, we considered Petawatt laser systems that use mainly linearly polarized light and are capable of varying the the incidence angle of the laser onto the target. Taking this into account, we get two possibilities for the laser’s polarization: s-polarization and p-polarization. Similarly, we can get angles from 0° to less than 90°. At 90°, the laser is not hitting the target and is traveling parallel to the plasma surface, we, therefore, chose to cut the interval at 85°.

The laser energy was selected to cover a large area to increase the comparability of the model with different laser systems. At the conceptualization phase of this study, it was unreasonable to assume high repetition rate experimentation with much larger systems (e.g., GSI’s PHELIX system [Reference Bagnoud, Aurand and Blazevic53, Reference Busold, Almomani and Bagnoud54]), since the currently achievable repetition rates were too low. This might change in the future, such that higher energies are realistic, and the model base has to be expanded under such cases.

The most critical parameter is the pulse length. As our reference value, we selected the FWHM in the time domain of a pure Gaussian pulse. Firstly, the approximation of a pure Gaussian pulse is not necessarily true for a technically implemented laser. If the FWHM according to equation (4) is not used, then the value has to be adjusted accordingly. Due to calculation time issues of the underlying PIC models, we reduced the selected times to the interval from 15 fs to 150 fs. We know that this time can be significantly larger, but high repetition systems can operate with low pulse length variables. We also acknowledge that our lower simulation border for the time is close to the bandwidth-limited pulse limit, but we wanted to have some lower data points to force the interpolation into good behavior and therefore mathematically overshot into the lower regime. The upper pulse length boundary is also the first parameter we want to increase in further studies since technical laser systems do need a larger pulse length to apply this model.

The focus FWHM was then sampled according to the definition of the laser a 0 conditions which we applied to our parameters. We made sure to stay in the TNSA regime and prevented the laser a 0 to be smaller than one and keep the focus still realistically small with 2 μm as the lower range cut-off.

The selected wavelengths are larger than those used in engineered systems and also are somehow continuously sampled from this larger range. The reason for this is the importance of the wavelength parameter following the similitude relations, which are dependent on the laser wavelength in every component.

Concerning the target, we chose the thickness according to the parameters of the physical implementation of a liquid jet, which is currently under development. The mixture can only vary between 0 and 100%. Again, the effective charge of the particles plays an important role. We started with fully ionized oxygen and found traits of the multispecies effect during our investigation. While discussing the results, we generalized the effective charge discussion but were not able to properly simulate different effective ionization levels. This is due to a lack of proper ionization models (also beyond the scope of this study) and limited numerical resources. This would also be a parameter that could be further improved in additional studies.

C. Transversal Lorentz Boosted 1.5D PIC Simulations

Modeling oblique laser incidence onto a target is inherently at least a 2D problem, which requires substantially more computational power than a similar 1D geometry to simulate. Bourdier [Reference Bourdier55] thus proposed a method in which a relativistic Lorentz boost is applied to the frame of reference in the simulation. This method has later been employed by Gibbon et al. [Reference Gibbon, Andreev and Lefebvre56] in a PIC code.

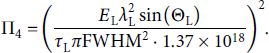

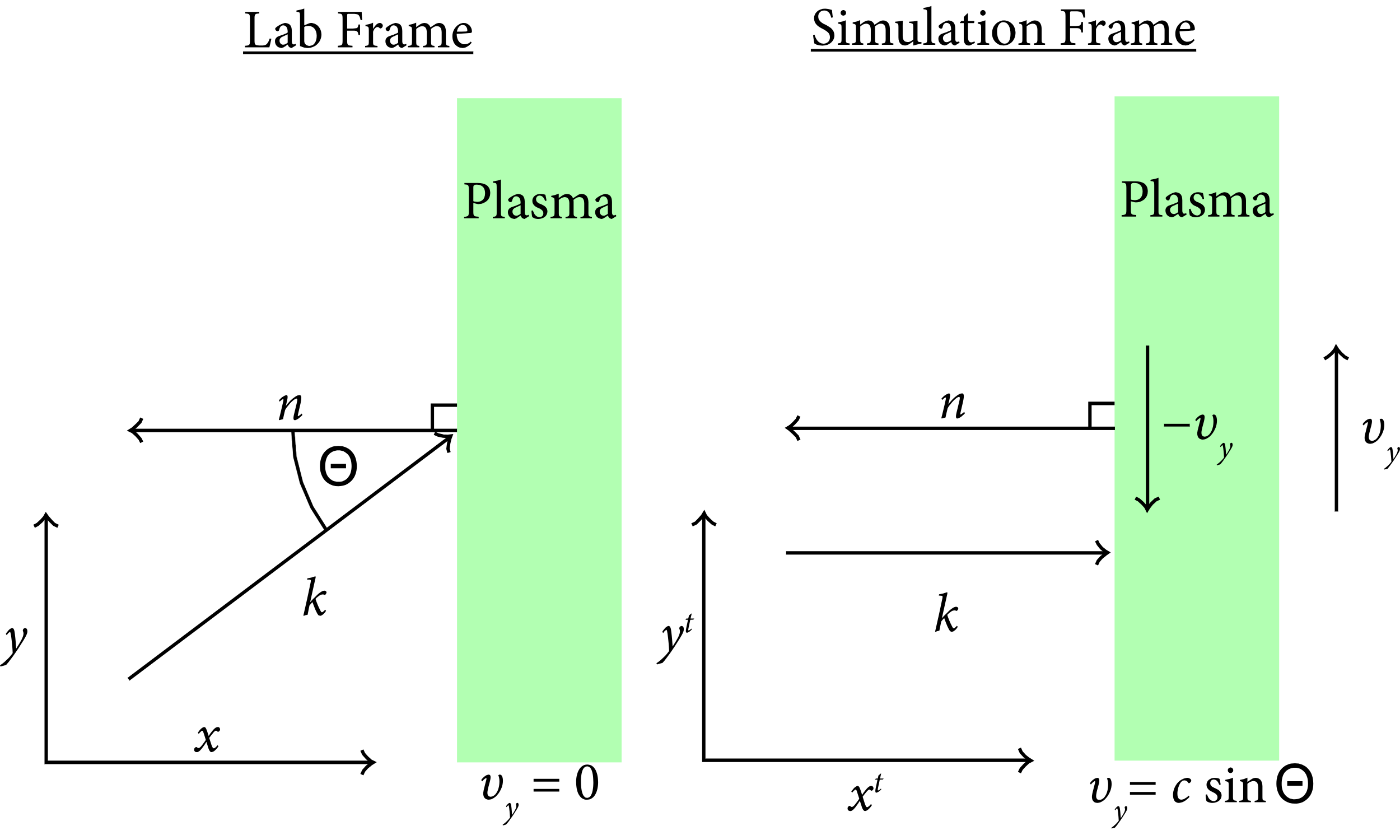

Here, we would like to present the implementation of this technique yet again for a modern PIC code while also correcting some mistakes in the calculations by Gibbon et al. A schematic diagram of the general principle is shown in Figure 13. To obtain the results in the lab frame, a back transformation must be applied to the diagnostics obtained from the simulation. For finding the transformations, a simple Lorentz boost in y-direction by the velocity

![]() is applied. In matrix form, this can be represented by

is applied. In matrix form, this can be represented by

since

![]() . This transformation matrix can be used to transform all the quantities of the particles and the electromagnetic fields. Indicating quantities in the transformed system with a prime, we find after carrying out all transformations.

. This transformation matrix can be used to transform all the quantities of the particles and the electromagnetic fields. Indicating quantities in the transformed system with a prime, we find after carrying out all transformations.

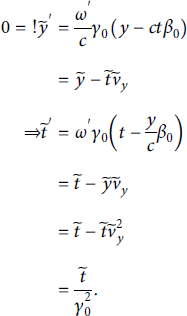

where k y ′ is the y-component of the wave vector in the boosted system, showing that, indeed, the laser is now at normal incidence. Note that the dimensionless laser amplitude a 0 is invariant under the transformation [Reference Gibbon, Andreev and Lefebvre56]. Furthermore, denoting PIC code units with a tilde, we find

Figure 13: Schematic of the Lorentz boosted simulation frame versus the implied lab frame. In the simulation frame, the laser appears to be at normal incidence onto the target while the particles appear to drift in negative y-direction with velocity vy.

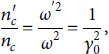

Giving a rescaling of both the simulation time as well as the cell grid, the initial particle density is also affected by.

With these conditions, the particles can be initialized in the boosted frame. The relative velocity vy is added as a permanent drift which is handled and relativistically added to the particles by the code.

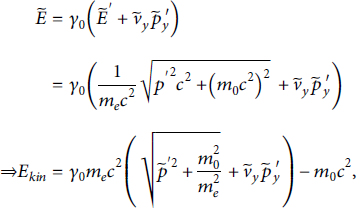

From the diagnostics in the simulation, we can obtain desired quantities via a back transformation. For the particle kinetic energies, we find using the energy-momentum relation:

where m 0 is the particle rest mass. Noting that

![]() , we can find relations to recover the fields of the laser. The nonzero fields are as follows:

, we can find relations to recover the fields of the laser. The nonzero fields are as follows:

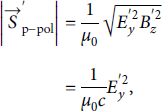

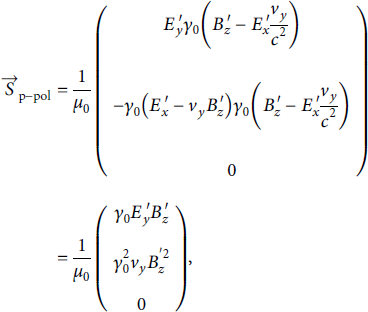

Using the field transformations and assuming that reflection at the plasma surface does not change polarization, we search for the absolute magnitude of the Poynting vector:

with which the relative absorption of the laser into the plasma can be calculated by dividing the incoming Poynting flux by the outgoing Poynting flux.

It should be noted that while the Lorentz-boosted frame method can replicate incidence angle-based behavior, it cannot replace a 2D or even 3D simulation on all accounts [Reference Gibbon, Andreev and Lefebvre56]. Firstly, in the general case, all physical quantities depend separately on the transformed coordinates x, y, z, t, px, py, and pz. Thus, the Lorentz-boosted simulation can only be used for a problem independent of y and z. In addition, reducing the geometry after the boost to 1D limits the spatial dynamics of the particles. Since only the x-axis is present, all particles (while having 3D velocities) can only move along a straight line (i.e., have only 1 spatial dimension). This disregards the angular spread at the back of the target such that the particles can be accelerated for longer times and thus end up with higher energies compared to a similar 2D simulation. Distinctly, 2D effects such as hole boring can also not be modeled accurately.

To illustrate the capabilities of this method, however, the relative laser absorption of a p-polarized laser impinging on a hydrogen plasma target was measured for varying laser incidence angles using the abovementioned method in the Smilei PIC code. The resulting absorption curve is shown in Figure 11. The results agree well with 2D simulations by Cui et al. [Reference Cui, Wang, Sheng, Li and Zhang47] using a similar target and laser (see Figure 12 for a comparison).

C.1. Explicit Lorentz Boost for Oblique Laser Incidence

In the following section, we discuss the full transformation in more detail, and explicitly calculate the relations we mentioned before. Starting from the transformation matrix in equation (C1), the full derivation will be done for all quantities in the system.

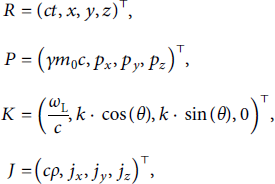

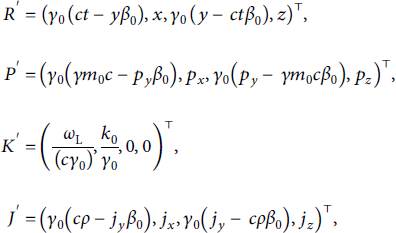

Firstly, the four-position R, the four-momentum P, the four-wave vector K, and the four-current J are given as follows:

where k = ω L/c is the magnitude of the wave vector, ρ is the charge density, and

![]() is the current density. Here, the geometry of the wave vector from Figure 13 has already been applied, reducing the wave vector to two spatial dimensions. By left multiplication of Λ, these quantities can be transformed into the boosted frame. This multiplication yields

is the current density. Here, the geometry of the wave vector from Figure 13 has already been applied, reducing the wave vector to two spatial dimensions. By left multiplication of Λ, these quantities can be transformed into the boosted frame. This multiplication yields

where a prime indicates quantities in the transformed system and

![]() . Most importantly, here, we find ky′ = 0 and

. Most importantly, here, we find ky′ = 0 and

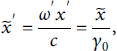

![]() . Also, since the particles are assumed cold at t = 0, we find for the initial density

. Also, since the particles are assumed cold at t = 0, we find for the initial density

![]() .

.

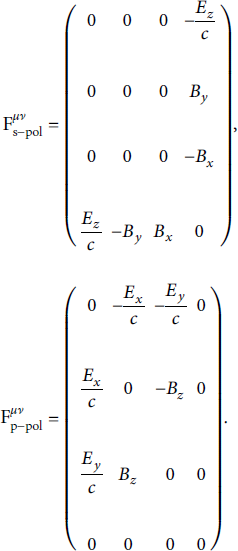

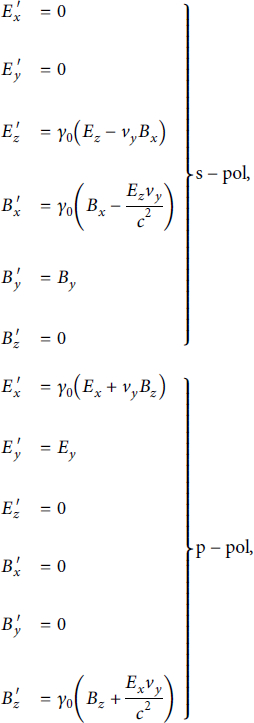

The next transformation is for the electromagnetic fields. Here, we differentiate between s- and p-polarized incidence lasers. To transform the electric and magnetic fields of the incoming laser, the electromagnetic tensor is used:

The Lorentz transformation of such a tensor is given by

where a prime again indicates quantities in the transformed system. The calculated fields are as follows:

where

![]() since the laser is at normal incidence in the boosted system. For absorption measurements, it is useful to have a look at the transformation of the Poynting Vector

since the laser is at normal incidence in the boosted system. For absorption measurements, it is useful to have a look at the transformation of the Poynting Vector

![]() . We first define in vacuum.

. We first define in vacuum.

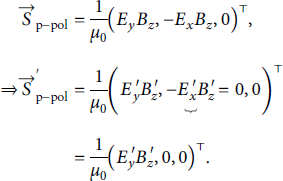

where μ 0 is the vacuum permeability. As an example, we will only present the calculation in the p-polarization case. The s-polarization calculation is equivalent. We find

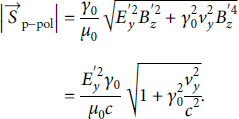

We, hence, find for the magnitude of the transformed Poynting vector.

since

![]() . On the other hand, inserting the transformation into

. On the other hand, inserting the transformation into

![]() , we find

, we find

such that for the magnitude we have

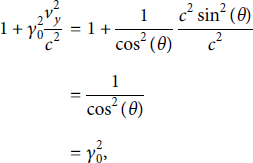

The term in the square root can be resolved elegantly once we remind ourselves of the definition of vy:

and with that we have

Then, let us consider the transformed quantities in code units, so as to initialize the particles correctly in the PIC code. For the space coordinate, we find

while for the time coordinate, since

![]() :

:

Finally, the critical density transforms as follows:

such that the initial particle densities in code units become

A verification plot for the Lorentz Boost method is displayed in Figure 14 for irradiation under an oblique angle.

Figure 14: Example result for a Lorentz-boosted simulation with an angle of 40°. The dashed line denotes the fit of Mora’s model [Reference Mora20] to the data.

D. Laser Conversion Efficiency

The laser conversion efficiency is an important quantity to characterize particle acceleration and especially laser-plasma acceleration. In order to retrieve information about the energy in the output spectrum of a TNSA experiment, consider first a spectrum dN/ dE recorded in multiple energy bins of width ∆E. In this case, the number of particles in bin i is given by the bin’s height multiplied by its width, i.e.,

Hence, the total energy of the particles within the bin could be approximated by multiplying the particles in the bin by the bin’s central energy Ei. Summing over all bins yields the total energy of the particles.

which can be generalized in the continuous limit ∆E⟶0, giving

Concretely, adjusting for the output format of the neural network models, the total energy is given by

where ln (dn/dE and E max are given by the neural network models and V is a unit volume. To obtain a measure for the energy conversion efficiency then, the abovementioned integral should be weighted by the laser pulse energy E L, resulting in the maximization problem shown in equation (6).

E. Neural Network Training and Preparation

In this section, we discuss the chosen parameter ranges for the surrogate models based on neural networks.

Training surrogate models is a tedious and numerically expensive task. This means that we have to be clear about the parameters and data used for the training process. We will first focus on the data preparation task, and second on the numerical hyperparameters chosen for our model. Both parts are important if we want to create fast converging models.

E.1. Data Preparation

Neural networks can only be as good as the data used for training them. Convergence is important and data, therefore, has to be prepared properly. We can only investigate the multispecies effect and subsequent optimizations if we take the full spectrum into account.

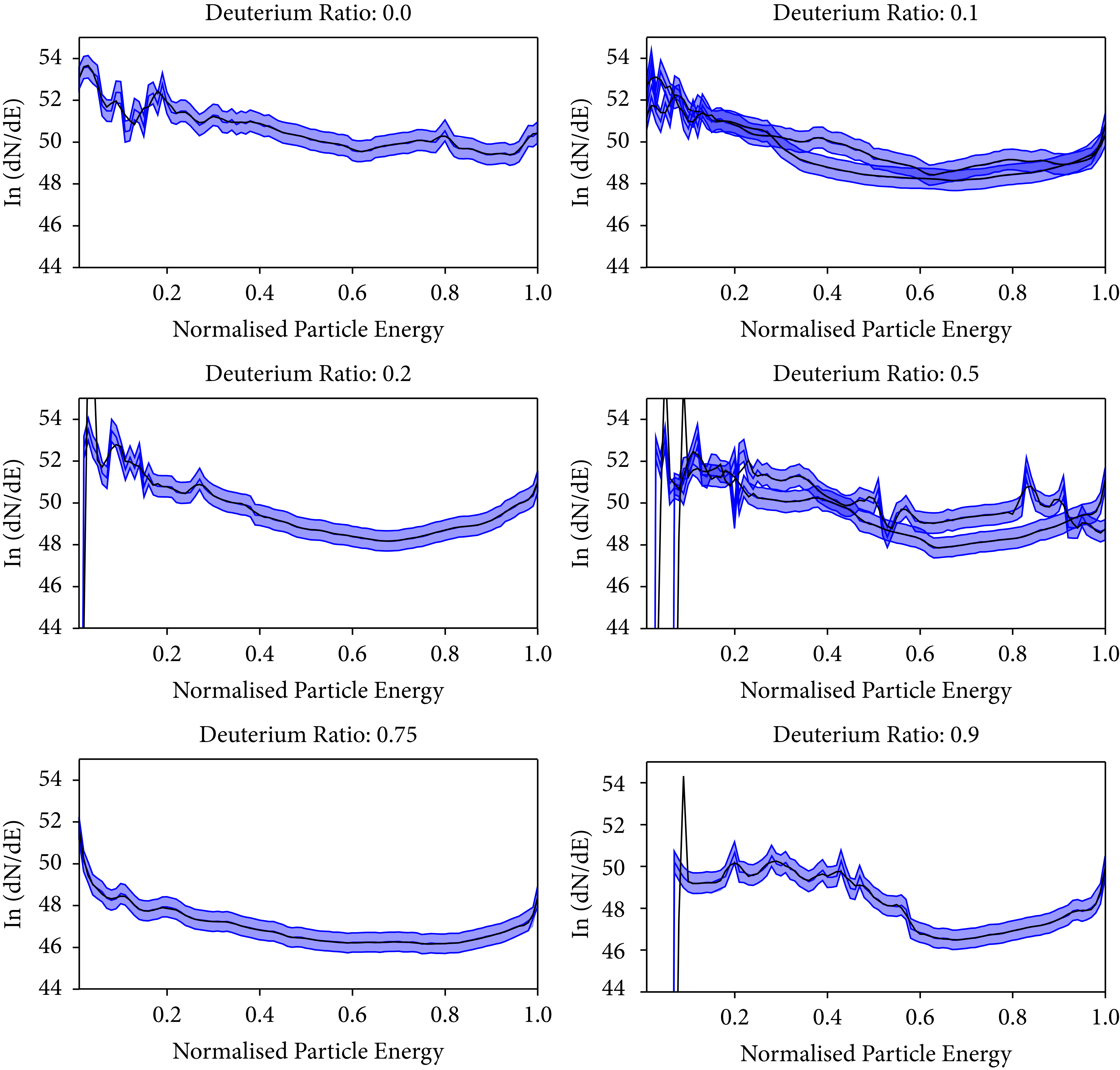

The spectral data for the output spectrum is taken on a logarithmic scale since the count rates vary over several orders of magnitude. The logarithmic data can directly be used to train a model. We tried using the data directly, but convergence was problematic. This is due to the noise of the data and the mixture-depending shifts of multispecies plateaus. The signal variation in both cases is similar, and it is therefore difficult for the network to fit the dependencies. To mitigate this we applied a Savitzky and Golay filter [Reference Savitzky and Golay57] with a window size of 7 points and a 3rd-order polynomial. This filter decreased the noise-based fluctuations and allowed subsequent convergence. We display a comparison for filtered and unfiltered data in Figure 15, which shows that the major behavior of the curves is reproduced but the bin-to-bin fluctuations in the mid to high energy range are minimized.

Figure 15: Savitzky–Golay filter with window size 7 and a 3rd-order polynomial. Blue indicates the raw data, projected to a wider range for visibility and black indicates the filtered signal.

E.2. Numerical Parameters, Training, and Topology

As none of the architectural parameters for these models were known, some outlying hyperparameters were decided first. For a regression problem, the rectified linear unit (ReLU) activation function is widely used and was added to every layer of the network except the output layer which used the identity activation. Similarly, we chose the mean squared error, suited for regression problems, as loss and it was minimized using the Adam optimizer with β 1 = 0.9, β 2 = 0.999, and ϵ = 1 × 10−7. We initialised the training with a learning rate of 0.001. Using Keras’ code (ReduceLROnPlateau) feature with a patience of 3 epochs and monitoring validation loss, the learning rate was continuously halved until reaching 0.0001. In order for the physical parameters to be more manageable numerically, all parameters were divided by the maximum value in their range (see Table 1) before being given to the model.

With these outlying parameters in place, the architecture of the FCNs, i.e., the number of layers and the number of neurons in each layer, was left variable and was optimized for the problem using a hyperparameter tuning method. Keras Tuner allows for extensive hyperparameter tuning using various optimization algorithms [Reference O’Malley, Bursztein, Long, Chollet, Jin and Invernizzi31].

Recalling Section 2.2, each simulation output contains information about 100 locations in the energy spectrum of the particles. Hence, for the reduced continuous model, the available data length was 68 973 × 100 = 6 897 300 data points. Of these, 81% were used for training, 9% were used for validation, and 10% were used for testing. Running Keras Tuner on Google Cloud Compute Engine API from a Google Colab Notebook, Bayesian Optimization could be performed for the hyperparameters of the continuous model of hydrogen ions. In order to find a model architecture that most accurately describes the simulation results, the number of layers and the number of neurons for each layer was first optimized to achieve the lowest possible training loss. Every training used a batch size of 256 and an early stopping mechanism. After 50 trials, each running training twice in order to lower the chance of a bad local minimum, a suitable architecture was found. However, this optimized model was only tuned to minimize the training loss of the model without considering the validation data at all. To generalize the model, hyperparameter tuning was run again on the optimized architecture, this time with L1 and L2 regularization on each layer as the hyperparameters to be tuned and with the tuning objective set to the mean squared error on the validation set. Each hidden layer in the network has L1 regularization strength of 1.98 × 10−6 and L2 regularization strength of 3.07 × 10−8. The network achieved a mean squared error of 3.38 on the 620 757 randomly selected validation data points. As a reminder, this number is equal to the mean squared error on the

![]() prediction for input parameters {E, [physical parameters]}.

prediction for input parameters {E, [physical parameters]}.

Equivalently, the second model predicting the maximum ion energy could be tuned and optimized. Since the maximum energy is only predicted per simulation and not per energy bin of the energy spectra, the second model was trained on 68 973 unique data points. This significantly smaller dataset made the model training on a home computer feasible. The optimized model for the maximum energy found L1 regularization strength of 2.3 × 10−4 and L2 regularization strength of 1.1 × 10−7.

Data Availability

The simulation data and it postprocessing scripts used for the model of this study have been deposited in tudatalib repository (https://doi.org/10.48328/tudatalib-1143). The models and exemplary training and application routines used here are deposited in another tudatalib repository (https://doi.org/10.48328/tudatalib-1142).

Conflicts of Interest

The authors declare that they have no conflicts of interest.

Acknowledgments

The authors would like to thank the Smilei team for providing valuable discussion. The authors would also like to thank Ion Gabriel Ion and Dimitrios Loukrezis for the helpful discussion on sensitivity analysis and the physical interpretation of artificial neural network models. This work was funded by HMWK through the LOEWE Center “Nuclear Photonics.” This work was also supported by the Graduate School CE within the Centre for Computational Engineering at Technische Universität Darmstadt.