1. Introduction

Static analysis tools based on abstract interpretation are complicated software systems. They are complicated due to the complications of programming language semantics and the subtle invariants required to achieve meaningful results. They are also complicated when dedicated analysis algorithms are required to deal with certain types of properties, for example, one algorithm for inferring points-to information and another for checking array-out-of-bounds accesses. Thus, static analysis tools themselves are subject to subtle programming errors. From a software engineering perspective, it is therefore meaningful to separate the specification of the analysis as much as possible from the algorithm solving the analysis problem for a given program. In order to be widely applicable, this algorithm therefore should be as generic as possible.

In abstract interpretation-based static analysis, the analysis of a program, notably an imperative or object-oriented program, can naturally be compiled into a system of abstract equations. The unknowns represent places where invariants regarding the reaching program states are desired. Such unknowns could be, for example, plain program points or, in case of interprocedural analysis, program points together with abstract calling contexts. The sets of possible abstract values for these unknowns then correspond to classes of possible invariants and typically form complete lattices. Since for infinite complete lattices of abstract values the number of calling contexts is also possibly infinite, interprocedural analysis has generally to deal with infinite systems of equations. It turns out, however, that in this particular application, only the values of those unknowns are of interest that directly or indirectly influence some initial unknown. Here, local solving comes as a rescue: a local solver can be started with one initial unknown of interest. It then explores only those unknowns whose values contribute to the value of this initial unknown.

One such generic local solver is the top-down solver TD (Charlier and Van Hentenryck Reference Charlier and Van Hentenryck1992; Muthukumar and Hermenegildo Reference Muthukumar and Hermenegildo1990). Originally, the TD solver has been conceived for goal-directed static analysis of Prolog programs (Hermenegildo and Muthukumar Reference Hermenegildo and Muthukumar1989; Hermenegildo and Muthukumar Reference Hermenegildo and Muthukumar1992) while some basic ideas can be traced back to Bruynooghe et al. (Reference Bruynooghe, Janssens, Callebaut and Demoen1987). The same technology as developed for Prolog later turned out to be useful for imperative programs as well (Hermenegildo Reference Hermenegildo2000) and also was applied to other languages via translation to CLP programs (Gallagher and Henriksen Reference Gallagher and Henriksen2006; Hermenegildo et al. Reference Hermenegildo, Mendez-Lojo and Navas2007). A variant of it is still at the heart of the program analysis system Ciao (Hermenegildo et al. Reference Hermenegildo, Bueno, Carro, LÓpez-GarcÍa, Mera, Morales and Puebla2012; Hermenegildo et al. Reference Hermenegildo, Puebla, Bueno and LÓpez-GarcÍa2005). The TD solver is interesting in that it completely abandons specific data structures such as work-lists, but solely relies on recursive evaluation of (right-hand sides of) unknowns.

Subsequently, the idea of using generic local solvers has also been adopted for the design and implementation of the static analysis tool Goblint (Vojdani et al. Reference Vojdani, Apinis, RÕtov, Seidl, Vene and Vogler2016) – now targeted at the abstract interpretation of multi-threaded C. Since the precise analysis of programming languages requires to employ abstract domains with possibly infinite ascending or descending chains, the solvers provided by Goblint were extended with support for widening as well as narrowing (Amato et al. Reference Amato, Scozzari, Seidl, Apinis and Vojdani2016). Recall that widening and narrowing operators have been introduced in Cousot and Cousot (Reference Cousot, Cousot, Graham, Harrison and Sethi1977); Cousot and Cousot (Reference Cousot and Cousot1992) as an iteration strategy for solving finite systems of equations: in a first phase, an upward Kleene fixpoint iteration is accelerated to obtain some post-solution, which then, in a second phase, is improved by an accelerated downward iteration. This strict separation into phases is given up by the algorithms from Amato et al. (Reference Amato, Scozzari, Seidl, Apinis and Vojdani2016). Instead, the ascending iteration on one unknown is possibly intertwined with the descending iteration on another. The idea is to avoid unnecessary loss of precision by starting an improving iteration on an unknown as soon as an over-approximation of the least solution for this unknown has been reached. On the downside, the solvers developed in Amato et al. (Reference Amato, Scozzari, Seidl, Apinis and Vojdani2016) are only guaranteed to terminate for monotonic systems of equations. Systems for interprocedural analysis, however, are not necessarily monotonic. The problems concerning termination as encountered by the non-standard local iteration strategy from Amato et al. (Reference Amato, Scozzari, Seidl, Apinis and Vojdani2016) were resolved in Frielinghaus et al. (Reference Frielinghaus, Seidl and Vogler2016) where the switch from a widening to a narrowing iteration for an unknown was carefully redesigned.

When reviewing advantages and disadvantages of local generic solvers for Goblint, we observed in Apinis et al. (Reference Apinis, Seidl, Vojdani, Probst, Hankin and Hansen2016) that the extension with widening and narrowing from Amato et al. (Reference Amato, Scozzari, Seidl, Apinis and Vojdani2016) could nicely be applied to the solver TD as well – it was left open, though, how termination can be enforced not only for monotonic, but for arbitrary systems of equations. In this paper, we settle this issue and present a variant of the TD solver with widening and narrowing which is guaranteed to terminate for all systems of equations – whenever only finitely many unknowns are encountered.

Besides termination, another obstacle for the practical application of static analysis is the excessive space consumption incurred by storing abstract values for all encountered unknowns. Storing all these values allows interprocedural static analysis tools like Goblint only to scale to medium-sized programs of about 100k LOC. This is in stark contrast to the tool AstrÉe, which – while also being implemented in OCaml – succeeds in analyzing much larger input programs (Cousot et al. Reference Cousot, Cousot, Feret, Mauborgne, MinÉ and Rival2009). One reason is that AstrÉe only keeps the abstract values of a subset of unknowns in memory, which is sufficient for proceeding with the current fixpoint iteration. As our second contribution, we therefore show how to realize a space-efficient iteration strategy within the framework of generic local solvers. Unlike AstrÉe which iterates over the syntax, generic local solvers are application-independent. They operate on systems of equations – no matter where these are extracted from. As right-hand sides of equations are treated as black boxes, inspection of the syntax of the input program is out of reach. Since for local solvers the set of unknowns to be considered is only determined during the solving iteration, also the subset of unknowns sufficient for reconstructing the analysis result must be identified on the fly. Our idea for that purpose is to instrument the solver to observe when the value of an unknown is queried, for which an iteration is already on the way. For equation systems corresponding to standard control-flow graphs, these unknowns correspond to the loop heads and are therefore ideal candidates for widening and narrowing to be applied. That observation was already exploited in Apinis et al. (Reference Apinis, Seidl, Vojdani, Probst, Hankin and Hansen2016) to identify a set of unknowns where widening and narrowing should be applied. The values of these unknowns also suffice for reconstructing the values of all remaining unknowns without further iteration. Finally, we present an extension of the TD solver with side effects. Side effects during solving means that, while evaluating the right-hand side for some unknown, contributions to other unknowns may be triggered. This extension allows to nicely formulate partial context-sensitivity at procedure calls and also to combine flow-insensitive analysis, for example, of global data, with context-sensitive analysis of the local program state (Apinis et al. Reference Apinis, Seidl, Vojdani and Igarashi2012). The presented solvers have been implemented as part of Goblint, a static analysis framework written in OCaml.

This paper is organized as follows: First, we recall the basics of abstract interpretation. In Section 2, we show how the concrete semantics of a program can be defined using a system of equations with monotonic right-hand sides. In Section 3, we describe how abstract equation systems can be used to compute sound approximations of the collecting semantics. Since the sets of unknowns of concrete and abstract systems of equations may differ, we argue that soundness can only be proven relative to a description relation between concrete and abstract unknowns. We also recall the concepts of widening and narrowing and indicate in which sense these can be used to effectively solve abstract systems of equations. In Section 4, we present the generic local solver

![]() $\mathbf{TD}_{\mathbf{term}}$

. In Section 5, we show that it is guaranteed to terminate even for abstract equation systems with non-monotonic right-hand sides. In Section 6, we prove that it will always compute a solution that is a sound description of the least solution of the corresponding concrete system. In Section 7, we present the solver

$\mathbf{TD}_{\mathbf{term}}$

. In Section 5, we show that it is guaranteed to terminate even for abstract equation systems with non-monotonic right-hand sides. In Section 6, we prove that it will always compute a solution that is a sound description of the least solution of the corresponding concrete system. In Section 7, we present the solver

![]() $\mathbf{TD}_{\mathbf{space}}$

, a space-efficient variation of

$\mathbf{TD}_{\mathbf{space}}$

, a space-efficient variation of

![]() $\mathbf{TD}_{\mathbf{term}}$

that only keeps values at widening points. In Section 8, we prove that it terminates and similar to

$\mathbf{TD}_{\mathbf{term}}$

that only keeps values at widening points. In Section 8, we prove that it terminates and similar to

![]() $\mathbf{TD}_{\mathbf{term}}$

computes a sound description of the least solution of the corresponding concrete system. In Section 9, we introduce side-effecting systems of equations, and the solver

$\mathbf{TD}_{\mathbf{term}}$

computes a sound description of the least solution of the corresponding concrete system. In Section 9, we introduce side-effecting systems of equations, and the solver

![]() $\mathbf{TD}_{\mathbf{side}}$

, a variation of

$\mathbf{TD}_{\mathbf{side}}$

, a variation of

![]() $\mathbf{TD}_{\mathbf{term}}$

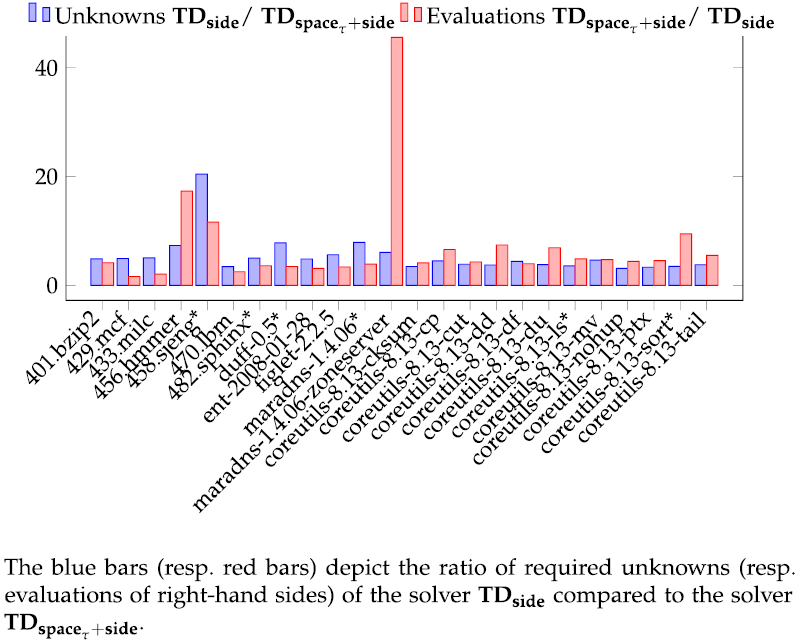

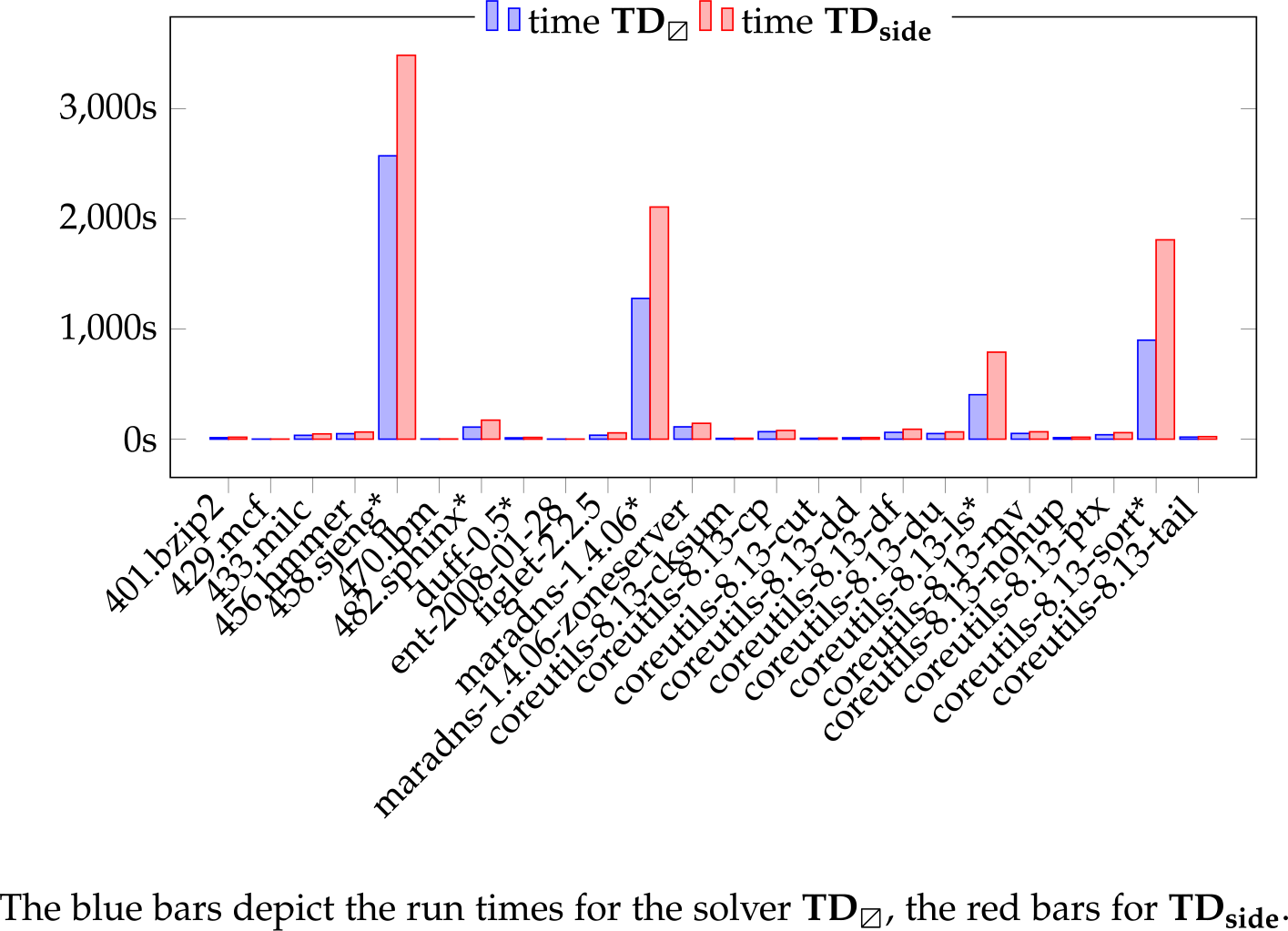

. In order to argue about soundness in presence of side effects, it is now convenient to consider description relations between concrete and abstract unknowns which are not static, but dynamically computed during fixpoint iteration, that is, themselves depend on the analysis results. In Section 10, we discuss the results of evaluating the solvers on a set of programs. In Section 11, we summarize our main contributions. Throughout the presentation, we exemplify our notions and concepts by small examples from interprocedural program analysis.

$\mathbf{TD}_{\mathbf{term}}$

. In order to argue about soundness in presence of side effects, it is now convenient to consider description relations between concrete and abstract unknowns which are not static, but dynamically computed during fixpoint iteration, that is, themselves depend on the analysis results. In Section 10, we discuss the results of evaluating the solvers on a set of programs. In Section 11, we summarize our main contributions. Throughout the presentation, we exemplify our notions and concepts by small examples from interprocedural program analysis.

2. Concrete Systems of Equations

Solvers are meant to provide solutions to systems of equations over some complete lattice. Assume that

![]() $\mathcal{X}$

is a (not necessarily finite) set of unknowns and

$\mathcal{X}$

is a (not necessarily finite) set of unknowns and

![]() $\mathbb{D}$

a complete lattice. Then, a system of equations

$\mathbb{D}$

a complete lattice. Then, a system of equations

![]() $\mathbb{E}$

(with unknowns from

$\mathbb{E}$

(with unknowns from

![]() $\mathcal{X}$

and values in

$\mathcal{X}$

and values in

![]() $\mathbb{D}$

) assigns a right-hand side

$\mathbb{D}$

) assigns a right-hand side

![]() $F_x$

to each unknown

$F_x$

to each unknown

![]() $x\in\mathcal{X}$

. Since we not only interested in the values assigned to unknowns but also in the dependencies between these, we assume that each right-hand side

$x\in\mathcal{X}$

. Since we not only interested in the values assigned to unknowns but also in the dependencies between these, we assume that each right-hand side

![]() $F_x$

is a function of type

$F_x$

is a function of type

![]() $(\mathcal{X} \to \mathbb{D}) \to \mathbb{D} \times \mathcal{P} (\mathcal{X})$

.

$(\mathcal{X} \to \mathbb{D}) \to \mathbb{D} \times \mathcal{P} (\mathcal{X})$

.

-

The first component of the result of

$F_x$

is the value for x;

$F_x$

is the value for x; -

The second component is a set of unknowns which is sufficient to determine the value.

More formally, we assume for the second component of

![]() $F_x$

that for every assignment

$F_x$

that for every assignment

![]() $\sigma:\mathcal{X}\to\mathbb{D}$

,

$\sigma:\mathcal{X}\to\mathbb{D}$

,

![]() $F_x\,\sigma = (d,X)$

implies that for any other assignment

$F_x\,\sigma = (d,X)$

implies that for any other assignment

![]() $\sigma':\mathcal{X}\to\mathbb{D}$

with

$\sigma':\mathcal{X}\to\mathbb{D}$

with

![]() $\sigma|_X = \sigma'|_X$

, that is, which agrees with

$\sigma|_X = \sigma'|_X$

, that is, which agrees with

![]() $\sigma$

on all unknowns from

$\sigma$

on all unknowns from

![]() $\mathcal{X}$

,

$\mathcal{X}$

,

![]() $F_x\,\sigma = F_x\,\sigma'$

holds as well.

$F_x\,\sigma = F_x\,\sigma'$

holds as well.

The set X thus can be considered as a superset of all unknowns onto which the unknown x depends – w.r.t. the assignment

![]() $\sigma$

. We remark this set very well may be different for different assignments. For convenience, we denote these two components of the result

$\sigma$

. We remark this set very well may be different for different assignments. For convenience, we denote these two components of the result

![]() $F_x\,\sigma$

as

$F_x\,\sigma$

as

![]() $(F_x\,\sigma)_1$

and

$(F_x\,\sigma)_1$

and

![]() $(F_x\,\sigma)_2$

, respectively.

$(F_x\,\sigma)_2$

, respectively.

Systems of equations can be used to formulate the concrete (accumulating) semantics of a program. In this case, the complete lattice

![]() $\mathbb{D}$

is of the form

$\mathbb{D}$

is of the form

![]() $\mathcal{P} (\mathcal{Q})$

where

$\mathcal{P} (\mathcal{Q})$

where

![]() $\mathcal{Q}$

is the set of states possibly attained during program execution. Furthermore, all right-hand sides of the concrete semantics should be monotonic w.r.t. the natural ordering on pairs. This means that on larger assignments, the sets of states for x as well as the set of contributing unknowns may only increase.

$\mathcal{Q}$

is the set of states possibly attained during program execution. Furthermore, all right-hand sides of the concrete semantics should be monotonic w.r.t. the natural ordering on pairs. This means that on larger assignments, the sets of states for x as well as the set of contributing unknowns may only increase.

Example 1 For

![]() $\mathcal{X} = \mathcal{Q} = \mathbb{Z},$

a system of equations could have right-hand sides

$\mathcal{X} = \mathcal{Q} = \mathbb{Z},$

a system of equations could have right-hand sides

![]() $F_x:(\mathbb{Z} \to \mathcal{P} (\mathbb{Z})) \to (\mathcal{P} (\mathbb{Z}) \times \mathcal{P} (\mathbb{Z}))$

where, for example,

$F_x:(\mathbb{Z} \to \mathcal{P} (\mathbb{Z})) \to (\mathcal{P} (\mathbb{Z}) \times \mathcal{P} (\mathbb{Z}))$

where, for example,

Thus,

![]() $F_1$

always returns the value

$F_1$

always returns the value

![]() $\{0\}$

and accordingly depends on no other unknown,

$\{0\}$

and accordingly depends on no other unknown,

![]() $F_2$

on the other hand returns the union of the values of unknowns 1 and 3. Therefore, it depends on both of them.

$F_2$

on the other hand returns the union of the values of unknowns 1 and 3. Therefore, it depends on both of them.

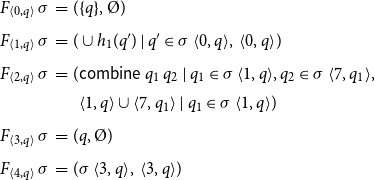

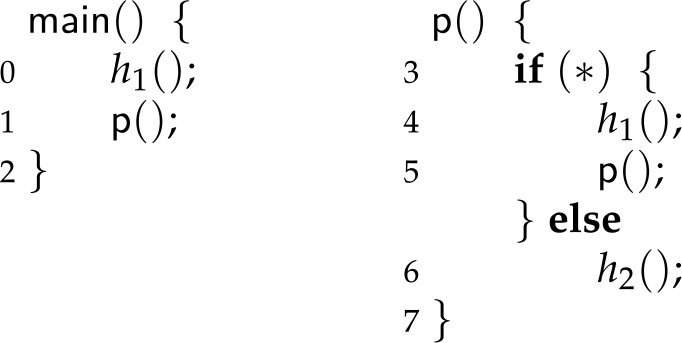

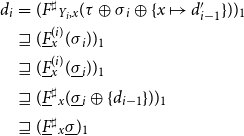

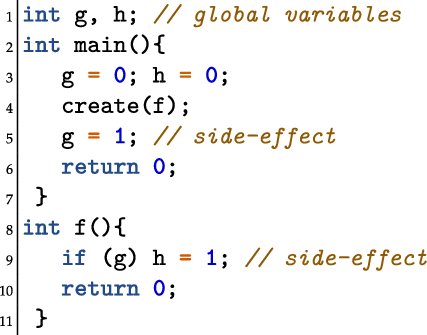

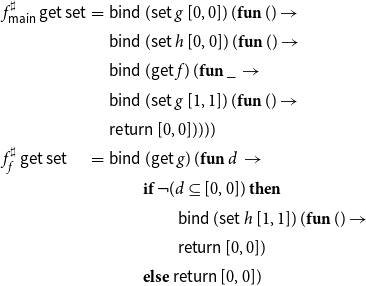

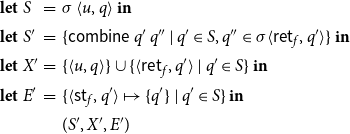

Example 2 As a running example, consider the program from Figure 1 which consists of the procedures main and p. Assume that these operate on a set

![]() $\mathcal{Q}$

of program states where the functions

$\mathcal{Q}$

of program states where the functions

![]() $h_1, h_2: \mathcal{Q} \to \mathcal{P} (\mathcal{Q})$

represent the semantics of basic computation steps. The collecting semantics for the program provides subsets of states for each program point

$h_1, h_2: \mathcal{Q} \to \mathcal{P} (\mathcal{Q})$

represent the semantics of basic computation steps. The collecting semantics for the program provides subsets of states for each program point

![]() $u \in {0, \ldots, 7}$

and each possible calling context

$u \in {0, \ldots, 7}$

and each possible calling context

![]() $q \in \mathcal{Q}$

. The right-hand side function

$q \in \mathcal{Q}$

. The right-hand side function

![]() $F_{{\langle{u,q}\rangle}}$

for each such pair

$F_{{\langle{u,q}\rangle}}$

for each such pair

![]() ${\langle{u,q}\rangle}$

is given by:

${\langle{u,q}\rangle}$

is given by:

\begin{equation*}\begin{array}{lll}F_{{\langle{0,q}\rangle}}\,\sigma &=& (\{q\},\unicode{x00D8}) \\[0.5ex]F_{{\langle{1,q}\rangle}}\,\sigma &=& (\cup{h_1(q')\mid q'\in\sigma\,{\langle{0,q}\rangle}},\;{{\langle{0,q}\rangle}}) \\[0.5ex]F_{{\langle{2,q}\rangle}}\,\sigma &=& ({\textsf{combine}\,q_1\,q_2\mid q_1\in\sigma\,{\langle{1,q}\rangle}, q_2\in\sigma\,{\langle{7,q_1}\rangle}}, \\ & &\phantom{(}{{\langle{1,q}\rangle}}\cup{{\langle{7,q_1}\rangle}\mid q_1\in\sigma\,{\langle{1,q}\rangle}}) \\[0.5ex] F_{{\langle{3,q}\rangle}}\,\sigma &=& ({q},\unicode{x00D8}) \\[0.5ex]F_{{\langle{4,q}\rangle}}\,\sigma &=& (\sigma\,{\langle{3,q}\rangle},\;{{\langle{3,q}\rangle}}) \\[0.5ex]\end{array}\end{equation*}

\begin{equation*}\begin{array}{lll}F_{{\langle{0,q}\rangle}}\,\sigma &=& (\{q\},\unicode{x00D8}) \\[0.5ex]F_{{\langle{1,q}\rangle}}\,\sigma &=& (\cup{h_1(q')\mid q'\in\sigma\,{\langle{0,q}\rangle}},\;{{\langle{0,q}\rangle}}) \\[0.5ex]F_{{\langle{2,q}\rangle}}\,\sigma &=& ({\textsf{combine}\,q_1\,q_2\mid q_1\in\sigma\,{\langle{1,q}\rangle}, q_2\in\sigma\,{\langle{7,q_1}\rangle}}, \\ & &\phantom{(}{{\langle{1,q}\rangle}}\cup{{\langle{7,q_1}\rangle}\mid q_1\in\sigma\,{\langle{1,q}\rangle}}) \\[0.5ex] F_{{\langle{3,q}\rangle}}\,\sigma &=& ({q},\unicode{x00D8}) \\[0.5ex]F_{{\langle{4,q}\rangle}}\,\sigma &=& (\sigma\,{\langle{3,q}\rangle},\;{{\langle{3,q}\rangle}}) \\[0.5ex]\end{array}\end{equation*}

\begin{equation*}\begin{array}{lll}F_{{\langle{5,q}\rangle}}\,\sigma &=& (\cup{h_1(q_1)\mid q_1\in\sigma\,{\langle{4,q}\rangle}},{{\langle{4,q}\rangle}}) \\[0.5ex]F_{{\langle{6,q}\rangle}}\,\sigma &=& (\sigma\,{\langle{3,q}\rangle},\;{{\langle{3,q}\rangle}}) \\[0.5ex]F_{{\langle{7,q}\rangle}}\,\sigma &=& ({\textsf{combine}\,q_1\,q_2\mid q_1\in\sigma\,{\langle{5,q}\rangle}, q_2\in\sigma\,{\langle{7,q_1}\rangle}} \\ & &\phantom{(} \cup\,{h_2(q_1)\mid q_1\in\sigma\,{\langle{6,q}\rangle}}, \\ & &\phantom{(} {{\langle{5,q}\rangle}}\cup{{\langle{7,q_1}\rangle}\mid q_1\in\sigma\,{\langle{5,q}\rangle}}\cup {{\langle{6,q}\rangle}}) \end{array}\end{equation*}

\begin{equation*}\begin{array}{lll}F_{{\langle{5,q}\rangle}}\,\sigma &=& (\cup{h_1(q_1)\mid q_1\in\sigma\,{\langle{4,q}\rangle}},{{\langle{4,q}\rangle}}) \\[0.5ex]F_{{\langle{6,q}\rangle}}\,\sigma &=& (\sigma\,{\langle{3,q}\rangle},\;{{\langle{3,q}\rangle}}) \\[0.5ex]F_{{\langle{7,q}\rangle}}\,\sigma &=& ({\textsf{combine}\,q_1\,q_2\mid q_1\in\sigma\,{\langle{5,q}\rangle}, q_2\in\sigma\,{\langle{7,q_1}\rangle}} \\ & &\phantom{(} \cup\,{h_2(q_1)\mid q_1\in\sigma\,{\langle{6,q}\rangle}}, \\ & &\phantom{(} {{\langle{5,q}\rangle}}\cup{{\langle{7,q_1}\rangle}\mid q_1\in\sigma\,{\langle{5,q}\rangle}}\cup {{\langle{6,q}\rangle}}) \end{array}\end{equation*}

Recall that in presence of local scopes of program variables, the state after a call may also depend on the state before the call. Accordingly, we use an auxiliary function

![]() $\textsf{combine}:\mathcal{Q}\to \mathcal{Q}\to \mathcal{Q}$

which determines the program state after a call from the state before the call and the state attained at the end of the procedure body. For simplicity, we have abandoned an extra function enter for modeling passing of parameters as considered, e.g., in Apinis et al. (Reference Apinis, Seidl, Vojdani and Igarashi2012) and thus assume that the full program state of the caller before the call is passed to the callee. If the set

$\textsf{combine}:\mathcal{Q}\to \mathcal{Q}\to \mathcal{Q}$

which determines the program state after a call from the state before the call and the state attained at the end of the procedure body. For simplicity, we have abandoned an extra function enter for modeling passing of parameters as considered, e.g., in Apinis et al. (Reference Apinis, Seidl, Vojdani and Igarashi2012) and thus assume that the full program state of the caller before the call is passed to the callee. If the set

![]() $\mathcal{Q}$

is of the form

$\mathcal{Q}$

is of the form

![]() $\mathcal{Q} = \mathcal{A}\times\mathcal{B}$

where

$\mathcal{Q} = \mathcal{A}\times\mathcal{B}$

where

![]() $\mathcal{A},\mathcal{B}$

are the sets of local and global states, respectively, the function

$\mathcal{A},\mathcal{B}$

are the sets of local and global states, respectively, the function

![]() $\textsf{combine}$

could, for example, be defined as

$\textsf{combine}$

could, for example, be defined as

We remark that the sets of unknowns onto which the right-hand sides for

![]() ${\langle{2,q}\rangle}$

as well as

${\langle{2,q}\rangle}$

as well as

![]() ${\langle{7,q}\rangle}$

depend themselves depend on the assignment

${\langle{7,q}\rangle}$

depend themselves depend on the assignment

![]() $\sigma$

.

$\sigma$

.

Figure 1. An example program with procedures.

Besides the concrete values provided for the unknowns, we also would like to determine the subset of unknowns which contribute to a particular subset of unknowns of interest. Restricting to this subset has the practical advantage that calculation may be restricted to a perhaps quite small number of unknowns only. Also, that subset in itself contains some form of reachability information. For interprocedural analysis, for example, of the program in Example 2, we are interested in all pairs

![]() ${\langle\textsf{ret}_{\textit{main}},q\rangle},q\in Q_0$

, that is, the endpoint of the initially called procedure main for every initial calling context

${\langle\textsf{ret}_{\textit{main}},q\rangle},q\in Q_0$

, that is, the endpoint of the initially called procedure main for every initial calling context

![]() $q\in Q_0$

. In the Example 2, these would be of the form

$q\in Q_0$

. In the Example 2, these would be of the form

![]() ${\langle{2,q}\rangle}, q\in Q_0$

. In order to determine the sets of program states for the unknowns of interest, it suffices to consider only those calling contexts for each procedure p (and thus each program point within p) in which p is possibly called. The set of all such pairs is given as the least subset of unknowns which (directly or indirectly) influences any of the unknowns of interest.

${\langle{2,q}\rangle}, q\in Q_0$

. In order to determine the sets of program states for the unknowns of interest, it suffices to consider only those calling contexts for each procedure p (and thus each program point within p) in which p is possibly called. The set of all such pairs is given as the least subset of unknowns which (directly or indirectly) influences any of the unknowns of interest.

More technically for a system

![]() $\mathbb{E}$

of equations, consider an assignment

$\mathbb{E}$

of equations, consider an assignment

![]() $\sigma : \mathcal{X}\to \mathbb{D}$

and a subset

$\sigma : \mathcal{X}\to \mathbb{D}$

and a subset

![]() $\textit{dom}\subseteq\mathcal{X}$

of unknowns. Then,

$\textit{dom}\subseteq\mathcal{X}$

of unknowns. Then,

![]() $\textit{dom}$

is called

$\textit{dom}$

is called

![]() $(\sigma,\mathbb{E})$

-closed if

$(\sigma,\mathbb{E})$

-closed if

![]() $(F_x\,\sigma)_2\subseteq\textit{dom}$

is satisfied for all

$(F_x\,\sigma)_2\subseteq\textit{dom}$

is satisfied for all

![]() $x\in\textit{dom}$

. The pair

$x\in\textit{dom}$

. The pair

![]() $(\sigma,\textit{dom})$

is called a partial post-solution of

$(\sigma,\textit{dom})$

is called a partial post-solution of

![]() $\mathbb{E}$

, if

$\mathbb{E}$

, if

![]() $\textit{dom}$

is

$\textit{dom}$

is

![]() $(\sigma,\mathbb{E})$

-closed, and for each

$(\sigma,\mathbb{E})$

-closed, and for each

![]() $x\in\textit{dom}$

,

$x\in\textit{dom}$

,

![]() $\sigma\,x\sqsupseteq(F_x\,\sigma)_1$

holds.

$\sigma\,x\sqsupseteq(F_x\,\sigma)_1$

holds.

The partial post-solution

![]() $(\sigma,\textit{dom})$

of

$(\sigma,\textit{dom})$

of

![]() $\mathbb{E}$

is total (or just a post-solution of

$\mathbb{E}$

is total (or just a post-solution of

![]() $\mathbb{E}$

) if

$\mathbb{E}$

) if

![]() $\textit{dom}=\mathcal{X}$

. In this case, we also skip the second component in this pair.

$\textit{dom}=\mathcal{X}$

. In this case, we also skip the second component in this pair.

Example 3 Consider the following system of equations with

![]() $\mathcal{X} = \mathcal{Q} = \mathbb{Z}$

where

$\mathcal{X} = \mathcal{Q} = \mathbb{Z}$

where

\begin{align*} F_1\,\sigma &= (\sigma\,2, \{2\}) \\ F_2\,\sigma &= (\{2\}, \unicode{x00D8}) \\ F_3\,\sigma &= (\{3\}, \unicode{x00D8}) \\ F_x\,\sigma &= (\unicode{x00D8},\unicode{x00D8})\qquad\text{otherwise}\end{align*}

\begin{align*} F_1\,\sigma &= (\sigma\,2, \{2\}) \\ F_2\,\sigma &= (\{2\}, \unicode{x00D8}) \\ F_3\,\sigma &= (\{3\}, \unicode{x00D8}) \\ F_x\,\sigma &= (\unicode{x00D8},\unicode{x00D8})\qquad\text{otherwise}\end{align*}

Assume we are given the set of unknowns of interest

![]() $X = \{1\}$

. Then,

$X = \{1\}$

. Then,

![]() $(\sigma,\textit{dom})$

with

$(\sigma,\textit{dom})$

with

![]() $\textit{dom}=\{1,2\}$

,

$\textit{dom}=\{1,2\}$

,

![]() $\sigma\,1 = \{2\}$

and

$\sigma\,1 = \{2\}$

and

![]() $\sigma\,2= \{2\}$

is the least partial post-solution with

$\sigma\,2= \{2\}$

is the least partial post-solution with

![]() $X\subseteq\textit{dom}$

. For

$X\subseteq\textit{dom}$

. For

![]() $X' = \{1,3\}$

on the other hand, the least partial solution

$X' = \{1,3\}$

on the other hand, the least partial solution

![]() $(\sigma',\textit{dom}')$

with

$(\sigma',\textit{dom}')$

with

![]() $X'\subseteq\textit{dom}'$

is given by

$X'\subseteq\textit{dom}'$

is given by

![]() $\textit{dom}'=\{1,2,3\}$

and

$\textit{dom}'=\{1,2,3\}$

and

![]() $\sigma'\,1 = \{2\}, \sigma'\,2 = \{2\}$

and

$\sigma'\,1 = \{2\}, \sigma'\,2 = \{2\}$

and

![]() $\sigma'\,3 = \{3\}$

. For monotonic systems such as those used for representing the collecting semantics, and any set

$\sigma'\,3 = \{3\}$

. For monotonic systems such as those used for representing the collecting semantics, and any set

![]() $X\subseteq\mathcal{X}$

of unknowns of interest, there always exists a least partial solution comprising X.

$X\subseteq\mathcal{X}$

of unknowns of interest, there always exists a least partial solution comprising X.

Proposition 1 Assume that the system

![]() $\mathbb{E}$

of equations is monotonic. Then for each subset

$\mathbb{E}$

of equations is monotonic. Then for each subset

![]() $X\subseteq{\mathcal{X}}$

of unknowns, consider the set P of all partial post-solutions

$X\subseteq{\mathcal{X}}$

of unknowns, consider the set P of all partial post-solutions

![]() $(\sigma',{\textit{dom}}')\in (({\mathcal{X}}\to \mathbb{D})\times \mathcal{P} (\mathcal{X}))$

so that

$(\sigma',{\textit{dom}}')\in (({\mathcal{X}}\to \mathbb{D})\times \mathcal{P} (\mathcal{X}))$

so that

![]() $X\subseteq{\textit{dom}}'$

. Then, P has a unique least element

$X\subseteq{\textit{dom}}'$

. Then, P has a unique least element

![]() $(\sigma{}_X,{\textit{dom}}_X)$

. In particular,

$(\sigma{}_X,{\textit{dom}}_X)$

. In particular,

![]() $\sigma{}_X\,x'=\bot$

for all

$\sigma{}_X\,x'=\bot$

for all

![]() $x'\not\in\textit{dom}{}_X$

.

$x'\not\in\textit{dom}{}_X$

.

Proof. Consider the complete lattice

![]() $\mathbb{L} = (\mathcal{X}\to\mathbb{D})\times \mathcal{P} (\mathcal{X})$

. The system

$\mathbb{L} = (\mathcal{X}\to\mathbb{D})\times \mathcal{P} (\mathcal{X})$

. The system

![]() $\mathbb{E}$

defines a function

$\mathbb{E}$

defines a function

![]() $F:\mathbb{L}\to\mathbb{L}$

by

$F:\mathbb{L}\to\mathbb{L}$

by

![]() $F(\sigma{}_1,X_1) = (\sigma{}_2,X_2)$

where

$F(\sigma{}_1,X_1) = (\sigma{}_2,X_2)$

where

Since each right-hand side

![]() $F_x$

in

$F_x$

in

![]() $\mathbb{E}$

is monotonic, so is the function F. Moreover,

$\mathbb{E}$

is monotonic, so is the function F. Moreover,

![]() $(\sigma,\textit{dom})$

is a post-fixpoint of F iff

$(\sigma,\textit{dom})$

is a post-fixpoint of F iff

![]() $(\sigma,\textit{dom})$

is a partial post-solution of

$(\sigma,\textit{dom})$

is a partial post-solution of

![]() $\mathbb{E}$

with

$\mathbb{E}$

with

![]() $X\subseteq\textit{dom}$

. By the fixpoint theorem of Knaster-Tarski, F has a unique least post-fixpoint – which happens to be also the least fixpoint of F.

$X\subseteq\textit{dom}$

. By the fixpoint theorem of Knaster-Tarski, F has a unique least post-fixpoint – which happens to be also the least fixpoint of F.

For a given set X, there thus is a least partial solution of

![]() $\mathbb{E}$

with a least domain

$\mathbb{E}$

with a least domain

![]() $\textit{dom}{}_X$

comprising X. Moreover for

$\textit{dom}{}_X$

comprising X. Moreover for

![]() $X\subseteq X'$

and least partial solutions

$X\subseteq X'$

and least partial solutions

![]() $(\sigma_X,\textit{dom}{}_X),(\sigma_{X'},\textit{dom}{}_{X'})$

comprising X and X’, respectively, we have

$(\sigma_X,\textit{dom}{}_X),(\sigma_{X'},\textit{dom}{}_{X'})$

comprising X and X’, respectively, we have

![]() $\textit{dom}{}_{X}\subseteq\textit{dom}{}_{X'}$

and

$\textit{dom}{}_{X}\subseteq\textit{dom}{}_{X'}$

and

![]() $\sigma_{X'}\,x = \sigma_X\,x$

for all

$\sigma_{X'}\,x = \sigma_X\,x$

for all

![]() $x\in\textit{dom}{}_X$

. In particular, this means for the least total solution

$x\in\textit{dom}{}_X$

. In particular, this means for the least total solution

![]() $\sigma$

that

$\sigma$

that

![]() $\sigma_X\,x = \sigma\,x$

whenever

$\sigma_X\,x = \sigma\,x$

whenever

![]() $x\in\textit{dom}{}_X$

.

$x\in\textit{dom}{}_X$

.

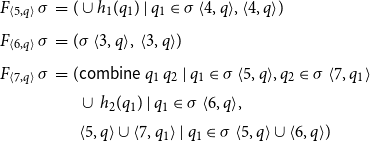

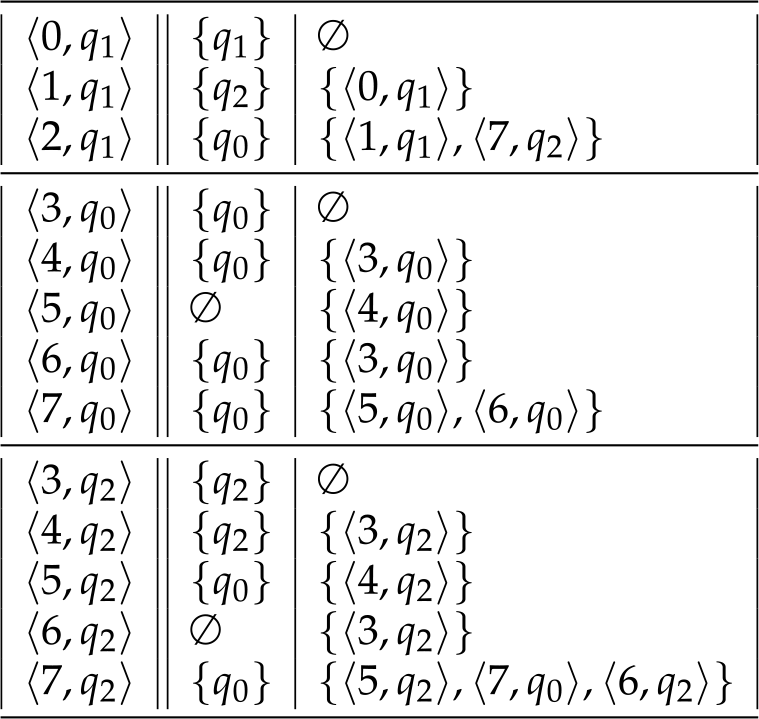

Example 4. Consider the program from Example 2. Assume that

![]() $\mathcal{Q}=\{q_0,q_1,q_2\}$

where the set of initial calling contexts is given by

$\mathcal{Q}=\{q_0,q_1,q_2\}$

where the set of initial calling contexts is given by

![]() $\{q_1\}$

. Accordingly, the set of unknowns of interest is given by

$\{q_1\}$

. Accordingly, the set of unknowns of interest is given by

![]() $X=\{{\langle2,q_1\rangle}\}$

(2 being the return point of main). Assume that the functions

$X=\{{\langle2,q_1\rangle}\}$

(2 being the return point of main). Assume that the functions

![]() $h_1,h_2$

are given by

$h_1,h_2$

are given by

while the function combine always returns its second argument, that is,

![]() $\textsf{combine}\,q\,q' = q'$

.

$\textsf{combine}\,q\,q' = q'$

.

Let

![]() $\textit{dom}$

denote the set

$\textit{dom}$

denote the set

\begin{equation*}\begin{array}{l}\{ {\langle0,q_1\rangle}, {\langle1,q_1\rangle}, {\langle2,q_1\rangle}, \\\;\;{\langle3,q_0\rangle}, {\langle4,q_0\rangle}, {\langle5,q_0\rangle}, {\langle6,q_0\rangle}, {\langle7,q_0\rangle}, \\\;\;{\langle3,q_2\rangle}, {\langle4,q_2\rangle}, {\langle5,q_2\rangle}, {\langle6,q_2\rangle}, {\langle7,q_2\rangle} \}. \\\end{array}\end{equation*}

\begin{equation*}\begin{array}{l}\{ {\langle0,q_1\rangle}, {\langle1,q_1\rangle}, {\langle2,q_1\rangle}, \\\;\;{\langle3,q_0\rangle}, {\langle4,q_0\rangle}, {\langle5,q_0\rangle}, {\langle6,q_0\rangle}, {\langle7,q_0\rangle}, \\\;\;{\langle3,q_2\rangle}, {\langle4,q_2\rangle}, {\langle5,q_2\rangle}, {\langle6,q_2\rangle}, {\langle7,q_2\rangle} \}. \\\end{array}\end{equation*}

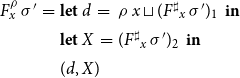

Together with the assignment

![]() $\sigma : \textit{dom}\to \mathcal{P} (\mathcal{Q})\times \mathcal{P} (\mathcal{X})$

as shown in Figure 2, we obtain the least partial solution of the given system of equations which we refer to as the collecting semantics of the program. We remark that

$\sigma : \textit{dom}\to \mathcal{P} (\mathcal{Q})\times \mathcal{P} (\mathcal{X})$

as shown in Figure 2, we obtain the least partial solution of the given system of equations which we refer to as the collecting semantics of the program. We remark that

![]() $\textit{dom}$

only has calls of procedure p for the calling contexts

$\textit{dom}$

only has calls of procedure p for the calling contexts

![]() $q_0$

and

$q_0$

and

![]() $q_2$

.

$q_2$

.

Figure 2. The collecting semantics.

3. Abstract Systems of Equations

Systems of abstract equations are meant to provide sound information for concrete systems. In order to distinguish abstract systems from concrete ones, we usually use superscripts

![]() $\sharp$

at all corresponding entities. Abstract systems of equations differ from concrete ones in several aspects:

$\sharp$

at all corresponding entities. Abstract systems of equations differ from concrete ones in several aspects:

-

Right-hand side functions need no longer be monotonic.

-

Right-hand side functions should be effectively computable and thus may access the values only of finitely many other unknowns in the system (which need not be the case for concrete systems).

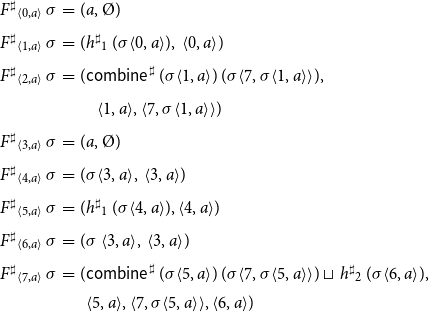

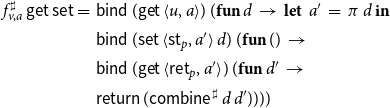

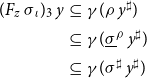

Example 5. Consider again the program from Figure 1 consisting of the procedures main and p. Assume that the abstract domain is given by some complete lattice

![]() $\mathbb{D}$

where the functions

$\mathbb{D}$

where the functions

![]() $h^\sharp_1 , h^\sharp_2 : \mathbb{D}\to\mathbb{D}$

represent the semantics of basic computation steps. The abstract semantics for the program provides an abstract state in

$h^\sharp_1 , h^\sharp_2 : \mathbb{D}\to\mathbb{D}$

represent the semantics of basic computation steps. The abstract semantics for the program provides an abstract state in

![]() $\mathbb{D}$

for each pair

$\mathbb{D}$

for each pair

![]() ${\langle u,a\rangle}$

(u program point from

${\langle u,a\rangle}$

(u program point from

![]() $\{ 0,\ldots,7\}$

, a possible abstract calling context from

$\{ 0,\ldots,7\}$

, a possible abstract calling context from

![]() $\mathbb{D}$

). The right-hand sides

$\mathbb{D}$

). The right-hand sides

![]() $F^\sharp{}_{{\langle u,a\rangle}}$

then are given by:

$F^\sharp{}_{{\langle u,a\rangle}}$

then are given by:

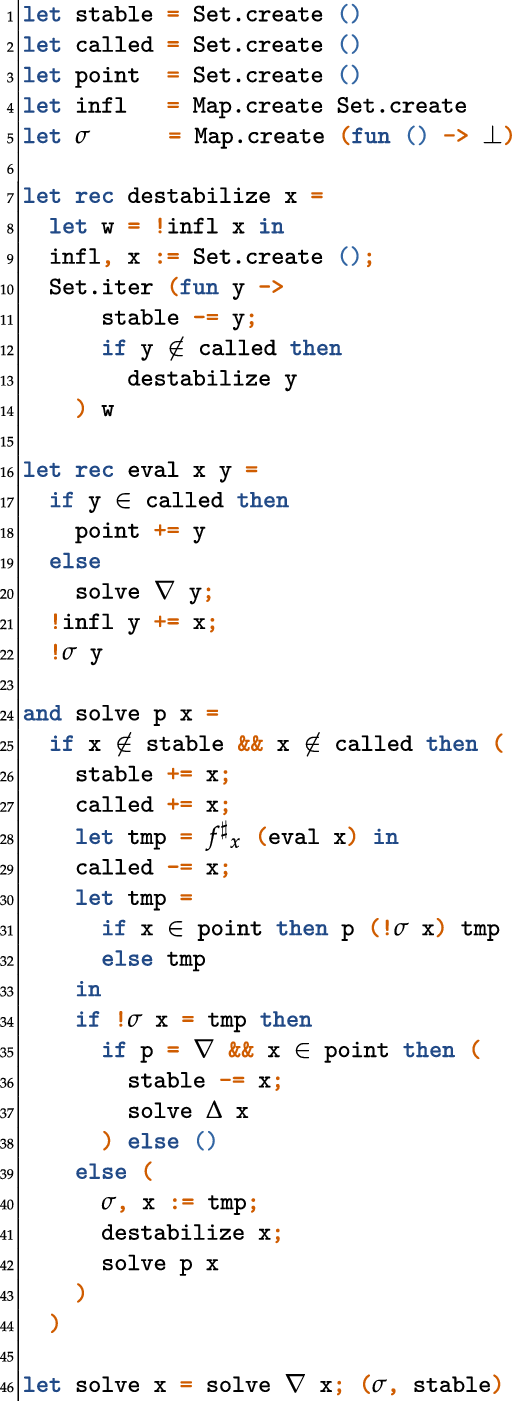

\begin{equation*}\begin{array}{lll}F^\sharp{}_{{\langle0,a\rangle}}\,\sigma &=& (a,\unicode{x00D8}) \\[0.5ex]F^\sharp{}_{{\langle1,a\rangle}}\,\sigma &=& (h^\sharp{}_1\,(\sigma{\langle0,a\rangle}),\;{{\langle0,a\rangle}}) \\[0.5ex]F^\sharp{}_{{\langle2,a\rangle}}\,\sigma &=& (\textsf{combine}^\sharp\, (\sigma{\langle1,a\rangle})\, (\sigma{\langle7,\sigma{\langle1,a\rangle}\rangle}),\\[0.5ex] & & \quad{{\langle1,a\rangle},{\langle7,\sigma{\langle1,a\rangle}\rangle}}) \\[0.5ex]F^\sharp{}_{{\langle3,a\rangle}}\,\sigma &=& (a,\unicode{x00D8}) \\[0.5ex]F^\sharp{}_{{\langle4,a\rangle}}\,\sigma &=& (\sigma{\langle3,a\rangle},\;{{\langle3,a\rangle}}) \\[0.5ex]F^\sharp{}_{{\langle5,a\rangle}}\,\sigma &=& (h^\sharp{}_1\,(\sigma{\langle4,a\rangle}),{{\langle4,a\rangle}}) \\[0.5ex]F^\sharp{}_{{\langle6,a\rangle}}\,\sigma &=& (\sigma\,{\langle3,a\rangle},\,{{\langle3,a\rangle}}) \\[0.5ex]F^\sharp{}_{{\langle7,a\rangle}}\,\sigma &=& (\textsf{combine}^\sharp\,(\sigma{\langle5,a\rangle})\, (\sigma{\langle7, \sigma{\langle5,a\rangle}\rangle}) \sqcup\,h^\sharp{}_2\,(\sigma{\langle6,a\rangle}), \\ & &\phantom{(} {{\langle5,a\rangle}, {\langle7,\sigma{\langle5,a\rangle}\rangle}, {\langle6,a\rangle}}) \end{array}\end{equation*}

\begin{equation*}\begin{array}{lll}F^\sharp{}_{{\langle0,a\rangle}}\,\sigma &=& (a,\unicode{x00D8}) \\[0.5ex]F^\sharp{}_{{\langle1,a\rangle}}\,\sigma &=& (h^\sharp{}_1\,(\sigma{\langle0,a\rangle}),\;{{\langle0,a\rangle}}) \\[0.5ex]F^\sharp{}_{{\langle2,a\rangle}}\,\sigma &=& (\textsf{combine}^\sharp\, (\sigma{\langle1,a\rangle})\, (\sigma{\langle7,\sigma{\langle1,a\rangle}\rangle}),\\[0.5ex] & & \quad{{\langle1,a\rangle},{\langle7,\sigma{\langle1,a\rangle}\rangle}}) \\[0.5ex]F^\sharp{}_{{\langle3,a\rangle}}\,\sigma &=& (a,\unicode{x00D8}) \\[0.5ex]F^\sharp{}_{{\langle4,a\rangle}}\,\sigma &=& (\sigma{\langle3,a\rangle},\;{{\langle3,a\rangle}}) \\[0.5ex]F^\sharp{}_{{\langle5,a\rangle}}\,\sigma &=& (h^\sharp{}_1\,(\sigma{\langle4,a\rangle}),{{\langle4,a\rangle}}) \\[0.5ex]F^\sharp{}_{{\langle6,a\rangle}}\,\sigma &=& (\sigma\,{\langle3,a\rangle},\,{{\langle3,a\rangle}}) \\[0.5ex]F^\sharp{}_{{\langle7,a\rangle}}\,\sigma &=& (\textsf{combine}^\sharp\,(\sigma{\langle5,a\rangle})\, (\sigma{\langle7, \sigma{\langle5,a\rangle}\rangle}) \sqcup\,h^\sharp{}_2\,(\sigma{\langle6,a\rangle}), \\ & &\phantom{(} {{\langle5,a\rangle}, {\langle7,\sigma{\langle5,a\rangle}\rangle}, {\langle6,a\rangle}}) \end{array}\end{equation*}

Corresponding to the function combine required by the collecting semantics, the auxiliary function

![]() $\textsf{combine}^\sharp:\mathbb{D}\to\mathbb{D}\to\mathbb{D}$

determines the abstract program state after a call from the abstract state before the call and the abstract state at the end of the procedure body.

$\textsf{combine}^\sharp:\mathbb{D}\to\mathbb{D}\to\mathbb{D}$

determines the abstract program state after a call from the abstract state before the call and the abstract state at the end of the procedure body.

Right-hand functions in practically given abstract systems of equations, however, usually do not explicitly provide a set of unknowns onto which the evaluation depends. Instead, the latter set is given implicitly via the implementation of the function computing the value for the left-hand side unknown. If necessary, this set must be determined by the solver, for example, as in case of TD, by keeping track of the unknowns accessed during the evaluation of the function.

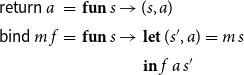

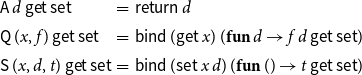

Evaluation of the right-hand side function during solving may thus affect the internal state of the solver. Such operational behavior can conveniently be made explicit by means of state transformer monads. For a set S of (solver) states, the state transformer monad

![]() $\mathcal{M}_S(A)$

for values of type A consists of all functions

$\mathcal{M}_S(A)$

for values of type A consists of all functions

![]() $S\to S\times A$

. As a special case of a monad, the state transformer monad

$S\to S\times A$

. As a special case of a monad, the state transformer monad

![]() $\mathcal{M}_S(A)$

provides functions

$\mathcal{M}_S(A)$

provides functions

![]() $\textsf{return}: A \to \mathcal{M}_S(A)$

and

$\textsf{return}: A \to \mathcal{M}_S(A)$

and

![]() $\textsf{bind}: \mathcal{M}_S(A) \to (A\to\mathcal{M}_S(B)) \to \mathcal{M}_S(B)$

. These are defined by

$\textsf{bind}: \mathcal{M}_S(A) \to (A\to\mathcal{M}_S(B)) \to \mathcal{M}_S(B)$

. These are defined by

\begin{equation*}\begin{array}{lll} \textsf{return}\,a &=& \textbf{fun}\,s\to (s,a) \\ \textsf{bind}\,m\,f &=& \textbf{fun}\,s\to \begin{array}[t]{l} \textbf{let}\,(s',a) = m\,s \\ \textbf{in}\, f\,a\,s' \end{array}\end{array}\end{equation*}

\begin{equation*}\begin{array}{lll} \textsf{return}\,a &=& \textbf{fun}\,s\to (s,a) \\ \textsf{bind}\,m\,f &=& \textbf{fun}\,s\to \begin{array}[t]{l} \textbf{let}\,(s',a) = m\,s \\ \textbf{in}\, f\,a\,s' \end{array}\end{array}\end{equation*}

The solvers we consider only take actions when the current values of unknowns are accessed during the evaluation of right-hand sides. In the monadic formulation, the right-hand side functions

![]() $f^\sharp_x, x\in\mathcal{X}$

of the abstract system of equations

$f^\sharp_x, x\in\mathcal{X}$

of the abstract system of equations

![]() $\mathbb{E}^\sharp$

therefore are of type

$\mathbb{E}^\sharp$

therefore are of type

![]() $(\mathcal{X}\to\mathcal{M}(\mathbb{D}))\to\mathcal{M}(\mathbb{D})$

for any monad

$(\mathcal{X}\to\mathcal{M}(\mathbb{D}))\to\mathcal{M}(\mathbb{D})$

for any monad

![]() $\mathcal{M}$

, that is, are parametric in

$\mathcal{M}$

, that is, are parametric in

![]() $\mathcal{M}$

(the system of equations should be ignorant of the internals of the solver!). Such functions

$\mathcal{M}$

(the system of equations should be ignorant of the internals of the solver!). Such functions

![]() $f^\sharp{}_x$

have been called pure in Karbyshev (Reference Karbyshev2013).

$f^\sharp{}_x$

have been called pure in Karbyshev (Reference Karbyshev2013).

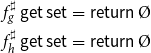

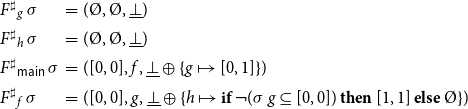

Example 6. For

![]() $\sigma^\sharp:\mathcal{X}\to\mathcal{M}_S(\mathbb{D})$

, the right-hand side functions in the monadic formulation of the abstract system of equations for the program from Figure 1 now are given by

$\sigma^\sharp:\mathcal{X}\to\mathcal{M}_S(\mathbb{D})$

, the right-hand side functions in the monadic formulation of the abstract system of equations for the program from Figure 1 now are given by

\begin{equation*}\begin{array}{lll}f^\sharp_{{\langle0,a\rangle}}\,\sigma^\sharp &=&\textsf{return}\,a \\[0.5ex]f^\sharp_{{\langle1,a\rangle}}\,\sigma &=& \textsf{bind}\,(\sigma\,{\langle0,a\rangle})\,(\textbf{fun}\,b\to \\ & & \textsf{return}\,(h^\sharp_1\,b)) \\[0.5ex]f^\sharp_{{\langle2,a\rangle}}\,\sigma &=& \textsf{bind}\,(\sigma\,{\langle1,a\rangle})\,(\textbf{fun}\,b_1\to\\ & & \textsf{bind}\,(\sigma\,{\langle7,b_1\rangle})\,(\textbf{fun} b_2 \to\\ & & \textsf{return}\,(\textsf{combine}^\sharp\,b_1\,b_2))) \\[0.5ex]f^\sharp_{{\langle3,a\rangle}}\,\sigma &=& \textsf{return}\,a \\[0.5ex]f^\sharp_{{\langle4,a\rangle}}\,\sigma &=& \sigma\,{\langle3,a\rangle} \\[0.5ex]f^\sharp_{{\langle5,a\rangle}}\,\sigma &=& \textsf{bind}\,(\sigma\,{\langle4,a\rangle})\,(\textbf{fun}\,b\to \\ & & \textsf{return}\,(h^\sharp_1\,b)) \\[0.5ex]f^\sharp_{{\langle6,a\rangle}}\,\sigma &=& \sigma\,{\langle3,a\rangle} \\[0.5ex]f^\sharp_{{\langle7,a\rangle}}\,\sigma &=& \textsf{bind}\,(\sigma\,{\langle5,a\rangle})\,(\textbf{fun}\,b_1\to \\ & & \textsf{bind}\,(\sigma\,{\langle7,b_1\rangle})\,(\textbf{fun}\,b_2\to \\ & & \textsf{bind}\,(\sigma\,{\langle6,a\rangle})\,(\textbf{fun}\,b_3\to \\ & & \textsf{return}\,(\textsf{combine}^\sharp\,b_1\,b2\;\sqcup\;h^\sharp_2\,b_3))))\end{array}\end{equation*}

\begin{equation*}\begin{array}{lll}f^\sharp_{{\langle0,a\rangle}}\,\sigma^\sharp &=&\textsf{return}\,a \\[0.5ex]f^\sharp_{{\langle1,a\rangle}}\,\sigma &=& \textsf{bind}\,(\sigma\,{\langle0,a\rangle})\,(\textbf{fun}\,b\to \\ & & \textsf{return}\,(h^\sharp_1\,b)) \\[0.5ex]f^\sharp_{{\langle2,a\rangle}}\,\sigma &=& \textsf{bind}\,(\sigma\,{\langle1,a\rangle})\,(\textbf{fun}\,b_1\to\\ & & \textsf{bind}\,(\sigma\,{\langle7,b_1\rangle})\,(\textbf{fun} b_2 \to\\ & & \textsf{return}\,(\textsf{combine}^\sharp\,b_1\,b_2))) \\[0.5ex]f^\sharp_{{\langle3,a\rangle}}\,\sigma &=& \textsf{return}\,a \\[0.5ex]f^\sharp_{{\langle4,a\rangle}}\,\sigma &=& \sigma\,{\langle3,a\rangle} \\[0.5ex]f^\sharp_{{\langle5,a\rangle}}\,\sigma &=& \textsf{bind}\,(\sigma\,{\langle4,a\rangle})\,(\textbf{fun}\,b\to \\ & & \textsf{return}\,(h^\sharp_1\,b)) \\[0.5ex]f^\sharp_{{\langle6,a\rangle}}\,\sigma &=& \sigma\,{\langle3,a\rangle} \\[0.5ex]f^\sharp_{{\langle7,a\rangle}}\,\sigma &=& \textsf{bind}\,(\sigma\,{\langle5,a\rangle})\,(\textbf{fun}\,b_1\to \\ & & \textsf{bind}\,(\sigma\,{\langle7,b_1\rangle})\,(\textbf{fun}\,b_2\to \\ & & \textsf{bind}\,(\sigma\,{\langle6,a\rangle})\,(\textbf{fun}\,b_3\to \\ & & \textsf{return}\,(\textsf{combine}^\sharp\,b_1\,b2\;\sqcup\;h^\sharp_2\,b_3))))\end{array}\end{equation*}

According to the considerations in Karbyshev (Reference Karbyshev2013), each pure function f of type

![]() $(\mathcal{X}\to\mathcal{M}(\mathbb{D}))\to\mathcal{M}(\mathbb{D})$

equals the semantics

$(\mathcal{X}\to\mathcal{M}(\mathbb{D}))\to\mathcal{M}(\mathbb{D})$

equals the semantics

![]() ${t}$

of some computation tree t. Computation trees make explicit in which order the values of unknowns are queried when computing the result values of a function. The set of all computation trees (over the unknowns

${t}$

of some computation tree t. Computation trees make explicit in which order the values of unknowns are queried when computing the result values of a function. The set of all computation trees (over the unknowns

![]() $\mathcal{X}^\sharp$

and the set of values

$\mathcal{X}^\sharp$

and the set of values

![]() $\mathbb{D}$

) is the least set

$\mathbb{D}$

) is the least set

![]() $\mathcal{T}$

with

$\mathcal{T}$

with

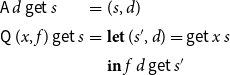

The computation tree

![]() $\textsf{A}\,d$

immediately returns the answer d, while the computation tree

$\textsf{A}\,d$

immediately returns the answer d, while the computation tree

![]() $\textsf{Q}\,(x,f)$

queries the value of the unknown x in order to apply the continuation f to the obtained value.

$\textsf{Q}\,(x,f)$

queries the value of the unknown x in order to apply the continuation f to the obtained value.

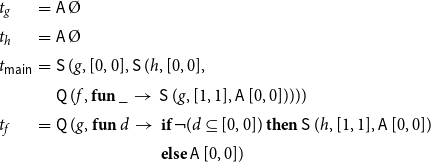

Example 7 Consider again the program from Figure 1 consisting of the procedures main and p and the abstract system of equations as provided in Example 6. The computation trees

![]() $t_{{\langle u,a\rangle}}$

for the right-hand side functions

$t_{{\langle u,a\rangle}}$

for the right-hand side functions

![]() $f^\sharp_{{\langle u,a\rangle}}$

then are given by:

$f^\sharp_{{\langle u,a\rangle}}$

then are given by:

\begin{equation*}\begin{array}{lll}t_{{\langle0,a\rangle}} &=& \textsf{A}\,a \\[0.5ex]t_{{\langle1,a\rangle}} &=& \textsf{Q}\,({\langle0,a\rangle},\textbf{fun}\,b\to \textsf{A}\,(h^\sharp{}_1\,b)) \\[0.5ex]t_{{\langle2,a\rangle}} &=& \textsf{Q}\,({\langle1,a\rangle},\textbf{fun}\,b\to \\[0.5ex] & & \textsf{Q}\,({\langle7,b\rangle},\textbf{fun}\,b'\to \\[0.5ex] & & \textsf{A}\,(\textsf{combine}^\sharp\,b\,b'))) \\[0.5ex]t_{{\langle3,a\rangle}} &=& \textsf{A}\,a \\[0.5ex]t_{{\langle4,a\rangle}} &=& \textsf{Q}\,({\langle3,a\rangle},\textbf{fun}\,b\to \textsf{A}\,b) \\[0.5ex]t_{{\langle5,a\rangle}} &=& \textsf{Q}\,({\langle4,a\rangle},\textbf{fun}\,b\to \textsf{A}\,(h^\sharp{}_1\,b)) \\[0.5ex]t_{{\langle6,a\rangle}} &=& \textsf{Q}\,({\langle3,a\rangle},\textbf{fun}\,b\to \textsf{A}\,b) \\[0.5ex]t_{{\langle7,a\rangle}} &=& \textsf{Q}\,({\langle5,a\rangle},\textbf{fun}\,b\to \\[0.5ex] & & \textsf{Q}\,({\langle7,b\rangle},\textbf{fun}\,b'\to \\[0.5ex] & & \textsf{Q}\,({\langle6,a\rangle},\textbf{fun}\,b''\to\\[0.5ex] & & \textsf{A}\,(\textsf{combine}^\sharp\,b\,b'\sqcup h^\sharp{}_2\,b''))))\end{array}\end{equation*}

\begin{equation*}\begin{array}{lll}t_{{\langle0,a\rangle}} &=& \textsf{A}\,a \\[0.5ex]t_{{\langle1,a\rangle}} &=& \textsf{Q}\,({\langle0,a\rangle},\textbf{fun}\,b\to \textsf{A}\,(h^\sharp{}_1\,b)) \\[0.5ex]t_{{\langle2,a\rangle}} &=& \textsf{Q}\,({\langle1,a\rangle},\textbf{fun}\,b\to \\[0.5ex] & & \textsf{Q}\,({\langle7,b\rangle},\textbf{fun}\,b'\to \\[0.5ex] & & \textsf{A}\,(\textsf{combine}^\sharp\,b\,b'))) \\[0.5ex]t_{{\langle3,a\rangle}} &=& \textsf{A}\,a \\[0.5ex]t_{{\langle4,a\rangle}} &=& \textsf{Q}\,({\langle3,a\rangle},\textbf{fun}\,b\to \textsf{A}\,b) \\[0.5ex]t_{{\langle5,a\rangle}} &=& \textsf{Q}\,({\langle4,a\rangle},\textbf{fun}\,b\to \textsf{A}\,(h^\sharp{}_1\,b)) \\[0.5ex]t_{{\langle6,a\rangle}} &=& \textsf{Q}\,({\langle3,a\rangle},\textbf{fun}\,b\to \textsf{A}\,b) \\[0.5ex]t_{{\langle7,a\rangle}} &=& \textsf{Q}\,({\langle5,a\rangle},\textbf{fun}\,b\to \\[0.5ex] & & \textsf{Q}\,({\langle7,b\rangle},\textbf{fun}\,b'\to \\[0.5ex] & & \textsf{Q}\,({\langle6,a\rangle},\textbf{fun}\,b''\to\\[0.5ex] & & \textsf{A}\,(\textsf{combine}^\sharp\,b\,b'\sqcup h^\sharp{}_2\,b''))))\end{array}\end{equation*}

The semantics of a computation tree t is a function

![]() ${t}:(\mathcal{X}^\sharp\to\mathcal{M}(\mathbb{D}))\to \mathcal{M}(\mathbb{D})$

where for

${t}:(\mathcal{X}^\sharp\to\mathcal{M}(\mathbb{D}))\to \mathcal{M}(\mathbb{D})$

where for

![]() $\textsf{get}:\mathcal{X}\to\mathcal{M}(\mathbb{D})$

,

$\textsf{get}:\mathcal{X}\to\mathcal{M}(\mathbb{D})$

,

In the particular case that

![]() $\mathcal{M}$

is the state transformer monad for a set of states S, we have:

$\mathcal{M}$

is the state transformer monad for a set of states S, we have:

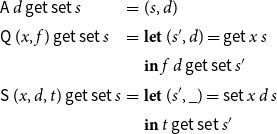

\begin{equation*}\begin{array}{lll} {\textsf{A}\,d}\,\textsf{get}\,s &=& (s,d) \\ {\textsf{Q}\,(x,f)}\,\textsf{get}\,s &=& \textbf{let}\;(s',d) = \textsf{get}\,x\,s \\ & & \textbf{in}\;{f\,d}\,\textsf{get}\,s' \\\end{array}\end{equation*}

\begin{equation*}\begin{array}{lll} {\textsf{A}\,d}\,\textsf{get}\,s &=& (s,d) \\ {\textsf{Q}\,(x,f)}\,\textsf{get}\,s &=& \textbf{let}\;(s',d) = \textsf{get}\,x\,s \\ & & \textbf{in}\;{f\,d}\,\textsf{get}\,s' \\\end{array}\end{equation*}

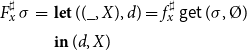

When reasoning about (partial post-)solutions of abstract systems of equations, we prefer to have right-hand side functions where (a superset of) the set of accessed unknowns is explicit, as we used for concrete systems of equations. These functions, however, can be recovered from right-hand side functions in monadic form, as we indicate now.

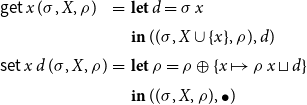

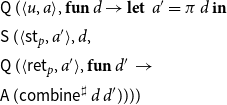

One instance of state transformer monads is a monad which tracks the variables accessed during the evaluation. Consider the set of states

![]() $S = (\mathcal{X}^\sharp\to\mathbb{D})\times \mathcal{P} ({\mathcal{X}^\sharp})$

together with the function

$S = (\mathcal{X}^\sharp\to\mathbb{D})\times \mathcal{P} ({\mathcal{X}^\sharp})$

together with the function

Proposition 2. For a mapping

![]() $\sigma : \mathcal{X}^\sharp\to\mathbb{D}$

and

$\sigma : \mathcal{X}^\sharp\to\mathbb{D}$

and

![]() $s=(\sigma,\unicode{x00D8})$

, assume that

$s=(\sigma,\unicode{x00D8})$

, assume that

![]() ${t}\;\textsf{get}\;s = (s_1,d)$

. Then for

${t}\;\textsf{get}\;s = (s_1,d)$

. Then for

![]() $s_1=(\sigma{}_1,X)$

the following holds:

$s_1=(\sigma{}_1,X)$

the following holds:

-

1.

$\sigma=\sigma{}_1$

;

$\sigma=\sigma{}_1$

; -

2. Assume that

$\sigma':\mathcal{X}^\sharp\to\mathbb{D}$

is another mapping and

$\sigma':\mathcal{X}^\sharp\to\mathbb{D}$

is another mapping and

$s'=(\sigma',\unicode{x00D8})$

. Let

$s'=(\sigma',\unicode{x00D8})$

. Let

${t}\;\textsf{get}\;s' = ((\sigma',X'),d')$

. If

${t}\;\textsf{get}\;s' = ((\sigma',X'),d')$

. If

$\sigma'$

agrees with

$\sigma'$

agrees with

$\sigma$

on X, that is,

$\sigma$

on X, that is,

$\sigma|_X = \sigma'|_X$

, then

$\sigma|_X = \sigma'|_X$

, then

$X'=X$

and

$X'=X$

and

$d'=d$

holds.

$d'=d$

holds.

We strengthen the statement by claiming that the conclusions also hold when s and s’ are given by

![]() $(\sigma,X_0)$

and

$(\sigma,X_0)$

and

![]() $(\sigma',X_0)$

, respectively, for the same set

$(\sigma',X_0)$

, respectively, for the same set

![]() $X_0$

. Then, the proof is by induction on the structure of t.

$X_0$

. Then, the proof is by induction on the structure of t.

Now assume that for each abstract unknown

![]() $x\in\mathcal{X}^\sharp$

, the system

$x\in\mathcal{X}^\sharp$

, the system

![]() $\mathbb{E}^\sharp$

provides us with a right-hand side function

$\mathbb{E}^\sharp$

provides us with a right-hand side function

![]() $f^\sharp_x:(\mathcal{X}\to\mathcal{M}(\mathbb{D}))\to\mathcal{M}(\mathbb{D})$

. Then, the elaborated abstract right-hand side function

$f^\sharp_x:(\mathcal{X}\to\mathcal{M}(\mathbb{D}))\to\mathcal{M}(\mathbb{D})$

. Then, the elaborated abstract right-hand side function

![]() $F_x^\sharp : (\mathcal{X}^\sharp \to \mathbb{D}) \to \mathbb{D} \times \mathcal{P} ({\mathcal{X}^\sharp})$

of x is given by:

$F_x^\sharp : (\mathcal{X}^\sharp \to \mathbb{D}) \to \mathbb{D} \times \mathcal{P} ({\mathcal{X}^\sharp})$

of x is given by:

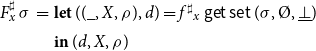

\begin{equation*}\begin{array}{lll}F_x^\sharp\,\sigma &=& \textbf{let}\;((\_,X),d) = f^\sharp_x\,\textsf{get}\,(\sigma,\unicode{x00D8}) \\& & \textbf{in}\; (d,X)\end{array}\end{equation*}

\begin{equation*}\begin{array}{lll}F_x^\sharp\,\sigma &=& \textbf{let}\;((\_,X),d) = f^\sharp_x\,\textsf{get}\,(\sigma,\unicode{x00D8}) \\& & \textbf{in}\; (d,X)\end{array}\end{equation*}

In fact, the explicit right-hand side functions

![]() $F^\sharp_{{\langle u,a\rangle}}$

of Example 5 are obtained in this way from the functions

$F^\sharp_{{\langle u,a\rangle}}$

of Example 5 are obtained in this way from the functions

![]() $f^\sharp_{{\langle u,a\rangle}}$

of Example 6.

$f^\sharp_{{\langle u,a\rangle}}$

of Example 6.

In order to relate the abstract with a corresponding concrete system of equations, we assume that there is a Galois connection (Cousot and Cousot Reference Cousot, Cousot, Graham, Harrison and Sethi1977) between the complete lattices

![]() $\mathcal{P} (\mathcal{Q})$

and

$\mathcal{P} (\mathcal{Q})$

and

![]() $\mathbb{D}$

, that is, monotonic mappings

$\mathbb{D}$

, that is, monotonic mappings

![]() $\alpha : \mathcal{P} (\mathcal{Q})\to\mathbb{D}$

(the abstraction) and

$\alpha : \mathcal{P} (\mathcal{Q})\to\mathbb{D}$

(the abstraction) and

![]() $\gamma : \mathbb{D}\to \mathcal{P} (\mathcal{Q})$

(the concretization) so that

$\gamma : \mathbb{D}\to \mathcal{P} (\mathcal{Q})$

(the concretization) so that

holds for all

![]() $Q\in\mathcal{P} (\mathcal{Q})$

and

$Q\in\mathcal{P} (\mathcal{Q})$

and

![]() $a\in\mathbb{D}$

.

$a\in\mathbb{D}$

.

In general, the sets of unknowns of the concrete system to be analyzed and the corresponding abstract system need not coincide. For interprocedural context-sensitive analysis, for example, the set of concrete unknowns is given by the set of all pairs

![]() ${\langle{u,q}\rangle}$

where u is a program point and

${\langle{u,q}\rangle}$

where u is a program point and

![]() $q\in\mathcal{Q}$

is a program state. The set of abstract unknowns are of the same form. The second components of pairs, however, now represent abstract calling contexts. Therefore, we assume that we are given a description relation

$q\in\mathcal{Q}$

is a program state. The set of abstract unknowns are of the same form. The second components of pairs, however, now represent abstract calling contexts. Therefore, we assume that we are given a description relation

![]() $\mathrel{\mathcal{R}} \subseteq \mathcal{X} \times \mathcal{X}^\sharp$

between the concrete and abstract unknowns. In case of interprocedural analysis, for example, we define

$\mathrel{\mathcal{R}} \subseteq \mathcal{X} \times \mathcal{X}^\sharp$

between the concrete and abstract unknowns. In case of interprocedural analysis, for example, we define

![]() $\mathrel{\mathcal{R}}$

by

$\mathrel{\mathcal{R}}$

by

Using the concretization

![]() $\gamma$

, the description relation

$\gamma$

, the description relation

![]() $\mathrel{\mathcal{R}}$

on unknowns is extended as follows.

$\mathrel{\mathcal{R}}$

on unknowns is extended as follows.

-

For sets of unknowns

$Y\subseteq\mathcal{X}$

and

$Y\subseteq\mathcal{X}$

and

$Y^\sharp\subseteq\mathcal{X}^\sharp$

,

$Y^\sharp\subseteq\mathcal{X}^\sharp$

,

$Y\mathrel{\mathcal{R}} Y^\sharp$

iff for each

$Y\mathrel{\mathcal{R}} Y^\sharp$

iff for each

$y\in Y$

,

$y\in Y$

,

$y\mathrel{\mathcal{R}} y^\sharp$

for some

$y\mathrel{\mathcal{R}} y^\sharp$

for some

$y^\sharp\in Y^\sharp$

.

$y^\sharp\in Y^\sharp$

. -

For sets of states

$Q\in\mathcal{P} (\mathcal{Q})$

and

$Q\in\mathcal{P} (\mathcal{Q})$

and

$d\in\mathbb{D}$

,

$d\in\mathbb{D}$

,

$Q\mathrel{\mathcal{R}} d$

iff

$Q\mathrel{\mathcal{R}} d$

iff

$Q\subseteq\gamma\,d$

;

$Q\subseteq\gamma\,d$

; -

For partial assignments

$(\sigma,\textit{dom})$

and

$(\sigma,\textit{dom})$

and

$(\sigma^\sharp,\textit{dom}^\sharp)$

with

$(\sigma^\sharp,\textit{dom}^\sharp)$

with

$\sigma : \mathcal{X}\to\mathbb{D},\sigma^\sharp : \mathcal{X}^\sharp\to\mathbb{D}^\sharp$

and

$\sigma : \mathcal{X}\to\mathbb{D},\sigma^\sharp : \mathcal{X}^\sharp\to\mathbb{D}^\sharp$

and

$\textit{dom}\subseteq\mathcal{X},\textit{dom}^\sharp\subseteq\mathcal{X}^\sharp$

,

$\textit{dom}\subseteq\mathcal{X},\textit{dom}^\sharp\subseteq\mathcal{X}^\sharp$

,

$(\sigma,\textit{dom})\mathrel{\mathcal{R}}(\sigma^\sharp,\textit{dom}^\sharp)$

holds if

$(\sigma,\textit{dom})\mathrel{\mathcal{R}}(\sigma^\sharp,\textit{dom}^\sharp)$

holds if

$\textit{dom}\mathrel{\mathcal{R}}\textit{dom}^\sharp$

, and for all

$\textit{dom}\mathrel{\mathcal{R}}\textit{dom}^\sharp$

, and for all

$y\in\textit{dom}$

,

$y\in\textit{dom}$

,

$y\in\textit{dom}^\sharp$

with

$y\in\textit{dom}^\sharp$

with

$y\mathrel{\mathcal{R}} y^\sharp$

,

$y\mathrel{\mathcal{R}} y^\sharp$

,

$\sigma\,y\subseteq\gamma(\sigma^\sharp\,y^\sharp)$

.

$\sigma\,y\subseteq\gamma(\sigma^\sharp\,y^\sharp)$

. -

For (elaborated) right-hand sides

$F : (\mathcal{X}\to \mathcal{P} ({\mathcal{Q}}))\to(\mathcal{P} (\mathcal{Q})\times \mathcal{P} (\mathcal{X}))$

and

$F : (\mathcal{X}\to \mathcal{P} ({\mathcal{Q}}))\to(\mathcal{P} (\mathcal{Q})\times \mathcal{P} (\mathcal{X}))$

and

$F^\sharp : (\mathcal{X}^\sharp\to \mathbb{D})\to(\mathbb{D}\times \mathcal{P} ({\mathcal{X}^\sharp}))$

,

$F^\sharp : (\mathcal{X}^\sharp\to \mathbb{D})\to(\mathbb{D}\times \mathcal{P} ({\mathcal{X}^\sharp}))$

,

$F\mathrel{\mathcal{R}} F^\sharp$

iff

$F\mathrel{\mathcal{R}} F^\sharp$

iff

$(F\,\sigma)_1\subseteq\gamma\,(F^\sharp\,\sigma^\sharp)_1$

, and

$(F\,\sigma)_1\subseteq\gamma\,(F^\sharp\,\sigma^\sharp)_1$

, and

$(F\,\sigma)_2\mathrel{\mathcal{R}}(F^\sharp\,\sigma^\sharp)_2$

whenever

$(F\,\sigma)_2\mathrel{\mathcal{R}}(F^\sharp\,\sigma^\sharp)_2$

whenever

$(\sigma,\textit{dom})\mathrel{\mathcal{R}}(\sigma^\sharp,\textit{dom}^\sharp)$

holds for domains

$(\sigma,\textit{dom})\mathrel{\mathcal{R}}(\sigma^\sharp,\textit{dom}^\sharp)$

holds for domains

$\textit{dom},\textit{dom}^\sharp$

which are

$\textit{dom},\textit{dom}^\sharp$

which are

$(\sigma,\mathbb{E})$

-closed and

$(\sigma,\mathbb{E})$

-closed and

$(\sigma^\sharp,\mathbb{E}^\sharp)$

-closed, respectively.

$(\sigma^\sharp,\mathbb{E}^\sharp)$

-closed, respectively. -

For equation systems

$\mathbb{E} : \mathcal{X} \to ((\mathcal{X}\to \mathcal{P} ({\mathcal{Q}}))\to(\mathcal{P} (\mathcal{Q})\times \mathcal{P} (\mathcal{X})))$

and

$\mathbb{E} : \mathcal{X} \to ((\mathcal{X}\to \mathcal{P} ({\mathcal{Q}}))\to(\mathcal{P} (\mathcal{Q})\times \mathcal{P} (\mathcal{X})))$

and

$\mathbb{E}^\sharp : \mathcal{X}^\sharp \to ((\mathcal{X}^\sharp\to \mathcal{M}_S(\mathbb{D}))\to\mathcal{M}_S(\mathbb{D}))$

,

$\mathbb{E}^\sharp : \mathcal{X}^\sharp \to ((\mathcal{X}^\sharp\to \mathcal{M}_S(\mathbb{D}))\to\mathcal{M}_S(\mathbb{D}))$

,

$\mathbb{E} \mathrel{\mathcal{R}} \mathbb{E}^\sharp$

iff

$\mathbb{E} \mathrel{\mathcal{R}} \mathbb{E}^\sharp$

iff

$(\mathbb{E}\,x) \mathrel{\mathcal{R}} F^\sharp{}_{x^\sharp}$

for each

$(\mathbb{E}\,x) \mathrel{\mathcal{R}} F^\sharp{}_{x^\sharp}$

for each

$x \in \mathcal{X}$

,

$x \in \mathcal{X}$

,

$x^\sharp \in \mathcal{X}^\sharp$

, where

$x^\sharp \in \mathcal{X}^\sharp$

, where

$F^\sharp{}_{x^\sharp}$

is the elaboration of

$F^\sharp{}_{x^\sharp}$

is the elaboration of

$\mathbb{E}^\sharp\,x^\sharp$

.

$\mathbb{E}^\sharp\,x^\sharp$

.

Let

![]() $(\sigma,\textit{dom})$

be the least solution of the concrete system

$(\sigma,\textit{dom})$

be the least solution of the concrete system

![]() $\mathbb{E}$

for some set X of interesting unknowns. Let

$\mathbb{E}$

for some set X of interesting unknowns. Let

![]() $\mathbb{E}^\sharp$

denote an abstract system corresponding to

$\mathbb{E}^\sharp$

denote an abstract system corresponding to

![]() $\mathbb{E}$

and

$\mathbb{E}$

and

![]() $X^\sharp$

a set of abstract unknowns and

$X^\sharp$

a set of abstract unknowns and

![]() $\mathrel{\mathcal{R}}$

a description relation between the unknowns of

$\mathrel{\mathcal{R}}$

a description relation between the unknowns of

![]() $\mathbb{E}$

and

$\mathbb{E}$

and

![]() $\mathbb{E}^\sharp$

such that

$\mathbb{E}^\sharp$

such that

![]() $\mathbb{E}\;\mathrel{\mathcal{R}}\;\mathbb{E}^\sharp$

and

$\mathbb{E}\;\mathrel{\mathcal{R}}\;\mathbb{E}^\sharp$

and

![]() $X\;\mathrel{\mathcal{R}}\;X^\sharp$

holds. A local solver then determines for

$X\;\mathrel{\mathcal{R}}\;X^\sharp$

holds. A local solver then determines for

![]() $\mathbb{E}^\sharp$

and

$\mathbb{E}^\sharp$

and

![]() $X^\sharp$

a pair

$X^\sharp$

a pair

![]() $(\sigma^\sharp,\textit{dom}^\sharp)$

so that

$(\sigma^\sharp,\textit{dom}^\sharp)$

so that

![]() $X\subseteq\textit{dom}^\sharp$

,

$X\subseteq\textit{dom}^\sharp$

,

![]() $\textit{dom}^\sharp$

is

$\textit{dom}^\sharp$

is

![]() $\sigma^\sharp$

-closed and

$\sigma^\sharp$

-closed and

![]() $(\sigma,\textit{dom})\;\mathrel{\mathcal{R}}\;(\sigma^\sharp,\textit{dom}^\sharp)$

holds, that is, the result produced by the solver is a sound description of the least partial solution of the concrete system.

$(\sigma,\textit{dom})\;\mathrel{\mathcal{R}}\;(\sigma^\sharp,\textit{dom}^\sharp)$

holds, that is, the result produced by the solver is a sound description of the least partial solution of the concrete system.

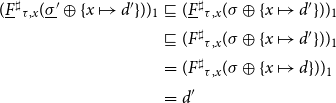

In absence of narrowing, the correctness of a solver can be proven intrinsically, that is, just by verifying that it terminates with a post-solution of the system of equations.

We first convince ourselves that the following holds:

Proposition 3. Assume that

![]() $\mathbb{E}\mathrel{\mathcal{R}}\mathbb{E}^\sharp$

holds and

$\mathbb{E}\mathrel{\mathcal{R}}\mathbb{E}^\sharp$

holds and

![]() $X\mathrel{\mathcal{R}} X^\sharp$

for subsets X and

$X\mathrel{\mathcal{R}} X^\sharp$

for subsets X and

![]() $X^\sharp$

concrete and abstract unknowns, respectively. Assume that

$X^\sharp$

concrete and abstract unknowns, respectively. Assume that

![]() $(\sigma,\textit{dom})$

is the least partial post-solution of

$(\sigma,\textit{dom})$

is the least partial post-solution of

![]() $\mathbb{E}$

with

$\mathbb{E}$

with

![]() $X\subseteq\textit{dom}$

, and

$X\subseteq\textit{dom}$

, and

![]() $(\sigma^\sharp,\textit{dom}^\sharp)$

some partial post-solution of

$(\sigma^\sharp,\textit{dom}^\sharp)$

some partial post-solution of

![]() $\mathbb{E}^\sharp$

with

$\mathbb{E}^\sharp$

with

![]() $X^\sharp\subseteq\textit{dom}^\sharp$

. Then

$X^\sharp\subseteq\textit{dom}^\sharp$

. Then

![]() $(\sigma,\textit{dom})\mathrel{\mathcal{R}}(\sigma^\sharp,\textit{dom}^\sharp)$

holds.

$(\sigma,\textit{dom})\mathrel{\mathcal{R}}(\sigma^\sharp,\textit{dom}^\sharp)$

holds.

Proposition 3 is a special case of Proposition 4 where additionally side effects and dynamic description relations are taken into account. Therefore, the proof of Proposition 3 is omitted. Proposition 3 can be used to prove soundness for local solver algorithms which perform accumulating fixpoint iteration and thus return partial post-solutions. These kinds of solvers require abstract domains where strictly ascending chains are always finite. This assumption, however, is no longer met for more complicated domains such as the interval domain (Cousot and Cousot Reference Cousot, Cousot, Graham, Harrison and Sethi1977) or octagons (Mine 2001). As already observed in Cousot and Cousot (Reference Cousot, Cousot, Graham, Harrison and Sethi1977), their applicability to these domains can still be extended by introducing widening operators. According to Cousot and Cousot (Reference Cousot and Cousot1992), Cousot (Reference Cousot, D’Souza, Lal and Larsen2015), a widening operator

![]() $\nabla$

is a mapping

$\nabla$

is a mapping

![]() $\nabla : \mathbb{D}\times\mathbb{D}\to\mathbb{D}$

with the following two properties:

$\nabla : \mathbb{D}\times\mathbb{D}\to\mathbb{D}$

with the following two properties:

-

(1).

$a\sqcup b\sqsubseteq a\nabla b$

for all

$a\sqcup b\sqsubseteq a\nabla b$

for all

$a,b\in\mathbb{D}$

;

$a,b\in\mathbb{D}$

; -

(2). Every sequence

$a_0,a_1,\ldots $

defined by

$a_0,a_1,\ldots $

defined by

$a_{i+1} = a_i\nabla b_i$

,

$a_{i+1} = a_i\nabla b_i$

,

$i\geq 0$

for any

$i\geq 0$

for any

$b_i\in\mathbb{D}$

is ultimately stable.

$b_i\in\mathbb{D}$

is ultimately stable.

Acceleration with widening then means that the occurrences of

![]() $\sqcup$

in the solver are replaced with

$\sqcup$

in the solver are replaced with

![]() $\nabla$

.

$\nabla$

.

Example 8. For intervals over

![]() $\mathbb{Z}{}_{-\infty}^{+\infty}$

we could use primitive widening:

$\mathbb{Z}{}_{-\infty}^{+\infty}$

we could use primitive widening:

Alternatively, we could use threshold widening where several intermediate bounds are introduced that can be jumped to. Note that widening in general is not monotonic in the first argument:

![]() $[0,1] \sqsubseteq [0,2]$

but

$[0,1] \sqsubseteq [0,2]$

but

![]() $[0,1] \nabla [0,2] = [0, +\infty] \not\sqsubseteq [0,2] = [0,2] \nabla [0,2]$

. We remark that Cousot and Cousot (Reference Cousot and Cousot1992), Cousot (Reference Cousot, D’Souza, Lal and Larsen2015) provide a more general notion of widening which refers not to the ordering of

$[0,1] \nabla [0,2] = [0, +\infty] \not\sqsubseteq [0,2] = [0,2] \nabla [0,2]$

. We remark that Cousot and Cousot (Reference Cousot and Cousot1992), Cousot (Reference Cousot, D’Souza, Lal and Larsen2015) provide a more general notion of widening which refers not to the ordering of

![]() $\mathbb{D}$

but (via

$\mathbb{D}$

but (via

![]() $\gamma$

) to the ordering in the concrete lattice

$\gamma$

) to the ordering in the concrete lattice

![]() $\mathcal{P} (\mathcal{Q})$

alone. W.r.t. that definition,

$\mathcal{P} (\mathcal{Q})$

alone. W.r.t. that definition,

![]() $a\sqcup b$

is no longer necessarily less or equal

$a\sqcup b$

is no longer necessarily less or equal

![]() $a\nabla b$

. In many applications, however, accelerated loss of precision due to widening may result in unacceptable analysis results. Therefore, Cousot and Cousot (Reference Cousot, Cousot, Graham, Harrison and Sethi1977) proposed to complement a terminating widening iteration with a narrowing iteration which subsequently tries to recover some of the precision loss. Following Cousot and Cousot (Reference Cousot and Cousot1992), Cousot (Reference Cousot, D’Souza, Lal and Larsen2015), a narrowing operator

$a\nabla b$

. In many applications, however, accelerated loss of precision due to widening may result in unacceptable analysis results. Therefore, Cousot and Cousot (Reference Cousot, Cousot, Graham, Harrison and Sethi1977) proposed to complement a terminating widening iteration with a narrowing iteration which subsequently tries to recover some of the precision loss. Following Cousot and Cousot (Reference Cousot and Cousot1992), Cousot (Reference Cousot, D’Souza, Lal and Larsen2015), a narrowing operator

![]() $\Delta$

is a mapping

$\Delta$

is a mapping

![]() $\Delta : \mathbb{D}\times\mathbb{D}\to\mathbb{D}$

with the following two properties:

$\Delta : \mathbb{D}\times\mathbb{D}\to\mathbb{D}$

with the following two properties:

-

(1).

$a\sqcap b\sqsubseteq(a\Delta b)\sqsubseteq a$

for all

$a\sqcap b\sqsubseteq(a\Delta b)\sqsubseteq a$

for all

$a,b\in\mathbb{D}$

;

$a,b\in\mathbb{D}$

; -

(2). Every sequence

$a_0,a_1,\ldots $

defined by

$a_0,a_1,\ldots $

defined by

$a_{i+1} = a_i\Delta b_i$

,

$a_{i+1} = a_i\Delta b_i$

,

$i\geq 0$

for any

$i\geq 0$

for any

$b_i\in\mathbb{D}$

is ultimately stable.

$b_i\in\mathbb{D}$

is ultimately stable.

Example 9. For intervals over

![]() $\mathbb{Z}{}_{-\infty}^{+\infty}$

we could use primitive narrowing:

$\mathbb{Z}{}_{-\infty}^{+\infty}$

we could use primitive narrowing:

which improves infinite bounds only. More sophisticated narrowing operators may allow a bounded number of improvements of finite bounds as well. Again we remark that, according to the more general definition in Cousot and Cousot (Reference Cousot and Cousot1992), Cousot (Reference Cousot, D’Souza, Lal and Larsen2015), the first property need not necessarily be satisfied. For monotonic systems of equations, the narrowing iteration when starting with a (partial) post-solution still will return a (partial) post-solution. The correctness of the solver started with an initial query

![]() $x^\sharp$

and returning a partial assignment

$x^\sharp$

and returning a partial assignment

![]() $\sigma^\sharp$

with domain

$\sigma^\sharp$

with domain

![]() $\textit{dom}^\sharp$

thus can readily be checked by verifying that

$\textit{dom}^\sharp$

thus can readily be checked by verifying that

-

1.

$x^\sharp\in\textit{dom}^\sharp$

;

$x^\sharp\in\textit{dom}^\sharp$

; -

2.

$(F^\sharp{}_x\,\sigma^\sharp)_2\subseteq\textit{dom}^\sharp$

for all

$(F^\sharp{}_x\,\sigma^\sharp)_2\subseteq\textit{dom}^\sharp$

for all

$x\in\textit{dom}^\sharp$

, that is,

$x\in\textit{dom}^\sharp$

, that is,

$\textit{dom}^\sharp$

is

$\textit{dom}^\sharp$

is

$(\sigma^\sharp,\mathbb{E}^\sharp)$

-closed; and

$(\sigma^\sharp,\mathbb{E}^\sharp)$

-closed; and -

3.

$\sigma^\sharp\,x\sqsupseteq(F^\sharp{}_x\,\sigma^\sharp)_1$

for all

$\sigma^\sharp\,x\sqsupseteq(F^\sharp{}_x\,\sigma^\sharp)_1$

for all

$x\in\textit{dom}^\sharp$

.

$x\in\textit{dom}^\sharp$

.

When the system of equations is non-monotonic, though, the computed assignment still is a sound description. It is, however, no longer guaranteed to be a post-solution of

![]() $\mathbb{E}^\sharp$

. In Section 6, we come back to this point.

$\mathbb{E}^\sharp$

. In Section 6, we come back to this point.

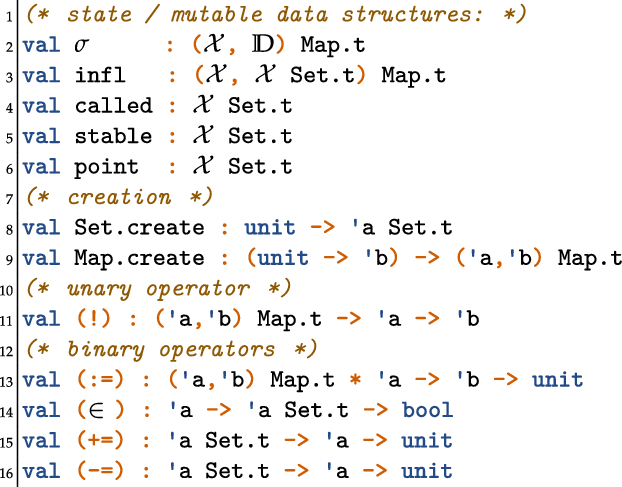

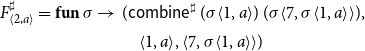

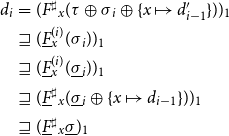

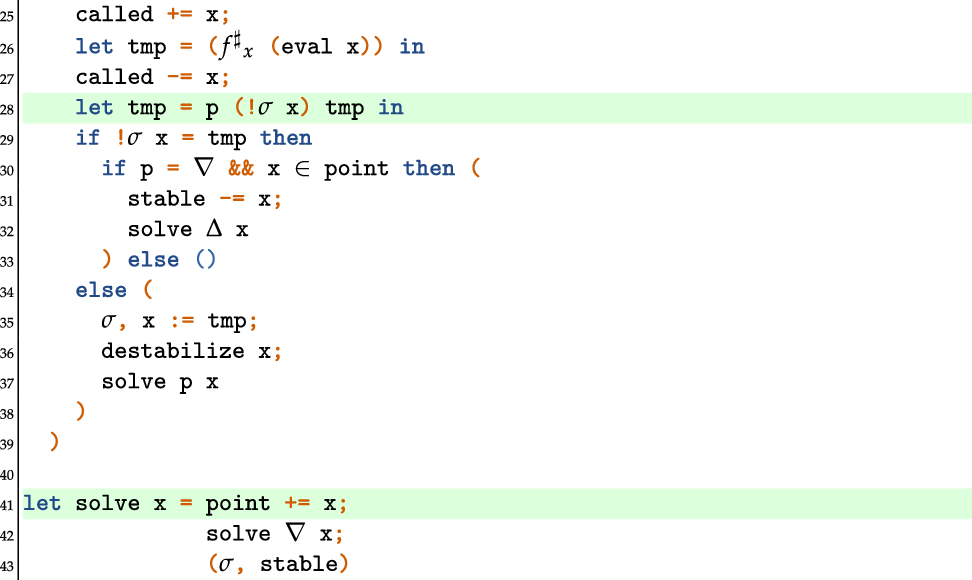

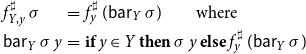

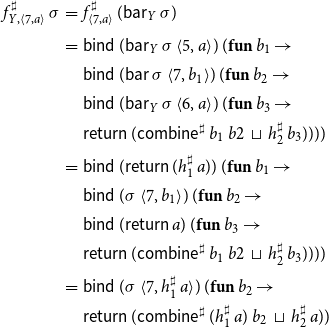

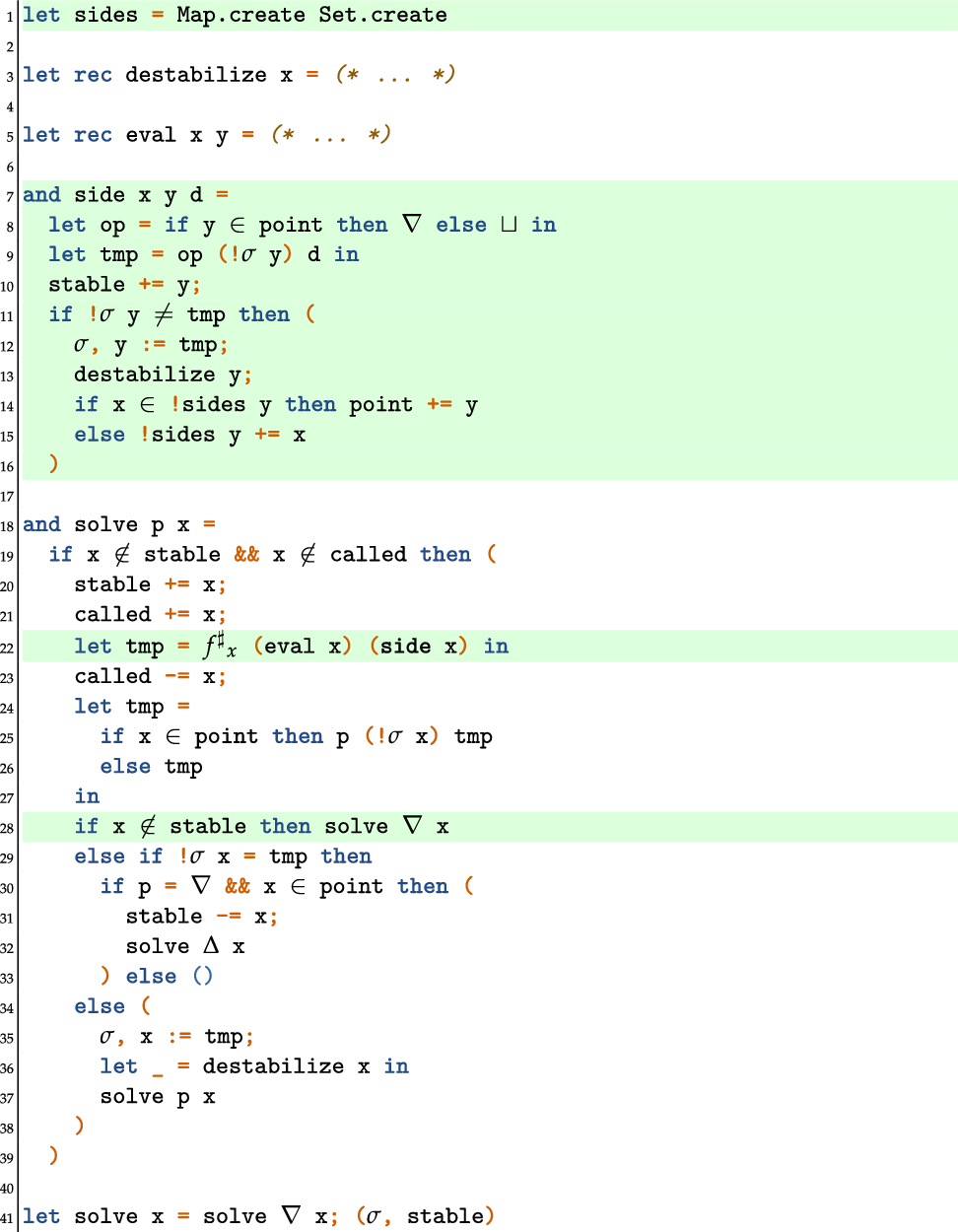

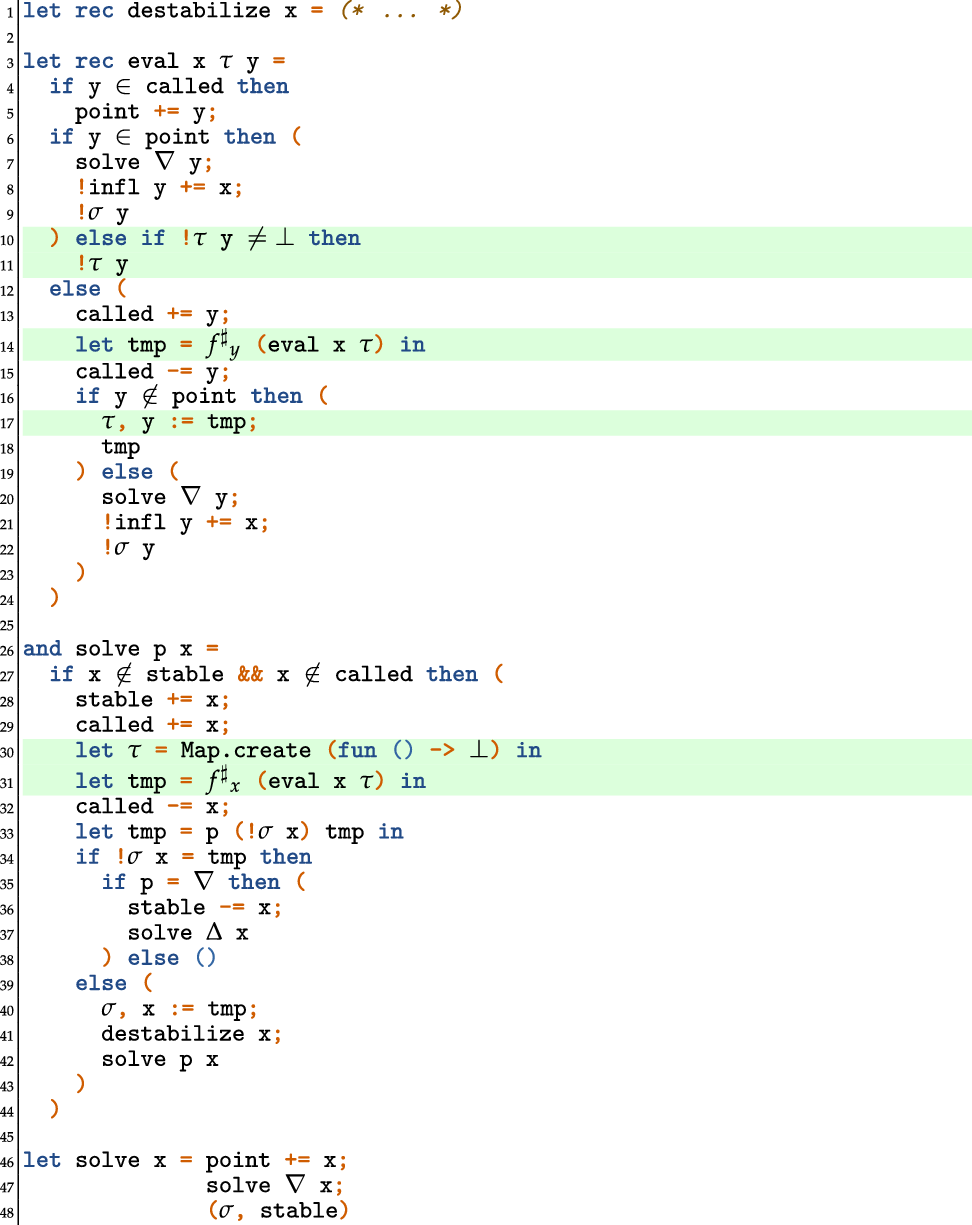

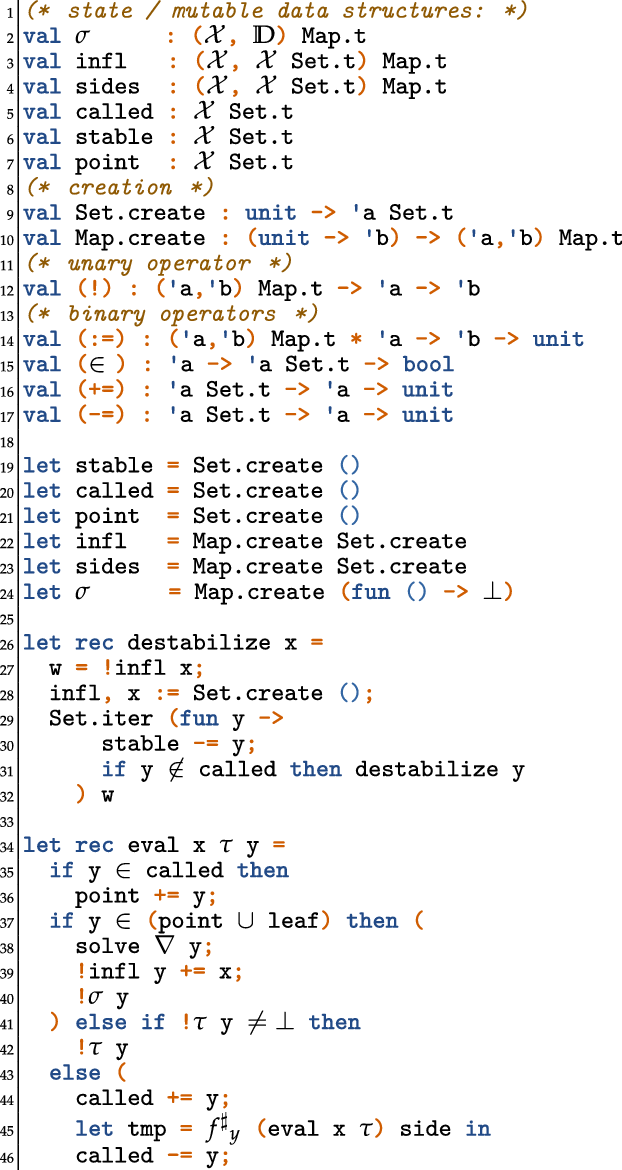

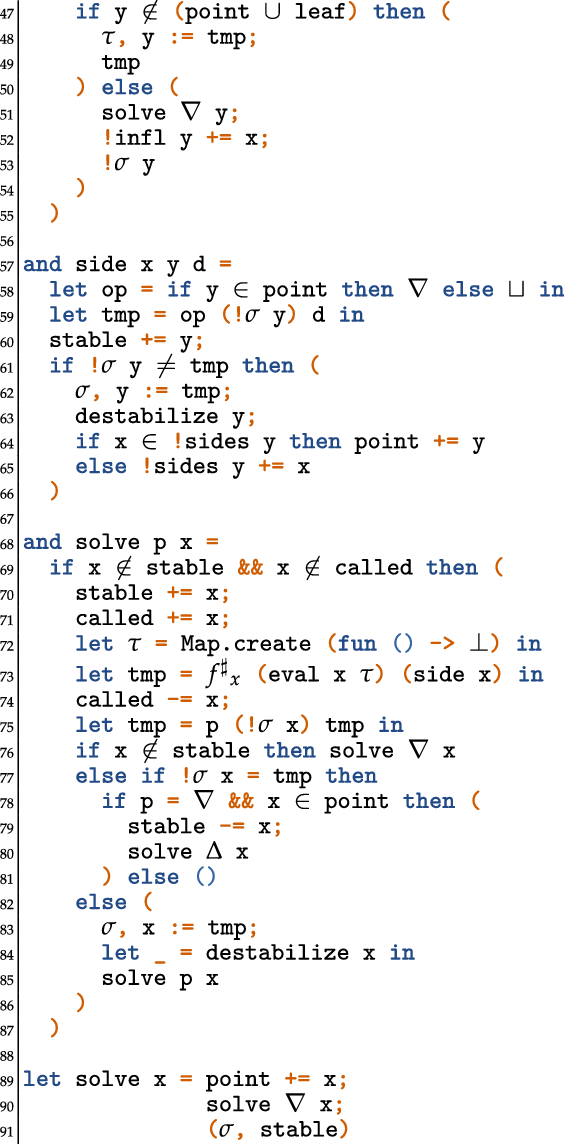

4. The Terminating Solver TDterm

In this section, we present our modification to the TD solver with widening and narrowing which improves on the variant in Apinis et al. (Reference Apinis, Seidl, Vojdani, Probst, Hankin and Hansen2016) in that termination guarantees can be proven even for non-monotonic abstract systems of equations. The vanilla TD solver from Muthukumar and Hermenegildo (Reference Muthukumar and Hermenegildo1990), Charlier and Van Hentenryck (Reference Charlier and Van Hentenryck1992) (see Appendix A for a pseudo code formulation of this solver along the lines presented in Fecht and Seidl Reference Fecht and Seidl1999) starts by querying the value of a given unknown. In order to answer the query, the solver evaluates the corresponding right-hand side. Whenever in the course of that evaluation, the value of another unknown is required, the best possible value for that unknown is computed first, before evaluation of the current right-hand side continues. Interestingly, the strategy employed by TD for choosing the next unknown to iterate upon, thereby resembles the iteration orders considered in Bourdoncle (Reference Bourdoncle, Bjørner, Broy and Pottosin1993) (see Fecht and Seidl Reference Fecht and Seidl1999 for a detailed comparison) for systems of equations derived from control-flow graphs of programs. The most remarkable difference, however, is that TD determines its order on-the-fly, while the ordering in Bourdoncle (Reference Bourdoncle, Bjørner, Broy and Pottosin1993) is determined via preprocessing.

In Apinis et al. (Reference Apinis, Seidl, Vojdani, Probst, Hankin and Hansen2016), the vanilla TD solver from Muthukumar and Hermenegildo (Reference Muthukumar and Hermenegildo1990), Charlier and Van Hentenryck (Reference Charlier and Van Hentenryck1992), Fecht and Seidl (Reference Fecht and Seidl1999) is enhanced with widening and narrowing. For that, the solver is equipped with a novel technique for identifying not only accesses to unknowns, but also widening and narrowing points on-the-fly. Moreover, that solver does not delegate the narrowing iteration to a separate second phase (as was done in the original papers on widening and narrowing Cousot and Cousot Reference Cousot and Cousot1992; Cousot Reference Cousot, D’Souza, Lal and Larsen2015), once a proceeding widening iteration has completed. Instead, widening and narrowing iterations may occur intertwined (Amato et al. Reference Amato, Scozzari, Seidl, Apinis and Vojdani2016). This is achieved by combining the widening operator

![]() $\nabla$

with the narrowing operator

$\nabla$

with the narrowing operator