1. Introduction

In recent years, event data have become ubiquitous in the social sciences. For instance, interpersonal structures are examined using face-to-face interactions (Elmer & Stadtfeld, 2020). At the same time, political event data are employed to study and predict the occurrence and intensity of armed conflict (Fjelde & Hultman, 2014; Blair & Sambanis, 2020; Dorff et al., 2020). Butts (2008a) introduced the Relational Event Model (REM) to study such relational event data. In comparison to standard network data of durable relations observed at specific time points, relational events describe instantaneous actions or, put differently, interactions at a fine-grained temporal resolution (Borgatti et al., 2009).

However, in some contexts, the reliability of event data is a concern. While data gathered from, for example, direct observations (Tranmer et al., 2015) or parliamentary records (Malang et al., 2019) should prove unproblematic in this regard, other data collection methods may be prone to spurious events, that is, events that are recorded but did not actually occur as such. For instance, data collection on face-to-face interactions relies on different types of sociometric badges (Eagle & Pentland, 2006), for which a recent study reports a false-discovery rate of around 20% in event identification when compared to video-coded data (Elmer et al., 2019). Political event data on armed conflict, in contrast, are generally collected via automated or human coding of news and social media reports (Kauffmann, 2020). Spurious events may arise in this context if reports of fighting are wrong, as may be the case for propaganda reasons or due to reporters' reliance on rumors, or when fighting took place between belligerents other than those named. Such issues are especially prevalent in machine-coded conflict data, for which both false-positive and false-discovery rates of over 60% have been reported (King & Lowe, 2003; Jäger, 2018). However, even human-coded data suffer from this problem (Dawkins, 2020; Weidmann, 2015).

This discussion suggests that specific types of event data can include unknown quantities of spurious events, which may influence the substantive results obtained from models such as the REM (Butts, 2008a) or the Dynamic Actor-Oriented Model (Stadtfeld et al., 2017; Stadtfeld, 2012). We thus propose a Relational Event Model with Spurious Events (REMSE) as a method that allows researchers to study relational events from potentially error-prone contexts or data collection methods. Moreover, this tool can assess whether spurious events are present under a particular model specification and, more importantly, whether they influence the substantive results. The REMSE can thus serve as a straightforward robustness check in situations where researchers, based on their substantive knowledge, suspect that there are spurious observations and want to investigate whether these distort their empirical results.

We take a counting process point of view in which some increments of the dyadic counting processes are true events, while others may be attributed to spurious events, that is, they exist due to measurement error. This decomposition results in two different intensities governing the two respective types of events. The spurious events are described by a spurious-event intensity that we specify independently of the true-event intensity. We present the model under the assumption that the spurious events are purely random, so that the respective intensity can be modeled solely as a constant term. More complex scenarios involving the specification of exogenous and endogenous covariates for the spurious-event intensity are, however, also possible. In general, we are primarily interested in studying what factors drive the intensity of true events. We model this intensity following Butts (2008a), but the methodology is extendable to other model types such as those of Stadtfeld et al. (2017), Vu et al. (2015), DuBois et al. (2013), Perry & Wolfe (2013), or Lerner et al. (2021).

This article is structured as follows: We begin in Section 2 by introducing our methodology. In particular, we lay out the general framework to study relational event data proposed by Butts (2008a) in Section 2.1 and introduce an extension to this framework, the REMSE, to correct for the presence of spurious events in the remainder of Section 2. Through a simulation study in Section 3, we investigate the performance of our proposed estimator when spurious events are correctly specified and when they are nonexistent. We then apply the proposed model in Section 4 to analyze fighting incidents in the Syrian civil war as well as social interaction data from a college campus. A discussion of possible implications and extensions for the analysis of events concludes the article in Section 5.

2. A Relational Event Model with Spurious Events

2.1 Modeling framework for relational events

We denote observed events in an event stream $\mathcal{E} = \left \{e_1, \ldots, e_M \right \}$ of $M$ elements. Each object $e \in \mathcal{E}$ consists of a tuple encoding the information of an event. In particular, we denote the two actors of an event by $a(e)$ and $b(e)$ and the time of the event by $t(e)$. For simplicity of notation, we omit the argument $e$ of $a()$ and $b()$ when no ambiguity exists and write $a_m$ for $a(e_m)$, $b_m$ for $b(e_m)$, and $t_m$ for $t(e_m) \;\forall \; m \in \{1, \ldots, M\}$. Motivated by our application cases, we mainly focus on undirected events in this article; hence, the events $e = (a,b,t)$ and $\tilde{e} = (b,a,t)$ are equivalent in our framework. Note, however, that the proposed method also generalizes to the directed case. We denote the set of actor-tuples between which events can possibly occur by $\mathcal{R}$, where, for simplicity, we assume that $\mathcal{R}$ is time-constant.
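To make this notation concrete, the following minimal Python sketch represents an undirected event stream. The class `Event`, the hypothetical risk set `RISK_SET`, and the choice to store each actor pair in sorted order (so that $(a,b,t)$ and $(b,a,t)$ coincide) are illustrative assumptions, not part of the REMSE itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """Undirected relational event e = (a, b, t).

    The actor pair is stored in sorted order, so (a, b, t) and (b, a, t)
    are represented identically, as in the undirected setting.
    """
    a: str
    b: str
    t: float

    def __post_init__(self):
        # Canonicalize the actor pair (frozen dataclass, so bypass __setattr__).
        if self.b < self.a:
            first, second = self.b, self.a
            object.__setattr__(self, "a", first)
            object.__setattr__(self, "b", second)

    @property
    def dyad(self):
        """The (a, b) actor tuple, comparable against the risk set R."""
        return (self.a, self.b)


# Hypothetical time-constant risk set R of undirected dyads.
RISK_SET = {("A", "B"), ("A", "C"), ("B", "C")}

# Hypothetical event stream E = {e_1, ..., e_M}, ordered by event time.
events = sorted(
    [Event("C", "B", 2.5), Event("B", "A", 0.4), Event("A", "C", 1.1)],
    key=lambda e: e.t,
)
M = len(events)
a_m = [e.a for e in events]   # a_m = a(e_m)
t_m = [e.t for e in events]   # t_m = t(e_m)
```

A directed variant would simply drop the canonicalization step in `__post_init__`.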

Following Perry & Wolfe (2013) and Vu et al. (2011a), we assume that the events in $\mathcal{E}$ are generated by an inhomogeneous matrix-valued counting process

\begin{align} \textbf{N}(t) = \left(N_{ab}(t)\right)_{a,b}, \end{align}

which, in our case, is assumed to be a matrix-valued Poisson process (see Daley & Vere-Jones, 2008, for an introduction to stochastic processes). Without loss of generality, we assume that $\textbf{N}(t)$ is observed during the temporal interval $\mathcal{T}$, starting at $t = 0$. The cells of (1) count how often each possible dyadic event has occurred between time $0$ and $t$; hence, $\textbf{N}(t)$ can be conceived as a standard social network adjacency matrix with integer-valued cell entries (Butts, 2008b). For instance, $N_{ab}(t)$ indicates how often actors $a$ and $b$ have interacted in the time interval $[0,t]$. Therefore, observing event $e = (a,b,t)$ constitutes an increase in $N_{ab}(t)$ at time point $t$, that is, $N_{ab}(t - h) + 1 = N_{ab}(t)$ for $h \rightarrow 0$. We denote by $\boldsymbol{\lambda } (t)$ the matrix-valued intensity of process $\mathbf{N}(t)$. Based on this intensity function, we can characterize the instantaneous probability of a unit increase in a specific dimension of $\textbf{N}(t)$ at time point $t$ (Daley & Vere-Jones, 2008). We parameterize $\boldsymbol{\lambda } (t)$ conditional on the history of the processes, $\mathcal{H}(t)$, which may also include additional exogenous covariates. Hence, $\mathcal{H}(t) = (\textbf{N}(u),X(u)| u \lt t )$, where $X(t)$ is some covariate process to be specified later. Note that we opt for a rather general characterization of Poisson processes, including stochastic intensities that explicitly depend on previous events. We define the intensity function at the tie level:

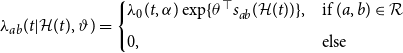

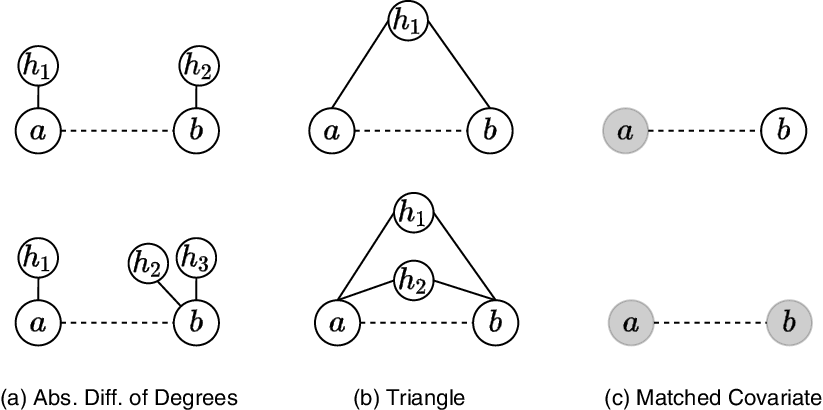

\begin{align} \lambda _{ab}(t| \mathcal{H}(t), \vartheta ) = \begin{cases} \lambda _0(t, \alpha ) \exp \{\theta ^\top s_{ab}(\mathcal{H}(t))\}, & \text{if } (a,b) \in \mathcal{R} \\[5pt] 0, & \text{else} \end{cases} \end{align}

\begin{align} \lambda _{ab}(t| \mathcal{H}(t), \vartheta ) = \begin{cases} \lambda _0(t, \alpha ) \exp \{\theta ^\top s_{ab}(\mathcal{H}(t))\}, & \text{if } (a,b) \in \mathcal{R} \\[5pt] 0, & \text{else} \end{cases} \end{align}

where $\vartheta = (\alpha ^\top, \theta ^\top )^\top = \text{vec}(\alpha,\theta )$ is defined with the help of a dyadic operator $\text{vec}(\cdot, \cdot )$ that stacks two vectors, $\lambda _0(t,\alpha )$ is the baseline intensity characterized by coefficients $\alpha$, and the parameters $\theta$ weight the statistics computed by $s_{ab}(\mathcal{H}(t))$, the function of sufficient statistics. Based on $s_{ab}(\mathcal{H}(t))$, we can formulate endogenous effects, which are calculated from $(\textbf{N}(u)| u \lt t)$, exogenous effects, which are calculated from $(X(u) | u\lt t)$, or a combination of the two, which results in complex dependencies between the observed events. Examples of endogenous effects for undirected events include degree-related statistics, such as the absolute difference between the degrees of actors $a$ and $b$, and hyperdyadic effects, for example, investigating how triadic closure influences the observed events. In our first application case, exogenous factors include a dummy variable indicating whether groups $a$ and $b$ share an ethno-religious identity. Alternatively, one may incorporate continuous covariates, for example, the absolute geographic distance between groups $a$ and $b$.
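As a rough illustration of how the cells $N_{ab}(t)$ and history-based statistics could be computed from an undirected event stream, consider the following Python sketch. Events are plain `(a, b, t)` tuples, and the two statistic definitions (degree as the number of distinct past partners, triadic closure as the number of shared past partners) are illustrative choices that need not coincide with the formulations in Appendix A; the function names are hypothetical.

```python
from collections import defaultdict


def history(events, t):
    """Events strictly before time t, i.e., the event part of H(t)."""
    return [e for e in events if e[2] < t]


def count_cell(events, a, b, t):
    """N_ab(t): number of undirected events between a and b in [0, t]."""
    pair = frozenset((a, b))
    return sum(1 for (u, v, s) in events if frozenset((u, v)) == pair and s <= t)


def partners(events):
    """Map each actor to the set of actors it has interacted with."""
    nbrs = defaultdict(set)
    for u, v, _ in events:
        nbrs[u].add(v)
        nbrs[v].add(u)
    return nbrs


def statistics(events, a, b, t):
    """Illustrative s_ab(H(t)): absolute degree difference and shared partners."""
    nbrs = partners(history(events, t))
    degree_diff = abs(len(nbrs[a]) - len(nbrs[b]))
    triadic_closure = len(nbrs[a] & nbrs[b])
    return [degree_diff, triadic_closure]


events = [("A", "C", 0.5), ("B", "C", 1.0), ("A", "D", 1.5), ("A", "C", 2.0)]
print(count_cell(events, "C", "A", 2.0))   # 2
print(statistics(events, "A", "B", 2.5))   # [1, 1]
```

Here $a$ has partners $\{C, D\}$ and $b$ has $\{C\}$, so the degree difference is 1 and one shared partner closes a potential triangle. Exogenous covariates such as a shared-identity dummy would simply be appended to the returned list.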

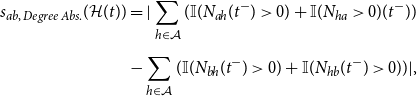

We give graphical representations of possible endogenous effects in Figure 1 and provide their mathematical formulations together with a general summary in Appendix A. When comparing the structures in the first row with those in the second row of Figure 1, the respective sufficient statistic of the event indicated by the dotted line differs by one unit. Holding all other covariates fixed, its intensity thus changes by the multiplicative factor $\exp \{\theta _{endo}\}$, where $\theta _{endo}$ is the parameter of the respective statistic. The interpretation of the coefficients is, therefore, closely related to the interpretation of relative risk models (Kalbfleisch & Prentice, 2002).
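The multiplicative interpretation can be checked numerically. In the sketch below, the coefficient values and statistics are made up for illustration, and the baseline intensity is omitted because it cancels in the ratio of two intensities evaluated at the same time point.

```python
import math


def covariate_factor(theta, s):
    """exp{theta^T s}: the covariate part of the dyadic intensity."""
    return math.exp(sum(th * x for th, x in zip(theta, s)))


theta = [0.8, -0.3]        # hypothetical (theta_endo, theta_exo)
s_first_row = [2.0, 1.0]   # statistics of the dotted event in the first row
s_second_row = [3.0, 1.0]  # endogenous statistic one unit larger, exogenous unchanged

relative_risk = covariate_factor(theta, s_second_row) / covariate_factor(theta, s_first_row)
print(relative_risk, math.exp(theta[0]))   # the two values agree up to rounding
```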

Figure 1. Graphical illustrations of endogenous and exogenous covariates. Solid lines represent past interactions, while dotted lines are possible but unrealized events. Node coloring indicates the node's value on a categorical covariate. The relative risk of the events in the second row compared to the events in the first row is $\exp \{\theta _{endo}\}$ if all other covariates are fixed, where $\theta _{endo}$ is the coefficient of the respective statistic of each row.

Previous studies propose multiple options to model the baseline intensity $\lambda _0(t)$. Vu et al. (2011a, 2011b) follow a semiparametric approach akin to the proportional hazards model by Cox (1972), while Butts (2008a) assumes a constant baseline intensity. We follow Etezadi-Amoli & Ciampi (1987) by setting $\lambda _0(t, \alpha ) = \exp \{f(t, \alpha )\}$, with $f(t, \alpha )$ being a smooth function in time parameterized by B-splines (de Boor, 2001):

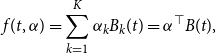

\begin{align} f(t, \alpha ) = \sum _{k=1}^K \alpha _k B_{k}(t) = \alpha ^\top B(t), \end{align}

\begin{align} f(t, \alpha ) = \sum _{k=1}^K \alpha _k B_{k}(t) = \alpha ^\top B(t), \end{align}

where $B_{k}(t)$ denotes the $k$th B-spline basis function weighted by coefficient $\alpha _k$. To ensure a smooth fit of $f(t, \alpha )$, we impose a penalty (or regularization) on $\alpha$, which is formulated through the a priori structure

\begin{align} p(\alpha \mid \gamma ) \propto \exp \left \{-\frac{\gamma }{2} \alpha ^\top \mathbf{S} \alpha \right \}, \end{align}

where $\gamma$ is a hyperparameter controlling the level of smoothing and $\mathbf{S}$ is a penalty matrix that penalizes the differences of coefficients corresponding to adjacent basis functions, as proposed by Eilers & Marx (1996). We ensure identifiability of the smooth baseline intensity by incorporating a sum-to-zero constraint and refer to Ruppert et al. (2003) and Wood (2017) for further details on penalized spline smoothing. Given this notation, we can simplify (2):

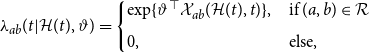

\begin{align} \lambda _{ab}(t| \mathcal{H}(t), \vartheta ) = \begin{cases} \exp \{\vartheta ^\top \mathcal{X}_{ab}(\mathcal{H}(t), t)\}, & \text{if } (a,b) \in \mathcal{R} \\[5pt] 0, & \text{else,} \end{cases} \end{align}

\begin{align} \lambda _{ab}(t| \mathcal{H}(t), \vartheta ) = \begin{cases} \exp \{\vartheta ^\top \mathcal{X}_{ab}(\mathcal{H}(t), t)\}, & \text{if } (a,b) \in \mathcal{R} \\[5pt] 0, & \text{else,} \end{cases} \end{align}

with $\mathcal{X}_{ab}(\mathcal{H}(t),t) = \text{vec}(B(t), s_{ab}(\mathcal{H}(t)))$.
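The following sketch assembles the pieces of the baseline specification and the simplified intensity for a single dyad using NumPy and SciPy's `BSpline`. The number of basis functions, the cubic degree, the equally spaced clamped knots, the second-order difference penalty $\mathbf{S} = D^\top D$ in the spirit of Eilers & Marx (1996), and the prior form proportional to $\exp\{-\frac{\gamma}{2}\alpha^\top \mathbf{S}\alpha\}$ (the standard P-spline prior, which we assume matches the a priori structure above) are illustrative assumptions. The sum-to-zero constraint is mimicked by centering the basis columns over an evaluation grid, one simple way of imposing it. All helper names are hypothetical.

```python
import numpy as np
from scipy.interpolate import BSpline


def bspline_basis(t, t_min, t_max, n_basis=8, degree=3):
    """Evaluate n_basis clamped B-spline basis functions B_k(t) at the times t."""
    inner = np.linspace(t_min, t_max, n_basis - degree + 1)
    knots = np.concatenate([[t_min] * degree, inner, [t_max] * degree])
    # Identity coefficients turn the spline into the full set of basis functions.
    basis = BSpline(knots, np.eye(n_basis), degree)
    return basis(np.asarray(t, dtype=float))          # shape (len(t), n_basis)


def penalty_matrix(n_basis, order=2):
    """S = D^T D, with D the difference matrix of the given order (P-spline penalty)."""
    D = np.diff(np.eye(n_basis), n=order, axis=0)
    return D.T @ D


def log_prior(alpha, gamma, S):
    """Unnormalized log prior -gamma / 2 * alpha^T S alpha."""
    return -0.5 * gamma * alpha @ S @ alpha


def dyadic_intensity(B_t, s_ab, alpha, theta):
    """exp{vartheta^T X_ab} with X_ab = vec(B(t), s_ab(H(t))) and vartheta = vec(alpha, theta)."""
    X_ab = np.concatenate([B_t, s_ab])
    vartheta = np.concatenate([alpha, theta])
    return float(np.exp(vartheta @ X_ab))


grid = np.linspace(0.0, 10.0, 101)
B = bspline_basis(grid, 0.0, 10.0)
B_centered = B - B.mean(axis=0)    # f(t, alpha) then sums to zero over the grid
S = penalty_matrix(B.shape[1])

rng = np.random.default_rng(1)
alpha = rng.normal(scale=0.3, size=B.shape[1])
theta = np.array([0.8, -0.3])       # hypothetical coefficients of s_ab
lam = dyadic_intensity(B_centered[50], np.array([2.0, 1.0]), alpha, theta)
print(round(lam, 3), round(log_prior(alpha, gamma=10.0, S=S), 3))
```

Because second differences of constant and linear coefficient sequences vanish, this penalty leaves linear trends in $f(t,\alpha)$ unpenalized, and larger values of $\gamma$ pull the fit toward such a trend.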

2.2 Accounting for spurious relational events

Given the discussion in the introduction, we may conclude that some increments of $\mathbf{N}(t)$ are true events, while others stem from spurious events. Spurious events can occur because of coding errors during machine- or human-based data collection. To account for such erroneous data points, we introduce the REMSE.

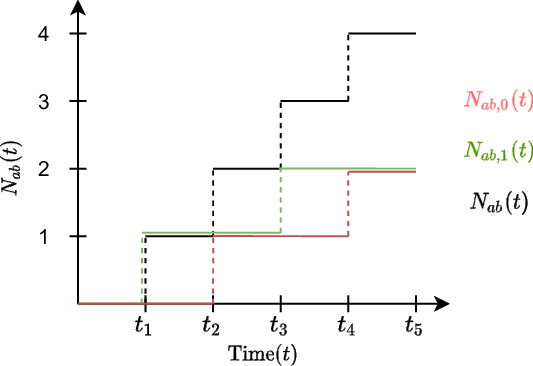

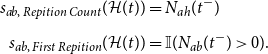

First, we decompose the observed Poisson process into two separate matrix-valued Poisson processes, that is, $\mathbf{N}(t) = \mathbf{N}_{0}(t) + \mathbf{N}_1(t) \; \forall \; t \in \mathcal{T}$. On the dyadic level, $N_{ab,1}(t)$ denotes the number of true events between actors $a$ and $b$ until $t$, and $N_{ab,0}(t)$ the number of spurious events. Assuming that $N_{ab}(t)$ is a Poisson process, we can apply the so-called thinning property, which states that if each event of a Poisson process is classified as one of two types, independently of the other events, the two resulting counting processes are again Poisson processes (Daley & Vere-Jones, 2008). A graphical illustration of the three introduced counting processes, $N_{ab,0}(t), \;N_{ab,1}(t),$ and $N_{ab}(t)$, is given in Figure 2. In this illustrative example, we observe four events at times $t_1, \;t_2, \;t_3,$ and $t_4$, although only the first and third constitute true events, while the second and fourth are spurious. Therefore, the counting process $N_{ab}(t)$ jumps at all event times, whereas $N_{ab,1}(t)$ does so only at $t_1$ and $t_3$. Conversely, $N_{ab,0}(t)$ increases only at $t_2$ and $t_4$.

Figure 2. Graphical illustration of a possible path of the counting process of observed events ($N_{ab}(t)$) between actors $a$ and $b$ that encompasses spurious ($N_{ab,0}(t)$) and true events ($N_{ab,1}(t)$).
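The path sketched in Figure 2 can be reproduced in a few lines of Python. The event times 1 to 4 are hypothetical, since the figure gives no numerical values, and the list `z` labels each event as true (1) or spurious (0); the helper `counting_paths` is illustrative.

```python
def counting_paths(times, z, t):
    """Return (N_ab(t), N_ab1(t), N_ab0(t)) for the events of a single dyad."""
    n_observed = sum(1 for s in times if s <= t)
    n_true = sum(1 for s, z_m in zip(times, z) if s <= t and z_m == 1)
    n_spurious = sum(1 for s, z_m in zip(times, z) if s <= t and z_m == 0)
    assert n_observed == n_true + n_spurious   # N_ab(t) = N_ab0(t) + N_ab1(t)
    return n_observed, n_true, n_spurious


times = [1.0, 2.0, 3.0, 4.0]   # hypothetical t_1 < t_2 < t_3 < t_4
z = [1, 0, 1, 0]                # first and third true, second and fourth spurious

for t in [0.5, 1.5, 2.5, 3.5, 4.5]:
    print(t, counting_paths(times, z, t))
```

Between consecutive event times, exactly one of the two component counts increases, which is the step pattern shown in the figure.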

The counting processes $\mathbf{N}_{0}(t)$ and $\mathbf{N}_1(t)$ are characterized by the dyadic intensities $\lambda _{ab,0}(t|\mathcal{H}_{0}(t), \vartheta _0)$ and $\lambda _{ab,1}(t|\mathcal{H}_{1}(t), \vartheta _1)$, where we denote the histories of the spurious and true processes by $\mathcal{H}_{0}(t)$ and $\mathcal{H}_{1}(t)$, respectively. This can also be perceived as a competing risks setting, where events can be caused either by the true-event or by the spurious-event intensity (Gelfand et al., 2000). To make the estimation of $\vartheta _0$ and $\vartheta _1$ feasible and identifiable (Heckman & Honoré, 1989), we assume that both intensities are independent of one another, which means that their correlation is fully accounted for by the covariates. Building on the superposition property of Poisson processes, the specification of these two intensity functions also defines the intensity of the observed counting process $N_{ab}(t)$. In particular, $\lambda _{ab}(t|\mathcal{H}(t), \vartheta )= \lambda _{ab,0}(t|\mathcal{H}_0(t), \vartheta _0) + \lambda _{ab,1}(t|\mathcal{H}_1(t), \vartheta _1)$ holds (Daley & Vere-Jones, 2008).
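A small simulation illustrates the superposition and competing-risks view for a single dyad. As a simplifying assumption made only for this sketch, both intensities are constant: spurious events arrive at rate $\exp\{\alpha_0\}$ with a hypothetical $\alpha_0 = -1$, and true events at a hypothetical constant rate of 1.5 (in the REMSE, the true-event intensity would depend on the history of true events). The merged stream then has rate $\lambda_0 + \lambda_1$, and each observed event is true with probability $\lambda_1 / (\lambda_0 + \lambda_1)$.

```python
import numpy as np


def simulate_poisson(rate, horizon, rng):
    """Event times of a homogeneous Poisson process with the given rate on [0, horizon]."""
    n = rng.poisson(rate * horizon)
    return np.sort(rng.uniform(0.0, horizon, size=n))


rng = np.random.default_rng(42)
horizon = 100_000.0
lam0 = np.exp(-1.0)   # spurious-event intensity exp{alpha_0} with hypothetical alpha_0 = -1
lam1 = 1.5            # hypothetical constant true-event intensity

true_times = simulate_poisson(lam1, horizon, rng)
spurious_times = simulate_poisson(lam0, horizon, rng)

# Observed stream: the superposition of both processes (labels are hidden in practice).
times = np.concatenate([true_times, spurious_times])
labels = np.concatenate([np.ones(true_times.size), np.zeros(spurious_times.size)])
order = np.argsort(times)
times, labels = times[order], labels[order]

observed_rate = times.size / horizon
share_true = labels.mean()
print(f"observed rate {observed_rate:.3f}, lam0 + lam1 = {lam0 + lam1:.3f}")
print(f"share of true events {share_true:.3f}, lam1 / (lam0 + lam1) = {lam1 / (lam0 + lam1):.3f}")
```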

The true-event intensity

![]() $\lambda _{ab,1}(t|\mathcal{H}_{1}(t), \vartheta _1)$

drives the counting process of true events

$\lambda _{ab,1}(t|\mathcal{H}_{1}(t), \vartheta _1)$

drives the counting process of true events

![]() $\mathbf{N}_{1}(t)$

and only depends on the history of true events. This assumption is reasonable since if erroneous events are mixed together with true events, the covariates computed for actors

$\mathbf{N}_{1}(t)$

and only depends on the history of true events. This assumption is reasonable since if erroneous events are mixed together with true events, the covariates computed for actors

![]() $a$

and

$a$

and

![]() $b$

at time

$b$

at time

![]() $t$

through

$t$

through

![]() $s_{ab}(\mathcal{H}(t))$

would be confounded and could not anymore be interpreted in any consistent manner. We specify

$s_{ab}(\mathcal{H}(t))$

would be confounded and could not anymore be interpreted in any consistent manner. We specify

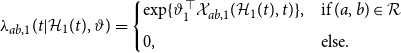

![]() $\lambda _{ab,1}(t|\mathcal{H}_1(t), \vartheta _1)$

in line with (2) at the dyadic level by:

$\lambda _{ab,1}(t|\mathcal{H}_1(t), \vartheta _1)$

in line with (2) at the dyadic level by:

\begin{align} \lambda _{ab,1}(t| \mathcal{H}_1(t), \vartheta ) = \begin{cases} \exp \{\vartheta _1^\top \mathcal{X}_{ab,1}(\mathcal{H}_1(t),t)\}, & \text{if } (a,b) \in \mathcal{R} \\[5pt] 0, & \text{else.} \end{cases} \end{align}

\begin{align} \lambda _{ab,1}(t| \mathcal{H}_1(t), \vartheta ) = \begin{cases} \exp \{\vartheta _1^\top \mathcal{X}_{ab,1}(\mathcal{H}_1(t),t)\}, & \text{if } (a,b) \in \mathcal{R} \\[5pt] 0, & \text{else.} \end{cases} \end{align}

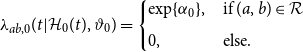

At the same time, the spurious-event intensity $\lambda _{ab,0}(t|\mathcal{H}_{0}(t), \vartheta _0)$ determines the type of measurement error generating spurious events. One may consider the spurious-event process as an overall noise level with a constant intensity, which leads to the following setting:

\begin{align} \lambda _{ab,0}(t|\mathcal{H}_{0}(t), \vartheta _0) = \begin{cases} \exp \{\alpha _0\}, & \text{if } (a,b) \in \mathcal{R} \\[5pt] 0, & \text{else.} \end{cases} \end{align}

The error structure, that is, the intensity of the spurious-event process, can be made more complex, but to ensure identifiability, $\lambda _{ab,0}(t|\mathcal{H}_{0}(t), \vartheta _0)$ cannot depend on the same covariates as $\lambda _{ab,1}(t| \mathcal{H}_1(t), \vartheta _1)$. We return to the discussion of this point below and focus on model (7) for the moment.
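Under the constant specification (7), the expected number of spurious events has a closed form, which gives $\alpha_0$ a direct interpretation. The following short derivation uses only the fact that the expected count of a Poisson process equals its integrated intensity; here $|\mathcal{R}|$ denotes the number of dyads in the risk set and $|\mathcal{T}|$ the length of the observation interval, notation introduced only for this illustration:

\begin{align*} \mathbb{E}\Big[\sum_{(a,b) \in \mathcal{R}} N_{ab,0}(t)\Big] = \sum_{(a,b) \in \mathcal{R}} \int_0^t \exp\{\alpha_0\}\, du = |\mathcal{R}|\, t \exp\{\alpha_0\}. \end{align*}

Over the whole observation period, about $|\mathcal{R}|\,|\mathcal{T}|\exp\{\alpha_0\}$ spurious events are therefore expected, or equivalently $\alpha_0 = \log \big(\mathbb{E}[\text{number of spurious events}] / (|\mathcal{R}|\,|\mathcal{T}|)\big)$.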

2.3 Posterior inference via data augmentation

To draw inference on $\vartheta = \text{vec}(\vartheta _0, \vartheta _1)$, we employ an empirical Bayes approach. Specifically, we sample from the posterior of $\vartheta$ given the observed data. Our approach is thereby comparable to the estimation of standard mixture models (Diebolt & Robert, 1994) and latent competing risk models (Gelfand et al., 2000).

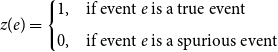

For our proposed method, the observed data are the event stream of all events

![]() $\mathcal{E}$

regardless of being a real or a spurious event. To adequately estimate the model formulated in Section 2, we lack information on whether a given event is spurious or not. We denote this formally as a latent indicator variable

$\mathcal{E}$

regardless of being a real or a spurious event. To adequately estimate the model formulated in Section 2, we lack information on whether a given event is spurious or not. We denote this formally as a latent indicator variable

![]() $z(e)$

for event

$z(e)$

for event

![]() $e \in \mathcal{E}$

:

$e \in \mathcal{E}$

:

\begin{align*} z(e) = \begin{cases} 1,& \text{if event $e$ is a true event} \\[5pt] 0,& \text{if event $e$ is a spurious event} \end{cases} \end{align*}

We write $z = (z(e_1), \ldots, z(e_M))$ for the latent indicators of all events and use $z_m$ as shorthand for $z(e_m)$. Given this notation, we can apply the data augmentation algorithm developed by Tanner & Wong (1987) to sample from the joint posterior distribution of $(Z,\vartheta )$ by iterating between the I Step (Imputation) and the P Step (Posterior), defined as:

\begin{align*} &\text{I Step: Draw $Z^{(d)}$ from the posterior $p(z| \vartheta ^{(d-1)}, \mathcal{E})$; } \\[5pt] &\text{P Step: Draw $\vartheta ^{(d)}$ from the augmented posterior $p(\vartheta | z^{(d)}, \mathcal{E})$.} \end{align*}

This iterative scheme generates a sequence that (under mild conditions) converges to draws from the joint posterior of $(\vartheta,Z)$ and is a particular case of a Gibbs sampler. Each iteration consists of an Imputation and a Posterior step, resembling the Expectation and Maximization steps of the EM algorithm (Dempster et al., 1977). Note, however, that Tanner & Wong (1987) proposed this method with multiple imputations in each I Step and a mixture of all imputed complete-data posteriors in the P Step. We follow Little & Rubin (2002) and Diebolt & Robert (1994) by performing one draw of $Z$ and $\vartheta$ in every iteration, which is a special case of data augmentation. As Noghrehchi et al. (2021) argue, this approach is closely related to the stochastic EM algorithm (Celeux et al., 1996). The main difference between the two approaches lies in the P Step: in the data augmentation algorithm, the current parameters are sampled from the complete-data posterior, whereas in the stochastic EM algorithm they are fixed at its mean (Celeux et al., 1996). Consequently, the data augmentation algorithm is a proper multiple imputation (MI) procedure (Rubin, 1987), while the stochastic EM algorithm is an improper MI procedure (see Noghrehchi et al., 2021). We choose the data augmentation algorithm over the stochastic EM algorithm because Rubin’s combination rules for approximate standard errors can only be applied to proper MI procedures (Noghrehchi et al., 2021).
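The alternation between the two steps, with one draw of $Z$ and one draw of $\vartheta$ per iteration, can be illustrated on a deliberately simplified toy model. The Python sketch below is an assumption for illustration, not the REMSE itself: each dyad has a known, hypothetical weight `x_ab`, true events occur at the constant rate `lam1 * x_ab`, spurious events at the constant rate `lam0`, and both rates receive conjugate Gamma priors, so the P Step is an exact Gamma draw rather than a normal approximation. The I Step uses the competing-risks probability that an observed event stems from the true-event process. The function and parameter names are hypothetical.

```python
import random
from typing import Dict, Hashable, List, Tuple


def toy_data_augmentation(event_dyads: List[Hashable], weights: Dict[Hashable, float],
                          horizon: float, n_iter: int = 200, prior_shape: float = 1.0,
                          prior_rate: float = 1.0, seed: int = 1
                          ) -> List[Tuple[float, float, List[int]]]:
    """Toy data augmentation for a mixture of true and spurious Poisson events.

    Hypothetical toy model (not the REMSE): dyad ab emits true events at rate
    lam1 * weights[ab] and spurious events at rate lam0 on [0, horizon].
    event_dyads lists the dyad of every observed event, true or spurious.
    Returns one (lam1, lam0, z) tuple per iteration.
    """
    rng = random.Random(seed)
    total_weight = sum(weights.values())  # sum of x_ab over the risk set
    n_dyads = len(weights)
    lam1, lam0 = 1.0, 1.0                 # arbitrary starting values
    draws = []
    for _ in range(n_iter):
        # I Step: draw each z_m given the current rates (competing risks).
        z = []
        for dyad in event_dyads:
            rate_true = lam1 * weights[dyad]
            z.append(1 if rng.random() < rate_true / (rate_true + lam0) else 0)
        m_true = sum(z)
        m_spurious = len(z) - m_true
        # P Step: exact conjugate Gamma draws given the completed data.
        # random.gammavariate(alpha, beta) takes beta as a SCALE parameter.
        lam1 = rng.gammavariate(prior_shape + m_true,
                                1.0 / (prior_rate + horizon * total_weight))
        lam0 = rng.gammavariate(prior_shape + m_spurious,
                                1.0 / (prior_rate + horizon * n_dyads))
        draws.append((lam1, lam0, z))
    return draws
```

Averaging `lam1` and `lam0` over the iterations after a burn-in gives rough posterior means. In this toy model the two rates are only identified because the weights `x_ab` vary across dyads; in the REMSE, the covariates of the two intensities play that role.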

In what follows, we give details and derivations for the I and P Steps and then exploit MI to combine a relatively small number of draws from the posterior into point and interval estimates for $\vartheta$.

Imputation step: To obtain samples from $Z= (Z_1, \ldots, Z_M)$ conditional on $\mathcal{E}$ and $\vartheta$, we first decompose the joint density by repeatedly applying the chain rule of probability:

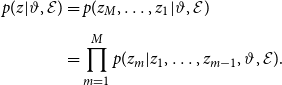

\begin{align} p(z | \vartheta, \mathcal{E}) &= p(z_M, \ldots, z_1 | \vartheta, \mathcal{E}) \nonumber \\[5pt] &= \prod _{m= 1}^M p(z_m | z_1, \ldots, z_{m-1},\vartheta, \mathcal{E}). \end{align}

The distribution of $z_m$ conditional on $z_1, \ldots, z_{m-1}$, $\vartheta$, and $\mathcal{E}$ is:

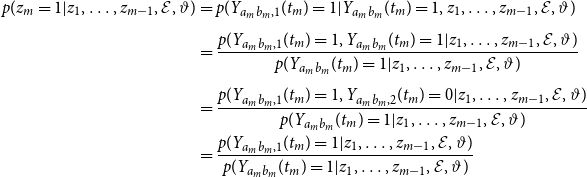

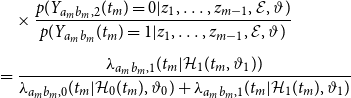

Note that the information in $z_1, \ldots, z_{m-1}$ and $\mathcal{E}$ allows us to calculate $\mathcal{H}_1(t_m)$ as well as $\mathcal{H}_0(t_m)$. By iteratively applying (9) and plugging in $\vartheta ^{(d-1)}$ for $\vartheta$, we can draw samples of $Z= (Z_1, \ldots, Z_M)$ in the I Step through a sequential design that sweeps once from $Z_1$ to $Z_M$. The mathematical derivation of (9) is provided in Appendix B.
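The sequential sweep can be sketched in Python as follows. Because (9) is not reproduced in this text, the sketch assumes the familiar competing-risks form $p(z_m = 1 \mid \cdot) = \lambda_{a_m b_m,1}(t_m) / \big(\lambda_{a_m b_m,1}(t_m) + \lambda_{a_m b_m,0}(t_m)\big)$, with each intensity evaluated on the history implied by $z_1, \ldots, z_{m-1}$. The callables `rate_true` and `rate_spurious` are hypothetical placeholders for $\lambda_{ab,1}(\cdot \mid \mathcal{H}_1, \vartheta_1^{(d-1)})$ and $\lambda_{ab,0}(\cdot \mid \mathcal{H}_0, \vartheta_0^{(d-1)})$.

```python
import random
from typing import Callable, List, Tuple

Event = Tuple[float, str, str]           # (time t_m, actor a_m, actor b_m)
History = List[Event]
Rate = Callable[[str, str, float, History], float]


def impute_indicators(events: List[Event], rate_true: Rate, rate_spurious: Rate,
                      rng: random.Random) -> List[int]:
    """One I Step: a single sweep from z_1 to z_M (events sorted by time).

    rate_true / rate_spurious are hypothetical callables standing in for
    lambda_{ab,1} and lambda_{ab,0} evaluated at the previous parameter draw.
    At least one of the two rates must be positive for every event.
    """
    history_true: History = []      # H_1(t_m): events imputed as true so far
    history_spurious: History = []  # H_0(t_m): events imputed as spurious so far
    z: List[int] = []
    for t, a, b in events:
        lam1 = rate_true(a, b, t, history_true)
        lam0 = rate_spurious(a, b, t, history_spurious)
        p_true = lam1 / (lam1 + lam0)  # assumed competing-risks form of (9)
        z_m = 1 if rng.random() < p_true else 0
        z.append(z_m)
        # The imputed z_m decides which history the event enters.
        (history_true if z_m == 1 else history_spurious).append((t, a, b))
    return z
```

The sweep is sequential rather than parallel because each imputed $z_m$ changes $\mathcal{H}_1$ and $\mathcal{H}_0$, and thereby the endogenous statistics entering the intensities of all later events.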

Posterior step: As already stated, we assume that the true-event and spurious-event intensities are independent. Hence, sampling from the complete-data posteriors of $\vartheta _0$ and $\vartheta _1$ can be carried out independently. In what follows, we therefore only show how to sample from $\vartheta _1| z, \mathcal{E}$; sampling from $\vartheta _0| z, \mathcal{E}$ is possible in the same manner. To derive this posterior, we begin by showing that the likelihood of $\mathcal{E}$ and $z$ with parameter $\vartheta _1$ is the likelihood of the counting process $\mathbf{N}_1(t)$, which resembles that of a Poisson regression. Subsequently, we state all priors to derive the desired complete-data posterior.

Given the $z$ sampled in the previous I Step and $\mathcal{E}$, we reconstruct a unique complete path of $\mathbf{N}_1(t)$ by setting

\begin{align} N_{ab,1}(t) = \sum _{\substack{ e \in \mathcal{E}; \\[5pt] z(e) = 1, \; t(e)\leq t} } \mathbb{I}(a(e) =a, b(e) = b) \; \forall \; (a,b) \in \mathcal{R},\; t\in \mathcal{T}, \end{align}

where $\mathbb{I}(\cdot )$ is an indicator function. The corresponding likelihood of $\mathbf{N}_1(t)$ follows from the property that the element-wise increments of the counting process between any times $s$ and $t$ with $t\gt s$, for arbitrary actors $a$ and $b$ with $(a,b) \in \mathcal{R}$, are Poisson distributed:

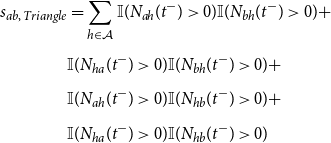

We approximate the integral in (11) by a simple rectangle rule between consecutive observed event times to keep the numerical effort feasible, so that the distributional assumption simplifies to:

\begin{align} Y_{ab,1}(t_m) = N_{ab,1}(t_m) - N_{ab,1}(t_{m-1}) \sim & \text{Pois}\left (\left (t_m - t_{m-1}\right ) \lambda _{ab,1}\left (t_m|\mathcal{H}_1\left (t_m\right ),\vartheta _1\right )\right ) \\[5pt] \;\forall \; m \in \{1, \ldots, M\} &\text{ with }z_m = 1\text{ and }(a,b) \in \mathcal{R}. \nonumber \end{align}
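Equations (10) and (12) translate almost directly into code. The Python sketch below rebuilds $N_{ab,1}(t)$ from the events flagged as true and evaluates the log-likelihood implied by the rectangle approximation. As simplifying assumptions, each interval runs from the previous true event (or time 0) to the current one, event times are distinct, and the hypothetical callable `rate(a, b, t, history)` stands in for $\lambda_{ab,1}(t \mid \mathcal{H}_1(t), \vartheta_1)$.

```python
import math
from collections import Counter
from typing import Callable, Dict, List, Tuple

Event = Tuple[float, str, str]  # (time, a, b); times assumed distinct and sorted
Rate = Callable[[str, str, float, List[Event]], float]


def counting_process(events: List[Event], z: List[int], t: float) -> Dict[Tuple[str, str], int]:
    """N_{ab,1}(t) as in (10); dyads absent from the result have N_{ab,1}(t) = 0."""
    return dict(Counter((a, b) for (s, a, b), z_e in zip(events, z) if z_e == 1 and s <= t))


def rectangle_loglik(events: List[Event], z: List[int],
                     risk_set: List[Tuple[str, str]], rate: Rate) -> float:
    """Poisson log-likelihood of the increments Y_{ab,1}(t_m) under the rectangle rule (12).

    Assumption: each interval runs from the previous true event (or time 0) to the
    current one, and `rate` is a hypothetical stand-in for lambda_{ab,1}.
    """
    true_events = [e for e, z_e in zip(events, z) if z_e == 1]
    loglik, t_prev, history = 0.0, 0.0, []
    for t, a_m, b_m in true_events:
        width = t - t_prev
        for a, b in risk_set:
            mu = width * rate(a, b, t, history)  # Poisson mean of Y_{ab,1}(t_m)
            loglik -= mu
            if (a, b) == (a_m, b_m):              # Y = 1 for the observed dyad, else 0
                loglik += math.log(mu)            # log(Y!) = 0 because Y is 0 or 1
        history.append((t, a_m, b_m))             # H_1 for the next interval
        t_prev = t
    return loglik
```

Because every dyad in $\mathcal{R}$ contributes a term in every interval, the data set behind this likelihood has $|\mathcal{R}|$ rows per true event, which is why it can be fitted as a Poisson regression with log-offset $\log(t_m - t_{m-1})$.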

We specify the priors for $\alpha _1$ and $\theta _1$ separately and independently of one another. The prior for $\alpha _1$ was already stated in (4). Through a restricted maximum likelihood approach, we estimate the corresponding hyperparameter $\gamma _1$ such that it maximizes the marginal likelihood of $z$ and $\mathcal{E}$ given $\gamma _1$ (for additional information on this estimation procedure and general empirical Bayes theory for penalized splines, see Wood, 2011, 2020). Regarding the linear coefficients $\theta _1$, we assume flat priors, that is, $p(\theta _1) \propto k$ for a constant $k$, indicating no prior knowledge.

In the last step, we apply Wood’s (2006) result that, for large samples, the posterior distribution of $\vartheta _1$ under likelihoods resulting from distributions in the exponential family, such as the Poisson distribution in (12), can be approximated through:

\begin{align} \vartheta _1 | z, \mathcal{E} \;\overset{a}{\sim}\; \mathcal{N}\left (\hat{\vartheta }_1, \mathbf{V}_1\right ). \end{align}

Here, $\hat{\vartheta }_1$ denotes the penalized maximum likelihood estimator resulting from (12) with the extended penalty matrix $\tilde{ \mathbf{S}}_1$ defined by

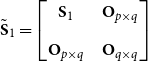

\begin{align*} \tilde{ \mathbf{S}}_1 = \begin{bmatrix} \mathbf{S}_1\;\;\;\; & \mathbf{O}_{p \times q} \\[5pt] \mathbf{O}_{q \times p}\;\;\;\; & \mathbf{O}_{q \times q} \end{bmatrix} \end{align*}

with $\mathbf{O}_{p \times q} \in \mathbb{R}^{p\times q}$ for $p,q \in \mathbb{N}$ denoting a matrix filled with zeroes (and analogously for the other zero blocks) and $\mathbf{S}_1$ defined in accordance with (4). Here, for $\vartheta _1 = \text{vec}(\alpha _1, \theta _1)$, $p$ is the length of $\alpha _1$ and $q$ that of $\theta _1$. The penalized likelihood is then given by:

which is equivalent to a generalized additive model; hence, we refer to Wood (2017) for a thorough treatment of the computational methods needed to find $\hat{\vartheta }_1$. The variance matrix in (13) has the following structure:

Values for $\gamma _1$ and $\hat{\vartheta }_1$ can be extracted from the estimation procedure that maximizes (14) with respect to $\vartheta _1$, while $\mathcal{X}_1 \in \mathbb{R}^{(M |\mathcal{R}|)\times (p + q)}$ is a matrix whose rows are given by $\mathcal{X}_{ab,1}(\mathcal{H}_1(t_m),t_{m-1})$ as defined in (6) for $m \in \{ 1, \ldots, M\}$ and $(a,b) \in \mathcal{R}$. Similarly, $\mathbf{W}_1 = \text{diag}\big (\lambda _{ab,1}(t| \mathcal{H}_1(t), \vartheta _1); t \in \{ t_1, \ldots, t_M\}, (a,b) \in \mathcal{R}\big )$ is a diagonal matrix.
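The zero padding that turns $\mathbf{S}_1$ into $\tilde{\mathbf{S}}_1$ is easy to get wrong dimensionally: for the blocks to fit, the upper-right zero block is $p \times q$ and the lower-left one $q \times p$. A minimal pure-Python sketch, with matrices stored as lists of rows; the function name `extended_penalty` is hypothetical:

```python
from typing import List

Matrix = List[List[float]]


def extended_penalty(S1: Matrix, q: int) -> Matrix:
    """Embed the p x p spline penalty S1 in a (p + q) x (p + q) matrix.

    The q linear coefficients theta_1 receive zero rows and columns,
    i.e. they are left unpenalized, matching their flat prior.
    """
    p = len(S1)
    if any(len(row) != p for row in S1):
        raise ValueError("S1 must be square")
    top = [list(row) + [0.0] * q for row in S1]  # [S1, O_{p x q}]
    bottom = [[0.0] * (p + q) for _ in range(q)]  # [O_{q x p}, O_{q x q}]
    return top + bottom
```

For example, a $2 \times 2$ penalty combined with one linear coefficient yields a $3 \times 3$ matrix whose last row and column are zero.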

For the P Step, we now plug in $z^{(d)}$ for $z$ in (13) to obtain $\hat{\vartheta }_1$ and $\mathbf{V}_1$ by carrying out the corresponding complete-data analysis, and then draw $\vartheta _1^{(d)}$ from the resulting normal approximation. If no spurious events exist, the complete estimation can be carried out in a single P Step. In Algorithm 1, we summarize how to generate a sequence of random variables according to the data augmentation algorithm.
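Once $\hat{\vartheta}_1$ and $\mathbf{V}_1$ are available from the complete-data fit, the P Step itself amounts to one multivariate normal draw from the approximation in (13). A pure-Python sketch via a Cholesky factor, assuming $\mathbf{V}_1$ is symmetric positive definite (in practice one would use a numerical library); the names `cholesky` and `draw_p_step` are hypothetical:

```python
import math
import random
from typing import List

Matrix = List[List[float]]


def cholesky(V: Matrix) -> Matrix:
    """Lower-triangular L with L L^T = V (V symmetric positive definite)."""
    n = len(V)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(V[i][i] - s)
            else:
                L[i][j] = (V[i][j] - s) / L[j][j]
    return L


def draw_p_step(theta_hat: List[float], V: Matrix, rng: random.Random) -> List[float]:
    """One draw from N(theta_hat, V), computed as theta_hat + L u with u ~ N(0, I)."""
    L = cholesky(V)
    u = [rng.gauss(0.0, 1.0) for _ in theta_hat]
    return [m + sum(L[i][k] * u[k] for k in range(i + 1)) for i, m in enumerate(theta_hat)]
```

Drawing from the normal approximation, rather than keeping $\hat{\vartheta}_1$ fixed, is exactly what distinguishes this P Step from the stochastic EM variant discussed above.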

Multiple imputation: One could use the data augmentation algorithm to obtain a large number of samples from the joint posterior of $(\vartheta, Z)$ and calculate empirical percentiles for any type of interval estimate. In our case, however, this endeavor would be very time-consuming or even infeasible. To circumvent this, we rely on multiple imputation, which Rubin (1976) proposed as a method to approximate the posterior mean and variance. Conveniently, the method is especially successful when the complete-data posterior is multivariate normal, as is the case in (13); thus, only a small number of draws is needed to obtain good approximations (Little & Rubin, 2002). Specifically, we apply the laws of iterated expectation and total variance:

\begin{align} \mathbb{E}(\vartheta |\mathcal{E}_{\text{obs}}) &= \mathbb{E}\big (\mathbb{E}(\vartheta | Z, \mathcal{E}_{\text{obs}}) \,\big |\, \mathcal{E}_{\text{obs}}\big ), \\[5pt] \text{Var}(\vartheta |\mathcal{E}_{\text{obs}}) &= \mathbb{E}\big (\text{Var}(\vartheta | Z, \mathcal{E}_{\text{obs}}) \,\big |\, \mathcal{E}_{\text{obs}}\big ) + \text{Var}\big (\mathbb{E}(\vartheta | Z, \mathcal{E}_{\text{obs}}) \,\big |\, \mathcal{E}_{\text{obs}}\big ). \end{align}

Next, we approximate (15) and (16) by Monte Carlo integration with $K$ samples from the posterior, obtained via the data augmentation scheme summarized in Algorithm 1 after a burn-in period of $D$ iterations:

\begin{align} \mathbb{E}(\vartheta |\mathcal{E}_{\text{obs}}) \approx \frac{1}{K} \sum _{k = D + 1}^{D+K} \hat{\vartheta }^{(k)} = \bar{\vartheta } \end{align}

\begin{align} \text{Var}(\vartheta |\mathcal{E}_{\text{obs}}) &\approx \frac{1}{K} \sum _{k = D + 1}^{D+K} \mathbf{V}^{(k)} + \frac{K+1}{K(K-1)}\sum _{k = D + 1}^{D+K} \left (\hat{\vartheta }^{(k)} - \bar{\vartheta }\right ) \left (\hat{\vartheta }^{(k)} - \bar{\vartheta }\right )^\top \nonumber \\[5pt] &= \bar{\mathbf{V}} + \bar{\mathbf{B}}, \end{align}

where $\hat{\vartheta }^{(k)} = \text{vec}\left (\hat{\vartheta }^{(k)}_0, \hat{\vartheta }^{(k)}_1\right )$ contains the complete-data posterior means from the $k$th sample and $\mathbf{V}^{(k)} = \text{diag} \big ( \mathbf{V}^{(k)}_0, \mathbf{V}^{(k)}_1\big )$ is the block-diagonal matrix of the corresponding variances defined in (13). Thus, $\bar{\mathbf{V}}$ is the average within-imputation variance, and $\bar{\mathbf{B}}$ is the between-imputation variance inflated by the factor $(K+1)/K$ to account for the finite number of draws. We can therefore construct point and interval estimates from relatively few draws of the posterior based on a multivariate normal reference distribution (Little & Rubin, 2002).
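Equations (17) and (18) are Rubin’s combining rules. The sketch below implements them in pure Python for $K$ retained draws, each consisting of a complete-data posterior mean vector and covariance matrix. It assumes the burn-in draws have already been discarded; the name `combine_imputations` is hypothetical.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def combine_imputations(means: List[List[float]], covs: List[Matrix]) -> Tuple[List[float], Matrix]:
    """Rubin's rules: pooled mean (17) and total variance V_bar + B_bar (18)."""
    K = len(means)
    if K < 2 or len(covs) != K:
        raise ValueError("need K >= 2 draws with matching covariance matrices")
    n = len(means[0])
    theta_bar = [sum(m[i] for m in means) / K for i in range(n)]
    # Within-imputation variance: average of the complete-data covariances.
    v_bar = [[sum(c[i][j] for c in covs) / K for j in range(n)] for i in range(n)]
    # Between-imputation variance with the factor (K + 1) / (K (K - 1)) from (18).
    factor = (K + 1) / (K * (K - 1))
    b_bar = [[factor * sum((m[i] - theta_bar[i]) * (m[j] - theta_bar[j]) for m in means)
              for j in range(n)] for i in range(n)]
    total = [[v_bar[i][j] + b_bar[i][j] for j in range(n)] for i in range(n)]
    return theta_bar, total
```

Under the normal reference distribution, an approximate 95% interval for the $i$th coefficient is then `theta_bar[i] ± 1.96 * sqrt(total[i][i])`.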

3. Simulation study

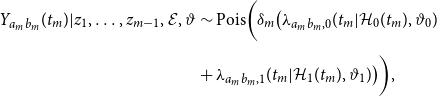

We conduct a simulation study to explore the performance of the REMSE compared to a REM, which assumes no spurious events, in two scenarios: a regime in which the measurement error is correctly specified in the REMSE and one in which spurious events are nonexistent.

Simulation design: In $S = 1{,}000$ runs, we simulate event data between $n = 40$ actors under known true and spurious intensity functions in each example. For the exogenous covariates, we generate categorical and continuous actor-specific covariates, which we transform to the dyad level by checking for equivalence in the categorical case and computing the absolute difference in the continuous case. Generally, we simulate both counting processes $\mathbf{N}_1(t)$ and $\mathbf{N}_0(t)$ separately and stop once $|\mathcal{E}_1| = 500$.

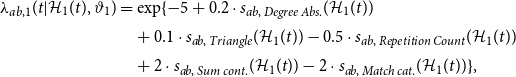

The data-generating process for true events is identical in both cases and given by:

\begin{align} \lambda _{ab,1}(t|\mathcal{H}_1(t), \vartheta _1) &= \exp \{-5 + 0.2\cdot s_{ab,\;Degree\;Abs.}(\mathcal{H}_1(t)) \\[2pt] & \quad + 0.1\cdot s_{ab,\;Triangle}(\mathcal{H}_1(t)) - 0.5\cdot s_{ab,\;Repetition \; Count}(\mathcal{H}_1(t)) \nonumber \\[2pt] & \quad + 2\cdot s_{ab,\;Sum \; cont.}(\mathcal{H}_1(t)) - 2\cdot s_{ab,\;Match \; cat.}(\mathcal{H}_1(t)) \}, \nonumber \end{align}

where we draw the continuous exogenous covariate (cont.) from a standard Gaussian distribution and the categorical exogenous covariate (cat.) from a categorical distribution with seven equally likely outcomes. Mathematical definitions of the endogenous and exogenous statistics are given in Appendix A. In contrast, the spurious-event intensity differs across regimes, resulting in correctly specified (DG 1) and nonexistent (DG 2) measurement error:

Given these intensities, we follow DuBois et al. (2013) to sample the events.
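The sampling step can be illustrated with a competing-risks sampler: the waiting time to the next event is exponential with rate equal to the sum of all dyadic intensities, and the dyad is then drawn with probability proportional to its intensity. This is a hedged sketch, not necessarily the exact procedure of DuBois et al.; it assumes intensities stay constant between events and uses undirected dyads. The names `simulate_events` and `example_rate` are hypothetical, and `example_rate` keeps only the intercept and the repetition-count term of (19).

```python
import itertools
import math
import random
from typing import Callable, List, Tuple

Event = Tuple[float, int, int]  # (time, a, b) with a < b


def simulate_events(n_actors: int, n_events: int,
                    rate: Callable[[int, int, List[Event]], float],
                    seed: int = 0) -> List[Event]:
    """Competing-risks sampler; intensities are held constant between events."""
    rng = random.Random(seed)
    dyads = list(itertools.combinations(range(n_actors), 2))  # undirected risk set
    t, events = 0.0, []
    while len(events) < n_events:
        rates = [rate(a, b, events) for a, b in dyads]   # intensities given the history
        t += rng.expovariate(sum(rates))                  # waiting time ~ Exp(total rate)
        a, b = rng.choices(dyads, weights=rates, k=1)[0]  # dyad drawn proportional to rate
        events.append((t, a, b))
    return events


def example_rate(a: int, b: int, history: List[Event]) -> float:
    """Hypothetical intensity with only the intercept and repetition term of (19)."""
    repetitions = sum(1 for _, x, y in history if (x, y) == (a, b))
    return math.exp(-5.0 - 0.5 * repetitions)
```

Recomputing every dyadic intensity after each event is simple but costs $O(|\mathcal{R}|)$ statistic evaluations per event; with $n = 40$ actors this is still manageable, and spurious events could be generated the same way with their own intensity.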

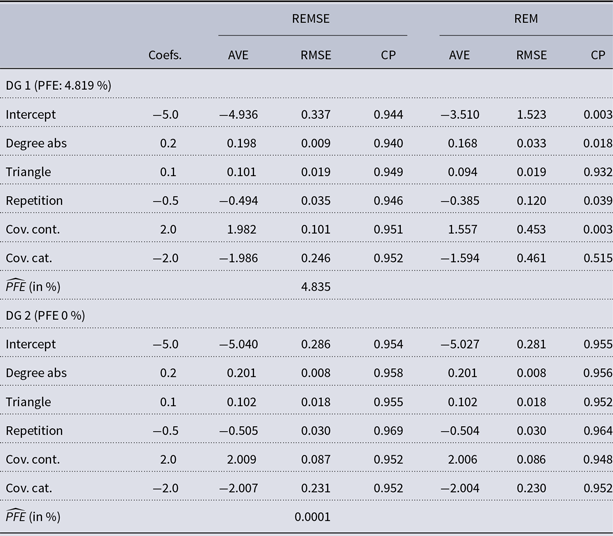

Table 1. Results of the simulation study for the REMSE and REM under the two data-generating processes (DG 1, DG 2). For each DG and covariate, we report the AVE (average estimate), RMSE (root-mean-squared error), and CP (coverage probability). The last row reports the average Percentage of False Events (PFE) for each DG

Although the method is estimated in a Bayesian framework, we can still assess the frequentist properties of the REMSE and REM estimates. In particular, Table 1 presents the average point estimate (AVE), the root-mean-squared error (RMSE), and the coverage probability (CP). The AVE of a specific coefficient is the average of the posterior means across runs:

\begin{align*} \text{AVE} = \frac{1}{S} \sum _{s = 1}^S \bar{\vartheta }_s, \end{align*}

where $\bar{\vartheta }_s$ is the posterior mean (17) of the $s$th simulation run. To assess the average magnitude of the estimation error across runs, we further report the RMSE of estimating the coefficient vector $\vartheta$:

\begin{align*} \text{RMSE} = \sqrt{\frac{1}{S} \sum _{s = 1}^S\left (\bar{\vartheta }_s - \vartheta \right )^\top \left (\bar{\vartheta }_s - \vartheta \right )}, \end{align*}

where $\vartheta$ is the ground-truth coefficient vector defined above. Finally, we assess the adequacy of the uncertainty quantification by computing the percentage of runs in which the true parameter lies within the $95\%$ confidence interval based on a multivariate normal posterior with mean and variance given in (17) and (18). According to standard statistical theory for interval estimates, this coverage probability should be close to the nominal $95\%$ (Casella & Berger, 2001).
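For a single coefficient, the three summaries can be computed as in the sketch below. It assumes each run supplies the pooled posterior mean (17) and pooled variance (18) of that coefficient and uses the normal quantile 1.959964 for a 95% interval; for a scalar, the RMSE formula above reduces to the root of the mean squared deviation. The function name is hypothetical.

```python
import math
from typing import Dict, List


def simulation_summary(post_means: List[float], post_vars: List[float],
                       truth: float, z: float = 1.959964) -> Dict[str, float]:
    """AVE, RMSE and coverage probability (CP) of one coefficient over S runs."""
    S = len(post_means)
    ave = sum(post_means) / S
    rmse = math.sqrt(sum((m - truth) ** 2 for m in post_means) / S)
    covered = sum(
        1 for m, v in zip(post_means, post_vars)
        if m - z * math.sqrt(v) <= truth <= m + z * math.sqrt(v)
    )
    return {"AVE": ave, "RMSE": rmse, "CP": covered / S}
```

A CP well below 0.95, as reported for the REM under DG 1, indicates intervals that are too narrow, too far off, or both.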

Results: DG 1 shows how the estimators behave if the true and spurious intensities are correctly specified. The results in Table 1 suggest that the REMSE can recover the coefficients used in the simulation. The REM, in contrast, yields strongly biased estimates: not only are its average estimates biased, but we also observe high RMSEs and violated CPs.

In the second simulation, we assess the performance of the spurious-event model when it is superfluous. In particular, we investigate what happens when there are no spurious events in the data, that is, all events are real and the intensity of $N_{ab,0}(t)$ is zero in DG 2. Unsurprisingly, the REM allows for valid and unbiased inference under this regime. But our stochastic estimation algorithm also proves robust, as in most runs the imputed indicators classified all events as true at some point during sampling. In other words, the REMSE correctly detects the absence of spurious events and is unbiased if none occur in the observed data.

For both DG 1 and DG 2, the PFE estimated by the REMSE closely matches the observed one, whereas the REM, which constrains it to zero, severely underestimates the PFE in DG 1. In sum, the simulation study offers evidence that the REMSE improves our ability to model relational event data in the presence of measurement error while being equivalent to a standard REM when spurious events do not exist in the data.

4. Application

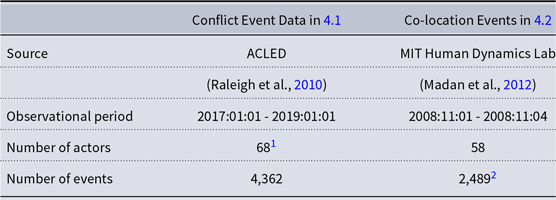

Next, we apply the REMSE to two real-world data sets motivated by the types of event data discussed in the introduction, namely human-coded conflict events in the Syrian civil war and co-location event data generated from the Bluetooth devices of students in a university dorm. Information on the data sources, observational periods, and numbers of actors and events is summarized in Table 2. Following the above presentation, we focus on modeling the true-event intensity of the REMSE and limit the spurious-event intensity to a constant term. Covariates are thus only specified for the true-event intensity. In our applications, the samples drawn according to Algorithm 1 converged to a stationary distribution within the first 30 iterations. To obtain the reported point and interval estimates via MI, we sampled 30 additional draws. Due to space restrictions, we keep our discussions of the substantive background and results of both applications comparatively short.

Table 2. Descriptive information on the two analyzed data sets

4.1 Conflict events in the Syrian civil war

In the first example, we model conflict events between different belligerents as driven by both exogenous covariates and endogenous network mechanisms. The exogenous covariates are selected based on the literature on inter-rebel conflict. We thus include dummy variables indicating whether two actors share a common ethno-religious identity or receive material support from the same external sponsor, as these factors have previously been found to reduce the risk of conflict (Popovic, 2018; Gade et al., 2019). Additionally, we include binary indicators of two actors both being state forces or both being rebel groups, as conflict may be less likely in the former but more likely in the latter case (Dorff et al., 2020).

Furthermore, we model endogenous processes in the formation of the conflict event network and consider four statistics for this purpose. First, we account for repeated fighting between two actors by including both the count of their previous interactions and a binary indicator of repetition, which takes the value 1 if that count is at least 1. We include this additional endogenous covariate because the onset of conflict between two actors arguably carries much more information than subsequent fighting. Second, we include the absolute difference in the degrees of $a$ and $b$ to capture whether actors with a high level of previous activity tend to engage each other or, instead, tend to fight less established groups to pre-empt their rise to power. Finally, we model hyperdyadic dependencies by including a triangle statistic that captures the combat network’s tendency towards triadic closure.
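A sketch of the four endogenous statistics for an undirected conflict network follows. The exact definitions are given in Appendix A and may differ; here we assume that an actor’s degree is its number of past events (counting repeated fights), that the triangle statistic counts third actors $h$ that have previously fought both $a$ and $b$, and that there are no self-loops. The function name `endogenous_stats` is hypothetical.

```python
from collections import Counter
from typing import Dict, List, Set, Tuple

Event = Tuple[float, str, str]  # (time, a, b); direction is ignored


def endogenous_stats(a: str, b: str, history: List[Event]) -> Dict[str, float]:
    """Repetition, degree and triangle statistics for the dyad {a, b}."""
    pairs = [frozenset((x, y)) for _, x, y in history]
    repetition_count = pairs.count(frozenset((a, b)))
    degree = Counter(actor for pair in pairs for actor in pair)  # past events per actor
    partners: Dict[str, Set[str]] = {}
    for pair in pairs:
        x, y = tuple(pair)
        partners.setdefault(x, set()).add(y)
        partners.setdefault(y, set()).add(x)
    common = partners.get(a, set()) & partners.get(b, set())  # shared past opponents
    return {
        "repetition_count": repetition_count,
        "repetition": float(repetition_count >= 1),
        "degree_abs_diff": abs(degree[a] - degree[b]),
        "triangle": len(common),
    }
```

In the REMSE these statistics are computed on $\mathcal{H}_1(t)$ only, so they change whenever the I Step reclassifies a past event.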

Given that fighting should be a relatively obvious event, one may wonder why conflict event data may include spurious observations. The reason is that common data collection efforts on armed conflict cannot rely on direct observation but instead use news and social media reporting. Spurious events thus occur when these sources report fighting that did not actually take place as such. In armed conflict, this can happen for multiple reasons. For instance, pro-government media may falsely report that state security forces engaged with and defeated rebel combatants to boost morale and convince audiences that the government is winning. Social media channels aligned with a specific rebel faction may similarly claim victories by its own forces or, less obviously, battles in which a rival faction fought and suffered defeat against another group. In war-time settings, journalists may also be unable or unwilling to enter conflict areas and thus base their reporting on local contacts, rumors, or hearsay. Finally, spurious observations may arise when reported fighting did occur but was attributed to the wrong belligerent faction at some point in the data collection process. From a substantive perspective, it is thus advisable to check for the influence of spurious events when analyzing these data.

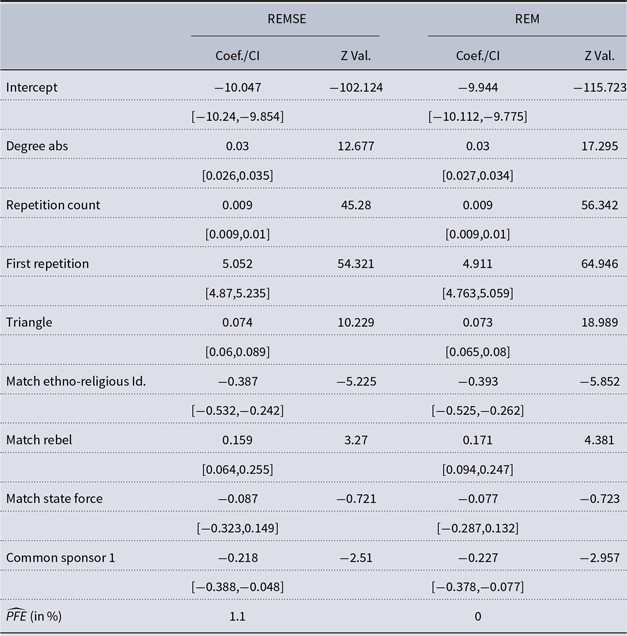

Table 3 accordingly presents the results of a REM and the REMSE. Beginning with the exogenous covariates, belligerents are found to be less likely to fight each other when they share an ethno-religious identity or receive resources from the same external sponsor. In contrast, there is no support for the idea that state forces fight each other less than they fight rebels in this type of internationalized civil war, whereas different rebel groups are more likely to engage in combat against one another. Furthermore, we find evidence that endogenous processes affect conflict event incidence. The binary repetition indicator exhibits the strongest effect across all covariates, implying that two actors are more likely to fight each other if they have done so in the past. As indicated by the positive coefficient of the repetition count, the dyadic intensity further increases the more often they have previously fought one another. The absolute degree difference also exhibits a positive effect, meaning that fighting is more likely between groups with different levels of previous activity. Finally, the positive coefficient of the triangle statistic suggests that triadic closure exists even in a fighting network. This may indicate that belligerents engage in multilateral conflict, attacking the enemy of their enemy, in order to preserve the existing balance of capabilities or change it in their favor (Pischedda, 2018).

Table 3. Combat events in the Syrian civil war: estimated coefficients with confidence intervals in brackets (first column of each model) and Z values (second column of each model). The first two columns report the REMSE results and the last two columns the REM results. The last row reports the estimated average Percentage of False Events (PFE)

This discussion holds for the results of both the REM and the REMSE. Their point estimates are generally quite similar in this application, suggesting that spurious events do not substantively affect the empirical results in this case. That being said, there are two noticeable differences between the two models. First, the coefficient estimates for the binary indicator of belligerents having fought before differ between the two models. In the REM, this coefficient implies a multiplicative change in the event intensity of $\exp \{4.911\}=135.775$, while in the REMSE, it is estimated at $\exp \{5.059\}=157.433$. While both models thus identify this effect as positive and significant, it is found to be stronger when spurious events are accounted for. Second, the two models differ in how precise they deem the estimates to be. This difference is clearest in their respective Z values, which are always farther away from zero for the REM than for the REMSE. Taken together, these results nonetheless show that spurious events have only a small influence on the substantive results in this application. The samples from the latent indicators $z$ also indicate that, on average, only approximately $1\%$ of the observations, about 50 events, are classified as spurious. These findings offer some reassurance for the increasing use of event data to study armed conflict.
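The arithmetic behind these comparisons can be sketched in a few lines of Python. The sketch assumes an intensity that is log-linear in the covariates, so that $\exp\{\theta\}$ is the factor by which the intensity changes when a covariate increases by one unit. The helper `average_pfe` and its coding of $z$ (1 = event treated as true, 0 = treated as spurious) are hypothetical illustrations of how an average percentage of false events could be summarized from posterior draws; the toy draws are synthetic and are not the samples underlying Table 3.

```python
import math
from typing import Sequence


def multiplicative_effect(theta: float) -> float:
    """Factor by which the event intensity changes for a one-unit increase in
    a covariate, assuming an intensity that is log-linear in the covariates."""
    return math.exp(theta)


def average_pfe(z_draws: Sequence[Sequence[int]]) -> float:
    """Average percentage of false events over posterior draws of z.

    Hypothetical coding: z_draws[s][m] == 1 if event m is treated as true in
    draw s, and 0 if it is treated as spurious.
    """
    shares = [1.0 - sum(draw) / len(draw) for draw in z_draws]
    return 100.0 * sum(shares) / len(shares)


# Binary repetition coefficients reported for the Syrian civil war application.
theta_rem, theta_remse = 4.911, 5.059
print(round(multiplicative_effect(theta_rem), 3))    # 135.775
print(round(multiplicative_effect(theta_remse), 3))  # 157.433
# Ratio of the two effects, exp(5.059 - 4.911): about 16% stronger in the REMSE
print(round(multiplicative_effect(theta_remse - theta_rem), 2))  # 1.16

# Synthetic draws: one of four events spurious in the first draw, none in the second.
print(average_pfe([[1, 1, 1, 0], [1, 1, 1, 1]]))  # 12.5
```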

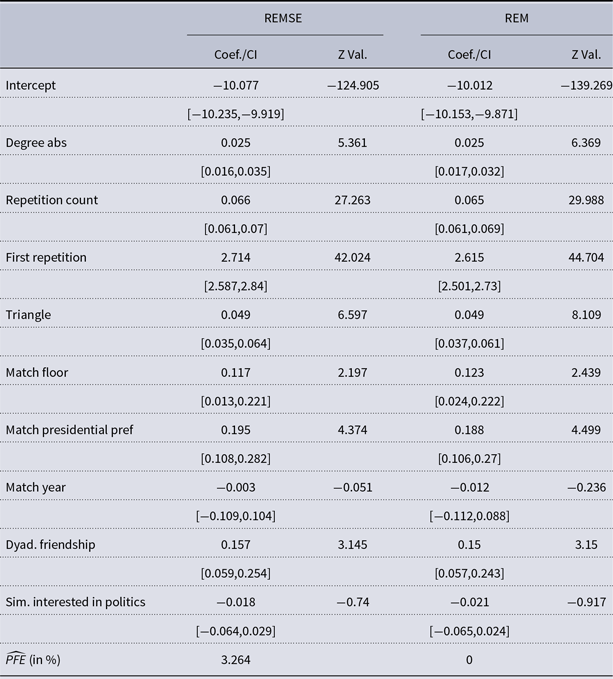

4.2 Co-location events in university housing

In our second application, we use a subset of the co-location data collected by Madan et al. (2012) to model when students within an American university dorm interact with each other. These interactions are deduced from regular (every 6 minutes) scans of proximity via the Bluetooth signals of students' mobile phones. Madan et al. (2012) used questionnaires to collect a host of information from the participating students. This information allows us to account for both structural and more personal exogenous predictors of social interaction. We thus include binary indicators of whether two students are in the same year of college or live on the same floor of the dorm to account for the expected homophily of social interactions (McPherson et al., 2001). In addition, we incorporate whether two actors consider each other close friends (Footnote 4). Given that the data were collected around a highly salient political event, the 2008 US presidential election, we also include a dummy variable indicating whether two students share the same presidential preference and a variable measuring their similarity in terms of interest in politics (Butters & Hare, 2020). Finally, we include the same endogenous network statistics as in section 4.1. These covariates allow us to capture the intuitions that individuals tend to socialize with people they have interacted with before, whose popularity differs from their own, and with whom they share more common friends (Rivera et al., 2010). Compared to the first application, the sources of spurious events are more evident here: students may be physically close to, and even face, each other without actually interacting, for example, when riding an elevator, queuing in a store, or studying in a common space.
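How raw proximity scans and questionnaire answers might be turned into model inputs can be sketched as follows. This is a hypothetical illustration, not the preprocessing of Madan et al. (2012): the scan record format, the decision to treat a detection by either phone within the same scan as one undirected event, the `Student` fields, and the similarity measure for political interest (negative absolute difference on an assumed ordinal scale) are all our assumptions.

```python
from dataclasses import dataclass
from typing import Dict, List, Set, Tuple


@dataclass(frozen=True)
class Student:
    year: int                 # year of college
    floor: int                # dorm floor
    preferred_candidate: str  # presidential preference (hypothetical label)
    political_interest: int   # hypothetical ordinal scale, e.g. 1-5


Scan = Tuple[int, str, str]  # (scan time in minutes, scanning student, detected student)


def scans_to_events(scans: List[Scan]) -> List[Tuple[int, str, str]]:
    """Turn Bluetooth detections into undirected co-location events.

    A pair detected within the same scan, by either phone, yields one event
    at that scan time; self-detections are ignored.
    """
    seen: Set[Tuple[int, str, str]] = set()
    for t, i, j in scans:
        if i == j:
            continue
        a, b = sorted((i, j))
        seen.add((t, a, b))
    return sorted(seen)


def dyadic_covariates(s1: Student, s2: Student, close_friends: bool) -> Dict[str, float]:
    """Exogenous dyadic covariates of the kind described in the text."""
    return {
        "same_year": float(s1.year == s2.year),
        "same_floor": float(s1.floor == s2.floor),
        "close_friends": float(close_friends),
        "same_preference": float(s1.preferred_candidate == s2.preferred_candidate),
        # Assumed similarity measure: values closer to zero mean more similar interest.
        "interest_similarity": float(-abs(s1.political_interest - s2.political_interest)),
    }


scans = [(0, "A", "B"), (0, "B", "A"), (6, "A", "C"), (6, "A", "A")]
print(scans_to_events(scans))  # [(0, 'A', 'B'), (6, 'A', 'C')]
```

Note that such a pipeline records co-location rather than interaction, which is precisely why spurious events can arise in these data.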

We present the results in Table 4. Beginning with the exogenous covariates, we find that the observed interactions tend to be homophilous: students have social encounters with people who live on the same floor, whom they consider friends, and with whom they share a political preference. In contrast, neither a common year of college nor a similar level of political interest is found to have a statistically significant effect on student interactions. At the same time, these results indicate that the social encounters are affected by endogenous processes. Having had a previous true event is the main driver of the corresponding intensity, with a very strong positive effect. Individuals who have socialized before are thus more likely to socialize again, an effect that, as indicated by the repetition count, increases with the number of previous interactions. Turning to the other endogenous covariates, the result for the absolute degree difference suggests that students $a$ and $b$ are more likely to engage with each other the more their levels of previous activity differ, indicating that, for example, popular individuals attract attention from less popular ones. As is usual for most social networks (Newman & Park, 2003), the triangle statistic is positive, meaning that students “socialize” with the friends of their friends.

Table 4. Co-location events in university housing: estimated coefficients with confidence intervals in brackets (first column of each model) and Z values (second column of each model). The first two columns report the REMSE results and the last two columns the REM results. The last row reports the estimated average Percentage of False Events (PFE)

As in the first application, the REM and REMSE results presented in Table 4 are closely comparable but also show some differences. Again, the effect estimate for binary repetition is higher in the REMSE, at $\exp \{2.715\}=15.105$, than in the REM ($\exp \{2.615\}=13.667$), while the Z values and confidence intervals obtained in the REM are substantially smaller than those in the REMSE. In the co-location data, too, the results are thus not driven by the presence of spurious events, although accounting for these observations does affect the results to some, albeit rather negligible, extent. This is the case even though the average percentage of spurious events is comparatively high here, at $3\%$. That leaving out the corresponding 81 events yielded similar estimates may indicate that spurious events were mainly observed at the periphery of the interaction network and hardly affected the behavior in the network's core. More generally, these results may assuage concerns over the reliability of sensor data (see Elmer et al., 2019).

5. Discussion

In summary, this paper extends the relational event framework to handle spurious events. In doing so, it offers applied researchers analyzing instantaneous interaction data a useful tool to explicitly account for measurement error induced by spurious events or to investigate the robustness of their results against this type of error. Our proposed method thus controls for one specific type of measurement error. The simulation study showed that our approach can detect such false events and still yields correct results when none are present. Still, we want to emphasize that numerous other types of measurement error, which we disregard in this article, may be present when analyzing relational events. For instance, true events may be missing. Such false negatives, for example, unreported conflict events between different belligerents, are difficult to tackle because of a lack of information.