1. INTRODUCTION

During the past decade, embodied and phenomenological-based approaches have become increasingly relevant in the movement and computing field, especially for those research studies involving artistic practice. The performer’s know-how, somatic knowledge and first-person inquiry have significantly informed user-centred design strategies, thereby paving the way for a transdisciplinary approach to wearable technologies. Especially in sonic interaction design (SID), the recent interest in embodied knowledge, stemming from music and gesture studies, has provided important new insights for rethinking mediation technology. Embodied theories support the idea that there is no real separation between mental processes and corporeal activity, describing music cognition in terms of an ‘action-perception coupling system’. According to this perspective, the physical effort, the feeling of presence and holistic involvement during the interaction seem to be essential features for designing effective sound feedback. While wearable technologies have traditionally been used to bring interactivity into music performance, recent embodied approaches consider sound interaction as a way of enhancing the performer’s sensorimotor learning, thereby providing a real somatic knowledge (Giomi 2020a).

As such, SID has increasingly adopted protocols and methodologies from movement sonification. In movement sonification, interactive sound feedback is used as a means of objectively representing motion through the auditory channel. The goal of sonification is to provide meaningful information about movement perception that can eventually enhance bodily awareness, control and knowledge. It should be noted that the term ‘sonification’ has only recently been introduced in artistic-oriented research (Françoise, Candau, Fdili Alaoui and Schiphorst 2017; Niewiadomski, Mancini, Cera, Piana, Canepa and Camurri 2019). This new trend highlights a fundamental shift of perspective from musical interactivity per se to somatic knowledge provided by interactive movement sonification, which can be considered as a major somatic-sonification turn. According to this perspective, the relation between sound and motion has to be perceptually meaningful. This means that ‘the moving source of sound’ should express particular features of the represented gesture (Leman 2008: 236), thereby stimulating an embodied involvement with interaction. The way in which meaningful interactions can be designed reflects our culturally informed representations (Leman, Lesaffre and Maes 2017) and relies on the fact that gesture and sound share a common multimodal perceptive ground; for example, the fact that ‘we mentally imitate the sound-producing action when we attentively listen to music’ while ‘we may image actively tracing or drawing the contours of the music as it unfolds’ (Godøy 2003: 318).
Over the years, general design methods for creating meaningful movement–sound relationships in interactive systems have been proposed to achieve what dance tech pioneer Robert Wechsler called gesture-to-sound ‘compliance’ (Wechsler 2006): ‘coarticulation’ (Godøy 2014); ‘mapping through listening’ (Bevilacqua, Schnell, Françoise, Boyer, Schwarz and Caramiaux 2017); ‘model-based sonification’ (Giomi and Leonard 2020); and ‘multidimensional gesture-timbre mapping’ (Zbyszyński, Di Donato, Visi and Tanaka 2021).
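To make the basic idea of movement sonification more concrete, the following minimal Python sketch shows the simplest case, a direct parameter mapping in which a movement feature drives pitch and another drives loudness. It is an illustration only and does not reproduce any of the design methods cited above: the feature names (`extension`, `speed`), their normalisation to the range 0 to 1, the frequency range and all function names are assumptions introduced here.

```python
import math


def map_exponential(x, lo, hi):
    """Map x in [0, 1] onto [lo, hi] along an exponential (pitch-like) scale."""
    x = min(max(x, 0.0), 1.0)  # clamp out-of-range sensor values
    return lo * (hi / lo) ** x


def sonify_frame(extension, speed, f_min=110.0, f_max=880.0):
    """Return (frequency_hz, amplitude) for one motion frame.

    extension: hypothetical normalised joint extension, 0 (flexed) to 1 (extended)
    speed:     hypothetical normalised movement speed, 0 (still) to 1 (fast)
    """
    frequency = map_exponential(extension, f_min, f_max)
    amplitude = min(max(speed, 0.0), 1.0)
    return frequency, amplitude


def render(frames, sample_rate=8000, frame_dur=0.05):
    """Render (extension, speed) frames as sine samples with continuous phase."""
    samples = []
    phase = 0.0
    samples_per_frame = round(sample_rate * frame_dur)
    for extension, speed in frames:
        frequency, amplitude = sonify_frame(extension, speed)
        step = 2 * math.pi * frequency / sample_rate
        for _ in range(samples_per_frame):
            samples.append(amplitude * math.sin(phase))
            phase += step
    return samples
```

In such a mapping, a wider extension is heard as a higher pitch and a faster movement as a louder sound. The exponential pitch scale is a design choice made here so that equal changes in extension are heard as roughly equal musical intervals.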

Furthermore, research-creation and practice-led research (Smith and Dean 2009) do not merely consider sonification as a way to provide an auditory representation of movement. Establishing an ecological link between sonic information and kinaesthesia, sound feedback stimulates bodily intelligence (Choinière 2018), allowing for a sensorial reconfiguration of the ‘action-perception coupling’. In this context, biosignal wearable systems seem to be particularly useful since they measure internal physiological data that can be related to the performer’s proprioceptive and interoceptive activity. When applying sonification techniques to biosignals, the measurement provides performers with a new sensorial geography which allows them to renew the physiological basis of their somatic knowledge.

Following a phenomenological-based approach, and considering current trends in SID, this article presents an original contribution to practice-led artistic research in the field of movement computing and movement sonification. In particular, the article suggests a new way of understanding viscerality as a key feature to design interactive systems and empower the performer’s agentivity. It is divided into two parts. In the first part, I present the theoretical framework of the embodied approach to sound interaction. In the second part, I introduce the Feedback Loop Driver, an ongoing research-creation on musical improvisation with biophysical technologies. In particular, I examine how the relation between a visceral approach to sound design and improvisation techniques can transform the ecological link between sound and gesture, thereby altering the feedback loop mechanism. In this context, I shall demonstrate how wearable technologies can be used as an active sensory-perceptual mode of experiencing that allows us to rethink the expressive composition of movement.

2. TOWARDS AN EMBODIED APPROACH TO MUSIC, PERFORMANCE AND MEDIATION TECHNOLOGY

The problem of embodiment represents a cornerstone for the understanding of the relationship between bodies and digital interfaces in performing arts. Embodiment processes, with regard to mediation technology, can refer either to the extension (or exteriorisation) of human skills in the prosthesis (e.g., the interactive musical instrument, the computer simulation) or to the living body’s ability to integrate the artefact into its body schemata (e.g., incorporation or interiorisation). Both exteriorisation and interiorisation paradigms lie in the processuality of technical inscription, that is, the sensorimotor learning process needed for either extending a certain sensorial capacity or interiorising the technical mediation. According to Malafouris (2013) and Parisi (2019), the technical embodiment’s processuality reveals the brain–body’s metaplasticity: the idea that individuals and environment can be plastically altered because of their mutual interaction in temporally extended dynamics. From this point of view, mediation technology can elicit specific transformations in the performer’s perceptual organisation and mental motor programs, according to the degree of involvement, engagement, agentivity and immersion provided by the interface.

Cyborg feminism (Haraway 1991), post-humanism (Hayles 1999; Braidotti 2013), post-phenomenology (Ihde 2002) and media art studies (Hansen 2006) have widely theorised such an ontological shift from a cultural and philosophical perspective. Furthermore, choreographic practice and dance studies (Broadhurst and Machon 2006; Choinière 2015; Davidson 2016) have experimented, over the years, with various technologies, especially those involving real-time feedback, in order to affect motor imagination and to radically transform gesture composition strategies (Pitozzi 2016). Music performance studies have only recently started to reflect on technical embodiment, in order to go beyond a mere instrumental approach to digital interfaces. In this context, sound feedback can be used as an informational channel providing qualitative or quantitative information about movement during its execution. From this perspective, sonic interaction allows performers to experience a new modality of perceptual organisation and corporeal creativity, thereby questioning the physiological basis of the action-perception coupling system.

2.1. From embodied cognition to music perception

Issuing from several theoretical frameworks such as phenomenology (Merleau-Ponty 1962), ecological psychology (Gibson 1977), enactivism (Varela, Thompson and Rosch 1991), action-based theories of perception (Noë 2004), extended mind theories (Clark 2008) and mirror neuron research (Rizzolatti and Sinigaglia 2008), the embodied cognition paradigm maintains the non-dualistic, ‘situated’ and bodily-based nature of human cognition. Although subtle differences exist between different embodied approaches, they all refer to cognition as an embodied, embedded, enactive and extended process. As such, embodied theories are often gathered under the 4E cognition label (Menary 2010).

Over the past decade, embodied approaches have been further applied to music cognition (Lesaffre, Maes and Leman 2017; Kim and Gilman 2019). Within this framework, music is interpreted as an action-oriented, multimodal and hence embodied experience. Instead of considering auditory perception as a pure computational process allowing us to capture acoustic information, elaborate mental representations and then produce music or music-related outputs, the embodied perspective considers musical meaning as deeply rooted in our corporeality (Leman and Maes 2015). Therefore, while in cognitivist and disembodied approaches musical experience is held to be a unidirectional process in which perception and action are separated, the embodied perspective fosters the idea that musical experience has an immediate relevance for the sensorimotor system. For these reasons, both musical meaning formation and music-related activities seem to emerge from the mutual interaction between perception and action (Maes, Dyck, Lesaffre, Leman and Kroonenberg 2014).

Such theoretical assumptions are supported by empirical studies, which demonstrate that both movements and verbal descriptions in response to music are strictly connected to the morphological structure of sound: according to motor-mimetic theory (Cox 2011), highly significant features of music, such as melody, harmony, timbre and rhythm, are reflected in the movements of the perceivers (Burger, Saarikallio, Luck, Thompson and Toiviainen 2013; Nymoen, Godøy, Jensenius and Torresen 2013). Sonic events and action trajectories can thereby be conceived in terms of ‘coarticulation’ (Godøy 2018). Moreover, music-related gestures can be described as mediators between sound phenomena and music-meaning formation. From this perspective, music perception is not only action-oriented, but also ecologically situated and multimodal. Indeed, the perceiver does not need to perform computations in order to draw a link between sensations and actions; it is sufficient to find the appropriate signals in the environment and associate them with the correct motor response (Matyja and Schiavio 2013). From this point of view, music can be conceived in terms of affordance (Menin and Schiavio 2012). Being embodied and deeply implied in environmental interactions, musical experience is therefore essentially multimodal. This means that music is perceived not only through sound but also with the help of visual cues, kinaesthesia, effort and haptic perceptions (Timmers and Granot 2016).

What is crucial for SID is that sound perception is strictly related to sensorimotor learning. Therefore, sound feedback itself should be conceived as an action-related phenomenon capable of eliciting new gestural affordances. Systematic applications of this paradigm have been used for experimenting in the fields of music performance (Visi, Schramm and Miranda 2014), music pedagogy (Addessi, Maffioli and Anelli 2015), movement analysis (Giomi and Fratagnoli 2018) and healthcare/well-being (Lesaffre 2018).

2.2. Mediation, extension and flow

By establishing the four stages of interaction, Paul Dourish (2001) introduced the embodiment paradigm in human–computer interaction (HCI), thereby paving the way for the emergence of the so-called third wave of HCI (Marshall and Hornecker 2013). Since the publication of his seminal work, embodiment has become a key conceptual paradigm for improving the user’s physical and affective involvement in interface interaction. Adopting a human-centred perspective, recent trends in HCI have highlighted the importance of meaning-making, experience and situatedness of embodied knowledge in order to devise a holistic approach to interaction.

Similarly, the embodied music cognition paradigm has provided a model for promoting the sensation of presence and non-mediation in the design of interactive systems and wearable technologies. Broadly, sound interfaces are effective when they become transparent or, as Leman would say, when they are perceived as an extension of the human body (Leman 2008). According to Nijs (2017), the musical instrument–performer relationship is a meaningful model to understand how mediation technology should be conceived in the context of embodied interaction.

Echoing McLuhan’s classical definition of media (McLuhan [1964] 1994), the musical instrument can be defined as a natural extension of the musician (Nijs, Lesaffre and Leman 2013). Such a paradigm is not, however, a totally new conception. Merleau-Ponty (1962) first illustrated technical embodiment by describing the functioning of body patterns in the case of the blind man with his stick. The stick is not an external object for the man who carries it. For the blind man it is rather a physical extension of his sense of touch that provides information on the position of his limbs in relation to the surrounding space. Through a learning and training process, the stick is integrated into the blind man’s body schemata. Similarly, Merleau-Ponty provides a (less mentioned) analysis of the relationship between the musician’s body and the musical instrument, describing it in terms of ‘merging’: when instrument-specific movements become a somatic know-how of the musician, the instrument can be considered as an organic component of the performer’s body. In highly skilled musicians, the instrument is no longer experienced as a separate entity but as a part of the sensorimotor system articulation. The merging of instrument and musician seems to be crucial in order to experience the holistic sensation of being completely involved in the music. This perceptual illusion of non-mediation enables a feeling of flow, that is, the intense and focused concentration on the present moment due to the merging between action, awareness and creativity (Csikszentmihalyi 2014).

As noted earlier, the instrument–musician coupling and the feeling of flow can be considered as meaningful models to devise effective sound interaction. However, I believe that the ‘merging’ paradigm is not sufficient to understand the complexity of sensorimotor learning in the context of mediation technology. In particular, the merging process does not consider the brain–body metaplasticity solicited by real-time sensorial feedback. From a post-phenomenological perspective, Merleau-Ponty’s early writing about the perceiving body provides not only a proto-theory about the technological exteriorisation of human capacities but also an important insight into the reorganisation of proprioception (Giomi 2020b). Indeed, even the ‘stick’ offers to the blind man a new perceptual repertoire demanding he renew his mental motor programmes. Mediation technology, especially if it involves performance and real-time audiovisual feedback, provides performers with a totally new sensorial geography allowing them to reflect on bodily metaplasticity. According to Choinière (2018), feedback redefines our habitual modes of perception, thereby influencing the fact that we sense our body schemata as an unstable and fluid process. In this sense, embodied approaches to SID should pay attention to what I would call the effects of reconfiguration on body schemata induced by prosthetic exteriorisation and interiorisation processes. In a similar vein, Ihde and Malafouris (2019) propose to go beyond interiorisation and exteriorisation dynamics, by focusing on ‘the transformative power and potential of technical mediation’ as well as on ‘the transactional character of the relationship between [man and his technical artefacts]’.

2.3. From movement to somatic sonification

Since the mid-1980s, wearable technologies have been extensively employed in artistic performance to connect gesture and sound. Dance technology had a pivotal role in developing interactive music systems. David Rokeby’s VNS, Frieder Weiss’s Eyecon and Mark Coniglio’s Isadora are probably the most renowned examples of artistic-based software created during the 1990s. Towards the end of the decade, the development of EyesWeb (Camurri et al. 2000) provided the most credited platform for motion analysis and sound interaction, not to mention countless applications for the Max/MSP environment originally written by Miller Puckette. Footnote 1 Notwithstanding the prolific research in the field of interactive dance/music systems, the term ‘movement sonification’ has been introduced in SID literature only recently. As noted by Bevilacqua et al. (2016), although both interactive dance/music systems and data-driven movement sonification use movement interaction in order to generate sound content, their goals are generally different. While in the former, sound outcome is designed to produce an aesthetically meaningful interaction, in the latter, sound feedback aims at providing an objective auditory representation of movement. In the last few years, some attempts have been made to combine these two traditions.

In a seminal paper, Hunt and Hermann (2004) first argued for the importance of interaction in sonification processes, highlighting how the ‘quality of the interaction’ can enhance perceptual skills in performing activities or accomplishing simple sensorimotor tasks. This text marks a turning point in real-time sound interaction, because it provides a conceptual bridge between data sonification and SID. Furthermore, the paper posits the centrality of gestural interaction as a means for experimenting with high-dimensional data-space sonification. In the last few years, ‘interaction’ has effectively become a crucial issue, if not a trending topic, in the sonification field (Bresin, Hermann and Hunt 2012; Degara, Hunt and Hermann 2015; Yang, Hermann and Bresin 2019).

The case of dance is particularly emblematic of this new trend. In their pioneering study, Quinz and Menicacci (2006) used the real-time sonification of a physical quantity (i.e., the performer’s lower limb extension captured by flex sensors) to successfully support the dancer’s postural reorientation. Moreover, the study provides a methodological framework to enhance performers’ somatic awareness and enrich their corporeal creativity. Similar studies have been proposed by Jensenius and Bjerkestrand (2012), who focused on micro-movement sonification, by Grosshauser, Bläsing, Spieth and Hermann (2012), who developed a wearable sensor-based system (including an IMU Footnote 2 module, a goniometer and a pair of FSRs Footnote 3) in order to sonify classical ballet jump typologies, and by Françoise, Fdili Alaoui, Schiphorst and Bevilacqua (2014), who reported the results of an experimental workshop in which the authors proposed an interactive sonification of effort categories issued from Laban Movement Analysis. Another relevant study on the sonification of movement qualities has been carried out by the InfoMus Lab team (University of Genoa). The authors (Niewiadomski et al. 2019) describe the implementation of an EyesWeb algorithm to sonify two choreographic qualities, that is, lightness and fragility. Moreover, they introduce an interesting model-based sonification by associating a specific sonification with each movement quality. The authors demonstrate how the workshop participants (both expert and non-expert dancers) were able to distinguish the two movement qualities from the perception of the auditory feedback, and how the physical training with sound dramatically enhanced the recognition task.

In another study, Françoise et al. (2017) directly address kinaesthetic awareness via interactive sonification. The authors combine conceptual frameworks issued from somatic practices (e.g., Feldenkrais Method, Footnote 4 somaesthetic approach) with user-centred HCI. The study describes a somatic experimentation in which participants (both skilled dancers and non-dancers) wear a pair of Myo armbands, Footnote 5 placed on their lower legs, to sense the neuromuscular activity (EMG Footnote 6) of their calves and shins. The authors propose a corpus-based concatenative synthesis in order to generate sound grains drawn from ambient field recordings (i.e., water and urban sounds). During the workshop, the kinaesthetic exploration of the installation space is facilitated by experimenters who lead participants to focus on their micro-movements while performing simple actions (i.e., walking, standing still). The study clearly demonstrates how user-centred strategies (e.g., adaptive system, neuromuscular sensing), combined with somatic approaches to experimentation, provide a rich playground to access bodily awareness and especially the dynamic relation between proprioception and movement.
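The general logic of driving a corpus-based concatenative synthesis from muscular activity can be sketched in a few lines of Python. The sketch below is not the implementation used by Françoise et al.; it only illustrates the principle under loudly stated assumptions: the EMG envelope is obtained by simple rectification and moving-average smoothing, the corpus is a hypothetical list of three grains described by two normalised descriptors (here read as loudness and brightness), and the calf and shin envelopes are arbitrarily mapped onto those two descriptors.

```python
def emg_envelope(samples, window=4):
    """Full-wave rectify an EMG signal, then smooth it with a moving average."""
    rectified = [abs(s) for s in samples]
    envelope = []
    for i in range(len(rectified)):
        chunk = rectified[max(0, i - window + 1): i + 1]
        envelope.append(sum(chunk) / len(chunk))
    return envelope


# Hypothetical corpus: each grain has a name and a (loudness, brightness) descriptor.
CORPUS = [
    {"name": "water_drip", "desc": (0.1, 0.1)},
    {"name": "water_stream", "desc": (0.5, 0.3)},
    {"name": "urban_traffic", "desc": (0.9, 0.8)},
]


def select_grain(corpus, target):
    """Return the name of the grain whose descriptor lies closest to the target."""
    def distance(grain):
        return sum((a - b) ** 2 for a, b in zip(grain["desc"], target))
    return min(corpus, key=distance)["name"]


def grains_for(calf, shin, corpus=CORPUS, window=4):
    """Assumed mapping: calf envelope -> loudness, shin envelope -> brightness."""
    calf_env = emg_envelope(calf, window)
    shin_env = emg_envelope(shin, window)
    return [select_grain(corpus, pair) for pair in zip(calf_env, shin_env)]
```

With this kind of mapping, subtle muscular activity selects quiet, dark grains, while stronger contractions move the selection towards louder and brighter regions of the corpus.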

2.4. On viscerality in digital performance

Since proprioception, feelings of flow and kinaesthetic creativity are key features for embodied interaction design strategies, several artists and scholars have searched, over the years, for a more intimate entanglement with technology. Many of them have stressed the importance of improving viscerality in artistic performance by exploiting the increasing possibilities offered by wearable technologies (Machon 2009). The term ‘visceral’ is commonly used to refer to deep, primordial, intuitive and ‘hard-wired’ aspects of the body and mind, and it can be applied to describe intense corporeal sensations, often including internal physiological processes such as respiration, cardiovascular activity, neuromuscular activity and excretory systems. Adopting a phenomenological perspective, Gromala (2007) analyses how, by focusing on interoceptive (visceral) and proprioceptive rather than exteroceptive stimuli, it is possible to induce a more intimate subjective involvement in interactive artworks, allowing for a new awareness of the body/mind connection. Furthermore, Kuppers (2006) and Kozel (2007) observe how both digital image and virtual body can generate visceral sensations, thereby revealing the ‘here and now’ of the living body beyond all expected technical disembodying effects.

Experimentation with biophysical wearable technologies is undoubtedly one of the most common ways to experience viscerality in digital performance. Biosignals generally refer to physiological data representation issuing from bodily electrical potential analysis. In music performance, biosignals have been used to control or affect sound generation and manipulation, by sensing neuronal activity (Lucier 1976; Knapp and Lusted 1990; Miranda 2006), skin conductance (Waisvisz 1985), blood flow (Stelarc 1991; Van Nort 2015), heartbeat (Votava and Berger 2015), mechanical muscular contraction (Donnarumma 2011) and neuromuscular activity (Tanaka 1993; Pamela Z 2003; Tanaka and Donnarumma 2019). The use of biofeedback wearable technologies has enabled radical artistic explorations over the past three decades. Biosignal sonification makes it possible to alter stimulus-response paths, thus allowing performers to experience bodily transformations in terms of degrees of intensity outside the habitual movement-vision perspective.

Atau Tanaka’s artistic and academic work has probably been one of the most effective in emphasising performance viscerality by means of biophysical technologies. According to Massumi (2002), visceral sensations are so intimate and profound that they precede all exteroceptive sense of perception. Similarly, Tanaka’s artistic research explores the physiological basis of gesture, by sonifying those signals (EMG Footnote 7) that are at the origin of action. Therefore, gesture is approached from the point of view of the performer’s intentionality and intuition, thereby providing a sense of intimacy in interacting with the interface. The physicalisation of sound is another crucial concept related to viscerality: several sonification strategies – ranging from pitch and amplitude to audio spectrum design – allow Tanaka to explore both sound physicality and concreteness, in order to generate a sort of ‘acoustic sense of haptic’. In this way, sound enhances the performer’s sense of visceral effort during interaction and communicates this effort to the audience in an audible form (Tanaka 2012).

Another pioneer of biophysical music performance is the African American artist Pamela Z. In the mid-1990s, she began to experiment with BodySynth, Footnote 8 a MIDI controller using electrode sensors to measure the electrical impulses generated by the performer’s muscles. Unlike Tanaka, whose performance technique focuses on continuous muscular variations and tuning, Pamela Z uses electromyography to detect specific gestural poses, thereby developing an idiomatic gesture-to-sound vocabulary. This allows the performer to introduce a peculiar theatricality in her mixed media performances. In this context, viscerality is achieved by using voice as primary source material. However, voice, physical presence, wearable sensors and digital sound transformations are ‘components of a more complex instrument’ (Pamela Z 2003: 360). Both pre-recorded vocal samples and electronic effects on her live voice are triggered by her gestures, allowing the artist to empathetically guide the spectator through the dramaturgy of her theatrical music performance. By combining sung and spoken passages with electronic transformations to produce her gestures, her sounding body becomes ‘a tool for manipulating language and narrative structure’ (Rodgers 2010: 202). This visceral approach to SID is exemplified by her major work Voci (2003), 18 scenes that combine vocal performance with digital video and audio processing (Barrett 2022). In this ‘polyphonic mono-opera’, she enacts a variety of affective, geographical, gendered, national and ethno-racial markers of the voices. Embedding extra-musical meanings, the sound here becomes a sonic marker of diverse corporeal identities that reverberate in her visceral gestural communication.

According to Marco Donnarumma, one of the leading artists and researchers in this field, viscerality is strictly connected to the experience of hybridity. For him, the ‘technological body’, that is, the assemblage of wires, AI, biosignals and sound, is a way to experience new kinds of corporeal ‘configurations’. In his work Corpus Nil (2016), he explores the notion of hybridity by combining his body with sound, light and an artificial intelligence system. In this performance, Donnarumma uses his Xthsense, an MMG Footnote 9 interface that captures muscle sounds. These signals are computed for re-synthesizing salient features of his muscular activity (e.g., intensity, pace, abruptness) in order to generate a granulised stream of sounds (based on direct muscular audification Footnote 10) and to control stroboscopic light patterns. Several aspects of the sound and light qualities are autonomously determined by the machine, which listens to the muscular tone variations and generates unexpected audio and luminous configurations without. Similarly, the ways in which the performer moves depend on the audiovisual response produced by the algorithm. Therefore, Donnarumma’s movements are at once a generative input for the signal processing, a response to machine configurations and a way to ‘train’ the artificial intelligence. Corpus Nil is presented in totally black spaces. The performer is on the stage in front of the spectators, almost completely covered by black paint. Donnarumma’s actions develop as a slow choreography made of muscular contractions and limb torsions. The unusual postures the performer adopts, the use of stroboscopic lights and the spatialised sound lead the audience to perceive non-human amorphous bodily fragments that exceed conventional anatomical representations.
The amorphous being perceived on the stage emerges as a pure intensity, a hybrid creature made of flesh, desire, light, sound and intelligent machines that overcomes normative representations of the human anatomy in terms of gender, sex, ability and normality. Muscular activity, representing the connective material that enables this hybridisation, thus suggests the continuity between human and non-human agents.

The relation between sound, biofeedback and viscerality has also been explored in other artistic fields such as immersive installations and dance performances. Since 1994, pioneering Canadian artist Char Davies has experimented with biosensors in order to investigate porous ‘boundaries between interior and exterior, mind and body, self and world’ (Davies 2003: 333). In her seminal Virtual Reality artworks, Osmose (1995) and Éphémère (1998), she explores the phenomenological relationship between proprioception and space by focusing attention ‘on the intuitive, instinctual, visceral processes of breathing and balance’ (ibid.: 332). By avoiding classic VR control devices (e.g., joystick, dataglove), Davies proposes an immersive experience in which the user (the ‘immersant’ in Davies’s vocabulary) is provided with a perceptible form of connection between their own visceral sensations and the exploration of an audiovisual landscape (dream-like natural environments made of caves, forests, subterranean and submarine spaces, and animated by male and female fairy voices). The immersive experience is enhanced by sophisticated sound spatialization that produces unusual psycho-acoustic effects on the perception of depth and height. The interaction between 3D sound and physiological data ‘further erodes the distinctions between inside and outside’ (Davies 2004: 75), thereby reinforcing the entanglement between environment and corporeality, which are experienced as an ever-changing sensory geography.

Isabelle Choinière is a Canadian choreographer who has experimented with the use of digital technologies and sound in dance pieces to investigate the notion of intercorporeality. In particular, she explores how the interface can be a means for de-structuring corporeal codes and for developing a collective body, that is, a self-organized entity. In Meat Paradoxe (2007–13), she explores the potential of technologies in the creation of a sensory environment capable of activating self-organization principles among a group of dancers. The piece is conceived by the choreographer as a ‘moving three-dimensional sculpture’ (Choinière Reference Choinière2015: 225) made up of five partially nude dancers who form an amorphous corporeal agglomerate (a collective body) due to the constant contact of their bodies. Each dancer is equipped with a wireless microphone (that can be considered a biosensor). The microphones allow the dancers to produce sound traces such as words, moans, chants and contacts between bodies. The audio material is processed and spatialised in real time via a piece of software designed by Dominique Besson. The sounds and gestures produced by each performer return as echoes of the collective body, which thus emerges as an intercorporeal sound entity. Choinière’s investigation of the sonorous body seems to penetrate the matter of sound, and at the same time, the body, because it operates ‘inside a fine limit where the shape of the body and sound are dissolved’ (Pitozzi Reference Pitozzi and Kaduri2016: 280–1). Both visually and kinaesthetically, the proximity of the dancers’ naked bodies and the collective sound vibration they produce constitute a visceral intercorporeal mass that aims at generating a ‘de-hierarchization’ of the single bodies (Choinière Reference Choinière2015: 229), thereby placing the audience, at least metaphorically, in the middle of the flesh.

3. FEEDBACK LOOP DRIVER: A BIOPHYSICAL MUSIC PERFORMANCE

The use of biosignals and sonification in technologically mediated performance enables the creation of ‘first-hand visceral experiences’ suggesting to the audience ‘vivid … experiences of hybridity’ (Donnarumma Reference Donnarumma2017). From the performer’s point of view, this means that the intimate resonance between sound and bodily (sensorimotor and physiological) activity can allow for an exploration of the corporeal organisation’s plasticity. Such an approach reveals a post-phenomenological understanding of the flesh in which the body is primarily conceived in terms of corporeality (Bernard Reference Bernard2001; Donnarumma Reference Donnarumma2016; Choinière Reference Choinière2020). In contrast to the conception of the body as a unique and normalised anatomy, the notion of corporeality provides a representation of the body as a reticular system, a fluid and metastable organisation, a network of intensities and forces. Within this theoretical framework, I present an autoethnographic analysis of my own performance of Feedback Loop Driver. Methodologically speaking, adopting a first-person point of view makes it possible not only to describe design methods but also to shed light on the performer’s embodied knowledge. From a phenomenological perspective, the first-person account of interaction strategies represents, indeed, a privileged way to access the performer’s somatic know-how. This approach seems particularly effective since this performance-led research focuses on the way in which wearable technologies and sonification can be employed in order to stimulate visceral corporeal sensations, thereby informing gesture composition strategies. Moreover, although an autoethnographic analysis does not provide a universally valid outcome, drawing on my artistic work allows me to offer a particular embodied and situated reflection on, and application of, the theoretical issues previously discussed.

In the next subsections, I shed light on the way in which particular artistic and subjective choices help us address the question of embodied musical interaction from different perspectives. Furthermore, I demonstrate how focusing on visceral and intimate sensations can transform wearable technologies into an active sensory-perceptual mode of experiencing that enables the reorganisation of corporeality.

3.1. Reconfiguring corporeality: the analytical/anatomical function of wearable sensing technologies

Feedback Loop Driver is a 25-minute biophysical music performance, strongly based on improvisation, that involves wearable technologies (especially biosignal sensors). All sound manipulations are generated in real time by the performer’s neuromuscular activity. The notion of ‘feedback loop’ is the core metaphor of the work. Methodologically speaking, this concept is used as a recursive function informing, at least at a metaphorical level, the general framework of the performance as well as the specific strategies implemented during the creative process (interactive system design, sonification, improvisation).

The technical setup of the performance essentially includes three Myo Armbands (each providing eight channels of EMG data). Moreover, a set of two BITalino R-IoT modules (embedding a 9-DoF IMU-Marg system) is used in order to compute additional motion features; for example, acceleration peaks and quantity of motion. Custom software is designed in the Max/MSP 8 visual programming language.

The embodied approach informs the whole design and performative process. Indeed, even apparently objective technical procedures, such as sensor data acquisition and computation, reflect a precise embodied strategy. The performance mainly deals with muscular effort. A Myo armband is placed on each of my left and right forearms, while I wear the third Myo on my left calf. The main patch I designed enables the accurate analysis of 11 muscular-based gestures. Incoming raw data are analysed, computed and packed into different muscular groups (four groups for each forearm, three groups for the leg). The 11 muscular-based gestures are classified by using machine learning techniques based on Ircam’s MuBu plugins. Footnote 11 Each muscular-based gesture is tagged in real time with a corresponding label (e.g., wave_out_L). Each label triggers the activation of a specific sound manipulation. Each group is also associated with a list of two or three EMG signals. An average of those signals is calculated, thereby providing a continuous value that can be sonified independently.
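The per-group averaging described above can be illustrated with a minimal Python sketch. It is not the Max/MSP patch used in the performance: the channel indices assigned to each group, the label `wave_in_L` and the rectification step (taking absolute values before averaging) are assumptions made purely for illustration.

```python
from statistics import fmean

# Hypothetical channel assignment: the text only states that each group
# is associated with two or three of the armband's EMG signals.
GROUPS = {
    "wave_out_L": [0, 1, 2],
    "wave_in_L": [4, 5],
}

def group_levels(frame, groups=GROUPS):
    """Return one continuous value per muscular group for a single EMG frame.

    `frame` is a sequence of eight EMG samples (one per channel). Each value
    is rectified (an assumption) and the group's channels are averaged.
    """
    return {
        label: fmean([abs(frame[i]) for i in channels])
        for label, channels in groups.items()
    }
```

Each returned value could then drive a sound parameter independently of the discrete gesture label.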

Moreover, a Bayesian filter Footnote 12 is implemented in order to continuously set the output range according to the amount of the muscular energy involved (Françoise et al. Reference Françoise, Candau, Fdili Alaoui and Schiphorst2017). This method allows us to make micro-contractions meaningful and adapt the threshold of the sound output to a specific performative situation. Each label indicates a certain gesture (or pose) and corresponds to a specific neuromuscular activation. For instance, each forearm muscle analysis enables the detection of two basic hand gestures – ‘wave in’ and ‘wave out’ – and of two basic poses – bicep and forearm contraction. The 11 muscular-gestures/poses represent the musical gesture vocabulary of the performance.
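To give a rough idea of what continuously adapting the output range means in practice, the following Python sketch rescales a group's level against slowly relaxing minimum and maximum bounds. It is a deliberately simplified stand-in and not the Bayesian filter mentioned above; the class name `AdaptiveRange` and the parameters `decay` and `floor` are hypothetical.

```python
class AdaptiveRange:
    """Rescale an incoming level to [0, 1] using bounds that follow the signal.

    Simplified stand-in (assumption): running min/max bounds that relax
    towards the current value, not a Bayesian filter.
    """

    def __init__(self, decay=0.01, floor=1e-6):
        self.decay = decay   # how quickly the bounds relax towards the input
        self.floor = floor   # minimum span before any output is produced
        self.lo = None
        self.hi = None

    def __call__(self, x):
        if self.lo is None:
            self.lo = self.hi = x
        else:
            # Let both bounds drift towards the current value...
            self.lo += self.decay * (x - self.lo)
            self.hi += self.decay * (x - self.hi)
            # ...then widen them again so that they always contain it.
            self.lo = min(self.lo, x)
            self.hi = max(self.hi, x)
        span = self.hi - self.lo
        if span < self.floor:
            return 0.0
        return (x - self.lo) / span
```

With bounds of this kind, a very small contraction performed after a period of rest still spans the whole output range, which is the sense in which micro-contractions become meaningful.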

It should be noted that such a design strategy stems from the concrete practice with technologies. The list of gestures–poses emerges from the negotiation between the system’s capability to detect muscular groups from the EMG data analysis and the performer’s ability to accurately activate specific muscles and perform certain gestures. This is why, for instance, the leg poses form only three groups instead of four. Such a limit represents at the same time a technical and performative issue giving rise to the following artistic and technological implementations. Therefore, the sensor acquisition system is designed from the performer’s embodied perspective.

Movement sonification is experimented with from the very first part of the creative process in order to evaluate the system’s effectiveness. To this end, very simple auditory feedback is provided (e.g., pure tones). By transforming proprioceptive and interoceptive sensations (i.e., muscular effort) into exteroceptive information, the sound outcome helps me become aware of my muscular activity. In this context, sound represents a sensorial feedback improving my sensorimotor learning practice and enhancing my ability to attain a specific performative goal (to activate certain muscles). From this point of view, wearable technologies perform two analytical/anatomical functions. First, sensors coupled with sound feedback allow me to map and re-embody my muscular sensations (e.g., effort sensations produced by extensor/abductor muscles). Second, the specific architecture of the interface, emerging from the technological practice (i.e., EMG computation strategy), represents an anatomical dispositif Footnote 13 enabling corporeality reconfiguration. Through this new sensorial geography, in which certain parts of my body (my forearms, my left calf) and certain sensations (e.g., bicep effort) are intensified because of the sonorous feedback, the body renews its configuration spontaneously. For this reason, I propose to use the term ‘reconfiguration’ instead of ‘configuration’, because all human behaviours are informed by previously learned kinaesthetic patterns that form our ‘habitus’. Therefore, the interface, with its sonic qualities, allows us to design a new sensorial anatomy that alters or transforms our habitual motor behaviours.

3.2. Embodied interface design: adaptability, unpredictability and control

The mapping process is the core of the interactive system design and also develops from an embodied perspective. The importance of mapping has increasingly drawn academic interest in digital instrument development, since it allows for the determination of the perceptible relation between bodily action and sound, thus affecting the compositional process (Di Scipio Reference Di Scipio2003; Murray-Browne, Mainstone, Bryan-Kinns and Plumbley Reference Murray-Browne, Mainstone, Bryan-Kinns and Plumbley2011). In this work, I have adopted both explicit and implicit mapping strategies (Arfib, Couturier, Kessous and Verfaille Reference Arfib, Couturier, Kessous and Verfaille2002). On the one hand, explicitly defined strategies present the advantage of keeping the designer in control of the design of all the instrument components, therefore providing an understanding of the effectiveness of mapping choices throughout the experimentation and design phase. On the other hand, implicit mapping provides internal adaptations of the system through training. Mapping using implicit methods allows the designer to benefit from the self-organising capabilities of the model.

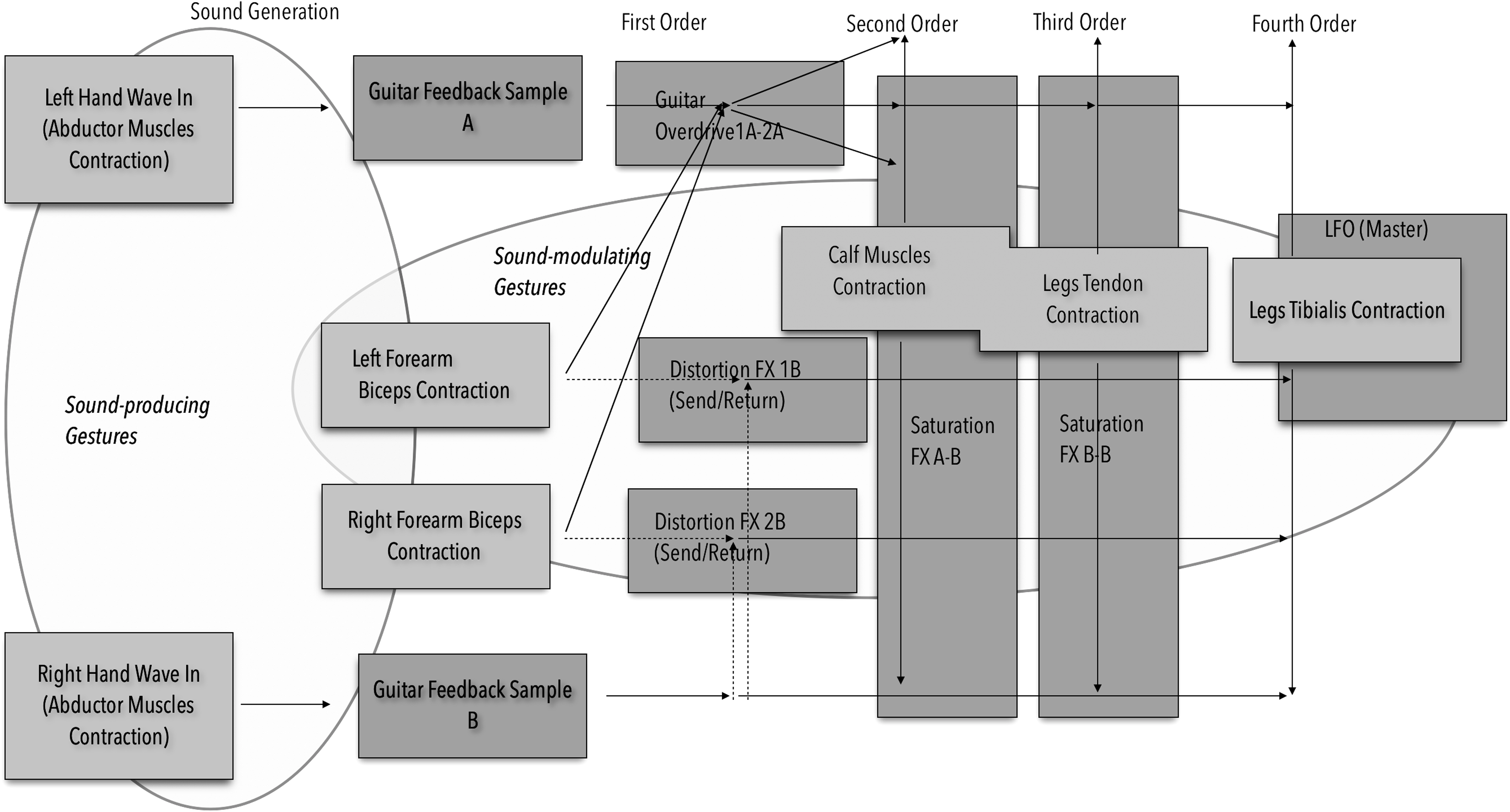

An explicit strategy is adopted to define a many-to-many configuration in gesture-sound interaction. However, according to the preceding idea of anatomical dispositif, mapping is also designed to provide an internal hierarchisation and co-articulation of sound parameters (see Figure 1).

Figure 1. Hierarchical and anatomical mapping example.

Here, too, the metaphor of the feedback is at work: a complex structure of send/return audio channels enables the modulation of the same sound texture in several modalities, depending on the combination of the muscular groups involved in the action. For instance, while a certain sound signal is directly generated by a specific gesture (e.g., contracting forearm abductors), the same sound is sent to an external effect and controlled by another part of the body (e.g., left leg triceps). Therefore, the modified sound emerges as a reaction (a feedback) to the main sound. Even in this case, the interface is used as a dispositif enabling the reconfiguration of the anatomical layers by means of sound. Since gestures are divided into sound-producing and sound-modulating, kinaesthetic patterns carry different weight with regard to the sound outcome. According to this anatomical approach, mapping provides, therefore, an explicit hierarchisation of the performer’s body.
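The distinction between sound-producing and sound-modulating gestures can be sketched, under strong simplifications, as a single send/return path in Python. The function name `route`, the linear dry/wet crossfade and the use of plain lists of samples are assumptions for illustration; the actual routing in the performance is described above as considerably more complex.

```python
def route(source, produce_level, modulate_level, effect):
    """Apply one hierarchical send/return step to a block of samples.

    produce_level  -- level in [0, 1] from the sound-producing muscular group
    modulate_level -- level in [0, 1] from the sound-modulating muscular group
    effect         -- any function mapping a list of samples to a list of samples
    """
    # The producing gesture determines how much of the source is heard at all.
    dry = [produce_level * s for s in source]
    # The same sound is sent to the effect...
    wet = effect(dry)
    # ...and a different body part decides how much of the return is mixed in.
    return [
        (1.0 - modulate_level) * d + modulate_level * w
        for d, w in zip(dry, wet)
    ]
```

In this sketch the modulating gesture has no audible result unless the producing gesture is active, which is one simple way of expressing the hierarchy between the two.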

If explicit strategies deal with interaction control, implicit approaches tend to emphasise the system’s unpredictability and adaptability. A specific algorithm has been implemented in order to change both sound parameters and mapping configuration during the performance. The algorithm continually senses the overall neuromuscular energy I am using. When I perform an extreme contraction of my whole body, the system detects an excessive effort and triggers a transformation of the sound’s environmental setting. Four different sets are designed for the performance. In this sense, implicit mapping methods allow us to integrate situatedness into interaction. To this end, an adaptive threshold, using Bayesian filtering, is implemented in order to adapt system responsivity to my neuromuscular state during the performance. However, since EMG analysis is based on electrical activity, the output can significantly change according to my affective and emotional state during the performance (e.g., stress, anxiety, excitement due to the live act, moments of unexpected feeling of flow). Sweating can also alter electrical signal analysis. From this point of view, the system response is definitely affected by my visceral ‘being there’ in the act of performing, thereby generating unexpected outcomes to which I adapt my corporeal behaviour during improvisation.
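The switching logic described above can be approximated by the following Python sketch. It assumes a fixed threshold rather than the adaptive one used in the performance, and it assumes that the four sets are visited cyclically, which the text does not specify; the class name `SetSwitcher` and its parameters are hypothetical.

```python
class SetSwitcher:
    """Advance to the next sound set when overall effort crosses a threshold.

    Assumptions: a fixed threshold (the performance uses an adaptive one)
    and cyclic ordering of the sets.
    """

    def __init__(self, n_sets=4, threshold=0.9):
        self.n_sets = n_sets
        self.threshold = threshold
        self.current = 0
        self._armed = True  # prevents re-triggering during one long contraction

    def update(self, energy):
        """Feed one overall-energy value in [0, 1]; return the active set index."""
        if energy >= self.threshold and self._armed:
            self.current = (self.current + 1) % self.n_sets
            self._armed = False
        elif energy < self.threshold:
            self._armed = True
        return self.current
```

The re-arming step means that a sustained whole-body contraction changes the set only once; the effort has to drop below the threshold before another change can be triggered.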

3.3. Effort traces across material forms: making the sound more visceral

A crucial goal of my sound design strategy is to create concrete resonances between sound quality and effort sensation in order to make the relation between movement and sound perceptually meaningful. Although translating motion and biosignals into sound represents a primary form of materialisation of the corporeal dimension (e.g., kinaesthetic traces, proprioceptive sensations, internal feelings), the way in which sound is designed (e.g., its specific texture, timbre) can definitely enhance, or not, the action/perception coupling system, improving the viscerality of the performance. In this sense, my sound design strategy takes inspiration from the sonification paradigm. This means that sound is designed to produce an auditive representation of the corporeal, thereby fostering a meaningful relation between the perceived movement and the perceived sound.

Embodied perspective and feedback metaphor play an important role in this phase as well. The main sound material of the performance is based on an electric guitar’s feedback recording. In sound engineering, the audio feedback (or Larsen effect) is a high-pitched tone emerging when an amplified signal returns from the output source (e.g., the amplifier, the loudspeakers) to the input source (e.g., a guitar, a microphone), thus generating a self-reinforcing loop with positive gain that overloads the amplifier. I have decided to work on audio guitar feedback because, from my own point of view, it evokes a feeling of tension. This sound material provides indeed a meaningful perceptual outcome that can fit with my primary sensation of muscular contraction. Therefore, I have considered it as an effective auditive representation of muscular effort. Real-time audio manipulation extends this primary relation between effort and audio feedback. The different muscular groups are systematically connected to diverse typologies of audio distortions and overdrives (both inspired by noise music and post-rock aesthetics). Physical energy accumulation (effort) and sound energy accumulation (overdrive) are thus connected at a very sensorial and metaphorical level. Here, too, the feedback loop system is at play: if the corporeal effort generates the audio distortion, the perceptual acoustic saturation of the feedback reflects the physical tension expressed and performed through my body. In return, the physicality and materiality of the sound (its specific frequential and timbral texture) resonate with my own inner visceral sensation. This relation allows me to transform my body into an anatomical architecture made of thresholds that I must exceed in order to achieve a certain degree of physical and sound saturation. 
In this way, the sound environment emerges as a material form (Schiller Reference Schiller, Broadhurst and Machon2006) capable of technically embodying the traces of the performer’s effort, whose concrete sensorial inscription takes place in the sound.
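The connection between effort accumulation and sound saturation can be illustrated with a generic soft-clipping overdrive in Python. The tanh waveshaper is a common textbook technique chosen here for illustration only, not necessarily the distortion used in the performance; the function name `overdrive` and the parameter `max_drive` are hypothetical.

```python
import math

def overdrive(samples, effort, max_drive=20.0):
    """Soft-clip a block of samples, with more saturation for higher effort.

    samples -- audio samples, assumed to lie in [-1, 1]
    effort  -- normalised muscular effort; values outside [0, 1] are clipped
    """
    effort = min(max(effort, 0.0), 1.0)
    # Effort 0 gives a drive of 1 (mild shaping), effort 1 gives max_drive.
    drive = 1.0 + effort * (max_drive - 1.0)
    # Dividing by tanh(drive) keeps a full-scale input at full scale.
    norm = math.tanh(drive)
    return [math.tanh(drive * s) / norm for s in samples]
```

As effort rises, quieter parts of the signal are pushed towards the ceiling while peaks stay bounded, so that the sound becomes denser rather than simply louder.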

3.4. Improvising with technologies

If the formal structure of the performance develops from the dialogue between the performer’s neuromuscular activity and the system’s adaptive behaviour, the internal improvisational logic explores the notion of the feedback loop at a micro level. Two corporeal behaviours are thus at play: movements made with the purpose of producing a sound feedback (sound-oriented task) and movements made in reaction to the sound feedback (movement-oriented task). Footnote 14 Moreover, adaptive mapping provides a further degree of unpredictability that affects the improvisational logic. As a result, the interface responds to the performer with a range of auditive stimuli that, in turn, influence the way in which the body is reorganised in a variable, dynamic feedback loop. In this kind of improvisation, listening has a predominant role. While in traditional performances listening helps to evoke an idiomatic repertoire, in this case, it is used to orient intuitive behaviours. In this context, listening makes it possible to interpret the sound environment, sometimes in relation to the musical composition processes, sometimes according to bodily sensations. Such a feedback loop mechanism drives the internal evolution of the improvisation and makes the entanglement between my corporeality and the sound environment very close and visceral, in the sense that both elements are profoundly interdependent and co-evolve during the performance.

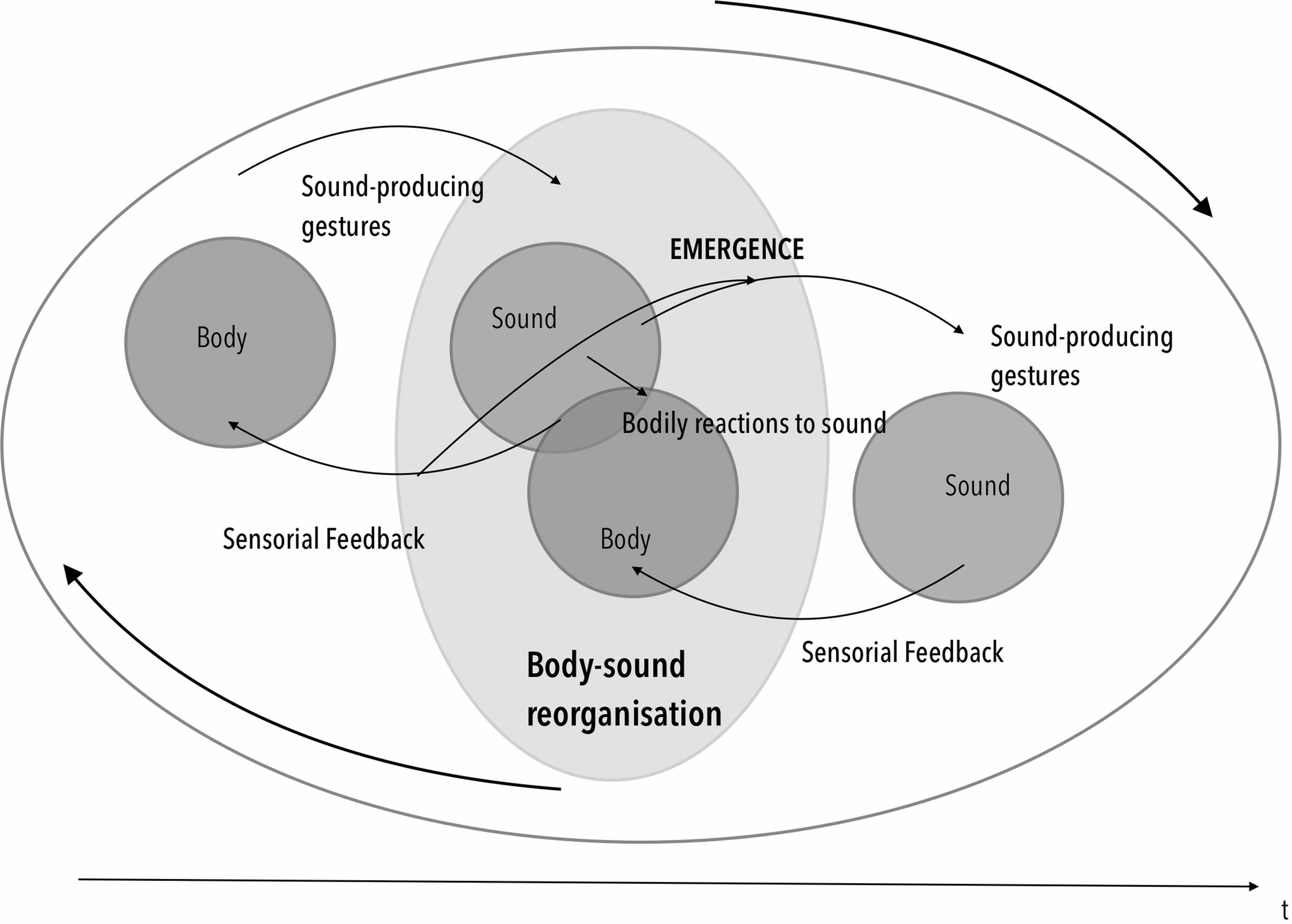

By acting directly on perception both as an effect of the action and as a retroactive cause of movement execution, the sound feedback provokes an immediate transformation of the performer’s corporeal behaviour. As such, the sound arises as an emergent phenomenon. Emergence designates phenomena exhibiting original properties that cannot be reduced to the material causes that produced them. In particular, the logic governing the reciprocal interaction between gesture and sound refers to the emerging principle of ‘top-down causality’, that is, the ability to affect the lower-level process from which a certain phenomenon emerges (Bedau Reference Bedau, Bedau and Humphreys2008). This notion can be used in this context to analyse the relation between sound and gesture. On the one hand, the auditory feedback is generated as a response to my muscular contraction. The sound primarily occurs as an effect of the movement. On the other hand, the feedback affects my own corporeality in terms of posture, internal sensations, micro-movements, and so on, thereby informing the way in which I execute the following gesture. In other terms, by acting on the body that produced it, the sound feedback modifies the conditions of appearance of the subsequent sound. According to this interpretation, feedback is, at the same time, the cause and the effect of the perceptual organisation of the movement. This kind of evolutionary feedback loop occurs in a diachronic way, thereby highlighting the metaplasticity of the action-perception coupling system (see Figure 2).

Figure 2. Dynamic feedback loop and downward causation.

4. CONCLUSION: SOMATICS/CORPOREALITY/TECHNOLOGIES

In this paper, I have proposed an extensive account of embodied approaches to SID, thereby underlining the increasing importance of somatic knowledge within the movement and computing field. Providing a transdisciplinary understanding of bodily experience, the phenomenological perspective fosters novel insights for the design and application of wearable technologies. In particular, embodied knowledge, somatic know-how and human-centred design have proven effective in helping move beyond designing technical systems. In fact, the understanding of the phenomenal body as corporeality and the interpretation of mediation technology as an environment capable of affording transformative feedback allow for an enrichment of the phenomenological experience of the performative body.

In the case study, I have demonstrated how a phenomenological-based perspective can enhance a visceral approach to musical expression. Biosignals are undoubtedly an effective means for creating a concrete intertwinement between visceral sensations and mediation technology. However, they are not sufficient to provide a real feeling of viscerality in performance. For this reason, designing perceptually meaningful relations between sound and movement seems to be necessary. Similar to traditional musical instruments, sensor-based interaction can afford a perceptive illusion of merging with the interface. However, on the basis of the case study I have presented, I would argue that the extension paradigm does not seem completely satisfactory or sufficient to describe embodied interaction in visceral performance. Feedback is not just a reaction to gesture. In the performance at issue, sound feedback is a material trace of the effort that informs the performer about the quality of their internal corporeal state. In this situation, the performer can organise movement composition both on habitual proprioceptive channels and on auditive exteroceptive feedback. From this perspective, embodied interaction transforms the physiological basis of the human perceptual system, altering the performer’s usual sensorimotor programmes. By acting directly on the physical activity in this twofold modality (consequence of action and retroactive effect on perception), sonification induces, therefore, an immediate transformation of the action-perception coupling system. This emergent sensory geography inevitably demands new strategies for movement composition. 
From a phenomenological perspective, the transformation of interoceptive or proprioceptive sensations into exteroceptive stimuli (i.e., real-time sonification) allows performers not only to become aware of their own bodies but also, thanks to this new sensorial feedback, to experiment with new modalities of movement execution. Especially for performers, such a sensorial reorganisation allows them to overcome what Hubert Godard has often defined as a ‘choreographic fixation’ (névrose chorégraphique), that is, the repetition of the same movement patterns stemming from the performer’s cultural, emotional and gestural habitus (Kuypers Reference Kuypers2006). For these reasons, technological mediation should be conceived as an organic element within the autopoiesis of the corporeality. Wearable technologies have already become a connective material for both informational and bodily anatomies. Adopting an embodied approach can transform wearable devices into an active sensory-perceptual mode of experiencing, which can stimulate brain–body metaplasticity. The reconfiguration of body automations through the use of sound feedback is a process that unfolds with a high degree of sensitivity and in which the body can be poetically understood as an emergent territoriality, inhabited and transfigured by the sound.