Medical expertise assessment models and their utility

Many involved in education will be familiar with Miller’s pyramid, which conceptualises medical expertise in four hierarchical levels, from propositional knowledge at the base (‘what the doctor knows’) to performance at the pinnacle (‘what the doctor does’). Reference Miller1 Miller’s model emphasises the importance of assessment systems examining each of these levels. Clinical examinations, whether of the Objective Structured Clinical Examination (OSCE) type or based on variations of the long case, are assessments that focus solely on the competence level of Miller’s pyramid (‘what the doctor can do’).

As the present statutory regulator of postgraduate medical education in the UK, the General Medical Council (GMC) points out in its Standards for Curricula and Assessment Systems 2 that an assessment system is an integrated set of assessments which supports and assesses the whole curriculum and that ‘competence (can do) is necessary but not sufficient for performance (does)’ (p. 3). It follows that an assessment system should consist of both competence and performance elements.

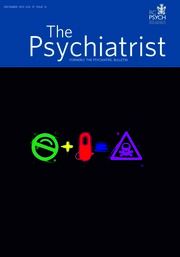

Van der Vleuten’s approach to considering utility of assessment Reference van der Vleuten3 is a very helpful one when selecting tools that should be used at each level of assessment. He pointed out that in mathematical terms the utility of an assessment system might be considered as the product of its reliability, validity, feasibility and educational impact. If the value of any of these qualities approaches zero, no matter how positive the remaining values are, the utility of the assessment system will also approach zero.
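
The multiplicative model described above can be written as a short worked equation. This is only a sketch: the symbols U (utility), R (reliability), V (validity), F (feasibility) and E (educational impact) are labels introduced here for illustration, and each quality is assumed to be scored on a scale from 0 to 1.

$$
U = R \times V \times F \times E
$$

As a hypothetical example, with values invented purely for illustration, an assessment scoring 0.9 on validity, feasibility and educational impact but only 0.2 on reliability would have a low overall utility, because the single weak factor dominates the product:

$$
U = 0.2 \times 0.9 \times 0.9 \times 0.9 \approx 0.15
$$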

Although the long case examination is one of the most venerable forms of assessment in medical education, Reference Jolly and Grant4 and it has a good deal of face validity to psychiatrists, there are serious concerns about its reliability, Reference Jolly and Grant4 which ultimately reduces its utility as a tool in assessing the competence level. These concerns arise because the assessment is based on an encounter with one patient and unstructured questioning by examiners. Reference Fitch, Malik, Lelliot, Bhugra and Andiappan5 Norcini Reference Norcini6 has reported a reliability estimate of 0.24 for a single long case. Having more assessments performed by more assessors and observing the whole encounter between candidate and patient increase the reliability of the long case. Six such long-case assessments are needed to achieve a reliability coefficient of 0.8. Unfortunately, however, the large amount of assessment time needed and the lack of willing and suitable patients severely limit the feasibility of the long case examination.

The new way - OSCE

First introduced by Harden et al in Dundee, Reference Harden, Stevenson, Downie and Wilson7 the OSCE was a response to the long-standing problems with oral examinations. Reference Hodges8 It was the first assessment of its kind to combine standardised live performances with multiple scenarios, each assessed by multiple independent examiners, and was hailed as a triumph in medical education. Reference Hodges8 Evidence began to emerge that OSCEs resulted in increased reliability and validity when compared with traditional and less structured oral examinations. Reference Jackson, Jamieson and Khan9,Reference Sauer, Hodges, Santhouse and Blackwood10 By the late 20th century, the OSCE had been adopted worldwide and had become known as the gold standard in undergraduate and postgraduate competence assessment in medical training and for other health professions. Reference Sloan, Donnelly, Schwartz and Strodel11,Reference Rushforth12

In 2008, the Royal College of Psychiatrists had to review its examination system to meet the standards of the Postgraduate Medical Education and Training Board (PMETB), which was then the statutory regulator of postgraduate medical education. It was decided to adopt the OSCE format as being the best for a high-stakes clinical examination and to modify the OSCE approach to deal with more complex clinical situations. And so the Clinical Assessment of Skills and Competencies (CASC) came into being. The College monitors its examinations carefully. Performance data from the most recent sitting of the CASC were normally distributed, and pass rates did not differ significantly over the 4 days of the examination. Reliability increased slightly from the previous CASC diet, reaching the desired α level of 0.80 or greater on two examination days. None of the stations performed poorly. This suggests that the exam is robust.

Because the OSCE combines reliability with the opportunity to test in a greater number of domains than the long case while offering a degree of feasibility, it looks likely that this format will remain an important component of the testing of the competence level of medical expertise.

Assessing the performance level

As far as assessing the performance level of medical expertise is concerned, Michael et al Reference Michael, Rao and Goel13 are correct in saying that workplace-based assessment methods were little used in psychiatry and in UK medical education before their introduction as a requirement of the PMETB in 2007, as a part of the new curriculum. Since their introduction, a large study involving more than 600 doctors in training has evaluated the performance of workplace-based assessment in psychiatry in the UK. Reference Brittlebank, Archer, Longson, Malik and Bhugra14 It has shown that an integrated package of workplace-based assessments can deliver acceptable levels of reliability and validity for feasible amounts of assessment time. Critically, the participants in this study all received a package of face-to-face training in the methods of assessment. The study showed that workplace-based assessments of a psychiatrist’s clinical encounter skills could achieve levels of reliability that could support high-stakes assessment decisions after approximately 3 hours of observation. Feedback from both the doctors being assessed and the assessors in this study indicated high levels of acceptability of this form of assessment.

Since the work that underpinned the Brittlebank Reference Brittlebank, Archer, Longson, Malik and Bhugra14 study was undertaken, it has become a curriculum requirement for all who conduct the assessment of psychiatrists in training to undergo training in assessment methods. There is evidence that such training improves the rigour and reliability of workplace-based assessments. Reference Holmboe, Hawkins and Huot15 This may go some way towards addressing the cynicism found by Menon et al Reference Menon, Winston and Sullivan16 shortly after the introduction of workplace-based assessment.

The portfolio of assessment tools is not complete. As Michael et al suggest, there is a need for a tool that assesses a trainee’s integration of the clinical skills that are required in the primary assessment of a patient’s problem. This integrative approach was used by the Royal College of Physicians of the UK in the design of the Acute Care Assessment Tool (ACAT), which was developed to give feedback on a doctor’s performance on an acute medical take, where the doctor is expected to bring a range of skills to bear in a complex situation. Reference Johnson, Wade, Barrett and Jones17 The ACAT has been shown to be a useful and popular assessment tool. Reference Johnson, Wade, Barrett and Jones17 We suggest that rather than developing new clinical examinations, psychiatrists should adopt a similar approach to our physician colleagues and develop a tool that incorporates aspects of the Assessment of Clinical Expertise and the case-based discussion tools to assess the complex assessment, formulation and decision-making skills that are brought to bear when a psychiatrist assesses a new patient.
