1. Introduction

Let $\mathcal P(\mathcal X)$ denote the space of Borel probability measures over a Polish space $\mathcal{X}$. Given $\mu,\upsilon\in \mathcal P(\mathcal X)$, denote by $\Gamma(\mu,\upsilon) \;:\!=\; \{\gamma\in\mathcal{P}(\mathcal{X}\times\mathcal{X})\colon\gamma({\mathrm{d}} x,\mathcal{X}) = \mu({\mathrm{d}} x),\,\gamma(\mathcal{X},{\mathrm{d}} y) = \upsilon({\mathrm{d}} y)\}$ the set of couplings (transport plans) with marginals $\mu$ and $\upsilon$. For a fixed, compatible, complete metric d, and given a real number $p\geq 1$, we define the p-Wasserstein space by $\mathcal W_p(\mathcal{X}) \;:\!=\; \big\{\eta\in\mathcal P(\mathcal{X})\colon\int_{\mathcal{X}}d(x_0,x)^p\,\eta({\mathrm{d}} x) < \infty,\text{ for some }x_0\big\}$. Accordingly, the p-Wasserstein distance between measures $\mu,\upsilon\in\mathcal W_p(\mathcal{X})$ is given by

$$W_p(\mu,\upsilon) \;:\!=\; \bigg(\inf_{\gamma\in\Gamma(\mu,\upsilon)}\int_{\mathcal{X}\times\mathcal{X}}d(x,y)^p\,\gamma({\mathrm{d}} x,{\mathrm{d}} y)\bigg)^{1/p}. \tag{1}$$

When $p=2$, $\mathcal{X}=\mathbb{R}^q$, d is the Euclidean distance, and $\mu$ is absolutely continuous, which is the setting we will soon adopt for the remainder of the paper, Brenier’s theorem [Reference Villani50, Theorem 2.12(ii)] establishes the uniqueness of a minimizer for the right-hand side of (1). Furthermore, this optimizer is supported on the graph of the gradient of a convex function. See [Reference Ambrosio, Gigli and Savaré6, Reference Villani51] for further general background on optimal transport.

We recall now the definition of the Wasserstein population barycenter.

Definition 1. Given $\Pi \in \mathcal{P}(\mathcal{P}(\mathcal{X}))$, write $V_p(\bar{m}) \;:\!=\; \int_{\mathcal{P}(\mathcal X)}W_{p}(m,\bar{m})^{p}\,\Pi({\mathrm{d}} m)$. Any measure $\hat{m}\in\mathcal W_p(\mathcal{X})$ that is a minimizer of the problem $\inf_{\bar{m}\in\mathcal{P}(\mathcal X)}V_p(\bar{m})$ is called a p-Wasserstein population barycenter of $\Pi$.

Wasserstein barycenters were first introduced and analyzed in [Reference Agueh and Carlier1], in the case when the support of $\Pi\in\mathcal{P}(\mathcal{P}(\mathcal{X}))$ is finite and $\mathcal X=\mathbb{R}^q$. More generally, [Reference Bigot and Klein12, Reference Le Gouic and Loubes38] considered the so-called population barycenter, i.e. the general case in Definition 1 where $\Pi$ may have infinite support. These works addressed, among others, the basic questions of the existence and uniqueness of solutions. See also [Reference Kim and Pass35] for the Riemannian case. The concept of the Wasserstein barycenter has been extensively studied from both theoretical and practical perspectives over the last decade: we refer the reader to the overview in [Reference Panaretos and Zemel43] for statistical applications, and to [Reference Cazelles, Tobar and Fontbona17, Reference Cuturi and Doucet26, Reference Cuturi and Peyré27, Reference Peyré and Cuturi44] for computational aspects of optimal transport and applications in machine learning.

In this article we develop a stochastic gradient descent (SGD) algorithm for the computation of 2-Wasserstein population barycenters. The method inherits features of the SGD rationale, in particular:

• It exhibits a reduced computational cost compared to methods based on the direct formulation of the barycenter problem using all the available data, as SGD only considers a limited number of samples of $\Pi\in\mathcal{P}(\mathcal{P}(\mathcal{X}))$ per iteration.

• It is online (or continual, as referred to in the machine learning community), meaning that it can incorporate additional datapoints sequentially to update the barycenter estimate whenever new observations become available. This is relevant even when $\Pi$ is finitely supported.

• Conditions ensuring convergence towards the Wasserstein barycenter as well as finite-dimensional convergence rates for the algorithm can be provided. Moreover, the variance of the gradient estimators can be reduced by using mini-batches.

From now on we make the following assumption.

Assumption 1. $\mathcal X=\mathbb{R}^q$, d is the Euclidean metric, and $p=2$.

We denote by $\mathcal W_{2,\mathrm{ac}}(\mathcal X)$ the subspace of $\mathcal W_{2}(\mathcal X)$ of absolutely continuous measures with finite second moment and, for any $\mu\in\mathcal W_{2,\mathrm{ac}}(\mathcal X)$ and $\nu\in\mathcal W_{2}(\mathcal X)$ we write $T_\mu^\nu$ for the ($\mu$-almost sure, unique) gradient of a convex function such that $T_\mu^\nu(\mu)=\nu$. Notice that $x\mapsto T_{\mu}^{\nu}(x)$ is a $\mu$-almost sure (a.s.) defined map and that we use throughout the notation $T_\mu^\nu(\rho)$ for the image measure/law of this map under the measure $\rho$.

Recall that a set $B\subset\mathcal W_{2,\mathrm{ac}}(\mathcal X)$ is geodesically convex if, for every $\mu,\nu\in B$ and $t\in[0,1]$ we have $((1-t)I+tT_\mu^\nu)(\mu)\in B$, with I denoting the identity operator. We will also assume the following condition for most of the article.

Assumption 2. $\Pi$ gives full measure to a geodesically convex $W_2$-compact set $K_\Pi\subset\mathcal W_{2,\mathrm{ac}}(\mathcal X)$.

In particular, under Assumption 2 we have $\int_{\mathcal P(\mathcal X)}\int_{\mathcal X}d(x,x_0)^2\,m({\mathrm{d}} x)\,\Pi({\mathrm{d}} m)<\infty$ for all $x_0$. Moreover, for each $\nu\in\mathcal W_2(\mathcal X)$ and $\Pi({\mathrm{d}} m)$-almost every (a.e.) m, there is a unique optimal transport map $T_m^\nu$ from m to $\nu$ and, by [Reference Le Gouic and Loubes38, Proposition 6], the 2-Wasserstein population barycenter is unique.

Definition 2. Let $\mu_0 \in K_\Pi$, $m_k \stackrel{\mathrm{i.i.d.}}{\sim} \Pi$, and $\gamma_k > 0$ for $k \geq 0$. We define the stochastic gradient descent (SGD) sequence by

$$\mu_{k+1} \;:\!=\; \big[(1-\gamma_k)I + \gamma_k T_{\mu_k}^{m_k}\big](\mu_k), \qquad k\geq 0. \tag{2}$$

The reasons why we can truthfully refer to the above sequence as stochastic gradient descent will become apparent in Sections 2 and 3. We stress that the sequence is a.s. well defined, as we can show by induction that $\mu_k\in \mathcal W_{2,\mathrm{ac}}(\mathcal X)$ a.s. thanks to Assumption 2. We also refer to Section 3 for remarks on the measurability of the random maps $\{T_{\mu_k}^{m_k}\}_k$ and sequence $\{\mu_k\}_k$.
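
The iteration can be illustrated on the real line, where it becomes explicit. The following is a minimal sketch, assuming the update $\mu_{k+1}=((1-\gamma_k)I+\gamma_k T_{\mu_k}^{m_k})(\mu_k)$ and using the fact that in one dimension the optimal map is the monotone rearrangement, so that the update acts linearly on quantile functions. The population law (random Gaussians), the grid size, and all function names are illustrative assumptions, not part of the paper.

```python
import numpy as np
from statistics import NormalDist


def sgd_barycenter_1d(sample_quantile, q0, n_steps, step=lambda k: 1.0 / (k + 1)):
    """Run the SGD iteration on R in quantile coordinates.

    On R the optimal map from mu to m is F_m^{-1} o F_mu, so pushing mu_k
    through (1 - g) I + g T has quantile function (1 - g) q_k + g q_{m_k}.
    """
    q = np.asarray(q0, dtype=float).copy()
    for k in range(n_steps):
        g = step(k)
        q = (1.0 - g) * q + g * sample_quantile()
    return q


# Hypothetical population law Pi: Gaussians N(theta, s^2) with
# theta ~ N(0, 1) and s ~ Uniform(0.5, 1.5) (illustrative choices).
rng = np.random.default_rng(0)
levels = (np.arange(200) + 0.5) / 200
z = np.array([NormalDist().inv_cdf(u) for u in levels])  # standard normal quantiles


def sample_quantile():
    """Draw m_k from Pi and return its quantile function on the grid."""
    theta = rng.normal(0.0, 1.0)
    s = rng.uniform(0.5, 1.5)
    return theta + s * z


q0 = 3.0 + 2.0 * z                       # initial measure mu_0 = N(3, 4)
q_hat = sgd_barycenter_1d(sample_quantile, q0, n_steps=5000)

# Read off the location and scale of the estimate from its quantile function.
bary_mean = q_hat.mean()
bary_std = np.polyfit(z, q_hat, 1)[0]
```

For this toy family the barycenter is the Gaussian whose mean and standard deviation are the averages of those of the sampled measures, so `bary_mean` and `bary_std` should be close to 0 and 1 respectively. In higher dimensions no such closed form is available in general and each step requires an optimal transport map.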

Throughout the article, we assume the following conditions on the steps $\gamma_k$ in (2), commonly required for the convergence of SGD methods:

$$\sum_{k=1}^{\infty}\gamma_k^2 < \infty, \tag{3}$$

$$\sum_{k=1}^{\infty}\gamma_k = \infty. \tag{4}$$
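
As a quick check, and assuming that the step conditions are the usual Robbins–Monro requirements $\sum_k\gamma_k^2<\infty$ and $\sum_k\gamma_k=\infty$ (an assumption made here for illustration), steps of the form $\gamma_k=a/(b+k)$ with $a,b>0$ satisfy both, by comparison with integrals:

$$\sum_{k\geq 0}\frac{a}{b+k} \;\geq\; a\int_b^{\infty}\frac{{\mathrm{d}} t}{t} = \infty, \qquad \sum_{k\geq 0}\frac{a^2}{(b+k)^2} \;\leq\; \frac{a^2}{b^2} + a^2\int_b^{\infty}\frac{{\mathrm{d}} t}{t^2} = \frac{a^2}{b^2}+\frac{a^2}{b} < \infty.$$

In contrast, a constant step violates the square-summability requirement, and $\gamma_k=a/(b+k)^2$ violates the divergence requirement.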

In addition to barycenters, we will need the concept of Karcher means (cf. [Reference Zemel and Panaretos55]), which, in the setting of the optimization problem in $\mathcal W_{2,\mathrm{ac}}(\mathcal X)$ considered here, can be intuitively understood as an analogue of a critical point of a smooth function in Euclidean space (see the discussion in Section 2).

Definition 3. Given $\Pi\in\mathcal{P}(\mathcal{P}(\mathcal{X}))$, we say that $\mu\in\mathcal W_{2,\mathrm{ac}}(\mathcal X)$ is a Karcher mean of $\Pi$ if $\mu\big(\big\{x\colon x = \int_{m\in\mathcal P(\mathcal X)}T_\mu^m(x)\,\Pi({\mathrm{d}} m)\big\}\big)=1$.

It is known that any 2-Wasserstein barycenter is a Karcher mean, though the latter is in general a strictly larger class; see [Reference Álvarez-Esteban, del Barrio, Cuesta-Albertos and Matrán4] or Example 1. However, if there is a unique Karcher mean, then it must coincide with the unique barycenter. We can now state the main result of the article.

Theorem 1. We assume Assumptions 1 and 2, conditions (3) and (4), and that the 2-Wasserstein barycenter $\hat{\mu}$ of $\Pi$ is the unique Karcher mean. Then, the SGD sequence $\{\mu_k\}_k$ in (2) is a.s. $\mathcal W_2$-convergent to $\hat{\mu}\in K_\Pi$.

An interesting aspect of Theorem 1 is that it hints at a law of large numbers (LLN) on the 2-Wasserstein space. Indeed, in the conventional LLN, for i.i.d. samples $X_i$ the summation $S_{k}\;:\!=\;({1}/{k})\sum_{i\leq k}X_i$ can be expressed as

$$S_{k+1} = \frac{1}{k+1}X_{k+1} + \Big(1-\frac{1}{k+1}\Big)S_k.$$

Therefore, if we rather think of sample $X_k$ as a measure $m_k$ and of $S_k$ as $\mu_k$, we immediately see the connection with

$$\mu_{k+1} = \Big[\frac{1}{k+1}T_{\mu_k}^{m_k} + \Big(1-\frac{1}{k+1}\Big)I\Big](\mu_k),$$

obtained from (2) when we take $\gamma_k={1}/({k+1})$. The convergence in Theorem 1 can thus be interpreted as an analogy to the convergence of $S_k$ to the mean of $X_1$ (we thank Stefan Schrott for this observation).
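
The Euclidean side of this analogy is easy to verify numerically. The short sketch below (sample size and variable names are illustrative) runs the recursion with weights $1/(k+1)$ on i.i.d. real samples and compares the result with the empirical mean.

```python
import numpy as np

rng = np.random.default_rng(1)
x = rng.normal(size=1000)            # i.i.d. samples X_1, ..., X_n

s = 0.0                              # initial value is irrelevant: the first weight is 1
for k, x_next in enumerate(x):       # k = 0, 1, ..., n - 1
    gamma = 1.0 / (k + 1)
    s = (1.0 - gamma) * s + gamma * x_next

running_mean_matches = bool(np.isclose(s, x.mean()))
```

Because the first step has weight one, the starting point is forgotten immediately and the recursion reproduces the sample average exactly (up to rounding).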

In order to state our second main result, Theorem 2, we first introduce a new concept.

Definition 4. Given $\Pi\in\mathcal{P}(\mathcal{P}(\mathcal{X}))$ we say that a Karcher mean $\mu\in\mathcal W_{2,\mathrm{ac}}(\mathcal X)$ of $\Pi$ is pseudo-associative if there exists $C_{\mu}>0$ such that, for all $\nu\in\mathcal W_{2,\mathrm{ac}}(\mathcal{X})$,

$$W_2^2(\mu,\nu) \leq C_{\mu}\int_{\mathcal X}\bigg|\int_{\mathcal P(\mathcal X)}\big(T_\nu^m(x)-x\big)\,\Pi({\mathrm{d}} m)\bigg|^2\,\nu({\mathrm{d}} x). \tag{5}$$

Since the term on the right-hand side of (5) vanishes for any Karcher mean $\nu$, the existence of a pseudo-associative Karcher mean implies $\mu=\nu$, hence uniqueness of Karcher means. We will see that, moreover, the existence of a pseudo-associative Karcher mean implies a Polyak–Lojasiewicz inequality for the functional minimized by the barycenter, see (20). This in turn can be utilized to obtain convergence rates for the expected optimality gap in a similar way to the Euclidean case. While the pseudo-associativity condition is a strong requirement, in the following result we are able to weaken Assumption 2 into the following milder assumption.

Assumption 2′. $\Pi$ gives full measure to a geodesically convex set $K_\Pi\subset\mathcal W_{2,\mathrm{ac}}(\mathcal X)$ that is $\mathcal W_2$-bounded (i.e. $\sup_{m\in K_\Pi}\int |x|^2\,m({\mathrm{d}} x)<\infty$).

Clearly this condition is equivalent to requiring that the support of $\Pi$ be $\mathcal W_2$-bounded and consist of absolutely continuous measures.

We now present our second main result.

Theorem 2. We assume Assumptions 1 and 2′, and that the 2-Wasserstein barycenter $\hat{\mu}$ of $\Pi$ is a pseudo-associative Karcher mean. Then, $\hat{\mu}$ is the unique barycenter of $\Pi$, and the SGD sequence $\{\mu_k\}_k$ in (2) is a.s. weakly convergent to $\hat{\mu}\in K_\Pi$ as soon as (3) and (4) hold. Moreover, for every $a>C_{\hat{\mu}}^{-1}$ and $b\geq a$ there exists an explicit constant $C_{a,b}>0$ such that, if $\gamma_k={a}/({b+k})$ for all $k\in\mathbb{N}$, the expected optimality gap satisfies

$$\mathbb{E}\big[V_2(\mu_k)\big] - V_2(\hat{\mu}) \leq \frac{C_{a,b}}{b+k}. \tag{6}$$

Let us explain the reason for the terminology ‘pseudo-associative’ we have chosen. This comes from the fact that the inequality in Definition 4 holds true (with equality and $C_{\mu}=1$) as soon as the associativity property $T_{\mu}^{m}(x) = T_{\nu}^m \circ T_{\mu}^{\nu}(x)$ holds $\mu({\mathrm{d}} x)$-a.s. for each pair $\nu,m\in W_{2,\mathrm{ac}}(\mathcal X)$; see Remark 2. The previous identity was assumed to hold for the results proved in [Reference Bigot and Klein12], and is always valid in $\mathbb{R}$, since the composition of monotone functions is monotone. Further examples where the associativity property holds are discussed in Section 6. Thus, in all those settings, (5) and Theorem 2 hold as soon as Assumption 2′ is granted, and, further, we have the explicit LLN-like expression $\mu_{k+1}=\big(({1}/{k})\sum_{i=1}^k T_{\mu_0}^{m_i}\big)(\mu_0)$. As regards the more general pseudo-associativity property, we will see that it holds, for instance, in the Gaussian framework studied in [Reference Chewi, Maunu, Rigollet and Stromme19], and in certain classes of scatter-location families, which we discuss in Section 6.
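
The associativity property on $\mathbb{R}$ can be checked directly in the simplest case of Gaussian measures, where the monotone optimal maps are affine. The following sketch is only an illustration under that assumption; the helper `gaussian_map` and the parameter values are hypothetical.

```python
import numpy as np


def gaussian_map(src, dst):
    """Monotone optimal map from N(m0, s0^2) to N(m1, s1^2) on the real line."""
    (m0, s0), (m1, s1) = src, dst
    return lambda x: m1 + (s1 / s0) * (x - m0)


# Measures encoded as (mean, standard deviation); values are illustrative.
mu, nu, m = (0.0, 1.0), (2.0, 0.5), (-1.0, 3.0)
x = np.linspace(-4.0, 4.0, 9)

direct = gaussian_map(mu, m)(x)                           # T_mu^m
composed = gaussian_map(nu, m)(gaussian_map(mu, nu)(x))   # T_nu^m o T_mu^nu
associative = bool(np.allclose(direct, composed))
```

Both routes give the same affine map, in line with the observation that a composition of increasing maps is increasing and hence optimal in one dimension. In $\mathbb{R}^q$ with $q\geq 2$ the composition of two gradients of convex functions need not be such a gradient, which is why associativity is a genuine restriction there.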

The remainder of the paper is organized as follows:

• In Section 2 we recall basic ideas and results on gradient descent algorithms for Wasserstein barycenters.

• In Section 3 we prove Theorem 1, providing the technical elements required to that end.

• In Section 4 we discuss the notion of pseudo-associative Karcher means and their relation to the so-called Polyak–Lojasiewicz and variance inequalities, and we prove Theorem 2.

• In Section 5 we introduce the mini-batch version of our algorithm, discuss how this improves the variance of gradient-type estimators, and state extensions of our previous results to that setting.

• In Section 6 we consider closed-form examples and explain how in these cases the existence of pseudo-associative Karcher means required in Theorem 2 can be guaranteed. We also explore certain properties of probability distributions that are ‘stable’ under the operation of taking their barycenters.

In the remainder of this introduction we provide independent discussions on various aspects of our results (some of them suggested by the referees) and on related literature.

1.1. On the assumptions

Although Assumption 2 might appear strong at first sight, it can be guaranteed in suitable parametric situations (e.g. Gaussian, or the scatter-location setting recalled in Section 6.4) or, more generally, under moment and density constraints on the measures in $K_\Pi$. For instance, if $\Pi$ is supported on a finite set of measures with finite Boltzmann entropy, then Assumption 2 is guaranteed. More generally, if the support of $\Pi$ is a 2-Wasserstein compact set and the Boltzmann entropy is uniformly bounded on it, then Assumption 2 is fulfilled too: see Lemma 3.

The conditions of Assumption 2 and the uniqueness of a Karcher mean in Theorem 1 are natural substitutes for, respectively, the compactness of SGD sequences and the uniqueness of critical points, which hold under the usual sets of assumptions ensuring the convergence of SGD in Euclidean space to a minimizer, e.g. some growth control and certain strict convexity-type conditions at a minimum. The usual reasoning underlying the convergence analysis of SGD in Euclidean spaces, however, seems not to be applicable in our context, as the functional $\mathcal W_{2,\mathrm{ac}}(\mathcal X)\ni\mu\mapsto F(\mu)\;:\!=\;\int W_{2}(m,\mu)^{2}\,\Pi({\mathrm{d}} m)$ is not convex, in fact not even $\alpha$-convex for any $\alpha\in\mathbb R$, when $\mathcal W_{2,\mathrm{ac}}(\mathcal X)$ is endowed with its almost Riemannian structure induced by optimal transport (see [Reference Ambrosio, Gigli and Savaré6, Chapter 7.2]). The function F is also not $\alpha$-convex for any $\alpha\in\mathbb R$ when we use generalized geodesics (see [Reference Ambrosio, Gigli and Savaré6, Chapter 9.2]). In fact, SGD in finite-dimensional Riemannian manifolds could provide a more suitable framework to draw inspiration from; see, e.g., [Reference Bonnabel13]. However, the ideas used in that setting do not seem straightforward to leverage, since that work either assumes negative curvature (while $\mathcal W_{2,\mathrm{ac}}(\mathcal X)$ is positively curved), or requires the functional to be minimized to be rather smooth and to have bounded derivatives of first and second order.

The assumption that $K_\Pi$ is contained in a subset of $\mathcal W_{2}(\mathcal X)$ of absolutely continuous probability measures, and hence the existence of optimal transport maps between elements of $K_\Pi$, appears as a more structural requirement, as it is needed to construct the iterations in (2). Indeed, by dealing with an extended notion of Karcher mean, it is in principle also possible to define an analogous iterative scheme in a general setting, including in particular the case of discrete laws, and thus to relax to some extent the absolute continuity requirement. However, this introduces additional technicalities, and we unfortunately were not able to provide conditions ensuring the convergence of the method in reasonably general situations. For completeness of the discussion, we sketch the main ideas of this possible extension in the Appendix.

1.2. Uniqueness of Karcher means

Regarding situations where uniqueness of Karcher means can be granted, we refer to [Reference Panaretos and Zemel43, Reference Zemel and Panaretos55] for sufficient conditions when the support of $\Pi$ is finite, based on the regularity theory of optimal transport, and to [Reference Bigot and Klein12] for the case of an infinite support, under rather strong assumptions. We remark also that in one dimension, the uniqueness of Karcher means is known to hold without further assumptions. A first counterexample where this uniqueness is not guaranteed is given in [Reference Álvarez-Esteban, del Barrio, Cuesta-Albertos and Matrán4]; see also the simplified Example 1. A general understanding of the uniqueness of Karcher means remains an open and interesting challenge, however. This is not only relevant for the present work, but also for the (non-stochastic) gradient descent method of [Reference Zemel and Panaretos55] and the fixed-point iterations of [Reference Álvarez-Esteban, del Barrio, Cuesta-Albertos and Matrán4]. The notion of pseudo-associativity introduced in Definition 4 provides an alternative viewpoint on this question, complementary to the aforementioned ones, which might deserve being further explored.

1.3. Gradient descents in Wasserstein space

Gradient descent (GD) in Wasserstein space was introduced as a method to compute barycenters in [Reference Álvarez-Esteban, del Barrio, Cuesta-Albertos and Matrán4, Reference Zemel and Panaretos55]. The SGD method we develop here was introduced in an early version of [Reference Backhoff-Veraguas, Fontbona, Rios and Tobar9] (arXiv:1805.10833) as a way to compute Bayesian estimators based on 2-Wasserstein barycenters. In view of the independent, theoretical interest of this SGD method, we decided to separately present this algorithm here, along with a deeper analysis and more complete results on it, and devote [Reference Backhoff-Veraguas, Fontbona, Rios and Tobar9] exclusively to its statistical application and implementation. More recently, [Reference Chewi, Maunu, Rigollet and Stromme19] obtained convergence rates for the expected optimality gap for these GD and SGD methods in the case of Gaussian families of distributions with uniformly bounded, uniformly positive-definite covariance matrices. That work relied on proving a Polyak–Lojasiewicz inequality; it also derived quantitative convergence bounds in $W_2$ for the SGD sequence, relying on a variance inequality, which was shown to hold under general, though strong, conditions on the dual potential of the barycenter problem (verified under the assumptions in that paper). We refer to [Reference Carlier, Delalande and Merigot16] for more on the variance inequality.

The Riemannian-like structure of the Wasserstein space and its associated gradient have been utilized in various other ways with statistical or machine learning motivations in recent years; see, e.g., [Reference Chizat and Bach21] for particle-like approximations, [Reference Kent, Li, Blanchet and Glynn34] for a sequential first-order method, and [Reference Chen and Li18, Reference Li and Montúfar39] for information geometry perspectives.

1.4. Computational aspects

Implementing our SGD algorithm requires, in general, computing or approximating optimal transport maps between two given absolutely continuous distributions (some exceptions where explicit closed-form maps are available are given in Section 6). During the last two decades, considerable progress has been made on the numerical resolution of the latter problem through partial differential equation methods [Reference Angenent, Haker and Tannenbaum7, Reference Benamou and Brenier10, Reference Bonneel, van de Panne, Paris and Heidrich14, Reference Loeper and Rapetti40] and, more recently, through entropic regularization approaches [Reference Cuturi25, Reference Cuturi and Peyré27, Reference Dognin29, Reference Mallasto, Gerolin and Minh41, Reference Peyré and Cuturi44, Reference Solomon49] crucially relying on the Sinkhorn algorithm [Reference Sinkhorn47, Reference Sinkhorn and Knopp48], which significantly speeds up the approximate resolution of the problem. It is also possible to approximate optimal transport maps via estimators built from samples (based on the Sinkhorn algorithm, plug-in estimators, or using stochastic approximations) [Reference Bercu and Bigot11, Reference Deb, Ghosal and Sen28, Reference Hütter and Rigollet33, Reference Manole, Balakrishnan, Niles-Weed and Wasserman42, Reference Pooladian and Niles-Weed45]. We also refer to [Reference Korotin37] for an overview and comparison of sample-free methods for continuous distributions, for instance based on neural networks (NNs).
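
To give a flavour of the entropic approach, the sketch below runs plain Sinkhorn scaling between two discretized densities on $[0,1]$ and reads off an approximate transport map by barycentric projection of the resulting plan. It is a generic textbook-style illustration, not the method of any specific reference cited above; the grid, the regularization strength `eps`, the iteration count, and the function name `sinkhorn_plan` are assumptions chosen for the example.

```python
import numpy as np


def sinkhorn_plan(a, b, cost, eps, n_iter=2000):
    """Entropic transport plan between histograms a and b via Sinkhorn scaling."""
    K = np.exp(-cost / eps)              # Gibbs kernel
    u = np.ones_like(a)
    v = np.ones_like(b)
    for _ in range(n_iter):
        u = a / (K @ v)                  # rescale to match the first marginal
        v = b / (K.T @ u)                # rescale to match the second marginal
    return u[:, None] * K * v[None, :]


# Two discretized Gaussian-shaped densities on a common grid (illustrative).
x = np.linspace(0.0, 1.0, 60)
y = np.linspace(0.0, 1.0, 60)
a = np.exp(-0.5 * ((x - 0.3) / 0.10) ** 2)
a /= a.sum()
b = np.exp(-0.5 * ((y - 0.7) / 0.15) ** 2)
b /= b.sum()
cost = (x[:, None] - y[None, :]) ** 2    # squared Euclidean cost

plan = sinkhorn_plan(a, b, cost, eps=0.05)

# Barycentric projection: conditional mean of y given x under the plan,
# used here as a smooth surrogate for the optimal transport map.
T_approx = (plan @ y) / plan.sum(axis=1)
```

The surrogate map is smoother than the unregularized one and is biased for large `eps`; for very small `eps` the kernel underflows and a log-domain implementation would be needed.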

1.5. Applications

The proposed method to compute Wasserstein barycenters is well suited to situations where a population law $\Pi$ on infinitely many probability measures is considered. The Bayesian setting addressed in [Reference Backhoff-Veraguas, Fontbona, Rios and Tobar9] provides a good example of such a situation, i.e. where we need to compute the Wasserstein barycenter of a prior/posterior distribution $\Pi$ on models that has a possibly infinite support.

Further instances of population laws $\Pi$ with infinite support arise in the context of Bayesian deep learning [Reference Wilson and Izmailov54], in which an NN’s weights are sampled from a given law (e.g. Gaussian, uniform, or Laplace); in the case that these NNs parametrize probability distributions, the collection of resulting probability laws is distributed according to a law $\Pi$ with possibly infinite support. Another example is variational autoencoders [Reference Kingma and Welling36], which model the parameters of a law by a simple random variable (usually Gaussian) that is then passed to a decoder NN. In both cases, the support of $\Pi$ is infinite naturally. Furthermore, sampling from $\Pi$ is straightforward in these cases, which eases the implementation of the SGD algorithm.

1.6. Possible extensions

It is in principle possible to define and study SGD methods similar to (2) for the minimization on the space of measures of other functionals than the barycentric objective function, or with respect to other geometries than the 2-Wasserstein one. For instance, [Reference Cazelles, Tobar and Fontbona17] introduces the notion of barycenters of probability measures based on weak optimal transport [Reference Backhoff-Veraguas, Beiglböck and Pammer8, Reference Gozlan and Juillet31, Reference Gozlan, Roberto, Samson and Tetali32] and extends the ideas and algorithm developed here to that setting. A further, natural, example of functionals to consider are the entropy-regularized barycenters dealt with for numerical purposes in some of the aforementioned works and most recently in [Reference Chizat20, Reference Chizat and Vaškevic̆ius22].

In a different vein, it would be interesting to study conditions ensuring the convergence of the algorithm in (2) to stationary points (Karcher means) when the latter is a class that strictly contains the minimum (barycenter). Under suitable conditions, such convergence can be expected to hold by analogy with the behavior of the Euclidean SGD algorithm in general (not necessarily convex) settings [Reference Bottou, Curtis and Nocedal15]. In fact, we believe this question can also be linked to the pseudo-associativity of Karcher means. A deeper study is left for future work.

2. Gradient descent in Wasserstein space: A review

We first survey the gradient descent method for the computation of 2-Wasserstein barycenters. This method will serve as motivation for the subsequent development of the SGD in Section 3. For simplicity, we take ![]() $\Pi$ to be finitely supported. Concretely, we suppose in this section that

$\Pi$ to be finitely supported. Concretely, we suppose in this section that ![]() $\Pi=\sum_{i\leq L}\lambda_i\delta_{m_i}$, with

$\Pi=\sum_{i\leq L}\lambda_i\delta_{m_i}$, with ![]() $L\in\mathbb{N}$,

$L\in\mathbb{N}$, ![]() $\lambda_i\geq 0$, and

$\lambda_i\geq 0$, and ![]() $\sum_{i\leq L}\lambda_i=1$. We define the operator over absolutely continuous measures

$\sum_{i\leq L}\lambda_i=1$. We define the operator over absolutely continuous measures

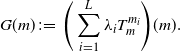

\begin{equation} G(m) \;:\!=\; \Bigg(\sum_{i=1}^{L}\lambda_iT_m^{m_i}\Bigg)(m).\end{equation}

\begin{equation} G(m) \;:\!=\; \Bigg(\sum_{i=1}^{L}\lambda_iT_m^{m_i}\Bigg)(m).\end{equation}

Notice that the fixed points of G are precisely the Karcher means of $\Pi$ presented in the introduction. Thanks to [Reference Álvarez-Esteban, del Barrio, Cuesta-Albertos and Matrán4] the operator G is continuous for the ${W}_2$ distance. Also, if at least one of the L measures $m_i$ has a bounded density, then the unique Wasserstein barycenter $\hat{m}$ of $\Pi$ has a bounded density as well and satisfies $G(\hat{m}) = \hat{m}$. This suggests defining, starting from $\mu_0$, the sequence

\begin{equation} \mu_{n+1} \;:\!=\; G(\mu_n), \quad n\geq 0.\end{equation}

The next result was proven in [Reference Álvarez-Esteban, del Barrio, Cuesta-Albertos and Matrán4, Theorem 3.6] and independently in [Reference Zemel and Panaretos55, Theorem 3, Corollary 2]:

Proposition 1. The sequence $\{\mu_n\}_{n \geq 0}$ in (7) is tight, and every weakly convergent subsequence of $\{\mu_n\}_{n \geq 0}$ converges in ${W}_2$ to an absolutely continuous measure in $\mathcal{W}_{2}(\mathbb{R}^q)$ that is also a Karcher mean. If some $m_i$ has a bounded density, and if there exists a unique Karcher mean, then $\hat{m}$ is the Wasserstein barycenter of $\Pi$ and $W_2(\mu_n,\hat{m}) \rightarrow 0$.

For the reader’s convenience, we present next a counterexample to the uniqueness of Karcher means.

Example 1. In $\mathbb R^2$ we take $\mu_1$ as the uniform measure on $B((\!-\!1,M),\varepsilon)\cup B((1,-M),\varepsilon)$ and $\mu_2$ the uniform measure on $B((\!-\!1,-M),\varepsilon)\cup B((1,M),\varepsilon)$, with $\varepsilon$ a small radius and $M\gg\varepsilon$. Then, if $\Pi=\frac{1}{2}(\delta_{\mu_1}+ \delta_{\mu_2})$, the uniform measure on $B((\!-\!1,0),\varepsilon)\cup B((1,0),\varepsilon)$ and the uniform measure on $B((0,M),\varepsilon)\cup B((0,-M),\varepsilon)$ are two distinct Karcher means.

Thanks to the Riemann-like geometry of ![]() $\mathcal{W}_{2,\mathrm{ac}}(\mathbb{R}^q)$ we can reinterpret the iterations in (7) as a gradient descent step. This was discovered in [Reference Panaretos and Zemel43, Reference Zemel and Panaretos55]. In fact, [Reference Zemel and Panaretos55, Theorem 1] shows that the functional

$\mathcal{W}_{2,\mathrm{ac}}(\mathbb{R}^q)$ we can reinterpret the iterations in (7) as a gradient descent step. This was discovered in [Reference Panaretos and Zemel43, Reference Zemel and Panaretos55]. In fact, [Reference Zemel and Panaretos55, Theorem 1] shows that the functional

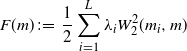

\begin{equation} F(m) \;:\!=\; \frac{1}{2}\sum_{i=1}^{L}\lambda_iW_2^2(m_i,m)\end{equation}

\begin{equation} F(m) \;:\!=\; \frac{1}{2}\sum_{i=1}^{L}\lambda_iW_2^2(m_i,m)\end{equation}

defined on ![]() $\mathcal{W}_2(\mathbb{R}^q)$ has a Fréchet derivative at each point

$\mathcal{W}_2(\mathbb{R}^q)$ has a Fréchet derivative at each point ![]() $m\in\mathcal{W}_{2,\mathrm{ac}}(\mathbb{R}^q)$ given by

$m\in\mathcal{W}_{2,\mathrm{ac}}(\mathbb{R}^q)$ given by

\begin{equation} F^\prime(m) = -\sum_{i=1}^{L}\lambda_i(T_m^{m_i}-I) = I-\sum_{i=1}^{L}\lambda_iT_m^{m_i} \in L^2(m),\end{equation}

\begin{equation} F^\prime(m) = -\sum_{i=1}^{L}\lambda_i(T_m^{m_i}-I) = I-\sum_{i=1}^{L}\lambda_iT_m^{m_i} \in L^2(m),\end{equation}

where I is the identity map in $\mathbb R^q$. More precisely, for such m, when $W_2(\hat{m},m)$ goes to zero,

\begin{equation*} \frac{F(\hat{m}) - F(m) - \int\langle F^\prime(m)(x),T_m^{\hat{m}}(x)-x\rangle\,m({\mathrm{d}} x)}{W_2(\hat{m},m)} \longrightarrow 0,\end{equation*}

thanks to [Reference Ambrosio, Gigli and Savaré6, Corollary 10.2.7]. It follows from Brenier’s theorem [Reference Villani50, Theorem 2.12(ii)] that $\hat{m}$ is a fixed point of G defined in (6) if and only if $F^\prime(\hat{m}) = 0$. The gradient descent sequence with step $\gamma$ starting from $\mu_0 \in \mathcal{W}_{2,\mathrm{ac}}(\mathbb{R}^q)$ is then defined by (cf. [Reference Zemel and Panaretos55])

\begin{equation} \mu_{n+1} \;:\!=\; G_{\gamma}(\mu_n), \quad n\geq 0,\end{equation}

where

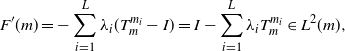

\begin{equation*} G_{\gamma}(m) \;:\!=\; [I + \gamma F^\prime(m)](m) = \Bigg[(1-\gamma)I + \gamma\sum_{i=1}^{L}\lambda_iT_m^{m_i}\Bigg](m) = \Bigg[I + \gamma\sum_{i=1}^{L}\lambda_i(T_m^{m_i}-I)\Bigg](m).\end{equation*}

\begin{equation*} G_{\gamma}(m) \;:\!=\; [I + \gamma F^\prime(m)](m) = \Bigg[(1-\gamma)I + \gamma\sum_{i=1}^{L}\lambda_iT_m^{m_i}\Bigg](m) = \Bigg[I + \gamma\sum_{i=1}^{L}\lambda_i(T_m^{m_i}-I)\Bigg](m).\end{equation*}

Note that, by (9), the iterations in (10) truly correspond to a gradient descent in $\mathcal{W}_2(\mathbb{R}^q)$ for the function in (8). We remark also that, if $\gamma=1$, the sequence in (10) coincides with that in (7), i.e. $G_{1} = G$. These ideas serve as inspiration for the stochastic gradient descent iteration in the next part.
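As an illustration only, the iteration in (10) can be sketched numerically in the one-dimensional case, which is not the general setting of this section. The sketch assumes $q=1$ and $\gamma\in[0,1]$, so that $T_m^{m_i} = Q_{m_i}\circ F_m$ (with $Q$ a quantile function and $F_m$ the distribution function of $m$) and $G_\gamma$ acts on the quantile function of $m$ as a convex combination. The Gaussian measures, the weights, the grid of quantile levels, and all function names are hypothetical choices made for this example.

```python
from statistics import NormalDist

import numpy as np

# Quantile levels in (0, 1) and the standard normal quantile function on them.
grid = (np.arange(200) + 0.5) / 200
z = np.array([NormalDist().inv_cdf(u) for u in grid])

# Hypothetical finitely supported Pi: m_i = N(mean_i, std_i^2) with weights lambda_i.
means = np.array([-2.0, 0.0, 3.0])
stds = np.array([0.5, 1.0, 2.0])
weights = np.array([0.2, 0.3, 0.5])
qs = means[:, None] + stds[:, None] * z  # row i is the quantile function of m_i


def gd_step(q, qs, weights, gamma):
    """One application of G_gamma, written in quantile coordinates (1D only).

    Pushing m forward by (1 - gamma) I + gamma * sum_i lambda_i T_m^{m_i}
    maps its quantile function q to (1 - gamma) q + gamma * sum_i lambda_i q_i.
    """
    return (1.0 - gamma) * q + gamma * (weights @ qs)


def gradient_descent(q0, qs, weights, gamma, n_steps):
    """Iterate mu_{n+1} = G_gamma(mu_n), starting from the quantile function q0."""
    q = q0.copy()
    for _ in range(n_steps):
        q = gd_step(q, qs, weights, gamma)
    return q


q_bar = weights @ qs  # quantile function of the 1D barycenter
q_half = gradient_descent(z, qs, weights, gamma=0.5, n_steps=40)
q_one = gradient_descent(z, qs, weights, gamma=1.0, n_steps=1)
```

In this one-dimensional sketch a single step with $\gamma=1$ already returns the barycenter, while $\gamma=0.5$ halves the distance to it at every step. In higher dimensions the maps $T_m^{m_i}$ have no such closed form in general and must be computed or approximated.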

3. Stochastic gradient descent for barycenters in Wasserstein space

The method presented in Section 2 is well suited to calculating the empirical barycenter. For the estimation of a population barycenter (i.e. when $\Pi$ does not have finite support) we would need to construct a convergent sequence of empirical barycenters, which can be computationally expensive. Furthermore, if a new sample from $\Pi$ arrives, the previous method would need to recalculate the barycenter from scratch. To address these challenges, we follow the ideas of stochastic algorithms [Reference Robbins and Monro46], widely adopted in machine learning [Reference Bottou, Curtis and Nocedal15], and define a stochastic version of the gradient descent sequence for the barycenter of $\Pi$.

Recall that for $\mu_0 \in K_\Pi$ (in particular, $\mu_0$ absolutely continuous), $m_k \stackrel{\text{i.i.d.}}{\sim} \Pi$ defined in some probability space $(\Omega, \mathcal{F}, \mathbb{P})$, and $\gamma_k > 0$ for $k \geq 0$, we constructed the SGD sequence as $\mu_{k+1} \;:\!=\; \big[(1-\gamma_k)I + \gamma_k T_{\mu_k}^{m_k}\big](\mu_k)$ for $k \geq 0$. The key ingredients for the convergence analysis of the above SGD iterations are the functions

\begin{equation} F(\mu) \;:\!=\; \frac{1}{2}\int W_2^2(\mu,m)\,\Pi({\mathrm{d}} m), \qquad F^\prime(\mu) \;:\!=\; -\int(T_{\mu}^{m}-I)\,\Pi({\mathrm{d}} m),\end{equation}

the natural analogues to the eponymous objects in (8) and (9). In this setting, we can formally (or rigorously, under additional assumptions) check that $F'$ is the actual Fréchet derivative of F, and this justifies naming $\{\mu_k\}_k$ an SGD sequence. In the following, the notation F and $F'$ always refers to the functions just defined. Observe that the population barycenter $\hat \mu$ is the unique minimizer of F. The following lemma justifies that $F'$ is well defined and that $\|F'(\hat\mu)\|_{L^2(\hat \mu)}=0$, and in particular that $\hat \mu$ is a Karcher mean. This is a generalization of the corresponding result in [Reference Álvarez-Esteban, del Barrio, Cuesta-Albertos and Matrán4] where only the case $|\text{supp}(\Pi)|<\infty$ is covered.
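The SGD recursion recalled above can be illustrated by a minimal one-dimensional sketch. It assumes $q=1$, where $T_{\mu_k}^{m_k} = Q_{m_k}\circ F_{\mu_k}$ and the step $\mu_{k+1} = [(1-\gamma_k)I + \gamma_k T_{\mu_k}^{m_k}](\mu_k)$ becomes a convex combination of quantile functions. The population law (random Gaussians), the step sizes $\gamma_k = 1/(k+2)$ (chosen so that $\sum_k\gamma_k=\infty$ and $\sum_k\gamma_k^2<\infty$, which is assumed here to be compatible with the step conditions of the paper), and all names are hypothetical.

```python
from statistics import NormalDist

import numpy as np

rng = np.random.default_rng(0)
grid = (np.arange(200) + 0.5) / 200
z = np.array([NormalDist().inv_cdf(u) for u in grid])  # N(0, 1) quantiles


def sample_quantile_fn(rng):
    """Draw m ~ Pi and return its quantile function on the grid.

    Hypothetical Pi: m = N(a, s^2) with a ~ N(1, 1) and s ~ Uniform(0.5, 1.5),
    so Pi has infinite support and is easy to sample from.
    """
    a = rng.normal(1.0, 1.0)
    s = rng.uniform(0.5, 1.5)
    return a + s * z


def sgd(q0, n_steps, rng):
    """Run mu_{k+1} = [(1 - gamma_k) I + gamma_k T_{mu_k}^{m_k}](mu_k) in 1D."""
    q = q0.copy()
    for k in range(n_steps):
        gamma_k = 1.0 / (k + 2)
        q = (1.0 - gamma_k) * q + gamma_k * sample_quantile_fn(rng)
    return q


q_sgd = sgd(z, 20_000, rng)

# In 1D the population barycenter has quantile function E[Q_m], here N(E a, (E s)^2).
q_bar = 1.0 + 1.0 * z
w2_error = np.sqrt(np.mean((q_sgd - q_bar) ** 2))
```

Each step uses a single fresh sample from $\Pi$, so a newly arriving sample only requires one more update rather than a recomputation from scratch.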

Lemma 1. Let $\tilde\Pi$ be a probability measure concentrated on $\mathcal W_{2,\mathrm{ac}}(\mathbb R^q)$. There exists a jointly measurable function $\mathcal W_{2,\mathrm{ac}}(\mathbb R^q)\times\mathcal W_2(\mathbb R^q)\times\mathbb R^q \ni (\mu,m,x) \mapsto T^m_\mu(x)$ that is $\mu({\mathrm{d}} x)\,\Pi({\mathrm{d}} m)\,\tilde\Pi({\mathrm{d}}\mu)$-a.s. equal to the unique optimal transport map from $\mu$ to m at x. Furthermore, letting $\hat\mu$ be a barycenter of $\Pi$, $x = \int T^m_{\hat\mu}(x)\,\Pi({\mathrm{d}} m),$ $\hat\mu({\mathrm{d}} x)$-a.s.

Proof. The existence of a jointly measurable version of the unique optimal maps is proved in [Reference Fontbona, Guérin and Méléard30]. Let us prove the last assertion. Letting ![]() $T^m_{\hat\mu}\;=\!:\;T^m$, we have, by Brenier’s theorem [Reference Villani50, Theorem 2.12(ii)],

$T^m_{\hat\mu}\;=\!:\;T^m$, we have, by Brenier’s theorem [Reference Villani50, Theorem 2.12(ii)],

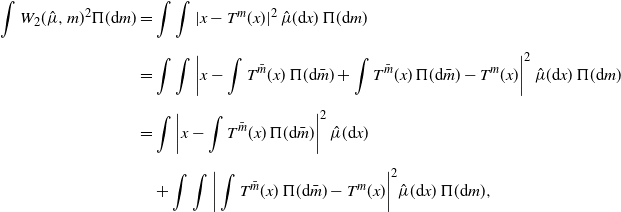

\begin{align*} \int W_2(\hat\mu,m)^2\Pi({\mathrm{d}} m) & = \int\int|x-T^m(x)|^2\,\hat\mu({\mathrm{d}} x)\,\Pi({\mathrm{d}} m) \\[5pt] & = \int\int\bigg|x-\int T^{\bar m}(x)\,\Pi({\mathrm{d}}{\bar m}) + \int T^{\bar m}(x)\,\Pi({\mathrm{d}}{\bar m}) - T^m(x)\bigg|^2\,\hat\mu({\mathrm{d}} x)\,\Pi({\mathrm{d}} m) \\[5pt] & = \int\bigg|x-\int T^{\bar m}(x)\,\Pi({\mathrm{d}}{\bar m})\bigg|^2\,\hat\mu({\mathrm{d}} x) \\[5pt] & \quad + \int\int\bigg|\int T^{\bar m}(x)\,\Pi({\mathrm{d}}{\bar m}) - T^m(x)\bigg|^2\hat\mu({\mathrm{d}} x)\,\Pi({\mathrm{d}} m), \end{align*}

\begin{align*} \int W_2(\hat\mu,m)^2\Pi({\mathrm{d}} m) & = \int\int|x-T^m(x)|^2\,\hat\mu({\mathrm{d}} x)\,\Pi({\mathrm{d}} m) \\[5pt] & = \int\int\bigg|x-\int T^{\bar m}(x)\,\Pi({\mathrm{d}}{\bar m}) + \int T^{\bar m}(x)\,\Pi({\mathrm{d}}{\bar m}) - T^m(x)\bigg|^2\,\hat\mu({\mathrm{d}} x)\,\Pi({\mathrm{d}} m) \\[5pt] & = \int\bigg|x-\int T^{\bar m}(x)\,\Pi({\mathrm{d}}{\bar m})\bigg|^2\,\hat\mu({\mathrm{d}} x) \\[5pt] & \quad + \int\int\bigg|\int T^{\bar m}(x)\,\Pi({\mathrm{d}}{\bar m}) - T^m(x)\bigg|^2\hat\mu({\mathrm{d}} x)\,\Pi({\mathrm{d}} m), \end{align*}

where we used the fact that

The term in the last line is an upper bound for

We conclude that ![]() $\int\big|x-\int T^{\bar m}(x)\,\Pi({\mathrm{d}}{\bar m})\big|^2\,\hat\mu({\mathrm{d}} x)$, as required.

$\int\big|x-\int T^{\bar m}(x)\,\Pi({\mathrm{d}}{\bar m})\big|^2\,\hat\mu({\mathrm{d}} x)$, as required.

Notice that Lemma 1 ensures that the SGD sequence $\{\mu_k\}_k$ is well defined as a sequence of (measurable) $\mathcal{W}_2$-valued random variables. More precisely, denoting by $\mathcal F_{0}$ the trivial sigma-algebra and $\mathcal F_{k+1}$, $k\geq 0$, the sigma-algebra generated by $m_0,\dots,m_{k}$, we can inductively apply the first part of Lemma 1 with $\tilde{\Pi}=\mathrm{Law}(\mu_{k})$ to check that $T_{\mu_k}^{m_k}(x)$ is measurable with respect to $\mathcal F_{k+1}\otimes \mathcal B (\mathbb{R}^q)$, where $\mathcal B$ stands for the Borel sigma-field. This implies that both $\mu_{k}$ and $\|F^\prime(\mu_{k})\|^2_{L^2(\mu_{k})}$ are measurable with respect to $\mathcal F_{k}$.

The next proposition suggests that, in expectation, the sequence ![]() $\{F(\mu_k)\}_k$ is essentially decreasing. This is a first insight into the behavior of the sequence

$\{F(\mu_k)\}_k$ is essentially decreasing. This is a first insight into the behavior of the sequence ![]() $\{\mu_k\}_k$.

$\{\mu_k\}_k$.

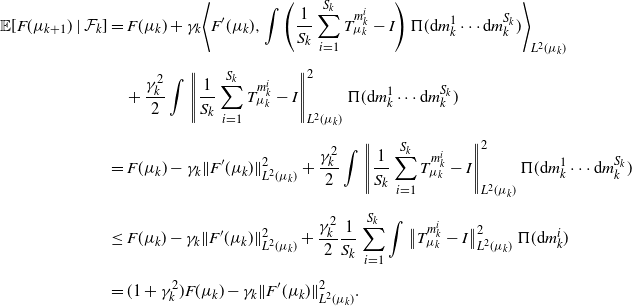

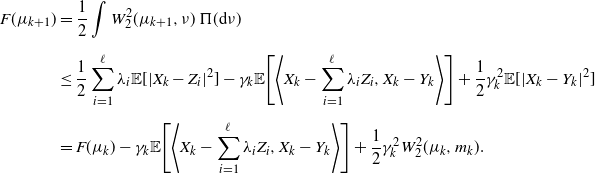

Proposition 2. The SGD sequence in (2) satisfies, almost surely,

Proof. Let ![]() $\nu\in\text{supp}(\Pi)$. Clearly,

$\nu\in\text{supp}(\Pi)$. Clearly, ![]() $\big(\big[(1-\gamma_k)I + \gamma_k T_{\mu_k}^{m_k}\big],T_{\mu_k}^\nu\big)(\mu_k)$ is a feasible (not necessarily optimal) coupling with first and second marginals

$\big(\big[(1-\gamma_k)I + \gamma_k T_{\mu_k}^{m_k}\big],T_{\mu_k}^\nu\big)(\mu_k)$ is a feasible (not necessarily optimal) coupling with first and second marginals ![]() $\mu_{k+1}$ and

$\mu_{k+1}$ and ![]() $\nu$ respectively. Writing

$\nu$ respectively. Writing ![]() $O_m\;:\!=\; T_{\mu_k}^{m} - I$, we have

$O_m\;:\!=\; T_{\mu_k}^{m} - I$, we have

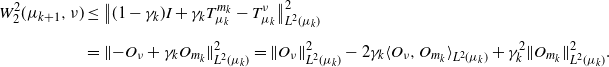

\begin{align*} W_2^2(\mu_{k+1},\nu) & \leq \big\|(1-\gamma_k)I + \gamma_k T_{\mu_k}^{m_k} - T_{\mu_k}^{\nu}\big\|^2_{L^2(\mu_k)} \\[5pt] & = \|{-}O_{\nu} + \gamma_k O_{m_k}\|^2_{L^2(\mu_k)} = \|O_{\nu}\|^2_{L^2(\mu_k)} - 2\gamma_k\langle O_{\nu},O_{m_k}\rangle_{L^2(\mu_k)} + \gamma_k^2\|O_{m_k}\|^2_{L^2(\mu_k)}. \end{align*}

\begin{align*} W_2^2(\mu_{k+1},\nu) & \leq \big\|(1-\gamma_k)I + \gamma_k T_{\mu_k}^{m_k} - T_{\mu_k}^{\nu}\big\|^2_{L^2(\mu_k)} \\[5pt] & = \|{-}O_{\nu} + \gamma_k O_{m_k}\|^2_{L^2(\mu_k)} = \|O_{\nu}\|^2_{L^2(\mu_k)} - 2\gamma_k\langle O_{\nu},O_{m_k}\rangle_{L^2(\mu_k)} + \gamma_k^2\|O_{m_k}\|^2_{L^2(\mu_k)}. \end{align*}

Evaluating ![]() $\mu_{k+1}$ on the functional F, thanks to the previous inequality we have

$\mu_{k+1}$ on the functional F, thanks to the previous inequality we have

\begin{align*} F(\mu_{k+1}) & = \frac{1}{2}\int W_{2}^2(\mu_{k+1},\nu)\,\Pi({\mathrm{d}}\nu) \\[5pt] & \leq \frac{1}{2}\int\|O_{\nu}\|^2_{L^2(\mu_k)}\,\Pi({\mathrm{d}}\nu) - \gamma_k\bigg\langle\int O_{\nu}\,\Pi({\mathrm{d}}\nu),O_{m_k}\bigg\rangle_{L^2(\mu_k)} + \frac{\gamma_k^2}{2}\|O_{m_k}\|^2_{L^2(\mu_k)} \\[5pt] & = F(\mu_k) + \gamma_k\langle F^\prime(\mu_k),O_{m_k}\rangle_{L^2(\mu_k)} + \frac{\gamma_k^2}{2}\|O_{m_k}\|^2_{L^2(\mu_k)}. \end{align*}

\begin{align*} F(\mu_{k+1}) & = \frac{1}{2}\int W_{2}^2(\mu_{k+1},\nu)\,\Pi({\mathrm{d}}\nu) \\[5pt] & \leq \frac{1}{2}\int\|O_{\nu}\|^2_{L^2(\mu_k)}\,\Pi({\mathrm{d}}\nu) - \gamma_k\bigg\langle\int O_{\nu}\,\Pi({\mathrm{d}}\nu),O_{m_k}\bigg\rangle_{L^2(\mu_k)} + \frac{\gamma_k^2}{2}\|O_{m_k}\|^2_{L^2(\mu_k)} \\[5pt] & = F(\mu_k) + \gamma_k\langle F^\prime(\mu_k),O_{m_k}\rangle_{L^2(\mu_k)} + \frac{\gamma_k^2}{2}\|O_{m_k}\|^2_{L^2(\mu_k)}. \end{align*}

Taking conditional expectation with respect to ![]() $\mathcal F_k$, and as

$\mathcal F_k$, and as ![]() $m_k$ is independently sampled from this sigma-algebra, we conclude that

$m_k$ is independently sampled from this sigma-algebra, we conclude that

\begin{align*} \mathbb{E}[F(\mu_{k+1})\mid\mathcal F_k] & \leq F(\mu_k) + \gamma_k\bigg\langle F^\prime(\mu_k),\int O_{m}\,\Pi({\mathrm{d}} m)\bigg\rangle_{L^2(\mu_k)} + \frac{\gamma_k^2}{2}\int\lVert |O_{m}\rVert^2_{L^2(\mu_k)}\,\Pi({\mathrm{d}} m) \\[5pt] & = (1+\gamma_k^2)F(\mu_k) - \gamma_k\|F^\prime(\mu_k)\|^2_{L^2(\mu_k)}. \end{align*}

\begin{align*} \mathbb{E}[F(\mu_{k+1})\mid\mathcal F_k] & \leq F(\mu_k) + \gamma_k\bigg\langle F^\prime(\mu_k),\int O_{m}\,\Pi({\mathrm{d}} m)\bigg\rangle_{L^2(\mu_k)} + \frac{\gamma_k^2}{2}\int\lVert |O_{m}\rVert^2_{L^2(\mu_k)}\,\Pi({\mathrm{d}} m) \\[5pt] & = (1+\gamma_k^2)F(\mu_k) - \gamma_k\|F^\prime(\mu_k)\|^2_{L^2(\mu_k)}. \end{align*}
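The bound of Proposition 2 can be checked numerically in a simple case. The following sketch assumes $q=1$ and a finitely supported $\Pi$, with measures represented by their quantile functions on a grid, so that $W_2^2$ is a mean of squared differences and the conditional expectation is a finite weighted sum. In this one-dimensional setting the coupling used in the proof is optimal, so the inequality is expected to hold with equality up to rounding. The random quantile functions, the weights, and the step size are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
n_grid, n_measures = 100, 5

# Hypothetical quantile functions: each sorted row is nondecreasing in the level u.
qs = np.sort(rng.normal(size=(n_measures, n_grid)), axis=1)
weights = np.full(n_measures, 1.0 / n_measures)  # Pi = sum_j lambda_j delta_{m_j}
q = np.sort(rng.normal(size=n_grid))             # quantile function of mu_k
gamma = 0.3


def F(q):
    """F(mu) = 1/2 * sum_i lambda_i W_2^2(mu, m_i), with W_2^2 = mean((q - q_i)^2)."""
    return 0.5 * (weights @ np.mean((q - qs) ** 2, axis=1))


# ||F'(mu_k)||^2 in L^2(mu_k): in 1D, F'(mu_k) corresponds to q - sum_i lambda_i q_i.
grad_sq = np.mean((q - weights @ qs) ** 2)

# E[F(mu_{k+1}) | F_k]: average over the draw m_k = m_j, taken with probability lambda_j.
lhs = sum(w * F((1.0 - gamma) * q + gamma * qj) for w, qj in zip(weights, qs))
rhs = (1.0 + gamma ** 2) * F(q) - gamma * grad_sq
```

Comparing `lhs` and `rhs` for several step sizes shows how the term $\gamma_k^2F(\mu_k)$ competes with the descent term $\gamma_k\|F'(\mu_k)\|^2_{L^2(\mu_k)}$.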

The next lemma states some key continuity properties of the functions F and $F'$. For this result, Assumption 2 can be dropped and it is only required that $\int\int\|x\|^2\,m({\mathrm{d}} x)\,\Pi({\mathrm{d}} m)<\infty$.

Lemma 2. Let $(\rho_n)_n\subset\mathcal{W}_{2,\mathrm{ac}}(\mathbb{R}^q)$ be a sequence converging with respect to $W_2$ to $\rho\in\mathcal{W}_{2,\mathrm{ac}}(\mathbb{R}^q)$. Then, as $n\to\infty$,

(i) $F(\rho_n)\to F(\rho)$;

(ii) $\|F'(\rho_n)\|_{L^2(\rho_n)}\to\|F'(\rho)\|_{L^2(\rho)}$.

Proof. We prove both convergence claims using Skorokhod’s representation theorem. Thanks to that result, in a given probability space $(\Omega,\mathcal{G},{\mathbb{P}})$ we can simultaneously construct a sequence of random vectors $(X_n)_n$ of laws $(\rho_n)_n$ and a random variable X of law $\rho$ such that $(X_n)_n$ converges ${\mathbb{P}}$-a.s. to X. Moreover, by [Reference Cuesta-Albertos, Matrán and Tuero-Diaz23, Theorem 3.4], the sequence $(T_{\rho_n}^m(X_n))_n$ converges ${\mathbb{P}}$-a.s. to $T_{\rho}^m(X)$. Notice that, for all $n\in\mathbb{N}$, $T_{\rho_n}^m(X_n)$ is distributed according to the law m, and the same holds true for $T_{\rho}^m(X)$.

We now enlarge the probability space $(\Omega,\mathcal{G},{\mathbb{P}})$ (maintaining the same notation for simplicity) with an independent random variable $\textbf{m}$ in $\mathcal{W}_2(\mathbb{R}^q)$ with law $\Pi$ (thus independent of $(X_n)_n$ and X). Applying Lemma 1 with $\tilde{\Pi}=\delta_{\rho_n}$ for each n, or with $\tilde{\Pi}=\delta_{\rho}$, we can show that $\big(X_n,T_{\rho_n}^{\textbf{m}}(X_n)\big)$ and $\big(X,T_{\rho}^{\textbf{m}}(X)\big)$ are random variables in $(\Omega,\mathcal{G},{\mathbb{P}})$. By conditioning on $\{\textbf{m}=m\}$, we further obtain that $\big(X_n,T_{\rho_n}^{\textbf{m}}(X_n)\big)_{n\in\mathbb{N}}$ converges ${\mathbb{P}}$-a.s. to $\big(X,T_{\rho}^{\textbf{m}}(X)\big)$.

Notice that ![]() $\sup_n\mathbb{E}\big(\|X_n\|^2\textbf{1}_{\|X_n\|^2\geq M}\big) = \sup_n\int_{\|x\|^2\geq M}\|x\|^2\,\rho_n({\mathrm{d}} x) \to 0$ as

$\sup_n\mathbb{E}\big(\|X_n\|^2\textbf{1}_{\|X_n\|^2\geq M}\big) = \sup_n\int_{\|x\|^2\geq M}\|x\|^2\,\rho_n({\mathrm{d}} x) \to 0$ as ![]() $M\to\infty$ since

$M\to\infty$ since ![]() $(\rho_n)_n$ converges in

$(\rho_n)_n$ converges in ![]() $\mathcal{W}_2(\mathcal{X})$, while, upon conditioning on

$\mathcal{W}_2(\mathcal{X})$, while, upon conditioning on ![]() $\textbf{m}$,

$\textbf{m}$,

by dominated convergence, since ![]() $\int_{\|x\|^2\geq M}\|x\|^2\,m({\mathrm{d}} x)\leq\int\|x\|^2\,m({\mathrm{d}} x) = W_2^2(m,\delta_0)$ and

$\int_{\|x\|^2\geq M}\|x\|^2\,m({\mathrm{d}} x)\leq\int\|x\|^2\,m({\mathrm{d}} x) = W_2^2(m,\delta_0)$ and ![]() $\Pi\in\mathcal{W}_2(\mathcal{W}_2(\mathcal{X}))$. By Vitalli’s convergence theorem, we deduce that

$\Pi\in\mathcal{W}_2(\mathcal{W}_2(\mathcal{X}))$. By Vitalli’s convergence theorem, we deduce that ![]() $(X_n,T_{\rho_n}^{\textbf{m}}(X_n))_{n\in\mathbb{N}}$ converges to

$(X_n,T_{\rho_n}^{\textbf{m}}(X_n))_{n\in\mathbb{N}}$ converges to ![]() $(X,T_{\rho}^{\textbf{m}}(X))$ in

$(X,T_{\rho}^{\textbf{m}}(X))$ in ![]() $L^2(\Omega,\mathcal{G},{\mathbb{P}})$. In particular, as

$L^2(\Omega,\mathcal{G},{\mathbb{P}})$. In particular, as ![]() $n\to \infty$,

$n\to \infty$,

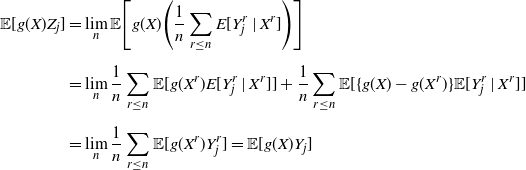

which proves the convergence in (i). Now denoting by ![]() $\mathcal{G}_{\infty}$ the sigma-field generated by

$\mathcal{G}_{\infty}$ the sigma-field generated by ![]() $(X_1,X_2,\dots)$, we also obtain that

$(X_1,X_2,\dots)$, we also obtain that

Observe now that the following identities hold:

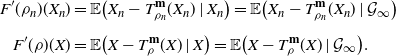

\begin{align*} F'(\rho_n)(X_n) & = \mathbb{E}\big(X_n-T_{\rho_n}^{\textbf{m}}(X_n)\mid X_n\big) = \mathbb{E}\big(X_n-T_{\rho_n}^{\textbf{m}}(X_n)\mid\mathcal{G}_{\infty}\big) \\[5pt] F'(\rho)(X) & = \mathbb{E}\big(X-T_{\rho}^{\textbf{m}}(X)\mid X\big) = \mathbb{E}\big(X-T_{\rho}^{\textbf{m}}(X)\mid\mathcal{G}_{\infty}\big). \end{align*}

\begin{align*} F'(\rho_n)(X_n) & = \mathbb{E}\big(X_n-T_{\rho_n}^{\textbf{m}}(X_n)\mid X_n\big) = \mathbb{E}\big(X_n-T_{\rho_n}^{\textbf{m}}(X_n)\mid\mathcal{G}_{\infty}\big) \\[5pt] F'(\rho)(X) & = \mathbb{E}\big(X-T_{\rho}^{\textbf{m}}(X)\mid X\big) = \mathbb{E}\big(X-T_{\rho}^{\textbf{m}}(X)\mid\mathcal{G}_{\infty}\big). \end{align*}

The convergence in (ii) follows from (12), ![]() $\mathbb{E}(F'(\rho_n)(X_n))^2 = \|F'(\rho_n)\|_{L^2(\rho_n)}$, and

$\mathbb{E}(F'(\rho_n)(X_n))^2 = \|F'(\rho_n)\|_{L^2(\rho_n)}$, and ![]() $\mathbb{E}(F'(\rho)(X))^2 = \|F'(\rho)\|_{L^2(\rho)}$.

$\mathbb{E}(F'(\rho)(X))^2 = \|F'(\rho)\|_{L^2(\rho)}$.

We now proceed to prove the first of our two main results.

Proof of Theorem 1. Let us denote by ![]() $\hat\mu$ the unique barycenter, write

$\hat\mu$ the unique barycenter, write ![]() $\hat{F} \;:\!=\; F(\hat{\mu})$, and introduce

$\hat{F} \;:\!=\; F(\hat{\mu})$, and introduce ![]() $h_t \;:\!=\; F(\mu_t) - \hat{F} \geq 0$ and

$h_t \;:\!=\; F(\mu_t) - \hat{F} \geq 0$ and ![]() $\alpha_t \;:\!=\; \prod_{i=1}^{t-1}{1}/({1+\gamma_i^2})$. Thanks to the condition in (3), the sequence

$\alpha_t \;:\!=\; \prod_{i=1}^{t-1}{1}/({1+\gamma_i^2})$. Thanks to the condition in (3), the sequence ![]() $(\alpha_t)$ converges to some finite

$(\alpha_t)$ converges to some finite ![]() $\alpha_\infty>0$, as can be verified simply by applying logarithms. By Proposition 2,

$\alpha_\infty>0$, as can be verified simply by applying logarithms. By Proposition 2,

from which, after multiplying by ![]() $\alpha_{t+1}$, the following bound is derived:

$\alpha_{t+1}$, the following bound is derived:

Now defining $\hat{h}_t\;:\!=\; \alpha_t h_t - \sum_{i=1}^{t}\alpha_i\gamma_{i-1}^2\hat{F}$, we deduce from (13) that $\mathbb{E}[\hat{h}_{t+1}-\hat{h}_t\mid\mathcal F_t] \leq 0$, namely that $(\hat{h}_t)_{t\geq 0}$ is a supermartingale with respect to $(\mathcal F_t)$. The fact that $(\alpha_t)$ is convergent, together with the condition in (3), ensures that $\sum_{i=1}^{\infty}\alpha_i\gamma_{i-1}^2\hat{F}<\infty$, and thus $\hat{h}_t$ is uniformly lower-bounded by a constant. Therefore, the supermartingale convergence theorem [Reference Williams53, Corollary 11.7] implies the existence of $\hat{h}_{\infty}\in L^1$ such that $\hat{h}_t\to \hat{h}_{\infty}$ a.s. But then, necessarily, $h_t\to h_{\infty}$ a.s. for some non-negative random variable $h_{\infty}\in L^1$.
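The claim that $(\alpha_t)$ has a strictly positive limit can be spelled out as follows. This is only a sketch, and it assumes (the condition is not restated in this section) that (3) is the square-summability $\sum_{t}\gamma_t^2<\infty$ of the step sizes. Using $\log(1+u)\leq u$ for $u\geq 0$,

$$ -\log\alpha_t = \sum_{i=1}^{t-1}\log(1+\gamma_i^2) \leq \sum_{i=1}^{t-1}\gamma_i^2 \leq \sum_{i=1}^{\infty}\gamma_i^2 < \infty. $$

Since $-\log\alpha_t$ is non-decreasing in t and bounded, it converges, so $(\alpha_t)$ decreases to a limit $\alpha_\infty \geq \exp\{-\sum_{i\geq 1}\gamma_i^2\} > 0$. Under the same assumption, $0<\alpha_i\leq 1$ gives $\sum_{i=1}^{\infty}\alpha_i\gamma_{i-1}^2\hat{F} \leq \hat{F}\sum_{i\geq 0}\gamma_i^2 < \infty$, which is the summability used for the lower bound on $\hat{h}_t$.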

Thus, our goal now is to prove that $h_{\infty}=0$ a.s. Taking expectations in (13) and summing over t, so that the left-hand side telescopes, we obtain

Then, taking liminf, applying Fatou on the left-hand side and monotone convergence on the right-hand side, we obtain

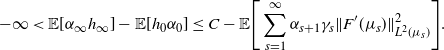

$$ -\infty

< \mathbb E[\alpha_{\infty}h_{\infty}] - \mathbb{E}[h_0\alpha_0] \leq C - \mathbb E\Bigg[\sum_{s=1}^\infty\alpha_{s+1}\gamma_s\|F^\prime(\mu_s)\|^2_{L^2(\mu_s)}\Bigg]. $$

$$ -\infty

< \mathbb E[\alpha_{\infty}h_{\infty}] - \mathbb{E}[h_0\alpha_0] \leq C - \mathbb E\Bigg[\sum_{s=1}^\infty\alpha_{s+1}\gamma_s\|F^\prime(\mu_s)\|^2_{L^2(\mu_s)}\Bigg]. $$

In particular, since $(\alpha_t)$ is bounded away from 0, we have

Note that ${\mathbb{P}}(\liminf_{t\to \infty}\|F^\prime(\mu_t)\|^2_{L^2(\mu_t)}>0)>0$ would be at odds with the conditions in (14) and (4), so

Observe also that, from Assumption 2 and Lemma 2, we have

Indeed, we can see that the set $\{\rho\colon F(\rho) \geq \hat F + \varepsilon\}\cap K_\Pi$ is $W_2$-compact, using Lemma 2(i), and then check that the function $\rho\mapsto\|F^\prime(\rho)\|^2_{L^2(\rho)}$ attains its minimum on it, using part (ii) of that result. That minimum cannot be zero, as otherwise we would have obtained a Karcher mean that is not equal to the barycenter (contradicting the uniqueness of the Karcher mean). Note also that, a.s., $\mu_t\in K_\Pi$ for each t, by the geodesic convexity part of Assumption 2. We deduce the following a.s. relationships between events:

\begin{align*} \{h_{\infty} \geq 2\varepsilon\} & \subset\{\mu_t\in\{\rho\colon F(\rho) \geq \hat F + \varepsilon\} \cap K_\Pi \text{ for all } t \mbox{ large enough} \} \\[5pt] & \subset \bigcup_{\ell\in\mathbb{N}}\big\{\|F^\prime(\mu_t)\|^2_{L^2(\mu_t)} > 1/\ell\colon \text{for all } t \mbox{ large enough}\big\} \\[5pt] & \subset \Big\{\liminf_{t\to\infty}\|F^\prime(\mu_t)\|^2_{L^2(\mu_t)}>0\Big\}, \end{align*}


where (16) was used to obtain the second inclusion. It follows using (15) that ${\mathbb{P}}(h_{\infty}\geq2\varepsilon)=0$ for every $\varepsilon>0$, and hence $h_{\infty}=0$ as required.

To conclude, we use the fact that the sequence $\{\mu_t\}_t$ is a.s. contained in the $W_2$-compact set $K_\Pi$ by Assumption 2, and the first convergence in Lemma 2, to deduce that the limit $\tilde{\mu}$ of any convergent subsequence satisfies $F(\tilde{\mu})-\hat F=h_{\infty}=0$, and then $F(\tilde\mu)=\hat F$. Hence, $\tilde{\mu}=\hat{\mu}$ by the uniqueness of the barycenter. This implies that $\mu_t\to\hat{\mu}$ in $\mathcal{W}_2(\mathcal{X})$ a.s. as $t\to \infty$.
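The convergence just proved can be illustrated numerically in a case where everything is explicit. The sketch below is not the paper's experiment. It assumes that the iteration has the form $\mu_{t+1}=((1-\gamma_t)I+\gamma_t T_{\mu_t}^{m_t})(\mu_t)$ with $m_t\sim\Pi$ i.i.d., that $\Pi$ is uniform on a finite family of one-dimensional Gaussians, and that $\gamma_t=1/(t+1)$. The function names and the example family are hypothetical.

```python
import random


def barycenter_1d_gaussians(family):
    """Barycenter of the uniform law on `family`, a list of (mean, std) pairs.

    In dimension one the barycenter averages quantile functions, so for
    Gaussians it is N(average mean, (average std)^2).
    """
    n = len(family)
    return (sum(a for a, _ in family) / n, sum(s for _, s in family) / n)


def sgd_barycenter_1d_gaussians(family, steps, seed=0):
    """Run the assumed stochastic gradient iteration and return (mean, std).

    The optimal map from N(a, s^2) to N(b, r^2) is x -> b + (r / s) * (x - a).
    Pushing N(a, s^2) through (1 - g) * I + g * T therefore gives a Gaussian
    with mean (1 - g) * a + g * b and std (1 - g) * s + g * r.
    """
    rng = random.Random(seed)
    mean, std = rng.choice(family)  # mu_0 is drawn from Pi
    for t in range(1, steps + 1):
        gamma = 1.0 / (t + 1)  # sum gamma_t = inf, sum gamma_t^2 < inf
        b, r = rng.choice(family)  # m_t sampled from Pi
        mean = (1.0 - gamma) * mean + gamma * b
        std = (1.0 - gamma) * std + gamma * r
    return mean, std


if __name__ == "__main__":
    family = [(0.0, 1.0), (2.0, 3.0), (4.0, 0.5)]
    print("SGD iterate:", sgd_barycenter_1d_gaussians(family, steps=20000))
    print("barycenter: ", barycenter_1d_gaussians(family))
```

With this particular step size the iterate is a running average of the sampled parameters, so in this toy case the convergence also follows from the law of large numbers.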

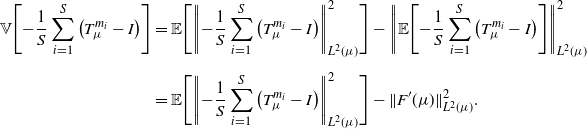

Remark 1. Observe that, for a fixed $\mu\in\mathcal{W}_{2,\mathrm{ac}}(\mathcal{X})$ and a random $m\sim\Pi$, the random variable $-(T_{\mu}^{m}-I)(x)$ is an unbiased estimator of $F^\prime(\mu)(x)$ for $\mu({\mathrm{d}} x)$-almost every $x\in\mathbb{R}^q$. A natural way to jointly quantify the pointwise variances of these estimators is through the integrated variance,

which is the equivalent (for unbiased estimators) of the mean integrated square error from non-parametric statistics [Reference Wasserman52]. Simple computations yield the following expression for it, which will be useful in the next two sections:

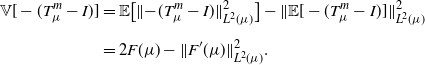

\begin{align} \mathbb{V}[-(T_{\mu}^{m}-I)] & = \mathbb{E}\big[\|{-}(T_{\mu}^{m}-I)\|_{L^2(\mu)}^2\big] - \|\mathbb{E}[-(T_{\mu}^{m}-I)]\|_{L^2(\mu)}^2 \notag \\[5pt] & = 2F(\mu) - \|F^\prime(\mu)\|_{L^2(\mu)}^2. \end{align}

\begin{align} \mathbb{V}[-(T_{\mu}^{m}-I)] & = \mathbb{E}\big[\|{-}(T_{\mu}^{m}-I)\|_{L^2(\mu)}^2\big] - \|\mathbb{E}[-(T_{\mu}^{m}-I)]\|_{L^2(\mu)}^2 \notag \\[5pt] & = 2F(\mu) - \|F^\prime(\mu)\|_{L^2(\mu)}^2. \end{align}
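The identity for the integrated variance can be sanity-checked numerically. The sketch below is an illustration under stated assumptions, not part of the paper. It assumes $F(\mu)=\frac12\int W_2^2(\mu,m)\,\Pi({\mathrm{d}} m)$, which is what the factor $2F(\mu)$ suggests, and takes $\Pi$ uniform on a finite family of one-dimensional Gaussians, for which $W_2^2$ and $T_\mu^m$ are explicit. All function names are hypothetical.

```python
import random


def transport_map(src, dst):
    """Optimal map between 1D Gaussians given as (mean, std) pairs."""
    (a, s), (b, r) = src, dst
    return lambda x: b + (r / s) * (x - a)


def w2_squared(p, q):
    """Squared 2-Wasserstein distance between 1D Gaussians (mean, std)."""
    return (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2


def variance_from_identity(mu, family):
    """Evaluate 2 F(mu) - ||F'(mu)||^2 for Pi uniform on `family`."""
    n = len(family)
    two_f = sum(w2_squared(mu, m) for m in family) / n
    # The averaged map E[T_mu^m] is the optimal map onto the Gaussian with
    # averaged parameters, so ||F'(mu)||^2 is a squared W2 distance as well.
    averaged = (sum(b for b, _ in family) / n, sum(r for _, r in family) / n)
    return two_f - w2_squared(mu, averaged)


def variance_by_sampling(mu, family, samples=100000, seed=0):
    """Monte Carlo estimate of the integral over mu of Var_m[T_mu^m(x)]."""
    rng = random.Random(seed)
    maps = [transport_map(mu, m) for m in family]
    n = len(maps)
    total = 0.0
    for _ in range(samples):
        x = rng.gauss(mu[0], mu[1])  # x ~ mu
        values = [T(x) for T in maps]
        avg = sum(values) / n
        total += sum((v - avg) ** 2 for v in values) / n  # variance over m
    return total / samples


if __name__ == "__main__":
    mu = (1.0, 2.0)
    family = [(0.0, 1.0), (2.0, 3.0), (4.0, 0.5)]
    print(variance_from_identity(mu, family), variance_by_sampling(mu, family))
```

The two printed values should agree up to Monte Carlo error. In this Gaussian family both reduce to the variance of the means plus the variance of the standard deviations under $\Pi$.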

We close this section with the promised statement of Lemma 3, referred to in the introduction, giving us a sufficient condition for Assumption 2.

Lemma 3. If the support of $\Pi$ is $\mathcal W_2(\mathbb R^d)$-compact and there is a constant $C_1<\infty$ such that $\Pi(\{m\in\mathcal W_{2,\mathrm{ac}}(\mathbb R^d)\colon\int\log({\mathrm{d}} m/{\mathrm{d}} x)\,m({\mathrm{d}} x) \leq C_1\})=1$, then Assumption 2 is fulfilled.

Proof. Let K be the support of $\Pi$, which is $\mathcal W_2(\mathbb R^d)$-compact. By the de la Vallée Poussin criterion, there is a function $V\colon\mathbb R_+\to\mathbb R_+$, increasing, convex, and super-quadratic (i.e. $\lim_{r\to +\infty} V(r)/r^2=+\infty$), such that $C_2\;:\!=\;\sup_{m\in K}\int V(\|x\|)\,m({\mathrm{d}} x)<\infty$. Observe that $p({\mathrm{d}} x)\;:\!=\;\exp\{-V(\|x\|)\}\,{\mathrm{d}} x$ is a finite measure (without loss of generality a probability measure). Moreover, for the relative entropy with respect to p we have $H(m\mid p)\;:\!=\;\int\log({\mathrm{d}} m/{\mathrm{d}} p)\,{\mathrm{d}} m=\int\log({\mathrm{d}} m/{\mathrm{d}} x)\,m({\mathrm{d}} x)+\int V(\|x\|)\,m({\mathrm{d}} x)$ if $m\ll {\mathrm{d}} x$ and $+\infty$ otherwise. Now define $K_\Pi\;:\!=\;\{m\in\mathcal W_{2,\mathrm{ac}}(\mathbb R^d)\colon H(m\mid p)\leq C_1+C_2,\, \int V(\|x\|)\,m({\mathrm{d}} x) \leq C_2\}$ so that $\Pi(K_\Pi)=1$. Clearly, $K_\Pi$ is $\mathcal W_2$-closed, since the relative entropy is weakly lower-semicontinuous, and also $\mathcal W_2$-relatively compact, since V is super-quadratic. Finally, $K_\Pi$ is geodesically convex by [Reference Villani50, Theorem 5.15].

For instance, if every m in the support of $\Pi$ is of the form

with $V_m$ bounded from below, then one way to guarantee the conditions in Lemma 3, assuming without loss of generality that $V_m\geq 0$, is to ask that $\int_y{\mathrm{e}}^{-V_m}(y)\,{\mathrm{d}} y \geq A$ and $\int_y|y|^{2+\varepsilon}{\mathrm{e}}^{-V_m}(y)\,{\mathrm{d}} y \leq B$ for some fixed $\varepsilon,A,B >0$. In words: tails are controlled and the measures cannot be too concentrated.

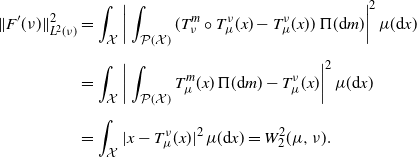

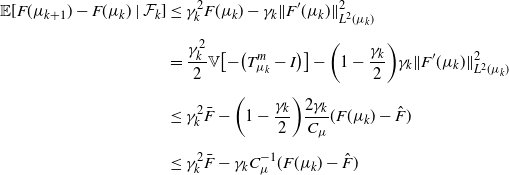

4. A condition granting uniqueness of Karcher means and convergence rates

The aim of this section is to prove Theorem 2, a refinement of Theorem 1 under the additional assumption that the barycenter is a pseudo-associative Karcher mean. Notice that, with the notation introduced in Section 3, Definition 4 of a pseudo-associative Karcher mean $\mu$ simply reads as

for all $\nu\in\mathcal W_{2,\mathrm{ac}}(\mathcal{X})$.

Remark 2. Suppose $\mu$ is a Karcher mean of $\Pi$ such that, for all $\nu\in\mathcal W_{2,\mathrm{ac}}(\mathcal{X})$ and $\Pi({\mathrm{d}} m)$-almost every m,

Then, $\mu$ is pseudo-associative, with $C_{\mu}=1$ and equality holding in Definition 4. Indeed, in that case we have

\begin{align*} \|F^\prime(\nu)\|^2_{L^2(\nu)} & = \int_{\mathcal{X}}\bigg|\int_{\mathcal P(\mathcal X)}(T_{\nu}^m \circ T_{\mu}^{\nu}(x)-T_{\mu}^{\nu}(x))\, \Pi({\mathrm{d}} m)\bigg|^2\,\mu({\mathrm{d}} x) \\[5pt] & = \int_{\mathcal{X}}\bigg|\int_{\mathcal P(\mathcal X)} T_{\mu}^{m}(x)\,\Pi({\mathrm{d}} m) - T_{\mu}^{\nu}(x)\bigg|^2\,\mu({\mathrm{d}} x) \\[5pt] & = \int_{\mathcal{X}}|x-T_{\mu}^{\nu}(x)|^2\,\mu({\mathrm{d}} x) = W_2^2(\mu,\nu). \end{align*}


We will thus say that the Karcher mean $\mu$ is associative simply if (19) holds.
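As a concrete instance, one-dimensional Gaussians provide a family where the composition property can be checked directly. The sketch below assumes that (19) is the identity $T_{\nu}^{m}\circ T_{\mu}^{\nu}=T_{\mu}^{m}$, which is what the passage from the first to the second line of the computation above uses. The helper names are hypothetical, and the check covers only this affine toy case, not the general setting of the remark.

```python
def transport_map(src, dst):
    """Optimal map between 1D Gaussians given as (mean, std) pairs."""
    (a, s), (b, r) = src, dst
    return lambda x: b + (r / s) * (x - a)


def max_associativity_gap(mu, nu, m, grid):
    """Largest value of |T_nu^m(T_mu^nu(x)) - T_mu^m(x)| over the grid."""
    t_mu_nu = transport_map(mu, nu)
    t_nu_m = transport_map(nu, m)
    t_mu_m = transport_map(mu, m)
    return max(abs(t_nu_m(t_mu_nu(x)) - t_mu_m(x)) for x in grid)


if __name__ == "__main__":
    mu, nu, m = (2.0, 1.5), (-1.0, 0.7), (4.0, 0.5)
    grid = [k / 10.0 for k in range(-50, 51)]
    # The gap is zero up to floating-point rounding.
    print(max_associativity_gap(mu, nu, m, grid))
```

In this family the maps are increasing affine functions, so their composition is again the increasing affine map between the corresponding Gaussians, which is why the gap vanishes.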

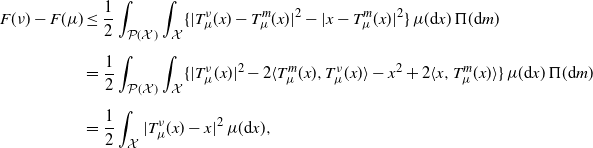

The following is an analogue in Wasserstein space of a classical property implying convergence rates in gradient-type optimization algorithms.

Definition 5. We say that $\mu\in\mathcal W_{2,\mathrm{ac}}(\mathcal{X})$ satisfies a Polyak–Lojasiewicz inequality if

for some $\bar{C}_{\mu}>0$ and every $\nu\in\mathcal W_{2,\mathrm{ac}}(\mathcal{X})$.

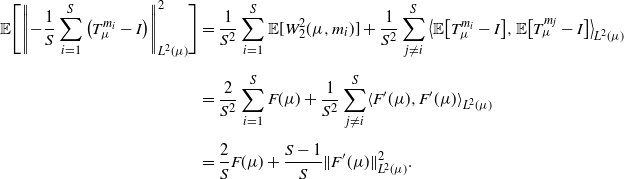

We next state some useful properties.

Lemma 4. Suppose $\mu$ is a Karcher mean of $\Pi$. Then:

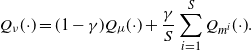

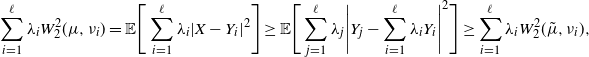

(i) For all $\nu\in\mathcal W_{2,\mathrm{ac}}(\mathcal{X})$,