1. Introduction

Text readability refers to the textual elements that influence the comprehension, reading fluency, and level of interest of the readers (Dale and Chall Reference Dale and Chall1949). These textual components involve several dimensions of text properties, including lexical, syntactic, and conceptual dimensions (Xia et al. Reference Xia, Kochmar and Briscoe2016). In order to predict the readability of a text automatically, text readability assessment task has been proposed and widely used in many fields, such as language teaching (Lennon and Burdick Reference Lennon and Burdick2004; Pearson et al. Reference Pearson, Ngo, Ekpo, Sarraju, Baird, Knowles and Rodriguez2022), commercial advertisement (Chebat et al. Reference Chebat, Gelinas-Chebat, Hombourger and Woodside2003), newspaper readership (Pitler and Nenkova Reference Pitler and Nenkova2008), health information (Bernstam et al. Reference Bernstam, Shelton, Walji and Meric-Bernstam2005; Eltorai et al. Reference Eltorai, Naqvi, Ghanian, Eberson, Weiss, Born and Daniels2015; Partin et al. Reference Partin, Westfall, Sanda, Branham, Muir, Bellcross and Jain2022), web search (Ott and Meurers Reference Ott and Meurers2011), and book publishing (Pera and Ng Reference Pera and Ng2014). In this section, we will first introduce our proposed concept of textual form complexity, then present related work on text readability assessment with handcrafted features, and finally present a novel insight into text readability assessment and our study.

1.1 Concept of textual form complexity

Text complexity refers to the sophisticated level of a text, which manifests in various aspects such as vocabulary level, sentence structure, text organization, and inner semantics (Frantz et al. Reference Frantz, Starr and Bailey2015). Derived from this, a new concept named textual form complexity is proposed in this paper. Among all the aspects of text complexity, textual form complexity covers the outer form facets in contrast to the inner semantic facets. In this section, we will describe this concept of textual form complexity in detail.

Previous studies have not given a consistent and formal definition of text complexity. However, one perspective (Siddharthan Reference Siddharthan2014) suggests defining text complexity as the linguistic intricacy present at different textual levels. To offer a more comprehensive explanation of this definition, we cited the viewpoints of several experts as follows:

-

• Lexical complexity means that a wide variety of both basic and sophisticated words are available and can be accessed quickly, whereas a lack of complexity means that only a narrow range of basic words are available or can be accessed (Wolfe et al. Reference Wolfe, Schreiner, Rehder, Laham, Foltz, Kintsch and Landauer1998).

-

• Syntactical complexity refers to the sophistication of syntactic structure seen in writing or speaking that arises from our ability to group words as phrases and embed clauses in a recursive, hierarchical fashion (Friederici et al. Reference Friederici, Chomsky, Berwick, Moro and Bolhuis2017).

-

• Grammatical complexity means that a wide variety of both basic and sophisticated structures are available and can be accessed quickly, whereas a lack of complexity means that only a narrow range of basic structures are available or can be accessed (Wolfe et al. Reference Wolfe, Schreiner, Rehder, Laham, Foltz, Kintsch and Landauer1998).

-

• Discourse complexity refers to the difficulty associated with the text’s discourse structure or coreference resolution (Cristea et al. Reference Cristea, Ide, Marcu and Tablan2000).

-

• Graphical complexity refers to the challenges in interpreting the visual aspects of text, such as letter combinations, especially for individuals with dyslexia (Gosse and Van Reybroeck Reference Gosse and Van Reybroeck2020).

On the basis of these viewpoints from experts, text complexity is categorized with regard to different aspects, such as lexical, syntactical, grammatical, discourse, and graphical aspects. Different from this way of categorization, we offer a new perspective. In our view, the complexity of a text is affected by the interplay of multiple factors. Among these factors, there are inner semantic-related factors, such as content, vocabulary richness, and implied information or knowledge; and there are outer form-related factors, such as expressions, structural characteristics, and layout. In this paper, we separate out these outer form-related factors and introduce a new concept, namely textual form complexity, to measure the degree of sophistication of the external forms.

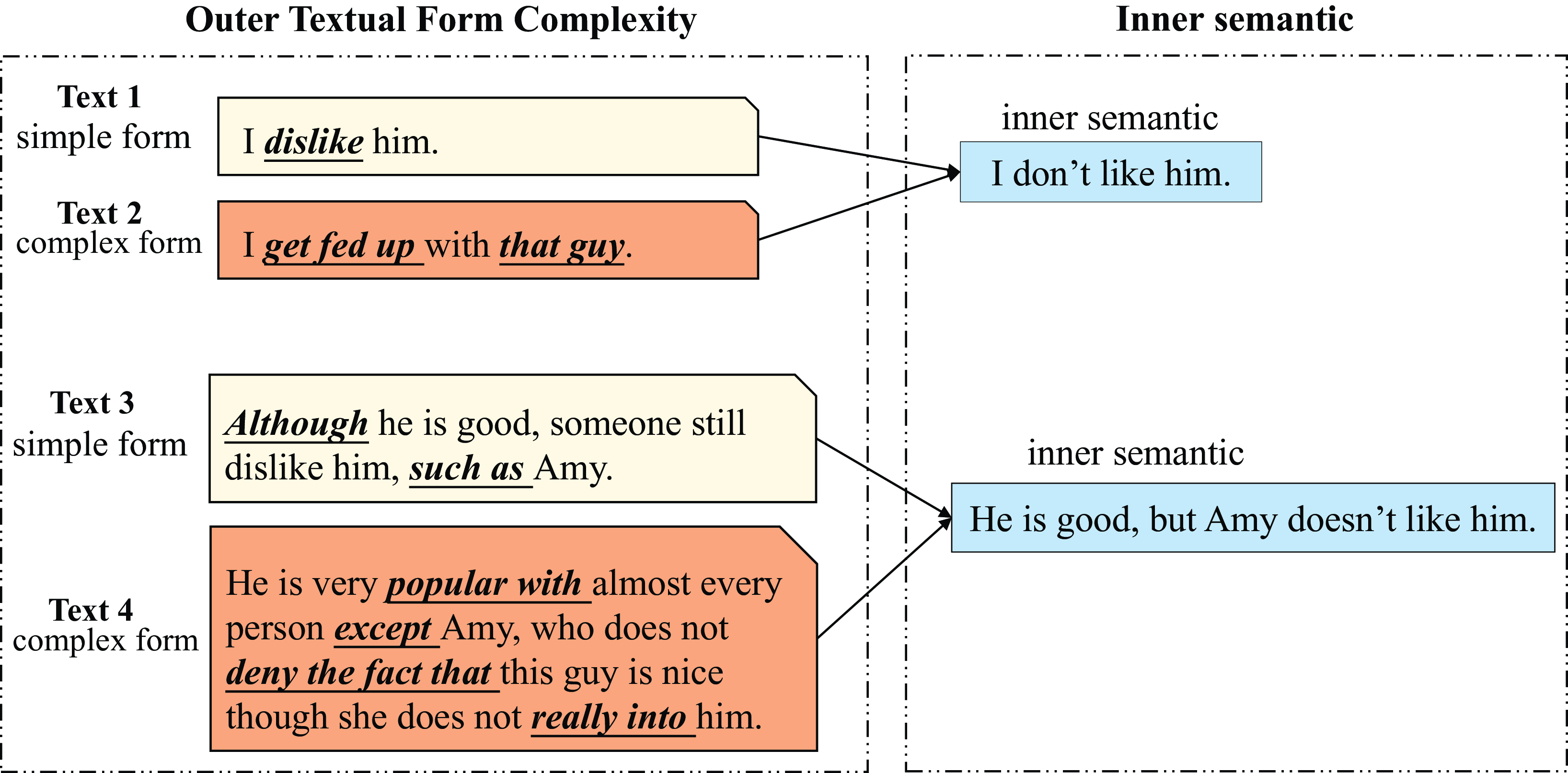

Figure 1 depicts a graph that helps illustrate our proposed concept of textual form complexity. As shown in the picture, four texts are given in terms of their outer form complexity and inner semantics. As can be observed, we have the following discussions: First, there are multiple ways to convey the same semantic, and different ways of expression correspond to different textual outer forms of complexity. For example, given an inner semantic with the meaning “I don’t like him,” text 1 and text 2 are two distinct ways to convey this meaning. Although both of them share the same inner semantic, text 1 expresses it in a simple manner and in a simple textual form, whereas text 2 expresses it in a more complex manner and a more complex textual form. Second, whether the inner semantic is simple or complex, it can be represented by either a simple or a complex textual form. For instance, although the inner semantic of “I don’t like him,” is easy, the complex textual form of text 2 makes it difficult to understand; in contrast, although the inner semantic of “He is good, but Amy doesn’t like him” is a little difficult, the simple textual form of text 3 makes it easy to understand. Based on this observation, we argue that the outer textual form of a text plays a crucial role in determining its reading difficulty, indicating that the proposed concept of textual form complexity is worth further research.

Figure 1. Diagram of the distinctions between the outer textual form complexity and the inner semantic. Four texts are presented in terms of their inner semantic and outer textual form complexity. While text 1 and text 2 convey the same inner semantics, text 2 has a more sophisticated outside textual structure than text 1. Although the inner semantics of text 3 and text 4 are more difficult to comprehend, text 3 expresses them in a simple form, whereas text 4 expresses them in a more complex form.

1.2 Text readability assessment with handcrafted features

Text readability assessment is a significant research field in text information processing. Early work focused on the exploitation of readability formulas (Thorndike Reference Thorndike1921; Lively and Pressey Reference Lively and Pressey1923; Washburne and Vogel Reference Washburne and Vogel1926; Dale and Chall Reference Dale and Chall1948; Mc Laughlin Reference Mc Laughlin1969; Yang Reference Yang1971). Some of the readability formulas approximate syntactic complexity based on simple statistics, such as sentence length (Kincaid et al. Reference Kincaid, Fishburne and Chissom1975). Other readability formulas focus on semantics, which is usually approximated by word frequency with respect to a reference list or corpus (Chall and Dale Reference Chall and Dale1995). While these traditional formulas are easy to compute, they are too simplistic in terms of text difficulty assumptions (Bailin and Grafstein Reference Bailin and Grafstein2001). For example, sentence length is not an accurate measure of syntactic complexity, and syllable count does not necessarily indicate the difficulty of a word (Schwarm and Ostendorf Reference Schwarm and Ostendorf2005; Yan et al. Reference Yan, Song and Li2006).

In recent years, researchers have tackled readability assessment as a supervised classification task and followed different methods to construct their models. The methods can be divided into three categories: language model-based methods (Si and Callan Reference Si and Callan2001; Schwarm and Ostendorf Reference Schwarm and Ostendorf2005; Petersen and Ostendorf Reference Petersen and Ostendorf2009), machine learning-based methods (Heilman et al. Reference Heilman, Collins-Thompson, Callan and Eskenazi2007, Reference Heilman, Collins-Thompson and Eskenazi2008; Xia et al. Reference Xia, Kochmar and Briscoe2016; Vajjala and Lučić, Reference Vajjala and Lučić2018), and neural network-based methods (Azpiazu and Pera Reference Azpiazu and Pera2019; Deutsch et al. Reference Deutsch, Jasbi and Shieber2020; Lee et al. Reference Lee, Jang and Lee2021). Based on the fact that text readability involves many different dimensions of text properties, the performance of classification models highly relies on handcrafted features (Schwarm and Ostendorf Reference Schwarm and Ostendorf2005; Yan et al. Reference Yan, Song and Li2006; Petersen and Ostendorf Reference Petersen and Ostendorf2009; Vajjala and Meurers Reference Vajjala and Meurers2012).

All along, multiple linguistic features have been proposed to improve the performance of text readability assessment, such as lexical features to capture word complexity (Lu Reference Lu2011; Malvern and Richards Reference Malvern and Richards2012; Kuperman et al. Reference Kuperman, Stadthagen-Gonzalez and Brysbaert2012; Collins-Thompson Reference Collins-Thompson2014), syntactical features to mimic the difficulty of the cognitive process (Schwarm and Ostendorf Reference Schwarm and Ostendorf2005; Lu Reference Lu2010; Tonelli et al. Reference Tonelli, Tran and Pianta2012; Lee and Lee Reference Lee and Lee2020), and discourse-based features to characterize text organization (Pitler and Nenkova Reference Pitler and Nenkova2008; Feng et al. Reference Feng, Jansche, Huenerfauth and Elhadad2010).

Although the existing studies have proposed a number of effective handcrafted features for text readability assessment, there are still three points that can be improved. First, the majority of previous studies focused on extracting features based on the content and shallow and deep semantics of the texts; few of them investigated text readability from the perspective of outer textual formal complexity. Second, most of the existing features focus on a single linguistic dimension of texts; namely, one feature tends to correspond to one single linguistic dimension (e.g., the length of a word corresponds to the lexical dimension) (Collins-Thompson Reference Collins-Thompson2014). These single linguistic dimensional features may be limited in their ability to acquire more textual information. If a feature can reflect multiple linguistic dimensions, then it can capture more information about text readability. Third, most of the existing studies focus on a specific language (Chen and Meurers Reference Chen and Meurers2016; Okinina et al. Reference Okinina, Frey and Weiss2020; Wilkens et al. Reference Wilkens, Alfter, Wang, Pintard, Tack, Yancey and François2022), few of them explicitly exploit language-independent features for multilingual readability assessment.

To this end, in this paper, we provide a new perspective on text readability by employing the proposed concept of textual form complexity and introducing a set of textual formal features for the text readability assessment based on the concept.

1.3 A novel insight into text readability assessment

In traditional viewpoints (Feng et al. Reference Feng, Jansche, Huenerfauth and Elhadad2010), text readability evaluates how easy or difficult it is for a reader to understand a given text. In other words, it evaluates the degree of difficulty in comprehending a specific text. In this study, we provide a new perspective on text readability by employing the proposed concept of textual form complexity. As described in Section 1.1, outer textual forms may be carriers of internal core semantics. However, complex outer textual forms may distract the reader and make it more difficult to understand the meaning of the text, reducing the text’s readability. Intuitively, the more exterior textual forms a given text has, the more complex and less readable it is. This perception reflects the implicit association between textual form complexity and text readability, and the purpose of our research is to determine if and to what extent this correlation can contribute to text readability assessment.

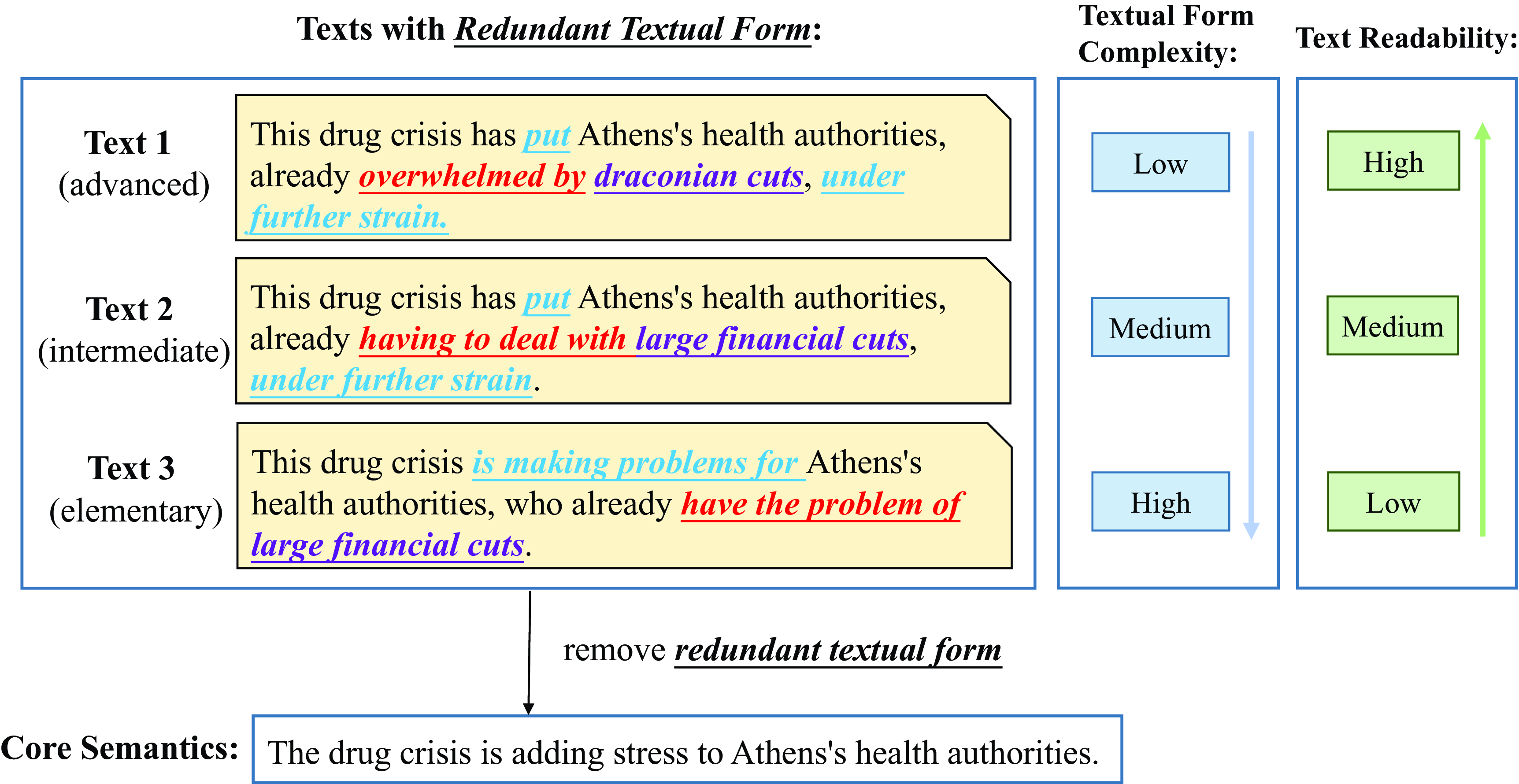

In this work, it is hoped that a novel insight will contribute to a new understanding of text readability assessment. The insight is illustrated in Figure 2. It can be seen that the three provided texts have the same inner core semantics when the exterior textual forms are removed. Due to the different amounts of external textual form, the textual form complexity and readability of the three texts are different. Textual form complexity is positively correlated with the amount of exterior textual form, which results in the ranking of the textual form complexity of the three texts as text 1

![]() $\lt$

text 2

$\lt$

text 2

![]() $\lt$

text 3. Due to the fact that the exterior textual form causes distractions and makes comprehension more difficult, it negatively affects text readability. That is, text readability is negatively connected with the quantity of exterior textual form, which promotes the ranking of the text readability of the three texts as text 3

$\lt$

text 3. Due to the fact that the exterior textual form causes distractions and makes comprehension more difficult, it negatively affects text readability. That is, text readability is negatively connected with the quantity of exterior textual form, which promotes the ranking of the text readability of the three texts as text 3

![]() $\lt$

text 2

$\lt$

text 2

![]() $\lt$

text 1.

$\lt$

text 1.

Figure 2. An instance of the novel insight into text readability. Three parallel texts with the same core semantics from the different readability levels of OneStopEnglish corpus are given. The exterior textual form within the texts is marked with underlines. As can be seen, textual form complexity is positively correlated with the amount of exterior textual form, and the exterior textual form negatively affects text readability.

In summary, using the exterior textual form as a bridge, we can make the following inference: the higher the textual form complexity, the lower the text’s readability. In response to this inference, we propose to construct a set of textual formal features to express the complexity of textual forms and use these features to evaluate the readability of texts. Specifically, we first use a text simplification tool (Martin et al. 2020) to simplify a given text, so as to reduce the complex exterior text forms and obtain the simplified text. Then, we design a set of textual form features based on the similarity, overlap, and length ratio of pre-simplified and post-simplified texts to quantify the differences between them as a measure of the textual exterior form information.

In contrast to existing features based on internal semantics, our proposed textual formal features may benefit from their ability to represent multiple dimensions of the language to obtain more information to assess the readability of the text. This is because textual forms represent a text’s outer qualities and manifest in various linguistic dimensions, such as lexical, syntactical, and grammatical dimensions. In addition, the extraction procedure for the features involves multiple levels, such as word-level deletion, phrase-level substitution, and sentence-level reconstruction, allowing the proposed features to reflect different dimensions of linguistic information. To sum up, two studies are proposed in this work:

-

• Study 1: How to build textual form features to capture text characteristics in terms of textual form complexity?

-

• Study 2: To what extent can the proposed textual form features contribute to readability assessment?

2. Literature review

In this section, we provide a comprehensive review of related works from two perspectives: text complexity and readability assessment. Concerning text complexity, we explore relevant studies focusing on various dimensions individually in Section 2.1. Regarding readability assessment, we conduct a separate review of studies based on different methods in Section 2.2 and provide an in-depth and detailed review of the linguistic features applied for this task in Section 2.3.

2.1 Different dimensions of text complexity

Text complexity refers to the level of difficulty or intricacy present in a given text. It encompasses both linguistic and structural aspects of the text, such as vocabulary richness, sentence length, syntactic complexity, and overall readability. Over time, researchers have devised numerous tools and systems to quantify text complexity in different dimensions. In the following sections, we will separately review the relevant research on text complexity in the lexical, syntactic, and discourse dimensions.

From the lexical dimension, some studies focus on developing tools for analyzing the lexical complexity of a text, while others focus on predicting the difficulty level of individual words. In early studies, Rayner and Duffy (Reference Rayner and Duffy1986) investigate whether lexical complexity increases a word’s processing time. To automatically analyze lexical complexity, there is a widely used tool called Range,Footnote A which focuses on measuring the vocabulary and word diversity. Based on this tool, Anthony (Reference Anthony2014) developed a tool named AntWordProfiler, which is an extension of Range and can be applied to multiple languages. Both tools are based on unnormalized type-token ratio (TTR), and thus, they are all sensitive to the length of the text. Recently, researchers have been engaged in the automatic classification of word difficulty levels (Gala et al. Reference Gala, François and Fairon2013; Tack et al. Reference Tack, François, Ligozat and Fairon2016; Tack et al. Reference Tack, François, Ligozat and Fairon2016). For example, Gala et al. (Reference Gala, François and Fairon2013) investigate 27 intra-lexical and psycholinguistic variables and utilize the nine most predictive features to train a support vector machine (SVM) classifier for word-level prediction. Alfter and Volodina (Reference Alfter and Volodina2018) explore the applicability of previously established word lists to the analysis of single-word lexical complexity. They incorporate topics as additional features and demonstrate that linking words to topics significantly enhances the accuracy of classification.

As another dimension of text complexity, syntactic complexity measures the complexity of sentence structure and organization within the text. In earlier studies, Ortega (Reference Ortega2003) evaluates the evidence concerning the relationship between syntactic complexity measures and the overall proficiency of second-language (L2) writers. They conclude that the association varies systematically across different studies, depending on whether a foreign language learning context is investigated and whether proficiency is defined by program level. Szmrecsanyi (Reference Szmrecsanyi2004) compares three measures of syntactic complexity—node counts, word counts, and a so-called “Index of Syntactic Complexity”—with regard to their accuracy and applicability. They conclude that node counts are resource-intensive to conduct and researchers can confidently use this measure. Recently, Lu and Xu (Reference Lu and Xu2016) develop a system, namely L2SCA, to analyze the syntactic complexity of second-language (L2) writing. The correlation between the output of the L2SCA system and manual calculation is 0.834-1.000. Although L2SCA is designed for L2 texts, studies have shown that it is also suitable for analyzing other textual materials (Gamson et al. Reference Gamson, Lu and Eckert2013; Jin et al. Reference Jin, Lu and Ni2020). Besides, Kyle (Reference Kyle2016) develops another system, TAASSC. The system analyzes syntactic structure at clause and phrase levels and includes both coarse-grained and fine-grained measures.

Despite lexical and syntactic dimensions, discourse is also an integral dimension of text complexity (Graesser et al. Reference Graesser, McNamara and Kulikowich2011). In early work, Biber (Reference Biber1992) employs a theory-based statistical approach to examine the dimensions of discourse complexity in English. They analyze the distribution of 33 surface linguistic markers of complexity across 23 spoken and written registers. The study reveals that discourse complexity is a multidimensional concept, with various types of structural elaboration reflecting different discourse functions. Recently, Graesser et al. (Reference Graesser, McNamara, Cai, Conley, Li and Pennebaker2014) propose a classical discourse-level text analysis system, namely Coh-Metrix, which is designed to analyze text on multiple levels of language and discourse. This system regards cohesion as a textual notion and coherence as a reader-related psychological concept (Graesser et al. Reference Graesser, McNamara, Louwerse and Cai2004). It contains measures such as cohesion features, connective-related features, and LSA-based features (Landauer et al. Reference Landauer, Foltz and Laham1998). Besides, Crossley et al. (2016, Reference Crossley, Kyle and Dascalu2019) also focus on analyzing text cohesion and develop a system named TAACO. In addition to the overall cohesion emphasized in previous systems, this system adds local and global cohesion measures based on LDA (Blei et al. Reference Blei, Ng and Jordan2003) and word2vec (Mikolov et al. Reference Mikolov, Sutskever, Chen, Corrado and Dean2013).

In recent years, educators have been trying to measure the complexity of texts so that they can provide texts that match the level of knowledge and skills of their students. Wolfe et al. (Reference Wolfe, Schreiner, Rehder, Laham, Foltz, Kintsch and Landauer1998) refer to this as the zone of proximal text difficulty. That is, the text should challenge the reader in a way that inspires them to improve their existing knowledge and skills. Besides, the Common Core State Standards (CCSS) set a goal for students to comprehend texts of progressively increasing complexity as they advance through school (Association et al. Reference Association2010). To assist educators in determining the proper degree of complexity, CCSS standards introduce a triangle model to measure text complexity through a combination of qualitative analysis, quantitative measurements, and reader-task considerations (Williamson et al. Reference Williamson, Fitzgerald and Stenner2013). Frantz et al. (Reference Frantz, Starr and Bailey2015) argue that the syntactic complexity, as one aspect of text complexity, should be explicitly included as a quantitative measure in the model. In addition, Sheehan et al. (Reference Sheehan, Kostin, Napolitano and Flor2014) design a text analysis system namely TextEvaluator to help select reading materials that are consistent with the text complexity goals outlined in CCSS. This system expands the text variation dimension in traditional metrics and addresses two potential threats, genre bias and blueprint bias.

2.2 Readability assessment methods

Text readability assessment refers to the process of determining the comprehensibility of a given text. It is a significant research field in text information processing. In the majority of studies, researchers treat readability assessment as a supervised classification task, aiming to assign a readability label to each text. They follow different methods to construct their models, which can be divided into three categories: machine learning-based methods, neural network-based methods, and language model-based methods. The following section will review the relevant studies based on the three methods, respectively.

The machine learning-based method involves utilizing machine learning algorithms and feature engineering to assess the readability of a given text. These approaches typically utilize a set of text features as inputs and train a machine learning model to predict the readability labels. Following this method, Vajjala and Lučić (Reference Vajjala and Lučić2018) introduce a Support Vector Machine (SVM) classifier trained on a combination of 155 traditional, discourse cohesion, lexico-semantic, and syntactic features. Their classification model attains an accuracy of 78.13% when evaluated on the OneStopEnglish corpus. Xia et al. (Reference Xia, Kochmar and Briscoe2016) adopt similar lexical, syntactic, and traditional features as Vajjala and Lučić (Reference Vajjala and Lučić2018) and further incorporate language modeling-based and discourse cohesion-based features to train a SVM classifier. Their approach achieves an accuracy of 80.3% on the Weebit corpus. More recently, Chatzipanagiotidis et al. Reference Chatzipanagiotidis, Giagkou and Meurers2021) collect a diverse set of quantifiable linguistic complexity features, including lexical, morphological, and syntactic aspects, and conduct experiments using various feature subsets. Their findings demonstrate that each linguistic dimension provides distinct information, contributing to the highest performance achieved when using all linguistic feature subsets together. By training a readability classifier based on these features, they achieve a classification accuracy of 88.16% for the Greek corpus. Furthermore, Wilkens et al. (Reference Wilkens, Alfter, Wang, Pintard, Tack, Yancey and François2022) introduce the FABRA readability toolkit and propose that measures of lexical diversity and dependency counts are crucial predictors for native texts, whereas for foreign texts, the most effective predictors include syntactic features that illustrate language development, along with features related to lexical gradation.

With the rise of deep learning, many neural network-based methods have been proposed and applied to readability assessment. For example, Azpiazu and Pera (Reference Azpiazu and Pera2019) introduce a neural model called Vec2Read. The model utilizes a hierarchical RNN with attention mechanisms and is applied in a multilingual setting. They find that no language-specific patterns suggest text readability is more challenging to predict in certain languages compared to others. Mohammadi and Khasteh (Reference Mohammadi and Khasteh2019) employ a deep reinforcement learning model as a classifier to assess readability, showcasing its ability to achieve multi-linguality and efficient utilization of text. Moreover, Deutsch et al. (Reference Deutsch, Jasbi and Shieber2020) evaluate the joint use of linguistic features and deep learning models (i.e., transformers and hierarchical attention networks). They utilize the output of deep learning models as features and combine them with linguistic features, which are then fed into a classifier. However, their findings reveal that incorporating linguistic features into deep learning models does not enhance model performance, suggesting these models may already represent the readability-related linguistic features. This finding connects to the studies of BERTology, which aim to unveil BERT’s capacity for linguistic representation (Buder-Gröndahl, Reference Buder-Gröndahl2023). Research in this area has shown BERT’s ability to grasp phrase structures (Reif et al. Reference Reif, Yuan, Wattenberg, Viegas, Coenen, Pearce and Kim2019), dependency relations (Jawahar et al. Reference Jawahar, Sagot and Seddah2019), semantic roles (Kovaleva et al. Reference Kovaleva, Romanov, Rogers and Rumshisky2019), and lexical semantics (Soler and Apidianaki Reference Soler and Apidianaki2020). In recent studies, hybrid models have also been explored for their applicability in readability assessment. Wilkens et al. (Reference Wilkens, Watrin, Cardon, Pintard, Gribomont and François2024) systematically compare six hybrid approaches alongside standard machine learning and deep learning approaches across four corpora, covering different languages and target audiences. Their research provides new insights into the complementarity between linguistic features and transformers.

The language model-based methods can be divided into early ones based on statistical language models and recent ones based on pre-trained language models. In early work, Si and Callan (Reference Si and Callan2001) are the first to introduce the application of statistical models for estimating the reading difficulty. They employ language models to represent the content of web pages and devise classifiers that integrated language models with surface linguistic features. Later on, many other methods based on statistical models are proposed. For instance, Schwarm and Ostendorf (Reference Schwarm and Ostendorf2005) propose training n-gram language models for different readability levels and use likelihood ratios as features in a classification model. Petersen and Ostendorf (Reference Petersen and Ostendorf2009) train three language models (uni-gram, bi-gram, and tri-gram) on external data resources and compute perplexities for each text to indicate its readability. In recent times, pre-trained language models have gained considerable attention in academia, contributing significantly to advancements in the field of natural language processing. Concurrently, some researchers are also investigating their applicability in readability assessment. For example, Lee et al. (Reference Lee, Jang and Lee2021) investigate appropriate pre-trained language models and traditional machine learning models for the task of readability assessment. They combine these models to form various hybrid models, and their RoBERTA-RF-T1 hybrid achieves an almost perfect classification accuracy of 99%. Lee and Lee (Reference Lee and Lee2023) introduce a novel adaptation of a pre-trained seq2seq model for readability assessment. They prove that a seq2seq model—T5 or BART—can be adapted to discern which text is more difficult from two given texts.

2.3 Linguistic features for readability assessment

This section provides an overview of the linguistic features used in readability assessment. We review these features from five dimensions: shallow features, lexical features, syntactical features, discourse-based features, and reading interaction-based features.

The shallow features refer to the readability formulas exploited in the early research (Thorndike Reference Thorndike1921; Lively and Pressey Reference Lively and Pressey1923; Washburne and Vogel Reference Washburne and Vogel1926; Dale and Chall Reference Dale and Chall1948; Mc Laughlin Reference Mc Laughlin1969; Yang Reference Yang1971). These formulas often assess text readability through simple statistical measures. For example, the Flesch-Kincaid Grade Level Index (Kincaid et al. Reference Kincaid, Fishburne and Chissom1975), a widely used readability formula, calculates its scores based on the average syllable count per word and the average sentence length in a given text. Similarly, the Gunning Fog index (Reference Gunning1952) uses the average sentence length and the proportion of words that are three syllables or longer to determine readability. Beyond syntax, some formulas delve into semantic analysis, typically by comparing word frequency against a standard list or database. As an illustration, the Dale-Chall formula employs a combination of average sentence length and the percentage of words not present in the “simple” word list (Chall and Dale Reference Chall and Dale1995). While these traditional formulas are easy to compute, they exhibit shortcomings in terms of their assumptions about text difficulty (Bailin and Grafstein Reference Bailin and Grafstein2001). For instance, sentence length does not accurately measure syntactic complexity, and syllable count may not necessarily reflect the difficulty of a word (Schwarm and Ostendorf Reference Schwarm and Ostendorf2005; Yan et al. Reference Yan, Song and Li2006). Furthermore, as highlighted by Collins-Thompson (Reference Collins-Thompson2014), the traditional formulas do not adequately capture the actual reading process and neglect crucial aspects such as textual coherence. Collins-Thompson (Reference Collins-Thompson2014) argues that traditional readability formulas solely focus on surface-level textual features and fail to consider deeper content-related characteristics.

The lexical features capture the attributes associated with the difficulty or unfamiliarity of words (Lu Reference Lu2011; Malvern and Richards Reference Malvern and Richards2012; Kuperman et al. Reference Kuperman, Stadthagen-Gonzalez and Brysbaert2012; Collins-Thompson Reference Collins-Thompson2014). Lu (Reference Lu2011) analyzes the distribution of lexical richness across three dimensions: lexical density, sophistication, and variation. Their work employs various metrics from language acquisition studies and reports noun, verb, adjective, and adverb variations, which reflect the proportion of words belonging to each respective grammatical category relative to the total word count. Type-Token Ratio (TTR) is the ratio of number of word types to total number of word tokens in a text. It has been widely used as a measure of lexical diversity or lexical variation (Malvern and Richards Reference Malvern and Richards2012). Vajjala and Meurers (Reference Vajjala and Meurers2012) consider four alternative transformations of TTR and the Measure of Textual Lexical Diversity (MTLD; Malvern and Richards (Reference Malvern and Richards2012)), which is a TTR-based approach that is not affected by text length. Vajjala and Meurers (Reference Vajjala and Meurers2016) explore the word-level psycholinguistic features such as concreteness, meaningfulness, and imageability extracted from the MRC psycholinguistic database (Wilson Reference Wilson1988) and various Age of Acquisition (AoA) measures released by Kuperman et al. (Reference Kuperman, Stadthagen-Gonzalez and Brysbaert2012). Moreover, word concreteness has been shown to be an important aspect of text comprehensibility. Paivio et al. (Reference Paivio, Yuille and Madigan1968) and Richardson (Reference Richardson1975) define concreteness based on the psycholinguistic attributes of perceivability and imageability. Tanaka et al. (Reference Tanaka, Jatowt, Kato and Tanaka2013) incorporate these attributes of word concreteness into their measure of text comprehensibility and readability.

The syntactical features imitate the difficulty of cognitive process for readers (Schwarm and Ostendorf Reference Schwarm and Ostendorf2005; Lu Reference Lu2010; Tonelli et al. Reference Tonelli, Tran and Pianta2012; Lee and Lee Reference Lee and Lee2020). These features capture properties of the parse tree that are associated with more complex sentence structure (Collins-Thompson Reference Collins-Thompson2014). For example, Schwarm and Ostendorf (Reference Schwarm and Ostendorf2005) examine four syntactical features related to parse tree structure: the average height of parse trees, the average numbers of subordinate clauses (SBARs), noun phrases, and verb phrases per sentence. Building on this, Lu (Reference Lu2010) expands the analysis and implements four specific metrics for each of these phrase types, which are respectively the total number of phrases in a document, the average number of phrases per sentence, and the average length of phrases both in terms of words and characters. In addition to average tree height, Lu (Reference Lu2010) introduces metrics based on non-terminal nodes, such as the average number of non-terminal nodes per parse tree and per word. Tonelli et al. (Reference Tonelli, Tran and Pianta2012) assess syntactic complexity and the frequency of particular syntactic constituents in a text. They suggest that texts with higher syntactic complexity are more challenging to comprehend, thus reducing their readability. This is attributed to factors such as syntactic ambiguity, dense structure, and high number of embedded constituents within the text.

The discourse-based features illustrate the organization of a text, emphasizing that a text is not just a series of random sentence but reveals a higher-level structure of dependencies (Pitler and Nenkova Reference Pitler and Nenkova2008; Feng et al. Reference Feng, Jansche, Huenerfauth and Elhadad2010; Lee et al. Reference Lee, Jang and Lee2021). In previous research, Pitler and Nenkova (Reference Pitler and Nenkova2008) investigate how discourse relations impact text readability. They employ the Penn Discourse Treebank annotation tool (Prasad, Webber, and Joshi Reference Prasad, Webber and Joshi2017), focusing on the implicit local relations between adjacent sentences and explicit discourse connectives. Feng et al. (Reference Feng, Elhadad and Huenerfauth2009) propose that discourse processing is based on concepts and propositions, which are fundamentally composed of established entities, such as general nouns and named entities. They design a set of entity-related features for readability assessment, focusing on quantifying the number of entities a reader needs to track both within each sentence and throughout the entire document. To analyze how discourse entities are distributed across a text’s sentences, Barzilay and Lapata (Reference Barzilay and Lapata2008) introduce the entity grid method for modeling local coherence. Based on this approach, Guinaudeau and Strube (Reference Guinaudeau and Strube2013) refine the use of local coherence scores, applying them to distinguish documents that are easy to read from those difficult ones.

Recently, various reading interaction-based features have been proposed and applied in the task of readability assessment (Gooding et al. Reference Gooding, Berzak, Mak and Sharifi2021; Baazeem, Al-Khalifa, and Al-Salman Reference Baazeem, Al-Khalifa and Al-Salman2021). Dale and Chall (Reference Dale and Chall1949) suggest that readability issues stem not only from the characteristics of the text but also from those of the reader. Similarly, Feng et al. (Reference Feng, Elhadad and Huenerfauth2009) argue that readability is not just determined by literacy skills, but also by the readers’ personal characteristics and backgrounds. For example, Gooding et al. (Reference Gooding, Berzak, Mak and Sharifi2021) measure implicit feedback through participant interactions during reading, generating a set of readability features based on readers’ aggregate scrolling behaviors. These features are language agnostic, unobtrusive, and are robust to noisy text. Their work shows that scroll behavior can provide an insight into the subjective readability for an individual. Baazeem et al. (Reference Baazeem, Al-Khalifa and Al-Salman2021) suggest the use of eye-tracking features in automatic readability assessment and achieve enhanced model performance. Their findings reveal that eye-tracking features are superior to linguistic features when combined, implying the ability of these features to reflect the text readability level in a more natural and precise way.

In addition to the linguistic features for readability assessment reviewed above, this paper proposes a set of novel features which characterize text readability from the perspective of outer textual form complexity. In subsequent sections, we will introduce the proposed features and describe our readability assessment method in detail.

3. Method

3.1 Overall framework

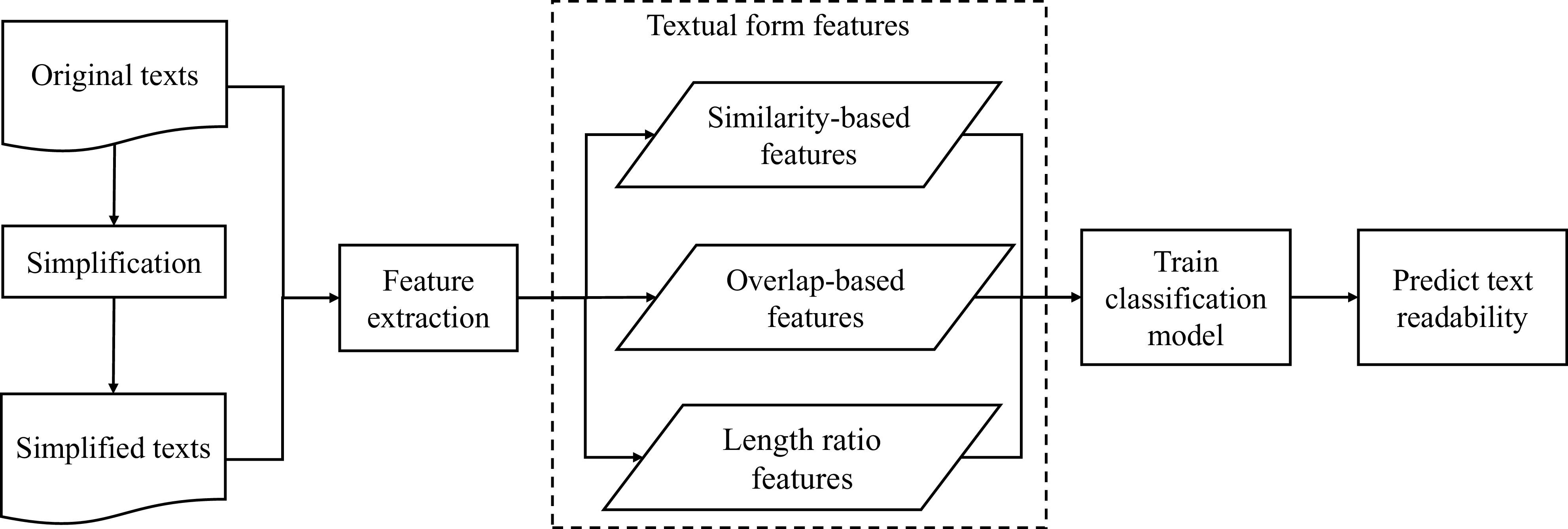

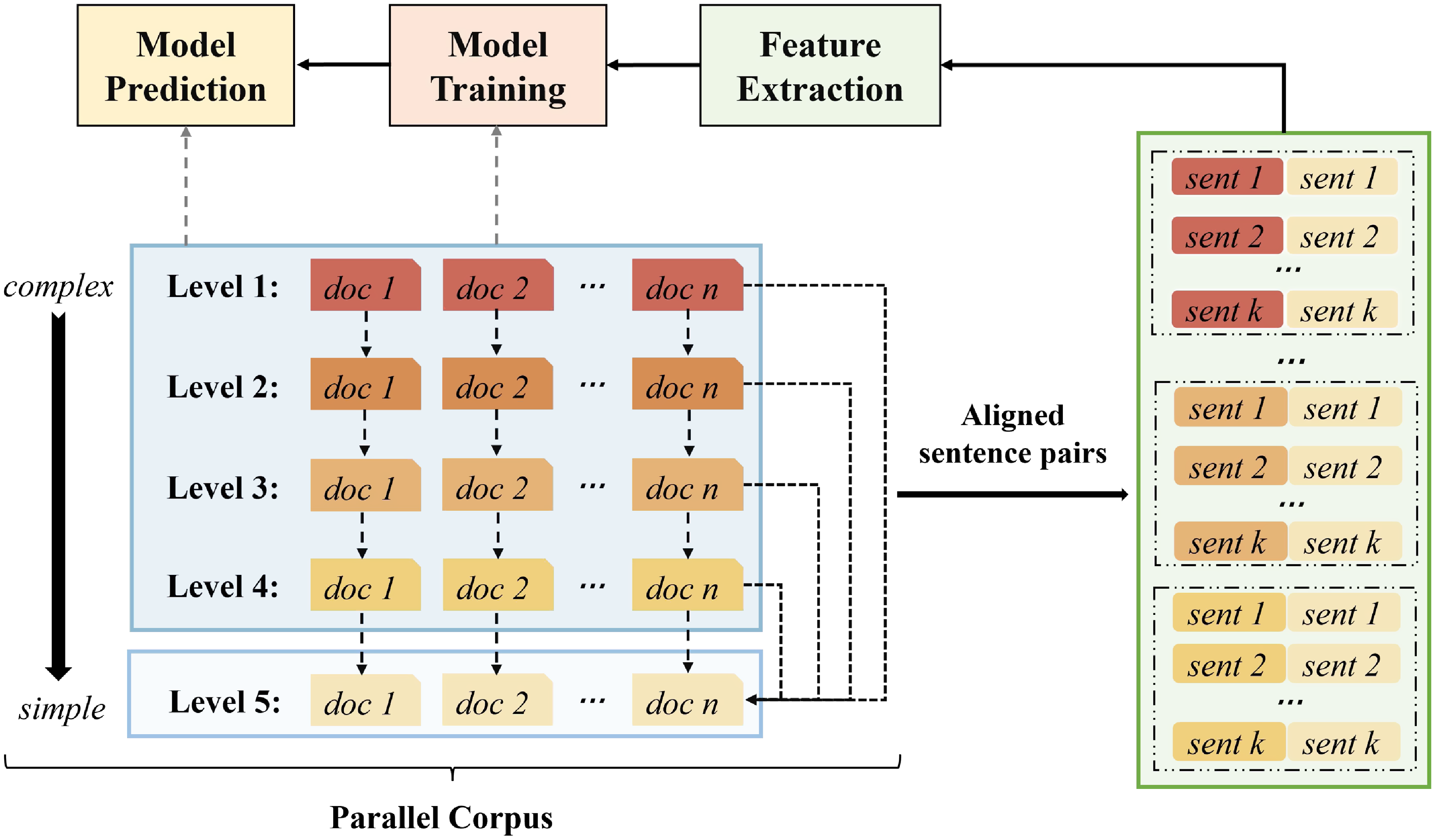

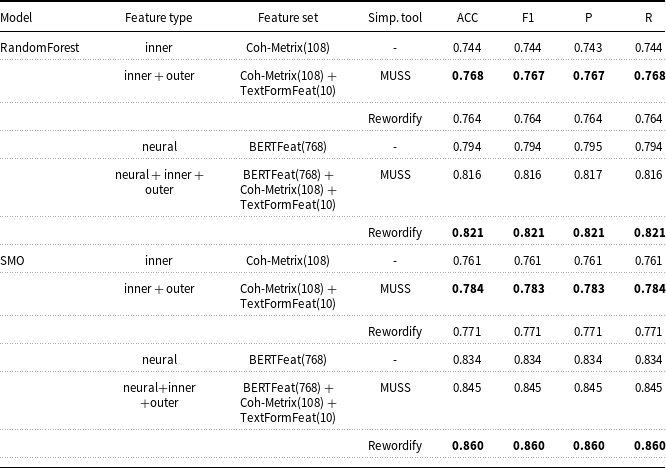

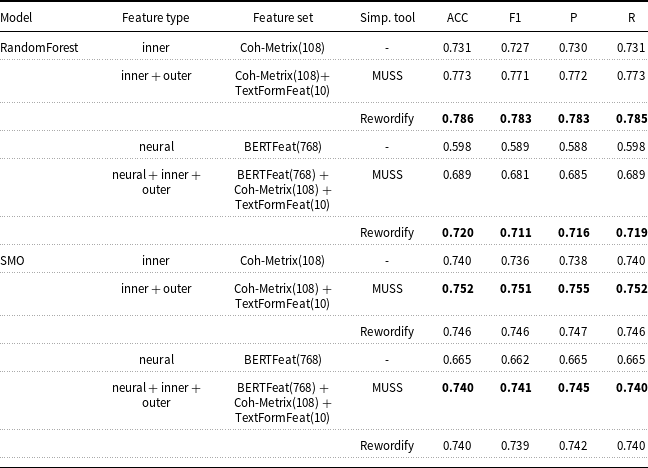

Based on the concept of textual form complexity, we propose to construct a set of textual formal features to help characterize the readability of the text. The overall framework of our method is presented in Figure 3. Our method consists of three components. The first component is the text simplification stage. In this stage, we use the simplification tool to simplify each text to its simplified version. These original and simplified texts are used to extract the textual formal features in the second stage. The process of detailed simplification will be introduced in Section 3.2. The second component is the feature extraction stage. In this stage, we extract the textual form features with the input of the original texts and their simplified versions. The textual form features are extracted by measuring the distinction between the original and the simplified texts from three aspects, which correspond to similarity-based features, overlap-based features, and length ratio-based features. The details of the extraction of the textual form features will be introduced in Section 3.3. The third component is the model training stage. In this stage, we first train a classification model with the extracted features and use the model to predict the readability label of the texts. The details of model prediction will be introduced in Section 3.4.

Figure 3. The flowchart of our method. To obtain the simplified texts, we begin by simplifying the original texts. The original and simplified texts are then passed to feature extraction as inputs to get the three subsets of textual form features. Using these features, we then train a classification model to predict text readability.

3.2 Simplification operations to reduce the exterior textual form

In order to provide a more formal description for the proposed idea of textual form complexity, we study certain criteria for writing simple and clear texts, such as the Plain Language Guidelines (Caldwell et al. Reference Caldwell, Cooper, Reid, Vanderheiden, Chisholm, Slatin and White2008; PLAIN 2011) and the “Am I making myself clear?” guideline (Dean Reference Dean2009). The basic principles shared by these guidelines are: “write short sentences; use the simplest form of a verb (present tense and not conditional or future); use short and simple words; avoid unnecessary information; etc.” Basically, the principle is to simplify the complexity of the textual form by reducing unnecessary exterior textual forms. Consequently, we conclude that the complexity of textual form is a measure of the amount of exterior textual form in a given text.

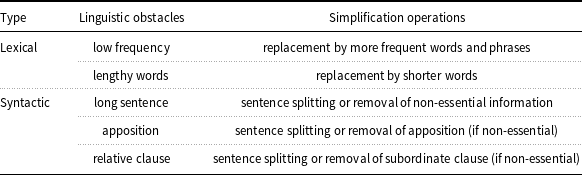

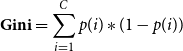

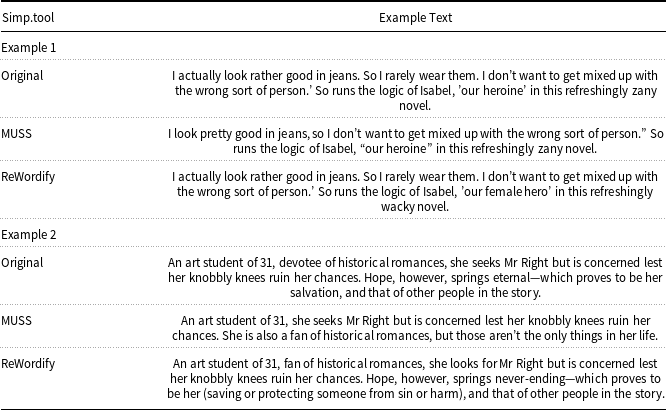

In the field of automatic text simplification, these guidelines also serve as guidance on the simplification operations (Štajner et al. Reference Štajner, Sheang and Saggion2022), such as splitting sentences, deletion of non-essential parts, and replacement of simple words. Table 1 presents some typical examples. It shows that simplification operations can help reduce the external textual form by reducing the linguistic obstacles within the texts. Furthermore, text simplification, according to Martin et al. (2020), is the process of reducing the complexity of a text without changing its original meaning. Based on the above theoretical foundations, we assume that text simplification can reduce the outer textual form while retaining the inner core semantics. If we can measure what the simplification operations have done, we can quantify the exterior textual form. Motivated by this, we propose to extract the textual form features by measuring the reduced textual forms during the simplification process.

Table 1. Linguistic obstacles and simplification operations to remove them

This table is presented by Štajner et al. (Reference Štajner, Sheang and Saggion2022)

We quote this table to show the functions of simplification operations in reducing the exterior textual form.

The technique used in the simplification process is a multilingual unsupervised sentence simplification system called MUSS (Martin et al. 2020). The architecture of this system is based on the sequence-to-sequence pre-trained transformer BART (Lewis et al. Reference Lewis, Liu, Goyal, Ghazvininejad, Mohamed, Levy, Stoyanov and Zettlemoyer2020). For different languages, we utilize the corresponding pre-trained model, that is“

![]() $muss\_en\_mined$

” for English, “

$muss\_en\_mined$

” for English, “

![]() $muss\_fr\_mined$

” for French, and “

$muss\_fr\_mined$

” for French, and “

![]() $muss\_es\_mined$

” for Spanish. Specifically, we first utilize the NLTK tool for sentence segmentation (Loper and Bird Reference Loper and Bird2002). The pre-trained model is then applied to each document, sentence by sentence, to simplify it. Finally, we incorporate the simplified sentences into a single document as the corresponding simplified text.

$muss\_es\_mined$

” for Spanish. Specifically, we first utilize the NLTK tool for sentence segmentation (Loper and Bird Reference Loper and Bird2002). The pre-trained model is then applied to each document, sentence by sentence, to simplify it. Finally, we incorporate the simplified sentences into a single document as the corresponding simplified text.

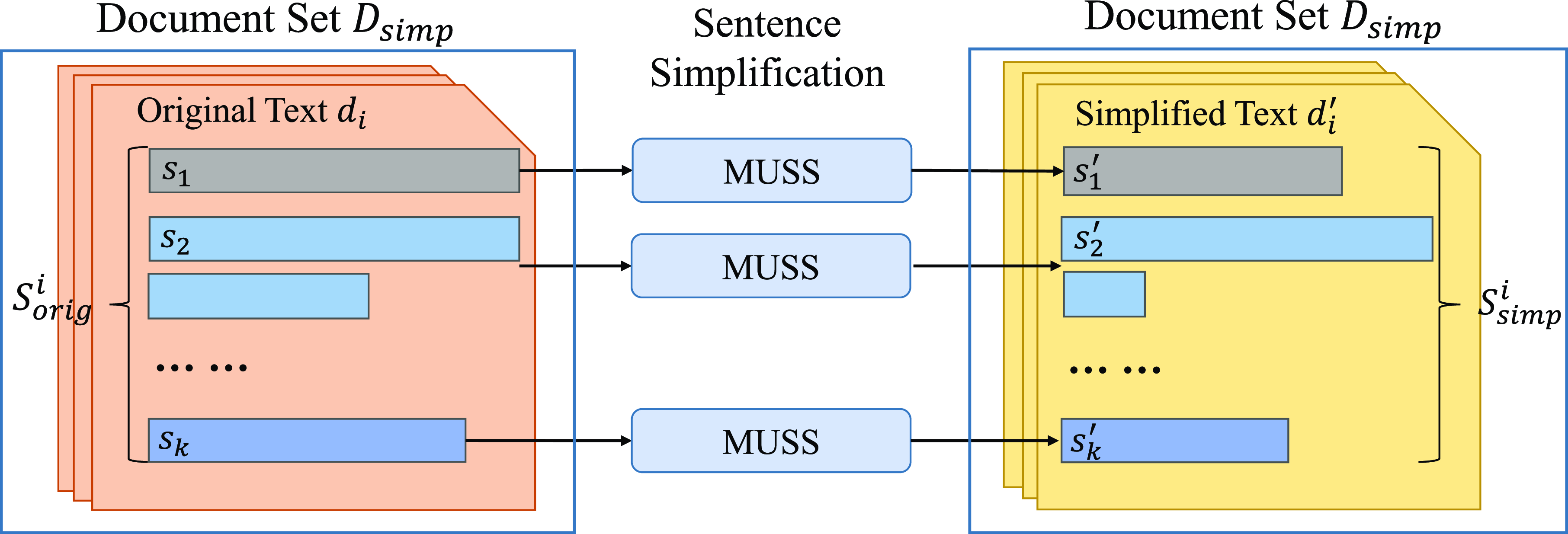

We show the simplification process in Figure 4. Supposing the document set of original texts is

![]() $D_{orig}=\{d_1,d_2,\ldots, d_i,\ldots, d_n\}$

. For a given text

$D_{orig}=\{d_1,d_2,\ldots, d_i,\ldots, d_n\}$

. For a given text

![]() $d_i$

$d_i$

![]() $(1\le i\le n)$

, the original sentence set of

$(1\le i\le n)$

, the original sentence set of

![]() $d_i$

is denoted as

$d_i$

is denoted as

![]() $S_{orig}^i=\{s_1,s_2,\ldots s_j,\ldots, s_k\}$

. After the simplification process, the simplified text of

$S_{orig}^i=\{s_1,s_2,\ldots s_j,\ldots, s_k\}$

. After the simplification process, the simplified text of

![]() $d_i$

is obtained, which is denoted as

$d_i$

is obtained, which is denoted as

![]() $d_i'$

. Due to the sentence-by-sentence manner, we also obtain the parallel simplified sentence

$d_i'$

. Due to the sentence-by-sentence manner, we also obtain the parallel simplified sentence

![]() $s_j'$

for each sentence in the text

$s_j'$

for each sentence in the text

![]() $d_i$

. If a sentence is split into multiple sub-sentences after simplification, we connect the sub-sentences as the corresponding simplified sentence

$d_i$

. If a sentence is split into multiple sub-sentences after simplification, we connect the sub-sentences as the corresponding simplified sentence

![]() $s_j'$

. Then the simplified sentence set of the simplified document

$s_j'$

. Then the simplified sentence set of the simplified document

![]() $d_i'$

is obtained, which is denoted as

$d_i'$

is obtained, which is denoted as

![]() $S_{simp}^i=\{s_1',s_2',\ldots, s_j',\ldots, s_k'\}$

. We denote the parallel sentence pairs as

$S_{simp}^i=\{s_1',s_2',\ldots, s_j',\ldots, s_k'\}$

. We denote the parallel sentence pairs as

![]() $(s_j,s_j')$

$(s_j,s_j')$

![]() $(s_j\in S_{orig}^i, s_j' \in S_{simp}^i, 1 \le i \le n, 1 \le j \le k)$

, where

$(s_j\in S_{orig}^i, s_j' \in S_{simp}^i, 1 \le i \le n, 1 \le j \le k)$

, where

![]() $n$

is the total number of the texts in the corpus and

$n$

is the total number of the texts in the corpus and

![]() $k$

is the total number of the sentences in each text.

$k$

is the total number of the sentences in each text.

Figure 4. The symbolism of the simplification process. We use the sentence-level simplification model MUSS to simplify each sentence

![]() $s_j$

and obtain a simplified sentence

$s_j$

and obtain a simplified sentence

![]() $s_j'$

for each text

$s_j'$

for each text

![]() $d_i$

in the original document set

$d_i$

in the original document set

![]() $D_{orig}$

. Finally, we combine the simplified sentences in each text

$D_{orig}$

. Finally, we combine the simplified sentences in each text

![]() $d_i'$

to produce a parallel simplified document set

$d_i'$

to produce a parallel simplified document set

![]() $D_{simp}$

.

$D_{simp}$

.

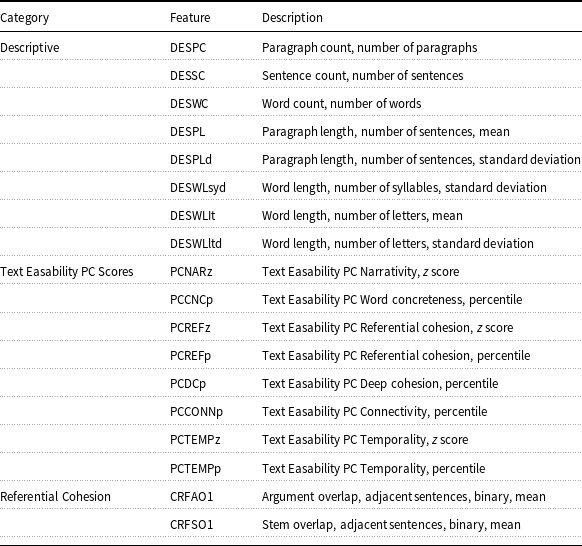

3.3 Textual form features

On the basis of our innovative insight into text readability, we offer a set of textual form features for assessing text readability. The retrieved features are based on the assumption that the differences between the original text and the simplified text can reflect the degree to which the complex exterior textual form is reduced during the simplification process. In other words, the distinctions measure the quantity of the outer textual form and hence serve as an indicator of the complexity of the textual form. To achieve this, we extract features from three dimensions, namely similarity, overlap, and length ratio, to reflect the difference between the original text and the simplified text and to quantify how many exterior forms have been reduced. The proposed textual form features are divided into three subsets:

-

• Similarity-based features. The similarity-based features are based on the classical similarity measurements, including cosine similarity, Jaccard similarity, edit distance, BLEU score, and METEOR score. In particular, we propose an innovative feature

$BertSim$

, which determines the distance between BERT-based text representations.

$BertSim$

, which determines the distance between BERT-based text representations. -

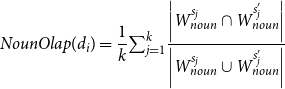

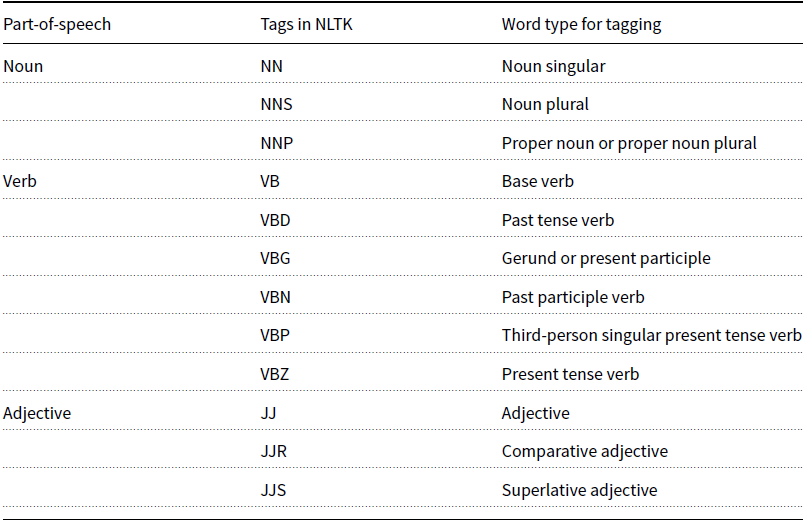

• Overlap-based features. The overlap-based features are proposed based on the intuition that word-level overlap can represent word-level change through simplification (e.g., the number of complex word deletion) and capture lexical-dimension textual form complexity (e.g., vocabulary difficulty). We investigate the overlap from the perspective of part-of-speech (i.e., noun, verb, and adjective).

-

• Length ratio-based features. The length ratio-based features are based on the observation that text is usually shortened after being simplified, we propose to use the sentence-level length ratio to characterize the syntactical dimension of textual form complexity.

In the following subsections, we will describe these three types of textual form features in detail.

3.3.1 Similarity-based features

According to the information theory lin1998information, similarity refers to the commonalities shared by two different texts. The greater the commonality, the higher the similarity, and vice versa. Over the past three decades (Wang and Dong Reference Wang and Dong2020), several similarity measurements have been proposed. These similarity measurements vary in how they represent text and compute distance. In this work, we use three classical similarity measurements to capture textual form complexity for readability assessment. In addition, we also propose an innovative feature based on the similarity of BERT embeddings (Imperial Reference Imperial2021). The detailed descriptions of them are as follows:

-

![]() $\mathbf{CosSim}$

.

$\mathbf{CosSim}$

.

Cosine Similarity measures the cosine of the angle between two vectors in an inner product space (Deza and Deza Reference Deza and Deza2009). For a given document, the

![]() $CosSim$

feature is calculated as the average cosine similarity between the vector representations of sentences in the text. It can be considered a sentence-level similarity between the texts before and after simplification. Specifically, for a given text

$CosSim$

feature is calculated as the average cosine similarity between the vector representations of sentences in the text. It can be considered a sentence-level similarity between the texts before and after simplification. Specifically, for a given text

![]() $d_i$

, the

$d_i$

, the

![]() $k$

parallel sentence pairs

$k$

parallel sentence pairs

![]() $(s_j, s_j')$

are obtained through simplification operations, where

$(s_j, s_j')$

are obtained through simplification operations, where

![]() $j$

=1,2…,

$j$

=1,2…,

![]() $k$

. We use the one-hot encoding as the vector representations of these sentences, which is a well-known bag-of-words model for text representation (Manning and Schütze Reference Manning and Schütze1999). Then, we calculate the cosine similarity between the one-hot vectors of

$k$

. We use the one-hot encoding as the vector representations of these sentences, which is a well-known bag-of-words model for text representation (Manning and Schütze Reference Manning and Schütze1999). Then, we calculate the cosine similarity between the one-hot vectors of

![]() $s_j$

and

$s_j$

and

![]() $s_j'$

and average the

$s_j'$

and average the

![]() $k$

values in text

$k$

values in text

![]() $d_i$

. The feature

$d_i$

. The feature

![]() $CosSim$

calculation formula is as follows:

$CosSim$

calculation formula is as follows:

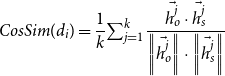

\begin{equation} CosSim(d_i)=\frac{1}{k}{\textstyle \sum _{j=1}^{k}} \frac{\vec{h_o^j}\cdot \vec{h_s^j}}{\left \|\vec{h_o^j}\right \| \cdot \left \|\vec{h_s^j}\right \|} \end{equation}

\begin{equation} CosSim(d_i)=\frac{1}{k}{\textstyle \sum _{j=1}^{k}} \frac{\vec{h_o^j}\cdot \vec{h_s^j}}{\left \|\vec{h_o^j}\right \| \cdot \left \|\vec{h_s^j}\right \|} \end{equation}

where

![]() $\vec{h_o^j}$

is the one-hot vector of sentence

$\vec{h_o^j}$

is the one-hot vector of sentence

![]() $s_j$

in original text

$s_j$

in original text

![]() $d_i$

,

$d_i$

,

![]() $\vec{h_s^j}$

is the one-hot vector of sentence

$\vec{h_s^j}$

is the one-hot vector of sentence

![]() $s_j'$

in simplified text

$s_j'$

in simplified text

![]() $d_i'$

,

$d_i'$

,

![]() $\left \|\vec{h^j}\right \|$

denotes the modulus of vector

$\left \|\vec{h^j}\right \|$

denotes the modulus of vector

![]() $\vec{h^j}$

,

$\vec{h^j}$

,

![]() $k$

is the number of sentence pair

$k$

is the number of sentence pair

![]() $(s_j, s_j')$

for the given text

$(s_j, s_j')$

for the given text

![]() $d_i$

.

$d_i$

.

-

![]() $\mathbf{JacSim}$

.

$\mathbf{JacSim}$

.

Jaccard similarity is a similarity metric used to compare two finite sets. It is defined as the intersection of two sets over the union of two sets (Jaccard Reference Jaccard1901). Applied to text similarity measurement, Jaccard similarity is computed as the number of shared terms over the number of all unique terms in both strings (Gomaa and Fahmy Reference Gomaa and Fahmy2013). The feature

![]() $JacSim$

measures the average Jaccard similarity of the sentence pairs for the given text. The extraction procedure is the same as for the feature

$JacSim$

measures the average Jaccard similarity of the sentence pairs for the given text. The extraction procedure is the same as for the feature

![]() $CosSim$

, and the calculation formula is as follows:

$CosSim$

, and the calculation formula is as follows:

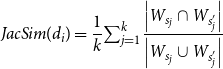

\begin{equation} JacSim(d_i)=\frac{1}{k}{\textstyle \sum _{j=1}^{k}}\frac{\left | W_{s_j} \cap W_{s_j'} \right | }{\left | W_{s_j}\cup W_{s_j'}\right | } \end{equation}

\begin{equation} JacSim(d_i)=\frac{1}{k}{\textstyle \sum _{j=1}^{k}}\frac{\left | W_{s_j} \cap W_{s_j'} \right | }{\left | W_{s_j}\cup W_{s_j'}\right | } \end{equation}

where

![]() $W_{s_j}$

denotes the word set of sentence

$W_{s_j}$

denotes the word set of sentence

![]() $s_j$

in original text

$s_j$

in original text

![]() $d_i$

,

$d_i$

,

![]() $W_{s_j'}$

denotes the word set of sentence

$W_{s_j'}$

denotes the word set of sentence

![]() $s_j'$

in simplified text

$s_j'$

in simplified text

![]() $d_i'$

,

$d_i'$

,

![]() $k$

is the number of sentence pairs

$k$

is the number of sentence pairs

![]() $(s_j, s_j')$

for the given text

$(s_j, s_j')$

for the given text

![]() $d_i$

.

$d_i$

.

-

![]() $\mathbf{EditDis}$

.

$\mathbf{EditDis}$

.

Edit distance is a metric used in computational linguistics to measure the dissimilarity between two strings. It is determined by the minimal number of operations required to transform one string into another (Gomaa et al. Reference Gomaa and Fahmy2013). Depending on the definitions, there are different sets of string operations at different edit distances. In our work, we used the Levenshtein distance, which incorporates operations such as the deletion, insertion, or substitution of a character in a string (Levenshtein et al. Reference Levenshtein1966). The feature

![]() $EditDis$

calculates the Levenshtein distance between the original text and its simplified version. Because the Levenshtein distance is calculated character by character, the feature

$EditDis$

calculates the Levenshtein distance between the original text and its simplified version. Because the Levenshtein distance is calculated character by character, the feature

![]() $EditDis$

can capture the character-level textual form complexity for a given text.

$EditDis$

can capture the character-level textual form complexity for a given text.

-

![]() $\mathbf{BertSim}$

.

$\mathbf{BertSim}$

.

The

![]() $BertSim$

feature measures the text’s readability based on the similarity between the BERT-based representations of the texts before and after simplification at the document level. BERT is a pre-trained language model that has shown effectiveness in a variety of NLP tasks. It possesses the inherent capability to encode linguistic knowledge, such as hierarchical parse trees (Hewitt and Manning Reference Hewitt and Manning2019), syntactic chunks (Liu et al. Reference Liu, Gardner, Belinkov, Peters and Smith2019), and semantic roles (Ettinger Reference Ettinger2020). We believe such knowledge can be incorporated to capture the text’s multidimensional textual features in order to more comprehensively represent the textual form complexity. During the feature extraction process, we leveraged the pre-trained models “bert-base-casedFootnote

B

” and “bert-base-multilingual-uncasedFootnote

C

” for English and non-English texts. Steps for feature extraction are as follows:

$BertSim$

feature measures the text’s readability based on the similarity between the BERT-based representations of the texts before and after simplification at the document level. BERT is a pre-trained language model that has shown effectiveness in a variety of NLP tasks. It possesses the inherent capability to encode linguistic knowledge, such as hierarchical parse trees (Hewitt and Manning Reference Hewitt and Manning2019), syntactic chunks (Liu et al. Reference Liu, Gardner, Belinkov, Peters and Smith2019), and semantic roles (Ettinger Reference Ettinger2020). We believe such knowledge can be incorporated to capture the text’s multidimensional textual features in order to more comprehensively represent the textual form complexity. During the feature extraction process, we leveraged the pre-trained models “bert-base-casedFootnote

B

” and “bert-base-multilingual-uncasedFootnote

C

” for English and non-English texts. Steps for feature extraction are as follows:

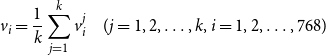

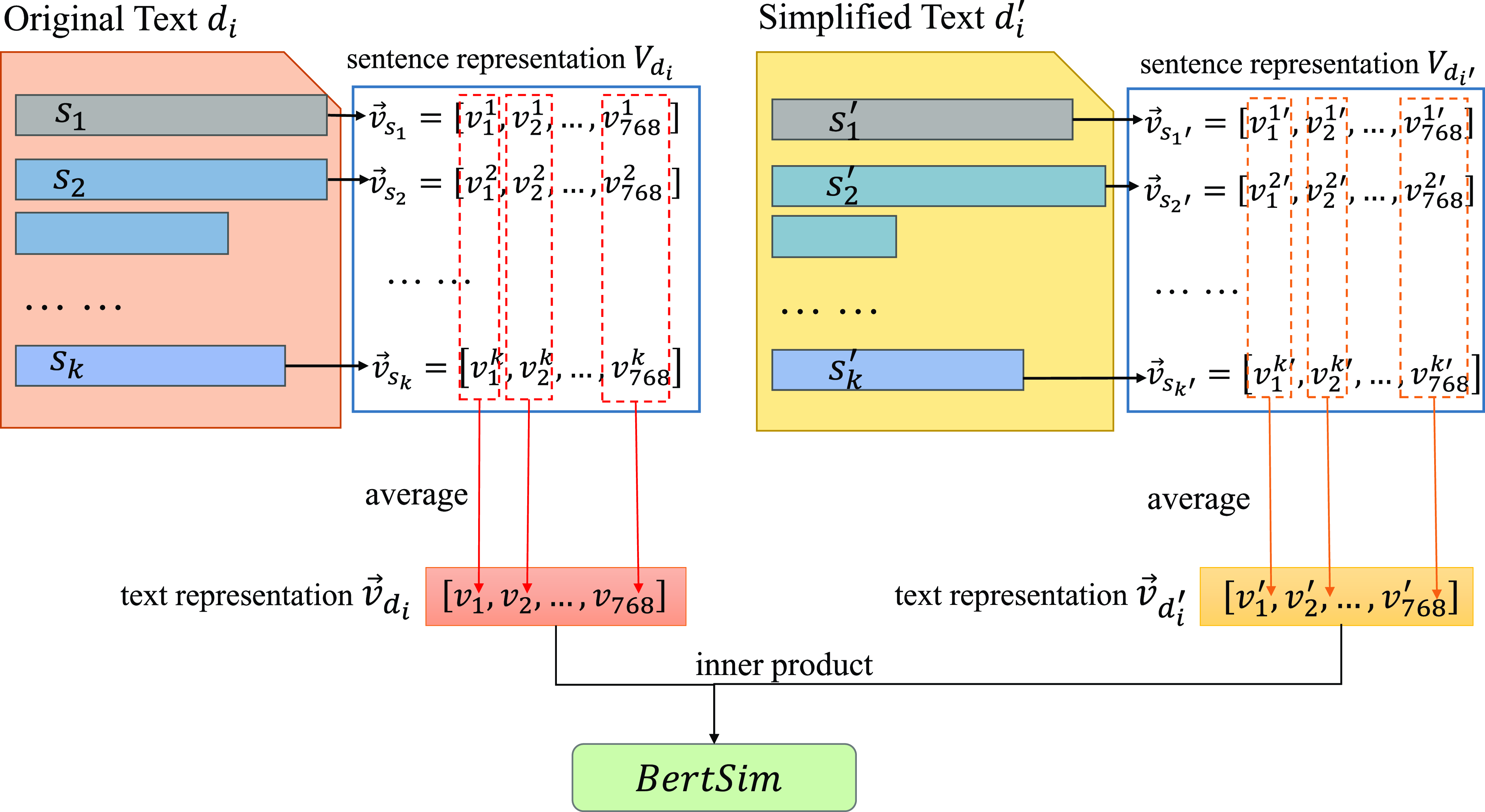

Step 1: BERT-based sentence representation

For each sentence of a given text, we use the BERT tokenizer to split and get the token sequence

![]() $[t_1, t_2, \ldots, t_l,\ldots, t_{L}]$

, where

$[t_1, t_2, \ldots, t_l,\ldots, t_{L}]$

, where

![]() $t_l$

is the

$t_l$

is the

![]() $l^{th}$

token for the sentence and

$l^{th}$

token for the sentence and

![]() $L=510$

is the maximum sentence length supported by BERT. If the token length in the sentence is greater than

$L=510$

is the maximum sentence length supported by BERT. If the token length in the sentence is greater than

![]() $L$

, the sequence will be truncated; otherwise, it will be padded with the

$L$

, the sequence will be truncated; otherwise, it will be padded with the

![]() $[PAD]$

token. The final token sequence of the sentence is concatenated by the

$[PAD]$

token. The final token sequence of the sentence is concatenated by the

![]() $[CLS]$

token, original sentence token sequence, and the

$[CLS]$

token, original sentence token sequence, and the

![]() $[SEP]$

token, which is denoted as

$[SEP]$

token, which is denoted as

![]() $T_j = [[CLS],t_1, t_2, \ldots \ldots t_{L},[SEP]]$

. As described in the work of BERT (Kenton and Toutanova Reference Kenton and Toutanova2019), we use the final token embeddings, segmentation embeddings, and position embeddings as input to the BERT to get the BERT encoding of the sentence. For a given sentence

$T_j = [[CLS],t_1, t_2, \ldots \ldots t_{L},[SEP]]$

. As described in the work of BERT (Kenton and Toutanova Reference Kenton and Toutanova2019), we use the final token embeddings, segmentation embeddings, and position embeddings as input to the BERT to get the BERT encoding of the sentence. For a given sentence

![]() $s_j$

, the output hidden states of the sentence are

$s_j$

, the output hidden states of the sentence are

![]() $\mathbf{H} \in \mathbb{R}^{L\times d}$

, where

$\mathbf{H} \in \mathbb{R}^{L\times d}$

, where

![]() $d=768$

is the dimension of the hidden state. In our work, instead of using the output vector of token [CLS], we use the mean pooling of

$d=768$

is the dimension of the hidden state. In our work, instead of using the output vector of token [CLS], we use the mean pooling of

![]() $\mathbf{H} \in \mathbb{R}^{L\times d}$

as the sentence representation, which is denoted as

$\mathbf{H} \in \mathbb{R}^{L\times d}$

as the sentence representation, which is denoted as

![]() $\vec{v_{s_j}} \in \mathbb{R}^d$

.

$\vec{v_{s_j}} \in \mathbb{R}^d$

.

Step 2: BERT-based text representation and similarity calculation

After getting the BERT-based representations of the text’s sentences, the BERT-based representation of the text can be obtained by taking the average of the sentence representations. Given the original text

![]() $d_i$

and the simplified text

$d_i$

and the simplified text

![]() $d_i'$

, we first get the representations of all sentences in them, which are denoted as

$d_i'$

, we first get the representations of all sentences in them, which are denoted as

![]() $V_{d_i}=\{\vec{v_{s_1}},\vec{v_{s_2}},\ldots, \vec{v_{s_k}}\}$

for the text

$V_{d_i}=\{\vec{v_{s_1}},\vec{v_{s_2}},\ldots, \vec{v_{s_k}}\}$

for the text

![]() $d_i$

and

$d_i$

and

![]() $V_{d_i'}=\{\vec{v_{s_1'}},\vec{v_{s_2'}},\ldots, \vec{v_{s_k'}}\}$

for the text

$V_{d_i'}=\{\vec{v_{s_1'}},\vec{v_{s_2'}},\ldots, \vec{v_{s_k'}}\}$

for the text

![]() $d_i'$

, where

$d_i'$

, where

![]() $k$

is the sentence number,

$k$

is the sentence number,

![]() $\vec{v_{s_k}}=[v_1^k,v_2^k,\ldots, v_{768}^k]$

is the vector of the

$\vec{v_{s_k}}=[v_1^k,v_2^k,\ldots, v_{768}^k]$

is the vector of the

![]() $k^{th}$

sentence in

$k^{th}$

sentence in

![]() $d_i$

and

$d_i$

and

![]() $\vec{v_{s_k'}}=[{v_1^k}',{v_2^k}',\ldots, {v_{768}^{k'}}]$

is the vector of the

$\vec{v_{s_k'}}=[{v_1^k}',{v_2^k}',\ldots, {v_{768}^{k'}}]$

is the vector of the

![]() $k^{th}$

sentence in

$k^{th}$

sentence in

![]() $d_i'$

. The final representation

$d_i'$

. The final representation

![]() $\vec{v_{d_i}}=[v_1,v_2,\ldots, v_{768}]$

for text

$\vec{v_{d_i}}=[v_1,v_2,\ldots, v_{768}]$

for text

![]() $d_i$

and

$d_i$

and

![]() $\vec{ v_{d_i'} }=[ v_{1}',v_{2}',\ldots, v_{768}' ]$

for text

$\vec{ v_{d_i'} }=[ v_{1}',v_{2}',\ldots, v_{768}' ]$

for text

![]() $d_i'$

are obtained by averaging the values of each dimension of the

$d_i'$

are obtained by averaging the values of each dimension of the

![]() $k$

number of sentences. The inner product of the two text representations is then utilized as the

$k$

number of sentences. The inner product of the two text representations is then utilized as the

![]() $BertSim$

feature value. The equations are as follows:

$BertSim$

feature value. The equations are as follows:

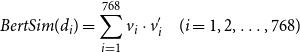

\begin{equation} BertSim(d_i)=\sum _{i=1}^{768} v_i\cdot v_i' \quad (i=1,2,\ldots, 768) \end{equation}

\begin{equation} BertSim(d_i)=\sum _{i=1}^{768} v_i\cdot v_i' \quad (i=1,2,\ldots, 768) \end{equation}

\begin{equation} v_i = \frac{1}{k}\sum _{j=1}^{k}v_i^j \quad (j=1,2,\ldots, k,\space i=1,2,\ldots, 768) \end{equation}

\begin{equation} v_i = \frac{1}{k}\sum _{j=1}^{k}v_i^j \quad (j=1,2,\ldots, k,\space i=1,2,\ldots, 768) \end{equation}

\begin{equation} v_i' = \frac{1}{k}\sum _{j=1}^{k}{v_i^j}' \quad (j=1,2,\ldots, k,\space i=1,2,\ldots, 768) \end{equation}

\begin{equation} v_i' = \frac{1}{k}\sum _{j=1}^{k}{v_i^j}' \quad (j=1,2,\ldots, k,\space i=1,2,\ldots, 768) \end{equation}

where

![]() $k$

is the number of sentences in text,

$k$

is the number of sentences in text,

![]() $v_i$

is the average value of

$v_i$

is the average value of

![]() $i^{th}$

dimension in the representations of the

$i^{th}$

dimension in the representations of the

![]() $k$

sentences of text

$k$

sentences of text

![]() $d_i$

, and

$d_i$

, and

![]() $v_i'$

is the average value of

$v_i'$

is the average value of

![]() $i^{th}$

dimension in the representations of the

$i^{th}$

dimension in the representations of the

![]() $k$

sentences of text

$k$

sentences of text

![]() $d_i'$

. The detailed procedure for step 2 is presented in Figure 5.

$d_i'$

. The detailed procedure for step 2 is presented in Figure 5.

Figure 5. The extraction process of the feature

![]() $BertSim$

in step 2. The sentence representations

$BertSim$

in step 2. The sentence representations

![]() $\vec{v_{s_k}}$

and

$\vec{v_{s_k}}$

and

![]() $\vec{v_s^i}$

are obtained in step 2. For each text

$\vec{v_s^i}$

are obtained in step 2. For each text

![]() $d_i$

, we average the value of each dimension in the sentence representations as the text representation. The text representation for the original text is

$d_i$

, we average the value of each dimension in the sentence representations as the text representation. The text representation for the original text is

![]() $\vec{v_{d_i}}$

, and the text representation for the simplified text is

$\vec{v_{d_i}}$

, and the text representation for the simplified text is

![]() $\vec{v_{d_i'}}$

. At last, the inner product of

$\vec{v_{d_i'}}$

. At last, the inner product of

![]() $\vec{v_{d_i}}$

and

$\vec{v_{d_i}}$

and

![]() $\vec{v_{d_i'}}$

is calculated as the final value of the feature

$\vec{v_{d_i'}}$

is calculated as the final value of the feature

![]() $BertSim$

.

$BertSim$

.

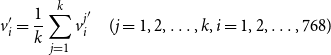

-

![]() $\mathbf{BleuSim}$

.

$\mathbf{BleuSim}$

.

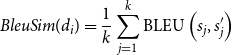

The BLEU score is a commonly used evaluation metric for machine translation (Papineni et al. Reference Papineni, Roukos, Ward and Zhu2002). It assesses the frequency of n-gram matches (usually 1-gram to 4-gram) in the machine-generated text compared to reference texts. As the core idea of BLEU is to evaluate the alignment between machine-generated output and human reference, BLEU score can be viewed as a similarity measure between two text segments (Callison-Burch, Osborne, and Koehn Reference Callison-Burch, Osborne and Koehn2006). Based on this similarity measure, we construct the feature

![]() $BleuSim$

. Specifically,

$BleuSim$

. Specifically,

![]() $BleuSim$

computes the BLEU score for each original-simplified sentence pair for the given text

$BleuSim$

computes the BLEU score for each original-simplified sentence pair for the given text

![]() $d_i$

and then averages these scores across all pairs. The calculation formulas are as follows:

$d_i$

and then averages these scores across all pairs. The calculation formulas are as follows:

\begin{equation} BleuSim(d_i)=\frac{1}{k} \sum _{j=1}^{k} \operatorname{BLEU}\left (s_{j}, s_{j}^{\prime }\right ) \end{equation}

\begin{equation} BleuSim(d_i)=\frac{1}{k} \sum _{j=1}^{k} \operatorname{BLEU}\left (s_{j}, s_{j}^{\prime }\right ) \end{equation}

where

![]() $\text{BLEU}(s_j, s'_j)$

is the BLEU score for the

$\text{BLEU}(s_j, s'_j)$

is the BLEU score for the

![]() $j$

-th sentence pair for text

$j$

-th sentence pair for text

![]() $d_i$

,

$d_i$

,

![]() $s_j$

is the original sentence,

$s_j$

is the original sentence,

![]() $ s'_j$

is the corresponding simplified sentence, and

$ s'_j$

is the corresponding simplified sentence, and

![]() $k$

is the total number of sentence pairs.

$k$

is the total number of sentence pairs.

![]() $\mathrm{BP}$

is the brevity penalty that penalizes shorter simplified sentences to ensure they are not too brief compared to the original sentences.

$\mathrm{BP}$

is the brevity penalty that penalizes shorter simplified sentences to ensure they are not too brief compared to the original sentences.

![]() $P_{n}^{(j)}$

denotes the precision of the

$P_{n}^{(j)}$

denotes the precision of the

![]() $n$

-gram for the

$n$

-gram for the

![]() $j$

-th sentence pair. Each

$j$

-th sentence pair. Each

![]() $n$

-gram length is treated equally with the weight of 1/4.

$n$

-gram length is treated equally with the weight of 1/4.

The precision

![]() $P_{n}^{(j)}$

is the proportion of the matched

$P_{n}^{(j)}$

is the proportion of the matched

![]() $n$

-grams in

$n$

-grams in

![]() $ s'_j$

out of the total number of

$ s'_j$

out of the total number of

![]() $n$

-grams in

$n$

-grams in

![]() $s_j$

. Its calculation is as follows:

$s_j$

. Its calculation is as follows:

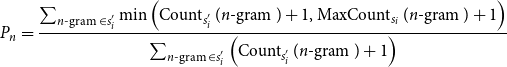

\begin{equation} P_{n}=\frac{\sum _{n \text{-gram } \in s_{i}^{\prime }} \min \left (\operatorname{Count}_{s_{i}^{\prime }}(n \text{-gram })+1, \operatorname{MaxCount}_{s_{i}}(n \text{-gram })+1\right )}{\sum _{n \text{-gram } \in s_{i}^{\prime }}\left (\operatorname{Count}_{s_{i}^{\prime }}(n \text{-gram })+1\right )} \end{equation}

\begin{equation} P_{n}=\frac{\sum _{n \text{-gram } \in s_{i}^{\prime }} \min \left (\operatorname{Count}_{s_{i}^{\prime }}(n \text{-gram })+1, \operatorname{MaxCount}_{s_{i}}(n \text{-gram })+1\right )}{\sum _{n \text{-gram } \in s_{i}^{\prime }}\left (\operatorname{Count}_{s_{i}^{\prime }}(n \text{-gram })+1\right )} \end{equation}

where

![]() $\text{Count}_{s'_i}(n \text{-gram })$

is the count of

$\text{Count}_{s'_i}(n \text{-gram })$

is the count of

![]() $n$

-gram in the simplified sentence

$n$

-gram in the simplified sentence

![]() $ s'_j$

.

$ s'_j$

.

![]() $\text{MaxCount}_{s_i}(n \text{-gram })$

is the maximum count of that

$\text{MaxCount}_{s_i}(n \text{-gram })$

is the maximum count of that

![]() $n$

-gram in the original sentence. We use the additive smoothing method to avoid the extreme case where an

$n$

-gram in the original sentence. We use the additive smoothing method to avoid the extreme case where an

![]() $n$

-gram is completely absent in

$n$

-gram is completely absent in

![]() $ s'_j$

, leading to a zero precision. This smoothing method adds the constant

$ s'_j$

, leading to a zero precision. This smoothing method adds the constant

![]() $1$

to the count of all

$1$

to the count of all

![]() $n$

-grams.

$n$

-grams.

-

![]() $\mathbf{MeteorSim}$

.

$\mathbf{MeteorSim}$

.

The METEOR is an automatic metric for the evaluation of machine translation(Banerjee and Lavie Reference Banerjee and Lavie2005). It functions by aligning words within a pair of sentences. By accounting for both precision and recall, METEOR offers a more balanced similarity measure between two text segments. Based on this similarity measure, we construct the feature

![]() $MeteorSim$

. Specifically,

$MeteorSim$

. Specifically,

![]() $MeteorSim$

computes the METEOR score for each sentence pair for the given text

$MeteorSim$

computes the METEOR score for each sentence pair for the given text

![]() $d_i$

and then averages these scores across all pairs. The calculation formulas are as follows:

$d_i$

and then averages these scores across all pairs. The calculation formulas are as follows:

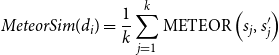

\begin{equation} MeteorSim(d_i)=\frac{1}{k} \sum _{j=1}^{k} \operatorname{METEOR}\left (s_{j}, s_{j}^{\prime }\right ) \end{equation}

\begin{equation} MeteorSim(d_i)=\frac{1}{k} \sum _{j=1}^{k} \operatorname{METEOR}\left (s_{j}, s_{j}^{\prime }\right ) \end{equation}

where

![]() $k$

is the total number of sentences in text

$k$

is the total number of sentences in text

![]() $d_i$

,

$d_i$

,

![]() $s_j$

is the

$s_j$

is the

![]() $j$

-th sentence in document

$j$

-th sentence in document

![]() $d_i$

,

$d_i$

,

![]() $s'_j$

is the corresponding simplified sentence

$s'_j$

is the corresponding simplified sentence

![]() $s_j$

.

$s_j$

.

![]() $\rho$

is the penalty factor based on the chunkiness of the alignment between

$\rho$

is the penalty factor based on the chunkiness of the alignment between

![]() $s_j$

and

$s_j$

and

![]() $s'_j$

. It penalizes the score if the word order in the simplified sentence differs significantly from the original sentence.

$s'_j$

. It penalizes the score if the word order in the simplified sentence differs significantly from the original sentence.

![]() $\alpha$

is a parameter that balances the contributions of precision

$\alpha$

is a parameter that balances the contributions of precision

![]() $P_j$

and recall

$P_j$

and recall

![]() $R_j$

.

$R_j$

.

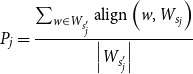

For the

![]() $j$

-th sentence pair, precision

$j$

-th sentence pair, precision

![]() $P_j$

quantifies the ratio of words in the simplified sentence

$P_j$

quantifies the ratio of words in the simplified sentence

![]() $s'_j$

that align to the words in the original sentence

$s'_j$

that align to the words in the original sentence

![]() $s_j$

; recall

$s_j$

; recall

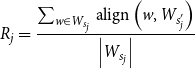

![]() $R_j$

quantifies the ratio of words in the original sentence

$R_j$

quantifies the ratio of words in the original sentence

![]() $s_j$

that find alignments in the simplified sentence

$s_j$

that find alignments in the simplified sentence

![]() $s'_j$

. According to Banerjee and Lavie (Reference Banerjee and Lavie2005), the word alignment process is executed by considering not only exact word matches but also the presence of synonyms and stem matches. The calculation formulas are as follows:

$s'_j$

. According to Banerjee and Lavie (Reference Banerjee and Lavie2005), the word alignment process is executed by considering not only exact word matches but also the presence of synonyms and stem matches. The calculation formulas are as follows:

\begin{equation} P_j=\frac{\sum _{w \in W_{s_{j}^{\prime }}} \operatorname{align}\left (w, W_{s_{j}}\right )}{\left |W_{s_{j}^{\prime }}\right |} \end{equation}

\begin{equation} P_j=\frac{\sum _{w \in W_{s_{j}^{\prime }}} \operatorname{align}\left (w, W_{s_{j}}\right )}{\left |W_{s_{j}^{\prime }}\right |} \end{equation}

\begin{equation} R_j=\frac{\sum _{w \in W_{s_{j}}} \operatorname{align}\left (w, W_{s_{j}^{\prime }}\right )}{\left |W_{s_{j}}\right |} \end{equation}

\begin{equation} R_j=\frac{\sum _{w \in W_{s_{j}}} \operatorname{align}\left (w, W_{s_{j}^{\prime }}\right )}{\left |W_{s_{j}}\right |} \end{equation}

where

![]() $W_{s'_j}$

is the set of words in the simplified sentence

$W_{s'_j}$

is the set of words in the simplified sentence

![]() $s'_j$

,

$s'_j$

,

![]() $W_{s_j}$

is the set of words in the original sentence

$W_{s_j}$

is the set of words in the original sentence

![]() $s_j$

,

$s_j$

,

![]() $\text{align}(w, W)$

is a function that returns 1 if the word

$\text{align}(w, W)$

is a function that returns 1 if the word

![]() $w$

aligns with any word in the set

$w$

aligns with any word in the set

![]() $W$

, otherwise 0.

$W$

, otherwise 0.

![]() $|W_{s_j}|$

and

$|W_{s_j}|$

and

![]() $|W_{s'_j}|$

denote the number of words in the sentence

$|W_{s'_j}|$

denote the number of words in the sentence

![]() $s_j$

and

$s_j$

and

![]() $s'_j$

, respectively.

$s'_j$

, respectively.

The six similarity-based features listed above characterize textual form complexity at various levels and assess text readability across multiple linguistic dimensions. The features

![]() $CosSim$

and

$CosSim$

and

![]() $JacSim$

measure the word-level text similarity before and after simplification.

$JacSim$

measure the word-level text similarity before and after simplification.

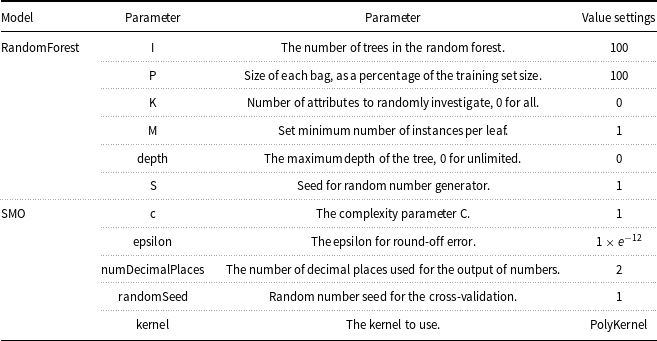

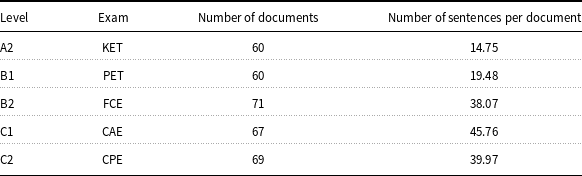

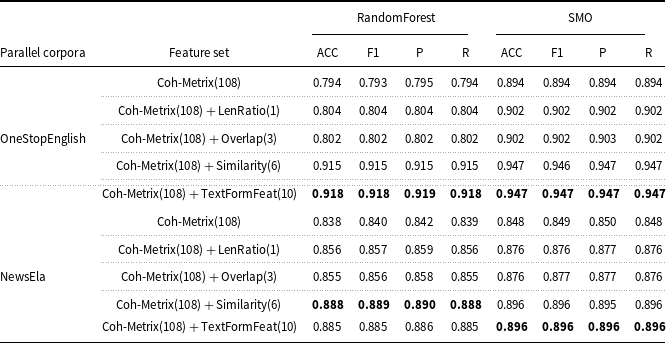

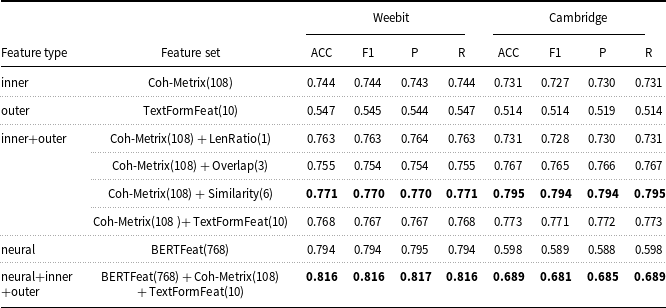

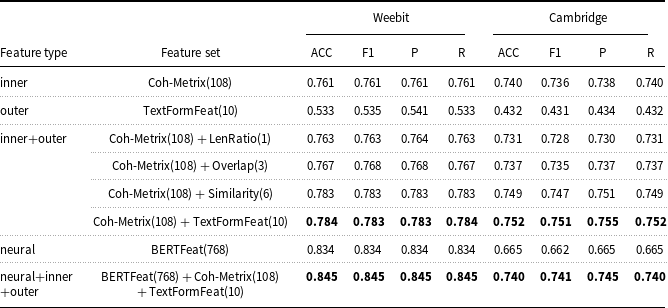

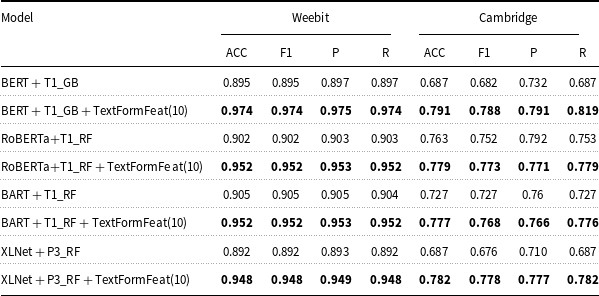

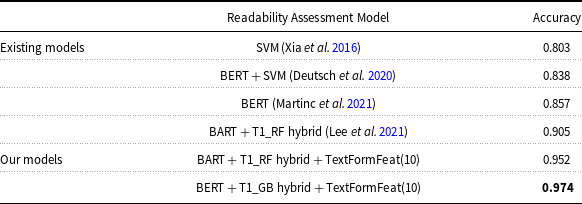

![]() $JacSim$