1.1 Fire and Water Become Heat and Steam

We have been warming ourselves and cooking with fire since prehistory, but not until the eighteenth century did we begin to understand its properties. Fire was one of the four ancient elements, along with earth, water, and air, and was considered to be hot and dry, in contrast to water, which was cold and wet. Aristotle described fire as “finite” and its locomotion as “up,” compared to earth, which was “down.” Little was known of its material properties, however, beyond such contrasts with the other elements, although Aristotle did note that “Heraclitus says that at some time all things become fire” (Physics, Book 3, Part 5).

By the mid-eighteenth century, fire was being tamed in England in large, high-temperature furnaces that turned clay into pottery, for example, in the kilns of the early industrialist Josiah Wedgwood, “potter to her majesty.”1 More importantly, fire was being used to heat water to create steam, which could move a piston to lift a load many times that of a man or horse. When the steam was condensed, a vacuum was created that lowered the piston to begin another cycle. For a burgeoning mining industry, mechanical power was essential to pump water from flooded mines, considered “the great engineering problem of the age.” The pumps employed simple boilers fueled first by wood and then by coal, which had more than twice the energy content of wood, was easy to mine, and seemed limitless in the English Midlands.

Wedgwood’s need for more coal to fire the shaped clay in his growing pottery factory led him to seek better access to local mines, helping to spur an era of canal building. Distinctively long and slender to accommodate two-way traffic, canal barges were first pulled by horses walking alongside on adjacent towpaths, but would soon be powered by a revolutionary new engine.

In today’s technology-filled world, units can often be confusing, but one is essential: the watt (W), named after the Scotsman James Watt, who is credited with inventing the first “general-purpose” steam engine, forever transforming the landscape of a once pastoral England. The watt, the modern unit of power (the rate of doing work or using energy, electrical or otherwise), replaced the “horsepower” (hp), a unit Watt himself had created to help customers quantify the worth of his invention. One horse lifting 550 pounds (roughly the weight of three average men) a distance of one foot in one second equals 1 hp, or about 746 watts.2
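Watt’s definition reduces to a single unit conversion. The sketch below is a minimal Python illustration, assuming the modern exact definitions of the foot (0.3048 m) and the pound-force (4.4482216152605 N); the function name `horsepower_to_watts` is our own, chosen for illustration.

```python
# Mechanical horsepower: 550 foot-pounds-force of work per second.
FOOT_IN_METRES = 0.3048                    # exact, by international definition
POUND_FORCE_IN_NEWTONS = 4.4482216152605   # exact, by international definition
FOOT_POUNDS_PER_SECOND_PER_HP = 550.0

def horsepower_to_watts(hp: float) -> float:
    """Convert mechanical horsepower to watts (1 W = 1 J/s = 1 N*m/s)."""
    newton_metres_per_ft_lbf = FOOT_IN_METRES * POUND_FORCE_IN_NEWTONS
    return hp * FOOT_POUNDS_PER_SECOND_PER_HP * newton_metres_per_ft_lbf

print(f"1 hp = {horsepower_to_watts(1):.1f} W")  # 1 hp = 745.7 W
```

Rounding 745.7 W gives the familiar figure of 746 W.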

Watt’s engine, first operated in 1776, substantially improved upon the atmospheric water pump of the English ironmonger Thomas Newcomen, who had reworked an earlier crude design of Denis Papin, which in turn was based on the vacuum air pump of the Anglo-Irish chemist Robert Boyle. Papin, a young French Huguenot who had fled Paris for London and become an assistant to Boyle, was unable to finance a working model of his “double-acting” steam engine, despite having explained the theory in his 1695 publication Collection of Various Letters Concerning Some New Machines.3

Before Watt, various rudimentary machines pumped water and wound elevator cables in deep coal mines. All of them employed a boiler to make the steam that raised the piston, which atmospheric pressure then pushed back down into the vacuum created when the steam was condensed, providing a powerful motive force to ease man’s labor.4 But such “engines” were severely limited by engineering constraints, poor fuel efficiency, and the inability to convert up-and-down reciprocating motion into the rotary motion of a wheel.

Watt improved the condensation process by adding a separate condenser to cool the steam outside the cylinder, keeping the piston cylinder as hot as possible. Repeatedly heating, cooling, and reheating the cylinder had been hugely wasteful; the separate condenser avoided this, so more of the fuel’s energy went into useful work rather than into reheating metal. After tinkering and experimenting for years on demonstration models of Newcomen’s pump (which sprayed cold water directly into the cylinder) and Papin’s digester (a type of pressure cooker), the idea came to him while out walking on Glasgow Green one spring Sunday in 1765.5 Watt also regulated the engine’s speed with a “fly-ball” governor that automatically adjusted the steam inlet, an early self-regulating or negative feedback control system, and added a crankshaft gear system to translate up–down motion into rotary motion. Most importantly, his engine was much smaller and used one-quarter as much coal.

With his business partner Matthew Boulton, who acquired a two-thirds share of net profits while paying all expenses, Watt developed his steam engine full time, building an improved version to work in the copper and tin mines of Cornwall, where limited coal supplies made the Newcomen water pump expensive to operate. Adapted for smooth operation via gears and line shafts, their new steam engine was soon powering the cloth mills of Birmingham, initiating the industrial transformation of Britain. Controlled steam had created mechanical work, indispensable in the retooled textile factories sprouting up across England. The model Newcomen atmospheric steam engine that Watt improved on still resides at the University of Glasgow, where he worked as a mathematical instrument maker when he began experimenting on Newcomen’s cumbersome and inefficient water pump.

By the end of their partnership, Boulton and Watt had built almost 500 steam engines in their Soho Manufactory near Birmingham, the world’s first great machine-making plant, both to pump water and to turn the spindles of industry. As noted by historian Richard Rhodes, the general-purpose steam engine not only pumped water (“the miner’s great enemy”), but “came to blow smelting furnaces, turn cotton mills, grind grain, strike medals and coins, and free factories of the energetic and geographic constraints of animal or water power.”6 Following the earlier replacement of charcoal (partially burnt wood) with coke (baked or “purified” coal) to smelt iron, which “lay at the heart of the Industrial Revolution,”7 a truly modern age emerged after Watt’s invention, along with a vast increase in the demand for coal.

With subsequent improvements made by the Cornish engineer Richard Trevithick, the first locomotive engine was ready for testing. These improvements included an internal firebox in a cylindrical boiler; smaller-volume, high-pressure steam known as “strong steam,” which removed the need for an external condenser because the piston returned by its own force; exhaust blasts; and a double-acting, horizontal mount. Dubbed the “puffing devil,” Trevithick’s steam carriage was the world’s first self-propelled “automobile,” initially tested on Christmas Eve in 1801 on the country roads of Cornwall. Alas, his curious contraption was too large and crude, and it sputtered unevenly. In the autumn of 1803, he demonstrated an improved version on the streets of London that fared much better despite the bumpy cobblestone ride. Called “Trevithick’s Dragon,” his three-wheeled “horseless carriage” managed 6 miles per hour, although no investors were interested in further developing his unique steam-powered road vehicle.8

It didn’t take long, however, for the steam engine to replace horse-drawn transport on purpose-built “iron roads” and on canals and rivers more suited to safe and smooth travel, which would soon come to epitomize the might of the Industrial Revolution. The expiration in 1800 of Watt’s original 1769 patent9 helped stimulate innovation, and the first successful demonstration of a steam “locomotive” on rails took place on February 21, 1804, as part of a wager between two Welsh ironmasters. Trevithick’s “Tram Waggon” logged almost 20 miles on a return trip from Penydarren to the canal wharf in Abercynon, north of Cardiff in south Wales. Fully loaded with 10 tons of iron and 70 men in 5 wagons, the locomotive reached almost 5 mph, triumphantly besting horse power and showing that flanged iron wheels wouldn’t slip on iron rails, although the rails themselves were damaged.10 With improved engineering, there would be enough power to propel the mightiest of trains and ships as the changes came fast and furious, beginning a century-long makeover from animal and human to machine power.

Across the Atlantic in the United States, Robert Fulton made the steamship a viable commercial venture, its engine power transmitted by iconic vertical paddle wheels, after modifying the important though fruitless work of three unheralded American inventors: Oliver Evans (whose Orukter Amphibolos dredger was the first steam-powered land and water vehicle, albeit hardly functional) and James Rumsey and John Fitch (who both tried to run motorized steamships upstream with little success). Having cut his business teeth on the windy and tidal waters of the Hudson River, navigable as far as Albany, Fulton acquired the exclusive rights in 1811 to run a river boat up the Mississippi River. His New Orleans then made its maiden voyage downriver from Pittsburgh to its namesake city in 82 days, interrupted throughout by fits of odd winter weather and a major earthquake.11

Having established steam and continental travel in the psyche of a young American nation, the steamboat greatly fueled westward expansion and the need for immigrant workers. Riverboats designed by Henry M. Shreve, after whom Shreveport is named, refashioned the low-pressure, reciprocating steam engine into a more powerful, high-pressure, motive steam engine. His Enterprise made the first ever return journey along the Mississippi in 1815, a model that would become standard for generations,12 while two years later his Washington went as far as Louisville in a record 25 days.

The American west would never be the same, steam power opening up new lands for commerce and habitation. In 1817, only 17 steamboats operated on western rivers; by 1860 there were 735.13 Prior to the success of the railroads from the 1840s on, steamboat travel accounted for 60% of all US steam power.14 As noted by Thomas Crump in The Age of Steam, “large-scale settlement of the Mississippi river system – unknown before the nineteenth century – was part and parcel of steamboat history.”15 (For the most part, because of continental geography, boat traffic in the USA is north–south while railways go east–west.)

It would be in the smaller, coal-rich areas of northern England, however, where steam was initially exploited for travel on land, the first steam-powered locomotive rail route operating between the Teesside towns of Stockton and Darlington. Its locomotive was designed by George Stephenson, a self-educated Tyneside mining engineer, who improved engine efficiency via a multiple-fire-tube boiler that increased the amount of water heated to steam. The line was primarily employed to haul coal to a nearby navigable waterway, be it river, canal, or sea. Stephenson also used wrought iron rails, stronger and lighter than brittle cast iron, to support the heavier loads, implemented a standard track gauge of 4 feet 8½ inches,16 and introduced the blast pipe, which vented exhaust steam via the chimney to increase the draft.17

After numerous tests and an 1826 Act of Parliament to secure funds, the first official passenger train service opened on September 15, 1830, bringing excited travelers from Liverpool to Manchester, including the leading dignitaries of the day, such as the prime minister, the Duke of Wellington, and the home secretary, Robert Peel. The 30-mile line passed through a mile-long tunnel, across a viaduct, and over the 4-mile-long Chat Moss peat bog west of Manchester. Stephenson himself drove his Northumbrian locomotive, and the service was meant to cover the journey at 14 miles per hour, although a fatal accident on the day disrupted the festivities.18

Steam-powered trains soon carried travelers from across Britain to new destinations, such as day trips to the shore and other “railway tourism” spots, although most had never been further from home than a day’s return journey by foot or horse. Transporting a curious Victorian public here and there in a rapidly shrinking world, improved locomotion loosened the confines of the past. As the boundaries of travel expanded as never before, markets around the globe flourished with increased transportation speed and distance.

In 1838, the SS (Steam Ship) Sirius made the first transatlantic crossing faster than sail, from Cork to New York, ushering in the global village and standardized schedules that no longer relied on capricious winds (in an early example of “range anxiety,” the first steamships were too small to carry enough coal for an ocean crossing). High-pressure compound engines, twin screw propellers,19 tin alloy bearings, and steel cladding followed, much of the new technology tested for battle or in battle.20 By 1900, a voyage from Liverpool to New York took less than 2 weeks instead of 12 as immigrants arrived in record numbers, peaking between 1890 and 1914 when 200,000 made the crossing per year.21 Far fewer travelers crossed in the opposite direction, so the return voyages were filled instead by shipping back consumer goods, including, for the first time, coveted American perishables.

The race was on to exploit the many industrial applications of steam power as coal-powered factories and mills manufactured goods faster and cheaper – textiles, ironmongery, pottery, foodstuffs – spreading from the 1840s across Europe and beyond. By the year of the great Crystal Palace Exhibition in 1851 in Hyde Park, London – the world’s first international fair (a.k.a. The Great Exhibition of the Works of Industry of all Nations) that brought hundreds of thousands of tourists from all over the UK to the capital for the first time by rail – Victorian Britain ruled the world, producing two-thirds of its coal and half its iron.22

Railways in the United States also expanded rapidly, in part because of the 1849 California gold rush, as tens of thousands of fortune seekers went west. Government grants offered $16,000 per mile to lay tracks on level ground and $48,000 per mile in mountain passes.23 By 1860 there were 30,000 miles of track, three times that of the UK, greatly increasing farm shipments from west to east.24 More track opened the frontier to migrant populations as new territory was added to the federal fold, increasingly so after the Civil War. While the steamboat opened up the American west, the railroad cemented its credentials.

On May 10, 1869, the Union Pacific met the Central Pacific at Promontory Summit in Utah, just north of Great Salt Lake, triumphantly uniting west and east and cutting a 30-day journey by stagecoach from New York to San Francisco to just 7 days by train “for those willing to endure the constant jouncing, vagaries of weather, and dangers of travelling through hostile territory,”25 beginning at least in principle the nascent creed of Manifest Destiny over the North American continent. As if to anoint the American industrial era, when the trains met, the eastbound Jupiter engine burned wood and the westbound Locomotive No. 119 coal.26

Signaling the importance of rail travel to the birth of a nation and modern identity, British Columbia joined a newly confederated Canada in 1871, amid fears of further continental growth by its rapidly expanding neighbor to the south, in part because of a promised rail connection to the east. The “last spike” was hammered in at Craigellachie, BC, on November 7, 1885, completing what was then the world’s longest railroad at almost 5,000 km.

Born of fire and steam, the world’s first technological revolution advanced at a prodigious pace as the world outgrew its horse-, water-, and wind-powered limits. Engines improved in efficiency and power, from the 2.9-ton axle load of Stephenson’s 1830 Northumbrian to the 46,000 hp of the White Star Line’s Titanic 80 years on. What we call the Industrial Revolution was in fact an energy revolution, replacing the inconsistent labor of men and beasts of burden with the organized and predictable workings of steam-powered machines.

In his biography of Andrew Carnegie, one of the most successful industrialists to master the financial power of a rapidly changing mechanized world via his “vertically integrated” Pittsburgh steel mills, Harold Livesay noted that “steam boilers replaced windmills, waterwheels, and men as power sources for manufacturing” and that the new machinery “could be run almost continuously on coal, which in England was abundant and accessible in all weathers.”27 As transportation networks grew, the need for more coal increased, as did the need for more steel that required an enormous amount of coking coal to manufacture. Soon, a modern, coal- and steel-powered United States would rule the world.

If not for government assistance, however, the revolution would have been severely hampered. Subsidies made possible the fortunes of early industrialists such as Wedgwood and Thomas Telford in Britain and Carnegie and Cornelius Vanderbilt in the United States, while railway construction across the world would have been impossible without secure government funding, much of it abused by those at the helm:

Governments assumed much of the financial risk involved in building the American railway network. States made loans to railway companies, bought equipment for them and guaranteed their bonds. The federal government gave them free grants of land. However, promoters and construction companies, often controlled by American or British bankers, retained the profits from building and operating the railways. Thus the railways, too, played a part in the transformation of the industrial and corporation pattern that was sharply changing the class structure of the United States.28

To be sure, governments enlisted and encouraged development, although many early railway builders ensured routes passed through territories they themselves had purchased prior to public listing, which were then resold at great profit. Some even moved the proposed routes after dumping the soon-to-be-worthless land. Money was the main by-product of the Industrial Revolution in a nascent political world, systematically supporting a new owner class that demanded control of a vast, newly mechanized infrastructure and a pliant workforce. Harnessing steam power was the product of many inquisitive minds seeking to make man’s labor easier, although never before had the masters of business owned so much.

Of course, building infrastructure takes time and funds. The first half of the rail networks that now serve Britain, Europe, and the eastern US took four decades to construct, from 1830 to 1870, by which time the most critical operational problems had been solved.29 But despite legal challenges, labor shortages, safety concerns (fatal accidents were a regular occurrence), and the politics of the day, the Industrial Revolution prevailed because of those who demanded change. By the sweat and ingenuity of many, fire and water were converted into motive and industrial energy.

1.2 The Energy Content of Fuel: Wood, Peat, Coal, Biomass, etc.

As improved lifting and propulsion devices were developed, using what Watt called “fire engines,” mechanical power came to be understood in terms of the work done by a horse in a given amount of time. The heat generated in the process, either as hot vapor or by friction, however, was still as mysterious as fire. It was thought of either as a self-repellent fluid called “caloric” that flowed like blood from a hot object to a cold one or as a weightless gas that filled the interstices of matter. The word caloric comes from calor, Latin for heat; it is distinct from phlogiston (Greek for “combustible”), the substance once thought to be released when things burned. What heat actually was, however, was still unknown.

In the seventeenth century, Robert Boyle had investigated the properties of air and gases, formulating one of the three gas laws that relate the volume, pressure, and temperature of a vapor or gas (Boyle’s law holds that, at constant temperature, the pressure of a gas is inversely proportional to its volume), important concepts that explain the behavior of heated and expanding chambers of steam.30 But not until the middle of the nineteenth century did a workable understanding of heat emerge, essential to improving the efficiency of moving steam in a working engine. An early challenge came in 1798, when the American-born autodidact and physicist Benjamin Thompson published the paper “An Experimental Enquiry Concerning the Source of the Heat which is Excited by Friction,” which disputed the idea of a caloric fluid and instead described heat as a form of motion.
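Boyle’s law says that, for a fixed amount of gas held at constant temperature, pressure times volume stays constant (p₁V₁ = p₂V₂). A minimal Python sketch follows; it assumes ideal-gas behavior, which real steam close to its condensation point does not strictly obey, and the function name is illustrative.

```python
def boyle_final_pressure(p1: float, v1: float, v2: float) -> float:
    """Pressure after an isothermal change of volume, from p1 * v1 = p2 * v2.

    Any consistent units work (e.g., kPa and litres); assumes an ideal gas
    at constant temperature.
    """
    if v1 <= 0 or v2 <= 0:
        raise ValueError("volumes must be positive")
    return p1 * v1 / v2

# Squeezing 2 litres of air at 1 atm (101.325 kPa) into 1 litre doubles the pressure.
print(boyle_final_pressure(101.325, 2.0, 1.0))  # 202.65
```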

As a British loyalist during the American Revolution, Thompson moved to England and then to Bavaria, where he continued his experiments on heat, studying the connection between friction and the boring of cannons, a process he observed could boil water. As Count Rumford (a title bestowed on Thompson by the Elector of Bavaria for designing the grounds of the vast English Garden in Munich and reorganizing the Bavarian army), he went on to help pioneer a new field of science called thermodynamics, that is, motion from heat, which could be used to power machines and eventually generate electricity.

Building on Thompson’s work, James Prescott Joule, who came from a long line of English brewers and was well versed in the scientific method, devised various experiments showing how mechanical energy was converted to heat, through which heat came to be understood as a transfer of energy from one object to another. Joule’s work led to the formulation of the conservation of energy (a.k.a. the first law of thermodynamics), a cornerstone of modern science and engineering. Essentially, Joule let a falling weight turn a paddle enclosed in a liquid and noted the temperature increase of the liquid. Knowing the mechanical work done by the falling weight, Joule was able to measure the equivalence of heat and mechanical energy (which he initially called vis viva), from which one could calculate the efficiency of a heat engine. Notably for the time, Joule measured temperature to within 1/200th of a degree Fahrenheit, essential to the precision of his results.31
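The energy balance behind the paddle-wheel experiment can be written as n·m·g·h = M·c·ΔT: the work done by a weight of mass m falling a height h, repeated n times, reappears as heat that raises the temperature of a mass M of water with specific heat c. The Python sketch below uses hypothetical numbers (a 10 kg weight, a 2 m drop, 20 drops, 1 kg of water), not Joule’s actual apparatus, and assumes no heat escapes.

```python
G = 9.81          # gravitational acceleration, m/s^2
C_WATER = 4186.0  # approximate specific heat of water, J/(kg*K)

def temperature_rise_k(weight_kg: float, drop_m: float, n_drops: int, water_kg: float) -> float:
    """Temperature rise (K) if all the falling weight's work becomes heat in the water."""
    work_j = n_drops * weight_kg * G * drop_m   # mechanical work, W = n*m*g*h
    return work_j / (water_kg * C_WATER)        # heat balance, W = M*c*dT

# Hypothetical values for illustration only.
dt_k = temperature_rise_k(weight_kg=10, drop_m=2, n_drops=20, water_kg=1)
dt_f = dt_k * 9 / 5                             # a 1 K step equals 1.8 degrees Fahrenheit
print(f"{dt_k:.3f} K = {dt_f:.3f} °F")          # 0.937 K = 1.687 °F
```

A rise of under 2 °F from this much work suggests why resolving temperature to 1/200th of a degree mattered.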

Joule also established the relationship between the current flowing in a wire and the heat dissipated (the heat produced per second equals the square of the current times the wire’s resistance), now known as Joule’s law, still important today for efficiency and power loss in electrical transmission. To understand Joule heating, feel the warm connector cable as a phone charges, the result of electrons bumping into copper atoms.32 In his honor, the joule (J) was made the International System of Units (SI) unit of energy and work.
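Joule’s law gives the heat dissipated in a conductor as P = I²R, so for a fixed amount of power delivered, raising the transmission voltage lowers the current and cuts the losses by the square of that factor. The Python sketch below uses a hypothetical 1 MW load and a 10 Ω line, and treats the line as a simple DC circuit (I = P/V), ignoring the AC and three-phase details of real grids.

```python
def joule_heating_w(current_a: float, resistance_ohm: float) -> float:
    """Heat dissipated in a conductor, P = I**2 * R (Joule's law)."""
    return current_a ** 2 * resistance_ohm

def line_loss_w(delivered_w: float, voltage_v: float, resistance_ohm: float) -> float:
    """Simplified DC estimate of line loss when sending delivered_w at voltage_v."""
    current_a = delivered_w / voltage_v   # I = P / V
    return joule_heating_w(current_a, resistance_ohm)

# Hypothetical: 1 MW sent through a line with 10 ohms of resistance.
for kilovolts in (10, 100):
    loss = line_loss_w(1e6, kilovolts * 1e3, 10.0)
    print(f"{kilovolts:>3} kV: {loss / 1e3:.0f} kW lost as heat")
```

Raising the voltage tenfold, from 10 kV to 100 kV, cuts the loss a hundredfold, from 100 kW to 1 kW.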

As we have seen, the watt is the SI unit of power, equivalent to doing 1 joule of work in 1 second (1 W = 1 J/s). The watt and joule both come in metric multiples, for example, the kilowatt (kW), megawatt (MW), gigawatt (GW), and terawatt (TW). The first commercial electricity-generating power plant in 1882 produced about 600,000 watts, or 600 kW (via Edison’s six coal-fired Pearl Street dynamos in Lower Manhattan, as we shall see). The largest power plant in the UK, the Drax power station in North Yorkshire, produces about 4,000 MW (4,000 million watts, or 4 GW), almost 7% of the UK electrical supply. New photovoltaic capacity is now being installed worldwide at over 100 billion watts per year (100 GW/year), while the power from the Sun reaching the Earth is about 170,000 TW at the top of the atmosphere (more than 10,000 times current global electric generating capacity). As for the joule, the total primary energy produced annually across the globe is about 600 × 10¹⁸ joules, that is, 600 billion billion, 600 quintillion, or 600 exajoules (EJ).
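Because power is energy per unit time, an annual energy total can be turned into an average power by dividing by the number of seconds in a year. A minimal Python sketch, assuming a 365.25-day year and taking the figure of about 600 EJ of primary energy per year:

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600   # about 3.16e7 s

def average_power_tw(energy_ej_per_year: float) -> float:
    """Average power in terawatts for an annual energy total in exajoules."""
    joules = energy_ej_per_year * 1e18   # 1 EJ = 10**18 J
    watts = joules / SECONDS_PER_YEAR    # P = E / t
    return watts / 1e12                  # 1 TW = 10**12 W

print(f"{average_power_tw(600):.1f} TW")  # 19.0 TW
```

In other words, 600 EJ a year corresponds to a continuous draw of roughly 19 TW.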

To measure the energy generated or consumed over a period of time, you will often see another unit, the kilowatt-hour (kWh), which is more common than the joule when discussing electrical energy (1 kWh is 1,000 watts sustained for one hour, or 3.6 million joules). The British thermal unit (BTU) is also used, as are other modern measures, for example, tonnes of oil equivalent (TOE) and even gasoline gallon equivalent (GGE), but we don’t want to get bogged down in conversions between different units, so let’s stick to joules (J) for energy and watts (W) for power wherever possible. The important thing to remember is that power (in watts) is the rate of using energy or of doing work (in joules per second): a horse lifting three average men twice as fast exerts twice the power.
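A kilowatt-hour is simply 1,000 J/s sustained for 3,600 s, and power is energy divided by time. The short Python sketch below, with illustrative helper names of our own, converts between the two energy units and shows that doing the same work in half the time doubles the power.

```python
J_PER_KWH = 1_000 * 3_600   # 1 kW (1,000 J/s) sustained for one hour (3,600 s)

def kwh_to_joules(kwh: float) -> float:
    return kwh * J_PER_KWH

def joules_to_kwh(joules: float) -> float:
    return joules / J_PER_KWH

def power_w(energy_j: float, seconds: float) -> float:
    """Power is the rate of using energy or doing work: P = E / t."""
    return energy_j / seconds

print(kwh_to_joules(1))         # 3600000, i.e., 3.6 MJ
# The same lift done twice as fast needs twice the power.
lift_j = 745.7                  # roughly one horsepower's work in one second
print(power_w(lift_j, 1.0), power_w(lift_j, 0.5))  # 745.7 1491.4
```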

We also have to consider the quantity of fuel burned to create the heat that boils water to make steam and generate electric power, which also comes in legacy units such as cords, tons, or barrels. To compare the energy released from different materials, Table 1.1 lists the standard theoretical energy content in megajoules per kilogram (MJ/kg). A kilogram of wood produces less energy when burned than a kilogram of coal, which has less energy than a kilogram of oil. Indeed, the best coal has about three times the energy content of green wood (32.6/10.9) and was thus better suited to exploit the motive force of steam-powered machines, especially black coal with over twice the energy of brown coal (32.6/14.7). We see also that dry wood has about 40% more energy than green wood (15.5/10.9), which has more moisture and thus generates more smoke when burned, while gasoline (45.8 MJ/kg) gives more bang per kilogram than coal, peat, or wood, and is especially valued as a liquid.

Table 1.1 Energy content of selected fuels

| Fuel | Energy content (MJ/kg) |
|---|---|
| Wood (green/air dry) | 10.9/15.5 |
| Peat | 14.6 |
| Coal (lignite, a.k.a. “brown”) | 14.7–19.3 |
| Coal (sub-bituminous) | 19.3–24.4 |
| Coal (bituminous, a.k.a. “black”) | 24.4–32.6 |
| Coal (anthracite) | >32.6 |
| Biomass (ethanol) | 29.7 |
| Biomass (biodiesel) | 45.3 |
| Crude oil | 41.9 |
| Gasoline | 45.8 |
| Methane | 55.5 |
| Hydrogen gas (H₂) | 142 |
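The ratios quoted in the text come straight from Table 1.1. A minimal Python sketch, taking the single values and the range endpoints that the text itself compares, and assuming all of the theoretical energy content is recovered; the helper names are our own.

```python
# Energy content in MJ/kg from Table 1.1; ranges reduced to the endpoints the text compares.
MJ_PER_KG = {
    "green wood": 10.9,
    "dry wood": 15.5,
    "brown coal": 14.7,   # low end of the lignite range
    "black coal": 32.6,   # high end of the bituminous range
    "gasoline": 45.8,
}

def ratio(fuel_a: str, fuel_b: str) -> float:
    """How many times more energy per kilogram fuel_a holds than fuel_b."""
    return MJ_PER_KG[fuel_a] / MJ_PER_KG[fuel_b]

def kg_per_gigajoule(fuel: str) -> float:
    """Mass of fuel whose theoretical energy content is 1 GJ (1,000 MJ)."""
    return 1_000 / MJ_PER_KG[fuel]

print(f"black/brown coal: {ratio('black coal', 'brown coal'):.2f}")        # 2.22
print(f"dry/green wood: {ratio('dry wood', 'green wood'):.2f}")            # 1.42
print(f"black coal/green wood: {ratio('black coal', 'green wood'):.2f}")   # 2.99
for fuel in MJ_PER_KG:
    print(f"{fuel}: {kg_per_gigajoule(fuel):.1f} kg per GJ")
```

On these figures, roughly 92 kg of green wood, but only about 31 kg of black coal or 22 kg of gasoline, holds a gigajoule.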

Many fuels also come in several varieties, so their energy content depends on the type. Hardwoods (e.g., maple and oak) are denser than softwoods (e.g., pine and spruce), so a hardwood log contains more stored energy and will burn longer with greater heat in a fireplace (hardwoods are better during colder outdoor temperatures). The energy content also depends on density, moisture content, and age. Furthermore, how we burn the wood (in a simple living-room fireplace, wood-burning stove, cast-iron insert, or as pressed pellets in a modern biomass power station) affects the efficiency, although the energy content is the same no matter how the fuel is burnt. For example, a traditional open fireplace is roughly one-eighth as efficient as a modern, clean-burning stove or fan-assisted, cast-iron insert, because much of the energy is lost up the chimney due to poor air circulation (oxygen is essential for combustion). Similar constraints apply to all fuels, whether wood, peat, coal, biomass, oil, gasoline, methane, or hydrogen.
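The difference between energy content and appliance efficiency can be made concrete: delivered heat is mass × energy content × efficiency. In the Python sketch below, the 10% and 80% efficiencies are illustrative assumptions chosen only to reproduce the eightfold comparison in the text, not measured values.

```python
DRY_WOOD_MJ_PER_KG = 15.5   # Table 1.1, air-dry wood

def delivered_heat_mj(fuel_kg: float, mj_per_kg: float, efficiency: float) -> float:
    """Useful heat reaching the room; the rest is lost, mostly up the chimney."""
    if not 0.0 <= efficiency <= 1.0:
        raise ValueError("efficiency must be between 0 and 1")
    return fuel_kg * mj_per_kg * efficiency

# Same 10 kg of dry wood, same energy content, two hypothetical appliances.
open_fire = delivered_heat_mj(10, DRY_WOOD_MJ_PER_KG, 0.10)
stove = delivered_heat_mj(10, DRY_WOOD_MJ_PER_KG, 0.80)
print(f"open fire: {open_fire:.1f} MJ, stove: {stove:.1f} MJ")  # open fire: 15.5 MJ, stove: 124.0 MJ
```

The fuel holds 155 MJ either way; only the share that reaches the room changes.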

Two of the fuels listed in Table 1.1, wood and peat, have been around forever, and some now consider them renewable-energy sources along with a very modern biomass. As they are remade as presumed clean-energy miracles and labeled “renewable” in a modern industrial makeover, we will see if they are as green as claimed. As usual, the devil is in the detail.

***

Wood was our first major energy source, requiring little technology to provide usable heat for cooking or warmth in a dwelling. As a sustainable combustion fuel, however, wood requires large tracts of land and has a relatively low energy content, so supply falls short unless forests are managed in a controlled way, for example, as practiced for millennia in Scandinavia. With the advent of coal in land-poor Britain, wood’s share of energy supply fell from one-third in the time of Elizabeth I in the sixteenth century to 0.1% by the time of Victoria three centuries later.33 By 1820, the amount of woodland needed to equal coal consumption in the UK was 10 times the national forest cover, highlighting the limitations of wood as a viable fuel source.34 The transition also freed wood for other essential industries rather than for inefficient burning.

The modern practice of sustainability in forest management can be traced to the early eighteenth century in Germany, where replanting homogeneous species, mostly for timber, followed clear cutting, which also led to a ban on collecting deadwood and acorns in previously common lands. The German economist and philosopher Karl Marx would begin to form his paradigm-changing ideas about class in the Rhineland in the 1840s from “the privatization of forests and exclusion of communal uses,”35 setting off the battle over public and private control of natural resources and the ongoing debate about unregulated capitalism.

Today, a cooperative and sustainable “tree culture” exists in many northern countries, especially in Scandinavia, where roughly 350 kg of wood per person per year is burned.36 Wood has also become part of a controversial new energy business, converted to pellets and burned in refitted power plants as a renewable fuel replacement for coal (which we’ll look at later).

Formed mostly in the last 10,000 years, peat is partially decomposed plant material found on the surface layer of mires and bogs (cool, acidic, oxygen-depleted wetlands), where temperatures are high enough for plant growth but not for microbial breakdown. Peat is also found in regions as diverse as the sub-arctic and tropical rainforests. According to the World Energy Council, 3% of the world’s land surface is peat, 85% of it in Russia, Canada, the USA, and Indonesia, although less than 0.1% is used as energy.37 The three largest consumers of peat in Europe are Finland (59%), Ireland (29%), and Sweden (11%), together accounting for 99% of total consumption.

Peat (or turf, as it’s known in Ireland) has been burnt for centuries in Irish fireplaces, mostly cut from plentiful midland bogs. Burned in three national power stations, peat contributes 8.5% of Irish electrical needs, and is also processed into milled briquettes by the state-run turf harvester Bord na Móna for household fireplaces, readily purchased in local corner shops and petrol stations across the country. For years, Ireland’s three peat-fired power plants provided roughly 300 MW of electrical power to a national grid of about 3.2 GW, although in 2018 Bord na Móna announced it would close all 62 “active bogs” and discontinue burning peat by 2025, citing increased global warming and the need to decarbonize energy (small-scale cutting is still allowed for local domestic use via so-called “turbary rights”).38 Elsewhere, peat is still burned for warmth and electric power. Globally, 125 peat-fired power stations (some co-fired with biomass) provide electricity to almost two million people.

As greenhouse gases increase, however, peatlands have become strategically important as carbon sinks. The World Energy Council estimates that the additional atmospheric carbon dioxide from the deforestation, drainage, degradation, and conversion of peatlands to palm-oil and paper-pulp tree plantations, particularly in the farmed peatlands of Southeast Asia, is equivalent to 30% of global carbon dioxide emissions from fossil fuels. Peat contains roughly one-third of all soil carbon, twice that stored in forests (~2,000 billion tons of CO2), while about 20% of the world’s peatlands have already been cleared, drained, or used for fuel, releasing centuries of stored carbon into the atmosphere.39

Holding one-third of the earth’s tropical peatlands, Indonesia has been particularly degraded by decades of land-use change for palm-oil plantations, while icy northern bogs are becoming increasingly vulnerable as more permafrost melts from increased global warming (the peat resides on top of and within the permafrost, which holds about 80% of all stored peatland carbon). As Russia expands petroleum exploration in the north, more Arctic tundra is also being destroyed, a double whammy for the environment via increased GHG emissions and depleted carbon stores. Although trees and plants can be grown and turned into biomass for more efficient burning (as seen in Table 1.1), bogs are not as resilient, and care is needed to avoid depleting a diminishing resource. Today, the rate of extraction is greater than the rate of growth, prompting peat to be reclassified as a “non-renewable fossil fuel.” In countries with a historic connection to peat, its conservation now matters more than its value as an energy source.40

The transformation from old to new continues as we grapple with increasing energy needs. Whether we use steam to move a piston in a Watt “fire engine” or a giant turbine in a modern power station that burns peat, coal, biomass, oil, or natural gas, the efficiency still depends on the fuel used to boil the water to make the steam. The demand for fuel with better energy content changed the global economy, firstly after coal replaced wood (at least twice as efficient), which we look at now, and again as oil replaced coal (roughly twice as efficient again), which we’ll look at in Chapter 2. A transition simplifies or improves the efficiency of old ways (see Table 1.1), turning intellect into industry with increased capital – when both transpire, change becomes unstoppable.

1.3 The Original Black Gold: Coal

Found in wide seams up to 3 km below the ground, coal is a “fossil fuel” created about 300 million years ago during the Carboniferous Period as heat and pressure compressed the peat that had formed from pools of decaying plant matter, storing carbon throughout the Earth’s crust. Aristotle called it “the rock that burns.” As far back as the thirteenth century, sea coals that had washed up on the shores and beaches of northeast England and Scotland were collected for cooking and heating in small home fires as well as for export by ship to London and abroad.

By the seventeenth century, coal had been found throughout England, burned to provide heat at home and in small manufacturing applications. Supplies were plentiful, initially dug out by “miners” lowered into bell pits by rope, before deeper mines were excavated by carving horizontally through the ground. Coal seams as wide as 10 feet were worked with hammers, picks, and shovels, the lengthening tunnels supported by wooden props. The broken coal bits hewn from the coal face were removed by basket or pushed and pulled in wagons along a flat “gallery” or slightly inclined “drift” before being mechanically hoisted from above through vertical shafts. Animals and railcars eventually replaced men to remove the coal from within the sunless pits. To help evacuate the deepening, water-laden mines, mechanical pumps were employed, inefficiently fueled with coal from the very same mines they serviced.

As we have seen, manufacturing industries evolved to incorporate newly invented coal-powered steam engines: spindles turned imported American cotton into cloth, large mills greatly increased grinding capacities of wheat between two millstones to make bread flour as well as other processed foodstuffs, and transportation was transformed across Britain and beyond. The Boulton & Watt Soho Foundry made cast iron and wrought iron parts to manufacture bigger and better engines. Glass, concrete, paper, and steel plants all prospered thanks to highly mechanized, coal-fueled, high-temperature manufacturing and steam-driven technology. By the beginning of the nineteenth century, “town gas” formed from the gasification of coal also lit the lamps of many cities, in particular London.

A few critics wondered about the social costs and safety of mining and burning coal. In his poem “Jerusalem,” the English Romantic artist and poet William Blake asked “And was Jerusalem builded here / Among these dark Satanic Mills?” The Victorian writer Charles Dickens questioned the inhumane treatment in large mechanized factories, where more than 80% of the work was done by children, many of whom suffered from tuberculosis, while child labor was still rampant in the mines. Adults did most of the extraction, but “boys as young as six to eight years were employed for lighter tasks” and “some of the heaviest work was done by women and teenage girls” carrying coal up steep ladders in “baskets on their back fastened with straps to the forehead.”41 Pit ponies eventually replaced women and children as beasts of burden, some permanently stabled underground and equally mistreated.

The 1819 Cotton Mills and Factories Act in Britain barred children under 9 from working in cotton mills and limited those aged 9–16 to 12 hours per day, but in reality did little to aid their horrible plight. In Hard Times, an account of life in an 1840s Lancashire mill town, Dickens wrote about the abuse at the hands of seemingly well-intended Christian minders, “So many hundred Hands in this Mill; so many hundred horse Steam Power. It is known, to the force of a single pound weight, what the engine will do; but, not all the calculators of the National Debt can tell me the capacity for good or evil.”42 The 1842 Mines and Collieries Act finally outlawed women and children under 10 from working in the mines, a ban often sidestepped by uncaring owners.

By the mid-nineteenth century, 500 gigantic chimneys spewed out toxins over the streets of Manchester, the center of early British coal-powered manufacturing. Known as “the chimney of the world,” Manchester had the highest mortality rate for respiratory diseases and rickets in the UK, countless dead trees from acid rain, and an ashen daylight reduced almost by half.43 As seen in J. M. W. Turner’s ephemeral paintings, depicting the soot, smoke, and grime of nineteenth-century living, black was the dominant color of a growing industrialization, expelled from an increasing number of steam-powered trains and towering factory smokestacks. In a letter home to his brother Theo in 1879, Vincent van Gogh noted the grim reality of the Borinage coal regions in southern Belgium, where he came to work as a lay preacher before becoming a painter: “It’s a sombre place, and at first sight everything around it has something dismal and deathly about it. The workers there are usually people, emaciated and pale owing to fever, who look exhausted and haggard, weather-beaten and prematurely old, the women generally sallow and withered.”44

Health problems, low life expectancy, and regular accidents were the reality of pit life as miners worked in oxygen-depleted, dust-filled, and dangerous mines, subject to pneumoconiosis (black lung or miner’s asthma), nystagmus (dancing eyes), photophobia, black spit, roof cave-ins, runaway cars, cage crashes, and explosions of coal dust and methane gas (firedamp). Before battery-operated filament lamps, the Davy lamp enclosed an open flame in an iron gauze mesh, which absorbed the flame’s heat so that the surrounding mine gas was less likely to ignite, although economic output mattered more than safety as the world reveled in a vast newfound wealth. A 1914 report calculated that “a miner was severely injured every two hours, and one killed every six hours.”45

Mining is not for the faint-hearted or the weak-bodied. Even seasoned miners cricked their backs, crawling doubled-over through four-foot-high, dimly lit gallery tunnels to and from the coal face, traveling a mile or more each way every day (time for which they were not paid). In The Road to Wigan Pier, the English novelist and journalist George Orwell likened the mines with their roaring machines and blackened air to hell because of the “heat, noise, confusion, darkness, foul air, and above all, unbearably cramped space.”46 In the book, published in 1937, Orwell described the miners as “blackened to the eyes, with their throats full of coal dust, driving their shovels forward with arms and belly muscles of steel” as they labored in “hideous” slag-heaps and lived amid “frightful” landscapes.47 Writing at the outset of his depressingly frank report on the invisible drudgery of working-class miners in Yorkshire and Lancashire, Orwell nonetheless considered the coal miner “second in importance only to the man who ploughs the soil,” while the whole of modern civilization was “founded on coal.”48

Almost a century after Dickens had written about the horrid state of working-class life in the factories of industrial England, the smell and occupational status of a person were still the unwritten measure of a man. Bleak industrial towns remained full of “warped lives” and “ailing children,” while the ugliness of industrialism was evident everywhere amid mass unemployment at its worst. Coal may have been the essential ingredient of the Industrial Revolution, fortuitously located where many displaced laborers lived, yet it did little to improve the lives of those who had no choice but to take whatever work they could get.

At the height of the British coal industry in the 1920s, 1.2 million workers were employed,49 each producing on average about one ton of coal per day, one-third of which was exported.50 With the conversion from labor-intensive, coal-fired steam trains to more easily maintained diesel-electric trains, however, 400,000 jobs were lost.51 By the 1950s, only 165,000 men worked in 300 pits in the Northumberland and Durham coalfields, dubbed the Great Northern Coalfield.

North Sea gas in the 1980s also replaced coal gas in home heating and cooking, reducing the demand for coal even further. Composed mostly of methane, “natural” gas is so called because it isn’t manufactured like synthetic coal gas, but comes straight from the ground. Unable to compete with oil and gas finds from the North Sea, coal was phased out in the UK from 1984 after a prolonged battle between politicians and workers, despite the National Coal Board claiming 400 years’ worth still left to mine. By 1991 only a few deep mines remained in operation with just 1,000 employees.52 The docks of Newcastle, which had shipped 10,000 tons a day from the northeast to London in the mid-nineteenth century until being upended by the railways, had become a net importer of coal by 2004.53 The last deep UK mine – the Kellingley Colliery in North Yorkshire – shut in 2015.

Just as coal and steam had taken away jobs from low-output, rural handicraft workers two centuries earlier, oil and diesel made coal workers redundant, their labor-intensive output no longer required. The transition would engulf Britain, pitting the power of technology and capital against long-standing working communities and emboldening the prime minister of the day, Margaret Thatcher, and the trade-union leader Arthur Scargill, who battled over ongoing pit closures. Coal mining was dying in the very place it had begun, as Ewan MacColl sang in “Come all ye Gallant Colliers” (sung to the tune of Morrissey and the Russian Sailor):

By contrast, coal remained a thriving industry in the United States – even into the twenty-first century, the lust for abundant, cheap energy unabated. Prior to 1812, the USA imported most of its coal from Britain, but after the War of 1812 deposits were mined in the plentiful seams of the Appalachian Mountains, comprising parts of six eastern states (Pennsylvania, Maryland, Ohio, Kentucky, Virginia, and West Virginia). After the Civil War, coal production grew rapidly – almost 10-fold in 30 years – especially needed for steam engines on trains as coal replaced wood toward the end of the nineteenth century. The United States grew rapidly, building more energy-intensive infrastructure to secure its vast, ever-expanding borders.

As in Britain, coal brought many health and safety problems, as well as conflicts between workers and owners. From 1877 to 1879, labor unrest in Pennsylvania led to the hanging of 20 supposed saboteurs known as the Molly Maguires, who sought better conditions and basic workers’ rights. In 1920, after attempting to organize as part of the United Mine Workers of America (UMWA) union in the company coal town of Matewan, West Virginia, miners were repeatedly manhandled by private guards, leading to a shoot-out on the main street that left 10 dead. With an abundant and inexpensive resource dug out of remote mountainous regions, lawlessness prevailed; even the wives of injured miners were forced into prostitution to cover their husbands’ down time.54 Disputes continued well into the twentieth century as profits were valued above health, safety, and security.

In his novel King Coal, Upton Sinclair described the wretched conditions faced by miners and their families during the 1910s in his telling account of working for the fictional General Fuel Company. Drudgery, grime, headaches, accidents, company debt in a company town, and uncertain futures were the harsh reality of the early coal industry in a “don’t ask” world of corporate paternalism. The protagonist, Hal Warner, learns first-hand about the grim existence of coal workers, many of them recent immigrants who had come with their families in search of a better life, yet were destined instead to a life ever on the periphery:

There was a part of the camp called “shanty-town,” where, amid miniature mountains of slag, some of the lowest of the newly arrived foreigners had been permitted to build themselves shacks out of old boards, tin, and sheets of tar-paper. These homes were beneath the dignity of chicken-houses, yet in some of them a dozen people were crowded, men and women sleeping on old rags and blankets on a cinder floor. Here the babies swarmed like maggots.55

Country singer Loretta Lynn sang about her experiences growing up in poverty in a Kentucky mining town as a coal miner’s daughter “in a cabin on a hill in Butcher Holler,” where her daddy shoveled coal “to make a poor man’s dollar.” The drudgery was endless and “everything would start all over come break of morn.”

Before basic safety measures were introduced in the 1930s, 2,000 miners died on average each year in the United States. Methane gas monitors, ventilation systems, double shafts, roof bolts, safety glasses, steel-toed boots, battery-operated lamps, and emergency breathing packs helped improve conditions, while continuous mining replaced hazardous blasting, employing remote-controlled cutting heads to break up the coalface along with hydraulic roof supports. Various innovations transformed the coal industry, many invented by American engineer Joseph Francis Joy, such as mechanized loading devices, shuttle cars, and long-wall machines that did the work of a thousand men, turning hewers and haulers into machine operators.56

Outgassing, the release of gases such as methane that occur naturally in coal seams, is particularly dangerous and gave rise to one of the strangest detection methods ever: a caged canary that dies in the presence of methane or carbon monoxide, signaling impending peril to nearby miners. Today, coal mining is highly mechanized and much safer – modern mining operations include stabilizing old tunnels with coal paste and 5G monitoring to increase safety – although accidents are still very much a part of a miner’s life despite modern measures. Sadly, thousands of workers die each year in the mining business, and every country has its own horrid story of lost miners and devastated families. Not all accidents can be explained as bad luck.

In 2018, a methane gas explosion in a black-coal mine in the Czech Republic killed 13 miners working about 1 km down, where the level of methane was almost five times the allowed level, while in 2021, 52 Russian miners died after a methane explosion ripped through a Siberian coal mine that had previously been certified safe without inspection. The remains of 29 workers killed in a 2010 explosion at the Pike River mine in New Zealand went undiscovered for over a decade until improved bore-hole drilling technology revealed the location of some of the men in 2021.57 In 2022, 41 miners died after a methane explosion in a coastal Black Sea mine, the worst mining disaster in Turkey since 2014, when 301 miners lost their lives. Risk warnings about outgassing below 300 m had been given prior to the accident.

No amount of protection can make mining entirely safe, but open-cast or surface mining avoids many of the problems of underground mining and is now more prevalent. Coal accounts for about one-fifth of electrical power generation in the United States, and two-thirds of American coal is surface-mined in open pits, where the “overburden” is first cleared to access the giant seams, some as wide as 80 feet. Giant draglines and tipper shovels load the coal into 400-ton trucks for removal. Nonetheless, a 2023 accident at an open-pit mine in northern China killed five miners instantly when a mine wall collapsed, while 47 more were buried under tons of rubble and presumed dead.

Mechanization, lower operating costs, and better management in the USA have helped to counter much of the conflict that engulfed the UK, where mine owners and miners battled for decades over low wages, working conditions, and long hours amid mounting inefficiency. Mediated by beholden governments, industry control in the UK regularly changed hands, epitomized by the 1926 General Strike, the 1938 Coal Act, and eventual nationalization of Britain’s premier industry eight years later. Prior to World War I, Britain had astoundingly supplied two-thirds of the world’s coal, but unregulated profits, lowered living standards, and blind loyalty to a national cause were unsustainable.

Today, China generates more than half of the world’s coal-fired power and India and the United States about 10% each; together they burn roughly three-quarters of an 8.5 billion ton annual production (Table 1.2). Coal is available in vast quantities, with deposits in over 75 countries and enough reserves to last 100 years at current rates, generating electricity from over 6,000 units worldwide. Despite what may appear to be the dying days of coal, the original Industrial Revolution fuel still accounts for almost 40% of globally produced electric power. What’s more, even if the percentage of coal power were to decrease from 40% to 25% in the next two decades as has been called for to limit global warming to under 1.5°C, more coal will still be burnt if production increases as predicted by 2% per year.58 Coal is also burned to produce the high temperatures to smelt steel, make cement, and produce coal-to-liquid fuel. Any proposed “phase down” let alone “phase out” won’t happen easily or without a fight.

***

| # | Country | Number* | Power (GW) | % | CO2 (Gtons/year) | % |
|---|---|---|---|---|---|---|
| 1 | China | 1,118 | 1,074 | 52.0 | 4.7 | 49.7 |
| 2 | India | 285 | 233 | 11.3 | 1.0 | 11.1 |
| 3 | United States | 225 | 218 | 10.5 | 1.1 | 11.3 |
| 4 | Japan | 92 | 51 | 2.4 | 0.2 | 2.5 |
| 5 | South Africa | 19 | 44 | 2.1 | 0.2 | 2.2 |
| 6 | Indonesia | 87 | 41 | 2.0 | 0.2 | 2.0 |
| 7 | Russia | 71 | 40 | 1.9 | 0.2 | 2.3 |
| 8 | South Korea | 23 | 38 | 1.8 | 0.2 | 1.7 |
| 9 | Germany | 63 | 38 | 1.8 | 0.2 | 1.9 |
| 10 | Poland | 44 | 30 | 1.5 | 0.2 | 1.7 |
|  | Rest of world | 261 | 261 | 12.6 | 1.3 | 13.6 |
|  | Total | 2,439 | 2,067 | 100.0 | 9.4 | 100.0 |
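The capacity shares in Table 1.2 can be checked with a few lines of Python. This is a minimal sketch that uses only the capacity figures from the table (the names `CAPACITY_GW` and `share_percent` are illustrative); it shows that China, India, and the United States together hold close to three-quarters of the world’s coal-fired capacity, in line with the share given in the text.

```python
# Coal-fired capacity in GW, copied from Table 1.2.
CAPACITY_GW = {"China": 1074, "India": 233, "United States": 218}
WORLD_TOTAL_GW = 2067


def share_percent(gw: float, total: float) -> float:
    """Return gw as a percentage of total, rounded to one decimal place."""
    return round(100 * gw / total, 1)


# Each country's share of world coal-fired capacity.
shares = {country: share_percent(gw, WORLD_TOTAL_GW) for country, gw in CAPACITY_GW.items()}
top_three = round(sum(shares.values()), 1)

for country, pct in shares.items():
    print(f"{country}: {pct}%")
print(f"Top three combined: {top_three}%")
```

The rounded shares (52.0%, 11.3%, and 10.5%) match the table’s percentage column and sum to 73.8%.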

There are five coal types, generally ranked by age, based on the percentage of carbon and thus energy content or “calorific value.” From lowest to highest rank: peat, lignite (a.k.a. brown), sub-bituminous, bituminous (a.k.a. black), and anthracite. Younger coal has less carbon and more moisture, and is less efficient when burned (low calorific value). Peat is included as the youngest, least-efficient coal; lignite is high in moisture (up to 40%) and lower in energy (20 MJ/kg); whereas anthracite has less moisture (as low as 3%) and more energy (24 MJ/kg). The older “mature” coal is darker, harder, shinier, and produces more carbon dioxide when burned. (The ratio of carbon to hydrogen and oxygen atoms increases in higher-ranked coals.)
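Using the two calorific values given above, a short sketch shows how much more low-rank coal must be burned for the same heat. The figures are the text’s own; real values vary widely with moisture and ash content, and the helper `kg_for_energy` is purely illustrative.

```python
# Calorific values quoted in the text, in MJ per kg (real coals vary widely).
CALORIFIC_MJ_PER_KG = {"lignite": 20.0, "anthracite": 24.0}


def kg_for_energy(energy_mj: float, coal: str) -> float:
    """Mass of coal (kg) that releases energy_mj of heat when fully burned."""
    return energy_mj / CALORIFIC_MJ_PER_KG[coal]


ONE_GJ_IN_MJ = 1000.0  # 1 GJ = 1,000 MJ
for coal in CALORIFIC_MJ_PER_KG:
    print(f"{coal}: {kg_for_energy(ONE_GJ_IN_MJ, coal):.1f} kg per GJ of heat")
```

With these figures, 1 GJ of heat takes 50.0 kg of lignite but only about 41.7 kg of anthracite, so roughly 20% more lignite by mass must be burned for the same output.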

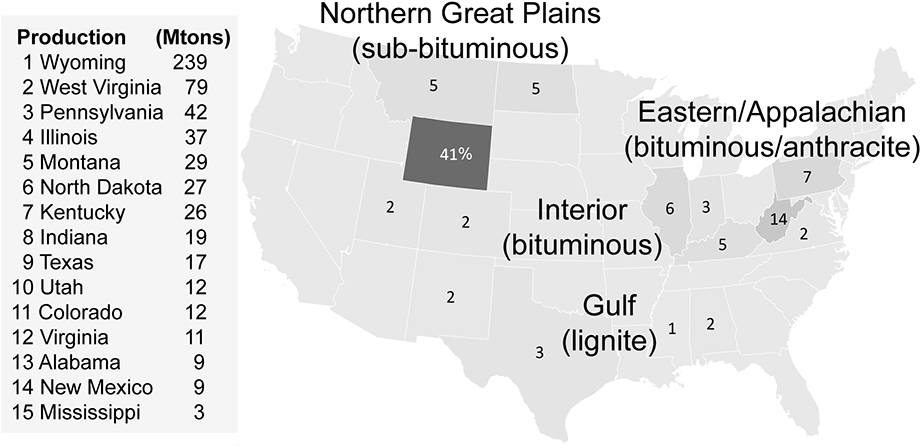

In the United States, the older, dirtier coal is typically found in the east in the anthracite-filled Appalachian regions along with bituminous coal. The relatively cleaner sub-bituminous coal is mostly found in the west, while the cleanest, lignite, is in the south. Wyoming produces over 200 million tons each year, more than 40% of American supplies, primarily in the North Antelope Rochelle and Black Thunder surface mines of the Powder River Basin, at about one-fifth the cost of east-coast anthracite (Figure 1.1). Mile-long trains transport the cheaper, easy-to-access coal to power plants as far away as New York. Underscoring its importance to the American economy, almost half of all US train freight by tonnage is coal.59

According to the US Energy Information Administration, the Appalachian Region – the historical heartland of American coal mining – still accounts for almost one-third of US production, mostly in West Virginia (14%), Pennsylvania (7%), Illinois (6%), and Kentucky (5%).60 Much of the coal is anthracite – older, dirtier, and hard-to-access. As in other coal regions around the world, long-standing communities and livelihoods depend on mining. Nevertheless, 40,000 jobs have been shed in the past decade as the American coal industry declines because of higher costs, worsening pollution, and cheaper, cleaner alternatives despite attempts by some to reinvent a past glory or claim a supposed “war on coal.”

Since its peak in 2007, US coal production has dropped almost 50%, while more coal plants were retired in the first two years of the Trump administration than in the first four years of his predecessor.61 Two long-standing coal plants on the Monongahela River south of Pittsburgh were shuttered on the same day in 2013 – the 370-MW Mitchell Power Station and 1.7-GW Hatfield’s Ferry Power Station – undercut by cheaper natural gas and renewables. Just as diesel and natural gas spelled the end of coal in the UK, igniting an intense worker–owner battle, cheap natural gas from fracking has undercut American coal, widening an already fierce political divide.

Of course, consumption in the USA is now dwarfed by China, which has remade its economy on the back of coal-fired power, helping to raise hundreds of millions from poverty. Having accelerated the building of power plants since liberalizing state controls in the late 1970s, China now burns more coal than the rest of the world combined (50.6%), providing more than two-thirds of its domestic energy needs, albeit with poorer emissions standards. Life expectancy in China has been reduced by 5 years because of coal pollution with over one million premature deaths per year compared to 650,000 in India and 25,000 in the USA.62

Unfortunately, coal has dramatically increased air pollution as seen in regular public-health alerts. Pollution levels in some Chinese cities are so unbearable that inhabitants routinely wear masks, don’t eat outdoors, and run air purifiers in their homes. Chinese journalist Chai Jing notes that smokestacks were once considered a sign of progress in a developing China, yet she now worries about the link between air pollution and cancer: “In the past 30 years, the death rate from lung cancer has increased by 465%.”63 Xiao Lijia, a Chinese electrical engineer who began his career at the second-largest coal plant in Heilongjiang Province northeast of Beijing, adds “It is still a great challenge to improve the surrounding environment.”64

While developing countries continue adding coal power (although at a lesser rate than in the past two decades), many developed countries are cutting back or even phasing out coal in the face of rising global temperatures and increased pollution. After failing to meet its 2015 Paris Agreement emissions targets, Germany – the world’s fourth-largest economy and sixth-largest consumer of coal – announced in 2019 that it would end coal-fired electrical power generation by 2038. Highly subsidized, deep “black-coal” mining in the Ruhr had already closed in 2018, but under Germany’s new plan 34 GW of coal power will be halved by 2030 before being completely shut down.

The affected regions will be offered €40 billion in aid, in particular the open-pit “brown-coal” mining communities of Lusatia near the Polish border. A member of both the overseeing commission and executive board of Germany’s second-largest trade union offered an ironic though pragmatic analysis: “It’s a compromise that hurts everyone. That’s always a good sign.”65 The plan includes converting Germany’s largest open-pit mine at Hambach, North Rhine-Westphalia, into a lake, the deepest artificially created space in the country, as well as “rehabilitating” other disused mining areas.

In a bid to tackle air pollution and desertification, China has been planting trees at a rate of more than a billion a year since 1978 as part of the Great Green Wall forestation project. In 2019, 60,000 soldiers were reassigned to plant even more, primarily in coal-heavy Hebei Province, the main source of pollution to neighboring Beijing, where pollution levels are 10 times safe limits. The plan is to increase woodland area by more than 300,000 km² (from 21.7% to 23%). The Chinese government has also pledged to reduce its carbon intensity and “peak” emissions by 2030 (and become overall carbon neutral or “net zero” by 2060), but to achieve any real reductions China must significantly limit coal consumption.

As more jobs in the renewable-energy sector supplant mining jobs, coal becomes less viable by the day. The International Renewable Energy Agency (IRENA) counted 11.5 million global renewable-energy jobs in 2019, including 3.8 million in solar, 2.5 million in liquid biofuels, 2 million in hydropower, and 1.2 million in wind power.66 California now has more clean-energy jobs than all coal-related jobs in the rest of the United States,67 while Wall Street continues to dump coal investments. In communities that have worked the mines for generations, King Coal is dying after almost two centuries of rule. And yet the damage continues wherever brown jobs and old thinking are championed over cleaner alternatives, while in the developing world coal still thrives. In 2021, coal-fired electricity generation actually increased, despite the recognition of global warming at that year’s annual COP meeting in Glasgow.

Coal has been discarded in the land of its birth because of poor economics and increased health problems, and is in decline in most developed countries around the world, yet without viable alternatives, countries will still generate power with “the rock that burns” no matter the obvious problems to health and increased global warming. Who would have thought we would be burning the black stuff three centuries after the start of the Industrial Revolution? Who would have thought we would ignore all the consequences?

1.4 Coal Combustion, Health, and the Forgotten Environment

Steam-powered technology has brought numerous changes to the way we live, especially a thirst for more, the rallying cry of the Industrial Revolution. The most onerous by-product, however, is pollution. In The Ascent of Man, author and philosopher Jacob Bronowski describes the reality quite simply: “We think of pollution as a modern blight, but it’s not. It’s another expression of the squalid indifference to health and decency that for centuries had made the plague a yearly visitation.”68

We no longer have an annual plague, but we do have increased occurrences of bronchitis, asthma, lung cancer, and other respiratory illnesses, all attributable in part to burning wood and coal. To be sure, we have reaped numerous benefits from the Industrial Revolution, but we also suffer unwanted side effects from burning carbon-containing fuels: pollution, smog, acid rain, increased cancer rates, and now a rising global temperature caused by a secondary absorption effect of the main combustion by-product, carbon dioxide (1 kg of coal produces about 2 kg of CO2).
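The “about 2 kg” figure follows from simple stoichiometry: in the reaction C + O2 → CO2, every 12 g of carbon burned becomes 44 g of carbon dioxide, because each carbon atom picks up two oxygen atoms from the air. A minimal sketch, assuming the carbon mass fractions shown (chosen only for illustration, not measured values), reproduces the figure; the function name `co2_per_kg_fuel` is hypothetical.

```python
# Standard molar masses in g/mol.
M_CARBON = 12.011
M_CO2 = 44.009


def co2_per_kg_fuel(carbon_fraction: float) -> float:
    """kg of CO2 released by fully burning 1 kg of fuel with the given carbon mass fraction.

    Each carbon atom (12.011 g/mol) combines with O2 to form one CO2 molecule (44.009 g/mol).
    """
    if not 0.0 <= carbon_fraction <= 1.0:
        raise ValueError("carbon_fraction must be between 0 and 1")
    return carbon_fraction * M_CO2 / M_CARBON


for fraction in (0.55, 0.65, 0.75):  # illustrative carbon contents only
    print(f"{fraction:.0%} carbon -> {co2_per_kg_fuel(fraction):.2f} kg CO2 per kg")
```

Coal that is roughly 55% carbon by mass gives about 2 kg of CO2 per kilogram burned; higher-carbon coals give more, up to about 3.66 kg for pure carbon.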

We didn’t use to care about the by-products, some of which contain valuable nutrients that can be ploughed back into the ground as fertilizer (for example, potassium-rich wood ash, or potash), but after almost two centuries of ramped-up industrial use, our energy waste products have become problematic. The main culprits are carbon dioxide (CO2) and methane (CH4), atmospheric gases that absorb infrared heat reradiated from a sun-heated earth, which enhances the natural greenhouse effect, turning the earth into an increasingly warming hothouse. Many other combustion by-products are also extremely harmful to the environment and our health.

Combustion is the process of burning fuel in the presence of oxygen, which produces energy and waste; for example, simple charcoal and ash from wood, coke and ash from coal, or a witches’ brew of by-products from various long-winded, hard-to-pronounce hydrocarbons.69 Any material can be burned given sufficient heat and oxygen, and thus the question with all fuels is: What can we burn that gives us enough energy to heat our homes, run our power plants, and fuel our cars, but doesn’t produce so much of the bad stuff?

Not all burning is the same: as more coal replaced wood in urban fireplaces, the waste became a particularly toxic combination of coal-creosote and sulfuric acid, causing numerous health issues. In London, smoke and river fog would combine in a dense “smog,” forever labeling the first great industrial capital with its infamous moniker “The Smoke” and permanently dividing the city into a wealthier, upwind west end and a poorer, downwind east end, based solely on proximity to the “aerial sewage.”

Fueled by numerous power plants, industrial sites, and household hearths that burned more coal along the Thames and elsewhere, the smog became unbearable after an especially bad cold spell during the winter of 1952. Visibility fell almost to zero throughout London as football games and greyhound races were cancelled (the dogs couldn’t see what they were chasing), people drowned in canals they hadn’t seen, and buildings and monuments rotted in a sulfurous bath, while one airplane pilot got lost taxiing to the terminal after landing, requiring a search party that promptly got lost too.70 “Fog cough” was rampant as more people perished in Greater London in a single month than “died on the entire country’s roads in the whole year.”71 After the so-called “Great Smog” of 1952 that killed almost 4,000 people in a few days72 – a level of death unseen since the Blitz – the horror was eventually addressed, prompting a switch from solid fuels such as coal to gaseous fuels: first coal-derived town gas, followed by imported propane, and finally “natural” gas (methane) after the 1965 North Sea finds.73

To counter the smog, the Clean Air Act of 1956 mandated “smoke-free” zones, where only smokeless fuel could be burned, although others were slow to heed the warnings. Not until 1989 did Dublin introduce similar restrictions, following an especially bad winter in 1981–82,74 when increased smog from burning coal in open grates caused an extra 33 deaths per 100,000 people.75 Following the ban, air pollution mortalities dropped by half.76 The move from coal-powered to diesel locomotives in many industrialized countries also reduced the rampant railway exhaust that had gone unchecked for over a century.

In poorer countries, however, it is harder to convince people to burn expensive, imported natural gas rather than cheap and readily available coal or give up their coal jobs without alternatives. In Ulan Bator, Mongolia, the world’s coldest capital, particulate matter levels have been measured at more than 100 times WHO limits because of inefficient coal burning, leading to higher rates of pneumonia, a 40% reduction in lung function for children, and a 270% spike in respiratory diseases.77

Even in Europe, which has vowed to end coal, countries such as Poland still heat their homes primarily with coal (87%). Talk of “phasing out” coal or switching to a “bridge” fuel such as natural gas seems cynical at best in the face of a shivering population. Even in the United States, one in 25 homes is still heated by wood, much of it in substandard stoves where particulate matter is four times that of coal, contributing to 40,000 early deaths per year.78 Warmth always comes at a hefty price when inefficient wood- or coal-burning is the source.

In December 2015, just as national delegates were convening in Paris for the COP21 climate change meetings, China raised its four-color warning system to the maximum “red alert” for the first time. The government recommended that “everyone should avoid all outdoor activity,” while the high-range Air Quality Index (AQI) almost doubled, alarming officials and posing a “serious risk of respiratory effects in the general population.”79 As Edward Wong noted, “Sales of masks and air purifiers soared, and parents kept their children indoors during mandatory school closings.”80

As noted in a 2019 WHO study, 8.7 million people across the globe die prematurely each year from bad air – even more than from smoking – while air pollution in Europe has lowered life expectancy by more than two years;81 there, all forms of pollution combined have been linked to one in eight deaths.82 The economic cost of an increasingly toxic environment has been estimated at almost $3 trillion per year from premature deaths, diminished health, and lost work.83

To describe the toxic blight in countries that have scrimped on safety, a new global lexicon has arisen, including “airpocalypse” and “PM2.5,” which denotes particulate matter (PM) 2.5 microns or less in diameter, small enough to travel deep into the respiratory tract and enter the bloodstream through the lungs. Another modern measure is the “social cost of carbon dioxide” (SCCO2), which a 2021 report pegged at between $51/tonne and $310/tonne, that is, annual costs ranging from 2 to 11 trillion dollars (the upper estimate includes future uncertainty and feedback loops84). Developing countries hoping to meet their growing energy needs by burning coal do so at a huge cost to public health and the climate.
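The dollar range for the social cost of carbon dioxide is, at heart, a multiplication: a per-tonne cost times the tonnes of CO2 emitted each year. The minimal sketch below assumes global emissions of roughly 36 billion tonnes of CO2 per year, a round illustrative figure that is not given in the text, so it only approximately reproduces the 2 to 11 trillion dollar span; the function name is hypothetical.

```python
# Illustrative assumption, NOT from the text: about 36 billion tonnes of CO2 emitted worldwide per year.
ASSUMED_ANNUAL_CO2_TONNES = 36e9


def annual_social_cost(scc_per_tonne: float,
                       emissions_tonnes: float = ASSUMED_ANNUAL_CO2_TONNES) -> float:
    """Annual damage in dollars: social cost per tonne times tonnes emitted per year."""
    return scc_per_tonne * emissions_tonnes


# Low and high per-tonne estimates from the 2021 report cited in the text.
for scc in (51, 310):
    cost = annual_social_cost(scc)
    print(f"${scc}/tonne -> about ${cost / 1e12:.1f} trillion per year")
```

Under this assumption the low and high cases come to about $1.8 trillion and $11.2 trillion per year; a different emissions figure shifts both ends proportionally.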

***

Despite the danger, some remedies are available. In early conventional coal-fired plants, “lump” coal was burned on a grate to create steam; today it is milled to a fine powder (<200 microns), which increases its surface area for faster, more efficient burning, and is stoked automatically. In such a pulverized coal combustion (PCC) system, powdered coal is blown into a combustion chamber to burn at a higher temperature, combusting about 25 tons per minute.85 Although coal combustion is more efficient than in the past, two-thirds of the energy is nonetheless lost as heat unless that waste heat is captured, for example, for district heating in a combined heat and power (CHP) plant.
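The energy bookkeeping behind “two-thirds lost as heat” and the benefit of CHP can be sketched in a few lines. This is a simplified illustration, assuming an electrical efficiency of one-third (implied by the text) and a hypothetical CHP plant that recovers half of its waste heat; the 50% recovery figure and all names are assumptions, not data from the source.

```python
from dataclasses import dataclass


@dataclass
class EnergySplit:
    electricity: float
    recovered_heat: float
    lost_heat: float

    @property
    def useful_share(self) -> float:
        """Fraction of the fuel energy that ends up as electricity or usable heat."""
        total = self.electricity + self.recovered_heat + self.lost_heat
        return (self.electricity + self.recovered_heat) / total


def split_fuel_energy(fuel_energy: float, electrical_efficiency: float,
                      heat_recovery: float = 0.0) -> EnergySplit:
    """Divide fuel energy into electricity, recovered waste heat, and heat lost.

    heat_recovery is the hypothetical fraction of waste heat captured,
    e.g. for district heating in a CHP plant (0.0 means a power-only plant).
    """
    electricity = fuel_energy * electrical_efficiency
    waste_heat = fuel_energy - electricity
    recovered = waste_heat * heat_recovery
    return EnergySplit(electricity, recovered, waste_heat - recovered)


power_only = split_fuel_energy(300.0, 1 / 3)               # two-thirds lost as heat
chp = split_fuel_energy(300.0, 1 / 3, heat_recovery=0.5)   # assumed 50% heat recovery
print(f"power-only: {power_only.useful_share:.0%} of fuel energy used")
print(f"CHP:        {chp.useful_share:.0%} of fuel energy used")
```

With these assumptions the power-only plant uses about a third of its fuel energy, while the CHP case uses about two-thirds, because half the heat that would otherwise be wasted now does useful work.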

Washing coal before combustion helps reduce toxic emissions from dirtier, lower-grade coal, while precipitators can be installed to filter fly ash after burning.86 New carbon storage methods are also being sought to dispose of emissions, such as burying high-pressure carbon dioxide thousands of feet underground in disused oil and gas reservoirs. In a typical coal plant, carbon dioxide accounts for roughly 15% of the exhaust gas, but capturing it is prohibitively expensive and does more to extend outdated systems than to improve bad air (as we will see later).

But despite the improvements and PR about “clean coal” or “carbon capture,” the pollutants are still the same, their amount depending on the elemental makeup of the coal type. Coal is not pure carbon, but is mostly carbon (70–95%) along with hydrogen (2–6%) and oxygen (2–20%), plus various levels of sulfur, nitrogen, mercury, other metals, and moisture, as well as an inorganic, incombustible mineral part left behind as ash that is rich in rare-earth elements (REEs), especially in lignite. The emissions include numerous pollutants: particulate matter, carbon monoxide, sulfur dioxide, nitrogen oxides, mercury, lead, cadmium, arsenic, and various hydrocarbons, some of which are carcinogenic.

According to the Physicians for Social Responsibility, coal contributes to four of the five top causes of US deaths: heart disease, cancer, stroke, and chronic lower respiratory diseases. Ill effects include asthma, lung disease, lung cancer, arterial occlusion, infarct formation, cardiac arrhythmias, congestive heart failure, stroke, and diminished intellectual capacity, while “between 317,000 and 631,000 children are born in the US each year with blood mercury levels high enough to reduce IQ scores and cause lifelong loss of intelligence.”87

The higher the sulfur content, the more sulfur dioxide is produced. Perhaps the nastiest coal-burning by-product, sulfur dioxide creates acid rain (the sulfur reacts with oxygen during burning and is absorbed by water vapor in the atmosphere). Sulfur content varies by coal rank, and bituminous coal has as much as nine times more sulfur than sub-bituminous coal, creating more health problems in the eastern United States.88 Flue-gas desulfurization (FGD) systems, better known as “scrubbers,” have been available in the USA since 1971 to reduce sulfur emissions and acid rain, but are not required in every state. According to the US Energy Information Administration, plants fitted with FGD equipment produced more than half of all electricity generated from coal yet only a quarter of the sulfur dioxide emissions.89

Since Richard Nixon signed the 1970 Clean Air Act, the US government has mandated emission controls for both carbon and sulfur emissions in coal-fired power plants, including the use of scrubbers, although emissions are still higher than anywhere else in the world besides China. In 2015, the Obama administration introduced the Clean Power Plan under the Clean Air Act, allowing states to set their own means to cut power-sector emissions 32% below 2005 levels by 2030, realistically achievable only by shuttering coal-powered plants, especially older ones fueled by the dirtier, darker coal found in the Illinois Basin, Central Appalachia, and Northern Appalachia.

Efficient coal plants make a difference. According to the World Energy Council, “A one percentage point improvement in the efficiency of a conventional pulverized coal combustion plant results in a 2–3% reduction in CO2 emissions. Highly efficient modern supercritical and ultra-supercritical coal plants emit almost 40% less CO2 than subcritical plants.”90 Unfortunately, although almost half of all new coal-fired power plants are fitted with high-efficiency, low-emission (HELE) coal technologies, about three-quarters of operating plants still do not avail of the latest emission-reduction technologies.
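The World Energy Council’s 2–3% figure follows from a simple proportionality. In this sketch we assume that CO2 per unit of electricity is proportional to the coal burned, and that coal burned per kWh scales as 1/efficiency, so raising efficiency from η₁ to η₂ cuts emissions per kWh by 1 − η₁/η₂. The starting efficiencies are illustrative values chosen for the example, not figures from the text.

```python
def co2_cut_per_kwh(eff_before: float, eff_after: float) -> float:
    """Fractional drop in CO2 per kWh when plant efficiency rises.

    Simplifying assumption: CO2 is proportional to coal burned, and coal
    burned per kWh is proportional to 1 / efficiency, so emissions per kWh
    scale by eff_before / eff_after.
    """
    return 1 - eff_before / eff_after


# Illustrative efficiencies (assumed, not from the text), each raised by one percentage point.
for eff in (0.33, 0.38, 0.45):
    cut = co2_cut_per_kwh(eff, eff + 0.01)
    print(f"{eff:.0%} -> {eff + 0.01:.0%}: {cut:.1%} less CO2 per kWh")
```

For these starting points the cut comes to roughly 2.9%, 2.6%, and 2.2%, within the quoted range; the lower a plant’s efficiency, the more a single percentage point is worth.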

Lobbyists and politicians continue to mount stiff opposition, routinely downplaying the dangers, especially where coal accounts for much of the economy. Long-time Republican Senate leader Mitch McConnell from Kentucky stated that “carbon-emission regulations are creating havoc in my state and other states,”91 while Democratic senator Joe Manchin from coal-rich West Virginia has made millions from coal and derailed legislation curbing its use. It isn’t easy to turn off the coal switch in a country so reliant on a readily accessible energy source when vested interests dictate policy, and indeed regulations were rolled back in 2017 under Donald Trump. As always, jobs are at stake, but the emissions stakes are higher, and the whole coal-use cycle must be costed – increased temperatures, air pollution, and expensive cleanups in sensitive water-table areas affected by mining run-off.

As the world wrestles with how to transition from dirty to clean energy, including how to pay the bills, the ongoing problem of coal was highlighted at the 2018 COP24 summit in southwest Poland, the largest coal-mining region in Europe, in a country that draws 80% of its electric power from coal. The meeting took place in the Silesian capital of Katowice, where smog hangs in the air. Christiana Figueres, the former UN climate executive secretary and one of the architects of the Paris Agreement, acknowledged that Katowice was on the frontline of industrial change amid the increased perils of global warming, “a microcosm of everything that’s happening in the world.”92

Alas, even developed countries are finding coal hard to quit amid the lofty rhetoric about “net zero” and a “carbon-neutral” future, which is now part of a regular discourse on transitioning to cleaner energy. At the same time as the 2021 COP26 meeting in Glasgow, the UK was considering reopening coalmine operations on the Cumbrian coast to supply coking coal for steel, citing critical local jobs, despite cleaner alternatives such as Sweden’s pioneering green steel (which has 5% of the carbon footprint of conventional steel) or recycling via electric arc furnaces.93 Contrary to the then UK prime minister’s call at the meeting to “consign coal to history,” and to renewed ideals about the right to clean air, a new license was also granted at the Aberpergwm Colliery in south Wales to mine 40 million tonnes of high-grade anthracite, most of which will be used to make steel at the nearby Port Talbot steelworks.94 The war in Ukraine also pushed other European countries to reconsider shuttering coal-powered plants as Russian natural gas supplies were curtailed by Western sanctions and Russian retaliation. One can hardly expect the developing world to ramp down coal use when the rich, developed countries won’t abide by their own rules.

Despite the dangers, turning off coal is no simple fix. Indeed, coal consumption has continued to rise, by 25% over the last decade in Asia, which accounts for 77% of all coal use, two-thirds of that in China.95 Coal was once thought of as “the fuel of the future” in Asia, yet more than 75% of planned coal plants have been at least shelved since the 2015 COP21 in Paris.96 Nonetheless, without effective global policy agreements, coal will continue to provide more power in poorer countries. In late 2021, Poland incurred a fine of €500,000 per day rather than close the open-cast lignite mine at Turów, Poland’s prime minister noting that closure “would deprive millions of Polish families of electricity.”97 Soon after, however, China announced it would no longer build new coal plants abroad, although more domestic plants would continue to be built.

Converting from brown to green is a huge challenge when so much of our generated power depends on the rock that burns. New investment strategies for stagnant regions and economic support for displaced workers – a.k.a. a “just transition” – are essential if we want to manage the social dangers and political fallout, both in the richer developed West and in the poorer, coal-reliant developing world. The goal is to maintain the supply of readily available, “dispatchable” power without continuing to pollute or add to global warming.

1.5 The Economic By-Products of Coal: Good, Bad, Ugly, and Indifferent

Coal and steam have brought many advantages to the world. Global trade expanded as raw materials such as cotton were shipped in greater quantities from Charleston, South Carolina, to Liverpool, ferried by canal barge and train to Manchester, and turned into finished goods in the burgeoning textile factories of “Cottonopolis,” before being shipped around the world at great profit.

But more than just locomotion and industry changed with steam technology. In 1866, the first lasting transatlantic telegraph cable was laid between Valentia Island, Kerry, and Heart’s Content, Newfoundland, transmitting news in minutes instead of weeks between the stock floors and investment houses of New York and London.98 In 1873, the French writer Jules Verne wrote about circumnavigating the globe in 80 days as the world shrank more each year, forever changing the concept of distance and time, and accelerating the transformation to the global village we know today.

If we take the start of the Industrial Revolution99 as 1712, the year Thomas Newcomen installed his atmospheric engine to pump water at the Conygree Coalworks near Birmingham, and the end as the sinking of the Titanic in 1912, we can try to gauge the vast changes over a 200-year period from old to new. Some mark 1776 or 1830 as the start, while others think the revolution is still rolling on today, but these dates or thereabouts are only convenient benchmarks. No single date can be ascribed, and the extent of industrialization has varied widely across the globe.

From 1700 to 1900, the population of England increased almost six-fold, from 5.8 to 32.5 million, whereas Europe’s population more than trebled from 86.3 to 284 million. In the same period, the population of the world more than doubled from almost 750 million to 1.65 billion (passing one billion around 1800). Towns and cities flourished with industrialization despite the worries of a few concerned souls such as the English cleric and political economist Thomas Malthus, who thought that a linearly increasing food supply couldn’t keep pace with a geometrically increasing, repeatedly doubling population. But double it did, again and again, to more than eight billion today, although the overall rate of increase has slowed of late.
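The growth multiples above, and the contrast at the heart of Malthus’s worry, can be checked with a short calculation. This sketch takes the 1700 and 1900 figures from the text (rounding the world’s “almost 750 million” to 750), computes each overall growth factor, and converts it to the steady annual rate that would produce it, a simplification that treats two centuries as one smooth compounding period. It then lists Malthus’s arithmetic (food) and geometric (population) sequences side by side. The function names are illustrative.

```python
from typing import Iterator, Tuple


def growth(start: float, end: float, years: int) -> Tuple[float, float]:
    """Return (overall growth factor, equivalent compound annual growth rate)."""
    factor = end / start
    return factor, factor ** (1 / years) - 1


def malthus(generations: int) -> Iterator[Tuple[int, int]]:
    """Malthus's contrast: food grows by a fixed step, population doubles each generation."""
    food, population = 1, 1
    for _ in range(generations + 1):
        yield food, population
        food += 1          # arithmetic (linear) growth
        population *= 2    # geometric growth


# 1700 and 1900 populations from the text, in millions.
for region, start, end in [("England", 5.8, 32.5), ("Europe", 86.3, 284.0), ("World", 750.0, 1650.0)]:
    factor, rate = growth(start, end, 200)
    print(f"{region}: x{factor:.1f} over 200 years, about {rate:.2%} per year")

print(list(malthus(4)))  # [(1, 1), (2, 2), (3, 4), (4, 8), (5, 16)]
```

The factors come out at about 5.6, 3.3, and 2.2, matching “almost six-fold,” “more than trebled,” and “more than doubled.” Even England’s growth works out to well under 1% a year, yet compounded over two centuries it multiplied the population more than five times, which is exactly the dynamic Malthus feared food could not match.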

Urbanization also increased as more people moved from the country to seek work and to avail of better goods and services in the newly expanding towns and cities. Between 1717 and 1911, Manchester grew from a pastoral town of 10,000 to more than 2.3 million.100 In 1800, 20% of Britons lived in towns of at least 10,000, while by 1912, over half did. Urbanization ultimately led to suburbanization and city sprawl as trains went underground, making modern commuting possible as well as speeding the change from dirty, coal-powered steam to clean, electric locomotion. By 1950, 79% of the population of the United Kingdom lived in cities, a share expected to rise to over 92% by 2030.101

Urbanization is the main sociological change of the Industrial Revolution. Indeed, everyone has neighbors now. By comparison, in China, more than a quarter of a billion people are expected to move from the country to the city by 2030, dwarfing in a decade Europe’s century-long industrial transformation.

The number of farms and people working the land dropped accordingly as newly mechanized tools reduced the need for farm labor and an urbanized manufacturing economy replaced a centuries-old, rural, agrarian way of life, a shift Jacob Bronowski described as being “paid in coin not kind.”102 From 1700 to 1913, Britain’s GDP increased more than 20-fold (from £9 billion to £195 billion),103 establishing Britain as the third great capital-accumulating empire in history, after Spain and the Netherlands, and the largest prior to the rise of the United States.

By 1847, at the peak of the second rail mania, 4% of male UK laborers were employed in rail construction.104 In 1877, there were 322 million train passengers, increasing to 797 million by 1890, including on the luxury 2,000-km Orient Express, which by 1883 left daily from Paris to Constantinople, epitomizing the newly minted power and wealth of the industrial age.105 In the midst of all the progress, old jobs were supplanted, and boom–bust cycles became the norm as demand periodically lagged supply and vice versa.

Industrialization created specialization and the need for organized economic planning, about which Adam Smith wrote in detail in The Wealth of Nations, his seminal treatise on the causes and consequences of a rapidly industrializing world. In the midst of the Industrial Revolution, workers were displaced and opposition to the loss of jobs intensified, jobs that for some had been a way of life for generations.