Introduction

The human brain, with its approximately 100 billion interconnected neurons, enables our higher cognitive abilities without being a physiological outlier from other primate species (Herculano-Houzel, 2012). This incredibly complex organ can also host debilitating clinical disorders, including seizures, depression and anxiety, Parkinson’s disease, and many others. Unlocking the secrets of the human brain is one of the largest scientific challenges ever undertaken. Advances in neuroscience research have enabled us to further our understanding of the brain and develop technologies to read and write brain activity, with implications for understanding behavior and decision-making.

In the past decade, the United States and the People’s Republic of China have begun large neuroscience research projects along with several other international actors, including Canada, Korea, Japan, Australia, and the European Union (EU) (International Brain Initiative, n.d.). These brain initiatives have ambitious goals that are only now possible with advances in genetic tools and imaging techniques (National Institutes of Health, n.d.c). The U.S. BRAIN (Brain Research through Advancing Innovative Neurotechnologies) Initiative has a stated goal of “accelerating the development and application of innovative technologies … to produce a revolutionary new dynamic picture of the brain that … shows how individual cells and complex neural circuits interact in both time and space” (National Institutes of Health, n.d.b). Additionally, the BRAIN Initiative was highlighted as a major component of the United States’ innovation strategy for breakthrough technologies (Office of Science and Technology Policy, 2015). The China Brain Project’s framework focuses on the ability to develop technologies both for diagnosis and treatment of brain disorders and for mimicking human intelligence and connecting humans and machines (Poo et al., 2016).

While the initial public focus of these brain projects has been clinical technologies to cure disease and restore lost faculties, such research is likely to enable augmentation and use by healthy people in both the commercial and military sectors. The U.S. BRAIN Initiative and the China Brain Project emphasize the importance of neuroscience research to treat and prevent brain disorders in aging populations, but they also highlight additional goals for accelerating basic research in the United States and applied research in human-machine teaming in China (National Institutes of Health, n.d.b; Poo et al., 2016). The dual-use technologies made possible by all seven brain projects are likely to have profound implications for society, public health, and national security. From a national security perspective, it would be valuable to be able to predict the dissemination of neurotechnologies to both the commercial and military sectors. This research presents the first analytical framework that attempts to do so.

Like past scientific and technological breakthroughs, cognitive sciences research is purported to have an impact on future security policies. Since the cognitive sciences focus on humans, they are intricately tied to the study of social processes, including the realms of politics and security. International scientific bodies, including the U.S. National Academy of Sciences and the United Kingdom’s Royal Society, have also engaged in discussions on the field’s policy relevance (National Research Council, 2008, 2009; Royal Society, 2012). Experts involved in developing NATO’s New Strategic Concept released in 2010 noted that “less predictable is the possibility that research breakthroughs will transform the technological battlefield,” and that “allies and partners should be alert for potentially disruptive developments in such dynamic areas as information and communications technology, cognitive and biological sciences, robotics, and nanotechnology” (NATO, 2010, p. 15; emphasis added). Interest in neurosciences is not limited to any specific nation, and the potential for new technology to affect conflict and cooperation has been recognized.

Security-related research in neuroscience and operationalization of scientific discoveries into commercial or deployable neurotechnologies present concerns for policymakers and scholars. Not only do the effects and use of military applications of cognitive science and neuroscience research require their attention, but also the increasing understanding of cognitive processes continues to provide new perspectives on how we understand policy and politics.

Consideration of the challenges to international security and policymaking as a result of scientific and technological advancements is not novel. Anticipating and responding to potential emerging threats to security and understanding disruptive technologies are intrinsic to the security dilemma. The relationship between technology and conflict, treated as a determinant of global power, has a substantial and deep history across the social sciences (Turner, 1943). The classical realist thinker Hans Morgenthau (1972) critiqued the role of modern technology, technocrats, and the resultant “technological revolutions” in international politics and asserted that they required a rethinking of traditional international relations theory and government structures. Bridging sociology and political science, William Ogburn (1949) was one of the first academics to focus on “inventions,” or innovation, in the context of origins, diffusion, and effects, and to systematically study the social effects of innovation on international politics. More recent work by scholars such as Susan Strange (1996) has explored how technology and technologically enabled systems have affected concepts of geographically demarcated state sovereignty and autonomy.

The importance and security implications of how well a state is able to translate basic research into applied and commercial technology form another area of research in security studies that is particularly relevant to this work, which explores how concepts from basic neuroscience can be developed and applied to innovations in a security context (Dombrowski & Gholz, 2006; Skolnikoff, 1993). One of the most notable examples in the study of technology’s impact on state interactions is the invention of nuclear weapons and the reconfiguration of strategic logic to deterrence (Gaddis, 1989; Herz, 1959; Meyer, 1984; Sagan & Waltz, 2012). The mutual assured destruction logic underlying nuclear deterrence constrains a state’s choice of strategy. Within security studies, there is a rich literature theorizing and empirically exploring the intersection of science, technology, and understanding the outcomes of armed conflict (Arquilla, 2002; Biddle, 2004; Chin, 2019; Kosal, 2019; O’Hanlon, 2009; Rosen, 1991; Skolnikoff, 1993; Solingen, 1994).

For strategists and scholars of the revolution in military affairs, which focuses on emerging technologies and posits that military technological transformations and the accompanying organizational and doctrinal adaptations can lead to new forms of warfare, the nexus between technology and military affairs bears directly on the propensity for conflict and outcomes of war, as well as the efficacy of security cooperation and coercive statecraft (Bernstein & Libicki, 1998; Blank, 1984; Cohen, 1996; Herspring, 1987; Krepinevich, 1994; Mahnken, 2010; McKitrick et al., 1995; Nofi, 2006). These discussions underpin the concept of network-centric warfare, operations that link combatants and military platforms to each other in order to facilitate information sharing as a result of the progress in information technologies (Arquilla, 2002).

In the late twentieth century, one predominant model for understanding the conditions under which conflict and cooperation are likely and how technology can contribute to increasing or decreasing instability in the international system was the offense-defense model (Jervis, 1978). This theory asserts that more complete information on the intentions of rivals allows both sides to manage a spiraling arms race. Awareness of aggression allows for coalition building and diplomatic action in order to preemptively quell belligerence. An advantage of defensive technology over offensive technology shifts the attacker’s cost-benefit calculation against aggression and, in the words of Clausewitz (2013), “tame[s] the elementary impetuosity of War.” Offensive ascendancy, conversely, creates a sense of urgency for states to develop greater offensive capabilities and seek out alliances, further increasing tensions. Emerging technologies, such as nanotechnologically enabled meta-materials, biotechnology, and neurotechnology, may problematize offense-defense theory by challenging the distinction between offensive and defensive weapons (Kosal & Stayton, 2019). This work does not seek to resolve that issue but furthers the scholarly debate about how emerging technologies affect international security.

Another area of scholarly work has considered and problematized the way emerging technologies may challenge existing laws, including the law of armed conflict, international environmental law, and arms control treaties, and the need to govern the introduction, implementation, and use of emerging technologies as a means or method of warfare (Leins, 2021; Nasu & McLaughlin, 2014).

This research analyzes the commercial and military adoption, by the United States and China, of one emerging technology that has been identified as having security significance: brain-computer interfaces (BCIs), a nascent neurotechnology. These two nations are the focus because they are among the largest spenders on brain projects, and they are increasingly seen as peer economic and military competitors, with particular recent emphasis on technological competitiveness between the two (Dobbins et al., 2018; Ferchen, 2020; Lewis, 2018; O’Rourke, 2020; Rasser, 2020; Wray, 2020). The 2018 U.S. National Defense Strategy highlighted “long-term, strategic competition” with China as a top priority in the context of great power competition (Campbell & Ratner, 2018; Colby & Mitchell, 2020; Jones, 2020; Tellis, 2020; Wu, 2020). This competition will naturally include vying for “technological advantage,” especially with emerging technologies like those enabled by the brain projects, to avoid technological surprise (U.S. Department of Defense, 2018). Future work may consider other nations.

Adoption of truly emerging technologies, like neurotechnologies, in both the commercial and military sectors is likely to have important implications for the strategic competition between nations, making them important case studies for predicting neurotechnology adoption likelihood. In economics, the advantages and disadvantages of being a “first mover” or a “fast follower” in new markets have been discussed (Kerin et al., 1992; Wunker, 2012). Most of these studies highlight very large advantages for first movers in emerging industries, while also acknowledging that fast followers occasionally benefit by learning from any missteps that a first mover makes or by capitalizing on the high development costs that first movers incur. This terminology has also been applied to nations’ strategies toward emerging technologies. Notably, the U.S. National Security Commission on Artificial Intelligence highlighted in its 2021 report “the first-mover advantage of developing and deploying technologies like microelectronics, biotechnology, and quantum computing” (National Security Commission on Artificial Intelligence, 2021). Being a first mover would enable a nation’s private industry to capitalize on a growing market for neurotechnologies. It may also allow that first-mover nation to play a lead role in setting the ethical and legal norms internationally for these devices. Therefore, being able to anticipate which nation is most likely to be a first mover and see widespread technology development and dissemination is crucial to understanding the national and international security landscape. Additionally, the pursuit of new capabilities, including first acquisition and deployment, has been studied as part of the arms race literature (Evangelista, 1989; Gray, 1974; Hundley, 1999).

This research looks at public funding for neuroscience research and development (R&D) through the U.S. BRAIN Initiative and the China Brain Project. Though other sources of funding for neuroscience are present in both nations, we chose to analyze these brain projects because of their importance as an articulation of national strategy for neuroscience research that could enable earlier adoption. Their stated goals and directed funding, which involves stakeholders from government, academia, military, and industry, can be viewed as a cohesive national strategy for identifying top-priority areas of neuroscience research and determining how findings from this research will be translated into new technologies. Each project also has components to enable translational research—that is, moving from basic research through applied R&D. Additionally, the brain projects serve as a diplomatic interface for international collaboration by fostering data inventorying and sharing, as well as promoting consensus on international norms for ethical use of these technologies (International Brain Initiative, n.d.).

Focusing on one emerging neurotechnology, instead of a broad investigation of many neurotechnologies, enables more robust analysis of the sociocultural, governmental, and economic influences that could drive adoption of a specific dual-use technology. BCIs are a dual-use technology directly enabled by brain project funding that both the United States and China have a stated interest in developing for commercial and military applications. These devices have the potential for high adoption by healthy people for both civilian and military purposes, and they are already available on the market (Emondi, n.d.; Farnsworth, 2017). Additionally, these devices may raise significant or even profound ethical concerns involving data privacy and individual autonomy (Global Neuroethics Summit Delegates et al., 2018; Moreno, 2003). Likely for these reasons, the U.S. Congressional Research Service (2021) identified BCIs as an emerging technology that should be considered for export controls to nations like China. BCIs fit the definition of dual-use technology in two ways: BCIs can be used for both civilian and military purposes, and BCI technologies intended for civilian use could be co-opted for malicious or deleterious misuse.

Two hypotheses are proposed and tested in order to better understand how this emerging technology is likely to be operationalized:

H1: The United States will adopt BCI technologies for commercial and military use before China if national innovation systems, amount of brain project funding, and current BCI market share are more predictive of BCI technology adoption.

H2: China will adopt BCI technologies for commercial and military use before the United States if government structure, brain project and military goals, sociocultural norms, and research monkey resources are more predictive of BCI technology adoption.

Literature review

While other papers have addressed the potential commercial and military applications of BCIs, none has attempted to predict adoption likelihood or compared adoption likelihood of these devices. The ability to make predictions about adoption likelihood in the United States and other countries has been identified as a priority by the U.S. Army Combat Capabilities Development Command (DEVCOM), since so few studies have been conducted to assess attitudes toward BCIs in both the public and military sectors (Emanuel et al., 2019). These devices, which can connect human and machine intelligence, have the potential to shape society and change the nature of warfare.

In the last decade or so, a small but significant body of literature has emerged on the intersection of advances in the cognitive and neurosciences and conflict across multiple disciplines. A significant portion of the studies and policy literature on cognitive sciences research is concerned with the ethics of such research. The ethical concerns raised largely fall into two areas of debate, (1) human enhancement and (2) thought privacy and autonomy, both of which are relevant to the current security research on cognition. The issue of cognitive enhancement has been a contested area of research (Parens, 2006). While some embrace the potential of “neuropharmaceuticals” and advocate industry’s self-regulation, others have raised concerns about the potential for political inequality and the possible disruption of natural physiological processes (Fukuyama, 2003; Gazzaniga, 2005; Hitchens, 2021; Naam, 2005). The issue of thought privacy often emanates from advancements in noninvasive imaging and stimulation techniques used for neurological research. Many of the concerns relate to how such advancements could be used for lie detection and interrogation, including applications for domestic or foreign intelligence (Canli et al., 2007; Wild, 2005).

These ethical discussions have also extended to military R&D in the cognitive sciences. What role, if any, neuroscience research should play in national security and how its impact should be understood have been hotly debated (Evans, 2021; Krishnan, 2018; Marcus, 2002; Moreno, 2006; Munyon, 2018; Tracey & Flower, 2014). Some have advocated against the inclusion and use of neuroscience techniques for national security purposes, while others justify the defense and intelligence community’s involvement in consideration of maintaining the superpower status of the United States (Giordano et al., 2010; Rippon & Senior, 2010; Rosenberg & Gehrie, 2007). Still, some have contended that neuroethics must be considered in national security discussions, while others have advocated that the security use of neuroscience research is best framed as a consideration for human rights (Justo & Erazun, 2007; Lunstroth & Goldman, 2007; Marks, 2010). In discussing classified research on brain imaging, an open dialogue between scientists and government officials has been called for (Resnik, 2007). The place and role of neurosciences research and neurotechnology in security policy remain contested, and no security or ethical framework for analyzing such research is acceptable to all parties.

Another major area of social science work, often empirical and positivist in nature (rather than normative), can be found in research that approaches neuroscience and neurotechnology from a technology survey or qualitative case study method to probe its implications, including understanding the national security implications and approaches to reducing risk (Binnendijk et al., 2020; DeFranco et al., 2019; Huang & Kosal, 2008; Rachna & Agrawal, 2018; Royal Society, 2012). Some such studies are more descriptive and others are more systematic, depending on the researcher and the scholarly discipline, ranging from political science and international relations to public policy and science and technology studies. Many, but not all, consider neurotechnologies in the context of chemical and biological weapons and their respective international legal bodies and biosecurity policy (Dando, 2007; DiEuliis & Giordano, 2017; Nixdorff et al., 2018).

In addition to the earlier discussion on the theoretical approaches to the relationships between technology and conflict more generally, there are specific theoretical questions that apply to the realm of neuroscience in the context of military technology, strategic decision-making, and conflict. How advances in the cognitive neurosciences may undermine rational actor theory, a core component of nuclear deterrence theory, has been theorized (Stein, 2009). Other work has considered how scientists and neuroscientists, the very people whose research is in the spotlight, understand and view the security risks and potential consequences of such research and its implications for international security and governance (Kosal & Huang, 2015). This work adds to that systematic body of literature in the social sciences.

Technical background

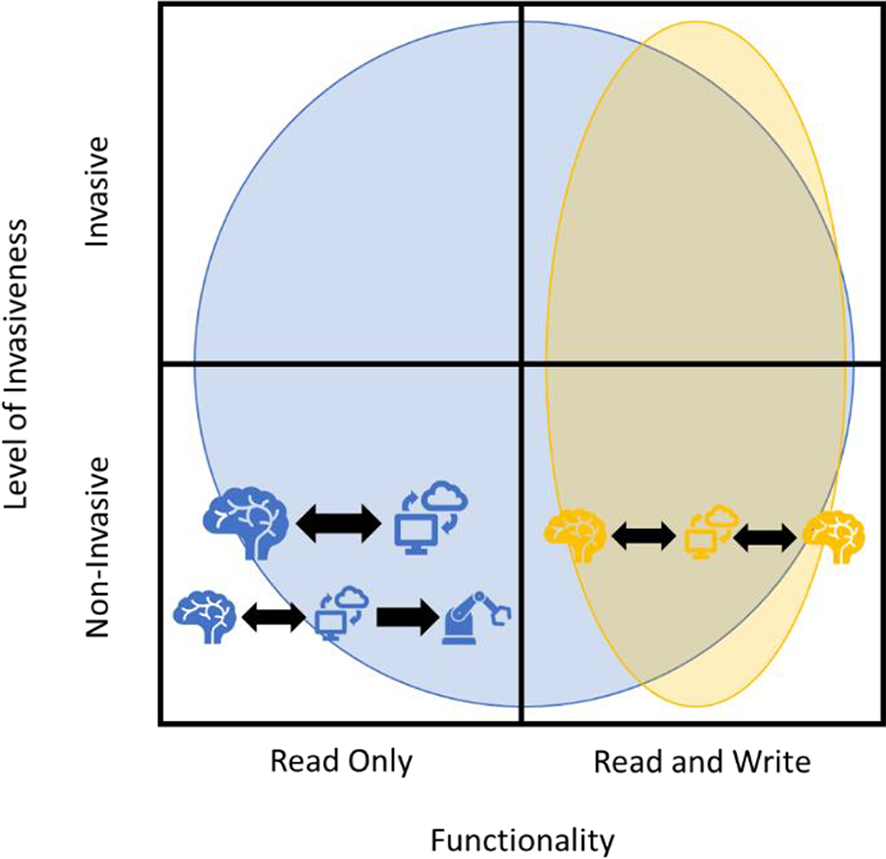

BCIs can be categorized by their capabilities and by their applications (see Figure 1). BCIs can be distinguished in their capabilities by whether they can “read” brain activity, “write” brain activity, or both, and by whether they are invasive or noninvasive. Three applications are discussed here: brain-computer, brain-computer-device, and brain-computer-brain. Each of these applications can be invasive or noninvasive. While read functions are all that is necessary for brain-computer-device and brain-computer technologies, write functions are necessary for brain-computer-brain technologies.

Figure 1. Three dual-use applications of BCIs (brain-computer, brain-computer-device, and brain-computer-brain technologies) can be categorized by their level of invasiveness and their functional ability to read or write brain activity. The symbols for each technology are shown in the category that corresponds to the theoretical minimum functionality and invasiveness required to use them, while the shaded areas demonstrate the other categories these technologies fall under. Greater invasiveness generally leads to greater data fidelity/interpretability. The ability to write brain activity noninvasively is an active area of research.
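The read/write requirements described above can be sketched as a small data structure. This is only an illustrative sketch: the names `Function`, `Application`, and `supported_applications` are hypothetical, and it assumes that brain-computer-brain use needs a read function at the sending end in addition to the write function the text names. Invasiveness is deliberately left out, since each application can be invasive or noninvasive.

```python
from dataclasses import dataclass
from enum import Flag, auto


class Function(Flag):
    """Functional capabilities of a BCI (hypothetical names)."""
    READ = auto()
    WRITE = auto()


@dataclass(frozen=True)
class Application:
    name: str
    minimum_functions: Function  # least functionality needed to use it


# Assumption: brain-computer-brain needs READ as well as WRITE,
# because activity is read from one brain and written to another.
APPLICATIONS = [
    Application("brain-computer", Function.READ),
    Application("brain-computer-device", Function.READ),
    Application("brain-computer-brain", Function.READ | Function.WRITE),
]


def supported_applications(device_functions):
    """List the applications whose minimum functions the device provides."""
    return [
        app.name
        for app in APPLICATIONS
        if (app.minimum_functions & device_functions) == app.minimum_functions
    ]


read_only = supported_applications(Function.READ)
read_write = supported_applications(Function.READ | Function.WRITE)
```

Under these assumptions, a read-only device supports the first two applications, while a device that can both read and write supports all three.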

Capabilities

Functionality

The ability to read brain activity involves being able to capture the electrical activity of the brain and interpret the information contained in that electrical activity. Capturing the electrical activity of the brain involves electrodes and can occur on different spatial (centimeters to micrometers) and temporal (seconds to sub-milliseconds) scales (Nicolas-Alonso & Gomez-Gil, 2012). Electrically active cells in the brain called neurons are the fundamental unit of brain computation; they produce action potentials, or “spikes,” in electrical activity, which carry information (Gerstner et al., 1997). Electrical activity is interpreted using some form of model or decoder to understand the information carried, such as recurrent neural networks that can predict intended movements from neural activity (Pandarinath et al., 2018). Different regions of the brain serve known functions, so placing a device in specific brain regions obtains different types of information. For example, the motor cortex is a region of the brain that contains information about intended movements, and most cortical neuroprosthetic devices record from this region (Bensmaia & Miller, 2014).
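To make the idea of a decoder concrete, the following minimal sketch estimates an intended movement direction from the firing rates of a few motor cortex neurons. It is not the recurrent neural network approach cited above; it substitutes a much simpler population-vector scheme, in which each neuron is assumed to have a preferred direction and the decoded direction is the rate-weighted sum of those preferences. The function name, the four neurons, and all numerical values are hypothetical.

```python
import math


def population_vector(rates, preferred_angles, baseline=0.0):
    """Estimate an intended movement direction (radians) from firing rates.

    rates: spikes per second for each recorded neuron.
    preferred_angles: each neuron's assumed preferred direction (radians).
    baseline: rate subtracted from every neuron before weighting.
    """
    x = sum((r - baseline) * math.cos(a) for r, a in zip(rates, preferred_angles))
    y = sum((r - baseline) * math.sin(a) for r, a in zip(rates, preferred_angles))
    return math.atan2(y, x)


# Four hypothetical neurons tuned to right, up, left, and down.
preferred = [0.0, math.pi / 2, math.pi, 3 * math.pi / 2]
rates = [10.0, 40.0, 10.0, 10.0]  # only the "up" neuron fires above baseline

decoded = population_vector(rates, preferred, baseline=10.0)
decoded_degrees = math.degrees(decoded)
```

Because only the neuron tuned to "up" fires above baseline in this toy example, the decoded direction points upward. Real decoders are fit to recorded data and must cope with noise and far larger populations.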

The ability to write brain activity involves evoking electrical activity in neurons. This can be done with a variety of techniques, including magnetic, optical, and electrical stimulation paradigms (DARPA, 2019). Most techniques currently used in research and clinical settings involve electrical stimulation via electrodes (Lozano et al., 2019). Electrodes are bidirectional in that they serve as an electrical contact between the brain and computer. Electrodes can both read activity by recording neurons and write activity by generating an electrical current that stimulates the neurons to produce spikes. Because we have limited principled understanding of how the brain structures information in single neurons and circuits of connected neurons, writing activity that translates into the desired outcomes of the stimulation is much more difficult than reading and interpreting activity, which can be accomplished using data-driven approaches (Jonas & Kording, 2017).

Level of invasiveness

BCIs can be either invasive or noninvasive. Invasive devices require surgery to implant beneath the skull (intracranially). They use electrodes to read and write brain activity at finer scales than noninvasive devices (Ramadan & Vasilakos, 2017). Invasive BCI devices include the following:

• Single-unit recordings obtained from micro-electrodes read spikes from many single neurons simultaneously. These devices typically use the spike rate (the number of spikes in a given window of time) of many related neurons to determine meaningful information on what the brain is computing. To obtain recordings from single neurons, the electrodes used for these devices must penetrate the cortex. These devices are considered the best for obtaining high information quality, but their weakness is a lack of long-term viability due to degradation of the recording quality over time via neural scarring (Salatino et al., 2017).

• Depth local field potential (LFP) recordings are obtained using larger electrodes. Instead of reading spikes, they capture the sum of electrical activity from many neurons on a coarser temporal scale. These devices also penetrate the cortex, but they do not require single-unit recordings. One of the benefits of these devices is that they are less prone than single-unit recordings to day-to-day variability in the quality of recordings (Heldman & Moran, 2020). However, their invasiveness still makes them prone to degradation around the recording site.

• Electrocorticography recordings are obtained from the surface of the brain, or cortex, and capture broad electrical activity from many neurons at once. In contrast with single-unit recordings, these do not capture individual spikes from single neurons or depth LFPs, but rather the LFPs generated at the surface of the brain, with a temporal resolution too coarse to capture individual spikes. The spatial resolution of these devices is also coarser, but they can capture a larger region of the brain. Finally, they are less invasive than single-unit recordings or depth LFPs because they do not penetrate the cortex, and they may be more stable over longer periods (Sauter-Starace et al., 2019). These electrodes can also be used to write brain activity and are currently used for this purpose in clinical settings (Caldwell et al., 2019).
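The spike rate used by single-unit devices in the list above (the number of spikes in a given window of time) can be computed directly from recorded spike times. The sketch below is a minimal illustration; the function name and the spike times are hypothetical, and the window is assumed to be half-open, including its start and excluding its end.

```python
def spike_rate(spike_times, window_start, window_end):
    """Return spikes per second within [window_start, window_end)."""
    duration = window_end - window_start
    if duration <= 0:
        raise ValueError("window_end must be greater than window_start")
    count = sum(1 for t in spike_times if window_start <= t < window_end)
    return count / duration


# Hypothetical spike times, in seconds, for two recorded neurons.
neuron_a = [0.01, 0.05, 0.12, 0.31, 0.47]
neuron_b = [0.20, 0.45]

# Rates over the same 0.5-second window, one value per neuron.
rates = [spike_rate(neuron, 0.0, 0.5) for neuron in (neuron_a, neuron_b)]
```

A vector of such rates across many neurons is the kind of input a decoder would typically work from.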

Currently, only noninvasive devices are available commercially for nonmedical purposes. These devices sacrifice information quality for ease of use. They are packaged in the form of electroencephalography (EEG) headsets with a few large electrodes that record information from the surface of the scalp. They require no surgery and can be removed easily. However, they are incredibly noisy, with signals that are corrupted by head movements and eye blinks, making it difficult to read brain activity. Despite these shortcomings, EEG is also used in clinical and therapeutic settings for important procedures such as diagnosing epilepsy (Tatum et al., 2018). Medical EEG devices tend to have better data quality than commercial EEG devices (Ratti et al., 2017). Additionally, EEG headsets available on the market do not currently provide the ability to write brain activity. The ability to write brain activity with a noninvasive device remains an active area of research (Polania et al., 2018). These noninvasive write devices could include the use of acoustic, optical, and electromagnetic techniques that induce electrical activity in localized areas of the brain from outside the skull (DARPA, 2019).

Applications

To begin a discussion of the applications of BCI technologies described earlier, we present two illustrative scenarios: one that considers how a relevant past military engagement may have been different if BCIs had been utilized, and another that considers a relevant future scenario in which the dual-use properties of BCIs could cause a security incident. Construction and presentation of formalized scenarios are contemporary tools widely utilized in the military, business, government policy planning processes, and international relations (Barma et al., 2016; Bishop et al., 2007; Schwartz, 1996). Scenarios are not intended to be predictive but are used to illustrate potential futures for planning or other analytical purposes.

Scenario #1

In the mid-2000s, BCIs are already a fully developed, deployable technology used by military personnel. There is a major offensive against a densely populated city controlled by insurgents. The goal of the operation is to take control of the city from the insurgents while minimizing civilian casualties. Military units with enhanced personnel lead an assault on the city. Personnel with visual-enhancement BCIs discriminate between insurgents and civilians using artificial intelligence (AI) identification that presents an overlaid sensory indicator on their visual field. Other personnel use auditory-enhancement BCIs that allow them to receive real-time translations of the languages being used by insurgents and civilians, providing actionable intelligence. The insurgents have left improvised explosive devices and other incendiary traps at key locations in the city. Personnel with BCIs defuse devices using an extremely dexterous robotic arm that can be controlled remotely, which avoids setting off the device near the unit or revealing the unit’s location to the insurgents, while also allowing for simultaneous control of a firearm. Finally, these enhanced units can communicate telepathically with each other via brain-to-brain communication enabled by BCIs, relaying important battlefield information silently. These enhanced units can do reconnaissance, clear deadly devices from the streets, and effectively minimize civilian casualties. This results in fewer personnel casualties during the overall offensive.

Scenario #2

The year is 2040. BCIs are widely used by both civilians and military personnel for routine computing and control tasks in developed nations. BCIs are used for piloting drones by air forces, providing a competitive advantage in reaction time and sensory processing speed over nations that use manual controls. These BCIs are noninvasive and have strict cybersecurity and data privacy protocols to ensure they are not prone to a cyberattack. Additionally, invasive BCIs used in a civilian context can control devices as well as alter mood and focus, but they have fewer security measures in place than military BCIs. Two great power competitors are involved in a territorial dispute over an island nation with rare earth metal deposits and an advanced semiconductor manufacturer. Nation A has a military base on and treaty with the island nation, while Nation B seeks regional hegemony and a greater sphere of influence through control of the island. Drones routinely engage in air-to-air combat over air space disputes around the island, potentially endangering Nation A’s crewed planes carrying personnel and supplies to the island. Nation A has more advanced BCIs and is able to protect its crewed planes and cause significant financial loss to Nation B by destroying its drones in air-to-air combat. However, during a recent engagement, Nation A’s drone pilots report feeling nausea, drowsiness, lack of attention, and heightened stress. The mental state of the pilots causes significant loss of drones and the downing of a crewed plane, sparking an international incident, since air force casualties in combat are now a rarity. It is later discovered that Nation B exploited the weaker security protocols of civilian BCI devices used by Nation A’s military personnel and conducted a targeted attack on the drone pilots’ mental state and mood during the air-to-air engagement through these devices.

Applications of BCIs

BCI devices can be used for many different purposes, but they fall into three broad categories of applications that are illustrated in these two scenarios:

• Brain-computer-device: These are technologies in which activity from the brain is read and used to control an external device. Examples include neuroprosthetics that provide dexterous hand movements and sensory feedback to amputees and communication devices for paralyzed people (Adewole et al., Reference Adewole, Serruya, Harris, Burrell, Petrov, Chen and Cullen2017; Nuyujukian et al., Reference Nuyujukian, Sanabria, Saab, Pandarinath, Jarosiewicz, Blabe and Henderson2018). In another example, the company Emotiv demonstrated a paralyzed individual controlling a Formula 1 race car using a noninvasive EEG headset (Emotivstation, 2017). Potential military applications include hands-free and/or remote control of uncrewed vehicles, drones, or weapons systems in a combat environment (Emanuel et al., Reference Emanuel, Walper, DiEuliis, Klein, Petro and Giordano2019; Vahle, Reference Vahle2020). In a military application that mirrors the driving of a Formula 1 car, a quadriplegic woman was able to pilot an F-35 in simulation using a BCI in 2015 (Stockton, Reference Stockton2015).

• Brain-computer: These technologies are used to read and write brain activity without controlling an external device. One clinical application of these devices involves reading brain activity to predict seizures and then writing activity to end seizures more quickly and reduce damage to the brain (Maksimenko et al., Reference Maksimenko, Lüttjohann, van Heukelum, Kelderhuis, Makarov, Hramov and van Luijtelaar2020). Most commercial BCIs currently available are brain-computer technologies that can read activity from regions of the brain associated with attention and focus and report interpretations of that activity (EMOTIV, n.d.). Envisioned defense applications of these technologies could be for deception detection in interrogations and cognitive enhancement when managing and responding to many flows of information (Emanuel et al., Reference Emanuel, Walper, DiEuliis, Klein, Petro and Giordano2019; Fisher, Reference Fisher2010).

• Brain-computer-brain: These technologies would allow telepathic communication between two people. While they are mainly speculative, these technologies have been demonstrated in research settings with rats and humans (Jiang et al., Reference Jiang, Stocco, Losey, Abernethy, Prat and Rao2019; Trimper et al., Reference Trimper, Wolpe and Rommelfanger2014). These devices could be used for silent and/or remote communication as well as for the transfer of learned knowledge between individuals.

The potential civilian and military applications of BCI for human enhancement will affect the international security environment. A more thorough treatment of the security implications of dual-use BCIs can be found in DEVCOM’s Cyborg Soldier 2050 study; in brief, these technologies can serve as both measures and countermeasures in military contexts, as well as complicate the national security landscape in civilian contexts (Emanuel et al., Reference Emanuel, Walper, DiEuliis, Klein, Petro and Giordano2019). BCIs could be used by military personnel to enhance visual processing, reaction time, attention, and mental states, all of which would provide a significant cognitive advantage over competitors without BCIs or with less advanced BCIs. However, BCIs are also vulnerable to countermeasures like cyberattacks, raising concerns about data privacy or even the ability to attack the brain through BCIs with write capabilities. BCIs used in civilian contexts are also vulnerable to countermeasures, which leads to broader security implications than even their military use entails.

Additionally, BCIs have proposed applications within the intelligence community. For example, a BCI that can read brain activity could be utilized to detect whether a person is being deceptive during interrogation, while also monitoring other brain states like attention and stress (Gherman & Zander, Reference Gherman and Zander2021; Lin et al., Reference Lin, Sai and Yuan2018). Proponents of this use say that this would provide a unique advantage over other deception detection methods like the polygraph in both accuracy and multifunctionality. Defensive counterintelligence measures would also be necessary if BCIs are utilized in military and intelligence operations, or if foreign intelligence services seek to attack BCI vulnerabilities in the manner described earlier.

Methods

Independent variables associated with BCI technology adoption were identified that may indicate whether the United States or China will adopt BCI technologies first. These variables address separate factors that affect adoption of emerging technologies (Bussell, Reference Bussell2011; Corrales & Westhoff, Reference Corrales and Westhoff2006; Milner, Reference Milner2006; Rotolo et al., Reference Rotolo, Hicks and Martin2015; Schmid & Huang, Reference Schmid and Huang2017; Taylor, Reference Taylor2016): (1) the technological capacity of a state and (2) cultural and social characteristics.

Most variables examined here can be measured quantitatively, though important qualitative variables include government structure, national innovation systems, and stated brain project and military goals. One natural consequence of attempting to understand a future event is that a model of how indicators map onto the dependent variable of widespread BCI technology adoption cannot be constructed based on data. To address the question of individuals’ likelihood of using a technology, previous studies have mapped sociocultural scores and other indicators onto technology adoption likelihood, but these studies investigated other technologies such as mobile phones and information technologies that have some differences from BCIs and other neurotechnologies (LaBrie et al., Reference LaBrie, Steinke, Li and Cazier2017; Lee et al., Reference Lee, Trimi and Kim2013).

To support a hypothesis of earlier adoption in either the United States or China based on the identified indicators, reports of BCI use in both commercial and military settings in the United States and China were identified as early proxies for adoption likelihood. Therefore, the methodology of this article can be divided into (1) developing hypotheses that would support earlier adoption in either the United States or China and (2) using the real-world examples of BCI use as a dependent variable to determine which hypothesis is more likely and therefore which indicators are more important for BCI technology adoption. In this section, definitions are provided for each of the indicators of BCI adoption identified and analyzed. Why indicators are important factors to consider and why these variables were included whereas others were not is also discussed.

If government structure, sociocultural norms, and stated goals are more predictive of BCI adoption, China will be the first adopter of BCIs in both commercial and military contexts. However, if BCI adoption is predicted by public funding and market share, then the United States will be the first adopter of BCIs. Here, we support the hypothesis that China will be the first widespread adopter of BCIs.

Qualitative variables

Government structure

Government structure, whether democratic-republic, autocratic rule, or another form, can affect BCI adoption because of its effect on the ties between the military, industry, academia, and government. Government structure can also strongly affect how new technologies are implemented for defense purposes.

National innovation systems

National innovation systems have been defined as “the network of institutions in the public and private sectors whose activities and interactions initiate, import, modify, and diffuse new technologies” (Freeman, Reference Freeman1987, p. 17). This definition emphasizes the linkages between the many important institutions within a nation that drive innovation and technology development. This is an important indicator of BCI adoption because of the way it affects support for R&D and companies that will market BCIs. While government structure can strongly affect national innovation systems, it is not the only determining factor. Additionally, government’s role in technology adoption can be separate from its role in technology innovation. Therefore, government structure and national innovation systems are considered separately here. A previous analysis of the national innovation systems in the United States and China by Melaas and Zhang (Reference Melaas and Zhang2016) is used here as an indicator of BCI technology adoption.

Brain initiative and military goals

This study emphasizes government investment in the major brain research projects rather than investment in general or other neuroscience research, because the goals of the U.S. BRAIN Initiative and the China Brain Project are a coherent articulation of a national strategy for neuroscience research that involves all major stakeholders in government, academia, military, and industry. The overall goals of these brain projects are related to the way they affect BCI research and adoption, and we look for specific mentions of BCI technologies. In addition to the emphasis on brain project rhetoric, military documents from both countries were reviewed to provide additional insight into perceived military use cases for BCIs.

Quantitative variables

Sociocultural norms

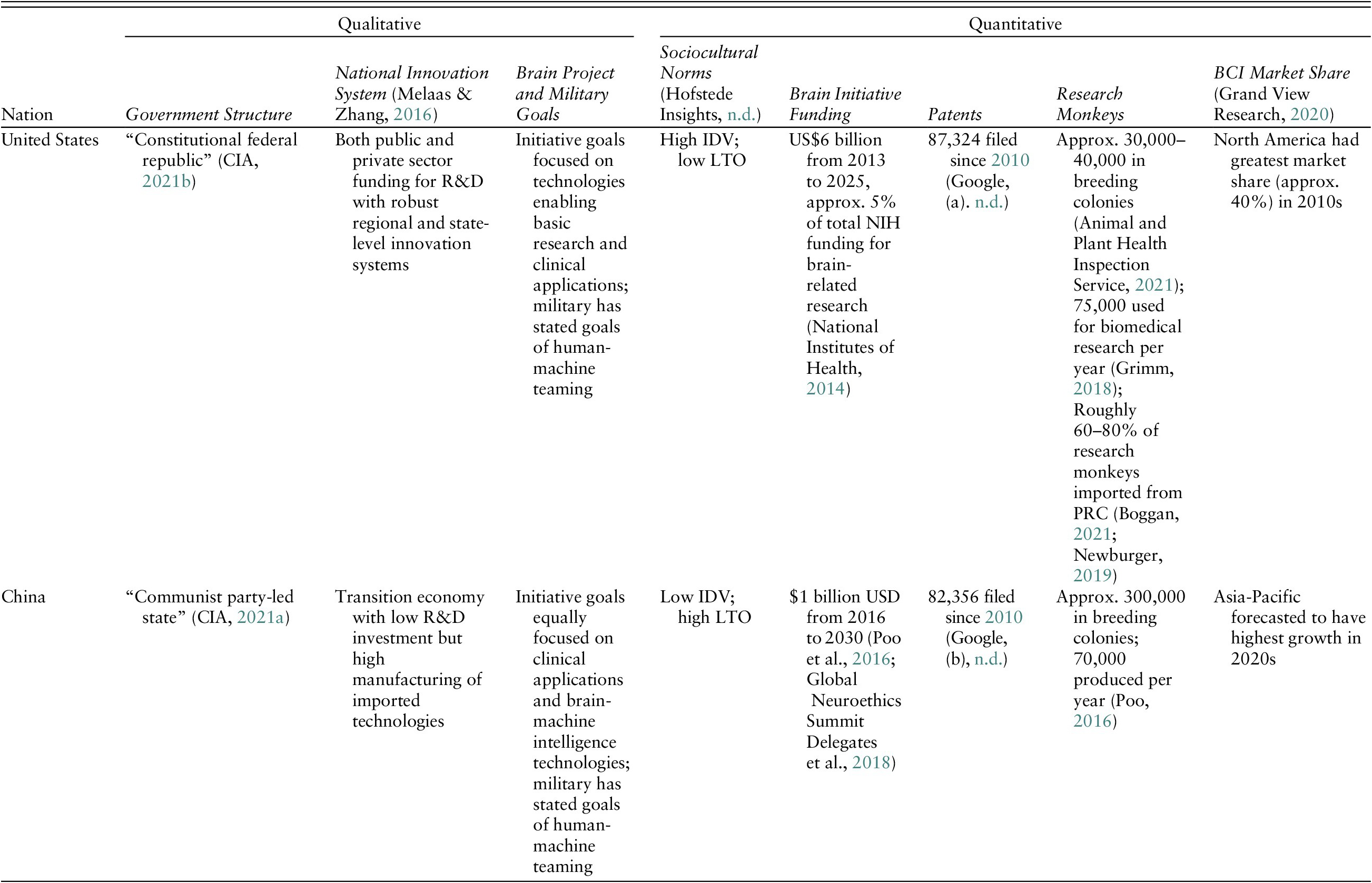

Cultural and sociocultural norms can be treated as quantitative variables. Various frameworks have been proposed to treat sociocultural norms quantitatively. One of the most widely used is Hofstede’s cultural dimensions (see Table 1 and Figure 2, adapted from LaBrie et al., Reference LaBrie, Steinke, Li and Cazier2017) (Hofstede & Bond, Reference Hofstede and Bond1984; LaBrie et al., Reference LaBrie, Steinke, Li and Cazier2017). This framework gives a quantitative approach to describing culture and is based on surveys of IBM employees and other populations (Hofstede et al., Reference Hofstede, Hofstede and Minkov2010). Additionally, these variables have been used by others to predict and describe technology adoption of information systems, mobile devices, and big data analytics (LaBrie et al., Reference LaBrie, Steinke, Li and Cazier2017; Lee et al., Reference Lee, Trimi and Kim2013; Srite, Reference Srite2006).

Table 1. Hofstede’s cultural dimensions.

Figure 2. A comparison of Hofstede’s cultural dimensions for the United States and China; adapted from LaBrie et al. (Reference LaBrie, Steinke, Li and Cazier2017).

Brain initiative funding

The budgets for both the United States’ and China’s brain projects are available online. While this variable does not include breakdowns for money specifically targeted at BCI development between the two countries, it gives a general sense of the level of investment in neuroscience and neurotechnologies. Additionally, this variable quantifies the actual investment of each nation to achieve its stated goals and national strategy for neuroscience research. In the context of the stated goals for each brain project, this variable demonstrates whether a gap exists between rhetoric and real investment.

Access to data on the budget of the U.S. BRAIN Initiative and the projects it has funded was much more open than data on the China Brain Project. One of the difficulties was an inability to compare funding within these projects specifically dedicated to BCIs. While these data were available for the U.S. BRAIN Initiative, they were not available for the China Brain Project (Dimensions, 2021).

Funding for neuroscience research outside the brain projects is not included here as another variable. The brain projects are an articulation of a national strategy for neuroscience research and are meant to propel the neurotechnology sector of both nations far more than disparate research funding through other sources. While military funding for neuroscience research has been significant in the United States, estimated in the hundreds of millions of dollars across multiple funders, including the Defense Advanced Research Projects Agency (DARPA) and the military service branches, it is still believed that most military adoption of BCIs will occur after, not prior to, civilian adoption, which would be supported more by the clinical and commercial neurotechnologies whose development is made possible through the brain projects (Emanuel et al., Reference Emanuel, Walper, DiEuliis, Klein, Petro and Giordano2019; Kosal & Huang, Reference Kosal and Huang2015).

Number of patents

The number of BCI patents filed in each country since 2010 was determined using Google Patents, specifically only including patents with the keyword “brain-computer interface.” Patents are one way to measure innovation in an industry, but differences in the requirements for patent filing in different countries can complicate interpretations of this variable (Shambaugh et al., Reference Shambaugh, Nunn and Portman2017).

Number of research monkeys

Access to research monkeys is an important and novel variable for predicting BCI adoption because nonhuman primates are used extensively in BCI R&D. While noninvasive devices like EEG headsets with read-only capabilities can skip animal trials and proceed to human trials, any device that is invasive or that tests write capabilities must use an animal model (Li & Zhao, Reference Li and Zhao2019). Additionally, one of the lead scientists of the China Brain Project has placed a strong emphasis on developing research monkey colonies (Poo, Reference Poo2016).

Access to and use of research monkeys was assessed using statistics from the U.S. Department of Agriculture, the Chinese Experimental Monkey Breeding Association, and the European Commission on the number of monkeys in colonies, the number used in biomedical research, and the number imported for research purposes. It was difficult to find standard ways of assessing the number of monkeys used for research and the number of monkeys used specifically for BCI research, but the numbers reported give vital information about the supply of this necessary resource.

BCI market share

Market research was used to obtain information about BCI market share between North America and the Asia-Pacific region (Grand View Research, 2020). Detailed information regarding China was not available publicly.

Results and discussion

H1: The United States will adopt BCI technologies for commercial and military use before China if national innovation systems, amount of brain project funding, and current BCI market share are more predictive of BCI technology adoption

According to Melaas and Zhang (Reference Melaas and Zhang2016), the United States and China share some similarities in their national innovation systems, but the United States has a greater capacity to support the basic R&D necessary to generate new technologies like BCIs. The United States’ national innovation system is more “fully integrated,” but also more decentralized than the Chinese national innovation system. Notably, China is a transition economy without “mature private capital markets”; therefore, R&D for technologies is mostly funded through the public sector. This results in a strategy in which China has sought to import technologies developed abroad and focused on developing the manufacturing capability to produce them cheaply. These characteristics support the hypothesis that the United States will be the first to develop marketable BCI technologies, though China may later co-opt these technologies, which is supported by current market share reports discussed later.

The amount of projected funding for the U.S. BRAIN Initiative is much larger than the projected funding for the China Brain Project (Table 2). This demonstrates a larger financial commitment by the government toward basic R&D necessary for BCI neurotechnologies. This again reflects a difference in the robustness of public funding for basic R&D that is demonstrated by the two countries’ national innovation systems. The amount of funding for either project may change. To date, the U.S. government has funded US$1.9 billion in research grants under the U.S. BRAIN Initiative, which aligns with the budget proposed when the initiative was first conceived (Dimensions, 2021; National Institutes of Health, 2014). Approximately US$21.2 million of these research grants are directly related to BCIs or brain-machine interfaces (BMIs). Obtaining data on how much has been spent to date on the China Brain Project is more difficult. Therefore, a direct comparison of research grants for BCI or BMI technologies under the U.S. BRAIN Initiative and China Brain Project cannot be made with detailed precision. In 2018, the China Institute for Brain Research was officially opened with plans to support 150 principal investigators as one of the “first concrete developments” of the China Brain Project (Cyranoski, Reference Cyranoski2018).
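To put the BCI-specific funding in proportion, the share of U.S. BRAIN Initiative grants directly related to BCIs or BMIs can be computed from the two totals reported above. The following is a minimal arithmetic sketch only; the variable and function names are hypothetical, and the inputs are the approximate figures cited from Dimensions (2021).

```python
# Illustrative arithmetic only. Names are hypothetical; the figures are the
# approximate totals reported in the text (Dimensions, 2021).
TOTAL_GRANTS_USD = 1.9e9     # U.S. BRAIN Initiative research grants funded to date
BCI_BMI_GRANTS_USD = 21.2e6  # grants directly related to BCIs or BMIs


def share_percent(part: float, whole: float) -> float:
    """Return `part` expressed as a percentage of `whole`."""
    if whole <= 0:
        raise ValueError("whole must be positive")
    return 100.0 * part / whole


bci_share = share_percent(BCI_BMI_GRANTS_USD, TOTAL_GRANTS_USD)
print(f"BCI/BMI share of BRAIN Initiative grants: {bci_share:.1f}%")
```

On these figures, BCI- and BMI-related grants amount to roughly 1.1% of the research grants funded to date, which underlines that the total budget is only a coarse proxy for investment in BCIs specifically.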

Table 2. Summary of qualitative and quantitative indicators of BCI adoption.

The U.S. BRAIN Initiative’s budget of US$6 billion during its lifetime represents only 5% of the NIH’s total budget for brain-related research (National Institutes of Health, 2014). Additional funding for brain research in the United States comes directly from defense agencies and military services. It was difficult to find numbers to indicate China’s total investment in brain-related research, but the US$1 billion expected to be invested in the China Brain Project is still considerable. Additionally, China is likely still in the ramp-up stage of its project. Whereas the U.S. BRAIN Initiative started in 2013, the China Brain Project was first formalized in 2016, three years later. Other sources of funding like private investment are also not considered here but are likely to be important.
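The 5% figure implies a much larger pool of NIH brain-related funding over the same period. As a back-of-the-envelope sketch, assuming the 5% share applies to the full US$6 billion lifetime budget (an assumption made here for illustration, not a total reported by the NIH), the implied pool and the ratio of projected brain project budgets can be worked out as follows. All names are hypothetical.

```python
# Back-of-the-envelope sketch. Names are hypothetical, and the implied total
# is an inference from the 5% share stated in the text, not a reported figure.
BRAIN_INITIATIVE_BUDGET_BN = 6.0  # US$ billions, projected lifetime budget
BRAIN_SHARE_OF_NIH_PCT = 5.0      # share of NIH brain-related research budget
CHINA_BRAIN_PROJECT_BN = 1.0      # US$ billions, expected investment


def implied_total(part: float, share_pct: float) -> float:
    """Return the total of which `part` is `share_pct` percent."""
    if not 0 < share_pct <= 100:
        raise ValueError("share_pct must be in (0, 100]")
    return part * 100.0 / share_pct


implied_nih_brain_total_bn = implied_total(
    BRAIN_INITIATIVE_BUDGET_BN, BRAIN_SHARE_OF_NIH_PCT
)
budget_ratio = BRAIN_INITIATIVE_BUDGET_BN / CHINA_BRAIN_PROJECT_BN

print(f"Implied NIH brain-related total: US${implied_nih_brain_total_bn:.0f} billion")
print(f"U.S. to China projected brain project budget ratio: {budget_ratio:.0f}:1")
```

Under that assumption, the implied NIH brain-related total is about US$120 billion, and the projected U.S. BRAIN Initiative budget is about six times the expected China Brain Project investment. Neither number accounts for defense or private funding, which the text notes are excluded.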

North America currently has the largest market share for BCIs. Around 40% of the total revenue from BCI technologies globally was generated in North America in 2019 (Grand View Research, 2020). Most of this revenue was generated by medical applications of BCI technologies, but significant portions of the market were driven by commercial and military technologies. When combined, commercial and military BCI technologies accounted for more than half the global market share, surpassing medical BCI technologies. However, in the next decade, market research suggests that the BCI market will see the most growth in the Asia-Pacific region because of “low-cost manufacturing sites and favorable taxation policies” (Grand View Research, 2020).

The current large market share held by North America is indicative of a robust private sector that will aid commercial adoption of BCI technologies. Many of the products marketed by the top companies cited in the market report are noninvasive, read-only technologies. Acceptance and adoption of these technologies will likely occur before the marketing and adoption of technologies that allow write capabilities or brain-to-brain communication because of a continued need for R&D of these more complex technologies and the need to overcome general distrust of BCI technologies (Emanuel et al., Reference Emanuel, Walper, DiEuliis, Klein, Petro and Giordano2019; Google, n.d.).

Surprisingly, although the United States has better R&D capabilities and North America has a larger market share, the numbers of patents related to BCI technologies filed in the United States and China since 2010 are remarkably similar, with a gap of approximately 5,000 patents favoring the United States (Table 2). However, it is difficult to assess the quality of these patents in the two countries. It is possible that better-quality patents are filed in the United States since there is better support for R&D there and a stronger market for BCI in North America. Additionally, of the top five BCI companies identified by market research, four are headquartered in the United States. The only top BCI company not headquartered in the United States is in Australia (Grand View Research, 2020). None of these key players are in China, though some Chinese state-owned enterprises have developed BCI technologies for general public use, such as the “Brain Talker,” which is a computer chip designed for reading brain activity to interpret “mental intent” (Yin, Reference Yin2019).

It is important to note that the numbers of patents filed in the United States and China are considerably higher than in other nations with brain projects. The EU’s patent office has the third-highest number of patents filed for any international actor with a brain project, and the number is nearly a third of that filed in the United States or China (27,720 patents) (Google, n.d.). The EU is projected to spend US$1.2 billion for its Human Brain Project, which is similar to China’s projected spending, but China far outpaces the EU in the number of patents filed (Global Neuroethics Summit Delegates et al., Reference Rommelfanger, Jeong, Ema, Fukushi, Kasai and Singh2018).

The indicators that favor the United States’ earlier adoption of BCIs over China—national innovation systems, brain project funding, and BCI market share—all address the R&D capacity of both nations. Because the United States has a more robust national innovation system that supports the generation of new technologies, higher public brain project funding, and a greater share of the current market, the United States could generate BCI technologies faster than China and market them domestically, facilitating widespread adoption first in the commercial and then in the military sector. One important caveat to note here is that China is beginning to rapidly ramp up its public sector R&D funding to better compete with the United States (Hourihan, Reference Hourihan2020). While the United States has the advantage currently, it is possible this advantage may begin to erode in the next decade.

H2: China will adopt BCI technologies for commercial and military use before the United States if government structure, brain project and military goals, sociocultural norms, and research monkey resources are more predictive of BCI technology adoption

The United States and China are obvious foils in their government structure, with one being a federal republic and the other being a Communist party-led state. One consequence of this is that China benefits from a continuity of objectives both in statecraft and national defense since power does not change hands with elections. Additionally, China blurs the lines between its civilian and military sectors, which could eventually allow for faster defense acquisition of dual-use technologies (U.S. Department of Defense, 2020). It is also easier for China to mandate the research, development, and adoption of technologies. The U.S. BRAIN Initiative receives funding from and works in partnership with national security-focused agencies like DARPA and the Intelligence Advanced Research Projects Agency (IARPA), while the initiation of the China Brain Project was also closely tied to the government’s overall “Five-Year Plan” for 2016–2020 (Central Compilation and Translation Bureau, 2016).

The China Brain Project’s stated goals place a greater emphasis on brain-machine technologies like BCI than the U.S. BRAIN Initiative. The U.S. BRAIN Initiative’s seven major goals only relate to understanding the brain and improving treatment of brain disorders, and they are focused on developing technologies that enable basic research and clinical applications (National Institutes of Health, 2014). The China Brain Project’s structure is envisioned as “one body two wings,” with a core body of understanding the brain, with an equal emphasis on the applications—the two wings—of treating brain disorders and developing brain-machine intelligence technologies (Poo et al., Reference Poo, Du, Ip, Xiong, Xu and Tan2016).

The China Brain Project puts an equal emphasis on clinical and nonclinical applications of brain research, and it specifically emphasizes integrating brain and machine intelligence much more than the messaging from the U.S. BRAIN Initiative. Dr. Mu-Ming Poo, a leading scientist of the China Brain Project, has written about his belief that a better understanding of the brain will revolutionize AI technologies and his expectation that China will accelerate “development of next-generation AI with human-like intelligence and brain-machine interface technology” (Poo, Reference Poo2018). This stance favors BCI technology dissemination and adoption in China because the China Brain Project has placed a much greater emphasis on BCIs as a top priority.

China also exhibits greater alignment between the stated goals of its brain project and stated military goals. The goals of the U.S. BRAIN Initiative and China Brain Project can be viewed as a high-level articulation of a national strategy for neuroscience research, giving insight into how BCI technologies may be disseminated from clinical research to both commercial and military applications. Rhetoric and stated goals in national defense policy demonstrate whether BCIs are being prioritized specifically for defense or military applications. Greater alignment between the national strategy for brain research as articulated by the brain projects and defense emphasis on BCIs will likely enable quicker BCI adoption in the military sector.

Striking similarities emerge when examining the rhetoric of the Chinese People’s Liberation Army (PLA) and the China Brain Project. The director of the Central Military Commission Science and Technology Commission in China has said “[t]he combination of artificial intelligence and human intelligence can achieve the optimum, and human-machine hybrid intelligence will be the highest form of future intelligence” (Kania, Reference Kania2020). The China Brain Project has identified its two important applications of basic brain research (in its “one body two wings” framework): medical applications for treating brain disorders and brain-machine intelligence technologies like BCIs (Poo et al., Reference Poo, Du, Ip, Xiong, Xu and Tan2016). Both PLA strategists and the heads of the China Brain Project cite artificial intelligence, biological intelligence, and hybrid intelligence as key areas to promote technology development.

This contrasts strongly with the U.S. BRAIN Initiative, in which the high-level goals are limited to basic research and clinical outcomes (translational research) and do not include an emphasis on applications for the commercial and military sectors, though those may be outcomes. The U.S. BRAIN Initiative is also not devoid of ties to the U.S. defense and intelligence communities, since it partners with both DARPA and IARPA (National Institutes of Health, n.d.a). Both the PLA in China and the Department of Defense in the United States have emphasized AI and human-machine teaming as important technologies for future warfare (Binnendijk et al., Reference Binnendijk, Marler and Bartels2020; Kania, Reference Kania2020). The U.S. military has invested in the development of neurotechnologies, and BCIs specifically, through programs at DARPA and through the different military services (DARPA, n.d.; Kosal & Huang, Reference Kosal and Huang2015). However, the lack of goals within the U.S. BRAIN Initiative directly supporting BCIs and commercial applications of neurotechnologies demonstrates less coordination and coherence between a defense strategy for neurotechnology and the U.S. BRAIN Initiative, which represents the most aggressive neuroscience research drive in the United States. A more aligned articulation of technology dissemination goals exists between the China Brain Project and the country’s defense arm—the PLA—in contrast with the mainly medically focused goals of the U.S. BRAIN Initiative.

Differences in sociocultural norms in the United States and China also favor earlier BCI adoption in China because a lesser focus on individualism and social pressures is a large driver of technology adoption. Hofstede’s cultural dimensions have been used to predict technology adoption for other computer technologies, mobile phones, and mobile commerce. While the United States and China have comparable masculinity (MAS) and uncertainty avoidance (UAI) scores, differences are found in their individualism (IDV), power distance (PDI), long-term orientation (LTO), and indulgence (IND) scores (Hofstede Insights, n.d.). The United States is an individualistic culture, whereas China is a collectivist culture. Additionally, China scores high on acceptance of power differentials in society (PDI) and has a longer-term outlook that makes individuals more adaptable and pragmatic, supporting structures like the government that provide stability (LTO). Finally, individuals in the United States tend to be more indulgent, while individuals in China show more restraint (IND). While IND scores have not been strongly tied to technology adoption trends, differences in IDV, PDI, and LTO scores could drive earlier BCI adoption in China.

One of the potential barriers to the adoption of BCIs identified by U.S. military experts was distrust by service members (Binnendijk et al., Reference Binnendijk, Marler and Bartels2020). In civilian populations, 69% of U.S. respondents to a Pew Research Center survey on human enhancements said they are worried by the idea of BCI technologies, and 66% of those surveyed claimed they would not want to use BCI technologies to enhance their brain (Funk et al., Reference Funk, Kennedy and Sciupac2016). This survey reported similar results for other human enhancements like synthetic blood for improved stamina and gene editing to reduce disease risk. This wariness is predicted by Hofstede’s cultural scores. For mobile devices, it has been shown that during the development of new technologies, cultures similar to Chinese culture (low IDV, high LTO) see more rapid widespread adoption, and cultures like American culture (high IDV, low LTO) will lag behind in adoption (Lee et al., Reference Lee, Trimi and Kim2013). These findings could generalize to other information technologies, and more broadly to neurotechnologies.

A survey was conducted with American and Chinese respondents to determine their attitudes toward big data technologies, with hypotheses driven by Hofstede’s cultural framework (LaBrie et al., 2017). While this survey did not address BCI technologies directly, it offers some key principles on U.S. attitudes toward new technologies based on cultural scores. Additionally, BCI technologies will likely use components of big data technologies like machine learning and dimensionality reduction, so they could be considered a nascent big data technology (Frégnac, 2017). U.S. respondents were less likely than Chinese respondents to approve of technologies that involved data collection from individuals, likely because they place a higher value on individual identity and privacy, as reflected in their high IDV scores in Hofstede’s framework (LaBrie et al., 2017). U.S. respondents were also strongly averse to the use of big data analytics by the government, whereas Chinese respondents were mostly favorable to government use. U.S. respondents were more favorable toward big data analytics use than their Chinese counterparts only when data could be anonymized and used by businesses to improve performance. BCI technologies by necessity collect data from individuals and can even affect brain activity, which is highly tied to identity and privacy. High individualism and lower long-term orientation make technologies that collect individual data and technology use by the government more suspect to U.S. respondents. A lower emphasis on individualism could lower barriers to BCI technology adoption in China. Additionally, the high LTO scores of Chinese respondents point to higher acceptance of government use of technologies that collect personal data, like BCI, since high LTO scores have been suggested to translate into greater government support.

U.S. individuals are likely to put a higher value on the perceived usefulness of a technology when deciding whether to adopt it, while Chinese individuals are more influenced by subjective norms or imitation of peers (Srite, 2006). U.S. individuals may perceive BCI technologies as less useful, especially in the forms currently available on the market. While noninvasive EEG headsets are prone to data errors and poor accuracy, these concerns may be less important to Chinese individuals than to American individuals, especially if the technology is promoted by the government, authorities, or peers. These values are reflected in high PDI, high LTO, and low IDV scores.

Hofstede’s cultural dimensions have been used for other technologies to demonstrate that Chinese citizens have fewer reservations about emerging technologies that collect individual data, even when used by the government. In contrast, U.S. individuals are wary of human enhancement technologies like BCIs, and these technologies would need to demonstrate high usefulness to overcome initial distrust. A U.S. Army–funded report suggested that media and cultural images of BCI technologies could be altered to include “more accurate depiction of technology and its applications” to change public perceptions and wariness (Emanuel et al., 2019). In the same report, the authors also suggest that commercial development and dissemination of BCIs will drive the military’s ability to use BCI technologies. Because China can mandate technology use and because its citizens are more likely to approve of BCI technologies, sociocultural norms favor earlier adoption of BCIs in China than in the United States.

The final indicator that supports earlier adoption of BCIs in China is that country’s investment in research monkeys. Research monkeys are a vital resource for the development of BCIs of all types, but especially for any invasive BCI technologies. They are robust, novel indicators of whether invasive BCI technologies will be developed for both clinical and nonclinical use, since any BCI that writes to or changes brain activity likely involves R&D using research monkeys (nonhuman primates).

China has a significant material advantage over the United States in the size of its research monkey colonies. It has a larger overall supply of research monkeys, which will aid the longevity of its BCI R&D programs. In 2017, the United States used 75,000 monkeys for biomedical research purposes (Grimm, 2018). Around 60% to 80% of those research monkeys were imported from China (Boggan, 2021; Newburger, 2019). This is because U.S. colonies of research monkeys are nearly an order of magnitude smaller than those in China. One of the lead scientists of the China Brain Project, Mu-Ming Poo, reported that there are nearly 300,000 research monkeys in dedicated breeding colonies in China compared to around 40,000 in the United States (Animal and Plant Health Inspection Service, 2021; Poo, 2016). Monkeys used in research, such as rhesus macaques, are native to China; this fact, combined with a focus on enlarging research monkey colonies in the China Brain Project, gives China a huge advantage over the United States. In fact, in 2020, China stopped exports of research monkeys because of the COVID-19 pandemic (Zhang, 2020). This has hampered some biomedical research efforts, including COVID-19 vaccine trials, in the United States.

The ease of access to research monkeys is one of China’s strategies to drive acquisition of foreign research talent. In contrast to both the United States and China, the EU has severely limited the use of monkeys in research, with only 6,000 monkeys used in 2011 (SCHEER, 2017). The cultural norms driving EU primate research decisions illustrate how nonmaterial ideas can directly impact R&D. China has begun to attract researchers who use monkeys “by offering fully equipped labs with state-of-the-art technology, competitive salaries, ample funding for primate studies, and co-appointments at Chinese institutions for European and American investigators” (Zimmer, 2018). China’s investment in its research monkey colonies for biomedical research and in attracting research talent demonstrates a long-term investment in using research monkeys for its stated research goals of developing brain-machine intelligence and technologies.

Additionally, China has demonstrated a willingness to limit the supply of research monkeys to the United States. While research monkeys are not necessary for developing noninvasive, read-only BCIs, China’s ability to develop advanced BCI technologies will be enabled by its investment in research monkeys. Research monkeys therefore serve as an indicator of invasive BCI adoption.

BCI adoption indicators that address the broader sociocultural and governmental context necessary to encourage adoption favor an earlier adoption of BCI technologies by China. The collectivist culture of China leads to fewer concerns about data privacy and wider acceptance of government use of technologies like BCIs that collect individual data. The Chinese government has a more coordinated emphasis on developing and disseminating BCI technologies than the United States as well. This includes an emphasis on developing and maintaining research monkey colonies as a vital resource for biotechnology research generally and for testing BCIs before human trials specifically. While the R&D capability of China for developing BCI is not currently on a par with the United States, these factors affecting the likelihood of individuals adopting BCIs (whether elective or mandated) favor earlier adoption in China.

Current BCI use in both nations supports the hypothesis that China will adopt BCI technologies before the United States, supporting H2

Two hypotheses have been presented here supporting the earlier widespread adoption of BCIs in either the United States or China. If BCI adoption is mostly determined by indicators that address R&D capability, the United States will be the first adopter. However, if BCI adoption is mostly determined by indicators that address government structure and sociocultural norms, China will be the first adopter. To determine which of these hypotheses is better supported, early indicators of BCI use in commercial and military settings in both countries were identified and assessed.

Currently, BCIs are available on the market for both clinical and commercial applications. However, their use is not widespread in either the United States or China. Here, current BCI use is taken to be a dependent variable, or a proxy, for later widespread BCI adoption. We assume that BCI use will continue to increase rapidly in countries that currently have higher use rates. This assumption is reasonable and is built into common technology adoption models; namely, early users can have a large effect on technology adoption both by driving greater awareness of the innovativeness of the technology and by promoting imitation among peers (Atkin et al., 2015; Calantone et al., 2006; Lee et al., 2013). Both the innovation and imitation factors are driven by early users, and they are prerequisites for widespread technology adoption.
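The innovation and imitation effects described above are often formalized with a Bass-type diffusion model. The following minimal Python sketch illustrates that general idea only; it is not taken from the cited studies. The function name `bass_adoption` and all parameter values (`p` for innovation, `q` for imitation, `market_size`) are hypothetical and are not estimates for BCIs in either country.

```python
def bass_adoption(p, q, market_size, periods):
    """Return cumulative adopters per period under a discrete-time Bass model.

    p: innovation coefficient (adoption independent of prior adopters)
    q: imitation coefficient (adoption driven by existing adopters)
    All values passed in below are illustrative assumptions, not data.
    """
    cumulative = 0.0
    history = []
    for _ in range(periods):
        remaining = market_size - cumulative
        # Adoption rate grows with the share of the market that has already adopted.
        new_adopters = (p + q * cumulative / market_size) * remaining
        cumulative += new_adopters
        history.append(cumulative)
    return history


# Two hypothetical populations that differ only in how strongly peers are imitated.
low_imitation = bass_adoption(p=0.03, q=0.2, market_size=1000, periods=10)
high_imitation = bass_adoption(p=0.03, q=0.5, market_size=1000, periods=10)
```

In this sketch both populations start with the same number of early users, but the population with the larger imitation coefficient accumulates adopters faster in later periods, which is the mechanism the proxy assumption relies on.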

Early reports of BCI use support the hypothesis that China will be the first adopter of BCI technologies in both the commercial and military sectors. There are media reports of mandatory BCI use by companies in China, whereas there have not been similar reports in the United States. Both the United States and China have had noninvasive EEG headsets with read capabilities used in school settings, usually in pilot studies for devices designed to measure focus and attention (Johnson, 2017; Shen, 2019). However, Chinese state-owned companies that run electricity/power plants and train operations have already reported using this same kind of headset to monitor workers’ attention or sleep/awake states (Chen, 2018). This application is also advertised by companies operating in the United States, but it is not known whether any commercial entities are using them (EMOTIV, n.d.). While the efficacy of these headsets is potentially low because of the difficulties of interpreting brain activity from EEG, this signals that Chinese state-owned companies are more likely to use these types of devices to monitor workers, potentially driving BCI adoption for commercial purposes (Winick, 2018).

Conclusions

Though the United States has spent more money on its brain project, started its brain project earlier, and has a more robust innovation system that has led to better R&D capabilities in both the private and public sectors, China is more likely to be the first adopter of BCI technologies in both the commercial and military sectors because of its government structure, sociocultural norms, and greater alignment of brain project goals with military goals. Its coordinated national focus has driven investment in brain-machine intelligence and BCI technologies. While the U.S. military has also indicated high interest in human-machine teaming and the United States has invested a large sum of money in brain-related research, there is a disconnect between the stated military goals for brain research and the basic research goals driving the U.S. BRAIN Initiative. Additionally, the cultural values of the United States, including an emphasis on individual identity and a distrust of new technologies that have not proven their usefulness, will hinder BCI adoption both commercially and militarily.

China’s early adoption of BCIs could have important implications for U.S. national security. These emerging technologies, though nascent, may give China a military advantage once matured, through cognitive enhancement of warfighters and improved human-machine teaming. Additionally, China’s native supply of research monkeys, coupled with the lack of an ideational drive away from primate research, will lower barriers to developing commercially and militarily viable invasive BCIs that can read and write brain activity with better accuracy and precision.

Security risks also exist for commercial applications of BCIs. If China is the most viable place to produce and market BCI technologies, it may have a large influence on the supply of BCIs that will eventually be used in the United States and other countries. This could lead to privacy issues for very personal data—the activity of a human brain and interpretations of that activity that give insight into mental state and mood. Finally, being a first mover on BCI technology may allow China to set ethical norms for BCI use. Understanding the brain to treat disease is a noble cause and should be pursued. However, the technologies enabled by this heavy investment in brain research have clear dual-use capabilities that will shape society and warfare.

The effects described here for the specific relationship between China and the United States reflect the broader reality of the impact of BCIs and neurotechnologies generally on the international security landscape. Early innovators and adopters of BCIs may have the opportunity to set international norms for their use in both civilian and military contexts for human enhancement. BCIs have both offensive and defensive capabilities in military contexts, while also possessing clear clinical and therapeutic uses that will promote social good, complicating their categorization and treatment in the international community. Existing international treaties or conventions on weapons do not cover neurotechnologies, and it is unclear whether existing conventions could neatly and efficiently do so. It will be necessary for both nations and the international community at large to grapple with the ethical, legal, and social implications of BCIs as they begin to see widespread use by civilians and military personnel.