1. Introduction

Stories are a programming language for people. Whether an individual is curled up in an armchair devouring her favorite novel, a group of students scribbles notes while attending an intriguing lecture, the board of a large corporation considers the report of its CEO, or a nation is captivated by the President’s State of the Union address, a narrative is unveiled in each situation that can change the emotional state, opinions, attitudes, and beliefs of its audience. Social psychologist Jonathan Haidt recently captured the idea, observing, “The human mind is a story processor, not a logic processor” (Haidt, 2012), and the public relations industry has long understood the ability of stories to change people’s minds, as well as the inherent connection between narrative and identity embodied in the idea of a strategic narrative (Bonchek, 2016).

This idea, that stories program people, suggests that narratives can be incredibly powerful. Indeed, historian Yuval Noah Harari notes that,

“Telling effective stories is not easy. The difficulty lies not in telling the story, but in convincing everyone else to believe it. Much of history revolves around this question: how does one convince millions of people to believe particular stories about gods, or nations, or limited liability companies? Yet when it succeeds, it gives Sapiens immense power, because it enables millions of strangers to cooperate and work towards common goals. Just try to imagine how difficult it would have been to create states, or churches, or legal systems if we could speak only about things that really exist, such as rivers, trees and lions.” (Harari, 2014)

Simply put, stories are not only entertaining, they are powerful, deserving our most careful attention and finest critical analysis. This paper presents new tools to help in this effort.

Recently, technologies have been employed to both help engineer (Isaak and Hanna, 2018) and disseminate (Congress of the United States of America, 2021) narratives designed to manipulate people’s behavior. As such technologies become the norm in our society, we need other technologies to better understand the nature of narrative and how it impacts human behavior. A first step towards such understanding would be software agents capable of culling through the mass of stories available today and automatically gleaning central characteristics of these narratives. These characteristics could then be studied for their impact on human response.

In his influential book Distant Reading (Moretti, 2013), Franco Moretti claims that in order for us to better understand literature as a whole, we should change our focus from reading each story individually to information extraction tools that can aid us in analyzing the content of literature en masse, a stance that has received both support and criticism among the academic community. The reason for such analysis tools is simply that there are more published books than any one person or small group of people could ever read. According to Google’s book search algorithm, about 130 million books had been published as of 2010 (Taycher, 2010). There are many natural language processing (NLP) tools that aid in the evaluation of text, such as dependency parsing (Wang, Huang, and Tu, 2019; He and Choi, 2020; Fernández-González and Gómez-Rodríguez, 2020; Yang et al., 2020), named entity recognition (NER) (Shen et al., 2017; Dernoncourt, Lee, and Szolovits, 2017; Li et al., 2020), coreference resolution (Kantor and Globerson, 2019; Joshi et al., 2019; Wu et al., 2020), topic modeling (Barde and Bainwad, 2017), and sentiment analysis (Soleymani et al., 2017; Zhang et al., 2018), but there is a need for readily available tools that analyze or extract document-comprehensive literary elements, such as plot, chronology, and location mapping. This paper focuses on plot-relevant global aspects of narrative, exploring what kinds of information about plot might be automatically or semi-automatically extractable one day.

Plot can be informally described as the causal interaction of key elements and events in a story that move the story from its beginning to its end. (We provide a more complete definition of plot in Section 2.1.) Currently, in order to do any plot analysis of a novel, news story, historical account, or any other form of narrative where plot is present, the text must be read by a human who then performs analyses. For a common-length novel of about 100,000 words and an average reading speed of 200 words a minute, one novel can take over 8 hours to read. Studying plot as an abstraction in general, then, would require analyzing many texts, multiplying this requisite minimum of 8 hours by the number of books that need to be read. One motivation for this research is to drastically reduce this time by automating the extraction of the plot from text where plot exists, such as novels, and output it in a form that is easy to understand visually and usable in other analytical software and machine learning systems. Although we do not yet attain full automation of this process in this research due to the limitations of current NLP technology, our research lays groundwork for when the required technology improves. While this research can be applicable to other media where a narrative exists, such as news stories and historical accounts, fiction offers a flexible medium with both rich and diverse plots as well as a variety of formats, from microfiction to multi-volume epics. Our work here focuses on the detection and extraction of plot-relevant, global features from short stories and scripts, due to the tractability and ability of these story formats to illuminate key concepts. These features include assessment of entity importance, scene segmentation, entity tracking, narrative structures, and more. We then visualize this extracted content to provide at-a-glance insight into these global features of the stories.

In this article, we present two visualizations of the narrative of the story. Following a brief background explanation in Section 2, we introduce The Scatter Plot of Entities in Section 3 which visualizes the introduction of entities (actors, objects, and locations) within the story and displays them on a scatter plot according to their continued appearance in narrative order. We cluster these entities to perform scene segmentation and use trends in the scatter plot to detect which entities may be influential in the story. These entities can then be used as input into the next visualization. In Section 4, we introduce The Narrative Flow Graph which uses the story entities and locations to build a graph structure representing the flow of entities through scenes in the story. Scenes act as vertices of the graph, and the entities become the edges connecting the scenes from the beginning of the story to the end, creating a graph representation of the syuzhet of the story. We finish by discussing applications of this research in Section 5 and future work in Section 6.

2. History and related work

2.1. On plot and narrative

The description of plot we give in the introduction is insufficient for complex analysis and extraction because it does not detail what needs to be extracted. The interacting elements that progress the story from beginning to end must be defined.

Plot and narrative structure are so closely linked that the idea of plot brings to mind almost algorithmic structures or steps that are laid in order. An example of such a structure is as follows: exposition, inciting incident, rising action, climax, falling action, denouement, and resolution. Such a delineation of plot is commonly called Freytag’s Pyramid and is used to describe the narrative structure of classical epics and dramas (Freytag, 1863). However, not all stories follow this structure. In addition to Freytag’s Pyramid, there are the Fichtean Curve, Hero’s Journey, In Medias Res, 3-act, Seven Point, and more. If this type of structure labeling is necessary for plot or to be plot itself, there needs to be a universal structure, some way to define a structure that can be applied to all types of plot. Identifying such a structure may be difficult.

Russian Formalist literary researchers and critics sought to understand this structure by breaking down the narrative to smaller thematic elements. Vladimir Propp delved into Russian folktales to investigate the commonalities between them (Propp, 1968). His idea was to separate the theory from the specifics and assign labels to the different forms the events and characters in a story can take, resulting in what came to be known as the “Thirty-one Narratemes.” Boris Tomashevsky wrote about the microstructure of a story and how it can be broken down into what are very similar to Propp’s narratemes: thematic sections of the story, or events, that follow specific forms (Tomashevsky et al., 1965). Alexander Veselovsky spoke of the “motif,” or the “simplest narrative unit” of a story, which combines together to create the themes of a tale (Veselovsky, 1894/2015).

In an expansion on Propp and Veselovsky and inspired by other folklorists like Antti Aarne, American folklorist Stith Thompson developed the Motif-Index of Folk-Literature, six volumes that include thousands of commonly occurring (and some rarely occurring) event types and story elements in folktales (Thompson, 1989). Clearly, as more investigation is done into the thematic elements of story, the number of those elements keeps increasing. Unless the scope is narrowed, identifying every thematic element in a story is a monumental task, so the structure must be broken down further.

In part six of Poetics (Aristotle and Butcher, 335 BCE/1961), Aristotle claims that one cannot have plot without action. This notion comes from the Tragedies and other stage plays of the period in which visible action is needed to understand the plot, and the lack of action means the absence of plot. The Aristotelian notion of action-driven story has held for many centuries, but it alone is insufficient to represent the complexities of plot, especially in literature. E. M. Forster theorizes that plot is more than the Aristotelian notion of action-driven story. He states that what is known and not known as well as the emotions that lead to action are just as vital as the actions themselves. In addition, the causal element is at the core of plot. In a famous example, Forster states, “A plot is also a narrative of events, the emphasis falling on causality. ‘The king died and then the queen died’, is a story. ‘The king died, and then the queen died of grief’ is a plot” (Forster, 1927). In The Plot of Tom Jones (Crane, 1950), R. S. Crane elaborates on Forster’s idea and criticizes Aristotle, defining plot as the synthesis of action, character, and thought that may take on different structures depending on the author’s use and emphasis on any of these three aspects.

Following the theories and discussions of the above literary analysts, we select five elements of narrative that can be detected and extracted and use them for our definition of plot. We then assess how well the output of the two extraction methods detailed in this article fulfills this definition.

- Characters: entities of volition within a story
- Events: actions taken in the story
- Information: what is known and how that knowledge spreads between characters
- Causation: the manner in which one event leads to another
- Structure: the linking of events from the beginning of the story to the end

Due to the varying definitions of narrative used among modern literary analysts, in this article when narrative is mentioned, we mean how the source text tells the underlying story (the teller’s choice of scenes, ordering, character inclusion and emphasis, and more). With the focus of this research on plot and narrative structure, we define “narrative flow” as how the events and scenes in that narrative progress from one to the next, a representation of the structure of the syuzhet. This definition of narrative flow is different from the flow of how the narrative sounds and feels when read or spoken.

2.2. Content extraction of narrative fiction

Story viewed through an Aristotelian action-driven lens may be insufficient to fully encapsulate the complexity of plot. Even so, physical actions are still a major aspect of plot, and for most novels, they are the dominant element. Given the connection between events and plot, plot extraction can be seen as a form of event extraction modified to fit the definition of plot.

The greatest breadth of event extraction research has not fallen upon the domain of fiction narrative but involves biomedical text (Yakushiji et al., 2001; Riedel and McCallum, 2011; Bjorne and Salakoski, 2011), news (Vargas-Vera and Celjuska, 2004; Naughton, Kushmerick, and Carthy, 2006; Wevers, Kostkan, and Nielbo, 2021), and historical text (Chieu and Lee, 2004; Segers et al., 2011), to name a few. Each of these genres as well as others require a more topical approach to event extraction where specific trigger or anchor words commonly found in a particular topical domain help identify the events of the text. Such an approach is not as feasible for fiction literature due to the plethora of topics, themes, and genres. Non-topical event extraction has been used in studies like those of Alan Ritter et al., to extract events of general interest from Twitter using machine learning to recognize event structure (Ritter, Etzioni, and Clark, 2012) and Valenzuela-Escarcega et al., in biomedical text using rule-based algorithms that detect events by locating sentences that have the correct grammatical or semantic representation of the desired event (Valenzuela-Escarcega et al., 2015). This non-topical approach removes the need for trigger words and allows event extraction to be applied to an open range of text. However, due to the free-form nature of creative writing, events will usually not follow a set grammatical or semantic rule or structure that can be detected and extracted, making such methods a poor match for creative fiction text.

Despite the emphasis of event extraction in the non-literary domain, event detection and extraction on fiction and other literary narrative is an active field of research that is gaining momentum. In Jan Christoph Meister’s work, Computing Action: A Narratological Approach (Meister, 2003), he develops an event markup and parsing system for literary text. The EventParser is software designed to facilitate human annotation of events. The accompanying EpiTest software then links these events into what he calls Episodes that detail the action in the narrative. Nils Reiter in his PhD thesis follows the school of Propp in claiming that “Events happen in a certain order and this order is similar across tales.” (Reiter, 2014, p. 63) The thesis proposes that structural similarity in stories can be detected through the similarity of event archetypes and in the sequential event ordering of those similar events. Graph-based representations of events in the narrative are used as part of the assessment and have seen continual use as interest in this field grows. Adolfo and Ong build a Story World Graph where vertices are both events and characters in the story. Edges connect characters to actions or coreferences and connect the events together in sequential narrative order (Adolfo and Ong, 2019). Sims et al. combine the classic use of trigger words with neural networks to automate the identification of verbs that may denote the beginning of events (Sims, Park, and Bamman, 2019). Vauth et al. detect events through the actions/verbs that define them and then classify those events by their eventfulness to assign scores that can be plotted. The resulting line plots provide a visualization of the eventfulness of the story where peak maxima denote the most eventful parts of the story (Vauth et al., 2021).

As an extension of event extraction, scene segmentation of fiction text has recently grown as a research interest. In a 2021 paper that acts as a rallying call to researchers to advance this field, Zehe et al. highlight the difficulties of the task and how available text segmentation and partitioning tools are ill-fit for it and provide poor baselines: “Additionally, we show that established baselines for text segmentation fail to capture the notion of a narrative scene, necessitating the development of new methods for this task” (Zehe et al., 2021a, p. 3168). Even widely used language models, such as BERT (Devlin et al., 2019), perform rather poorly without extensive research on how they can be applied to this unique task. In the Shared Task on Scene Segmentation at KONVENS 2021 (Zehe et al., 2021b), five research teams attempt to tackle this novel task. Three of these teams utilize BERT with widely varying success (Hatzel and Biemann, 2021; Gombert, 2021; Kurfalı and Wirén, 2021). Other teams use feature vectors (Barth and Donicke, 2021) or detect changes in context (Schneider, Barz, and Denzler, 2021) with similar trouble, showing the immense difficulty of this task.

Although events are a dominant feature of plot, they are not the only extractable feature. Sentiment or valence of text is commonly extracted to provide a visualization of the story through the length of text (Nalisnick and Baird, 2013; Jacobs, 2019; Somasundaran, Chen, and Flor, 2020), but creating similarly informative visuals of more complex story content extracted from a fiction text is a far more difficult task. One of the earliest attempts at non-event content extraction applied to the fiction domain is a paper written by Sharon Givon of the University of Edinburgh in which she describes the extraction of central characters and their social relationships (Givon, 2006). These social network relationships are often shown using graphs where each character is a vertex of the graph, and where a relationship or social connection is present between two characters, an edge is created between the corresponding vertices. Other research that involves the extraction of characters’ relationships and social network interactions in fiction has followed (Elson, Dames, and McKeown, 2010; Agarwal, Kotalwar, and Rambow, 2013; Dekker, Kuhn, and van Erp, 2018). There has also been ontology creation that shows categorical definitions and relationships between concepts in fiction text (Goh et al., 2011) and creation of graph structures that map explicit relationships between discussion topics (basic narrative elements) in the United States Congressional Record (Ash, Gauthier, and Widmer, 2022), but little research has been done pertaining to the automated extraction of fiction plot.

The extraction of plot elements in a story has been attempted by Hajime Murai, who researches the behavioral and emotional aspects of characters in microfiction in an attempt to use the characters’ vocabulary and behavior to model the plot structure with the goal of developing an automated plot extractor (Murai, 2014, 2017). Later he attempts to extract plot from detective comics (Murai, 2020). This method of plot extraction is based on theme and is similar to how Vladimir Propp views plot. Murai’s purpose is to find transition patterns from one thematic element to another and display those patterns in a relational graph structure similar to the research done in social network extraction. Goyal et al. in their AESOP system (Goyal, Riloff, and Iii, 2013) detect and isolate positive, negative, and mental statements in the text of a story and relate them by whether the following statements are motivation for, actualization of, or termination of the plot unit, following Lehnert’s definition of plot units (Lehnert, 1981). The statements are represented as vertices and the relations as edges in a graph structure, creating a visualization of the plot unit. AESOP is automated and shows partial success, but the authors state that the problem of plot extraction in fiction remains extremely difficult, and much more research is needed.

3. Narrative as a scatter plot of entities

As stated above in Section 2.2, visualizing a story as a plotting of measured values, such as using the sentiment of the text or in the aforementioned work of Vauth et al. (2021), is not a new concept. Here we take a novel approach by plotting the appearance of entities in the story to visualize the story content in a scatter plot and then cluster those entities in an attempt to isolate the individual scenes of the narrative.

3.1. Concept: scatter plot of entities explanation

The Scatter Plot of Entities visualizes the location of entities within the story by plotting them on a two-dimensional plane. We refer to the horizontal axis of this plane as the $x$-axis and the vertical axis as the $y$-axis, with each point in the plane denoted by an ordered pair, $(x,y)$.

The values of the $x$-axis represent the integer location of each token within the story, where a token is a contiguous string of characters between two spaces (or a space and a punctuation mark), mapping the entire text onto the set of tokens $T=\{0,1,2, \ldots,n\}$ for some integer $n$, the total number of tokens in the text. Similarly, the values on the $y$-axis represent an integer identifier for each unique entity of interest in the order they are introduced in the text. We consider the list of entity identifiers, $\Phi = \{0, 1, 2, \ldots, m\}$, where $m$ is the total number of entities of interest in the text, allowing us to plot points of interest as any point (token, entity) that specifies the tokens where each entity appears. Note that only non-negative, integer values are allowed to identify viable token-entity pairs. We discuss which entities are included in $\Phi$ in Section 3.2.

In this way, each $(x,y)$ coordinate pair represents a single appearance of an entity within the text. Each instance of the same entity has the same $y$-coordinate but a different $x$-coordinate depending on where within the text that specific instance appears, which can be identified by the location of the token representing that entity. For example, if the entity with index 7 of the ordered list $\Phi$ appears in the text as token 42, that creates a point on the Scatter Plot of Entities at the coordinate pair $(42,7)$. If that same entity appears again at token 89, another coordinate pair is created at point $(89,7)$. Multi-token entities are handled by replacing the multi-token entity with a single-token label during annotation and coreferencing. All alternative mentions of an entity, such as “Mary Jane Smith” also being called “Dr. Smith” or “Mary Jane,” are similarly coreferenced with the single-token label, for example “MaryJane.” See Sections A.1.1 and A.1.2 for more explanation. Such annotation work ensures that each mention of an entity in the text only creates a single coordinate on the Scatter Plot of Entities.

Given a list of entities of interest, $\Phi$, from a specific text, it should be apparent that the resulting Scatter Plot of Entities is unique. In principle, the converse is not true. For example, one could imagine a hypothetical situation in which two stories with the same number of tokens and the same number of entities of interest happen to generate identical scatter plots. Nevertheless, we note that such a situation would be extremely unlikely, and, in practice, the Scatter Plot of Entities does seem to offer a characteristic fingerprint of a given text.
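As an illustration of the mapping from text to $(x,y)$ pairs described in this section, the following minimal Python sketch may help. It assumes a text that has already been coreferenced to single-token labels and uses naive whitespace tokenization; the function names `assign_entity_ids` and `scatter_points` and the example sentence are hypothetical and are not taken from the system described in this article.

```python
def assign_entity_ids(tokens, entities_of_interest):
    """Give each entity of interest an integer id in order of first appearance."""
    ids = {}
    for tok in tokens:
        if tok in entities_of_interest and tok not in ids:
            ids[tok] = len(ids)  # 0 for the first entity seen, 1 for the next, ...
    return ids


def scatter_points(tokens, entity_ids):
    """Return one (x, y) pair per entity mention: x = token index, y = entity id."""
    return [(x, entity_ids[tok]) for x, tok in enumerate(tokens) if tok in entity_ids]


# Toy example; whitespace splitting stands in for a real tokenizer (assumption).
text = "MaryJane entered the lab . The lab was cold , so MaryJane left ."
tokens = text.split()
entity_ids = assign_entity_ids(tokens, {"MaryJane", "lab"})
points = scatter_points(tokens, entity_ids)
print(points)  # [(0, 0), (3, 1), (6, 1), (11, 0)]
```

Each entity keeps a fixed $y$ value while its $x$ values record where it is mentioned, so every token index carries at most one point.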

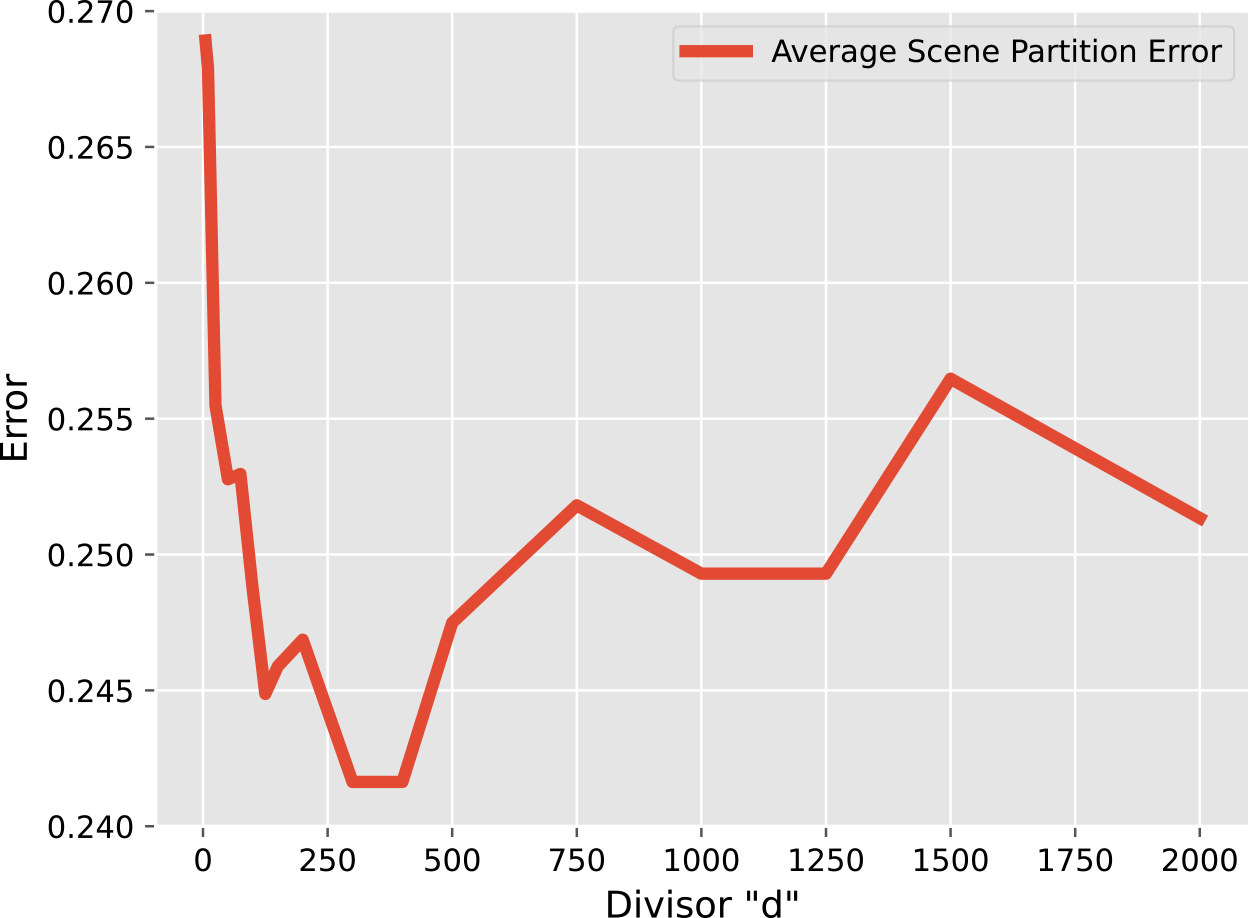

3.2. Methods: system outline and explanation

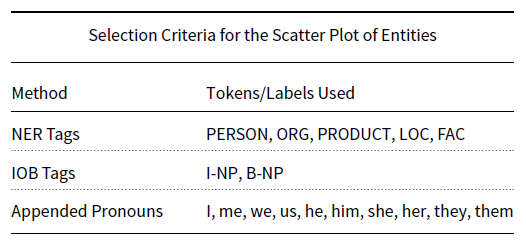

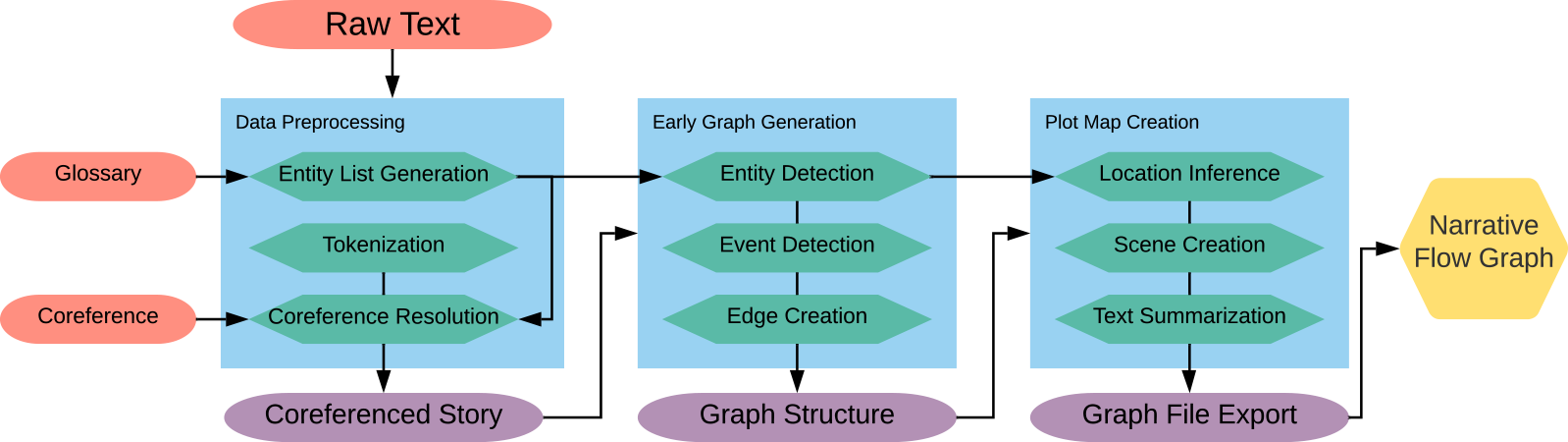

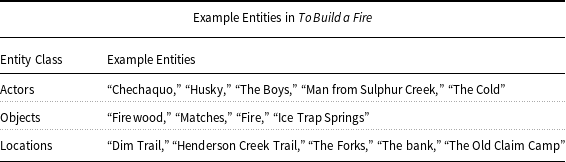

Figure 1 shows the diagram of the Scatter Plot of Entities. The input to the system is a plain-text document of the story. The system can perform without pre-modifications to the document, but for better and more informative results, some data preparation is necessary.

Figure 1. System diagram of the process for extracting the Scatter Plot of Entities.

3.2.1. Data preparation and preprocessing

The purpose of preprocessing is to create two ordered lists, one of all the tokens in the story and another of important entities that we want to detect in the story. These two lists become our $x$ and $y$ axes, respectively. We prepare the data by coreferencing the stories by hand to ensure that any mention of an entity as a pronoun or other moniker is recognized as that same entity within the text. For details on the coreferencing process and why we choose to do this by hand instead of using available NLP toolkits, please refer to Section A.1.2. We do, however, conduct experiments using a coreference resolution library for comparison. An NLP pipeline then handles tokenization, tagging parts of speech, and then running NER which identifies names of people, places, and organizations as well as dates and other text with specific formats. For the purposes of this research, we choose only from the list generated by NER those entity types that correlate to actors, objects, and locations in a text that could potentially have relevance to the plot. We append to this list objects and common pronouns that are missed by NER. A properly coreferenced story replaces most of these pronouns with what they reference, but we include them for situations in which there are entities without proper names or labels that can be coreferenced. The first-person pronouns "I" and "me" are particularly important in first-person narratives in which the speaker’s name is not given and thus cannot be coreferenced to something recognizable by NER. See Appendix B for the complete description of what types of entities are chosen.
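The entity-selection step described above can be sketched as follows. This is only an illustrative outline under stated assumptions: the NER output is represented as a plain dictionary rather than the result of a real NLP pipeline, the label names in `PLOT_RELEVANT_LABELS` are hypothetical placeholders in the style of common NER taggers (the actual selection is the one given in Appendix B), and "I" and "me" are kept as separate entries for simplicity.

```python
# Hypothetical label names; the article's actual choice of entity types
# is described in Appendix B and may differ.
PLOT_RELEVANT_LABELS = {"PERSON", "ORG", "GPE", "LOC", "FAC", "PRODUCT"}
# Pronouns appended by hand because NER does not tag them.
EXTRA_ENTITIES = {"I", "me"}


def build_entity_list(tokens, ner_tags):
    """Return the entities of interest in order of first appearance.

    tokens   -- single-token strings from the coreferenced text
    ner_tags -- dict mapping a token string to its NER label (assumed input)
    """
    ordered = {}  # dicts preserve insertion order, so this acts as an ordered set
    for tok in tokens:
        label = ner_tags.get(tok)
        if label in PLOT_RELEVANT_LABELS or tok in EXTRA_ENTITIES:
            ordered.setdefault(tok, len(ordered))
    return list(ordered)


tokens = "I met MaryJane in Paris on Monday . MaryJane greeted me .".split()
ner_tags = {"MaryJane": "PERSON", "Paris": "GPE", "Monday": "DATE"}
entities = build_entity_list(tokens, ner_tags)
print(entities)  # ['I', 'MaryJane', 'Paris', 'me']
```

In this toy input, "Monday" is recognized by NER but dropped because dates are not among the entity types treated as plot-relevant here.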

3.2.2. Entity plotting and activity

Once the system generates the list of entities, it locates them within the text and gives an $x$-coordinate corresponding to their narrative order location by token index and provides a $y$-coordinate according to the order of that entity’s first appearance within the text. We remove from the list those entities that appear only once or twice within the entirety of the text to reduce clutter and under the assumption that if it appears that infrequently, it is not as important to the narrative. We then plot these $(x,y)$ coordinates on a Cartesian plane. Every $y$-coordinate will have three or more points corresponding to how many times the associated entity appears in the text. Every $x$-coordinate has either one or no points depending on whether or not that specific token is equivalent to one of the entities in $\Phi$, creating gaps in the $x$-coordinates. Large gaps appear when the text uses wording that does not involve any of the entities we are tracking, enabling a concept of entity density, which we call entity activity, defined as a measure of how often entities appear in a section of the text. High entity activity means that a section of text has many appearances of entities, and low entity activity means there are few entity appearances in that section of text.
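The coordinate assignment described above can be sketched in a few lines of Python. This is an illustrative sketch only, not the implementation used in this study: the function name `entity_coordinates`, the assumption that each entity mention is a single token, and the choice to number the $y$-axis over only the retained entities are all assumptions made for the example.

```python
from collections import Counter

def entity_coordinates(tokens, entities, min_count=3):
    """Return (x, y) points and the entity -> y mapping.

    x is the token index of a mention; y is the rank of the entity's
    first appearance. Entities mentioned fewer than min_count times
    (i.e., only once or twice by default) are dropped.
    Assumes every mention is a single token (illustrative simplification).
    """
    counts = Counter(tok for tok in tokens if tok in entities)
    kept = {e for e, c in counts.items() if c >= min_count}
    order = {}    # entity -> y value, assigned at first appearance
    points = []
    for x, tok in enumerate(tokens):
        if tok in kept:
            y = order.setdefault(tok, len(order))
            points.append((x, y))
    return points, order

tokens = "Tom saw Ann . Tom met Ann . Bob left . Tom and Ann ran".split()
points, order = entity_coordinates(tokens, {"Tom", "Ann", "Bob"})
print(points)  # token positions paired with first-appearance ranks
```

In this toy input, "Bob" appears only once and is therefore removed, while every token index carries at most one point, matching the description of gaps along the $x$-axis.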

We do not quantify what level of entity activity is considered high or low because it changes depending on the text. For example, a story that naturally has a lot of entity mentions in the text only has high entity activity if there is a spike, meaning that even more entities are present in that section of text than usual. Low entity activity works the same way. A story with naturally many entity mentions may have low entity activity values that are higher than another story with fewer entity mentions overall. Those low entity activity values in the first text are still considered low in relation to that text even if they are higher than the entity activity values of a different story.

We can now create an entity activity line measuring frequency of entity appearance as the story progresses. We create this line by projecting all coordinates onto the $x$-axis and then generating a Gaussian curve for each entity centered at the $x$-coordinate of that entity, creating a distribution overlap of entity positions that are then summed together to create a density curve. The more entities that appear near each other in the text, the more overlap and the higher the value of the curve at that point. The standard deviation, or $\sigma$, of the Gaussian directly affects how smooth or noisy the activity line is. The smaller the $\sigma$ value, the steeper the fluctuations in the line, and thus, the more information that is present. The larger the $\sigma$ value, the smoother the line, and thus the less information that is present. See Figure 2 for an example illustration of the summation of Gaussian curves at different values of $\sigma$. Because this line helps determine which clustering is chosen as the best (explained in Section 3.2.3), we must first determine the best possible $\sigma$ value for our needs.
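A minimal sketch of this summation, for illustration only, is given below. The function name `activity_line`, the sampling of the curve at every integer token index, and the use of unit-area Gaussians are assumptions of the example rather than details confirmed by the method description.

```python
import math

def activity_line(entity_xs, n_tokens, sigma):
    """Sum one Gaussian per entity mention, sampled at each token index.

    entity_xs: token indices of entity mentions (the projected x-coordinates).
    sigma: standard deviation of every Gaussian, in tokens.
    Each Gaussian is normalized to unit area (an assumption of this sketch).
    """
    norm = 1.0 / (sigma * math.sqrt(2.0 * math.pi))
    line = []
    for t in range(n_tokens):
        total = 0.0
        for x in entity_xs:
            z = (t - x) / sigma
            total += norm * math.exp(-0.5 * z * z)
        line.append(total)
    return line

# Three nearby mentions overlap and raise the curve more than one isolated mention.
line = activity_line([10, 12, 14, 80], n_tokens=100, sigma=3.0)
print(line[12] > line[80])
```

The double loop is quadratic in the worst case; for long texts a convolution over a binned mention count would give the same curve more efficiently, but the direct form keeps the correspondence with the description explicit.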

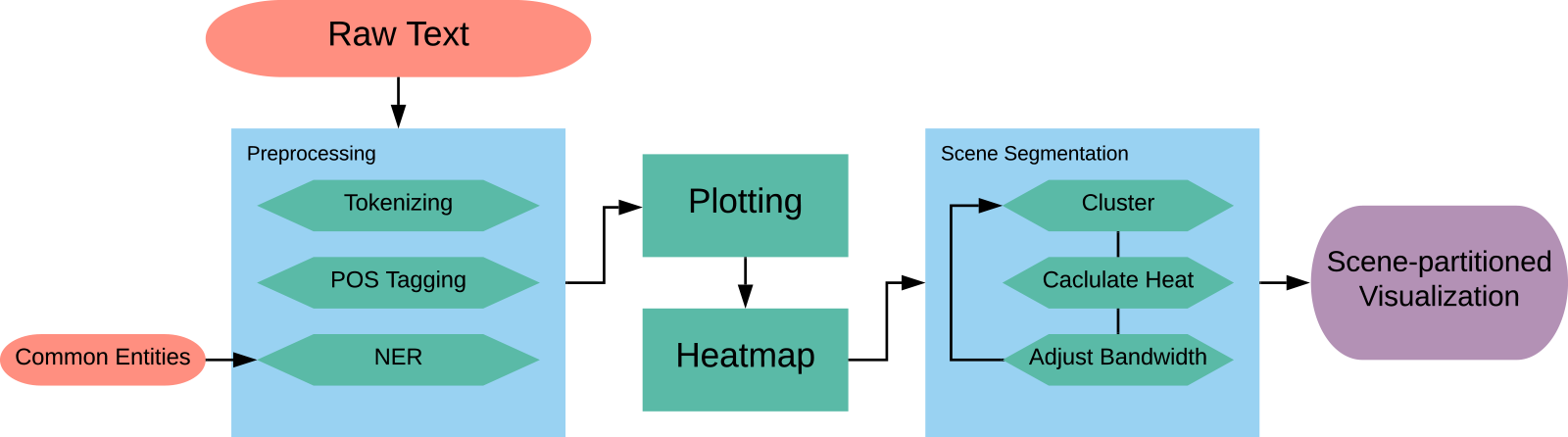

Figure 2. Example illustration of the summation of Gaussian curves. Large dots are the entity locations on the horizontal axis. The dotted curves are the individual Gaussian curves centered at the $x$-coordinates of the entities. The solid line is the summation of the Gaussian curves. Figures (a) through (c) show increases of the standard deviation, $\sigma$, and how it affects the smoothness of the curve.

At first, we sought a constant $\sigma$ value that is optimal for all stories, but initial tests showed that stories of different lengths need different $\sigma$ values. To determine what value for $\sigma$ is best, we test values of $\sigma = n/d$ where $d \in \{5..2000\}$ is the range of divisors we are testing and $n$ is the number of tokens in the story. Figure 3 shows the activity line for different values of $\sigma$ for Leiningen Versus the Ants (Stephenson, Reference Stephenson1972). We also translate the activity line to a heat map that shows entity activity level as a color gradient from blue (low activity) to yellow (high activity) for better human readability. The number in parentheses in the upper left corner of the activity line is the divisor, $d$. The greater the divisor, the smaller the $\sigma$ value, and in turn the thinner the Gaussian and noisier the activity line.

Figure 3. Entity activity lines and corresponding heat maps for Leiningen Versus the Ants (Stephenson, Reference Stephenson1972) for six different values of smoothing, from extremely wide (top plot) to very narrow (bottom plot) for the full length of the story (10050 tokens). This smoothing aspect is characterized by the size of the standard deviation of the Gaussian convolution kernel.

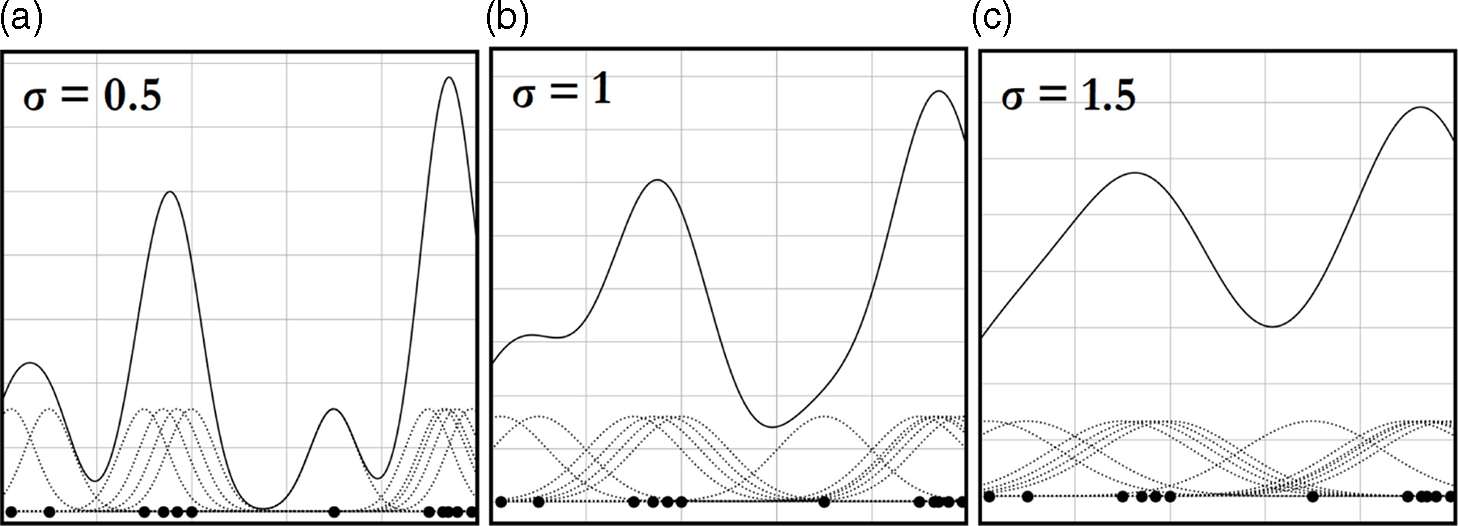

The divisor is simply a hyperparameter that must be chosen prior to computation, and the optimal value may change depending on what stories are used when running the system. We need a value for $d$ that provides sufficient information for the clustering algorithm to make informed decisions while at the same time not providing so much information that the data becomes too noisy to determine which clustering is best. Figure 4 reveals a trend where increasing $d$ improves the quality of clustering selection (and therefore scene partitioning) up until a point. After reaching this point, the activity line becomes too noisy, and so quality starts to drop; that is, the scene partitioning error increases. The $d$ value which provides the best selection quality (the lowest error) for the stories used in this research resides between the values of 300 and 400. In the end, we select $d=400$, making $\sigma = n/400$ with $n$ being the number of tokens in the story.
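As a worked instance, taking the 10050-token length reported for Leiningen Versus the Ants in the Figure 3 caption, the selected divisor gives

\begin{equation*} \sigma = \frac{n}{d} = \frac{10050}{400} = 25.125, \end{equation*}

so each entity mention in that story contributes a Gaussian with a standard deviation of roughly 25 tokens.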

Figure 4. Average error, over all eight stories in the corpus considered for this study, of scene partitioning as the divisor, $d$, that sets the standard deviation of the Gaussian convolution kernel increases. Lowest error occurs when this hyperparameter $d$ is between about 300 and 400.

3.2.3. Clustering and scene partitioning

Next, we cluster segments of the text into scenes based around entity activity. We use a single-dimensional mean shift algorithm variant for this purpose. Mean shift uses the density gradient of data coordinates to attract data points together, letting the data points climb the gradient slope to where the points are densest. Those points that converge on the same gradient peak are included in the same cluster. A bandwidth value sets the distance of attraction for the points, determining the number of clusters created. We cluster unidimensionally around the $x$-coordinate. The assumption motivating this method is that the more entities that are near each other in the text, the more these entities are interacting within the story, and scenes are therefore evident by the presence and interaction of these entities.

We use clustering instead of calculating local maxima of the activity line due to the nature of the curve’s creation producing many local maxima and minima, far more than there are scenes in the story. Using a mean shift clustering method, the entities converge into clusters centered on the largest grouping of nearby maxima. We calculate the borders between the clusters at half the distance between the nearest two entities in neighboring clusters, creating a clean division between clusters because they are clustered unidimensionally.
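To make the clustering and border computation concrete, the following is a minimal sketch of a one-dimensional mean shift with a flat (uniform) kernel. It is not the variant used in this study: the flat kernel, the rule that merges points whose converged modes lie within half a bandwidth of each other, and the names `mean_shift_1d` and `cluster_borders` are all assumptions made for illustration.

```python
def mean_shift_1d(xs, bandwidth, tol=1e-6, max_iter=500):
    """Cluster 1-D positions with a flat-kernel mean shift (illustrative).

    Each point repeatedly moves to the mean of all points within
    `bandwidth` of its current position until it stops moving.
    Returns a list of clusters, each a sorted list of positions.
    Requires a non-empty input.
    """
    xs = sorted(xs)
    modes = []
    for x in xs:
        m = float(x)
        for _ in range(max_iter):
            window = [p for p in xs if abs(p - m) <= bandwidth]
            new_m = sum(window) / len(window)
            if abs(new_m - m) < tol:
                break
            m = new_m
        modes.append(m)
    # Assumed merge rule: consecutive points share a cluster unless their
    # converged modes are more than half a bandwidth apart.
    clusters = [[xs[0]]]
    for prev_mode, mode, x in zip(modes, modes[1:], xs[1:]):
        if mode - prev_mode > bandwidth / 2:
            clusters.append([x])
        else:
            clusters[-1].append(x)
    return clusters

def cluster_borders(clusters):
    """Border = midpoint between the nearest two points of neighboring clusters."""
    return [(left[-1] + right[0]) / 2 for left, right in zip(clusters, clusters[1:])]

xs = [1, 2, 3, 20, 21, 22, 50, 51]
print(mean_shift_1d(xs, bandwidth=5))                    # three clusters
print(cluster_borders(mean_shift_1d(xs, bandwidth=5)))   # two borders
print(len(mean_shift_1d(xs, bandwidth=20)))              # fewer clusters
```

Widening the bandwidth from 5 to 20 merges the first two groups, which illustrates how the bandwidth controls the number of clusters and hence the number of scenes.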

The size of the bandwidth directly affects the number of clusters. Since clusters represent scenes, we iteratively adjust the bandwidth and run our mean shift algorithm, saving every unique clustering that gives us our desired number of scenes. We assume the number of scenes to be known a priori so that we may better assess how informative entity density is about the placement of scene boundaries without conflating the problem with another difficult task of determining scene existence. We must then determine which of these clusterings is the best for that story, and we use the entity activity line for this purpose. We have two hypotheses:

1. Since scenes are denoted by entity presence and interaction which results in high entity activity, scene transitions are represented by areas of local minima in the activity graph where entity appearance is not as frequent.

2. When scenes transition, the new scene must be set up, explaining to the reader who is involved and the setting of the scene. Involved entities are introduced quickly in the text at these locations, causing a small spike in entity activity, meaning scene transitions are denoted by areas of local maxima in the activity graph.

We test both these hypotheses, saving the best cluster orientation where the $x$-coordinates of the cluster partitions have the lowest average activity value from the activity line for the first hypothesis, and saving the best cluster orientation where the $x$-coordinates of the cluster partitions have the highest average activity value for the second hypothesis. This creates two possible scene partitions for the story.
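The selection step for the two hypotheses can be sketched as follows. This is an illustration under stated assumptions: each candidate clustering is represented only by its list of border positions, the activity line is a list sampled at every token index, and a border is read from the line at the nearest token index. The names `mean_border_activity` and `select_partitions` are hypothetical.

```python
def mean_border_activity(borders, activity):
    """Average activity-line value at the given border positions.

    Borders are rounded to the nearest token index and clamped to the
    valid range (an assumption of this sketch).
    """
    last = len(activity) - 1
    values = [activity[min(last, max(0, round(b)))] for b in borders]
    return sum(values) / len(values)

def select_partitions(candidates, activity):
    """Pick the low-activity and high-activity candidates.

    candidates: list of border lists, one per saved clustering, each
    yielding the desired number of scenes.
    Returns (low_choice, high_choice) for hypotheses 1 and 2.
    """
    low = min(candidates, key=lambda b: mean_border_activity(b, activity))
    high = max(candidates, key=lambda b: mean_border_activity(b, activity))
    return low, high

activity = [0.1, 0.5, 0.9, 0.4, 0.2, 0.6, 0.8, 0.3]
candidates = [[2, 6], [4, 7], [3, 5]]
low, high = select_partitions(candidates, activity)
print(low, high)
```

Here the candidate whose borders sit in the troughs of the toy activity line is selected for the first hypothesis, and the one whose borders sit on the peaks is selected for the second.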

3.2.4. Ground truth annotation

To create a ground truth comparison for scene partitioning, two human annotators per source text read and select a token location that can then be matched to an $x$-coordinate for each scene transition (the division between the scenes). We have two annotators per source text for quality control purposes to ensure the validity of the annotation. See Section A.2.2 for more explanation on the annotation process. We store these $x$-coordinates in an array that can then be compared with the cluster boundaries to see how well the clustering partitions the source text into scenes.

There is no need for ground truth annotation for the scatter plot itself. Correctness for the scatter plot (i.e., the proper location of each individual $(x,y)$ coordinate) is easily measurable by finding a token representing an entity in the text and checking whether there is an associated point in the scatter plot. The accuracy of the scatter plot may seem obvious so long as the system itself plots each entity coordinate correctly. Indeed, when comparing each token in the source text where an entity appears in the output scatter plot, the coordinate matches 100% of the time, so we spend no time in the results discussing the accuracy of plotting the coordinates.

3.3. Results

We select eight stories to test the scene partitioning of the Scatter Plot of Entities.

Three professional short stories: Leiningen Versus the Ants (Stephenson, Reference Stephenson1972), A Sound of Thunder (Bradbury, Reference Bradbury1952/2016), and To Build a Fire (London, Reference London1902/2007).

Three amateur short stories: “Falling” (DeBuse, Reference DeBuse2013), “Observer 1: A Warm Home” (DeBuse, Reference DeBuse2012a), and “Observer 4: Legends” (DeBuse, Reference DeBuse2012b).

Two scripts: Hamlet (Shakespeare et al., Reference Shakespeare, Mowat, Werstine, Poston and Niles1600/2022) and The Lion King (Allers and Minkoff, Reference Allers and Minkoff1994). Included in the text for both scripts is the speaker markup (who is saying each line) and scene markup (when a new scene begins) due to these structural components being part of the raw text of the script; however, the Scatter Plot of Entities does not use scene markers to determine scene partitions, and speaker markup is treated just like any other entity mention in the text.

See Table 1 for additional details on each story, such as author, year, length, and genre.

Table 1. Information on the author, year, genre, and length in tokens for each story.

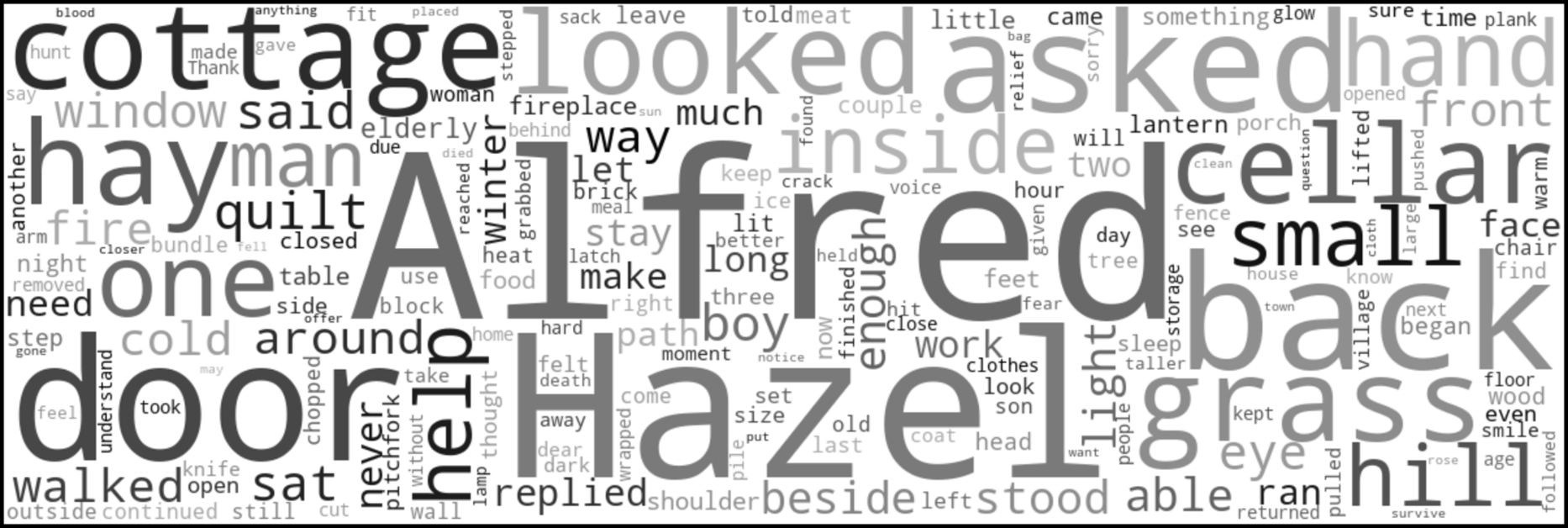

3.3.1. The output

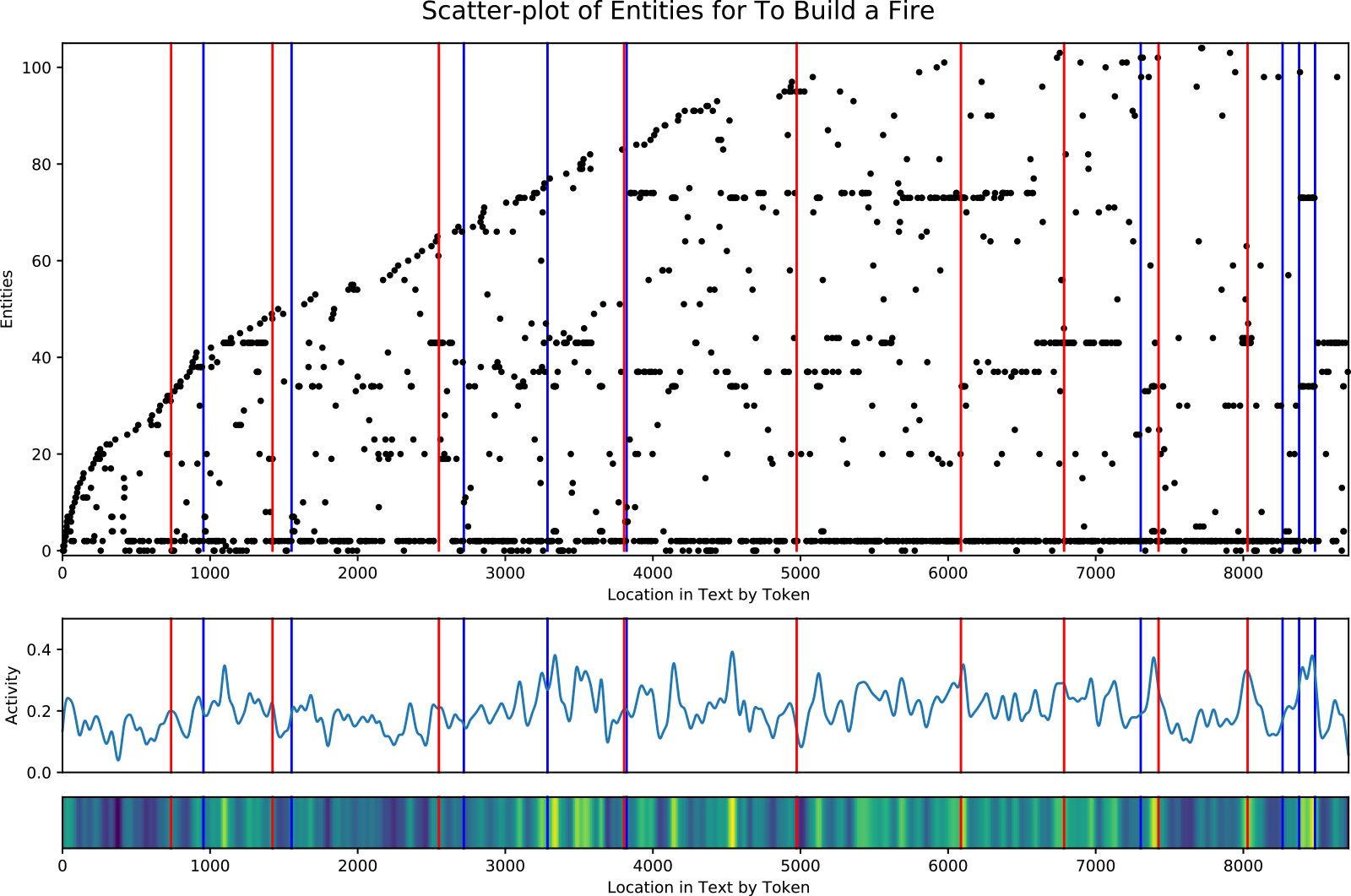

Figure 5 shows the output of the Scatter Plot of Entities for the short story, To Build a Fire (London, Reference London1902/2007). Two visuals are included in the output, with the entity scatter plot on top and the entity activity on bottom. On the scatter plot, the $x$-axis is the narrative order of tokens in the source text, so it represents the location of each entity in the text. Each tick on the $y$-axis represents a unique entity in the order that entity is first introduced in the text. Like the scatter plot, the entity activity’s $x$-axis is the location within the text by token. The $y$-axis is the entity activity determined by the summation of the overlapping Gaussian curves. Included just below the entity activity plot is the corresponding heat map where blue is low entity activity and yellow is high activity. The red lines running vertically through each plot indicate a partition of the tokens into clusters, where the system believes the scene transitions are located. The blue lines are the ground truth transitions or borders between the scenes.

Figure 5. Entity scatter plot (top) and corresponding entity activity (bottom) for the short story To Build a Fire (London, Reference London1902/2007). Story entities are plotted as black dots, where the x-value (i.e., along the horizontal axis) is the location in the story, and the y-value (i.e., along the vertical axis) is the order of the entity’s first appearance. Vertical red lines show the cluster partitions. Vertical blue lines show the ground truth scene transitions.

3.3.2. Point-wise dissimilarity evaluation metric

To evaluate the scene partitioning created by the clustering, we need a metric that does not require a foreknown classification matching of which scene partition in the output pairs with which scene partition in the ground truth, since a scene boundary may be missed or a scene in the ground truth may be split into multiple scenes in the output. The metric also cannot require perfect alignment but must instead provide a better score the closer the output is in alignment to the ground truth. The F1 score fails to satisfy both requirements. WindowDiff (Pevzner and Hearst, Reference Pevzner and Hearst2002) is an evaluation metric designed for single-category linear tiling of the full continuum, much like our problem, but it requires the setting of a rolling window size $k$, an arbitrary hyperparameter. This measure is useful for comparison between different partitions of the same length set or continuum, but it does not allow for equal comparison between partitions of different length data. This led us to create the Point-wise Dissimilarity Evaluation Metric.

Recall that a partition of a set is a collection of collectively exhaustive, mutually exclusive subsets of that set. In our case, the set of interest is the set of tokens, $T$, which we define back in Section 3.1 as containing the integer indices of all tokens in narrative order from $0$ to $n$, $n$ being the last token.

Here, we are interested in comparing two partitions, $P_O$ and $P_G$, where $P_O$ is the partition generated by the system output and $P_G$ is the ground truth partition characterizing how the text is actually divided into scenes. Without loss of generality, let $X$ be the larger of the two partitions and $Y$ be the smaller (if they are equal-sized let $X$ represent $P_G$). Similarly, let $\ell$ be the number of subsets in $X$ (the number of scenes) and $s$ be the number of subsets in $Y$. Furthermore, for any $i^{th}$ element of $X$, $X_i$, or $Y$, $Y_i$, let $\overline{x_i} \;:\!=\; \max{\!(X_i)}$, and $\overline{y_i} \;:\!=\; \max{\!(Y_i)}$. Recall that since $X$ and $Y$ are partitions of sets of contiguous integers, the $i^{th}$ element of such a partition is a subset of integers, which always has a maximum value. This maximum value then becomes the value of $\overline{x_i}$ or $\overline{y_i}$. We can now define the point-wise error as follows:

\begin{equation} \textit{error}=\frac{1}{n}\sum _{i=1}^{\ell }\min _{j\in \{1,\dots,s\}}\big |\overline{x_i}-\overline{y_j}\big | \end{equation}

This error takes the boundary of each element in the partition with the most scenes, finds the closest boundary in the other partition, calculates the difference between these two boundaries, aggregates the error over all closest comparisons, and then normalizes over the number of tokens to enable comparison of the error between stories of different lengths. The closer to zero the Point-wise Dissimilarity error is, the more closely the partitions of $P_O$ match those of $P_G$. When $P_O$ and $P_G$ have the same number of scene partitions, the worst error we can expect is $1.0$. If additionally $P_G$ has even-length partitions, and $P_O$ is initialized with random partition lengths, on average the error will be $0.5$, which we see as the worst feasible error for our tests.
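The point-wise error translates directly into code. The sketch below is illustrative: it assumes each partition is supplied as the list of its per-scene maximum token indices (the values $\overline{x_i}$ and $\overline{y_j}$), and the function name `pointwise_error` and its argument order are choices made for this example.

```python
def pointwise_error(ground_truth, output, n):
    """Point-wise dissimilarity error between two scene partitions.

    ground_truth, output: lists holding the maximum token index of each
    scene in P_G and P_O, respectively.
    n: index of the last token, used to normalize across story lengths.
    X is the partition with more scenes; on a tie, X is the ground truth.
    """
    if len(ground_truth) >= len(output):
        x, y = ground_truth, output
    else:
        x, y = output, ground_truth
    # For every boundary in X, take the distance to the closest boundary in Y.
    return sum(min(abs(xi - yj) for yj in y) for xi in x) / n

# Same number of scenes: boundaries off by 5 and 10 tokens, final boundary shared.
print(pointwise_error([30, 60, 100], [25, 70, 100], n=100))
# Output has one extra scene, so its boundaries play the role of X.
print(pointwise_error([50, 100], [20, 40, 100], n=100))
```

Because the final scene of both partitions always ends at token $n$, its term contributes zero; the error is driven entirely by the interior boundaries.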

3.3.3. Evaluation results

We collect five different error measurements, three as a baseline for comparison and two corresponding to our hypotheses of how entity activity could mark a transition between scenes. We create the first baseline, the even-split partitions, by dividing the text into a number of even-length scenes. We create the second baseline, the randomized partitions, by dividing the text into random-length partitions, and we repeat this 100 times to get an average error. Our purpose in including these two baselines is to reveal fundamental aspects of the nature of the novel task of scene segmentation in narrative. These two baselines can be seen as “bookends” of a spectrum of solution techniques, one end being deterministic and the other being stochastic, using only the information about the expected or desired number of scenes in the story:

- At one end, we consider a completely deterministic approach of simply partitioning the text into N equally sized scenes, the even-split partition. If this completely naive approach performs well over a large corpus, then presumably the scene segmentation problem is not as difficult as we might have first thought, hinging only on discovering the number of scenes in the story.

- At the other end of the spectrum, we adopt a completely stochastic approach, randomly choosing N-1 locations in the text to partition it into N scenes. Again, this approach only uses knowledge of the number of scenes, but in an entirely different way than the deterministic approach above.

A corpus that has a high average even-split score will not have a high random partition score, and vice versa. With a high even-split score, scenes within a story are expected to be relatively equal in length, while corpora with high random partition scores will have a wide variety of scene sizes within each story (some large and other small). These two baselines add insight into the nature of the particular scene segmentation problem we address through the Scatter Plot of Entities by providing guardrails from which we can better interpret the performance scores of our solution to this problem. Some stories can approach a solution to the problem by simply chopping the text into equal-sized chunks, but others—even with knowledge of the number of scenes—require more information to get the segmentation right. As one considers the performance of our solution on a particular story, we think they should have insight into the degree to which that story belongs to the one category or the other.
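The two baselines can be sketched as below, for illustration only. Partitions are represented by the last token index of each scene; the rounding used for the even split, the sampling of distinct cut points, and the function names are assumptions of this sketch rather than details taken from the experiments.

```python
import random

def even_split_bounds(n, k):
    """Last token index of each of k near-equal scenes over tokens 0..n."""
    return [round(i * n / k) for i in range(1, k + 1)]

def random_bounds(n, k, rng):
    """k scenes from k-1 distinct random cut points, plus the final token n.

    rng is a random.Random instance so that runs can be reproduced.
    """
    cuts = sorted(rng.sample(range(n), k - 1))
    return cuts + [n]

print(even_split_bounds(100, 4))           # deterministic baseline
rng = random.Random(0)
trials = [random_bounds(100, 4, rng) for _ in range(100)]  # 100 repetitions
print(len(trials), trials[0][-1])
```

Each of the 100 random partitions would then be scored against the ground truth and the scores averaged, mirroring the repetition described for the randomized baseline.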

Our third baseline, Texttiling (Hearst, Reference Hearst1997), provides a comparison between a topical approach to story partitioning and our density-based approach which lacks the need to know any topical information about the source text. To match our problem setup, the Texttiling also produces a number of partitions matching the number of scenes so that the comparisons will be equal.

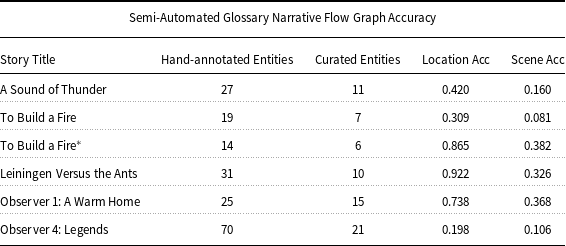

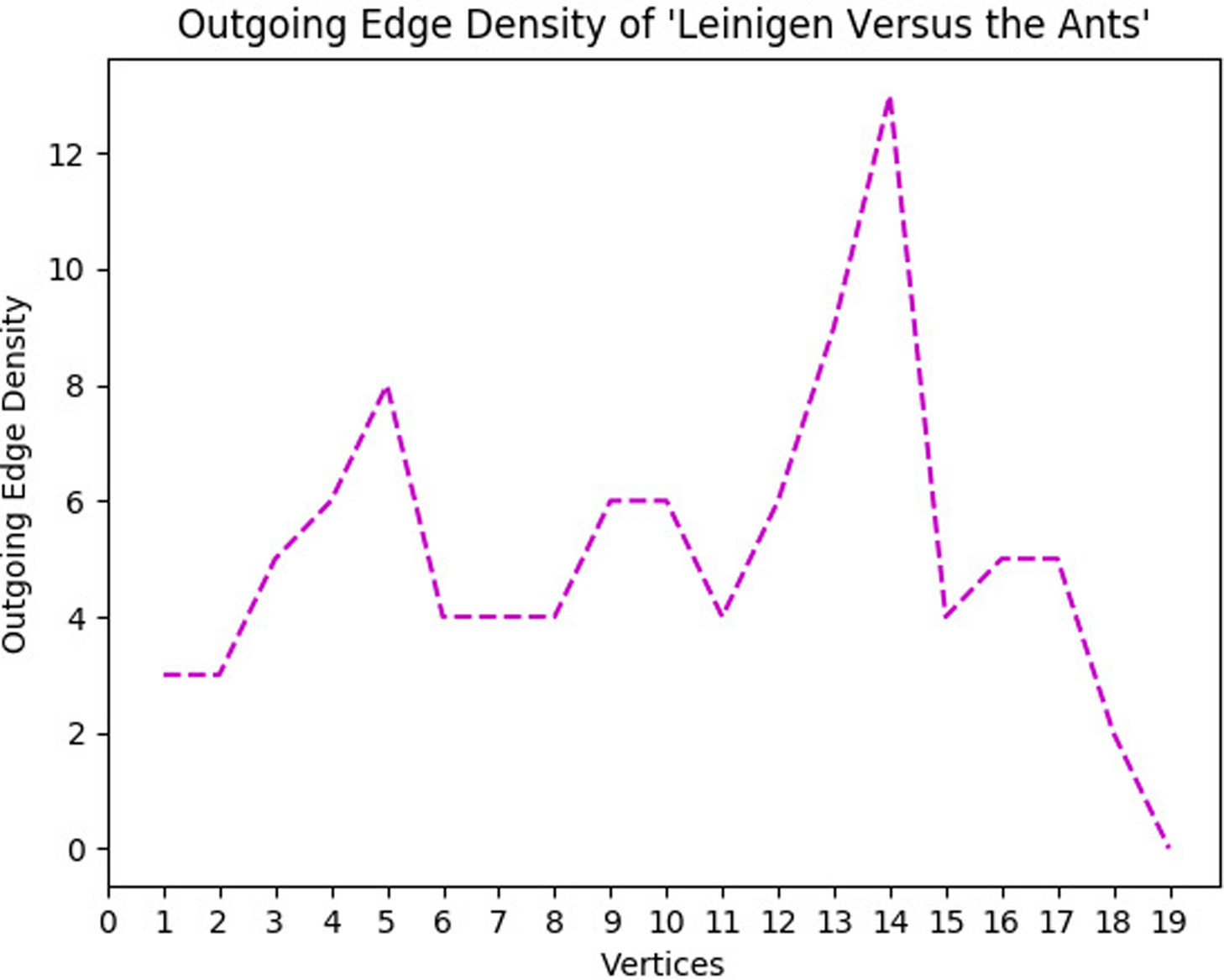

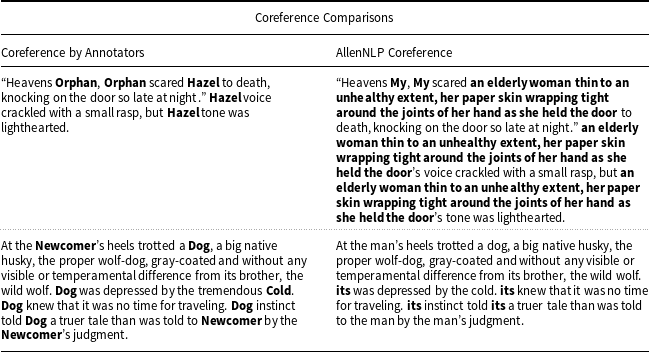

Table 2 shows the error calculations for the partitioning. Bold numbers highlight the lowest error for that story. Low-activity partitions select scene partitions where the partition borders have on average the lowest entity activity. High-activity partitions select scene partitions where borders on average have the highest entity activity. Remember that the number of partitions of the story for each baseline and test is the same. The only way the resulting number of scenes would differ is if there is an output partitioning that includes an empty partition, meaning that the mean shift clustering algorithm created an empty cluster. Averaging over the stories (taking the column average, shown in the bottom row of Table 2), our strongest baseline, even-split, obtains a point-wise dissimilarity error score of 0.261. Low-activity partitions perform near-equivalently at 0.262. High-activity partitions perform best with an average point-wise dissimilarity error score of 0.242. Texttiling’s topical approach produces an error score of 0.293, worse than both even-split and low-activity. To assess the feasibility of fully automating the process of Scatter Plot of Entities creation, we use the best-performing method of high-activity partitions and run additional experiments on each story using AllenNLP’s coreference resolution library (Gardner et al., Reference Gardner, Grus, Neumann, Tafjord, Dasigi, Liu, Peters, Schmitz and Zettlemoyer2018) to see how well it performs in comparison with our hand-coreferencing. The average point-wise dissimilarity score over all stories for high-activity partitions using AllenNLP’s coreference resolution toolkit is 0.266, worse than our best baseline. In addition to our point-wise dissimilarity score, we also calculated the overall F1 scores, not on the scene boundaries but on the overlap of the matched partitions. Even-split partitioning has an F1 score of 0.603. The Scatter Plot of Entities obtains an F1 score of 0.635 for high-activity partitioning (precision 0.775 and recall 0.549). Using AllenNLP’s coreference resolution toolkit with high-activity partitioning results in a worse F1 score of 0.579 (precision 0.764 and recall 0.475).

Table 2. Scene partitioning error for the Scatter Plot of Entities. Bold numbers highlight the method with lowest error for that story. For the averages over all stories, bold represents the partitioning method that results in overall best scene partitioning according to the point-wise dissimilarity evaluation metric.

3.4. Discussion

The Scatter Plot of Entities provides a visualization of and insight into three important aspects of narrative: a partitioning of the story into scenes, denoting locations in the story of potential plot-importance, and highlighting what characters may have the most influence on the story. We dissect each of these below and discuss how well the Scatter Plot of Entities adheres to our goal, which is to model the underlying plot of a story. Given the initial findings from the results and the resulting qualitative and anecdotal observations we discuss for the seven stories, determining how universal these findings are among multiple stories and genres with the aid of large-scale third-party human judgments is a necessary topic we will address in the future.

3.4.1. Scene partitioning

Our first hypothesis, that scene transitions are denoted by locations of low entity activity in the story, does not hold up well according to Table 2, whereas our second hypothesis performs the best overall, obtaining the lowest error for all but two stories. The success of the second hypothesis does not mean that our first hypothesis is false. Figure 6 shows the ground truth scene partitions overlaid on the entity activity lines. Of the 140 combined scene transitions for all 8 stories, 83 land on or near local maxima, 41 land on or near local minima, and 16 are undecidable (about midway between a local maximum and a local minimum). These results give supporting evidence that high entity activity hints at scene location and that small spikes in local entity activity are a common marker for scene transitions.
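A tally of this kind can be sketched as follows. The sketch is illustrative only: the function names, the `tolerance` threshold used to call a transition "undecided", and the toy activity line are assumptions, and the counts reported above were not necessarily produced this way.

```python
from collections import Counter


def local_extrema(activity):
    """Return (maxima, minima) index lists for a 1-D activity line."""
    maxima, minima = [], []
    for i in range(1, len(activity) - 1):
        left, here, right = activity[i - 1], activity[i], activity[i + 1]
        if here > left and here > right:
            maxima.append(i)
        elif here < left and here < right:
            minima.append(i)
    return maxima, minima


def classify_transition(position, maxima, minima, tolerance=1):
    """Label a scene transition 'max', 'min', or 'undecided'.

    A transition is 'undecided' when its distances to the nearest local
    maximum and nearest local minimum differ by at most `tolerance`
    (a hypothetical threshold standing in for "about midway").
    """
    if not maxima and not minima:
        return "undecided"
    d_max = min((abs(position - m) for m in maxima), default=float("inf"))
    d_min = min((abs(position - m) for m in minima), default=float("inf"))
    if abs(d_max - d_min) <= tolerance:
        return "undecided"
    return "max" if d_max < d_min else "min"


# Toy activity line and hypothetical ground truth transition positions.
activity = [0, 2, 5, 2, 1, 0, 1, 3, 6, 3, 1]
transitions = [2, 4, 5, 8]

maxima, minima = local_extrema(activity)
tally = Counter(classify_transition(t, maxima, minima) for t in transitions)
```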

Figure 6. Ground truth scene partitioning for each story, shown on the entity activity lines. The vertical blue bars are the divisions between the scenes.

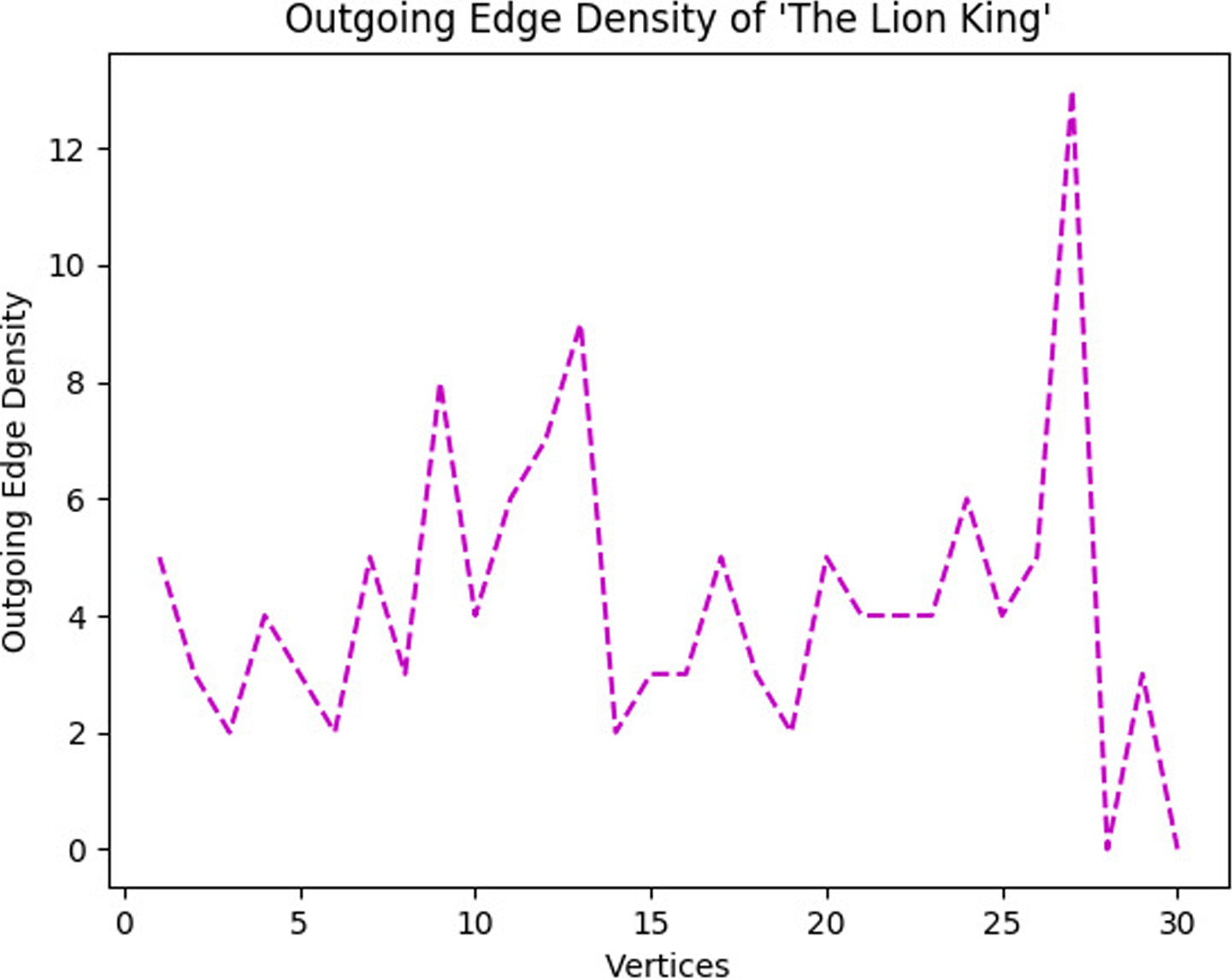

The two exceptions in Table 2 that have lower partitioning error on tests other than high entity activity are special cases. The first exception, The Lion King, has a much higher percentage of scene transitions at or near local minima (17 out of 29 scene transitions) than the other stories. Given this pattern, we would expect the error for low-activity partitions to be lower than high-activity partitions in the table, but that is not the case. We believe this is because the lowest of the local minima in the activity line is within scenes and far from the ground truth scene transitions. By trying to find clusterings where the partition borders land in these locations, the partitions get further from the ground truth. In the end, even-split partitions have the lowest error, but only by a very small margin ($0.2334$ for even-split compared to $0.235$ for high-activity).

The second exception, A Sound of Thunder, is the most peculiar. The Texttiling baseline achieved the lowest error of any measurement on any story, and randomized scene partitioning had the next lowest error for this story. Because Texttiling is a topic-based text segmentation algorithm, it performs better on stories whose scenes differ drastically in topic. The first and last scenes take place in the present, while the long scene in the middle takes place in the ancient past. This makes differentiating between scenes by topic much easier than in To Build A Fire, which is topically very uniform and for which Texttiling yields almost the same error as randomly partitioning the text.

A Sound of Thunder also provides a perfect example of why inconsistent scene lengths are such a great weakness. The beginning and ending scenes, which take place in the present, are very short, whereas the middle scene, which takes place in the past, takes up nearly 60% of the story. This drastic difference between the scene lengths conflicts with the mean shift clustering algorithm’s bandwidth. As mentioned before, the bandwidth sets the distance of attraction within which entities are clustered together, and so determines the size and number of clusters. If a scene is much larger than the bandwidth, the system forces that scene to split. The Scatter Plot of Entities places the partition borders closer to the center of this story because the bandwidth can grow only so large while still creating a partition into three scenes. The scenes end up nearly equal in length, increasing the error. With randomized partitions, there is a much higher chance of a border being placed close to one of the two ground truth scene borders, so, when averaging over 100 randomized three-scene partitions, a large number of them are likely to have lower error. In general, much of the partitioning error comes from scenes being much longer or shorter than the mean shift clustering bandwidth, a common issue for all stories in our study, not just A Sound of Thunder.

3.4.2. Marking locations of plot-importance

The entity activity line and its corresponding heat map give us a clear visual of locations of high entity activity. The system’s best standard deviation, $\sigma$, for the Gaussians creates a noisy activity line that is difficult for a human to use to determine general locations of high entity activity. However, if we increase $\sigma$ to smooth out the activity lines, we find a common pattern in that the areas of higher entity activity often correspond to locations of higher plot-importance in our chosen stories.
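The smoothing effect of a larger $\sigma$ can be illustrated with a small sketch. It assumes that the activity line is a sum of equal-height Gaussians, one centered on each entity mention, and it counts local maxima as a rough proxy for how noisy the line looks. The function names, the mention positions, and the two $\sigma$ values are hypothetical choices for the example, not values taken from the experiments.

```python
import math


def activity_line(mentions, length, sigma):
    """Sum one Gaussian bump per mention, sampled at each token index."""
    return [
        sum(math.exp(-((x - m) ** 2) / (2 * sigma ** 2)) for m in mentions)
        for x in range(length)
    ]


def count_local_maxima(line):
    """Number of interior samples strictly higher than both neighbours."""
    return sum(
        1
        for i in range(1, len(line) - 1)
        if line[i] > line[i - 1] and line[i] > line[i + 1]
    )


# Hypothetical mention positions: one group early in the story, one later.
mentions = [5, 12, 20, 60, 64, 70, 75]

noisy = activity_line(mentions, length=100, sigma=1)
smooth = activity_line(mentions, length=100, sigma=10)

noisy_peaks = count_local_maxima(noisy)
smooth_peaks = count_local_maxima(smooth)
```

With the narrow kernel, every mention produces its own spike. With the wide kernel, the spikes merge into one broad bump per group of mentions, which is the kind of region a human reader can pick out at a glance.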

Figure 7 shows the corresponding heat maps for three stories where $\sigma$ has been increased. Near the end of each story, the climax shows up in yellow, indicating high entity activity. In addition to climaxes, there are often locations within a story that are similarly plot-important but do not have the finality of a climax. Such moments, which are often called sub-climaxes or crisis points (Gardner, 1991), show up as yellow in the heat map just like climaxes.

Figure 7. Heat map for three representative stories. The areas of strongest yellow (high entity activity) tend to be the most plot-important locations in these stories. Independent analysis verifies that the climax of each story corresponds to the rightmost bright yellow region for these examples, although in general, it may correspond to other bright patches.

In “Falling,” the strongest yellow mark in the middle of the story is a crisis point in which the main character shows the dystopian nature of the colony to the secondary main character. The climax, marked in yellow at the end of the story, is an assassination attempt against the main character. Similarly, in Leiningen Versus the Ants, the area of high entity activity in the middle of the story is the moment when the ants begin overrunning the plantation. The climax in yellow shows the location where Leiningen must flood his plantation. Lastly, in “Observer 4: Legends,” the climactic battle, shown as the only location of high entity activity, appears relatively earlier because the resolution and denouement of this story are longer. Though not all stories follow the pattern of high entity activity equating to plot-importance, and it is possible for a story to have a climax with low entity activity, the pattern is common enough to be worth highlighting.

3.4.3. Identifying the most influential entities

Although the scatter plot is used for clustering and scene partitioning, on its own it gives us detailed information on the entities of the story. In Figure 8, we see that the introduction of entities follows a nearly logarithmic curve. As the story progresses, fewer and fewer new entities are introduced, and by the one-third to midway mark, most if not all of the prominent entities have been introduced. This pattern of entity introduction is a common trend that all eight of our stories follow, although there are exceptions: The Lion King introduces two major characters, Timon and Pumbaa, a little past the midway point.

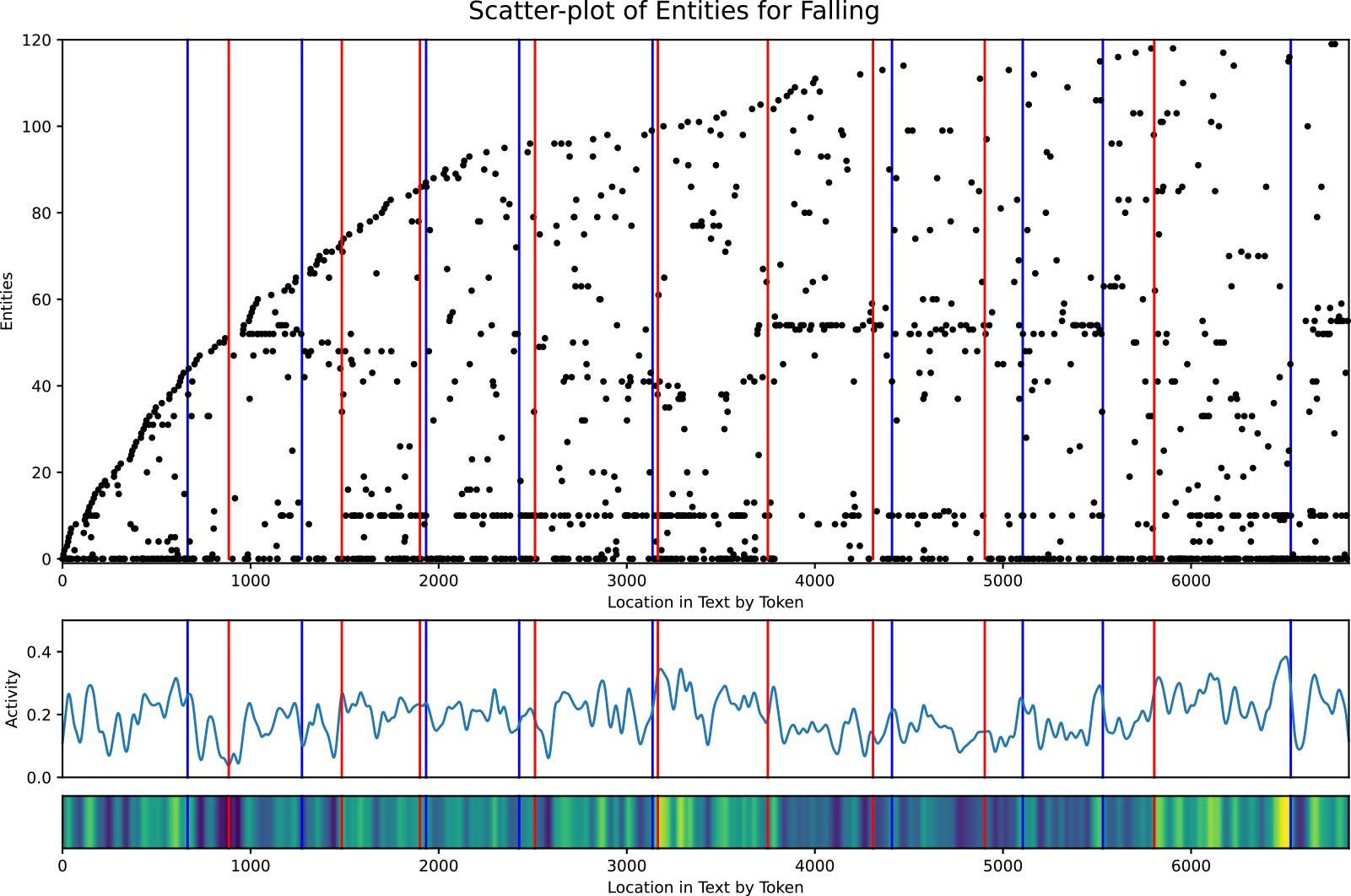

Figure 8. Scatter Plot of Entities for the short story, “Falling” (DeBuse, 2013). The most influential entities are entities 0, 10, and 53, visible by the near-solid horizontal dotted lines. Entities 0 and 10 are the male and female leads of the story, and entity 53 is a secondary, supporting character. The output partitions (red vertical lines) and the ground truth partitions (blue vertical lines) show by their proximity that the system also does a fairly good job partitioning this story into scenes.

Using the scatter plot, we can also see which entities are the most prominent at a glance, namely those that create near-solid horizontal dotted lines. The densest dotted lines indicate scenes where that entity is actively involved or mentioned, hinting that it may be relevant to the plot at that place in the story. When determining the most influential entities, we must look at more than frequency. Local density is just as important. An entity that appears infrequently in every scene may not hold as much importance as another entity that appears the same number of times overall but is concentrated, with high activity, in the select few scenes where it appears. Referring back to Figure 8, we can see that entities 0, 10, and 53 are the most prominent and locally frequent in the various scenes where they appear. These entities are the male lead (mentioned only by the first-person pronoun, ‘I’), the female lead, Skyler, and a supporting character, Stonne, respectively. Sure enough, these are the three main characters of “Falling.” This means that the Scatter Plot of Entities can be used to determine the main or most influential characters of a story, assuming that named entity recognition is able to properly detect them. We will see in Section 4 that the ability of the Scatter Plot of Entities to highlight entities of importance in the story enables the curation of the glossary of entities used as input into the Narrative Flow Graph, instructing the system on which plot-important entities to track.
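The distinction between overall frequency and local density can be made concrete with a short sketch. It is an illustration only: the function names, the definition of density as mentions per token within a scene, and the toy mentions are assumptions rather than part of the system described here.

```python
from collections import Counter


def scene_of(position, scene_bounds):
    """Index of the scene whose half-open span [start, end) contains position."""
    for index, (start, end) in enumerate(scene_bounds):
        if start <= position < end:
            return index
    raise ValueError(f"position {position} is outside every scene")


def entity_profiles(mentions, scene_bounds):
    """Total mention count and peak per-scene density for each entity."""
    per_scene = {}
    for position, entity in mentions:
        counts = per_scene.setdefault(entity, Counter())
        counts[scene_of(position, scene_bounds)] += 1
    profiles = {}
    for entity, counts in per_scene.items():
        densities = [
            count / (scene_bounds[scene][1] - scene_bounds[scene][0])
            for scene, count in counts.items()
        ]
        profiles[entity] = {
            "total": sum(counts.values()),
            "peak_density": max(densities),
        }
    return profiles


# Two hypothetical entities with the same overall frequency: one is spread
# thinly over every scene, the other is concentrated in a single scene.
scenes = [(0, 100), (100, 200), (200, 300)]
mentions = [(p, "spread") for p in (10, 50, 110, 150, 210, 250)]
mentions += [(p, "focused") for p in (120, 125, 130, 135, 140, 145)]

profiles = entity_profiles(mentions, scenes)
```

Both toy entities are mentioned six times, but the concentrated one has three times the peak density, so a ranking that combines the two measures would separate them where raw frequency cannot.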

3.4.4. Adherence to the definition of plot

The Scatter Plot of Entities can only very loosely be considered a visualization of the plot of the story. As we defined in Section 2.1, plot requires (1) characters of volition, (2) events involving those characters, (3) a representation of how information spreads, (4) causation linking these aspects of plot, and (5) a full structure of those links from the beginning to the end of the story. The only requirements that this visualization addresses are events and, very lightly, characters and structure.

As a means of partitioning a story into its scenes, we see moderate success in clustering around high entity activity. The scenes created by the clusters encompass the events of the story. This is the Scatter Plot of Entities’ strongest feature as far as the story’s plot is concerned. Characters are loosely represented by their location in the story, and we see which characters are most influential and which events they are heavily involved in through the more solid of the horizontal dotted lines; however, we do not have any representation of a character’s volition. Structure is also absent, and without some form of linking between scenes, we cannot develop a true plot structure for the story. By partitioning the story into scenes, we show only the narrative ordering of events. This inability to fully represent plot does not mean that the Scatter Plot of Entities is without merit. The visual artifacts are informative about the story’s content, and through further research, more can be learned. We can use the Scatter Plot of Entities as a start, utilizing those aspects on which it performs well, to create another visualization that better addresses the aspects of plot that this one lacks.

4. Narrative as a graph

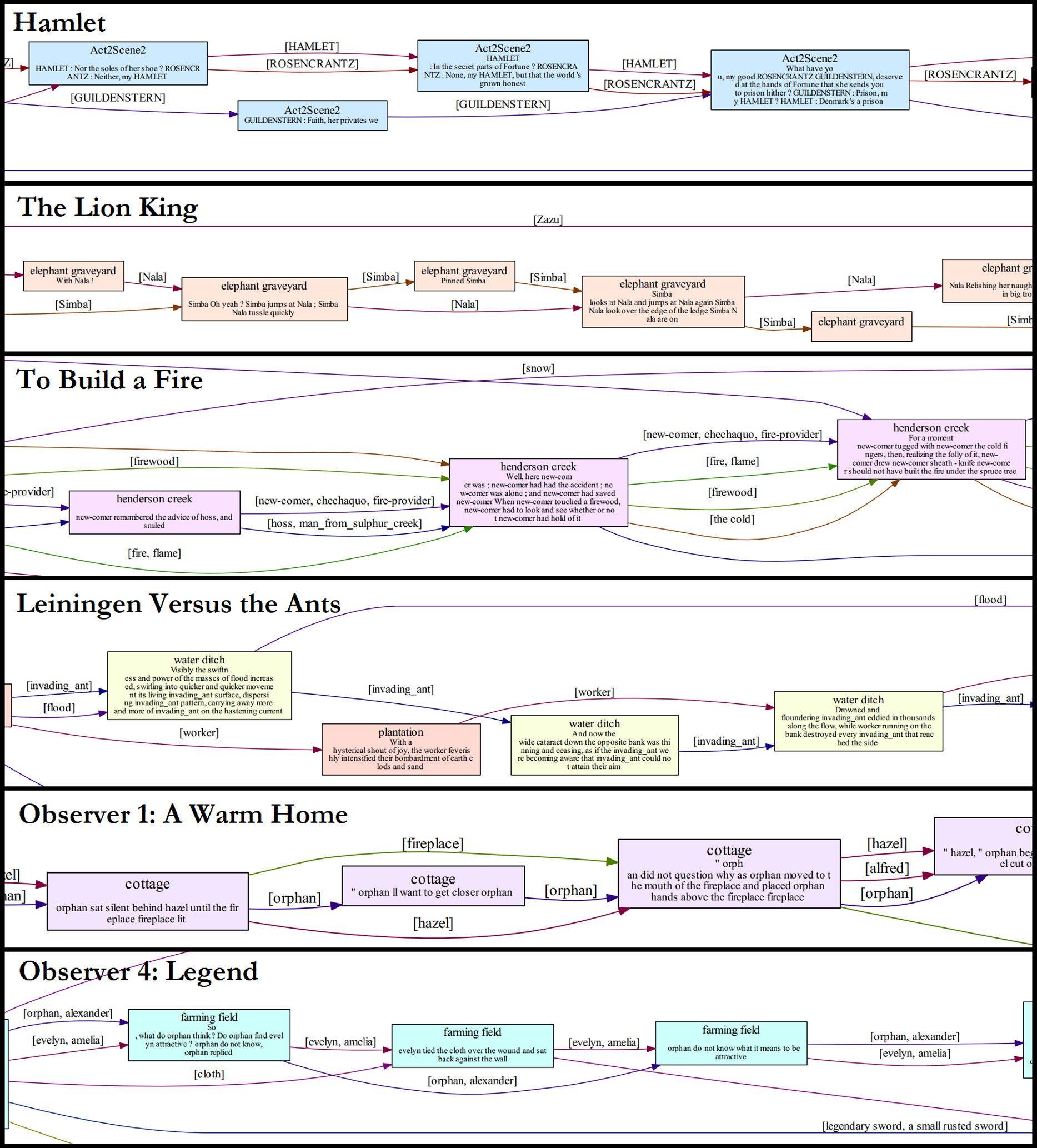

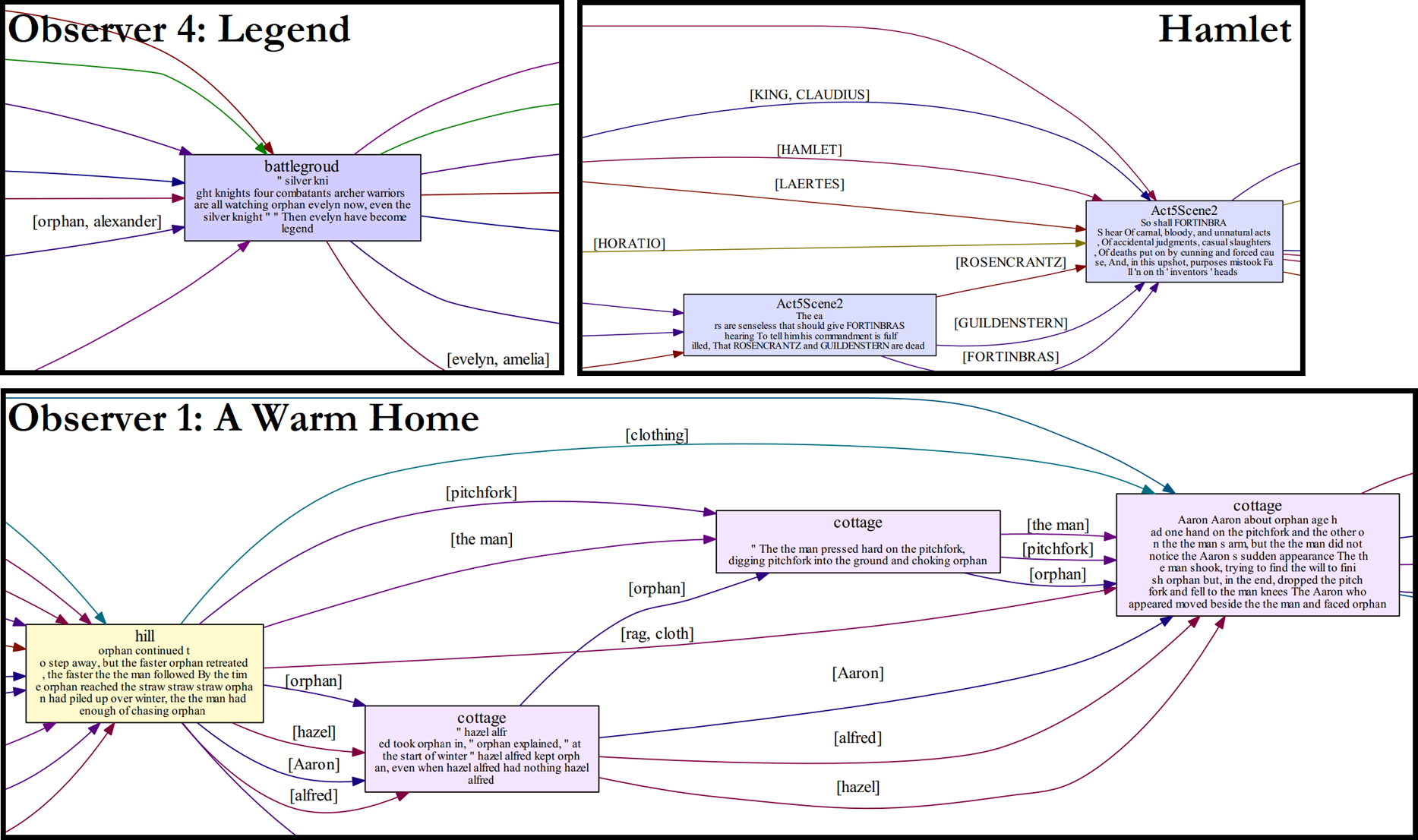

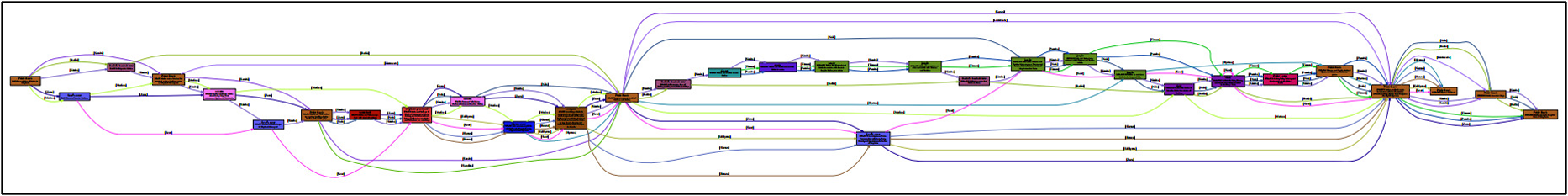

The greatest weakness of the Scatter Plot of Entities is its inability to model the underlying structure of the narrative. Given this deficiency, how then can we represent that structure? When we look back to the related works in Section 2.2, graphs are a commonly used method of showing connections or relationships in stories. Here we are interested in the relationships between scenes in which the entities appear. We can envision a graph where each vertex is a scene, and the entities are the links between those scenes. Each entity is an edge, and where there are multiple entities in both the preceding scene and the current scene, there is an edge for each individual entity, creating a directed multi-graph. This way we not only get a structural linking of the scenes in narrative order, but we track individual entities through that structure to see in which scenes they are involved.
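A minimal sketch of this multi-graph, assuming each scene is already available as a set of entity identifiers, is given below. The function names and the toy scenes are hypothetical, and the sketch links only adjacent scenes, which is the simplest reading of the description above and may differ from the full construction developed later.

```python
from collections import Counter


def narrative_edges(scenes):
    """Directed multi-graph edges as (source_scene, target_scene, entity).

    `scenes` is a list of entity sets in narrative order. Every entity
    present in both a scene and the next one contributes its own edge.
    """
    edges = []
    for index in range(len(scenes) - 1):
        shared = scenes[index] & scenes[index + 1]
        for entity in sorted(shared):
            edges.append((index, index + 1, entity))
    return edges


def edge_multiplicity(edges):
    """Number of parallel edges between each ordered pair of scenes."""
    return Counter((source, target) for source, target, _ in edges)


# Toy story with three scenes and three entities (illustrative only).
scenes = [{"A", "B"}, {"A", "B", "C"}, {"A", "C"}]

edges = narrative_edges(scenes)
multiplicity = edge_multiplicity(edges)
```

Following a single entity through the edge list (for example, every edge labelled `"A"`) recovers the sequence of scenes in which that entity is involved.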

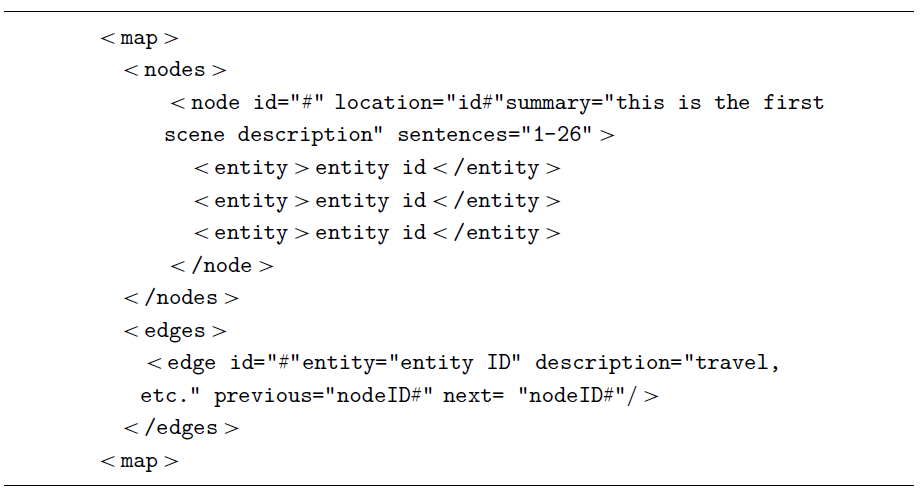

If the Scatter Plot of Entities could properly determine the number of scenes in a story, we could use it directly to initially partition the story. As stated earlier, scene partitioning is a complicated task. Another option is to take advantage of the graph structure and use the interaction of entities within the stories (which entities are involved in which events and where those events take place) to try to automate the creation of scenes. In this way, the system can determine for itself how many scenes are in the story. We call this multi-graph structure, which visualizes the flow of entities through scenes in the story, the Narrative Flow Graph.