Impact Statement

This article provides a framework to reduce the global warming potential of civil structures such as bridges, dams, or buildings while satisfying constraints relating to structural efficiency. The framework combines mixture design and structural simulation in a joint workflow to enable optimization. Advanced optimization algorithm with appropriate extensions and heuristics are employed to account for stochasticity of workflow, nonlinear constraints, and lack of derivatives of the workflow. Some relations in the workflow are not known a priori in the literature. These are learned by employing advanced probabilistic machine learning algorithms trained using the fusion of noisy data and physical models. Various forms of uncertainty arising due to noise in the data or incompleteness of data are systematically incorporated into the framework. The big idea here is to create structures with a smaller environmental footprint without compromising their strength while being cost-efficient for the manufacturer. The proposed holistic approach is demonstrated on a specific design problem, but should serve as a template that can be readily adapted to other design problems.

1. Introduction

Precast concrete elements play a critical role in achieving efficient, low-cost, and sustainable structures. The controlled manufacturing environment allows for higher quality products and enables the mass production of such elements. In the standard design approach, engineers or architects select the geometry of a structure, estimate the loads, choose mechanical properties, and design the element accordingly. If the results are unsatisfactory, the required mechanical properties are iteratively adjusted, aiming to improve the design. This approach is adequate when the choice of mixtures is limited, and the expected concrete properties are well known. There are various published methods to automate this process and optimize the beam design at this level. Computer-aided beam design optimization dates back at least 50 years (e.g., Haung and Kirmser, Reference Haung and Kirmser1967).

Generally, the objective is to reduce costs, with the design variables being the beam geometry, the amount and location of the reinforcement, and the compressive strength of the concrete (Chakrabarty, Reference Chakrabarty1992; Coello et al., Reference Coello, Hernández and Farrera1997; Pierott et al., Reference Pierott, Hammad, Haddad, Garcia and Falcón2021; Shobeiri et al., Reference Shobeiri, Bennett, Xie and Visintin2023). Most publications focus on analytical functions based on well-known, empirical rules of thumb. In recent years, the use of alternative binders in the concrete mix design has increased, mainly to reduce the environmental impact and cost of concrete but also to improve and modify specific properties. This is a challenge as the concrete mix is no longer a constant and is itself subject to an optimization. Known heuristics might no longer apply to the new materials, and old design approaches might fail to produce optimal results. In addition, it is not desirable to choose from a predetermined set of possible mixes, as this would lead to either an overwhelming number of required experiments or a limiting subset of the possible design space.

In the existing literature on the optimization of the concrete mix design (Lisienkova et al., Reference Lisienkova, Shindina, Orlova and Komarova2021; Kondapally et al., Reference Kondapally, Chepuri, Elluri and Reddy2022; Forsdyke et al., Reference Forsdyke, Zviazhynski, Lees and Conduit2023), the objective is either to improve mechanical properties like durability within constraints or to minimize, for example, the amount of concrete while keeping other properties above a threshold. A first step to address these limitations is incorporating the compressive strength during optimization in the beam design phase. Higher compressive strength usually correlates with a larger amount of cement and, therefore, higher cost as well as a higher global warming potential (GWP). This approach has shown promising results in achieving improved structural efficiency while considering environmental impact (dos Santos et al., Reference dos Santos, Alves and Kripka2023). To be able to find a part specific optimum, individual data of the manufacturer and specific mix options must be integrated. Therefore, there is still a need for a comprehensive optimization procedure that can seamlessly integrate concrete mix design and structural simulations, ensuring structurally sound and buildable elements with minimized environmental impact for part specific data.

When designing elements subjected to various requirements, both on the material and structural levels, including workability of the fresh concrete, durability of the structure, maximum acceptable temperature, minimal cost, and GWP, the optimal solution is not apparent and will change depending on each individual project. In this article, we present a holistic optimization procedure that combines physics-based models and experimental data in order to enable the optimization of the concrete mix design in the presence of uncertainty, with an objective to minimize the GWP. In particular, we employ structural simulations as constraints to ensure structural integrity, limit the maximum temperature, and ensure an adequate time of demolding.

By integrating the concrete mixture optimization and structural design processes, engineers can tailor the concrete properties to meet the specific requirements of the customer and manufacturer. This approach opens up possibilities for performance prediction and optimization for new mixtures that fall outside the standard range of existing ones. To the best of our knowledge, there are no published works that combine the material and structural levels in one flexible optimization framework. In addition to changing the order of the design steps, the proposed framework allows to directly integrate experimental data and propagate the identified uncertainties. This allows a straightforward integration of new data and quantification of uncertainties regarding the predictions. The proposed framework consists of three main parts. The first part introduces an automated and reproducible probabilistic machine learning-based parameter identification method to calibrate the models by using experimental data. The second part focuses on a black-box optimization method for non-differentiable functions, including constraints. The third part presents a flexible workflow combining the models and functions required for the respective problem.

To carry out black-box optimization, we advocate the use of Variational Optimization (Staines and Barber, Reference Staines and Barber2013; Bird et al., Reference Bird, Kunze and Barber2018), which uses stochastic gradient estimators for black-box functions. We utilize this with appropriate enhancements in order to account for the stochastic, nonlinear constraints. Our choice is motivated by three challenges present in the workflow describing the physical process. First, we are limited by the availability of only black-box evaluations of the physical workflow. In many real-world cases involving physics-based solvers/simulators in the optimization process, one resorts to gradient-free optimization (Moré and Wild, Reference Moré and Wild2009; Snoek et al., Reference Snoek, Larochelle and Adams2012). However, the gradient-free methods perform poorly on high-dimensional parametric spaces (Moré and Wild, Reference Moré and Wild2009). Also, it requires more functional evaluations to reach the optimum as compared to gradient-based methods. Recently, stochastic gradient estimators (Mohamed et al., Reference Mohamed, Rosca, Figurnov and Mnih2020) have been used to estimate gradients of black-box functions and, hence, perform gradient-based optimization (Ruiz et al., Reference Ruiz, Schulter and Chandraker2018; Louppe et al., Reference Louppe, Hermans and Cranmer2019; Shirobokov et al., Reference Shirobokov, Belavin, Kagan, Ustyuzhanin and Baydin2020). However, they do not account for the constraints. Second, the presence of nonlinear constraints makes the optimization challenging. Popular gradient-free methods like constrained Bayesian optimization (cBO) (Gardner et al., Reference Gardner, Kusner, Xu, Weinberger and Cunningham2014) and COBYLA (Powell, Reference Powell1994) pose significant challenges when (non)linear constraints are involved (Audet and Kokkolaras, Reference Audet and Kokkolaras2016; Menhorn et al., Reference Menhorn, Augustin, Bungartz and Marzouk2017; Agrawal et al., Reference Agrawal, Ravi, Koutsourelakis and Bungartz2023). The third challenge is the stochasticity of the workflow, discussed in the following paragraph.

The physical workflow comprising physics-based models to link design variables with the objective and constraints poses an information flow-related challenge. Some links leading to the objective/constraints are not known a priori in the literature, thus hindering the optimization process. We propose a method to learn these missing links, parameterized by an appropriate neural network, with the help of (noisy) experimental data and physical models. The unavoidable noise in the data introduces aleatoric uncertainty, or its incompleteness introduces epistemic uncertainty. To account for the presence of these uncertainties, we advocate the links to be probabilistic. The learned probabilistic links tackle the information bottleneck; however, it introduces random parameters in the physical workflow, thus necessitating optimization under uncertainty (OUU) (Acar et al., Reference Acar, Bayrak, Jung, Lee, Ramu and Ravichandran2021; Martins and Ning, Reference Martins and Ning2021; Qiu et al., Reference Qiu, Wu, Elishakoff and Liu2021). Deterministic inputs can lead to a poor-performing design, which OUU tries to tackle by producing a robust and reliable design that is less sensitive to inherent variability. This paradigm of fusing data and physical models to train machine-learning models has been extensively used across engineering and physics in recent years (Koutsourelakis et al., Reference Koutsourelakis, Zabaras and Girolami2016; Fleming, Reference Fleming2018; Baker et al., Reference Baker, Alexander, Bremer, Hagberg, Kevrekidis, Najm, Parashar, Patra, Sethian, Wild and Willcox2019; Karniadakis et al., Reference Karniadakis, Kevrekidis, Lu, Perdikaris, Wang and Yang2021; Karpatne et al., Reference Karpatne, Kannan and Kumar2022; Lucor et al., Reference Lucor, Agrawal and Sergent2022; Agrawal and Koutsourelakis, Reference Agrawal and Koutsourelakis2023; Forsdyke et al., Reference Forsdyke, Zviazhynski, Lees and Conduit2023), colloquially referred to as scientific machine learning (SciML). In contrast to traditional machine learning areas where big data are generally available, engineering and physical applications generally suffer from a lack of data, further complicated by experimental noise. SciML has shown promise in addressing this lack of data. We summarize our key contributions below:

-

• We present an inverse design approach for the precast concrete industry to promote sustainable construction practices.

-

• To achieve the desired goals, we propose an algorithmic framework with two main components. (a) an optimization algorithm that accounts for the presence of various uncertainties, nonlinear constraints, and lack of derivatives and (b) a probabilistic machine learning algorithm that learns the missing relations to enable the optimization, by combining noisy, experimental data with physical models. The algorithmic framework is transferable to several other material, structural, and mechanical design problems.

-

• To assist the optimization procedure, we propose an automated workflow that combines concrete mixture design and structural simulation.

-

• We demonstrate the effectiveness of the algorithmic framework and the workflow, on a precast concrete beam element. We learn the missing probabilistic link between the mixture design variables and finite element (FE) model parameters describing the concrete hydration and homogenization procedure. Subsequently, we optimize mixture design and beam geometry in the presence of uncertainties, with the goal of reducing the GWP while employing structural simulations as constraints to ensure safe and reliable design.

The structure of the rest of this article is as follows. Section 2.1 describes the proposed design approach, and Section 2.2 describes the physical material models and the applied assumptions. Section 2.3 presents the details of the experimental data. Section 2.4 provides an overview of the aforementioned probabilistic links and the optimization procedure. Section 2.5 talks about the methodology employed to learn the probabilistic links based on the experimental data and the physical models. Then Section 2.6 describe the details of the proposed black-box optimization algorithm. In Section 3, we showcase and discuss the results of the numerical experiments combining all the parts, the experimental data, the physical models, the identification of the probabilistic links, and the optimization framework. Finally, in Section 4, we summarize our findings and discuss possible extensions.

1.1. Demonstration problem

In this work, a well-known example of a simply supported, reinforced, rectangular beam has been chosen. The design problem was originally published in Everard and Tanner (Reference Everard and Tanner1966) and illustrated in Figure 1.

Figure 1. Geometry of the design problem of a bending beam with a constant distributed load (dead load and live load with safety factors of 1.35 and 1.5) and a rectangular cross section. The design variable, beam height is denoted by

![]() $ h $

.

$ h $

.

It has been used to showcase different optimization schemes (e.g., Chakrabarty, Reference Chakrabarty1992; Coello et al., Reference Coello, Hernández and Farrera1997; Pierott et al., Reference Pierott, Hammad, Haddad, Garcia and Falcón2021). As opposed to the literature where the optimization is often related to costs, we aim to reduce the overall GWP of the part. This objective is particularly meaningful as the cement industry accounts for approximately 8% of the total anthropogenic GWP (Miller et al., Reference Miller, Horvath and Monteiro2016). Reducing the environmental impact of concrete production becomes crucial in the pursuit of sustainable construction practices. In addition, the reduction of the amount of cement in concrete is also correlated to the reduction of cost, as cement is generally the most expensive component of the concrete mix (Paya-Zaforteza et al., Reference Paya-Zaforteza, Yepes, Hospitaler and González-Vidosa2009). There are three direct ways to reduce the GWP of a given concrete part. First, replace the cement with a substitute with a lower carbon footprint. This usually changes mechanical properties and, in particular, their temporal evolution. Second, increase the amount of aggregates, therefore reducing the cement per volume. This also changes effective properties and needs to be balanced with the workability and the limits due to the applications. Third, decrease the overall volume of concrete by improving the topology of the part. In addition, when analyzing the whole life cycle of a structure, both cost and GWP can be reduced by increasing the durability and, therefore, extending its lifetime. To showcase the proposed method’s capability, two design variables have been chosen; the height of the beam and the ratio of ordinary Portland cement (OPC) to its replacement binder ground granulated blast furnace slag (BFS), a by-product of the iron industry. In addition to the static design according to the standard, the problem is extended to include a key performance indicator (KPI) related to the production process in a prefabrication factory that defines the time after which the removal of the formwork can be performed. To approximate this, the point in time when the beam can bear its own weight has been chosen a criterion. Reducing this time equates to being able to produce more parts with the same formwork.

2. Methods

2.1. Design approaches

The conventional method of designing reinforced concrete structures is depicted in Figure 2. The structural engineer starts by choosing a suitable material (e.g., strength class C40/50) and designs the structure, including the geometry (e.g., the height of a beam) and the reinforcement. In the second step, this design is handed over to the material engineer with the constraint that the material properties assumed by the structural engineer have to be met. This lack of coordination strongly restricts the set of potential solutions since structural design and concrete mix design are strongly coupled; for example, a lower strength can be compensated with a larger beam height.

Figure 2. Classical design approach, where the required minimum material properties are defined by the structural engineer which is then passed to the material engineer.

An alternative design workflow is illustrated in Figure 3, which entails inverting the classical design pipeline. The material composition is the input to the material engineer who predicts the corresponding mechanical properties of the material. This includes KPIs related to the material, for example, viscosity/slump test, or simply the water/cement ratio. In a second step, a structural analysis is performed with the material properties as input. This step outputs the structural KPIs such as the load-bearing capacity, the expected lifetime (for a structure susceptible to fatigue), or the GWP of the complete structure. These two (coupled) modules are used within an optimization procedure to estimate the optimal set of input parameters (both on the material level and on the structural level). One of the KPIs is chosen as the objective function (e.g., GWP) and others as constraints (e.g., load-bearing capacity larger than the load, cement content larger than a threshold, viscosity according to the slump test within a certain interval). Note that in order to use such an inverse-design procedure, the forward modeling workflow needs to be automated and subsequently the information needs to be efficiently back-propagated.

Figure 3. Proposed design approach to cast material and structural design into a forward model that is then integrated into a holistic optimization approach.

This article aims to present the proposed methodological framework as well as illustrate its capabilities in the design of a precast concrete element with the objective of reducing the GWP. The constraints employed are related to the structural performance after 28 days as well as the maximum time of demolding after 10 hours. The design/optimization variables are, on the structural level, the height of the beam, and on the material level, the composition of the binder as a mixture of Portland cement and slag. The complete workflow is illustrated in Figure 4.

Figure 4. Workflow to compute key performance indicators from input parameters.

2.2. Workflow for predicting key performance indicators

The workflow consists of four major steps. In the first step, the cement composition (blended cement and slag) defined in the mix composition is used to predict the mechanical properties of the cement paste. This is done using a data-driven approach as discussed in Section 2.4. In the second step, homogenization is used in order to compute the effective, concrete properties based on cement paste and aggregate data. An analytical function is applied for the homogenization based on the Mori–Tanaka scheme (Mori and Tanaka, Reference Mori and Tanaka1973). The third step involves a multi-physics, FE model with two complex constitutive models—a hydration model, which computes the evolution of the degree of hydration (DOH), considering the local temperature and the heat released during the reaction, and a mechanical model, which simulates the temporal evolution of the mechanical properties assuming that those depend on the DOH. The fourth and last model is based on a design code to estimate the amount of reinforcement and predict the load-bearing capacity after 28 days. Subsequent sections will provide insights into how these models function within the optimization framework.

2.2.1. Homogenized concrete parameters

Experimental data for estimating the compressive strength are obtained from concrete specimens measuring the homogenized response of cement paste and aggregates. The mechanical properties of aggregates are known, whereas the cement paste properties have to be inversely estimated. The calorimetry is directly performed for cement paste.

In order to relate macroscopic mechanical properties to the individual constituents (cement paste and aggregates), an analytical homogenization procedure is used. The homogenized effective concrete properties are the Young modulus

![]() $ E $

, the Poisson ratio

$ E $

, the Poisson ratio

![]() $ \nu $

, the compressive strength

$ \nu $

, the compressive strength

![]() $ {f}_c $

, the density

$ {f}_c $

, the density

![]() $ \rho $

, the thermal conductivity

$ \rho $

, the thermal conductivity

![]() $ \chi $

, the heat capacity

$ \chi $

, the heat capacity

![]() $ C $

, and the total heat release

$ C $

, and the total heat release

![]() $ {Q}_{\infty } $

. Depending on the physical meaning, these properties need slightly different methods to estimate the effective concrete properties. The elastic, isotropic properties

$ {Q}_{\infty } $

. Depending on the physical meaning, these properties need slightly different methods to estimate the effective concrete properties. The elastic, isotropic properties

![]() $ E $

and

$ E $

and

![]() $ \nu $

of the concrete are approximated using the Mori–Tanaka homogenization scheme (Mori and Tanaka, Reference Mori and Tanaka1973). The method assumes spherical inclusions in an infinite matrix and considers the interactions of multiple inclusions. Details are given in Appendix A.1.

$ \nu $

of the concrete are approximated using the Mori–Tanaka homogenization scheme (Mori and Tanaka, Reference Mori and Tanaka1973). The method assumes spherical inclusions in an infinite matrix and considers the interactions of multiple inclusions. Details are given in Appendix A.1.

The estimation of the concrete compressive strength

![]() $ {f}_{c,\mathrm{eff}} $

follows the ideas of Nežerka et al. (Reference Nežerka, Hrbek, Prošek, Somr, Tesárek and Fládr2018). The premise is that a failure in the cement paste will cause the concrete to crack. The approach is based on two main assumptions. First, the Mori–Tanaka method is used to estimate the average stress within the matrix material

$ {f}_{c,\mathrm{eff}} $

follows the ideas of Nežerka et al. (Reference Nežerka, Hrbek, Prošek, Somr, Tesárek and Fládr2018). The premise is that a failure in the cement paste will cause the concrete to crack. The approach is based on two main assumptions. First, the Mori–Tanaka method is used to estimate the average stress within the matrix material

![]() $ {\sigma}^{(m)} $

. Second, the von Mises failure criterion of the average matrix stress is used to estimate the uniaxial compressive strength (see Appendix A.1.1).

$ {\sigma}^{(m)} $

. Second, the von Mises failure criterion of the average matrix stress is used to estimate the uniaxial compressive strength (see Appendix A.1.1).

Table 1 gives an overview of the material properties of the constituents used in the subsequent sensitivity studies. The effective properties as a function of the aggregate content are plotted in Figure 5. Note that both extremes (0 [pure cement] and 1 [only aggregates]) are purely theoretical.

Table 1. Properties of the cement paste and aggregates used in subsequent sensitivity studies

Figure 5. Influence of aggregate ratio on effective concrete properties.

For the considered example, the relations are close to linear. This can change when the difference between the matrix and the inclusion properties is more pronounced or more complex micromechanical mechanisms are incorporated, such as air pores or the interfacial transition zone. Though not done in this article, these could be considered within the chosen homogenization scheme by adding additional phases (cf. Nežerka and Zeman, Reference Nežerka and Zeman2012). Homogenization of the thermal conductivity is also based on the Mori–Tanaka method, following the ideas of Stránský et al. (Reference Stránský, Vorel, Zeman and Šejnoha2011) with details given in Appendix A.1.2. The density

![]() $ \rho $

, the heat capacity

$ \rho $

, the heat capacity

![]() $ C $

, and the total heat release

$ C $

, and the total heat release

![]() $ {Q}_{\infty } $

can be directly computed based on their volume average. As an example for the volume-averaged quantities, the heat release is shown in Figure 5 as it exemplifies the expected linear relation of the volume average as well as the zero heat output of a theoretical pure aggregate.

$ {Q}_{\infty } $

can be directly computed based on their volume average. As an example for the volume-averaged quantities, the heat release is shown in Figure 5 as it exemplifies the expected linear relation of the volume average as well as the zero heat output of a theoretical pure aggregate.

2.2.2. Hydration and evolution of mechanical properties

Due to a chemical reaction (hydration) of the binder with water, calcium-silicate hydrates form that lead to a temporal evolution of concrete strength and stiffness. The reaction is exothermal and the kinetics are sensitive to the temperature. The primary model simulates the hydration process and computes the temperature field

![]() $ T $

and the DOH

$ T $

and the DOH

![]() $ \alpha $

(see Eqs. (B1) and (B2) in Appendix B). The latter characterizes the DOH that condenses the complex chemical reactions into a single scalar variable. The thermal model depends on three material properties: the effective thermal conductivity

$ \alpha $

(see Eqs. (B1) and (B2) in Appendix B). The latter characterizes the DOH that condenses the complex chemical reactions into a single scalar variable. The thermal model depends on three material properties: the effective thermal conductivity

![]() $ \lambda $

, the specific heat capacity

$ \lambda $

, the specific heat capacity

![]() $ C $

, and the heat release

$ C $

, and the heat release

![]() $ \frac{\partial Q}{\partial t} $

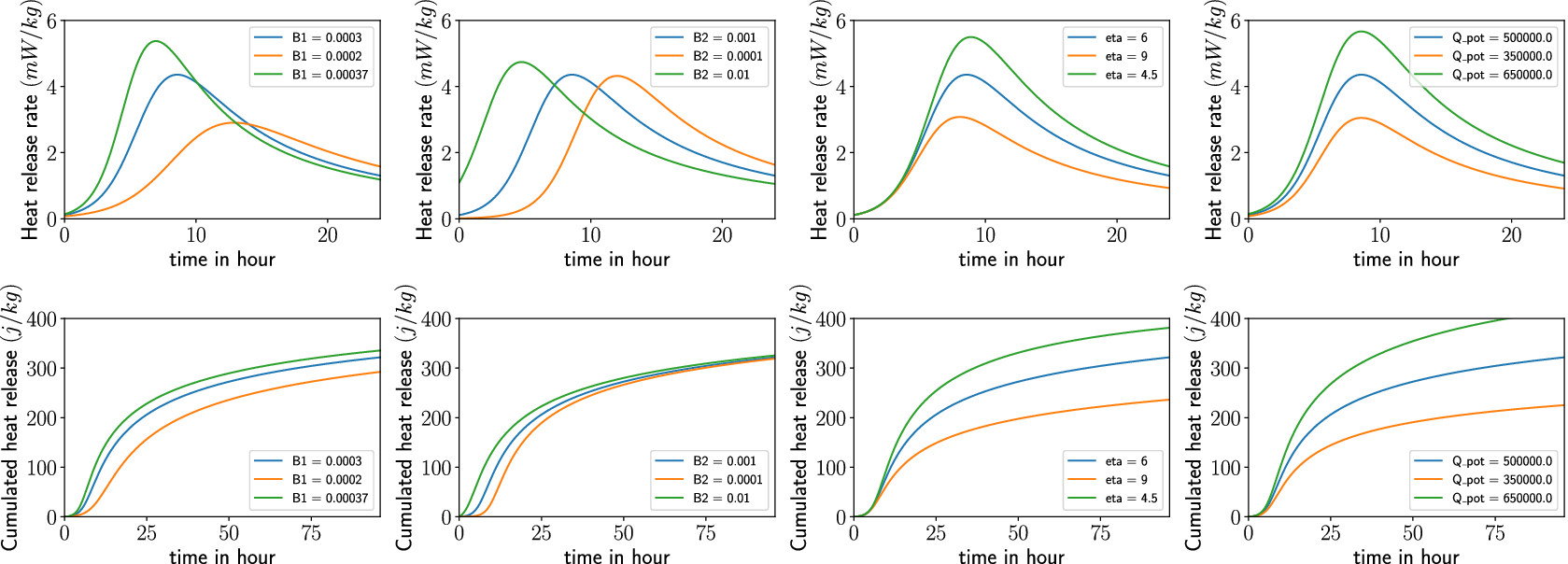

. The latter is governed by the hydration model, characterized by six parameters:

$ \frac{\partial Q}{\partial t} $

. The latter is governed by the hydration model, characterized by six parameters:

![]() $ {B}_1,{B}_2,\eta, {T}_{\mathrm{ref}},{E}_a $

, and

$ {B}_1,{B}_2,\eta, {T}_{\mathrm{ref}},{E}_a $

, and

![]() $ {\alpha}_{\mathrm{max}} $

. The first three

$ {\alpha}_{\mathrm{max}} $

. The first three

![]() $ {B}_1,{B}_2 $

, and

$ {B}_1,{B}_2 $

, and

![]() $ \eta $

are parameters characterizing the shape of the evolution of the heat release.

$ \eta $

are parameters characterizing the shape of the evolution of the heat release.

![]() $ {T}_{\mathrm{ref}} $

is the reference temperature for which the first three parameters are calibrated. (Based on the difference between the actual and the reference temperature, the heat released is scaled.) The sensitivity to the temperature is characterized by the activation energy

$ {T}_{\mathrm{ref}} $

is the reference temperature for which the first three parameters are calibrated. (Based on the difference between the actual and the reference temperature, the heat released is scaled.) The sensitivity to the temperature is characterized by the activation energy

![]() $ {E}_a $

.

$ {E}_a $

.

![]() $ {\alpha}_{\mathrm{max}} $

is the maximum DOH that can be reached. Following Mills (Reference Mills1966), the maximum DOH is estimated based on the water to binder ratio

$ {\alpha}_{\mathrm{max}} $

is the maximum DOH that can be reached. Following Mills (Reference Mills1966), the maximum DOH is estimated based on the water to binder ratio

![]() $ {r}_{\mathrm{wc}} $

, as

$ {r}_{\mathrm{wc}} $

, as

![]() $ {\alpha}_{\mathrm{max}}=\frac{1.031\hskip0.1em {r}_{\mathrm{wc}}}{0.194+{r}_{\mathrm{wc}}} $

.

$ {\alpha}_{\mathrm{max}}=\frac{1.031\hskip0.1em {r}_{\mathrm{wc}}}{0.194+{r}_{\mathrm{wc}}} $

.

By assuming the DOH to be the fraction of the currently released heat with respect to its theoretical potential

![]() $ {Q}_{\infty } $

, the current DOH is estimated as

$ {Q}_{\infty } $

, the current DOH is estimated as

![]() $ \alpha (t)=\frac{Q(t)}{Q_{\infty }} $

. As the potential heat release is also difficult to measure as it takes a long time to fully hydrate and will only do so under perfect conditions, we identify it as an additional parameter in the model parameter estimation. For a detailed model description, see Appendix B. In addition to influencing the reaction speed, the computed temperature is used to verify that the maximum temperature during hydration does not exceed a limit of

$ \alpha (t)=\frac{Q(t)}{Q_{\infty }} $

. As the potential heat release is also difficult to measure as it takes a long time to fully hydrate and will only do so under perfect conditions, we identify it as an additional parameter in the model parameter estimation. For a detailed model description, see Appendix B. In addition to influencing the reaction speed, the computed temperature is used to verify that the maximum temperature during hydration does not exceed a limit of

![]() $ {T}_{\mathrm{limit}}=60{}^{\circ}\mathrm{C} $

. Above a certain temperature, the hydration reaction changes (e.g., secondary ettringite formation) and, additionally, volumetric changes in the cooling phase correlate with cracking and reduced mechanical properties. The maximum temperature is implemented as a constraint for the optimization problem (see Eq. (B19)).

$ {T}_{\mathrm{limit}}=60{}^{\circ}\mathrm{C} $

. Above a certain temperature, the hydration reaction changes (e.g., secondary ettringite formation) and, additionally, volumetric changes in the cooling phase correlate with cracking and reduced mechanical properties. The maximum temperature is implemented as a constraint for the optimization problem (see Eq. (B19)).

The evolution of the Young modulus

![]() $ E $

of a linear-elastic material model is modeled as a function of the DOH (details in Eq. (B17)). In a similar way, the compressive strength evolution is computed (see Eq. (B15)), which is utilized to determine a failure criterion based on the computed local stresses (Eq. (B20)) related to the time when the formwork can be removed. For a detailed description of the parameter evolution as a function of the DOH, see Appendix B.2. Figure 6 shows the influence of the different parameters. In addition to the formulations given in Carette and Staquet (Reference Carette and Staquet2016) which depend on a theoretical value of parameters for fully hydrated concrete at

$ E $

of a linear-elastic material model is modeled as a function of the DOH (details in Eq. (B17)). In a similar way, the compressive strength evolution is computed (see Eq. (B15)), which is utilized to determine a failure criterion based on the computed local stresses (Eq. (B20)) related to the time when the formwork can be removed. For a detailed description of the parameter evolution as a function of the DOH, see Appendix B.2. Figure 6 shows the influence of the different parameters. In addition to the formulations given in Carette and Staquet (Reference Carette and Staquet2016) which depend on a theoretical value of parameters for fully hydrated concrete at

![]() $ \alpha =1 $

, this work reformulates the equations, to depend on the 28 day values

$ \alpha =1 $

, this work reformulates the equations, to depend on the 28 day values

![]() $ {E}_{28} $

and

$ {E}_{28} $

and

![]() $ {f}_{c28} $

as well as the corresponding

$ {f}_{c28} $

as well as the corresponding

![]() $ {\alpha}_{28} $

which is obtained via a simulation. This allows us to directly use the experimental values as input. In Figure 6,

$ {\alpha}_{28} $

which is obtained via a simulation. This allows us to directly use the experimental values as input. In Figure 6,

![]() $ {\alpha}_{28} $

is set to

$ {\alpha}_{28} $

is set to

![]() $ 0.8 $

.

$ 0.8 $

.

Figure 6. Influence of parameters

![]() $ {\alpha}_t,{a}_E $

, and

$ {\alpha}_t,{a}_E $

, and

![]() $ {a}_{f_c} $

on the evolution the Young modulus and the compressive strength with respect to the degree of hydration

$ {a}_{f_c} $

on the evolution the Young modulus and the compressive strength with respect to the degree of hydration

![]() $ \alpha $

. Parameters:

$ \alpha $

. Parameters:

![]() $ {E}_{28}=50\hskip0.22em \mathrm{GPa} $

,

$ {E}_{28}=50\hskip0.22em \mathrm{GPa} $

,

![]() $ {a}_E=0.5 $

,

$ {a}_E=0.5 $

,

![]() $ {\alpha}_t=0.2 $

,

$ {\alpha}_t=0.2 $

,

![]() $ {a}_{f_c}=0.5 $

,

$ {a}_{f_c}=0.5 $

,

![]() $ {f}_{c28}=30\hskip0.22em \mathrm{N}/{\mathrm{mm}}^2 $

,

$ {f}_{c28}=30\hskip0.22em \mathrm{N}/{\mathrm{mm}}^2 $

,

![]() $ {\alpha}_{28}=0.8 $

.

$ {\alpha}_{28}=0.8 $

.

2.2.3. Beam design according to EC2

The design of the reinforcement and the computation of the load-bearing capacity is performed based on DIN EN 1992-1-1 (2011) according to Eq. (C7) with a detailed explanation in the Appendix C. To ensure that the design is realistic, the continuous cross section is transformed into a discrete number of bars with a diameter chosen from a list. This is visible in Figure 7 by the stepwise increase in cross sections. The admissible results are restricted by two constraints. One is coming from a minimal required compressive strength (Eq. (C8)), visualized as a dashed line. The other, based on the available space to place bars with admissible spacing (Eq. (C13)), visualized as the dotted line. Further details on the computation are given in Appendix C. A sensitivity study for the mutual interaction and the constraints is visualized in Figure 7. The parameters for the sensitivity study are given in Table D1 in Appendix D.

Figure 7. Influence of beam height, concrete compressive strength, and load in the center of the beam on the required steel. The dashed lines represent the minimum compressive strength constraint (Eq. (C8)), and the dotted lines represent the geometrical constraint from the spacing of the bars (Eq. (C13)).

2.2.4. Computation of GWP

The computation of the GWP is performed by multiplying the volume content of each individual material by its specific GWP. The values used in this study are extracted from Braga et al. (Reference Braga, Silvestre and de Brito2017) and listed in Table 2.

Table 2. Specific global warming potential of the raw materials used in the design

We note that the question of what exactly to include in the GWP computation is largely open. For example, the transport of materials, while non-negligible, is difficult in general to include. Furthermore, there are always local conditions (e.g., the GWP of the energy sources used in the cement production depends on the amount of green energy in that country). In addition, the time span (complete life cycle analysis vs. production) is a point of debate and finally the usage of by-products (slag is currently a by-product of steel manufacturing and thus its GWP is considered to be small). There are currently efforts, both at the national and international levels, to standardize the computation of the GWP or similar quantities. Once these are available, they can be readily integrated into the proposed approach. Such a standardized computation of the GWP can lead either to taxing GWP or to introducing a sustainability limit state, though this is an ongoing discussion in standardization committees.

2.3. Experimental data

This section describes the data used for learning the missing (probabilistic) links (detailed in Section 2.5) between the slag–binder mass ratio

![]() $ {r}_{\mathrm{sb}} $

and physical model parameters. The slag–binder mass ratio

$ {r}_{\mathrm{sb}} $

and physical model parameters. The slag–binder mass ratio

![]() $ {r}_{\mathrm{sb}} $

is the mass ratio between the amount of BFS and the binder (sum of BFS and OPC). The data are sourced from Gruyaert (Reference Gruyaert2011). In particular, we are concerned about the parameter estimation for the concrete homogenization discussed in Section 2.2.1 and the hydration model in Section 2.2.2.

$ {r}_{\mathrm{sb}} $

is the mass ratio between the amount of BFS and the binder (sum of BFS and OPC). The data are sourced from Gruyaert (Reference Gruyaert2011). In particular, we are concerned about the parameter estimation for the concrete homogenization discussed in Section 2.2.1 and the hydration model in Section 2.2.2.

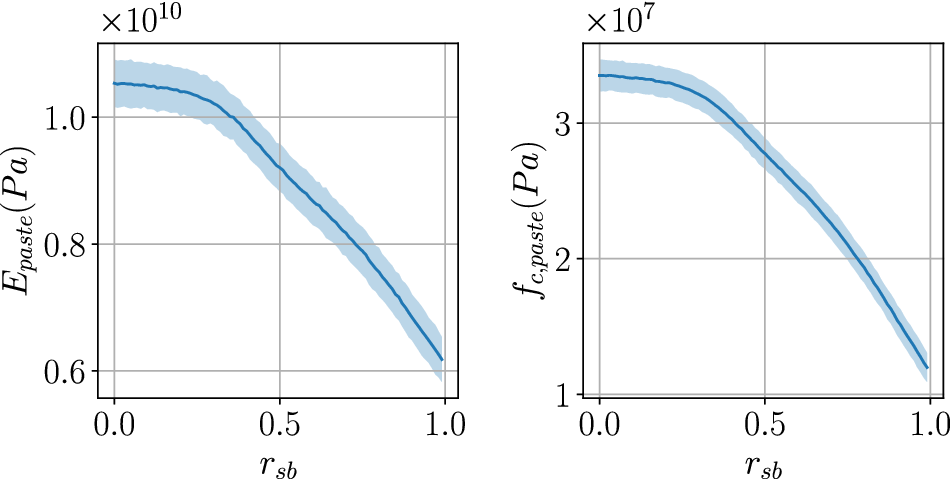

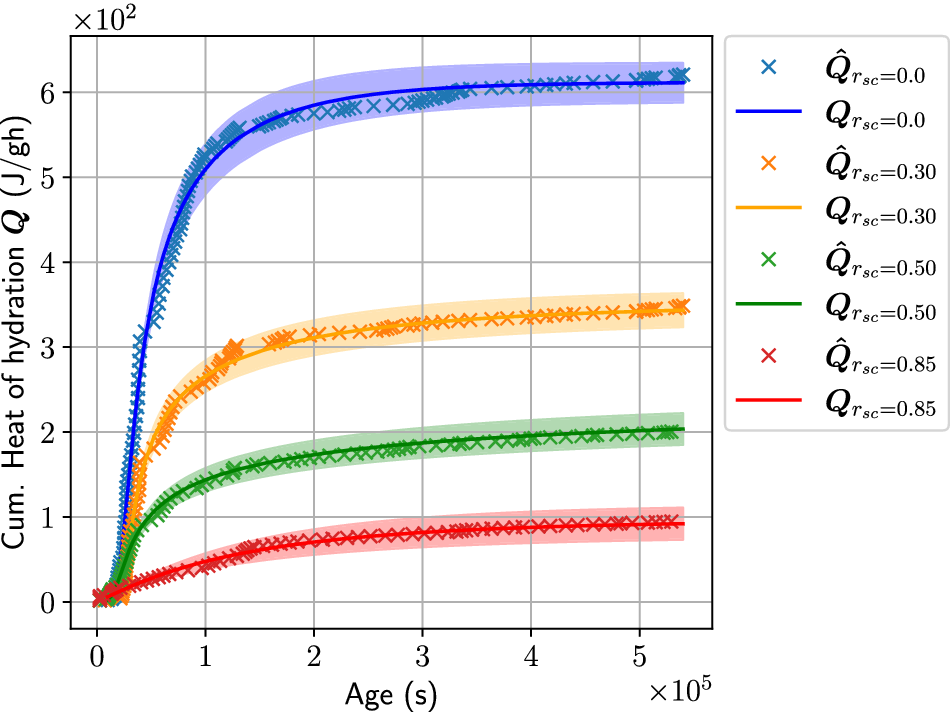

For concrete homogenization, six different tests for varying ratios

![]() $ {r}_{\mathrm{sb}}=\left\{\mathrm{0.0,0.15,0.3,0.5,0.7,0.85}\right\} $

are available for the concrete compressive strength

$ {r}_{\mathrm{sb}}=\left\{\mathrm{0.0,0.15,0.3,0.5,0.7,0.85}\right\} $

are available for the concrete compressive strength

![]() $ {f}_c $

after 28 days. For the concrete hydration, we utilize isothermal calorimetry data at

$ {f}_c $

after 28 days. For the concrete hydration, we utilize isothermal calorimetry data at

![]() $ 20{}^{\circ}\mathrm{C} $

. We have temporal evolution data of cumulative heat of hydration

$ 20{}^{\circ}\mathrm{C} $

. We have temporal evolution data of cumulative heat of hydration

![]() $ \hat{\boldsymbol{Q}} $

for four different values of

$ \hat{\boldsymbol{Q}} $

for four different values of

![]() $ {r}_{\mathrm{sb}}=\left\{\mathrm{0.0,0.30,0.50,0.85}\right\} $

. For details on other material parameters and phenomenological values used to obtain the data, the reader is directed to Gruyaert (Reference Gruyaert2011).

$ {r}_{\mathrm{sb}}=\left\{\mathrm{0.0,0.30,0.50,0.85}\right\} $

. For details on other material parameters and phenomenological values used to obtain the data, the reader is directed to Gruyaert (Reference Gruyaert2011).

2.3.1. Young’s modulus E based on fc

The dataset does not encompass information about the Young modulus. Given its significance for the FEM simulation, we resort to a phenomenological approximation derived from ACI Committee 363 (2010). This approximation relies on the compressive strength

![]() $ {f}_c $

and the density

$ {f}_c $

and the density

![]() $ \rho $

to estimate the Young modulus

$ \rho $

to estimate the Young modulus

$$ E=3,320\sqrt{f_c}+6,895{\left(\frac{\rho }{2,320}\right)}^{1.5}, $$

$$ E=3,320\sqrt{f_c}+6,895{\left(\frac{\rho }{2,320}\right)}^{1.5}, $$

with

![]() $ \rho $

in kilogram per cubic meter and

$ \rho $

in kilogram per cubic meter and

![]() $ {f}_c $

and

$ {f}_c $

and

![]() $ E $

in megapascals.

$ E $

in megapascals.

2.4. Model learning and optimization

The workflow illustrated in Figure 4, which builds the link between the parameters relevant to the concrete mix design and the KPIs involving the environmental impact and the structural performance, can be represented in terms of the probabilistic graph (Koller and Friedman, Reference Koller and Friedman2009) shown in Figure 8. As discussed in the Introduction (Section 1), the goal of the present study is to find the value of the design variables

![]() $ \boldsymbol{x} $

(concrete mix design and beam geometry) which minimizes the objective

$ \boldsymbol{x} $

(concrete mix design and beam geometry) which minimizes the objective

![]() $ \mathcal{O} $

(environmental impact), while satisfying a given set of constraints

$ \mathcal{O} $

(environmental impact), while satisfying a given set of constraints

![]() $ {\mathcal{C}}_i $

(beam design criterion, structural performance, etc.). This necessitates forward and backward information flow in the presented graph. The forward information flow is necessary to compute the KPIs for given values of the design variables and the backward information is essentially the sensitivities of the objective and the constraints with respect to the design variables that enable gradient-based optimization. Establishing the information flow poses challenges, which we attempt to tackle with the methods proposed as follows:

$ {\mathcal{C}}_i $

(beam design criterion, structural performance, etc.). This necessitates forward and backward information flow in the presented graph. The forward information flow is necessary to compute the KPIs for given values of the design variables and the backward information is essentially the sensitivities of the objective and the constraints with respect to the design variables that enable gradient-based optimization. Establishing the information flow poses challenges, which we attempt to tackle with the methods proposed as follows:

-

• Data-based model learning: The physics-based models discussed in Sections 2.2.1 and 2.2.2 are used to compute various KPIs (discussed in Figure 8). These depend on some model parameters denoted by

$ b $

which are unobserved (latent) in the experiments performed. The model parameters need not only be inferred on the basis of experimental data but also their dependence on the design variables

$ b $

which are unobserved (latent) in the experiments performed. The model parameters need not only be inferred on the basis of experimental data but also their dependence on the design variables

$ \boldsymbol{x} $

is required in order to be integrated into the optimization framework. In addition, the noise in the data (aleatoric) or the incompleteness of data (epistemic) introduces uncertainty. To this end, we propose learning probabilistic links by employing experimental data as discussed in detail in Section 2.5.

$ \boldsymbol{x} $

is required in order to be integrated into the optimization framework. In addition, the noise in the data (aleatoric) or the incompleteness of data (epistemic) introduces uncertainty. To this end, we propose learning probabilistic links by employing experimental data as discussed in detail in Section 2.5. -

• Optimization under uncertainty: The aforementioned uncertainties as well as additional randomness that might be present in the associated links necessitate reformulating the optimization problem (i.e., objectives/constraints) as one of OUU. In turn, this gives rise to new challenges in order to compute the needed derivatives of the KPIs with respect to the design variables which are discussed in Section 2.6.

Figure 8. Stochastic computational graph for the constrained optimization problem for performance-based concrete design: The circles represent stochastic nodes and rectangles deterministic nodes. The design variables are denoted by

![]() $ \boldsymbol{x}=\left({x}_1,{x}_2\right) $

. The vector

$ \boldsymbol{x}=\left({x}_1,{x}_2\right) $

. The vector

![]() $ b $

represents the unobserved model parameters which are needed in order to link the key performance indicators (KPIs)

$ b $

represents the unobserved model parameters which are needed in order to link the key performance indicators (KPIs)

![]() $ \boldsymbol{y}=\left({y}_o,{\left\{{y}_{c_i}\right\}}_{i=1}^I\right) $

with the design variables

$ \boldsymbol{y}=\left({y}_o,{\left\{{y}_{c_i}\right\}}_{i=1}^I\right) $

with the design variables

![]() $ \boldsymbol{x} $

. Here,

$ \boldsymbol{x} $

. Here,

![]() $ {y}_o $

represents the model output appearing in the optimization objective and

$ {y}_o $

represents the model output appearing in the optimization objective and

![]() $ {y}_{c_i} $

represents the model output appearing in the

$ {y}_{c_i} $

represents the model output appearing in the

![]() $ i\mathrm{th} $

constraint. The objective function is given by

$ i\mathrm{th} $

constraint. The objective function is given by

![]() $ \mathcal{O} $

and the

$ \mathcal{O} $

and the

![]() $ i\mathrm{th} $

constraint by

$ i\mathrm{th} $

constraint by

![]() $ {\mathcal{C}}_i $

. They are not differentiable with respect to

$ {\mathcal{C}}_i $

. They are not differentiable with respect to

![]() $ {x}_1,{x}_2 $

. (Hence,

$ {x}_1,{x}_2 $

. (Hence,

![]() $ {x}_1 $

and

$ {x}_1 $

and

![]() $ {x}_2 $

are dotted.) The variables

$ {x}_2 $

are dotted.) The variables

![]() $ \boldsymbol{\theta} $

are auxiliary and are used in the context of Variational Optimization discussed in Section 2.6.2. Several other deterministic nodes are present between the random variables

$ \boldsymbol{\theta} $

are auxiliary and are used in the context of Variational Optimization discussed in Section 2.6.2. Several other deterministic nodes are present between the random variables

![]() $ \boldsymbol{b} $

and the KPIs

$ \boldsymbol{b} $

and the KPIs

![]() $ \boldsymbol{y} $

, but they are omitted for brevity. The physical meaning of the variables used is detailed in Table 3.

$ \boldsymbol{y} $

, but they are omitted for brevity. The physical meaning of the variables used is detailed in Table 3.

2.5. Probabilistic links based on data and physical models

This section deals with learning a (probabilistic) model linking the design variables

![]() $ \boldsymbol{x} $

and the input parameters of the physics-based models, that is, concrete hydration and concrete homogenization. A graphical representation is contained in Figure 9. Therein,

$ \boldsymbol{x} $

and the input parameters of the physics-based models, that is, concrete hydration and concrete homogenization. A graphical representation is contained in Figure 9. Therein,

![]() $ {\left\{{\hat{\boldsymbol{x}}}^{(i)},{\hat{\boldsymbol{z}}}^{(i)}\right\}}_{i=1}^N $

denote the observed data pairs and

$ {\left\{{\hat{\boldsymbol{x}}}^{(i)},{\hat{\boldsymbol{z}}}^{(i)}\right\}}_{i=1}^N $

denote the observed data pairs and

![]() $ \boldsymbol{b} $

denotes a vector of unknown and unobserved parameters of the physics-based models and

$ \boldsymbol{b} $

denotes a vector of unknown and unobserved parameters of the physics-based models and

![]() $ \boldsymbol{z}\left(\boldsymbol{b}\right) $

the model outputs. The latter relate to an experimental observation

$ \boldsymbol{z}\left(\boldsymbol{b}\right) $

the model outputs. The latter relate to an experimental observation

![]() $ {\hat{\boldsymbol{z}}}^{(i)} $

as

$ {\hat{\boldsymbol{z}}}^{(i)} $

as

![]() $ {\hat{\boldsymbol{z}}}^{(i)}=\boldsymbol{z}\left({\boldsymbol{b}}^{(i)}\right)+\mathrm{noise} $

, which gives rise to a likelihood

$ {\hat{\boldsymbol{z}}}^{(i)}=\boldsymbol{z}\left({\boldsymbol{b}}^{(i)}\right)+\mathrm{noise} $

, which gives rise to a likelihood

![]() $ p\left({\hat{\boldsymbol{z}}}^{(i)}|\boldsymbol{z}\left({\boldsymbol{b}}^{(i)}\right)\right) $

. We further postulate a probabilistic relation between

$ p\left({\hat{\boldsymbol{z}}}^{(i)}|\boldsymbol{z}\left({\boldsymbol{b}}^{(i)}\right)\right) $

. We further postulate a probabilistic relation between

![]() $ \hat{\boldsymbol{x}} $

and

$ \hat{\boldsymbol{x}} $

and

![]() $ \boldsymbol{b} $

that is expressed by the conditional

$ \boldsymbol{b} $

that is expressed by the conditional

![]() $ p\left(\boldsymbol{b}|\boldsymbol{x};\varphi \right) $

, which depends on unknown parameters

$ p\left(\boldsymbol{b}|\boldsymbol{x};\varphi \right) $

, which depends on unknown parameters

![]() $ \boldsymbol{\varphi} $

. The physical meaning of the aforementioned variables and model links, as well as of the relevant data, is presented in Table 4. The elements introduced above suggest a Bayesian formulation that can quantify inferential uncertainties in the unknown parameters and propagate it in the model predictions (Koutsourelakis et al., Reference Koutsourelakis, Zabaras and Girolami2016), as detailed in the next section.

$ \boldsymbol{\varphi} $

. The physical meaning of the aforementioned variables and model links, as well as of the relevant data, is presented in Table 4. The elements introduced above suggest a Bayesian formulation that can quantify inferential uncertainties in the unknown parameters and propagate it in the model predictions (Koutsourelakis et al., Reference Koutsourelakis, Zabaras and Girolami2016), as detailed in the next section.

Figure 9. Probabilistic graph for the data and physical model-based model learning. The shaded nodes are the observed and unshaded are the unobserved (latent) nodes.

2.5.1. Expectation–maximization

Given

![]() $ N $

data pairs

$ N $

data pairs

![]() $ {\mathcal{D}}_N={\left\{{\hat{\boldsymbol{x}}}^{(i)},{\hat{\boldsymbol{z}}}^{(i)}\right\}}_{i=1}^N $

consisting of different concrete mixes and corresponding outputs, we would like to infer the corresponding

$ {\mathcal{D}}_N={\left\{{\hat{\boldsymbol{x}}}^{(i)},{\hat{\boldsymbol{z}}}^{(i)}\right\}}_{i=1}^N $

consisting of different concrete mixes and corresponding outputs, we would like to infer the corresponding

![]() $ {\boldsymbol{b}}^{(i)} $

, but more importantly the relation between

$ {\boldsymbol{b}}^{(i)} $

, but more importantly the relation between

![]() $ \boldsymbol{x} $

and

$ \boldsymbol{x} $

and

![]() $ \boldsymbol{b} $

which would be of relevance for downstream, optimization tasks discussed in Section 2.6.

$ \boldsymbol{b} $

which would be of relevance for downstream, optimization tasks discussed in Section 2.6.

We postulate a probabilistic relation between

![]() $ \boldsymbol{x} $

and

$ \boldsymbol{x} $

and

![]() $ \boldsymbol{b} $

in the form of a conditional density

$ \boldsymbol{b} $

in the form of a conditional density

![]() $ p\left(\boldsymbol{b}|\boldsymbol{x};\boldsymbol{\varphi} \right) $

parametrized by

$ p\left(\boldsymbol{b}|\boldsymbol{x};\boldsymbol{\varphi} \right) $

parametrized by

![]() $ \boldsymbol{\varphi} $

. For example,

$ \boldsymbol{\varphi} $

. For example,

where

![]() $ {\boldsymbol{f}}_{\varphi}\left(\boldsymbol{x}\right) $

represents a fully connected, feed-forward neural network parametrized by

$ {\boldsymbol{f}}_{\varphi}\left(\boldsymbol{x}\right) $

represents a fully connected, feed-forward neural network parametrized by

![]() $ \boldsymbol{w} $

(further details discussed in Section 3) and

$ \boldsymbol{w} $

(further details discussed in Section 3) and

![]() $ {\boldsymbol{S}}_{\varphi}\left(\boldsymbol{x}\right)={\boldsymbol{LL}}^T $

denotes the covariance matrix where

$ {\boldsymbol{S}}_{\varphi}\left(\boldsymbol{x}\right)={\boldsymbol{LL}}^T $

denotes the covariance matrix where

![]() $ \boldsymbol{L} $

is lower-triangular. Hence, the parameters

$ \boldsymbol{L} $

is lower-triangular. Hence, the parameters

![]() $ \boldsymbol{\varphi} $

to be learned correspond to

$ \boldsymbol{\varphi} $

to be learned correspond to

![]() $ \boldsymbol{\varphi} =\left\{\boldsymbol{w},\boldsymbol{L}\right\} $

. We assume that the observations

$ \boldsymbol{\varphi} =\left\{\boldsymbol{w},\boldsymbol{L}\right\} $

. We assume that the observations

![]() $ {\hat{\boldsymbol{z}}}^{(i)} $

are contaminated with Gaussian noise, which gives rise to the likelihood:

$ {\hat{\boldsymbol{z}}}^{(i)} $

are contaminated with Gaussian noise, which gives rise to the likelihood:

The covariance

![]() $ {\boldsymbol{\Sigma}}_{\mathrm{\ell}} $

depends on the data used and is discussed in Section 3.

$ {\boldsymbol{\Sigma}}_{\mathrm{\ell}} $

depends on the data used and is discussed in Section 3.

Given Eqs. (2) and (3), one can observe that

![]() $ {\boldsymbol{b}}^{(i)} $

(i.e., the unobserved model inputs for each concrete mix

$ {\boldsymbol{b}}^{(i)} $

(i.e., the unobserved model inputs for each concrete mix

![]() $ i $

) and

$ i $

) and

![]() $ \boldsymbol{\varphi} $

would need to be inferred simultaneously. In the following, we obtain point estimates

$ \boldsymbol{\varphi} $

would need to be inferred simultaneously. In the following, we obtain point estimates

![]() $ {\boldsymbol{\varphi}}^{\ast } $

for

$ {\boldsymbol{\varphi}}^{\ast } $

for

![]() $ \boldsymbol{\varphi} $

, by maximizing the marginal log-likelihood

$ \boldsymbol{\varphi} $

, by maximizing the marginal log-likelihood

![]() $ p\left({\mathcal{D}}_N|\boldsymbol{\varphi} \right) $

(also known as log-evidence), that is, the probability that the observed data arose from the model postulated. Hence, we get

$ p\left({\mathcal{D}}_N|\boldsymbol{\varphi} \right) $

(also known as log-evidence), that is, the probability that the observed data arose from the model postulated. Hence, we get

As this is analytically intractable, we propose employing variational Bayesian expectation–maximization (VB-EM) (Beal and Ghahramani, Reference Beal and Ghahramani2003) according to which a lower bound

![]() $ \mathrm{\mathcal{F}} $

to the log-evidence (called evidence lower bound [ELBO]) is constructed with the help of auxiliary densities

$ \mathrm{\mathcal{F}} $

to the log-evidence (called evidence lower bound [ELBO]) is constructed with the help of auxiliary densities

![]() $ {h}_i\left({\boldsymbol{b}}^{(i)}\right) $

on the unobserved variables

$ {h}_i\left({\boldsymbol{b}}^{(i)}\right) $

on the unobserved variables

![]() $ {\boldsymbol{b}}^{(i)} $

:

$ {\boldsymbol{b}}^{(i)} $

:

$$ \log \hskip0.35em p\left({\mathcal{D}}_N|\varphi \right)\ge \underset{=\mathrm{\mathcal{F}}\left({h}_{1:N},\boldsymbol{\varphi} \right)}{\underbrace{\sum \limits_{i=1}^N{\unicode{x1D53C}}_{h_i\left({\boldsymbol{b}}^{(i)}\right)}\left[\log \frac{p\left({\hat{\boldsymbol{z}}}^{(i)}|\boldsymbol{z}\left({\boldsymbol{b}}^{(i)}\right)\right)p\left({\boldsymbol{b}}^{(i)}|{\boldsymbol{x}}^{(i)};\boldsymbol{\varphi} \right)}{h_i\left({\boldsymbol{b}}^{(i)}\right)}\right]}}, $$

$$ \log \hskip0.35em p\left({\mathcal{D}}_N|\varphi \right)\ge \underset{=\mathrm{\mathcal{F}}\left({h}_{1:N},\boldsymbol{\varphi} \right)}{\underbrace{\sum \limits_{i=1}^N{\unicode{x1D53C}}_{h_i\left({\boldsymbol{b}}^{(i)}\right)}\left[\log \frac{p\left({\hat{\boldsymbol{z}}}^{(i)}|\boldsymbol{z}\left({\boldsymbol{b}}^{(i)}\right)\right)p\left({\boldsymbol{b}}^{(i)}|{\boldsymbol{x}}^{(i)};\boldsymbol{\varphi} \right)}{h_i\left({\boldsymbol{b}}^{(i)}\right)}\right]}}, $$

where

![]() $ {\unicode{x1D53C}}_{h_i\left({\boldsymbol{b}}^{(i)}\right)}\left[\cdot \right] $

denote the expectation with respect to the auxiliary densities

$ {\unicode{x1D53C}}_{h_i\left({\boldsymbol{b}}^{(i)}\right)}\left[\cdot \right] $

denote the expectation with respect to the auxiliary densities

![]() $ {h}_i\left({\boldsymbol{b}}^{(i)}\right) $

on the unobserved variables

$ {h}_i\left({\boldsymbol{b}}^{(i)}\right) $

on the unobserved variables

![]() $ {\boldsymbol{b}}^{(i)} $

. Eq. (5) suggests the following iterative scheme where one alternates between the steps:

$ {\boldsymbol{b}}^{(i)} $

. Eq. (5) suggests the following iterative scheme where one alternates between the steps:

-

• E-step: Fix

$ \boldsymbol{\varphi} $

and maximize

$ \boldsymbol{\varphi} $

and maximize

$ \mathrm{\mathcal{F}} $

with respect to

$ \mathrm{\mathcal{F}} $

with respect to

$ {h}_i\left({\boldsymbol{b}}^{(i)}\right) $

. It can be readily shown (Bishop and Nasrabadi, Reference Bishop and Nasrabadi2006) that optimality is achieved by the conditional posterior, that is,

$ {h}_i\left({\boldsymbol{b}}^{(i)}\right) $

. It can be readily shown (Bishop and Nasrabadi, Reference Bishop and Nasrabadi2006) that optimality is achieved by the conditional posterior, that is,

which makes the inequality in Eq. (5) tight. Since the likelihood is not tractable as it involves a physics-based solver, we have used Markov chain Monte Carlo (MCMC) to sample from the conditional posterior (see Section 3).

-

• M-step: Given

$ {\left\{{h}_i\left({\boldsymbol{b}}^{(i)}\right)\right\}}_{i=1}^N $

, maximize

$ {\left\{{h}_i\left({\boldsymbol{b}}^{(i)}\right)\right\}}_{i=1}^N $

, maximize

$ \mathrm{\mathcal{F}} $

with respect to

$ \mathrm{\mathcal{F}} $

with respect to

$ \boldsymbol{\varphi} $

.

$ \boldsymbol{\varphi} $

.

This requires derivatives of

![]() $ \mathrm{\mathcal{F}} $

, that is,

$ \mathrm{\mathcal{F}} $

, that is,

$$ \frac{\mathrm{\partial \mathcal{F}}}{\partial \boldsymbol{\varphi}}=\sum \limits_{i=1}^N{\unicode{x1D53C}}_{h_i}\left[\frac{\partial \log p\left({\boldsymbol{b}}^{(i)}|{\boldsymbol{x}}^{(i)};\boldsymbol{\varphi} \right)}{\partial \boldsymbol{\varphi}}\right]. $$

$$ \frac{\mathrm{\partial \mathcal{F}}}{\partial \boldsymbol{\varphi}}=\sum \limits_{i=1}^N{\unicode{x1D53C}}_{h_i}\left[\frac{\partial \log p\left({\boldsymbol{b}}^{(i)}|{\boldsymbol{x}}^{(i)};\boldsymbol{\varphi} \right)}{\partial \boldsymbol{\varphi}}\right]. $$

Given the MCMC samples

![]() $ {\left\{{\boldsymbol{b}}_m^{(i)}\right\}}_{m=1}^M $

from the E-step, these can be approximated as

$ {\left\{{\boldsymbol{b}}_m^{(i)}\right\}}_{m=1}^M $

from the E-step, these can be approximated as

$$ \frac{\mathrm{\partial \mathcal{F}}}{\partial \varphi}\approx \sum \limits_{i=1}^N\frac{1}{M}\sum \limits_{m=1}^M\frac{\partial \log \hskip0.35em p\left({\boldsymbol{b}}_m^{(i)}|{\boldsymbol{x}}^{(i)};\varphi \right)}{\partial \varphi }. $$

$$ \frac{\mathrm{\partial \mathcal{F}}}{\partial \varphi}\approx \sum \limits_{i=1}^N\frac{1}{M}\sum \limits_{m=1}^M\frac{\partial \log \hskip0.35em p\left({\boldsymbol{b}}_m^{(i)}|{\boldsymbol{x}}^{(i)};\varphi \right)}{\partial \varphi }. $$

Due to the Monte Carlo noise in these estimates, a stochastic gradient ascent algorithm is utilized. In particular, the ADAM optimizer (Kingma and Ba, Reference Kingma and Ba2014) was used from the PyTorch (Paszke et al., Reference Paszke, Gross, Massa, Lerer, Bradbury, Chanan, Killeen, Lin, Gimelshein, Antiga, Desmaison, Kopf, Yang, DeVito, Raison, Tejani, Chilamkurthy, Steiner, Fang, Bai and Chintala2019) library to capitalize on its auto-differentiation capabilities.

The major elements of the method are summarized in Algorithm 1. We note here that training complexity grows linearly with the number of training samples

![]() $ N $

due to the densities

$ N $

due to the densities

![]() $ {h}_i $

associated with each data point (for-loop of Algorithm 1), but this can be embarrassingly parallelizable.Footnote 1

$ {h}_i $

associated with each data point (for-loop of Algorithm 1), but this can be embarrassingly parallelizable.Footnote 1

Algorithm 1 Data-based model learning.

1: Input: Data

![]() $ {\mathcal{D}}_N={\left\{{\hat{\boldsymbol{x}}}^{(i)},{\hat{\boldsymbol{z}}}^{(i)}\right\}}_{i=1}^N $

, model form

$ {\mathcal{D}}_N={\left\{{\hat{\boldsymbol{x}}}^{(i)},{\hat{\boldsymbol{z}}}^{(i)}\right\}}_{i=1}^N $

, model form

![]() $ p\left(\boldsymbol{b}|\boldsymbol{x};\boldsymbol{\varphi} \right) $

, likelihood noise

$ p\left(\boldsymbol{b}|\boldsymbol{x};\boldsymbol{\varphi} \right) $

, likelihood noise

![]() $ {\boldsymbol{\Sigma}}_l $

,

$ {\boldsymbol{\Sigma}}_l $

,

![]() $ \hskip0.5em n=0 $

$ \hskip0.5em n=0 $

2: Output: Learned parameter

![]() $ {\boldsymbol{\varphi}}^{\ast } $

$ {\boldsymbol{\varphi}}^{\ast } $

3: Initialize the parameters

![]() $ \boldsymbol{\varphi} $

$ \boldsymbol{\varphi} $

4: while ELBO not converged do

Expectation Step (E-step):

5: for

![]() $ i=1 $

to

$ i=1 $

to

![]() $ N $

do

$ N $

do

6: MCMC to get the posterior probability

![]() $ p\left({\boldsymbol{b}}^{(i)}|{\mathcal{D}}_N,{\boldsymbol{\varphi}}^n\right) $

using current

$ p\left({\boldsymbol{b}}^{(i)}|{\mathcal{D}}_N,{\boldsymbol{\varphi}}^n\right) $

using current

![]() $ {\boldsymbol{\varphi}}^n $

⊳ Eq. (6)

$ {\boldsymbol{\varphi}}^n $

⊳ Eq. (6)

7: end for

Maximization Step (M-step):

8: Monte Carlo gradient estimate ⊳ Eq. (9)

9:

![]() $ {\boldsymbol{\varphi}}^{n+1}=\arg {\max}_{\boldsymbol{\varphi}}\;\mathrm{\mathcal{F}}\left({h}_{1:N},{\boldsymbol{\varphi}}^n\right) $

$ {\boldsymbol{\varphi}}^{n+1}=\arg {\max}_{\boldsymbol{\varphi}}\;\mathrm{\mathcal{F}}\left({h}_{1:N},{\boldsymbol{\varphi}}^n\right) $

10:

![]() $ n\leftarrow n+1 $

$ n\leftarrow n+1 $

11: end while

Model Predictions: The VB-EM based model learning scheme discussed above can be carried out in an offline phase. Once the model is learned, we are interested in the proposed model’s ability to produce probabilistic predictions (online stage) that reflect the various sources of uncertainty discussed previously. For learnt parameters

![]() $ {\boldsymbol{\varphi}}^{\ast } $

, the predictive posterior density

$ {\boldsymbol{\varphi}}^{\ast } $

, the predictive posterior density

![]() $ {p}_{\mathrm{pred}}\left(\boldsymbol{z}|\mathcal{D},{\boldsymbol{\varphi}}^{\ast}\right) $

on the solution vector

$ {p}_{\mathrm{pred}}\left(\boldsymbol{z}|\mathcal{D},{\boldsymbol{\varphi}}^{\ast}\right) $

on the solution vector

![]() $ \boldsymbol{z} $

of a physical model is as follows:

$ \boldsymbol{z} $

of a physical model is as follows:

$$ {\displaystyle \begin{array}{l}{p}_{\mathrm{pred}}\left(\boldsymbol{z}|\mathcal{D},{\boldsymbol{\varphi}}^{\ast}\right)=\int p\left(\boldsymbol{z},\boldsymbol{b}|\mathcal{D},{\boldsymbol{\varphi}}^{\ast}\right)\mathrm{d}\boldsymbol{b}\\ {}\hskip8.9em =\int p\left(\boldsymbol{z}|\boldsymbol{b}\right)p\left(\boldsymbol{b}|\mathcal{D},{\boldsymbol{\varphi}}^{\ast}\right)\mathrm{d}\boldsymbol{b}\end{array}} $$

$$ {\displaystyle \begin{array}{l}{p}_{\mathrm{pred}}\left(\boldsymbol{z}|\mathcal{D},{\boldsymbol{\varphi}}^{\ast}\right)=\int p\left(\boldsymbol{z},\boldsymbol{b}|\mathcal{D},{\boldsymbol{\varphi}}^{\ast}\right)\mathrm{d}\boldsymbol{b}\\ {}\hskip8.9em =\int p\left(\boldsymbol{z}|\boldsymbol{b}\right)p\left(\boldsymbol{b}|\mathcal{D},{\boldsymbol{\varphi}}^{\ast}\right)\mathrm{d}\boldsymbol{b}\end{array}} $$

$$ \approx \frac{1}{K}\sum \limits_{k=1}^K\boldsymbol{z}\left({\boldsymbol{b}}^{(k)}\right). $$

$$ \approx \frac{1}{K}\sum \limits_{k=1}^K\boldsymbol{z}\left({\boldsymbol{b}}^{(k)}\right). $$

The second of the densities is the conditional (Eq. (2)) substituted with the learned

![]() $ {\boldsymbol{\varphi}}^{\ast } $

and the first of the densities is simply a Dirac-delta that corresponds to the solution of the physical model, that is,

$ {\boldsymbol{\varphi}}^{\ast } $

and the first of the densities is simply a Dirac-delta that corresponds to the solution of the physical model, that is,

![]() $ \boldsymbol{z}\left(\boldsymbol{b}\right) $

. The intractable integral can be approximated by Monte Carlo using

$ \boldsymbol{z}\left(\boldsymbol{b}\right) $

. The intractable integral can be approximated by Monte Carlo using

![]() $ K $

samples of

$ K $

samples of

![]() $ \boldsymbol{b} $

drawn from

$ \boldsymbol{b} $

drawn from

![]() $ p\left(\boldsymbol{b}|\mathcal{D},{\boldsymbol{\varphi}}^{\ast}\right) $

.

$ p\left(\boldsymbol{b}|\mathcal{D},{\boldsymbol{\varphi}}^{\ast}\right) $

.

2.6. Optimization under uncertainty

With the relevant missing links identified as detailed in the previous section, the optimization can be performed on the basis of Figure 8. We seek to optimize the objective function

![]() $ \mathcal{O} $

subject to constraints

$ \mathcal{O} $

subject to constraints

![]() $ \mathcal{C}=\left({\mathcal{C}}_1,\dots, {\mathcal{C}}_I\right) $

that are dependent on uncertain parameters

$ \mathcal{C}=\left({\mathcal{C}}_1,\dots, {\mathcal{C}}_I\right) $

that are dependent on uncertain parameters

![]() $ \boldsymbol{b} $

, which in turn are dependent on the design variables

$ \boldsymbol{b} $

, which in turn are dependent on the design variables

![]() $ \boldsymbol{x} $

. In this setting, the general parameter-dependent nonlinear constrained optimization problem can be stated as

$ \boldsymbol{x} $

. In this setting, the general parameter-dependent nonlinear constrained optimization problem can be stated as

$$ {\displaystyle \begin{array}{l}\underset{\boldsymbol{x}}{\min}\;\mathcal{O}\left({y}_o\left(\boldsymbol{x},\boldsymbol{b}\right)\right),\\ {}\mathrm{s}.\mathrm{t}.\hskip0.35em {\mathcal{C}}_i\left({y}_{c_i}\left(\boldsymbol{x},\boldsymbol{b}\right)\right)\le 0,\hskip0.35em \forall i\in \left\{1,\dots, I\right\},\end{array}} $$

$$ {\displaystyle \begin{array}{l}\underset{\boldsymbol{x}}{\min}\;\mathcal{O}\left({y}_o\left(\boldsymbol{x},\boldsymbol{b}\right)\right),\\ {}\mathrm{s}.\mathrm{t}.\hskip0.35em {\mathcal{C}}_i\left({y}_{c_i}\left(\boldsymbol{x},\boldsymbol{b}\right)\right)\le 0,\hskip0.35em \forall i\in \left\{1,\dots, I\right\},\end{array}} $$

where

![]() $ \boldsymbol{x} $

is a

$ \boldsymbol{x} $

is a

![]() $ d $

-dimensional vector of design variables and

$ d $

-dimensional vector of design variables and

![]() $ \mathbf{b} $

are the model parameter discussed in the previous section. It can be observed that the optimization problem is nontrivial because of three main reasons: (a) the presence of the constraints (Section 2.6.1), (b) the presence of random variables

$ \mathbf{b} $

are the model parameter discussed in the previous section. It can be observed that the optimization problem is nontrivial because of three main reasons: (a) the presence of the constraints (Section 2.6.1), (b) the presence of random variables

![]() $ \boldsymbol{b} $

in the objective and the constraint(s) (Section 2.6.1), and (c) non-differentiability of

$ \boldsymbol{b} $

in the objective and the constraint(s) (Section 2.6.1), and (c) non-differentiability of

![]() $ {y}_o,{y}_{c_i} $

and therefore of

$ {y}_o,{y}_{c_i} $

and therefore of

![]() $ \mathcal{O} $

and

$ \mathcal{O} $

and

![]() $ {\mathcal{C}}_i $

.

$ {\mathcal{C}}_i $

.

2.6.1. Handling stochasticity and constraints

Since the solution of Eq. (12) depends on the random variables

![]() $ \boldsymbol{b} $

, the objective and constraints are random variables as well and we have to take their random variability into account. We do this by reverting to a robust optimization problem (Ben-Tal and Nemirovski, Reference Ben-Tal and Nemirovski1999; Bertsimas et al., Reference Bertsimas, Brown and Caramanis2011), with expected values denoted by

$ \boldsymbol{b} $

, the objective and constraints are random variables as well and we have to take their random variability into account. We do this by reverting to a robust optimization problem (Ben-Tal and Nemirovski, Reference Ben-Tal and Nemirovski1999; Bertsimas et al., Reference Bertsimas, Brown and Caramanis2011), with expected values denoted by

![]() $ \unicode{x1D53C}\left[\cdot \right] $

being the robustness measure to integrate out the uncertainties. In this manner, the optimization problem in Eq. (12) is reformulated as

$ \unicode{x1D53C}\left[\cdot \right] $

being the robustness measure to integrate out the uncertainties. In this manner, the optimization problem in Eq. (12) is reformulated as

$$ {\displaystyle \begin{array}{l}\underset{\boldsymbol{x}}{\min}\;{\unicode{x1D53C}}_{\boldsymbol{b}}\left[\mathcal{O}\left({y}_o\left(\boldsymbol{x},\boldsymbol{b}\right)\right)\right],\\ {}\mathrm{s}.\mathrm{t}.\hskip0.35em {\unicode{x1D53C}}_{\mathbf{b}}\left[{\mathcal{C}}_i\left({y}_{c_i}\left(\boldsymbol{x},\boldsymbol{b}\right)\right)\right]\le 0,\hskip0.35em \forall i\in \left\{1,\dots, I\right\}.\end{array}} $$

$$ {\displaystyle \begin{array}{l}\underset{\boldsymbol{x}}{\min}\;{\unicode{x1D53C}}_{\boldsymbol{b}}\left[\mathcal{O}\left({y}_o\left(\boldsymbol{x},\boldsymbol{b}\right)\right)\right],\\ {}\mathrm{s}.\mathrm{t}.\hskip0.35em {\unicode{x1D53C}}_{\mathbf{b}}\left[{\mathcal{C}}_i\left({y}_{c_i}\left(\boldsymbol{x},\boldsymbol{b}\right)\right)\right]\le 0,\hskip0.35em \forall i\in \left\{1,\dots, I\right\}.\end{array}} $$

The expected objective value will yield a design that performs best on average, while the reformulated constraints imply feasibility on average.

One can cast this constrained problem to an unconstrained one using penalty-based methods (Nocedal and Wright, Reference Nocedal and Wright1999; Wang and Spall, Reference Wang and Spall2003). In particular, we define an augmented objective function

![]() $ \mathrm{\mathcal{L}} $

as follows:

$ \mathrm{\mathcal{L}} $

as follows:

where

![]() $ {\lambda}_i>0 $

is the penalty parameter for the

$ {\lambda}_i>0 $

is the penalty parameter for the

![]() $ i\mathrm{th} $

constraint. The larger the

$ i\mathrm{th} $

constraint. The larger the

![]() $ {\lambda}_i $

’s are, the more strictly the constraints are enforced. Incorporating the augmented objective (Eq. (14)) in the reformulated optimization problem (Eq. (13)), one can arrive at the following penalized objective:

$ {\lambda}_i $

’s are, the more strictly the constraints are enforced. Incorporating the augmented objective (Eq. (14)) in the reformulated optimization problem (Eq. (13)), one can arrive at the following penalized objective:

leading to the following equivalent, unconstrained optimization problem:

The expectation above is approximated by Monte Carlo which induces noise and necessitates the use of stochastic optimization methods (discussed in detail in the sequel). Furthermore, we propose to alleviate the dependence on the penalty parameters

![]() $ \lambda $

by using the sequential unconstrained minimization technique algorithm (Fiacco and McCormick, Reference Fiacco and McCormick1990), which has been shown to work with nonlinear constraints (Liuzzi et al., Reference Liuzzi, Lucidi and Sciandrone2010). The algorithm considers a strictly increasing sequence

$ \lambda $

by using the sequential unconstrained minimization technique algorithm (Fiacco and McCormick, Reference Fiacco and McCormick1990), which has been shown to work with nonlinear constraints (Liuzzi et al., Reference Liuzzi, Lucidi and Sciandrone2010). The algorithm considers a strictly increasing sequence

![]() $ \left\{{\boldsymbol{\lambda}}_n\right\} $

with

$ \left\{{\boldsymbol{\lambda}}_n\right\} $

with

![]() $ {\boldsymbol{\lambda}}_n\to \infty $

. Fiacco and McCormick (Reference Fiacco and McCormick1990) proved that when

$ {\boldsymbol{\lambda}}_n\to \infty $

. Fiacco and McCormick (Reference Fiacco and McCormick1990) proved that when

![]() $ {\boldsymbol{\lambda}}_n\to \infty $

, then the sequence of corresponding minima, say

$ {\boldsymbol{\lambda}}_n\to \infty $

, then the sequence of corresponding minima, say

![]() $ \left\{{\boldsymbol{x}}_n^{\ast}\right\} $

, converges to a global minimizer

$ \left\{{\boldsymbol{x}}_n^{\ast}\right\} $

, converges to a global minimizer

![]() $ {\boldsymbol{x}}^{\ast } $

of the original constrained problem. This adaptation of the penalty parameters helps to balance the need to satisfy the constraints with the need to make progress toward the optimal solution.

$ {\boldsymbol{x}}^{\ast } $

of the original constrained problem. This adaptation of the penalty parameters helps to balance the need to satisfy the constraints with the need to make progress toward the optimal solution.

2.6.2. Non-differentiable objective and constraints

We note that the approximation of the objective in Eq. (16) with Monte Carlo requires multiple runs of the expensive, forward, physics-based models involved, at each iteration of the optimization algorithm. In order to reduce the number of iterations required, especially when the dimension of the design space is higher, derivatives of the objective would be needed. In cases where the dimension of the design vector

![]() $ \boldsymbol{x} $

is high, gradient-based methods are necessary. In turn, the computation of derivatives of

$ \boldsymbol{x} $

is high, gradient-based methods are necessary. In turn, the computation of derivatives of

![]() $ \mathrm{\mathcal{L}} $

would necessitate derivatives of the outputs of the forward models with respect to the optimization variables

$ \mathrm{\mathcal{L}} $

would necessitate derivatives of the outputs of the forward models with respect to the optimization variables

![]() $ \boldsymbol{x} $

. The latter are, however, unavailable due to the non-differentiability of the forward models. This is a common, restrictive feature of several physics-based simulators which in most cases of engineering practice are implemented in legacy codes that are run as black boxes. This lack of differentiability has been recognized as a significant roadblock by several researchers in recent years (Beaumont et al., Reference Beaumont, Zhang and Balding2002; Marjoram et al., Reference Marjoram, Molitor, Plagnol and Tavaré2003; Louppe et al., Reference Louppe, Hermans and Cranmer2019; Cranmer et al., Reference Cranmer, Brehmer and Louppe2020; Shirobokov et al., Reference Shirobokov, Belavin, Kagan, Ustyuzhanin and Baydin2020; Lucor et al., Reference Lucor, Agrawal and Sergent2022; Oliveira et al., Reference Oliveira, Scalzo, Kohn, Cripps, Hardman, Close, Taghavi and Lemckert2022; Agrawal and Koutsourelakis, Reference Agrawal and Koutsourelakis2023). In this work, we advocate Variational Optimization (Staines and Barber, Reference Staines and Barber2013; Bird et al., Reference Bird, Kunze and Barber2018), which employs a differentiable bound on the non-differentiable objective. In the context of the current problem, we can write

$ \boldsymbol{x} $

. The latter are, however, unavailable due to the non-differentiability of the forward models. This is a common, restrictive feature of several physics-based simulators which in most cases of engineering practice are implemented in legacy codes that are run as black boxes. This lack of differentiability has been recognized as a significant roadblock by several researchers in recent years (Beaumont et al., Reference Beaumont, Zhang and Balding2002; Marjoram et al., Reference Marjoram, Molitor, Plagnol and Tavaré2003; Louppe et al., Reference Louppe, Hermans and Cranmer2019; Cranmer et al., Reference Cranmer, Brehmer and Louppe2020; Shirobokov et al., Reference Shirobokov, Belavin, Kagan, Ustyuzhanin and Baydin2020; Lucor et al., Reference Lucor, Agrawal and Sergent2022; Oliveira et al., Reference Oliveira, Scalzo, Kohn, Cripps, Hardman, Close, Taghavi and Lemckert2022; Agrawal and Koutsourelakis, Reference Agrawal and Koutsourelakis2023). In this work, we advocate Variational Optimization (Staines and Barber, Reference Staines and Barber2013; Bird et al., Reference Bird, Kunze and Barber2018), which employs a differentiable bound on the non-differentiable objective. In the context of the current problem, we can write

$$ \underset{\boldsymbol{x}}{\min}\int \left(\mathrm{\mathcal{L}}\left(\boldsymbol{x},\boldsymbol{b},\boldsymbol{\lambda} \right)\right)\hskip0.35em p\left(\boldsymbol{b}|\boldsymbol{x},\boldsymbol{\varphi} \right)\mathrm{d}\boldsymbol{b}\le \underset{U\left(\boldsymbol{\theta} \right)}{\underbrace{\int \left(\mathrm{\mathcal{L}}\left(\boldsymbol{x},\boldsymbol{b},\boldsymbol{\lambda} \right)\right)p\left(\boldsymbol{b}|\boldsymbol{x},\boldsymbol{\varphi} \right)q\left(\boldsymbol{x}|\boldsymbol{\theta} \right)\hskip0.35em \mathrm{d}\boldsymbol{b}\hskip0.35em \mathrm{d}\boldsymbol{x}}}, $$

$$ \underset{\boldsymbol{x}}{\min}\int \left(\mathrm{\mathcal{L}}\left(\boldsymbol{x},\boldsymbol{b},\boldsymbol{\lambda} \right)\right)\hskip0.35em p\left(\boldsymbol{b}|\boldsymbol{x},\boldsymbol{\varphi} \right)\mathrm{d}\boldsymbol{b}\le \underset{U\left(\boldsymbol{\theta} \right)}{\underbrace{\int \left(\mathrm{\mathcal{L}}\left(\boldsymbol{x},\boldsymbol{b},\boldsymbol{\lambda} \right)\right)p\left(\boldsymbol{b}|\boldsymbol{x},\boldsymbol{\varphi} \right)q\left(\boldsymbol{x}|\boldsymbol{\theta} \right)\hskip0.35em \mathrm{d}\boldsymbol{b}\hskip0.35em \mathrm{d}\boldsymbol{x}}}, $$

where

![]() $ q\left(\boldsymbol{x}|\boldsymbol{\theta} \right) $

is a density over the design variables