1 Introduction

Revenge is a kind of wild justice, which the more a man’s nature runs to, the more ought law to weed it out. (Francis Bacon).

Imagine two employees. One stole money from her boss because he cheated her out of her hard-earned money, for example by not covering her business expenses as promised. The other stole from her boss because he treated her unfairly, for example by forbidding her, unlike others, from working overtime. Here, we examined whether people who are mistreated in different ways allow themselves to cheat those who wronged them to get revenge, regardless of the type of mistreatment. According to the Oxford English Dictionary, in an act of revenge, individuals respond to a wrong done to them or to someone they care about by harming the transgressor. We empirically tested whether the behavioral reaction to mistreatment (revenge) and its associated physiological arousal depend on the type of mistreatment.

According to a recent psychological account of dishonesty, when the possibility to cheat presents itself, people find themselves in a dilemma (Ayal et al., 2015). On the one hand, people strive to maintain a positive self-image (Jones, 1973; Rosenberg, 1979), and they value morality and honesty very highly (Chaiken et al., 1996; Greenwald, 1980; Sanitoso et al., 1990). On the other hand, most people are tempted to increase their personal gain through cheating, even though the extent of cheating is usually rather small (Gino, Norton & Ariely, 2009; Hochman et al., 2016; Mazar, Amir & Ariely, 2008; Ordóñez et al., 2009; Shalvi et al., 2011). This conflict creates a type of psychological distress termed ethical dissonance (Ayal & Gino, 2011; Barkan et al., 2015), which is accompanied by physiological arousal (Hochman et al., 2016). This unpleasant arousal may serve as a moral gatekeeper that impedes dishonest behavior. Thus, benefiting from cheating requires tension-reduction mechanisms (e.g., Barkan et al., 2012; Jordan et al., 2011; Peer et al., 2014; Sachdeva et al., 2009).

Behavioral ethics research shows that justifications can serve as a psychological tension-reduction mechanism for ethical dissonance (Shalvi et al., 2015). Justifications have been found both to facilitate dishonesty and to increase the magnitude of cheating (e.g., Ariely, 2012; Ayal & Gino, 2011; Gino & Ariely, 2012; Shalvi et al., 2011; Mazar et al., 2008), while reducing feelings of guilt (Gino et al., 2013; Gneezy et al., 2014). Justifications may also reduce the physiological arousal elicited by cheating behavior (Hochman et al., 2016; Hochman et al., 2021).

According to Shalvi et al. (2015), these self-serving justifications can emerge before or after ethical violations. Pre-violation justifications, such as moral licensing (Merritt et al., 2010; Monin & Miller, 2001; Sachdeva et al., 2009), lessen the anticipated threat to the moral self by redefining questionable behaviors as excusable. Post-violation justifications, such as physical or symbolic cleansing (Gneezy et al., 2012; Gneezy et al., 2014; Mitkidis et al., 2017; Monin & Miller, 2001; Tetlock et al., 2000), ease the threat to the moral self through compensations that balance or lessen violations.

The current study was designed to examine whether, and to what extent, getting revenge against someone who engaged in mistreatment can serve as a pre-violation justification that increases dishonesty while decreasing the associated feelings of guilt. Specifically, we examined whether different types of mistreatment result in the same type of revenge. For instance, similar to our workplace example above, imagine two players who were mistreated in different ways. Player A was cheated by a classmate, while Player B was treated unfairly by a colleague. As luck would have it, both players have a chance to get back at their offender. Player A will likely feel justified in cheating her classmate back, since the classmate cheated her first. The question is whether Player B would feel equally comfortable and entitled to cheat her colleague simply because he treated her unfairly. To test this question, we designed our experiment to differentiate between these two types of mistreatment and to ask whether they differed not only in the extent of cheating they elicited but also in the psychological distress they evoked. To the best of our knowledge, the distinction between unfairness and dishonesty and their effects on subsequent cheating behavior has rarely been explored.

1.1 The (iffy) link between mistreatment and revenge

Some empirical evidence suggests that cheating may be an acceptable form of revenge for mistreatment. Being mistreated can lead to negative feelings (Pillutla & Murnighan, 1996; Van der Zee et al., 2016). In turn, these negative feelings may increase unethical behavior. For example, Motro et al. (2016) primed participants with guilt, anger, or a neutral feeling. Participants then completed the matrices task (Mazar et al., 2008), in which they could cheat to increase their monetary gain. Participants in the anger condition cheated more than twice as much as those in the neutral condition and more than eight times as much as those in the guilt condition (Motro et al., 2016). Similarly, Gneezy and Ariely (2010) found that even a subtle form of mistreatment (a research assistant who was rude while instructing participants how to complete a task in a coffee shop) resulted in participants stealing from the offender as an act of revenge (Ariely, 2012).

A different kind of mistreatment that might lead to cheating as a form of revenge has been studied under the heading of unfairness. Research shows that people are markedly sensitive to fairness and reciprocity (e.g., Fehr & Schmidt, 1999; Houser, Vetter & Winter, 2012) and are willing to hurt and take revenge on those who mistreat them, even at a personal cost (Gino & Pierce, 2009). For example, Houser et al. (2012) showed that people who were treated unfairly were more likely to cheat to redress the imbalance created by the unfair treatment they had just experienced. Similarly, Schweitzer and Gibson (2008) found that participants reported they would be more likely to engage in unethical behavior when they perceived their counterpart to have violated fairness standards. In a similar vein, Greenberg (1990) showed in a field study that employees who perceived a pay cut as unfair engaged in more employee theft than employees who perceived the pay cut as fair. At first glance, these findings suggest that mistreatment in general, rather than a specific type of wrongdoing, may justify retaliating by cheating, since people’s thirst for revenge stems from their desire to get back at the person who hurt them (Bone & Raihani, 2015).

On the other hand, revenge might depend on the type of initial mistreatment. The term lex talionis, the law of retaliation, refers to the “tit for tat” principle (Jones, 1995), which holds that people should be penalized in a way similar to the way they hurt others. By this logic, people who were cheated are entitled to cheat their offender, whereas people who were treated unfairly are entitled to treat their offender unfairly. While unfairness is typically associated with the inequitable distribution of resources (e.g., Houser et al., 2012; Koning et al., 2010), dishonesty is associated with direct lying and deception committed by one party against another (e.g., Koning et al., 2010; Thielmann & Hilbig, 2019). To the extent that justifications facilitate dishonesty (Shalvi et al., 2015), people who were treated dishonestly, but not those who were mistreated in other ways, might feel entitled to cheat their counterparts. For instance, a cheated individual might more easily recast stealing from or cheating the other as merely claiming back what she justly deserves, or taking back what was unlawfully taken from her. Hence, revenge on a dishonest other may pave the way for guilt-free dishonest behavior (e.g., Bandura, 1999; Detert et al., 2008).

In sum, one line of research supports the claim that both unfairness and dishonesty (as well as other types of mistreatment) can serve as a justification for cheating (e.g., Gneezy & Ariely, 2010; Motro et al., 2016). Other studies suggest that the effect of mistreatment on revenge depends on the specific type of mistreatment (e.g., Detert et al., 2008; Jones, 1995). However, none of these studies manipulated more than one type of mistreatment at a time. In the current work, participants were treated fairly or unfairly (by being told that they had been given an unequal share of the profits) and, at the same time, honestly or dishonestly (by being informed that they had been cheated). This made it possible to test whether being cheated provides a different justification from being treated unfairly, one that licenses more dishonesty as retaliation.

In addition to measuring the magnitude of cheating, we used a lie detector test to examine the associated physiological arousal. The test measures the Galvanic Skin Response (GSR), which reflects sweat gland activity, and was used here to detect arousal linked to dishonesty. Since skin conductance rises and falls with the individual’s stress level (Andreassi, 2000), it is considered an indicator of deception (e.g., Barland & Raskin, 1975; Patrick, 2011; Krapohl, 2013). Previous research suggests that physiological arousal is correlated with ethical dissonance (Hochman et al., 2016) but that justifications can lessen the physiological arousal associated with cheating (Hochman et al., 2016; Peleg et al., 2019; Hochman et al., 2021). If revenge serves as a justification for dishonesty, it should increase cheating while decreasing arousal, owing to its inhibiting effect on guilt (Gino et al., 2013). The two lines of research presented above thus point to two possibilities. The physiological effect could be general, producing similar arousal when cheating to avenge any type of mistreatment. Alternatively, physiological arousal in response to cheating may depend on the kind of mistreatment the victim wishes to avenge.

In real-life situations, unfairness, for example in the distribution of resources, may be more common and visible than dishonesty, since fairness considerations are legitimate bargaining chips. In contrast, dishonesty is flatly disapproved of and is by nature concealed. Moreover, deception detection is typically poor (Bond & DePaulo, 2006) and often occurs well after the fact (Park et al., 2002). Thus, in many real-life circumstances, people can decide whether and how to react to unfairness before they can detect and react to dishonesty. Consistent with this presumed time ordering, the setting of our first study exposed participants to (un)fairness, gave them a chance to decide how to react to it, and only then exposed them to (dis)honesty. By contrast, in our second study, participants were exposed to both types of mistreatment simultaneously.

1.2 Hypotheses

Based on the rationales of the two theoretical approaches outlined above, we derived two competing hypotheses on the role of revenge as a justification for dishonest behavior. According to the view that revenge for any type of mistreatment can serve as a justification for cheating back, we formulated a general mistreatment effect hypothesis in terms of the magnitude of cheating and its associated physiological arousal:

Hypothesis 1a:

Participants will cheat an individual who behaved dishonestly towards them or treated them unfairly more than an honest or fair other.

Hypothesis 2a:

Physiological arousal on the lie detector test will increase in response to cheating an honest or fair other. However, no increase will be observed in participants who cheat back in response to any kind of mistreatment.

By contrast, if we assume that revenge can justify cheating an individual back only after s/he behaved dishonestly first, and that no other type of mistreatment can justify cheating, a distinctive mistreatment effect hypothesis can be formulated as follows:

Hypothesis 1b:

Participants will cheat an individual who behaved dishonestly towards them more than an honest one and/or someone who treated them unfairly.

Hypothesis 2b:

Physiological arousal on the lie detector test will not increase in response to cheating back a dishonest individual but will increase in response to cheating other individuals who were honest and/or treated them unfairly.

To test these competing hypotheses, we conducted two studies using a two-phase procedure. In the first phase, participants played one round of a bargaining game (the Ultimatum game in Study 1 and the Dictator game in Study 2) in which we manipulated whether the players had been treated (un)fairly and (dis)honestly by their opponent. In the second phase, they played a perceptual task that allowed them to cheat for monetary gain at the expense of their opponent from the first phase. In Study 1, we also had a third phase, in which the participants underwent a lie detector test to determine whether we could detect their cheating level in the second phase. This research design enabled us to compare the effect of prior dishonesty or unfairness towards participants on their subsequent moral behavior as well as physiological arousal. All data are available online at https://osf.io/5j3y7/files/.

2 Study 1

Using a modified version of the Ultimatum game (Güth & Kocher, 2014), Study 1 examined the effect of the proposer’s fairness and honesty on participants’ subsequent moral behavior and on their physiological response on the lie detector test. This served to test our competing hypotheses, that is, whether unfairness and dishonesty have the same general effect on revenge (H1a and H2a) or whether each has a distinctive effect (H1b and H2b).

2.1 Method

2.1.1 Participants

The sample size was predetermined using G*Power (Faul et al., 2009). We assumed a medium effect size (f = 0.25), with α = .05 and power of .90. This calculation yielded a minimum target sample size of 185 participants. The final sample consisted of 197 Tel Aviv University students (81 male, 116 female) who volunteered to participate in the study. Their mean age was 24.41 years (SD = 4.218). Participants received a 20 ILS (approximately 5.50 USD) show-up fee, and their total payment, contingent on their choices in the task, could amount to 40 ILS (approximately 11.00 USD).

2.1.2 Design and procedure

The study had two computerized phases, the manipulation and the retaliation task, after which participants took a lie detector test. All phases were administered consecutively in a between-subjects design with four conditions.

2.1.3 The manipulation

In the manipulation phase, participants played a modified version of the Ultimatum Game (Güth et al., 1982). In the classic version of this game (Güth et al., 1982), one individual (the proposer) suggests how to divide a sum of money (the pie) with a second individual (the responder). The responder can then either accept the proposed division, in which case both players earn the amounts proposed, or reject it, in which case both earn nothing. In the current study, participants played only the role of the responder. They were told that they would play one trial with another participant via the computer. In actuality, there were no real proposers, and all offers were controlled by the computer (programmed in Visual Basic 6.0). Participants were seated in front of a computer screen and asked to accept or reject the offer. To inform their decision, they were shown the total pie (as reported by the proposer), the split of the total pie between them and the proposer (in percentages), and the absolute value of what was being offered to them (in new Israeli shekels, ILS, each worth about $0.30). To motivate their choices, participants were told that at the end of the experiment they would be paid the amount allocated to them in that trial (if they accepted the offer).

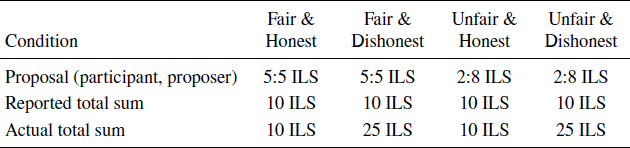

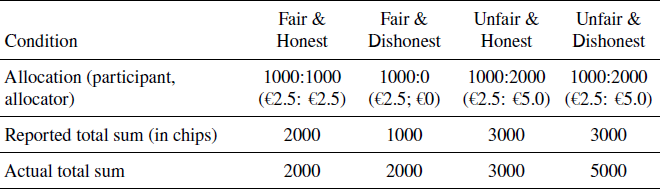

Importantly, the “actual” amounts divided between the proposer and participants were 10 or 25 ILS. The split of the pie was either 50:50 or 80:20 (in favor of the proposer). However, participants were informed that the proposer would report the total amount to be divided at their discretion. The report could be 10, 15, 20, or 25 ILS. Participants were randomly assigned to one of the 2 × 2 between-subjects conditions. As shown in Table 1, in the Fair & Honest condition, participants were offered 50% of the actual sum (5 out of 10 ILS). In the Fair & Dishonest condition, participants initially thought they had been offered 50% of the sum, but actually got only 20% (5 out of 25 ILS). In the Unfair & Honest condition, participants were offered 20% of the actual sum (2 out of 10 ILS). Finally, in the Unfair & Dishonest condition, the participants thought they had been offered 20% of the sum, but actually they got only 8% (2 out of 25 ILS).

Table 1: Distribution by condition of offered and actual sums

After deciding whether to accept or reject the offer in the Ultimatum Game, participants were informed on the screen whether or not the proposer had treated them dishonestly. Thus, we manipulated both fairness and honesty in the same design, with participants randomly assigned to one of four conditions (see Footnote 1).
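The offered and actual shares in the four conditions follow from simple arithmetic on the amounts described above. The sketch below is a hypothetical illustration, not the authors' Visual Basic program: the `Offer` class and its field names are our own, and the displayed shares (50% or 20%) are taken from the condition descriptions.

```python
from typing import NamedTuple


class Offer(NamedTuple):
    """One Study 1 condition (hypothetical representation; amounts in ILS)."""
    offer_ils: int           # absolute amount offered to the participant
    displayed_share: float   # participant's share (%) according to the split shown on screen
    actual_total_ils: int    # true size of the pie

    @property
    def actual_share(self) -> float:
        """Participant's true share of the pie, in percent."""
        return 100 * self.offer_ils / self.actual_total_ils

    @property
    def dishonest(self) -> bool:
        """True when the displayed share overstates the true share."""
        return self.actual_share < self.displayed_share


CONDITIONS = {
    "Fair & Honest": Offer(5, 50.0, 10),
    "Fair & Dishonest": Offer(5, 50.0, 25),
    "Unfair & Honest": Offer(2, 20.0, 10),
    "Unfair & Dishonest": Offer(2, 20.0, 25),
}

for name, offer in CONDITIONS.items():
    print(f"{name:<20} shown {offer.displayed_share:4.0f}%  "
          f"actual {offer.actual_share:4.0f}%  dishonest={offer.dishonest}")
```

Laid out this way, the Fair & Dishonest offer is, in actual terms, a 20% offer, the same true share as the Unfair & Honest offer.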

2.1.4 The retaliation task

In the second phase, participants could increase their monetary gain by cheating. They were told that this gain would be deducted from the earnings of their opponent (the proposer in the UG). Participants played the Flexible Dot task (Hochman et al., 2016), a visual perception task. In this task, participants are presented with multiple trials, each showing 50 non-overlapping dots arranged differently within a square. The square is divided down the middle by a vertical line, and the task is to determine which side, right or left, contains more dots. Although participants are always asked to judge accurately which side contains more dots, they are paid more for responding that a specific side has more dots, regardless of accuracy (they were told this at the outset). Participants aiming to maximize their profits should thus indicate the higher-paying side on every trial, regardless of the actual number of dots on each side. Variations of this task have been validated as a reliable measure of cheating behavior in previous research (e.g., Ayal & Gino, 2011; Gino et al., 2010; Hochman et al., 2016; Mazar & Zhong, 2010; Peleg et al., 2019).

To examine the extent of cheating, we calculated the difference between trials in which participants chose the high-paying side when it was the inaccurate response (beneficial errors) and trials in which they chose the low-paying side when it was the inaccurate response (detrimental errors; see Hochman et al., 2016). Because honest mistakes should be equally likely in both directions, this measure captures the extent to which participants purposely violated the rules set out in the instructions to increase their personal gain. Participants played 100 trials of the task for real money. They were notified in advance that their accumulated gains would be deducted from the earnings of the proposer they had played against in the Ultimatum Game. Thus, they were given the opportunity to retaliate if they felt they had been mistreated.
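A minimal sketch of this cheating index, assuming each trial records the high-paying side, the side that actually has more dots, and the participant's response. The `Trial` and `cheating_level` names are hypothetical, and computing each error rate over the trials on which that error was possible is our assumption; the text specifies only a difference between beneficial and detrimental errors (or their percentages).

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Trial:
    high_paying_side: str  # "left" or "right": the side that pays more when chosen
    correct_side: str      # the side that actually contains more dots
    choice: str            # the side the participant reported


def cheating_level(trials: Sequence[Trial]) -> float:
    """Beneficial-error rate minus detrimental-error rate, in percentage points.

    Assumption: each rate is computed over the trials on which that error was
    possible (low-paying side correct vs. high-paying side correct).
    """
    low_correct = [t for t in trials if t.correct_side != t.high_paying_side]
    high_correct = [t for t in trials if t.correct_side == t.high_paying_side]
    # Beneficial error: reporting the high-paying side when it has fewer dots.
    beneficial = sum(t.choice == t.high_paying_side for t in low_correct)
    # Detrimental error: reporting the low-paying side when it has fewer dots.
    detrimental = sum(t.choice != t.high_paying_side for t in high_correct)
    pct_beneficial = 100 * beneficial / len(low_correct) if low_correct else 0.0
    pct_detrimental = 100 * detrimental / len(high_correct) if high_correct else 0.0
    return pct_beneficial - pct_detrimental


# Hypothetical participants over 100 trials in which "left" always pays more.
correct_sides = ["left", "right"] * 50
always_high = [Trial("left", c, "left") for c in correct_sides]
accurate = [Trial("left", c, c) for c in correct_sides]
print(cheating_level(always_high), cheating_level(accurate))  # 100.0 0.0
```

An honest but error-prone participant makes roughly as many beneficial as detrimental errors and so scores near zero, which is why the difference, rather than the raw count of beneficial errors, is used as the index.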

2.1.5 The Lie Detector test

Finally, participants were taken to another room where they underwent a lie detector test. In the test, they were asked about their own honesty on the retaliation dots task while their physiological arousal was monitored.

To examine dishonesty in the dots task, participants’ GSR was recorded using the Limestone Technologies© system (Data Pac USB Ltd.) connected to a laptop computer. The stimulus questions and GSR signals were recorded using Cogito software by SDS© (Suspect Detection Systems Ltd.). Participants were seated in front of the computer and connected to the GSR device. Two 24k gold-plated electrodes were attached to the palmar surface of the index and ring fingertips of the right hand. The data were sampled at 60 Hz with 16 bits per sample, smoothed with a 3-sample kernel, and down-sampled to 30 Hz. The data were then decomposed using wavelets, and the peaks of tension and temporal responses were analyzed with an in-house MATLAB script. The exam itself was fully computerized. Previous findings indicate that automating polygraph tests increases lie detection accuracy (Honts & Amato, 2007; Kircher & Raskin, 1988) and that automated tests are viewed more positively by both examiners and examinees (Novoa et al., 2017). According to its developer (Suspect Detection Systems Ltd.), this short automated lie detector test is used in 15 countries as well as in international air and sea terminals.
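The smoothing and down-sampling step can be sketched as follows, assuming a boxcar (moving-average) kernel of 3 samples and keeping every second sample to go from 60 Hz to 30 Hz. The kernel shape and edge handling are assumptions, since the text does not specify them, and the wavelet step performed by the in-house MATLAB script is not reproduced.

```python
def smooth_and_downsample(samples, kernel=3, factor=2):
    """Moving-average smoothing followed by decimation (e.g., 60 Hz -> 30 Hz).

    Assumptions: a boxcar kernel of `kernel` samples, truncated at the edges,
    and keeping every `factor`-th smoothed sample.
    """
    half = kernel // 2
    smoothed = []
    for i in range(len(samples)):
        window = samples[max(0, i - half): i + half + 1]
        smoothed.append(sum(window) / len(window))
    return smoothed[::factor]


two_seconds_at_60hz = [1.0] * 120
print(len(smooth_and_downsample(two_seconds_at_60hz)))  # 60 samples at 30 Hz
print(smooth_and_downsample([0, 3, 6, 9]))  # [1.5, 6.0]
```

Smoothing before decimating limits the high-frequency noise that would otherwise fold into the 30 Hz signal.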

GSR is the most frequently used measure in physiological lie detection (Vrij, 2000) and is considered the most sensitive and critical of the three channels used in polygraph examinations (Kircher & Raskin, 2002). The GSR measure was the baseline-to-peak amplitude difference in the time window from 0.5 s to 8 s after the participant’s response. Arousal was measured in response to 6 varying probable lie control (PLC) questions and 3 varying irrelevant questions, and the responses to the control questions were compared with the responses to the two relevant test questions (see Appendix for all questions). This format is in line with the most frequently administered polygraph test, the Control Question Test (CQT; see Honts & Reavy, 2015; Raskin, 1986; Vrij & Fisher, 2016), one of the most common instruments for testing people’s integrity in the US, Europe, Israel, and South Africa (Honts & Reavy, 2015; Raskin, Honts & Kircher, 2014).
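The baseline-to-peak measure and the relevant-versus-control comparison can be illustrated with a short sketch. It assumes a signal sampled at 30 Hz, takes the first sample of the 0.5 s to 8 s window as the baseline and the maximum within the window as the peak, and contrasts the mean amplitude for relevant questions with that for control questions, following the CQT logic. The function names and the choice of baseline are hypothetical, and this simple difference is not the Sympathetic Arousal Index, whose exact formula is not given here.

```python
FS_HZ = 30  # sampling rate after down-sampling


def baseline_to_peak(signal, onset_idx, start_s=0.5, end_s=8.0, fs=FS_HZ):
    """Peak minus baseline in the window [onset + start_s, onset + end_s].

    Assumption: the baseline is the first sample of the window; negative
    differences are floored at zero.
    """
    start = onset_idx + round(start_s * fs)
    end = onset_idx + round(end_s * fs)
    window = signal[start:end + 1]
    if not window:
        raise ValueError("response window lies outside the recorded signal")
    return max(0.0, max(window) - window[0])


def mean(values):
    return sum(values) / len(values)


def relevant_minus_control(signal, relevant_onsets, control_onsets):
    """Mean amplitude for relevant questions minus mean amplitude for control questions."""
    relevant = [baseline_to_peak(signal, i) for i in relevant_onsets]
    control = [baseline_to_peak(signal, i) for i in control_onsets]
    return mean(relevant) - mean(control)


# Synthetic 20-s trace: a response of 2.0 after a relevant question (onset at sample 100)
# and of 1.0 after a control question (onset at sample 400).
trace = [0.0] * 600
trace[160] = 2.0
trace[460] = 1.0
print(relevant_minus_control(trace, [100], [400]))  # 1.0
```

A positive value indicates a stronger response to the relevant questions than to the control questions, which CQT scoring treats as a sign of deception.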

Questions were presented on the computer screen and aurally through headphones, and responses were recorded orally via a microphone attached to the headset. Before the test, all participants were told that if they were identified as honest, they would receive a 5 ILS bonus. In actuality, all participants received the bonus at the end of the study, regardless of their performance on the lie detector test. Participants answered all questions in 3 consecutive rounds, with the question order varying across rounds. Their GSR to the target questions (regarding cheating on the dots task) was compared with their GSR to the control questions to obtain the final Sympathetic Arousal Index, which fell between –1.2 and 1.8 in 98% of cases. This continuous measure allowed a precise analysis of physiological arousal on the target questions.

A substantial body of laboratory and field research in forensic settings supports the rationale and validity of the PLC version of the CQT (see reviews by Honts, 2004; Raskin & Honts, 2002). Given the constraints of the laboratory setting and the administration of multiple tests, we used a semi-automated version of the standard CQT. The procedure, identical to the one used by Peleg et al. (2019), comprised a pre-test phase consisting of a brief explanation of the upcoming process, a short presentation of all test questions by the interviewer (a research assistant), and the participant’s informed consent, conforming closely to standard CQT procedures.

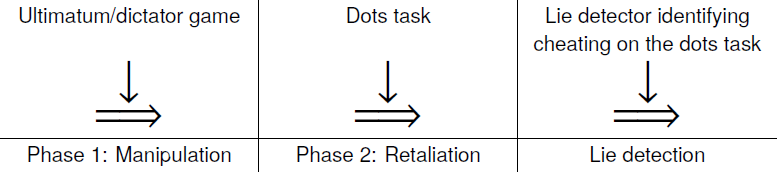

Figure 1 outlines the experimental procedure. Immediately upon completing the study, participants were debriefed about the experimental manipulation and the aim of the experiment, and were paid.

Figure 1: Experimental Procedure. Lie detection was implemented only in Study 1.

2.2 Results

2.2.1 Behavioral results

First, we examined acceptance rates in the UG. Since participants learned the actual total sum only after deciding whether to accept or reject the offer, we expected acceptance rates to be affected by fairness but not by honesty, which was still unknown to them. Consistent with previous findings (e.g., Camerer, 2003; Güth & Kocher, 2014), participants accepted fair offers more often than unfair offers: the acceptance rate was 86% (74 of 86 participants) for fair offers and 32.4% (36 of 111 participants) for unfair offers. A chi-square test of independence showed that this difference was significant (χ2(1, n = 197) = 56.485, p < 0.001). Thus, as expected, unfair offers were rejected more often than fair offers. This tendency was not influenced by the honesty of the proposer, which was unknown to participants at this stage.
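The chi-square statistic can be recomputed from the counts reported above with the standard closed form for a 2 × 2 table. The sketch below uses only the standard library; the upper-tail probability for one degree of freedom is obtained from the complementary error function, since a chi-square variable with 1 df is the square of a standard normal variable.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for [[a, b], [c, d]], df = 1."""
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    p = math.erfc(math.sqrt(chi2 / 2))  # upper tail of chi-square with 1 df
    return chi2, p


# Rows: fair / unfair offers; columns: accepted / rejected (counts from the text).
chi2, p = chi_square_2x2(74, 86 - 74, 36, 111 - 36)
print(f"chi2(1, n=197) = {chi2:.3f}, p = {p:.2g}")
```

The statistic matches the reported value of 56.485, which suggests that the reported test did not apply Yates' continuity correction.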

Next, we asked whether cheating behavior in Phase 2 was affected by whether the proposer had been fair and honest (or not) in the UG. To examine cheating levels on the dots task, we classified errors (selections of the side with fewer dots) into two types: beneficial errors, that is, trials in which participants chose the high-paying side when more dots were on the low-paying side, and detrimental errors, that is, trials in which participants chose the low-paying side when more dots were on the high-paying side (Hochman et al., 2016). The cheating level was calculated as the difference between the percentages of beneficial and detrimental errors, which estimates the extent to which participants purposely violated the instructions to increase their personal gain.

Analyzing the cheating results of the second phase, we realized that our original design had a shortcoming. As mentioned above, the (dis)honesty of the proposer was revealed to participants only after they had already decided whether to accept or reject the offer. This methodological issue in effect eliminated one of the experimental conditions: in the Fair & Dishonest condition, participants received a fair offer (5:10), but then discovered after making their choice that the proposer had not been honest. That is, they were informed that instead of 50% of the total pie (5 out of 10), they had actually been offered 20% (5 out of 25). Thus, from the second phase onward, our paradigm did not include a Fair & Dishonest condition, but rather a High-Unfair & Dishonest (2 out of 25) and a Low-Unfair & Dishonest (5 out of 25) condition. Since cheating levels on the dots task did not differ between the High- and Low-Unfair & Dishonest conditions (M = 44.44, SD = 49.3 and M = 45.79, SD = 44.85, respectively; t = 0.142, p = 0.888, Cohen’s d = 0.028), these two treatments were collapsed into one condition. The behavioral results on the dots task were thus analyzed for three experimental conditions: Fair & Honest (5:10), Unfair & Honest (2:10), and Unfair & Dishonest (High, 2:25; Low, 5:25).
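The reported t and d can be approximately reproduced from the summary statistics with an equal-variance (Student's) t-test. The group sizes are not stated directly and are our inference from counts reported elsewhere in this section: n = 53 for the Low-Unfair & Dishonest group (the 86 participants who received a fair offer minus the 4 + 29 = 33 in the Fair & Honest condition, per Figure 2) and n = 45 for the High-Unfair & Dishonest group (the remaining 98 − 53 in the collapsed Unfair & Dishonest condition). The function name is hypothetical.

```python
import math


def pooled_t_from_summary(m1, sd1, n1, m2, sd2, n2):
    """Student's t (equal variances) and Cohen's d (pooled SD) from summary statistics."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    t = (m2 - m1) / se
    d = (m2 - m1) / math.sqrt(pooled_var)
    return t, df, d


# Group sizes 45 and 53 are inferred, not reported in the text.
t, df, d = pooled_t_from_summary(44.44, 49.3, 45, 45.79, 44.85, 53)
print(f"t({df}) = {t:.3f}, d = {d:.3f}")
```

The recomputed values are close to the reported t = 0.142 and d = 0.028; the small difference in d is consistent with rounding of the reported means and standard deviations.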

A 3 × 2 between-subjects ANOVA was conducted to examine the main effects of experimental condition (Fair & Honest, Unfair & Honest, and Unfair & Dishonest) and UG response (accept/reject) on cheating behavior. This analysis revealed a significant main effect of condition (F(2,194) = 3.06, p = 0.049, η² = 0.031) but not of UG response (F(1,195) = 0.001, p = 0.994, η² = 0.001). More importantly, adding the interaction between the two factors to the model revealed a marginally significant interaction effect (F(2,191) = 3.012, p = 0.052, η² = 0.031).

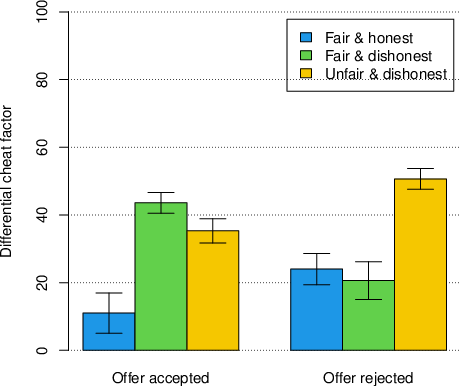

Post-hoc multiple comparisons (LSD) were conducted to further examine how the proposer’s unfairness and dishonesty affected the cheating level of participants who accepted or rejected the offer in the UG. Among participants who rejected the offer (n = 87), cheating did not differ between the Unfair & Honest (M = 43.6, SD = 42.1) and the Unfair & Dishonest (M = 35.3, SD = 42.3; p = 0.374) conditions. Thus, participants who had already retaliated by rejecting the offer cheated to a similar extent regardless of whether they had been mistreated unfairly or dishonestly (since only 4 participants rejected the offer in the Fair & Honest condition, we could not compare this condition with the other two). However, participants who accepted the offer (n = 110) cheated an Unfair & Dishonest proposer more (M = 50.7, SD = 48.4) than a Fair & Honest proposer (M = 24.0, SD = 49.8; p = 0.016, 95% CI = [5.007, 48.294]) or an Unfair & Honest proposer (M = 20.6, SD = 47.6; p = 0.023, 95% CI = [4.262, 55.817]). No difference was found between the Fair & Honest and Unfair & Honest conditions (p = 0.817). These results are presented in Figure 2.

Figure 2: Participants’ differential cheating factor as a function of the proposer’s initial offer and the UG response (accept/reject). Offer rejected: Fair & Honest (n = 4), Unfair & Honest (n = 48), Unfair & Dishonest (n = 35). Offer accepted: Fair & Honest (n = 29), Unfair & Honest (n = 18), Unfair & Dishonest (n = 63). Vertical lines indicate the standard errors.

Overall, participants who retaliated by rejecting an unfair (or unfair and dishonest) offer appeared to be less likely to also cheat their offender. This was true even if they had been treated both unfairly and dishonestly. However, participants who had not already retaliated tended to cheat those who had previously cheated them more than those who had only treated them unfairly. These latter results support the distinctive mistreatment effect hypothesis (H1b) and suggest that revenge is sensitive to the type of mistreatment.

2.2.2 Physiological results

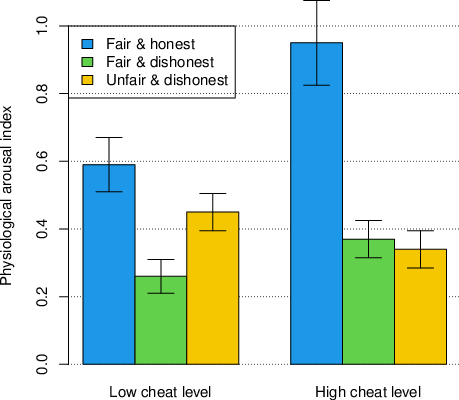

To test whether cheating others to get revenge for their wrongdoing has a justification effect, we examined physiological arousal on a lie detector test. Specifically, after the dots task, participants underwent a lie detector test that evaluated their moral conduct on the task. This allowed us to assess whether the arousal associated with cheating behavior (Hochman et al., 2016; Wang, Spezio & Camerer, 2010) was affected by how participants had been treated (unfairly, dishonestly, or both) and by their actual cheating level. Importantly, we examined whether cheating in general could be justified by different kinds of mistreatment, since cheating is assumed to be reflected in physiological arousal on the lie detector test (Raskin et al., 2014). To this end, we divided participants into high versus low cheaters using a median split of their cheating level. The median difference between beneficial and detrimental errors was 36.0% (Peleg et al., 2019; Hochman et al., 2021).
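A median split of this kind takes only a few lines of Python. The scores below are hypothetical, not data from the study. The rule that a score exactly at the median counts as "low" is our assumption, since the text does not say how ties at the median were assigned.

```python
from statistics import median


def median_split(scores):
    """Split participants into 'high' and 'low' cheaters at the sample median.

    Assumption (not stated in the text): a score exactly equal to the
    median is labelled 'low'.
    """
    cut = median(scores)
    labels = ["high" if s > cut else "low" for s in scores]
    return cut, labels


# Hypothetical cheating indices (beneficial minus detrimental errors, in %);
# illustrative values only, not data from the study.
cut, labels = median_split([10.0, 36.0, 50.0, 20.0, 70.0])
print(cut, labels)
```

Under the ties-go-low rule, the two groups can end up unequal in size when several participants share the median value. This is one reason the tie rule is worth reporting.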

Since we aimed to examine the relationship between physiological arousal and actual cheating behavior, we compared the arousal of participants who cheated to a large extent on the dots task with that of participants who cheated to a small extent. A 3 × 2 (Condition × Cheating level) between-subjects ANOVA was conducted to examine the main effects of the experimental condition (Fair & Honest, Unfair & Honest, Unfair & Dishonest) and the cheating level (high vs. low) on the physiological arousal index on the lie detector test. This analysis revealed a significant main effect for experimental condition (F(2,189) = 3.31, p = 0.039, ηp² = 0.034), but not for cheating level (F(1,190) = 0.002, p = 0.966, ηp² = 0.001). Adding the interaction between the two factors to the model revealed a non-significant interaction effect (F(2,186) = 1.351, p = 0.262, ηp² = 0.014). Post-hoc multiple comparison tests (LSD) were conducted to probe the significant main effect of experimental condition. This analysis indicated that the physiological arousal index in the Fair & Honest condition (M = 0.698, SD = 0.77) was significantly higher than in the Unfair & Honest condition (M = 0.312, SD = 0.60; p = 0.013, 95% CI = [0.083, 0.689]) and in the Unfair & Dishonest condition (M = 0.390, SD = 0.76; p = 0.034, 95% CI = [0.023, 0.592]). However, the difference between the two latter conditions (Unfair & Honest vs. Unfair & Dishonest) was not significant (p = 0.499). These results are presented in Figure 3.

Figure 3: Participants’ physiological arousal index as a function of cheating level and the proposer’s initial offer. Low cheating level: Fair & Honest (n = 23), Unfair & Honest (n = 32), Unfair & Dishonest (n = 42). High cheating level: Fair & Honest (n = 10), Unfair & Honest (n = 31), Unfair & Dishonest (n = 54). Vertical lines represent the standard errors.
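Reported p-values for F tests with two numerator degrees of freedom can be checked without statistical software. The reason is that the F(2, ν) distribution has a closed-form upper tail, P(F > f) = (1 + 2f/ν)^(−ν/2). In a 3 × 2 design, both the condition main effect and the Condition × Cheating level interaction have 2 numerator degrees of freedom: (3 − 1) and (3 − 1)(2 − 1), respectively. The Python sketch below applies this identity to the arousal ANOVA. The function name is ours, and the sketch is a check on the reported values, not the authors' procedure.

```python
def f_pvalue_df1_2(f_value, df_error):
    """Upper-tail p-value of an F statistic with 2 numerator df.

    For F(2, v) the survival function has the closed form
    P(F > f) = (1 + 2 * f / v) ** (-v / 2).
    """
    return (1.0 + 2.0 * f_value / df_error) ** (-df_error / 2.0)


p_condition = f_pvalue_df1_2(3.31, 189)     # arousal: condition main effect
p_interaction = f_pvalue_df1_2(1.351, 186)  # arousal: Condition x Cheating level
print(round(p_condition, 3), round(p_interaction, 3))
```

Applied to the Study 1 behavioral ANOVA, the same function gives p ≈ 0.049 for F(2,194) = 3.06 and p ≈ 0.052 for F(2,191) = 3.012. Both match the reported values.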

This pattern of results runs counter to the behavioral results. Although participants in the Fair & Honest condition exhibited the lowest level of cheating, they showed the highest level of physiological arousal. By contrast, participants who experienced either type of mistreatment cheated to a greater extent but showed lower levels of physiological arousal. Because cheating behavior is typically associated with increased physiological arousal (e.g., Hochman et al., 2016; Wang et al., 2010), this pattern supports the general mistreatment hypothesis (H2a). It suggests that both the unfairness and the dishonesty of the proposer may have served as justifications that defused the ethical dissonance associated with cheating. Presumably, when participants were mistreated in general (and not just cheated), they felt entitled to get revenge by cheating the proposer while maintaining a clear conscience.

2.3 Discussion

The results provide initial support for the claim that, behaviorally, revenge is not blind. First, in line with previous findings (Brethel-Haurwitz et al., 2016), our participants were prone to rejecting unfair offers and punishing the proposer even at a personal cost. Second, participants who had not already punished the proposer tended to cheat the dishonest proposer more than the unfair one. These results support the distinctive mistreatment effect (H1b), since they indicate a differential retributive response to unfairness vs. dishonesty. This pattern suggests that participants may have adapted their retaliation to fit the way they were mistreated. Thus, on the behavioral level, revenge might be considered a sensitive form of ‘an eye for an eye’ that matches the nature of the retaliation to the type of mistreatment. On the other hand, the physiological results suggest that both types of mistreatment served as a justification to increase the level of cheating while attenuating the tension associated with it.

One main characteristic of our experimental setting is that participants were first exposed to unfairness and only then to dishonesty. This sequential order resembles many realistic social interactions in which fairness is more visible than honesty. Individuals often know immediately whether someone has treated them fairly, but dishonesty is usually not detectable until later. However, this sequential nature also makes it more difficult to disentangle the effects of unfairness and dishonesty. The reason is that participants could retaliate by cheating only after they had already had a chance to avenge the unfair offer by rejecting it. Presumably, the revenge participants exacted on unfair proposers by rejecting their offer affected their subsequent decision about how much to cheat. Alternatively, after rejecting the offer, participants may no longer have cared whether the proposer had been honest with them, because it did not affect them in the end.

The second issue related to this sequential design stems from the information gap between the two phases. The structure of the first phase precluded examining a fair and dishonest proposer. Once participants had been informed of the proposer’s dishonesty in the second phase, they also found out that the seemingly fair offer (5:10) was in fact unfair (5:25).

To address these two limitations, we first isolated specific comparisons. We focused solely on participants who had accepted the offer and thus were not influenced by earlier revenge. Participants who accepted the offer cheated Low Unfair & Dishonest proposers (5:25) more than Unfair & Honest proposers (2:10) (t(61) = 2.147, p = 0.036, Cohen’s d = 0.559). That is, participants in the Low Unfair & Dishonest condition cheated more even though they received a greater absolute monetary sum and their relative share of the total pie (20%) was held constant. This lends credence to the claim that they preferred to take revenge in the same way they had been mistreated. To further test this interpretation, we ran Study 2 with a revised procedure. It exposed unfairness and dishonesty at the same time and allowed participants only one opportunity to get revenge.

3 Study 2

Study 2 was designed to address the limitations of Study 1 by using the Dictator Game (Engel, 2011). This allowed us to manipulate the opponent’s levels of unfairness and dishonesty simultaneously and to test which would lead to stronger revenge. Specifically, after participants were informed about how much money they received, they were given a chance to retaliate by cheating back on a subsequent task. Unfortunately, due to COVID-19 restrictions, this study was run online and did not include a lie detector test.

3.1 Method

3.1.1 Participants

The sample size was predetermined using G*Power (Faul et al., 2009). We used a medium-large effect size (0.30), with an α of .05 and a power of .90. This calculation yielded a minimum target sample size of 195 participants. The final sample consisted of 231 Prolific Academic participants (84 males, 145 females, 2 other) who volunteered to participate in the study. The mean age was 36.4 years (SD = 13.98). Participants received €0.20 for their participation, plus up to an additional €4.80 contingent upon their choices in the task.

3.1.2 Design and procedure

As in the first two phases of Study 1, the design comprised two computerized phases: the manipulation and the retaliation task. The phases were administered consecutively in a 2 (Fair vs. Unfair) × 2 (Honest vs. Dishonest) between-subjects design.

3.1.3 The manipulation

In the first phase, participants played a variation of the Dictator Game (DG; Engel, 2011). In the classic version of this game, one individual (the allocator) decides how to divide a sum of money (the pie) with a second individual (the receiver), who has no influence on the division. In the current study, participants played only the role of the receiver. They were told that they would play one trial with another participant via the computer. In actuality, there were no real allocators, and all offers were controlled by the computer. Participants were told that the other player received an initial amount of chips (5000, 10000, 15000, or 25000, with equal probability) from the experimenter. They were also told that this initial amount was known only to the other player, not to the participant. The other player would then decide how much of this amount to keep and how much to give to the participant. Participants were further informed that the other player would notify them of the initial amount (the total pie) and the proposed allocation. To make this clear, participants were also shown the split of the pie in percentages. Finally, participants were informed that each chip was worth €0.0025, and that they would be paid at the end of the experiment based on their allotted sum.

In all conditions, participants were offered 1000 chips (i.e., €2.5). The conditions differed solely in the information provided about the total pie and the split, as follows. In the Fair & Honest condition, participants were told that the other participant had received a total of 2000 chips and decided to give them 1/2 of the overall amount. In the Fair & Dishonest condition, participants were told that the other participant had received a total of 1000 chips and decided to give them all of it. In the Unfair & Honest condition, participants were told that the other participant had received a total of 3000 chips and decided to give them 1/3 of the overall amount. Finally, in the Unfair & Dishonest condition, participants were likewise told that the other participant had received a total of 3000 chips and decided to give them 1/3 of the overall amount. Participants were randomly assigned to one of these four conditions.

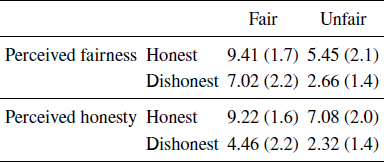

After the DG offer was presented, participants were informed whether the allocator had treated them dishonestly and unfairly (or not). Specifically, in the Fair & Honest and Unfair & Honest conditions, they were told that the other player had indeed received the reported amount of chips. By contrast, in the Fair & Dishonest condition, participants were informed that the actual amount was 2000 chips (rather than the reported 1000). In the Unfair & Dishonest condition, the actual amount was 5000 chips (rather than the reported 3000). Thus, we were able to manipulate both fairness and honesty in the same design. These experimental conditions are summarized in Table 2.

Table 2: Distribution by condition of offered and actual sums
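The logic of the 2 × 2 manipulation becomes explicit if we compute two shares for each condition: the share implied by the allocator's report and the share implied by the actual total, using the chip amounts given above. In the Python sketch below, the classification rule is our own illustrative assumption, chosen to reproduce the four condition labels. Under this rule, "fair" means an actual share of exactly one half and "honest" means the reported total equals the actual total. The names are hypothetical.

```python
from fractions import Fraction

OFFER = 1000  # chips offered to the participant in every condition

# Hypothetical encoding: condition -> (total reported by the allocator,
# actual total revealed afterwards), using the chip amounts in the text.
conditions = {
    "Fair & Honest": (2000, 2000),
    "Fair & Dishonest": (1000, 2000),
    "Unfair & Honest": (3000, 3000),
    "Unfair & Dishonest": (3000, 5000),
}


def classify(reported, actual, offer=OFFER):
    """Illustrative rule (our assumption): 'Fair' if the actual share is
    exactly one half, 'Honest' if the reported total equals the actual one."""
    actual_share = Fraction(offer, actual)
    fairness = "Fair" if actual_share == Fraction(1, 2) else "Unfair"
    honesty = "Honest" if reported == actual else "Dishonest"
    return f"{fairness} & {honesty}", actual_share


for name, (reported, actual) in conditions.items():
    label, share = classify(reported, actual)
    print(f"{name}: reported share {Fraction(OFFER, reported)}, "
          f"actual share {share} -> {label}")
```

Because the offer is fixed at 1000 chips, only the pie varies across conditions. The Fair & Dishonest allocator claims to give everything but actually gives half. The Unfair & Dishonest allocator's actual share (1/5) is lower than the claimed one (1/3).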

To validate our manipulation and examine whether participants perceived an unfair offer as unfair and a dishonest offer as dishonest, we ran a pilot study with 203 Prolific Academic participants (69 males, 134 females; mean age = 32.72 years, SD = 12.42). Participants received €0.88 for their participation. The pilot was identical to the 2 × 2 between-subjects DG design described above, except that the amounts were presented in euros rather than chips. Participants saw what the allocator reported receiving and offered them, and then learned the actual total sum. They then rated the extent to which they perceived the allocator to be a fair and an honest person (on a 1–10 scale).

The results of this pilot are presented in Table 3. Two 2 × 2 between-subjects ANOVAs were conducted to compare the effects of fairness (fair vs. unfair) and honesty (honest vs. dishonest) in the DG on the two dependent measures (perceived fairness and perceived honesty of the allocator). For perceived fairness, there was a significant main effect for fairness (F(1,198) = 254.799, p < 0.001, ηp² = 0.563) and a weaker but significant main effect for honesty (F(1,198) = 98.853, p < 0.001, ηp² = 0.333). In addition, there was no significant interaction between the two factors (F(1,198) = 0.587, p = 0.445, ηp² = 0.003). Similarly, the analysis of perceived honesty yielded a significant main effect for honesty (F(1,198) = 333.894, p < 0.001, ηp² = 0.628) and a weaker but significant main effect for fairness (F(1,198) = 67.484, p < 0.001, ηp² = 0.254). Here again, there was no significant interaction between the two factors (F(1,198) = 0.00, p = 0.996, ηp² = 0.00).

Table 3: Mean perceived fairness (upper panel) and honesty (lower panel) of the allocator in the pilot, as a function of the experimental design. SDs are in parentheses

These results lend credence to the validity of our manipulation, since the levels of fairness and honesty were accurately reflected in how participants perceived the allocator. That is, participants perceived allocators who made fair offers as fair, and allocators who were honest as honest. At the same time, the results also highlight the interplay between these two factors, since unfair allocators were also perceived as dishonest (albeit to a lesser extent) and vice versa.

3.1.4 The retaliation task

In the second phase, participants played the same Flexible Dot task (Hochman et al., 2016) as in Study 1, for actual money that was deducted from the earnings of their opponent (the allocator in the DG). Participants received 1 chip (€0.0025) for indicating that there were more dots on the left side and 10 chips (€0.025) for indicating that there were more dots on the right side.

3.2 Results

A 2 × 2 between-subjects ANOVA was conducted to examine whether cheating behavior in Phase 2 was affected by whether the allocator in the DG was fair and honest (or not). This analysis revealed a marginally significant main effect for fairness (F(1,229) = 3.397, p = 0.067, ηp² = 0.015) and a significant main effect for honesty (F(1,229) = 7.521, p = 0.007, ηp² = 0.032). Adding the interaction between the two factors to the model revealed a non-significant interaction effect (F(1,227) = 0.073, p = 0.787, ηp² < 0.001). Planned contrasts were conducted to examine how unfairness and dishonesty on the part of the allocator affected the cheating level. Participants tended to cheat a Fair & Honest allocator (M = 15.5, SD = 36.5) less than an Unfair & Honest one (M = 25.5, SD = 35.3), but this difference was not significant (t(227) = 1.418, p = 0.157, Cohen’s d = 0.277). However, cheating in the Fair & Honest condition was significantly lower than in the Fair & Dishonest condition (M = 29.9, SD = 39.9; t(227) = 2.084, p = 0.038, Cohen’s d = 0.376). It was also lower than in the Unfair & Dishonest condition (M = 37.3, SD = 36.4; t(227) = 3.206, p = 0.002, Cohen’s d = 0.596). Finally, a marginally significant difference was found between participants who cheated an Unfair & Dishonest allocator and those who cheated an Unfair & Honest one (t(227) = 1.705, p = 0.089, Cohen’s d = 0.328). These results are presented in Figure 4.

Figure 4: Participants’ differential cheating factor as a function of the proposer’s offer in the DG: Fair & Honest (n = 58), Unfair & Honest (n = 54), Fair & Dishonest (n = 57), and Unfair & Dishonest (n = 62). Vertical lines indicate the standard errors.
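The Cohen's d values above can be approximately reproduced from the reported means and SDs, together with the group sizes in the Figure 4 caption. The Python sketch below assumes the common pooled-SD version of d for two independent groups, since the text does not state which variant was used. Because the inputs are rounded, the results may differ from the reported values in the third decimal. The function and variable names are our own.

```python
import math


def cohens_d(m1, sd1, n1, m2, sd2, n2):
    """Cohen's d for two independent groups (mean difference / pooled SD)."""
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2)
    return (m2 - m1) / math.sqrt(pooled_var)


# (M, SD, n): means and SDs from the text, n from the Figure 4 caption.
fh = (15.5, 36.5, 58)  # Fair & Honest
uh = (25.5, 35.3, 54)  # Unfair & Honest
fd = (29.9, 39.9, 57)  # Fair & Dishonest
ud = (37.3, 36.4, 62)  # Unfair & Dishonest

comparisons = {
    "Fair & Honest vs. Unfair & Honest": (fh, uh),
    "Fair & Honest vs. Fair & Dishonest": (fh, fd),
    "Fair & Honest vs. Unfair & Dishonest": (fh, ud),
    "Unfair & Honest vs. Unfair & Dishonest": (uh, ud),
}
for label, (a, b) in comparisons.items():
    print(f"{label}: d = {cohens_d(*a, *b):.3f}")
```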

Overall, this pattern of results suggests that participants’ cheating level was affected more by the honesty than by the fairness of the allocator. In fact, the most cheating was observed against dishonest allocators (regardless of whether they were fair or not), and the main effect of fairness was only marginally significant. As in Study 1, these behavioral results support the distinctive mistreatment effect hypothesis (H1b) and suggest that revenge is not blind. Moreover, the magnitude of mistreatment was directly associated with the magnitude of retaliatory cheating.

4 General discussion

The current studies were designed to assess whether retaliation can serve as a pre-violation justification that increases cheating while at the same time decreasing its associated guilt. Some studies have concluded that all types of mistreatment can justify retaliating in the form of cheating (Motro et al., 2016). Other findings indicate that different kinds of mistreatment may result in different patterns of revenge (Schurr & Ritov, 2016). To disentangle these hypotheses, we designed a setting in which we manipulated whether participants were treated (un)fairly and (dis)honestly. This made it possible to examine how they chose to retaliate against different types of mistreatment, and how this affected their associated physiological arousal.

Behaviorally, both studies supported the distinctive mistreatment effect (H1b), since they indicated that the opponent’s dishonesty was a stronger driver of retaliatory cheating than the opponent’s unfairness. This was true in the sequential design of Study 1, where participants who had not already punished the proposer by rejecting the offer in the UG tended to cheat dishonest proposers more than unfair ones. Similarly, in Study 2 participants tended to cheat dishonest allocators more, whereas the effect of unfairness was only marginally significant. However, participants cheated, to some extent, both those who had cheated them and those who had treated them unfairly. This finding, together with the results of the pilot study, suggests that it may be hard in general for people to tease apart these two types of mistreatment.

It is worth noting that the current results cannot be explained by the absolute monetary sum of the different offers or by the relative amount of money earned by the opponent. This is because the two designs manipulated honesty and fairness while controlling for the absolute payment of the participants as well as the percentage out of the total pie. Thus, comparing the effects of a payment of 5 offered out of 10 vs. 5 out of 25 in the UG in Study 1 kept the participants’ payoff constant while manipulating fairness. Similarly, a comparison of the offers of 2 out of 10 vs. 5 out of 25 preserved a constant ratio while manipulating honesty. The results showed that participants chose to cheat more when the offer was 2 or 5 out of 25 (dishonest) than when the offer was 2 or 5 out of 10 (honest), even if they were offered the same or a lower amount of money. Moreover, the fact that in Study 2, participants in all conditions received the same absolute amount (€2.5) and still cheated the dishonest allocators more further undermines this alternative explanation.
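The payoff logic of these comparisons can be written out explicitly. The Python sketch below encodes the offers discussed in this paragraph as (amount offered, total pie) pairs. It then checks which comparisons hold the absolute payoff constant and which hold the relative share constant. The dictionary and function names are hypothetical.

```python
from fractions import Fraction

# Hypothetical encoding of the Study 1 offers discussed above:
# label -> (amount offered to the participant, total pie)
offers = {
    "5 of 10": (5, 10),
    "2 of 10": (2, 10),
    "5 of 25": (5, 25),
    "2 of 25": (2, 25),
}


def share(offer, pie):
    """Participant's relative share of the pie, as an exact fraction."""
    return Fraction(offer, pie)


for label, (offer, pie) in offers.items():
    print(f"{label}: absolute payoff {offer}, share {float(share(offer, pie)):.0%}")

# Same absolute payoff, different share: 5 of 10 vs. 5 of 25
assert offers["5 of 10"][0] == offers["5 of 25"][0]
assert share(5, 10) != share(5, 25)
# Same relative share (20%), different absolute payoff: 2 of 10 vs. 5 of 25
assert share(2, 10) == share(5, 25) == Fraction(1, 5)
```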

The designs in these two studies differed in some critical ways. In Study 1, participants were first exposed only to the (un)fairness of the proposal. They were then required to react to the proposer’s offer by rejecting or accepting it. Only after that were they informed of the proposer’s (dis)honesty and given an opportunity to retaliate by cheating. In Study 2, however, participants were exposed to both types of mistreatment (unfairness and dishonesty) simultaneously and could react to this mistreatment only by cheating on the retaliation task. The use of different tasks strengthens the internal validity of our findings, and it may also contribute to their external validity, as the tasks represent different real-life situations. The sequential design in Study 1 reflects many realistic social interactions in which fairness is more visible than honesty. Individuals may know immediately whether someone has treated them fairly, whereas dishonesty may not be immediately detectable. If unfairness can indeed be immediately exposed and responded to, it may no longer sustain negative feelings. Conversely, if dishonesty frequently comes to light only in retrospect, it could generate, sustain, and perhaps even inflate negative feelings. These feelings could pave the way for avenging and cheating back as a possible mechanism for reducing victimhood without additional guilt. Moreover, Study 2 showed that dishonesty appeared to be a stronger driver of vindictive cheating even when (un)fairness and (dis)honesty were manipulated simultaneously. Thus, at least when revenge can take the form of cheating, prior dishonesty seems to be perceived as a more severe violation than unfairness. Future research should test whether the unique severity of dishonesty also holds when participants consider revenge in terms of unfairness or other types of mistreatment.

Taken together, the current results suggest that revenge is not blind when individuals choose how to retaliate by cheating. However, it is blind when they examine their conscience: at least in terms of physiological arousal, participants seemed to feel less accountable when cheating someone who had mistreated them. These results contribute to the field of lie detection by providing another example in which cheating is less likely to be detected when the unethical behavior can be justified (Hochman et al., 2021). People value morality and honesty very highly (Chaiken et al., 1996; Greenwald, 1980; Sanitoso et al., 1990) and strive to maintain a positive moral image (Jones, 1973; Rosenberg, 1979). Alongside this, the results suggest that preferring sincerity over revenge in these situations might also take a psychological toll. Thus, previous mistreatment may allow individuals to balance the scale by choosing to cheat to a certain extent without feeling the associated guilt.

Appendix

Questions on the CQT (Raskin, 1986) in Studies 1 and 2.

Test questions

1. Did you cheat on the task you just completed in this study?
2. In the previous task, did you select the side that would give higher payoffs regardless of whether it was the side with more dots?

Control questions

1. Is there a door in this room?
2. Have you ever lied to avoid taking responsibility for your actions?
3. Have you ever acquired something in a dishonest way?
4. Is there a chair in this room?
5. Have you ever cheated out of self-interest?
6. Are we currently in Tel Aviv, Israel?